1. Introduction

The building sector is responsible for a considerable percentage of world energy consumption, with heating, ventilation, and air conditioning (HVAC) systems being among the main energy users. Residential buildings account for ~60% of energy consumption in the world [1]. Growing needs for energy sustainability, indoor comfort, and operational efficiency have made intelligent, data-centered HVAC management solutions more necessary. Developments in artificial intelligence (AI), the Internet of Things (IoT), communication protocols, and multi-objective optimization [2] have accelerated the creation of predictive and adaptive control systems that balance energy saving with occupant comfort.

Conventional schedule-based and rule-based HVAC control approaches struggle to handle dynamic, nonlinear indoor spaces with varying occupancy rates, external weather fluctuations, and thermal inertia in buildings. In contrast, AI-based models, especially those that incorporate deep learning and reinforcement learning (RL), can learn intricate patterns from past data and adjust control strategies in real time. RL methods have shown promising outcomes in improving building energy performance by allowing agents to dynamically modify control actions in response to environmental feedback. Effective practical deployment rests not only on learning performance but also on seamless, reliable communication among sensors, actuators, and controllers.

Here, industrial-grade communication protocols like Modbus TCP have gained more significance. Modbus TCP offers an open, high-data-rate, and reliable device-level communication protocol over IP-based networks that facilitates the real-time data acquisition and actuation needed for smart HVAC control [3].

To provide a comprehensive understanding of the proposed AI-based indoor temperature control system, this paper is organized into six main sections. Section 1 introduces the paper, Section 2 reviews the related work, Section 3 provides an overview of the methodology, Section 4 presents the experimental setup and discusses the results, and Section 5 and Section 6 discuss the conclusion and future work of the study.

2. Related Work

Recent developments in building automation have focused on smart HVAC systems that incorporate artificial intelligence (AI), Internet of Things (IoT), and predictive models to enhance energy efficiency and thermal comfort [4]. Moreover, by considering the indoor environmental parameters, occupant comfort and energy efficiency can be enhanced by using predictive optimization [5].

AI-driven predictive control is an appealing substitute for conventional rule-based thermostats. Zubair et al. [6] presented an AI-based hierarchical approach for forecasting indoor air temperature (IAT) using Vision Transformers (ViTs) and Bi-LSTM models for energy-efficient HVAC scheduling. Park and Kim [7] used Multilayer Perceptrons (MLPs) to perform short-term indoor temperature forecasting at multiple hourly resolution levels, thereby establishing the suitability of AI models for deployment at the microcontroller level. Ramadan et al. [8] extended AI-based temperature forecasting through comparative studies of multiple machine learning models, identifying ANN and Extra Trees as the most accurate techniques for dynamic thermal behavior in indoor spaces. Besides black-box approaches, wearable and human-in-the-loop systems have been implemented to enhance individualized comfort. Cho et al. [9] proposed an AI-based wireless wearable sensor that collects body thermal data from skin temperature monitoring in real time to provide dynamic HVAC adaptation based on physiological feedback. The system achieved an accuracy of 93.9 in thermal comfort prediction, providing an AI-based, human-centric temperature control system that could potentially reduce energy use by 20%.

Reinforcement Learning (RL) has also been receiving attention for energy optimization. Silvestri et al. [10] used an SAC Deep RL controller in an actual building environment, surpassing PI and MPC controllers by 50% in energy savings while enhancing indoor temperature control by 68%. This indicates the potential of Reinforcement Learning for building control with non-linear dynamics and variable occupancy.

An important aspect of smart control systems is a secure and scalable communications protocol. Yue [11] developed a two-tier intelligent monitoring system based on both Modbus/RTU and Modbus TCP, combining the high dependability of serial communication with the speed and scalability of TCP/IP. The Modbus TCP protocol is gaining ground in building automation for HVAC component control as well as for enabling real-time communication between distributed devices.

The security requirement for such systems is highlighted by their vulnerability to cyberattacks. Mughaid et al. [12] presented a simulation-based framework for authenticating Modbus TCP in SCADA systems based on ID-based encryption as well as lightweight IDS/IPS to harden communication integrity. As smart control systems play an increasingly important role in contemporary buildings, protection of the Modbus layer is vital for safe and uninterrupted HVAC operation.

Integration between AI and IoT in building settings is further presented by Zhao et al. [13], who developed an intelligent thermal comfort control system based on PMV-based AI algorithms and a network of multiple sensors to optimize HVAC operation. Along similar lines, Bernal et al. [14] developed an economically viable mini-split AC control system using PLCs and NodeMCU-based modules, achieving energy savings of 10.63% while maintaining stable indoor temperatures at 22–24 °C.

This is complemented by neural network-based forecasting techniques such as those by Shahrour et al. [8], who utilized gray-box models combined with ANN to forecast IAT using a wireless sensor network in test buildings. An et al. [15] and Li et al. [16] built on these by utilizing an Elman neural network as well as SVM to forecast cooling load and temperature under varying meteorological inputs.

From an architecture point of view, Yan et al. [17] designed an ECA-based real-time database system for smart building control, while Singh et al. [18] discussed cloud-based room automation via rule sets based on occupancy. Such architectures highlight distributed control models for smart buildings.

All these efforts point toward intelligent, networked, and secure HVAC systems. Whether in terms of AI-based temperature control systems, Reinforcement Learning, or safe Modbus TCP-based systems, integration of sensing, prediction, and real-time control is defining next-generation energy-efficient, occupant-centric building spaces.

3. Methodology

In this study, we propose a predictive optimal control mechanism for indoor temperature that targets energy sustainability, indoor comfort, and operational efficiency. For this purpose, two primary AI models are utilized. The first is an LSTM-based model designed to predict future temperature changes using historical sensor data. LSTM (Long Short-Term Memory), a specialized type of RNN, addresses the long-term dependency issues of conventional RNNs and captures essential temporal patterns even from extended time-series data. The second model employs DQN (Deep Q-Network), a reinforcement learning algorithm, to determine optimal HVAC control parameters. The DQN model learns state-action value functions, deriving optimal control conditions to minimize energy consumption while maintaining user comfort. Sensor data collected in real time were stored in InfluxDB, a time-series database. Additionally, an external dataset consisting of 8761 hourly average temperature measurements from the experimental area in Cheonan, provided by the Korea Meteorological Administration for January to December 2023, was analyzed. The sensors used in the system exhibit error margins of ±0.2 °C for temperature, ±3% RH for humidity, and ±1% for voltage and current measurements.

3.1. Data Collection

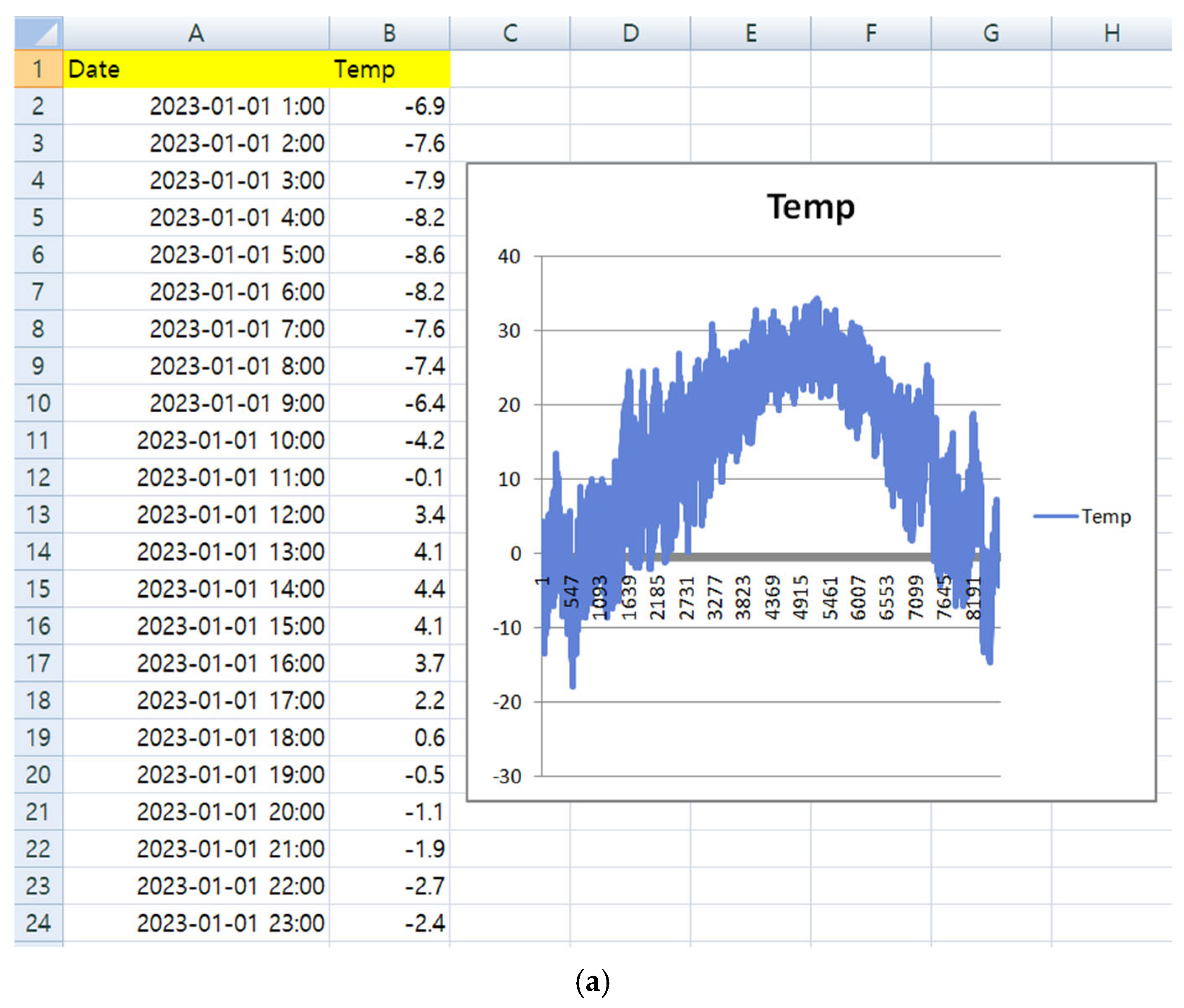

The dataset used in this study was structured as follows: outdoor temperature data were sourced from the open weather data portal of the Korea Meteorological Administration for Cheonan City, South Korea, in 2023, while indoor temperature data were generated by simulating average indoor temperature conditions representative of the four seasons. Each dataset consists of 8761 hourly temperature measurements collected over a one-year period, with both time and temperature values recorded to a precision of one decimal place. Based on these datasets, the LSTM model was subsequently developed.

Figure 1a presents the outdoor temperature data sourced from the Korea Meteorological Administration’s open weather data portal for Cheonan, South Korea, whereas Figure 1b presents the simulated average indoor temperature data representative of a typical South Korean household. For clarity, the outdoor temperature data shown correspond to the 24 h measurements recorded on 1 January 2023, whereas the indoor temperature data represent simulated 24 h temperature values for 31 December 2023.

The rationale for not utilizing more than one year of data to develop the model stems from the observation that graphing outdoor temperature data from the three years prior to 2023 revealed no significant variations that would meaningfully alter the control parameters. Consequently, only the measured and simulated temperature data from 2023 were employed. The LSTM model was trained to predict indoor temperature at time t + 1, using input features including timestamp encoding, outdoor temperature from KMA data, and a simulated indoor temperature profile based on a 25 °C comfort setting. All data were sampled hourly.

3.2. Predictive Optimal Control Mechanism of Indoor Temperature

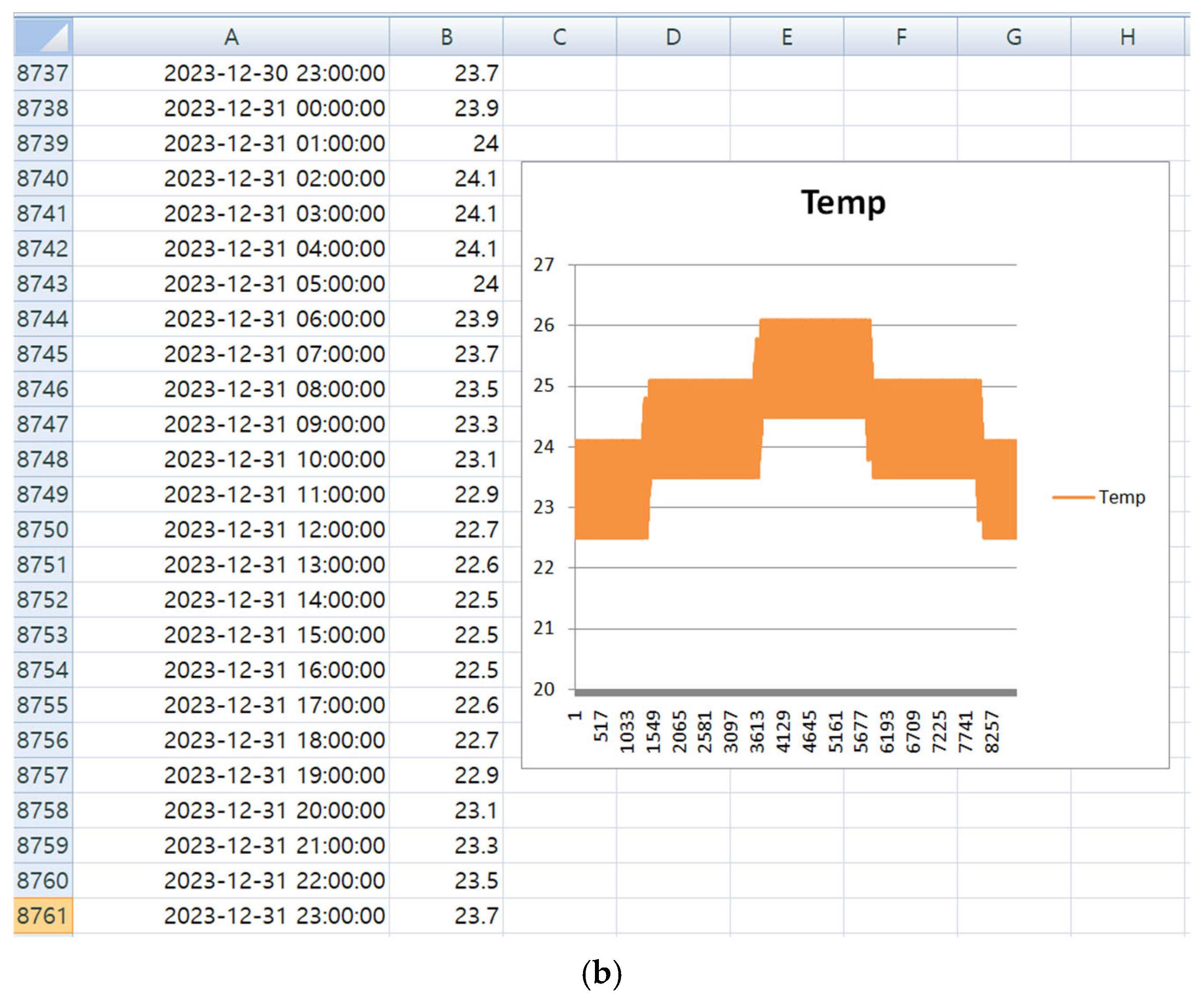

The architecture and configuration of the system consists of the main-part and the sub-part, which are remotely connected using Modbus TCP/IP-based communication protocols. The main-part runs AI algorithms to supervise the overall operation of the system, while the sub-part collects sensor data on temperature and humidity and automatically adjusts room temperature by controlling the heater and air conditioner via a power relay. In addition, the sub-part implements a hysteresis control mechanism to ensure stable operation of the heating and cooling devices. In this experiment, a Raspberry Pi 4B served as the controller of the sub-part and also functioned as a simple experimental server to facilitate comparative experiments, including real-time sensor data monitoring.

Figure 2 shows the configuration of the entire system proposed in this paper.

The NVIDIA Jetson Orin Nano 8 GB serves as the AI main-part of the system and acts as the central controller via Modbus TCP. It is a compact, high-performance embedded platform with an NVIDIA Ampere architecture GPU, designed for efficient AI computing and suited to real-time inference and deep learning model training. The module integrates a 6-core ARM Cortex-A78AE CPU that supports multi-threaded tasks and real-time data processing, along with 8 GB of LPDDR5 memory for managing datasets. A 500 GB SSD was installed for long-term data storage. The Jetson Orin Nano provides various I/O ports, including USB, GPIO, I2C, and SPI, to facilitate communication with external sensors and control devices. In the sub-part, the physical sensors and actuators, such as the heater and air conditioner, are connected to and controlled by a Raspberry Pi, which receives the sensor inputs and handles the physical control.

3.3. Communication Platform Based on Modbus for Temperature Control

The Modbus protocol, one of several communication methods used in industrial automation, is widely adopted due to its simple communication structure, ease of driver implementation, flexibility in connecting various devices, and compatibility with existing temperature controllers. In this study, Modbus is implemented as a TCP/IP-based communication protocol within an AI-based indoor temperature control system to facilitate real-time data exchange between a central AI controller (main-part) and distributed temperature control devices (sub-parts). This Modbus TCP implementation ensures efficient temperature control, continuous monitoring, and seamless communication between HVAC components.

The proposed temperature control system employs a client–server architecture based on the Modbus TCP protocol. The central AI-based controller (main-part) acts as the Modbus TCP master, issuing control commands and requesting sensor data. In contrast, the distributed controllers installed in individual rooms (sub-parts) function as Modbus TCP slaves, responding to these commands and transmitting local sensor data, such as temperature, humidity, and power consumption, back to the master controller [19,20]. This master–slave communication structure leverages Modbus TCP’s capability to overcome limitations related to multiplex communication inherent in traditional RS-485 communication.

The system architecture is based on individual Modbus transactions, each consisting of a master request and a corresponding slave response. The implementation adopts an Ethernet-based Modbus TCP frame structure to ensure minimal latency and optimal reliability. The architecture utilizes dedicated Modbus registers for efficient data management: Input registers (function code 0x04) store temperature and humidity sensor readings, while holding registers (function code 0x03) hold HVAC control parameters. The implemented system primarily uses three standard Modbus TCP function codes: 0x03 to read HVAC parameters, 0x04 to acquire sensor data, and 0x06 to dynamically adjust HVAC settings.

During temperature monitoring tasks, the AI controller initiates a Read Input Registers (0x04) request to access sensor data, receiving a 16-bit numeric response from the relevant control devices. The message protocol for temperature collection follows the standardized Modbus TCP frame structure. For instance, to accurately transmit a temperature reading such as 25.0 °C, the numeric value is multiplied by an agreed-upon scaling factor (e.g., 100) prior to transmission, converting it into an integer format (e.g., 2500). Upon receiving the transmitted data, the master or slave device divides the integer by the same scaling factor (100) to convert it back into its original floating-point format, ensuring precise and consistent handling of real numbers in control algorithms.
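The scaling step described above can be sketched as follows. This is a minimal illustration rather than the system's actual code: the function names are hypothetical, and the two's-complement handling of sub-zero readings is an assumption, since the text only describes a positive example.

```python
SCALE = 100  # scaling factor from the text; both ends must agree on it


def encode_temperature(celsius: float, scale: int = SCALE) -> int:
    """Convert a temperature in °C to an unsigned 16-bit register value."""
    raw = round(celsius * scale)
    if not -32768 <= raw <= 32767:
        raise ValueError("temperature does not fit in a 16-bit register")
    # Assumption: negative readings are stored as two's complement.
    return raw & 0xFFFF


def decode_temperature(register: int, scale: int = SCALE) -> float:
    """Convert a 16-bit register value back to °C."""
    signed = register - 0x10000 if register >= 0x8000 else register
    return signed / scale


register_value = encode_temperature(25.0)   # integer placed in the register
restored = decode_temperature(register_value)
```

With a factor of 100, a 16-bit register covers roughly −327.68 °C to 327.67 °C at 0.01 °C resolution, which is finer than the ±0.2 °C sensor accuracy reported for this system.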

The communication protocol conforms to the standardized Modbus TCP frame structure, which includes the following components:

A 2-byte Transaction ID serving as a unique request identifier.

A static 2-byte Protocol ID (0x0000) for Modbus TCP.

A 2-byte Length field indicating the number of bytes that follow it (from the Unit ID to the end of the message).

A single-byte Unit ID for slave device identification.

A Function Code byte specifying the operation type.

A 2-byte Register Address indicating data location.

A 2-byte Register Count specifying the read scope.

To acquire temperature data from register 0x000A, the system exchanges the following messages:

Request (Master → Slave):

Response (Slave → Master):
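As an illustration of this exchange, the sketch below assembles a Read Input Registers (0x04) request for register 0x000A and decodes a matching response using only the Python standard library. The transaction ID (1), unit ID (1), register count (1), and the example response payload (2500, i.e., 25.0 °C at a scaling factor of 100) are assumptions chosen for the example, and the function names are hypothetical.

```python
import struct


def build_read_input_registers(transaction_id, unit_id, address, count):
    """Build a Modbus TCP request: 7-byte MBAP header + 5-byte PDU."""
    pdu = struct.pack(">BHH", 0x04, address, count)   # function, address, count
    length = 1 + len(pdu)                             # Unit ID + PDU bytes
    mbap = struct.pack(">HHHB", transaction_id, 0x0000, length, unit_id)
    return mbap + pdu


def parse_read_response(frame):
    """Return (transaction_id, [register values]) from a read response."""
    tid, _proto, _length, _unit, function, byte_count = struct.unpack(
        ">HHHBBB", frame[:9])
    if function & 0x80:
        # In an exception response the byte after the function code
        # carries the exception code.
        raise RuntimeError(f"Modbus exception code {byte_count}")
    registers = struct.unpack(f">{byte_count // 2}H", frame[9:9 + byte_count])
    return tid, list(registers)


request = build_read_input_registers(1, 1, 0x000A, 1)
# Example response assumed for illustration: one register holding 2500.
response = bytes.fromhex("000100000005010402" "09c4")
transaction_id, values = parse_read_response(response)
temperature_c = values[0] / 100
```

The Length field is 6 for the request (Unit ID plus a 5-byte PDU) and 5 for a single-register response (Unit ID, function code, byte count, and two data bytes).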

After establishing the data acquisition protocol, the system implements mechanisms for the dynamic control of HVAC operations [21,22]. The AI controller dynamically adjusts HVAC operation by writing to the holding registers (read back with function code 0x03), which control heating and cooling parameters. A typical adjustment of the cooling setpoint is performed using the Write Single Register (0x06) command.
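A Write Single Register request of this kind might look like the sketch below. The holding-register address (0x0010), the setpoint (24.5 °C), the transaction ID, and the unit ID are hypothetical values chosen for illustration; the scaling factor of 100 follows the convention described earlier.

```python
import struct


def build_write_single_register(transaction_id, unit_id, address, value):
    """Build a Modbus TCP Write Single Register (0x06) request."""
    pdu = struct.pack(">BHH", 0x06, address, value & 0xFFFF)
    mbap = struct.pack(">HHHB", transaction_id, 0x0000, 1 + len(pdu), unit_id)
    return mbap + pdu


def is_write_acknowledged(request, response):
    """A successful 0x06 response echoes the request frame unchanged."""
    return response == request


COOLING_SETPOINT_REGISTER = 0x0010        # hypothetical register address
setpoint_raw = round(24.5 * 100)          # 24.5 °C scaled to 2450
write_request = build_write_single_register(
    2, 1, COOLING_SETPOINT_REGISTER, setpoint_raw)
```

Because the normal response to function 0x06 is an echo of the request, the master can confirm the write by comparing the two frames byte for byte.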

This command architecture enables accurate and real-time temperature control within smart buildings, as well as local control of environments such as small rooms. The integration of Modbus TCP into the AI-driven HVAC system provides several technical advantages: seamless interoperability with industrial control systems, responsive temperature adjustment facilitated by low-latency TCP communication, easy system expansion through a modular design, and cost-effective implementation on a large scale with minimal hardware requirements.

3.4. Indoor Temperature Prediction Algorithms Based on LSTM

Long Short-Term Memory (LSTM) is an advanced type of recurrent neural network (RNN) designed to effectively capture long-term dependencies in time-series data. It is particularly suitable for modeling continuous time series, such as indoor temperature regulation. Using LSTM, we developed a predictive control algorithm, the details of which are described below. In this LSTM model, the input sequence consists of one year of temperature data, including outdoor and indoor temperatures, collected at each of the 8761 hourly time steps (t = 1 to 8761). The input vector $x_t$ for each time step t is defined by Equation (1) as follows [23,24,25,26]:

$$x_t = \left[\, d_t,\; T^{\mathrm{out}}_t,\; T^{\mathrm{in}}_t \,\right] \tag{1}$$

$d_t$: encoding representing date/time information.

$T^{\mathrm{out}}_t$: the outside temperature at time t.

$T^{\mathrm{in}}_t$: the room temperature at time t.

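At each time point $t$, the following gate operations are performed using the hidden state $h_{t-1}$ and cell state $c_{t-1}$ from the previous time step. The fundamental equations of the LSTM are defined in Equations (2)–(7):

$$f_t = \sigma\left(W_f\,[h_{t-1}, x_t] + b_f\right) \tag{2}$$

$$i_t = \sigma\left(W_i\,[h_{t-1}, x_t] + b_i\right) \tag{3}$$

$$\tilde{c}_t = \tanh\left(W_c\,[h_{t-1}, x_t] + b_c\right) \tag{4}$$

$$c_t = f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \tag{5}$$

$$o_t = \sigma\left(W_o\,[h_{t-1}, x_t] + b_o\right) \tag{6}$$

$$h_t = o_t \odot \tanh(c_t) \tag{7}$$

σ(⋅): sigmoid activation function (output range 0 to 1).

tanh(⋅): hyperbolic tangent activation function (output range −1 to 1).

$W_f, W_i, W_c, W_o$ and $b_f, b_i, b_c, b_o$: weight and bias parameters of each gate.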

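At the output layer, the predicted temperature is derived from the hidden state $h_t$, providing an estimate of the indoor temperature at the subsequent time step $t+1$, as described below:

$$\hat{T}^{\mathrm{in}}_{t+1} = W_y\, h_t + b_y \tag{8}$$

where $W_y$ and $b_y$ are the weight and bias of the output layer.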

The prediction output from the LSTM model, generated using one year’s worth of outdoor and indoor temperature data, serves as the target control parameter and exhibits characteristics consistent with the existing control framework. This predicted output is subsequently provided as an input to the DQN algorithm, where it is utilized within the reinforcement learning process. The final LSTM prediction is defined by Equation (8).
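To make the gate operations of Equations (2)–(7) and the output layer of Equation (8) concrete, the following sketch runs one LSTM time step in plain Python. It assumes the standard LSTM formulation; the parameter dictionary layout, the function names, and the all-zero toy parameters are illustrative only and are not the trained model from this study.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def affine(weights, vector, bias):
    """Compute W·v + b for a list-of-rows weight matrix."""
    return [sum(w * v for w, v in zip(row, vector)) + b
            for row, b in zip(weights, bias)]


def lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM time step; p maps gate names to weight matrices and biases."""
    z = h_prev + x_t                                   # concatenation [h_{t-1}, x_t]
    f = [sigmoid(a) for a in affine(p["W_f"], z, p["b_f"])]     # forget gate
    i = [sigmoid(a) for a in affine(p["W_i"], z, p["b_i"])]     # input gate
    g = [math.tanh(a) for a in affine(p["W_c"], z, p["b_c"])]   # candidate state
    o = [sigmoid(a) for a in affine(p["W_o"], z, p["b_o"])]     # output gate
    c = [ft * cp + it * gt for ft, cp, it, gt in zip(f, c_prev, i, g)]
    h = [ot * math.tanh(ct) for ot, ct in zip(o, c)]
    return h, c


def predict_next(h_t, w_y, b_y):
    """Linear output layer mapping the hidden state to a temperature."""
    return sum(w * h for w, h in zip(w_y, h_t)) + b_y


# Toy setup: one hidden unit, three inputs (time encoding, outdoor, indoor).
hidden, inputs = 1, 3
zeros = {name: [[0.0] * (hidden + inputs)] for name in ("W_f", "W_i", "W_c", "W_o")}
zeros.update({name: [0.0] * hidden for name in ("b_f", "b_i", "b_c", "b_o")})
h_t, c_t = lstm_step([0.5, 0.1, 0.6], [0.0], [1.0], zeros)
```

With all-zero parameters every gate evaluates to 0.5 and the candidate state to 0, so the cell state is simply halved, which makes the step easy to check by hand.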

3.5. Optimal Temperature Using Deep Q-Network (DQN)

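DQN is a reinforcement learning algorithm that combines Q-learning with deep neural networks, enabling a system to learn optimal control policies through interactions with its environment [27]. The state vector and the Q-value update of the DQN are defined by Equations (9) and (10):

$$s_t = \left[\, \hat{T}^{\mathrm{in}}_{t+1},\; H_t,\; P_t,\; T_{\mathrm{set}} \,\right] \tag{9}$$

$$Q(s_t, a_t) \leftarrow Q(s_t, a_t) + \alpha \left[\, r_t + \gamma \max_{a'} Q(s_{t+1}, a') - Q(s_t, a_t) \,\right] \tag{10}$$

$\hat{T}^{\mathrm{in}}_{t+1}$: indoor temperature at the next point predicted by LSTM.

$H_t$: current measured average humidity.

$P_t$: power consumption (related to heater/air conditioner operation status).

$T_{\mathrm{set}}$: user-specified setting temperature.

$Q(s_t, a_t)$: expected reward for taking action $a_t$ in state $s_t$.

$\alpha$: learning rate.

$r_t$: immediate reward.

$\gamma$: discount factor for future rewards.

$\max_{a'} Q(s_{t+1}, a')$: maximum possible Q-value in the next state.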

Here, Equation (10) represents the Q-value update equation used in DQN. Additionally, the DQN utilizes deep neural networks to approximate the Q-value function based on dynamically changing environmental data in real time, while enhancing learning stability through a replay buffer that stores past experiences and a target network that provides consistent Q-value targets during updates. In a temperature control system, the state (s) may encompass variables such as current indoor temperature, outdoor temperature, time, and occupancy, whereas the action (a) represents adjustments to the target setpoint temperatures for heating and cooling, as well as system control commands. The reward (r) is designed to strike a balance between energy consumption and the comfort level of the indoor air temperature. By utilizing temperature data generated by the LSTM, the DQN learns control strategies that optimize energy efficiency and user comfort across diverse environmental conditions. Specifically, in indoor temperature regulation, the DQN automates complex decision-making processes (such as optimizing preheating and precooling times, adapting to usage patterns, and detecting changes in thermal dynamics), thereby enabling greater real-time adaptability to ambient conditions compared to traditional rule-based control systems [28].
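The Q-value update and the replay buffer mentioned above can be sketched as follows. This is a simplified scalar illustration under standard Q-learning assumptions: in an actual DQN, the next-state Q-values would come from the target network and the update would be applied through gradient descent on the network weights. The class and function names, as well as the numeric values, are hypothetical.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (state, action, reward, next_state) tuples."""

    def __init__(self, capacity):
        self.items = deque(maxlen=capacity)   # oldest experiences drop out first

    def add(self, state, action, reward, next_state):
        self.items.append((state, action, reward, next_state))

    def sample(self, batch_size):
        pool = list(self.items)
        return random.sample(pool, min(batch_size, len(pool)))


def td_target(reward, next_q_values, gamma):
    """Target value: immediate reward plus discounted best next Q-value."""
    return reward + gamma * max(next_q_values)


def q_update(q_sa, reward, next_q_values, alpha, gamma):
    """Move the current estimate toward the target by a step of size alpha."""
    return q_sa + alpha * (td_target(reward, next_q_values, gamma) - q_sa)


buffer = ReplayBuffer(capacity=3)
for step in range(5):
    buffer.add(state=step, action=0, reward=0.0, next_state=step + 1)
new_q = q_update(q_sa=1.0, reward=0.5, next_q_values=[0.2, 2.0], alpha=0.1, gamma=0.9)
```

Sampling past transitions at random breaks the correlation between consecutive hourly measurements, which is the stabilizing effect the replay buffer is meant to provide.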

To calculate the final control temperature $T_{\mathrm{final}}$, the DQN selects the optimal control action based on the state $s_t$. The final control temperature can be expressed as a weighted average of the LSTM prediction and the DQN correction value, as shown in the following equation:

$$T_{\mathrm{final}} = \beta\, \hat{T}^{\mathrm{in}}_{t+1} + (1 - \beta)\, T_{\mathrm{DQN}}(s_t) \tag{11}$$

β: the weight balancing the LSTM prediction and the DQN correction.

$T_{\mathrm{DQN}}(s_t)$: directly regressed as a temperature correction output by the DQN, without incorporating power consumption or cost metrics in the reward structure.

Thus, the final control temperature output by the DQN is given by Equation (11), where $s_t$ includes user-defined control values from JSON input.
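A minimal sketch of the weighted average is given below. It assumes that the DQN correction value is expressed on the same temperature scale as the LSTM prediction and that β lies between 0 and 1; the function name and the numeric values are illustrative and are not taken from the experiments.

```python
def final_setpoint(lstm_prediction, dqn_value, beta):
    """Blend the LSTM prediction with the DQN output using weight beta."""
    if not 0.0 <= beta <= 1.0:
        raise ValueError("beta must lie in [0, 1]")
    return beta * lstm_prediction + (1.0 - beta) * dqn_value


# Illustrative values only: beta = 0.75 favors the LSTM prediction.
setpoint = final_setpoint(lstm_prediction=24.0, dqn_value=22.0, beta=0.75)
```

Setting β to 1 reduces the controller to pure LSTM-based prediction, while β equal to 0 hands the setpoint entirely to the DQN output.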

3.6. Adaptive Heater and Air Conditioner Control Mechanisms

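Finally, the control system receives the value $T_{\mathrm{final}}$ remotely and controls the heater and air conditioner according to the pre-defined threshold range.

$$u^{\mathrm{heat}}_t = \begin{cases} 1, & T^{\mathrm{in}}_t < T_{\mathrm{final}} - \delta_h \\ 0, & T^{\mathrm{in}}_t > T_{\mathrm{final}} + \delta_h \\ u^{\mathrm{heat}}_{t-1}, & \text{otherwise} \end{cases} \tag{12}$$

$$u^{\mathrm{cool}}_t = \begin{cases} 1, & T^{\mathrm{in}}_t > T_{\mathrm{final}} + \delta_c \\ 0, & T^{\mathrm{in}}_t < T_{\mathrm{final}} - \delta_c \\ u^{\mathrm{cool}}_{t-1}, & \text{otherwise} \end{cases} \tag{13}$$

Equations (12) and (13) describe how the sub-part applies hysteresis during heater control and air conditioner control, where $u_t = 1$ denotes ON, $u_t = 0$ denotes OFF, and $T^{\mathrm{in}}_t$ is the measured indoor temperature. Here, $\delta_h$ and $\delta_c$ are the threshold values of the heater and air conditioner, respectively.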

When the final output is an analog signal (e.g., 4–20 mA or 0–5 V), the load driver can be operated using a continuous control signal. In contrast, with an ON/OFF control approach, the heater or air conditioner is activated through periodic switching cycles to regulate temperature. However, frequent switching inherent in ON/OFF control may result in adverse effects, such as motor surges, contact wear, or reduced lifespan of devices like air conditioners. To effectively mitigate these issues, a hysteresis control technique was implemented at the final control stage.
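The hysteresis technique can be sketched as a small stateful relay. The version below assumes a symmetric band around the setpoint and uses a ±1 °C band with a 25 °C setpoint purely as example values; the class name and interface are hypothetical and do not reproduce the sub-part firmware.

```python
class HysteresisRelay:
    """ON/OFF relay that only switches once the band edge is crossed."""

    def __init__(self, band, mode):
        if mode not in ("heat", "cool"):
            raise ValueError("mode must be 'heat' or 'cool'")
        self.band = band
        self.mode = mode
        self.on = False

    def update(self, temperature, setpoint):
        low, high = setpoint - self.band, setpoint + self.band
        if self.mode == "heat":
            if temperature < low:
                self.on = True        # too cold: start heating
            elif temperature > high:
                self.on = False       # warm enough: stop heating
        else:
            if temperature > high:
                self.on = True        # too warm: start cooling
            elif temperature < low:
                self.on = False       # cool enough: stop cooling
        return self.on                # inside the band: keep previous state


heater = HysteresisRelay(band=1.0, mode="heat")
readings = [23.5, 24.5, 25.5, 26.2, 25.0, 23.9]
heater_states = [heater.update(t, setpoint=25.0) for t in readings]
```

Inside the band the relay keeps its previous state, so a reading that hovers around the setpoint does not toggle the load on every sample.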

4. Experimental Setup and Results

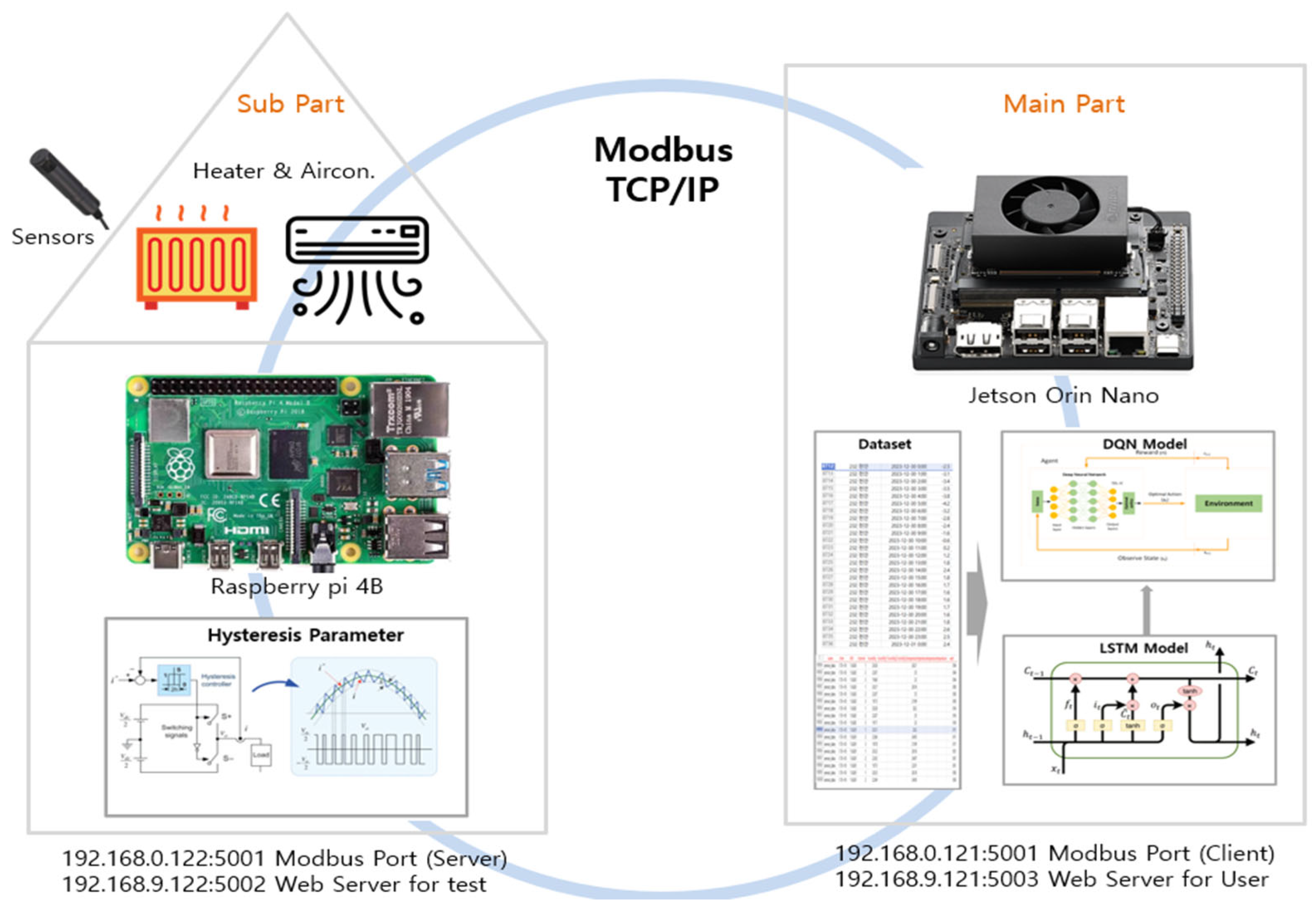

This section describes the system setup and the results from the experimental phase of the research. The setup was created to test the performance of AI-based temperature control algorithms in real time using sensor data. A prefabricated test chamber was equipped with HVAC devices, namely an air conditioner, an electric heater, and a circulation fan, controlled by an NVIDIA Jetson Orin Nano. The system was configured to mimic standard indoor environmental setpoints, with sensors measuring temperature, humidity, and energy consumption in real time. Data captured from the experiment were used to train the AI models: an LSTM model for forecasting temperature and a DQN model for control optimization. This section discusses the performance of the AI-based control system, evaluated using real-time measurements and model predictions, and illustrates the ability of the algorithms to control energy consumption while reaching the desired temperature setpoint with reduced wear on mechanical components.

4.1. Development Environment

The details of the development environment used to conduct this study are given in Table 1.

The data processing and system control algorithms were developed using Python 3.8 and C++. The AI model was implemented using TensorFlow 2.15.0, a widely used deep learning framework. Multithreading and asynchronous communication techniques were employed to enhance system responsiveness and real-time data processing capabilities. Flask served as the backend service, Gunicorn was used as the application server, and Grafana provided real-time data visualization and system configuration through an intuitive graphical interface. Front-end development was performed using HTML, CSS, and JavaScript.

4.2. Experimental Setup

To conduct this study, a 3.3-square-meter prefabricated test space was used. Within this space, a compact air conditioner (AC 220 V, 2.6 A, 550 W), an electric heater (AC 220 V, 4.5 A, 1000 W), and a circulation fan (5 V, 0.6 A, 3 W) were installed to replicate typical indoor conditions. These devices were controlled by an NVIDIA Jetson Orin Nano 8 GB, which functioned as the central AI controller operating on Ubuntu 22.04 with JetPack 6.1 SDK. This setup enabled real-time processing and integration of various HVAC control functions.

Temperature and humidity sensors were installed throughout the experimental space to continuously monitor average environmental conditions. Current and voltage sensors were also implemented to measure power consumption in real time, enabling comprehensive energy tracking during system operation. A total of 8761 data points collected during the testing phase were converted into Excel files and subsequently used for AI model training, providing a robust dataset to refine the control algorithms. Additionally, a hysteresis control mechanism with a ±1 °C tolerance around a 25 °C setpoint was implemented to minimize unnecessary cycling of equipment. This hysteresis mechanism effectively reduced frequent ON/OFF cycles, decreasing mechanical wear, extending component lifespan, and mitigating potential power surges.

The hysteresis control mechanism was integrated into the system to manage real HVAC operations by leveraging prediction results obtained from the LSTM model. Control optimization was conducted in real time by directly incorporating sensor data into the AI decision-making process. The AI algorithm, deployed on the NVIDIA Jetson Orin Nano, processed sensor data in real time to dynamically adjust the control parameters. Throughout this process, real-time inference speed was significantly improved by applying model optimization techniques utilizing TensorRT, thereby ensuring rapid and accurate control decisions. The use of TensorRT was particularly crucial for enhancing the AI model’s responsiveness in dynamic indoor environments, where prompt adjustments are essential.

Python was chosen for data processing and AI model implementation because it provides a wide range of libraries suitable for machine learning tasks. Low-level driver programming was conducted using C/C++, facilitating real-time data acquisition and precise device control, thus providing the required efficiency and performance. NVIDIA’s CUDA framework was implemented for parallel processing, significantly accelerating computations within neural network models. Additionally, Docker containers were utilized to maintain modular independence and software portability, enabling seamless integration and deployment of various components across different platforms and substantially reducing overall development complexity.

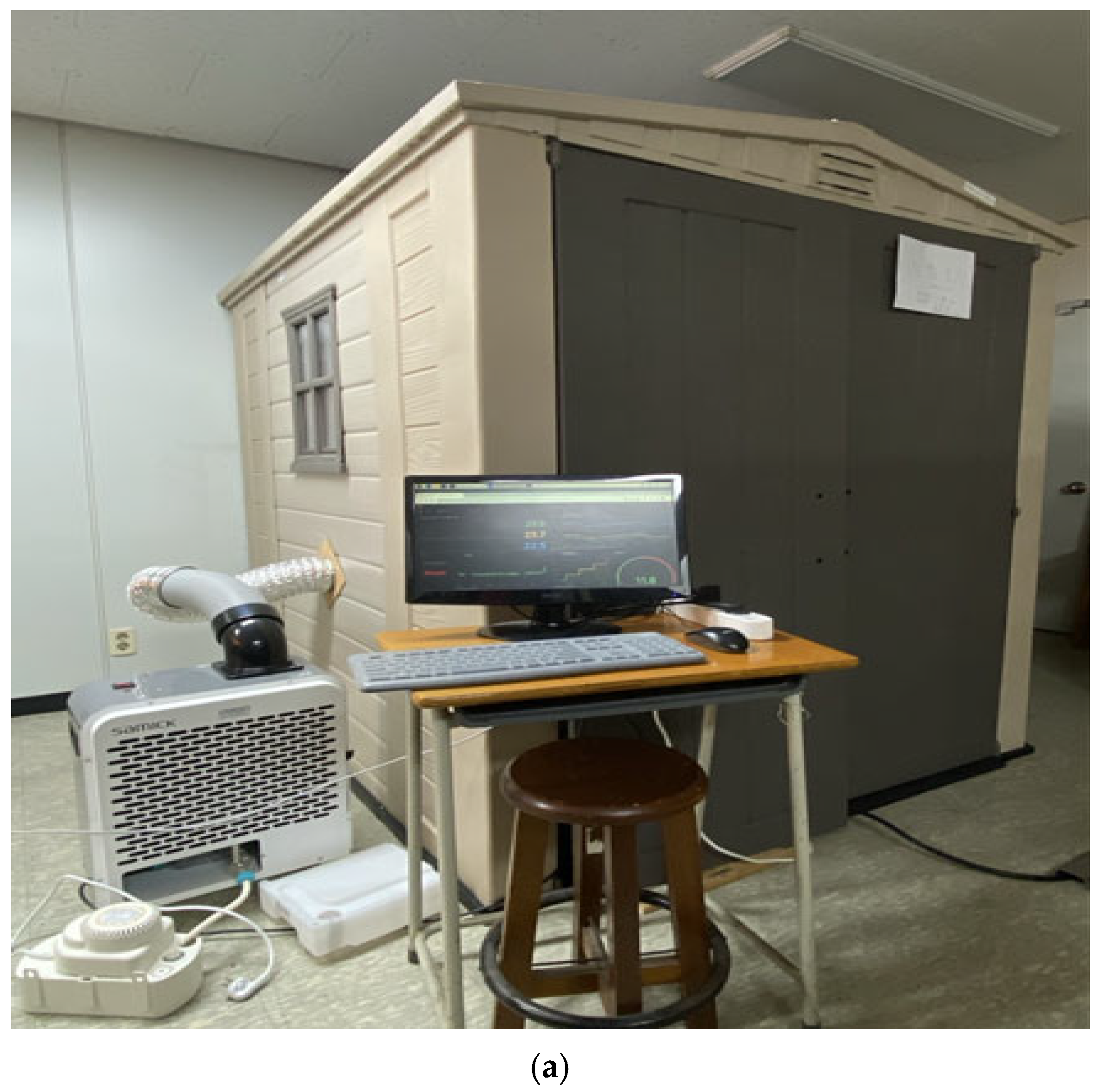

In the figures below,

Figure 3a,b display the overall setup to carry out this work. Equipped with air conditioning units, monitoring sensors, temperature control devices, heaters, blowers, and a system for real-time monitoring and data analysis, this setup is used for AI-based climate and temperature control. As this system is mainly divided into two parts, including the main part and sub-part,

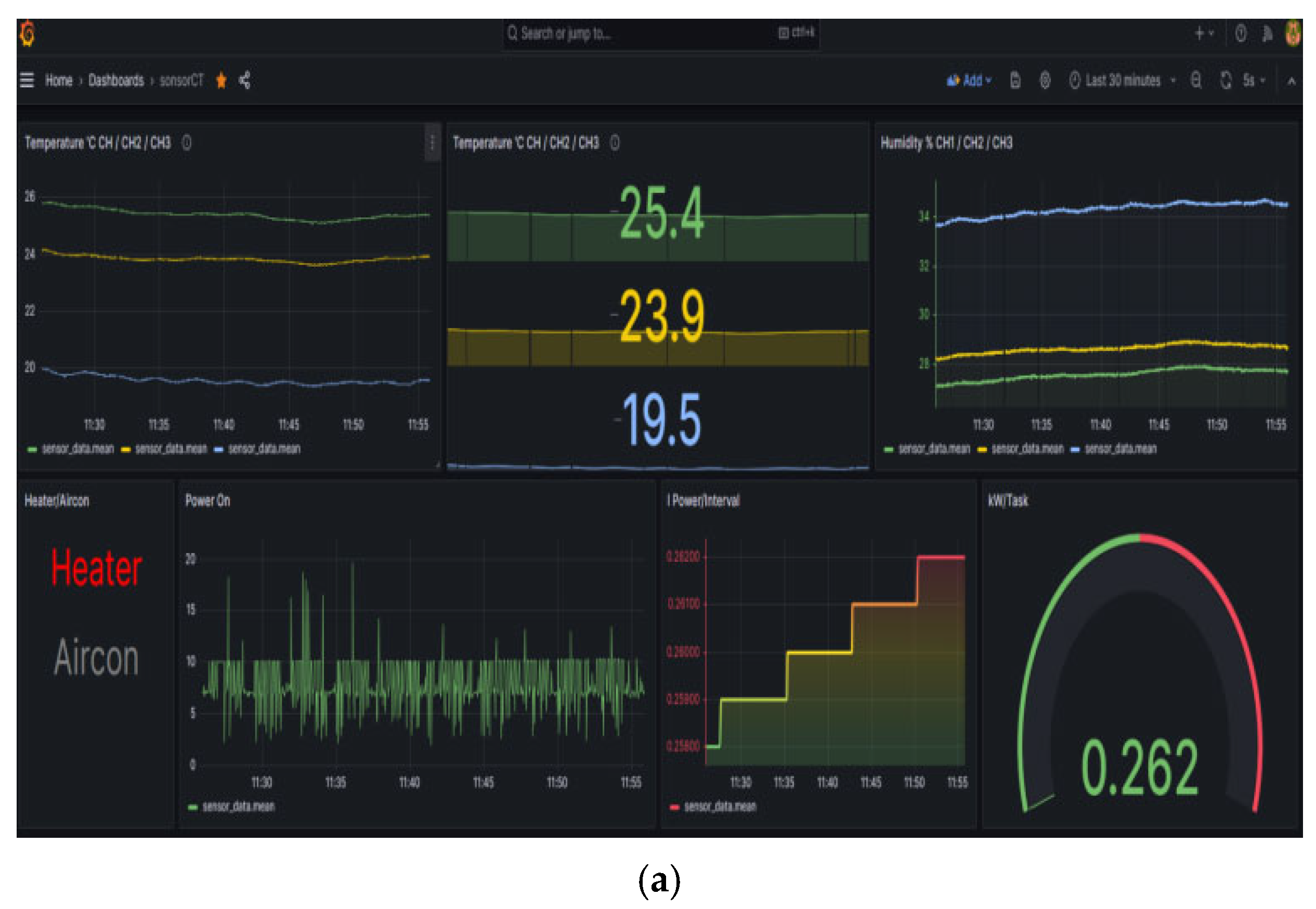

Figure 4a represents the sub-parts of the system in which the status of the system, such as power consumption, humidity level, and temperature, along with control values, are being monitored. System status and the control values of the main part are displayed in

Figure 4b. The main-part provides real-time system status of sensor data, power consumption, temperature, and humidity. It displays the latest sensor readings, AI-predicted temperature control values, and system control values.

4.3. Results and Analysis

An empirical analysis was conducted in this study to validate the temperature control algorithm, beginning with the dataset preprocessing required for constructing the LSTM model. The outdoor and indoor temperature datasets were imported and sorted chronologically by timestamp. Subsequently, hour and month information were extracted from each timestamp, and sine and cosine transformations were applied to create features capturing periodicity. All features—including indoor and outdoor temperatures, along with the sine and cosine values corresponding to hour and month—were normalized to a range between 0 and 1 using the MinMaxScaler (version 1.7.0). Next, input sequences were constructed using temperature data from the preceding 24 h (seq_length = 24) to predict the temperature for the following 24 h (forecast_horizon = 24). The entire dataset was then partitioned into training and testing sets in 80–20% ratio. The LSTM model, composed of two LSTM layers followed by a dense layer, was trained on the training set. The predicted temperatures generated from the test dataset were inverse-transformed, constrained to an upper temperature limit, and smoothed using exponential smoothing. Finally, the smoothed predictions were plotted against the actual temperatures on a 24 h prediction time axis to evaluate the performance of the LSTM model.
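The preprocessing steps described above (cyclical encoding, min-max normalization, sliding windows, and the chronological split) can be sketched in plain Python as follows. The helper names are hypothetical, the study itself used MinMaxScaler rather than the hand-written scaler shown here, and a chronological (unshuffled) split is assumed.

```python
import math


def cyclical(value, period):
    """Encode a periodic quantity (hour of day, month) as (sin, cos)."""
    angle = 2.0 * math.pi * value / period
    return math.sin(angle), math.cos(angle)


def min_max_scale(values):
    """Rescale a list of numbers to the range [0, 1]."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0          # avoid division by zero for constant input
    return [(v - lo) / span for v in values]


def make_windows(rows, targets, seq_length=24, forecast_horizon=24):
    """Pair each 24-step input window with the 24 target values that follow it."""
    X, y = [], []
    for start in range(len(rows) - seq_length - forecast_horizon + 1):
        end = start + seq_length
        X.append(rows[start:end])
        y.append(targets[end:end + forecast_horizon])
    return X, y


def chronological_split(items, train_fraction=0.8):
    """Split without shuffling so the test set lies after the training set."""
    cut = int(len(items) * train_fraction)
    return items[:cut], items[cut:]


hour_sin, hour_cos = cyclical(6, 24)     # 06:00 on a 24 h cycle
scaled = min_max_scale([10.0, 20.0, 30.0])
```

The sine and cosine pair keeps 23:00 and 00:00 close together in feature space, which a raw hour value from 0 to 23 would not.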

Figure 5a presents a terminal output illustrating the LSTM model’s training progress. The model was trained for a total of 25 epochs, with each epoch consisting of 435 mini-batches. Each training step took approximately 31 to 70 milliseconds, resulting in an average epoch duration of about 30 s. Both the training and validation losses gradually decreased from an initial value of approximately 0.003, eventually converging around 0.0024. The close alignment between the two loss curves throughout training indicates the absence of significant overfitting. Therefore, it can be concluded that the model successfully learned the underlying data patterns and achieved stable convergence. The graph in Figure 5b compares the 24 h indoor temperature changes predicted by the LSTM model with the actual measured temperature changes. The x-axis represents the number of hours from the current time, while the y-axis denotes temperature (°C). The solid line represents the predicted temperature curve from the LSTM model, processed with exponential smoothing, illustrating a smooth variation. The dashed line corresponds to the actual measured temperature data (test set), allowing for a comparison with the model’s predictions. The x-axis range of 0 to 24 signifies the elapsed time from the current moment, visualizing a 24 h prediction sample for a specific time point and showing how closely the predicted temperatures follow the actual temperatures over the 24 h after that time point. Although visual comparison (Figure 5b) shows high alignment between predicted and actual indoor temperatures, we additionally computed evaluation metrics based on the test set. The Mean Absolute Error (MAE) was approximately 0.06 °C, and the Root Mean Square Error (RMSE) was 0.08 °C, with prediction errors consistently below ±0.1 °C in most time periods.
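For reference, the two error metrics and the exponential smoothing step can be computed as in the sketch below. The function names are hypothetical, the smoothing factor is an assumed example value (the paper does not state the one used), and the sample numbers are illustrative rather than taken from the test set.

```python
import math


def mae(actual, predicted):
    """Mean Absolute Error between two equally long sequences."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root Mean Square Error between two equally long sequences."""
    return math.sqrt(
        sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))


def exponential_smoothing(values, alpha):
    """Simple exponential smoothing: s_t = alpha*x_t + (1 - alpha)*s_{t-1}."""
    smoothed = [values[0]]
    for value in values[1:]:
        smoothed.append(alpha * value + (1.0 - alpha) * smoothed[-1])
    return smoothed


# Illustrative numbers only, not measurements from the study.
actual = [1.0, 2.0, 3.0]
predicted = [1.0, 2.0, 5.0]
smoothed = exponential_smoothing([1.0, 3.0], alpha=0.5)
```

RMSE weights large deviations more heavily than MAE, so an RMSE only slightly above the MAE, as reported here, suggests that large individual errors were rare.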

The LSTM model predicts the indoor temperature at the subsequent time step based on indoor and outdoor temperature data from the preceding 24 h. This predicted temperature is integrated into a six-dimensional state vector for input into the DQN model, which incorporates additional real-time environmental variables and a user-specified temperature setpoint. Specifically, the input state vector to the DQN consists of [LSTM-predicted temperature, average humidity, indoor temperature, outdoor temperature, power consumption, and user-defined offset value]. The user offset and immediate setpoint values are configured manually via a JSON file and reflected instantaneously in the state vector. The DQN processes this state vector through Q-learning updates to compute an optimal compensation value, which is combined with the LSTM-predicted temperature using a weighted average to determine the final temperature setpoint (final_setpoint). Finally, the controller regulates the indoor temperature by activating the heater and air conditioner within predefined threshold ranges based on the remotely received final_setpoint.

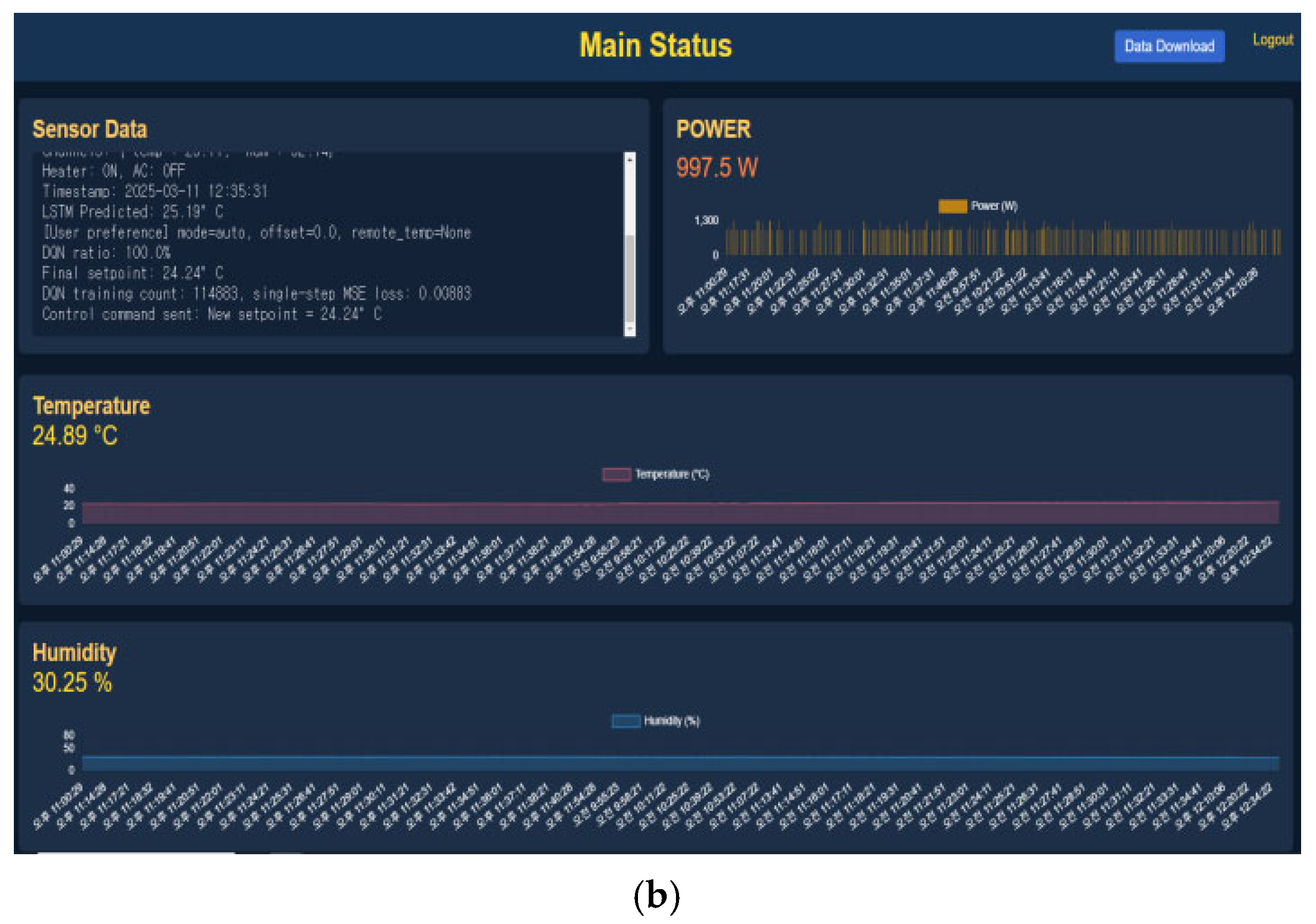

Figure 6a illustrates the terminal status window of the control section where the heater and air conditioner are installed. This terminal output displays the hourly measurements of power consumption, temperature, and humidity recorded at different heights within the experimental warehouse (the measurement channels are approximately 70 cm apart vertically). It also shows the operating status of the heater and air conditioner, which are switched according to the hysteresis settings, along with the remote-control status via Modbus TCP.

Figure 6b presents the status window reflecting the control actions executed based on the final temperature setpoint generated by the DQN algorithm. This includes temperature and humidity values collected from the experimental warehouse, operational status of the heater and air conditioner, the LSTM model’s predicted temperature output from the AI main controller, the user-defined offset value, the selected control method, and the mean squared error (MSE) value.
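The hysteresis-based switching of the heater and air conditioner noted for Figure 6a can be sketched as below. The band width of 0.5 °C, the function name, and the sample readings are assumptions for illustration; the actual threshold values used in the experiment are not stated in this section.

```python
def hysteresis_step(temp, setpoint, heater_on, cooler_on, band=0.5):
    """Return the new (heater_on, cooler_on) pair for one control update.

    band is a hypothetical hysteresis width in °C. Inside the dead band the
    previous state is kept, which avoids rapid on/off cycling.
    """
    # Heater: switch on below (setpoint - band), off once the setpoint is reached.
    if temp <= setpoint - band:
        heater_on = True
    elif temp >= setpoint:
        heater_on = False

    # Air conditioner: switch on above (setpoint + band), off back at the setpoint.
    if temp >= setpoint + band:
        cooler_on = True
    elif temp <= setpoint:
        cooler_on = False

    return heater_on, cooler_on


# Made-up temperature readings (°C) tracked against a fixed setpoint.
setpoint = 22.0
heater, cooler = False, False
for reading in [21.4, 21.8, 22.0, 22.3, 22.6, 22.2, 21.9]:
    heater, cooler = hysteresis_step(reading, setpoint, heater, cooler)
    print(f"{reading:5.1f} °C  heater={heater}  cooler={cooler}")
```

Because each device switches off as soon as the temperature returns to the setpoint, the heater and air conditioner are never active at the same time in this sketch.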

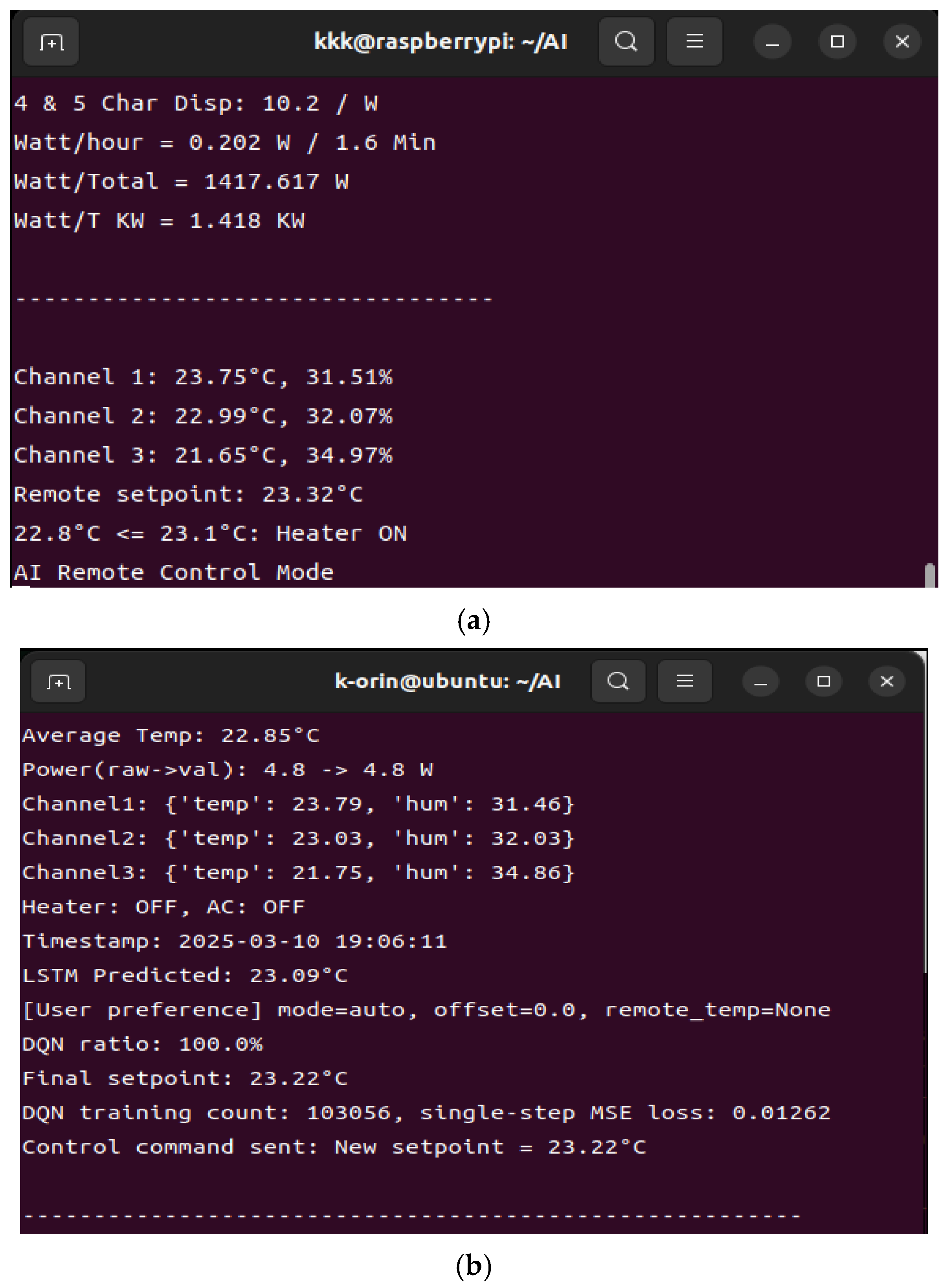

Figure 7a presents the Excel dataset, including hourly measurements of indoor average temperature (avg_temp), control target temperature (final_setpoint), humidity (avg_humidity), power consumption (power_watt), and the predicted temperature from the LSTM model (LSTM_predicted), recorded on 4 March 2025.

Figure 7b illustrates the hourly variations of the indoor average temperature and the target temperature (final_setpoint) for the same date. The two curves follow very similar trends and align closely in most periods, indicating that the control system tracks the desired temperature setpoint effectively. However, during intervals with notable temperature fluctuations, the target temperature slightly leads the actual temperature, which is a natural characteristic of prediction-based temperature control systems.

Table 2 presents the AI-calculated final setpoint temperatures (final_setpoint) at approximately 1 h intervals starting from 00:00 on 4 March 2025, alongside the corresponding measured average indoor temperatures (avg_temp). According to the data, the final setpoint temperature gradually increased over time, peaking at 23.51 °C around 11:00 a.m. The measured indoor temperature closely followed this trend, remaining within a small margin (approximately ±0.1 °C) of the setpoint. This supports the effectiveness of the implemented algorithm, in which the DQN controls indoor temperature by integrating user-defined settings, power consumption data, humidity levels, and temperature predictions from the LSTM model.

5. Conclusions

This study demonstrates the potential of AI-based temperature control to improve indoor environmental quality and energy efficiency relative to conventional systems. The AI-based control system is designed to maintain stable indoor temperature and humidity levels while minimizing energy consumption, balancing efficiency and occupant comfort. Control through reinforcement learning in the main AI controller reduces unnecessary energy usage and allows the system to anticipate and adapt to variations in user behavior and environmental conditions. By combining machine learning models such as LSTM and DQN, the system can continue to learn and refine its performance.

The AI main controller, utilizing Modbus TCP, can simultaneously manage multiple distributed sub-part units. In other words, the system can effectively control several HVAC units responsible for air temperature regulation across various rooms or zones, enabling centralized and efficient management. Moreover, by replacing the Raspberry Pi used in the experimental setup with a simpler microcontroller (MCU) and applying the Modbus TCP protocol, multiple rooms within a single building can be managed concurrently. This approach reduces overall costs, simplifies hardware requirements, and enhances system scalability, making it suitable for large-scale implementations.