1. Introduction

Respiratory diseases, including community-acquired pneumonia, asthma, and acute asthma exacerbations, are a major source of morbidity and mortality in children, with the World Health Organization (WHO) identifying acute respiratory infections as the leading cause of death in children under five years of age [1,2,3]. In the United States, 6.3 million children are diagnosed with asthma. Although most respiratory tract infections (RTIs) are self-limiting, they impose a significant financial burden, costing billions of dollars annually in ambulatory care [4,5,6,7]. While diagnostic tests such as chest radiographs and assessments of vital signs and symptoms are essential, auscultation and clinical observation remain critical in diagnosing pediatric respiratory conditions. Misdiagnosis or misinterpretation in this area can place unnecessary pressure on healthcare resources.

The evaluation of bodily sounds dates back to ancient Egypt [5]. A major milestone occurred in 1816, when Dr. René Laennec invented the first stethoscope using a rolled sheet of paper [8]. This innovation quickly became a standard assessment method in clinical practice, and the stethoscope has since remained a fundamental diagnostic tool [8]. Lung auscultation, in particular, continues to be a vital component of respiratory assessments due to its accessibility, affordability, and clinical relevance [9]. However, despite these advantages, chest auscultation suffers from significant inter-listener variability. The overall pooled sensitivity of lung auscultation has been reported as 37%, with a specificity of 89% [10], which limits its diagnostic reliability [8].

To address these limitations, many researchers in recent years have developed technology-assisted auscultation and computerized approaches for analyzing breath and lung sounds [9,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29]. Some studies have explored general respiratory sound analysis [9], while others have focused on specific conditions such as chronic obstructive pulmonary disease (COPD) [11]. For instance, Nagasaka and Guntupalli developed methods to analyze wheeze signals in asthma patients [11], and Verder et al. introduced an artificial intelligence (AI)-based algorithm to predict bronchopulmonary dysplasia in newborns, aiming to improve clinical outcomes. Additionally, computerized lung sound analysis tools have been proposed to support disease diagnosis [16,17]. R. Palaniappan developed a system that uses computerized analysis to classify respiratory pathologies from lung sounds, as distinct from broader breath signals [25], while Brian R. Snider applied hidden Markov models (HMMs) to automate breath sound classification for sleep-disordered breathing [26]. These approaches are tailored to adult conditions such as COPD [11], asthma [5,27], pneumonia [27], and bronchopulmonary dysplasia [11,28]. Since respiratory sounds in children differ significantly from those in adults [9,11,12,13,14,15,16,17,18,19,20,21,22,23,24,25,26,27,28,29], these models are insufficient for early childhood applications [30]. Researchers have also used machine learning to interpret children’s lung sounds. Kevat and Kalirakah proposed the use of digital stethoscopes to detect pathological breath sounds in children [31]. Kim and colleagues investigated deep learning models for detecting wheezing in pediatric patients [32]. Park and Kim employed support vector machines (SVMs) to classify breath sounds in children, distinguishing normal from abnormal, crackles from wheezing, normal from crackles, and normal from wheezing [33]. Ruchonnet-Métrailler and Siebert also applied deep learning techniques to analyze breath sounds in children with asthma [34]. Mochizuki developed an automated procedure for analyzing lung sounds in children with a history of wheezing [35]. Moon and Ji used machine learning-based strategies to improve wheezing detection in pediatric populations [36]. However, most existing studies focus either on distinguishing normal from abnormal breath sounds (such as wheezing or crackles) or on single respiratory conditions such as asthma. Little research has targeted disease-specific classification across a broader range of pediatric respiratory diseases, such as croup or pneumonia.

Breath sounds are non-stationary and time-varying, which poses significant challenges for traditional speech signal processing techniques and calls for tailored adaptations. In young children, anatomical and developmental differences produce breath sounds with distinct individual traits that deviate from standard speech signal models. Consequently, applying Linear Prediction Coefficients (LPCs) or Mel–Frequency Cepstral Coefficients (MFCCs) with fixed parameters to pediatric breath sounds is suboptimal. This paper introduces a novel method that uses Dynamic Linear Prediction Coefficients (DLPCs) for breath sound recognition. Unlike conventional LPCs, DLPCs treat the coefficients as functions of time rather than static values, so they can capture evolving frequency patterns in non-stationary signals. This approach enables the analysis of longer signal segments, lowering computational demands while preserving flexibility across diverse subjects.
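The idea of time-dependent prediction coefficients can be illustrated with the classical basis-expansion form of time-varying linear prediction, in which each coefficient is a weighted sum of smooth basis functions, $a_k(n) = \sum_{j} c_{kj} f_j(n)$, and the weights $c_{kj}$ are fitted by least squares. The sketch below assumes a Legendre polynomial basis on a normalized time axis; it is not necessarily the DLPC formulation used in this paper, and the helper `tv_lpc` and its parameters `order` and `n_basis` are hypothetical.

```python
import numpy as np

def tv_lpc(x, order=2, n_basis=3):
    """Hypothetical time-varying LPC fit: a_k(n) = sum_j c[k, j] * f_j(n).

    f_j are Legendre polynomials on normalized time (an assumed basis).
    Returns (c, a): c has shape (order, n_basis) and a has shape
    (order, len(x)), holding a_k(n) for every sample n.
    """
    x = np.asarray(x, dtype=float)
    n_samples = len(x)
    # Normalized time axis in [-1, 1] keeps the polynomial basis well conditioned.
    t = np.linspace(-1.0, 1.0, n_samples)
    basis = np.polynomial.legendre.legvander(t, n_basis - 1)  # (N, n_basis)

    # Regressors f_j(n) * x(n - k) for every predicted sample n = order .. N-1.
    rows = np.arange(order, n_samples)
    cols = []
    for k in range(1, order + 1):
        lagged = x[rows - k]
        for j in range(n_basis):
            cols.append(basis[rows, j] * lagged)
    design = np.column_stack(cols)   # (N - order, order * n_basis)
    target = x[rows]

    # Least-squares estimate of the expansion weights c_kj.
    coef, *_ = np.linalg.lstsq(design, target, rcond=None)
    c = coef.reshape(order, n_basis)
    a = c @ basis.T                  # a_k(n) evaluated on the full time axis
    return c, a
```

In this form the weights `c` form a fixed-length feature vector regardless of segment length, which is one way a long segment can be summarized by a small number of parameters.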

Moreover, disordered breathing involves nonlinear, chaotic dynamics, such as airflow turbulence or airway obstruction, which are critical indicators of respiratory pathology in pediatric populations. To capture these dynamics and enable robust classification of normal versus abnormal breath sounds, this paper employs spectral entropy as a complementary feature [37,38,39]. Spectral entropy quantifies the complexity and irregularity of an acoustic signal by computing the Shannon entropy of its normalized power spectral density over each breath sound segment. This approach is intended to detect subtle physiological changes, differentiate distinct pathologies, and remain resilient to ambient noise.
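As a worked form of this definition, the normalized spectrum $p_k = P(f_k) / \sum_j P(f_j)$ is treated as a probability distribution and $H = -\sum_k p_k \log_2 p_k$, optionally divided by $\log_2 K$ for $K$ frequency bins so that $H \in [0, 1]$. The sketch below assumes a plain periodogram as the PSD estimate; the estimator actually used in this study may differ, and `spectral_entropy` is a hypothetical helper name.

```python
import numpy as np

def spectral_entropy(x, normalize=True):
    """Shannon entropy of the normalized power spectral density of x.

    The PSD is a simple one-sided periodogram (|FFT|^2), an illustrative
    choice rather than a prescribed estimator.
    """
    x = np.asarray(x, dtype=float)
    x = x - x.mean()                      # remove DC so it does not dominate
    psd = np.abs(np.fft.rfft(x)) ** 2     # one-sided periodogram
    p = psd / psd.sum()                   # normalize to a probability distribution
    p = p[p > 0]                          # treat 0 * log 0 as 0
    h = -np.sum(p * np.log2(p))
    if normalize:
        h /= np.log2(len(psd))            # scale to [0, 1]
    return h
```

Values near 0 indicate energy concentrated in a few spectral lines (a tonal sound), while values near 1 indicate energy spread evenly across frequencies (a noise-like sound).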

Building on previous work [40,41], the proposed methods advance breath sound analysis by incorporating information from the time, frequency, and spectrogram domains. The approach is guided and validated by input from health professionals. Two distinct features, Dynamic Linear Prediction Coefficients (DLPCs) and spectral entropy, are introduced to capture dynamic acoustic patterns more accurately. These features are evaluated using random forest and logistic regression classifiers to assess their respective strengths and limitations. This comparative analysis helps identify the most effective feature representations for future integration or optimization, and it provides a robust framework for pediatric breath sound classification.

Section 2 examines respiratory sounds and respiratory diseases in young children and their characteristics, followed by an acoustic analysis using signal processing techniques.

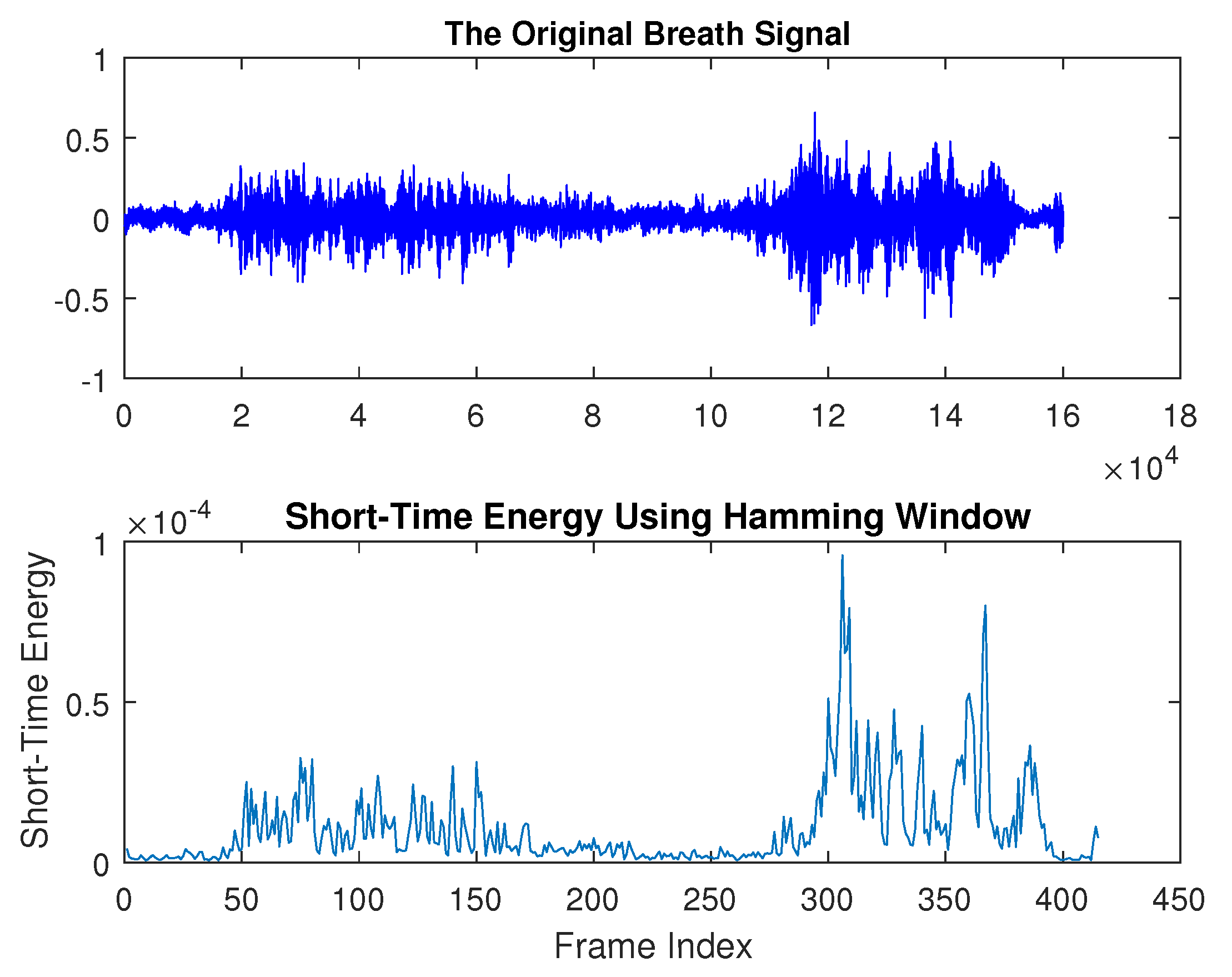

Section 3 discusses breath sound preprocessing techniques and detection methods.

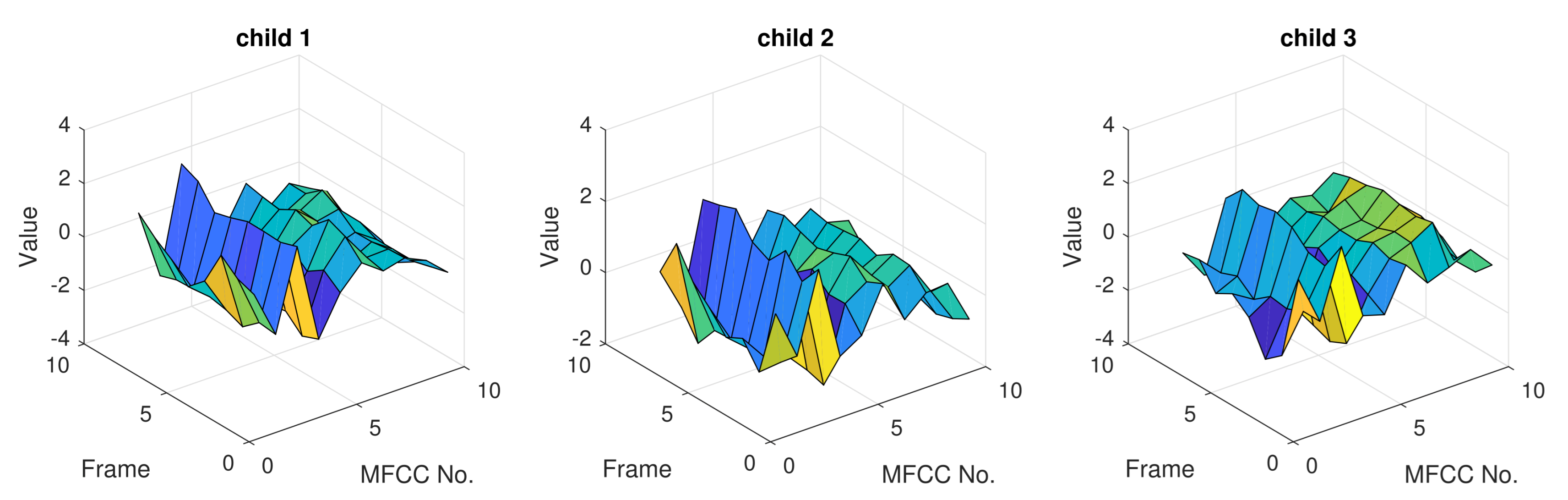

Section 4 introduces pattern extraction methods: commonly used speech signal features, namely Linear Predictive Coding (LPC), Linear Predictive Cepstral Coefficients (LPCCs), and Mel–Frequency Cepstral Coefficients (MFCCs), as well as newly proposed features for breath sound analysis, such as dynamic LPC and spectral entropy.

Section 5 explores breath sound recognition using the K-Nearest Neighbor (KNN), artificial neural network (ANN), hidden Markov model (HMM), random forest, and logistic regression classifiers.

Section 6 and Section 7 present and discuss the experimental results, while Section 8 summarizes the findings and discusses future directions.

2. Breath Sound and Respiratory Diseases in Young Children

Breath sounds are created in the large airways as a result of vibrations that are generated from air velocity and turbulence [

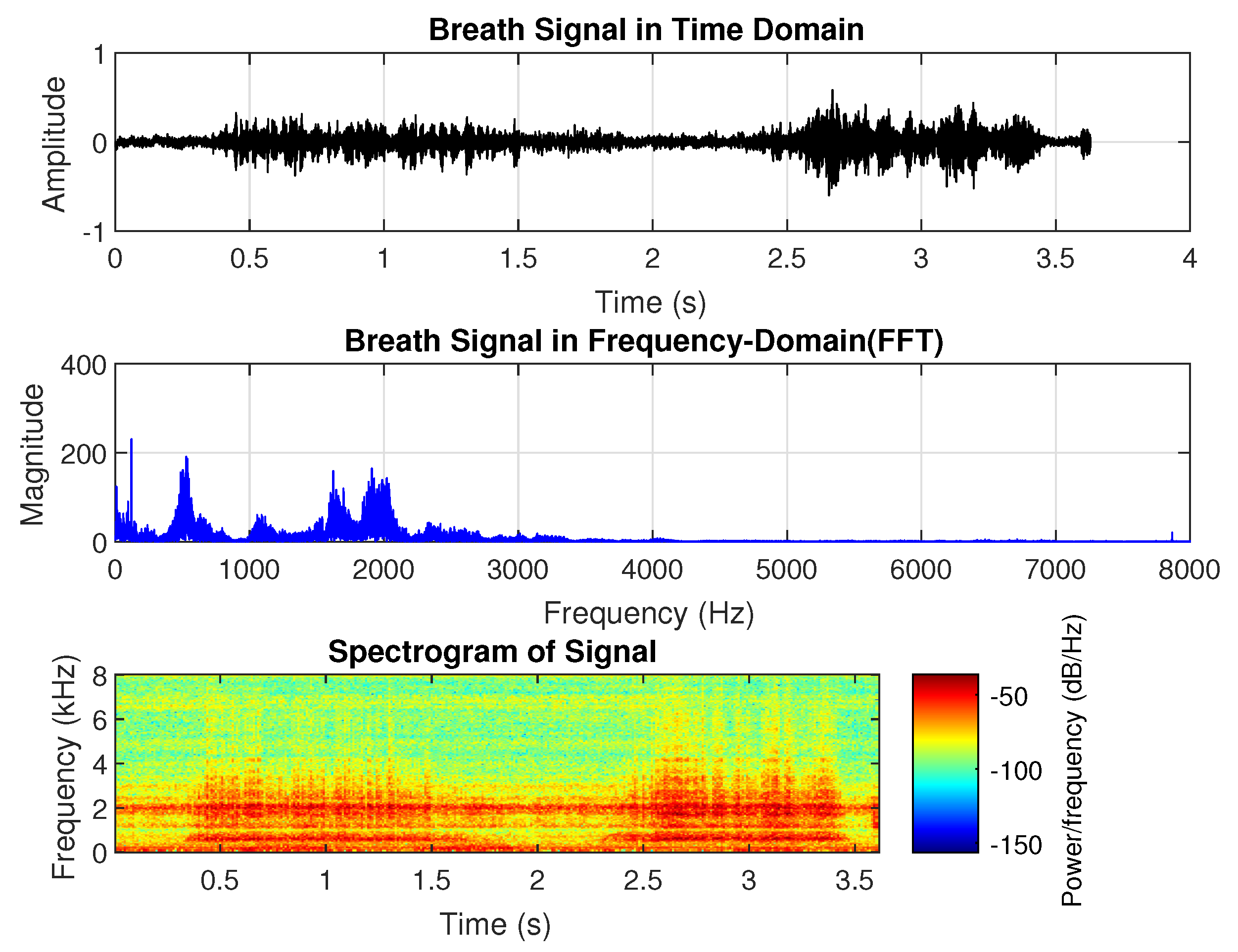

3]. Breath sounds can be heard through a stethoscope and are regarded as one of the most significant bio-signals used to diagnose certain respiratory abnormalities and are widely used by physicians in their practice. Breath sounds are typically categorized into two groups: normal breath sounds, which occur in the absence of respiratory issues, and abnormal or adventitious sounds. Normal breath sounds include both inspiratory and expiratory sounds, which can be heard in the airways during inhalation and exhalation. When auscultating clear lungs—free from swelling, mucus, or blockages—breath sounds are smooth and soft. Normal lung sounds create a smooth, soft sound that can be heard when breathing in and out and are referred to as vesicular lung sounds; i.e., nothing is blocking the airways and they are not narrowed or swollen. Normal breath sounds, as shown in

Figure 1, have the longest duration compared to abnormal breath sounds. They consist of two distinguishable phases: inhalation and exhalation. The highest peak in frequency occurs around 100 Hz, with additional dominant frequency groups at approximately 500 Hz, 1600 Hz, and 1800 Hz. In the spectrogram, both inhalation and exhalation are clearly recognizable, with frequencies around 100 Hz and 1800 Hz present throughout the entire duration.
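Dominant frequencies of the kind read off these figures can be estimated from a Welch power spectral density estimate by ranking its local maxima. The sketch below assumes SciPy is available; `dominant_frequencies` and its default segment length are illustrative choices, not the procedure used to produce the figures.

```python
import numpy as np
from scipy.signal import find_peaks, welch

def dominant_frequencies(x, fs, n_peaks=4, nperseg=2048):
    """Return the n_peaks strongest PSD peaks in Hz, strongest first."""
    f, pxx = welch(x, fs=fs, nperseg=nperseg)   # Hann-windowed Welch PSD
    peak_idx, _ = find_peaks(pxx)               # local maxima of the PSD
    order = np.argsort(pxx[peak_idx])[::-1]     # sort peaks by power, descending
    return f[peak_idx[order[:n_peaks]]]
```

The frequency resolution is `fs / nperseg` (about 3.9 Hz at 8 kHz with the default), so reported peaks are accurate only to within a few hertz.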

Abnormal breath sounds include crackles, wheezes, rhonchi, stridor, and pleural rub. Wheezes are continuous musical adventitious lung sounds, typically with a dominant frequency over 100 Hz and a duration exceeding 100 ms [42]. They are associated with partial airway obstruction, and their presence during breathing often reflects the severity of conditions such as children’s nocturnal asthma. Wheezing in children is also used to assess asthma predisposition. Crackles, in contrast, are discontinuous and explosive sounds, usually occurring during inspiration and lasting less than 100 ms [42]. They feature a rapid initial pressure deflection followed by a short oscillation and are classified by duration: fine crackles last under 10 ms, while coarse crackles last over 10 ms [42].
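The duration and frequency thresholds above can be written as a simple rule for labeling an already detected adventitious event. This is only a sketch: the helper `label_adventitious_event` is hypothetical, and the detector that would supply each event's duration, continuity, and dominant frequency is assumed rather than shown.

```python
def label_adventitious_event(duration_ms, continuous, dominant_hz):
    """Coarse label for one detected adventitious sound event.

    Hypothetical helper whose thresholds mirror the descriptions above:
    wheezes are continuous, exceed 100 ms, and have a dominant frequency
    above 100 Hz; crackles are discontinuous and shorter than 100 ms.
    """
    if continuous and duration_ms > 100 and dominant_hz > 100:
        return "wheeze"
    if not continuous and duration_ms < 100:
        # Crackles: fine if shorter than 10 ms, coarse otherwise.
        return "fine crackle" if duration_ms < 10 else "coarse crackle"
    return "unclassified"
```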

Pneumonia is an inflammatory lung condition affecting the alveoli, with symptoms such as cough, chest pain, fever, and difficulty breathing. It is the leading cause of hospitalization for children in the United States [

43]. Pediatric patients with pneumonia, malignancy, or other pulmonary conditions may exhibit a pleural rub in their breath sounds. Pleural rub is characterized by a coarse grating sound resulting from the pleural surfaces rubbing against each other. It can be heard during both inhalation and exhalation [

44]. Pleural friction rubs are distinctive sounds akin to “the sound made by walking on fresh snow” when the pleural surfaces become rough or inflamed due to conditions like pleural effusion, pleurisy, or serositis [

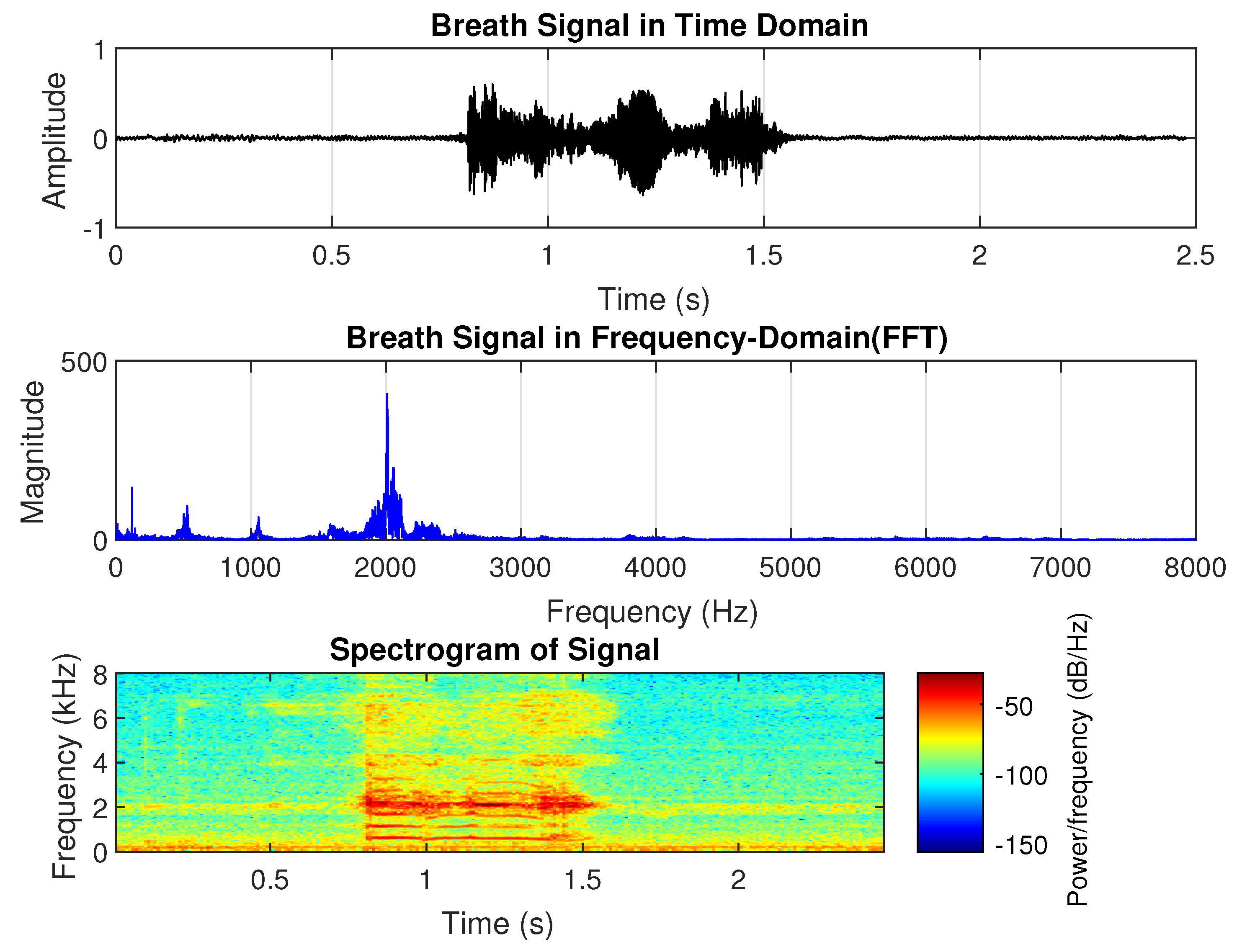

44]. From the sample shown in

Figure 2, the dominant frequency of pneumonia-related breath sounds is approximately 2000 Hz, with harmonics around 4 kHz and 6 kHz. The duration of the sound is shorter than the normal breath sound in the time domain. There is no significant difference in amplitude between inhalation and exhalation. However, the harmonic power decreases between phases, particularly in the higher-order harmonics.

Asthma is a chronic condition that causes inflammation and narrowing of the airways, leading to wheezing, chest tightness, and shortness of breath. It typically begins in childhood, affecting approximately 6% of children, and ranks as the third leading cause of death from respiratory diseases [45]. Wheezing, a high-pitched whistling sound produced during breathing, is commonly associated with asthma. It can occur during exhalation (expiration) or inhalation (inspiration) and may or may not be accompanied by breathing difficulties.

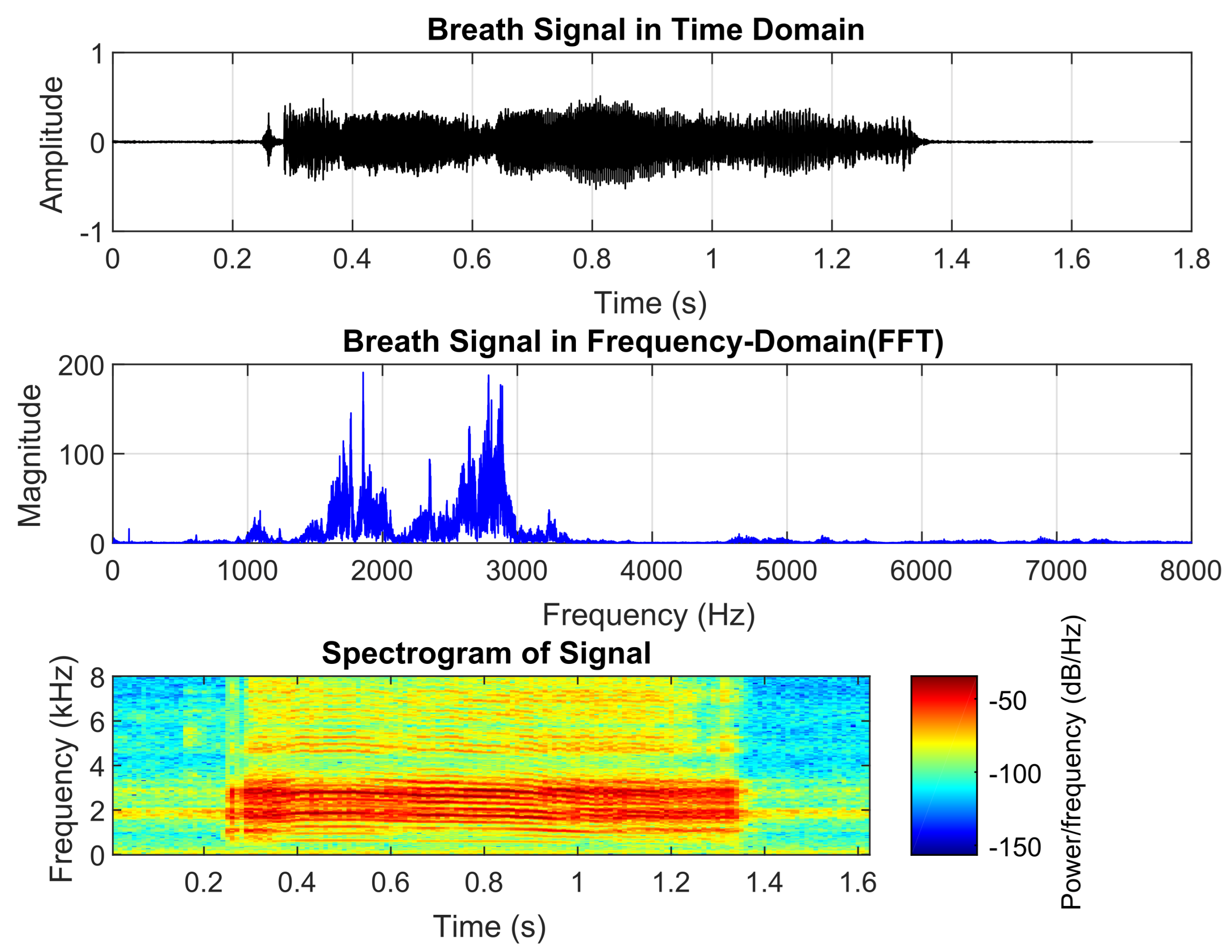

Figure 3 shows asthmatic breath sounds, which have a longer duration than those of pneumonia but are shorter than normal breath sounds. They exhibit two dominant frequencies, around 1800 Hz and 2700 Hz, which lie well above the 100 Hz main peak of normal breath sounds. The spectrogram appears relatively steady. These acoustic characteristics align with healthcare providers’ perceptions of asthmatic wheezing, since they often describe it as a continuous high-pitched sound with a distinct tonal quality.

Croup, a viral infection affecting the upper airways, produces a distinctive barking cough and noticeable breathing difficulties. The associated breath sounds include stridor, a high-pitched squeaky noise during inhalation caused by swollen, narrowed airways; a barking cough, characterized by a loud, harsh, seal-like sound; and hoarseness, resulting from vocal cord inflammation.

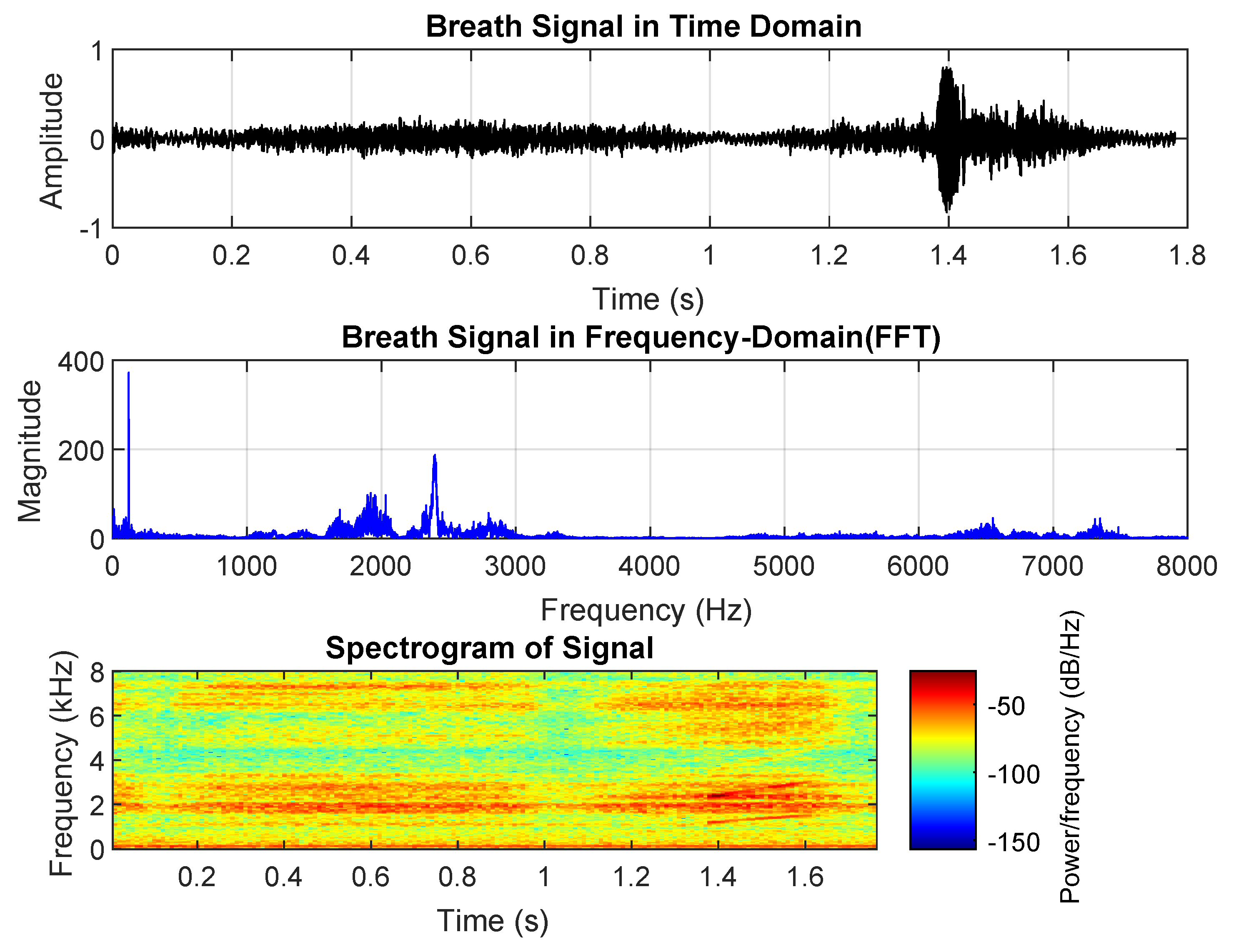

As shown in Figure 4, the inhalation phase has a shorter duration and significantly higher amplitude than exhalation, aligning with the obstruction of the upper airway. Croup-related breath sounds contain more high-frequency components, particularly in the 6000–8000 Hz range, which corresponds to the characteristic high-pitched noise. The overall breath cycle is longer than that of asthma and pneumonia but shorter than normal breath sounds.
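The observation that croup recordings carry relatively more energy between 6000 and 8000 Hz can be quantified as a band-energy ratio, the fraction of total spectral energy inside a given band. The sketch assumes recordings sampled at 16 kHz or higher, since an 8000 Hz upper edge is otherwise above the Nyquist frequency; `band_energy_ratio` is a hypothetical helper name.

```python
import numpy as np

def band_energy_ratio(x, fs, f_lo, f_hi):
    """Fraction of total spectral energy between f_lo and f_hi (Hz)."""
    x = np.asarray(x, dtype=float)
    spectrum = np.abs(np.fft.rfft(x)) ** 2           # energy per frequency bin
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)      # bin center frequencies
    band = (freqs >= f_lo) & (freqs <= f_hi)
    return spectrum[band].sum() / spectrum.sum()
```

For example, `band_energy_ratio(x, 16000, 6000, 8000)` would be close to 1 for a signal dominated by high-pitched components and close to 0 for one dominated by low frequencies.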

5. Classification

This section presents the classification of breath sounds, which is vital for diagnosing and monitoring respiratory conditions. Using the features extracted from breath sound signals, classification methods can distinguish between normal and abnormal breath patterns. We describe the techniques used to identify respiratory diseases such as asthma, pneumonia, and croup.

5.1. K-Nearest Neighbor (KNN)

K-Nearest Neighbor (KNN) is a widely used, reliable classification approach [49]. It is based on the Euclidean distance between a test sample and the training samples. Let $\mathbf{x}_i = (x_{i1}, x_{i2}, \ldots, x_{ip})$, $i = 1, 2, \ldots, n$, be an input sample with p features, where n is the number of input samples and p is the number of features. The Euclidean distance between samples $\mathbf{x}_i$ and $\mathbf{x}_l$ can be calculated as

$$d(\mathbf{x}_i, \mathbf{x}_l) = \sqrt{\sum_{j=1}^{p} \left(x_{ij} - x_{lj}\right)^2}.$$

A cell that covers all points that are nearest to each sample can be expressed as

$$R_i = \left\{ \mathbf{x} : d(\mathbf{x}, \mathbf{x}_i) \le d(\mathbf{x}, \mathbf{x}_l),\ \forall\, l \neq i \right\}.$$

In the context above, $R_i$ represents the region or cell associated with sample $\mathbf{x}_i$, while $\mathbf{x}$ refers to any point within that cell. This partition of the feature space (a Voronoi tessellation) has two fundamental properties [49]: every point inside a cell is at least as close to the sample defining that cell as to any other sample, and the nearest sample to any point is therefore the one whose cell contains that point. Leveraging these properties, the K-Nearest Neighbor (KNN) algorithm classifies a test sample by assigning it the most common category among its k nearest training samples. To avoid ties in two-class voting, k is typically chosen as an odd number. When k equals 1, the method is known as the nearest neighbor classifier.
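The KNN rule can be sketched in a few lines of NumPy. The helper `knn_predict` is hypothetical, and the two-dimensional features and labels in the usage lines are invented for illustration; they stand in for real DLPC or spectral entropy vectors.

```python
from collections import Counter

import numpy as np

def knn_predict(X_train, y_train, x, k=3):
    """Label x with the most common class among its k nearest training samples."""
    X_train = np.asarray(X_train, dtype=float)
    x = np.asarray(x, dtype=float)
    # Euclidean distance d(x, x_i) = sqrt(sum_j (x_j - x_ij)^2) to every training sample.
    dists = np.sqrt(((X_train - x) ** 2).sum(axis=1))
    nearest = np.argsort(dists)[:k]            # indices of the k closest samples
    votes = Counter(y_train[i] for i in nearest)
    return votes.most_common(1)[0][0]

# Toy 2-D features standing in for real breath sound feature vectors.
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]])
y = ["normal"] * 3 + ["asthma"] * 3
print(knn_predict(X, y, [0.2, 0.3], k=3))      # prints normal
```

With more than two classes an odd k no longer rules out ties; `Counter.most_common` then returns the tied class encountered first among the nearest neighbors.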

5.2. Artificial Neural Network (ANN)

An artificial neural network (ANN) is a computational model inspired by the brain’s synaptic connections, designed to process information. It consists of numerous nodes (or neurons), each representing a specific output function known as the activation function. The connections between nodes, called synaptic weights, define the relationship between them. The output of an ANN depends on how the nodes are connected, the connection weights, and the activation function. Key characteristics of ANNs include their ability to learn from examples and generalize results through the activation function. During the training process, the knowledge learned is stored in the synaptic weights of the neurons [50].

For breath sound signals with different causes, it is assumed that they exhibit distinct feature patterns. By learning from various breath sounds, ANNs can classify them into different categories, storing these feature patterns as “codebooks.” When an unknown breath sound is presented, it can be recognized by comparing its features to the stored “codebooks,” thereby classifying the cause it belongs to.

Self-organizing ANNs can evaluate input patterns, organize themselves to learn from the collective input set, and categorize similar patterns into groups [50]. Self-organized learning typically involves frequent adjustments to the network’s synaptic weights based on the input patterns [50]. A closely related, supervised network model is Learning Vector Quantization (LVQ), a feed-forward ANN widely used in pattern recognition and optimization tasks. LVQ can be effectively applied to classify different causes of breath sounds.

This work uses the LVQ model to classify breath sounds. The input to the LVQ neural network comprises 10 elements, reflecting the structure of the breath sound feature data. The classification categories addressed in this work are defined as follows:

Class 1: Pneumonia.

Class 2: Asthma.

Class 3: Croup.

Class 4: Normal.

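For breath sound signals, if an n-order coefficient feature is extracted, the input vector of LVQ has n elements:

$$\mathbf{x} = \left[x_1, x_2, \ldots, x_n\right]^{T}.$$

The weight matrix of the LVQ neural network, when n = 10, is

$$W = \begin{bmatrix} \mathbf{w}_1 \\ \mathbf{w}_2 \\ \mathbf{w}_3 \\ \mathbf{w}_4 \end{bmatrix} = \begin{bmatrix} w_{1,1} & w_{1,2} & \cdots & w_{1,10} \\ w_{2,1} & w_{2,2} & \cdots & w_{2,10} \\ w_{3,1} & w_{3,2} & \cdots & w_{3,10} \\ w_{4,1} & w_{4,2} & \cdots & w_{4,10} \end{bmatrix}.$$

Here, $\mathbf{w}_i$ represents the patterns of Class i, $i = 1, 2, 3, 4$. The subscript of each weight coefficient $w_{i,j}$ corresponds to the connection from the jth input element to the ith output neuron. The LVQ ANN model must be trained with labeled breath sound data to obtain these reference codebooks. The training procedure is carried out through the following steps:

1. Initialize all weight vectors $\mathbf{w}_1$, $\mathbf{w}_2$, $\mathbf{w}_3$, and $\mathbf{w}_4$ by selecting a 10-order breath sound feature from each breath sound class. Set the adaptive learning rate $\alpha(k)$ with $0 < \alpha(k) < 1$. The training iteration index starts at $k = 1$;

2. Let M be the number of training input feature vectors per iteration. For each training input vector $\mathbf{x}_m$, where $m = 1, 2, \ldots, M$, perform steps 3 and 4;

3. Find the weight vector index q that minimizes the Euclidean distance $\lVert \mathbf{x}_m - \mathbf{w}_q \rVert$. Record this index as $\hat{c}_m = q$, which is the training output class number for $\mathbf{x}_m$;

4. Update the weight vector $\mathbf{w}_q$ as follows:

$$\mathbf{w}_q \leftarrow \begin{cases} \mathbf{w}_q + \alpha(k)\left(\mathbf{x}_m - \mathbf{w}_q\right), & \text{if } c_m = q, \\ \mathbf{w}_q - \alpha(k)\left(\mathbf{x}_m - \mathbf{w}_q\right), & \text{if } c_m \neq q, \end{cases}$$

where $c_m$ is the known class number of input $\mathbf{x}_m$; for example, if input $\mathbf{x}_m$ is a breath sound for croup, $c_m = 3$. Only $\mathbf{w}_q$ is updated, and the updating rule depends on whether the real class number $c_m$ is the same as the LVQ output class number q found in step 3;

5. Update the learning rate $\alpha(k)$, let $k \leftarrow k + 1$ for the following iteration, and repeat steps 2 to 4 until $k = K$, where K is the total number of iterations.

After training, $\mathbf{w}_1$, $\mathbf{w}_2$, $\mathbf{w}_3$, and $\mathbf{w}_4$ serve as the reference “codebooks” for their respective classes. With these codebooks established, the trained ANN can be used to classify incoming breath sound signals.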
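The training steps above correspond to the standard LVQ1 update and can be sketched in NumPy as follows. The linearly decaying learning rate $\alpha(k) = \alpha_0 (1 - k/K)$ is an assumption, classes are 0-based (0 to 3 instead of Class 1 to Class 4), and `train_lvq1` and `lvq_classify` are hypothetical names.

```python
import numpy as np

def train_lvq1(X, labels, init_idx, alpha0=0.1, n_iter=50):
    """LVQ1 training following steps 1-5 above.

    X: (M, n) training features; labels: (M,) 0-based class indices;
    init_idx[i]: index of a training sample of class i used to initialize w_i.
    The linearly decaying learning rate is an assumed schedule.
    """
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    W = X[init_idx].copy()                       # step 1: one codebook per class
    for k in range(n_iter):                      # step 5: iterate until k = K
        alpha = alpha0 * (1.0 - k / n_iter)      # assumed adaptive learning rate
        for x, c in zip(X, labels):              # step 2: every training vector
            q = np.argmin(np.linalg.norm(W - x, axis=1))  # step 3: nearest codebook
            if q == c:                           # step 4: pull toward x if correct
                W[q] += alpha * (x - W[q])
            else:                                # ... push away from x if wrong
                W[q] -= alpha * (x - W[q])
    return W

def lvq_classify(W, x):
    """Assign x to the class whose codebook vector is nearest."""
    return int(np.argmin(np.linalg.norm(W - np.asarray(x, dtype=float), axis=1)))
```

Because only the winning codebook moves, each update costs one distance computation per class, which keeps training inexpensive for small feature vectors such as the 10-element inputs described above.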

5.3. Hidden Markov Model

A Markov model represents a system that can exist in one of a finite set of N distinct states, $S_1, S_2, \ldots, S_N$. At each time t, the system transitions from state $q_{t-1}$ to state $q_t$, where $q_t$ denotes the current state. In a time-independent first-order Markov model, the next state is assumed to depend solely on the current state [26]. The transitions between states are governed by state transition probabilities, defined as follows:

$$a_{ij} = P\left(q_t = S_j \mid q_{t-1} = S_i\right), \quad 1 \le i, j \le N,$$

where $S_i$ is state i of the N states and $a_{ij}$ is an element of the state transition matrix $A = \{a_{ij}\}$. The state transition coefficients $a_{ij}$ are non-negative and each row sums to one:

$$a_{ij} \ge 0, \qquad \sum_{j=1}^{N} a_{ij} = 1.$$

The observation symbol probability distribution in state j is $B = \{b_j(k)\}$, where

$$b_j(k) = P\left(v_k \text{ at } t \mid q_t = S_j\right), \quad 1 \le j \le N, \; 1 \le k \le M;$$

here, M is the number of distinct observation symbols per state, and $v_k$ denotes the individual symbols. The initial state distribution is given by $\pi = \{\pi_i\}$, where

$$\pi_i = P\left(q_1 = S_i\right), \quad 1 \le i \le N,$$

is the probability of the initial state $q_1$ being state $S_i$.

A hidden Markov model (HMM) is an extension of a Markov model in which the states themselves are not directly observable. Instead, only the output generated by the system, known as the observation sequence, is available for analysis [46]. Once suitable values are assigned to the number of states N, the number of distinct observation symbols M, the state transition matrix A, the observation probability distribution B, and the initial state distribution π, the HMM can function as a probabilistic generator of observation sequences

$$O = O_1 O_2 \cdots O_T,$$

where each $O_t$ is a symbol from $V = \{v_1, v_2, \ldots, v_M\}$ and T is the number of observations in the sequence, using the following procedure:

1. Choose an initial state $q_1 = S_i$ according to the initial state distribution π, which is predefined in this research.

2. Set $t = 1$.

3. Select the observation $O_t = v_k$ according to the symbol probability distribution $b_i(k)$ for the current state $S_i$.

4. Transition to the next state $q_{t+1} = S_j$ according to the state transition probability distribution $a_{ij}$ from state $S_i$.

5. Set $t = t + 1$; return to step 3 if $t \le T$. Otherwise, end the procedure.
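The generation procedure above can be sketched directly in NumPy. States and symbols are 0-based indices here rather than the 1-based $S_i$ and $v_k$ of the text, and `hmm_generate` is a hypothetical name. The sketch only generates sequences; classifying breath sounds with HMMs additionally requires likelihood evaluation (for example, the forward algorithm) and parameter training, which are not shown.

```python
import numpy as np

def hmm_generate(A, B, pi, T, rng=None):
    """Generate T observations from an HMM (A, B, pi) following steps 1-5.

    A: (N, N) transition matrix, B: (N, M) emission matrix,
    pi: (N,) initial distribution. Returns (states, observations)
    as 0-based integer arrays of length T.
    """
    rng = np.random.default_rng() if rng is None else rng
    A, B, pi = (np.asarray(m, dtype=float) for m in (A, B, pi))
    states = np.empty(T, dtype=int)
    obs = np.empty(T, dtype=int)
    state = rng.choice(len(pi), p=pi)                  # step 1: draw q_1 from pi
    for t in range(T):                                 # steps 2 and 5: t = 1..T
        states[t] = state
        obs[t] = rng.choice(B.shape[1], p=B[state])    # step 3: emit O_t via b_j(k)
        state = rng.choice(A.shape[1], p=A[state])     # step 4: move via a_ij
    return states, obs
```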

5.4. Random Forest Classifier

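The random forest (RF) classifier aggregates predictions from B decision trees, each trained on a bootstrap sample of the dataset. For a breath sound input $\mathbf{x}$ (e.g., DLPC and spectral entropy features), each tree $T_b$ predicts a class:

$$\hat{y}_b(\mathbf{x}) = \arg\max_{c} \sum_{i \in L_b(\mathbf{x})} I\left(y_i = c\right), \quad b = 1, \ldots, B,$$

where $L_b(\mathbf{x})$ is the set of training samples of tree $T_b$ that fall in the same leaf as $\mathbf{x}$, $c \in \{1, 2, 3, 4\}$, and I is the indicator function.

The final RF prediction is determined by majority voting across B trees:

$$\hat{y}(\mathbf{x}) = \arg\max_{c} \sum_{b=1}^{B} I\left(\hat{y}_b(\mathbf{x}) = c\right).$$

Feature randomness is introduced by selecting a random subset of $m \approx \sqrt{p}$ features at each split, where p is the total number of features. The out-of-bag (OOB) error, estimated from the samples not used in training each tree, is

$$\varepsilon_{\mathrm{OOB}} = \frac{1}{n} \sum_{i=1}^{n} I\left(y_i \neq \hat{y}_{\mathrm{OOB}}(\mathbf{x}_i)\right),$$

where $\hat{y}_{\mathrm{OOB}}(\mathbf{x}_i)$ is the majority vote of the trees whose bootstrap samples do not contain $\mathbf{x}_i$.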
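A random forest with bootstrap sampling, per-split feature subsampling, and an OOB error estimate can be sketched with scikit-learn, assuming it is installed. The four-class data below are synthetic stand-ins, not the study's recordings. One difference from the equation above: scikit-learn predicts the class with the highest mean probability across trees (soft voting) rather than taking a hard majority vote, although the two usually agree.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data: 4 classes, 12 features each (e.g. DLPC + entropy).
rng = np.random.default_rng(0)
centers = 6.0 * np.eye(4, 12)
X = np.vstack([c + rng.normal(size=(40, 12)) for c in centers])
y = np.repeat(["pneumonia", "asthma", "croup", "normal"], 40)

rf = RandomForestClassifier(
    n_estimators=200,       # B trees
    max_features="sqrt",    # about sqrt(p) candidate features per split
    bootstrap=True,         # each tree is fitted on a bootstrap sample
    oob_score=True,         # score each sample using trees that did not see it
    random_state=0,
)
rf.fit(X, y)
oob_error = 1.0 - rf.oob_score_   # OOB accuracy converted to OOB error
print(f"OOB error: {oob_error:.3f}")
```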

5.5. Logistic Regression Classifier

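Logistic regression (LR) models breath sound classification using spectral entropy and DLPC features. For an input $\mathbf{x} \in \mathbb{R}^{p}$, the score for each class $c \in \{1, \ldots, C\}$ is

$$z_c = \mathbf{w}_c^{T}\mathbf{x} + b_c.$$

The probability of class c is computed via the softmax function:

$$P(y = c \mid \mathbf{x}) = \frac{\exp(z_c)}{\sum_{k=1}^{C} \exp(z_k)}.$$

The model minimizes the cross-entropy loss over the n training samples:

$$\mathcal{L} = -\frac{1}{n} \sum_{i=1}^{n} \sum_{c=1}^{C} y_{ic} \log P(y_i = c \mid \mathbf{x}_i),$$

where $y_{ic} = 1$ if sample i belongs to class c and 0 otherwise. The weights are updated via gradient descent with learning rate η:

$$\mathbf{w}_c \leftarrow \mathbf{w}_c - \eta \frac{\partial \mathcal{L}}{\partial \mathbf{w}_c}, \qquad \frac{\partial \mathcal{L}}{\partial \mathbf{w}_c} = \frac{1}{n} \sum_{i=1}^{n} \left(P(y_i = c \mid \mathbf{x}_i) - y_{ic}\right)\mathbf{x}_i.$$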
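The softmax model, cross-entropy loss, and gradient update above can be sketched from scratch in NumPy. This is an illustration under assumed hyperparameters (learning rate `lr` and `n_epochs`), not the implementation used in the experiments; classes are 0-based and the helper names are hypothetical.

```python
import numpy as np

def softmax(Z):
    """Row-wise softmax; subtracting the row max avoids overflow in exp()."""
    Z = Z - Z.max(axis=1, keepdims=True)
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)

def train_softmax_lr(X, y, n_classes, lr=0.1, n_epochs=500):
    """Multinomial logistic regression fitted by batch gradient descent.

    X: (n, p) features; y: (n,) integer labels in 0..n_classes-1.
    Returns weights W of shape (p, C) and biases b of shape (C,).
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    Y = np.eye(n_classes)[y]                # one-hot targets y_ic
    W = np.zeros((p, n_classes))
    b = np.zeros(n_classes)
    for _ in range(n_epochs):
        P = softmax(X @ W + b)              # P(y = c | x_i) for every sample
        grad = (P - Y) / n                  # dL/dz_ic for the mean cross-entropy
        W -= lr * X.T @ grad                # w_c <- w_c - eta * dL/dw_c
        b -= lr * grad.sum(axis=0)
    return W, b

def cross_entropy(X, y, W, b):
    """Mean cross-entropy loss L for labels y under parameters (W, b)."""
    P = softmax(np.asarray(X, dtype=float) @ W + b)
    return -np.mean(np.log(P[np.arange(len(y)), y]))
```

Because the scores $z_c$ are linear in $\mathbf{x}$, the decision boundaries between classes are hyperplanes, which limits how well this model can separate classes whose feature distributions are not linearly separable.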

7. Discussion

Recorded signals contain undesired components, such as environmental ambient noise, heartbeat sounds, and speech from patients or physicians. A band-pass filter with a 50 to 2500 Hz passband, corresponding to the typical bandwidth of breath sounds, is commonly applied during data collection and preprocessing to suppress these unwanted signals. However, this approach has limited effectiveness against interfering signals that overlap in frequency with breath sounds, including heartbeats, broadband noise, and human speech. Such interference can degrade signal quality and consistency.
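A band-pass stage of the kind described above might be sketched as follows, assuming SciPy is available. The Butterworth design, the filter order, and zero-phase filtering with `sosfiltfilt` are illustrative choices rather than the filter used in this study, and `bandpass` is a hypothetical helper. With the default 50 to 2500 Hz passband, components such as the 6000 to 8000 Hz croup energy described in Section 2 would be strongly attenuated, so the cut-offs are exposed as parameters.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass(x, fs, f_lo=50.0, f_hi=2500.0, order=4):
    """Zero-phase Butterworth band-pass filter (default passband 50-2500 Hz)."""
    # Second-order sections are numerically more robust than (b, a) coefficients.
    sos = butter(order, [f_lo, f_hi], btype="bandpass", fs=fs, output="sos")
    # Forward-backward filtering cancels phase distortion (and squares the gain).
    return sosfiltfilt(sos, np.asarray(x, dtype=float))
```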

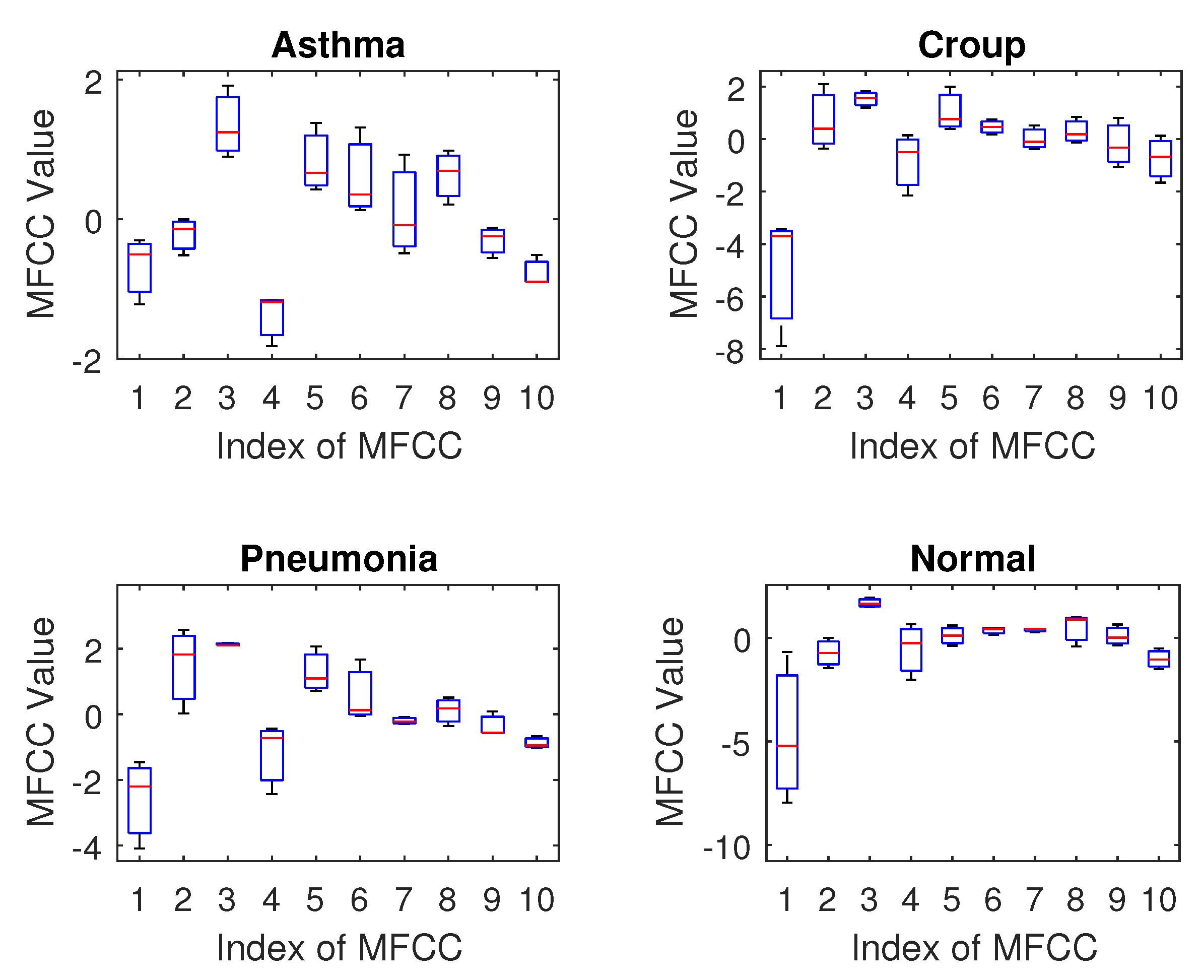

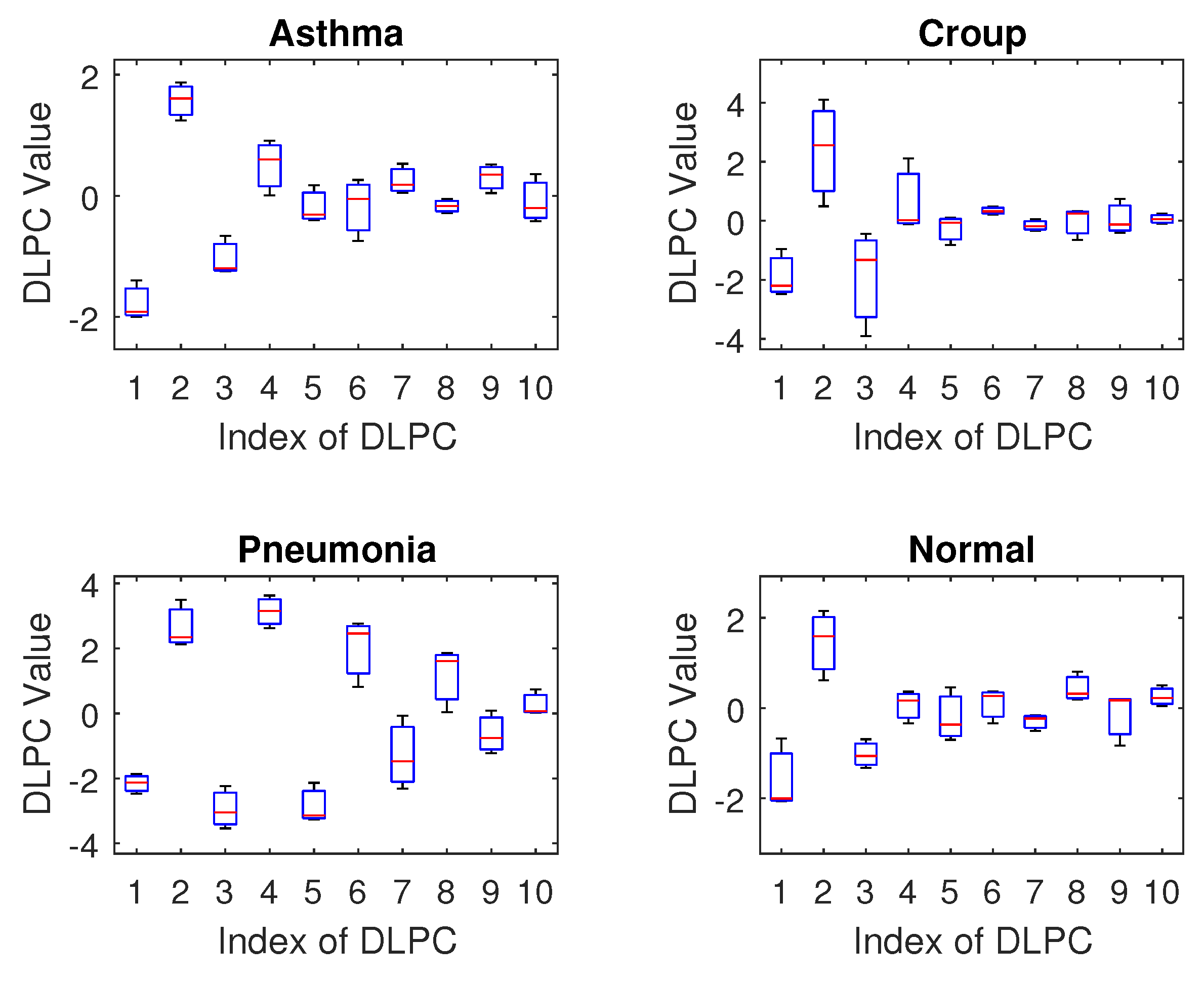

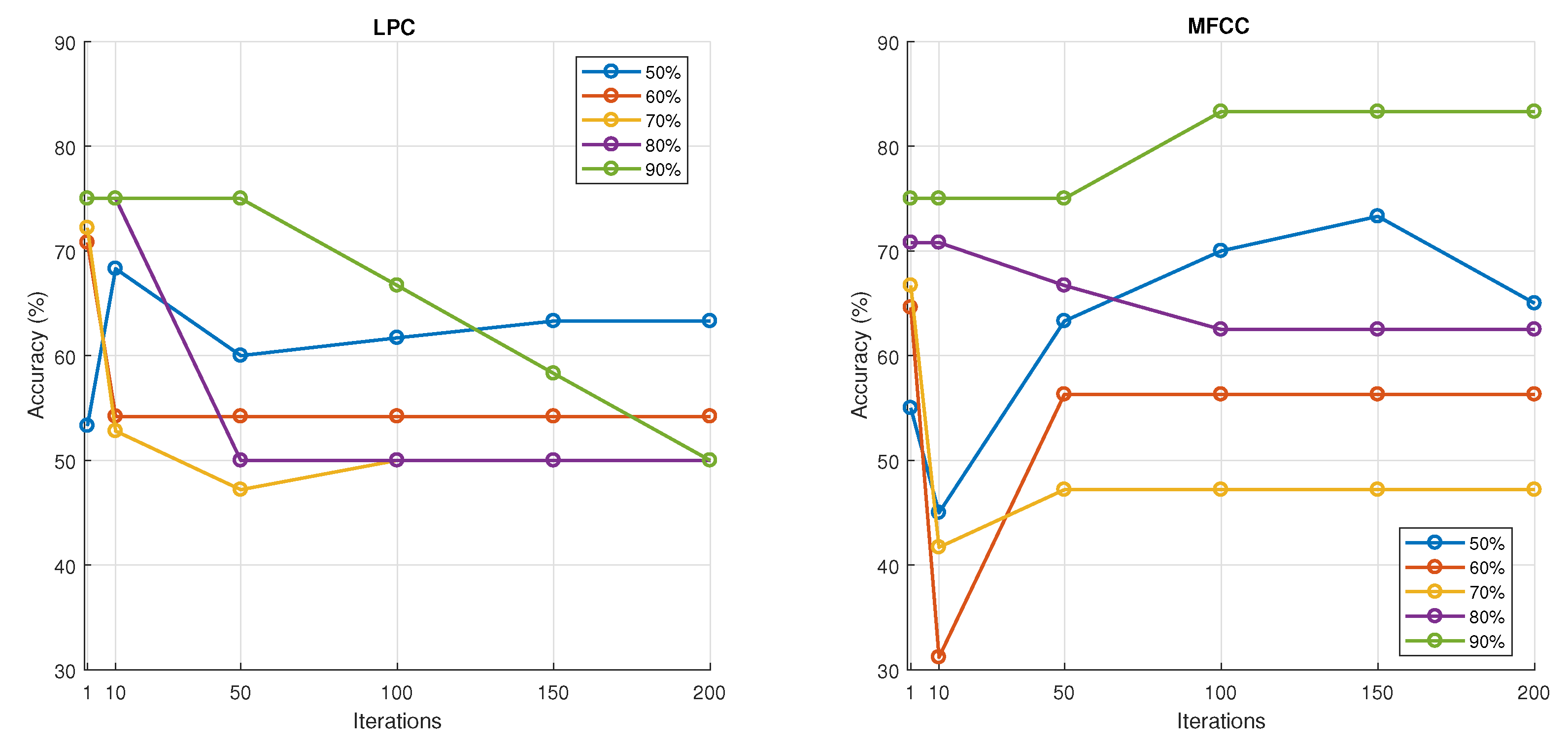

Regarding feature selection, our results indicate that the MFCC (Mel–Frequency Cepstral Coefficient) is a promising feature for breath sound classification. The DLPC (Dynamic Linear Prediction Coefficient) also demonstrates reasonable performance with reduced computational complexity, making it suitable for training deep learning models on limited datasets. In contrast, the LPC and LPCC were found to be less effective as they showed high similarity across different classes, suggesting they are not sensitive enough to distinguish the unique acoustic patterns of various breath sounds.

Spectral entropy emerged as a strong candidate feature, particularly for detecting low-probability events such as respiratory diseases. Its ability to capture both the frequency characteristics and the underlying probability distribution of the signal makes it well-suited for this task. However, given the limited dataset size, the observed high accuracy may be a result of overfitting. Further investigation with larger datasets is needed to confirm its generalizability.

Among the classifiers tested, artificial neural networks (ANNs) and random forest demonstrated the most promising results, achieving high accuracy and generalization within the constraints of the current dataset. K-Nearest Neighbor (KNN) also showed reasonable performance and can serve as a strong baseline for small- to medium-sized datasets.

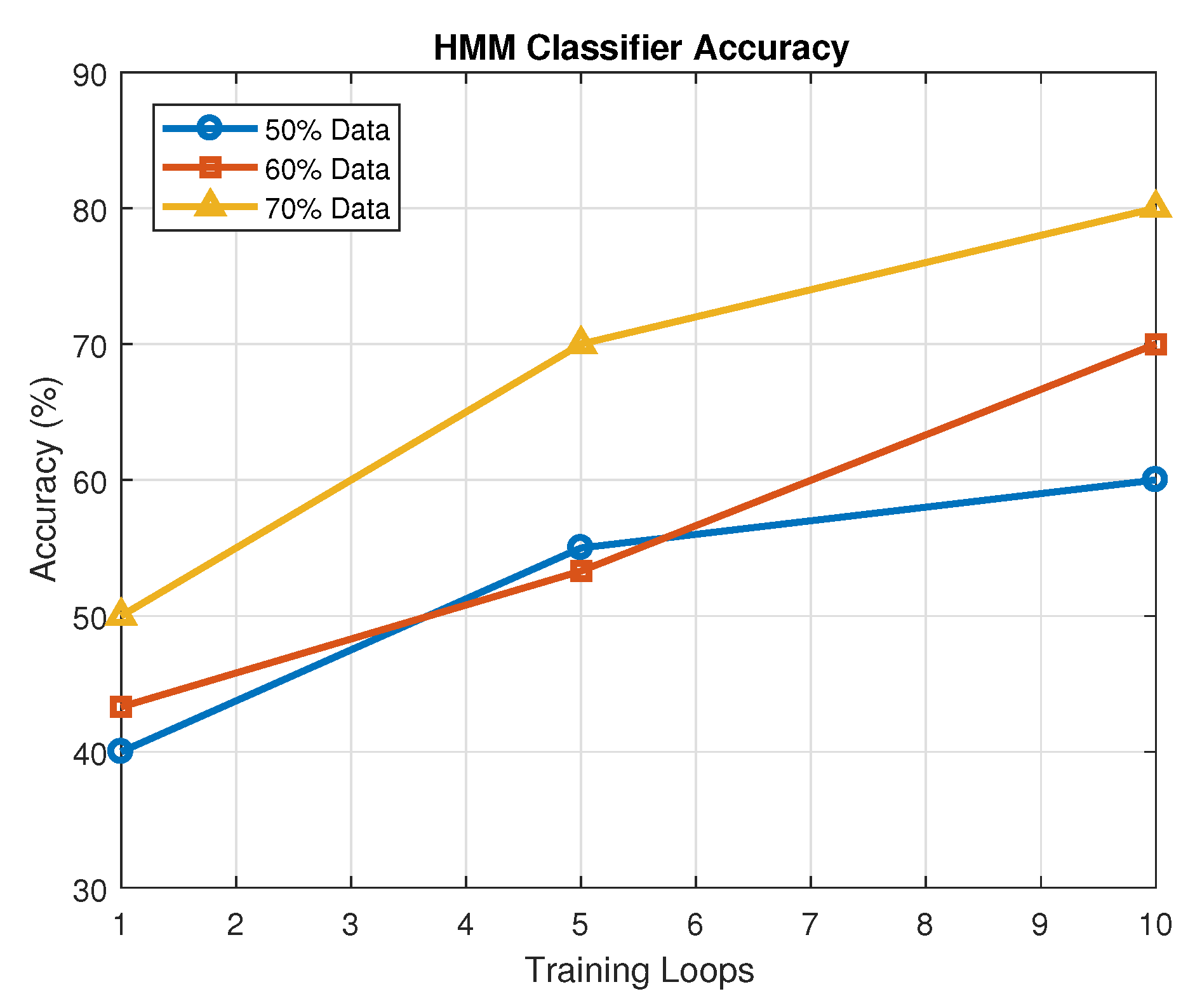

In contrast, logistic regression did not perform well in our experiments, suggesting it is not well-suited for capturing the complex nonlinear patterns inherent in breath sound data. Hidden Markov models (HMMs) showed performance comparable to ANNs, indicating their potential in modeling temporal dynamics, although further tuning and data expansion may enhance their effectiveness.

Looking ahead, more advanced architectures, such as convolutional neural networks (CNNs) or recurrent neural networks (RNNs), could be explored, especially as more data becomes available. These models may provide improved feature representation and classification accuracy by leveraging spatial and temporal dependencies in the audio signals.

To ensure the robustness and generalizability of the proposed system, it is essential to expand the dataset with a larger number of diverse samples. This will facilitate more comprehensive statistical analysis, improve classifier training, and support the development of more sophisticated models. The proposed breath sound analysis system demonstrates significant potential for application in real-world healthcare settings. In particular, it can serve as a valuable screening tool in primary care environments, assisting clinicians in early identification of pediatric respiratory conditions such as asthma, croup, and pneumonia. Additionally, due to its lightweight computational requirements and interpretability, the system is well-suited for home monitoring, especially in households with young children who are prone to respiratory infections. With minimal training, non-specialist caregivers—including parents and community health workers—could use this tool to capture breath sounds and conduct preliminary assessments, enabling earlier medical intervention and reducing the burden on emergency services. This approach aligns with the growing trend of integrating AI-powered diagnostics into accessible user-friendly platforms to promote preventive care and reduce gaps in healthcare access.

8. Conclusions

This paper introduces innovative approaches for detecting and recognizing breath sounds in young children, designed to be subject-independent for general application and robust against noisy environments. By extracting audio features such as Dynamic Linear Prediction Coefficients (DLPCs) and spectral entropy in both the time and frequency domains, and aligning these with spectrogram analysis informed by health professionals, we developed a comprehensive framework for breath sound classification. Clinical data from 120 samples across infants, toddlers, and preschoolers (aged 2 months to 6 years) were used to design and validate these methods, and the experiments demonstrated high accuracy: K-Nearest Neighbor (KNN) and random forest (RF) achieved 97% accuracy. These results highlight the efficacy of DLPCs and spectral entropy in capturing the non-stationary, chaotic patterns of pediatric breath sounds, enabling precise differentiation of conditions such as asthma, croup, pneumonia, and normal breathing. Future work will expand the classification to include additional respiratory classes (e.g., bronchitis and wheezing subtypes), increase the dataset size for greater generalizability, and enhance performance through advanced feature engineering or classifier optimization. The proposed system offers a practical tool for parents and novice caregivers, facilitating early monitoring and screening of respiratory health to mitigate risks of impairment in young children and reduce healthcare costs. Looking forward, this approach lays a foundation for scalable human–machine cooperative systems, with potential enhancements through expanded datasets and integration with real-time diagnostic platforms. The potential of the system as a screening instrument in primary care has thus been investigated; the system is intended for use in the home under remote guidance, and it could be extended to other childhood respiratory diseases.