A Gamified AI-Driven System for Depression Monitoring and Management

Abstract

1. Introduction

- RQ1: Can integrating gamified micro-interventions, adaptive ecological surveys, and environmental sensing enhance the early detection of depressive symptom patterns?

- RQ2: How can an AI-enabled digital mental health system dynamically personalize user experiences based on multimodal behavioral and contextual inputs?

- RQ3: How can such a system support end-user self-regulation and researcher-led experimentation within the same operational framework?

- System design: A novel, AI-driven architecture integrating gamified micro-interventions, adaptive surveys, and environmental data for depression monitoring.

- Simulation and evaluation framework: A synthetic data pipeline for safe, large-scale testing and validation of mental health algorithms.

- Dual functionality: A unified platform serving user self-regulation and researcher experimentation, simultaneously advancing clinical and scientific goals.

2. Background and Related Works

2.1. Gamification in Mental Health Tools

2.2. Adaptive Daily Surveys for Emotional Monitoring

2.3. AI for Personalized Depression Support

2.4. Environmental Context: Temperature and Mood

2.5. Motivation and Research Direction

3. Materials and Methods: A Gamified AI-Driven System

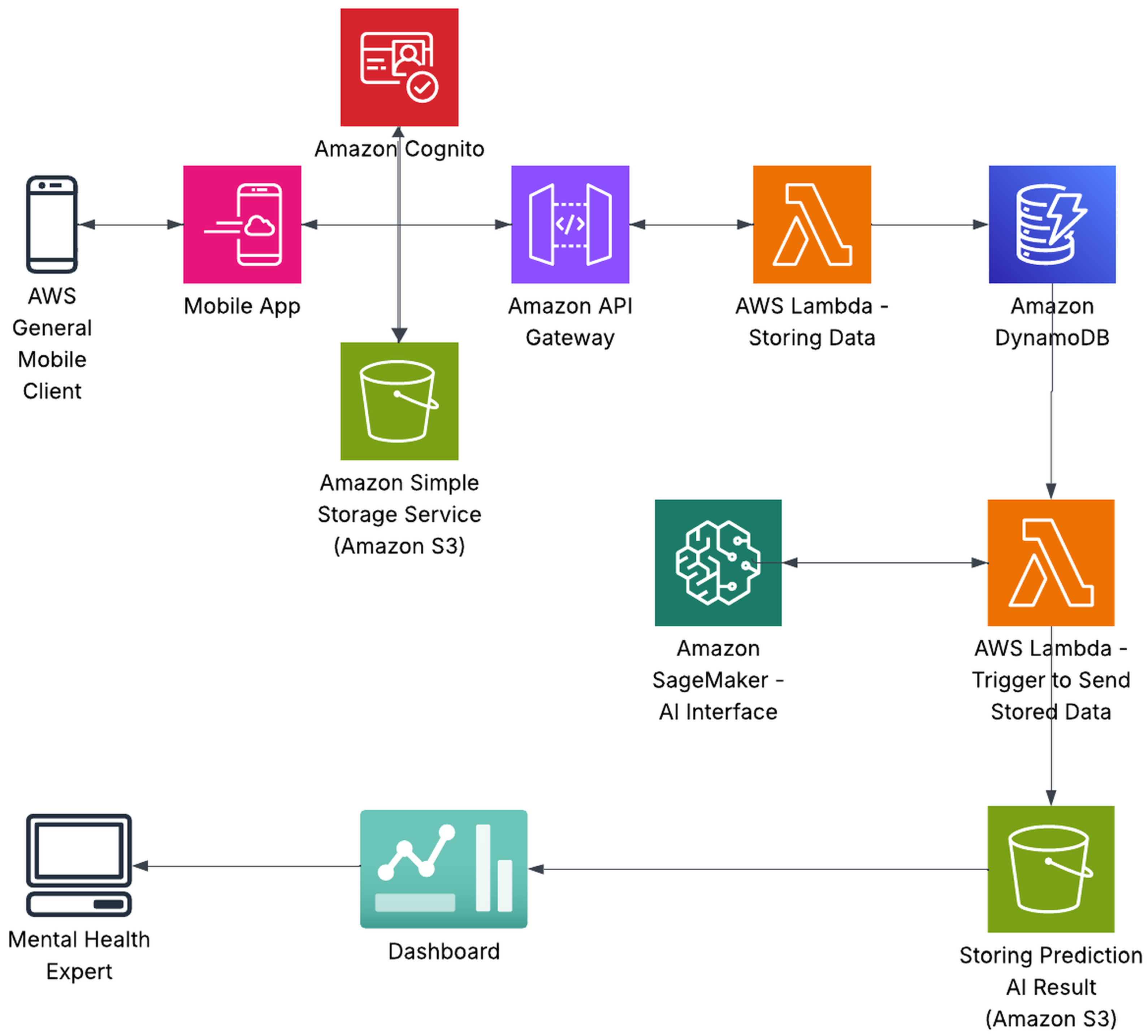

3.1. System Architecture

- Multi-platform application: A cross-compatible user interface supporting Android, iOS, and web platforms to ensure broad accessibility.

- Cloud-based infrastructure: A secure and scalable back end hosted on AWS, utilizing DynamoDB (a NoSQL database) for efficient data storage and management. This infrastructure supports API services, user authentication, and real-time data processing.

- AI analytics and simulation core: This layer leverages AWS SageMaker to implement machine learning models that analyze user behavioral patterns, estimate risk levels related to depressive symptom severity and relapse, and simulate synthetic scenarios for testing intervention strategies. The risk estimation component assesses the likelihood of adverse mental health episodes, enabling timely personalized interventions.

3.1.1. Multi-Platform Application

3.1.2. Cloud-Based Infrastructure

- API gateway: Manages secure requests between the front end and the back end.

- Lambda functions: Process incoming data and trigger downstream workflows.

- DynamoDB: Stores structured user records, including mood logs and environmental readings.

- Cognito: Handles authentication and personalized user access.

- SageMaker: Facilitates model training, simulation, and batch inference.
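The request path described above, from the API gateway through a Lambda function into DynamoDB, can be sketched as a minimal Python Lambda handler. This is an illustration under stated assumptions, not the authors' code: it assumes an API Gateway proxy integration that delivers the JSON payload as a string in `event["body"]`, and the field names (`user_id`, `mood`, `temperature`, `timestamp`) and the `make_handler` factory are hypothetical. The table object is injected so the logic can be exercised without AWS; the only table method used is `put_item(Item=...)`, which boto3's DynamoDB `Table` resource provides.

```python
import json
import time
from decimal import Decimal

# Hypothetical minimal schema for a mood-log record (not the authors' schema).
REQUIRED_FIELDS = ("user_id", "mood", "temperature")


def to_dynamo(value):
    """Recursively convert floats to Decimal, since boto3's DynamoDB layer rejects floats."""
    if isinstance(value, float):
        return Decimal(str(value))
    if isinstance(value, dict):
        return {key: to_dynamo(val) for key, val in value.items()}
    if isinstance(value, list):
        return [to_dynamo(val) for val in value]
    return value


def build_item(payload, now=None):
    """Validate a decoded request payload and turn it into a DynamoDB item."""
    if not isinstance(payload, dict):
        raise ValueError("payload must be a JSON object")
    missing = [name for name in REQUIRED_FIELDS if name not in payload]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    item = dict(payload)
    item["timestamp"] = int(time.time() if now is None else now)
    return to_dynamo(item)


def make_handler(table):
    """Return a Lambda entry point bound to a table-like object exposing put_item()."""

    def handler(event, context):
        try:
            payload = json.loads(event.get("body") or "{}")
            item = build_item(payload)
        except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
            return {"statusCode": 400, "body": json.dumps({"error": str(exc)})}
        table.put_item(Item=item)
        return {"statusCode": 201, "body": json.dumps({"stored": str(item["user_id"])})}

    return handler
```

In a deployment, the handler would be bound to a real table, for example `make_handler(boto3.resource("dynamodb").Table("MoodLogs"))`, where `MoodLogs` is a placeholder name. Authentication through Cognito would typically be enforced before the request reaches this function (for instance by an API Gateway authorizer), which is why the sketch does not check credentials itself.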

3.1.3. AI Analytics and Simulation Core

3.2. System Components

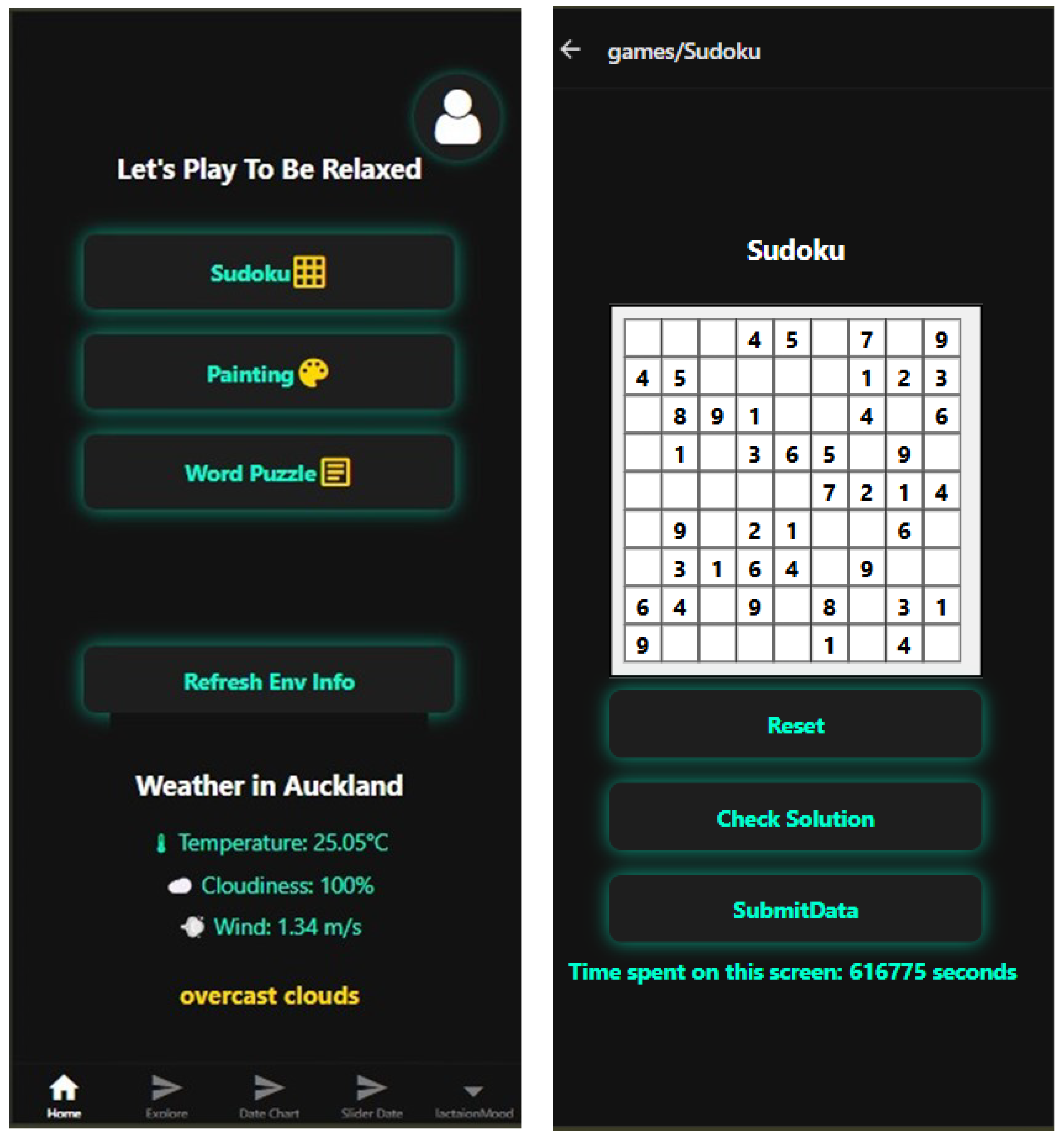

- Therapeutic micro-games: The system incorporates lightweight micro-games designed to assess and support cognitive and emotional functioning. These games target attention span, decision-making speed, working memory, and error sensitivity, domains that are frequently affected by depression and stress-related disorders. Prior research has demonstrated that game-based tasks can capture fine-grained behavioral markers and serve as engaging interventions to support emotion regulation and cognitive flexibility [33,34]. Short, interactive games reduce friction and increase adherence, making them suitable for frequent self-monitoring and low-intensity therapeutic engagement. Figure 3 illustrates an example game interface.

- Adaptive daily surveys: The platform employs short daily self-report surveys grounded in ecological momentary assessment (EMA) principles, focusing on core themes such as mood, stress levels, energy, sleep quality, social interaction, and cognitive clarity. Survey questions are context-aware and adapt dynamically based on user history and behavioral data, ensuring relevance while reducing repetition and survey fatigue. This adaptive approach enables high-frequency yet low-burden mental health monitoring, enhancing the granularity and accuracy of symptom tracking [35].

- Environmental context awareness: Real-time environmental data, including temperature, humidity, and light exposure, are collected to provide contextual layers for emotional assessment. This data is correlated with user-reported mood states to explore environmental influences on mental well-being.

- AI-driven analytics: The platform leverages machine learning models to integrate data from micro-game performance, adaptive surveys, and environmental sensors. These models first identify hidden behavioral patterns and correlations indicative of emerging depressive trends, such as changes in cognitive speed, mood variability, and environmental influences. By learning from historical data, the system can predict potential mental health risks and deliver real-time, personalized feedback aligned with each user’s evolving profile.
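One way to realize the adaptive survey behavior described above is a priority rule that favors themes not asked recently and boosts themes whose latest rating is concerning. The sketch below is hypothetical: the six themes come from the text, but the staleness-plus-bonus rule and the constants `CONCERN_THRESHOLD` and `CONCERN_BONUS` are illustrative assumptions, not the platform's published logic.

```python
# Core survey themes named in the text.
THEMES = ("mood", "stress", "energy", "sleep", "social", "cognition")

# Hypothetical tuning constants (not from the paper).
CONCERN_THRESHOLD = 7  # latest rating (0-10, higher = worse) that flags a theme
CONCERN_BONUS = 3      # extra priority given to a flagged theme


def days_since_asked(theme, history):
    """Days since `theme` was last asked; history holds one list of themes per day, oldest first."""
    for age, asked in enumerate(reversed(history), start=1):
        if theme in asked:
            return age
    return len(history) + 1  # never asked: treat it as the stalest option


def select_questions(history, latest_ratings, k=3):
    """Pick k themes for today, favoring stale themes and themes with a worrying latest rating."""

    def priority(theme):
        bonus = CONCERN_BONUS if latest_ratings.get(theme, 0) >= CONCERN_THRESHOLD else 0
        return days_since_asked(theme, history) + bonus

    # Highest priority first; ties are broken by the fixed THEMES order.
    ranked = sorted(THEMES, key=lambda t: (-priority(t), THEMES.index(t)))
    return ranked[:k]


history = [["mood", "stress", "energy"], ["sleep", "social", "cognition"]]
print(select_questions(history, {}))            # ['mood', 'stress', 'energy']
print(select_questions(history, {"sleep": 8}))  # ['sleep', 'mood', 'stress']
```

Rotating by staleness keeps daily burden fixed at `k` questions while still covering every theme over a few days; the concern bonus lets a poor sleep rating pull the sleep questions forward the next day.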

3.3. Embedded Research Capabilities

3.4. Technical Implementation Details

- scikit-learn 1.4 for random forest and logistic regression models.

- TensorFlow 2.15 for the feedforward neural network model.

- NumPy 1.26, Pandas 2.2, and Matplotlib 3.8 for data processing and visualization.

- eli5 for permutation-based feature importance analysis.
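Permutation feature importance, which the authors computed with eli5, measures how much a fitted model's score drops when one feature column is shuffled, breaking that feature's link to the label. The dependency-free sketch below shows the technique itself; it is not eli5's implementation (eli5 exposes it as `eli5.sklearn.PermutationImportance`, and scikit-learn offers `sklearn.inspection.permutation_importance`). The choice of accuracy as the score, `n_repeats=10`, and the `predict` callable interface are assumptions for illustration.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def permutation_importance(predict, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled independently.

    predict: callable mapping a list of rows to a list of labels (a fitted model).
    X: list of feature rows (lists of numbers); y: true labels.
    """
    rng = random.Random(seed)
    baseline = accuracy(y, predict(X))
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the label
            X_perm = [row[:j] + [value] + row[j + 1:] for row, value in zip(X, column)]
            drops.append(baseline - accuracy(y, predict(X_perm)))
        importances.append(sum(drops) / n_repeats)
    return importances
```

A feature the model ignores scores exactly zero, because shuffling it leaves every prediction unchanged; a feature the model relies on scores above zero. Importances are computed on held-out data in practice, so they reflect generalization rather than memorization.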

4. Results: Simulation-Based Evaluation of Depression Detection Models

4.1. Evaluation Objectives

- The capacity of AI models to classify depressive states from multimodal data.

- The feasibility of adaptive survey logic under evolving user states.

- The interpretability and robustness of learned models via visual analytics and feature attribution.

4.2. Data Simulation Framework

Synthetic Dataset Structure

- Behavioral features: game accuracy, average response latency, error rate, and interaction frequency, representing engagement and performance under cognitive load.

- Cognitive features: baseline cognitive score, working memory load, and attention span indicators were sampled from normal distributions informed by psychological literature.

- Emotional states: self-reported mood, stress level, and energy level, generated dynamically across sessions to simulate fluctuating affective profiles.

- Contextual features: temperature, humidity, and time of day, correlated probabilistically with mood and behavioral responses based on findings from environmental psychology studies.

- Temporal dynamics: each synthetic user had 10–30 sessions over time to introduce variability in longitudinal patterns (such as fatigue, recovery trends, or mood swings).
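The dataset structure above can be sketched as a small per-user simulator. Every distribution parameter and coupling coefficient below (seasonal temperature curve, mood random walk, heat penalty on mood, stress and energy formulas, Poisson rate of 8) is a hypothetical choice made for illustration, not a value reported by the authors. Error rate is assumed to be `1 - accuracy`, and working memory load and attention span are omitted for brevity.

```python
import math
import random

TIMES_OF_DAY = ("morning", "afternoon", "evening")


def clamp(value, low, high):
    return max(low, min(high, value))


def poisson(rng, lam):
    """Knuth's multiplication method; adequate for the small rates used here."""
    threshold, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_user(user_id, rng):
    """One synthetic user with 10-30 sessions; every coefficient is illustrative."""
    baseline_cognition = rng.gauss(100, 15)
    mood = rng.gauss(5.0, 1.5)  # 0-10 scale, evolves as a random walk
    start_day = rng.randrange(365)
    sessions = []
    for index in range(1, rng.randint(10, 30) + 1):
        day = start_day + index
        # Seasonal temperature (deg C) plus daily noise.
        temperature = 15 + 8 * math.sin(2 * math.pi * day / 365) + rng.gauss(0, 2)
        humidity = clamp(rng.gauss(60, 10), 0, 100)
        # Mood drifts between sessions and is pulled down slightly on hot days.
        mood = clamp(mood + rng.gauss(0, 0.7) - 0.05 * max(0.0, temperature - 25), 0, 10)
        accuracy = clamp(rng.gauss(0.75 + 0.02 * (mood - 5), 0.08), 0, 1)
        # Ordinal stress (1-5) depends on temperature and performance.
        stress = int(clamp(round(3 + 0.1 * (temperature - 20) - 4 * (accuracy - 0.75)
                                 + rng.gauss(0, 0.7)), 1, 5))
        sessions.append({
            "user_id": user_id,
            "session_index": index,
            "game_accuracy": accuracy,
            "error_rate": 1 - accuracy,
            "response_latency_ms": max(150.0, rng.gauss(600 - 15 * (mood - 5), 80)),
            "interaction_frequency": poisson(rng, 8),
            "baseline_cognitive_score": baseline_cognition,
            "mood": mood,
            "stress": stress,
            # Ordinal energy (1-5) moves inversely with stress.
            "energy": int(clamp(round(6 - stress + rng.gauss(0, 0.7)), 1, 5)),
            "temperature": temperature,
            "humidity": humidity,
            "time_of_day": rng.choice(TIMES_OF_DAY),
        })
    return sessions


def simulate_dataset(n_users=100, seed=42):
    """Flat list of session records for n_users synthetic users, reproducible via seed."""
    rng = random.Random(seed)
    return [s for uid in range(n_users) for s in simulate_user(uid, rng)]
```

Carrying `mood` across sessions as a random walk is what gives each user longitudinal structure (runs of low or high mood) rather than independent draws per session.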

4.3. Machine Learning Pipeline

4.3.1. Preprocessing

4.3.2. Model Selection and Training

- Random forest: An ensemble of 100 decision trees using Gini impurity for feature splitting.

- Logistic regression: A regularized linear model optimized with the limited-memory BFGS (L-BFGS) solver.

- Neural network: A feedforward network with two hidden layers (64 and 32 neurons) using ReLU activation and trained with the Adam optimizer.
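The three configurations above can be approximated in a single scikit-learn sketch evaluated with stratified cross-validation. The authors built their network in TensorFlow 2.15; here scikit-learn's `MLPClassifier` is swapped in with the same layer sizes (64 and 32), ReLU activation, and Adam optimizer so the sketch depends on one library. The standardization step, `max_iter` values, fold count, and seeds are assumptions, not details from the paper.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import StratifiedKFold, cross_validate
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler


def build_models(seed=0):
    """Classifiers configured as described in the model-selection list."""
    return {
        # 100 trees, Gini impurity as the split criterion.
        "random_forest": RandomForestClassifier(
            n_estimators=100, criterion="gini", random_state=seed),
        # L2-regularized (default penalty) logistic regression with the L-BFGS solver.
        "logistic_regression": make_pipeline(
            StandardScaler(), LogisticRegression(solver="lbfgs", max_iter=1000)),
        # Stand-in for the TensorFlow network: hidden layers of 64 and 32 ReLU units, Adam.
        "neural_network": make_pipeline(
            StandardScaler(),
            MLPClassifier(hidden_layer_sizes=(64, 32), activation="relu",
                          solver="adam", max_iter=500, random_state=seed)),
    }


def evaluate(X, y, seed=0, n_splits=5):
    """Mean cross-validated metrics per model, using stratified folds."""
    cv = StratifiedKFold(n_splits=n_splits, shuffle=True, random_state=seed)
    scoring = ["accuracy", "precision", "recall", "f1", "roc_auc"]
    results = {}
    for name, model in build_models(seed).items():
        scores = cross_validate(model, X, y, cv=cv, scoring=scoring)
        results[name] = {m: float(np.mean(scores[f"test_{m}"])) for m in scoring}
    return results
```

Stratified folds keep the roughly 50/50 class split produced by median binarization in every fold, so per-fold precision and recall are not distorted by class imbalance.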

4.3.3. Results Summary

4.3.4. Visualization and Feature Importance

4.4. Depression Scoring and Classification Algorithm

- Feature aggregation: Each synthetic user session included behavioral (such as game accuracy and response latency), cognitive (such as baseline cognitive score, attention span), and contextual (such as temperature and humidity) variables.

- Weighted composite scoring: A continuous depression score was calculated for each user session using the following equation: $$D_i = \sum_{j=1}^{n} w_j \, x_{ij},$$ where $x_{ij}$ is the value of feature $j$ for session $i$, $w_j$ is its empirically assigned weight based on relevance in the literature, and $n$ is the number of features. For instance, mood variability and energy received higher weights, while environmental features were assigned lower weights.

- Normalization: Depression scores were min-max scaled to the range [0, 1] to standardize the output across sessions.

- Label assignment: The normalized scores were binarized using the dataset median. Sessions with scores above the median were labeled as “depressed”, while those below were labeled as “non-depressed”. This binarization enabled the construction of a balanced binary classification task.

- Model training: Supervised machine learning models (random forest, logistic regression, and a neural network) were trained to predict the binary depression label using the complete feature set. We applied stratified sampling and cross-validation to assess robustness and generalizability.
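The scoring steps above (weighted sum $D_i = \sum_j w_j x_{ij}$, min-max scaling, median split) can be sketched in a few lines. The weight values and feature names in `WEIGHTS` are hypothetical: the text states only that mood variability and energy were weighted more heavily than environmental features. The sketch also assumes features are pre-scaled to [0, 1] and oriented so that larger values indicate more symptoms (hence `low_energy` rather than `energy`), and it assigns sessions exactly at the median to the "non-depressed" class, a tie rule the text does not specify.

```python
from statistics import median

# Hypothetical weights; features assumed pre-scaled to [0, 1] and oriented so that
# larger values mean more symptoms (e.g. "low_energy" = 1 - normalized energy).
WEIGHTS = {
    "mood_variability": 0.35,
    "low_energy": 0.35,
    "error_rate": 0.20,
    "temperature_deviation": 0.10,  # environmental feature gets the smallest weight
}


def composite_scores(sessions, weights=WEIGHTS):
    """D_i = sum_j w_j * x_ij for every session i."""
    return [sum(w * session[name] for name, w in weights.items()) for session in sessions]


def min_max(scores):
    """Rescale scores to [0, 1]; a constant input maps to all zeros."""
    low, high = min(scores), max(scores)
    if high == low:
        return [0.0] * len(scores)
    return [(s - low) / (high - low) for s in scores]


def median_labels(scores):
    """1 ("depressed") above the median, otherwise 0 ("non-depressed")."""
    cut = median(scores)
    return [1 if s > cut else 0 for s in scores]


def score_and_label(sessions, weights=WEIGHTS):
    """Full pipeline: weighted score, min-max normalization, median binarization."""
    normalized = min_max(composite_scores(sessions, weights))
    return normalized, median_labels(normalized)
```

Because min-max scaling is monotonic, it does not change which sessions fall above the median; it only puts the continuous score on a common [0, 1] scale for reporting. With an even number of distinct scores, the median split yields exactly half positive labels, which is what makes the classification task balanced.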

4.5. Adaptive Survey Logic Simulation

4.6. Ethical Considerations

5. Discussion

5.1. Validity of Synthetic Evaluation

5.2. Adaptive and Context-Aware Modeling

5.3. Research Utility and Generalizability

6. Conclusions

Future Work

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- World Health Organization. Depressive Disorder (Depression). 2023. Available online: https://www.who.int/news-room/fact-sheets/detail/depression (accessed on 16 May 2025).

- Larsen, M.E.; Huckvale, K.; Nicholas, J.; Torous, J.; Birrell, L.; Li, E.; Reda, B. Using science to sell apps: Evaluation of mental health app store quality claims. NPJ Digit. Med. 2019, 2, 18.

- Fleming, T.M.; Bavin, L.; Stasiak, K.; Hermansson-Webb, E.; Merry, S.N.; Cheek, C.; Lucassen, M.; Lau, H.M.; Pollmuller, B.; Hetrick, S. Serious games and gamification for mental health: Current status and promising directions. Front. Psychiatry 2017, 7, 215.

- Zamani, S.; Nguyen, M.; Sinha, R. Integrating Environmental Data for Mental Health Monitoring: A Data-Driven IoT-Based Approach. Appl. Sci. 2025, 15, 912.

- Ng, M.Y.; Frederick, J.A.; Fisher, A.J.; Allen, N.B.; Pettit, J.W.; McMakin, D.L. Identifying Person-Specific Drivers of Depression in Adolescents: Protocol for a Smartphone-Based Ecological Momentary Assessment and Passive Sensing Study. JMIR Res. Protoc. 2024, 13, e43931.

- Roepke, A.M.; Jaffee, S.R.; Riffle, O.M.; McGonigal, J.; Broome, R.; Maxwell, B. Randomized controlled trial of SuperBetter, a smartphone-based/internet-based self-help tool to reduce depressive symptoms. Games Health J. 2015, 4, 235–246.

- Merry, S.N.; Stasiak, K.; Shepherd, M.; Frampton, C.; Fleming, T.; Lucassen, M.F. The effectiveness of SPARX, a computerised self help intervention for adolescents seeking help for depression: Randomised controlled non-inferiority trial. BMJ 2012, 344, e2598.

- Wasil, A.R.; Gillespie, S.; Patel, R.; Petre, A.; Venturo-Conerly, K.E.; Shingleton, R.M.; Weisz, J.R.; DeRubeis, R.J. Reassessing evidence-based content in popular smartphone apps for depression and anxiety: Developing and applying user-adjusted analyses. J. Consult. Clin. Psychol. 2020, 88, 983.

- Tondello, G.F.; Nacke, L.E. Player characteristics and video game preferences. In Proceedings of the Annual Symposium on Computer-Human Interaction in Play, Barcelona, Spain, 22–25 October 2019; pp. 365–378.

- Cheng, P.; Luik, A.I.; Fellman-Couture, C.; Peterson, E.; Joseph, C.L.; Tallent, G.; Tran, K.M.; Ahmedani, B.K.; Roehrs, T.; Roth, T.; et al. Efficacy of digital CBT for insomnia to reduce depression across demographic groups: A randomized trial. Psychol. Med. 2019, 49, 491–500.

- Torous, J.; Myrick, K.J.; Rauseo-Ricupero, N.; Firth, J. Digital mental health and COVID-19: Using technology today to accelerate the curve on access and quality tomorrow. JMIR Ment. Health 2020, 7, e18848.

- Kramer, A.D.; Guillory, J.E.; Hancock, J.T. Experimental evidence of massive-scale emotional contagion through social networks. Proc. Natl. Acad. Sci. USA 2014, 111, 8788–8790.

- Wahle, F.; Kowatsch, T.; Fleisch, E.; Rufer, M.; Weidt, S. Mobile sensing and support for people with depression: A pilot trial in the wild. JMIR MHealth UHealth 2016, 4, e5960.

- Rosenblum, M.; Miller, P.; Reist, B.; Stuart, E.A.; Thieme, M.; Louis, T.A. Adaptive design in surveys and clinical trials: Similarities, differences and opportunities for cross-fertilization. J. R. Stat. Soc. Ser. A Stat. Soc. 2019, 182, 963–982.

- Rintala, A.; Wampers, M.; Myin-Germeys, I.; Viechtbauer, W. Response compliance and predictors thereof in studies using the experience sampling method. Psychol. Assess. 2019, 31, 226.

- Mohr, D.C.; Zhang, M.; Schueller, S.M. Personal sensing: Understanding mental health using ubiquitous sensors and machine learning. Annu. Rev. Clin. Psychol. 2017, 13, 23–47.

- Fitzpatrick, K.K.; Darcy, A.; Vierhile, M. Delivering cognitive behavior therapy to young adults with symptoms of depression and anxiety using a fully automated conversational agent (Woebot): A randomized controlled trial. JMIR Ment. Health 2017, 4, e7785.

- Kumar, A.; Nayar, K.R. COVID 19 and its mental health consequences. J. Ment. Health 2021, 30, 1–2.

- Zamani, S.; Sinha, R.; Nguyen, M.; Madanian, S. Enhancing emotional well-being with IoT data solutions for depression: A systematic review. IEEE J. Biomed. Health Inform. 2025, 29, 1919–1930.

- Holzinger, A.; Biemann, C.; Pattichis, C.S.; Kell, D.B. What do we need to build explainable AI systems for the medical domain? arXiv 2017, arXiv:1712.09923.

- Zhong, Z.; Wang, Z. Intelligent Depression Prevention via LLM-Based Dialogue Analysis: Overcoming the Limitations of Scale-Dependent Diagnosis through Precise Emotional Pattern Recognition. arXiv 2025, arXiv:2504.16504.

- Qiu, J.; He, Y.; Juan, X.; Wang, Y.; Liu, Y.; Yao, Z.; Wu, Y.; Jiang, X.; Yang, L.; Wang, M. EmoAgent: Assessing and Safeguarding Human-AI Interaction for Mental Health Safety. arXiv 2025, arXiv:2504.09689.

- Hou, Y.; Chen, W.; Chen, S.; Liu, X.; Zhu, Y.; Cui, X.; Cao, B. Associations between indoor thermal environment assessment, mental health, and insomnia in winter. Sustain. Cities Soc. 2024, 114, 105751.

- Obradovich, N.; Migliorini, R.; Paulus, M.P.; Rahwan, I. Empirical evidence of mental health risks posed by climate change. Proc. Natl. Acad. Sci. USA 2018, 115, 10953–10958.

- Noelke, C.; McGovern, M.; Corsi, D.J.; Jimenez, M.P.; Stern, A.; Wing, I.S.; Berkman, L. Increasing ambient temperature reduces emotional well-being. Environ. Res. 2016, 151, 124–129.

- Melrose, S. Seasonal affective disorder: An overview of assessment and treatment approaches. Depress. Res. Treat. 2015, 2015, 178564.

- McLeod, K.; Spachos, P.; Plataniotis, K.N. Smartphone-based wellness assessment using mobile environmental sensors. IEEE Syst. J. 2020, 15, 1989–1999.

- Bloomfield, L.S.; Fudolig, M.I.; Kim, J.; Llorin, J.; Lovato, J.L.; McGinnis, E.W.; McGinnis, R.S.; Price, M.; Ricketts, T.H.; Dodds, P.S.; et al. Predicting stress in first-year college students using sleep data from wearable devices. PLoS Digit. Health 2024, 3, e0000473.

- Tang, C.; Yi, W.; Xu, M.; Jin, Y.; Zhang, Z.; Chen, X.; Liao, C.; Kang, M.; Gao, S.; Smielewski, P.; et al. A deep learning–enabled smart garment for accurate and versatile monitoring of sleep conditions in daily life. Proc. Natl. Acad. Sci. USA 2025, 122, e2420498122.

- Fonseka, L.N.; Woo, B.K. Wearables in schizophrenia: Update on current and future clinical applications. JMIR MHealth UHealth 2022, 10, e35600.

- Voon, L. How Human-Centered Design Can Help Public Agencies Design Better Digital Services. 2022. Available online: https://aws.amazon.com/cn/blogs/publicsector/how-human-centered-design-help-public-agencies-design-better-digital-services/ (accessed on 16 May 2025).

- Garvin, C. Transforming Student Wellbeing Support with Amazon Bedrock and SXP.ai. 2025. Available online: https://aws.amazon.com/cn/blogs/publicsector/transforming-student-wellbeing-support-with-amazon-bedrock-and-sxp-ai/ (accessed on 16 May 2025).

- Pine, D.S.; Cohen, J.A. Cognitive behavioral therapy, serotonin, and neural mechanisms of depression: The role of learning. Psychol. Med. 2011, 41, 1219–1230.

- Holtz, B.E.; Murray, J.A.; Hershey, T. Serious games for cognitive training in mental health conditions: A systematic review. JMIR Ment. Health 2021, 8, e22007.

- Shiffman, S.; Stone, A.A.; Hufford, M.R. Ecological Momentary Assessment. Annu. Rev. Clin. Psychol. 2008, 4, 1–32.

| Category | Feature Name | Data Type | Generation Method |
|---|---|---|---|
| Behavioral | Game Accuracy | Continuous | Sampled from a normal distribution |
| | Response Latency | Continuous | Sampled from a normal distribution |
| | Error Rate | Continuous | Derived from gameplay |
| | Interaction Frequency | Count | Poisson distribution |
| Cognitive | Baseline Cognitive Score | Continuous | Sampled from a normal distribution |
| | Working Memory Load | Ordinal | Mapped from the cognitive scale |
| | Attention Span | Ordinal | Mapped from EMA |
| Emotional | Mood Score | Continuous | Fluctuates per session |
| | Stress Level | Ordinal | Dependent on temperature and performance |
| | Energy Level | Ordinal | Correlated with stress and activity |
| Contextual | Temperature | Continuous | Realistic seasonal values |
| | Humidity | Continuous | Realistic seasonal values |
| | Time of Day | Categorical | Random morning/afternoon/evening split |
| Temporal | Session Index (1–30) | Integer | Sequential with session-specific noise |

| Model | Accuracy | Precision | Recall | F1 Score | ROC-AUC |
|---|---|---|---|---|---|
| Random Forest | 0.880 | 0.887 | 0.869 | 0.878 | 0.915 |
| Logistic Regression | 0.670 | 0.685 | 0.616 | 0.649 | 0.723 |
| Neural Network | 0.865 | 0.883 | 0.838 | 0.860 | 0.931 |
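As a quick consistency check on the results table above, F1 can be recomputed from the reported precision $P$ and recall $R$ via the harmonic mean $F_1 = 2PR/(P+R)$. If the table reports fold-averaged metrics, an F1 computed from averaged $P$ and $R$ need not match the averaged F1 exactly, so agreement to within rounding is the expected outcome rather than a guarantee.

```python
# Precision, recall, and F1 as reported in the results table above.
REPORTED = {
    "Random Forest": (0.887, 0.869, 0.878),
    "Logistic Regression": (0.685, 0.616, 0.649),
    "Neural Network": (0.883, 0.838, 0.860),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for model, (precision, recall, reported_f1) in REPORTED.items():
    print(f"{model}: recomputed F1 = {f1_score(precision, recall):.3f}, reported {reported_f1:.3f}")
```

All three recomputed values round to the reported F1 scores (0.878, 0.649, and 0.860).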

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zamani, S.; Rostami, A.; Nguyen, M.; Sinha, R.; Madanian, S. A Gamified AI-Driven System for Depression Monitoring and Management. Appl. Sci. 2025, 15, 7088. https://doi.org/10.3390/app15137088

Zamani S, Rostami A, Nguyen M, Sinha R, Madanian S. A Gamified AI-Driven System for Depression Monitoring and Management. Applied Sciences. 2025; 15(13):7088. https://doi.org/10.3390/app15137088

Chicago/Turabian Style: Zamani, Sanaz, Adnan Rostami, Minh Nguyen, Roopak Sinha, and Samaneh Madanian. 2025. "A Gamified AI-Driven System for Depression Monitoring and Management" Applied Sciences 15, no. 13: 7088. https://doi.org/10.3390/app15137088

APA Style: Zamani, S., Rostami, A., Nguyen, M., Sinha, R., & Madanian, S. (2025). A Gamified AI-Driven System for Depression Monitoring and Management. Applied Sciences, 15(13), 7088. https://doi.org/10.3390/app15137088