YOLO-PWSL-Enhanced Robotic Fish: An Integrated Object Detection System for Underwater Monitoring

Abstract

1. Introduction

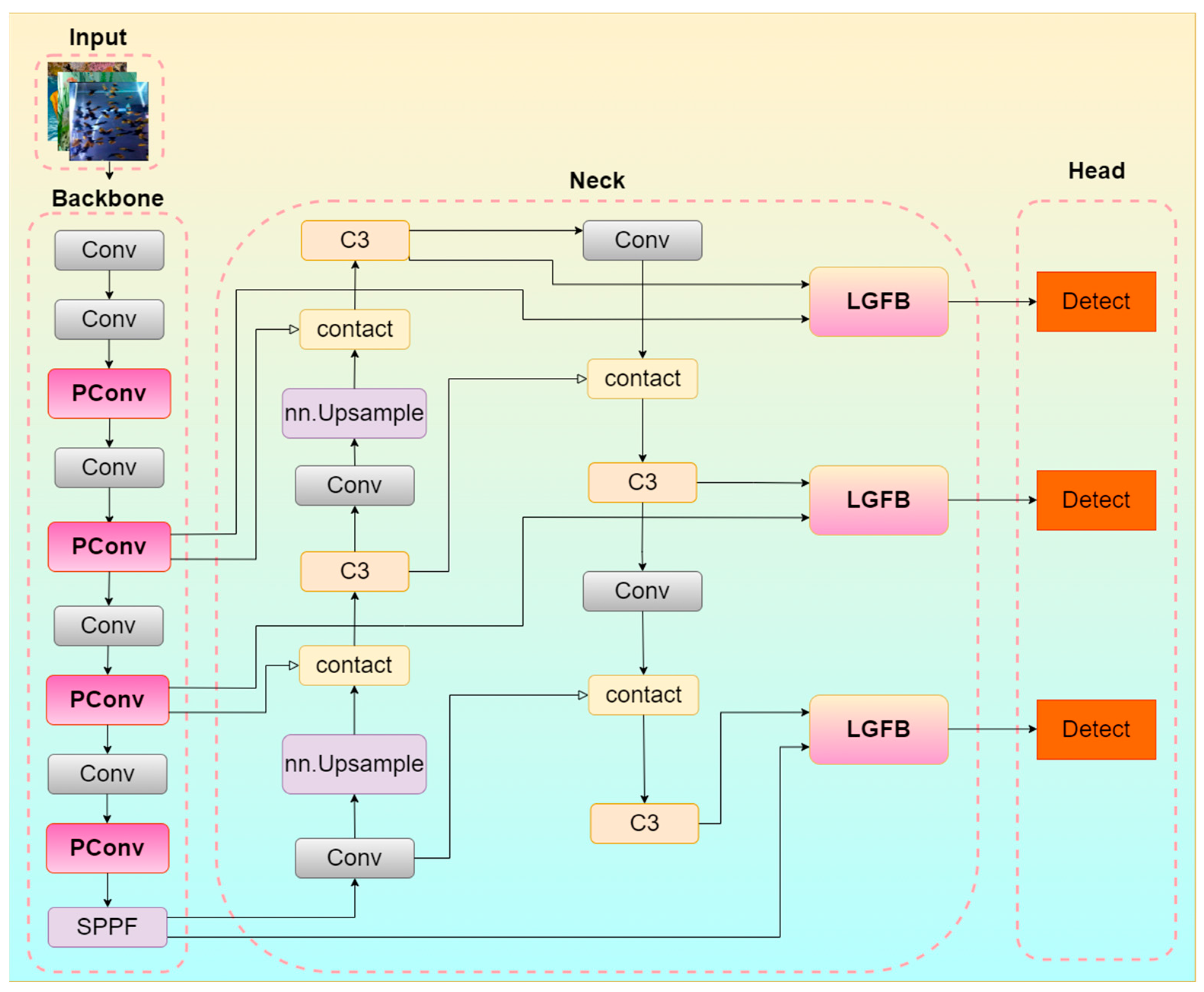

- We design an attention fusion block, the Local–Global Fusion Block (LGFB), which improves perception in complex scenes by fusing the features produced by a local attention branch and a global attention branch.

- We introduce the Wise-IoU loss function and embed ShapeIoU in it to improve bounding box accuracy. The resulting loss combines target shape features with dynamically adjusted bounding box regression.

- We introduce a lightweight convolution, PConv, which enhances feature extraction by relying only on valid pixels during computation and thereby addresses the missing-data problem. Our experiments show that PConv reduces the number of model parameters while also improving accuracy.

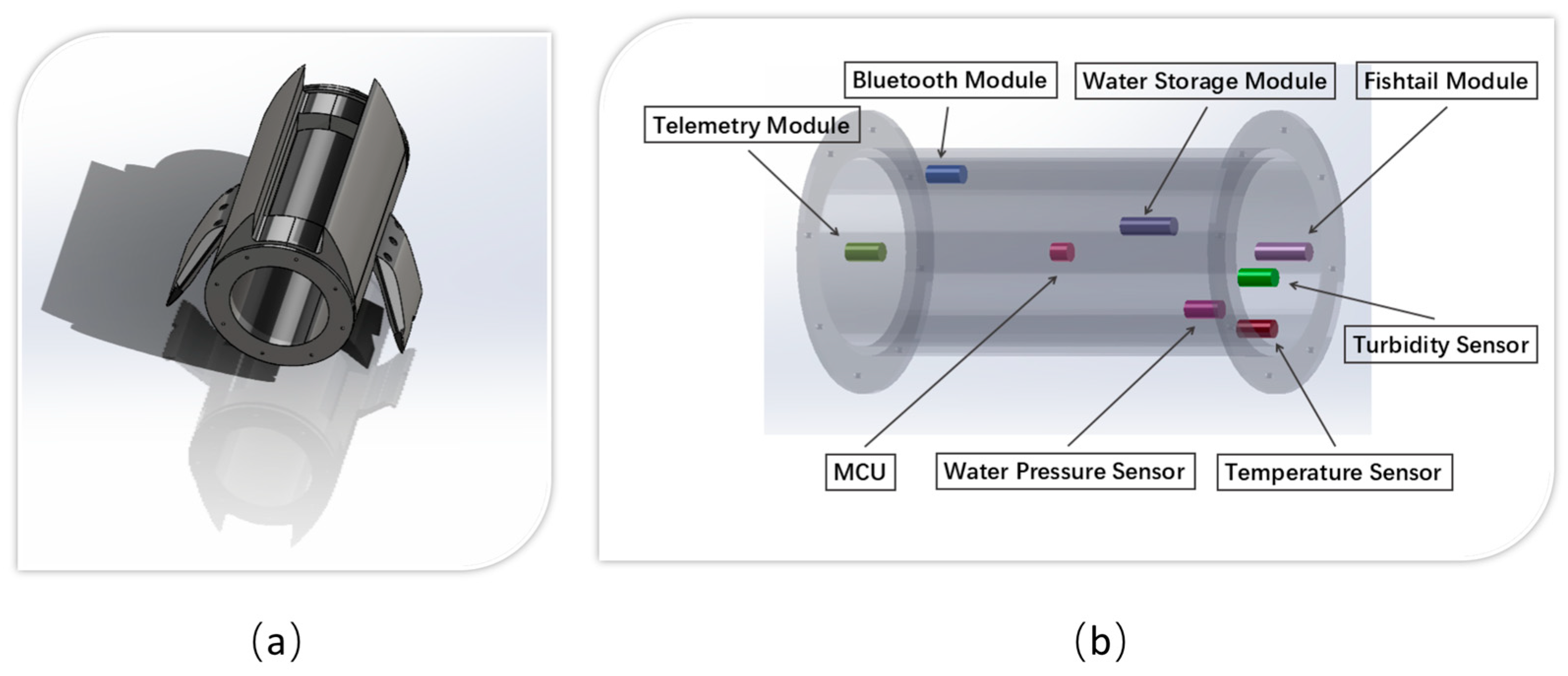

- We design a sinking and floating system that stabilizes the bionic fish in water using a PID algorithm with depth-sensor feedback, and we equip the fish with temperature, turbidity, and depth sensors and a foldable robotic arm.

2. Materials and Methods

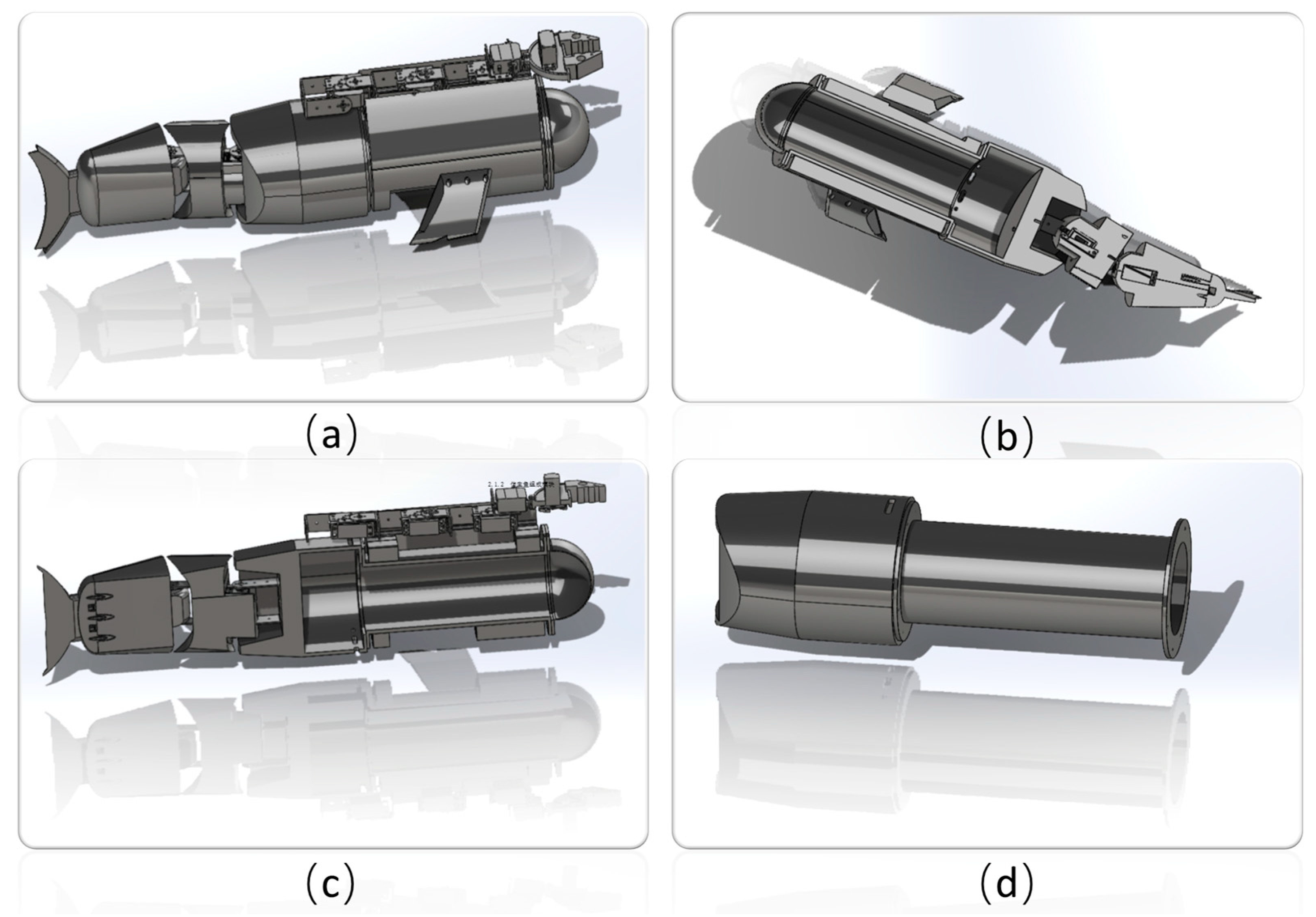

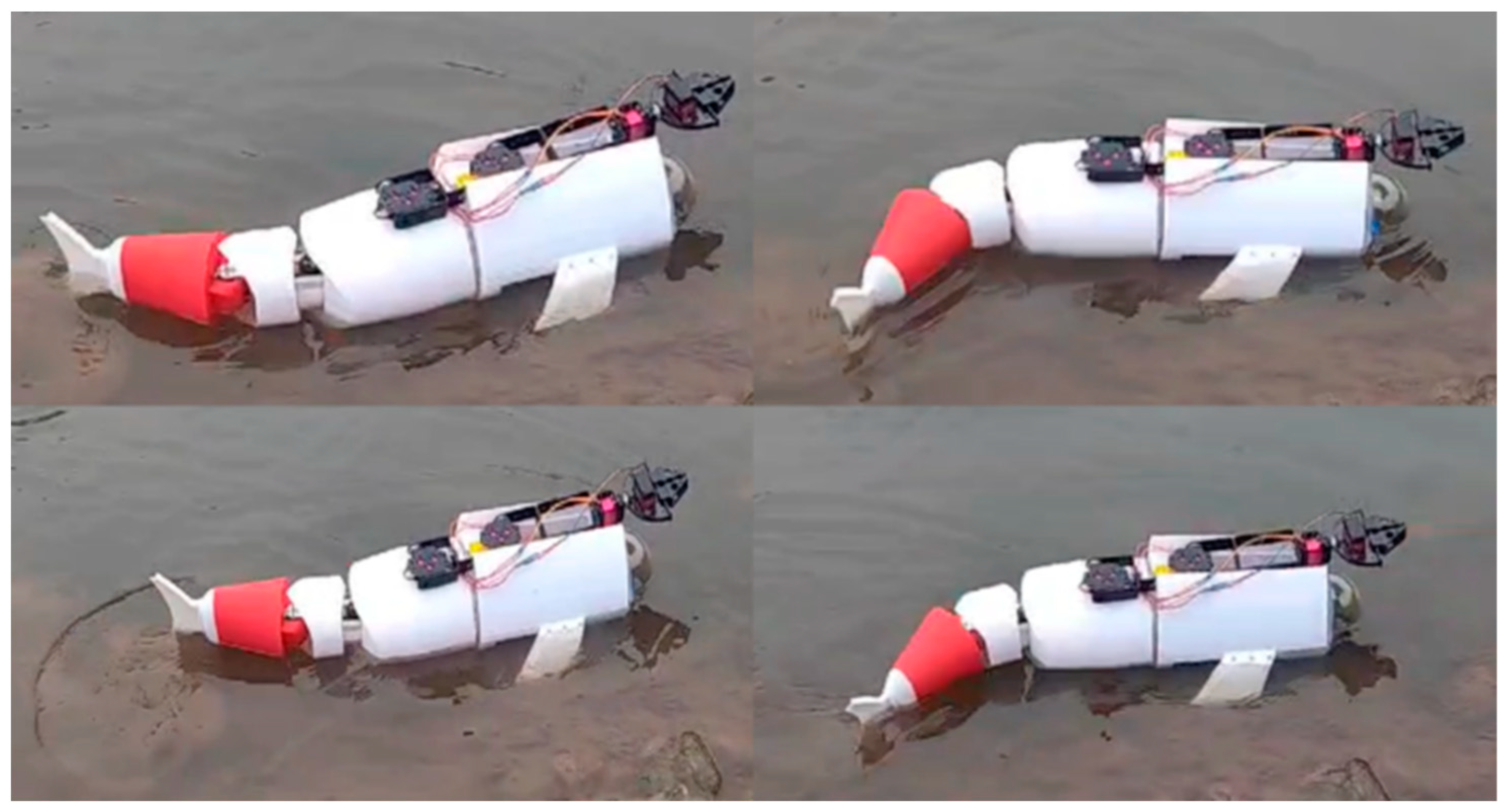

2.1. Design of a Bionic Mechanical Fish

2.1.1. Overall Structure of the Bionic Fish

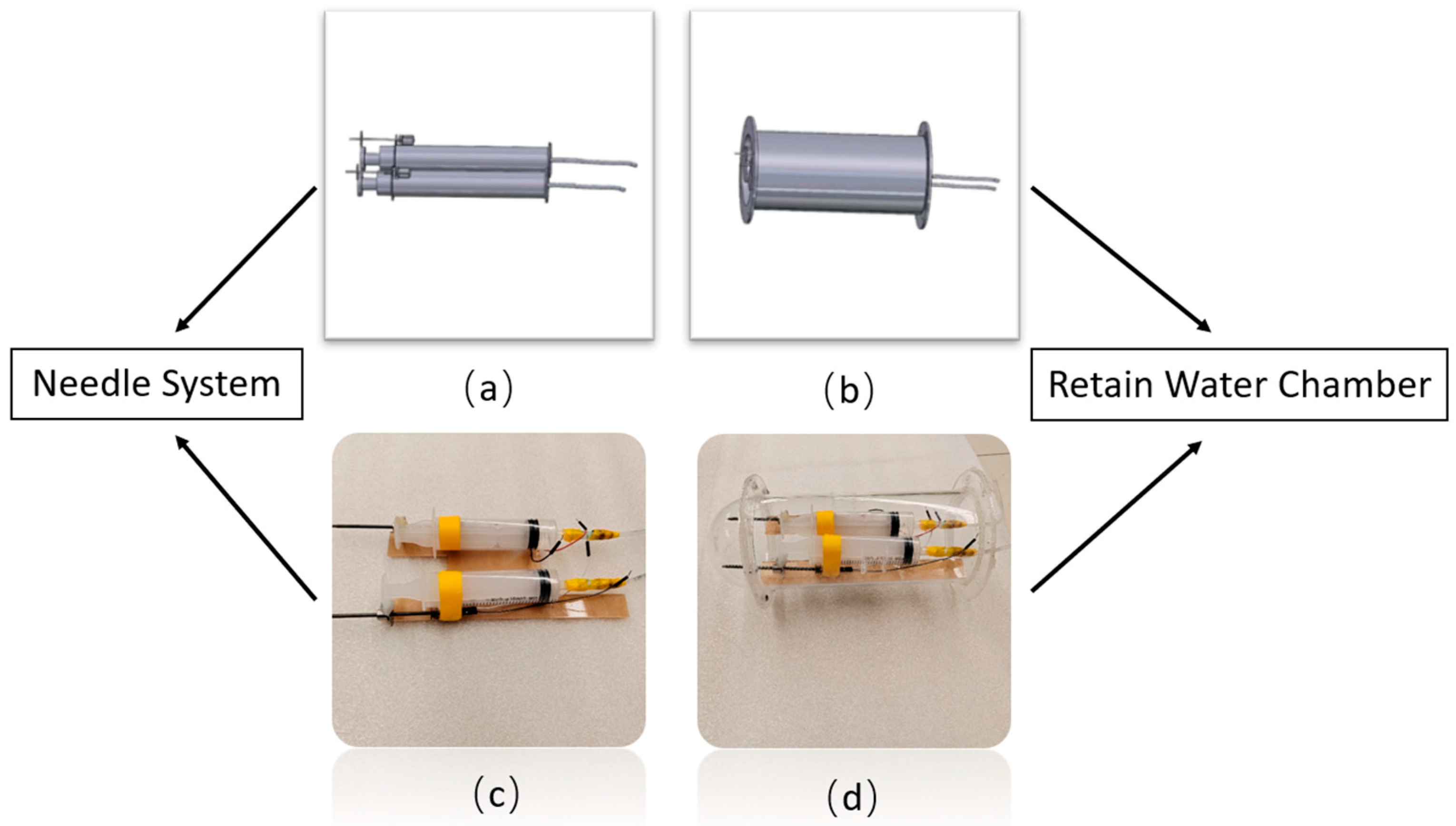

2.1.2. Bionic Fish Composition Modules

- (a) STM32H7B0VBT6 system board

- (b) Power system

- (c) Reservoir module

- (d) Water pressure sensor

- (e) Turbidity sensor

- (f) Temperature sensor

- (g) Image transmission module

- (h) Communication module

- (i) Robotic arm

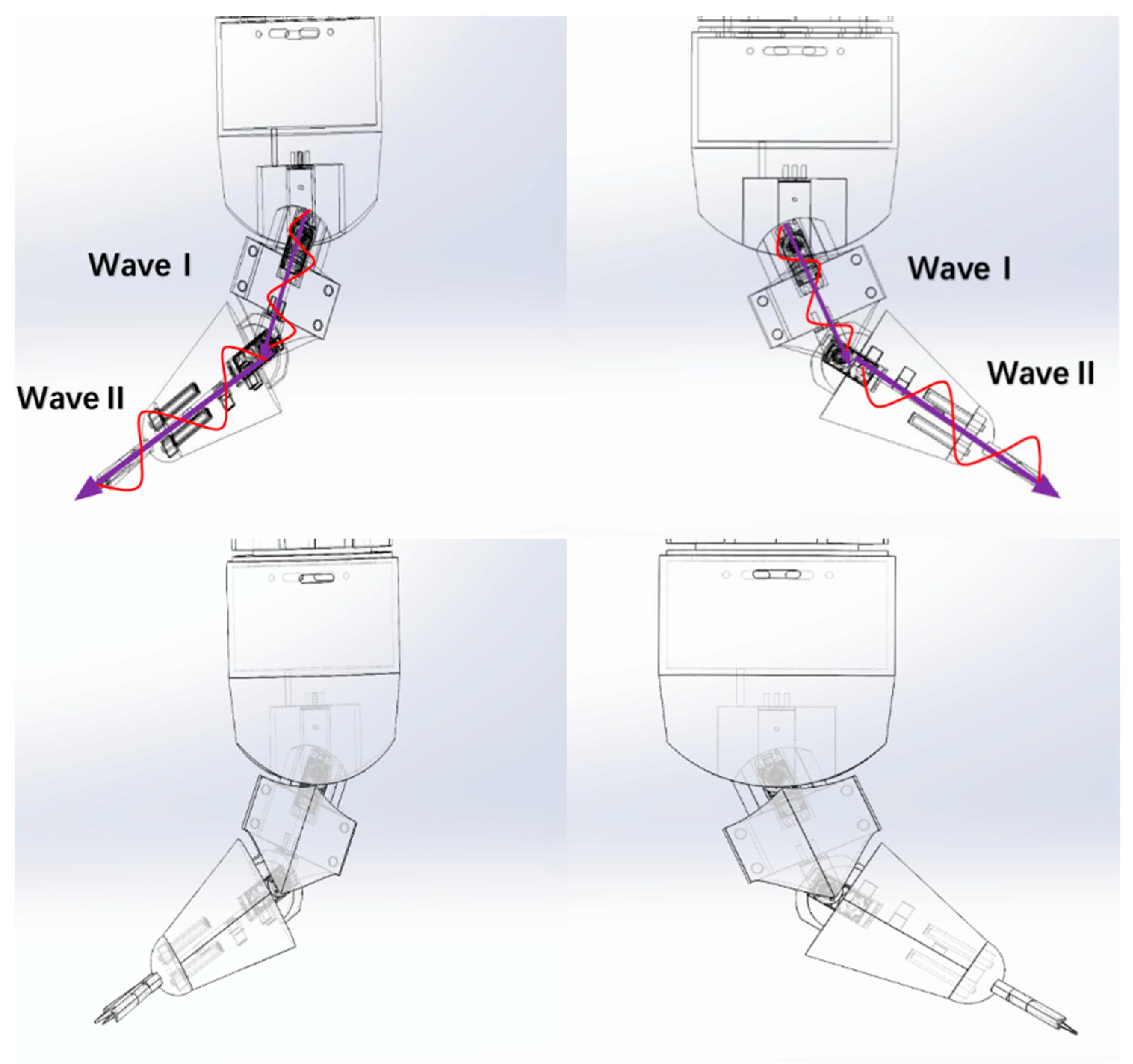

2.2. Tail Fin Swing Design

2.2.1. Tail Fin Drive Design

2.2.2. Control Design

- (1) Depth feedback:

- (2) PID mathematical model transfer function:
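As an illustration of the depth-holding loop outlined above, the sketch below discretizes the textbook parallel-form PID controller, $G(s) = K_p + K_i/s + K_d s$, and closes it around a toy depth model. The gains, the 50 ms sample period, the output limits, and the integrator-type plant are all assumed values chosen for illustration; they are not parameters reported in this work.

```python
from dataclasses import dataclass


@dataclass
class DepthPID:
    """Discrete parallel-form PID: u = Kp*e + Ki*sum(e*dt) + Kd*de/dt."""
    kp: float
    ki: float
    kd: float
    dt: float             # sample period in seconds (assumed)
    u_min: float = -1.0   # normalized actuator limits (assumed)
    u_max: float = 1.0
    _integral: float = 0.0
    _prev_error: float = 0.0

    def update(self, target_depth: float, measured_depth: float) -> float:
        error = target_depth - measured_depth
        derivative = (error - self._prev_error) / self.dt
        candidate = self._integral + error * self.dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        # Simple anti-windup: keep the new integral only when unsaturated.
        if self.u_min <= u <= self.u_max:
            self._integral = candidate
        self._prev_error = error
        return min(max(u, self.u_min), self.u_max)


def simulate(target: float = 0.5, steps: int = 2000) -> float:
    """Hypothetical plant: vertical speed proportional to the command."""
    pid = DepthPID(kp=2.0, ki=0.5, kd=0.1, dt=0.05)
    depth = 0.0
    for _ in range(steps):
        u = pid.update(target, depth)
        depth += 0.2 * u * pid.dt  # 0.2 m/s at full command (assumed)
    return depth
```

On real hardware, `measured_depth` would come from the water pressure sensor and the returned command would drive the reservoir pump; the gains would need tuning against the actual vehicle dynamics.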

2.2.3. Power Control

2.2.4. Circuit Design

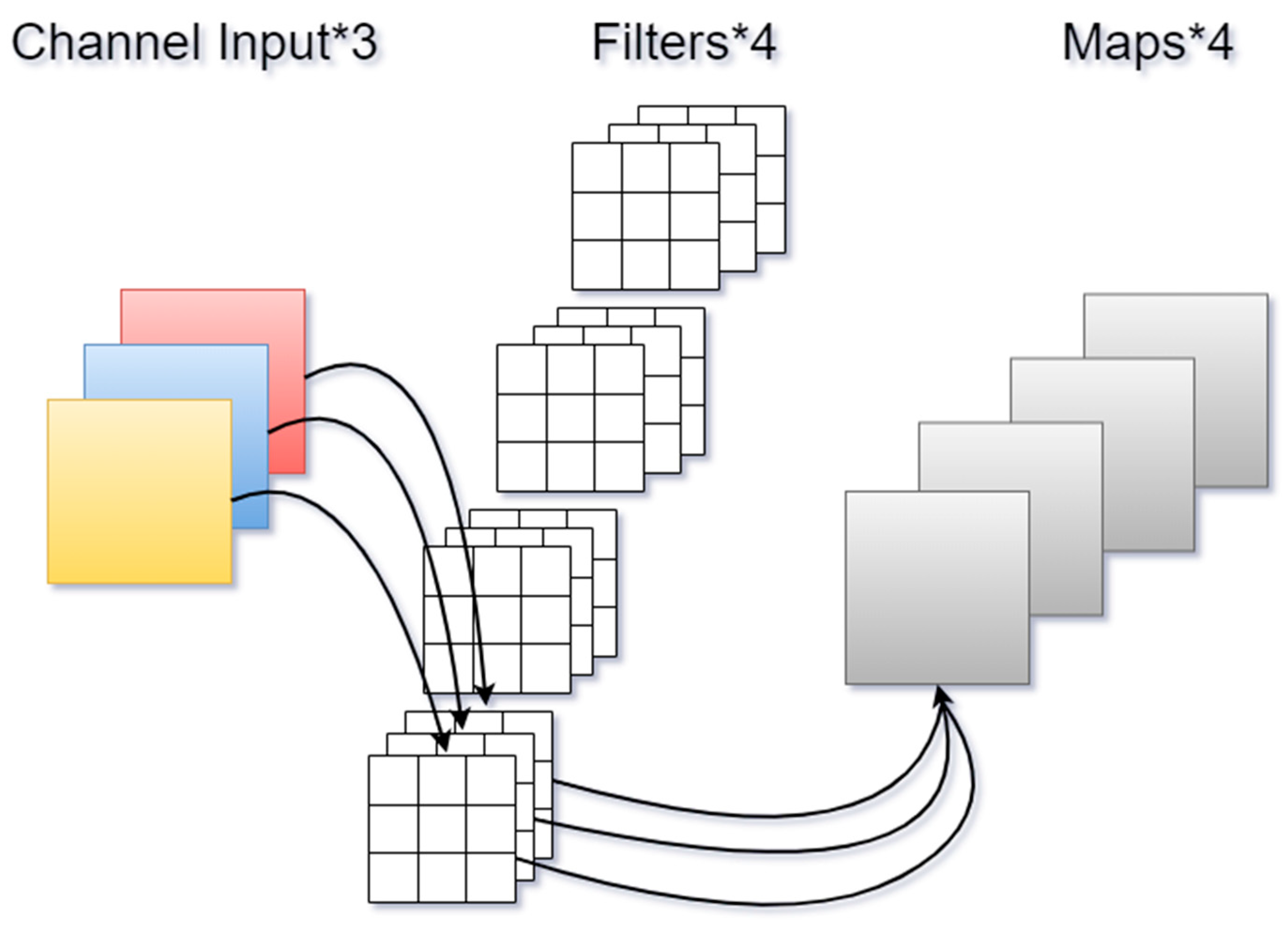

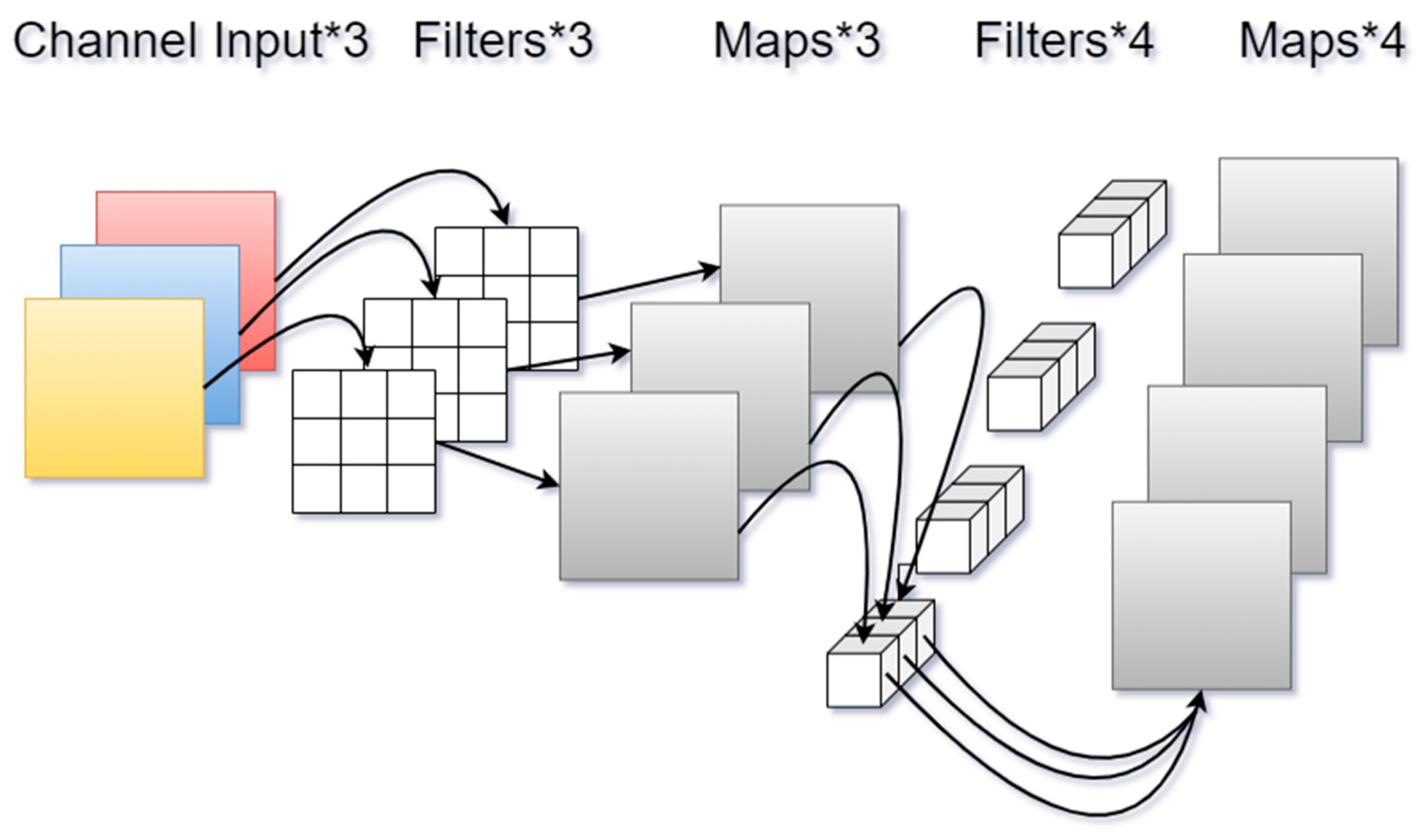

2.3. YOLO-PWSL

2.3.1. Architecture of YOLO-PWSL

2.3.2. LGFB (Local–Global Fusion Block) Module

2.3.3. Wise-ShapeIoU Loss Function

- (1) ShapeIoU

- (2) Wise-IoU

- (3) Wise-ShapeIoU
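To make the three terms above concrete, the following is a minimal sketch in plain Python. The Shape-IoU part follows the formulation published by Zhang and Zhang (arXiv:2312.17663), and the weighting term follows the distance attention of Wise-IoU v1 (Tong et al., arXiv:2301.10051). How the two are combined here, by multiplying the Shape-IoU loss by the Wise-IoU attention factor, is an assumption made for illustration; the combined formula is not given in this section, and the function names and the default `scale` are hypothetical.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), positive width/height


def iou(pred: Box, gt: Box) -> float:
    iw = max(0.0, min(pred[2], gt[2]) - max(pred[0], gt[0]))
    ih = max(0.0, min(pred[3], gt[3]) - max(pred[1], gt[1]))
    inter = iw * ih
    union = ((pred[2] - pred[0]) * (pred[3] - pred[1])
             + (gt[2] - gt[0]) * (gt[3] - gt[1]) - inter)
    return inter / union if union > 0 else 0.0


def _enclosing_sq_diag(pred: Box, gt: Box) -> float:
    cw = max(pred[2], gt[2]) - min(pred[0], gt[0])
    ch = max(pred[3], gt[3]) - min(pred[1], gt[1])
    return cw ** 2 + ch ** 2


def _center_offsets(pred: Box, gt: Box) -> Tuple[float, float]:
    dx = ((pred[0] + pred[2]) - (gt[0] + gt[2])) / 2
    dy = ((pred[1] + pred[3]) - (gt[1] + gt[3])) / 2
    return dx, dy


def shape_iou_loss(pred: Box, gt: Box, scale: float = 0.0, theta: float = 4.0) -> float:
    w, h = pred[2] - pred[0], pred[3] - pred[1]
    wg, hg = gt[2] - gt[0], gt[3] - gt[1]
    # Shape weights derived from the ground-truth box; scale is dataset-dependent.
    ww = 2 * wg ** scale / (wg ** scale + hg ** scale)
    hh = 2 * hg ** scale / (wg ** scale + hg ** scale)
    dx, dy = _center_offsets(pred, gt)
    distance = (hh * dx ** 2 + ww * dy ** 2) / _enclosing_sq_diag(pred, gt)
    omega_w = hh * abs(w - wg) / max(w, wg)
    omega_h = ww * abs(h - hg) / max(h, hg)
    shape = (1 - math.exp(-omega_w)) ** theta + (1 - math.exp(-omega_h)) ** theta
    return 1 - iou(pred, gt) + distance + 0.5 * shape


def wise_attention(pred: Box, gt: Box) -> float:
    # Wise-IoU v1 factor; in training the denominator is detached from the graph.
    dx, dy = _center_offsets(pred, gt)
    return math.exp((dx ** 2 + dy ** 2) / _enclosing_sq_diag(pred, gt))


def wise_shape_iou_loss(pred: Box, gt: Box) -> float:
    # Assumed combination: attention-weighted Shape-IoU loss.
    return wise_attention(pred, gt) * shape_iou_loss(pred, gt)
```

A perfectly matching box gives a loss of zero, and the attention factor increases the penalty as the predicted center drifts away from the ground-truth center.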

2.3.4. PConv Module

- (1) Conv

- (2) DWConv

- (3) PConv
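The cost difference between the three operators can be sketched with per-layer operation counts. This assumes PConv refers to the channel-partial convolution of FasterNet (Chen et al., CVPR 2023), which applies a regular convolution to a fraction of the channels and passes the rest through unchanged; the 1/4 partial ratio and the layer size used below are illustrative assumptions, and multiply-accumulates are counted as FLOPs.

```python
def conv_flops(h: int, w: int, k: int, c_in: int, c_out: int) -> int:
    """Regular k x k convolution on an h x w output map."""
    return h * w * k * k * c_in * c_out


def dwconv_flops(h: int, w: int, k: int, c: int) -> int:
    """Depthwise convolution: one k x k filter per channel."""
    return h * w * k * k * c


def pconv_flops(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> int:
    """Partial convolution: regular conv on the first c_p channels only."""
    c_p = int(c * ratio)  # channels actually convolved (assumed ratio)
    return h * w * k * k * c_p * c_p


if __name__ == "__main__":
    h = w = 80
    k, c = 3, 128  # hypothetical layer size
    print("Conv  :", conv_flops(h, w, k, c, c))
    print("DWConv:", dwconv_flops(h, w, k, c))
    print("PConv :", pconv_flops(h, w, k, c))
```

With a ratio of 1/4, the partial convolution costs 1/16 of the regular convolution at the same width. Per-layer counts like these do not translate directly into model-level GFLOPs, because a depthwise layer is usually paired with a pointwise convolution to mix channels.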

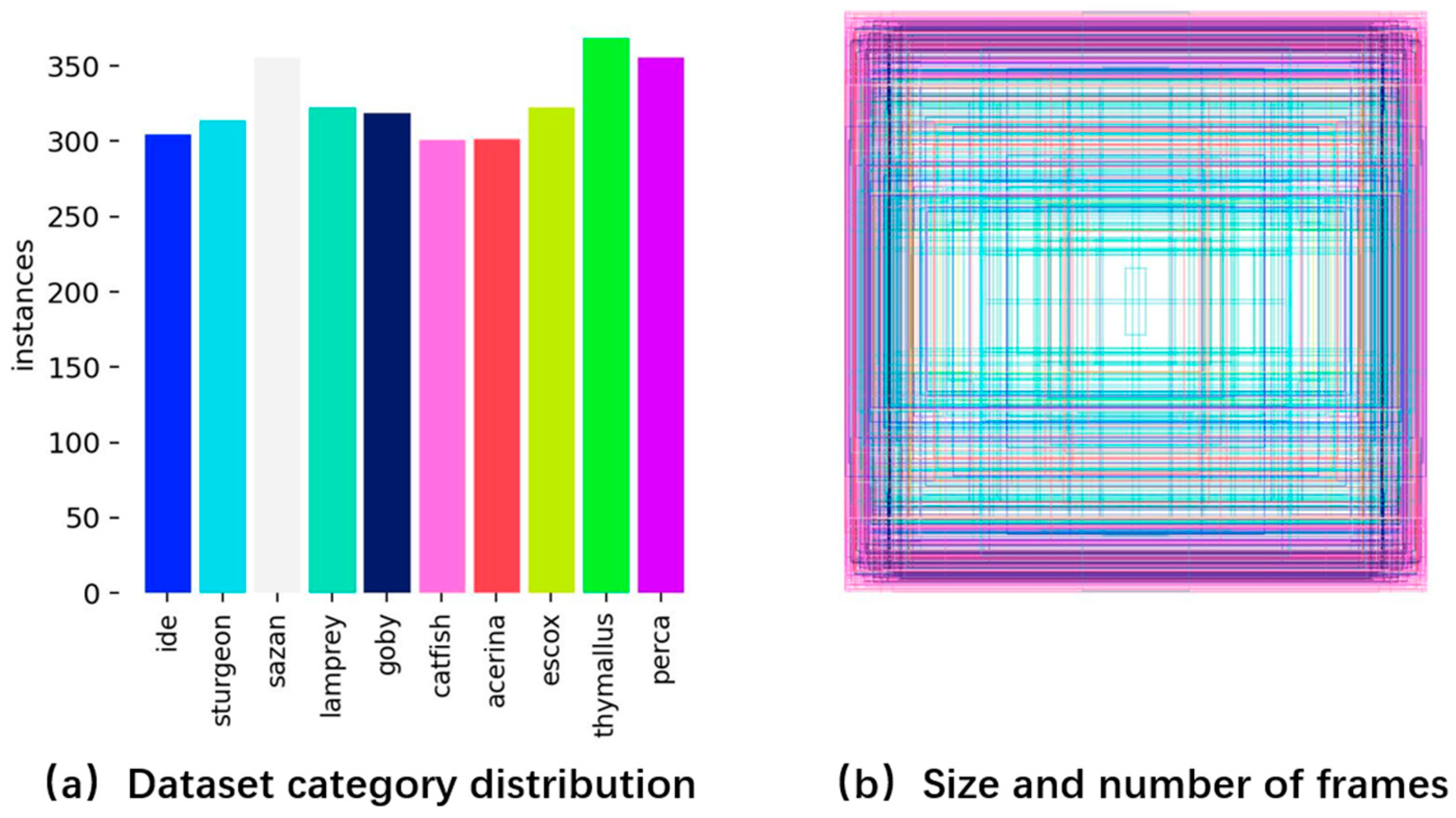

3. Experimental Results and Analysis

3.1. Experimental Environment

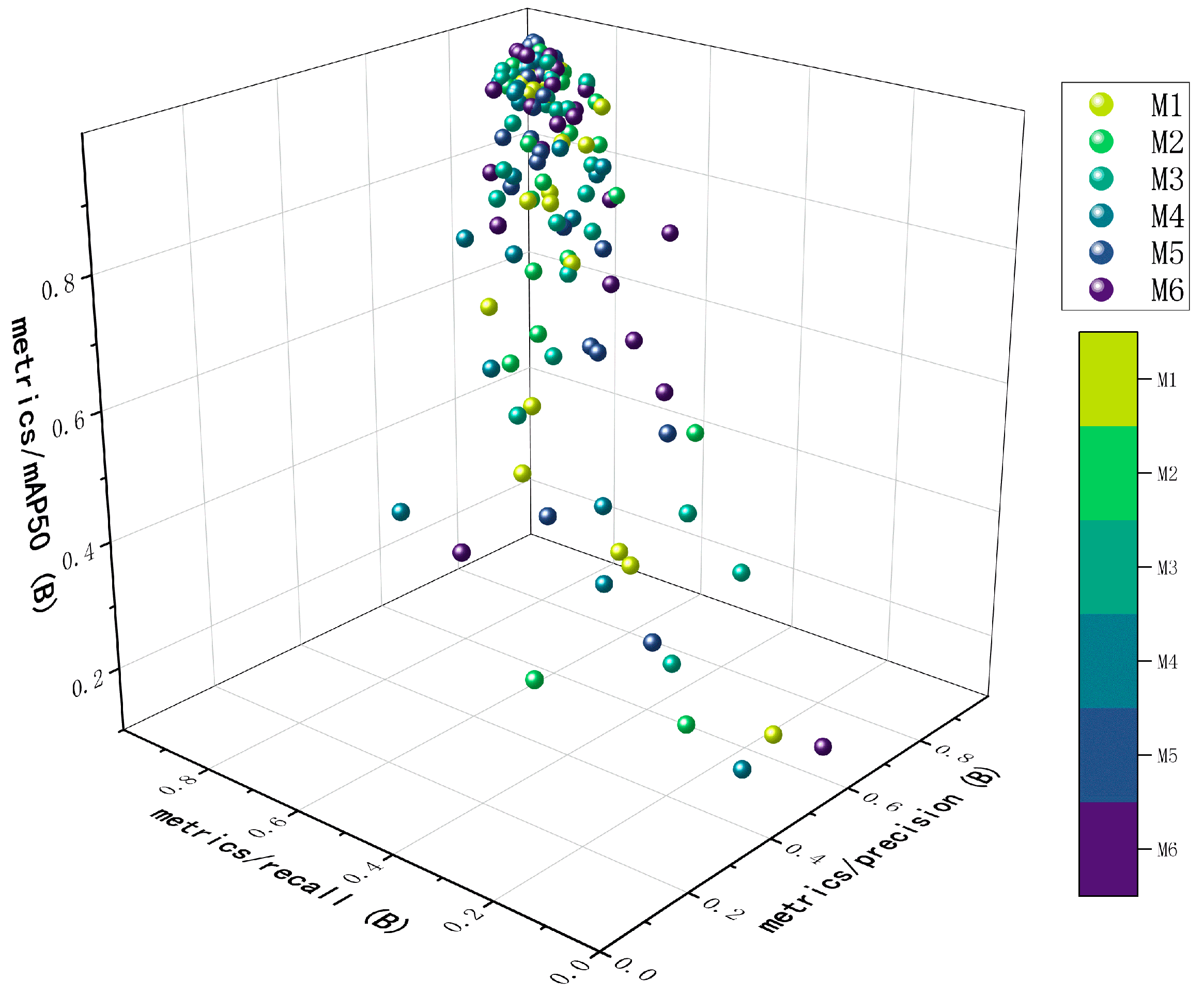

3.2. Ablation Experiment and Comparative Experiments

3.2.1. Calculation of Indicators
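The tables in this section report precision (P), recall (R), and mAP50. A minimal sketch of these quantities under their standard definitions is given below. The function names and the all-point interpolation used for average precision are assumptions, since the exact evaluation protocol is not stated here; a detection counts as a true positive when it matches a ground-truth box with IoU of at least 0.5.

```python
from typing import List, Sequence, Tuple


def precision_recall(tp: int, fp: int, fn: int) -> Tuple[float, float]:
    """P = TP / (TP + FP), R = TP / (TP + FN)."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(detections: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """Area under the precision-recall curve for one class.

    detections: (confidence, is_true_positive) for every predicted box.
    num_gt: number of ground-truth boxes of this class.
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, hit in ranked:
        if hit:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision monotonically non-increasing (precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def mean_average_precision(per_class_ap: Sequence[float]) -> float:
    """mAP50 is the mean of the per-class AP values at IoU threshold 0.5."""
    return sum(per_class_ap) / len(per_class_ap)
```

For example, `precision_recall(9, 1, 3)` corresponds to nine correct detections, one false alarm, and three missed objects.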

3.2.2. Comparative Analysis

- (1) We compared LGFB with common attention modules, namely SE (Squeeze-and-Excitation Networks) and CBAM (Convolutional Block Attention Module); the results are shown in Table 2.

- (2) We compared Conv, DWConv, and PConv; the results are shown in Table 3.

- (3) To further validate the improved model, we compared YOLO-PWSL with three object detection models, SSD, Faster R-CNN, and YOLOv5s; the results are shown in Table 4.

3.2.3. Independence and Synergy Analysis

- (a) Independent Effect Analysis

- (b) Synergistic Effect Analysis

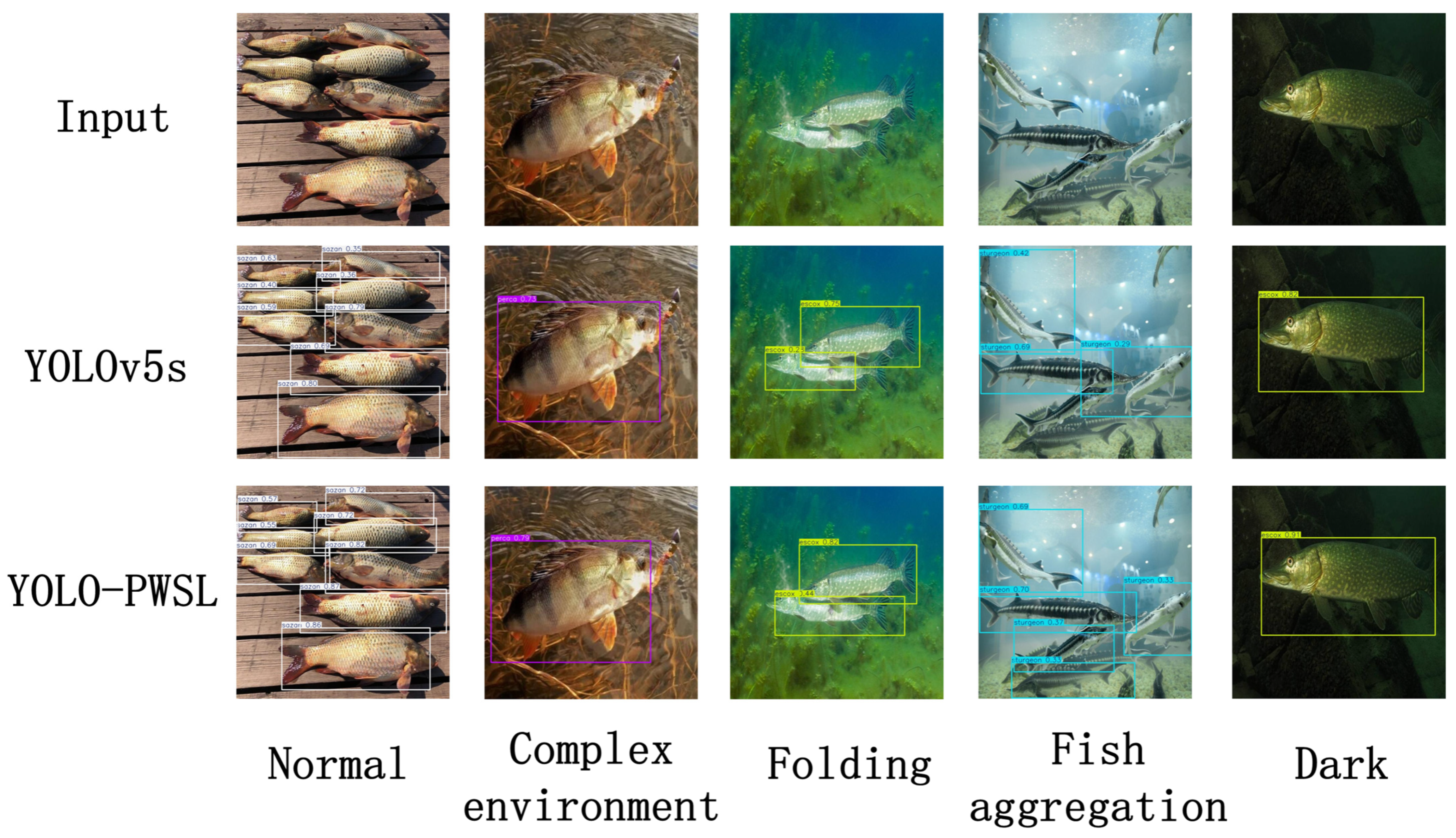

3.2.4. Analysis of the Visualization Results

3.3. Bionic Mechanical Fish

3.3.1. Structure and Assembly

3.3.2. System Control

3.3.3. Visual Recognition

3.3.4. Water Quality Monitoring Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameters | Values |
|---|---|
| Image size | 640 × 640 |
| Batch size | 4 |
| Learning rate | 0.01 |
| Momentum factor | 0.937 |
| Weight decay coefficient | 0.0005 |
| Iterations | 120 |

| Method | P | R | mAP50 |
|---|---|---|---|
| SE | 0.919 | 0.873 | 0.918 |
| CBAM | 0.877 | 0.919 | 0.941 |
| LGFB | 0.915 | 0.903 | 0.948 |

| Method | P | R | mAP50 | GFLOPs |
|---|---|---|---|---|
| Conv | 0.882 | 0.891 | 0.931 | 24.1 |
| DWConv | 0.921 | 0.862 | 0.935 | 20.3 |
| PConv | 0.914 | 0.924 | 0.951 | 19.9 |

| Method | P | R | mAP50 | GFLOPs | Params (M) |
|---|---|---|---|---|---|
| SSD | 0.867 | 0.833 | 0.868 | 62.7 | 26.28 |
| Faster R-CNN | 0.732 | 0.912 | 0.906 | 370.2 | 137.09 |
| YOLOv5s | 0.882 | 0.891 | 0.931 | 24.1 | 9.11 |
| Ours | 0.929 | 0.897 | 0.961 | 21.0 | 7.31 |

| Model | mAP50 | GFLOPs | FPS |
|---|---|---|---|
| M1 | 0.931 | 24.1 | 81.65 |
| M2 | 0.951 | 19.9 | 98.49 |
| M3 | 0.945 | 24.1 | 79.52 |
| M4 | 0.948 | 25.1 | 66.48 |
| M5 | 0.958 | 19.9 | 95.33 |
| M6 | 0.961 | 21 | 87.6 |

| Sample | Temperature test (°C) | Temperature actual (°C) | Temperature error (°C) | Pressure test (mm) | Pressure actual (mm) | Pressure error (mm) | Turbidity test (ppm) | Turbidity actual (ppm) | Turbidity error (ppm) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 43.00 | 43.24 | 0.24 | 305 | 309 | 4 | 9.897 | 10 | 0.103 |
| 2 | 40.47 | 41.12 | 0.65 | 297 | 301 | 4 | 19.905 | 20 | 0.095 |
| 3 | 40.78 | 40.41 | 0.37 | 232 | 237 | 5 | 29.841 | 30 | 0.159 |
| 4 | 39.46 | 39.50 | 0.04 | 182 | 188 | 6 | 39.885 | 40 | 0.115 |
| 5 | 38.54 | 38.61 | 0.07 | 147 | 155 | 8 | 49.877 | 50 | 0.123 |
| 6 | 35.23 | 35.43 | 0.20 | 120 | 123 | 3 | 54.844 | 55 | 0.156 |
| 7 | 27.13 | 27.27 | 0.14 | 83 | 88 | 5 | 69.881 | 70 | 0.119 |
| 8 | 25.02 | 25.34 | 0.32 | 45 | 43 | 2 | 74.859 | 75 | 0.141 |
| 9 | 22.69 | 22.93 | 0.24 | 25 | 14 | 11 | 99.87 | 100 | 0.130 |
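The error columns in the table above are absolute differences between the sensor reading and the reference value. The sketch below recomputes them from the tabulated (test, actual) pairs and summarizes each sensor by its mean and maximum absolute error. The variable and function names are illustrative; the data are copied from the table.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]  # (test reading, actual/reference value)

temperature_c: List[Pair] = [
    (43.00, 43.24), (40.47, 41.12), (40.78, 40.41), (39.46, 39.50), (38.54, 38.61),
    (35.23, 35.43), (27.13, 27.27), (25.02, 25.34), (22.69, 22.93),
]
pressure_mm: List[Pair] = [
    (305, 309), (297, 301), (232, 237), (182, 188), (147, 155),
    (120, 123), (83, 88), (45, 43), (25, 14),
]
turbidity_ppm: List[Pair] = [
    (9.897, 10), (19.905, 20), (29.841, 30), (39.885, 40), (49.877, 50),
    (54.844, 55), (69.881, 70), (74.859, 75), (99.87, 100),
]


def abs_errors(pairs: Sequence[Pair]) -> List[float]:
    """Absolute error per sample, matching the table's error columns."""
    return [abs(test - actual) for test, actual in pairs]


def summarize(pairs: Sequence[Pair]) -> Tuple[float, float]:
    """Return (mean absolute error, maximum absolute error)."""
    errors = abs_errors(pairs)
    return sum(errors) / len(errors), max(errors)


if __name__ == "__main__":
    for name, data in [("temperature (°C)", temperature_c),
                       ("pressure (mm)", pressure_mm),
                       ("turbidity (ppm)", turbidity_ppm)]:
        mae, worst = summarize(data)
        print(f"{name}: MAE={mae:.3f}, max={worst:.3f}")
```

The largest pressure deviation occurs in sample 9, the shallowest reading.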

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, L.; Tang, Y.; Zhang, W.; Tang, Q.; Hao, H. YOLO-PWSL-Enhanced Robotic Fish: An Integrated Object Detection System for Underwater Monitoring. Appl. Sci. 2025, 15, 7052. https://doi.org/10.3390/app15137052
