Root Cause Analysis of Cast Product Defects with Two-Branch Reasoning Network Based on Continuous Casting Quality Knowledge Graph

Abstract

1. Introduction

- We propose KGTBRN for the continuous casting domain. To meet the requirements of root cause analysis for cast product defects, it uses one connection layer and one capsule layer to handle different types of entity-side reasoning tasks, which improves both the adaptability of KGTBRN to different relation features and its reasoning accuracy.

- We verify KGTBRN experimentally on a continuous casting data set and compare it with existing state-of-the-art methods. On the continuous casting data set, KGTBRN obtains the best mean reciprocal rank (MRR) and the highest HITS@1, HITS@3 and HITS@10. It also demonstrates clear advantages on the benchmark data set FB15k-237 and shows good generalization performance on the WN18RR data set.
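The evaluation metrics named above follow the usual link prediction definitions, which can be sketched as below. This is a minimal illustration, assuming each test query yields the 1-based rank of the correct entity; the function names and the example ranks are hypothetical and are not taken from the paper.

```python
from typing import Sequence


def mean_reciprocal_rank(ranks: Sequence[int]) -> float:
    """Average of 1/rank over all test queries (ranks are 1-based)."""
    return sum(1.0 / r for r in ranks) / len(ranks)


def hits_at_k(ranks: Sequence[int], k: int) -> float:
    """Percentage of queries whose correct entity is ranked in the top k."""
    return 100.0 * sum(1 for r in ranks if r <= k) / len(ranks)


# Hypothetical ranks of the correct entity for five test queries.
example_ranks = [1, 3, 2, 12, 1]

mrr = mean_reciprocal_rank(example_ranks)
hits1 = hits_at_k(example_ranks, 1)
hits3 = hits_at_k(example_ranks, 3)
hits10 = hits_at_k(example_ranks, 10)
```

By construction, HITS@1 ≤ HITS@3 ≤ HITS@10 for any set of ranks, and MRR lies between 0 and 1, which gives a quick sanity check when reading result tables.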

2. Related Works

2.1. Root Cause Traceability of Cast Product Defects

2.2. Industrial KGs

2.3. GATs

2.4. Knowledge Graph Completion

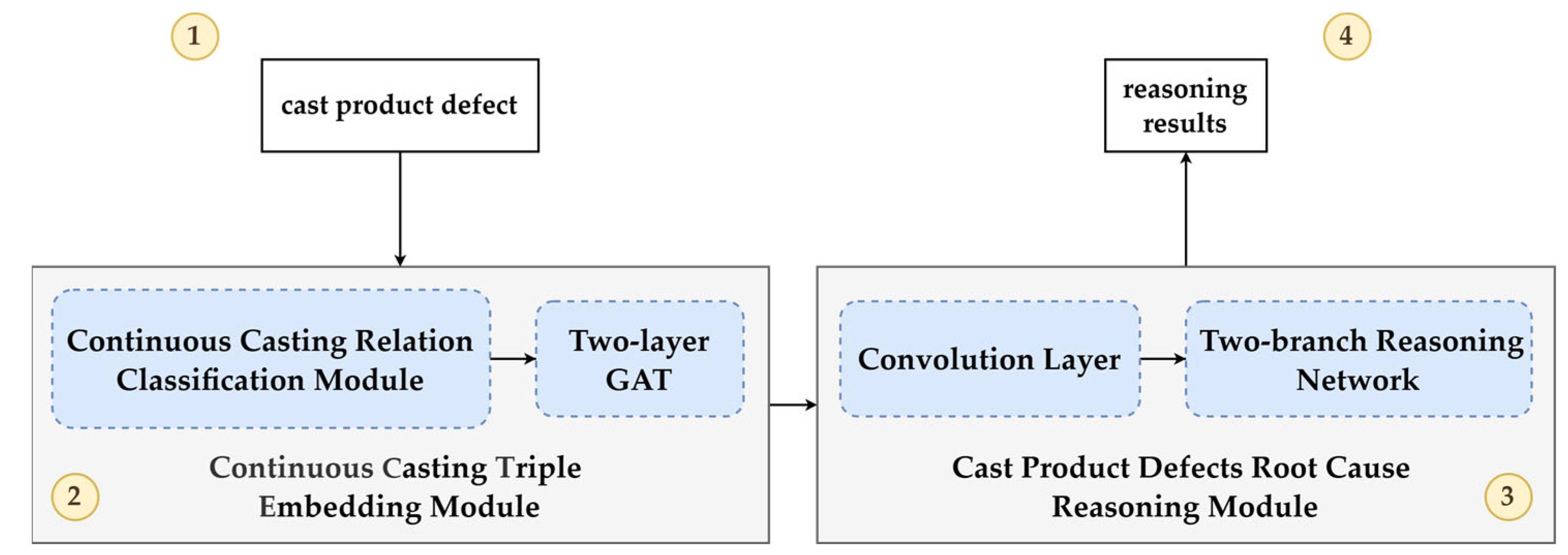

3. Methods

3.1. Problem Modeling

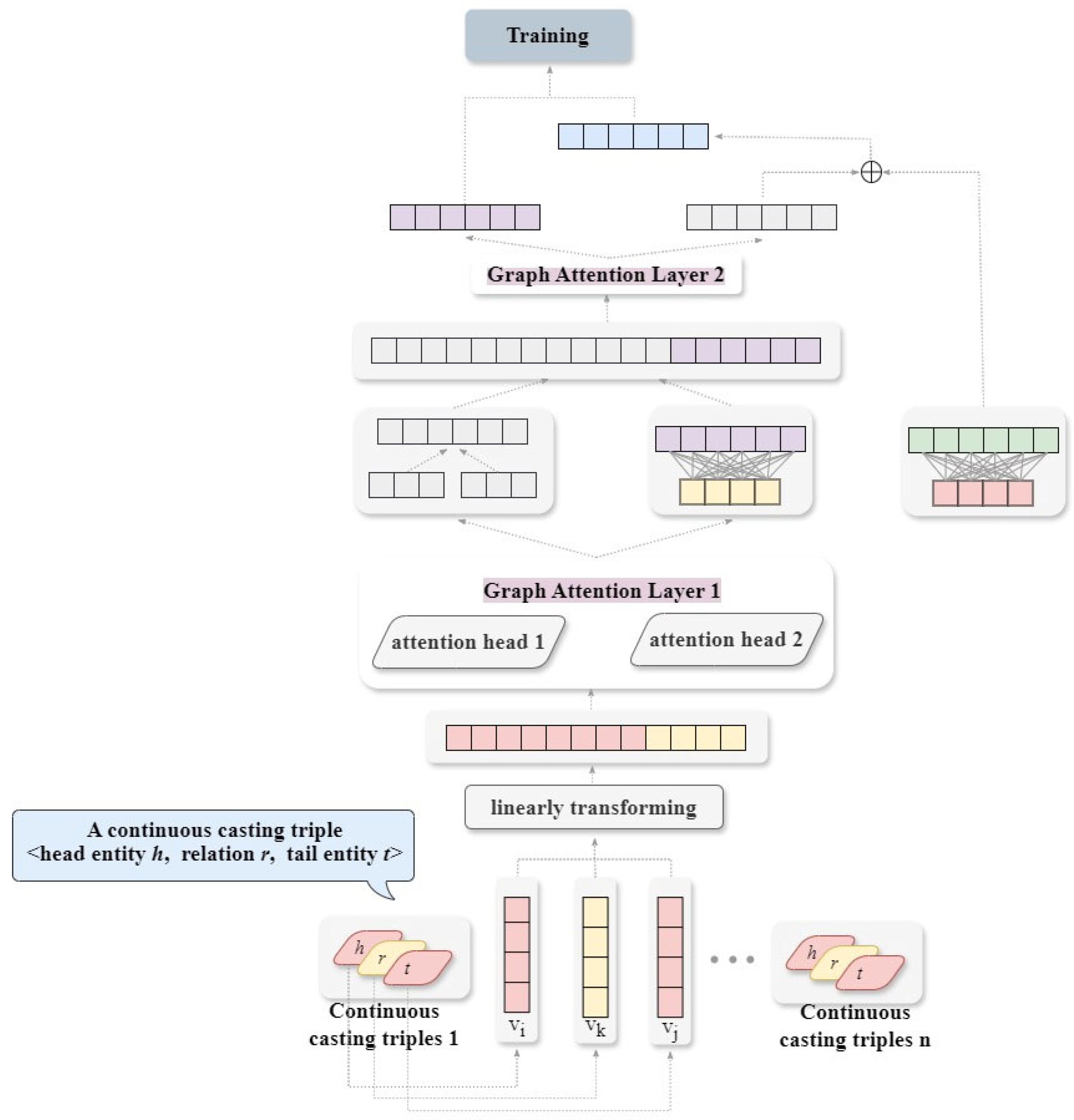

3.2. Continuous Casting Triple-Embedding Module Based on GAT

3.2.1. Continuous Casting Relation Classification Module

3.2.2. GAT Embedding Module

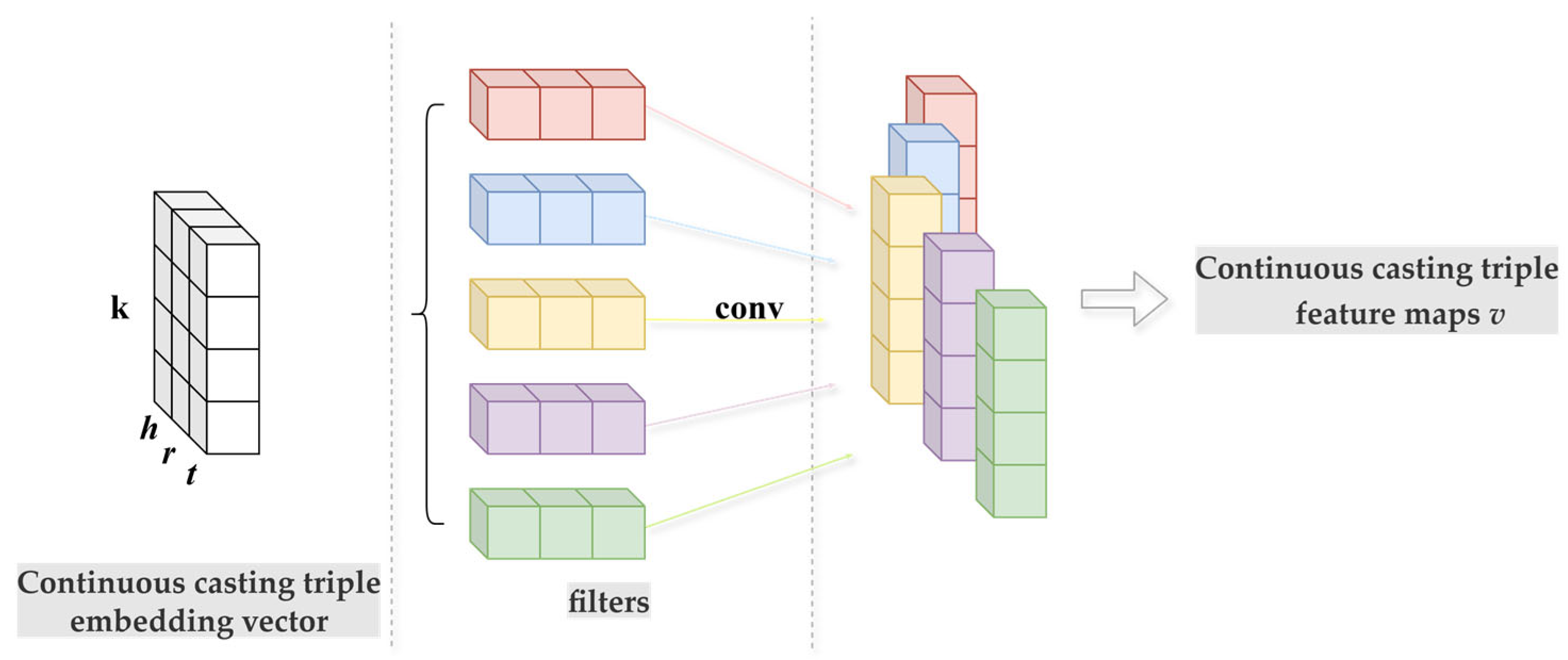

3.3. Cast Product Defect Root Cause Reasoning Module of C2Q-KG Based on Two-Branch Reasoning Network

- (i) Replace the existing head entity while retaining the relation and tail entity to generate a 1-N type negative sample, which is used to train head entity reasoning on 1-N type casting triples.

- (ii) Replace the existing tail entity while retaining the relation and head entity to generate a 1-N type negative sample, which is used to train tail entity reasoning on 1-N type casting triples.
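The two replacement rules above can be sketched as a small corruption routine. This is an illustrative sketch only: the function name `corrupt_triple`, the filtering of candidates that already exist as true triples, and the uniform random choice are assumptions, not details confirmed by the paper. The entity strings are borrowed from the example triples for readability.

```python
import random
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]


def corrupt_triple(triple: Triple, entities: List[str], known: Set[Triple],
                   side: str, rng: random.Random) -> Triple:
    """Replace the head (side="head") or tail (side="tail") of a triple.

    The relation and the opposite entity are retained. Candidates that
    would reproduce a known true triple are skipped (an assumption).
    """
    head, relation, tail = triple
    replaced = head if side == "head" else tail
    candidates = [e for e in entities if e != replaced]
    rng.shuffle(candidates)
    for entity in candidates:
        if side == "head":
            negative = (entity, relation, tail)
        else:
            negative = (head, relation, entity)
        if negative not in known:
            return negative
    raise ValueError("no valid negative sample for this triple")


known = {
    ("Bridging", "lead to", "Solute-enriched steel sucked into void"),
    ("Solute-enriched steel sucked into void", "cause", "Central segregation"),
    ("Belly drum", "solution", "Keep the clamp roller rigid"),
}
entities = sorted({h for h, _, _ in known} | {t for _, _, t in known})

positive = ("Belly drum", "solution", "Keep the clamp roller rigid")
rng = random.Random(0)  # fixed seed so the sketch is reproducible
neg_head = corrupt_triple(positive, entities, known, "head", rng)  # rule (i)
neg_tail = corrupt_triple(positive, entities, known, "tail", rng)  # rule (ii)
```

Head-side negatives would feed the head entity reasoning task and tail-side negatives the tail entity reasoning task, mirroring items (i) and (ii).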

4. Experiment and Analysis

4.1. C2Q-KG

4.2. Performance Evaluation of Root Cause Analysis Model for Cast Product Defects

4.3. Ablation Experiment of Root Cause Analysis Model for Cast Product Defects

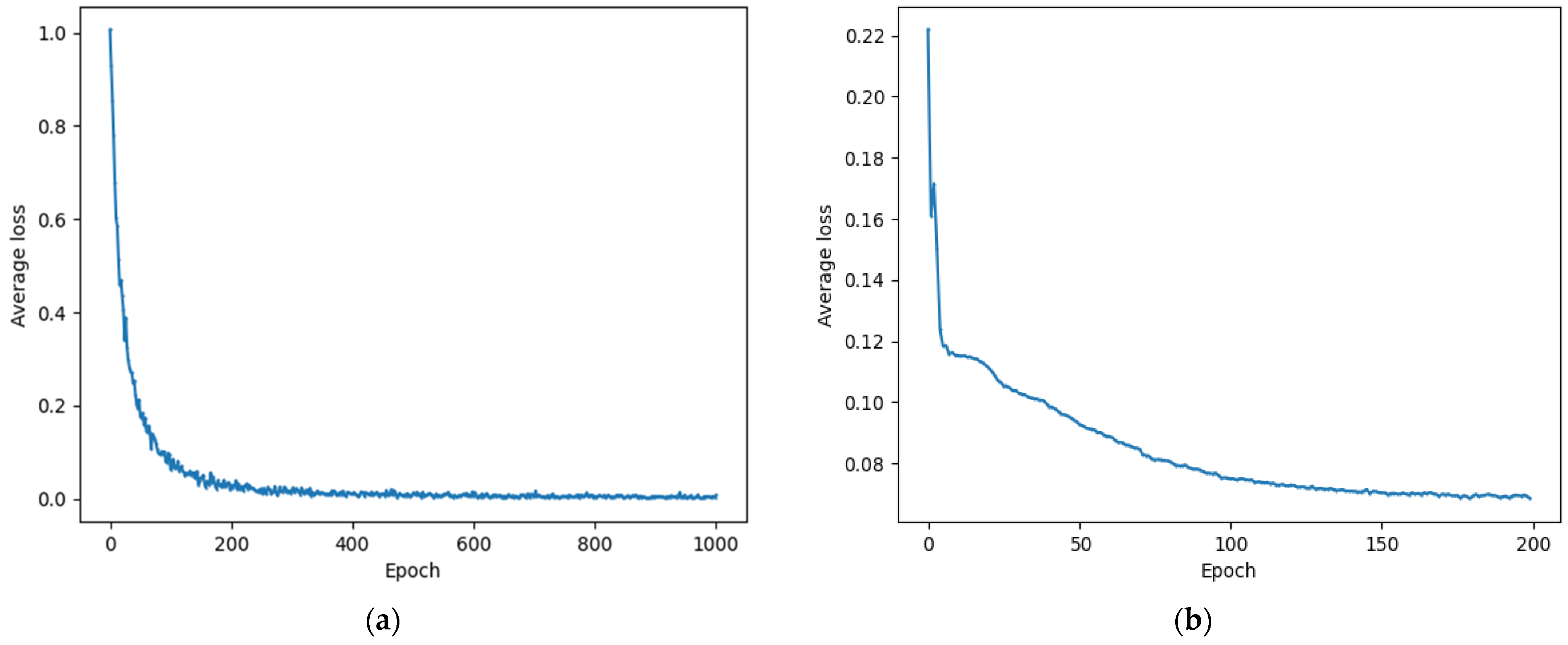

4.4. Experiments on Hyperparameters of GAT Layer and Reasoning Layer

4.5. Generalization Experiment of Root Cause Analysis Model for Cast Product Defects

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Continuous Casting Head Entity | Continuous Casting Relation | Continuous Casting Tail Entity | Relation Type | Reasoning Need | Reasoning Selection |
|---|---|---|---|---|---|
| Uneven water cooling in secondary cooling zone | Lead to | Columnar crystals grow faster | N-N | Continuous casting head entity reasoning | Capsule layer |
| Liquid steel enriched with solute elements is sucked into the void | Cause | Central segregation | 1-N | Continuous casting head entity reasoning | Connection layer |
| Bridging | Lead to | The enriched solute element molten steel is sucked into the void | N-N | Continuous casting head entity reasoning | Capsule layer |
| Belly drum | Solution | Keep the clamp roller rigid | 1-1 | Continuous casting tail entity reasoning | Connection layer |
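The relation types in the table (1-1, 1-N, N-1, N-N) can be derived from triple statistics. The sketch below uses the common convention of averaging tails per head and heads per tail for each relation and treating a side as "N" when the average reaches 1.5. Both the threshold and the per-relation granularity are assumptions; the paper's own classification module may differ. The toy triples and entity names are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, Tuple

Triple = Tuple[str, str, str]


def classify_relations(triples: Iterable[Triple],
                       threshold: float = 1.5) -> Dict[str, str]:
    """Label each relation as 1-1, 1-N, N-1 or N-N."""
    tails_of = defaultdict(set)  # (relation, head) -> distinct tails
    heads_of = defaultdict(set)  # (relation, tail) -> distinct heads
    for head, relation, tail in triples:
        tails_of[(relation, head)].add(tail)
        heads_of[(relation, tail)].add(head)

    tails_per_head = defaultdict(list)
    heads_per_tail = defaultdict(list)
    for (relation, _head), tails in tails_of.items():
        tails_per_head[relation].append(len(tails))
    for (relation, _tail), heads in heads_of.items():
        heads_per_tail[relation].append(len(heads))

    labels = {}
    for relation in tails_per_head:
        head_side = "1" if mean(heads_per_tail[relation]) < threshold else "N"
        tail_side = "1" if mean(tails_per_head[relation]) < threshold else "N"
        labels[relation] = f"{head_side}-{tail_side}"
    return labels


# Hypothetical toy triples, not taken from the continuous casting data set.
toy_triples = [
    ("defect_a", "solution", "fix_a"),
    ("defect_b", "solution", "fix_b"),
    ("cause_1", "cause", "defect_a"),
    ("cause_2", "cause", "defect_a"),
    ("cause_3", "cause", "defect_a"),
    ("defect_a", "feature is", "feature_1"),
    ("defect_a", "feature is", "feature_2"),
    ("defect_a", "feature is", "feature_3"),
]
relation_types = classify_relations(toy_triples)
```

A label produced this way, combined with the reasoning need (head or tail entity), could then select between the capsule layer and the connection layer as the table's last column illustrates.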

| Parameter | Number |
|---|---|
| continuous casting entities | 1144 |
| continuous casting relations | 5 |
| continuous casting triples | 1626 |
| describe | 144 |
| lead to | 349 |
| feature is | 261 |
| solution | 297 |
| cause | 575 |
| total | 2396 |

| Model | MRR | HITS@1 (%) | HITS@3 (%) | HITS@10 (%) |
|---|---|---|---|---|
| TransE | 0.492 | 19.5 | 24.7 | 56.8 |
| DistMult | 0.614 | 21.9 | 30.2 | 65.4 |
| ComplEx | 0.597 | 22.8 | 31.5 | 62.1 |
| KGLM | 0.712 | 31.4 | 42.2 | 67.5 |
| KERMIT | 0.697 | 30.3 | 45.4 | 71.3 |
| Ours | 0.893 | 37.2 | 51.6 | 76.9 |

| Ablation | HITS@1 (%) | HITS@3 (%) | HITS@10 (%) |
|---|---|---|---|
| Model-attention | 31.5 | 43.7 | 69.8 |
| Model-concat | 32.6 | 46.9 | 71.0 |
| Model-capsnet | 35.2 | 48.2 | 73.4 |
| All | 37.2 | 51.6 | 76.9 |

| epoch_attention (iterations) | epoch_reasoning (iterations) | HITS@1 (%) | HITS@3 (%) | HITS@10 (%) |
|---|---|---|---|---|
| 1000 | 200 | 37.2 | 51.6 | 76.9 |
| 1000 | 150 | 33.8 | 46.7 | 72.0 |
| 1000 | 250 | 33.6 | 44.2 | 67.3 |
| 500 | 200 | 35.5 | 47.3 | 73.6 |
| 500 | 150 | 34.2 | 48.5 | 74.4 |
| 500 | 250 | 32.0 | 45.1 | 71.8 |
| 800 | 200 | 36.8 | 50.3 | 74.2 |
| 800 | 150 | 35.4 | 48.9 | 75.1 |
| 800 | 250 | 36.4 | 47.1 | 69.4 |

| Model | HITS@1 (%) | HITS@3 (%) | HITS@10 (%) |
|---|---|---|---|
| ConvE | 23.7 | 35.6 | 50.1 |
| TransE | 19.9 | 31.4 | 47.1 |
| ConvKB | 24.6 | 35.2 | 51.7 |
| KGLM | 20.0 | 31.4 | 46.8 |
| KERMIT | 26.6 | 39.6 | 54.7 |
| NBFNet | 32.1 | 45.4 | 59.9 |
| Ours | 34.8 | 47.3 | 58.3 |

| Model | HITS@1 (%) | HITS@3 (%) | HITS@10 (%) |
|---|---|---|---|
| ConvE | 41.5 | 46.3 | 50.1 |
| TransE | 38.7 | 44.1 | 53.8 |
| ConvKB | 53.5 | 44.5 | 56.8 |
| KGLM | 33.0 | 53.8 | 74.1 |
| KERMIT | 59.1 | 66.4 | 78.0 |
| NBFNet | 48.5 | 56.4 | 64.1 |
| Ours | 58.5 | 67.7 | 77.9 |

| Data set | 1-1 (%) | 1-N (%) | N-1 (%) | N-N (%) |
|---|---|---|---|---|
| FB15k-237 | 0.94 | 6.32 | 22.03 | 70.72 |
| Continuous casting data set | 8.9 | 21.5 | 34.3 | 35.3 |

| Casting Defect | Root Cause Analysis Task | Root Cause Analysis Result: Root Cause 1 | Root Cause Analysis Result: Root Cause 2 |
|---|---|---|---|
| Cracks appear on the surface of the casting | <?, cause, surface cracks> Head entity reasoning | The first brittleness temperature zone moves towards low temperature | Deviation in critical stress appears |
| Casting belly bulging | <?, cause, bulge> Head entity reasoning | Casting shell thinning | Liquid molten steel static pressure deviation |
| The thickness of the solidified shell is uneven | <?, lead to, uneven thickness of solidified shell> Head entity reasoning | Mold liquid level fluctuation | The protective slag does not melt well |
| Air bubbles escape from the molten steel | <?, lead to, bubble escape> Head entity reasoning | Oxygen, nitrogen, hydrogen, argon and carbon are at the front of the solidification interface | Shear effect of molten steel |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wu, X.; Wang, X.; She, Y.; Sun, M.; Gao, Q. Root Cause Analysis of Cast Product Defects with Two-Branch Reasoning Network Based on Continuous Casting Quality Knowledge Graph. Appl. Sci. 2025, 15, 6996. https://doi.org/10.3390/app15136996