Abstract

Periodontitis is a severe infection that damages the bone surrounding the teeth, and assessing its severity from radiographs is challenging for dentists. Artificial intelligence (AI) techniques can improve diagnostic accuracy and efficiency in radiographic analysis. The aim of this research is to develop an AI-based diagnostic system comprising two deep learning stages that detects and classifies periodontitis from panoramic radiographs. In the proposed two-stage system, a binary classifier first determines whether periodontal bone loss is present; the second AI model then localizes the bone loss and classifies its severity. Based on our research, we concluded that convolutional neural networks (CNNs) are the most effective type of neural network for this problem. We therefore developed two CNN models, MobileNet-v2 and YOLOv8, both of which have performed well in similar applications. The primary model, MobileNet-v2, detects periodontitis in panoramic radiographs, while the secondary model, YOLOv8, localizes the affected regions and classifies the severity level of the periodontitis. A custom dataset of 817 panoramic images was assembled. Our YOLOv8 model achieved a testing precision of 0.74 and a recall of 0.70, while the MobileNet-v2 model achieved a testing accuracy of 0.88 and a recall of 1.0. These results were further validated by an oral radiology specialist. Compared with existing systems in the literature and similar commercial software services, our proposed system is efficient, cost-effective, and easy to use for enhancing the diagnosis of periodontitis in clinical practice.

Keywords:

AI; deep learning; periodontal bone loss; PBL; periodontitis; CNN; YOLO v8; MobileNet-v2; panoramic radiographs; oral radiology

1. Introduction

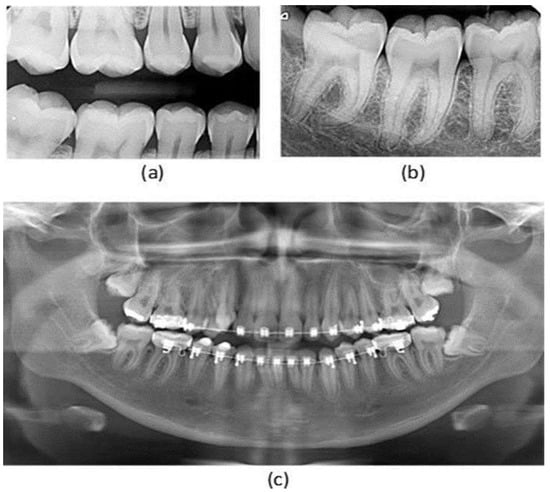

Periodontitis, affecting over 740 million people globally, is a chronic inflammatory disease that results in bone and attachment loss, potentially leading to tooth loss. Beyond its oral health implications, it is associated with systemic conditions such as heart disease, stroke, and diabetes. Early diagnosis is critical to prevent progression to advanced stages, where tooth support structures are destroyed [1]. However, current diagnostic methods, such as soft tissue probing and radiographic imaging, pose clinical challenges due to their limited reliability and dependence on expert interpretation. Radiographic imaging, particularly panoramic, bitewing, and periapical radiographs (Figure 1), is commonly used in dental clinics to support early detection and diagnosis [2].

Figure 1.

Types of X-ray images: (a) bitewing X-ray, (b) periapical X-ray, (c) panoramic X-ray [2].

Traditional methods often fall short in detecting early-stage disease or assessing severity (mild, moderate, and severe), making it essential to explore more robust diagnostic tools. Artificial Intelligence (AI), particularly Convolutional Neural Networks (CNNs), offers promising capabilities for improving diagnostic accuracy and efficiency in identifying serious conditions like periodontitis [1,3]. A CNN is a type of deep learning model designed to automatically learn and identify image patterns, which makes it highly effective in detecting and classifying periodontal bone loss from radiographs [4,5,6,7]. Compared to traditional machine learning models, CNNs such as Faster R-CNN and YOLO excel in automation, speed, and reliability [2,3,4,5,6]. They can handle large datasets, reduce clinician workload, and improve precision while potentially lowering costs and minimizing misclassification in clinical practice [8,9,10,11]. These benefits position CNNs as a promising tool for advancing periodontal diagnosis and management [12,13,14].

Panoramic radiographs are favored in dental practice due to their wide coverage, low radiation dose, and diagnostic versatility. While several CNN-based models have utilized panoramic images in the literature, numerous limitations and challenges persist, such as small datasets, lack of standardized labeling, and limited exploration of advanced model architectures [15,16,17,18]. Additional difficulties include the presence of braces, misaligned teeth, and poor image quality due to artifacts, which complicate severity assessment [4,5,6]. Moreover, to the best of our knowledge, no prior studies have attempted to classify the full range of periodontitis severity from panoramic images, revealing a major gap in the literature.

This significant research gap motivates our research study, in which we propose a novel AI-based classification system to enable accurate early detection of periodontitis severity from panoramic radiographs. This study aims to develop a two-stage deep learning framework as follows: a primary CNN model detects the presence of periodontal bone loss (PBL), and a secondary model localizes and classifies its severity. This two-stage detection and classification system aims to reduce false positives in the overall diagnosis process while optimizing each model’s performance, leading to an efficient and reliable diagnostic tool targeting early detection and diagnosis of PBL, particularly for use by non-experts at primary healthcare and specialized dental clinics.

2. Materials and Methods

2.1. Proposed System

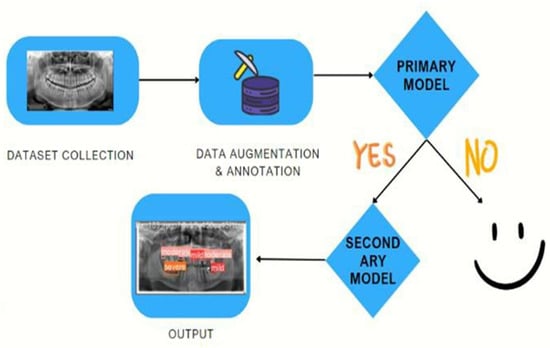

In this research, we propose an AI-based system that comprises two robust models as two screening stages to enhance and facilitate the PBL diagnosis process using panoramic radiographs. Our proposed system pipeline is depicted in Figure 2, where it starts with the acquisition of the dataset, to be subsequently subjected to some preprocessing techniques. The processed and annotated data are fed into our primary model. This model performs binary classification to determine the presence of periodontal bone loss. If the image is classified as indicative of periodontitis, it is then passed to our secondary model, which is responsible for identifying the location of the periodontal bone loss as well as classifying its severity.
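The gating logic of the pipeline described above can be sketched in a few lines of Python. This is only an illustration, not the authors' implementation: the names `Finding`, `Report`, and `diagnose`, the callables `classify` and `detect`, and the 0.5 decision threshold are all assumptions introduced here.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class Finding:
    """One localized PBL region (hypothetical structure)."""
    box: Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
    severity: str                   # "mild", "moderate", or "severe"
    confidence: float


@dataclass
class Report:
    pbl_present: bool
    findings: List[Finding]


def diagnose(
    image: object,
    classify: Callable[[object], float],
    detect: Callable[[object], Sequence[Finding]],
    threshold: float = 0.5,  # assumed cut-off, not stated in the study
) -> Report:
    """Run the binary classifier first; run the detector only on positives."""
    if classify(image) < threshold:
        # Stage 1 negative: the image never reaches the detector.
        return Report(pbl_present=False, findings=[])
    return Report(pbl_present=True, findings=list(detect(image)))
```

Because the detector is only invoked when the first stage is positive, radiographs judged healthy by the classifier cannot produce spurious detections in the second stage.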

Figure 2.

Our proposed detection-classification system pipeline.

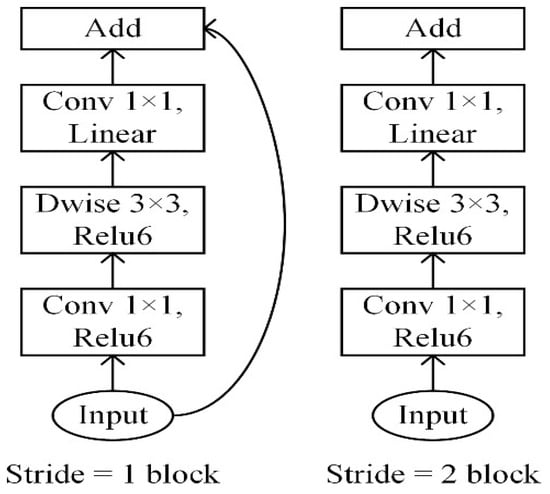

A deep learning model for the diagnosis of periodontal bone loss in panoramic radiographs was developed [19]. Using TensorFlow and Keras [20], we incorporated the pre-trained MobileNetV2 model, shown in Figure 3, for the binary classification task. MobileNetV2 is well suited to this task because its inverted residuals and linear bottlenecks [21] maintain high accuracy while reducing computational complexity. Rather than using the default output layer, we added custom layers to capture the features essential for disease detection. OpenCV is used for image processing, as shown in Figure 4, while NumPy and Pandas assist in managing and augmenting the data. Before being input to the model, images are resized to 350 × 350 pixels and brightened by 10% for improved clarity and consistency [22]. Visualizations are used to inspect the dataset, track model performance, and evaluate the effects of augmentation. During training, we monitored accuracy and recall, optimized the model with the Adam optimizer, and applied augmentations such as zooming and flipping to improve generalization.
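As a rough illustration of the resizing and brightening step, the sketch below uses NumPy only. It is a stand-in, not the authors' OpenCV code: nearest-neighbour resizing replaces whatever interpolation was actually used, and "brightened by 10%" is assumed to mean multiplying 8-bit intensities by 1.10 with clipping. The function names are hypothetical.

```python
import numpy as np


def resize_nearest(image: np.ndarray, size: int = 350) -> np.ndarray:
    """Nearest-neighbour resize of an H x W (x C) array to size x size."""
    height, width = image.shape[:2]
    rows = np.arange(size) * height // size  # source row for each output row
    cols = np.arange(size) * width // size   # source column for each output column
    return image[rows[:, None], cols]


def brighten(image: np.ndarray, factor: float = 1.10) -> np.ndarray:
    """Scale 8-bit intensities by `factor` (assumed multiplicative), clip to [0, 255]."""
    scaled = image.astype(np.float32) * factor
    return np.clip(np.rint(scaled), 0, 255).astype(np.uint8)


def preprocess(image: np.ndarray, size: int = 350, factor: float = 1.10) -> np.ndarray:
    """Resize, then brighten, mirroring the order described in the text."""
    return brighten(resize_nearest(image, size), factor)
```

Clipping matters here because already-bright pixels would otherwise overflow the 8-bit range and wrap around to dark values.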

Figure 3.

MobileNetv2 architecture [21].

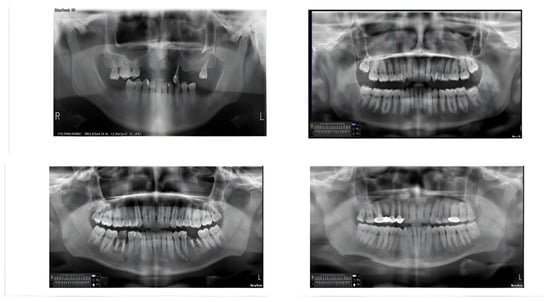

Figure 4.

Samples from the dataset using OpenCV before training.

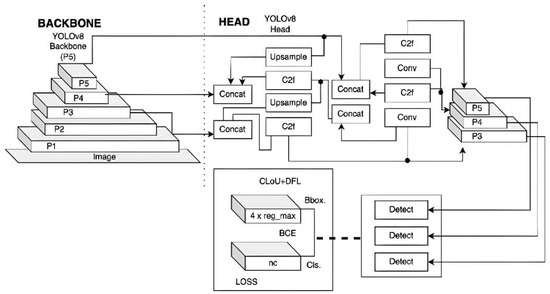

We integrated YOLOv8, whose architecture is shown in Figure 5, as our secondary model to detect the location of periodontitis in the radiographs and classify the severity of each instance [23]. YOLOv8’s single-stage detection framework, coupled with its anchor-free approach and Feature Pyramid Network (FPN), allows for real-time detection and accurate classification of periodontal disease severity [22,24]. The model was fine-tuned on a custom dataset of periodontal bone loss for 50 epochs with an image size of 640 × 640. Its main output is the location of every PBL area (one or more) in a single radiograph, together with the severity level of each detected area: mild, moderate, or severe. This two-model diagnosis system aims to automate PBL detection and severity assessment, ultimately leading to improved patient care outcomes.
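Since the secondary model can return several detections per radiograph, each with its own severity, a per-image summary is a natural post-processing step. The sketch below is a hypothetical helper, not part of the described system: the `(label, confidence)` tuple format, the `summarise` function, and the 0.25 confidence cut-off are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, Iterable, Optional, Tuple

SEVERITY_ORDER = ("mild", "moderate", "severe")  # class names taken from the text
Detection = Tuple[str, float]                    # (severity label, confidence)


def summarise(detections: Iterable[Detection], min_conf: float = 0.25) -> Dict[str, object]:
    """Count detections per severity and report the most severe class found."""
    kept = [(label, conf) for label, conf in detections if conf >= min_conf]
    counts = Counter(label for label, _ in kept)
    worst: Optional[str] = max(
        (label for label, _ in kept),
        key=SEVERITY_ORDER.index,
        default=None,  # no detection survived the confidence filter
    )
    return {
        "counts": {severity: counts.get(severity, 0) for severity in SEVERITY_ORDER},
        "worst": worst,
    }
```

For example, `summarise([("mild", 0.9), ("severe", 0.6)])` reports one mild and one severe region, with `"severe"` as the worst finding.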

Figure 5.

YOLOv8 architecture [23].

2.2. Dataset Collection and Preparation

Our dataset comprises 817 panoramic radiographic images, each annotated to identify varying degrees of periodontal bone loss. Of these images, 562 were provided by the College of Dentistry, Oral and Maxillofacial Diagnosis and Surgery Department at City University Ajman, UAE, and were acquired using the Newtom Go 2D/2D Pan/Ceph machine with NewTom NNT Software (https://www.newtom.it/), Cefla Imaging, Imola, Italy; these images constitute our custom private dataset. The remaining 255 images were sourced from the Cerda Mardini dataset (2022) [17]. Table 1 summarizes the collected dataset, where the primary model (MobileNetV2) was trained using the collected 562 images from City University Ajman and the secondary model (YOLOv8) was trained using the combined dataset of 817 images.

Table 1.

Summary of the dataset used in the training phase of the proposed AI models.

This research was conducted in accordance with the principles outlined in the Declaration of Helsinki. Ethical approval was obtained from the relevant institutional review board, and informed consent was secured from all patients through a dedicated consent form, which explicitly stated that their radiographs and photographs may be used for research purposes. The inclusion and exclusion criteria used for selecting the panoramic radiographs are as follows:

2.2.1. Inclusion Criteria

- Image Quality: Radiographs with clear visualization of dental structures, including alveolar bone levels and periodontal ligament space.

- Diagnostic Relevance: Radiographs that provide sufficient detail for periodontal bone loss assessment.

- Patient Age: Radiographs from patients aged 18 years and above to ensure complete dentition development.

- Clinical History: Cases with documented periodontal diagnosis or relevant clinical history.

- Radiograph Type: Only digital panoramic radiographs were considered to ensure image quality and format consistency.

- Timeframe: Radiographs taken within the past three years to ensure data relevance to current clinical practices.

2.2.2. Exclusion Criteria

- Poor Image Quality: Radiographs with severe distortions, artifacts, or insufficient contrast that may hinder accurate diagnosis.

- Incomplete Dental Records: Cases lacking sufficient clinical history or periodontal diagnosis details.

- Primary Dentition: Radiographs showing primary or mixed dentition were excluded to maintain focus on adult periodontal conditions.

- Post-Surgical Radiographs: Images taken after surgical interventions that alter bone structure significantly.

- Non-Panoramic Images: Radiographs such as periapical, bitewing, or CBCT scans that do not meet the panoramic format requirement.

- Pathological Conditions: Cases with extensive bone pathology unrelated to periodontal disease (e.g., tumors and cysts) were excluded to avoid confounding factors.

For the annotation process, we utilized Roboflow, a leading platform for computer vision and image annotation tasks [24]. Roboflow provides an intuitive interface that simplifies the labeling of complex medical images, allowing for high precision in identifying features such as periodontal bone loss. The platform supports various data formats and offers robust tools for data augmentation, which were critical in enhancing the diversity and quality of our dataset.

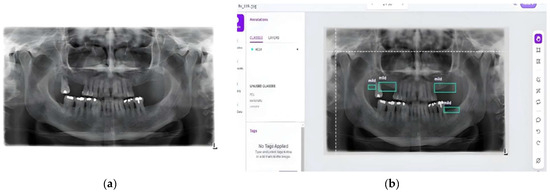

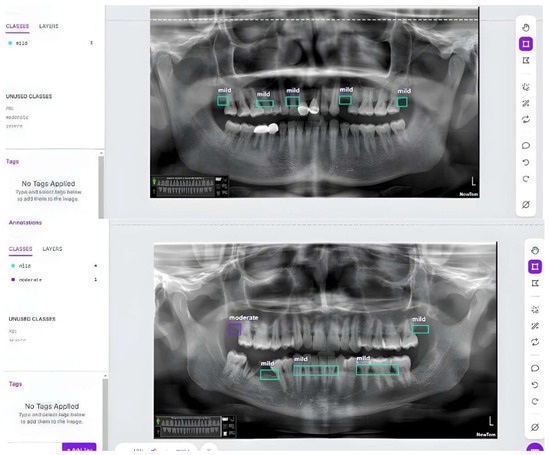

The annotation process involved marking regions of interest in each image to indicate the severity of bone loss. Figure 6 and Figure 7 illustrate the pre- and post-annotation versions of some images. All annotations were produced and validated by a board of certified oral and maxillofacial radiologists. These annotated images served as the foundation for training our deep learning models to detect and classify different levels of bone loss.

Figure 6.

Sample from the collected dataset [17]: (a) before annotation and (b) after annotation using Roboflow.

Figure 7.

Samples from our custom dataset after annotation using Roboflow.

The annotated images were split so that 80% were used for training and 20% were reserved for validation. Additionally, for testing purposes, a separate set of 600 panoramic radiographs was utilized from the Roboflow library. Access to these images can be granted upon request. All images were resized to 350 × 350 pixels with a 10% increase in brightness for binary classification, and to 640 × 640 pixels with 13% gray-scaling for multi-class classification. The only augmentations applied during preprocessing were rotation and scaling; no additional steps were taken. In summary, our total training dataset for both models comprises 817 annotated panoramic radiographic images, while a separate dataset of 600 panoramic radiographs (https://zenodo.org/records/4457648, accessed on 6 June 2025) was used for testing purposes.
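An 80/20 split of this kind can be reproduced with a seeded shuffle. The sketch below is illustrative only: the function name, the seed, the file names, and the rounding convention (truncating the training count) are assumptions, and the study does not report the exact number of images in each subset.

```python
import random
from typing import List, Sequence, Tuple


def split_train_val(
    items: Sequence[str], train_fraction: float = 0.8, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Shuffle deterministically, then cut into training and validation lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split repeatable
    n_train = int(len(shuffled) * train_fraction)
    return shuffled[:n_train], shuffled[n_train:]


# Hypothetical file names standing in for the 817 annotated radiographs.
image_names = [f"radiograph_{i:04d}.png" for i in range(817)]
train_names, val_names = split_train_val(image_names)
```

Under this rounding convention, 817 images yield 653 training and 164 validation images.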

3. Results

3.1. Implementation Details of the Proposed System

The two AI models that make up our proposed system were trained using the collected dataset, which comprises 817 panoramic images, and tested using the test dataset of 600 panoramic images. Our first model, MobileNetV2, was trained for 10, 15, 17, and 20 epochs; 15 epochs proved optimal, and all reported results for this model are based on 15 epochs of training. Our second model, YOLOv8, was trained for 30 and 50 epochs for object detection of periodontal bone loss across the three severity levels; 50 epochs proved optimal, and all reported results for this model are based on 50 epochs of training. In addition to this quantitative evaluation, the models’ outputs were reviewed by a board-certified oral and maxillofacial radiologist to confirm the accuracy and reliability of the classifications.

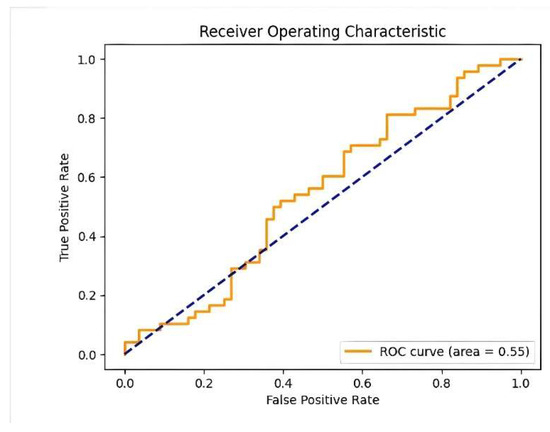

3.2. Results of the Primary Model (MobileNetV2)

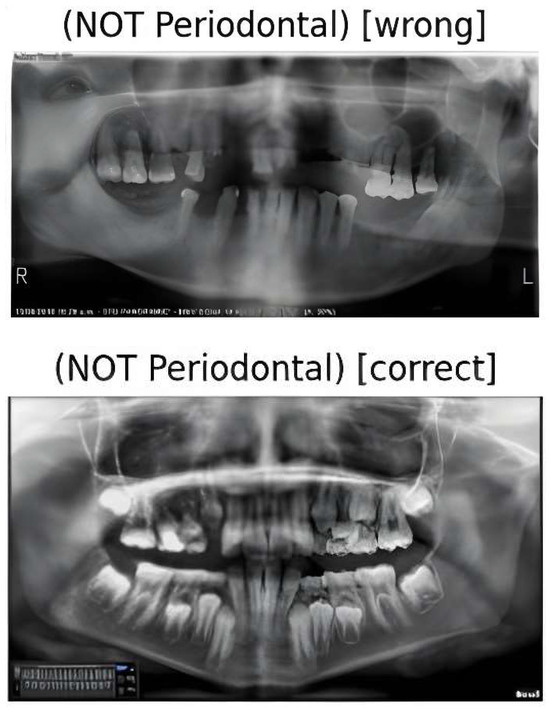

After training the MobileNetV2 model, we evaluated its performance on the test dataset. The model achieved a testing accuracy of 88% with a recall of 1.0 and a loss of 0.2966. Figure 8 shows the performance of our binary classification model (MobileNetV2) across different threshold settings as a receiver operating characteristic (ROC) curve. These results indicate that the model can reliably distinguish radiographs with periodontal bone loss from those without, supporting our choice of this model and dataset for this study. Figure 9 shows test samples from the model validation process, illustrating the performance of the MobileNetV2 model in detecting PBL patterns in panoramic radiographs.
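To make the relationship between accuracy and recall concrete, the following sketch computes the standard binary metrics from confusion-matrix counts. The study does not report its confusion matrix, so the counts below are purely hypothetical, chosen only so that accuracy and recall come out at the reported 0.88 and 1.0 for a 600-image test set.

```python
from typing import Dict


def binary_metrics(tp: int, fp: int, tn: int, fn: int) -> Dict[str, float]:
    """Standard metrics from confusion-matrix counts (denominators assumed non-zero)."""
    total = tp + fp + tn + fn
    return {
        "accuracy": (tp + tn) / total,
        "recall": tp / (tp + fn),        # sensitivity: diseased cases caught
        "precision": tp / (tp + fp),
        "specificity": tn / (tn + fp),   # healthy cases correctly cleared
    }


# Hypothetical counts: NOT taken from the study.
m = binary_metrics(tp=300, fp=72, tn=228, fn=0)
```

Under these assumed counts, a recall of 1.0 means no radiograph with PBL is missed, so every error is a false positive; such cases would be passed on to the second stage rather than being dismissed.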

Figure 8.

Performance of the binary classification model across different threshold settings (ROC curve).
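For readers unfamiliar with how such a curve is built, the sketch below derives ROC points and the area under the curve from classifier scores by sweeping the decision threshold. It is a generic textbook construction in plain Python, not the authors' plotting code, and the scores and labels in the usage note are made up.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (false positive rate, true positive rate)


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Point]:
    """One (FPR, TPR) point per distinct threshold, from strictest to loosest."""
    positives = sum(labels)
    negatives = len(labels) - positives
    points: List[Point] = [(0.0, 0.0)]
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= threshold and y == 0)
        points.append((fp / negatives, tp / positives))
    return points


def auc(points: Sequence[Point]) -> float:
    """Area under the ROC points using the trapezoidal rule."""
    return sum(
        (x1 - x0) * (y0 + y1) / 2
        for (x0, y0), (x1, y1) in zip(points, points[1:])
    )
```

With the toy inputs `scores = [0.9, 0.8, 0.4, 0.3]` and `labels = [1, 0, 1, 0]`, the area under the curve is 0.75; a classifier that ranks every positive above every negative reaches 1.0.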

Figure 9.

Two test cases for our model prediction with false and true non-periodontal bone loss detection.

3.3. Results of the Secondary Model (YOLOv8)

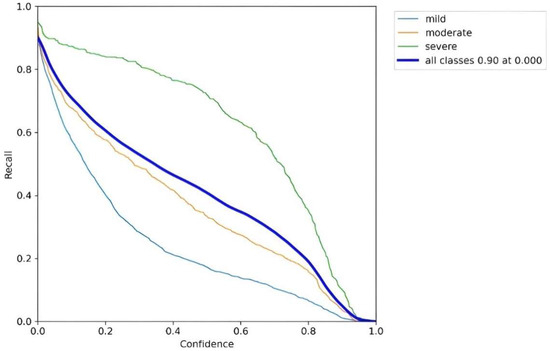

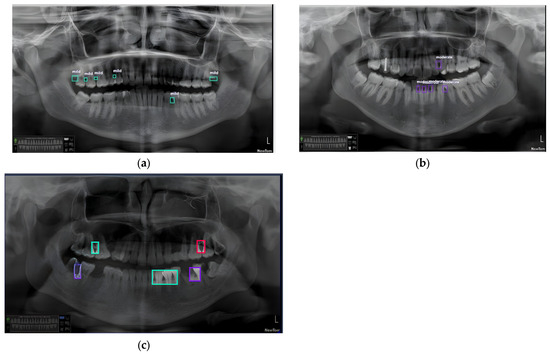

After training the YOLOv8 model on the training dataset, we evaluated its performance on the test dataset. The model achieved a precision of 0.74 and a recall of 0.70. Figure 10 illustrates the recall-confidence curve obtained for our YOLOv8 model. A significant advantage of our approach is that the model classifies all severities of PBL, whereas other models were limited to detecting the location of the bone loss. The model was examined through several case studies to gauge its performance in classifying the three severities of PBL: mild, moderate, and severe. Figure 11a shows a panoramic radiograph with mild PBL, Figure 11b shows a radiograph with moderate bone loss, and Figure 11c shows a panoramic radiograph that contains all three severities in one image. These results were validated by a dental specialist; green marks mild periodontitis, purple marks moderate periodontitis, and red marks severe periodontitis. The case studies are intended to evaluate the robustness and reliability of our YOLOv8 model for detecting, localizing, and classifying PBL across severity levels, aiming to contribute to enhanced diagnostic capabilities in dental imaging.
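Precision and recall for a detector are usually obtained by matching predicted boxes to annotated boxes using intersection over union (IoU). The sketch below shows one common greedy matching scheme; the IoU threshold of 0.5, the tuple formats, and the function names are assumptions, since the study does not state how matches were determined.

```python
from typing import List, Sequence, Set, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def precision_recall(
    predictions: Sequence[Tuple[Box, str, float]],  # (box, severity, confidence)
    ground_truth: Sequence[Tuple[Box, str]],        # (box, severity)
    iou_threshold: float = 0.5,                     # assumed, not from the study
) -> Tuple[float, float]:
    """Greedy matching: most confident predictions claim ground-truth boxes first."""
    matched: Set[int] = set()
    true_positives = 0
    for box, label, _ in sorted(predictions, key=lambda p: p[2], reverse=True):
        best_index, best_iou = None, iou_threshold
        for index, (gt_box, gt_label) in enumerate(ground_truth):
            if index in matched or gt_label != label:
                continue  # each annotation matches once, and severity must agree
            overlap = iou(box, gt_box)
            if overlap >= best_iou:
                best_index, best_iou = index, overlap
        if best_index is not None:
            matched.add(best_index)
            true_positives += 1
    precision = true_positives / len(predictions) if predictions else 0.0
    recall = true_positives / len(ground_truth) if ground_truth else 0.0
    return precision, recall
```

In this scheme, a box in the right place with the wrong severity counts as both a false positive and a missed annotation, which is why severity classification is harder than localization alone. As a side note, the reported precision of 0.74 and recall of 0.70 correspond to a harmonic mean (F1-score) of roughly 0.72.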

Figure 10.

Recall-confidence curve for our YOLOv8 model.

Figure 11.

(a) Panoramic radiograph sample with multiple areas classified as mild periodontitis stage, (b) sample with multiple areas classified as moderate periodontitis stage, and (c) sample with multiple areas classified with all periodontitis stages.

4. Discussion

4.1. Critical Analysis and Comparison with Related Study

Recent literature has demonstrated a growing interest in leveraging AI, particularly CNN-based deep learning models, to address the diagnostic limitations of traditional methods in detecting PBL. Our review of over 25 studies revealed a variety of deep learning architectures, datasets, and diagnostic goals applied to different types of dental radiographs, including bitewing, periapical, and panoramic images. Several notable studies have demonstrated the potential of CNN-based models in detecting periodontal conditions across these modalities [1,4,5,9,10,11,12,13,14,15,16,17,18]. For instance, Li et al. [5] used YOLOv4 for bitewing radiographs, achieving high accuracy in detecting caries and periodontitis. Chen [4] utilized deep CNNs for periapical images, reporting 72.8% accuracy in assessing periodontitis severity. Amasya et al. [9] implemented Mask R-CNN on panoramic radiographs, demonstrating expert-level segmentation performance, while Ryu et al. [1] achieved an AUC of 0.91 using Faster R-CNN on a large-scale dataset. Additionally, Arora et al. [17] explored CNNs for bone loss classification in periapical images, and Abdalla-Aslan et al. [12] incorporated image enhancement techniques to improve CNN-based performance. Other efforts, such as those by Ferdian et al. [16] and Chen et al. [11], focused on optimizing model architectures and preprocessing pipelines to enhance diagnostic reliability.

Despite these promising developments, many of these studies primarily focused on detection rather than granular severity classification. Moreover, their performance and generalizability were often constrained by the use of limited, imbalanced, or modality-specific datasets.

In response to these gaps, we developed and evaluated two complementary AI models, MobileNetV2 and YOLOv8, for the detection and classification of PBL from panoramic radiographs, aiming to establish an efficient two-stage screening system. MobileNetV2 achieved a high binary classification accuracy of 0.88, making it suitable for distinguishing between healthy and diseased cases. YOLOv8, in contrast, was tailored for detailed severity classification, reaching a significant precision of 0.74. Together, these models form a cohesive diagnostic framework that not only automates detection but also delivers clinically meaningful insights to support dentists in decision-making. The thorough method of diagnostic categorization is illustrated and exemplified in Figure 11, highlighting the models’ effectiveness in identifying and classifying PBL at various severity levels. Furthermore, all results were validated by expert dental practitioners to ensure clinical applicability.

Compared to previous studies, our proposed system introduces several key advancements that directly address the identified research gaps. Most notably, it is among the first AI-based systems designed specifically for classifying different severity levels of PBL using panoramic radiographs, a need strongly emphasized by dental experts. This severity classification supports a more nuanced and efficient diagnostic process, reducing clinicians’ workload and enabling timely treatment decisions. Our novel two-stage framework, using MobileNetV2 for binary detection and YOLOv8 for severity classification, offers a comprehensive and clinically relevant solution. By exclusively leveraging panoramic radiographs, which are widely used for their broad coverage and low radiation exposure, our system aligns with common diagnostic practices. Additionally, we addressed the limitations of previous studies by training our models on a clinical and diverse dataset, improving both robustness and generalizability.

As a result, our two-stage diagnostic tool surpasses or matches existing state-of-the-art methods across key evaluation metrics, offering a novel, efficient, and clinically aligned solution to assist dentists in early diagnosis and management of periodontitis.

The following subsections present a detailed comparative evaluation of our models against prior studies, underscoring the innovation and clinical impact of our proposed system.

4.2. Evaluation of Developed Models

4.2.1. The Primary Model (MobileNetV2)

Table 2 presents our comparative analysis of CNN models applied to binary classification tasks in dental image diagnostics using different datasets. The evaluation is restricted to binary classification so that the studies are comparable. As Table 2 shows, our MobileNetV2 model compares favorably with the other models, with 0.88 accuracy and 1.00 recall in classifying 526 panoramic images. In comparison, the VGG-19 model achieved an accuracy of 0.788 on a dataset of 1044 periapical radiographic images [25]. The U-Net model in [1], tested on 640 panoramic radiographic images, reached an accuracy of 0.73 with a recall of 0.57. The VGG-16 model, evaluated on 1724 intraoral periapical images [13], gave precision and F1-score values of 0.73 and 0.75, respectively.

Table 2.

Comparison of the proposed MobileNetV2 with other existing classification models.

Generally, our results indicate that our developed MobileNetV2 model is more effective in the binary classification of dental images compared to other architectures, achieving the highest accuracy despite the limited dataset size used. This comparative analysis helps highlight the relative strengths and limitations of different CNN models in addressing binary classification challenges within dental diagnostics.

4.2.2. The Secondary Model (YOLOv8)

Table 3 summarizes a comparison with related models in the literature, giving insight into the effectiveness of different models in dental image analysis across varied datasets. Our YOLOv8 model, trained on a set of 817 panoramic images, yielded a precision of 0.74 and a recall of 0.70, demonstrating its ability to detect PBL while outperforming other existing models [18]. Slightly higher sensitivity and precision values were reported for the YOLOv5 model when it was tested on a larger dataset of 1543 images, suggesting the efficacy of that model in similar tasks [26].

Table 3.

Comparison of the proposed YOLOv8 with other existing classification models.

Other advanced models, such as ViT-base/large, BEiT-base/large, and DeiT-base, trained on a large dataset of 21,819 images, demonstrated a high accuracy of 0.84 [27,28,29,30], further demonstrating the flexibility of different approaches in dental image analysis. However, such transformer models depend on very large training datasets to perform well [27,28,29,30]. Notably, while all of these models aim to detect PBL, our YOLOv8 model goes further: in addition to localizing areas of bone loss, it classifies the severity of each area and thus provides valuable information for treatment planning. These findings underline the value of severity-level analysis for diagnostic accuracy and effective treatment strategies in dentistry.

4.3. Clinical Implications

Our extensive case studies, exhibiting PBL severity levels through panoramic radiographs as illustrated in Figure 11, present a concrete demonstration of the resilience and reproducibility of our proposed system in recognizing, localizing, and classifying PBL. The sophisticated analysis given by the secondary model, YOLOv8, not only aids in finding areas of bone loss but also analyzes severity levels, thereby presenting vital information needed for individualized treatment planning and intervention methods in dentistry. In essence, the inclusion of both MobileNetV2 and YOLOv8 models inside our suggested diagnostic system elucidates a synergistic approach to PBL diagnosis. The MobileNetV2 demonstrates exceptional performance in binary classification tasks, achieving unmatched accuracy and recall rates, whereas YOLOv8 enhances diagnostic precision by providing detailed severity classification, thereby improving the depth and accuracy of periodontal bone loss evaluations.

The endorsement of our findings by board-certified oral and maxillofacial radiologists reinforces the dependability and precision of our AI-driven diagnostic system, indicating substantial progress in dental imaging methodologies and patient care results. Moreover, the presented comparative analysis offers a thorough examination of the distinct strengths and contributions of various AI models in tackling binary classification issues in dental diagnostics, facilitating improved diagnostic precision and effective treatment approaches in dentistry.

4.4. Study Limitations

Notwithstanding the encouraging results, our study has some limitations that suggest potential directions for future research:

- Limited Sample Size: Although our dataset offered a satisfactory basis, an expanded dataset with enhanced diversity in patient demographics, radiograph quality, and dental diseases would further substantiate the model’s robustness.

- Dataset Imbalance: The disparity in PBL categories may have affected the efficacy of the YOLOv8 model, especially in moderate and severe instances.

- Generalization to Clinical Settings: The controlled environment of our data collection may not accurately reflect real-world dentistry clinics. Subsequent research should evaluate model efficacy under diverse imaging settings and apparatus.

5. Conclusions

In this comprehensive study, an AI-powered two-stage diagnostic system was designed to enable early detection and accurate diagnosis of periodontitis from panoramic radiographs, specifically targeting primary healthcare settings with non-experts as well as specialized dental clinics. The proposed system utilized two advanced AI models, MobileNetV2 and YOLOv8, in a two-stage screening process, where the primary model identifies whether a radiograph shows signs of PBL, and, if detected, the second model pinpoints its location and assesses its severity. This two-stage detection and classification system aims to reduce false positives and optimize model performance, ultimately providing an efficient, reliable, and clinically practical diagnostic tool to support the early detection and effective management of periodontitis.

We successfully collected and annotated a custom private dataset from various dental clinics, consisting of 817 panoramic radiographs for training and an additional 600 radiographs for testing. The primary model, MobileNetV2, demonstrated a high accuracy of 88% in detecting PBL, while the secondary model, YOLOv8, achieved 74% precision, effectively localizing PBL instances and classifying their severity. To validate our models’ performance, we consulted a board-certified oral and maxillofacial radiologist, whose endorsement, along with our models’ validation, ensures the accuracy and reliability of the detection and classification results and reinforces the practical relevance of our diagnostic system in clinical settings. Consequently, our research advances AI’s role in enhancing dental imaging diagnostics by providing a precise, efficient tool for evaluating PBL, with the potential to transform dental diagnostics and improve patient outcomes. Notably, our proposed system addresses the observed research gap in the literature by introducing, to the best of our knowledge, the first diagnostic tool capable of classifying three levels of PBL severity, thereby aiding dentists in making more accurate diagnoses. Additionally, our developed models outperformed similar existing models in the literature, setting a new benchmark for AI-based dental diagnostics.

In future work, other advanced AI models will be investigated, aiming to further enhance the reliability and efficiency of the diagnostic system. Furthermore, utilizing a larger and more balanced dataset is recommended to optimize performance and improve generalizability. Additionally, we believe that integrating our proposed system into a comprehensive software solution would greatly benefit dentists by providing an efficient, easy-to-use, and cost-effective tool for diagnosing and managing periodontitis, thus further enhancing clinical practice and patient outcomes.

Author Contributions

Conceptualization, N.N.F.R., A.Z.A. and S.A.; methodology, N.N.F.R., G.S. and S.A.; software, G.S. and S.A.; validation, N.N.F.R., G.S., A.Z.A. and S.A.; formal analysis, N.N.F.R., G.S. and S.A.; investigation, N.N.F.R., G.S. and S.A.; data curation, N.N.F.R., G.S., A.Z.A. and S.A.; writing—original draft preparation, G.S. and S.A.; writing—review and editing, N.N.F.R., G.S., A.Z.A. and S.A.; supervision, N.N.F.R. and S.A.; project administration, S.A. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by City University Ajman, United Arab Emirates. The authors contributed the remaining funding.

Institutional Review Board Statement

This study was conducted following approval from the Institutional Review Board of City University Ajman, United Arab Emirates, on 19 September 2024, under protocol code 092024-02. All procedures adhered to ethical standards in accordance with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all patients before the acquisition of their dental images. Patients agreed to the use of their photographs and radiographs for research, educational, and professional purposes, with full assurance of confidentiality and the right to withdraw consent at any time, as outlined in the clinic’s standardized consent form.

Data Availability Statement

The dataset supporting the results of this article is available from the authors on request.

Acknowledgments

The authors gratefully acknowledge the collaboration and technical support provided by George Sherif and Shereen Afifi of the Computer Science and Engineering Department at the Faculty of Media Engineering and Technology, German University in Cairo, who led the development of the AI models used in this research. Special appreciation is extended to Ahmed Z. Abdelkarim from the Department of Specialty Dentistry at Bitonte College of Dentistry, Northeast Ohio Medical University for his expert validation and valuable insights. The authors also thank Nader Nabil Fouad Rezallah of City University Ajman for his significant contributions to data provision and overall research coordination. Appreciation is further extended to all colleagues who contributed to data collection, annotation, and manuscript preparation.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Ryu, J.; Lee, D.M.; Jung, Y.H.; Kwon, O.; Park, S.; Hwang, J.; Lee, J.Y. Automated Detection of Periodontal Bone Loss Using Deep Learning and Panoramic Radiographs: A Convolutional Neural Network Approach. Appl. Sci. 2023, 13, 5261.

- Jader, G.; Fontineli, J.; Ruiz, M.; Abdalla, K.; Pithon, M.; Oliveira, L. Deep instance segmentation of teeth in panoramic X-ray images. In Proceedings of the 2018 31st SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI), Parana, Brazil, 29 October–1 November 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 400–407.

- Karacaoglu, F.; Kolsuz, M.E.; Bagis, N.; Evli, C.; Orhan, K. Development and Validation of Intraoral Periapical Radiography-Based Machine Learning Model for Periodontal Defect Diagnosis. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2023, 237, 607–618.

- Chen, I.H.; Lin, C.H.; Lee, M.K.; Chen, T.E.; Lan, T.H.; Chang, C.M.; Tseng, T.Y.; Wang, T.; Du, J.K. Convolutional-Neural-Network-Based Radiographs Evaluation Assisting in Early Diagnosis of the Periodontal Bone Loss via Periapical Radiograph. J. Dent. Sci. 2024, 19, 550–559.

- Li, K.C.; Mao, Y.C.; Lin, M.F.; Li, Y.Q.; Chen, C.A.; Chen, T.Y.; Abu, P.A.R. Detection of Tooth Position by YOLOv4 and Various Dental Problems Based on CNN With Bitewing Radiograph. IEEE Access 2024, 12, 11822–11835.

- Alotaibi, G.; Awawdeh, M.; Farook, F.F.; Aljohani, M.; Aldhafiri, R.M.; Aldhoayan, M. Artificial Intelligence (AI) Diagnostic Tools: Utilizing a Convolutional Neural Network (CNN) to Assess Periodontal Bone Level Radiographically—A Retrospective Study. BMC Oral Health 2022, 22, 399.

- Krois, J.; Ekert, T.; Meinhold, L.; Golla, T.; Kharbot, B.; Wittemeier, A.; Dörfer, C.; Schwendicke, F. Deep learning for the radiographic detection of periodontal bone loss. Sci. Rep. 2019, 9, 8495.

- Chang, H.J.; Lee, S.J.; Yong, T.H.; Shin, N.Y.; Jang, B.G.; Kim, J.E.; Huh, K.H.; Lee, S.S.; Heo, M.S.; Choi, S.C.; et al. Deep Learning Hybrid Method to Automatically Diagnose Periodontal Bone Loss and Stage Periodontitis. Sci. Rep. 2020, 10, 7531.

- Amasya, H.; Jaju, P.P.; Ezhov, M.; Gusarev, M.; Atakan, C.; Sanders, A.; Manulius, D.; Golitskya, M.; Shrivastava, K.; Singh, A.; et al. Development and Validation of an Artificial Intelligence Software for Periodontal Bone Loss in Panoramic Imaging. Int. J. Imaging Syst. Technol. 2024, 34, e22973.

- Lin, P.; Huang, P.; Huang, P. Automatic Methods for Alveolar Bone Loss Degree Measurement in Periodontitis Periapical Radiographs. Comput. Methods Programs Biomed. 2017, 148, 1–11.

- Mohammad-Rahimi, H.; Motamedian, S.R.; Pirayesh, Z.; Haiat, A.; Zahedrozegar, S.; Mahmoudinia, E.; Rohban, M.H.; Krois, J.; Lee, J.-H.; Schwendicke, F. Deep Learning in Periodontology and Oral Implantology: A Scoping Review. J. Periodontal Res. 2022, 57, 942–951.

- Abdalla-Aslan, R.; Yeshua, T.; Kabla, D.; Leichter, I.; Nadler, C. An artificial intelligence system using machine-learning for automatic detection and classification of dental restorations in panoramic radiography. Oral Surg. Oral Med. Oral Pathol. Oral Radiol. 2020, 130, 593–602.

- Ossowska, A.; Kusiak, A.; Świetlik, D. Evaluation of the Progression of Periodontitis with the Use of Neural Networks. J. Clin. Med. 2022, 11, 4667.

- Piel, B.T.; Elsbury, K.; Herrera, C.; Potts, L. Artificial Intelligence Aiding in the Periodontal Assessment. Ph.D. Thesis, Texas A&M University, College Station, TX, USA, 2022.

- Li, X.; Zhao, D.; Xie, J.; Wen, H.; Liu, C.; Li, Y.; Li, W.; Wang, S. Deep Learning for Classifying the Stages of Periodontitis on Dental Images: A Systematic Review and Meta-Analysis. BMC Oral Health 2023, 23, 1017.

- Kurt-Bayrakdar, S.; Bayrakdar, İ.; Yavuz, M.B.; Sali, N.; Çelik, Ö.; Köse, O.; Saylan, B.C.U.; Kuleli, B.; Jagtap, R.; Orhan, K. Detection of Periodontal Bone Loss Patterns and Furcation Defects from Panoramic Radiographs Using Deep Learning Algorithm: A Retrospective Study. BMC Oral Health 2024, 24, 155.

- Mardini, D.C.; Mardini, P.C.; Iturriaga, D.P.V.; Borroto, D.R.O. Determining the Efficacy of a Machine Learning Model for Measuring Periodontal Bone Loss. BMC Oral Health 2022, 22, 399.

- Shon, H.S.; Kong, V.; Park, J.S.; Jang, W.; Cha, E.J.; Kim, S.Y.; Lee, E.Y.; Kang, T.G.; Kim, K.A. Deep Learning Model for Classifying Periodontitis Stages on Dental Panoramic Radiography. Appl. Sci. 2022, 12, 8500.

- CranioCatch. AI Detection of Alveolar Bone Loss in Panoramic Radiographs. 2024. Available online: https://www.craniocatch.com/en/academic/ai-detection-alveolar-bone-loss/ (accessed on 10 March 2024).

- TensorFlow I/O—Decode DICOM Files for Medical Imaging. Available online: https://www.tensorflow.org/io/tutorials/dicom (accessed on 10 March 2024).

- Xue, B.; Chang, B.; Du, D. Multi-output monitoring of high-speed laser welding state based on deep learning. Sensors 2021, 21, 1626.

- Widayani, A.; Putra, A.M.; Maghriebi, A.R.; Adi, D.Z.C.; Ridho, M.H.F. Review of Application YOLOv8 in Medical Imaging. Indones. Appl. Phys. Lett. 2024, 5, 23–33.

- Salekin, S.U.; Ullah, M.H.; Khan, A.A.; Jalal, M.S.; Nguyen, H.H.; Farid, D.M. Bangladeshi Native Vehicle Classification Employing YOLOv8; Springer Nature: Singapore, 2023; pp. 185–199.

- Trending AI Tools—Roboflow. Available online: https://www.trendingaitools.com/ai-tools/roboflow/ (accessed on 10 March 2024).

- Lee, J.H. Diagnosis and Prediction of Periodontally Compromised Teeth Using a Deep Learning-Based Convolutional Neural Network Algorithm. J. Periodontal Implant Sci. 2018, 48, 114–123.

- Uzun Saylan, B.C.; Baydar, O.; Yeşilova, E.; Kurt Bayrakdar, S.; Bilgir, E.; Bayrakdar, İ.Ş.; Çelik, Ö.; Orhan, K. Assessing the Effectiveness of Artificial Intelligence Models for Detecting Alveolar Bone Loss in Periodontal Disease: A Panoramic Radiograph Study. Diagnostics 2023, 13, 1800.

- Zhang, X.; Guo, E.; Liu, X.; Zhao, H.; Yang, J.; Li, W.; Wu, W.; Sun, W. Enhancing furcation involvement classification on panoramic radiographs with vision transformers. BMC Oral Health 2025, 25, 153.

- Tavasoli, R.; VarastehNezhad, A.; Farbeh, H. Hybrid Vision Transformer for Detection of Dentigerous Cysts in Dental Radiography Images. In Proceedings of the 2024 14th International Conference on Computer and Knowledge Engineering (ICCKE), Mashhad, Iran, 19–20 November 2024; pp. 143–148.

- Sheng, C.; Wang, L.; Huang, Z.; Wang, T.; Guo, Y.; Hou, W.; Xu, L.; Wang, J.; Yan, X. Transformer-based deep learning network for tooth segmentation on panoramic radiographs. J. Syst. Sci. Complex. 2023, 36, 257–272.

- Dujic, H.; Meyer, O.; Hoss, P.; Wölfle, U.C.; Wülk, A.; Meusburger, T.; Meier, L.; Gruhn, V.; Hesenius, M.; Hickel, R.; et al. Automatized detection of periodontal bone loss on periapical radiographs by vision transformer networks. Diagnostics 2023, 13, 3562.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).