Resilient AI in Therapeutic Rehabilitation: The Integration of Computer Vision and Deep Learning for Dynamic Therapy Adaptation

Abstract

1. Introduction

Healthcare 5.0

- Reliability: The system must guarantee continuous and safe operation, performing its intended functions without errors, since any malfunction could have critical consequences for patients’ health [12].

- Personalization: Services must be able to adapt to the specific needs of each patient, optimizing treatment based on factors such as genetics, behavior, environment, and medical care, especially in the presence of multiple conditions.

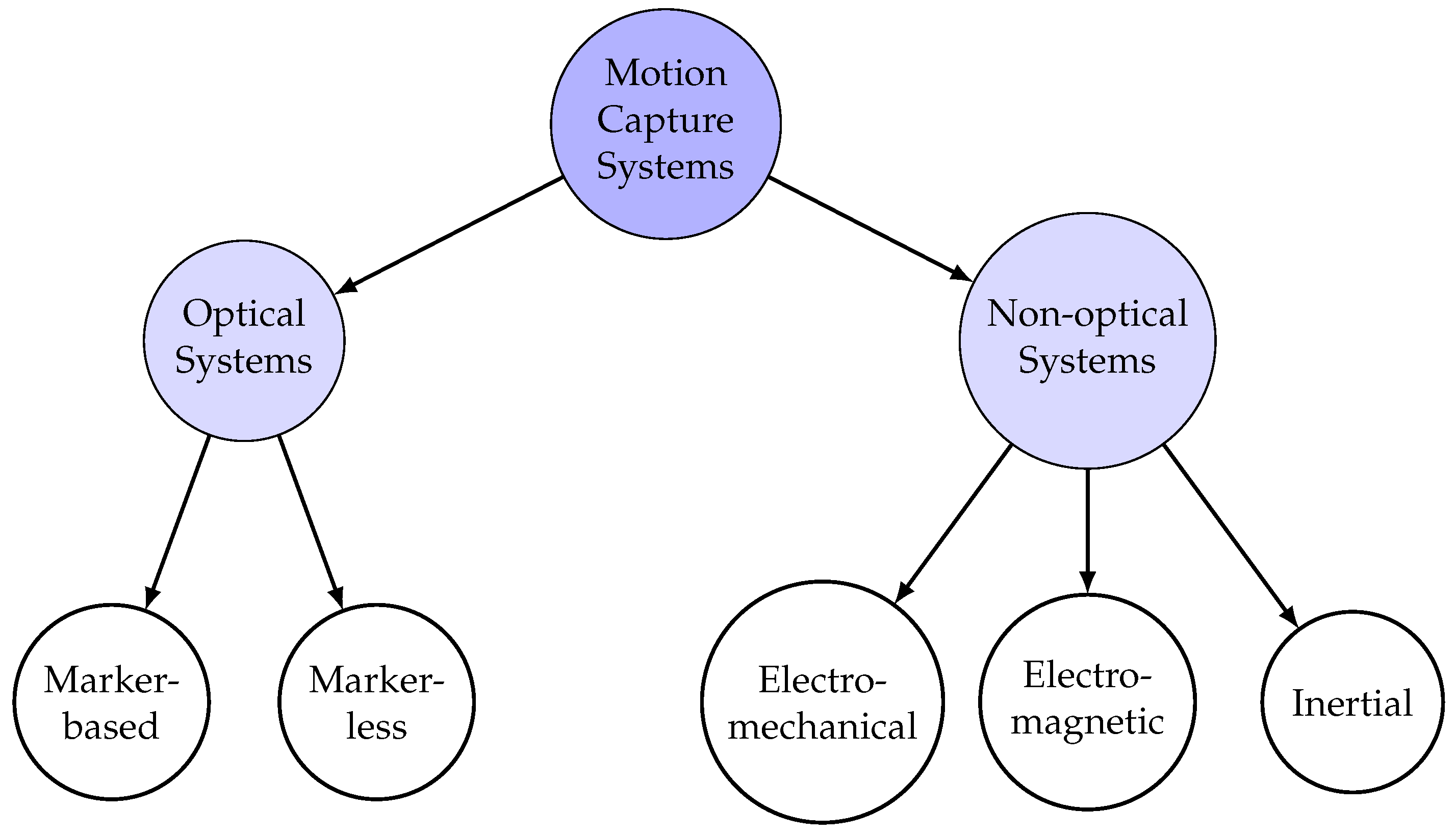

2. Motion Capture System

2.1. Markerless Systems

2.1.1. Subject Volume Generation: The Visual Hull

2.1.2. Model Definition, Generation, and Matching

- Anatomical model: describes the three-dimensional shape of the body as a triangular mesh, i.e., a structure composed of an ordered list of 3D points (called vertices) and of triangles connecting these points to define surfaces. Each point is identified by three coordinates (x, y, z) in three-dimensional space, and each triangle is described by the three vertices that form it, listed in counterclockwise order.

- Kinematic model: The body is represented as an articulated system composed of main segments (such as arms, legs, and trunk) connected to each other by joints that allow complex movements, both rotational and translational. Each segment has a “parent segment” (for example, the arm is connected to the trunk) and may have one or more “child segments” (for example, the forearm is connected to the arm). Each segment also has a local coordinate system that defines its position relative to its parent segment.
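The sketch below makes the two model descriptions above concrete. It is illustrative and does not reproduce the authors' data model. It assumes NumPy, a vertex array of shape (V, 3), a triangle index array of shape (F, 3), and a hypothetical `Segment` record. Each `Segment` stores its parent, its offset in the parent frame, and a local joint rotation.

Counterclockwise winding fixes each triangle normal through the right-hand rule. Composing parent and local transforms from the root downward gives each segment's pose in world coordinates.

```python
import numpy as np
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


def face_normals(vertices: np.ndarray, triangles: np.ndarray) -> np.ndarray:
    """Unit normal of each triangle.

    vertices: (V, 3) float array of x, y, z coordinates.
    triangles: (F, 3) int array of vertex indices in counterclockwise order.
    """
    v0 = vertices[triangles[:, 0]]
    v1 = vertices[triangles[:, 1]]
    v2 = vertices[triangles[:, 2]]
    n = np.cross(v1 - v0, v2 - v0)  # right-hand rule: the CCW order fixes the direction
    return n / np.linalg.norm(n, axis=1, keepdims=True)


@dataclass
class Segment:
    """Hypothetical kinematic-tree node (illustrative only)."""
    parent: Optional[str]
    offset: np.ndarray  # origin of this segment's frame, expressed in the parent frame
    rotation: np.ndarray = field(default_factory=lambda: np.eye(3))  # local joint rotation


def world_pose(segments: Dict[str, Segment], name: str) -> Tuple[np.ndarray, np.ndarray]:
    """Compose transforms from the root down to `name`; returns (R, t) in the world frame."""
    seg = segments[name]
    if seg.parent is None:
        return seg.rotation, seg.offset
    r_parent, t_parent = world_pose(segments, seg.parent)
    return r_parent @ seg.rotation, r_parent @ seg.offset + t_parent
```

For example, rotating the arm joint changes the forearm's world position without touching the forearm's own local offset. This is the point of storing coordinates relative to the parent segment.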

- First, a specific algorithm computes the transformations needed to align the segments of the reference mesh with the mesh of the subject being analyzed.

- The subject’s mesh is then divided into body segments using a proximity criterion: each point of the mesh is assigned to the closest body part of the reference mesh.

- For each body segment, the inverse of the transformation obtained in the first step is applied, realigning the subject’s mesh to the reference pose.

- Finally, the reference mesh is deformed to fit the shape of the subject by minimizing the difference between the vertices of the analyzed mesh and those of the reference mesh.
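The second and third steps above can be sketched in a few lines of NumPy. This is a sketch under stated assumptions, not the authors' implementation:

- "Closest body part" is taken to mean the label of the nearest reference vertex.
- The per-segment rotation `R` and translation `t` are assumed to come from the first step, as a rigid transform x' = R x + t.
- The distance search is brute force. For large meshes, a spatial index such as SciPy's `cKDTree` would be the usual replacement.

```python
import numpy as np


def segment_by_proximity(subject_vertices: np.ndarray,
                         reference_vertices: np.ndarray,
                         reference_labels: np.ndarray) -> np.ndarray:
    """Label each subject vertex with the body part of its nearest reference vertex."""
    # (S, R, 3) pairwise differences -> (S, R) squared distances
    diff = subject_vertices[:, None, :] - reference_vertices[None, :, :]
    d2 = (diff ** 2).sum(axis=2)
    return reference_labels[np.argmin(d2, axis=1)]


def to_reference_pose(points: np.ndarray, rotation: np.ndarray,
                      translation: np.ndarray) -> np.ndarray:
    """Undo a rigid transform x' = R x + t for row-vector points: x = R^T (x' - t)."""
    return (points - translation) @ rotation
```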

2.2. State of the Art in Markerless Motion Capture Systems and Computer Vision for Rehabilitation

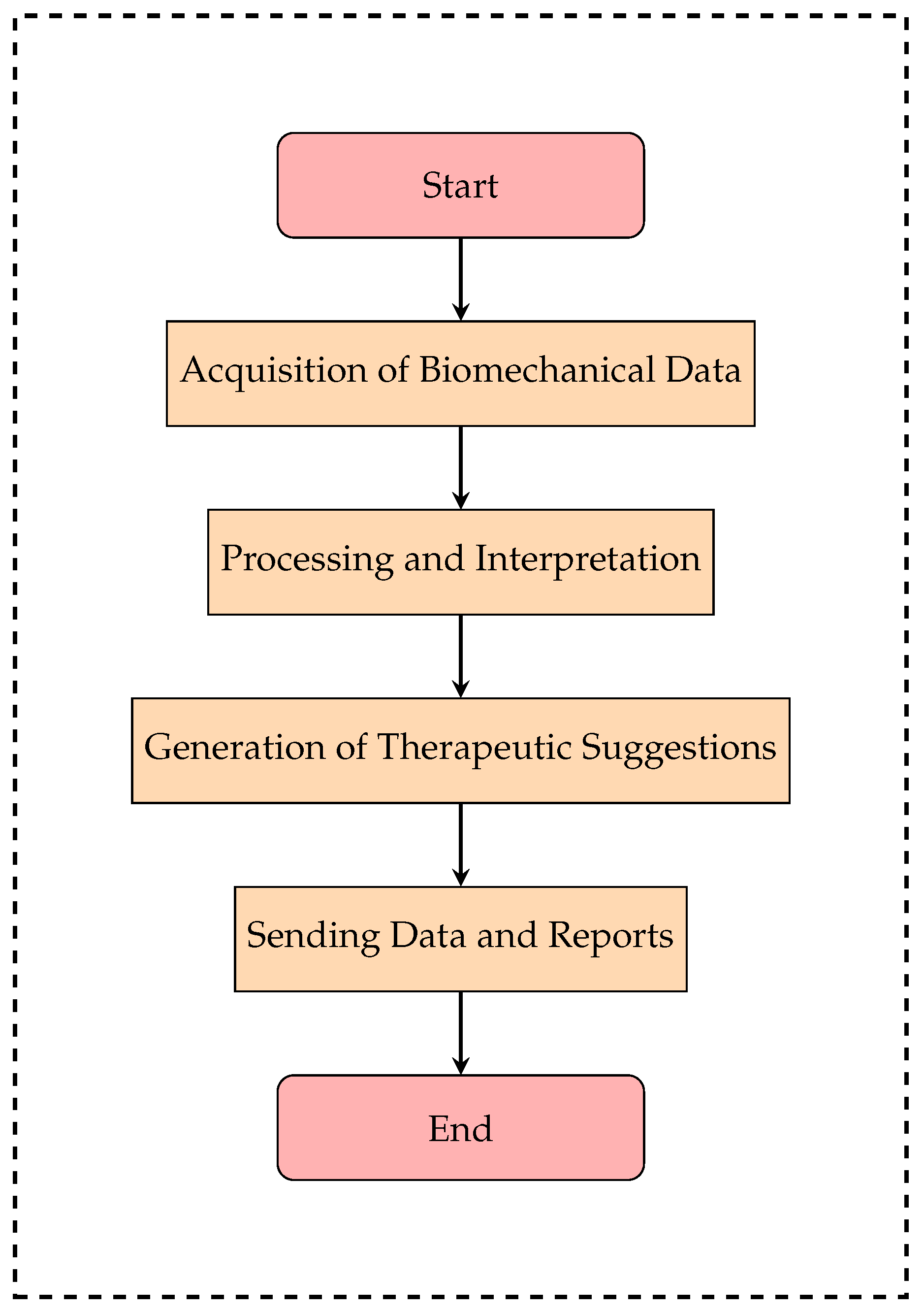

3. Vision System for Therapeutic Adaptation: Analysis of Functional Requirements

- Vision Module:

  - Acquires and analyzes the patient’s movements through RGB-D cameras and computer vision algorithms.

  - Extracts fundamental biomechanical parameters such as amplitude, precision, speed, fluidity, and symmetry of movements.

  - Converts the data into a structured format for the suggestion module.

- Suggestion Module:

  - Analyzes the data received from the vision module and develops a personalized therapy.

  - If the patient shows improvement, reduces the level of assistance and increases the difficulty of the exercises.

  - If progress is limited, suggests increasing motor support or sends an alert to the therapist to review the therapy.

  - Records all suggestions so that the system’s decisions can be monitored.
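The suggestion rules listed above can be sketched as follows. The source does not say how these choices are made, so every threshold and the escalation order here are illustrative assumptions:

- Progress is summarized by a hypothetical performance score in [0, 1], derived from the vision module's parameters.
- Difficulty and assistance are hypothetical integer levels.
- A gain of at least `min_gain` between sessions counts as improvement.
- "Limited progress" first raises motor support. It alerts the therapist only once support is already at its maximum.

```python
from dataclasses import dataclass, field
from typing import Dict, List

MAX_DIFFICULTY = 5  # hypothetical top difficulty level
MAX_ASSISTANCE = 5  # hypothetical maximum motor-support level


@dataclass
class TherapyPlan:
    difficulty: int = 1  # hypothetical scale: 1 (easiest) .. MAX_DIFFICULTY
    assistance: int = 3  # hypothetical scale: 0 (no support) .. MAX_ASSISTANCE
    log: List[Dict[str, object]] = field(default_factory=list)  # record of every decision


def suggest(plan: TherapyPlan, prev_score: float, new_score: float,
            min_gain: float = 0.05) -> str:
    """Apply one step of the rules: progress, add support, or alert the therapist."""
    gain = new_score - prev_score
    if gain >= min_gain:
        # Improvement: one step less assistance, one step more difficulty
        plan.assistance = max(0, plan.assistance - 1)
        plan.difficulty = min(MAX_DIFFICULTY, plan.difficulty + 1)
        action = "progress"
    elif plan.assistance < MAX_ASSISTANCE:
        # Limited progress: increase motor support first
        plan.assistance += 1
        action = "increase_support"
    else:
        # Support already at maximum: ask the therapist to review the therapy
        action = "alert_therapist"
    plan.log.append({"prev_score": prev_score, "new_score": new_score, "action": action,
                     "difficulty": plan.difficulty, "assistance": plan.assistance})
    return action
```

Each decision is appended to `plan.log`, which mirrors the requirement that all suggestions be recorded for later review.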

3.1. Functional Requirements of the Vision Module

3.2. Functional Requirements of the Suggestion Module

- Reinforcement Learning: The system progressively learns from the data collected during rehabilitation sessions, optimizing decision thresholds to improve the precision of recommendations.

- Dynamic threshold analysis: The system automatically adjusts the levels of difficulty and motor assistance, avoiding sudden changes and ensuring gradual adaptation to therapy.
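The gradual adaptation described under dynamic threshold analysis can be illustrated with a rate-limited update. In each session, a level (difficulty or assistance, normalized here to [0, 1]) moves toward its target by at most `max_step`. A decision threshold can also drift slowly toward observed performance through an exponential moving average.

The step size and the smoothing factor are assumptions. This sketch does not implement the reinforcement-learning component.

```python
def adapt_level(current: float, target: float, max_step: float = 0.1,
                lo: float = 0.0, hi: float = 1.0) -> float:
    """Move `current` toward `target` by at most `max_step`, then clamp to [lo, hi]."""
    delta = max(-max_step, min(max_step, target - current))
    return min(hi, max(lo, current + delta))


def smooth_threshold(threshold: float, observation: float, alpha: float = 0.2) -> float:
    """Exponential moving average: the threshold follows recent performance slowly."""
    return (1.0 - alpha) * threshold + alpha * observation
```

With `max_step = 0.1`, a jump in the target from 0.2 to 0.9 takes seven sessions to reach, rather than being applied at once.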

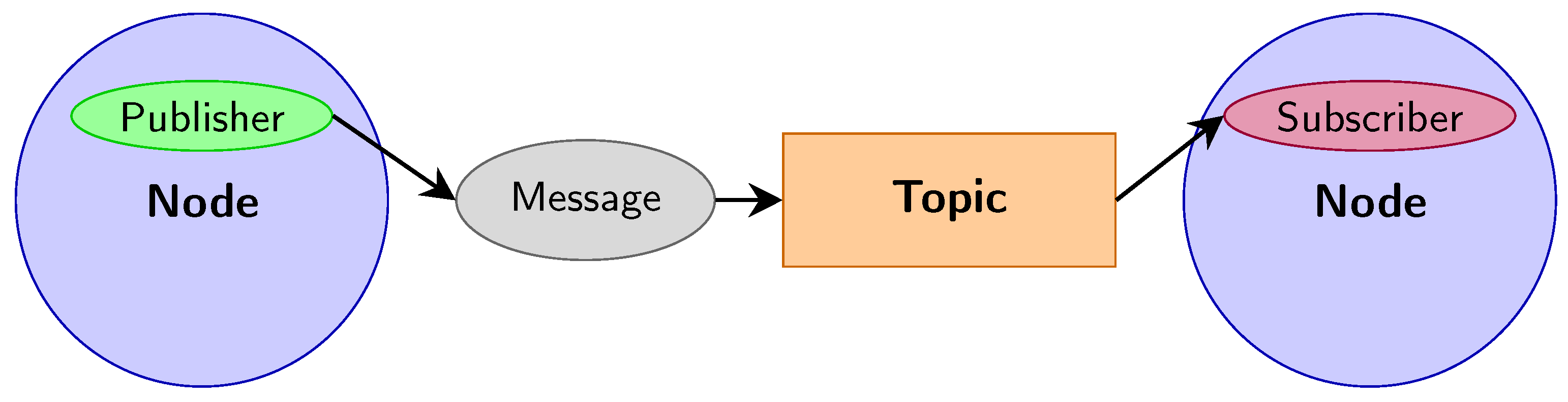

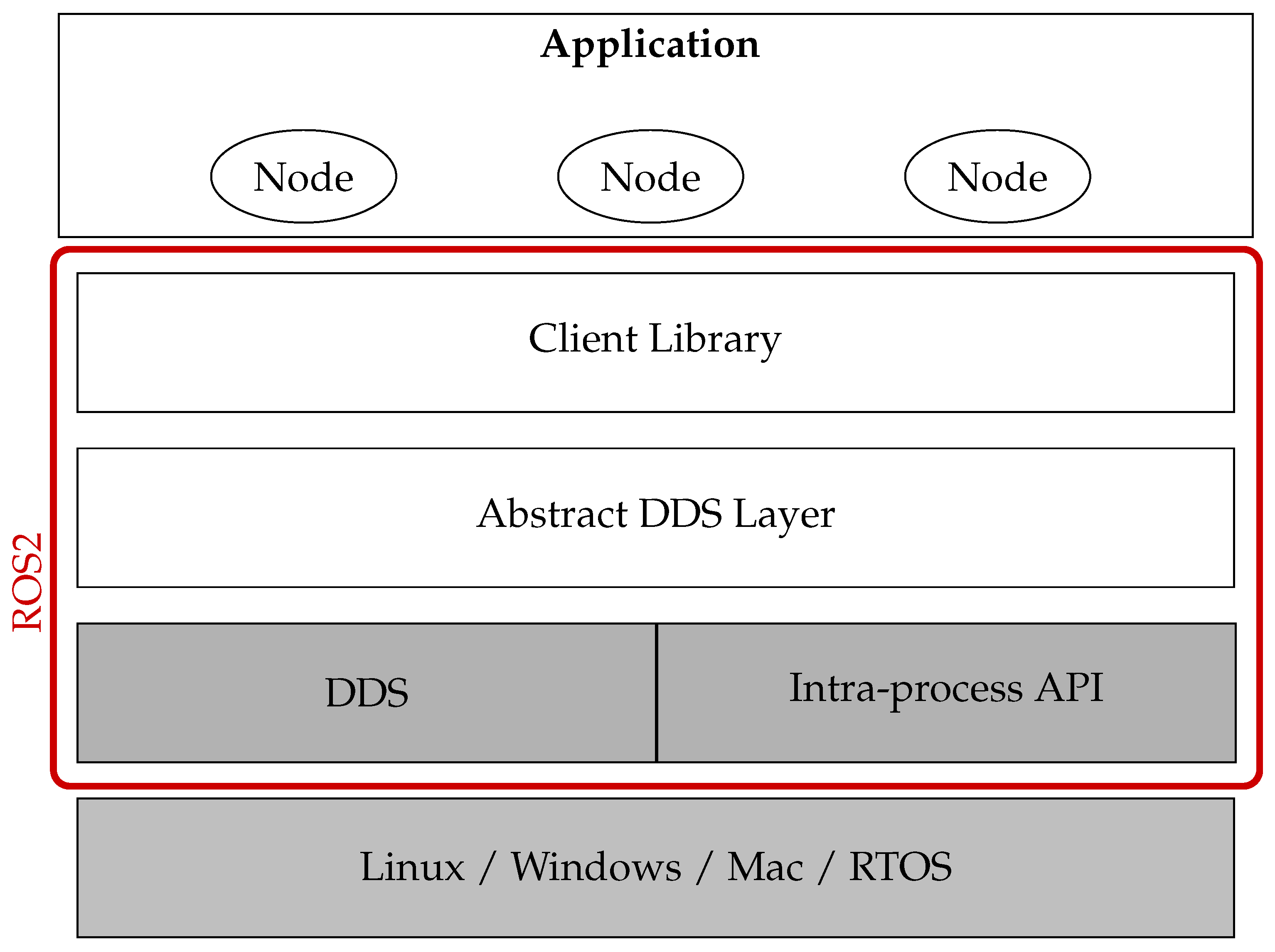

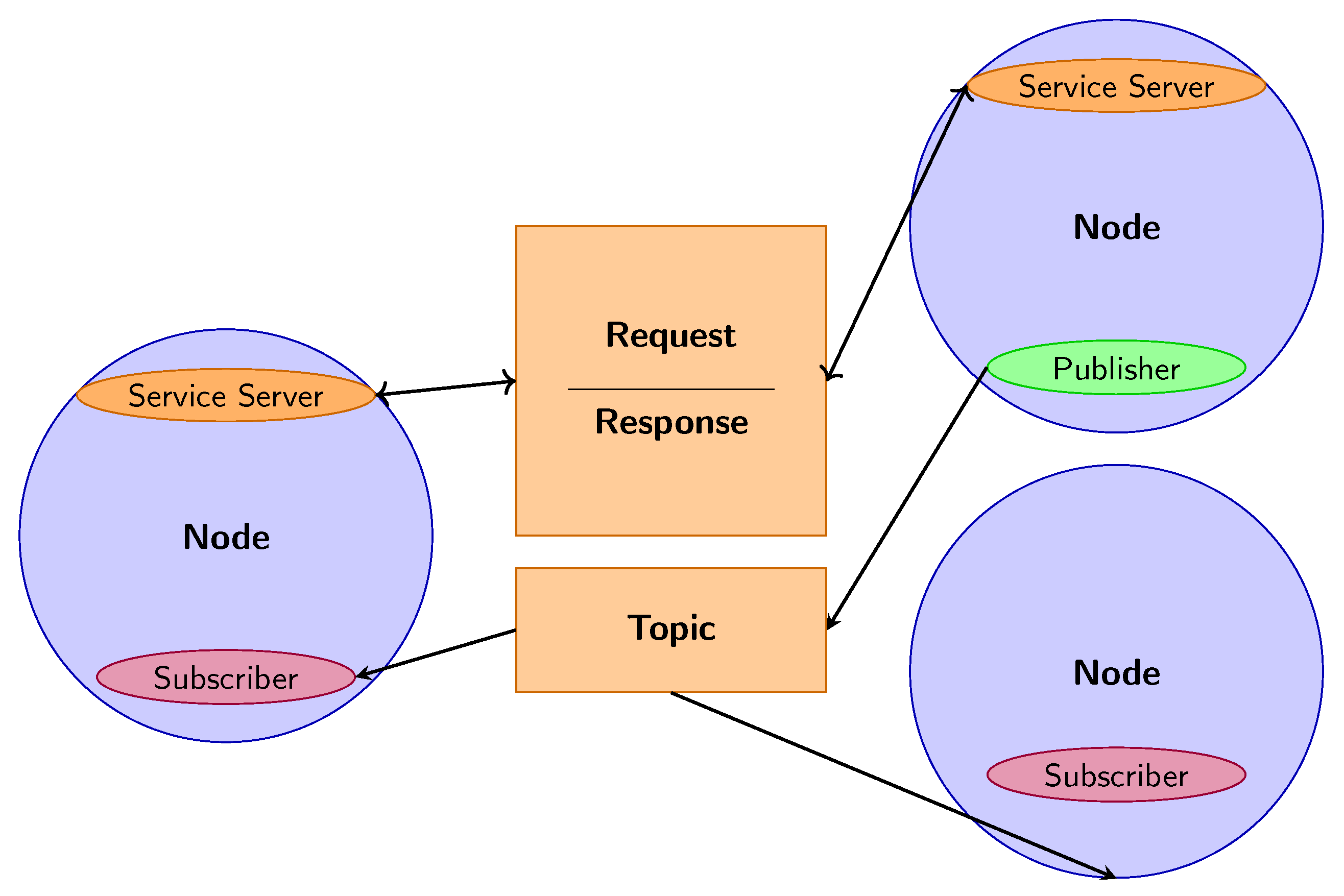

3.3. Software Architecture and Compatibility

- ROS2 Nodes: The vision module and the suggestion module run as nodes. The vision module acquires biomechanical data, while the suggestion module processes the information received to generate personalized recommendations.

- ROS2 Topics: Biomechanical data are published on a ROS2 topic, to which the suggestion module subscribes to receive the parameters needed for analysis in real time.

- ROS2 Services and Actions: Services handle specific requests between modules, while actions coordinate long-running operations, such as the progressive adaptation of motor assistance.

- MQTT (Message Queuing Telemetry Transport): a lightweight publish/subscribe protocol that optimizes data transmission by reducing latency and bandwidth consumption.

- ZeroMQ: ensures fast and reliable communication between modules, making it well suited to distributed architectures.

- REST APIs: allow integration with therapeutic dashboards, offering therapists an intuitive interface to monitor patient progress.
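The data flow above can be sketched without any middleware dependency: the vision module publishes structured biomechanical data, and the suggestion module subscribes to it. The message fields mirror the parameters listed for the vision module.

The following are all hypothetical stand-ins:

- the JSON encoding;
- the field names and the topic name `/biomechanics`;
- the `InProcessBus` class.

In a real deployment, the same JSON string could be carried in a ROS2 `std_msgs/msg/String` message or as an MQTT or ZeroMQ payload.

```python
import json
from collections import defaultdict
from dataclasses import asdict, dataclass
from typing import Callable, DefaultDict, List


@dataclass
class BiomechanicalSample:
    """Hypothetical message schema; units and scales are not given in the text."""
    patient_id: str
    timestamp: float  # seconds since the epoch
    amplitude: float
    precision: float
    speed: float
    fluidity: float
    symmetry: float

    def to_json(self) -> str:
        return json.dumps(asdict(self))

    @staticmethod
    def from_json(text: str) -> "BiomechanicalSample":
        return BiomechanicalSample(**json.loads(text))


class InProcessBus:
    """Minimal publish/subscribe stand-in for a ROS2 topic, MQTT broker, or ZeroMQ socket."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Callable[[str], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[str], None]) -> None:
        self._subscribers[topic].append(callback)

    def publish(self, topic: str, payload: str) -> None:
        # Deliver synchronously to every subscriber of this topic
        for callback in self._subscribers[topic]:
            callback(payload)
```

Keeping the payload as plain JSON means the same message definition works unchanged whichever transport the deployment selects.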

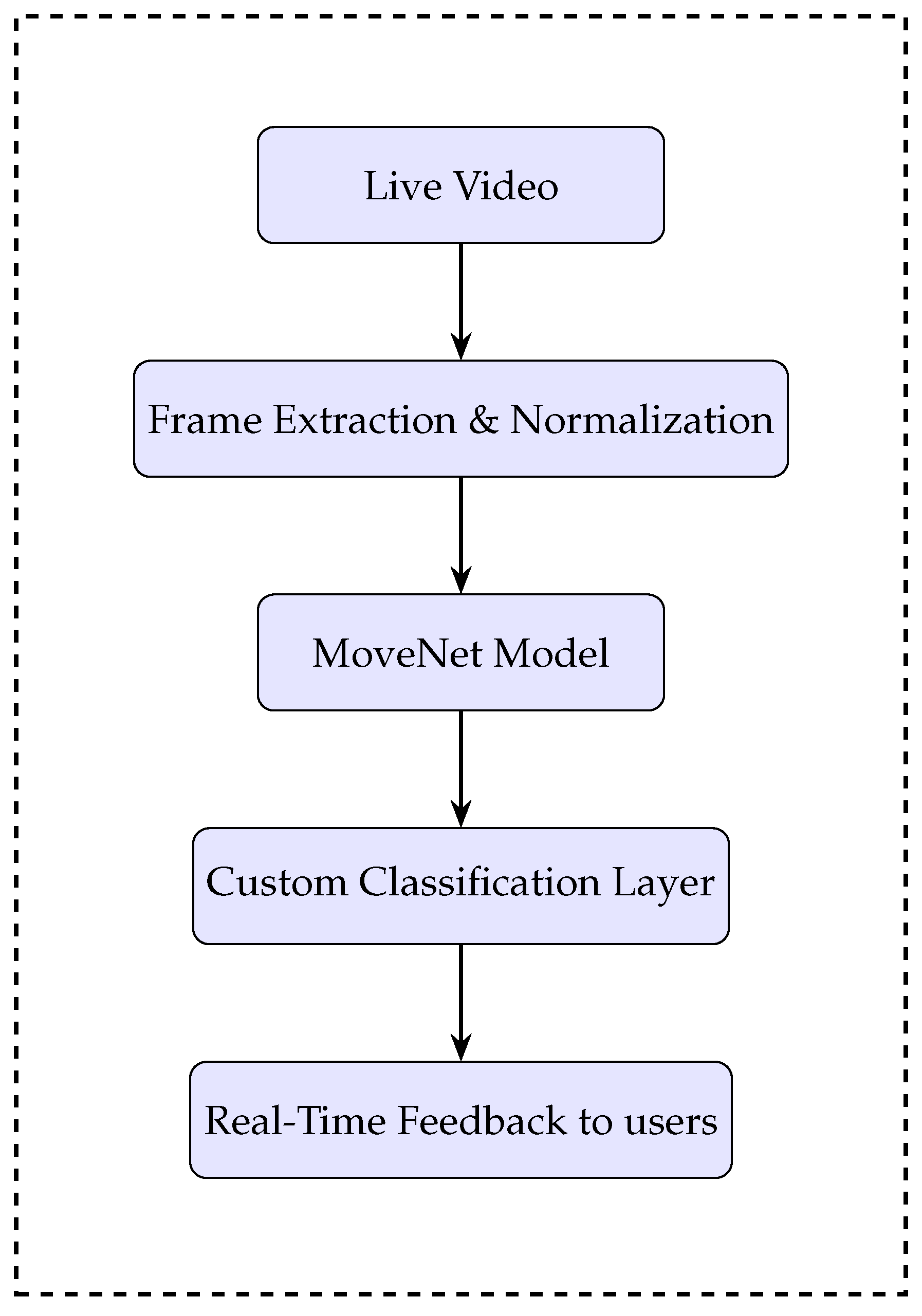

4. Methodology: Computer Vision and Deep Learning

Computer Vision

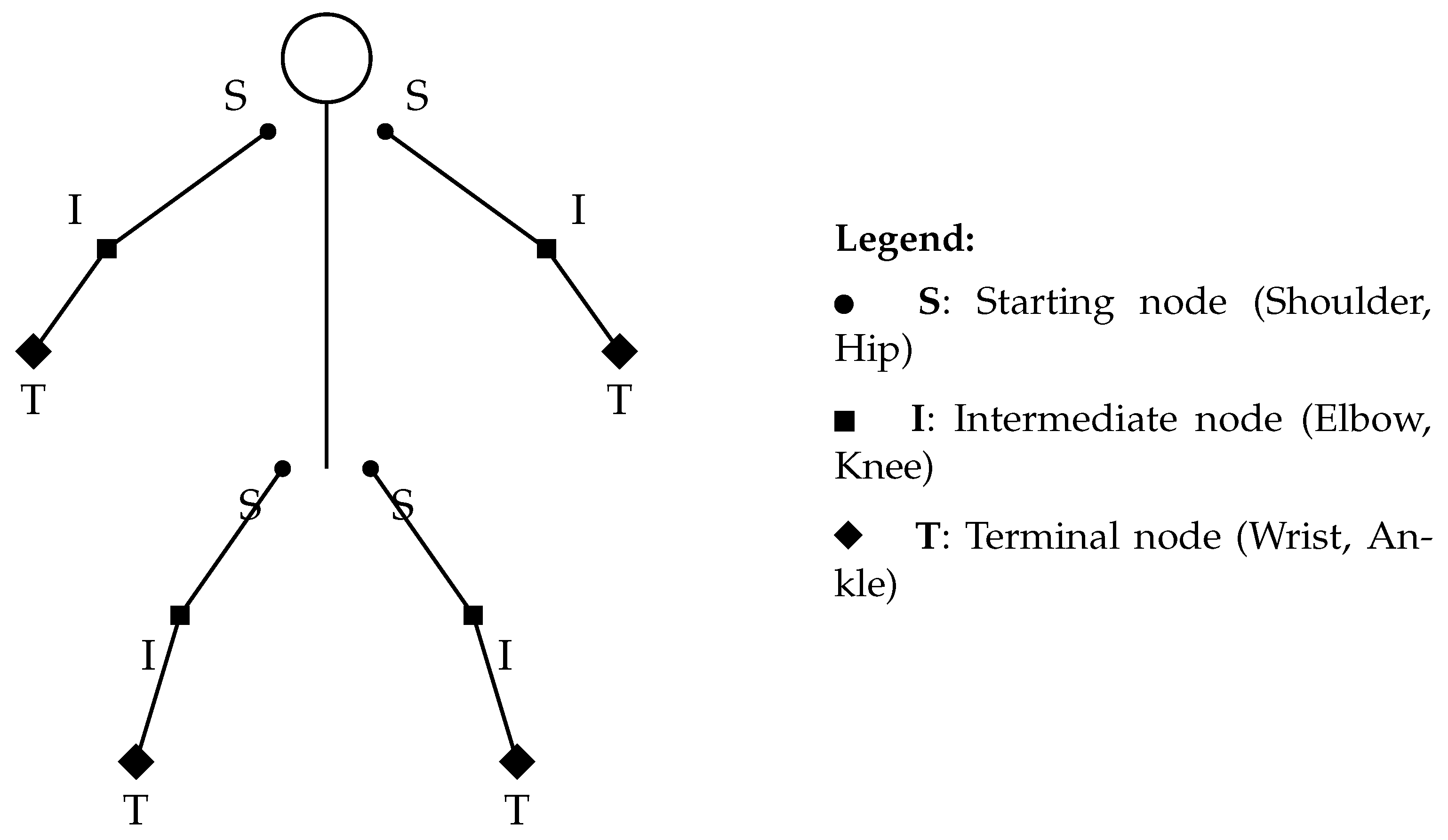

- Initial Motion Analysis: During the initial calibration phase, the system captures a continuous sequence of frames during the first few seconds. Each frame is converted into a digital skeleton and saved in an XML file. From this dataset, the following joint reference points are identified for each limb involved (Figure 8):

  - Starting node: the limb joint closest to the trunk (such as the shoulder or hip).

  - Intermediate node: the central joint of the limb (such as the elbow or knee).

  - Terminal node: the extremity of the limb (such as the hand or foot).
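The text does not specify the XML layout used to store the calibration skeletons. The sketch below assumes a hypothetical schema: one `<frame>` element per captured frame, and one `<joint>` element per tracked joint with `x`, `y`, and `z` attributes. It uses only Python's standard `xml.etree.ElementTree` module to write the frames and read them back.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

Point = Tuple[float, float, float]
Frame = Dict[str, Point]  # joint name -> (x, y, z)


def frames_to_xml(frames: List[Frame]) -> str:
    """Serialize calibration frames to XML (hypothetical schema)."""
    root = ET.Element("calibration")
    for idx, frame in enumerate(frames):
        frame_el = ET.SubElement(root, "frame", index=str(idx))
        for name, (x, y, z) in frame.items():
            # repr() keeps full float precision for an exact round trip
            ET.SubElement(frame_el, "joint", name=name, x=repr(x), y=repr(y), z=repr(z))
    return ET.tostring(root, encoding="unicode")


def xml_to_frames(xml_text: str) -> List[Frame]:
    """Parse the XML produced by frames_to_xml back into a list of frames."""
    root = ET.fromstring(xml_text)
    return [
        {j.get("name"): (float(j.get("x")), float(j.get("y")), float(j.get("z")))
         for j in frame_el.findall("joint")}
        for frame_el in root.findall("frame")
    ]
```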

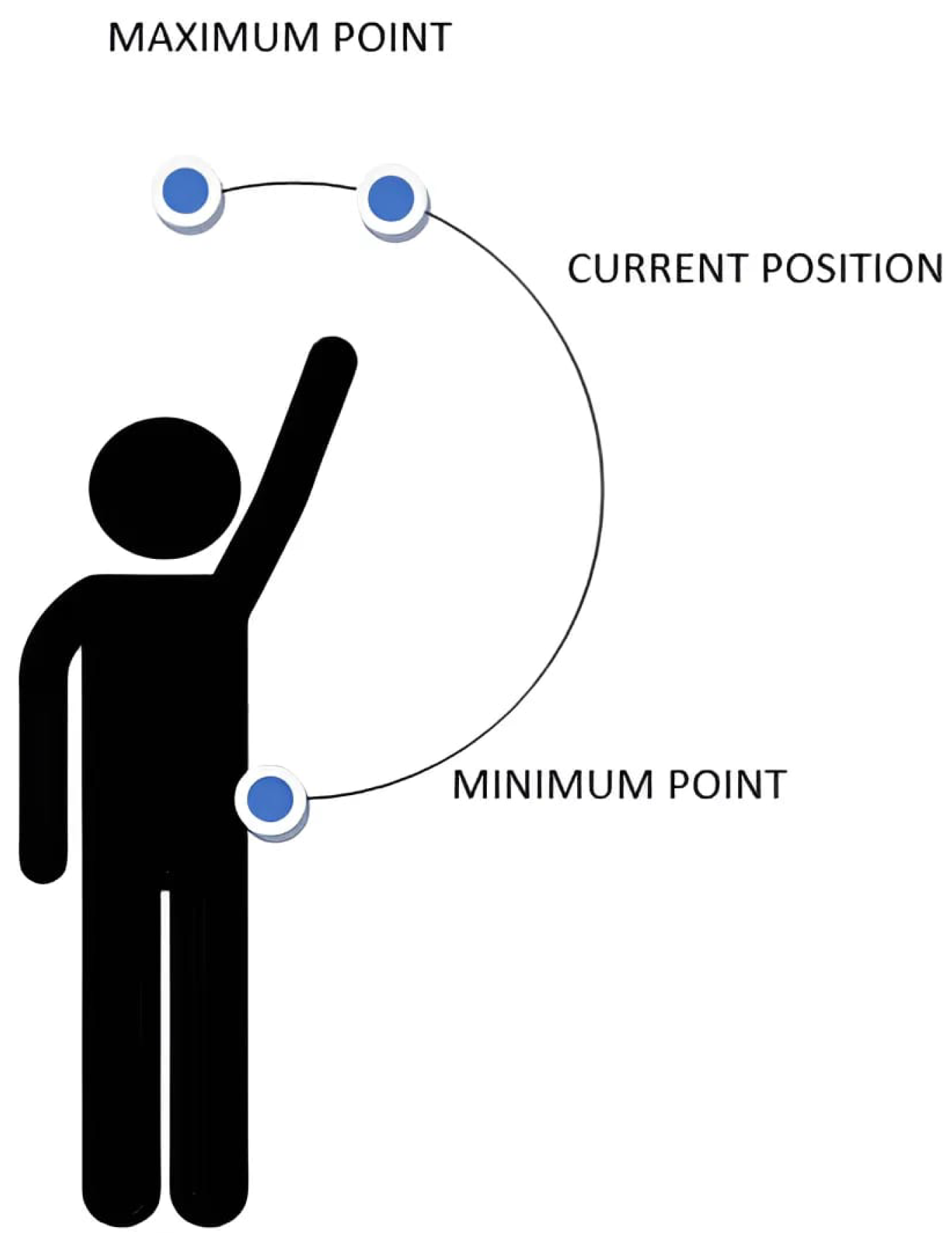

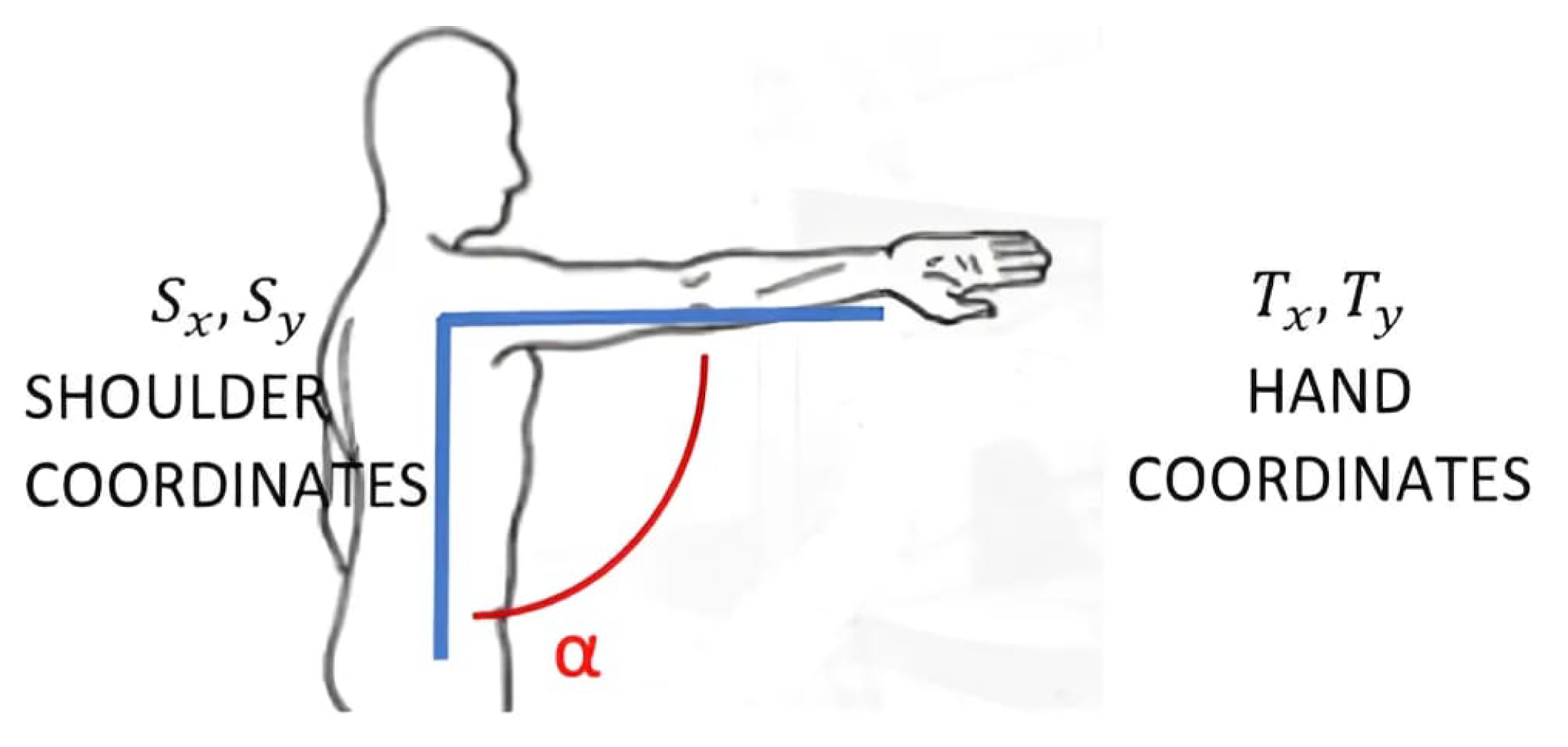

Each set of detections produces a series of spatial vectors, from which an average value is computed to represent a reference position for each joint. This strategy improves the reliability of the data by minimizing the impact of temporary tracking errors or minor involuntary movements.

- Measurement of Limb Lengths: In this stage, the actual length of each of the user’s limbs is calculated using the Euclidean distance formula in 3D space. The length is obtained by summing the distances between consecutive pairs of the three key joint points:

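  - the distance between the starting node (S) and the intermediate node (I);

  - the distance between the intermediate node (I) and the terminal node (T).

  $$L = \lVert I - S \rVert + \lVert T - I \rVert, \qquad \lVert P - Q \rVert = \sqrt{(P_x - Q_x)^2 + (P_y - Q_y)^2 + (P_z - Q_z)^2}$$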

  Movement extension is then expressed relative to the limb length, where:

  - S is the starting node position (e.g., shoulder or hip);

  - T is the terminal node position (e.g., hand or foot);

  - L is the limb length, i.e., the sum of the Euclidean distances S–I and I–T;

  - the horizontal and vertical extension percentages represent the percentage of movement extension along the horizontal and vertical axes, respectively, normalized by the limb length.

  The vertical extension percentage measures the vertical displacement, adjusted by the initial vertical position of the starting node and also normalized by the limb length.
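The calibration and measurement steps above can be sketched as follows. The joint averaging and the limb length follow the text directly: the length is the sum of the S–I and I–T distances, computed with `math.dist`.

The extension formulas themselves are not recoverable from the text, so `extension_percentages` is a hypothetical reading of the description. It makes these assumptions:

- y is the vertical axis and x the horizontal axis;
- the horizontal term is measured from the current starting node;
- the vertical term is measured from the starting node's initial height;
- both terms are divided by the limb length.

```python
import math
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]


def average_positions(frames: Sequence[Dict[str, Point]]) -> Dict[str, Point]:
    """Reference position of each joint: the mean of its positions over all calibration frames."""
    n = len(frames)
    return {
        joint: tuple(sum(frame[joint][axis] for frame in frames) / n for axis in range(3))
        for joint in frames[0]
    }


def limb_length(s: Point, i: Point, t: Point) -> float:
    """L = |I - S| + |T - I|: starting-to-intermediate plus intermediate-to-terminal distance."""
    return math.dist(s, i) + math.dist(i, t)


def extension_percentages(s: Point, s0: Point, t: Point, length: float) -> Tuple[float, float]:
    """HYPOTHETICAL formulas: horizontal (x) and vertical (y) extension as % of limb length.

    s: current starting node, s0: starting node at calibration, t: current terminal node.
    """
    pct_horizontal = 100.0 * (t[0] - s[0]) / length
    pct_vertical = 100.0 * (t[1] - s0[1]) / length  # measured from the initial height of S
    return pct_horizontal, pct_vertical
```

Summing the two segment lengths, rather than taking the direct S–T distance, gives a limb length that does not change when the elbow or knee bends.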

5. Deep Learning

- Data collection: The images used in the system were extracted as frames from videos in the open-source dataset REHAB24-6 [78]. This dataset contains annotated videos of rehabilitation exercises, providing a diverse set of movements focused on upper- and lower-limb motion. The resulting dataset comprises approximately 2500 images and videos of patients performing common rehabilitation exercises. These are mainly arm and leg movements, such as upper-limb rotations, flexions and extensions, lifts, and abductions. The exercises were performed both correctly and incorrectly so that the model could learn the differences between proper and improper executions. The footage was captured with a high-resolution camera in various environments to ensure good variability in lighting conditions, camera angles, and clothing. All images were resized to a fixed resolution of 300 × 300 pixels, and the dataset was randomly split into a training set (70%) and a test set (30%).

- Joint estimation: The system was developed in Python (version 3.13.4) within the Jupyter Notebook (version 7.4) environment. To estimate the positions of the body’s joints, a deep learning model was used: a modified version of MoveNet SinglePose Thunder, a pre-trained convolutional neural network designed for human posture analysis. The model is provided by TensorFlow and was developed in collaboration with the company IncludeHealth. It outputs the coordinates of 17 key body points (such as the head, shoulders, elbows, wrists, hips, knees, and ankles) from RGB images or real-time video streams.

- Movement correction: The data obtained from the MoveNet model represent the estimated spatial coordinates of the body keypoints (e.g., head, shoulders, elbows, and wrists) during the execution of an exercise. To assess the correctness of the movement, these estimated coordinates are compared with a set of reference values. The reference values represent the ideal or expected positions of the keypoints, based on correctly performed movements. The comparison is based on two fundamental aspects: the distance and the orientation between the estimated keypoints ($\hat{\mathbf{k}}$) and the correct or reference keypoints ($\mathbf{k}$). The Euclidean distance between the two coordinate vectors is used to measure the overall spatial difference between the estimated and ideal positions. This distance is calculated as

  $$C = \lVert \mathbf{k} - \hat{\mathbf{k}} \rVert = \sqrt{\sum_{i=1}^{n} \left(k_i - \hat{k}_i\right)^2}$$

  where:

  - $\mathbf{k}$ is the vector of the correct keypoint coordinates (e.g., the 3D coordinates of the head, shoulders, etc.);

  - $\hat{\mathbf{k}}$ is the vector of coordinates estimated by the model;

  - n is the total number of coordinates being compared (e.g., 3 for each point in 3D, multiplied by the number of keypoints);

  - C is the overall deviation of the current movement from the correct one.
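The deviation measure C is the ordinary Euclidean norm of the difference between the flattened reference and estimated coordinate vectors; in Python it is equivalent to `math.dist`. The sketch below makes the flattening explicit.

The keypoint layout is an assumption: a sequence of (x, y, z) tuples in a fixed keypoint order. The function names are illustrative. The orientation check described in the text is not covered here.

```python
import math
from typing import Iterable, List, Sequence


def flatten(keypoints: Iterable[Sequence[float]]) -> List[float]:
    """Concatenate per-keypoint coordinates: [(x1, y1, z1), ...] -> [x1, y1, z1, ...]."""
    return [c for point in keypoints for c in point]


def deviation(reference: Sequence[float], estimated: Sequence[float]) -> float:
    """C = sqrt(sum_i (k_i - k_hat_i)^2) over all n compared coordinates."""
    if len(reference) != len(estimated):
        raise ValueError("reference and estimated vectors must have the same length n")
    return math.sqrt(sum((k - k_hat) ** 2 for k, k_hat in zip(reference, estimated)))
```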

A low value of C indicates that the estimated movement is very close to the reference and was therefore likely performed correctly. Conversely, a high value indicates a significant difference, suggesting an error or deviation in execution. In addition, the system considers the relative orientation of the points. It therefore detects small spatial deviations and also checks that the posture and direction of movement are consistent with the expected model. The measure C is then used as a correction signal that guides real-time feedback to the user, indicating whether and how the movement should be adjusted to match the ideal model.

To quantify the reliability of each estimated keypoint, the system computes a confidence score based on the difference between the predicted and correct keypoint coordinates. This score helps to assess how certain the model is about its estimation. The confidence score is derived from the Mean Squared Error (MSE), which measures the average squared difference between the predicted coordinates $\hat{k}_i$ and the reference coordinates $k_i$:

$$\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left(k_i - \hat{k}_i\right)^2$$

where:

- N is the total number of coordinate values considered (e.g., all keypoint coordinates);

- $k_i$ is the i-th coordinate of the correct/reference keypoint;

- $\hat{k}_i$ is the i-th coordinate of the keypoint predicted by the model.

The Root Mean Squared Error (RMSE) is then calculated as the square root of the MSE:

$$\mathrm{RMSE} = \sqrt{\mathrm{MSE}}$$

The RMSE is expressed in the same units as the original coordinates, which makes the average magnitude of the estimation error easier to interpret. In this system, the confidence score is derived from the RMSE and normalized to the range [0, 1], where a higher score indicates higher confidence in the keypoint estimation.

A threshold of 0.8 is set. If the confidence score exceeds this value, the estimated movement is considered reliable and can be evaluated further. If the score falls below the threshold, the system warns the user that the estimation is uncertain and that the feedback provided may not be accurate. This mechanism ensures that the system processes and gives feedback on movements only when the pose estimation is sufficiently precise, improving the robustness and trustworthiness of rehabilitation monitoring.
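The confidence check can be sketched as below. MSE and RMSE follow the formulas in the text, and the 0.8 threshold is the one stated there.

The normalization from RMSE to [0, 1] is not specified. `confidence_from_rmse` therefore uses a hypothetical linear mapping controlled by an assumed `error_scale`: the RMSE at which confidence reaches 0, expressed in the same units as the coordinates.

```python
import math
from typing import Sequence, Tuple

CONFIDENCE_THRESHOLD = 0.8  # threshold stated in the text


def mse(reference: Sequence[float], predicted: Sequence[float]) -> float:
    """MSE = (1/N) * sum_i (k_i - k_hat_i)^2."""
    if len(reference) != len(predicted) or len(reference) == 0:
        raise ValueError("need two non-empty vectors of equal length N")
    return sum((k - k_hat) ** 2 for k, k_hat in zip(reference, predicted)) / len(reference)


def confidence_from_rmse(rmse: float, error_scale: float) -> float:
    """HYPOTHETICAL normalization: 1.0 at zero error, falling linearly to 0.0 at error_scale."""
    return max(0.0, 1.0 - rmse / error_scale)


def assess(reference: Sequence[float], predicted: Sequence[float],
           error_scale: float = 0.1) -> Tuple[float, bool]:
    """Return (confidence, reliable), where reliable means confidence > CONFIDENCE_THRESHOLD."""
    rmse = math.sqrt(mse(reference, predicted))
    confidence = confidence_from_rmse(rmse, error_scale)
    if confidence <= CONFIDENCE_THRESHOLD:
        print(f"Warning: uncertain pose estimate (confidence {confidence:.2f}); "
              "feedback may be inaccurate")
    return confidence, confidence > CONFIDENCE_THRESHOLD
```

A different monotone mapping, such as `1 / (1 + rmse / error_scale)`, would serve equally well. What matters is that confidence decreases as RMSE grows and that the 0.8 cut-off is applied to it.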

5.1. System Architecture

5.2. Model Training

5.3. Results

6. Vision System for Therapeutic Adaptation: Definition of Integration Strategies

6.1. Integration Testing and Verification

6.2. Test Results and System Validation

6.3. Security, Regulatory Compliance, and Data Protection Measures

- TLS encryption: protects communication between modules, preventing unauthorized access to transmitted data.

- Multi-level authentication: controls system access, ensuring that only authorized users can modify therapy parameters.

- Advanced monitoring and logging: implements fail-safe mechanisms to detect anomalies and activate automatic recovery procedures.
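As an illustration of the TLS measure, the sketch below builds client-side and server-side contexts with Python's standard `ssl` module. Both contexts enforce TLS 1.2 or later. When a client CA file is supplied, the server also requires client certificates (mutual TLS), so only modules holding a certificate from that CA can connect.

The file paths are placeholders, and the choice of mutual TLS is an assumption; the text does not state which TLS configuration the system uses.

```python
import ssl
from typing import Optional


def make_client_context(ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Client side: verify the server certificate and hostname; refuse TLS < 1.2."""
    ctx = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=ca_file)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


def make_server_context(cert_file: Optional[str] = None,
                        key_file: Optional[str] = None,
                        client_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """Server side: present our certificate and optionally require client certificates."""
    ctx = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cert_file:
        ctx.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file:
        # Mutual TLS: only peers with a certificate issued by this CA are accepted
        ctx.load_verify_locations(cafile=client_ca_file)
        ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx
```

A module would then wrap its socket with `ctx.wrap_socket(sock, server_hostname=...)` on the client side, or with `server_side=True` on the server side.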

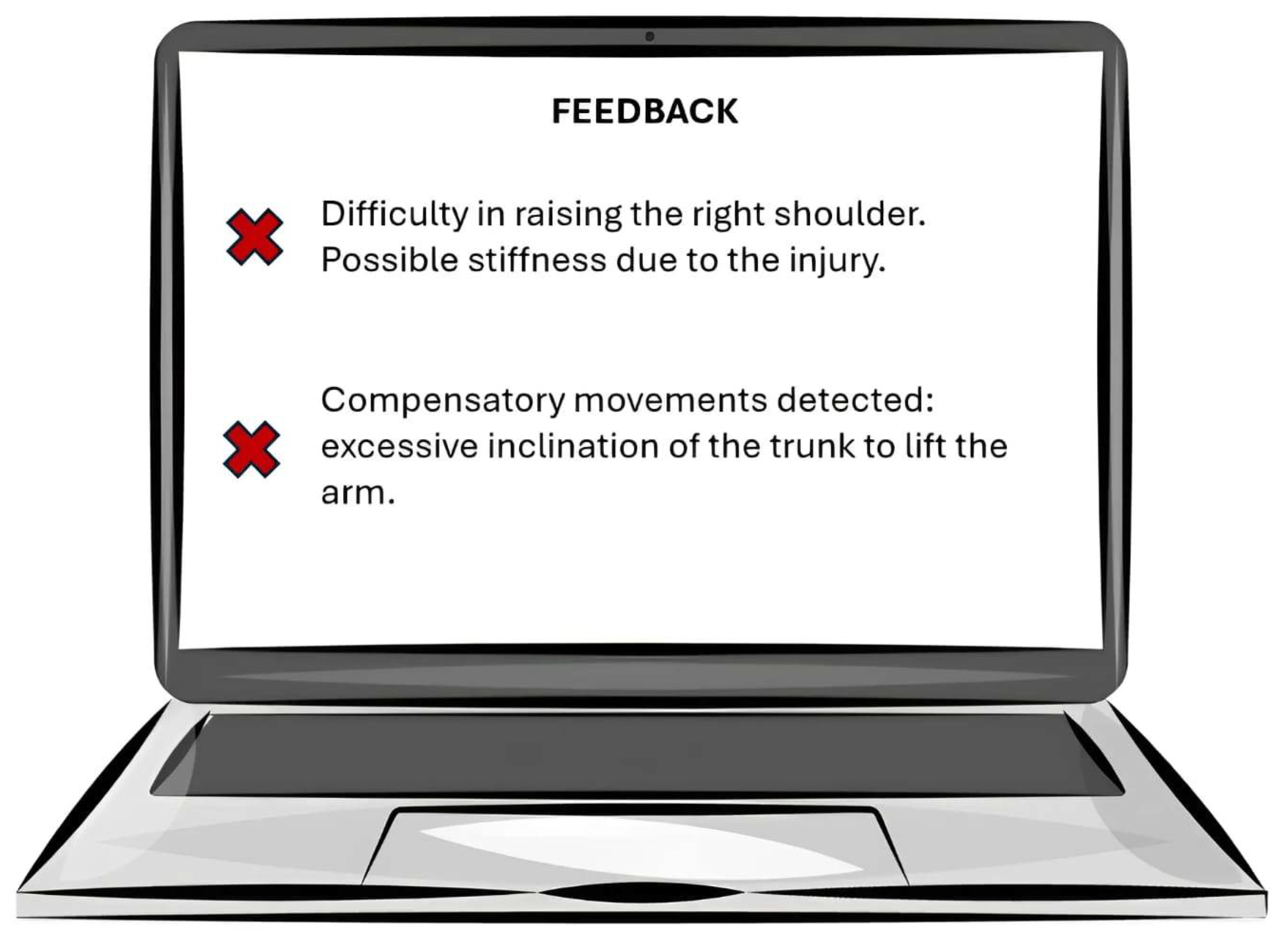

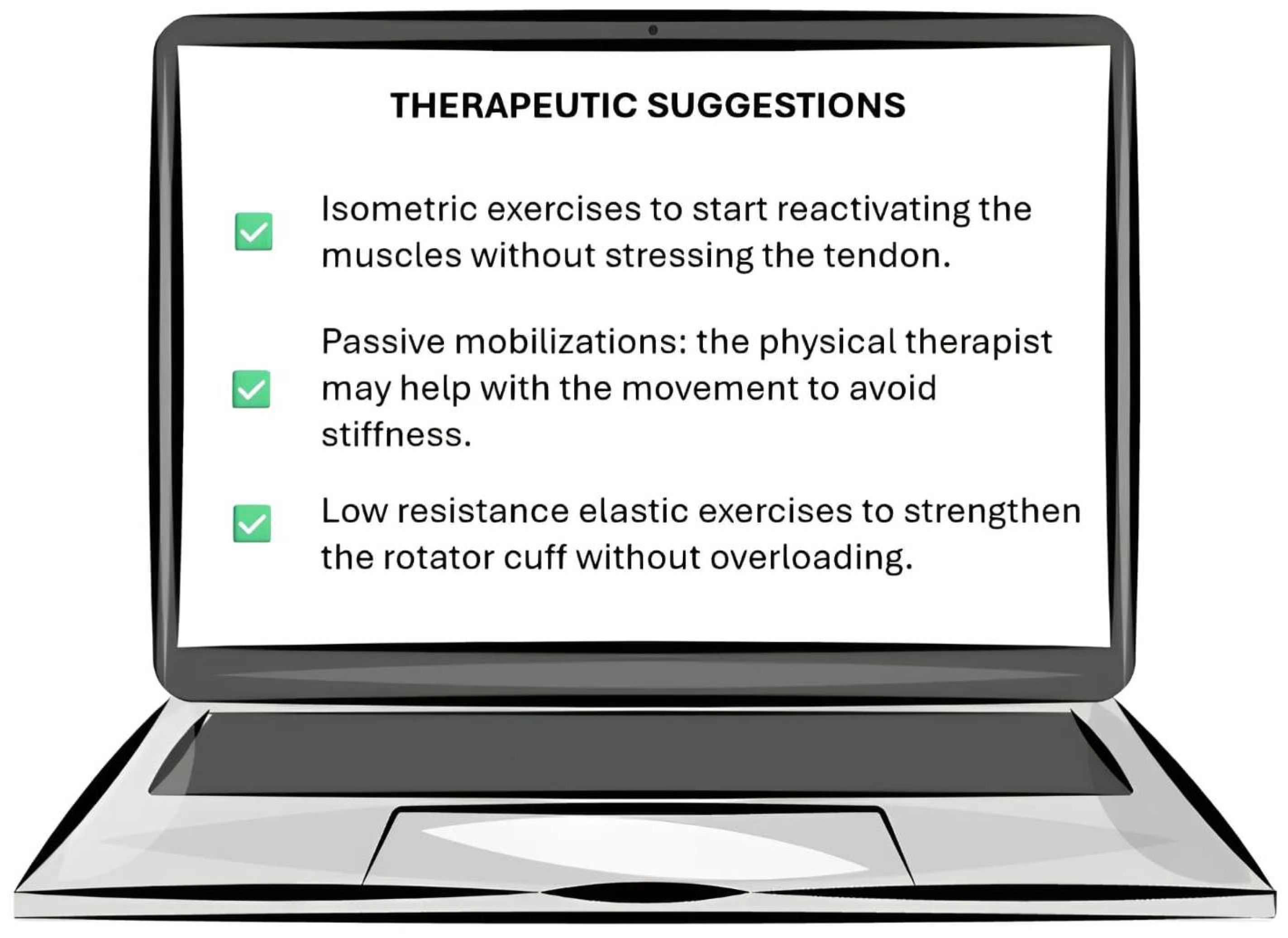

7. Case Study: Rehabilitation of the Rotator Cuff in a Volleyball Player

7.1. Main Functions of the Rehabilitation System

7.1.1. Movement Detection and Analysis

7.1.2. Identification of Problems

7.1.3. Suggestion of Personalized Therapies

8. Future Developments

- Continuous real-time machine learning and adaptive personalization;

- Integration with virtual reality (VR) and augmented reality (AR) technologies;

- Multi-user support and application in clinical and healthcare settings;

- Expansion of hardware compatibility and integration with biometric devices;

- Improvement of interoperability with existing healthcare systems.

9. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Bejnordi, B.E.; Veta, M.; van Diest, P.J.; van Ginneken, B.; Karssemeijer, N.; Litjens, G.; van der Laak, J.A.W.M.; The CAMELYON16 Consortium; Hermsen, M.; Manson, Q.F.; et al. Diagnostic Assessment of Deep Learning Algorithms for Detection of Lymph Node Metastases in Women with Breast Cancer. JAMA 2017, 318, 2199–2210.

- Esteva, A.; Kuprel, B.; Novoa, R.A.; Ko, J.; Swetter, S.M.; Blau, H.M.; Thrun, S. Dermatologist-Level Classification of Skin Cancer with Deep Neural Networks. Nature 2017, 542, 115–118.

- Silvestri, S.; Gargiulo, F.; Ciampi, M. Iterative annotation of biomedical NER corpora with deep neural networks and knowledge bases. Appl. Sci. 2022, 12, 5775.

- Silvestri, S.; Tricomi, G.; Bassolillo, S.R.; De Benedictis, R.; Ciampi, M. An Urban Intelligence Architecture for Heterogeneous Data and Application Integration, Deployment and Orchestration. Sensors 2024, 24, 2376.

- TSUA. Transforming Our World: The 2030 Agenda for Sustainable Development. September 2015. Available online: https://sdgs.un.org/2030agenda (accessed on 28 February 2025).

- Ramson, S.J.; Vishnu, S.; Shanmugam, M. Applications of Internet of Things (IoT)—An overview. In Proceedings of the 2020 5th International Conference on Devices, Circuits and Systems (ICDCS), Coimbatore, India, 5–6 March 2020; pp. 92–95.

- Gargiulo, F.; Silvestri, S.; Ciampi, M. A big data architecture for knowledge discovery. In Proceedings of the 2017 IEEE Symposium on Computers and Communications (ISCC), Heraklion, Greece, 3–6 July 2017; IEEE: Piscataway, NJ, USA, 2017; pp. 82–87.

- AlShorman, O.; AlShorman, B.; Alkhassaweneh, M.; Alkahtani, F. Review of internet of medical things (IoMT)–based remote health monitoring through wearable sensors: A case study for diabetic patients. Indonesian J. Elect. Eng. Comput. Sci. 2020, 20, 414–422.

- Silvestri, S.; Islam, S.; Amelin, D.; Weiler, G.; Papastergiou, S.; Ciampi, M. Cyber threat assessment and management for securing healthcare ecosystems using natural language processing. Int. J. Inf. Secur. 2024, 23, 31–50.

- Firesmith, D. System Resilience: What Exactly Is It? Available online: https://insights.sei.cmu.edu/blog/system-resilience-what-exactly-is-it/ (accessed on 18 December 2024).

- Avizienis, A.; Laprie, J.-C.; Randell, B.; Landwehr, C. Basic concepts and taxonomy of dependable and secure computing. IEEE Trans. Depend. Sec. Comput. 2004, 1, 11–33.

- Taimoor, N.; Rehman, S. Reliable and Resilient AI and IoT-Based Personalised Healthcare Services: A Survey. IEEE Access 2021, 10, 535–563.

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 2018, 21, 1281.

- Kanko, R.M.; Laende, E.K.; Strutzenberger, G.; Brown, M.; Selbie, W.S.; DePaul, V.; Scott, S.H.; Deluzio, K.J. Assessment of spatiotemporal gait parameters using a deep learning algorithm-based markerless Motion Capture system. J. Biomech. 2021, 122, 110414.

- Drazan, J.F.; Phillips, W.T.; Seethapathi, N.; Hullfish, T.J.; Baxter, J.R. Moving outside the lab: Markerless Motion Capture accurately quantifies sagittal plane kinematics during the vertical jump. J. Biomech. 2021, 125, 110547.

- Mündermann, L.; Corazza, S.; Andriacchi, T.P. The evolution of methods for the capture of human movement leading to markerless Motion Capture for biomechanical applications. J. NeuroEng. Rehabil. 2006, 3, 6.

- Corazza, S.; Mündermann, L.; Gambaretto, E.; Andriacchi, T.P. Markerless Motion Capture through Visual Hull, Articulated ICP and Subject Specific Model Generation. Int. J. Comput. Vis. 2010, 87, 156–169.

- Andriacchi, T.P.; Alexander, E.J. Studies of human locomotion: Past, present and future. J. Biomech. 2000, 33, 1217–1224.

- Harris, G.F.; Smith, P.A. Human Motion Analysis: Current Applications and Future Directions; IEEE Press: New York, NY, USA, 1996.

- Mündermann, A.; Dyrby, C.O.; Hurwitz, D.E.; Sharma, L.; Andriacchi, T.P. Potential strategies to reduce medial compartment loading in patients with knee OA of varying severity: Reduced walking speed. Arthritis Rheum. 2004, 50, 1172–1178.

- Simon, S.R. Quantification of human motion: Gait analysis benefits and limitations to its application to clinical problems. J. Biomech. 2004, 37, 1869–1880.

- Wang, L.; Hu, W.; Tan, T. Recent Developments in Human Motion Analysis. Pattern Recognit. 2003, 36, 585–601.

- Moeslund, T.B.; Granum, E. A survey of computer vision-based human Motion Capture. Comput. Vis. Image Underst. 2001, 81, 231–268.

- Kanade, T.; Collins, R.; Lipton, A.; Burt, P.; Wixson, L. Advances in cooperative multi-sensor video surveillance. DARPA Image Underst. Workshop 1998, 1, 2.

- Lee, H.J.; Chen, Z. Determination of 3D human body posture from a single view. Comput. Vis. Graph. Image Process. 1985, 30, 148–168.

- Hogg, D. Model-based vision: A program to see a walking person. Image Vis. Comput. 1983, 1, 5–20.

- Gavrila, D.; Davis, L. 3-D model-based tracking of humans in action: A multi-view approach. In Proceedings of the Conference on Computer Vision and Pattern Recognition, San Francisco, CA, USA, 18–20 June 1996.

- Yamamoto, M.; Koshikawa, K. Human motion analysis based on a robot arm model. In Proceedings of the Computer Vision and Pattern Recognition, Maui, HI, USA, 3–6 June 1991.

- Darrell, T.; Maes, P.; Blumberg, B.; Pentland, A.P. A novel environment for situated vision and behavior. In Proceedings of the Workshop for Visual Behaviors at CVPR, Seattle, WA, USA, 21–23 June 1994.

- Bharatkumar, A.G.; Daigle, K.E.; Pandy, M.G.; Cai, Q.; Aggarwal, J.K. Lower limb kinematics of human walking with the medial axis transformation. In Proceedings of the Workshop on Motion of Non-Rigid and Articulated Objects, Austin, TX, USA, 11–12 November 1994.

- Cedras, C.; Shah, M. Motion-based recognition: A survey. Image Vis. Comput. 1995, 13, 129–155.

- Zakotnik, J.; Matheson, T.; Dürr, V. A posture optimization algorithm for model-based Motion Capture of movement sequences. J. Neurosci. Methods 2004, 135, 43–54.

- Lanshammar, H.; Persson, T.; Medved, V. Comparison between a marker-based and a marker-free method to estimate centre of rotation using video image analysis. In Proceedings of the Second World Congress of Biomechanics, Amsterdam, The Netherlands, 10–15 July 1994.

- Persson, T. A marker-free method for tracking human lower limb segments based on model matching. Int. J. Biomed. Comput. 1996, 41, 87–97.

- Pinzke, S.; Kopp, L. Marker-less systems for tracking working postures—Results from two experiments. Appl. Ergon. 2001, 32, 461–471.

- Legrand, L.; Marzani, F.; Dusserre, L. A marker-free system for the analysis of movement disabilities. Medinfo 1998, 9, 1066–1070.

- Marzani, F.; Calais, E.; Legrand, L. A 3-D marker-free system for the analysis of movement disabilities—An application to the legs. IEEE Trans. Inf. Technol. Biomed. 2001, 5, 18–26.

- Gargiulo, F.; Silvestri, S.; Ciampi, M. A clustering based methodology to support the translation of medical specifications to software models. Appl. Soft Comput. 2018, 71, 199–212.

- Ar, I.; Akgul, Y.S. A computerized recognition system for the home-based physiotherapy exercises using an RGBD camera. IEEE Trans. Neural Syst. Rehabil. Eng. 2014, 22, 1160–1171.

- Tinetti, M.E.; Speechley, M.; Ginter, S.F. Risk factors for falls among elderly persons living in the community. N. Engl. J. Med. 1988, 319, 1701–1707.

- Nalci, A.; Khodamoradi, A.; Balkan, O.; Nahab, F.; Garudadri, H. A computer vision based candidate for functional balance test. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3504–3508.

- van Baar, M.E.; Dekker, J.; Oostendorp, R.A.B.; Bijl, D.; Voorn, T.B.; Bijlsma, J.W.J. Effectiveness of exercise in patients with osteoarthritis of hip or knee: Nine months’ follow up. Ann. Rheum. Dis. 2001, 60, 1123–1130.

- Stroppa, F.; Stroppa, M.S.; Marcheschi, S.; Loconsole, C.; Sotgiu, E.; Solazzi, M.; Buongiorno, D.; Frisoli, A. Real-time 3D tracker in robot-based neurorehabilitation. In Computer Vision and Pattern Recognition, Computer Vision for Assistive Healthcare; Leo, M., Farinella, G.M., Eds.; Academic Press: Cambridge, MA, USA, 2018; pp. 75–104.

- Baptista, R.; Ghorbel, E.; Shabayek, A.E.R.; Moissenet, F.; Aouada, D.; Douchet, A.; André, M.; Pager, J.; Bouilland, S. Home self-training: Visual feedback for assisting physical activity for stroke survivors. Comput. Methods Programs Biomed. 2019, 176, 111–120.

- Wijekoon, A. Reasoning with multi-modal sensor streams for m-health applications. In Proceedings of the Workshop Proceedings for the 26th International Conference on Case-Based Reasoning (ICCBR 2018), Stockholm, Sweden, 9–12 July 2018; pp. 234–238.

- Rybarczyk, Y.; Medina, J.L.P.; Leconte, L.; Jimenes, K.; González, M.; Esparza, D. Implementation and assessment of an intelligent motor tele-rehabilitation platform. Electronics 2019, 8, 58.

- Dorado, J.; del Toro, X.; Santofimia, M.J.; Parreno, A.; Cantarero, R.; Rubio, A.; Lopez, J.C. A computer-vision-based system for at-home rheumatoid arthritis rehabilitation. Int. J. Distrib. Sens. Netw. 2019, 15, 15501477198.

- Peer, P.; Jaklic, A.; Sajn, L. A computer vision-based system for a rehabilitation of a human hand. Period. Biol. 2013, 115, 535–544.

- Salisbury, J.P.; Liu, R.; Minahan, L.M.; Shin, H.Y.; Karnati, S.V.P.; Duffy, S.E. Patient engagement platform for remote monitoring of vestibular rehabilitation with applications in concussion management and elderly fall prevention. In Proceedings of the 2018 IEEE International Conference on Healthcare Informatics (ICHI), New York, NY, USA, 4–7 June 2018; pp. 422–423.

- Internationale Vojta Gesellschaft e.V. Vojta Therapy. 2020. Available online: https://www.vojta.com/en/the-vojta-principle/vojta-therapy (accessed on 23 November 2020).

- Khan, M.H.; Helsper, J.; Farid, M.S.; Grzegorzek, M. A computer vision-based system for monitoring Vojta therapy. Int. J. Med. Inform. 2018, 113, 85–95.

- Chen, Y.L.; Liu, C.H.; Yu, C.W.; Lee, P.; Kuo, Y.W. An upper extremity rehabilitation system using efficient vision-based action identification techniques. Appl. Sci. 2018, 8, 1161.

- Rammer, J.; Slavens, B.; Krzak, J.; Winters, J.; Riedel, S.; Harris, G. Assessment of a marker-less motion analysis system for manual wheelchair application. J. Neuroeng. Rehabil. 2018, 15, 96.

- OpenSim. Available online: https://simtk.org/projects/opensim (accessed on 24 November 2020).

- Buonaiuto, G.; Guarasci, R.; Minutolo, R.; De Pietro, G.; Esposito, M. Quantum Transfer Learning for Acceptability Judgements. Quantum Mach. Intell. 2024, 6, 13.

- Gargiulo, F.; Minutolo, A.; Guarasci, R.; Damiano, E.; De Pietro, G.; Fujita, H.; Esposito, M. An ELECTRA-Based Model for Neural Coreference Resolution. IEEE Access 2022, 10, 75144–75157.

- Minutolo, A.; Guarasci, R.; Damiano, E.; De Pietro, G.; Fujita, H.; Esposito, M. A multi-level methodology for the automated translation of a coreference resolution dataset: An application to the Italian language. Neural Comput. Appl. 2022, 34, 22493–22518.

- Maskeliūnas, R.; Damaševičius, R.; Blažauskas, T.; Canbulut, C.; Adomavičienė, A.; Griškevičius, J. BiomacVR: A Virtual Reality-Based System for Precise Human Posture and Motion Analysis in Rehabilitation Exercises Using Depth Sensors. Electronics 2023, 12, 339.

- Lim, J.H.; He, K.; Yi, Z.; Hou, C.; Zhang, C.; Sui, Y.; Li, L. Adaptive Learning Based Upper-Limb Rehabilitation Training System with Collaborative Robot. In Proceedings of the 2023 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Sydney, Australia, 24–27 July 2023; pp. 1–5.

- Çubukçu, B.; Yüzgeç, U.; Zileli, A.; Zılelı, R. Kinect-Based Integrated Physiotherapy Mentor Application for Shoulder Damage. Future Gener. Comput. Syst. 2021, 122, 105–116.

- Saratean, T.; Antal, M.; Pop, C.; Cioara, T.; Anghel, I.; Salomie, I. A Physiotheraphy Coaching System Based on Kinect Sensor. In Proceedings of the 2020 IEEE 16th International Conference on Intelligent Computer Communication and Processing (ICCP), Cluj-Napoca, Romania, 3–5 September 2020; pp. 535–540.

- Wagner, J.; Szymański, M.; Błażkiewicz, M.; Kaczmarczyk, K. Methods for Spatiotemporal Analysis of Human Gait Based on Data from Depth Sensors. Sensors 2023, 23, 1218.

- Bijalwan, V.; Semwal, V.B.; Singh, G.; Mandal, T.K. HDL-PSR: Modelling Spatio-Temporal Features Using Hybrid Deep Learning Approach for Post-Stroke Rehabilitation. Neural Process. Lett. 2023, 55, 279–298.

- Keller, A.V.; Torres-Espin, A.; Peterson, T.A.; Booker, J.; O’Neill, C.; Lotz, J.C.; Bailey, J.F.; Ferguson, A.R.; Matthew, R.P. Unsupervised Machine Learning on Motion Capture Data Uncovers Movement Strategies in Low Back Pain. Front. Bioeng. Biotechnol. 2022, 10, 868684.

- Trinidad-Fernández, M.; Cuesta-Vargas, A.; Vaes, P.; Beckwée, D.; Moreno, F.Á.; González-Jiménez, J.; Fernández-Nebro, A.; Manrique-Arija, S.; Ureña-Garnica, I.; González-Sánchez, M. Human Motion Capture for Movement Limitation Analysis Using an RGB-D Camera in Spondyloarthritis: A Validation Study. Med. Biol. Eng. Comput. 2021, 59, 2127–2137.

- Hustinawaty, H.; Rumambi, T.; Hermita, M. Skeletonization of the Straight Leg Raise Movement Using the Kinect SDK. Int. J. Adv. Comput. Sci. Appl. 2021, 12, 0120683.

- Girase, H.; Nyayapati, P.; Booker, J.; Lotz, J.C.; Bailey, J.F.; Matthew, R.P. Automated Assessment and Classification of Spine, Hip, and Knee Pathologies from Sit-to-Stand Movements Collected in Clinical Practice. J. Biomech. 2021, 128, 110786.

- Lim, D.; Pei, W.; Lee, J.W.; Musselman, K.E.; Masani, K. Feasibility of Using a Depth Camera or Pressure Mat for Visual Feedback Balance Training with Functional Electrical Stimulation. Biomed. Eng. Online 2024, 23, 19.

- Raza, A.; Qadri, A.M.; Akhtar, I.; Samee, N.A.; Alabdulhafith, M. LogRF: An Approach to Human Pose Estimation Using Skeleton Landmarks for Physiotherapy Fitness Exercise Correction. IEEE Access 2023, 11, 107930–107939.

- Khan, M.A.A.H.; Murikipudi, M.; Azmee, A.A. Post-Stroke Exercise Assessment Using Hybrid Quantum Neural Network. In Proceedings of the 2023 IEEE 47th Annual Computers, Software, and Applications Conference (COMPSAC), Torino, Italy, 26–30 June 2023; pp. 539–548.

- Wei, W.; McElroy, C.; Dey, S. Using Sensors and Deep Learning to Enable On-Demand Balance Evaluation for Effective Physical Therapy. IEEE Access 2020, 8, 99889–99899.

- Uccheddu, F.; Governi, L.; Furferi, R.; Carfagni, M. Home Physiotherapy Rehabilitation Based on RGB-D Sensors: A Hybrid Approach to the Joints Angular Range of Motion Estimation. Int. J. Interact. Des. Manuf. (IJIDeM) 2021, 15, 99–102.

- Trinidad-Fernández, M.; Beckwée, D.; Cuesta-Vargas, A.; González-Sánchez, M.; Moreno, F.A.; González-Jiménez, J.; Joos, E.; Vaes, P. Validation, Reliability, and Responsiveness Outcomes of Kinematic Assessment with an RGB-D Camera to Analyze Movement in Subacute and Chronic Low Back Pain. Sensors 2020, 20, 689.

- Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

- Cox, D.R. The regression analysis of binary sequences. J. R. Stat. Soc. Ser. B Stat. Methodol. 1958, 20, 215–232.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Wold, S.; Esbensen, K.; Geladi, P. Principal component analysis. Chemom. Intell. Lab. Syst. 1987, 2, 37–52.

- Černek, A.; Sedmidubsky, J.; Budikova, P. REHAB24-6: Physical Therapy Dataset for Analyzing Pose Estimation Methods. In Proceedings of the 17th International Conference on Similarity Search and Applications (SISAP), Providence, RI, USA, 4–6 November 2024; Springer: Berlin/Heidelberg, Germany, 2024; p. 14.

- Voigt, P.; von dem Bussche, A. The EU General Data Protection Regulation (GDPR): A Practical Guide; Springer: Berlin/Heidelberg, Germany, 2017.

- European Commission. Proposal for a Regulation Laying Down Harmonized Rules on Artificial Intelligence (Artificial Intelligence Act). Available online: https://digital-strategy.ec.europa.eu/en/library/proposal-regulation-laying-down-harmonised-rules-artificial-intelligence (accessed on 24 November 2020).

- Ciampi, M.; Sicuranza, M.; Silvestri, S. A Privacy-Preserving and Standard-Based Architecture for Secondary Use of Clinical Data. Information 2022, 13, 87.

| Optical Systems | Non-Optical Systems |
|---|---|
| Marker-based | Electro-mechanical |
| Marker-less | Electro-magnetic |
| | Inertial |

| Component | Description |
|---|---|
| Symmetry of Movement | Identifies asymmetries, such as the right shoulder being lower than the left. |
| Range of Motion | Assesses how far the right arm can be raised compared with the left, revealing limitations. |
| Speed and Acceleration | Detects compensatory movements, such as leaning backward, and stiffness, which may indicate difficulty in movement. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cirillo, E.; Conte, C.; Moccardi, A.; Fonisto, M. Resilient AI in Therapeutic Rehabilitation: The Integration of Computer Vision and Deep Learning for Dynamic Therapy Adaptation. Appl. Sci. 2025, 15, 6800. https://doi.org/10.3390/app15126800
