Abstract

The increasing complexity of software systems has heightened the need for efficient and accurate vulnerability detection. Large Language Models have emerged as promising tools in this domain; however, their reasoning capabilities and limitations remain insufficiently explored. This study presents a systematic evaluation of different Large Language Models with and without explicit reasoning mechanisms, including Claude-3.5-Haiku, GPT-4o-Mini, DeepSeek-V3, O3-Mini, and DeepSeek-R1. Experimental results demonstrate that reasoning-enabled models, particularly DeepSeek-R1, outperform their non-reasoning counterparts by leveraging structured step-by-step inference strategies and valuable reasoning traces. With proposed data processing and prompt design in the interaction, DeepSeek-R1 achieves an accuracy of 0.9507 and an F1-score of 0.9659 on the Software Assurance Reference Dataset. These findings highlight the potential of integrating reasoning-enabled Large Language Models into vulnerability detection frameworks to simultaneously improve detection performance and interpretability.

1. Introduction

With the rapid advancement of digital technologies, foundational network infrastructure, computer hardware, and intelligent devices are playing an increasingly vital role across social, economic, and defense sectors. These technologies have improved the efficiency of information processing and transmission, accelerating the intelligent transformation of numerous industries. However, software security challenges, such as cyberattacks, unauthorized access, and leakage of personal information, are increasingly severe, posing significant threats to system reliability and operational safety. Attackers frequently exploit software vulnerabilities to conduct remote intrusions, which may result in severe disruptions to critical systems. Consequently, enhancing software security, particularly the detection of code vulnerabilities, has become an urgent area of research.

Code vulnerability detection aims to identify potential security flaws in software systems. Traditional approaches are primarily based on static analysis, dynamic analysis, and manually designed or learning-based feature extraction methods [1,2,3]. In recent years, deep learning-based methods have emerged as effective alternatives, with Graph Neural Networks (GNNs) gaining particular attention [4,5]. GNNs are capable of modeling syntactic and semantic relationships through graph-structured representations, enabling more accurate detection. As a result, GNN-based techniques have been widely adopted in prior studies on vulnerability detection [6,7].

The emergence of Large Language Models (LLMs), such as GPT and DeepSeek, has significantly advanced natural language and code understanding tasks. These models, trained on large-scale datasets and comprising billions of parameters, have demonstrated strong performance in areas that were previously considered challenging for artificial intelligence. The ChatGPT series has been applied in creative writing, programming assistance, and problem-solving through interactive dialogues [8,9,10]. This success has inspired researchers to investigate the applicability of LLMs in the field of code vulnerability detection [11,12,13,14,15]. Fu et al. [14] evaluated the performance of ChatGPT across the pipeline of software vulnerability analysis. Bsharat et al. [15] showed that a prompting strategy can improve vulnerability detection performance in non-reasoning GPT models.

Although preliminary studies have explored vulnerability detection using models like GPT for identifying code-level bugs, they often fail to fully consider the reasoning capabilities inherent in reasoning LLMs. Some existing works focus on prompt engineering with LLMs like GPT-4o or Claude-3.5, while comprehensive evaluations comparing reasoning and non-reasoning models remain scarce. In particular, little attention has been given to reasoning-enhanced models like DeepSeek-R1, which not only generate predictions but also expose a reasoning analysis process that prior black-box approaches lack. The multi-step reasoning traces produced during vulnerability detection, and the limitations revealed by error analysis, have not been fully investigated.

To address these gaps, this study presents a reasoning-aware vulnerability detection framework based on DeepSeek-R1. In contrast to prior work, the proposed framework conducts the first comprehensive comparative analysis between reasoning-capable and non-reasoning LLMs on vulnerability detection. Furthermore, this work explicitly incorporates and evaluates reasoning traces, offering more accurate predictions and valuable interpretability for vulnerability remediation.

The key contributions of this work are summarized as follows:

- A novel LLM-based framework for code vulnerability detection is proposed, which explicitly incorporates reasoning traces through DeepSeek-R1. This enables interpretable detection workflows that go beyond traditional black-box results.

- A first systematic comparative study is conducted between reasoning models (e.g., DeepSeek-R1) and widely used non-reasoning LLMs (e.g., GPT-4o, Claude-3.5, DeepSeek-V3), highlighting their respective strengths and limitations in detecting different software vulnerabilities.

- The impact of multi-step logical reasoning on detection performance is empirically analyzed. A case study and detailed error analysis further reveal the strengths and current limitations of reasoning-based LLMs, offering valuable insights for future research on code remediation.

This work thus provides a new perspective on how reasoning-augmented LLMs can advance vulnerability detection and lays a foundation for more interpretable and robust AI-assisted security analysis. The remainder of this paper is organized as follows: Section 2 reviews related work on traditional and LLM-based vulnerability detection techniques. Section 3 describes the proposed methodology, including data collection, data processing, and the design of prompting strategies. Section 4 presents the experimental setup, datasets, and results, followed by a comparative analysis of model performance, cost comparison, case study, and error analysis. Section 5 concludes the paper by answering the research questions, summarizing the key contributions, discussing limitations, and outlining future research directions for advancing LLM-based vulnerability detection.

2. Related Works

2.1. Code Vulnerability Detection

Code vulnerabilities refer to flaws in the design or implementation of a software system that can lead to unexpected or undesired behaviors during execution. Traditional vulnerability detection methods primarily rely on static analysis, dynamic analysis, and handcrafted feature extraction techniques. Static analysis detects potential vulnerabilities by examining the code’s structure, syntax, and control flow without executing the program. For example, in 2010, Pham et al. [16] proposed SecureSync, a reproducibility-based method utilizing an extended Abstract Syntax Tree (AST). However, tree-based representations often suffer from high computational complexity, which limits their scalability on large codebases. In 2016, Li et al. [17] introduced VulPecker, which detects vulnerabilities based on source code similarity. More recently, Zivkovic et al. [18] proposed an enhanced XGBoost model and used the HARSA algorithm to perform defect classification. While static analysis methods are efficient at scanning large volumes of code, they struggle with complex control flows and context-dependent vulnerabilities, often leading to high false-positive and false-negative rates.

In contrast, dynamic analysis techniques detect vulnerabilities by monitoring the actual runtime behavior of a program. These methods can capture execution context and real data flows. For instance, Mayhem [19] prioritizes execution paths involving memory accesses using symbolic addresses to uncover exploitable bugs. In 2022, Google’s security team introduced LibFuzzer [20], a fuzzing-based tool that identifies bugs by generating a large number of randomized test inputs. Although dynamic analysis can uncover complex runtime vulnerabilities, it is often inefficient due to long execution times and heavy dependence on the testing environment. Moreover, it may fail to cover all execution paths.

In recent years, deep learning has driven the development of data-driven vulnerability detection approaches. Among them, GNNs have gained attention due to their ability to model structural and semantic relationships in code graphs. In 2018, Li et al. [6] proposed VulDeePecker, which utilizes a Bidirectional Long Short-Term Memory (BLSTM) network to automatically learn features from source code. Building on this, Cheng et al. [21] introduced VGDetector in 2019, which leverages a Graph Convolutional Network (GCN) to analyze program slices by converting them into graph structures. This representation enables the model to capture dependencies among code components. GNN-based methods have shown promising results, particularly in detecting complex and deeply embedded vulnerabilities, due to their ability to reason over code structure and data flow.

With the advancement of Natural Language Processing (NLP) powered by AI technologies, source code has increasingly been treated as a form of language as well. In 2020, Feng et al. [22] proposed CodeBERT based on the Transformer architecture and introduced a bimodal pre-trained model for programming and natural language. While CodeBERT processes code as a sequence of tokens, it inherently overlooks the structural graph properties of code. To address this limitation, Guo et al. [23] introduced GraphCodeBERT in 2021, which explicitly incorporates structure information such as data flow and syntax trees via a novel data flow edge prediction task during pre-training. This enhancement allows the model to better capture the semantic dependencies. In 2024, Petrovic [24] proposed a hybrid two-layer framework combining NLP-based techniques with CNN classifiers for software defect detection. Such hybrid models show improved capability in handling large feature spaces and learning intermediate representations.

Despite these advances, NLP-based methods still face challenges in handling long-range dependencies and modeling complex control flows. Recent developments in LLMs have sparked new interest in addressing these issues, leveraging their superior contextual understanding and reasoning capabilities for more effective code analysis.

2.2. Large Language Models

In recent years, LLMs have become a central focus in artificial intelligence research, demonstrating strong capabilities across NLP, code generation, and general reasoning tasks. The foundation for modern LLMs was laid in 2017 when Vaswani et al. [25] proposed the Transformer architecture, which replaced recurrence with multi-head self-attention. Serving as the core architecture of models such as GPT, the Transformer predicts tokens based on contextual embedding. The model parameters (weights and biases) largely determine the model’s expressiveness. Consequently, the scale of parameters has become a key indicator of model performance.

In 2020, OpenAI introduced GPT-3 [26], a landmark model with 175 billion parameters, marking a significant advancement in language modeling. The subsequent GPT-3.5 version improved upon GPT-3's performance and efficiency, paving the way for GPT-4, released in 2023. Although its parameter count remains undisclosed, GPT-4 [27] achieved superior performance across multiple industry-standard benchmarks. GPT-4 Turbo, released in April 2024, further extended input length capacity to 128,000 tokens while optimizing speed and cost. In May 2024, OpenAI introduced the multimodal model GPT-4o [28], capable of processing and generating text, images, and audio. With audio response latency on the order of a few hundred milliseconds, GPT-4o enabled the development of low-latency interactive applications. A lightweight variant, GPT-4o-Mini, was subsequently released to maintain multimodal functionality while reducing computational demands, making it suitable for deployment in resource-constrained environments.

To address tasks requiring more extensive reasoning, OpenAI developed the GPT-o1 series of reasoning-focused models. These models are optimized for solving complex problems in scientific, mathematical, and coding domains. Building on this series, O3-Mini [29], released in January 2025, offers improved reasoning performance with lower latency and computational cost.

In parallel, Anthropic launched the Claude series of LLMs. Claude-1, released in March 2023 with 52 billion parameters, emphasized safety and transparency in model behavior. Claude-2, introduced in July 2023 [30], enhanced performance in coding, reasoning, and creative tasks. The Claude-3 family, released in March 2024, comprises Haiku, Sonnet, and Opus, each optimized for different workloads, ranging from fast, lightweight tasks to high-complexity inference. Continued iterations in the Claude series reveal a strong commitment to balancing reasoning power with deployment scalability. Claude-3.5 Haiku was released in June 2024 [31], offering twice the speed and reduced costs compared to Claude-3 Opus [32].

In China, DeepSeek, established in July 2023, focuses on the development of high-performance LLMs. In November 2023, DeepSeek launched DeepSeek-Coder [33], initiating its exploration into AI for code tasks. By December 2024, DeepSeek-V3 [34] was introduced, achieving performance comparable to GPT-4 and Claude-3.5, while improving training efficiency and reducing inference costs. These advancements laid the foundation for a new generation of reasoning-centric models. A major milestone was reached in January 2025 with the release of DeepSeek-R1 [35], a reasoning LLM designed for knowledge-intensive and document-oriented tasks. DeepSeek-R1 outperformed its predecessors on benchmarks such as MMLU [36], GPQA Diamond [37], and FRAMES [38], and demonstrated superior capabilities in mathematical reasoning and scientific analysis.

2.3. LLMs in Vulnerability Detection

With the rapid advancement of LLMs, numerous studies have begun to investigate their applications in code vulnerability detection, analyzing their potential, effectiveness, and inherent limitations. In 2022, Hanif [13] proposed VulBERTa, a RoBERTa-based vulnerability detection model pre-trained on code from open-source projects. The model introduced a custom tokenizer and outperformed VulDeePecker despite limited training data. In 2023, Fu et al. [14] evaluated the performance of ChatGPT across the full pipeline of software vulnerability analysis. Their results indicated that although general-purpose LLMs such as GPT-4 have made notable progress in general reasoning tasks, they still underperform compared to domain-specific models like NLP-based CodeBERT and GraphCodeBERT in specialized vulnerability detection tasks. Their study further indicated that task-specific fine-tuning is essential to bridge the gap between general-purpose models and specialized security detection frameworks.

In a comparative study, Espejel et al. [39] examined the reasoning capabilities of GPT-3.5, GPT-4, and BARD in a zero-shot setting, highlighting the significance of prompt engineering in enhancing model performance. The findings demonstrated that prompt variation can substantially influence output quality, underscoring the critical role of prompt design in LLM-based vulnerability detection. Consistent with these insights, OpenAI’s technical report on GPT-4 acknowledged the model’s limitations in security-related tasks and emphasized the impact of prompt formulation and fine-tuning on task effectiveness. Similarly, Bsharat et al. [15] showed that a simple prompting strategy can considerably improve vulnerability detection performance in non-reasoning GPT models.

Expanding on this line of research, Zhang et al. [40] incorporated structural and sequential auxiliary information into prompts to evaluate their effect on ChatGPT’s performance in detecting vulnerabilities. Their results confirmed that prompt enrichment can enhance detection accuracy; however, they also revealed potential drawbacks, such as diminished precision for certain vulnerability categories, indicating that performance gains may not be uniformly distributed across different vulnerability types. From a behavioral perspective, Ranaldi et al. [41] explored the reliability of LLMs in the presence of human interaction and feedback. They identified that while LLMs perform well in objective analytical tasks, they are prone to sycophantic tendencies in subjective scenarios. This susceptibility to user biases and assumptions raises concerns about the robustness and trustworthiness of LLM outputs in security-sensitive domains. Their findings further highlight the importance of robust prompt engineering and the necessity of designing interaction-aware LLM evaluation strategies.

Notably, most of the aforementioned prompt engineering strategies target non-reasoning models. The extent to which such strategies are effective for reasoning-based LLMs remains underexplored. Wang et al. [42] investigated this distinction by comparing prompt engineering methods applied to both reasoning and non-reasoning models. Their findings suggest that techniques effective for execution-oriented models may not yield comparable improvements in reasoning models. In fact, overly complex prompts may lead to degraded performance, implying that prompt complexity must be carefully balanced to fully leverage the reasoning abilities of such models in vulnerability detection. Furthermore, Zhang et al. [43] introduced an instruction tuning method that enables language models to produce more secure code. They proposed SecCoder-XL to enhance the generalizability and robustness of secure code generation. Harsha et al. [44] studied runtime strategies for LLMs in high-stakes applications such as medical diagnostics. Their proposed framework, MedPrompt, successfully guided general-purpose LLMs to perform well in domain-specific benchmarks. Importantly, their study highlighted the performance of GPT-o1, a novel reasoning-centric model, which significantly outperformed the GPT-4 series even without extensive prompt engineering. However, the performance of GPT-o1 was constrained by prompt-free usage [45], suggesting that while few-shot learning may be less relevant for native reasoning models, contextual grounding remains important for optimal performance.

3. Methodology

In this section, an end-to-end workflow is proposed for software vulnerability detection utilizing LLMs, and a comparative analysis between reasoning models and non-reasoning models is conducted with a widely used software vulnerability dataset. The workflow is designed to answer the following key questions:

RQ1: Do reasoning-aware LLMs outperform non-reasoning models in software vulnerability detection?

RQ2: What are the trade-offs between performance and cost for different models?

RQ3: Can reasoning traces from DeepSeek-R1 provide interpretable insights into its decision-making process?

RQ4: What are the potential limitations of reasoning-aware LLMs in the context of software vulnerability detection?

3.1. Framework Overview

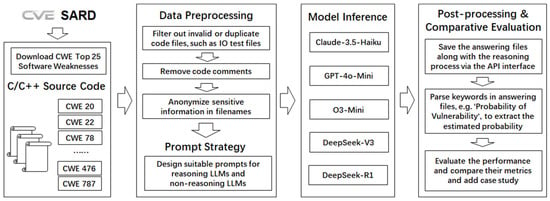

The end-to-end workflow is illustrated in Figure 1 for vulnerability detection utilizing different LLMs, and it outlines the key stages from data acquisition to comparative evaluation. The workflow begins by referencing the Top 25 most dangerous software weaknesses in the Common Weakness Enumeration (CWE) project [46]. The corresponding test cases are retrieved from the Software Assurance Reference Dataset (SARD) [47].

Figure 1. The proposed workflow for vulnerability detection using LLMs.

As shown in the Data Preprocessing Stage of Figure 1, a comprehensive pipeline is implemented to ensure data quality and consistency in the experiment. Firstly, invalid or duplicate code samples are removed. Secondly, code comments are stripped to prevent misleading contextual cues that may bias the model’s decision-making. Furthermore, filenames containing sensitive or potentially identifying content are anonymized to ensure fairness and eliminate unintended cues for model inference.

To rigorously examine the impact of reasoning on vulnerability detection performance, the Prompt Strategy module designs a prompt that serves both reasoning and non-reasoning LLMs. The prompt template accommodates the capabilities of each group, facilitating a fair and effective comparison. In this study, the selected models are categorized into two groups based on their reasoning capabilities: non-reasoning models and reasoning models. Specifically, non-reasoning models such as GPT-4o-Mini, Claude-3.5-Haiku, and DeepSeek-V3 typically exhibit limited multi-step reasoning capabilities and tend to rely on pattern matching or memorization rather than deep comprehension. In contrast, reasoning models like O3-Mini and DeepSeek-R1 are trained with reinforcement learning and optimization techniques that encourage multi-hop reasoning and structured thinking. These models can generate intermediate steps in their responses, leading to more reliable results on complex tasks. The specific prompting strategies are provided in Section 3.4.

In the Model Inference phase, the preprocessed code samples are fed into each LLM. This study evaluates five representative models: Claude-3.5-Haiku, GPT-4o-Mini, DeepSeek-V3, O3-Mini, and DeepSeek-R1. Model responses are obtained via official APIs, preserving both raw output and any intermediate reasoning traces when available.

Next, the Post-processing stage, as reflected in Figure 1, involves keyword-based extraction of critical metric information, such as “Probability of Vulnerability”, enabling consistent and quantitative analysis. To enhance robustness, the post-processing scripts incorporate multiple keyword patterns so that final results are extracted accurately across diverse model outputs. To facilitate understanding and reproducibility, the data preprocessing and post-processing code, together with some CWE code samples, will be included on the main branch of the publicly released GitHub repository.
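The keyword-based extraction described above can be sketched as follows. This is a minimal illustration, not the released post-processing script: the two regular expressions, the function name `extract_probability`, and the rule that values above 1 are read as percentages are all assumptions, since the text only names the “Probability of Vulnerability” keyword.

```python
import re
from typing import Optional

# Hypothetical keyword patterns; only the first keyword appears in the paper.
_PATTERNS = [
    re.compile(r"probability\s+of\s+vulnerability[^0-9\n]{0,20}?(\d+(?:\.\d+)?)\s*(%?)",
               re.IGNORECASE),
    re.compile(r"vulnerability\s+probability[^0-9\n]{0,20}?(\d+(?:\.\d+)?)\s*(%?)",
               re.IGNORECASE),
]


def extract_probability(response: str) -> Optional[float]:
    """Return the reported probability in [0, 1], or None if no pattern matches."""
    for pattern in _PATTERNS:
        match = pattern.search(response)
        if match is None:
            continue
        value = float(match.group(1))
        # Assumption: an explicit '%' or a value above 1 denotes a percentage.
        if match.group(2) == "%" or value > 1.0:
            value /= 100.0
        if 0.0 <= value <= 1.0:
            return value
    return None


if __name__ == "__main__":
    print(extract_probability("Probability of Vulnerability: 0.85"))
    print(extract_probability("**Probability of Vulnerability**: 85%"))
    print(extract_probability("The code looks safe."))
```

Trying several patterns in order mirrors the stated goal of tolerating diverse output formats; responses that match none of them return `None` and can be flagged for manual review.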

Finally, a Comparative Evaluation is conducted across all models based on key detection metrics. This stage not only benchmarks performance differences but also analyzes the impact of reasoning capabilities through detailed case studies. Furthermore, it discusses the potential limitations of current LLMs in software vulnerability detection.
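As an illustration of the detection metrics used in such a comparison, the sketch below computes accuracy, precision, recall, and F1-score from binary labels using their standard definitions. The function name `detection_metrics` and the convention that 1 denotes a vulnerable sample are assumptions; this section does not state how the metrics are implemented.

```python
from typing import Dict, Sequence


def detection_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute standard binary classification metrics (1 = vulnerable, 0 = non-vulnerable)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)

    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


if __name__ == "__main__":
    print(detection_metrics([1, 1, 1, 0, 0], [1, 1, 0, 0, 1]))
```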

3.2. Data Collection

To construct a representative and well-structured dataset for evaluating the vulnerability detection capabilities of LLMs, this study leverages annotated test cases retrieved from SARD. Specifically, the experiment focuses on the 2024 CWE Top 25 Most Dangerous Software Weaknesses, as published annually by the CWE project on its official platform. According to the latest SARD technical report [47], as of October 2024, the dataset contains over 150 documented vulnerability types and more than 450,000 labeled test samples.

A significant portion of these vulnerabilities appears in C/C++ programs, primarily due to the languages’ low-level memory manipulation features, such as pointer arithmetic and manual memory management. These features substantially increase the risk of introducing critical security flaws. Accordingly, this study adopts C/C++ as the primary target language. However, the proposed methodology remains language-agnostic, as it leverages the reasoning and representation power of LLMs to capture universal patterns in code semantics and control flow. This generalization capacity allows the approach to be extended to other programming languages, including Java, Python, and HTML.

Using SARD’s structured query interface, all available test cases associated with the selected Top 25 CWE categories in C/C++ are retrieved. The detailed vulnerability categories and their sample statistics are listed in Table 1.

Table 1. Top 25 C/C++ Vulnerability Dataset Summary.

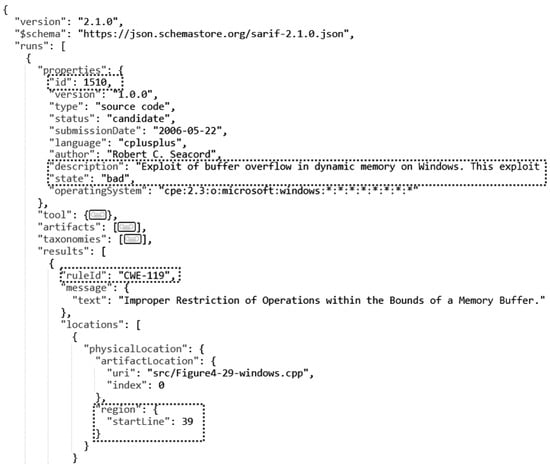

Each SARD test case comprises several components, including source code files containing vulnerabilities, supplementary test scripts, and a Static Analysis Results Interchange Format (SARIF) property file. SARIF, defined by the Organization for the Advancement of Structured Information Standards (OASIS), is a standardized format that promotes the interoperability of static analysis tools and streamlines the sharing and interpretation of vulnerability data [48]. Each SARIF file contains structured metadata such as vulnerability type (ruleId), state (bad or good), programming language, upload timestamp, and the precise location of vulnerable code segments. An excerpt from a SARIF file corresponding to a CWE-119 test sample is shown in Figure 2.

Figure 2. SARIF Property File Segment from CWE-119 Sample.

In the example provided, the ruleId indicates a “buffer overflow” issue categorized under CWE-119. The state field is marked as “bad”, signaling that the file contains a known vulnerability. The vulnerability location is pinpointed by the locations field, which shows that the vulnerable statement appears at line 39 in the file “src/Figure4-29-windows.cpp”.
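Reading these fields programmatically might look like the sketch below. The embedded document is a hand-written, hypothetical fragment that follows the general SARIF 2.1.0 layout (`runs`, `results`, `locations`); placing `state` under the run-level `properties` object is an assumption about how SARD stores it, and the function name `extract_findings` is illustrative.

```python
import json
from typing import Dict, List

# Hypothetical SARIF fragment modeled on the fields described in the text.
SAMPLE_SARIF = """
{
  "version": "2.1.0",
  "runs": [
    {
      "properties": {"state": "bad", "language": "cplusplus"},
      "results": [
        {
          "ruleId": "CWE-119",
          "locations": [
            {
              "physicalLocation": {
                "artifactLocation": {"uri": "src/Figure4-29-windows.cpp"},
                "region": {"startLine": 39}
              }
            }
          ]
        }
      ]
    }
  ]
}
"""


def extract_findings(sarif_text: str) -> List[Dict[str, object]]:
    """Flatten a SARIF document into one record per reported location."""
    document = json.loads(sarif_text)
    findings: List[Dict[str, object]] = []
    for run in document.get("runs", []):
        # Assumption: the good/bad state is stored in the run's property bag.
        state = run.get("properties", {}).get("state")
        for result in run.get("results", []):
            for location in result.get("locations", []):
                physical = location.get("physicalLocation", {})
                findings.append({
                    "rule_id": result.get("ruleId"),
                    "state": state,
                    "file": physical.get("artifactLocation", {}).get("uri"),
                    "line": physical.get("region", {}).get("startLine"),
                })
    return findings


if __name__ == "__main__":
    for finding in extract_findings(SAMPLE_SARIF):
        print(finding)
```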

Notably, a single SARIF file may reference multiple vulnerable files and multiple vulnerabilities within each file, making it a rich and informative source for detection evaluation. However, as summarized in Table 1, the number of available test cases varies substantially across CWE categories, ranging from fewer than 10 to several thousand per category.

To ensure balanced evaluation while maintaining computational feasibility, the following sampling strategy is applied: for vulnerability types with fewer than T = 120 samples, all available test cases are retained. For those exceeding T samples, T+ representative cases are randomly sampled to preserve diversity while limiting evaluation cost.
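A minimal sketch of this threshold-based sampling is given below. It assumes that exactly T cases are drawn for categories above the threshold, which is one reading of the text; the function name `sample_per_cwe`, the dictionary layout, and the fixed random seed are illustrative assumptions.

```python
import random
from typing import Dict, List


def sample_per_cwe(cases_by_cwe: Dict[str, List[str]],
                   threshold: int = 120,
                   seed: int = 0) -> Dict[str, List[str]]:
    """Keep small categories whole; randomly subsample large ones to `threshold` cases."""
    rng = random.Random(seed)  # Fixed seed (an assumption) keeps the selection reproducible.
    sampled: Dict[str, List[str]] = {}
    for cwe, cases in cases_by_cwe.items():
        if len(cases) <= threshold:
            sampled[cwe] = list(cases)
        else:
            # Sampling without replacement preserves distinct test cases.
            sampled[cwe] = rng.sample(cases, threshold)
    return sampled


if __name__ == "__main__":
    dataset = {
        "CWE-119": [f"case_{i}" for i in range(500)],
        "CWE-476": [f"case_{i}" for i in range(7)],
    }
    for cwe, cases in sample_per_cwe(dataset).items():
        print(cwe, len(cases))
```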

Additionally, SARD includes relatively few non-vulnerable (“good” state) samples. To mitigate class imbalance, all good-state test cases are included. The final evaluation dataset comprises both vulnerable and non-vulnerable samples, with their distribution detailed in Table 2.

Table 2. Evaluation Sample Distribution.

This curated dataset provides a diverse, well-labeled, and realistic benchmark to rigorously evaluate the performance of various LLMs in vulnerability detection tasks.

3.3. Data Preprocessing

To ensure a fair and reliable evaluation of LLMs in vulnerability detection, a comprehensive data preprocessing pipeline is applied prior to inference. This pipeline is designed to eliminate potential biases and ensure that models analyze only the intrinsic characteristics of source code, without relying on extraneous hints.

The main actions of Data Preprocessing are presented using Algorithm 1.

| Algorithm 1: Pseudocode for the Data Preprocessing Stage |
| --- |
| Input: SARD, the source code dataset with SARIF metadata |
| Output: CleanedDataset, the clean dataset without biases |
| begin |
| 1. NonEssentialFiles in SARD: io.c, std_testcase.h, and std_testcase_io.h |
| 2. TargetFiles = FilterNonEssentialFiles(SARD) |
| 3. LabeledFiles = FilterNonAnnotatedFiles(TargetFiles) |
| 4. ProcessedFiles = RemoveComments(LabeledFiles) |
| 5. CleanedDataset = AnonymizeFilenames(ProcessedFiles) |
| return CleanedDataset (*.code) |
| end |

Algorithm 1 receives the SARD dataset with SARIF metadata as input and returns the CleanedDataset without biases as output. Each test case retrieved from SARD includes multiple C/C++ source files with .c or .cpp extensions. However, not all of these files contain meaningful vulnerability information. In particular, many files serve as auxiliary components, such as utility functions or standard testing interfaces, which are reused across multiple test cases. A typical example is the testcasesupport directory, which includes files like io.c, std_testcase.h, and std_testcase_io.h. These files are defined as NonEssentialFiles in Line 1 of Algorithm 1. They are shared across different CWE categories and do not contribute to the unique vulnerability logic of individual test cases. In Line 2, all non-essential files are programmatically filtered out, and the remaining files are returned as TargetFiles.

Next, only source files that are directly annotated in the SARIF metadata as containing vulnerabilities are retained. This avoids sample contamination and reduces noise in model evaluation. Accordingly, Line 3 filters out non-annotated files and returns LabeledFiles.
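Lines 2 and 3 of Algorithm 1 can be sketched as two small filters. The function names follow the pseudocode, but their signatures, the use of relative path strings, and the representation of SARIF annotations as a set of URIs are assumptions made for illustration.

```python
from pathlib import PurePosixPath
from typing import Iterable, List, Set

# Shared support files named in the text (Line 1 of Algorithm 1).
NON_ESSENTIAL_FILES = {"io.c", "std_testcase.h", "std_testcase_io.h"}


def filter_non_essential_files(paths: Iterable[str]) -> List[str]:
    """Line 2: drop shared support files that carry no test-specific logic."""
    return [p for p in paths if PurePosixPath(p).name not in NON_ESSENTIAL_FILES]


def filter_non_annotated_files(paths: Iterable[str], annotated_uris: Set[str]) -> List[str]:
    """Line 3: keep only files that the SARIF metadata references directly."""
    return [p for p in paths if p in annotated_uris]


if __name__ == "__main__":
    files = [
        "testcasesupport/io.c",
        "testcasesupport/std_testcase.h",
        "src/example_a.c",
        "src/example_b.c",
    ]
    target_files = filter_non_essential_files(files)
    labeled_files = filter_non_annotated_files(target_files, {"src/example_a.c"})
    print(target_files)
    print(labeled_files)
```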

Then, the issue of code comments is addressed. Comments, while sometimes beneficial, can also introduce biases. They may provide direct cues about the presence of vulnerabilities or even describe the bug itself. These clues can inadvertently assist LLMs in identifying vulnerabilities, thus skewing performance evaluations. Additionally, high-quality comments are often missing in practice, especially in buggy or legacy code, so excluding them better simulates real-world conditions. All inline (//) and block (/* */) comments are therefore removed using custom scripts. In addition, unnecessary line breaks and formatting are normalized so that the LLMs are presented with pure source code only, placing all models on an equal basis for comparison. This step corresponds to Line 4, which returns ProcessedFiles.
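One way to implement comment stripping is a single regular expression that matches comments and string or character literals together, so that comment markers inside literals are left untouched. The sketch below is an illustrative approximation rather than the authors' custom script: it does not handle backslash line continuations inside `//` comments or C++ raw string literals, and the blank-line normalization rule is an assumption.

```python
import re

# Match comments and literals in one pass; literals are matched only so that
# "//" or "/*" appearing inside them is not mistaken for a comment.
_TOKEN = re.compile(
    r"//[^\n]*"             # inline comment
    r"|/\*.*?\*/"           # block comment (may span lines)
    r'|"(?:\\.|[^"\\])*"'   # string literal
    r"|'(?:\\.|[^'\\])*'",  # character literal
    re.DOTALL,
)


def remove_comments(source: str) -> str:
    """Strip C/C++ comments, then drop trailing whitespace and blank lines."""
    def _replace(match: "re.Match[str]") -> str:
        token = match.group(0)
        # Comments start with '/', literals start with a quote character.
        return " " if token.startswith("/") else token

    stripped = _TOKEN.sub(_replace, source)
    lines = [line.rstrip() for line in stripped.splitlines()]
    return "\n".join(line for line in lines if line.strip())


if __name__ == "__main__":
    code = (
        "int main() {\n"
        "    // overflow here\n"
        '    char *s = "http://x"; /* block\n'
        "    comment */\n"
        "    return 0;\n"
        "}\n"
    )
    print(remove_comments(code))
```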

Another subtle yet critical preprocessing step, performed in Line 5, is filename anonymization. To evaluate the intrinsic code comprehension capabilities of each model, any auxiliary signals that might artificially inflate performance are anonymized. In the SARD dataset, filenames often embed semantic information, such as the CWE identifier (e.g., CWE119_BufferOverflow_bad.cpp) or the vulnerability state (e.g., goodB2G, bad, goodG2B). Prior studies have shown that such metadata can strongly influence the predictions of LLMs, especially those with prompt-sensitivity or contextual learning capabilities. All filenames are replaced with randomized hash-based identifiers, ensuring that no external semantic hints (such as CWE type or vulnerability state) are leaked into the inference process. This ensures that LLM predictions are grounded solely in code semantics, not in file metadata. Line 5 then returns CleanedDataset, in which each file carries a dedicated extension (*.code).
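A possible realization of the randomized hash-based identifiers is sketched below. The choice of SHA-256, the random salt, the 16-character truncation, and the function name `anonymize_filenames` are assumptions; the text specifies only that identifiers are randomized, hash-based, and carry the `.code` extension.

```python
import hashlib
import secrets
from typing import Dict, Iterable, Optional


def anonymize_filenames(names: Iterable[str], salt: Optional[bytes] = None) -> Dict[str, str]:
    """Map each original filename to an opaque '<hash>.code' identifier."""
    # A random salt (assumption) prevents recovering names by hashing known SARD filenames.
    salt = salt if salt is not None else secrets.token_bytes(16)
    mapping: Dict[str, str] = {}
    for name in names:
        digest = hashlib.sha256(salt + name.encode("utf-8")).hexdigest()[:16]
        mapping[name] = f"{digest}.code"
    return mapping


if __name__ == "__main__":
    originals = ["CWE119_BufferOverflow_bad.cpp", "CWE119_BufferOverflow_goodG2B.cpp"]
    for original, anonymous in anonymize_filenames(originals).items():
        print(original, "->", anonymous)
```

The mapping from original to anonymized names should be kept outside the model's input so that ground-truth labels can still be joined to predictions during evaluation.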

After applying these steps in Algorithm 1, a clean, balanced, and bias-minimized dataset is created, enabling an objective evaluation of LLMs’ performance in vulnerability detection. It ensures that the models must rely on their understanding of code syntax and semantics, rather than auxiliary signals, to infer the presence or absence of vulnerabilities.

3.4. Prompting Strategies

Prompt engineering is a critical component in effectively leveraging LLMs for software vulnerability detection. A well-crafted prompt not only helps guide the model toward accurate and interpretable results but also ensures fairness when comparing models with different architectural characteristics.

Although the selected LLMs vary significantly in their reasoning abilities, ranging from non-reasoning models like GPT-4o-Mini, Claude-3.5-Haiku, and DeepSeek-V3 to reasoning models such as O3-Mini and DeepSeek-R1, a unified prompting strategy is adopted during inference. This approach ensures a fair and consistent evaluation protocol across all models. To accommodate the diverse capabilities of these models, the unified prompt is carefully designed to serve both groups.

The main actions are presented as a function DesignUnifiedPromptStrategy() using Algorithm 2 in pseudocode form for the Prompt Strategy stage.

Algorithm 2: Pseudocode for the Prompt Strategy Stage

    Function DesignUnifiedPromptStrategy()
    Output: UnifiedPrompt, the unified prompting strategy for all models
    begin
        1. // Role assignment strategy based on [39,40]
           RoleDescription ← DefineRoleAssignment()
        2. TaskInstruction ← DefineTask()
        3. BasePrompt ← RoleDescription + TaskInstruction
        4. // COT prompting strategy based on [49]
           ReasoningGuidance ← AddSimpleStepGuidance()
        5. // Standardized output requirement
           OutputFormat ← StandardizeOutput()
        6. // Merge all components into a unified prompt (same for all models)
           UnifiedPrompt ← Concatenate(BasePrompt, ReasoningGuidance, OutputFormat)
        return UnifiedPrompt
    end

Algorithm 2 creates a single unified prompt applicable to all models, integrating elements for both reasoning and non-reasoning models based on prior prompt engineering research. Lines 1 and 2 aim to reduce ambiguity and provide precise, role-based instructions that direct the focus of non-reasoning models. Building on prior research [39,40] in prompt engineering, a role-assignment strategy is designed in which the LLM is asked to assume the role of a highly experienced expert in code vulnerability analysis. In Line 3, this role is concatenated with a clearly defined task instruction, for example, “You are given a C/C++ source code file. Your task is to determine whether it contains any vulnerabilities”.

For reasoning models, minimal high-level guidance is often sufficient, as these models tend to internally generate a structured thought process before responding. This concept is supported by Wang’s research [49] on Chain-Of-Thought (COT) prompting, which demonstrates that LLMs can effectively perform multi-step reasoning when provided with minimal prompts. COT prompting improves logical consistency and performance on complex decision-making tasks. In Line 4, simple phrases like “Please provide your analysis step by step” are included to help the model break down the vulnerability detection process into logical stages. For reasoning models (e.g., O3-Mini, DeepSeek-R1), this guidance encourages explicit step-by-step reasoning. For non-reasoning models (e.g., Claude-3.5-Haiku, GPT-4o-Mini, DeepSeek-V3), whose reasoning abilities are more constrained, the guidance is effectively optional, and they may skip the structured analysis.

Moreover, in Line 5, the prompt enforces a standardized output format by requesting a probabilistic estimate of the code’s vulnerability status. This enables quantitative evaluation across different models and supports downstream statistical analysis. To this end, the following instruction is included: “Additionally, provide a total probability estimate of the code containing vulnerabilities. If no vulnerabilities are found, the probability should be 0. The probability is a floating-point value between 0 and 1”.

The final unified prompt, concatenated in Line 6 for all inference tasks, is as follows:

“Assume you are a highly experienced expert in code vulnerability analysis. You are given a C/C++ source code file. Your task is to determine whether it contains any vulnerabilities. Additionally, provide a total probability estimate of the code containing vulnerabilities. If no vulnerabilities are found, the probability should be 0. The probability is a floating-point value between 0 and 1. Please provide your analysis step by step.”
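The assembly of the unified prompt can be sketched in a few lines. This is an illustrative sketch, not the authors' implementation: the constant names, the single-space joining, and the `build_messages` helper (including the choice to append the source code after a blank line in a single user message) are assumptions. The components are joined in the order in which they appear in the final prompt text above.

```python
# Prompt components, quoted from the final unified prompt.
ROLE_DESCRIPTION = (
    "Assume you are a highly experienced expert in code vulnerability analysis."
)
TASK_INSTRUCTION = (
    "You are given a C/C++ source code file. "
    "Your task is to determine whether it contains any vulnerabilities."
)
OUTPUT_FORMAT = (
    "Additionally, provide a total probability estimate of the code containing "
    "vulnerabilities. If no vulnerabilities are found, the probability should be 0. "
    "The probability is a floating-point value between 0 and 1."
)
REASONING_GUIDANCE = "Please provide your analysis step by step."


def design_unified_prompt_strategy():
    """Concatenate role, task, output format, and step guidance into one prompt."""
    base_prompt = " ".join([ROLE_DESCRIPTION, TASK_INSTRUCTION])
    return " ".join([base_prompt, OUTPUT_FORMAT, REASONING_GUIDANCE])


def build_messages(source_code):
    """Hypothetical helper: wrap the prompt and one code sample as a chat message."""
    content = design_unified_prompt_strategy() + "\n\n" + source_code
    return [{"role": "user", "content": content}]


if __name__ == "__main__":
    print(design_unified_prompt_strategy())
```

Because the same string is produced for every model, any difference in behavior can be attributed to the model rather than to prompt variation.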

This prompt ensures that non-reasoning models receive sufficient guidance to stay on task while reasoning models are encouraged to apply internal thought processes before responding. By enforcing a uniform prompt and output structure, a task-aware yet model-agnostic evaluation framework is enabled that more accurately reflects the true vulnerability detection capabilities of modern LLMs.

4. Experiments

4.1. Comparative Methods

To assess the effectiveness of the DeepSeek-R1 reasoning model in software vulnerability detection, a comparative analysis involving five representative LLMs is conducted. These models are selected to cover a diverse range of capabilities in terms of reasoning ability, efficiency, and general language understanding. The compared models are summarized as follows:

- Claude-3.5-Haiku: Claude-3.5-Haiku is optimized for high-throughput applications, offering extremely fast response times and low computational cost. Its advantages lie in scenarios that demand rapid, large-scale inference with minimal latency. However, its reasoning capabilities are relatively shallow, and it currently only supports textual inputs, limiting its effectiveness on tasks that require multi-step logical inference.

- GPT-4o-Mini: GPT-4o-Mini is a lightweight variant of OpenAI’s multimodal model family, emphasizing low-cost deployment and efficient multimodal handling. It demonstrates strong performance in general-purpose natural language tasks. Nonetheless, its performance degrades on lengthy or structurally complex code, and it struggles with maintaining reasoning consistency over long contexts.

- O3-Mini: O3-Mini is optimized for fast response and efficient reasoning, exhibiting strong performance in STEM-related tasks, especially those involving structured problem solving. It balances speed and reasoning quality well, making it suitable for tasks requiring intermediate logical depth.

- DeepSeek-V3: As a high-performance open-source model, DeepSeek-V3 stands out in code generation, mathematical reasoning, and programming-oriented tasks. It is particularly effective in logic-intensive scenarios and can produce high-quality code snippets. Despite its strength in text-based tasks, its capabilities in multimodal reasoning and language adaptability remain limited.

- DeepSeek-R1: DeepSeek-R1 leverages reinforcement learning and advanced optimization techniques to enhance its reasoning capabilities. It excels in mathematical reasoning, program analysis, and complex logic tasks. Although it currently lacks robust multimodal processing and may occasionally mix Chinese and English in its outputs, DeepSeek-R1 is well-suited for high-precision tasks requiring structured analytical thinking.

4.2. Evaluation Metrics

To comprehensively assess the performance of the API-generated outputs, a set of evaluation metrics is employed, including Precision, Recall, Accuracy, F1-Score, Cohen’s Kappa, and Matthews Correlation Coefficient (MCC). Precision and Recall are defined as follows:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN} \tag{1}$$

In Equation (1), TP denotes correctly identified vulnerable instances, while TN refers to correctly classified non-vulnerable instances. An FP occurs when a non-vulnerable case is misclassified as vulnerable, and an FN arises when a vulnerable instance is incorrectly predicted as non-vulnerable. Precision quantifies the reliability of positive predictions by evaluating the proportion of correctly identified vulnerabilities among all predicted positives. Recall, also known as the True Positive Rate (TPR), measures the model’s ability to correctly detect actual vulnerabilities.

Based on these scalar metrics, Receiver Operating Characteristic (ROC) and Precision-Recall (PR) curves are incorporated to visualize and analyze the model’s performance. The ROC curve plots TPR against False Positive Rate (FPR) to illustrate the balance between sensitivity and specificity. A model with strong discriminative power produces a ROC curve that quickly approaches the top-left corner. The Area Under the ROC Curve (AUC-ROC) quantifies this performance; values closer to 1 indicate excellent classification ability, while values near 0.5 imply random guessing.

The PR curve, on the other hand, focuses specifically on the trade-off between Precision and Recall. This makes it particularly insightful in scenarios involving class imbalance, such as vulnerability detection, where vulnerable cases are rare. Unlike the ROC curve, the PR curve excludes true negatives and therefore provides a more focused evaluation of a model’s effectiveness in detecting positive cases. The area under the PR curve, referred to as mean Average Precision (mAP), serves as a comprehensive summary metric, where higher mAP values indicate stronger capability in identifying true vulnerabilities with minimal false alarms.
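As a rough illustration of how the two curve summaries can be computed from per-sample probability estimates, the sketch below implements the area under the ROC curve through its pairwise-ranking interpretation and the step-wise area under the PR curve (average precision, which the text refers to as mAP). The function names and the toy data are assumptions made for this example; the study's own tooling is not specified.

```python
def roc_auc(y_true, scores):
    """AUC-ROC as the probability that a random positive outranks a random negative.

    Ties between a positive and a negative score count as half a win.
    """
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(y_true, scores):
    """Step-wise area under the PR curve, evaluated at each distinct threshold."""
    n_pos = sum(y_true)
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    ap, tp, seen, prev_recall = 0.0, 0, 0, 0.0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Consume every sample sharing this score so ties form one threshold.
        while i < len(ranked) and ranked[i][0] == threshold:
            tp += ranked[i][1]
            seen += 1
            i += 1
        recall = tp / n_pos
        precision = tp / seen
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


if __name__ == "__main__":
    labels = [1, 1, 0, 1, 0]             # toy ground truth (1 = vulnerable)
    probs = [0.9, 0.8, 0.7, 0.6, 0.1]    # toy probability estimates
    print(roc_auc(labels, probs), average_precision(labels, probs))
```

Grouping tied scores into a single threshold matters here, since LLM-reported probabilities tend to cluster on a few round values.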

F1-Score, defined as the harmonic mean of Precision and Recall, offers a balanced metric, especially useful when dealing with imbalanced class distributions, where managing the trade-off between false positives and false negatives is critical. Accuracy provides a general indicator of performance by measuring the proportion of correctly classified instances over the total number of predictions. These metrics are formally defined as follows:

$$\text{F1-Score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{2}$$

Cohen’s Kappa is a statistical measure that evaluates the level of agreement between predicted and true labels, while adjusting for the agreement that could occur by chance. This makes it particularly robust in scenarios involving class imbalance, where conventional Accuracy may overestimate performance. In contrast to raw Accuracy, Kappa offers a more calibrated assessment of the model’s true predictive power. It is defined as follows:

$$\kappa = \frac{p_o - p_e}{1 - p_e} \tag{3}$$

In Equation (3), $p_o$ represents the observed agreement, which is the proportion of samples where the prediction matches the ground truth, while $p_e$ indicates the expected agreement, which is the probability of random agreement based on the marginal probabilities of each class. The Kappa score ranges from −1 to 1, where 1 indicates perfect agreement, 0 corresponds to agreement by chance, and negative values reflect systematic disagreement. A higher Kappa value suggests stronger alignment between model predictions and ground truth labels beyond random expectations.

The Matthews Correlation Coefficient (MCC) offers a comprehensive metric by incorporating all four confusion matrix elements, TP, TN, FP, and FN, into a single correlation-based score. Unlike metrics such as Accuracy or F1-Score, which may be influenced by class imbalance, MCC remains balanced and reliable even under highly disproportionate class distributions. MCC is defined as follows:

$$\text{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{4}$$

MCC values range from −1 to 1, where 1 indicates perfect prediction, 0 denotes no better than random guessing, and −1 reflects complete disagreement. This metric is particularly advantageous for binary classification tasks in imbalanced datasets, as it provides a balanced evaluation of all types of classification errors.
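The scalar metrics above can all be derived from the four confusion-matrix counts. The following sketch uses the standard textbook definitions; the function name `classification_metrics` and the toy counts are assumptions made for illustration and are not results from the study.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Compute Precision, Recall, F1, Accuracy, Cohen's Kappa, and MCC."""
    total = tp + tn + fp + fn
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    accuracy = (tp + tn) / total

    # Cohen's Kappa: observed agreement corrected for chance agreement,
    # where chance agreement comes from the marginal class frequencies.
    p_o = accuracy
    p_e = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / (total ** 2)
    kappa = (p_o - p_e) / (1 - p_e) if p_e != 1 else 0.0

    # MCC: correlation between predicted and true binary labels.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0

    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "accuracy": accuracy,
        "kappa": kappa,
        "mcc": mcc,
    }


if __name__ == "__main__":
    # Toy counts, not taken from the paper.
    for name, value in classification_metrics(tp=40, tn=45, fp=5, fn=10).items():
        print(f"{name}: {value:.4f}")
```

The zero-denominator guards return 0.0 by convention; other conventions (for example, leaving the value undefined) are equally defensible.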

To assess the statistical robustness of these performance metrics, 95% Confidence Intervals (CIs) are computed using bootstrap resampling for F1-Score, Accuracy, Kappa, and MCC. These intervals estimate the range within which the true metric value is expected to lie with high probability. Specifically, bootstrap sampling with 1000 iterations is performed on the evaluation set to construct the CIs and to determine whether observed performance differences between models are statistically significant or attributable to random variation.
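A bootstrap confidence interval of this kind can be sketched as below. The text specifies 1000 iterations and a 95% level; the percentile method, the fixed random seed, and the function names `bootstrap_ci` and `accuracy` are assumptions made for this example, since the exact resampling variant is not stated.

```python
import random


def accuracy(y_true, y_pred):
    """Fraction of predictions that match the ground truth."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def bootstrap_ci(y_true, y_pred, metric, n_iterations=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for any metric(y_true, y_pred) -> float."""
    rng = random.Random(seed)
    n = len(y_true)
    scores = []
    for _ in range(n_iterations):
        # Resample evaluation samples with replacement, keeping pairs aligned.
        idx = [rng.randrange(n) for _ in range(n)]
        scores.append(metric([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    scores.sort()
    lower = scores[int((alpha / 2) * n_iterations)]
    upper = scores[min(n_iterations - 1, int((1 - alpha / 2) * n_iterations))]
    return lower, upper


if __name__ == "__main__":
    # Toy labels and predictions (90% accuracy), not data from the study.
    truth = [1] * 100 + [0] * 100
    preds = [1] * 90 + [0] * 10 + [0] * 90 + [1] * 10
    print(bootstrap_ci(truth, preds, accuracy))
```

Any of the other metrics can be passed in place of `accuracy`, provided it accepts paired label and prediction lists.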

4.3. Experimental Results

4.3.1. Performance Comparison

To ensure consistency across all evaluations, OpenAI’s unified API framework is used to interface with each selected LLM, standardizing input parameters and output formatting. For every code sample, the model-generated answer is stored as an answer file, and if applicable, the corresponding reasoning process is saved separately as a reasoning file. Among all tested models, only DeepSeek-R1 provides a structured, step-by-step reasoning output. Hence, in the subsequent discussions, the term reasoning process specifically refers to the output generated by DeepSeek-R1.

A key consideration when invoking LLMs through OpenAI’s API involves understanding constraints related to context length, maximum tokens, and response limits. These limitations vary across models. For instance, GPT-4o-Mini enforces a strict cap on the maximum context length, which, if exceeded, results in an error due to surpassing token constraints.

All samples are processed via OpenAI’s API with a selected LLM. Each model independently generates its answer file, from which the relevant vulnerability indicators are extracted using the predefined keywords. A parsing script is employed to identify and compute probability estimates for vulnerability detection across all samples. The comparative performance metrics of the models are summarized in Table 3.
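The parsing step described above might look like the following sketch. The actual keywords and parsing rules used in the study are not given, so the regular expression, the 80-character search window, the choice of the last match, and the names `extract_probability` and `to_label` are all assumptions; the 0.5 decision threshold matches the threshold mentioned later in the category-wise analysis.

```python
import re

# Hypothetical pattern: the keyword "probability" followed, within 80 characters
# on the same line, by a standalone number that starts with 0 or 1.
_PROB_PATTERN = re.compile(
    r"probability.{0,80}?(?<![\d.])([01](?:\.\d+)?)(?!\d)",
    re.IGNORECASE,
)


def extract_probability(answer_text):
    """Return the last probability mentioned in an answer, or None if absent."""
    matches = _PROB_PATTERN.findall(answer_text)
    if not matches:
        return None
    value = float(matches[-1])
    return value if 0.0 <= value <= 1.0 else None


def to_label(probability, threshold=0.5):
    """Convert a probability estimate into a binary prediction (1 = vulnerable)."""
    return int(probability >= threshold)


if __name__ == "__main__":
    sample_answer = "Step 3: the copy is unchecked. Overall probability estimate: 0.85"
    prob = extract_probability(sample_answer)
    print(prob, to_label(prob))
```

Answers for which no probability can be recovered return `None` and would need a separate policy, such as manual review or exclusion.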

Table 3.

Comparative performance metrics across LLMs.

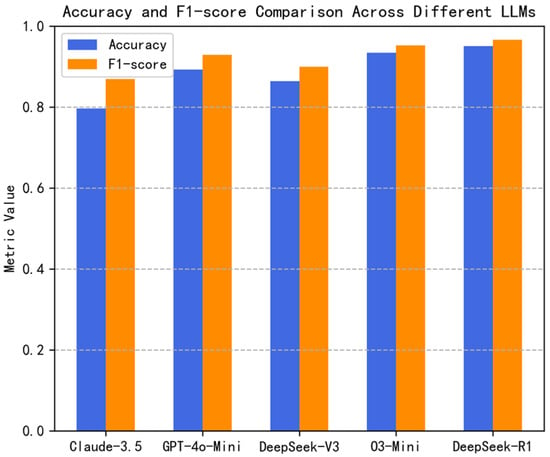

As depicted in Figure 3, DeepSeek-R1 demonstrates the highest Accuracy (0.9507) and F1-score (0.9659), underscoring its superior performance in identifying software vulnerabilities with balanced precision and recall. Close behind, O3-Mini attains an Accuracy of 0.9338 and an F1-score of 0.9524, indicating a similar level of effectiveness. In contrast, Claude-3.5-Haiku lags behind other models in both metrics, suggesting a comparatively weaker detection capability. GPT-4o-Mini and DeepSeek-V3 perform moderately well, though they exhibit a slight drop in overall reliability.

Figure 3.

Accuracy and F1-Score Comparison across different LLMs.

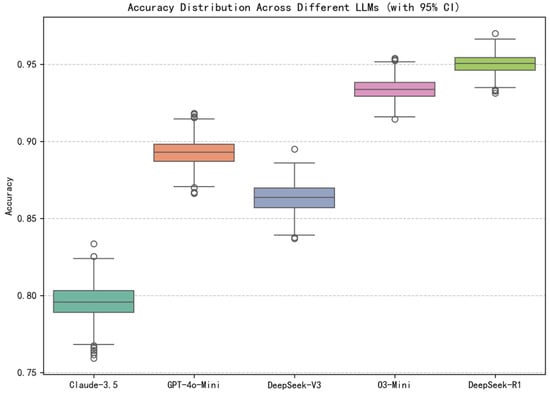

The 95% confidence intervals derived from bootstrap resampling for Accuracy and F1-Score are depicted in Figure 4 and Figure 5. These intervals help determine whether performance differences across models are statistically meaningful or attributable to random fluctuations in the evaluation. Among the evaluated models, DeepSeek-R1 achieves the highest Accuracy and F1-Score, accompanied by tight 95% confidence intervals of (0.9395, 0.9619) for Accuracy and (0.9575, 0.9735) for F1-Score, indicating high stability and robustness. O3-Mini also achieves strong results, yet its confidence intervals of (0.9211, 0.9464) for Accuracy and (0.9429, 0.9616) for F1-Score only partially overlap with those of DeepSeek-R1.

Figure 4.

Accuracy Distribution across different LLMs.

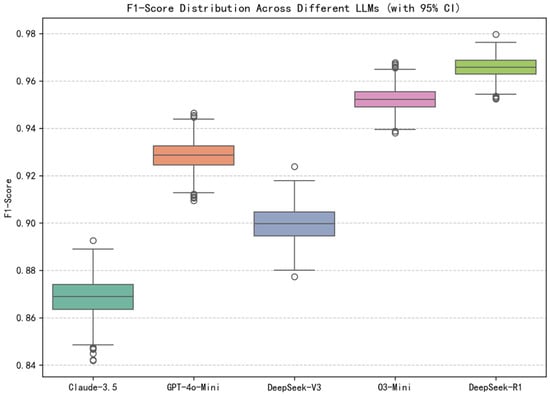

Figure 5.

F1-Score Distribution across different LLMs.

Meanwhile, GPT-4o-Mini and DeepSeek-V3 exhibit broader and more overlapping intervals (e.g., GPT-4o-Mini’s Accuracy CI of (0.8759, 0.9086) and F1-Score CI of (0.9167, 0.9394)), making their margins of difference less decisive than those of the two reasoning models above. Claude-3.5-Haiku, the weakest among the five, shows both the lowest central scores and the widest confidence bounds, (0.7749, 0.8165) for Accuracy and (0.8531, 0.8831) for F1-Score, indicating greater variability and less consistent performance.

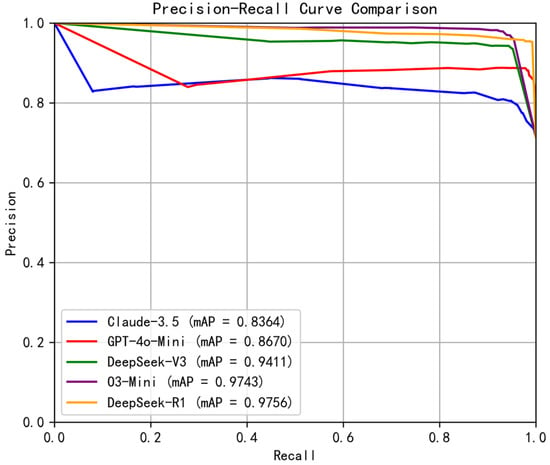

The PR curve offers insight into the trade-off between precision and recall and the overall effectiveness beyond a single threshold point. It illustrates how model performance varies across a range of threshold values. As shown in Figure 6, DeepSeek-R1 achieves the highest mAP at 0.9756, with O3-Mini closely following at 0.9743. These results reinforce the effectiveness of reasoning models in accurately capturing vulnerability patterns. DeepSeek-V3 also performs well with mAP at 0.9411, indicating a strong balance between reducing false positives and maintaining high recall. On the other hand, GPT-4o-Mini and Claude-3.5-Haiku register lower mAP scores of 0.8670 and 0.8364, respectively, suggesting diminished efficacy in distinguishing true vulnerabilities.

Figure 6.

Precision-Recall Curve Comparison across different LLMs.

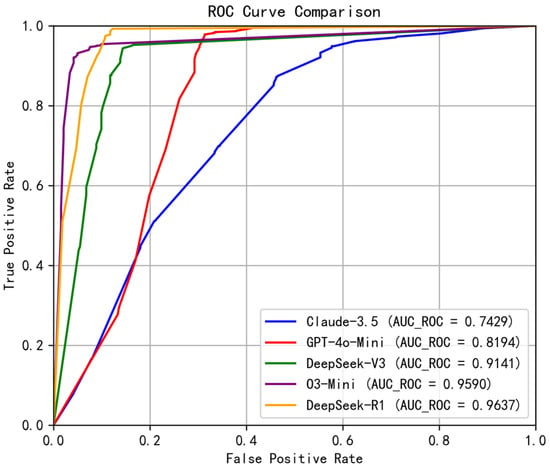

The ROC curve evaluates the trade-off between the true positive rate (TPR) and false positive rate (FPR) and visualizes how model performance varies across different classification thresholds. As illustrated in Figure 7, DeepSeek-R1 again leads with an AUC-ROC of 0.9637, followed by O3-Mini at 0.9590, highlighting their robustness in binary classification scenarios. DeepSeek-V3 also demonstrates strong discriminatory power (AUC-ROC: 0.9141), while GPT-4o-Mini and Claude-3.5-Haiku exhibit lower scores (0.8194 and 0.7429, respectively), indicating reduced classification confidence.

Figure 7.

ROC Curve Comparison across different LLMs.

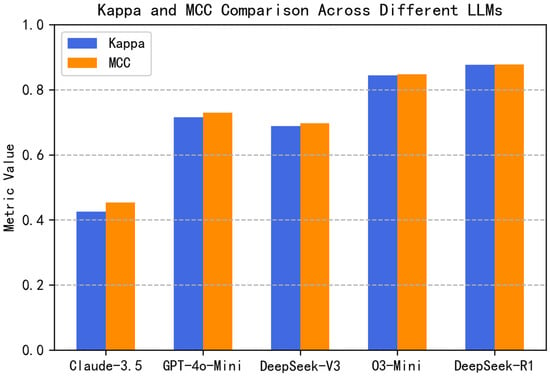

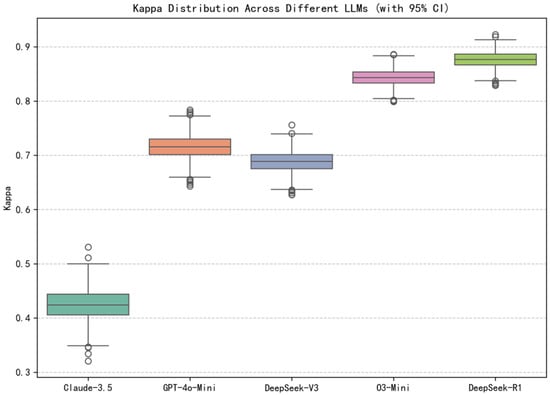

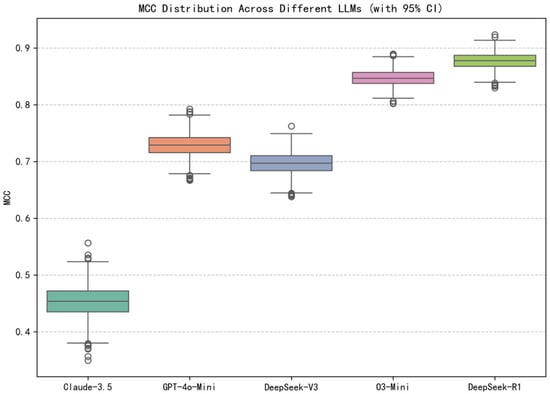

As shown in Figure 8, the reasoning-aware model DeepSeek-R1 achieves the highest agreement score with Kappa = 0.8770 and MCC = 0.8779, indicating strong alignment between predicted and actual labels even under class imbalance. O3-Mini also performs competitively with Kappa = 0.8438 and MCC = 0.8472, suggesting high overall quality in its predictions. Models like GPT-4o-Mini and DeepSeek-V3 show moderate agreement levels with Kappa from 0.69 to 0.72 and MCC from 0.70 to 0.73, while Claude-3.5 lags behind with Kappa = 0.4250 and MCC = 0.4534, reflecting lower consistency and less robust classification performance.

Figure 8.

Kappa and MCC Comparison across different LLMs.

The 95% confidence intervals obtained with 1000-round bootstrap resampling for Kappa and MCC across different LLMs are depicted in Figure 9 and Figure 10. These intervals provide insights into the inter-rater reliability and overall correlation between predictions and true labels, further validating model consistency beyond simple classification accuracy. DeepSeek-R1 again emerges as the strongest performer, with a Kappa CI of (0.8475, 0.9042) and an MCC CI of (0.8493, 0.9048); these tight intervals indicate high agreement and strong predictive correlation with minimal uncertainty. O3-Mini follows closely with a Kappa CI of (0.8125, 0.8734) and an MCC CI of (0.8173, 0.8759); its lower bounds and slightly wider intervals suggest less consistent alignment with the ground truth compared to DeepSeek-R1.

Figure 9.

Kappa Distribution across different LLMs.

Figure 10.

MCC Distribution across different LLMs.

In contrast, GPT-4o-Mini and DeepSeek-V3 show comparable performance, and their confidence intervals (Kappa CIs of (0.6730, 0.7589) and (0.6442, 0.7277), and MCC CIs of (0.6891, 0.7692) and (0.6551, 0.7349), respectively) overlap substantially, suggesting no statistically significant difference between them. However, both trail the two reasoning models by a noticeable margin, particularly in their lower bounds. Finally, Claude-3.5-Haiku records the weakest agreement scores, with wide Kappa and MCC intervals of (0.3655, 0.4787) and (0.3938, 0.5080), respectively, reflecting greater variability and a weaker correlation structure in its predictions. These confidence intervals not only reinforce the ranking among the five models but also support the statistical reliability of DeepSeek-R1’s improvements in terms of agreement strength and prediction quality.

Given the relatively small performance gap between DeepSeek-R1 and O3-Mini, it is crucial to determine whether the difference is statistically significant rather than attributable to random chance. To this end, McNemar’s Test [50], a non-parametric method for paired nominal data, is applied to evaluate whether two classifiers have significantly different error rates on the same test instances. The more commonly used variant, proposed by Edwards [51], applies a continuity correction and defines the $\chi^2$ statistic and the $p$-value as follows:

$$\chi^2 = \frac{(|b - c| - 1)^2}{b + c}, \qquad p = \Pr\left(\chi^2_{1} \geq \chi^2\right)$$

where $b$ is the number of samples misclassified only by O3-Mini, $c$ is the number of samples misclassified only by DeepSeek-R1, and $\chi^2_{1}$ denotes a chi-square random variable with one degree of freedom. McNemar’s Test is conducted on the exact same code samples. The resulting $\chi^2$ is 8.43, with a corresponding $p$-value of 0.0037. Given that the $p$-value is below the conventional significance threshold of 0.05, the null hypothesis of equal performance is rejected. This indicates that the observed performance improvement of DeepSeek-R1 over O3-Mini is unlikely to be due to random chance, thus supporting the claim that DeepSeek-R1 achieves a statistically significant gain in classification accuracy.
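The continuity-corrected McNemar statistic and its p-value can be reproduced with the standard library alone. In the sketch below, the function names are assumptions and the discordant counts in the demo are toy values, since the paper reports only the resulting statistic and p-value. For one degree of freedom, the chi-square survival function reduces to the complementary error function, which gives a quick consistency check on the reported figures.

```python
import math


def chi2_sf_1df(x):
    """Survival function P(X >= x) of a chi-square variable with 1 degree of freedom."""
    return math.erfc(math.sqrt(x / 2.0))


def mcnemar_edwards(b, c):
    """McNemar's test with Edwards' continuity correction.

    b: samples misclassified only by the first model
    c: samples misclassified only by the second model
    Returns (chi2, p_value).
    """
    if b + c == 0:
        return 0.0, 1.0  # no discordant pairs, so no evidence of a difference
    chi2 = (abs(b - c) - 1) ** 2 / (b + c)
    return chi2, chi2_sf_1df(chi2)


if __name__ == "__main__":
    # Toy discordant counts, not the counts from the study.
    print(mcnemar_edwards(30, 12))
    # Consistency check against the reported statistic of 8.43.
    print(round(chi2_sf_1df(8.43), 4))
```

Evaluating the survival function at 8.43 gives a value that rounds to 0.0037, in agreement with the reported p-value.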

Notably, both DeepSeek-R1 and O3-Mini are reasoning models, while the other models fall under the non-reasoning category. The superior performance of reasoning models suggests that explicit step-by-step analytical reasoning enables more precise vulnerability detection. The reasoning traces returned by reasoning models also provide deep insights for locating code vulnerabilities, which are further explored in the case study and error analysis in Section 4.3.4 and Section 4.3.5.

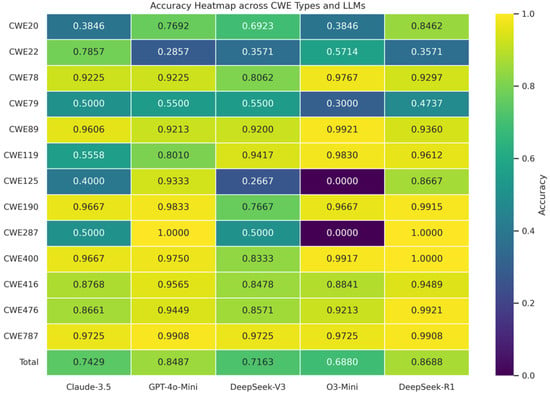

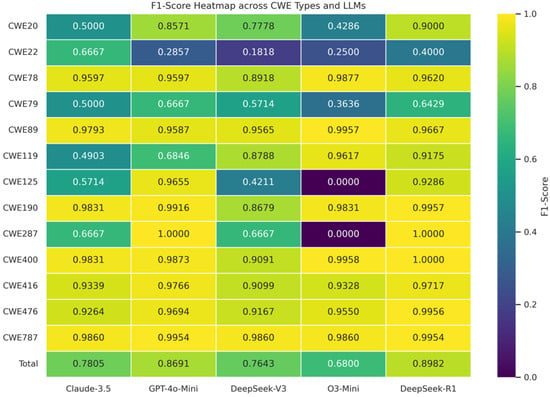

4.3.2. Category-Wise Analysis

To further investigate the per-type effectiveness of various LLM-based vulnerability detection methods, the model performance across different CWE categories in Table 1 is evaluated. The detailed results for both Accuracy and F1-Score are reported in Table 4, where the highest values for each CWE are marked in bold, and the second-highest scores are underlined for clarity. This fine-grained comparison provides insights into how different models generalize across diverse vulnerability patterns.

Table 4.

Comparison of Accuracy and F1-Score for each CWE type across LLMs.

As shown in Table 4, DeepSeek-R1 consistently outperforms all other models, achieving the highest average Accuracy (0.8688) and F1-Score (0.8982) across all vulnerability categories. GPT-4o-Mini ranks second, with an overall Accuracy of 0.8487 and F1-Score of 0.8691, often demonstrating results very close to those of DeepSeek-R1.

To facilitate intuitive comparisons and better visualization, two heatmaps are additionally provided in Figure 11 and Figure 12 to depict the distribution of Accuracy and F1-Score, respectively. Darker shades indicate higher metric values.

Figure 11.

Heatmap of Accuracy across CWE types for each LLM.

Figure 12.

Heatmap of F1-Score across CWE types for each LLM.

It can be observed that DeepSeek-R1 consistently delivers top-tier performance across a broad spectrum of CWE types. Specifically, it achieves either the highest or second-highest F1-Score across all 13 CWE categories, demonstrating strong generalization and robust detection capabilities. In terms of Accuracy, DeepSeek-R1 ranks first or second on more than ten CWE types, further confirming its overall superiority. Both Claude-3.5-Haiku and DeepSeek-V3 exhibit less stable performance, with notable strengths and weaknesses depending on the specific CWE type.

While O3-Mini ranks lower in average performance, this is largely attributed to its complete failure to correctly predict samples in two low-sample CWE types, CWE-125 and CWE-287. These misclassifications significantly skew its mean metrics downward. Notably, CWE-287 has only two test cases, which limits the statistical reliability of its results due to high variance. In contrast, CWE-125 includes 15 test instances, providing more meaningful insights. The results indicate that O3-Mini may lack sufficient capability to detect Out-of-bounds Read vulnerabilities. Its prediction confidence scores for vulnerable samples in this category tend to fall below the 0.5 threshold, highlighting weaknesses in analyzing memory-related flaws. However, on the remaining CWE types, O3-Mini performs comparably to GPT-4o-Mini, the second-best model overall.

For CWE-79 (Improper Neutralization of Input During Web Page Generation) and CWE-22 (Improper Limitation of a Pathname to a Restricted Directory, i.e., path traversal), most LLMs exhibit relatively poor performance. Specifically, for CWE-79, DeepSeek-R1 achieves an Accuracy of only 0.4737, while GPT-4o-Mini reaches 0.55, indicating potential limitations in the models’ ability to identify web page-related vulnerabilities. The same trend is observed in CWE-22, where DeepSeek-R1 achieves an Accuracy of 0.3571 and an F1-Score of 0.4, further indicating challenges in addressing path traversal vulnerabilities. This also highlights an area that may benefit from further fine-tuning or the development of task-specific adaptation strategies in future research. The detailed error analysis is discussed in Section 4.3.5.

4.3.3. Cost Comparison

In addition to performance evaluation, a comparative analysis of the cost associated with using different LLMs is conducted. The API services for these models were primarily accessed through third-party platforms such as Alibaba Cloud [52]. While pricing may vary depending on deployment region, the rates used in this study are based on publicly available pricing from each provider. The total number of tokens processed across all test samples ranges approximately from 6M to 8.8M. A detailed cost breakdown is provided in Table 5.

Table 5.

Cost Comparison of LLMs in API Services.

As shown in Table 5, reasoning models, including DeepSeek-R1 and O3-Mini, generally incur higher costs compared to non-reasoning models. This difference is largely attributable to their increased output token usage, which stems from generating detailed step-by-step reasoning explanations. The token consumption distribution between input and output for reasoning and non-reasoning models is summarized in Table 6.

Table 6.

Token Distribution Comparison: DeepSeek-V3 vs. DeepSeek-R1.

For instance, DeepSeek-R1, a reasoning model, utilizes approximately 3.081M output tokens, more than twice the amount produced by DeepSeek-V3, a non-reasoning model with 1.426M output tokens. Despite comparable input token usage, the substantial increase in output reflects the cost overhead incurred by the reasoning mechanism. This distinction underscores the necessity of separating reasoning models from non-reasoning models when analyzing cost-effectiveness, as reasoning tasks inherently demand more resources due to their extended output generation and intermediate reasoning traces.

Among the non-reasoning models, Claude-3.5-Haiku, GPT-4o-Mini, and DeepSeek-V3 produce fewer output tokens and thus incur lower costs. Notably, GPT-4o-Mini achieves the lowest total cost at $1.53, though it comes with a trade-off in performance, as its mAP is the second lowest (0.8670). DeepSeek-V3, on the other hand, maintains a strong mAP of 0.9411 while keeping the total cost at a modest $2.85 (¥20.67), demonstrating a favorable performance-to-cost ratio within the non-reasoning group.

For reasoning models, DeepSeek-R1 stands out as the most cost-efficient option. Despite the increased output token count, it maintains a relatively low total cost of $9.38 (¥68.04) while delivering the highest performance across all evaluation metrics. In comparison, O3-Mini consumes 8.797M tokens in total and incurs a much higher cost of $19.96, more than twice that of DeepSeek-R1.
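The relationship between token usage and cost can be made concrete with a small calculation. In the sketch below, the two output-token counts are the ones reported in the text, while the function name `api_cost`, the input-token count, and the per-million-token prices are hypothetical placeholders and do not reflect any provider's actual rates.

```python
def api_cost(input_tokens, output_tokens, input_price_per_m, output_price_per_m):
    """Total API cost when input and output tokens are billed per million tokens."""
    return ((input_tokens / 1e6) * input_price_per_m
            + (output_tokens / 1e6) * output_price_per_m)


# Output-token counts reported in the text.
R1_OUTPUT_TOKENS = 3_081_000   # DeepSeek-R1 (reasoning)
V3_OUTPUT_TOKENS = 1_426_000   # DeepSeek-V3 (non-reasoning)

# Ratio behind the "more than twice" statement.
output_ratio = R1_OUTPUT_TOKENS / V3_OUTPUT_TOKENS

if __name__ == "__main__":
    print(f"output token ratio: {output_ratio:.2f}")
    # Hypothetical input volume and prices, for illustration only.
    demo_cost = api_cost(
        input_tokens=4_000_000,
        output_tokens=R1_OUTPUT_TOKENS,
        input_price_per_m=0.5,
        output_price_per_m=2.0,
    )
    print(f"illustrative cost: {demo_cost:.2f}")
```

Because output tokens are typically priced higher than input tokens, the longer reasoning traces tend to dominate the total cost even when input volumes are comparable.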

In summary, DeepSeek-R1 offers the best trade-off between cost and performance. Its ability to deliver state-of-the-art accuracy while maintaining reasonable token usage makes it particularly well-suited for practical deployment in real-world vulnerability detection systems, where both efficiency and reliability are critical.

4.3.4. Case Study of Reasoning Traces

As demonstrated in the preceding sections, reasoning-enabled LLMs exhibit superior performance over non-reasoning counterparts in vulnerability detection tasks. While both types of models ultimately produce vulnerability assessments, the reasoning models, exemplified by DeepSeek-R1, adopt a structured, step-by-step analysis pipeline that closely resembles human expert review processes. This section analyzes the fundamental differences between reasoning and non-reasoning mechanisms, their influence on detection accuracy, and their potential implications for vulnerability remediation.

Non-reasoning models typically generate direct and concise outputs that flag potential vulnerabilities based on surface-level features. These outputs are often driven by heuristics derived from training data, such as known insecure code patterns, function calls, or syntactic cues. As a result, such models may overly rely on explicit indicators of insecurity and standard coding idioms. When presented with code that is syntactically correct and incorporates superficial security checks, non-reasoning models may be prone to false negatives, failing to identify deeper semantic flaws or logic-based vulnerabilities.

In contrast, reasoning models operate with a more deliberate and interpretable analytical process. For instance, DeepSeek-R1 begins by explicitly identifying the security objective of the given task (e.g., buffer bounds checking, input sanitization, memory management), followed by a systematic examination of the code’s logic, control flow, and functional semantics. Rather than relying on static patterns, the model attempts to understand the intended behavior of the code, evaluate how input is processed, and identify whether any security guarantees are violated in context. This paradigm shift from pattern recognition to semantic reasoning is aligned with how professional security analysts approach code auditing.

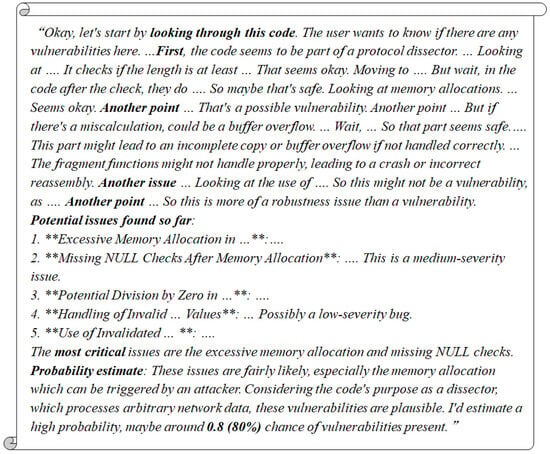

A representative reasoning trace from DeepSeek-R1 for a vulnerability case, CWE-787 in packet-dcp-etsi.c (ID: 231378), is illustrated in Figure 13.

Figure 13.

Representative reasoning trace by DeepSeek-R1 on CWE-787 (ID: 231378).

As illustrated in Figure 13, DeepSeek-R1 approaches vulnerability detection through a structured, step-by-step reasoning process. It begins by interpreting the overall purpose of the function, then analyzes how memory is allocated and how pointers are manipulated, and finally identifies specific conditions under which memory access might exceed allocated bounds. This systematic breakdown forms a clear and logical path from input code to vulnerability conclusion, enhancing both transparency and reproducibility—key factors in building developer trust.

Another notable strength of reasoning-enabled models is their ability to provide quantified probability estimates for each detection. DeepSeek-R1, for instance, outputs a confidence score alongside each vulnerability it identifies, indicating how certain the model is about its assessment. These probabilistic insights allow downstream tools or human reviewers to make informed decisions—such as prioritizing high-confidence vulnerabilities for immediate remediation or flagging low-confidence cases for further analysis.

Beyond detection, the structured reasoning output of models like DeepSeek-R1 adds value by revealing the root causes of vulnerabilities. By clearly outlining where the logic fails or which operations introduce risk, the model offers actionable insights that can guide remediation. Developers can use this information to refactor problematic code, introduce appropriate validations, or redesign unsafe data flows—ultimately contributing to stronger and more secure software.

In summary, reasoning-capable models such as DeepSeek-R1 offer notable advantages in software vulnerability detection. They improve not only accuracy but also interpretability and support for mitigation. These qualities make them especially well-suited for integration into real-world secure coding practices, including those in safety-critical domains.

4.3.5. Error Analysis of DeepSeek-R1

As highlighted in Section 4.3.2, most LLM-based vulnerability detectors, including DeepSeek-R1 and O3-Mini, show relatively weak performance on certain categories such as CWE-22 and CWE-79. To further investigate the limitations of these models, a detailed error analysis is conducted using DeepSeek-R1 as the representative reasoning model.

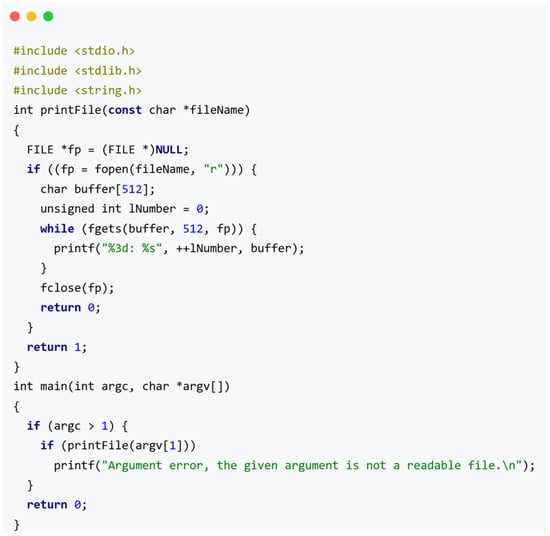

One of the most illustrative failure cases is observed in CWE-22. A representative vulnerable sample, path_basic_bad.c, is shown in Figure 14.

Figure 14.

Vulnerable sample path_basic_bad.c corresponding to CWE-22 (ID: 1878).

This simple C program reads a file path from user input and attempts to open the specified file without any path validation or sanitization. Such code is inherently vulnerable to path traversal attacks, where malicious input (e.g., ../etc/passwd) can be used to access unauthorized system files. This type of vulnerability typically occurs when a program allows users to influence file paths but fails to restrict the path scope appropriately.

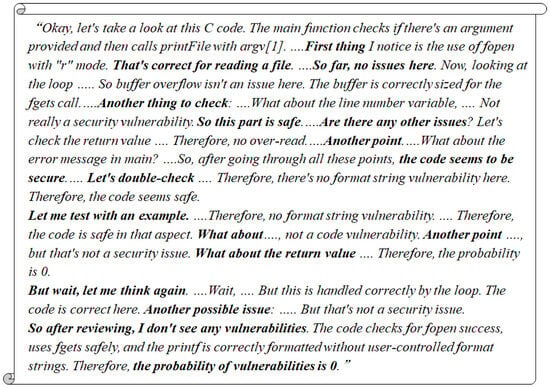

Surprisingly, both DeepSeek-R1 and O3-Mini assign a vulnerability score of 0.0 to this sample, failing to detect the issue. The reasoning trace generated by DeepSeek-R1 for this instance (ID: 1889) is shown in Figure 15.

Figure 15. Reasoning trace of DeepSeek-R1 on a CWE-22 sample (ID: 1889).

As seen in Figure 15, the model evaluates aspects such as argument validation, buffer overflow, string formatting, and general logic correctness. However, it overlooks the core issue of directory traversal, which concerns file system constraints and secure input handling. This indicates a significant gap in the model’s ability to reason about file system boundaries and input validation in security-critical contexts, and suggests that while DeepSeek-R1 can simulate a structured analysis process, it may lack domain-specific knowledge for certain vulnerability types. Developers could fix this issue by sanitizing the input, for example by blocking “..” patterns or enforcing a strict whitelist of file locations.
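The path restriction discussed here can be illustrated with a short sketch. It is written in Python rather than the C of the SARD sample, and it is only one possible check, not the fix applied in the dataset: the function name `resolve_inside` and the base directory used below are hypothetical.

```python
import os


def resolve_inside(base_dir: str, user_path: str) -> str:
    """Resolve user_path under base_dir, rejecting paths that escape it."""
    base = os.path.realpath(base_dir)
    # realpath collapses ".." segments and follows symlinks before the check.
    candidate = os.path.realpath(os.path.join(base, user_path))
    # The resolved path is accepted only if base is its common ancestor.
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError(f"path escapes base directory: {user_path!r}")
    return candidate
```

With a hypothetical base of `/srv/app/data`, an input such as `../etc/passwd` resolves outside the base directory and raises `ValueError`, while `notes.txt` is accepted. Checking the resolved path is generally more robust than searching the raw string for “..”, because it also covers absolute paths and symbolic links.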

A similar trend is observed with CWE-79, where the model struggles to detect vulnerabilities involving improper neutralization of input during web page generation. These issues often result in script injection or unauthorized exposure of sensitive data. For example, if user input is embedded directly into HTML content without neutralization, attackers may gain access to restricted resources. This issue is related to the exposure of a resource to the wrong sphere.
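For CWE-79, neutralization usually means escaping user input before it is embedded in HTML. The sketch below shows this with Python's standard `html.escape`. The `render_greeting` function is a hypothetical example and is not taken from the SARD samples.

```python
import html


def render_greeting(user_name: str) -> str:
    """Embed user input in an HTML fragment after escaping it."""
    # html.escape replaces &, <, > and, with quote=True, both quote characters.
    safe_name = html.escape(user_name, quote=True)
    return f"<p>Hello, {safe_name}!</p>"
```

A payload such as `<script>alert(1)</script>` is then rendered as inert text instead of being executed by the browser. Escaping of this kind covers HTML element content. Other contexts, such as URLs or inline scripts, need their own encoding rules.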

This failure may be attributed to two main factors: (1) a possible lack of domain-specific examples in the model’s training data, and (2) insufficient emphasis on secure coding practices during instruction tuning. In particular, there is a lack of security risk awareness and adversarial robustness training, both of which are critical for equipping models with the ability to recognize subtle yet dangerous input-driven vulnerabilities in real-world applications.

Overall, these findings suggest that while models like DeepSeek-R1 exhibit general reasoning abilities, they still struggle with subtle, domain-specific security issues. Enhancing detection performance in these cases may require task-specific fine-tuning, enriched datasets, and integration of security design patterns into the model’s training framework.

5. Conclusions and Answers to Research Questions

This study presents a comprehensive evaluation of reasoning and non-reasoning LLMs for software vulnerability detection. Through extensive experiments on the SARD dataset, we investigated four research questions concerning the performance, cost, interpretability, and limitations of these models.

(RQ1) Do reasoning-aware LLMs outperform non-reasoning models in software vulnerability detection?

Yes. Through a systematic comparison of representative reasoning models (e.g., DeepSeek-R1, O3-Mini) and non-reasoning models (e.g., GPT-4o-Mini, Claude-3.5-Haiku, DeepSeek-V3), this study demonstrates the clear advantages of incorporating structured reasoning mechanisms into vulnerability detection tasks. Experimental results show that reasoning LLMs consistently outperform their non-reasoning counterparts across all major evaluation metrics. In particular, DeepSeek-R1 achieves state-of-the-art performance on the SARD dataset, with an Accuracy of 0.9507, F1-score of 0.9659, and mAP of 0.9756. These results highlight the effectiveness of step-by-step reasoning in enhancing the model’s understanding of complex code semantics and in identifying subtle vulnerabilities that heuristic-based models may overlook.

(RQ2) What are the trade-offs between performance and cost for different models?

Beyond performance, a detailed cost-performance analysis is also conducted. Although reasoning models incur higher token usage, especially in output generation due to explanatory reasoning, their improved precision and reduced false positives contribute to greater overall efficiency and practicality in real-world applications. Among them, DeepSeek-R1 achieves the best trade-off between performance and cost, making it a strong candidate for scalable deployment in software security.

(RQ3) Can reasoning traces from DeepSeek-R1 provide interpretable insights into its decision-making process?

Yes. DeepSeek-R1 generates coherent reasoning traces that simulate the diagnostic thought process of a human security expert. These traces enhance transparency and interpretability by illustrating how the model identifies, explains, and justifies its vulnerability predictions. They also offer actionable insights for remediation, making such models valuable tools for developers seeking to improve code quality.

(RQ4) What are the potential limitations of reasoning-aware LLMs in the context of software vulnerability detection?

Despite their strong potential, reasoning LLMs still exhibit several limitations. Error analysis reveals that current models struggle with subtle, domain-specific vulnerabilities. These failures underscore the need for enhanced instruction tuning that not only improves technical comprehension but also emphasizes security risk awareness and encourages attack-aware coding practices. In addition, the inherent complexity of software vulnerabilities, along with adversarial examples specifically crafted to exploit LLM behaviors, continues to increase the difficulty of accurate detection.

Moreover, the extensive computational demands of model inference pose scalability challenges, particularly due to the high costs and energy consumption associated with running LLMs containing tens of billions of parameters. Future research is expected to address these issues through the development of more efficient, task-specific LLMs for vulnerability detection, including lightweight or specialized architectures for software defect detection.

In conclusion, this work highlights the transformative potential of reasoning LLMs in automated software vulnerability detection. By addressing the aforementioned limitations through continued research and technical innovation, such models can play a crucial role in building more secure, robust, and intelligent software systems.

Author Contributions

Conceptualization, W.Q., L.S., L.L. and F.Y.; methodology, F.Y.; software, W.Q., L.S. and F.Y.; validation, W.Q., L.S. and F.Y.; formal analysis, W.Q.; investigation, W.Q. and F.Y.; resources, F.Y.; data curation, F.Y.; writing—original draft preparation, W.Q. and F.Y.; writing—reviewing and editing, F.Y., L.L., W.Q. and L.S.; visualization, F.Y.; supervision, F.Y. and L.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the NSF OF CHINA with grant number 12271438, NSF OF HENAN with grant number 242300420259, and the NANJING TECH UNIVERSITY GRADUATE EDUCATION REFORM PROJECT with grant number YJG2413.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

This study utilizes the Software Assurance Reference Dataset (SARD), which is made available under a specific license and cannot be publicly shared by the authors. Researchers interested in accessing this dataset can refer to the official SARD website (https://samate.nist.gov/SARD/) (accessed on 10 October 2024). Additionally, the example code and prediction results in this paper will be publicly available at the following GitHub repository: https://github.com/fftechnj/reasoning-llm-vuln-detection (accessed on 30 May 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AST | Abstract Syntax Tree |
| BLSTM | Bidirectional Long Short-Term Memory |
| CI | Confidence Interval |
| COT | Chain-Of-Thought |
| CWE | Common Weakness Enumeration |
| FRAMES | Factuality Retrieval And reasoning Measurement Set |
| GCN | Graph Convolutional Network |
| GNN | Graph Neural Network |
| GPQA | Graduate-Level Google-Proof QA Benchmark |
| LLM | Large Language Model |
| MCC | Matthews Correlation Coefficient |
| MMLU | Measuring Massive Multitask Language Understanding |
| NLP | Natural Language Processing |
| OASIS | Organization for the Advancement of Structured Information Standards |
| PR | Precision-Recall |
| ROC | Receiver Operating Characteristic |
| SARIF | Static Analysis Results Interchange Format |
| SARD | Software Assurance Reference Dataset |
