Real-Time Cloth Simulation in Extended Reality: Comparative Study Between Unity Cloth Model and Position-Based Dynamics Model with GPU

Abstract

1. Introduction

2. Related Work

2.1. Extended Reality (XR) and Mixed Reality (MR) Interaction Systems

2.2. Meta Quest 3 for XR Physical Simulation

2.3. Position-Based Dynamics for Cloth Simulation

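- Prediction Step: External forces such as gravity are integrated into the particle velocities, v_i ← v_i + Δt·w_i·f_ext, where w_i = 1/m_i is the inverse mass. Positions are then predicted by explicit Euler integration, p_i ← x_i + Δt·v_i.

- Constraint Solving: The predicted positions p_i are iteratively projected so that each constraint C_j(p) = 0 (e.g., distance and bending constraints) is satisfied. Each correction is weighted by the inverse masses of the particles involved.

- Position and Velocity Update: Velocities are recomputed from the corrected positions, v_i ← (p_i − x_i)/Δt, and the positions are committed, x_i ← p_i.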
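The three phases above can be illustrated with a small CPU sketch. It is not the authors' GPU implementation. The helper `pbd_step` is hypothetical and assumes gravity as the only external force, distance constraints only, and a sequential (Gauss-Seidel) sweep over the constraints; a pinned particle is marked by an inverse mass of 0.

```python
import math


def add(a, b):
    return (a[0] + b[0], a[1] + b[1], a[2] + b[2])


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def scale(a, s):
    return (a[0] * s, a[1] * s, a[2] * s)


def length(a):
    return math.sqrt(a[0] * a[0] + a[1] * a[1] + a[2] * a[2])


def pbd_step(x, v, inv_mass, constraints, dt, gravity=(0.0, -9.81, 0.0), iterations=10):
    """One PBD step (hypothetical). x, v: lists of (x, y, z); constraints: (i, j, rest)."""
    n = len(x)
    # 1. Prediction: add gravity to the velocities of free particles
    #    (inv_mass == 0 marks a pinned particle), then explicit Euler.
    v = [add(v[i], scale(gravity, dt)) if inv_mass[i] > 0.0 else v[i] for i in range(n)]
    p = [add(x[i], scale(v[i], dt)) for i in range(n)]

    # 2. Constraint solving: project each distance constraint in turn
    #    (sequential Gauss-Seidel sweep over the constraint list).
    for _ in range(iterations):
        for i, j, rest in constraints:
            w = inv_mass[i] + inv_mass[j]
            d = sub(p[i], p[j])
            dist = length(d)
            if w == 0.0 or dist < 1e-12:
                continue
            n_ij = scale(d, 1.0 / dist)   # unit vector pointing from j to i
            c = dist - rest               # C(p) = |p_i - p_j| - rest
            p[i] = sub(p[i], scale(n_ij, inv_mass[i] / w * c))
            p[j] = add(p[j], scale(n_ij, inv_mass[j] / w * c))

    # 3. Update: velocities from the corrected positions, then commit positions.
    v = [scale(sub(p[i], x[i]), 1.0 / dt) for i in range(n)]
    return p, v
```

For example, with particle 0 pinned (`inv_mass = [0.0, 1.0]`) and one constraint of rest length 1, a call with `dt = 1/72` lets particle 1 sag slightly under gravity while remaining 1 unit (up to rounding) from the pin.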

2.4. GPU-Accelerated Physics Simulation

- Parallel computation of particle data (position, velocity, external forces);

- Parallel enforcement of constraints, including distance, bending, and fixed-point constraints;

- Parallelized collision detection with meshes or colliders;

- Dynamic optimization of simulation iterations to balance quality and real-time performance.
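Per-particle work of this kind is typically launched with one GPU thread per particle. In Unity, a compute kernel is launched with `ComputeShader.Dispatch(kernelIndex, threadGroupsX, threadGroupsY, threadGroupsZ)`, and each group runs the number of threads declared by the kernel's `[numthreads(...)]` attribute. The sketch below computes the group count by ceiling division. It assumes a hypothetical 1D layout with 256 threads per group; the paper's actual group size is not stated. Threads whose index exceeds the particle count would return early inside the kernel.

```python
def thread_groups(particle_count: int, threads_per_group: int = 256) -> int:
    """Ceiling division: enough groups to give every particle one thread."""
    return (particle_count + threads_per_group - 1) // threads_per_group


# Grid resolutions used in the performance test (Section 4.1).
for n in (8, 16, 32, 64, 128):
    count = n * n
    print(f"{n} x {n}: {count:>5} particles, {thread_groups(count):>2} group(s) of 256")
```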

3. Methodology

3.1. System Overview

3.2. GPU-Based PBD Cloth Framework (Reality Collision Version)

3.2.1. Algorithm Overview

Algorithm 1 GPU-Based PBD cloth simulation framework

1: Initialization Phase:

2: Create cloth mesh vertices, edges, and constraint data.

3: Upload vertex data to GPU memory.

4: Per-Frame Simulation Loop:

5: Apply external forces to vertices.

6: Predict vertex positions.

7: Solve distance and bending constraints.

8: Detect collisions with RoomMesh and Hand Mesh.

9: Resolve collisions and update projected positions.

10: Update vertex positions and velocities.

11: Render the cloth mesh.

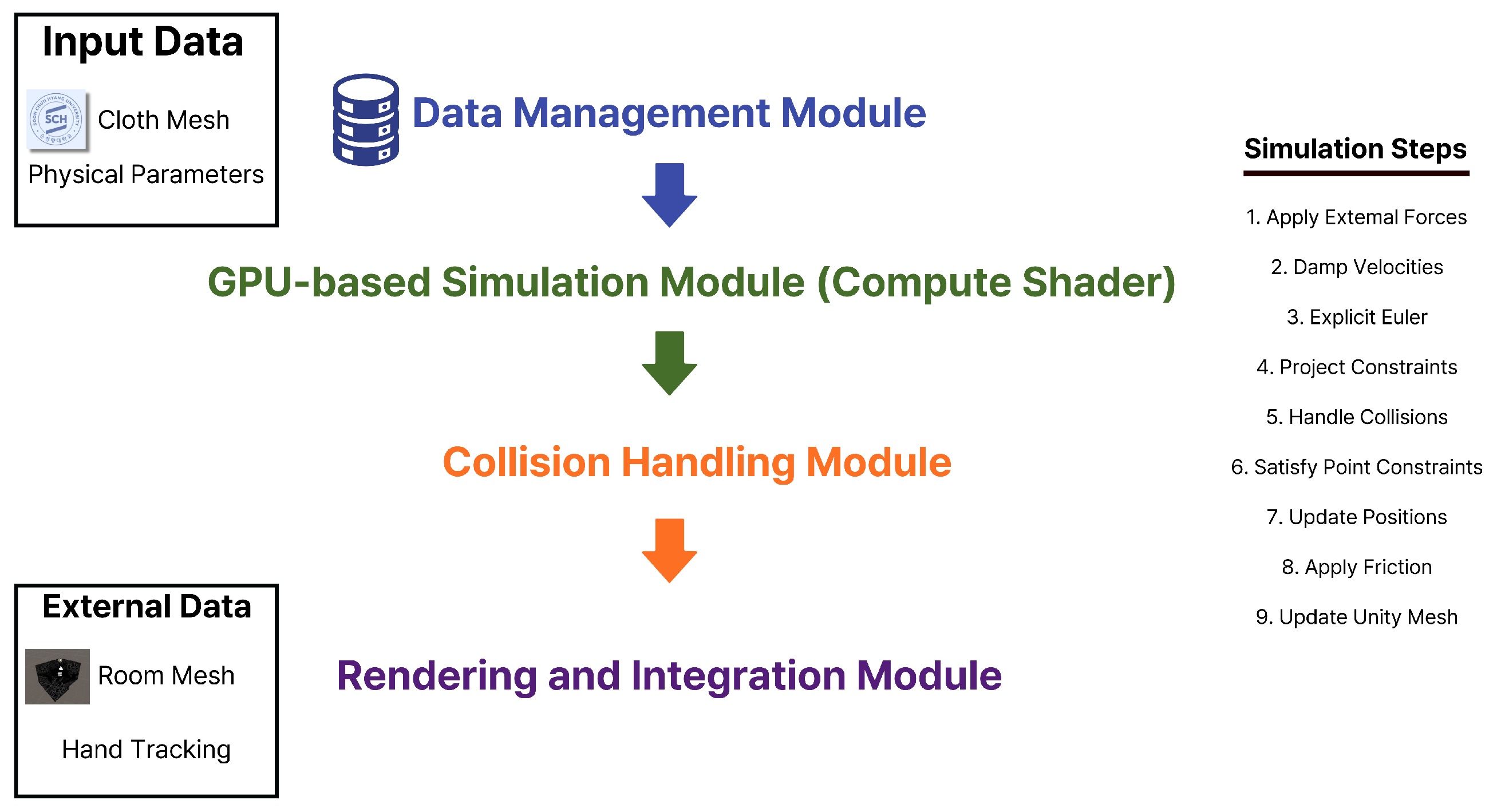

3.2.2. System Flow Using Flowchart

3.2.3. Compute Shader Kernel Design

- ApplyExternalForces: Applies external forces such as gravity to the velocities of particles.

- DampVelocities: Applies damping to particle velocities to simulate energy dissipation.

- ApplyExplicitEuler: Predicts new particle positions using explicit Euler integration.

- ProjectConstraintDeltas: Projects the predicted particle positions onto the distance and bending constraints and accumulates the resulting position corrections (deltas) per particle, using atomic operations for parallel accumulation.

- AverageConstraintDeltas: Averages accumulated constraint deltas and updates particle positions accordingly.

- SatisfySphereCollisions and SatisfyCubeCollisions: Handle collision responses against primitive colliders such as spheres and cubes.

- SatisfyMeshCollisions: Detects and resolves collisions between cloth particles and RoomMesh triangles.

- SatisfyHandMeshCollisions: Handles dynamic collision interactions with the user’s hand mesh, obtained from Meta Quest OVRMesh data.

- UpdatePositions: Updates final particle velocities and positions after constraint satisfaction and collision response.
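The split between ProjectConstraintDeltas and AverageConstraintDeltas matches a Jacobi-style solver. Every constraint is projected independently against the same positions, the corrections are summed per particle, and each particle then moves by the average of its corrections. The Python sketch below runs both passes serially. The function names mirror the kernel names, but the bodies are assumptions: distance constraints only, plus a hypothetical relaxation factor `omega`. On the GPU, the summation in the first pass is where atomics are needed. HLSL's `InterlockedAdd` operates on integer types, so float deltas are commonly accumulated as fixed-point integers or through a compare-exchange loop.

```python
import math


def project_constraint_deltas(p, inv_mass, constraints):
    """Pass 1 (one GPU thread per constraint): accumulate corrections per particle."""
    delta = [[0.0, 0.0, 0.0] for _ in p]
    count = [0 for _ in p]
    for i, j, rest in constraints:
        w = inv_mass[i] + inv_mass[j]
        d = [p[i][k] - p[j][k] for k in range(3)]
        dist = math.sqrt(sum(c * c for c in d))
        if w == 0.0 or dist < 1e-12:
            continue
        c = dist - rest
        for k in range(3):
            g = d[k] / dist * c / w
            # On the GPU these read-modify-writes race between threads,
            # which is why the kernel needs atomic adds here.
            delta[i][k] -= inv_mass[i] * g
            delta[j][k] += inv_mass[j] * g
        count[i] += 1
        count[j] += 1
    return delta, count


def average_constraint_deltas(p, delta, count, omega=1.0):
    """Pass 2 (one GPU thread per particle): apply the averaged correction."""
    out = []
    for i, pos in enumerate(p):
        if count[i] == 0:
            out.append(tuple(pos))
        else:
            out.append(tuple(pos[k] + omega * delta[i][k] / count[i] for k in range(3)))
    return out
```

Averaging makes the result independent of thread execution order, at the cost of slower convergence than a sequential sweep. For a three-particle chain with spacing 2 and rest length 1, the middle particle's two corrections cancel, so one pass shrinks each spacing to 1.5 rather than 1.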

3.2.4. RoomMesh-Based Collision Handling Algorithm

- Press the A button to visualize the complete RoomMesh.

- Use the right-hand trigger button to select six points, defining the region of interest.

- Construct an Axis-Aligned Bounding Box (AABB) from the selected points.

- Extract only the vertices and triangles of the RoomMesh that intersect the AABB to form the SubMesh.

- Memory usage is significantly reduced.

- Computational load for collision detection is minimized.

- Real-time responsiveness of cloth–environment interaction is enhanced.
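The selection-box steps above can be sketched in Python. This is an illustration, not Algorithm 2 itself. It assumes the AABB is the component-wise minimum and maximum of the six selected points, and that triangles are index triples into a vertex list. The triangle-box test is conservative: a triangle is kept when its own bounding box overlaps the selection box, which may retain a few triangles that an exact separating-axis test would reject. Kept vertices are re-indexed so the SubMesh is compact.

```python
def aabb_from_points(points):
    """Axis-aligned box spanning the selected points: (min corner, max corner)."""
    xs, ys, zs = zip(*points)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


def triangle_overlaps_aabb(a, b, c, box_min, box_max):
    """Conservative test: does the triangle's own bounding box overlap the AABB?"""
    for k in range(3):
        lo = min(a[k], b[k], c[k])
        hi = max(a[k], b[k], c[k])
        if hi < box_min[k] or lo > box_max[k]:
            return False
    return True


def extract_submesh(vertices, triangles, selected_points):
    """Keep triangles that overlap the selection box and re-index their vertices."""
    box_min, box_max = aabb_from_points(selected_points)
    remap = {}            # old vertex index -> new vertex index
    sub_vertices = []
    sub_triangles = []
    for tri in triangles:
        a, b, c = (vertices[i] for i in tri)
        if not triangle_overlaps_aabb(a, b, c, box_min, box_max):
            continue
        new_tri = []
        for i in tri:
            if i not in remap:
                remap[i] = len(sub_vertices)
                sub_vertices.append(vertices[i])
            new_tri.append(remap[i])
        sub_triangles.append(tuple(new_tri))
    return sub_vertices, sub_triangles
```

With six points placed around a desk top, only the triangles that touch the box survive. This reduction is what keeps the later per-particle collision loop small.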

Algorithm 2 SubMesh extraction based on user-defined selection box

3.2.5. Hand Collision Handling Algorithm

Algorithm 3 Hand collision handling for cloth particles (using capsule collider)
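A capsule collider is a line segment with a radius, so resolving a particle against one reduces to a closest-point-on-segment query. The sketch below is a hypothetical CPU illustration of that idea, not the paper's Algorithm 3. It assumes each capsule is given by two end points and a radius, as a per-bone hand capsule could be. It also adds an optional cloth `thickness` and pushes a penetrating predicted position radially out to the capsule surface. In a PBD loop, velocities would then follow from the position update.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def closest_point_on_segment(p, a, b):
    """Closest point to p on segment a-b (a == b degenerates to a sphere)."""
    ab = _sub(b, a)
    denom = _dot(ab, ab)
    t = 0.0 if denom == 0.0 else max(0.0, min(1.0, _dot(_sub(p, a), ab) / denom))
    return tuple(a[k] + t * ab[k] for k in range(3))


def resolve_capsule(p, a, b, radius, thickness=0.0):
    """Push point p out of the capsule (segment a-b, radius) if it penetrates."""
    q = closest_point_on_segment(p, a, b)
    d = _sub(p, q)
    dist = math.sqrt(_dot(d, d))
    target = radius + thickness
    # Outside the capsule, or exactly on its axis (no defined push direction;
    # a fuller version might fall back to the particle's previous position).
    if dist >= target or dist < 1e-12:
        return p
    s = target / dist
    return tuple(q[k] + d[k] * s for k in range(3))


def resolve_hand_capsules(p, capsules, thickness=0.0):
    """Apply every (a, b, radius) capsule, e.g. one per tracked bone, to one particle."""
    for a, b, radius in capsules:
        p = resolve_capsule(p, a, b, radius, thickness)
    return p
```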

Algorithm 4 Hand collision handling for cloth particles (using OVR mesh)
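For a hand mesh (or the RoomMesh SubMesh), the basic query is the distance from a particle to a triangle. The sketch below is an assumption-based CPU illustration, not Algorithm 4. It uses the standard closest-point-on-triangle routine (the Voronoi-region method described in Ericson's Real-Time Collision Detection) and treats every triangle as double-sided: a particle closer than a hypothetical `thickness` is pushed out on whichever side it lies. It checks all triangles by brute force. A GPU version would parallelize over particles and could add a spatial acceleration structure. Triangles are assumed non-degenerate.

```python
import math


def _sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _madd(a, b, s):
    """a + b * s"""
    return (a[0] + b[0] * s, a[1] + b[1] * s, a[2] + b[2] * s)


def closest_point_on_triangle(p, a, b, c):
    """Closest point to p on triangle abc, found by Voronoi-region classification."""
    ab, ac, ap = _sub(b, a), _sub(c, a), _sub(p, a)
    d1, d2 = _dot(ab, ap), _dot(ac, ap)
    if d1 <= 0.0 and d2 <= 0.0:
        return a                                              # vertex region A
    bp = _sub(p, b)
    d3, d4 = _dot(ab, bp), _dot(ac, bp)
    if d3 >= 0.0 and d4 <= d3:
        return b                                              # vertex region B
    vc = d1 * d4 - d3 * d2
    if vc <= 0.0 and d1 >= 0.0 and d3 <= 0.0:
        return _madd(a, ab, d1 / (d1 - d3))                   # edge AB
    cp = _sub(p, c)
    d5, d6 = _dot(ab, cp), _dot(ac, cp)
    if d6 >= 0.0 and d5 <= d6:
        return c                                              # vertex region C
    vb = d5 * d2 - d1 * d6
    if vb <= 0.0 and d2 >= 0.0 and d6 <= 0.0:
        return _madd(a, ac, d2 / (d2 - d6))                   # edge AC
    va = d3 * d6 - d5 * d4
    if va <= 0.0 and (d4 - d3) >= 0.0 and (d5 - d6) >= 0.0:
        return _madd(b, _sub(c, b), (d4 - d3) / ((d4 - d3) + (d5 - d6)))  # edge BC
    denom = 1.0 / (va + vb + vc)
    return _madd(_madd(a, ab, vb * denom), ac, vc * denom)    # face interior


def resolve_mesh_collision(p, vertices, triangles, thickness):
    """Push p out of every triangle it is closer than `thickness` to (double-sided)."""
    for i, j, k in triangles:
        a, b, c = vertices[i], vertices[j], vertices[k]
        q = closest_point_on_triangle(p, a, b, c)
        d = _sub(p, q)
        dist = math.sqrt(_dot(d, d))
        if dist >= thickness:
            continue
        if dist < 1e-12:            # lying on the surface: use the face normal
            d = _cross(_sub(b, a), _sub(c, a))
            dist = math.sqrt(_dot(d, d))
        p = _madd(q, d, thickness / dist)
    return p
```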

3.2.6. Summary

4. Results

4.1. Performance Analysis: Resolution vs. Frame Rate

4.1.1. Resolution-Based Performance Test

4.1.2. GPU Memory Access Analysis

4.1.3. Functional Capability Comparison

4.1.4. Summary and Discussion

4.2. Collision Interaction with RoomMesh

4.2.1. SubMesh Extraction and Collision Interaction Process

- Step 1: RoomMesh Acquisition. The environment is scanned using Meta Quest 3 to acquire a full RoomMesh.

- Step 2: Region Selection (White Pointer). The user defines the target region by selecting six points with the controller; a white pointer visually marks each selected point.

- Step 3: SubMesh Generation and Collision Position Display. An AABB is generated from the selected points, and the RoomMesh triangles within the AABB are extracted to form a SubMesh. After the virtual cloth is dropped onto the SubMesh, the final collision position is displayed and held on screen for approximately 1 s.

- Step 4: Cloth Collision Experiment. The SubMesh is instantiated as the collision surface, and the cloth simulation experiment is performed.

4.2.2. Experimental Setup

4.2.3. Collision Processing Results: Unity Cloth vs. GPU-PBD

- Unity Cloth: The cloth does not detect the desk surface and passes through it.

- GPU-PBD Cloth (Double-Sided): The cloth accurately detects and interacts with the SubMesh surface, resting naturally on the desk.

4.3. Collision Setup Using OVRSkeleton Capsule Colliders

4.3.1. Experimental Setup

4.3.2. Collision Response Comparison

- (a) Unity Cloth:

- (b) GPU-PBD Cloth:

4.3.3. Conclusions

4.4. Advanced Hand Mesh Collision Interaction

4.4.1. Real-Time Hand Mesh-Based Collision Implementation

4.4.2. Functional Limitations of Unity Cloth Component

4.4.3. Real-Time Hand Mesh-Based Interaction with GPU-PBD System

4.4.4. Experimental Setup

4.4.5. Summary and Discussion

- Unity Cloth: Limited to primitive colliders (spheres and capsules), so it cannot respond naturally to the complex geometry of the hand.

- GPU-PBD Cloth: Using real-time hand mesh collision, the system produces realistic cloth deformation under interactions such as pushing and two-hand stretching.

4.5. Quantitative User Evaluation

- Visual Realism: “The appearance of the cloth, including folds and wrinkles, looked similar to real fabric.”

- Interaction Naturalness: “The cloth responded physically naturally when touched, pushed, or stretched.”

- Responsiveness: “The cloth responded promptly to my hand movements without noticeable delay.”

- Spatial Alignment: “The cloth correctly interacted with real-world surfaces such as desks and floors without misalignment.”

- Immersive Experience: “The simulation felt immersive and enhanced the overall realism of the XR environment.”

5. Results and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Rauschnabel, P.A.; Felix, R.; Hinsch, C.; Shahab, H.; Alt, F. What is XR? Towards a Framework for Augmented and Virtual Reality. Comput. Hum. Behav. 2022, 133, 107289.

- Unity Manual: Cloth Component. Available online: https://docs.unity3d.com/Manual/class-Cloth.html (accessed on 26 August 2024).

- Fang, J.; You, L.; Chaudhry, E.; Zhang, J. State-of-the-art improvements and applications of position based dynamics. Comput. Animat. Virtual Worlds 2023, 34, e2143.

- Müller, M.; Heidelberger, B.; Hennix, M.; Ratcliff, J. Position based dynamics. J. Vis. Commun. Image Represent. 2007, 18, 109–118.

- Introduction to Compute Shaders in Unity. Available online: https://docs.unity3d.com/Manual/class-ComputeShader.html (accessed on 16 September 2024).

- OVRSceneManager. Available online: https://developers.meta.com/horizon/documentation/unity/unity-scene-use-scene-anchors (accessed on 26 April 2024).

- Meta Quest-Hand Tracking Documentation. Available online: https://developer.oculus.com/documentation/unity/unity-handtracking (accessed on 26 April 2025).

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Azuma, R.T. A survey of augmented reality. Presence Teleoperators Virtual Environ. 1997, 6, 355–385.

- Cadena, C.; Carlone, L.; Carrillo, H.; Latif, Y.; Scaramuzza, D.; Neira, J.; Reid, I.; Leonard, J.J. Past, present, and future of simultaneous localization and mapping: Toward the robust-perception age. IEEE Trans. Robot. 2016, 32, 1309–1332.

- Unity XR Development Documentation. Available online: https://docs.unity3d.com/Manual/XR.html (accessed on 26 March 2025).

- Unreal Engine XR Development Guide. Available online: https://docs.unrealengine.com/en-US/SharingAndReleasing/XRDevelopment/index.html (accessed on 26 December 2024).

- Meta Platforms. Meta Quest 3 Specifications. 2023. Available online: https://www.meta.com/quest/quest-3/specs/ (accessed on 27 August 2024).

- Makhataeva, Z.; Varol, H.A. Microsoft HoloLens 2: New Possibilities for Augmented Reality. IEEE Access 2020, 8, 125859–125870.

- Sung, N.J.; Ma, J.; Hor, K.; Kim, T.; Va, H.; Choi, Y.J.; Hong, M. Real-Time Physics Simulation Method for XR Application. Computers 2025, 14, 17.

- Volino, P.; Magnenat-Thalmann, N. Accurate garment prototyping and simulation. Comput.-Aided Des. 2010, 42, 778–788.

- Meta Quest 3 Spatial Awareness and SLAM Technology Overview. Available online: https://developer.meta.com/docs/quest/room-mesh/ (accessed on 27 April 2025).

- Meta XR SDK Documentation. Available online: https://developers.meta.com/horizon/downloads/package/oculus-platform-sdk/ (accessed on 27 April 2025).

- Unity Input System Documentation. Available online: https://docs.unity3d.com/Packages/com.unity.inputsystem@1.0/manual/index.html (accessed on 27 April 2025).

- Ma, J.; Sung, N.J.; Choi, M.H.; Hong, M. Performance Comparison of Vertex Block Descent and Position Based Dynamics Algorithms Using Cloth Simulation in Unity. Appl. Sci. 2024, 14, 11072.

- Han, D.; Lee, J.; Kim, M.; Lee, Y. XRMan: Towards Real-time Hand-Object Pose Tracking in eXtended Reality. In Proceedings of the 30th Annual International Conference on Mobile Computing and Networking, ACM MobiCom ’24, Washington, DC, USA, 18–22 November 2024; pp. 1575–1577.

- Müller, M.; Heidelberger, B.; Teschner, M.; Gross, M. Meshless deformations based on shape matching. ACM Trans. Graph. (TOG) 2005, 24, 471–478.

- Macklin, M.; Müller, M.; Chentanez, N.; Kim, T.Y. Unified particle physics for real-time applications. ACM Trans. Graph. (TOG) 2014, 33, 153.

- Va, H.; Choi, M.H.; Hong, M. Real-Time Cloth Simulation Using Compute Shader in Unity3D for AR/VR Contents. Appl. Sci. 2021, 11, 8255.

- Bondarenko, V.; Zhang, J.; Nguyen, G.T.; Fitzek, F.H.P. A Universal Method for Performance Assessment of Meta Quest XR Devices. In Proceedings of the 2024 IEEE Gaming, Entertainment, and Media Conference (GEM), Turin, Italy, 5–7 June 2024; pp. 1–6.

| Item | Specification |
|---|---|
| Processor | Qualcomm Snapdragon XR2 Gen 2 |
| Memory | 8 GB LPDDR5 SDRAM |
| Internal Storage | 512 GB UFS 3.1 |
| Display | 2 × 2064 × 2208 Fast-switch LCD, 90–120 Hz refresh rate |
| Lens Type | Pancake lens |
| Field of View (FOV) | 110° horizontal, 96° vertical |
| Interpupillary Distance (IPD) | 58–70 mm (4-stage adjustment) |
| Eye Relief | 4-stage adjustment |
| Tracking | 6 Degrees of Freedom (6DoF) |
| Cameras | 2 external RGB cameras, 4 wide-angle IR cameras |
| Connectivity | Wi-Fi 6E, Bluetooth 5.3 |
| Battery | Built-in 5060 mAh Li-ion |
| Operating System | Meta Horizon OS |
| Headset Dimensions | 260 × 98 × 192 mm, 515 g |
| Controller Dimensions | 130 × 70 × 62 mm, 103 g |
| Ports | USB Type-C × 1, 3.5 mm Audio Jack × 1 |
| Manufacturer | Meta Platforms, Menlo Park, CA, USA |

| No. | Purpose | Test Setup | Compared Systems |
|---|---|---|---|
| 1 | Measure FPS performance across resolutions (8 × 8 to 128 × 128) | Gravity-only cloth drop in static space using Meta Quest 3 | Unity Cloth vs. GPU-PBD (Single & Double-Sided) |
| 2 | Test physical interaction accuracy between cloth and real-world surface | SubMesh from controller-selected RoomMesh region | Unity Cloth vs. GPU-PBD (Double-Sided) |
| 3 | Evaluate hand interaction realism using 19 capsule colliders | Hand sweeps across cloth using capsule colliders | Unity Cloth vs. GPU-PBD (Double-Sided) |
| 4 | Evaluate natural deformation via hand mesh geometry | Real-time hand mesh in push and two-hand stretch interactions | GPU-PBD (Double-Sided) |

| Resolution | Unity Cloth (FPS) | GPU-PBD Single-Sided (FPS) | GPU-PBD Double-Sided (FPS) |
|---|---|---|---|
| 8 × 8 | 72.0 | 72.0 | 72.0 |
| 16 × 16 | 72.0 | 72.0 | 72.0 |
| 32 × 32 | 72.0 | 72.0 | 72.0 |
| 64 × 64 | 72.0 | 65.0 | 40.0 |
| 128 × 128 | 72.0 | 42.0 | 16.0 |
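Frame rates are easier to compare against a per-frame budget when expressed as frame times (1000 / FPS, in milliseconds). The snippet below only converts the measured values from the table above; it introduces no new measurements. At 72 FPS the budget is about 13.9 ms per frame, so the double-sided GPU-PBD cloth at 128 × 128 (16 FPS, 62.5 ms) takes 4.5 times that budget.

```python
# FPS values from the table: (Unity Cloth, GPU-PBD single-sided, GPU-PBD double-sided)
FPS = {
    8: (72.0, 72.0, 72.0),
    16: (72.0, 72.0, 72.0),
    32: (72.0, 72.0, 72.0),
    64: (72.0, 65.0, 40.0),
    128: (72.0, 42.0, 16.0),
}


def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds."""
    return 1000.0 / fps


budget = frame_time_ms(72.0)  # per-frame budget at 72 FPS
for n, row in FPS.items():
    times = ", ".join(f"{frame_time_ms(f):6.2f}" for f in row)
    print(f"{n:>3} x {n:<3}: {times} ms (budget {budget:.2f} ms)")
```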

| Feature | Unity Cloth | GPU-PBD (Single-Sided) | GPU-PBD (Double-Sided) |
|---|---|---|---|
| Double-Sided Cloth Support | X | X | O |
| Hand Mesh Collision Handling | X | X | O |
| RoomMesh (Reality Mesh) Collision Handling | X | X | O |
| Fine-Grained Constraint Adjustment | Limited | O | O |
| Compute Shader Extensibility | X | O | O |
| Real-Time Performance (Up to 32 × 32) | O (72 FPS) | O (72 FPS) | O (72 FPS) |
| Real-Time Performance (64 × 64 and above) | O (72 FPS) | (65 FPS at 64 × 64) | (40 FPS at 64 × 64) |

| Item | Details |
|---|---|
| Cloth Resolution | 32 × 32 |
| Collision Surface | Extracted SubMesh (desk surface) |
| Drop Height | Approximately 30 cm above the desk |
| Physical Parameters | Gravity, mass, stiffness (identical settings for both systems) |
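As a rough sense of scale for this setup, ignoring air drag and any velocity damping in the simulation: a 30 cm free fall takes t = √(2h/g) ≈ 0.25 s and ends at v = √(2gh) ≈ 2.4 m/s. Assuming one simulation step per rendered frame at 72 FPS (the paper does not state its substep count), that is about 3.4 cm of travel per step just before contact. Since drag and damping only slow the cloth, these figures are upper bounds. The arithmetic is sketched below; `G` and the one-step-per-frame assumption are not taken from the paper.

```python
import math

G = 9.81      # m/s^2, standard gravity (assumed value)
H = 0.30      # m, drop height from the table
FPS = 72.0    # assumption: one simulation step per rendered frame


def fall_time(h: float, g: float = G) -> float:
    """t = sqrt(2h/g): free fall from rest, no drag or damping."""
    return math.sqrt(2.0 * h / g)


def impact_speed(h: float, g: float = G) -> float:
    """v = sqrt(2gh): speed at the end of the free fall."""
    return math.sqrt(2.0 * g * h)


t = fall_time(H)
v = impact_speed(H)
print(f"fall time   : {t:.3f} s (~{t * FPS:.0f} steps at {FPS:.0f} FPS)")
print(f"impact speed: {v:.2f} m/s (~{100.0 * v / FPS:.1f} cm per step)")
```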

| Item | Details |
|---|---|
| Cloth Resolution | 32 × 32 |
| Hand Tracking Method | OVRSkeleton (Meta Quest 3) |
| Collision Targets | 19 Unity Capsule Colliders (attached to each joint) |
| Interaction Scenario | Hand moves across the cloth from right to left |

| Item | Details |
|---|---|
| Cloth Resolution | 32 × 32 |
| Hand Tracking Method | Meta Quest 3 OVRMesh (Real-Time Update) |
| Collision Surface | Dynamically updated hand mesh |
| Interaction Scenarios | Right-hand push (moving from right to left), two-hand stretching (pulling cloth in opposite directions) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, T.; Ma, J.; Hong, M. Real-Time Cloth Simulation in Extended Reality: Comparative Study Between Unity Cloth Model and Position-Based Dynamics Model with GPU. Appl. Sci. 2025, 15, 6611. https://doi.org/10.3390/app15126611


Chicago/Turabian Style: Kim, Taeheon, Jun Ma, and Min Hong. 2025. "Real-Time Cloth Simulation in Extended Reality: Comparative Study Between Unity Cloth Model and Position-Based Dynamics Model with GPU" Applied Sciences 15, no. 12: 6611. https://doi.org/10.3390/app15126611
