Abstract

Pneumothorax is a critical condition that requires rapid and accurate diagnosis from standard chest radiographs, and identifying and segmenting the pneumothorax region is essential for planning effective treatment. nnUNet is a self-configuring, deep learning-based framework for medical image segmentation. Although it adjusts its parameters automatically through data-driven optimization and offers robust feature extraction and segmentation across diverse datasets, our initial experiments showed that nnUNet alone struggled to segment pneumothorax consistently and accurately, particularly in challenging cases where subtle intensity variations and anatomical noise obscure the target region. This study aims to improve the accuracy and robustness of pneumothorax segmentation in low-contrast chest radiographs by integrating spatial prior information and an attention mechanism into the nnUNet framework. We introduce the spatial prior contrast adapter (SPCA)-enhanced nnUNet, which is built from two modules. First, the SPCA uses the MedSAM foundation model to incorporate spatial prior information about the lung region, guiding the segmentation network toward anatomically relevant areas. In addition, a probabilistic atlas, which gives the probability that each area is affected by pneumothorax, is generated from the ground truth masks. Both the lung segmentation results and the probabilistic atlas are used as attention maps in nnUNet. Second, we combine the two attention maps as an additional input to nnUNet and integrate an attention mechanism into the standard nnUNet using a convolutional block attention module (CBAM). We validate our method on CANDID-PTX, a benchmark dataset comprising 19,237 chest radiographs.
By introducing spatial awareness and intensity adjustment, the model reduces false positives and delineates boundaries more precisely, overcoming many of the limitations associated with low-contrast radiographs. SPCA-enhanced nnUNet achieves an average Dice coefficient of 0.81, a 15% improvement over standard nnUNet. This study provides a novel approach to improving the segmentation of low-contrast pneumothorax in chest X-ray radiographs.

1. Introduction

Pneumothorax is a medical condition in which air or gas accumulates in the pleural cavity, leading to partial or complete lung collapse. It can occur spontaneously, with or without underlying lung disease such as chronic obstructive pulmonary disease, or as a result of trauma [1]. Based on the mechanism by which air enters the pleural cavity, pneumothorax is classified into three categories: open pneumothorax, which results from direct communication between the pleural cavity and the external environment; closed pneumothorax, in which air accumulates without an external wound, often due to internal lung pathology; and tension pneumothorax, a medical emergency in which air enters the pleural space but cannot escape, causing increased intrathoracic pressure, lung collapse, mediastinal shift, and compromised cardiovascular function [2]. The mortality rate of untreated tension pneumothorax can be as high as 91% [3], underscoring the urgency of prompt medical intervention. Pneumothorax also has a high recurrence rate: primary spontaneous pneumothorax recurs in approximately 30% of cases [4], and secondary spontaneous pneumothorax, which occurs in patients with pre-existing lung disease, carries an even higher recurrence risk. These figures highlight the need for appropriate treatment and preventive measures, such as pleurodesis or surgical management, especially in recurrent cases. Early and accurate segmentation and diagnosis of pneumothorax are therefore essential, and they currently require trained medical professionals.

For clinical diagnosis and treatment planning, medical imaging is an essential technology that enables the visualization of internal anatomical structures. It includes various modalities such as X-ray, computed tomography (CT), magnetic resonance imaging (MRI), ultrasound, and nuclear medicine, each offering unique benefits depending on the clinical application. Among these, X-ray imaging is particularly favored by respiratory physicians for the detection and management of pneumothorax due to its ability to provide detailed images of bones and thoracic structures, in addition to its low cost and widespread availability in most healthcare facilities [5]. However, a significant challenge in X-ray-based diagnosis is the global shortage of trained radiologists. In clinical workflows, image annotations made by less experienced personnel often require verification by more experienced annotators or radiologists, particularly in ambiguous cases, further increasing the workload of specialists. To address this issue and alleviate the burden on radiologists, computer-aided diagnosis (CAD) systems have been developed. Artificial intelligence (AI) and medical image-processing techniques enable advanced CAD approaches and have been widely adopted across multiple disciplines, including radiology, oncology, and neurology. In the context of pneumothorax, CAD systems powered by deep learning algorithms can automatically detect pneumothorax regions in chest X-rays, thereby supporting clinicians in making more accurate and timely diagnostic decisions.

Convolutional neural networks (CNNs), such as U-Net [6] and its optimized variants, have been widely employed for medical image segmentation. Among these, nnUNet [7] stands out as a self-configuring deep learning framework that eliminates the need for manual network design and hyperparameter tuning. nnUNet automatically configures its architecture, preprocessing, training, and postprocessing pipelines based on the properties of a given dataset, which makes it versatile across medical imaging modalities, including CT, X-ray, and ultrasound. It achieves state-of-the-art performance by combining a U-Net-like architecture with components that are optimized for each task. Given its self-configuring nature, nnUNet can potentially be adapted for automated pneumothorax diagnosis with appropriate task-specific optimization.

In addition, MedSAM [8] is a deep learning model for medical image segmentation that builds on the Segment Anything Model (SAM) [9]. Unlike conventional segmentation approaches that require large volumes of labeled data, MedSAM leverages its large-scale pretraining to produce accurate segmentations with minimal annotation. Its interactive segmentation feature lets users refine results through prompts such as points, bounding boxes, or masks, which improves workflow efficiency, especially in organ segmentation tasks such as lung delineation. MedSAM has also been reported to perform well in challenging scenarios involving irregular organ shapes, low contrast, and blurred anatomical boundaries, making it a valuable tool for segmenting complex internal organs [10].

Our preliminary experiments, together with related studies evaluating nnUNet for pneumothorax segmentation on chest radiographs, indicate that nnUNet alone struggles to produce consistently accurate results, particularly in challenging cases characterized by subtle intensity variations and anatomical noise. Subtle intensity variations can obscure the boundaries between pneumothorax and adjacent tissue, increasing the likelihood of missegmentation. Anatomical noise, arising from overlapping structures or tissues with similar radiographic intensity, further complicates distinguishing pneumothorax from the surrounding anatomy [11]. More robust techniques are therefore needed to enhance image contrast, suppress background noise, and delineate pneumothorax more precisely in complex images.

In summary, high-precision segmentation of pneumothorax is critical for the development of reliable automated diagnosis systems. Our proposed method introduces a novel framework, MedSAM SPCA, designed to enhance segmentation performance through the integration of anatomical priors and contrast adjustment techniques. The key contributions of our approach are as follows:

- We propose SPCA, a method that incorporates spatial priors of the lung region, enhances contrast in chest X-ray images, and generates a probabilistic atlas from ground truth masks in the CANDID-PTX dataset.

- We construct an attention map by combining the lung segmentation mask of each pneumothorax case with the corresponding probabilistic atlas.

- We fuse these spatial priors into an attention-enhanced nnUNet architecture, which integrates the CBAM into the baseline U-Net. This design directs the model’s focus toward the lung region, thereby improving the network’s ability to identify relevant patterns and structures associated with pneumothorax in X-ray images.

2. Related Work

Our work is most closely aligned with research in the field of pneumothorax segmentation methodology. Numerous studies have investigated approaches for detecting and segmenting pneumothorax in chest radiographs using AI and deep learning techniques. For instance, Wang et al. introduced CheXLocNet, a deep convolutional neural network-based framework designed to automatically localize pneumothorax in chest radiographs, highlighting the potential of deep learning for both localization and detection tasks [12]. In a separate contribution, Wang et al. also proposed a two-stage deep learning model that integrates segmentation and classification components to enhance the accuracy of pneumothorax segmentation [13]. These studies underscore the growing interest in leveraging deep learning to improve the reliability and automation of pneumothorax diagnosis.

In addition to these methods, Lee et al. investigated factors that increase the positive predictive value of AI-based pneumothorax detection on chest radiographs, showing that incorporating clinical context and improving model sensitivity can lead to better performance [14]. Feng et al. used deep learning to automate pneumothorax triage in chest X-rays from the New Zealand population and demonstrated the potential of AI for large-scale screening and diagnosis [15]. Hong et al. applied deep learning models to pneumothorax detection specifically after needle biopsy, highlighting the clinical relevance and real-world implementation of AI in medical practice [16].

Moreover, Tian et al. applied a deep multi-instance transfer learning approach for pneumothorax classification, emphasizing the importance of transfer learning in enhancing model generalization across different data distributions [17]. Kamalakannan et al. explored novel techniques like the exponential pixelating integral transform for enhanced chest X-ray abnormality detection, which could be useful for improving the detection of pneumothorax [18]. Additionally, Sae-Lim et al. proposed an automated pneumothorax segmentation and quantification algorithm based on deep learning, improving the precision of both segmentation and measurement tasks in X-ray images [19]. Yuan et al. focused on leveraging anatomical constraints with uncertainty modeling for pneumothorax segmentation, aiming to improve segmentation accuracy by incorporating prior anatomical knowledge into the learning process [20]. While the most common imaging modality for segmentation and quantification of pneumothorax is chest X-ray, computed tomography (CT) is also used for more reliable three-dimensional imaging. Several studies have discussed how deep learning can enable high-performance automatic segmentation of pneumothorax from CT scans [21,22,23].

These studies demonstrate a wide range of approaches, from deep learning-based localization and segmentation to multi-instance learning and transfer learning techniques, showing significant progress in automating pneumothorax detection and quantification from chest radiographs. Despite the significant advancements presented in these studies, several limitations remain. Many of the existing approaches are tailored to specific datasets or clinical settings, limiting their generalizability across diverse patient populations and imaging conditions. Additionally, while several models achieve high performance in detection or classification, fewer works focus on fine-grained segmentation with spatial accuracy, particularly in complex cases involving overlapping anatomical structures or subtle pneumothorax presentations. Most models also lack the integration of anatomical priors or contrast enhancement mechanisms, which are crucial for robust segmentation in low-contrast X-ray images. Furthermore, limited attention has been given to model interpretability and the incorporation of interactive tools that enable radiologists to refine automated results in real time. These limitations highlight the need for more adaptable, generalizable, and clinically aligned methods that can improve segmentation precision and better support decision-making in real-world healthcare environments.

3. Materials and Methods

3.1. Image Dataset

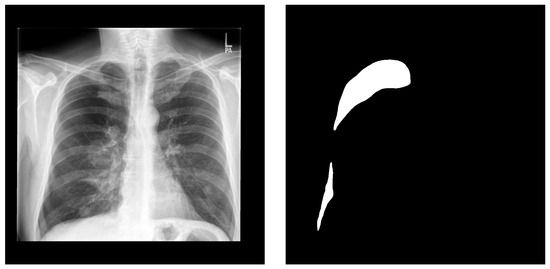

In this study, we use the Chest X-ray Anonymized Dataset in Dunedin—Pneumothorax (CANDID-PTX) benchmark dataset, which comprises 19,237 chest radiographs (53.4% male subjects) [15]. Subject ages range from 16 to 101 years (mean 60.1, standard deviation 20.1). Of these radiographs, 3196 are pneumothorax cases and the remainder are normal cases. The data were acquired with imaging devices from three manufacturers (Philips, GE Healthcare, and Kodak), and the images were annotated on the MD.ai platform using free-form line marking. Each image is 1024 × 1024 pixels in DICOM format, and the segmentation ground truth is provided in run-length encoding (RLE) format. Figure 1 shows a sample from the CANDID-PTX dataset: the original chest X-ray radiograph and the corresponding pneumothorax segmentation ground truth, converted from RLE to a binary image. The 300 pneumothorax cases used in this study are randomly divided into five batches for a 5-fold cross-validation study.

Figure 1.

A sample from the CANDID-PTX dataset. Left is the original chest X-ray and right is the corresponding pneumothorax manual segmentation.
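Because the ground truth masks are distributed as RLE strings, they must be decoded before training. A minimal NumPy sketch of that conversion follows. It assumes space-separated absolute `start length` pairs with 1-indexed starts, column-major pixel order, and `-1` for an empty mask, a convention used by several public segmentation datasets. CANDID-PTX may use a different variant (for example, relative offsets between runs), so the convention should be checked against the dataset documentation before use; `rle_decode` is a hypothetical helper, not part of any dataset tooling.

```python
import numpy as np

def rle_decode(rle: str, shape=(1024, 1024), order: str = "F") -> np.ndarray:
    """Decode an RLE string of 'start length' pairs into a binary mask.

    Assumed convention (verify against the dataset): absolute, 1-indexed starts,
    column-major ("F") pixel order, and "-1" or "" for an empty mask.
    """
    flat = np.zeros(shape[0] * shape[1], dtype=np.uint8)
    text = rle.strip()
    if text in ("", "-1"):
        return flat.reshape(shape, order=order)
    values = np.array([int(t) for t in text.split()], dtype=np.int64)
    starts, lengths = values[0::2] - 1, values[1::2]  # convert starts to 0-indexed
    for start, length in zip(starts, lengths):
        flat[start:start + length] = 1
    return flat.reshape(shape, order=order)

# A 2 x 3 mask with runs starting at pixel 1 (length 2) and pixel 5 (length 1).
mask = rle_decode("1 2 5 1", shape=(2, 3))
# mask.tolist() == [[1, 0, 1], [1, 0, 0]]
```

Switching `order` to `"C"` handles row-major encodings without changing the rest of the function.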

3.2. Network Architecture

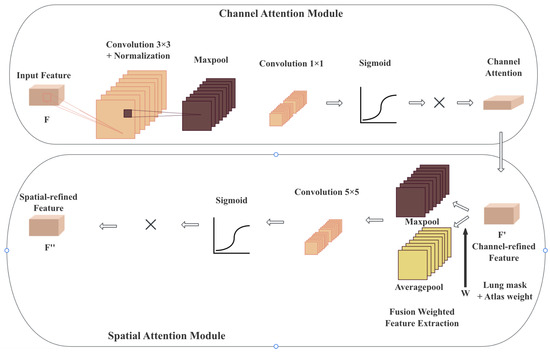

CBAM [24] serves as a lightweight and easily pluggable module that can be seamlessly integrated into different CNN architectures. With negligible additional computational costs, CBAM effectively enhances the representational capacity of CNNs, making it particularly suitable for complex tasks such as medical image analysis, where precise localization and discrimination of features are critical. CBAM operates by sequentially applying two complementary attention mechanisms, that is, channel attention and spatial attention.

After channel attention, the spatial attention module captures the inter-spatial dependencies of the features. It applies average pooling and max pooling along the channel axis, concatenates the two resulting maps, and passes them through a convolutional layer (7 × 7 in the original CBAM) followed by a sigmoid, producing an attention map that highlights the most salient regions of the feature map.

As a result, CBAM has been shown to improve the network's discriminative capability and generalization in classification and detection tasks [24], and it is widely used in segmentation networks. Its flexibility and ease of integration make it a valuable component in the design of high-performance deep learning models. The structure and processing pipeline of CBAM are illustrated in Figure 2.

Figure 2.

The structure of CBAM, containing a channel attention module and a spatial attention module. The generated attention map is used in the spatial attention module to force the nnUNet model to focus on the lung region.

Specifically, the channel attention module analyzes the importance of different channels, amplifying the weights of key channels while reducing the influence of less relevant ones, allowing the network to effectively capture global information. Meanwhile, the spatial attention module redistributes attention across spatial dimensions, enabling the model to focus more on salient regions where the target is located, thereby increasing the discriminative power of feature representations.
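The channel-then-spatial computation can be sketched as a NumPy forward pass on a single (C, H, W) feature map. This is an illustrative, untrained sketch rather than the PyTorch implementation used in this work: the weights are random, the MLP biases are omitted, and the reduction ratio of 16 and the 7 × 7 kernel follow the defaults of the original CBAM paper. The optional `prior` argument, which multiplies the spatial attention map by an external map such as a lung mask, is a hypothetical illustration of how an attention map could enter the spatial branch; the exact mechanism used in SPCA-enhanced nnUNet is not specified here.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def conv2d_same(x, kernel):
    """'Same'-padded cross-correlation: x (C, H, W), kernel (C, k, k) -> (H, W)."""
    pad = kernel.shape[-1] // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    windows = sliding_window_view(xp, kernel.shape[1:], axis=(1, 2))  # (C, H, W, k, k)
    return np.einsum("chwij,cij->hw", windows, kernel)

class CBAM:
    """Untrained CBAM forward pass: channel attention, then spatial attention."""

    def __init__(self, channels, reduction=16, kernel_size=7, seed=0):
        rng = np.random.default_rng(seed)
        hidden = max(channels // reduction, 1)
        self.w1 = rng.normal(0.0, 0.1, (hidden, channels))  # shared MLP, layer 1
        self.w2 = rng.normal(0.0, 0.1, (channels, hidden))  # shared MLP, layer 2
        self.kernel = rng.normal(0.0, 0.1, (2, kernel_size, kernel_size))

    def _mlp(self, v):
        return self.w2 @ np.maximum(self.w1 @ v, 0.0)  # ReLU between the two layers

    def __call__(self, f, prior=None):
        # Channel attention: shared MLP over global average- and max-pooled descriptors.
        mc = sigmoid(self._mlp(f.mean(axis=(1, 2))) + self._mlp(f.max(axis=(1, 2))))
        f = f * mc[:, None, None]
        # Spatial attention: pool along channels, convolve, squash to (0, 1).
        pooled = np.stack([f.mean(axis=0), f.max(axis=0)])  # (2, H, W)
        ms = sigmoid(conv2d_same(pooled, self.kernel))
        if prior is not None:
            ms = ms * prior  # hypothetical: modulate with an external (H, W) map in [0, 1]
        return f * ms[None, :, :]

features = np.random.default_rng(1).normal(size=(32, 16, 16))
refined = CBAM(32)(features)
# refined.shape == (32, 16, 16)
```

Because both attention maps lie in (0, 1), CBAM can only reweight features, never amplify them beyond their input magnitude; learned weights determine which channels and locations are preserved.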

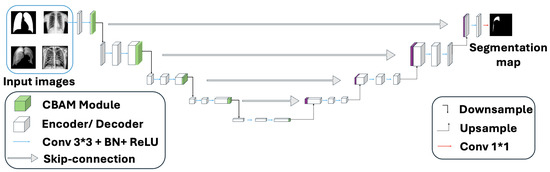

Integrating CBAM blocks into a standard U-Net architecture can strengthen feature extraction through attention [25]. The input feature map first passes through a series of convolutional layers, each followed by normalization and a LeakyReLU activation. These stacked convolutional blocks progressively capture hierarchical features, enabling the network to extract both low-level and high-level representations. After the final convolutional block, CBAM is applied to refine the feature maps. The attention-enhanced feature map, refined by both channel and spatial attention, is then passed on through the network, allowing the model to prioritize critical features. This refinement helps the model focus on significant details and improves segmentation performance within the nnUNet framework. Figure 3 gives an overview of how CBAM is combined with the plain nnUNet, based on the original U-Net's 5-stage structure.

Figure 3.

Combination of CBAM with 5-layer plain U-Net. Upon completion of the final convolutional block, the CBAM is applied to refine the feature maps.

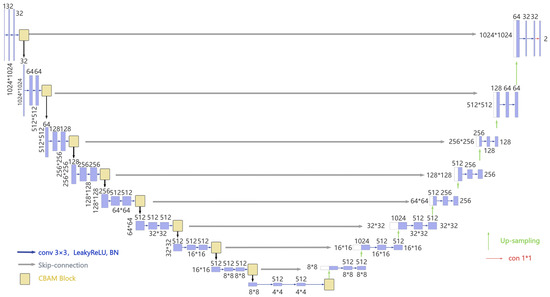

Our model integrates channel and spatial attention sequentially and complementarily, using CBAM to adaptively enhance feature representations, for a total of 212 individual layers in the given configuration. The encoder contributes 90 layers across 9 stages, each comprising 10 layers (3 layers from a convolutional block, 6 layers from a CBAM block, and 1 final activation layer). The decoder adds 104 layers across 8 stages, each comprising 13 layers (1 transposed convolution, 10 layers from a convolutional block, and 1 segmentation output convolution layer). By applying channel attention and then spatial attention, CBAM lets the network prioritize task-relevant features, such as lesions, anatomical landmarks, or pathological textures in medical images, while attenuating background noise and redundant information, which makes this architecture well suited to high-resolution image segmentation. The detailed structure of our model is shown in Figure 4.

Figure 4.

Detailed structure of the proposed model with a total of 9 levels of decomposition.

3.3. Attention Map Fusion Strategy

In our study, we use two forms of attention maps: multiple lung segmentation masks [26] and a single probabilistic atlas. They play different roles in guiding the segmentation model:

- Lung segmentation masks: These masks are lung segmentations produced by the foundation model MedSAM. They provide spatial information on where the lung regions are located, highlighting the relevant anatomical structures. Because there are multiple lung segmentation masks, we treat them as multiple sources of local spatial attention.

- Probabilistic atlas: The probabilistic atlas is derived from the pneumothorax ground truth in the CANDID-PTX dataset. It is a probability map giving the likelihood that each pixel belongs to a pneumothorax region, computed from the distribution of pneumothorax occurrence across the available population. As an anatomical and probabilistic prior, it guides the model toward regions where pneumothorax is more likely to appear, further improving its ability to detect the condition.

To combine these two sources of attention, we use a weighted fusion strategy. The lung segmentation masks provide local spatial attention focused on the lung region, and the probabilistic atlas adds global context for detecting pneumothorax lesions within those regions. Mathematically, the fusion of these two attention maps can be represented as follows:

$$A(x) = \sum_{i=1}^{N} w_i \, M_i(x) + w_P \, P(x)$$

where $M_i(x)$ is the $i$-th lung segmentation mask at location $x$, $P(x)$ is the probabilistic atlas, giving the likelihood of pneumothorax at each location, and $w_i$ and $w_P$ are the corresponding fusion weights. The sum over $i$ represents the combined contribution of all $N$ lung segmentation masks, so the combined attention map $A(x)$ is the weighted sum of the lung masks and the probabilistic atlas.
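The weighted fusion can be sketched in a few lines of NumPy: it sums N lung masks `M_i`, each scaled by a weight `w_i`, and adds the atlas `P` scaled by an atlas weight. The default equal weights (1/N per mask and 1 for the atlas) and the final rescaling to [0, 1] are illustrative assumptions, since the weights used in this study are not reported; `fuse_attention` is a hypothetical helper name.

```python
import numpy as np

def fuse_attention(lung_masks, atlas, mask_weights=None, atlas_weight=1.0):
    """Weighted sum of N lung masks (N, H, W) and an atlas (H, W), rescaled to [0, 1].

    The default weights and the rescaling step are assumptions for illustration.
    """
    masks = np.asarray(lung_masks, dtype=np.float64)
    n = masks.shape[0]
    weights = np.full(n, 1.0 / n) if mask_weights is None else np.asarray(mask_weights, dtype=np.float64)
    fused = np.tensordot(weights, masks, axes=1) + atlas_weight * np.asarray(atlas, dtype=np.float64)
    peak = fused.max()
    return fused / peak if peak > 0 else fused  # keep the map in [0, 1]

m1 = np.array([[1, 0], [0, 0]])
m2 = np.array([[1, 1], [0, 0]])
atlas = np.array([[0.0, 0.5], [0.0, 0.0]])
attention = fuse_attention([m1, m2], atlas)
# attention == [[1.0, 1.0], [0.0, 0.0]]
```

Rescaling keeps the fused map on the same scale as a sigmoid attention map, which makes it straightforward to use as an extra input channel or multiplicative prior.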

3.4. Spatial Prior Contrast Adapter

The SPCA consists of three main components: contrast enhancement, MedSAM-enabled lung segmentation, and a probabilistic atlas prior. Each component is detailed below.

3.4.1. Contrast Enhancement

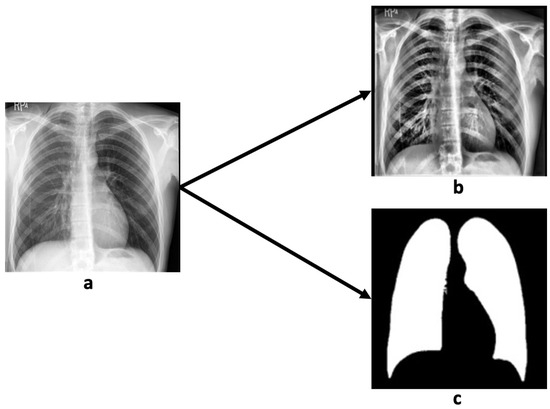

In this study, we apply contrast-limited adaptive histogram equalization (CLAHE) [27] to enhance the local contrast of chest X-ray images for improved pneumothorax detection, as illustrated in Figure 5. The process begins with the normalization of pixel intensities to the range [0, 1], ensuring consistent scaling across all images. CLAHE is then applied to enhance contrast within localized regions, thereby highlighting subtle features that are diagnostically significant. To prevent over-amplification of noise, the ClipLimit parameter is used to control the extent of enhancement. The resulting images are saved and displayed alongside their normalized counterparts for comparative visualization. This approach is particularly advantageous in medical imaging, where the ability to detect small but clinically important details is critical. By enhancing local contrast without significantly altering global image brightness, CLAHE supports more accurate interpretation while preserving the original image structure.

Figure 5.

A sample of contrast enhancement and MedSAM lung segmentation: original chest X-ray radiograph (a), contrast-enhanced chest X-ray radiograph (b), and lung segmentation result (c).
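The normalization and CLAHE steps can be sketched in NumPy as follows. The sketch clips each tile's histogram at `clip_limit` times the tile's pixel count, redistributes the excess uniformly in a single pass, and bilinearly interpolates between the mappings of neighboring tiles, as in the standard CLAHE algorithm. The 8 × 8 tile grid, `clip_limit = 0.01`, and 256 bins are illustrative assumptions rather than the settings used in this study, and this `clip_limit` is not necessarily on the same scale as the `ClipLimit` parameter mentioned above. In practice, library implementations such as `skimage.exposure.equalize_adapthist` or OpenCV's `cv2.createCLAHE` are the usual choice.

```python
import numpy as np

def normalize01(img):
    """Min-max normalize pixel intensities to [0, 1]."""
    img = np.asarray(img, dtype=np.float64)
    lo, hi = img.min(), img.max()
    return (img - lo) / (hi - lo) if hi > lo else np.zeros_like(img)

def clahe(img, tiles=(8, 8), clip_limit=0.01, nbins=256):
    """CLAHE for a 2-D image in [0, 1]; the image must have at least one pixel per tile."""
    h, w = img.shape
    ty, tx = tiles
    q = np.clip(np.round(img * (nbins - 1)).astype(int), 0, nbins - 1)  # bin index per pixel
    ys = np.linspace(0, h, ty + 1).astype(int)  # tile boundaries
    xs = np.linspace(0, w, tx + 1).astype(int)
    luts = np.empty((ty, tx, nbins))
    for r in range(ty):
        for c in range(tx):
            tile = q[ys[r]:ys[r + 1], xs[c]:xs[c + 1]]
            hist = np.bincount(tile.ravel(), minlength=nbins).astype(np.float64)
            # Clip the histogram and spread the clipped excess uniformly over all bins.
            limit = max(clip_limit * tile.size, 1.0)
            excess = np.maximum(hist - limit, 0.0).sum()
            hist = np.minimum(hist, limit) + excess / nbins
            cdf = np.cumsum(hist)
            luts[r, c] = cdf / cdf[-1]  # per-tile intensity mapping
    # Fractional tile coordinates of every pixel relative to the tile centers.
    cy = (ys[:-1] + ys[1:] - 1) / 2.0
    cx = (xs[:-1] + xs[1:] - 1) / 2.0
    fy = np.interp(np.arange(h), cy, np.arange(ty))
    fx = np.interp(np.arange(w), cx, np.arange(tx))
    r0 = np.floor(fy).astype(int); r1 = np.minimum(r0 + 1, ty - 1)
    c0 = np.floor(fx).astype(int); c1 = np.minimum(c0 + 1, tx - 1)
    wy = (fy - r0)[:, None]; wx = (fx - c0)[None, :]
    R0, R1, C0, C1 = r0[:, None], r1[:, None], c0[None, :], c1[None, :]
    # Bilinear interpolation between the four neighboring tile mappings.
    top = (1 - wx) * luts[R0, C0, q] + wx * luts[R0, C1, q]
    bottom = (1 - wx) * luts[R1, C0, q] + wx * luts[R1, C1, q]
    return (1 - wy) * top + wy * bottom

rng = np.random.default_rng(0)
low_contrast = 0.4 + 0.2 * rng.random((64, 64))
enhanced = clahe(normalize01(low_contrast))
```

With a single tile and no effective clipping (`tiles=(1, 1)`, `clip_limit=1.0`), the function reduces to global histogram equalization, which is a convenient sanity check.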

3.4.2. Lung Segmentation by MedSAM

We employed MedSAM to perform automatic segmentation of lung regions in chest X-ray images. The process began by uploading the images to the MedSAM platform, where the lung fields were manually designated as regions of interest. MedSAM then utilized advanced deep learning algorithms in conjunction with image-processing techniques to automatically segment the lungs, effectively isolating them from adjacent anatomical structures such as the ribs and heart. To improve segmentation accuracy, users were able to refine the output by adjusting parameters or manually correcting boundary discrepancies. This semi-automated approach enabled precise delineation of lung regions, which is essential for identifying subtle abnormalities such as infections, tumors, or other pulmonary conditions [28].

As shown in Figure 5, MedSAM produced accurate, well-defined lung segmentations, which can support the detection and evaluation of lung-related pathologies and help radiologists make more reliable and efficient diagnostic decisions from chest X-rays.

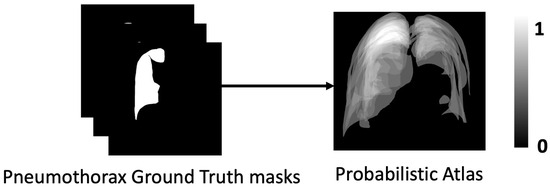

3.4.3. Probabilistic Atlas

In medical image segmentation, probabilistic atlases are an established way to provide spatial priors, and they are well suited to detecting and delineating pneumothorax in chest X-ray radiography. Because precise pneumothorax segmentation is clinically important for accurate assessment and decision-making, such priors can usefully guide the segmentation process. A probabilistic atlas is constructed from a dataset of annotated chest X-rays representing various stages and manifestations of pneumothorax, which allows it to capture the spatial distribution of pneumothorax-related features, such as air pockets and the lung–chest wall boundary. Using the ground truth masks from the CANDID-PTX dataset, we generate a probability map across the X-rays in which each pixel is assigned the statistical likelihood of belonging to a pneumothorax region (Figure 6). This approach helps address anatomical variability, inconsistent image quality, and the heterogeneous presentation of pneumothorax. A probabilistic atlas can improve segmentation robustness and diagnostic accuracy and may also reduce the time required for evaluation, which is especially valuable in time-sensitive clinical settings such as emergency care.

Figure 6.

Probabilistic atlas generated from the ground truth masks: segmentation labels in the CANDID-PTX dataset (left) and the resulting probabilistic atlas (right).
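In its simplest form, a probabilistic atlas is the pixel-wise frequency of pneumothorax across the binary ground truth masks. The sketch below assumes that all masks already lie on a common 1024 × 1024 grid, with no spatial registration or smoothing, which may differ from the construction used in this study; `build_atlas` is a hypothetical helper. In a cross-validation setting, computing the atlas from the training masks only keeps test labels out of the prior.

```python
import numpy as np

def build_atlas(masks):
    """Return P(x): the fraction of masks in which pixel x is labeled pneumothorax."""
    stack = np.stack([np.asarray(m, dtype=bool) for m in masks])  # (N, H, W)
    return stack.mean(axis=0)  # values in [0, 1]

atlas = build_atlas([[[1, 0], [0, 0]], [[1, 1], [0, 0]]])
# atlas == [[1.0, 0.5], [0.0, 0.0]]
```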

3.5. Preprocessing and Normalization

In this study, we first apply contrast enhancement using CLAHE to improve the visibility of lung structures and pneumothorax-related anomalies. CLAHE accentuates subtle intensity differences between pneumothorax regions and the surrounding lung tissue, making abnormal areas easier for the model to distinguish. Intensity normalization and data augmentation are handled automatically by the nnUNet framework during training. For chest X-ray images, nnUNet performs z-score normalization on a per-image basis using each image's own mean and standard deviation. This mitigates intensity variation arising from differences in exposure settings, imaging devices, or patient positioning, making the model more robust to such variability. nnUNet also applies data augmentation techniques such as flipping, rotation, and zooming to increase the diversity of the training data, which improves the model's ability to generalize to unseen images and to handle variation in pneumothorax size, location, and orientation. Together, these preprocessing and normalization steps improve the visibility of relevant structures and ensure consistent input for training, contributing to more accurate and reliable pneumothorax segmentation.
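Per-image z-score normalization, together with one way of presenting the contrast-enhanced image and a fused attention map as a two-channel input, can be sketched in NumPy as follows. This mirrors the normalization described above but is not nnUNet's own code; the small epsilon guard against division by zero and the choice to z-score the attention channel as well are assumptions, and `zscore` and `stack_inputs` are hypothetical helpers.

```python
import numpy as np

def zscore(channel, eps=1e-8):
    """Per-image z-score: subtract the image's mean and divide by its standard deviation."""
    channel = np.asarray(channel, dtype=np.float64)
    return (channel - channel.mean()) / max(channel.std(), eps)

def stack_inputs(image, attention):
    """Stack an image and an attention map as a (2, H, W) input, normalized per channel."""
    return np.stack([zscore(image), zscore(attention)])

rng = np.random.default_rng(0)
x = stack_inputs(rng.random((32, 32)), rng.random((32, 32)))
# x.shape == (2, 32, 32); each channel has mean 0 and standard deviation 1
```

Normalizing each channel independently keeps the attention channel from being dominated by the intensity range of the radiograph.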

3.6. Loss Function

In this study, SPCA-enhanced nnUNet was trained with a combined loss function, the sum of the Dice loss and the cross-entropy loss, as in standard nnUNet [7]. The loss function can be represented as follows:

$$\mathcal{L}_{total} = \mathcal{L}_{dc} + \mathcal{L}_{CE}$$

The two components serve complementary purposes in segmentation. The Dice loss is computed as the negative of the Dice coefficient, a measure of overlap between the predicted and ground truth masks. Since the Dice coefficient ranges from 0 to 1, the Dice loss lies within the interval [−1, 0] and always contributes a non-positive value to the total loss. The cross-entropy loss, by contrast, evaluates pixel-wise classification performance and is non-negative. When the model achieves high overlap and low cross-entropy, the magnitude of the Dice loss can exceed that of the cross-entropy term, so the total loss can become negative.

The Dice loss [29] follows the multi-class formulation proposed by Drozdzal et al.:

$$\mathcal{L}_{dc} = -\frac{2}{|K|} \sum_{k \in K} \frac{\sum_{i \in I} u_i^k v_i^k}{\sum_{i \in I} u_i^k + \sum_{i \in I} v_i^k}$$

where $u$ is the softmax output of the network and $v$ is the one-hot encoding of the ground truth segmentation map. Both $u$ and $v$ have shape $I \times K$, where $I$ denotes the pixels in the training patch or batch and $K$ the classes, with $i \in I$ indexing the pixels and $k \in K$ indexing the class labels.

Isensee et al. found that this formulation performs well on imbalanced data, helping the model focus on the relevant regions rather than the background [30]. Sudre et al.'s generalized Dice overlap loss further targets highly imbalanced segmentation problems [31]. The cross-entropy loss function can be represented as follows:

$$\mathcal{L}_{CE} = -\frac{1}{|I|} \sum_{i \in I} \sum_{k \in K} v_i^k \log u_i^k$$

Cross-entropy loss is a widely used loss function in deep learning. It measures the difference between two probability distributions, that is, the predicted probability distribution output by a model and the true distribution represented by the labels. Cross-entropy calculates the negative logarithm of the predicted probability corresponding to the true class label, penalizing incorrect predictions more heavily as the confidence in the wrong class increases. This loss function encourages the model to output probabilities closer to 1 for the correct class and closer to 0 for incorrect ones, thus improving prediction accuracy over time.
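The combined objective can be checked numerically with a small NumPy sketch for a flattened batch of I pixels and K classes. It implements the multi-class soft Dice loss and the mean pixel-wise cross-entropy described in this section, with small epsilons for numerical stability. It is an illustration rather than nnUNet's implementation, which offers additional options such as smoothing terms and batch-level Dice. The example shows how a confident, correct prediction drives the total loss below zero.

```python
import numpy as np

def softmax(logits, axis=-1):
    z = logits - logits.max(axis=axis, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def dice_loss(u, v, eps=1e-8):
    """Multi-class soft Dice loss; u (softmax) and v (one-hot) have shape (I, K)."""
    intersection = (u * v).sum(axis=0)  # sum over pixels, per class
    denominator = u.sum(axis=0) + v.sum(axis=0)
    return -2.0 / u.shape[1] * np.sum(intersection / (denominator + eps))

def cross_entropy(u, v, eps=1e-12):
    """Mean pixel-wise cross-entropy between one-hot targets v and probabilities u."""
    return -np.mean(np.sum(v * np.log(u + eps), axis=1))

def total_loss(logits, labels):
    """Dice loss plus cross-entropy for logits (I, K) and integer labels (I,)."""
    u = softmax(logits, axis=1)
    v = np.eye(logits.shape[1])[labels]
    return dice_loss(u, v) + cross_entropy(u, v)

labels = np.array([0, 1, 1, 0])
confident = 20.0 * np.eye(2)[labels]  # logits strongly favoring the true class
uniform = np.zeros((4, 2))  # uninformative logits
# total_loss(confident, labels) is close to -1: the Dice term dominates.
# total_loss(uniform, labels) is -0.5 + log(2), about 0.19.
```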

3.7. Evaluation Metrics

To evaluate the performance of SPCA-enhanced nnUNet, we used the Dice similarity coefficient (DSC), accuracy, precision, recall, and intersection over union (IoU). DSC measures the overlap between predicted and true regions, with higher values indicating better agreement [6,32]. Accuracy measures the overall proportion of correct predictions, although it can be misleading on imbalanced data, where background pixels dominate. Precision measures the proportion of positive predictions that are correct, while recall measures how many of the actual positive instances the model identifies. IoU also assesses the overlap between predicted and actual regions, with higher values indicating better segmentation. Together, these metrics provide a thorough evaluation of the model's performance.

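A confusion matrix is a table used to evaluate the performance of a classification model. It contains the counts of true positive (TP), true negative (TN), false positive (FP), and false negative (FN) predictions, from which the evaluation metrics are defined:

$$\mathrm{DSC} = \frac{2TP}{2TP + FP + FN}, \qquad \mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP}, \qquad \mathrm{Recall} = \frac{TP}{TP + FN}, \qquad \mathrm{IoU} = \frac{TP}{TP + FP + FN}$$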
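All five metrics can be computed from the four pixel-wise confusion-matrix counts. The NumPy sketch below does this for a pair of binary masks. Returning NaN when a denominator is zero (for example, Dice on an image with neither predicted nor true pneumothorax) is an assumption, since evaluation protocols differ in how they score such cases; `segmentation_metrics` is a hypothetical helper.

```python
import numpy as np

def confusion_counts(pred, gt):
    """Pixel-wise TP, TN, FP, FN for two binary masks of equal shape."""
    pred, gt = np.asarray(pred, dtype=bool), np.asarray(gt, dtype=bool)
    tp = int(np.sum(pred & gt))
    tn = int(np.sum(~pred & ~gt))
    fp = int(np.sum(pred & ~gt))
    fn = int(np.sum(~pred & gt))
    return tp, tn, fp, fn

def _ratio(num, den):
    return num / den if den > 0 else float("nan")  # undefined metric -> NaN

def segmentation_metrics(pred, gt):
    tp, tn, fp, fn = confusion_counts(pred, gt)
    return {
        "dice": _ratio(2 * tp, 2 * tp + fp + fn),
        "iou": _ratio(tp, tp + fp + fn),
        "precision": _ratio(tp, tp + fp),
        "recall": _ratio(tp, tp + fn),
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
    }

scores = segmentation_metrics([[1, 1], [0, 0]], [[1, 0], [1, 0]])
# TP = FP = FN = TN = 1, so dice = precision = recall = accuracy = 0.5 and iou = 1/3
```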

4. Results

SPCA-enhanced nnUNet was implemented in Python 3.12.2 on a workstation with an Intel Core i9 3.0 GHz CPU, 32 GB of memory, and two NVIDIA A4000 GPUs. Training used SGD with Nesterov momentum (momentum = 0.99) and 5-fold cross-validation, with 1000 epochs per fold. For this study, we used 300 subjects from the CANDID-PTX dataset: 216 for training, 54 for validation, and 30 for testing.
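The stated counts are consistent with holding out 30 test images and splitting the remaining 270 into five folds of 54, so that each fold trains on 216 images and validates on 54. The sketch below reproduces that arithmetic. The random seed and the use of a simple random permutation are assumptions, since the assignment procedure is not described, and `make_splits` is a hypothetical helper. By default, nnUNet generates its own five-fold split (stored in `splits_final.json`) unless a custom split file is provided.

```python
import numpy as np

def make_splits(case_ids, n_test=30, n_folds=5, seed=0):
    """Hold out n_test cases, then split the rest into n_folds (train, val) pairs."""
    rng = np.random.default_rng(seed)
    ids = rng.permutation(np.asarray(case_ids))
    test, rest = ids[:n_test], ids[n_test:]
    folds = np.array_split(rest, n_folds)
    splits = []
    for k in range(n_folds):
        train = np.concatenate([folds[j] for j in range(n_folds) if j != k])
        splits.append((train, folds[k]))  # fold k is the validation set
    return test, splits

test_ids, splits = make_splits(np.arange(300))
# len(test_ids) == 30, and every (train, val) pair has sizes (216, 54)
```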

This model integrates PyTorch 2.7.0 as the core deep learning framework, offering essential components such as convolutional layers, dropout, and tensor operations for model construction and training. NumPy is employed for efficient numerical computation, particularly in handling multidimensional arrays. The typing module provides type annotations to enhance code readability and maintainability. Additionally, the dynamic_network_architectures package developed by DKFZ contains PyTorch implementations of U-Net architectures that can be dynamically configured to accommodate varying image dimensions and different numbers of input channels.

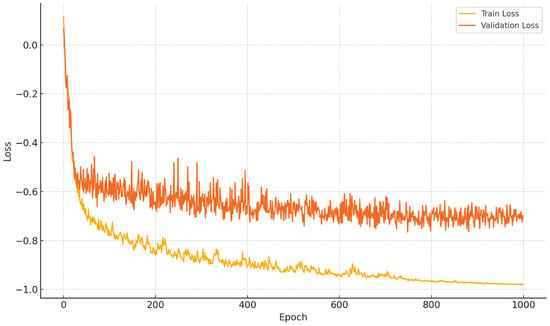

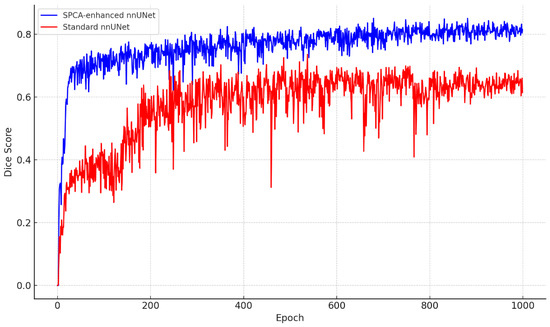

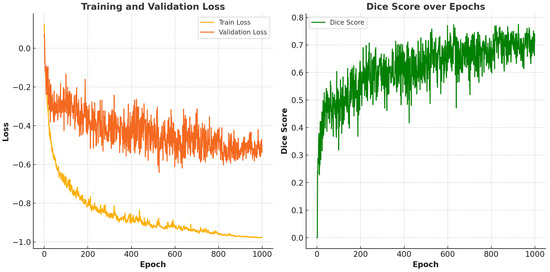

Figure 7 demonstrates the training and validation loss of SPCA-enhanced nnUNet and the Dice score comparison between SPCA-enhanced nnUNet and standard nnUNet.

Figure 7.

Performance comparison. Top plot demonstrates the training and validation loss of SPCA-enhanced nnUNet. Bottom plot demonstrates Dice comparison between SPCA-enhanced nnUNet and standard nnUNet.

4.1. Comparison with Standard nnUNet

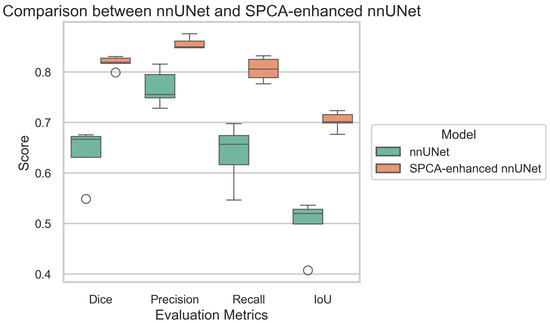

To compare the two models, we used the Dice coefficient, accuracy, precision, recall, and IoU. The results are listed in Table 1 and highlight the improvements achieved by SPCA-enhanced nnUNet. Figure 8 shows boxplots of four of these metrics (Dice, precision, recall, and IoU) for the baseline nnUNet and SPCA-enhanced nnUNet. SPCA-enhanced nnUNet achieves higher median scores than the baseline on all four metrics. The improvement is most pronounced in recall and IoU, where the enhanced model shows not only higher medians but also lower variance, indicating more stable and reliable performance. For Dice and precision, the gain is smaller, but SPCA-enhanced nnUNet still maintains a clear advantage. These results support the efficacy of integrating SPCA into nnUNet, with substantial gains in segmentation performance, especially in metrics that reflect sensitivity and spatial overlap.

Table 1.

Evaluation of segmentation accuracy comparing standard nnUNet and SPCA-enhanced nnUNet on pneumothorax segmentation in chest radiography. Bold indicates the top values.

Figure 8.

Comparison of evaluation metrics between standard nnUNet and SPCA-enhanced nnUNet. Green boxes show standard nnUNet, and orange boxes show SPCA-enhanced nnUNet.
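The boxplot comparison rests on per-case summary statistics: the median (the line in each box), the interquartile range (the box height), and the spread of the scores. A minimal sketch of these summaries, assuming one score per test case and metric, is given below; the function name is hypothetical and the values used to exercise it are toy numbers, not results from this study.

```python
import numpy as np


def summarize(scores: dict[str, np.ndarray]) -> dict[str, dict[str, float]]:
    """Median, interquartile range and sample variance of per-case scores for each metric."""
    out = {}
    for name, values in scores.items():
        v = np.asarray(values, dtype=float)
        q1, med, q3 = np.percentile(v, [25, 50, 75])  # linear interpolation between order statistics
        out[name] = {"median": float(med), "iqr": float(q3 - q1), "var": float(v.var(ddof=1))}
    return out
```

A model with a higher median and a smaller interquartile range, as described for recall and IoU, is both better on a typical case and more consistent across cases.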

4.2. Ablation Study

To evaluate the contribution of each component of the proposed framework, we conducted an ablation study in which key modules were systematically removed or modified. Specifically, we assessed the impact of the SPCA module and the CBAM block by comparing the performance of the resulting model variants. The ablation study results are shown in Table 2.

Table 2.

Ablation study of the SPCA and CBAM modules in SPCA-enhanced nnUNet. Bold indicates the top values.
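To make the ablated CBAM block concrete, the following is a minimal NumPy forward pass following the structure described by Woo et al. [24]: channel attention from average- and max-pooled descriptors passed through a shared MLP, followed by spatial attention from a convolution over channel-wise average and max maps. It is a sketch for a single (C, H, W) feature map with externally supplied weights; biases and normalization are omitted, and where the block sits inside nnUNet is not specified here.

```python
import numpy as np


def sigmoid(x: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-x))


def shared_mlp(v: np.ndarray, w0: np.ndarray, w1: np.ndarray) -> np.ndarray:
    """Two-layer MLP with ReLU, shared by both pooled descriptors (biases omitted)."""
    return w1 @ np.maximum(w0 @ v, 0.0)


def channel_attention(feat: np.ndarray, w0: np.ndarray, w1: np.ndarray) -> np.ndarray:
    """feat: (C, H, W); w0: (C // r, C); w1: (C, C // r). Returns weights of shape (C, 1, 1)."""
    avg = feat.mean(axis=(1, 2))  # global average pooling per channel
    mx = feat.max(axis=(1, 2))    # global max pooling per channel
    return sigmoid(shared_mlp(avg, w0, w1) + shared_mlp(mx, w0, w1))[:, None, None]


def spatial_attention(feat: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """feat: (C, H, W); kernel: (2, k, k) with k odd. Returns weights of shape (1, H, W)."""
    pooled = np.stack([feat.mean(axis=0), feat.max(axis=0)])  # (2, H, W) channel-wise pooling
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(pooled, ((0, 0), (pad, pad), (pad, pad)))  # zero padding keeps H x W
    _, h, w = pooled.shape
    out = np.empty((h, w))
    for i in range(h):
        for j in range(w):
            # single-output-channel k x k convolution (cross-correlation, as in DL frameworks)
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None]


def cbam(feat: np.ndarray, w0: np.ndarray, w1: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Sequential CBAM: channel attention first, then spatial attention on the refined map."""
    refined = feat * channel_attention(feat, w0, w1)
    return refined * spatial_attention(refined, kernel)
```

Because both attention maps are sigmoid outputs in [0, 1] and the output keeps the input shape, the block can be inserted after a convolutional stage without changing the rest of the network; removing it, as in the ablation, simply passes the unweighted features through.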

4.3. Comparison with Other Datasets

To evaluate generalization, we further applied our model to the public SIIM-ACR pneumothorax segmentation dataset [33]. SIIM-ACR was released for the SIIM-ACR Pneumothorax Segmentation challenge and supports both segmentation and classification tasks. It consists of 12,047 images with 2669 labeled pneumothorax objects. For this comparative study, we used 300 subjects from SIIM-ACR, allocating 216 for training, 54 for validation, and 30 for testing, with 5-fold cross-validation and 1000 epochs per fold. Results are shown in Figure 9 and Table 3.

Figure 9.

Performance of the proposed model on the SIIM-ACR dataset. The left panel shows the training and validation loss, and the right panel shows the Dice score over epochs.

Table 3.

Evaluation of segmentation accuracy on the SIIM-ACR dataset. Bold indicates the top values.

5. Discussion

5.1. Performance Evaluation

Compared with the standard nnUNet, SPCA-enhanced nnUNet improves pneumothorax segmentation in chest radiography on all metrics: Dice coefficient, accuracy, recall, IoU, and precision. The Dice coefficient, which measures the overlap between predicted and ground-truth regions, is substantially higher for the enhanced model, indicating better segmentation quality. The higher recall reflects the enhanced model's ability to identify pneumothorax regions while minimizing false negatives, and accuracy also improves, although it is dominated by correctly classified background pixels. The IoU increases markedly, indicating better spatial agreement between predicted and actual regions. Finally, precision, which reflects the model's ability to avoid false positives, is noticeably better in the SPCA-enhanced version. Overall, the SPCA enhancement improves segmentation performance on every metric, showing its effectiveness for pneumothorax segmentation on chest radiographs.

Segmentation performance was assessed primarily with the Dice score. As shown in Table 1, SPCA-enhanced nnUNet consistently outperformed standard nnUNet. We attribute this improvement to modifications in both data preprocessing and model structure, namely contrast enhancement and the combination of an attention mechanism with plain nnUNet. These modifications help SPCA-enhanced nnUNet capture finer pneumothorax details in chest radiographs, leading to more accurate pneumothorax segmentation.
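Contrast enhancement redistributes grey levels so that subtle intensity differences, such as the faint boundary of a pneumothorax, span a wider output range. The reference list includes contrast-limited adaptive histogram equalization (CLAHE) [27]; the exact enhancement used in SPCA is described in the Methods and is not reproduced here. The sketch below shows plain global histogram equalization for an 8-bit image, the simpler non-adaptive relative of CLAHE; CLAHE applies the same idea per tile with a clip limit on the histogram and interpolates between tiles. Library implementations of CLAHE exist, for example OpenCV's `cv2.createCLAHE` and scikit-image's `exposure.equalize_adapthist`.

```python
import numpy as np


def equalize_histogram(img: np.ndarray) -> np.ndarray:
    """Global histogram equalization of an 8-bit grayscale image."""
    if img.dtype != np.uint8:
        raise TypeError("expected a uint8 image")
    hist = np.bincount(img.ravel(), minlength=256)
    cdf = hist.cumsum()
    cdf_min = cdf[cdf > 0][0]  # CDF value at the darkest occupied grey level
    total = img.size
    if total == cdf_min:  # constant image: nothing to stretch
        return img.copy()
    # map each grey level v to round((cdf(v) - cdf_min) / (N - cdf_min) * 255)
    lut = np.round((cdf - cdf_min) / (total - cdf_min) * 255)
    lut = np.clip(lut, 0, 255).astype(np.uint8)  # unused levels below cdf_min clip to 0
    return lut[img]
```

For instance, an image containing only grey levels 50, 50, 100 and 200 is mapped to 0, 0, 128 and 255, stretching a narrow intensity band over the full 8-bit range.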

In terms of computational efficiency, we measured the training time and inference speed. While the improved nnUNet introduces additional model complexity due to its enhanced architecture, it maintains a reasonable balance between performance and efficiency. The training time of the improved model increased by approximately compared to the original nnUNet, which can be attributed to the increased depth and complexity of the network. However, the inference speed was found to be nearly identical between the two models, demonstrating that the performance gains did not significantly compromise runtime efficiency.
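Comparing inference speed between two models depends on how latency is measured. The following is a minimal timing sketch, assuming a hypothetical `predict` callable that runs one model on one input; it discards a few warm-up calls and reports the median per-image latency, which is less sensitive to outliers than the mean. When `predict` runs on a GPU with PyTorch, it should call `torch.cuda.synchronize()` before returning, because CUDA kernels execute asynchronously and the timer would otherwise stop early.

```python
import statistics
import time
from typing import Any, Callable


def time_inference(predict: Callable[[Any], Any], inputs: list, warmup: int = 3, repeats: int = 10) -> dict:
    """Median and spread of per-image wall-clock latency (seconds) for a predict function."""
    for x in inputs[:warmup]:
        predict(x)  # warm-up calls exclude one-off costs such as lazy initialization
    latencies = []
    for _ in range(repeats):
        for x in inputs:
            start = time.perf_counter()
            predict(x)
            latencies.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(latencies),
        "stdev_s": statistics.pstdev(latencies),
        "n": len(latencies),
    }
```

Running the same harness on the same inputs and hardware for both the standard and the SPCA-enhanced nnUNet gives a like-for-like latency comparison.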

The ablation study supports the effectiveness of the proposed SPCA-enhanced nnUNet for pneumothorax segmentation. The full model, incorporating both SPCA and CBAM, achieved the highest average Dice coefficient and IoU across the five folds. Removing the CBAM module caused a substantial performance degradation, with the average Dice dropping, which indicates the critical role of attention-based feature refinement. Removing the SPCA module also reduced performance, though to a lesser extent, demonstrating the complementary value of spatial prior information in guiding the precise localization of pneumothorax regions. Together, these findings validate the design of the proposed architecture and underscore the complementary contributions of SPCA and CBAM to segmentation accuracy and robustness.

When applied to the SIIM-ACR dataset, our model's Dice score was approximately 0.1 lower than on CANDID-PTX. This drop may be attributable to differences in data distribution, imaging protocols, or annotation standards between the datasets. The variation between datasets also highlights the challenge of generalizing models developed on a specific dataset to diverse clinical environments.

In the ConTEXTual Net paper [34], Huemann et al. evaluated their model on the CANDID-PTX dataset in a clinical setting and compared it with several state-of-the-art segmentation methods: Res50 U-Net (Avg Dice: 0.677) [35], GLoRIA (Avg Dice: 0.686) [36], Swin UNETR (Avg Dice: 0.670) [37], LAVT (Avg Dice: 0.706) [38], and ConTEXTual Net itself (Avg Dice: 0.716). SPCA-enhanced nnUNet achieves an average Dice of 0.81 on CANDID-PTX, higher than all of these reported values. Because the data splits differ (our experiments used a 300-subject subset), this comparison is indicative rather than a direct head-to-head evaluation, but it highlights the effectiveness of our model for pneumothorax segmentation.
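The size of these margins can be made explicit with a short calculation. The baseline values below are the average Dice scores quoted above from the ConTEXTual Net paper [34], and 0.81 is the average Dice of SPCA-enhanced nnUNet stated in the Abstract; the dictionary and function names are illustrative only, and the caveat about differing data splits still applies.

```python
# Average Dice on CANDID-PTX as reported in the ConTEXTual Net paper [34]
REPORTED_DICE = {
    "Res50 U-Net": 0.677,
    "GLoRIA": 0.686,
    "Swin UNETR": 0.670,
    "LAVT": 0.706,
    "ConTEXTual Net": 0.716,
}
SPCA_DICE = 0.81  # average Dice of SPCA-enhanced nnUNet (Abstract)


def dice_gains(ours: float, reported: dict[str, float]) -> dict[str, tuple[float, float]]:
    """Absolute gain and relative gain (%) of `ours` over each reported Dice."""
    return {name: (ours - d, 100.0 * (ours - d) / d) for name, d in reported.items()}


for name, (absolute, relative) in dice_gains(SPCA_DICE, REPORTED_DICE).items():
    print(f"{name:<15} +{absolute:.3f} (+{relative:.1f}%)")
```

The smallest margin is over ConTEXTual Net (+0.094 Dice, about 13.1% relative) and the largest is over Swin UNETR (+0.140, about 20.9% relative).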

5.2. Limitations

Apart from the supplementary SIIM-ACR experiment, this study evaluates SPCA-enhanced nnUNet mainly on a single dataset, CANDID-PTX, and only for pneumothorax. The model's ability to generalize to other datasets, imaging modalities, or pathologies therefore remains largely untested. Although the model achieves promising results on the chosen dataset, its effectiveness and robustness for different types of data and in diverse application scenarios have not been explored. This limitation raises concerns about how well the model will perform in other domains or tasks, where the features and patterns of the data may differ significantly.

Additionally, SPCA-enhanced nnUNet has not been evaluated on multimodal data, such as paired images and text, even though CANDID-PTX also includes the free-text reports written by physicians. Many clinical applications involve complex multimodal inputs, and a model restricted to a single modality may struggle to adapt to such environments. Huemann et al. proposed ConTEXTual Net [34], which uses the medical reports in CANDID-PTX as attention to indicate the location of pneumothorax regions, thereby improving segmentation accuracy. However, this approach has a logical issue in practical medical applications: in real-world scenarios, no pre-existing medical report is available to guide the deep learning model to specific areas, and if such a report were available, there would be no need to perform pneumothorax segmentation. Future work should therefore test the model across a variety of datasets from different domains and modalities to assess its adaptability and scalability. This would clarify its strengths and limitations and help ensure its applicability to a wider range of practical tasks. Furthermore, incorporating cross-modal data could provide more comprehensive insights, making the model more versatile and useful in clinical applications.

5.3. Discussion

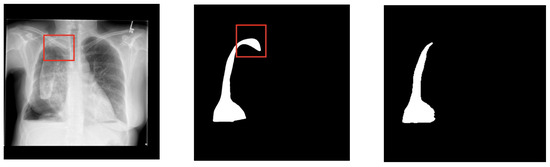

As illustrated in Figure 10, SPCA-enhanced nnUNet is trained on CANDID-PTX, which contains only 2D chest radiographs; this limits the model's ability to analyze and interpret spatial information in three dimensions.

Figure 10.

A sample comparison between the segmentation result and the ground truth, showing the original chest radiograph (left), the ground truth mask (middle), and the segmentation result (right). Red boxes mark a pneumothorax region that is difficult to detect.

As Figure 10 shows, SPCA-enhanced nnUNet still misses the pneumothorax region outlined in red. A chest radiograph is a 2D projection in which structures at different depths are superimposed, so the model cannot use the depth information that a 3D volume of the tissue or organ would provide. Consequently, pneumothoraces that lie at different depths, or that overlap with other structures along the beam path, are not adequately captured. When one structure partially obscures another in the projection, the model struggles to segment the obscured region, resulting in missed or incomplete segmentation. This is especially problematic in complex anatomy, where understanding the relationships between adjacent tissues or organs is crucial. The lack of 3D information also makes it harder for the model to distinguish between similar tissues or to identify boundaries that might be clearer in a 3D volume. This limitation can reduce segmentation accuracy, which matters in clinical applications where precise delineation of organs or pathologies is essential for diagnosis, treatment planning, and surgical procedures.
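The loss of depth can be illustrated with a toy model. The sketch below assumes an idealized parallel-beam projection in which each radiograph pixel is the line integral (here, a plain sum) of attenuation along depth, ignoring beam divergence and scatter. Two volumes whose only feature sits at different depths then produce identical 2D images, so no 2D model can recover where along the beam the feature lies.

```python
import numpy as np


def project(volume: np.ndarray) -> np.ndarray:
    """Idealized parallel-beam projection: sum attenuation along the depth axis (axis 0)."""
    return volume.sum(axis=0)


# Two toy volumes (depth, height, width) with the same feature placed at different depths.
vol_front = np.zeros((4, 3, 3))
vol_front[0, 1, 1] = 1.0
vol_back = np.zeros((4, 3, 3))
vol_back[3, 1, 1] = 1.0

# The projections are identical: depth is not recoverable from the 2D image alone.
same_projection = np.array_equal(project(vol_front), project(vol_back))
```

The same reasoning explains superimposition: a feature behind a dense structure only changes the summed value at that pixel, so its contrast is diluted by everything else along the same ray.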

Medical image segmentation models trained on limited or pseudo-labeled data may still be affected by label noise, especially in the absence of high-quality manual annotations. This noise can degrade model performance and reduce segmentation reliability in clinical applications. To address this limitation, recent research has proposed noise-robust learning strategies, such as BPT-PLR [39], a balanced partitioning and training framework with a pseudo-label relaxed contrastive loss, and progressive sample selection methods based on contrastive learning. Integrating such approaches into the training or fine-tuning of medical image segmentation models could further improve their robustness to noisy or imperfect supervision. This may be particularly beneficial in real-world scenarios where accurate medical image annotations are costly, time-consuming, or inconsistent across annotators. Future work may explore these strategies to improve performance and generalizability under practical constraints.

On the SIIM-ACR dataset, the model's Dice score was approximately 0.1 lower than on CANDID-PTX. This decline suggests that SIIM-ACR may be more challenging, or that its characteristics are less well matched to a configuration developed on CANDID-PTX. Despite the drop, the training curves in Figure 9 show consistent learning behavior, indicating reasonable generalization. Further improvement may require dataset-specific tuning or augmentation strategies to better adapt to the characteristics of new data.

6. Conclusions

In this study, we presented SPCA-enhanced nnUNet, an improved version of the nnUNet architecture designed specifically for pneumothorax segmentation in chest X-ray radiography. Pneumothorax, with its varied presentation and subtle radiographic features, is challenging to detect accurately and efficiently. The modifications made to nnUNet, including enhanced data augmentation strategies, optimized preprocessing techniques, and architectural refinements, substantially improved segmentation performance in terms of both accuracy and robustness.

The ablation study also confirms that integrating attention mechanisms into the model leads to notable performance improvements. By enabling the model to focus on the most informative regions of the image, attention enhances feature representation and supports more accurate segmentation. This highlights the important role of attention in improving both the precision and robustness of image-processing tasks.

In experiments on a publicly available pneumothorax dataset, our proposed model outperformed baseline methods, with notable improvements in the Dice score and other key evaluation metrics. Its results on SIIM-ACR, although lower than on CANDID-PTX, suggest a degree of generalization across datasets, which is relevant for deployment in clinical practice, where the variability of X-ray radiography is a significant concern.

These results underscore the efficacy of the SPCA-enhanced nnUNet as a reliable tool for the automated segmentation of pneumothorax in chest X-rays. The model holds promise for assisting radiologists in the early detection of pneumothorax, potentially reducing diagnostic time and improving patient outcomes. However, further optimization is still required to adapt the model to diverse clinical environments. Future work will focus on fine-tuning the model for broader applications and exploring its integration into real-world diagnostic systems.

By improving diagnostic accuracy and accelerating image interpretation, AI is playing an increasingly pivotal role in medical imaging. In the diagnosis of pneumothorax, an acute condition requiring rapid intervention, deep convolutional neural networks have shown robust performance in detecting subtle radiographic signs. The resulting segmentations can help physicians estimate pneumothorax volume and monitor disease progression.

However, despite these advances, AI remains a supportive tool rather than a replacement for human expertise [40]. The clinical interpretation of imaging findings still requires nuanced judgment. AI systems should, therefore, be viewed as augmentative technologies that empower radiologists and clinicians by reducing cognitive load, minimizing human error, and enabling more consistent and efficient diagnostic workflows.

Author Contributions

Conceptualization, supervision, funding acquisition, writing—original draft, and project administration: E.A.R.; software, validation, formal analysis, investigation, visualization, and data curation: Y.J.; methodology and writing—review and editing: Y.J. and E.A.R. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JST, PRESTO Grant Number JPMJPR23P7, Japan.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study is available from https://figshare.com/articles/dataset/CANDID-PTX/14173982 (accessed on 11 June 2025). A data use agreement and completion of an ethical training course may be required to access the data.

Acknowledgments

The authors would like to thank Sijing Feng from the Department of Radiology, Dunedin Hospital, Dunedin, New Zealand, for providing the CANDID-PTX dataset.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Abedalla, A.; Abdullah, M.; Al-Ayyoub, M.; Benkhelifa, E. Chest X-ray pneumothorax segmentation using U-Net with EfficientNet and ResNet architectures. PeerJ Comput. Sci. 2021, 7, e607.

- Baumann, M.; Noppen, M. Pneumothorax. Respirology 2004, 9, 157–164.

- Roberts, D.; Leigh-Smith, S.; Faris, P.; Blackmore, C.; Ball, C.; Robertson, H.; Dixon, E.; James, M.; Kirkpatrick, A.; Kortbeek, J.; et al. Clinical Presentation of Patients with Tension Pneumothorax: A Systematic Review. Ann. Surg. 2015, 261, 1068–1078.

- Sadikot, R.T.; Greene, T.; Meadows, K.; Arnold, A.G. Recurrence of primary spontaneous pneumothorax. Thorax 1997, 52, 805–809.

- Iqbal, T.; Shaukat, A.; Akram, M.U.; Mustansar, Z.; Khan, A. Automatic diagnosis of pneumothorax from chest radiographs: A systematic literature review. IEEE Access 2021, 9, 145817–145839.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Proceedings of the 18th International Conference, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Isensee, F.; Jaeger, P.F.; Kohl, S.A.A.; Petersen, J.; Maier-Hein, K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods 2021, 18, 203–211.

- Ma, J.; He, Y.; Li, F.; Han, L.; You, C.; Wang, B. Segment anything in medical images. Nat. Commun. 2024, 15, 654.

- Kirillov, A.; Mintun, E.; Ravi, N.; Mao, H.; Rolland, C.; Gustafson, L.; Xiao, T.; Whitehead, S.; Berg, A.C.; Lo, W.Y.; et al. Segment Anything. In Proceedings of the 2023 IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 3992–4003.

- Nouman, M.; Khoriba, G.; Rashed, E.A. Rethinking MedSAM: Performance Discrepancies in Clinical Applications. In Proceedings of the 2024 IEEE International Conference on Future Machine Learning and Data Science (FMLDS), Sydney, Australia, 20–23 November 2024; pp. 301–307.

- Liu, L.; Wolterink, J.M.; Brune, C.; Veldhuis, R.N.J. Anatomy-aided deep learning for medical image segmentation: A review. Phys. Med. Biol. 2021, 66, 11TR01.

- Wang, H.; Gu, H.; Qin, P.; Wang, J. CheXLocNet: Automatic localization of pneumothorax in chest radiographs using deep convolutional neural networks. PLoS ONE 2020, 15, e0242013.

- Wang, X.; Yang, S.; Lan, J.; Fang, Y.; He, J.; Wang, M.; Zhang, J.; Han, X. Automatic segmentation of pneumothorax in chest radiographs based on a two-stage deep learning method. IEEE Trans. Cogn. Dev. Syst. 2020, 14, 205–218.

- Lee, S.; Kim, E.K.; Han, K.; Ryu, L.; Lee, E.H.; Shin, H.J. Factors for increasing positive predictive value of pneumothorax detection on chest radiographs using artificial intelligence. Sci. Rep. 2024, 14, 19624.

- Feng, S.; Azzollini, D.; Kim, J.S.; Jin, C.K.; Gordon, S.P.; Yeoh, J.; Kim, E.; Han, M.; Lee, A.; Patel, A.; et al. Curation of the CANDID-PTX dataset with free-text reports. Radiol. Artif. Intell. 2021, 3, e210136.

- Hong, W.; Hwang, E.J.; Lee, J.H.; Park, J.; Goo, J.M.; Park, C.M. Deep Learning for Detecting Pneumothorax on Chest Radiographs after Needle Biopsy: Clinical Implementation. Radiology 2022, 303, 433–441.

- Tian, Y.; Wang, J.; Yang, W.; Wang, J.; Qian, D. Deep multi-instance transfer learning for pneumothorax classification in chest X-ray images. Med. Phys. 2022, 49, 231–243.

- Kamalakannan, N.; Macharla, S.R.; Kanimozhi, M.; Sudhakar, M.S. Exponential Pixelating Integral transform with dual fractal features for enhanced chest X-ray abnormality detection. Comput. Biol. Med. 2024, 182, 109093.

- Sae-Lim, W.; Wettayaprasit, W.; Suwannanon, R.; Cheewatanakornkul, S.; Aiyarak, P. Automated pneumothorax segmentation and quantification algorithm based on deep learning. Intell. Syst. Appl. 2024, 22, 200383.

- Yuan, H.; Hong, C.; Tran, N.; Xu, X.; Liu, N. Leveraging anatomical constraints with uncertainty for pneumothorax segmentation. Health Care Sci. 2024, 3, 456–474.

- Liu, Y.; Liang, P.; Liang, K.; Chang, Q. Automatic and efficient pneumothorax segmentation from CT images using EFA-Net with feature alignment function. Sci. Rep. 2023, 13, 15291.

- Li, X.; Thrall, J.H.; Digumarthy, S.R.; Kalra, M.K.; Pandharipande, P.V.; Zhang, B.; Nitiwarangkul, C.; Singh, R.; Khera, R.D.; Li, Q. Deep learning-enabled system for rapid pneumothorax screening on chest CT. Eur. J. Radiol. 2019, 120, 108692.

- Liang, P.; Chen, J.; Yao, L.; Yu, Y.; Liang, K.; Chang, Q. DAWTran: Dynamic adaptive windowing transformer network for pneumothorax segmentation with implicit feature alignment. Phys. Med. Biol. 2023, 68, 175020.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Computer Vision—ECCV 2018, Proceedings of the 15th European Conference, Munich, Germany, 8–14 September 2018; Part VII; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–19.

- Zhao, Z.; Chen, K.; Yamane, S. CBAM-Unet++: Easier to find the target with the attention module “CBAM”. In Proceedings of the 2021 IEEE 10th Global Conference on Consumer Electronics (GCCE), Kyoto, Japan, 12–15 October 2021; pp. 655–657.

- Jannat, M.; Birahim, S.; Hasan, M.; Roy, T.; Sultana, L.; Sarker, H.; Fairuz, S.; Abdallah, H. Lung Segmentation with Lightweight Convolutional Attention Residual U-Net. Diagnostics 2025, 15, 854.

- Pizer, S.; Johnston, R.; Ericksen, J.; Yankaskas, B.; Muller, K. Contrast-limited adaptive histogram equalization: Speed and effectiveness. In Proceedings of the [1990] First Conference on Visualization in Biomedical Computing, Atlanta, GA, USA, 22–25 May 1990; pp. 337–345.

- Kancherla, D.S.V.; Mannava, P.; Tallapureddy, S.; Chintala, V.; P, K.; Iwendi, C. Pneumothorax: Lung Segmentation and Disease Classification Using Deep Neural Networks. In Proceedings of the 2023 International Conference on Self Sustainable Artificial Intelligence Systems (ICSSAS), Erode, India, 18–20 October 2023; pp. 181–187.

- Drozdzal, M.; Vorontsov, E.; Chartrand, G.; Kadoury, S.; Pal, C. The importance of skip connections in biomedical image segmentation. In Deep Learning and Data Labeling for Medical Applications, Proceedings of the First International Workshop, LABELS 2016, and Second International Workshop, DLMIA 2016, Held in Conjunction with MICCAI 2016, Athens, Greece, 21 October 2016; Springer: Cham, Switzerland, 2016; pp. 179–187.

- Isensee, F.; Jaeger, P.; Full, P.; Wolf, I.; Engelhardt, S.; Maier-Hein, K. Automatic cardiac disease assessment on cine-MRI via time-series segmentation and domain specific features. In International Workshop on Statistical Atlases and Computational Models of the Heart, Proceedings of the 8th International Workshop, STACOM 2017, Held in Conjunction with MICCAI 2017, Quebec City, QC, Canada, 10–14 September 2017; Springer: Cham, Switzerland, 2017; pp. 120–129.

- Sudre, C.; Li, W.; Vercauteren, T.; Ourselin, S.; Cardoso, M. Generalised Dice overlap as a deep learning loss function for highly unbalanced segmentations. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support, Proceedings of the Third International Workshop, DLMIA 2017, and 7th International Workshop, ML-CDS 2017, Held in Conjunction with MICCAI 2017, Québec City, QC, Canada, 14 September 2017; Springer: Cham, Switzerland, 2017; pp. 240–248.

- Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- Zawacki, A.; Wu, C.; Shih, G.; Elliott, J.; Fomitchev, M.; Hussain, M.; Lakhani, P.; Culliton, P.; Bao, S. SIIM-ACR Pneumothorax Segmentation 2019. 2019. Available online: https://www.kaggle.com/competitions/siim-acr-pneumothorax-segmentation (accessed on 2 June 2025).

- Huemann, Z.; Tie, X.; Hu, J.; Bradshaw, T.J. ConTEXTual Net: A Multimodal Vision-Language Model for Segmentation of Pneumothorax. J. Digit. Imaging 2024, 37, 1652–1663.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015, arXiv:1512.03385.

- Huang, S.C.; Shen, L.; Lungren, M.P.; Yeung, S. GLoRIA: A Multimodal Global-Local Representation Learning Framework for Label-Efficient Medical Image Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3942–3951.

- Hatamizadeh, A.; Nath, V.; Tang, Y.; Yang, D.; Roth, H.; Xu, D. Swin UNETR: Swin Transformers for Semantic Segmentation of Brain Tumors in MRI Images. arXiv 2022, arXiv:2201.01266.

- Yang, Z.; Wang, J.; Tang, Y.; Chen, K.; Zhao, H.; Torr, P.H.S. LAVT: Language-Aware Vision Transformer for Referring Image Segmentation. arXiv 2022, arXiv:2112.02244.

- Zhang, Q.; Jin, G.; Zhu, Y.; Wei, H.; Chen, Q. BPT-PLR: A Balanced Partitioning and Training Framework with Pseudo-Label Relaxed Contrastive Loss for Noisy Label Learning. Entropy 2024, 26, 589.

- Rajpurkar, P.; Chen, E.; Banerjee, O.; Topol, E.J. AI in health and medicine. Nat. Med. 2022, 28, 31–38.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).