Abstract

The rapid advancement of deep forgery technology in recent years has produced highly deceptive face video content, posing significant security risks, and detecting these fakes is increasingly urgent and challenging. To improve the accuracy of deepfake face detection models and strengthen their resistance to adversarial attacks, this manuscript introduces a method for detecting forged faces and defending against adversarial attacks based on multi-feature decision fusion. The approach detects fake faces rapidly while effectively countering adversarial attacks. First, an improved IMTCCN network was employed to precisely extract facial features, complemented by a diffusion model for noise reduction and artifact removal. Next, the FG-TEFusionNet (Facial-geometry and Texture enhancement fusion-Net) model was developed for deepfake face detection and assessment. This model comprises two key modules: one extracts temporal features between video frames, and the other extracts spatial features within frames. In the first module, a facial landmark calibration module based on the LRNet baseline framework ensured an accurate representation of facial geometry, and a SENet attention mechanism was integrated into the dual-stream RNN to enhance its capability to extract inter-frame information and derive preliminary assessment results from inter-frame relationships. In the second module, a Gram image texture feature module was designed and integrated with EfficientNet and the attention maps of WSDAN (Weakly Supervised Data Augmentation Network) to extract deep-level feature information from the texture structure of image frames, addressing the limitations of purely geometric features. The final decisions of the two modules were integrated by voting to complete the deepfake face detection process.
Ultimately, the model’s robustness was validated by generating adversarial samples using the I-FGSM algorithm and optimizing model performance through adversarial training. Extensive experiments demonstrated the superior performance and effectiveness of the proposed method across four subsets of FaceForensics++ and the Celeb-DF dataset.

1. Introduction

Against the backdrop of booming social media and digital technologies, the rise of deep forgery techniques poses a serious threat to information security and social stability. Highly realistic synthesized images and audio have become a vanguard of disinformation and privacy invasion, challenging personal privacy and information security. With the advancement of deep learning, deep face forgery is becoming a new security challenge, and traditional detection methods are increasingly vulnerable to highly realistic deepfake images. In recent years in particular, advanced techniques such as Generative Adversarial Networks (GANs) [1] and Diffusion Models (DMs) [2] have made synthetic face images increasingly realistic and difficult to distinguish from real ones, which poses serious challenges for traditional detection methods. At the same time, adversarial attacks have given producers of forged images new tools and strategies, making forgeries even harder for existing detection systems to catch. There is therefore an urgent need for more efficient and robust deepfake face detection techniques to secure digital identity and privacy. Deepfake face detection is a key application in intelligent visual security surveillance, because it can identify and block attempts to evade surveillance systems with fake face images. Its key application areas include authentication and security, social media and online platforms, video forensics and investigations, and personal privacy protection. Fake face videos are not necessarily harmful in intent, and the underlying techniques have advanced video generation research across industries.
However, when such videos are maliciously used to disseminate false information, harass individuals, or defame celebrities, they attract significant attention on social platforms worldwide and undermine the credibility of digital media. As a result, fake face video detection has become a key challenge in AI security. The emergence of adversarial sample attacks has further raised the difficulty of detection: these attacks add tiny, almost imperceptible perturbations to the original image that nevertheless spoof deepfake detection models and distort their outputs to evade detection.

Currently, deepfake face video detection models mostly use either CNN methods that target facial features within each frame or CNN+LSTM methods that focus on features across frames. Xing et al. [3] proposed a deepfake face video detection model based on 3D CNNs that exploits inconsistencies in both the temporal-domain and spatial-domain features of deepfake face videos, achieving higher detection accuracy and robustness. Fu et al. [4] first revealed that the generalization challenges of deepfake detection stem not only from discrepancies between forgery methods but also from position bias (over-reliance on specific image regions) and content bias (exploitation of irrelevant information). They proposed a transformer-based feature-reorganization framework that eliminates these biases by rearranging and mixing tokens in the latent space, significantly enhancing cross-domain generalization across benchmarks. Siddiqui et al. [5] proposed integrating vision transformers with DenseNet-based neural feature extractors, achieving state-of-the-art vision transformer performance without relying on knowledge distillation or ensemble methods. Frank et al. [6] found that the discrete cosine transform (DCT) spectra of deepfake and real images differ significantly in the frequency domain. Sabir et al. [7] designed a framework that detects deepfake videos using inter-frame temporal information, achieving state-of-the-art performance at the time. Gu et al. [8] exploited spatiotemporal inconsistency in deepfakes to propose three modules, SIM, TIM, and ISM, which together form the STIL block, a plug-in module that can be inserted into a convolutional neural network to extract and fuse spatiotemporal information and output deepfake detection results. Kroiß et al. [9] implemented efficient synthetic/fake facial image detection using a pre-trained ResNet-50 with adapted fully connected output layers, trained via transfer learning and fine-tuning on their "Diverse Facial Forgery Dataset". R et al. [10] introduced TruthLens, a semantic-aware, interpretable deepfake detection framework that simultaneously authenticates images and provides granular explanations (e.g., "eye/nose authenticity"), addressing facial manipulation deepfakes through unified multi-scale forensic analysis validated in multi-dataset experiments. Cheng et al. [11] proposed an oriented progressive learning framework that redefines hybrid forgeries as pivotal anchors in the "real-to-deepfake" continuum. They implemented an Oriented Progressive Regularizer (OPR) to enforce discrete anchor distributions and a feature bridging module for smooth transition modeling, and extensive experiments demonstrated improved utilization of forgery information. Choi et al. [12] detected temporal anomalies in the style latent vectors of synthetic videos using StyleGRU and style attention modules, experimentally validating their cross-dataset robustness and the critical role of temporal dynamics in detection generalization. Cozzolino et al. [13] demonstrated the potential of pre-trained vision-language models (CLIP) for cross-architecture detection of AI-generated images with few-shot training, achieving state-of-the-art in-domain performance and a 13% robustness improvement with a lightweight detection strategy. VTD-Net [14] is a frame-based deepfake video identification method using CNN, Xception [13], and LSTM [15]: faces are extracted from video frames with a multi-task cascaded CNN, and the Xception network then learns features that distinguish real from fake faces. Coccomini et al. [16] further improved accuracy on deepfake identification tasks by incorporating a Vision Transformer [17]. Chen et al. [18] proposed DiffusionFake, which enhances detection generalization by reverse-engineering the forgery generation process: features are injected into a frozen pre-trained diffusion model to reconstruct the source and target images, which disentangles forgery features and improves cross-domain robustness. Zhao et al. [19] developed an Interpretable Spatiotemporal Video Transformer (ISTVT) featuring a novel decomposed spatiotemporal self-attention mechanism and a self-subtraction module that capture spatial artifacts and temporal inconsistencies for robust deepfake detection. MesoNet [20] determines whether content is forged by examining mesoscopic (mid-level) properties of the forged faces in a video, and it can automatically and efficiently detect videos forged with methods such as Deepfakes and Face2Face. Li et al. [21] proposed the Face X-ray model, which detects forgeries by locating the blending boundaries of swapped faces. Liu et al. [22] emphasized the importance of transferability, i.e., cross-dataset detection accuracy, and achieved the best transfer performance in forgery detection. Wang et al. [23] used a multiscale vision transformer to capture local inconsistencies in faces at different scales, showing strong robustness to compression and achieving significant results on mainstream datasets. Lu et al. [24] proposed a long-range attention mechanism that captures global semantic inconsistencies in forged samples, reducing model complexity while achieving good detection results.

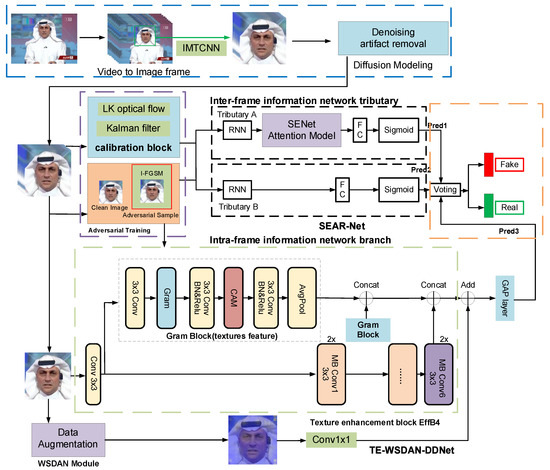

Therefore, this manuscript proposes an improved deepfake face detection method that addresses three weaknesses of existing deep-learning-based deepfake detectors: noise and artifacts in the input data, insufficient feature extraction capability for deepfake identification, and poor detection performance under adversarial sample attacks. The framework of the proposed deepfake face detection and adversarial attack defense method based on multi-feature decision fusion is shown in Figure 1. The method uses an improved IMTCCN to accurately extract faces and a diffusion model to denoise the forged face data and remove artifacts. It adds the SENet attention mechanism, WSDAN, and an image texture enhancement module to the FG-TEFusionNet network so that the deep neural network better captures deep information and texture features of faces in video frames. During training, it generates adversarial samples with the I-FGSM attack algorithm and then applies adversarial training for defense. Finally, the accuracy, robustness, and security of the method are analyzed on the experimental datasets.

Figure 1.

The framework of the deepfake face detection and adversarial attack defense method based on multi-feature decision fusion. It comprises four end-to-end stages: input preprocessing (denoising/artifact removal), inter-frame and intra-frame feature extraction, decision fusion, and adversarial attack and defense.

Figure 1 illustrates the overall framework and workflow of the proposed method, with each component linked to the main contributions; to enhance the method’s reproducibility and clarity, this paper adopted a four-stage processing workflow: (1) input preprocessing resizes raw video frames to 384 × 384 pixels and normalizes them, locates facial features via the IMTCCN network, and achieves denoising and artifact removal using a diffusion model; (2) the spatiotemporal feature extraction stage processes geometric and texture feature streams in parallel, where the geometric branch calibrates key points with LRNet and calculates inter-frame attention through SENet, while the texture branch extracts features via EfficientNet and detects spectral anomalies through the collaboration of Gram matrices and WSDAN modules; (3) adversarial optimization generates adversarial samples based on I-FGSM to update model weights, yielding preliminary inter-frame/intra-frame detection results; (4) decision fusion output produces frame-level authenticity predictions. This structured workflow complements the visualization in Figure 1 to ensure algorithmic transparency.
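The decision-fusion stage (4) can be illustrated with a minimal soft-voting sketch. The text only states that the two branch decisions are combined by voting. A plain majority vote between two voters can tie, so this sketch assumes that each branch outputs a probability that the frame is fake and averages the two with weights. The helper name `fuse_votes`, the equal default weights, and the 0.5 threshold are illustrative assumptions, not the paper's settings.

```python
from typing import Sequence


def fuse_votes(
    p_geometry: float,
    p_texture: float,
    weights: Sequence[float] = (0.5, 0.5),
    threshold: float = 0.5,
) -> int:
    """Weighted soft voting over two branch outputs (hypothetical helper).

    p_geometry: fake-probability from the inter-frame (geometry) branch.
    p_texture:  fake-probability from the intra-frame (texture) branch.
    Returns 1 for "fake" and 0 for "real".
    """
    if len(weights) != 2 or min(weights) < 0 or sum(weights) <= 0:
        raise ValueError("expected two non-negative weights with a positive sum")
    for p in (p_geometry, p_texture):
        if not 0.0 <= p <= 1.0:
            raise ValueError("branch probabilities must lie in [0, 1]")
    w_geo, w_tex = weights
    # Weighted average of the two branch probabilities (the soft vote).
    fused = (w_geo * p_geometry + w_tex * p_texture) / (w_geo + w_tex)
    return int(fused >= threshold)


# A confident geometry branch outweighs an uncertain texture branch.
print(fuse_votes(0.9, 0.2))              # prints 1 (fused = 0.55)
# Shifting weight toward the texture branch flips the decision.
print(fuse_votes(0.9, 0.2, (1.0, 3.0)))  # prints 0 (fused ≈ 0.375)
```

Soft voting keeps each branch's confidence, whereas hard voting discards it. Per-frame decisions could then be aggregated over a video in the same way.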

The main contributions of this manuscript are as follows:

(1) The IMTCCN architecture was systematically improved to achieve high-precision facial feature extraction, and a diffusion model was integrated to effectively suppress noise and artifacts. Qualitative evaluations and quantitative metrics jointly validate the effectiveness of this optimized framework.

(2) A novel multi-feature decision fusion model named FG-TEFusionNet is proposed for deepfake detection, which consists of two specialized modules: the SEAR-Net and the TE-WSDAN-DDNet. The SEAR-Net enhances inter-frame dependency modeling by integrating SENet attention mechanisms into a dual-stream RNN architecture based on the LRNet baseline, enabling preliminary predictions through frame-sequence correlation analysis. Simultaneously, the TE-WSDAN-DDNet embeds a Gram image texture module within the EfficientNet backbone, fusing feature maps from the Weakly Supervised Data Augmentation Network (WSDAN) to overcome geometric method limitations through deep texture pattern extraction. A voting mechanism synergistically combines geometric and textural features to generate final detection results, achieving state-of-the-art (SOTA) performance on the FaceForensics++ and Celeb-DF datasets.

(3) An adversarial training methodology was implemented to enhance defense robustness by incorporating I-FGSM-generated adversarial samples during training. The experimental results indicate that under adversarial training conditions, the success rate of adversarial attacks on the model significantly decreases, effectively improving the model’s detection accuracy.
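Contribution (3) can be made concrete with a sketch that applies I-FGSM to a toy logistic-regression "detector", chosen because its input gradient has a closed form. I-FGSM repeats the FGSM step $x_{t+1} = \mathrm{clip}_{x,\epsilon}(x_t + \alpha \cdot \mathrm{sign}(\nabla_x L))$ and projects the result back onto an $L_\infty$ ball of radius $\epsilon$ around the clean input. The toy model, the function names, and the values of `eps`, `alpha`, and `steps` are illustrative assumptions. In the actual method, the gradient would come from the detection network through automatic differentiation.

```python
import math
from typing import List, Sequence


def predict(x: Sequence[float], w: Sequence[float], b: float) -> float:
    """Toy detector: probability that input x is fake (label 1)."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))


def bce_loss(x: Sequence[float], w: Sequence[float], b: float, y: int) -> float:
    p = predict(x, w, b)
    return -(y * math.log(p) + (1 - y) * math.log(1 - p))


def input_gradient(x: Sequence[float], w: Sequence[float], b: float, y: int) -> List[float]:
    # For logistic regression with BCE loss, dL/dx = (p - y) * w.
    p = predict(x, w, b)
    return [(p - y) * wi for wi in w]


def i_fgsm(x, y, w, b, eps=8 / 255, alpha=2 / 255, steps=10) -> List[float]:
    """Iterative FGSM: repeated signed-gradient ascent on the loss, projected
    onto the L_inf ball of radius eps around x and onto the range [0, 1]."""
    x_adv = list(x)
    for _ in range(steps):
        grad = input_gradient(x_adv, w, b, y)
        # Step by alpha along the sign of the gradient to increase the loss.
        x_adv = [xa + alpha * ((g > 0) - (g < 0)) for xa, g in zip(x_adv, grad)]
        # Project back into [x - eps, x + eps], then into the valid pixel range.
        x_adv = [min(max(xa, xo - eps), xo + eps) for xa, xo in zip(x_adv, x)]
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


x, y = [0.5, 0.5, 0.5], 1  # a "fake" sample with label 1
w, b = [1.0, -2.0, 0.5], 0.0
x_adv = i_fgsm(x, y, w, b)
print(bce_loss(x, w, b, y) < bce_loss(x_adv, w, b, y))  # prints True: the loss increased
```

In adversarial training, samples such as `x_adv` are usually regenerated against the current weights during training and mixed into each batch with their original labels.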

2. Related Works

In computer vision and network security, deepfake face detection remains a fundamental challenge. In recent years, rapid advances in deep generative models have enabled the creation of highly realistic, nearly indistinguishable content, such as manipulated, forged, or synthesized images. This development has introduced new threats to information security and societal stability. Consequently, there is an urgent need for deepfake detection methods that offer high efficiency, precision, and robust security to mitigate the risks posed by this increasingly mature technology. Currently, deepfake detection is predominantly treated as a binary classification problem that focuses on subtle local cues of deepfake faces, such as minor variations in facial geometry, temporal consistency, and texture changes. Effective feature extraction and careful model design are crucial for detection accuracy. In this section, we provide a concise overview of prior research directly relevant to our work.

2.1. Deepfakes

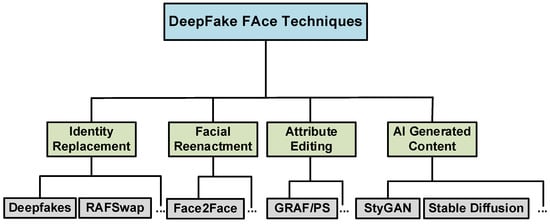

Current mainstream deepfake technologies primarily encompass face-swapping techniques such as FaceSwap [25] and Deepfakes [25], the use of generative adversarial networks (GANs) and diffusion models for generating entirely fake faces, and facial reenactment techniques based on facial expression transfer. Figure 2 provides a schematic classification of these deepfake face technologies. Identity replacement involves employing deep learning methods to substitute the facial shape and features of the source image with those of the target image. Facial reenactment is the process of achieving face forgery by altering facial expressions while maintaining the identity information of the face. Attribute editing entails modifying facial appearance attributes such as age, lips, and skin color. These three methods manipulate real faces. Another method generates realistic fake faces using GANs or DDPMs with labels or noise information. Facial forgery technology has significantly compromised individuals’ privacy and has even been used for illegal purposes, posing a considerable threat to social security.

Figure 2.

The classification diagram of deepfake technology.

2.2. Deepfake Detection

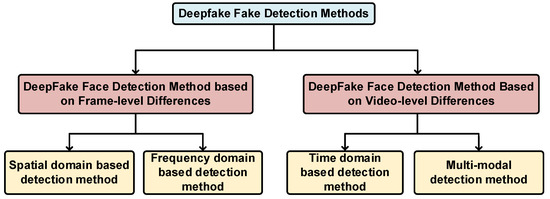

Traditional methods for detecting fake faces rely heavily on manually designed features and rules, and they perform poorly on complex deepfake videos. In recent years, deep-learning-based methods, which autonomously learn and extract features from images and videos, have become the mainstream for deepfake detection. This section categorizes deepfake video detection methods by the features and analysis they use. It distinguishes frame-level methods, which analyze information within individual frames, from video-level methods, which analyze information across frames, as shown in Figure 3.

Figure 3.

The classification diagram of deepfake detection methods.

In frame-level detection, much of the effort focuses on analyzing individual image frames to identify irregularities and flaws, typically using deep learning techniques to distinguish authentic images from manipulated ones. Zhao et al. [26] introduced an attention-mechanism-based approach that extracts artifact features from images and performs fine-grained classification, yielding promising detection results. Hu et al. [27] integrated convolutional attention modules that combine channel and spatial attention for detecting manipulated faces, using attention to sharpen the model's focus on crucial image details and improve its ability to discern inconsistencies around manipulated content. Li et al. [28] proposed an adaptive frequency feature generation module that extracts discriminative features from different frequency bands in a trainable manner, and they introduced the Single Center Loss (SCL) to enhance the separation between real and fake faces. Video-level detection builds on the intuition that videos, unlike images, inherently carry richer information, which makes temporal-feature-based deepfake detection especially appealing. Detection accuracy can be improved by exploiting the spatiotemporal cues embedded in video sequences. These methods commonly involve optical flow analysis of video frames, validation of motion consistency, and statistical examination of video duration to pinpoint anomalies and inconsistencies in deepfake videos. Zheng et al. [29] found that reducing the spatial kernel size of 3D convolutions to 1 while keeping the temporal kernel size unchanged strengthens the network's representation of temporal information, allowing it to detect temporal inconsistencies in forged videos. This approach performs well even against unknown forgery methods. Shao et al. [30] introduced a new research problem for multimodal fake media, termed Detecting and Grounding Multi-Modal Media Manipulation (DGM4). DGM4 aims not only to ascertain the authenticity of multimodal media but also to detect the manipulated content, thereby facilitating forgery identification and ownership authentication.

2.3. MTCNN Network Model

The Multi-Task Cascaded Convolutional Network (MTCNN) is a deep learning model widely used for face detection and alignment. It consists of three cascaded subnetworks that jointly perform three tasks: face/non-face classification, bounding box regression, and facial landmark localization. First, the Proposal Network (P-Net), a shallow convolutional network, generates candidate boxes together with face confidence scores, filtering potential face regions for the subsequent stages. Next, the Refine Network (R-Net) uses a deeper architecture to reject false candidates among those produced by P-Net and to refine the remaining boxes, improving the accuracy and stability of face detection. Finally, the Output Network (O-Net) performs the final bounding box refinement and facial landmark regression. It pinpoints facial key points and precisely adjusts the position and size of each face box, which yields accurate alignment and effective facial feature extraction. Through these three sequential subnetworks, MTCNN covers the full process from initial candidate generation to final face alignment and feature extraction. Its cascaded, end-to-end design enables rapid and accurate face detection even in complex environments, providing a dependable foundation for subsequent face recognition and analysis tasks, and its effective multitask design has made it a standard face detection and alignment model. In this paper, the face detection and alignment method was improved based on the MTCNN framework.
The network's receptive field was enlarged so that facial information in a scene is captured more accurately, providing more precise feature information for subsequent facial feature extraction.
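The cascade described above can be sketched in a few lines. In this sketch, each stage scores and refines the surviving candidate boxes, drops low-confidence ones, and merges overlaps with non-maximum suppression (NMS), which MTCNN applies between stages. The stage scorers stand in for P-Net, R-Net, and O-Net and are hypothetical callables. The thresholds in the usage example are illustrative assumptions rather than values from this paper.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
# A stage maps candidate boxes to (confidence, refined_box) pairs.
Stage = Callable[[List[Box]], List[Tuple[float, Box]]]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thr: float) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_thr for k in keep):
            keep.append(i)
    return keep


def cascade(candidates: List[Box], stages: List[Tuple[Stage, float, float]]) -> List[Box]:
    """Run the stages in order (P-Net -> R-Net -> O-Net in MTCNN terms).

    Each stage entry is (scorer, score_threshold, nms_iou_threshold).
    """
    boxes = list(candidates)
    for scorer, score_thr, iou_thr in stages:
        scored = [(s, box) for s, box in scorer(boxes) if s >= score_thr]
        if not scored:
            return []
        scores = [s for s, _ in scored]
        refined = [box for _, box in scored]
        boxes = [refined[i] for i in nms(refined, scores, iou_thr)]
    return boxes


def dummy(bs: List[Box]) -> List[Tuple[float, Box]]:
    """Stand-in for a real subnetwork: constant confidence, no box refinement."""
    return [(0.9, b) for b in bs]


cands = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
print(cascade(cands, [(dummy, 0.6, 0.5), (dummy, 0.7, 0.5), (dummy, 0.7, 0.3)]))
# prints [(0, 0, 10, 10), (20, 20, 30, 30)]
```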

2.4. SENet Channel Attention Mechanism

In deep learning models, the richness of the extracted features grows with the number of parameters, which can significantly improve accuracy and robustness. However, this parameter expansion can also cause information overload, which hurts training and real-world performance. To address this, this study introduces an attention mechanism. Attention lets the model quickly select high-value feature information, focusing on critical information while suppressing interference from irrelevant data. Typical attention mechanisms fall into three main types: channel attention, spatial attention, and temporal attention. They assign different weights to different parts of the input (channels, spatial positions, or time steps), allowing the model to concentrate on the most relevant parts when processing each element. Accordingly, this study incorporates SENet channel attention into the dual-stream RNN to enhance the intra-frame feature information that the network learns from forged videos. This also supports the effective collection of local features for deepfake detection, compelling the network to attend to diverse local information and thereby improving forgery detection accuracy.

SENet Attention Optimization Network Model. The fundamental concept of SENet involves the introduction of a lightweight attention module, which comprises three primary operations: Squeeze, Excitation, and Scale. The architecture of the SENet attention mechanism is illustrated in Figure 4.

Figure 4.

Structure of the SENet attention mechanism network.

SENet utilizes the Squeeze and Excitation operations to extract inter-channel relationships and recalibrate the convolutional input accordingly. This process involves extracting convolutional features between channels and using these features to adjust the feature maps produced by the original network, as detailed below.

The Squeeze operation is represented by the function $F_{sq}$ in Figure 4. It compresses each channel by global average pooling, reducing the channel's two-dimensional feature map to a single scalar. This scalar summarizes the overall response of the channel and thereby captures its global information. The Squeeze operation is given in Equation (1):

$$ z_c = F_{sq}(u_c) = \frac{1}{H \times W} \sum_{x=1}^{H} \sum_{y=1}^{W} u_c(x, y) \tag{1} $$

In Equation (1), $z_c$ is the result of the Squeeze operation for channel $c$; $u_c$ denotes the input feature map matrix of channel $c$; $H$ and $W$ are the height and width of the feature map; and $x$ and $y$ index the rows and columns of the input matrix.

The Excitation component is represented by $F_{ex}$ in Figure 4. It acts as a gating mechanism that generates a weight for each feature channel. The channel descriptor $z = (z_1, \dots, z_C)$ obtained from the Squeeze operation passes through two fully connected layers, the first followed by a ReLU activation and the second by a Sigmoid activation. This design lets the model learn the importance of each channel automatically. The Excitation operation is given in Equation (2):

$$ s = F_{ex}(z, W) = \sigma\left(W_2\, \delta(W_1 z)\right) \tag{2} $$

In Equation (2), $s$ is the result of the Excitation operation, $\delta$ is the ReLU activation function, $\sigma$ is the Sigmoid activation function, and $W_1$ and $W_2$ are the weights of the two fully connected layers.

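The Scale component is represented by $F_{scale}$ in Figure 4. It recalibrates the feature map by multiplying each original channel by the corresponding weight obtained from the Excitation operation, producing the weighted output features given in Equation (3), where $u_c$ is the $c$-th input channel and $s_c$ is the $c$-th element of the Excitation output:

$$ \tilde{x}_c = F_{scale}(u_c, s_c) = s_c \cdot u_c \tag{3} $$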
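Equations (1) to (3) can be traced on a tiny example with the pure-Python sketch below, which applies Squeeze, Excitation, and Scale to a single C × H × W feature map. Following Equation (2), the two fully connected layers are written as bias-free matrices $W_1$ (shape C/r × C) and $W_2$ (shape C × C/r). The reduction ratio r and the example weights are assumptions for illustration, not trained values.

```python
import math
from typing import List

Matrix = List[List[float]]
FeatureMap = List[Matrix]  # C x H x W


def squeeze(u: FeatureMap) -> List[float]:
    """Equation (1): global average pooling of each channel."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in u]


def matvec(m: Matrix, v: List[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def excitation(z: List[float], w1: Matrix, w2: Matrix) -> List[float]:
    """Equation (2): s = sigmoid(W2 · relu(W1 · z))."""
    hidden = [max(0.0, h) for h in matvec(w1, z)]                   # delta = ReLU
    return [1.0 / (1.0 + math.exp(-a)) for a in matvec(w2, hidden)]  # sigma


def scale(u: FeatureMap, s: List[float]) -> FeatureMap:
    """Equation (3): multiply every value of channel c by its weight s_c."""
    return [[[s_c * v for v in row] for row in ch] for ch, s_c in zip(u, s)]


def se_block(u: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    return scale(u, excitation(squeeze(u), w1, w2))


# C = 2 channels of size 2 x 2, reduction ratio r = 2 (W1 is 1 x 2, W2 is 2 x 1).
u = [[[1.0, 1.0], [1.0, 1.0]], [[3.0, 3.0], [3.0, 3.0]]]
w1 = [[1.0, 0.0]]
w2 = [[1.0], [-1.0]]
print(squeeze(u))                      # [1.0, 3.0]
print(excitation(squeeze(u), w1, w2))  # [sigmoid(1), sigmoid(-1)] ≈ [0.731, 0.269]
```

The channel weights lie in (0, 1), so the Scale step can only attenuate channels relative to one another. It does not add new information, which is why the block is lightweight.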

Within the SENet architecture, the input tensor is defined as $X \in \mathbb{R}^{B \times C \times T \times H \times W}$ (batch size $B$, channel dimension $C$, temporal frames $T$, spatial resolution $H \times W$). Global average pooling is first applied to extract the channel-wise statistics $z \in \mathbb{R}^{B \times T \times C}$. Two fully connected (FC) layers then generate the attention weight vectors: the first FC layer, with ReLU activation, models inter-channel dependencies, and the second, with Sigmoid activation, normalizes the attention weights. Crucially, the FC layer parameters are shared across the temporal dimension, which preserves temporal consistency while reducing model complexity.
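The temporal parameter sharing can be written out explicitly. This assumes the standard SENet bottleneck with reduction ratio $r$; the ratio used in this paper is not stated in this section. Under that assumption, each frame $t$ of sample $b$ is squeezed and excited with the same $W_1 \in \mathbb{R}^{(C/r) \times C}$ and $W_2 \in \mathbb{R}^{C \times (C/r)}$:

$$ z_{b,t,c} = \frac{1}{H \times W} \sum_{x=1}^{H} \sum_{y=1}^{W} X_{b,c,t}(x, y), \qquad s_{b,t} = \sigma\left(W_2\, \delta(W_1 z_{b,t})\right), \qquad \tilde{X}_{b,c,t} = s_{b,t,c}\, X_{b,c,t} $$

Because $W_1$ and $W_2$ do not depend on $t$, the FC layers hold $2C^2/r$ weights regardless of sequence length. Separate per-frame layers would need $T \cdot 2C^2/r$. With the illustrative values $C = 256$, $r = 16$, and $T = 32$, sharing gives $2 \cdot 256^2 / 16 = 8192$ weights instead of $32 \cdot 8192 = 262{,}144$.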

2.5. WSDAN Network Model

In practical deepfake face detection, random data augmentation often introduces uncontrollable noise and interference. This can hinder the model's learning, degrade feature extraction, and lead to suboptimal detection performance. In particular, random augmentation is inefficient for small objects and can introduce background noise. To address these problems, reference [31] proposed the Weakly Supervised Data Augmentation Network (WSDAN), which uses attention maps to guide data augmentation, strengthening both feature extraction and augmentation and thereby improving the accuracy and generalization of the detection model.

During training, the preprocessed image $I$ is fed into the weakly supervised network, whose Xception backbone produces the feature maps $F \in \mathbb{R}^{C \times H \times W}$. From these, $K$ attention maps $A_k \in \mathbb{R}^{H \times W}$ ($k = 1, \dots, K$) are derived. Following Ref. [31], features are extracted with Bilinear Attention Pooling (BAP). As shown in Equation (4), feature fusion multiplies the feature maps $F$ element-wise by each attention map $A_k$, yielding the part feature maps $F_k$. This element-wise multiplication lets the network selectively emphasize local regions, significantly enhancing its ability to capture subtle features. It also reduces the network's tendency to overfit irrelevant features, so the resulting feature matrix contains fewer disruptive elements and gives more precise feature descriptions.

$$ F_k = A_k \odot F, \quad k = 1, \dots, K \tag{4} $$

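According to Equation (5), the feature $f_k$ of the $k$-th attention region is obtained by applying Global Average Pooling (GAP), denoted $g(\cdot)$, to the product of the $k$-th attention map $A_k$ and the feature maps $F$, where $\odot$ represents element-wise multiplication. The attention feature matrix $M$ is then constructed by stacking the $K$ region features, as shown in Equation (6).

$$ f_k = g(A_k \odot F), \quad k = 1, \dots, K \tag{5} $$

$$ M = \begin{pmatrix} f_1 \\ f_2 \\ \vdots \\ f_K \end{pmatrix} = \begin{pmatrix} g(A_1 \odot F) \\ g(A_2 \odot F) \\ \vdots \\ g(A_K \odot F) \end{pmatrix} \tag{6} $$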
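Equations (4) to (6) reduce to a few lines of array arithmetic. The pure-Python sketch below computes the attention feature matrix M for one image from C feature maps and K attention maps, each of size H × W. The function names are hypothetical, and any further normalization that an implementation might apply to M is omitted.

```python
from typing import List

Map = List[List[float]]  # H x W


def gap(m: Map) -> float:
    """Global average pooling g(.) over a single H x W map."""
    return sum(sum(row) for row in m) / (len(m) * len(m[0]))


def hadamard(a: Map, b: Map) -> Map:
    """Element-wise product a ⊙ b of two maps of equal size."""
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def bilinear_attention_pooling(features: List[Map], attentions: List[Map]) -> List[List[float]]:
    """Return the K x C matrix M whose k-th row is f_k = g(A_k ⊙ F),
    with g applied channel by channel (Equations (4)-(6))."""
    return [[gap(hadamard(a_k, f_c)) for f_c in features] for a_k in attentions]


F = [[[1.0, 2.0], [3.0, 4.0]],   # channel 0
     [[1.0, 1.0], [1.0, 1.0]]]   # channel 1
A = [[[1.0, 0.0], [0.0, 0.0]],   # attention map 1: top-left cell
     [[0.0, 0.0], [0.0, 1.0]]]   # attention map 2: bottom-right cell
print(bilinear_attention_pooling(F, A))  # [[0.25, 0.25], [1.0, 0.25]]
```

Each row of M is a C-dimensional descriptor of one attended part. Flattening M gives a fixed-length K·C vector for the classifier, whatever the input resolution.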

For the training images, an attention normalization method was devised following reference [31]. It normalizes each attention map $A_k$ according to Equation (7) and uses the normalized maps to guide data augmentation. This directs augmentation toward the most important areas of the image and gives more useful guidance for model training. Empirical evidence [31] shows that, during data augmentation, this attention normalization strategy improves the model's ability to learn and discern critical features.

$$ A_k^{*} = \frac{A_k - \min(A_k)}{\max(A_k) - \min(A_k)} \tag{7} $$
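The normalization of Equation (7) and its use in guiding augmentation can be sketched as follows. In WSDAN-style attention cropping, the normalized map is thresholded to find the region to enlarge. Attention dropping erases the most strongly attended region so that the network is pushed toward other discriminative parts. The threshold values and function names here are illustrative assumptions.

```python
from typing import List, Optional, Tuple

Map = List[List[float]]


def normalize_attention(a: Map) -> Map:
    """Equation (7): min-max normalize an attention map to [0, 1]."""
    lo = min(min(row) for row in a)
    hi = max(max(row) for row in a)
    if hi == lo:  # flat map: no cell is more salient than any other
        return [[0.0 for _ in row] for row in a]
    return [[(v - lo) / (hi - lo) for v in row] for row in a]


def crop_box(a_norm: Map, theta_c: float) -> Optional[Tuple[int, int, int, int]]:
    """Inclusive bounding box (row_min, col_min, row_max, col_max) of cells above theta_c."""
    cells = [(i, j) for i, row in enumerate(a_norm) for j, v in enumerate(row) if v > theta_c]
    if not cells:
        return None
    rows = [i for i, _ in cells]
    cols = [j for _, j in cells]
    return min(rows), min(cols), max(rows), max(cols)


def drop_mask(a_norm: Map, theta_d: float) -> Map:
    """Mask that zeroes out (drops) cells whose normalized attention exceeds theta_d."""
    return [[0.0 if v > theta_d else 1.0 for v in row] for row in a_norm]


a = [[0.0, 2.0, 4.0],
     [1.0, 8.0, 3.0],
     [0.0, 0.0, 0.0]]
a_norm = normalize_attention(a)
print(crop_box(a_norm, theta_c=0.3))    # (0, 1, 1, 2)
print(drop_mask(a_norm, theta_d=0.45))  # [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0], [1.0, 1.0, 1.0]]
```

Normalizing first makes a single threshold meaningful across images whose raw attention magnitudes differ.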

3. Multi-Feature Extraction

3.1. Extraction of Facial Geometric Features Based on Inter-Frame Information

In deepfake detection, the crucial task is to distinguish genuine facial images from synthesized deepfake counterparts by extracting and analyzing facial geometric features such as the contours, eyes, nose, and mouth structure. Researchers typically approach this task by analyzing temporal features across video frames, but such features are vulnerable to noise and jitter. There is therefore a pressing need to explore temporal features specific to manipulated facial videos to improve the accuracy and resilience of deepfake detection. This paper adopts LRNet (Landmark Recurrent Network), the model proposed by Sun et al. [32], as the foundation for extracting geometric features. LRNet uses the geometric attributes of facial landmarks as discriminative features and introduces an effective landmark calibration module that refines the precision of facial landmarks in video frames. This module mitigates the noise and jitter caused by video motion or compression artifacts, ensuring that the landmarks fed into the subsequent feature extraction networks have high geometric fidelity. This study identifies and improves upon the limitations of the LRNet model.
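The jitter-suppression idea can be illustrated with a deliberately simple stand-in: a scalar Kalman filter with a random-walk (constant-position) motion model, run independently on the x and y coordinates of every landmark track. This is not the LRNet calibration module itself, only a minimal sketch of temporal landmark smoothing. The noise variances `q` and `r` and the helper names are assumptions.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def kalman_1d(z: Sequence[float], q: float = 1e-3, r: float = 1e-1) -> List[float]:
    """Filter one landmark coordinate over time.

    q: process-noise variance (how far the true position may drift per frame).
    r: measurement-noise variance (how noisy the detected landmark is).
    """
    if not z:
        return []
    x, p = z[0], 1.0          # initial state estimate and its variance
    out = [x]
    for meas in z[1:]:
        p += q                # predict: position unchanged, uncertainty grows
        k = p / (p + r)       # Kalman gain
        x += k * (meas - x)   # correct toward the new measurement
        p *= 1.0 - k          # uncertainty shrinks after the update
        out.append(x)
    return out


def smooth_track(frames: Sequence[Sequence[Point]]) -> List[List[Point]]:
    """frames[t][l] is landmark l in frame t; each coordinate is filtered separately."""
    n_frames, n_marks = len(frames), len(frames[0])
    xs = [kalman_1d([f[l][0] for f in frames]) for l in range(n_marks)]
    ys = [kalman_1d([f[l][1] for f in frames]) for l in range(n_marks)]
    return [[(xs[l][t], ys[l][t]) for l in range(n_marks)] for t in range(n_frames)]


def mean_abs_step(seq: Sequence[float]) -> float:
    """Average frame-to-frame displacement, a simple jitter measure."""
    return sum(abs(b - a) for a, b in zip(seq, seq[1:])) / (len(seq) - 1)


# A static landmark at (10, 20) observed with alternating +/-1 pixel jitter.
raw = [[(10.0 + (-1) ** t, 20.0 - (-1) ** t)] for t in range(50)]
smoothed = smooth_track(raw)
raw_x = [f[0][0] for f in raw]
sm_x = [f[0][0] for f in smoothed]
print(mean_abs_step(sm_x) < mean_abs_step(raw_x))  # prints True: jitter is reduced
```

A random-walk model suits nearly static landmarks. For fast head motion, a constant-velocity state, or optical-flow-guided prediction, would lag less.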

3.2. Extraction of Image Texture Information Based on Intra-Frame Information

Deepfake-generated synthetic media achieves visual realism through localized texture manipulation in facial regions. This methodology has gained prominence due to its effectiveness in enhancing visual authenticity through detailed feature reproduction, including micro-expressions, skin texture variations, and lighting effects. Conventional detection approaches typically employ direct texture analysis through comparative evaluation of pixel values and localized texture patterns. However, these techniques are frequently challenged by advanced generation methods that employ region-specific texture modifications capable of deceiving traditional analytical frameworks. Current detection systems demonstrate limited robustness when confronted with sophisticated manipulation algorithms that maintain structural consistency while altering subtle textural properties.

To address this limitation, we introduce the Gram matrix, which Gatys et al. [33] proposed as a descriptor of image texture, to capture the global texture features of an image. Traditional methods analyze the texture of individual local regions. The Gram matrix instead gives a more abstract and comprehensive description of the image: it computes the correlations between different feature channels, aggregated over all spatial positions. These are the (uncentered) second-order statistics of the feature maps, and they capture the overall texture structure of the image in a broad spatial context. This global texture representation is more effective for detecting deepfakes because, even when local textures are finely adjusted, the overall texture structure and relationships retain a degree of stability and distinctiveness. Furthermore, convolutional neural networks often fail to capture long-range information because of their limited receptive fields, which reduces their performance on lower-resolution images. Adding an auxiliary network to gather texture information strengthens the capture of long-range information and thereby improves the model's generalization. The Gram matrix is defined in Equation (8).

$$ G(\alpha_1, \dots, \alpha_k) = \begin{pmatrix} \langle \alpha_1, \alpha_1 \rangle & \langle \alpha_1, \alpha_2 \rangle & \cdots & \langle \alpha_1, \alpha_k \rangle \\ \langle \alpha_2, \alpha_1 \rangle & \langle \alpha_2, \alpha_2 \rangle & \cdots & \langle \alpha_2, \alpha_k \rangle \\ \vdots & \vdots & \ddots & \vdots \\ \langle \alpha_k, \alpha_1 \rangle & \langle \alpha_k, \alpha_2 \rangle & \cdots & \langle \alpha_k, \alpha_k \rangle \end{pmatrix} \tag{8} $$

Equation (8) defines the Gram matrix of k vectors α_1, ..., α_k in an n-dimensional Euclidean space as the matrix of their pairwise inner products. This paper uses the shallow and deep stages of the EfficientNet backbone to extract global texture features of images. Computing the Gram matrix from the feature vectors reveals the hidden relationships between image features; the calculation is given in Equation (9). The input tensor is standardized to the shape (B, C, H, W), where B denotes the batch size, C the number of channels, and H and W the spatial dimensions. For the Gram matrix operation, each channel of the intermediate feature map is reshaped into a two-dimensional row vector of size (1, H × W), while the input tensor itself retains the shape (B, C, H, W).

$$G^{l}_{ij} = \sum_{k} F^{l}_{ik} F^{l}_{jk} \tag{9}$$

In Equation (9), F^l denotes the feature map at layer l, and F^l_{ik} is the k-th element of the i-th feature map at that layer. Each element of a feature map indicates the intensity of a particular feature. Because G^l_{ij} sums over every position k of the feature map, it is not constrained by the receptive field of the convolutional neural network, which allows the Gram matrix to capture long-range texture information within images. The Gram matrix reflects the pairwise correlations between feature intensities: diagonal elements represent the responses of individual filters, and off-diagonal elements represent the relationships between different filters. With the input to the Gram matrix computation standardized to (B, C, H × W), the output tensor has the dimensions (B, C × C). This transformation is achieved by (1) flattening the spatial dimensions (H, W) into H × W and (2) computing inter-channel covariance features with the Gram matrix to produce cross-channel correlation matrices. Gram matrices are computed frame by frame to preserve temporal dynamics. Compared with direct feature mapping, the Gram matrix encodes cross-channel texture statistics, which makes it more sensitive to the subtle spatiotemporal inconsistencies of generated deepfakes and provides an interpretable physical basis for detection.
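The reshaping and inner-product steps of Equation (9) can be sketched in NumPy as follows. This is a minimal illustration, not the authors’ implementation: the function name `gram_matrix` and the optional division by H × W (so that the scale does not depend on resolution) are assumptions, and a real network would compute this on backbone feature maps inside an autograd framework.

```python
import numpy as np

def gram_matrix(features: np.ndarray, normalize: bool = True) -> np.ndarray:
    """Per-sample Gram matrix of a (B, C, H, W) feature tensor.

    Returns a (B, C, C) array whose (i, j) entry is the inner product of
    channel i and channel j over all H*W spatial positions (Equation (9)).
    """
    b, c, h, w = features.shape
    # Step 1: flatten the spatial dimensions, (B, C, H, W) -> (B, C, H*W)
    flat = features.reshape(b, c, h * w)
    # Step 2: inner products between every pair of channels -> (B, C, C)
    gram = np.einsum("bik,bjk->bij", flat, flat)
    if normalize:
        # Assumption: divide by H*W so the values do not grow with resolution
        gram = gram / (h * w)
    return gram

# Frame-wise use: each video frame is one batch element.
frames = np.random.default_rng(0).standard_normal((4, 8, 16, 16))
g = gram_matrix(frames)
print(g.shape)                 # (4, 8, 8)
print(g.reshape(4, -1).shape)  # (4, 64), i.e. (B, C x C)
```

Because every entry sums over all H × W positions, the result is independent of where in the frame a texture occurs, which is the property the text relies on for long-range texture information.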

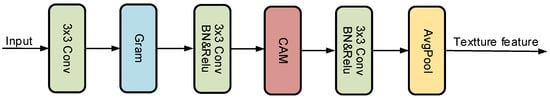

For model construction, a Gram matrix-based image texture enhancement module is introduced to compute image texture information. The module helps the neural network capture texture features and thereby improves its texture extraction capability. The structure of the Gram image texture enhancement module is depicted in Figure 5.

Figure 5.

Structure of the Gram image texture enhancement module.

In the module illustrated in Figure 5, features are first extracted by a 3 × 3 convolutional layer, followed by a Gram matrix calculation layer that captures global texture features. A channel attention mechanism is then placed between the next two 3 × 3 convolutional layers and the subsequent normalization and activation blocks, strengthening the image representation and further refining the texture features. Finally, a global average pooling layer makes the output dimensions consistent with the backbone network. The texture features extracted at different levels of the backbone are then fused (by concatenation, as described in Section 4.3.2) to obtain comprehensive texture information across network layers. This helps the model capture the overall texture structure and the relationships between texture elements.

4. Construction and Optimization for the Deepfake Detection Model

4.1. Construction of the Improved MTCNN-Based Model

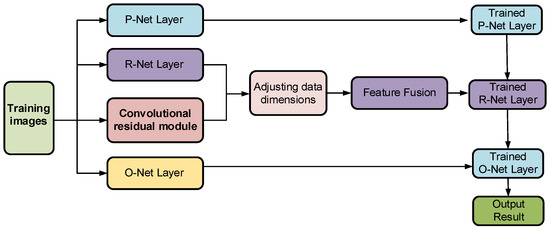

MTCNN, a multi-task cascaded convolutional network, has advanced face detection and alignment, but it has limitations. In complex scenarios such as uneven lighting or large pose variations, its detection accuracy drops, and its high computational complexity and slow speed limit the processing of large data volumes. To address these issues, we improve MTCNN. The improved IMTCNN structure (Figure 6) adds a convolutional residual module to the R-Net stage, together with transposed convolution and max-pooling layers that adjust feature dimensions so that the features can be fused. These changes increase network depth and non-linearity and expand the receptive field, improving both detection accuracy and adaptability.

Figure 6.

Structure of the improved MTCNN model.

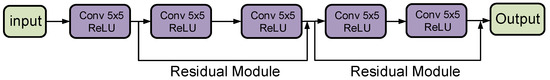

The structure of the convolutional residual module proposed in this paper is illustrated in Figure 7. This module comprises a 5 × 5 convolutional kernel and two serially connected residual blocks, each containing a 5 × 5 convolutional kernel and a ReLU activation function. By employing larger convolutional kernels, the receptive field is expanded, allowing the network to accurately capture facial information in images while mitigating the effects of external environmental factors. The stacking of residual modules facilitates deeper network layers, enhancing training stability and improving detection performance.
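The receptive-field argument can be made concrete with the standard formula for a plain stack of convolutions. This sketch assumes stride 1 and no dilation, which the text does not state; with a residual connection the largest receptive field is set by the longest path, here the 5 × 5 convolution followed by the two 5 × 5 residual convolutions. The helper name `receptive_field` is hypothetical.

```python
def receptive_field(kernel_sizes, strides=None):
    """Receptive field (in input pixels) of a stack of conv layers, no dilation."""
    strides = strides or [1] * len(kernel_sizes)
    rf, jump = 1, 1
    for k, s in zip(kernel_sizes, strides):
        rf += (k - 1) * jump  # each layer widens the field by (k - 1) input-pixel steps
        jump *= s             # spacing of adjacent outputs, measured in input pixels
    return rf

# Longest path of the module: one 5x5 conv + two residual blocks with one 5x5 conv each
print(receptive_field([5, 5, 5]))  # 13
# The same depth built from 3x3 kernels, for comparison
print(receptive_field([3, 3, 3]))  # 7
```

Under these assumptions the 5 × 5 design roughly doubles the receptive field of an equally deep 3 × 3 stack, which is the effect the paragraph attributes to the larger kernels.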

Figure 7.

Structure of the convolutional residual module.

4.2. Noise Reduction and De-Artifacting Using the Diffusion Model

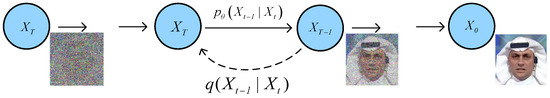

In image processing, diffusion models are widely adopted generative methods that model how noise diffuses through the pixels of images or videos in order to learn how images are generated. The core idea treats data generation as a Markov chain: a forward process gradually transforms the complex data distribution into a simple Gaussian distribution, and a learned reverse process undoes this transformation to generate new data points, which also enables denoising and artifact removal. Traditional denoising methods, such as those based on wavelet transforms or conventional low-pass filters, are effective in some scenarios but often fail on intricate noise and artifacts because they cannot capture high-order correlations between pixels. This paper therefore introduces a deep-learning-based diffusion model to learn the complex diffusion relationships within images and improve image processing quality. The diffusion model is depicted in Figure 8.

Figure 8.

Directed graphical modeling diagram for diffusion models.

As illustrated in Figure 8, the forward diffusion process q(x_t | x_{t-1}) gradually adds noise to the image. Conversely, the reverse diffusion process p_θ(x_{t-1} | x_t) denoises and restores the image or generates new images. Because the diffusion model learns to generate high-quality samples from noise, it performs strongly both in generating diverse, high-fidelity data and in image denoising and artifact removal.

The diffusion model in Figure 8 uses a cosine noise schedule with T = 1000 diffusion steps and a fully trained model to balance generation efficiency and quality. The denoising network is a pre-trained U-Net with an encoder–decoder structure; during reverse denoising, its multi-scale feature fusion and skip connections capture both global structure and local detail. This design improves noise prediction accuracy and generation speed across the progressive denoising steps, and in our experiments it enhanced detection sensitivity and the fidelity of synthetic data for forgery detection.

In image denoising, the diffusion model treats noisy data as the result of a partial forward diffusion process and, through training, learns to reverse this process, thereby achieving denoising. De-artifacting, meanwhile, removes unnatural signals or structures introduced during data acquisition and processing. The diffusion model is formulated below as a forward process followed by a reverse process.

The forward diffusion steps apply a linear scaling followed by additive Gaussian noise, as shown in Equation (10).

$$q(x_t \mid x_{t-1}) = \mathcal{N}\left(x_t;\ \sqrt{1-\beta_t}\, x_{t-1},\ \beta_t \mathbf{I}\right) \tag{10}$$

In Equation (10), β_t denotes the noise scale at time step t and regulates the amount of noise added; I is the identity matrix, which makes the noise isotropic; and N(·) denotes the Gaussian transition distribution. Sampling from this transition with Gaussian noise ε_{t-1} ~ N(0, I) gives the noisy sample x_t in Equation (11).

$$x_t = \sqrt{1-\beta_t}\, x_{t-1} + \sqrt{\beta_t}\, \epsilon_{t-1} \tag{11}$$

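Equation (11) implies that as t progresses, β_t increases gradually until x_T closely approximates pure Gaussian noise. Applying Equation (11) recursively gives the closed form in Equation (12).

$$x_t = \sqrt{\bar{\alpha}_t}\, x_0 + \sqrt{1-\bar{\alpha}_t}\, \epsilon \tag{12}$$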

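In Equation (12), α_t = 1 - β_t and ᾱ_t = ∏_{s=1}^{t} α_s. Here, x_0 is the original image before noise is added, x_t is the noised image at step t (x_T is fully diffused), and ε ~ N(0, I) is Gaussian noise. The reverse step of the diffusion model is formulated as follows:

$$x_{t-1} = \frac{1}{\sqrt{\alpha_t}}\left(x_t - \frac{\beta_t}{\sqrt{1-\bar{\alpha}_t}}\, \epsilon_\theta(x_t, t)\right) + \sigma_t z \tag{13}$$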
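Equations (10) to (12) can be sketched numerically. The text names a cosine schedule with 1000 steps but not its formula; this sketch assumes the cosine schedule of Nichol and Dhariwal (2021) with their default offset s = 0.008 and their clipping of β_t at 0.999. The names `cosine_schedule` and `q_sample` are hypothetical.

```python
import numpy as np

def cosine_schedule(T=1000, s=0.008):
    """Assumed cosine schedule; returns (betas, alpha_bar), each of shape (T,)."""
    t = np.arange(T + 1)
    f = np.cos((t / T + s) / (1 + s) * np.pi / 2) ** 2
    # beta_t from consecutive ratios f(t) / f(t-1), clipped for numerical stability
    betas = np.clip(1 - f[1:] / f[:-1], 0.0, 0.999)
    alpha_bar = np.cumprod(1 - betas)  # alpha_bar_t = prod_{s<=t} (1 - beta_s)
    return betas, alpha_bar

def q_sample(x0, t, alpha_bar, rng):
    """Draw x_t directly from x_0 with Equation (12); t is 1-based (1..T)."""
    eps = rng.standard_normal(x0.shape)
    ab = alpha_bar[t - 1]
    return np.sqrt(ab) * x0 + np.sqrt(1 - ab) * eps, eps

rng = np.random.default_rng(0)
betas, alpha_bar = cosine_schedule()
x0 = rng.standard_normal((3, 8, 8))  # stand-in for a normalized face crop
x_mid, _ = q_sample(x0, 500, alpha_bar, rng)
x_end, eps_end = q_sample(x0, 1000, alpha_bar, rng)  # almost pure noise
```

The closed form in Equation (12) is what makes training practical: any step t can be sampled in one operation instead of applying Equation (11) t times.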

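In Equation (13), x_{t-1} is the denoised image, x_t is the noisy image, ε_θ(x_t, t) is a network that approximates the total noise ε between x_0 and x_t, and σ_t z with z ~ N(0, I) is the sampling noise (omitted at the final step). Training fits ε_θ to the noise added in Equation (12), and the noise predictor is related to the score function of the diffusion model as shown in Equation (14).

$$s_\theta(x_t, t) = -\frac{\epsilon_\theta(x_t, t)}{\sqrt{1-\bar{\alpha}_t}} \approx \nabla_{x_t} \log q(x_t) \tag{14}$$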

In Equation (14), s_θ and ε_θ are used interchangeably to denote the diffusion model.
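The reverse step and the score relation can be sketched as follows. The noise predictor ε_θ would be the pre-trained U-Net; here an "oracle" that returns the true noise stands in for it, purely to check the algebra. The choice σ_t² = β_t is one of the standard DDPM options and is an assumption, as are the helper names.

```python
import numpy as np

def predict_x0(x_t, eps_pred, alpha_bar_t):
    """Invert Equation (12) using a noise estimate eps_pred ~ eps_theta(x_t, t)."""
    return (x_t - np.sqrt(1 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_bar_t)

def reverse_step(x_t, eps_pred, beta_t, alpha_bar_t, rng, last=False):
    """One ancestral step x_t -> x_{t-1} (Equation (13)), assuming sigma_t^2 = beta_t."""
    alpha_t = 1 - beta_t
    mean = (x_t - beta_t / np.sqrt(1 - alpha_bar_t) * eps_pred) / np.sqrt(alpha_t)
    if last:
        return mean  # no extra noise at the final step
    return mean + np.sqrt(beta_t) * rng.standard_normal(x_t.shape)

def score_from_eps(eps_pred, alpha_bar_t):
    """Equation (14): s_theta(x_t, t) = -eps_theta(x_t, t) / sqrt(1 - alpha_bar_t)."""
    return -eps_pred / np.sqrt(1 - alpha_bar_t)

rng = np.random.default_rng(0)
x0 = rng.standard_normal((3, 8, 8))
eps = rng.standard_normal(x0.shape)
alpha_bar_t, beta_t = 0.3, 0.02
x_t = np.sqrt(alpha_bar_t) * x0 + np.sqrt(1 - alpha_bar_t) * eps
x0_hat = predict_x0(x_t, eps, alpha_bar_t)  # oracle noise recovers x_0 exactly
x_prev = reverse_step(x_t, eps, beta_t, alpha_bar_t, rng)
```

In practice, denoising a noisy face crop means choosing a starting step t that matches the noise level and iterating `reverse_step` down to t = 1 with the trained ε_θ.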

4.3. Construction of the FG-TEFusionNet Model for Deepfake Detection

Multi-feature fusion combines different types of features in a joint decision to improve detection performance. It typically takes one of two forms: feature-level fusion and decision-level fusion. Feature-level fusion concatenates or adds the features from different extractors to form a richer representation, whereas decision-level fusion aggregates the classification outcomes obtained from different features by weighting or voting to produce the final result. Adding cross-frame analysis to established frame-level detection techniques can significantly improve deepfake video detection. Methods that rely solely on intra-frame or solely on inter-frame information may suffer a drastic drop in accuracy on new datasets or emerging forgery techniques, whereas jointly analyzing intra-frame and inter-frame information improves both the model’s ability to identify forged videos and the accuracy and robustness of the detection system across contexts. This study therefore uses decision-level fusion for deepfake detection. As shown in the framework in Figure 1, the method uses the LRNet model with SENet attention to extract facial geometric features and the EfficientNet model with WSDAN and the image texture enhancement module to extract image texture features. By extracting both inter-frame and intra-frame information and then fusing the decisions, the system can better distinguish authentic from forged videos.

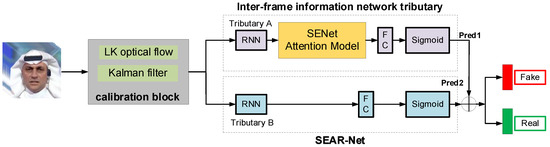

4.3.1. Construction of the LRNet Network Model Incorporating SENet Attention

The LRNet framework uses a dedicated calibration module to improve the accuracy of facial landmarks in input face images and to mitigate the irregular jitter and noise caused by video compression or facial movement. The geometric features of facial landmarks can therefore be used to detect deepfake face videos. However, LRNet has several limitations. Because of how optical flow is computed, facial landmarks can only be tracked and denoised between adjacent frames, with the result propagated forward frame by frame. The framework therefore cannot extract cross-frame information or model long-range temporal features, so inter-frame information is under-exploited. Making fuller use of the detailed features within the facial landmarks should improve the accuracy and robustness of deepfake face video detection. Building on this idea, we propose SEAR-Net (SENet Attention in Two-RNN-Net), an inter-frame deepfake face detection model. To strengthen the representation of inter-frame information, SEAR-Net retains the facial landmark calibration module of LRNet and adds a SENet attention module to the recurrent neural network branches. The outputs of the two branches are then fed into a decision fusion module. SEAR-Net thus extracts features from the inter-frame information of the input video and produces branch-level results for detecting deepfake face videos. The framework of SEAR-Net is illustrated in Figure 9.

Figure 9.

The framework of the SEAR-Net model.

- Facial Landmarks Calibration Module

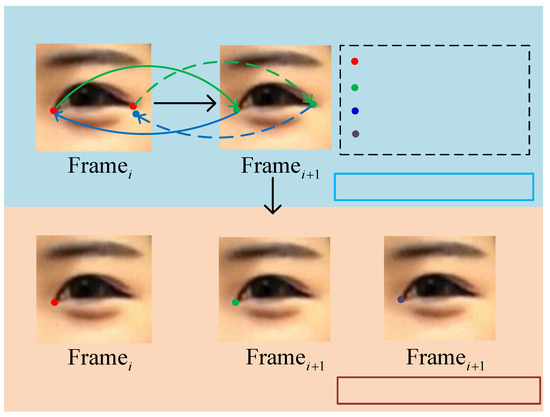

The facial landmarks obtained after preprocessing accurately represent the contours and details of facial features. However, because video is continuous and dynamic, these landmarks still exhibit noticeable jitter between frames, which hinders the effective extraction of inter-frame information. A facial landmark calibration module is therefore used to reduce jitter noise, improving the precision of the facial landmarks and, in turn, detection accuracy. The calibration module used in this study is illustrated in Figure 10. It combines the Pyramid Lucas–Kanade algorithm, which predicts landmark positions in the next frame, with the Kalman filter, which denoises the inter-frame landmarks.

Figure 10.

Schematic diagram of the facial landmarks calibration module.

To calibrate facial landmarks, the algorithm adjusts the landmarks by matching small patches in the surrounding area. The calibration module employs the optical flow method to track the positional changes of facial landmarks across consecutive video frames. Given that deepfake face images are highly detailed and the Lucas–Kanade algorithm is sensitive to patch size, a pyramidal structure is introduced. The Pyramid Lucas–Kanade algorithm facilitates the tracking of facial landmarks between adjacent frames, as depicted in Step 1 of the LK operation in Figure 10.

In practice, the Lucas–Kanade algorithm may introduce noise into the tracked landmark motion in sample videos, which degrades feature extraction. A Kalman filter is therefore used: it combines the actual and predicted landmark positions to remove most of the noise, improving the precision and stability of the landmarks, as shown in Step 2 of Figure 10.

The working principle of the facial landmark calibration module is as follows. First, the Lucas–Kanade algorithm tracks the facial landmarks of the previous frame to predict their approximate locations in the next frame. To keep the prediction reliable, small image patches whose predicted positions deviate significantly from plausible values are discarded. The Kalman filter then computes a weighted average of the positions predicted by the Lucas–Kanade algorithm and those detected by the face detection module. This fusion calibrates the detected positions and reduces the noise level of the facial landmarks across the frame sequence. The more precise calibrated positions allow the subsequent feature extraction networks to capture critical temporal features more effectively, improving the system’s ability to analyze the dynamic characteristics of video content.
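The Kalman fusion step can be sketched with an independent scalar filter per landmark coordinate. This is a minimal sketch, not LRNet’s code: the Pyramid Lucas–Kanade tracking itself (for example OpenCV’s `calcOpticalFlowPyrLK`) is not implemented, and its displacements are passed in as the array `flow`; the noise variances `q` and `r` and the function name are assumptions.

```python
import numpy as np

def kalman_calibrate(detected, flow, q=1e-2, r=1.0):
    """Fuse LK-predicted and detected landmark positions with a scalar Kalman filter.

    detected: (T, n, 2) landmark coordinates from the face detector.
    flow:     (T, n, 2) LK displacement from frame t-1 to frame t (flow[0] unused).
    q, r:     process / measurement noise variances (assumed, not from the paper).
    """
    T = detected.shape[0]
    est = np.empty(detected.shape, dtype=float)
    est[0] = detected[0]                      # initialise from the first detection
    p = np.full(detected.shape[1:], r)        # variance of the current estimate
    for t in range(1, T):
        pred = est[t - 1] + flow[t]           # step 1: LK prediction from the calibrated previous frame
        p_pred = p + q                        # prediction uncertainty grows by q
        k = p_pred / (p_pred + r)             # Kalman gain: weight given to the detection
        est[t] = pred + k * (detected[t] - pred)  # step 2: weighted average of both positions
        p = (1 - k) * p_pred
    return est

# Synthetic check: static landmarks, zero motion, noisy detections.
rng = np.random.default_rng(0)
truth = np.full((50, 5, 2), 10.0)
detected = truth + rng.standard_normal(truth.shape)
calibrated = kalman_calibrate(detected, np.zeros_like(truth))
```

A small `q` relative to `r` trusts the optical-flow prediction more and smooths jitter strongly; a larger `q` follows the detector more closely and reacts faster to genuine motion.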

- SENet Attention-Based Feature Extraction Module For Dual-Flow RNN

The input video is processed by the facial landmark calibration module to obtain standardized facial landmarks, with the Lucas–Kanade algorithm and the Kalman filter fusing predicted and actual positions to reduce noise and yield optimally calibrated landmark locations. A dual-stream RNN then extracts temporal features. The calibrated landmark coordinates are embedded into two kinds of feature vector sequences, which are fed into two separate RNNs to extract temporal information at different levels. The set of facial landmarks in frame t can be written as L_t = {l_1^t, ..., l_n^t}, where each landmark is l_i^t = (x_i^t, y_i^t). The first feature vector sequence is then given by Equation (15).

$$\alpha = [\alpha_1, \ldots, \alpha_T], \qquad \alpha_t = [x_1^t, y_1^t, x_2^t, y_2^t, \ldots, x_n^t, y_n^t] \tag{15}$$

The first sequence represents the facial geometry directly. The second sequence is formed from the differences between the landmarks of consecutive frames, as given in Equation (16).

$$\beta = [\beta_1, \ldots, \beta_{T-1}], \qquad \beta_t = \alpha_{t+1} - \alpha_t \tag{16}$$
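The two embeddings can be sketched in a few lines of NumPy. The 68-point landmark layout and the function name `embed_landmarks` are assumptions for illustration; the text does not fix the number of landmarks here.

```python
import numpy as np

def embed_landmarks(landmarks):
    """landmarks: (T, n, 2) calibrated (x, y) coordinates for T frames.

    Returns alpha (T, 2n), the per-frame flattened geometry of Equation (15),
    and beta (T-1, 2n), the frame-to-frame differences of Equation (16).
    """
    T = landmarks.shape[0]
    alpha = landmarks.reshape(T, -1)  # [x1, y1, x2, y2, ..., xn, yn] for each frame
    beta = np.diff(alpha, axis=0)     # beta_t = alpha_{t+1} - alpha_t ("velocity")
    return alpha, beta

# Hypothetical 68-point landmarks over 4 frames
landmarks = np.random.default_rng(0).uniform(0, 256, size=(4, 68, 2))
alpha, beta = embed_landmarks(landmarks)
print(alpha.shape, beta.shape)  # (4, 136) (3, 136)
```

The difference sequence is one step shorter than the geometry sequence, so the two RNN branches see inputs of length T and T - 1.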

After embedding, the geometric information of the calibrated landmarks is represented by the two feature vector sequences α and β, where T denotes the number of facial landmark sets (frames) in a video. Temporally, α collects the facial landmarks of the sample video and serves as the input sequence of the RNN g1. The difference sequence β captures the velocity of the facial landmarks and serves as the input sequence of a second RNN, g2. In branch g1, the SENet attention module is incorporated to learn different weights for the facial landmark features in the temporal representation, which forces the network to focus on different local information and improves classification accuracy. Each RNN branch is followed by a fully connected network whose sigmoid output gives the branch predictions pred_1 and pred_2. Finally, these outputs are fed into the multi-feature decision fusion module to complete deepfake face detection.
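The squeeze-and-excitation reweighting can be sketched on a (T, D) feature sequence. This shows the generic SE operation (squeeze by averaging, excitation by FC, ReLU, FC, sigmoid, then rescaling); where exactly it sits in g1 and its dimensions are not fully specified in the text, so the reduction ratio `r`, the random untrained weights, and the function name are assumptions.

```python
import numpy as np

def se_reweight(seq, w1, b1, w2, b2):
    """Squeeze-and-Excitation over the feature dimension of a (T, D) sequence."""
    z = seq.mean(axis=0)                      # squeeze: average over time -> (D,)
    h = np.maximum(0.0, w1 @ z + b1)          # excitation FC + ReLU -> (D // r,)
    s = 1.0 / (1.0 + np.exp(-(w2 @ h + b2)))  # FC + sigmoid -> (D,), each weight in (0, 1)
    return seq * s, s                         # rescale every feature dimension

rng = np.random.default_rng(0)
T, D, r = 30, 136, 8                          # 68 landmarks x (x, y); r is an assumed reduction ratio
seq = rng.standard_normal((T, D))
w1, b1 = 0.1 * rng.standard_normal((D // r, D)), np.zeros(D // r)
w2, b2 = 0.1 * rng.standard_normal((D, D // r)), np.zeros(D)
out, weights = se_reweight(seq, w1, b1, w2, b2)
```

After training, dimensions with weights near 1 correspond to landmark coordinates the branch relies on, and weights near 0 suppress uninformative ones.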

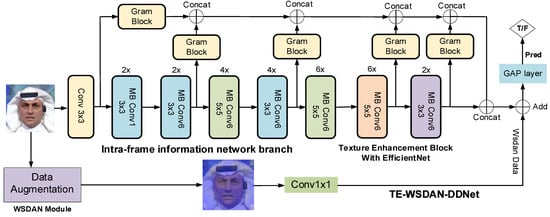

4.3.2. Construction of the Model Incorporating WSDAN and the Image Texture Enhancement Module

SEAR-Net relies solely on the optimized geometric features of facial landmarks, which discard fine facial texture and retain only external contour shapes. Making full use of detailed facial texture features by adding an intra-frame information branch to the discriminative network can further improve the accuracy and robustness of deepfake face video detection. This study therefore introduces the Gram image texture enhancement module. Compared with a baseline network, this module captures facial texture features better, covering larger texture scales and a wider range of texture patterns, which strengthens the model’s ability to detect deepfake faces. The model also integrates the WSDAN network, which further reinforces the extracted texture features and improves robustness. Integrating the image texture enhancement module and WSDAN into EfficientNet yields TE-WSDAN-DDNet (Texture Enhancement and WSDAN with EfficientNet for Deepfake Detection) for deepfake face detection, as illustrated in Figure 11.

Figure 11.

Framework diagram of TE-WSDAN-DDNet incorporating image texture enhancement.

As illustrated in Figure 11, TE-WSDAN-DDNet integrates the image texture enhancement module and the WSDAN network and consists mainly of Gram Blocks, the WSDAN module, EfficientNet, and a GAP layer. Gram matrices are computed in both shallow and deep layers to extract texture information at different levels. Specifically, texture enhancement blocks are added at five positions within EfficientNet: after the first convolution layer, after the second MBConv6, after the tenth MBConv6, after the twenty-second MBConv6, and before the final classification layer. The texture information from these levels is concatenated and combined with the EfficientNet backbone features to produce image features containing multi-level texture information. The augmented data produced by WSDAN is then used to aid deepfake detection. Finally, the features are passed to the global average pooling module for classification.

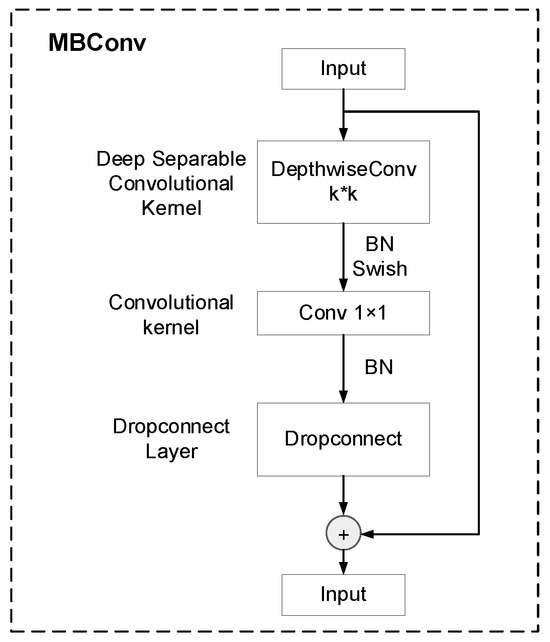

The EfficientNet backbone in this paper is built mainly from MBConv modules, which apply convolutions to the input feature maps to capture inter-pixel correlations and then extract global features. The composition of the MBConv module is depicted in Figure 12. In feature extraction, Conv1 first extracts shallow information from the image with a 3 × 3 kernel and a stride of 2, followed by batch normalization (BN) and the Swish activation function, which stabilize training and help mitigate overfitting. Seven stages of MBConv modules then perform deep feature extraction. MBConv1 and MBConv6 have similar structures but expansion ratios of 1 and 6, respectively; MBConv6 expands the input channels sixfold to capture more intricate image features. Across the network, five downsampling steps are applied when extracting the texture features of forged images.
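The effect of the five stride-2 downsampling steps on the 384 × 384 training input (Section 6.2.2) can be checked with simple arithmetic. This sketch assumes "same" padding, so each stride-2 layer produces ceil(n / 2) pixels; the 24-channel example for the MBConv6 expansion is hypothetical.

```python
def downsample_sizes(input_size=384, steps=5):
    """Spatial size after each stride-2 stage, assuming 'same' padding (ceil(n / 2))."""
    sizes = [input_size]
    for _ in range(steps):
        sizes.append(-(-sizes[-1] // 2))  # ceiling division without floats
    return sizes

def expanded_channels(in_channels, expand_ratio):
    """Width of the inner depthwise stage of an MBConv block."""
    return in_channels * expand_ratio

print(downsample_sizes())         # [384, 192, 96, 48, 24, 12]
print(expanded_channels(24, 6))   # 144 for a hypothetical 24-channel MBConv6 input
print(expanded_channels(24, 1))   # 24 for MBConv1, which does not expand
```

The overall stride of 2^5 = 32 means the final feature map is 12 × 12, which is what the global average pooling layer collapses before classification.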

Figure 12.

Component structure of the MBConv module.

4.3.3. Multi-Feature Decision Fusion Module

The multi-feature extraction and decision fusion framework comprises three network branches. In the inter-frame branch, the RNN g1 uses the SENet attention mechanism to extract geometric features from the input video and outputs the branch prediction pred_1, while the RNN g2 extracts velocity features of the calibrated facial landmarks and outputs pred_2. In the intra-frame branch, EfficientNet with the WSDAN network and the image texture enhancement module extracts detailed texture information from facial regions in the input frames, and its prediction is pred_3. The multi-feature decision fusion network then predicts the overall outcome pred for the video. Because each branch outputs a class decision, the branch outcomes are fused by voting, as given in Equation (17).

$$\mathrm{pred} = f(\mathrm{pred}_1, \mathrm{pred}_2, \mathrm{pred}_3) \tag{17}$$

In Equation (17), f denotes the voting method commonly used in machine learning classification. The base classifiers follow a hard majority rule: if at least two of the three independent branches agree on a sample’s class, that class is adopted as the ensemble result. Because each branch casts a binary vote, three votes always yield a majority; for example, when two branches vote false and one votes true, the system outputs false.
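The voting rule f can be sketched as below. Thresholding each branch’s sigmoid output at 0.5 and encoding "forged" as 1 are assumptions for illustration; the text only specifies hard majority voting over the three branch decisions.

```python
from collections import Counter

def majority_vote(votes):
    """Hard majority vote over binary branch decisions (Equation (17))."""
    if len(votes) % 2 == 0:
        raise ValueError("an odd number of branches guarantees a majority")
    return Counter(votes).most_common(1)[0][0]

def fuse_branches(pred1, pred2, pred3, threshold=0.5):
    """Threshold each branch's sigmoid score, then vote (1 = forged, an assumed encoding)."""
    votes = [int(p >= threshold) for p in (pred1, pred2, pred3)]
    return majority_vote(votes)

print(fuse_branches(0.91, 0.62, 0.30))  # two of three branches say forged -> 1
```

With three binary voters no tie is possible, which is why no separate tie-breaking rule is needed.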

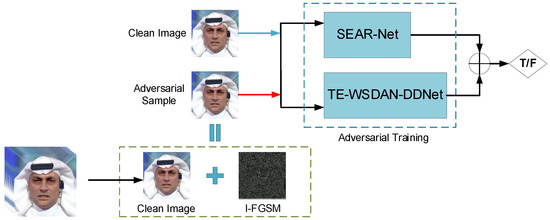

5. Construction of Sample Adversarial Attacks and Defense Model

An adversarial attack introduces adversarial perturbations into a model’s original inputs, creating adversarial examples that cause the model to make incorrect predictions. Adversarial perturbations are subtle modifications to the inputs that can mislead the model’s output. IFGSM (Iterative Fast Gradient Sign Method) [34] is an iterative attack that generates adversarial samples against a model by repeating the gradient-sign step over multiple iterations, playing out the adversarial game [35] against the model to produce stronger adversarial samples. To enhance the robustness of deepfake video detection, this study uses IFGSM to generate adversarial examples and mitigates their impact through adversarial training. IFGSM generation is given in Equation (18) and summarized in Algorithm 1.

$$x_0^{adv} = x, \qquad x_{n+1}^{adv} = \mathrm{Clip}_{x,\epsilon}\left\{ x_n^{adv} + \alpha\, \mathrm{sign}\left( \nabla_x J(\theta, x_n^{adv}, y_{true}) \right) \right\} \tag{18}$$

In Equation (18), x is the original sample and x_n^adv is the adversarial example after n iterations, where n does not exceed the specified number of iterations N. Clip_{x,ε} is the clipping function, which keeps the updated adversarial example within an L∞ distance ε of the original sample and thereby prevents large deviations between x_n^adv and x. Here, ε denotes the perturbation range, α the learning rate (step size), sign(·) the sign function, J the cross-entropy loss (the objective function), ∇_x the gradient with respect to the input, θ the model parameters, and y_true the true label of the genuine face. The loss is the cross-entropy between the model’s output on the current sample and the original output.

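| Algorithm 1 IFGSM algorithm for generating adversarial samples |
|---|
| Input: original sample x; loss function J; perturbation range ε; number of iterations N; learning rate α; truncation function Clip_{x,ε}, where x_n^adv denotes the sample after n iterations |
| Output: adversarial sample x_N^adv |
| Initialization: x_0^adv = x |
| for n = 0, 1, ..., N - 1 do |
| x_{n+1}^adv = Clip_{x,ε}{x_n^adv + α · sign(∇_x J(θ, x_n^adv, y_true))} |
| end for |
| return x_N^adv |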
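Algorithm 1 can be sketched end to end on a toy model. To stay self-contained, the detector is replaced by a logistic-regression stand-in p = sigmoid(w·x + b), for which the input gradient of the binary cross-entropy has the closed form (p - y)·w; the real SEAR-Net and TE-WSDAN-DDNet would obtain ∇_x J from automatic differentiation instead. The values of ε, α, and N, the [0, 1] pixel range, and all names are assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def ifgsm(x, y, w, b, eps=0.03, alpha=0.01, n_iter=10):
    """I-FGSM (Algorithm 1) against a logistic-regression stand-in model."""
    x_adv = x.copy()                              # initialization: x_0^adv = x
    for _ in range(n_iter):
        p = sigmoid(x_adv @ w + b)
        grad = (p - y) * w                        # dJ/dx for binary cross-entropy
        x_adv = x_adv + alpha * np.sign(grad)     # step that increases the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)  # Clip_{x,eps}: stay in the L_inf ball
        x_adv = np.clip(x_adv, 0.0, 1.0)          # assumed valid pixel range
    return x_adv

rng = np.random.default_rng(0)
w, b = rng.standard_normal(64), 0.0
x = rng.uniform(0.2, 0.8, size=64)   # toy "image" with 64 pixels
y = 1.0                              # true label
x_adv = ifgsm(x, y, w, b)
```

Because α · N = 0.1 exceeds ε = 0.03 here, the clipping step is what bounds the final perturbation, which is the role the text assigns to Clip_{x,ε}.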

Using the IFGSM examples generated by Equation (18), this study assesses the model’s robustness against adversarial attacks and improves it through adversarial training. The essence of adversarial training is to generate effective adversarial examples and to balance the contributions of original and adversarial samples during training, which strengthens the model’s robustness against adversarial attacks [36]. Figure 13 outlines the proposed defense against adversarial sample attacks: adversarial examples are generated with IFGSM, fed together with the original samples into SEAR-Net and TE-WSDAN-DDNet for adversarial training, and the branch outputs are finally combined by decision fusion to determine authenticity.

Figure 13.

Adversarial training approach for adversarial attack sample defense.

In adversarial training, the adversarial samples are mixed with the original samples at a ratio of 1:1. Replacing part of the original samples with adversarial ones in this way enhances the model’s robustness while preserving the original data distribution. A weighted cross-entropy serves as the loss function for adversarial training to balance robustness against clean accuracy; it is constructed as follows.

First, in the detection task, the model learns the true data distribution by minimizing the cross-entropy loss in Equation (19).

$$\mathcal{L}_{CE} = -\sum_{i=1}^{2} y_i \log p_i \tag{19}$$

Let x denote the input image, and let y = (y_1, y_2) represent the one-hot ground-truth labels for the authentic and forged categories, respectively. The predicted probability for class i is denoted p_i = p(i | x).

The adversarial perturbation δ is generated via the IFGSM algorithm, as defined in Equation (20). Injecting δ into the original data compels the model to remain robust on the adversarial samples x_adv = x + δ. The adversarial loss function is therefore formulated as follows:

The final adversarial training objective function is formulated by jointly optimizing the original data loss and adversarial sample loss, as mathematically expressed below:

As indicated in Equation (21), the adversarial loss weighting coefficient λ is empirically set to 0.5. This hyperparameter trades off the model’s focus on learning features from clean samples against robustness to adversarial perturbations, preventing either loss term from dominating the optimization.
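The weighted objective can be sketched as follows. The exact form of Equation (21) is not recoverable from the text; this sketch assumes the convex combination (1 - λ) · L_clean + λ · L_adv, under which λ = 0.5 weights the clean and adversarial terms equally. Labels are given as class indices rather than one-hot vectors, which selects the same log-probability as the sum in Equation (19). All names are hypothetical.

```python
import numpy as np

def cross_entropy(probs, labels):
    """Mean cross-entropy as in Equation (19); probs: (B, 2), labels: (B,) class indices."""
    picked = probs[np.arange(len(labels)), labels]
    return float(-np.mean(np.log(np.clip(picked, 1e-12, 1.0))))

def adversarial_objective(probs_clean, probs_adv, labels, lam=0.5):
    """Assumed form of Equation (21): (1 - lam) * L_clean + lam * L_adv."""
    return (1 - lam) * cross_entropy(probs_clean, labels) + lam * cross_entropy(probs_adv, labels)

labels = np.array([0, 1])                          # authentic, forged
probs_clean = np.array([[0.9, 0.1], [0.2, 0.8]])   # confident on clean inputs
probs_adv = np.array([[0.5, 0.5], [0.5, 0.5]])     # confused on adversarial inputs
loss = adversarial_objective(probs_clean, probs_adv, labels)
```

In a 1:1 mixed batch, `probs_clean` and `probs_adv` would come from the same model applied to the original samples and their IFGSM counterparts.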

6. Experiment and Result Analysis

6.1. Datasets

The method was tested and validated on the widely used benchmark datasets FaceForensics++ (FF++) [21] and CelebDF [37]. FF++ covers four methods for generating deepfake facial data; it comprises 1000 original real video sequences and 4000 manipulated videos and is used to train and evaluate our models. Deepfakes uses an autoencoder-based approach that achieves precise face swapping by training one-to-one generation models. Face2Face is a facial reenactment method that transfers the expressions of a source video onto the target face with computer-graphics techniques rather than learned generative models. FaceSwap uses a 3D-model-based technique to swap faces by reconstructing facial features. NeuralTextures uses learned neural textures and neural rendering, incorporating texture details from reference footage to keep the generated faces 3D-consistent.

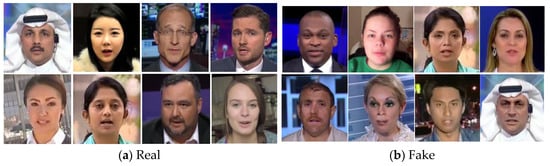

To serve researchers with different requirements, the real and fake videos are provided with H.264 compression at several levels. Our experiments use the lightly compressed, high-quality C23 version, which keeps the data clear and provides an effective benchmark for assessing the influence of compression on forged videos. For training and testing, this study selected 1000 real videos and 500 forged videos from each of the Deepfakes, FaceSwap, Face2Face, and NeuralTextures subsets. Examples of dataset samples are shown in Figure 14.

Figure 14.

Sample example of the FaceForensics++ dataset.

Moreover, the CelebDF dataset exists in two versions, with this study opting for CelebDF-v2. This dataset effectively compensates for the FF++ dataset’s lower-quality deepfake content by offering synthetic videos that rival the high-quality fake data prevalent on the internet. Specifically, CelebDF-v2 consists of 590 real videos and 5639 synthetic videos of generally high quality, serving as the transfer test dataset for evaluating the deepfake face detection model in this study.

6.2. Evaluation Metrics and Experimental Parameter Settings

6.2.1. Model Evaluation Metrics

ACC (Accuracy) is the proportion of samples that the model classifies correctly, (TP + TN)/(TP + TN + FP + FN). The ROC [38] curve shows the trade-off between sensitivity and specificity and is obtained by computing the True Positive Rate (TPR) and False Positive Rate (FPR) across decision thresholds, as shown in Equations (22) and (23). AUC (Area Under the Curve) is the area under the ROC curve, as shown in Equation (24); it summarizes classification performance across all thresholds and thus reduces the subjective influence of threshold selection. This study therefore uses AUC and ACC as the primary metrics for evaluating the predictive network models. The metrics are defined as follows.

$$\mathrm{TPR} = \frac{TP}{TP + FN} \tag{22}$$

$$\mathrm{FPR} = \frac{FP}{FP + TN} \tag{23}$$

$$\mathrm{AUC} = \int_{0}^{1} \mathrm{TPR}\; d(\mathrm{FPR}) \tag{24}$$

In the three equations above, TP is the number of true positive samples, FN the number of false negatives, FP the number of false positives, and TN the number of true negatives.
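The metrics can be computed with a few lines of standard-library Python. Treating "forged" as the positive class (label 1) and thresholding scores at 0.5 for ACC are assumptions; AUC is computed with the rank formulation, the probability that a random positive scores above a random negative (ties count one half), which equals the area under the ROC curve in Equation (24).

```python
def confusion(y_true, y_pred):
    """Return (TP, FN, FP, TN) for binary labels, with 1 as the positive class."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    return tp, fn, fp, tn

def acc_tpr_fpr(y_true, y_pred):
    tp, fn, fp, tn = confusion(y_true, y_pred)
    return (tp + tn) / len(y_true), tp / (tp + fn), fp / (fp + tn)

def auc(y_true, scores):
    """Area under the ROC curve via pairwise ranking of positives against negatives."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

y_true = [1, 1, 0, 0]
scores = [0.9, 0.4, 0.6, 0.1]
y_pred = [int(s >= 0.5) for s in scores]
print(acc_tpr_fpr(y_true, y_pred))  # (0.5, 0.5, 0.5)
print(auc(y_true, scores))          # 0.75
```

The example shows why both metrics are reported: at the 0.5 threshold the classifier is only 50% accurate, yet its ranking of forged above real samples is right in three of four pairs.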

6.2.2. Experimental Model Parameter Settings

The dataset is first preprocessed as the study requires. Each frame is cropped to a standardized size, and facial features are extracted with the improved MTCNN (IMTCNN). A diffusion model then denoises the images and removes artifacts. Each video is then converted into the input format required by each network for feature extraction, training, and prediction of facial forgery. The dataset is split into training, validation, and test sets in a 60%/20%/20% ratio to assess the model’s performance and generalization ability.
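The 60/20/20 partition can be sketched at the video level, so that frames from one video never appear in two subsets. Splitting by video rather than by frame, the fixed seed, and the video naming scheme are assumptions; the total of 3000 videos assumes 1000 real videos plus 500 forged videos from each of the four FF++ subsets.

```python
import random

def split_videos(video_ids, ratios=(0.6, 0.2, 0.2), seed=0):
    """Shuffle video IDs reproducibly and cut them into train/val/test lists."""
    ids = list(video_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * ratios[0])
    n_val = round(len(ids) * ratios[1])
    return ids[:n_train], ids[n_train:n_train + n_val], ids[n_train + n_val:]

videos = [f"real_{i}" for i in range(1000)] + [
    f"{m}_{i}" for m in ("Deepfakes", "FaceSwap", "Face2Face", "NeuralTextures")
    for i in range(500)
]
train_set, val_set, test_set = split_videos(videos)
print(len(train_set), len(val_set), len(test_set))  # 1800 600 600
```

A plain random shuffle does not guarantee the same real/forged ratio in every subset; a stratified variant would split each category separately and concatenate the results.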

In the inter-frame information extraction network of SEAR-Net, each of the two branches of the dual-stream RNN is equipped with 32 GRU units. SENet attention is then applied at the output layer of the RNN, with input and output dimensions of 2 and 64, respectively, followed by an FC layer with input and output dimensions of 64 and 2, respectively. A Dropout layer with a dropout rate of 0.3 is applied to the input before it enters the RNN, and four Dropout layers with a dropout rate of 0.5 are inserted within the RNN to improve generalization and prevent overfitting. The network is trained with the Adam optimizer at a learning rate of 0.002, with the batch size and block size set to 2048 and 32, respectively. The two branches are trained for 1000 and 800 epochs, respectively.
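The following NumPy sketch illustrates how Squeeze-and-Excitation (SE) attention can recalibrate the channels of an RNN output sequence before the 64 to 2 FC classification layer. It assumes a 64-channel RNN output (matching the FC input dimension), a sequence length of 32 (the block size), a hypothetical reduction ratio of 4, mean pooling over time before the FC layer, and random weights in place of trained ones; it does not reproduce the exact SEAR-Net configuration.

```python
import numpy as np

rng = np.random.default_rng(0)
C = 64  # assumed RNN output width (matches the 64-dimensional FC input)
R = 4   # hypothetical SE reduction ratio

# Randomly initialised weights stand in for trained parameters.
W1 = rng.normal(0.0, 0.1, (C, C // R))
b1 = np.zeros(C // R)
W2 = rng.normal(0.0, 0.1, (C // R, C))
b2 = np.zeros(C)
W_fc = rng.normal(0.0, 0.1, (C, 2))
b_fc = np.zeros(2)

def se_block(h):
    """Squeeze-and-Excitation over RNN outputs h of shape (batch, time, C)."""
    z = h.mean(axis=1)                        # squeeze: average over time -> (batch, C)
    e = np.maximum(0.0, z @ W1 + b1)          # excitation: FC + ReLU bottleneck
    s = 1.0 / (1.0 + np.exp(-(e @ W2 + b2)))  # FC + sigmoid -> per-channel weights in (0, 1)
    return h * s[:, None, :]                  # rescale every time step channel-wise

def classify(h):
    """SE recalibration, mean pooling over time, then FC 64 -> 2 (real/fake logits)."""
    return se_block(h).mean(axis=1) @ W_fc + b_fc
```

Because the sigmoid gates lie in (0, 1), SE can only attenuate channels relative to one another; the network learns which temporal features to emphasize by suppressing the rest.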

In the intra-frame information extraction network of TE-WSDAN-DDNet, the WSDAN network is integrated with an attention map threshold of 0.45, and a Gram Block is introduced for deep texture feature extraction. A pre-trained EfficientNetB4 model serves as the backbone network. The input image size for training is 384 × 384. Adam is used as the optimizer and cross-entropy as the loss function; the learning rate is set to 0.01, and ExponentialLR is used as the learning rate scheduler. The model is trained for 100 epochs with a batch size of 32. The experiments run on an NVIDIA GeForce RTX 4090 GPU using the PyTorch 1.1.0 deep learning framework. The primary evaluation metrics for model performance are AUC and ACC. Detailed parameters used in the experiments are listed in Table 1 and Table 2.
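In WS-DAN, an attention map threshold governs attention cropping: the map is min-max normalized, and the region whose values exceed the threshold is cropped and enlarged as augmented input. The sketch below computes that crop box with the threshold of 0.45 used here. It assumes the threshold applies to cropping and that the attention map has already been upsampled to the image resolution; the helper names are hypothetical, and resizing the crop back to 384 × 384 is omitted.

```python
import numpy as np

def attention_crop_box(att, theta=0.45):
    """Return (top, left, bottom, right) enclosing pixels whose min-max
    normalised attention exceeds theta; the full map if none do."""
    att = np.asarray(att, dtype=float)
    span = att.max() - att.min()
    norm = (att - att.min()) / span if span > 0 else np.zeros_like(att)
    ys, xs = np.nonzero(norm > theta)
    if ys.size == 0:  # flat map: fall back to the whole image
        return 0, 0, att.shape[0], att.shape[1]
    return int(ys.min()), int(xs.min()), int(ys.max()) + 1, int(xs.max()) + 1

def attention_crop(image, att, theta=0.45):
    """Crop an (H, W, C) image to the attended region (att must also be H x W)."""
    top, left, bottom, right = attention_crop_box(att, theta)
    return image[top:bottom, left:right]
```

A lower threshold yields larger crops that retain more context, while a higher one zooms in on the most strongly attended texture region.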

Table 1.

Meaning of parameters in the experimental section.

Table 2.

Parameters settings in the experimental section.

6.3. Analysis of Feature Extraction Effectiveness

The FG-TEFusionNet network proposed in this study extracts facial geometric and texture features independently and fuses them at the decision layer. The effectiveness of the method for deepfake detection is therefore further validated by analyzing the experimental results for the geometric and texture features separately.

6.3.1. Analysis of Facial Geometric Feature Point Extraction

In the experiment, the Landmark method is used to extract facial geometric features. The Lucas–Kanade algorithm first tracks the facial landmark points across frames, and a Kalman filter then suppresses the noise in the tracked trajectories. Research has shown that the Landmark method is effective at extracting facial geometric landmarks for deepfake face detection. Figure 15 illustrates facial landmark tracking on selected forged face images.
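The Kalman filtering step can be sketched as follows: each landmark coordinate produced by Lucas–Kanade tracking is treated as a noisy measurement of a slowly moving position and smoothed with a scalar Kalman filter. The random-walk motion model and the noise variances q and r are illustrative assumptions; the text does not specify the filter configuration.

```python
import numpy as np

def kalman_smooth(track, q=1e-3, r=1e-1):
    """Filter a (T, ...) landmark trajectory, e.g. (T, 2) or (T, K, 2), with an
    independent scalar Kalman filter per coordinate (random-walk model)."""
    track = np.asarray(track, dtype=float)
    x = track[0].copy()   # state estimate initialised at the first measurement
    p = np.ones_like(x)   # estimate variance per coordinate
    out = [x.copy()]
    for z in track[1:]:   # z: tracked (e.g. Lucas-Kanade) coordinates at this frame
        p = p + q              # predict: position unchanged, uncertainty grows by q
        k = p / (p + r)        # Kalman gain: trust in the measurement vs. the prediction
        x = x + k * (z - x)    # update with the measurement residual
        p = (1.0 - k) * p      # posterior variance
        out.append(x.copy())
    return np.stack(out)
```

A small q relative to r makes the filter favor smooth trajectories; raising q lets it follow fast, genuine facial motion at the cost of passing through more jitter.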

Figure 15.

The Landmark method is used to annotate and track facial landmarks in two sets of sample data. (The second column shows facial landmark annotation, and the third column shows landmark tracking.)

6.3.2. Analysis of Texture Feature Extraction

This section evaluates how effectively the proposed image texture enhancement module extracts texture features. A series of experiments was conducted on the four subsets of FaceForensics++, varying the incrementally increasing weight coefficients used during training and comparing the training outcomes under different loss functions.

To improve texture feature extraction during training, this study assigned incrementally increasing weights to different layers of the EfficientNet model within the intra-frame network branch, with the aim of improving the model's detection performance across various texture features. The weights followed a geometric progression whose common ratio q between adjacent terms was set to 1, 2, 3, 3.5, or 4. Table 3 presents the AUC values obtained on the EfficientNet network branch.
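The geometric weighting can be illustrated with the pure-Python sketch below: layer i (counted from the shallowest weighted layer) receives weight q**i, so q = 1 weights all layers equally and larger q increasingly emphasizes deeper layers. Normalizing the weights to sum to 1, the shallow-to-deep ordering, and the use of per-layer texture losses are assumptions made for illustration; the function names are hypothetical.

```python
def geometric_layer_weights(num_layers, q, normalize=True):
    """Weights w_i = q**i for i = 0 .. num_layers-1 (shallow to deep, by assumption)."""
    weights = [q ** i for i in range(num_layers)]
    if normalize:  # hypothetical choice: rescale so the weights sum to 1
        total = sum(weights)
        weights = [w / total for w in weights]
    return weights

def weighted_texture_loss(layer_losses, q):
    """Combine per-layer texture losses with geometrically increasing weights."""
    weights = geometric_layer_weights(len(layer_losses), q)
    return sum(w * loss for w, loss in zip(weights, layer_losses))
```

For example, with q = 3 and three layers, the normalized weights are 1/13, 3/13, and 9/13, so the deepest layer contributes roughly 70% of the combined loss.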

Table 3.

AUC results on the four subsets of FaceForensics++ for the EfficientNet network branch.

As Table 3 shows, when the texture feature module was added to the EfficientNet network, the best detection performance was achieved with the incremental weight ratio q set to 3. As q increased beyond 3, the detection AUC gradually decreased, possibly because the quality of the texture features extracted by the network declined, which reduced detection effectiveness. Additionally, this study employed the Earth Mover's Distance (EMD) as a loss function for model optimization. EMD measures the distance between two distributions and is widely used in content-based image retrieval. It was originally proposed for vision problems by Rubner et al. [39]; based on it, we formulate the loss function shown in Equation (25).
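Equation (25) is not reproduced in this text, so the sketch below shows only the textbook one-dimensional case of EMD that such a loss builds on: for two equally sized sets of values with uniform weights, EMD (the 1-Wasserstein distance) equals the mean absolute difference between the sorted values. Applying it to flattened feature values is an assumption for illustration, not the authors' exact formulation.

```python
import numpy as np

def emd_1d(a, b):
    """1D Earth Mover's Distance between two equally sized empirical samples
    with uniform weights: mean |sort(a) - sort(b)|."""
    a = np.sort(np.ravel(np.asarray(a, dtype=float)))
    b = np.sort(np.ravel(np.asarray(b, dtype=float)))
    if a.shape != b.shape:
        raise ValueError("this sketch assumes both inputs have the same number of values")
    return float(np.mean(np.abs(a - b)))
```

Sorting discards where each value occurred, so two feature maps with identical value histograms but different spatial arrangements have zero EMD under this formulation.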

In this study, the EMD loss function was integrated into the model. Using the incremental weight sequence with q = 3, EfficientNet was trained separately with an L2 loss on the Gram matrices and with the EMD loss. The resulting detection AUC values are presented in Table 4.
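For comparison, the Gram-matrix L2 loss can be sketched as follows. The Gram matrix collects inner products between the channel activations of a feature map, so it encodes which features co-occur regardless of where they occur. The normalization by H × W and the use of a mean squared difference are common conventions assumed here, not details stated in the text.

```python
import numpy as np

def gram_matrix(feat):
    """Gram matrix of a feature map of shape (C, H, W): G = F F^T / (H * W)."""
    feat = np.asarray(feat, dtype=float)
    c, h, w = feat.shape
    f = feat.reshape(c, h * w)  # one row of spatial activations per channel
    return f @ f.T / (h * w)

def gram_l2_loss(feat_a, feat_b):
    """Mean squared (L2) difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(feat_a) - gram_matrix(feat_b)
    return float(np.mean(diff ** 2))
```

Unlike the sorted 1D EMD, the Gram matrix keeps the cross-channel correlations that characterize a texture while still ignoring the exact spatial layout.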

Table 4.

Detection AUC results of EfficientNet trained with the Gram L2 loss and with the EMD loss.

Table 4 shows that the Gram L2 loss achieved higher AUC. With this loss, the network learns the relationships between the feature vectors of the original texture images, which yields richer and more detailed texture features. In contrast, using the EMD loss results in poorer texture capture, likely because its sorting-based formulation compares feature values as unordered distributions. These results underscore the role of the image texture enhancement module in helping the model capture forgery features from both local and global perspectives. Combined with the WSDAN network, the module enables the backbone network to focus on regions with prominent textures, thereby improving detection accuracy.

6.4. Analysis of Diffusion Model Effectiveness

To validate the effectiveness of the diffusion model for denoising and artifact removal, we used the four subsets of FaceForensics++. The input data were first preprocessed to ensure their quality. The denoising and artifact-removal results were then evaluated with quantitative metrics and visual inspection, laying the foundation for the subsequent deepfake detection experiments.

In the quantitative experiments on the diffusion model, we used the Peak Signal-to-Noise Ratio (PSNR) [40] and Learned Perceptual Image Patch Similarity (LPIPS) [41] to measure image quality. PSNR is a widely used image quality metric, and higher scores indicate better quality. LPIPS uses deep network features to assess the perceptual difference between two images: in this study, a VGG network extracts features from the generated and real images, and the L2 distance between these features gives the score. A smaller LPIPS score indicates that the generated image is perceptually closer to the real image. The two metrics are given in Equations (26) and (27).

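$$\mathrm{PSNR} = 10 \times \log_{10}\left(\frac{MAX_I^{2}}{MSE}\right) \tag{26}$$

$$d(x, x_0) = \sum_{l} \frac{1}{H_l W_l} \sum_{h,w} \left\lVert w_l \odot \left(\hat{y}^{l}_{hw} - \hat{y}^{l}_{0hw}\right) \right\rVert_2^{2} \tag{27}$$

In Equation (26), × denotes multiplication, $MAX_I$ is the maximum possible pixel value of the image (e.g., 255 for 8-bit images), and $MSE$ is the mean squared error between the generated and real images. In Equation (27), $x$ and $x_0$ denote two image patches, and $\hat{y}^{l}$ and $\hat{y}^{l}_{0}$ denote the normalized feature-map activations of the real and generated images at layer $l$, respectively. These activations have spatial dimensions $H_l \times W_l$ (height × width), $w_l$ is a scaling vector that weights the feature channels of layer $l$, and ⊙ denotes channel-wise multiplication. The squared L2 distance between the weighted activations is averaged over the spatial dimensions and summed over all layers.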
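Equation (26) translates directly into code; the NumPy sketch below assumes 8-bit images, so $MAX_I$ defaults to 255. LPIPS (Equation (27)) requires pretrained VGG features and is not re-implemented here; the reference `lpips` Python package provides it through `lpips.LPIPS(net='vgg')`.

```python
import numpy as np

def psnr(real, generated, max_val=255.0):
    """PSNR in dB following Equation (26): 10 * log10(MAX_I^2 / MSE)."""
    real = np.asarray(real, dtype=np.float64)
    generated = np.asarray(generated, dtype=np.float64)
    mse = np.mean((real - generated) ** 2)
    if mse == 0:
        return float("inf")  # identical images: PSNR is unbounded
    return float(10.0 * np.log10(max_val ** 2 / mse))
```

For instance, a uniform error of 25.5 gray levels gives MSE = 650.25 and therefore a PSNR of 20 dB; each tenfold reduction in MSE adds 10 dB.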

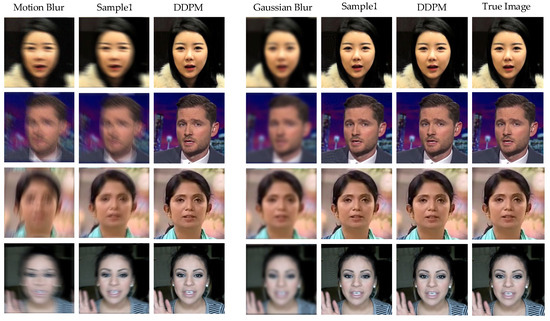

Moreover, the number of diffusion steps T was set to 1000 in all trials to ensure a sufficient and consistent number of neural network evaluations during sampling. Figure 16 qualitatively illustrates the denoising achieved through the reverse process of the diffusion model.
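The reverse process can be sketched with standard DDPM ancestral sampling using T = 1000 steps. The text does not specify the noise schedule or parameterization, so this sketch assumes the linear β schedule from 1e-4 to 0.02 and the noise-prediction (ε) parameterization of Ho et al., with σ_t² = β_t; `eps_model` stands in for the trained denoising network and is hypothetical.

```python
import numpy as np

T = 1000                            # number of diffusion steps, as in the text
betas = np.linspace(1e-4, 0.02, T)  # assumption: linear schedule from the DDPM paper
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)

def forward_noise(x0, t, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) x0, (1 - abar_t) I); also return the noise."""
    eps = rng.standard_normal(x0.shape)
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps, eps

def reverse_sample(x_T, eps_model, rng):
    """Ancestral DDPM sampling from step T-1 down to 0 (0-indexed)."""
    x = x_T
    for t in range(T - 1, -1, -1):
        eps_hat = eps_model(x, t)  # predicted noise from the (hypothetical) trained network
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps_hat) / np.sqrt(alphas[t])
        if t > 0:
            x = mean + np.sqrt(betas[t]) * rng.standard_normal(x.shape)  # sigma_t^2 = beta_t
        else:
            x = mean  # no noise is added at the final step
    return x
```

In practice `eps_model` would be a trained U-Net; with a zero placeholder the loop only exercises the update rule. Each of the T steps requires one network evaluation, which is why T directly sets the sampling cost.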

Figure 16.

The qualitative results of motion blur denoising (left) and Gaussian blur denoising (right) using the diffusion model on samples from the FaceForensics++ dataset.

The experiment validated the model's efficacy on images degraded by motion blur and by Gaussian noise. As Figure 16 shows, the reverse process of the diffusion model effectively restored the motion-blurred images and removed the added Gaussian noise, which confirms the effectiveness of the diffusion model in the experimental setting. To ensure the stability of the results, quantitative tests were also conducted: PSNR and LPIPS scores were computed on the sample dataset over repeated runs, and their averages were taken as the final results. The model's performance on the four subsets of the FaceForensics++ dataset is presented in Table 5.

Table 5.

Results from the diffusion model across four different subsets of the FaceForensics++ dataset.

The experimental results indicate that our diffusion model performs well on the denoising task. Table 5 reports the PSNR and LPIPS results of our method on the four deepfake face subsets: PSNR values are consistently above 35 dB, and LPIPS values are around 0.2. The highest PSNR values were achieved on the FaceSwap and NeuralTextures subsets, indicating that the diffusion model generalizes well to these data. By the definitions of these two metrics, such results typically indicate a close visual resemblance between image pairs, highlighting the effectiveness of our method in reducing noise. This further suggests that the diffusion model has considerable potential for handling real-world noise and artifacts.

6.5. Analysis of Sample Adversarial Attack and Defence Effectiveness

In this study, adversarial samples were generated with the I-FGSM attack algorithm and then used, together with the original dataset inputs, to train the two models. This adversarial training approach effectively mitigates adversarial attacks and produced favorable results. The following section analyzes the results of the adversarial attacks and the defense.
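The I-FGSM update repeatedly takes a signed-gradient step of size α that increases the detector's loss and then projects the result back into an L∞ ball of radius ε around the original frame. The self-contained NumPy sketch below applies it to a toy logistic-regression detector whose input gradient is available in closed form; the toy model, the [0, 1] pixel range, and the hyperparameter values used in the check are illustrative assumptions rather than the study's settings.

```python
import numpy as np

def ifgsm(x, y, grad_fn, eps, alpha, steps):
    """Iterative FGSM: x_{k+1} = clip_{x, eps}(x_k + alpha * sign(grad_x L(x_k, y))),
    additionally clipped to the valid pixel range [0, 1]."""
    x_adv = x.copy()
    for _ in range(steps):
        x_adv = x_adv + alpha * np.sign(grad_fn(x_adv, y))  # ascend the loss
        x_adv = np.clip(x_adv, x - eps, x + eps)            # stay inside the L_inf ball
        x_adv = np.clip(x_adv, 0.0, 1.0)                    # keep a valid image
    return x_adv

# Toy stand-in for a detector: logistic regression p(fake | x) = sigmoid(w . x + b).
rng = np.random.default_rng(0)
w = rng.normal(size=16)
b = 0.0

def prob_fake(x):
    return 1.0 / (1.0 + np.exp(-(x @ w + b)))

def bce_grad(x, y):
    """Gradient of binary cross-entropy w.r.t. the input for the logistic model: (p - y) * w."""
    return (prob_fake(x) - y) * w
```

Attacking a fake sample (y = 1) pushes each pixel against the sign of its weight, lowering the predicted fake probability while the per-pixel change never exceeds ε.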

Adversarial sample generation algorithms require three manually configured hyperparameters: the number of iterations T, the perturbation range ε, and the learning rate (step size). Therefore, based on the experimental requirements of this study, the hyperparameters were set to for generating adversarial samples. The adversarial samples were evaluated using the average L1 loss and the attack success rate. The average L1 loss, formulated in Equation (28), measures the difference between all adversarial sample frames and their corresponding original samples. The attack success rate (ASR) is the proportion of test results in which the model's predictions were successfully deceived. Adversarial samples were generated, and adversarial training was conducted, on the four subsets. The results of the adversarial attacks are presented in Table 6.

Table 6.

Results of the adversarial attacks using I-FGSM.

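$$L_{1} = \frac{1}{N} \sum_{i=1}^{N} \left| x_i - x^{adv}_i \right| \tag{28}$$

In Equation (28), $x$ and $x^{adv}$ are the original and adversarial images, respectively, $i$ indexes their pixels, and N is the total number of pixels in the image.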
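Equation (28) and the attack success rate can be computed as below. The L1 term averages absolute differences over all N values of a frame (including color channels, an assumption) and then over frames. For ASR, the text does not state the denominator; the sketch assumes the common convention of counting only samples that the model classified correctly before the attack.

```python
import numpy as np

def mean_l1(originals, adversarials):
    """Equation (28) per frame, (1/N) * sum |x - x_adv|, then averaged over frames."""
    originals = np.asarray(originals, dtype=float)
    adversarials = np.asarray(adversarials, dtype=float)
    per_frame = np.abs(originals - adversarials).reshape(len(originals), -1).mean(axis=1)
    return float(per_frame.mean())

def attack_success_rate(pred_clean, pred_adv, labels):
    """Share of originally correct predictions that the attack flips (assumed convention)."""
    pred_clean = np.asarray(pred_clean)
    pred_adv = np.asarray(pred_adv)
    labels = np.asarray(labels)
    correct = pred_clean == labels
    if not correct.any():
        return 0.0  # nothing to attack
    return float(np.mean(pred_adv[correct] != labels[correct]))
```

Restricting ASR to initially correct samples avoids crediting the attack with errors the detector already made on clean inputs.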

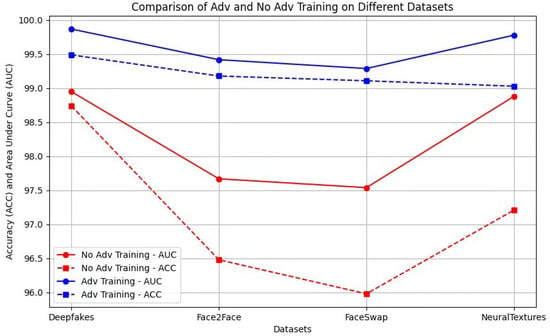

Table 6 shows that the average L1 loss is low, indicating that the adversarial frames differ only minimally from the original frames, whereas the attack success rate is high. The adversarial samples are therefore highly effective, and the robustness of the model needs improvement. Consequently, the model was trained with adversarial training to enhance its robustness against adversarial attacks. As shown in Table 6, the ASR decreased under adversarial training, confirming that adversarial training helps defend against adversarial attacks and improves robustness. Specifically, the strategy reduced the ASR on the four subsets by 10.15%, 7.07%, 3.32%, and 1.24%, respectively, while maintaining high detection AUC and ACC.

Figure 17 presents adversarial deepfake images from the four subsets, randomly selected from successful attacks. The frames in the first row were correctly identified as Fake by the model, whereas the corresponding samples generated by the I-FGSM attack algorithm were misclassified as Real. As the figure shows, the adversarial samples are visually indistinguishable from the original images yet consistently cause misclassification, which demonstrates the effectiveness of the I-FGSM attack. Adversarial training, used as a defense, significantly improved the model's robustness against such attacks.

Figure 17.

I-FGSM adversarial sample examples from the four forged datasets.

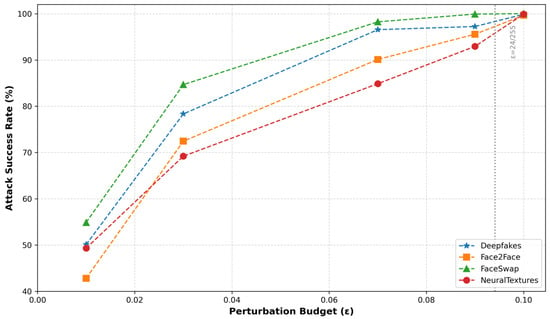

To systematically assess the robustness of deepfake detection models under adversarial attack, controlled experiments were conducted to investigate the relationship between the perturbation magnitude (ε) and the attack success rate. With the number of iterations held constant, the perturbation range was progressively increased while the attack success rate was monitored on four deepfake datasets: Deepfakes, Face2Face, FaceSwap, and NeuralTextures. The relationship between ε and detection vulnerability is shown in Figure 18.

Figure 18.

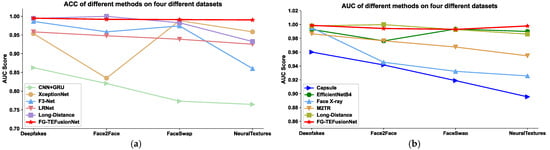

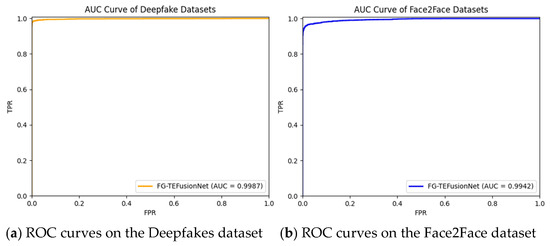

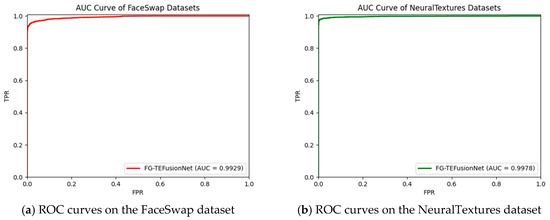

The attack success rate (ASR) variations of the I-FGSM adversarial attacks under different perturbation budgets (ε) across four deepfake datasets.