Abstract

Bringing together the Internet of Things (IoT), Large Language Models (LLMs), and Federated Learning (FL) offers exciting possibilities, creating a synergy for building smarter, privacy-preserving distributed systems. This review explores the convergence of these technologies, particularly within edge computing environments. We examine current architectures and practical methods enabling this fusion, such as efficient low-rank adaptation (LoRA) for fine-tuning large models and memory-efficient Split Federated Learning (SFL) for collaborative edge training. However, this integration faces significant hurdles: the resource limitations of IoT devices, unreliable network communication, data heterogeneity, diverse security threats, fairness considerations, and regulatory demands. While other surveys cover pairwise combinations, this review distinctively analyzes the three-way synergy, highlighting how IoT, LLMs, and FL working in concert unlock capabilities unattainable otherwise. Our analysis compares various strategies proposed to tackle these issues (e.g., federated vs. centralized, SFL vs. standard FL, differential privacy (DP) vs. cryptographic privacy), outlining their practical trade-offs. We showcase real-world progress and potential applications in domains like Industrial IoT and smart cities, considering both opportunities and limitations. Finally, this review identifies critical open questions and promising future research paths, including ultra-lightweight models, robust algorithms for heterogeneity, machine unlearning, standardized benchmarks, novel FL paradigms, and next-generation security. Addressing these areas is essential for responsibly harnessing this powerful technological blend.

1. Introduction

The Internet of Things (IoT) and Artificial Intelligence (AI) are reshaping the way we live. IoT is penetrating every aspect of modern society. It is marked by an explosion of interconnected devices generating vast amounts of real-world data, which can drive significant and innovative insights to improve our lives. Simultaneously, emerging Large Language Models (LLMs) like the GPT series have shown a remarkable ability to understand and process complex information [1,2]. The power of LLMs arises from vast amounts of training data, and IoT systems are an excellent means of providing such data. Combining the two fields is therefore a natural move, but it incurs significant challenges. A core question is how we can leverage the intelligence of resource-hungry LLMs to make sense of the massive, diverse, and often sensitive data streams produced by countless IoT devices, especially when the data is largely heterogeneous, multimodal, high-dimensional, and sparse, and needs to be processed quickly and, in many cases, locally [3,4,5]. This is further elaborated on below.

Sending huge volumes of IoT data to a central cloud for AI analysis is often impractical [6]. It can be too slow for applications needing real-time responses (like industrial control or autonomous systems), consumes too much bandwidth, and raises significant privacy concerns [7]. Many critical IoT applications simply demand intelligence closer to the data source [3]. On the other hand, while LLMs possess the analytical power needed for complex IoT tasks, they face their own hurdles: they require massive datasets for training, and accessing the rich, real-world, but often private, data held on distributed IoT devices is difficult [8]. Moreover, deploying these powerful models effectively within real-world distributed systems like IoT remains a significant challenge, given limited hardware and power budgets, restricted data access, and privacy requirements. This is precisely where Federated Learning (FL) enters the picture [9]. FL revolutionizes traditional approaches by enabling collaborative model training across decentralized data sources, eliminating the need to centralize raw data. This creates a compelling opportunity: using FL to train powerful LLMs on diverse, distributed IoT data while preserving user privacy and data locality [10,11]. This combination promises smarter, more responsive, and privacy-respecting systems, potentially leading to more efficient factories, safer autonomous vehicles, or more personalized healthcare, all leveraging local data securely. However, integrating these three sophisticated technologies (IoT, LLMs, FL) creates unique complexities and challenges related to efficiency, security, fairness, and scalability [12].
Given the significance of the integration and the increasing attention it has gained recently, this review aims to provide a timely overview of the state of the art in synergizing IoT, LLMs, and FL, particularly for edge environments, hoping to highlight current capabilities, identify key challenges, and inspire future research directions that enable intelligent, privacy-preserving, and resource-efficient edge intelligence systems. Specifically, we will explore the architectures, methods, inherent challenges, and promising solutions, highlighting why this three-way integration is crucial for building the next generation of intelligent, distributed systems.

The burgeoning interest in deploying advanced AI models like LLMs within distributed environments like IoT, often facilitated by techniques such as FL and edge computing, has spurred a number of valuable survey papers. While these reviews provide essential insights, they typically focus on specific sub-domains or pairwise interactions. Some representative survey works are reviewed below. Table 1 summarizes their primary focus and key differentiating aspects alongside our current work.

Table 1.

Comparison with related surveys.

- Qu et al. [13] focus on how mobile edge intelligence (MEI) infrastructure can support the deployment (caching, delivery, training, inference) of LLMs, emphasizing resource efficiency in mobile networks. Their core contribution lies in detailing MEI mechanisms specifically tailored for LLMs, especially in caching and delivery, within 6G.

- Adam et al. [14] provide a comprehensive overview of FL applied to the broad domain of IoT, covering FL fundamentals, diverse IoT applications (healthcare, smart cities, autonomous driving), architectures (CFL, HFL, DFL), a detailed FL-IoT taxonomy, and challenges like heterogeneity and resource constraints. LLMs are treated as an emerging FL trend within the IoT ecosystem.

- Friha et al. [15] examine the integration of LLMs as a core component of edge intelligence (EI), detailing architectures, optimization strategies (e.g., compression, caching), applications (driving, software engineering, healthcare, etc.), and offering an extensive analysis of the security and trustworthiness aspects specific to deploying LLMs at the edge.

- Cheng et al. [10] specifically target the intersection of FL and LLMs, providing an exhaustive review of motivations, methodologies (pre-training, fine-tuning, Parameter-Efficient Fine-Tuning (PEFT), backpropagation-free), privacy (DP, HE, SMPC), and robustness (Byzantine, poisoning, prompt attacks) within the “Federated LLM” paradigm, largely independent of the specific application domain (like IoT) or deployment infrastructure (like MEI).

While prior reviews cover areas like edge resources for LLMs [13], FL for IoT [14], edge LLM security [15], or federated LLM methods [10], they mainly look at pairs of these technologies. This survey distinctively examines the combined power and challenges of integrating all three, including IoT, LLMs, and FL, particularly for privacy-focused intelligence at the network edge. This synergy is depicted in Figure 1. It illustrates a conceptual framework in which synergistic AI solutions emerge from the integration of IoT, LLMs, FL, and privacy-preserving techniques (PETs). Each component contributes uniquely, where IoT provides pervasive data sources, LLMs offer powerful reasoning and language capabilities, FL supports decentralized learning, and PETs ensure data confidentiality, together forming a foundation for scalable, intelligent, and privacy-aware edge AI systems.

Figure 1.

Conceptual overview of technological convergence for synergistic AI solutions. This diagram illustrates the roles of key components: IoT for data provision, LLMs for intelligence, FL for privacy-preserving distributed training, and PETs for security, together forming a foundation for advanced edge AI systems.

More specifically, this review provides a comprehensive analysis of the state of the art regarding architectures, methodologies, challenges, and potential solutions for integrating IoT, LLMs, and FL, with a specific emphasis on achieving privacy-preserving intelligence in edge computing environments. We explore architectural paradigms conducive to edge deployment based on [3], investigate key enabling techniques including PEFT methods like low-rank adaptation (LoRA) [16] and distributed training strategies such as Split Federated Learning (SFL) [17,18], and systematically analyze the inherent multifaceted challenges spanning resource constraints, communication efficiency, data/system heterogeneity, privacy/security threats, fairness, and scalability [3]. Mitigation strategies are discussed alongside critical comparisons highlighting advantages and disadvantages. We survey recent applications to illustrate practical relevance [19]. While existing surveys may cover subsets of this intersection, such as FL for IoT [20,21] or FL for LLMs [22], this review offers a unique contribution by focusing specifically on the three-way synergy (IoT + LLM + FL) and its implications for privacy-preserving edge intelligence [10]. We aim to provide a structured taxonomy of relevant techniques, critically compare their suitability for resource-constrained and distributed IoT settings, identify research gaps specifically arising from this unique technological confluence, and propose targeted future research directions essential for advancing the field of trustworthy, decentralized AI [23].

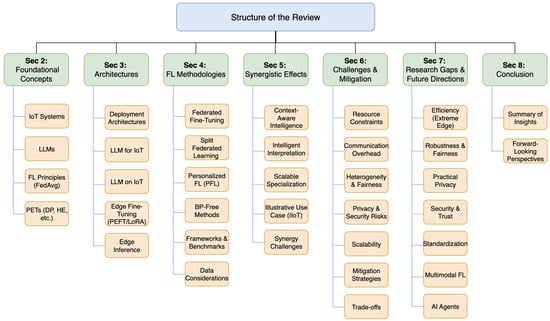

As summarized in Figure 2, the subsequent sections are structured as follows: Section 2 introduces foundational concepts related to IoT systems, LLMs, FL principles, and PETs. Section 3 discusses architectural considerations for deploying LLMs within IoT ecosystems. Section 4 examines FL methodologies specifically adapted for LLM training and fine-tuning in this context, including frameworks and data considerations. Section 5 analyzes the unique synergistic effects arising from the integration of IoT, LLMs, and FL, highlighting emergent capabilities. Section 6 provides an expanded analysis of key challenges encountered in the integration, discusses mitigation strategies, and evaluates inherent trade-offs. Section 7 identifies critical research gaps and elaborates on future research directions stemming from the synergistic integration. Section 8 concludes the review, summarizing the key insights and forward-looking perspectives on privacy-preserving, intelligent distributed systems enabled by IoT, LLMs, and FL.

Figure 2.

Overview of the review’s organizational structure. The diagram outlines the paper’s progression through its main sections: Foundational Concepts (Section 2), Architectures (Section 3), FL Methodologies (Section 4), Synergistic Effects (Section 5), Challenges and Mitigation (Section 6), Research Gaps and Future Directions (Section 7), and Conclusion (Section 8). Key topics within each section are indicated, offering a reader roadmap.

To ensure a comprehensive and systematic review, we adopted a structured literature search and selection methodology.

- Search Strategy and Databases: We conducted extensive searches in prominent academic databases, including Google Scholar, IEEE Xplore, ACM Digital Library, Scopus, and arXiv (for pre-prints). The search was performed between February 2024 and May 2025 to capture the most recent advancements.

- Search Keywords: A combination of keywords was used, including, but not limited to: (“Internet of Things” OR “IoT”) AND (“Large Language Models” OR “LLMs”) AND (“Federated Learning” OR “FL”); “LLMs on edge devices”; “Federated LLMs for IoT”; “privacy-preserving LLMs in IoT”; “LoRA for Federated Learning”; “Split Federated Learning for LLMs”; “efficient LLM deployment on IoT”; “AIoT AND LLMs”; “Industrial IoT AND Federated Learning”.

- Inclusion Criteria: Papers were included if they were peer-reviewed journal articles, conference proceedings, or highly cited pre-prints directly relevant to the integration of IoT, LLMs, and FL. We prioritized studies that discussed system architectures, methodologies, applications, challenges, or future directions related to this tripartite synergy, particularly those addressing resource constraints and privacy in IoT environments.

- Exclusion Criteria: Papers were excluded if they focused solely on one technology without significant discussion of its integration with the other two, were not written in English, or were not accessible in full text. Short abstracts, posters, and non-academic articles were also excluded.

- Literature Screening and Selection Statistics: Our initial search across the specified databases (Google Scholar, IEEE Xplore, ACM Digital Library, Scopus, and arXiv), using keyword combinations such as ((“Internet of Things” OR “IoT”) AND (“Large Language Models” OR “LLMs”) AND (“Federated Learning” OR “FL”)) AND (“Edge Computing” OR “Privacy”), yielded 223 unique articles. After screening titles and abstracts for relevance to the tripartite synergy of IoT, LLMs, and FL, particularly in edge environments, 160 articles were retained. These 160 articles underwent a full-text review against our predefined inclusion and exclusion criteria. From this detailed assessment, 78 articles were identified as directly pertinent to the core research questions of this review and were selected for in-depth data extraction and synthesis. The final manuscript cites a total of 135 references, which encompass these 78 core articles along with foundational papers and other supporting literature.

- Bias Assessment and Mitigation: To ensure a balanced review, potential sources of bias were considered. Publication bias, the tendency to publish positive or significant results, was mitigated by including pre-prints from arXiv, allowing for the inclusion of recent and ongoing research that may not yet have undergone peer review. To counteract database bias, we utilized multiple prominent and diverse academic databases. Furthermore, keyword bias was addressed by developing a comprehensive list of search terms, including synonyms and variations, related to IoT, LLMs, FL, and their intersection with edge computing and privacy. The selection and data extraction were primarily conducted by two authors, with discrepancies resolved through discussion to minimize individual researcher bias.

- Data Extraction and Synthesis: Relevant information regarding methodologies, challenges, proposed solutions, applications, and future trends was extracted from the selected papers. This information was then synthesized to identify common themes, research gaps, and the overall state of the art, forming the basis of this review.

2. Foundational Concepts

This section lays the groundwork for our review by introducing the fundamental concepts underpinning the integration of IoT, LLMs, and FL. We briefly define each core technology, including IoT systems and their characteristics in advanced networks, the capabilities and challenges of LLMs, the principles of FL such as FedAvg, and key PETs such as differential privacy (DP) and homomorphic encryption (HE). Understanding these foundational elements is crucial for appreciating the synergistic approach and addressing the complexities discussed in later sections.

2.1. IoT in Advanced Networks

The IoT encompasses vast networks of interconnected physical objects and devices, enabling them to collect, exchange, and act upon data, often without direct human intervention [24,25]. This ecosystem is characterized by its massive scale and significant heterogeneity in terms of hardware capabilities, power sources, connectivity, and the types of data generated in real time [6], as summarized in Table 2. To better understand the context for integrating advanced AI, it is useful to consider key IoT sub-domains:

- Massive IoT (MIoT): This segment focuses on connecting a very large number of low-cost, low-power devices (e.g., in smart metering or environmental monitoring) that typically transmit small amounts of data infrequently. Key challenges include ensuring scalability to billions of devices, long battery life, and managing connectivity for devices with severely limited local processing capabilities. The data, while individually small, can be voluminous in aggregate [26].

- Industrial IoT (IIoT): Applied in sectors like manufacturing and energy, IIoT prioritizes high reliability, low latency, and robust security for critical operations such as predictive maintenance and process automation. IIoT systems often generate large volumes of high-frequency, time-sensitive data from sophisticated sensors and machinery, which is driving a strong trend towards edge computing for localized processing and real-time analytics [27].

- Artificial Intelligence of Things (AIoT): AIoT signifies the convergence of AI technologies with IoT infrastructure, aiming to embed AI capabilities, including machine learning and potentially Large Language Models (LLMs), into IoT devices, edge gateways, or associated platforms. This facilitates intelligent decision-making and autonomous operations across diverse applications (e.g., smart homes, intelligent transportation). A primary challenge in AIoT is managing the computational demands of AI models on typically resource-constrained IoT hardware [28].

Table 2.

Comparison of IoT characteristics.

| Characteristic | Massive IoT (MIoT) | Industrial IoT (IIoT) | Artificial Intelligence of Things (AIoT) | General Consumer IoT |
|---|---|---|---|---|
| Primary Goal | Wide-scale, low-cost data collection from numerous simple devices [29] | High-reliability, low-latency control and monitoring of critical industrial processes | Enhanced automation, intelligent decision-making, and adaptive behavior through AI/ML at the edge/device | Convenience, automation, and enhanced user experience in daily life |
| Typical Applications | Smart metering, environmental monitoring, asset tracking, smart agriculture (large-scale sensor networks) | Manufacturing automation (PLCs, SCADA), predictive maintenance, robotics, process control, smart grids | Smart surveillance (intelligent video analytics), autonomous vehicles/drones, advanced robotics, personalized healthcare monitors, smart retail | Smart home devices (lights, thermostats, speakers), wearables (fitness trackers), connected appliances |
| Data Types and Volume | Small data packets, often infrequent; high volume due to massive device numbers; primarily sensor readings (temperature, humidity, location, status) | Time-series sensor data (vibration, pressure, temperature), control signals, machine status, production data; moderate to high volume per device, often continuous | Multimodal data (video, audio, sensor fusion, text), complex features extracted by AI models; volume varies greatly depending on AI task [30] | User commands (voice, app), sensor data (activity, environment), media streams; volume varies, can be high for media |
| Network Topology and Connectivity | LPWAN (LoRaWAN, NB-IoT, Sigfox), cellular IoT; star or mesh topologies; focus on long range, low power | Wired (Industrial Ethernet, Profibus), reliable wireless (e.g., private 5G, Wi-Fi HaLow); often deterministic networks; focus on reliability, low latency | Diverse: Wi-Fi, 5G/6G, Bluetooth, Zigbee, wired; edge-centric or device-to-device communication; focus on bandwidth and latency for AI processing | Wi-Fi, Bluetooth, Zigbee, Z-Wave; typically star or mesh connected to a home hub/router; focus on ease of use and interoperability |
| Key Constraints and Challenges | Extreme low power, low cost per device, massive scalability, simple device management, intermittent connectivity | Ultra-high reliability, low and deterministic latency, security against cyber–physical attacks, harsh operating environments, interoperability of legacy systems [31] | Computational power for AI on device/edge, energy for AI processing, real-time AI inference, complexity of AI model deployment and management, data quality for AI | User privacy, security vulnerabilities, ease of setup and use, interoperability between vendor ecosystems, device cost |
| LLM/FL Relevance | FL for anomaly detection across massive datasets, simple status summarization by LLMs (if data aggregated); SFL for very basic feature extraction | FL for predictive maintenance models, LLMs for analyzing maintenance logs and generating reports, LLMs for human–machine interfaces (NL queries about machine status) | FL for training sophisticated AI models (e.g., vision, speech) at the edge, LLMs for complex scene understanding, natural language interaction, and autonomous decision-making | FL for personalized models (e.g., smart home routines), LLMs for voice assistants and intuitive control; SFL for privacy-preserving on-device learning [32] |

Across these varied IoT deployments, and particularly as AIoT applications become more sophisticated, the inherent resource limitations (such as CPU, memory, battery, and power budgets) of many end devices and even edge nodes represent a primary bottleneck. Executing complex AI models, such as LLMs, directly at the extreme edge is thus particularly challenging [33], underscoring the critical need for resource-efficient AI techniques, including PEFT and FL, which are central to this review.

2.2. Large Language Models

LLMs are deep learning models, primarily Transformer-based [34], possessing billions of parameters and demonstrating powerful emergent capabilities derived from extensive pre-training [1,35]. They typically undergo fine-tuning for task adaptation [36]. Their significant size imposes high computational costs for training and inference, making deployment on standard IoT hardware challenging [4]. Ethical considerations regarding potential biases and responsible use are also critical [5,37].
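To make the size argument concrete, the sketch below estimates only the memory needed to hold model weights, computed as parameter count times bytes per parameter. The 7-billion-parameter figure, the precision table, and the function name are illustrative assumptions; activations, KV caches, and optimizer state, which add substantially more memory (especially during training), are deliberately ignored.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: int, precision: str) -> float:
    """Return the memory (GiB) needed just to store the model weights.

    Activations, KV cache, and optimizer state are ignored, so real
    requirements are higher, particularly for training.
    """
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    # Hypothetical 7-billion-parameter model.
    for precision in ("fp32", "fp16", "int8", "int4"):
        gib = weight_memory_gib(7_000_000_000, precision)
        print(f"{precision}: {gib:.1f} GiB")
```

Under these assumptions a 7B-parameter model needs roughly 26 GiB for its weights at fp32 and still about 3.3 GiB at int4, far more than many microcontroller-class IoT devices provide.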

2.3. Federated Learning

FL enables collaborative training on decentralized data [9]. The most widely known FL algorithm is Federated Averaging (FedAvg) [9,38,39]. In each communication round $t$, clients receive the current global model weights $w_t$ from the central server. $K$ selected clients then train the model locally on their own data for $E$ epochs and obtain updated local weights $w_{t+1}^{k}$. The server aggregates these local weights to produce the updated global model $w_{t+1}$, as defined in (1):

$$w_{t+1} = \sum_{k=1}^{K} \frac{n_k}{n} \, w_{t+1}^{k} \qquad (1)$$

where $n_k$ is the number of data points on client $k$, and $n = \sum_{k=1}^{K} n_k$ is the total number of data points across the selected clients [40]. This weighted average gives more importance to updates from clients with more data. The adoption of FL, particularly in sensitive or distributed environments like IoT, is driven by several key advantages over traditional centralized approaches [7]:

- Enhanced Privacy: Data remains localized on user devices, reducing risks associated with central data aggregation.

- Communication Efficiency: Transmitting model updates instead of raw data significantly reduces network load.

- Utilizing Distributed Resources: Leverages the computational power available at the edge devices [41].
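The FedAvg aggregation step in (1) reduces to a sample-size-weighted average of client weights. The following is a minimal sketch, assuming each client's model has already been flattened into a plain list of floats (practical frameworks aggregate tensor by tensor); the function name is hypothetical.

```python
from typing import List, Sequence


def fedavg(client_weights: Sequence[Sequence[float]],
           client_sizes: Sequence[int]) -> List[float]:
    """Weighted average of client weight vectors following Eq. (1).

    client_weights[k] plays the role of w_{t+1}^k (flattened) and
    client_sizes[k] the role of n_k, the client's local sample count.
    """
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one size per client")
    n = sum(client_sizes)  # total samples across the selected clients
    dim = len(client_weights[0])
    global_w = [0.0] * dim
    for w_k, n_k in zip(client_weights, client_sizes):
        if len(w_k) != dim:
            raise ValueError("all clients must share the same model shape")
        for i, value in enumerate(w_k):
            global_w[i] += (n_k / n) * value  # weight by n_k / n
    return global_w
```

For example, `fedavg([[1.0, 2.0], [3.0, 4.0]], [1, 3])` gives the second client three times the weight of the first, yielding `[2.5, 3.5]`.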

While FedAvg provides a foundational approach, practical FL implementations involve several key characteristics, architectural choices, and challenges:

- CFL vs. DFL: Centralized FL (CFL) uses a server for coordination and aggregation, offering simplicity but creating a potential bottleneck and single point of failure [42]. Decentralized FL (DFL) employs peer-to-peer communication, potentially increasing robustness and scalability for certain network topologies (like mesh networks common in IoT scenarios) but adding complexity in coordination and convergence analysis [43].

- Non-IID Data: A central challenge in FL stems from heterogeneous data distributions across clients, commonly referred to as Non-Independent and Identically Distributed (Non-IID) data [44]. This means the statistical properties of data significantly vary between clients; for instance, clients might hold data with different label distributions (label skew) or different feature characteristics for the same label (feature skew). Such heterogeneity can substantially degrade the performance of standard algorithms like FedAvg, as the single global model aggregated from diverse local models may not generalize well to each client’s specific data distribution [7].
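Label skew of the kind described above is commonly simulated in FL experiments by drawing, for each class, the share given to each client from a Dirichlet distribution. The sketch below is one such recipe, not a method prescribed by the surveyed works; the concentration parameter `alpha` and the function name are illustrative assumptions.

```python
import random
from typing import Dict, List


def dirichlet_label_skew(labels: List[int], num_clients: int,
                         alpha: float, seed: int = 0) -> Dict[int, List[int]]:
    """Assign sample indices to clients with Dirichlet label skew.

    For every class, the fraction of that class given to each client is
    drawn from Dirichlet(alpha, ..., alpha). Small alpha (e.g. 0.1)
    concentrates each class on a few clients (strongly non-IID); large
    alpha approaches an IID split.
    """
    rng = random.Random(seed)
    by_class: Dict[int, List[int]] = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)

    parts: Dict[int, List[int]] = {c: [] for c in range(num_clients)}
    for indices in by_class.values():
        rng.shuffle(indices)
        # A Dirichlet sample is a vector of Gamma(alpha, 1) draws, normalized.
        draws = [rng.gammavariate(alpha, 1.0) for _ in range(num_clients)]
        total = sum(draws)
        if total == 0.0:  # guard against underflow for very small alpha
            draws, total = [1.0] * num_clients, float(num_clients)
        # Convert cumulative proportions into cut points over this class.
        start, cum = 0, 0.0
        for c in range(num_clients):
            cum += draws[c] / total
            if c == num_clients - 1:
                end = len(indices)  # last client takes the remainder
            else:
                end = round(cum * len(indices))
            parts[c].extend(indices[start:end])
            start = end
    return parts
```

Every index is assigned to exactly one client; only the per-client label mix changes with `alpha`.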

2.4. Privacy-Preserving Techniques

FL’s privacy benefits can be further enhanced using PETs, with significant advantages and disadvantages, particularly relevant in the resource-constrained IoT context:

Differential Privacy (DP): DP provides a formal, mathematical definition of privacy guarantees [45,46]. A randomized mechanism $\mathcal{M}$ satisfies $(\epsilon, \delta)$-DP if, for any two adjacent datasets $D$ and $D'$ (differing by at most one element), and for any possible subset of outputs $S$, the following inequality holds:

$$\Pr[\mathcal{M}(D) \in S] \le e^{\epsilon} \cdot \Pr[\mathcal{M}(D') \in S] + \delta$$

where $\epsilon$ is the privacy budget, and $\delta$ can be loosely interpreted as the probability that the strict $\epsilon$-DP guarantee is violated. The privacy budget $\epsilon$ is a fundamental concept in differential privacy that quantifies the maximum amount of information leakage or privacy loss permitted in a privacy-preserving mechanism. A smaller privacy budget (a smaller $\epsilon$ value) indicates stronger privacy by limiting the influence of any single data point on the output, thereby making it harder to infer individual information. However, this typically results in lower utility of the data or model, as more noise is required to achieve stronger privacy. In the context of Federated Learning with LLMs, the privacy budget must be carefully managed across multiple rounds of training and participating clients to balance privacy protection with model performance, especially in sensitive IoT applications like healthcare or smart homes. The parameter $\delta$ is typically set to a very small value (e.g., $\delta < 1/n$, the inverse of the dataset size $n$). This definition ensures that the output distribution of the mechanism is statistically similar regardless of the presence or absence of any single individual’s data [47]. DP guarantees are commonly achieved by adding carefully calibrated noise (e.g., following a Gaussian or Laplace distribution) to function outputs, gradients, or model updates, as implemented in algorithms like DP-SGD [48].
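The noise calibration described above can be illustrated for a single FL round under central DP, where a trusted server clips each client update to a maximum L2 norm, sums the clipped updates, and adds Gaussian noise. This is a minimal sketch, not DP-SGD itself: it assumes the classic Gaussian-mechanism calibration $\sigma = \Delta\sqrt{2\ln(1.25/\delta)}/\epsilon$ (valid for $0 < \epsilon < 1$), add/remove-one-client adjacency so that the sensitivity $\Delta$ equals the clipping norm, and one round only. Real systems track the cumulative budget across rounds with a privacy accountant. Function names are hypothetical.

```python
import math
import random
from typing import List, Sequence


def gaussian_sigma(epsilon: float, delta: float, sensitivity: float) -> float:
    """Noise std of the classic Gaussian mechanism (one release, 0 < eps < 1)."""
    if not (0 < epsilon < 1) or not (0 < delta < 1):
        raise ValueError("calibration assumes 0 < epsilon < 1 and 0 < delta < 1")
    return sensitivity * math.sqrt(2 * math.log(1.25 / delta)) / epsilon


def clip_to_norm(update: Sequence[float], clip_norm: float) -> List[float]:
    """Scale an update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_sum(updates: Sequence[Sequence[float]], clip_norm: float,
           epsilon: float, delta: float, seed: int = 0) -> List[float]:
    """Clip each client update, sum them, and add Gaussian noise.

    Adding or removing one client changes the clipped sum by at most
    clip_norm in L2 norm, so clip_norm is used as the sensitivity.
    Covers a single round only; no multi-round accounting is done.
    """
    dim = len(updates[0])
    total = [0.0] * dim
    for u in updates:
        for i, x in enumerate(clip_to_norm(u, clip_norm)):
            total[i] += x
    sigma = gaussian_sigma(epsilon, delta, sensitivity=clip_norm)
    rng = random.Random(seed)  # illustration only, not a secure noise source
    return [x + rng.gauss(0.0, sigma) for x in total]
```

Because $\sigma$ scales as $1/\epsilon$ for a fixed $\delta$, halving the privacy budget doubles the noise standard deviation, which is the privacy–utility trade-off in its simplest form.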

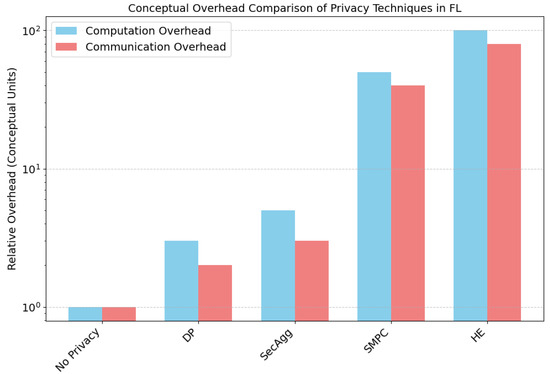

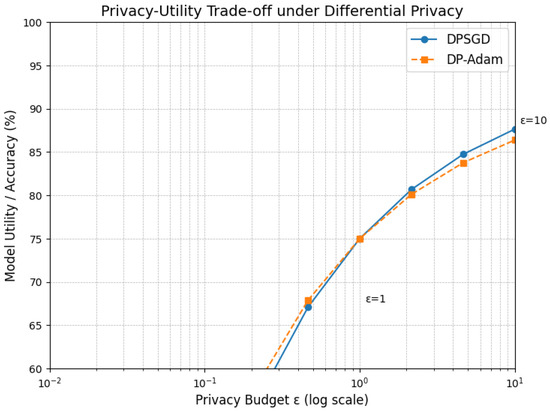

DP offers strong, mathematically rigorous privacy guarantees against inference attacks. Its computational overhead is generally lower compared to cryptographic methods like HE or SMPC. However, a key challenge of DP is the inherent trade-off between privacy and utility, where increasing noise (reducing $\epsilon$) to enhance privacy typically degrades model accuracy [49], as conceptually illustrated in Figure 3. This figure compares the relative computational and communication overheads of various privacy-preserving techniques in FL. It highlights that while DP introduces additional costs, its overhead remains modest compared to more complex methods like SMPC and HE. Notably, homomorphic encryption incurs the highest total overhead, underscoring the practicality of DP in resource-constrained edge scenarios. Managing privacy budgets effectively across rounds and clients is complex [50,51,52], and DP noise can disproportionately affect fairness for underrepresented groups [3].

Figure 3.

Conceptual illustration of the privacy–utility trade-off in DP. Stronger privacy guarantees (lower $\epsilon$) often correlate with a decrease in model utility or accuracy. The exact curve depends heavily on the dataset, model, and specific DP mechanism.

Homomorphic Encryption (HE): HE allows specific computations (e.g., addition for averaging updates) on encrypted data [53]. The server aggregates ciphertexts without decrypting them. The advantage of HE is that it provides strong confidentiality against the server (the server learns nothing about individual updates), while the aggregate is computed without added noise, so, unlike DP, it has little or no impact on model accuracy (utility) compared to non-private aggregation. However, HE can incur extremely high computational overhead for encryption, decryption, and homomorphic operations, and ciphertexts are much larger than the plaintexts they encode, significantly expanding the communication data size. Thus, HE is currently impractical for direct implementation on most resource-constrained IoT devices [7].
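The additive property that HE-based aggregation relies on can be illustrated with a toy version of Paillier, one additively homomorphic scheme; the text does not prescribe a specific scheme. The sketch assumes tiny hard-coded primes, Python's non-cryptographic random generator, and a fixed-point scale of 1000, so it is insecure and only shows that the server can sum encrypted updates without seeing them. All names are hypothetical.

```python
import math
import random
from typing import List

# Toy Paillier key: these primes are far too small for real security.
P, Q = 999_983, 1_000_003
N = P * Q
N2 = N * N
G = N + 1                                            # standard simple generator
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)    # lcm(p - 1, q - 1)
MU = pow(LAM, -1, N)                                 # valid because G = N + 1
SCALE = 1000                                         # fixed-point encoding scale


def encrypt(m: int, rng: random.Random) -> int:
    """Encrypt a (possibly negative) integer m under the public key (N, G)."""
    while True:
        r = rng.randrange(1, N)
        if math.gcd(r, N) == 1:
            break
    return (pow(G, m % N, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    """Decrypt with the private key (LAM, MU) and map back to a signed int."""
    m = ((pow(c, LAM, N2) - 1) // N) * MU % N
    return m - N if m > N // 2 else m


def add_ciphertexts(cs: List[int]) -> int:
    """Multiplying Paillier ciphertexts adds the underlying plaintexts."""
    acc = 1
    for c in cs:
        acc = (acc * c) % N2
    return acc


def secure_sum(updates: List[List[float]], seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    # Each client encrypts its fixed-point-encoded update element-wise.
    encrypted = [[encrypt(round(x * SCALE), rng) for x in u] for u in updates]
    # The server combines ciphertexts coordinate-wise without decrypting.
    summed = [add_ciphertexts(list(col)) for col in zip(*encrypted)]
    # Only the key holder (not the server) decrypts the aggregate.
    return [decrypt(c) / SCALE for c in summed]
```

Each plaintext value becomes an element of $\mathbb{Z}_{N^2}$, so with a realistic 2048-bit modulus every ciphertext is up to 4096 bits, which illustrates the communication expansion noted above.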

Secure Multi-Party Computation (SMPC): SMPC enables multiple parties to jointly compute a function, such as the sum of updates, using cryptographic protocols like secret sharing, without revealing their private inputs [54]. The primary advantage of SMPC lies in its strong privacy guarantees achieved by distributing trust among participants, including potentially the server and clients, with no impact on model accuracy [55]. However, SMPC protocols often require complex multi-round interactions, leading to significant communication overhead. Furthermore, assumptions of synchronous participation or the need for fault tolerance mechanisms add complexity, posing challenges for deployment in dynamic IoT environments [3].
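Secret sharing, named above as a common SMPC building block, can be sketched for the one function FL aggregation needs: a sum. The sketch assumes additive secret sharing over a public modulus, one share-holding party per client, honest-but-curious participants, and no dropouts; it omits the multi-round networking and fault tolerance that make real protocols expensive. The modulus, scale, and function names are illustrative assumptions.

```python
import random
from typing import List

MODULUS = 2**61 - 1  # public modulus for share arithmetic (illustrative choice)
SCALE = 1000         # fixed-point scale for real-valued inputs


def share(value: int, num_parties: int, rng: random.Random) -> List[int]:
    """Split value into random shares that sum to it modulo MODULUS."""
    shares = [rng.randrange(MODULUS) for _ in range(num_parties - 1)]
    shares.append((value - sum(shares)) % MODULUS)
    return shares


def decode(x: int) -> int:
    """Map an element of Z_MODULUS back to a signed integer."""
    return x - MODULUS if x > MODULUS // 2 else x


def smpc_sum(client_values: List[float], seed: int = 0) -> float:
    rng = random.Random(seed)  # a real protocol would use a secure RNG
    k = len(client_values)
    # received[j][i] is the share that party j holds from client i;
    # any single share is uniformly random and reveals nothing alone.
    received = [[0] * k for _ in range(k)]
    for i, v in enumerate(client_values):
        for j, s in enumerate(share(round(v * SCALE), k, rng)):
            received[j][i] = s
    # Each party adds the shares it holds, locally.
    partial_sums = [sum(row) % MODULUS for row in received]
    # Combining the partial sums reveals only the total.
    return decode(sum(partial_sums) % MODULUS) / SCALE
```

For example, `smpc_sum([0.5, -0.2, 0.3])` returns the total `0.6`, while no party ever holds any client's raw value.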

Secure Aggregation: Secure Aggregation utilizes specialized protocols, often based on secret sharing or lightweight cryptography, optimized specifically for the FL aggregation task [56]. These protocols allow the server to securely compute only the sum or average of client updates [57]. Compared to general HE or SMPC, Secure Aggregation is significantly more efficient computationally and communication-wise for this specific task, leading to its widespread adoption in practical FL systems. Nevertheless, while it protects individual updates from the server during the aggregation phase, it does not shield the final aggregated result from potential inference attacks, nor does it secure the updates during transmission unless combined with additional encryption methods.
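The pairwise-masking idea behind many Secure Aggregation protocols can be sketched as follows: every pair of clients derives a shared random mask that one adds and the other subtracts, so all masks cancel in the sum and the server learns only the aggregate. The sketch assumes a pre-shared seed per client pair (a hypothetical stand-in for key agreement), Python's non-cryptographic PRNG, and no client dropouts; production protocols add key agreement, secret-shared seeds for dropout recovery, and authenticated channels.

```python
import random
from typing import Dict, List, Tuple

MODULUS = 2**32  # masked values live in Z_{2^32}
SCALE = 1000     # fixed-point scale for real-valued updates


def pairwise_seeds(client_ids: List[int],
                   rng: random.Random) -> Dict[Tuple[int, int], int]:
    """Hypothetical stand-in for key agreement: one shared seed per pair."""
    return {(i, j): rng.randrange(2**63)
            for i in client_ids for j in client_ids if i < j}


def mask_update(cid: int, update: List[float], client_ids: List[int],
                seeds: Dict[Tuple[int, int], int]) -> List[int]:
    """Encode an update and add or subtract one pairwise mask per peer."""
    masked = [round(x * SCALE) % MODULUS for x in update]
    for other in client_ids:
        if other == cid:
            continue
        pair = (min(cid, other), max(cid, other))
        prg = random.Random(seeds[pair])  # both peers regenerate the same mask
        mask = [prg.randrange(MODULUS) for _ in update]
        sign = 1 if cid < other else -1   # lower id adds, higher id subtracts
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: List[List[int]]) -> List[float]:
    """Server side: summing the masked vectors cancels every pairwise mask."""
    total = [sum(col) % MODULUS for col in zip(*masked_updates)]
    # Map back from Z_{2^32} to signed integers, then undo the scaling.
    return [(t - MODULUS if t >= MODULUS // 2 else t) / SCALE for t in total]
```

Each masked vector on its own looks random to the server; only the sum over all participating clients is meaningful, which is why dropout handling is the hard part of real protocols.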

Table 3 provides a comparative summary of these key privacy-preserving techniques, highlighting their mechanisms, pros, and cons within the FL context. The practical choice often involves secure aggregation, potentially combined with DP for stronger client-level guarantees, or relies on trust in the server, depending heavily on the threat model, system capabilities, and regulatory environment (e.g., GDPR, HIPAA constraints on data processing and transfer) [3,58].

Table 3.

Comparison of key privacy-preserving techniques in the FL context.

3. LLM-Empowered IoT Architecture for Distributed Systems

Having established the foundational concepts of IoT, LLMs, and FL in the preceding section, this section transitions to explore the architectural frameworks necessary for effectively deploying LLM-empowered IoT systems within distributed environments. We begin by outlining a general multi-tier (Cloud–Edge–Device) architecture that balances computational demands with data locality and latency requirements. Subsequently, we delve into two key operational perspectives: first, how LLMs can augment and enhance the capabilities of IoT systems (termed “LLM for IoT”) by enabling intelligent interfaces, advanced data analytics, and automated control; second, the crucial strategies and optimization techniques for efficiently running LLMs on or near resource-constrained IoT devices (termed “LLM on IoT”), covering essential aspects like edge fine-tuning and edge inference.

3.1. Architectural Overview

Deploying LLMs within IoT often favors multi-tier architectures (Cloud–Edge–Device) to balance computation, latency, and data locality [33]. This involves strategically placing LLM-related tasks: heavy pre-training in the cloud, fine-tuning and inference closer to the edge, and potentially highly optimized inference on capable end devices [25]. This architecture supports both leveraging LLMs for IoT enhancement (“LLM for IoT”) and efficiently managing LLMs within IoT constraints (“LLM on IoT”) [10].

3.2. LLM for IoT

LLMs can significantly enhance IoT system capabilities through the following:

- Intelligent Interfaces and Interaction: Enabling sophisticated natural language control (e.g., complex conditional commands for smart environments) and dialogue-based interaction with IoT systems for status reporting or troubleshooting [59].

- Advanced Data Analytics and Reasoning: Fusing data from multiple sensors (e.g., correlating camera feeds with environmental sensor data for scene understanding in smart cities), performing complex event detection, predicting future states (e.g., equipment failure prediction in IIoT based on subtle degradation patterns), and providing causal explanations for system behavior.

- Automated Optimization and Control: Learning complex control policies directly from high-dimensional sensor data for optimizing resource usage (e.g., dynamic energy management in buildings considering real-time occupancy, weather forecasts, and energy prices) or network performance (e.g., adaptive traffic routing in vehicular networks).

3.3. LLM on IoT: Deployment Strategies

Efficiently running LLMs on or near IoT devices requires optimization at two stages. In the training stage, PEFT is the typical strategy for adapting pre-trained models at the edge, while in the inference stage, model compression, split inference, and caching reduce the cost of serving the model on edge devices. These techniques are reviewed next.

3.3.1. Edge Fine-Tuning

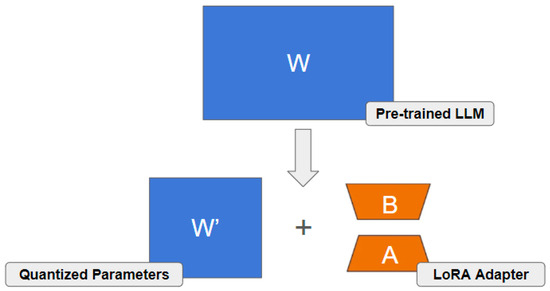

Full fine-tuning of large pre-trained models such as LLMs incurs high computational and memory costs, so local adaptation at the edge typically relies on PEFT methods. A prominent example is the popular LoRA [16]. Instead of updating the entire pre-trained weight matrix $W_0 \in \mathbb{R}^{d \times k}$, LoRA introduces two smaller, low-rank matrices, $B \in \mathbb{R}^{d \times r}$ and $A \in \mathbb{R}^{r \times k}$, where the rank $r$ is typically much smaller than $d$ or $k$ (i.e., $r \ll \min(d, k)$). The core idea is to represent the weight update as the product of these low-rank matrices ($\Delta W = BA$). During fine-tuning, the original weights $W_0$ remain frozen, and only the parameters in $B$ and $A$ are trained. This mechanism is illustrated in Figure 4. The effective weight matrix used in the forward pass is then computed as

$$W = W_0 + \Delta W = W_0 + BA.$$

This approach drastically reduces the number of trainable parameters from $d \times k$ for full fine-tuning down to only $r \times (d + k)$ for LoRA [60]. This significant reduction in parameters, memory usage, and computation makes fine-tuning large models feasible even on resource-constrained edge devices and substantially decreases communication overhead in Federated Learning scenarios where only the small $B$ and $A$ matrices need to be exchanged [61].

Figure 4.

Illustration of the LoRA adapter mechanism, potentially used with quantized base model weights (as in QLoRA [62]). The large pre-trained weights (W) might be stored in a quantized format (W’), while the task-specific update is learned via the small, trainable low-rank adapter matrices (B and A).
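The reparameterization above can be sketched in plain Python. This is a minimal illustration, assuming small dense matrices stored as lists of rows; the helper names (`matmul`, `lora_effective_weight`, `trainable_params`) are hypothetical and not part of any LoRA library. Following the original LoRA paper, $B$ starts at zero so that the adapted model initially equals the pre-trained one; the scaling factor used in practical implementations is omitted to match the equation in the text.

```python
import random


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def lora_effective_weight(W0, B, A):
    """Forward-pass weight W = W0 + B @ A; W0 is frozen, only B and A are trained."""
    delta = matmul(B, A)  # (d x r) @ (r x k) -> d x k low-rank update
    return [[w + u for w, u in zip(rw, ru)] for rw, ru in zip(W0, delta)]


def trainable_params(d, k, r):
    """Trainable parameter counts: full fine-tuning (d*k) vs. LoRA (r*(d+k))."""
    return d * k, r * (d + k)


d, k, r = 4, 3, 1
rng = random.Random(0)
W0 = [[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(d)]  # frozen pre-trained weights
A = [[rng.gauss(0.0, 1.0) for _ in range(k)] for _ in range(r)]   # r x k, random init
B = [[0.0] * r for _ in range(d)]                                  # d x r, zero init
W = lora_effective_weight(W0, B, A)  # identical to W0 before any training step

full, lora = trainable_params(4096, 4096, 8)
print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

For a single 4096 × 4096 projection with r = 8, this gives 65,536 trainable parameters instead of 16,777,216, about 0.39% of the full count.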

Recent advancements in LoRA have produced variants that further address challenges pertinent to resource-constrained IoT and FL settings. For instance, DoRA (Weight-Decomposed Low-Rank Adaptation) [63] extends LoRA by decomposing the pre-trained weights into magnitude and direction components: the magnitude vector is fine-tuned directly, while LoRA is applied to the directional component. This decomposition reportedly yields a learning behavior closer to that of full fine-tuning, enabling more effective training that maintains or even improves performance over standard LoRA. Such an approach can be particularly valuable in Federated Learning rounds over bandwidth-constrained IoT environments, where efficient yet impactful updates are crucial.
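The DoRA reparameterization can be written as $W' = m \cdot (W_0 + BA) / \lVert W_0 + BA \rVert_c$, where $\lVert \cdot \rVert_c$ is the column-wise L2 norm and the trainable magnitude vector $m$ is initialized to $\lVert W_0 \rVert_c$. The plain-Python sketch below follows that formula as described in the DoRA paper; it is not the reference implementation, and the function names are hypothetical. It illustrates two properties: at initialization the weight equals $W_0$, and after an update each column's norm equals the corresponding entry of $m$, which is what separates magnitude from direction.

```python
import math


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def column_norms(W):
    """L2 norm of each column of a d x k matrix."""
    return [math.sqrt(sum(row[j] ** 2 for row in W)) for j in range(len(W[0]))]


def dora_weight(W0, B, A, m):
    """W' = m * V / ||V||_c with V = W0 + B @ A (LoRA updates the direction only)."""
    BA = matmul(B, A)
    V = [[w + u for w, u in zip(rw, ru)] for rw, ru in zip(W0, BA)]
    norms = column_norms(V)
    # Rescale every column of the direction matrix V to the learned magnitude m[j].
    return [[m[j] * row[j] / norms[j] for j in range(len(row))] for row in V]


W0 = [[3.0, 0.0], [4.0, 2.0]]  # toy pre-trained weights, d = k = 2
A = [[1.0, 1.0]]               # rank r = 1
B = [[0.0], [0.0]]             # zero init, as in LoRA
m = column_norms(W0)           # magnitude initialised from W0: [5.0, 2.0]
W_init = dora_weight(W0, B, A, m)
```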

Building upon such decomposed approaches, EDoRA (Efficient DoRA) [64] aims to further optimize training efficiency. The EDoRA methodology is designed to significantly reduce the computational burden and memory footprint during the fine-tuning process, potentially involving techniques like quantization or structured pruning tailored for the decomposed components. For example, EDoRA is reported to achieve substantial reductions in communication overhead compared to standard LoRA while maintaining comparable task performance by employing sparse updating mechanisms or more aggressive compression. These characteristics make variants like EDoRA highly suitable for IoT+FL scenarios where both computational and communication efficiency are critical for practical deployment on diverse and often limited edge devices.

However, PEFT methods, including LoRA and its variants, have benefits and drawbacks. The choice of parameters, such as the rank r in LoRA, directly impacts the balance between efficiency and the model’s adaptation capacity; a very low rank might limit the model’s ability to capture complex task-specific nuances [65]. Furthermore, the generalization capability of PEFT methods, especially when adapting models to tasks significantly different from the pre-training data, compared to full fine-tuning, remains an active area of investigation [66].

3.3.2. Edge Inference

The efficiency of edge inference (prediction or generation) can be improved through the following techniques.

- On-device Inference: Utilizes model compression (quantization, pruning, distillation) [62,67]. Compression inherently risks degrading model accuracy or robustness, and the extent depends heavily on the technique and compression ratio [68].

- Co-inference/Split Inference: Divides layers between device and edge server [18]. It introduces network latency and dependency on the edge server, although it keeps raw data local. This is distinct from SFL used for training.

- Edge Caching: Reduces latency for repeated queries [3].
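To make the memory argument behind on-device compression concrete, the sketch below applies simple symmetric per-tensor quantization to a list of weights. It is an illustrative scheme chosen for brevity, assuming round-to-nearest with a single scale factor; it is not the block-wise 4-bit NormalFloat format used by QLoRA [62], and the function names are hypothetical. Storing weights in 4 bits instead of 16 removes 75% of their memory, at the price of a rounding error bounded by half the scale factor.

```python
def quantize_symmetric(weights, bits=4):
    """Map floats to signed integers in [-2^(bits-1), 2^(bits-1) - 1] using one scale."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Approximate reconstruction of the weights at inference time."""
    return [v * scale for v in q]


def memory_saving(bits, baseline_bits=16):
    """Fraction of weight memory saved relative to the baseline precision."""
    return 1 - bits / baseline_bits


weights = [0.7, -0.35, 0.1, 0.0, -0.68]
q, scale = quantize_symmetric(weights, bits=4)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```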

4. Federated Learning for Privacy-Preserving LLM Training in IoT

Having established the foundational concepts and architectural considerations, this section delves into the specific methodologies required to effectively train and adapt LLMs within distributed IoT environments using FL. We examine various techniques designed to overcome the inherent challenges of resource constraints, communication overhead, data heterogeneity, and privacy concerns that arise when integrating these powerful models with FL paradigms at the edge [61]. Key topics include core federated fine-tuning strategies tailored for LLMs, methods for personalization, alternative training approaches, essential supporting frameworks and data handling techniques, the emerging role of LLMs in aiding the FL process itself, and crucial evaluation metrics specific to this context [69]. Understanding these methodologies is crucial for realizing the practical potential of the synergistic IoT, LLM, and FL integration.

4.1. Federated Fine-Tuning of LLMs

Applying FL to fine-tune Large Language Models enables collaborative adaptation on decentralized IoT data, crucial for personalization and domain specialization while preserving privacy [23]. The integration of FL with PEFT methods, particularly LoRA, significantly reduces communication overhead by transmitting only lightweight parameter updates (typically <1% of total model parameters) [70]. Beyond CFL approaches, research is exploring decentralized fine-tuning methods [71]. For instance, Dec-LoRA is an algorithm designed for decentralized fine-tuning of LLMs using LoRA without relying on a central parameter server [72]. Experimental results suggest that Dec-LoRA can achieve performance comparable to centralized LoRA, even when facing challenges like data heterogeneity and quantization constraints, offering a potential pathway for more robust and scalable federated fine-tuning in certain network topologies [60].
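The two aggregation patterns mentioned here can be sketched as follows, assuming each client's LoRA adapters are flattened into plain lists keyed by name. `fedavg_lora` is a server-side, sample-weighted average in the style of FedAvg; `gossip_step` is a generic decentralized averaging step in which each client mixes its neighbours' adapters using a row of a mixing matrix, given only to illustrate serverless aggregation. Neither is claimed to be the exact algorithm of Dec-LoRA [72] or of the other cited works, and all names are hypothetical.

```python
def weighted_average(vectors, weights):
    """Element-wise weighted mean of equally sized lists."""
    total = sum(weights)
    return [sum(w * v[i] for v, w in zip(vectors, weights)) / total
            for i in range(len(vectors[0]))]


def fedavg_lora(client_adapters, num_samples):
    """Server step: average each adapter tensor across clients, weighted by data size."""
    return {name: weighted_average([c[name] for c in client_adapters], num_samples)
            for name in client_adapters[0]}


def gossip_step(client_adapters, mixing):
    """Serverless step: client i mixes adapters with weights mixing[i]
    (each row sums to 1; mixing[i][j] == 0 means i and j are not neighbours)."""
    return [{name: weighted_average([c[name] for c in client_adapters], row)
             for name in client_adapters[0]}
            for row in mixing]


clients = [
    {"lora_A": [1.0, 2.0], "lora_B": [0.0, 0.0]},
    {"lora_A": [3.0, 4.0], "lora_B": [1.0, 1.0]},
]
global_adapters = fedavg_lora(clients, num_samples=[1, 3])
neighbours_mixed = gossip_step(clients, mixing=[[0.5, 0.5], [0.5, 0.5]])
```

One caveat: averaging $A$ and $B$ separately does not in general equal averaging the client products $B_iA_i$, so the aggregated adapter only approximates the mean of the clients' weight updates.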

4.2. Split Federated Learning

SFL addresses the critical memory limitations on edge devices during the training phase of large models within an FL context [19]. By partitioning the model and offloading a significant portion of the computation (especially backward passes through deeper layers) to a server, SFL allows memory-constrained devices to participate [18]. Integrating LoRA further reduces the number of trainable parameters, and thus the memory and aggregation costs, on the client side [17]. However, SFL introduces latency due to the necessary exchange of activations and gradients between client and server per iteration, and its performance is sensitive to the network bandwidth and the choice of the model split point [18].
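One split training iteration can be sketched with a toy two-layer model split at a hypothetical cut layer: the device computes the first layer and sends its activations, the server finishes the forward pass, computes the loss and returns the gradient with respect to those activations, and the device completes backpropagation through its own layer. This plain-Python sketch assumes a ReLU hidden layer and a squared-error loss, uses hypothetical function names, and omits the step in which the client-side model portions are averaged across clients. The two messages per iteration are the source of the latency noted above.

```python
def client_forward(W1, x):
    """Device: layer before the cut. Returns pre-activations (kept) and activations (sent)."""
    pre = [sum(w * xi for w, xi in zip(row, x)) for row in W1]
    return pre, [max(0.0, p) for p in pre]


def server_step(w2, h, target):
    """Server: layer after the cut. Returns loss, grad of its weights, and dL/dh (sent back)."""
    y = sum(w * a for w, a in zip(w2, h))
    err = y - target
    loss = 0.5 * err ** 2
    grad_w2 = [err * a for a in h]
    grad_h = [err * w for w in w2]
    return loss, grad_w2, grad_h


def client_backward(pre, x, grad_h):
    """Device: backpropagate the received gradient through ReLU and its own layer."""
    grad_pre = [g if p > 0 else 0.0 for g, p in zip(grad_h, pre)]
    return [[g * xi for xi in x] for g in grad_pre]


def full_loss(W1, w2, x, target):
    """Reference: the same model evaluated in one place (used only for checking)."""
    _, h = client_forward(W1, x)
    return server_step(w2, h, target)[0]


W1 = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # client-side weights (3 hidden units)
w2 = [1.0, -0.5, 0.7]                         # server-side weights
x, target, lr = [1.0, 2.0], 0.3, 0.1

pre, h = client_forward(W1, x)                      # message 1: h, device -> server
loss, grad_w2, grad_h = server_step(w2, h, target)  # server forward and backward
grad_W1 = client_backward(pre, x, grad_h)           # message 2: grad_h, server -> device

w2_new = [w - lr * g for w, g in zip(w2, grad_w2)]                              # server update
W1_new = [[w - lr * g for w, g in zip(rw, rg)] for rw, rg in zip(W1, grad_W1)]  # device update
```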

4.3. Personalized Federated LLMs

PFL methods are vital for addressing client heterogeneity in FL, aiming to provide models better suited to individual client data or capabilities than a single global model [73,74]. Table 4 provides a comparative overview.

Table 4.

Comparative overview of PFL approaches for LLMs.
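As a concrete example of one common PFL pattern, parameter decoupling, the sketch below averages only the parameters designated as shared (for example, a shared LoRA adapter) while each client keeps its personal parameters (for example, a task head) on the device. This is a minimal illustration assuming parameters stored as flat lists keyed by name; the key names and the function are hypothetical, and other PFL families (meta-learning, clustering, or regularization-based methods) work differently.

```python
def personalized_round(clients, shared_keys):
    """Average the shared parameters across clients; personal parameters stay local."""
    n = len(clients)
    averaged = {
        key: [sum(c[key][i] for c in clients) / n for i in range(len(clients[0][key]))]
        for key in shared_keys
    }
    # Each client receives the new shared parameters and keeps its own personal ones.
    return [{**c, **{key: list(v) for key, v in averaged.items()}} for c in clients]


clients = [
    {"shared_lora": [1.0, 3.0], "personal_head": [10.0]},
    {"shared_lora": [3.0, 5.0], "personal_head": [-10.0]},
]
updated = personalized_round(clients, shared_keys=["shared_lora"])
```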

4.4. Backpropagation-Free Methods

These methods (e.g., zeroth-order optimization) bypass standard backpropagation, reducing peak memory usage by eliminating the need to store activations [87,88,89,90]. Limitations: They often require significantly more function evaluations (slower convergence) and can be less stable or scalable for very high-dimensional parameter spaces compared to gradient-based methods [87,91]. Their practical application in large-scale federated LLM training remains an active research topic.
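The idea behind zeroth-order training can be illustrated with a two-point (SPSA-style) estimator: perturb the parameters along a random direction $z$, evaluate the loss twice, and use $\frac{f(\theta + \epsilon z) - f(\theta - \epsilon z)}{2\epsilon} z$ as the gradient estimate. Only forward evaluations are needed, so no activations have to be kept for backpropagation. The plain-Python sketch below assumes a toy quadratic loss and hypothetical function names; methods aimed at LLMs, such as MeZO, additionally regenerate $z$ from a random seed instead of storing it. The thousands of iterations used here for a three-parameter problem also hint at the slow convergence noted above.

```python
import random


def zo_gradient(loss_fn, theta, rng, eps=1e-3):
    """Two-point estimate of the gradient along one random Gaussian direction z."""
    z = [rng.gauss(0.0, 1.0) for _ in theta]
    f_plus = loss_fn([t + eps * zi for t, zi in zip(theta, z)])
    f_minus = loss_fn([t - eps * zi for t, zi in zip(theta, z)])
    directional = (f_plus - f_minus) / (2 * eps)  # estimate of grad(f) . z
    return [directional * zi for zi in z]


def zo_sgd(loss_fn, theta, lr=0.05, steps=2000, seed=0):
    """SGD driven only by loss evaluations (no backpropagation)."""
    rng = random.Random(seed)
    for _ in range(steps):
        g = zo_gradient(loss_fn, theta, rng)
        theta = [t - lr * gi for t, gi in zip(theta, g)]
    return theta


def quadratic(theta):
    """Toy loss with its minimum at theta = (1, 1, 1)."""
    return sum((t - 1.0) ** 2 for t in theta)


theta = zo_sgd(quadratic, [0.0, 0.0, 0.0])
```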

4.5. Frameworks and Benchmarks

The practical implementation and evaluation of federated LLMs rely on specialized software frameworks and benchmarks:

Frameworks: Libraries like FedML [92] with its FedLLM component [92], Flower [93,94], FATE-LLM [95], and FederatedScope-LLM [96] provide infrastructure for simulating or deploying FL. Features relevant to IoT/edge include support for heterogeneous devices, PEFT methods (e.g., LoRA), various aggregation algorithms, security mechanisms (DP, secure aggregation), and sometimes specific optimizations for edge deployment (e.g., efficient client runtimes, handling intermittent connectivity). Selecting a framework depends on the specific research or deployment needs regarding scale, flexibility, supported models, and available privacy/security features.

Benchmarks: Standardized datasets and evaluation protocols are crucial for comparing different algorithms. Efforts like FedIT [97] focus on benchmarking federated instruction tuning. FedNLP [98] provided early benchmarks for standard NLP tasks in FL. OpenFedLLM aims to offer a comprehensive platform with multiple datasets and metrics [99]. However, benchmarks specifically capturing the complexities of real-world IoT data heterogeneity, network conditions, and device constraints for LLMs are still needed.

4.6. Initialization and Data Considerations

Effective federated LLM training depends significantly on model initialization and data handling:

Model Initialization: Starting FL from a well-pre-trained LLM, rather than random initialization, significantly improves convergence speed, final model performance, and robustness to Non-IID data [100]. It allows FL to focus on adaptation rather than learning foundational knowledge from scratch [101].

Data Processing: Handling massive, distributed datasets requires scalable tools. Libraries like Dataset Grouper aim to facilitate partitioning large datasets for FL simulation [102].

Synthetic Data Generation: When local data is scarce or highly skewed, generating synthetic data can augment training [10]. LLMs show promise for generating high-quality synthetic data that reflects complex real-world distributions, potentially overcoming limitations of earlier generative models used in FL [103]. Frameworks like GPT-FL explore using LLM-generated data to aid FL. Selecting relevant public data using distribution matching techniques can also enhance privacy-preserving training via knowledge distillation [104].

4.7. LLM-Assisted Federated Learning

Beyond using FL to train LLMs, the reciprocal relationship where LLMs assist FL is also emerging [23]:

Mitigating Data Heterogeneity: LLMs pre-trained on vast datasets can generate high-quality synthetic data reflecting diverse distributions. This synthetic data can be used centrally or shared (with privacy considerations) to augment clients’ local datasets, helping to alleviate the negative impacts of Non-IID data on FL convergence [103].

Knowledge Distillation: A large, powerful LLM (potentially centrally available or trained via FL itself) can act as a “teacher” model. Its knowledge (e.g., predictions, representations) can be distilled into smaller “student” models trained by clients in the FL network, improving the efficiency and performance of client models, especially on resource-constrained devices [65].
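The distillation signal is commonly implemented as a temperature-softened Kullback-Leibler divergence between the teacher's and the student's output distributions, scaled by $T^2$. The sketch below assumes raw logits over a shared label set or vocabulary and uses hypothetical function names; in an FL setting, each client would add this term to its local task loss.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax with temperature scaling."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """T^2 * KL(p_teacher || p_student) on temperature-softened distributions."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    return temperature ** 2 * kl


teacher = [2.0, 1.0, 0.1]
close_student = [1.9, 1.1, 0.2]
far_student = [0.1, 1.0, 2.0]
```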

Intelligent FL Orchestration: LLMs could potentially be used for more sophisticated FL management tasks, such as predicting client resource availability, assessing data quality for client selection, or even dynamically tuning FL hyperparameters based on observed training dynamics.

4.8. Evaluation Metrics

Evaluating federated LLM systems requires a multifaceted approach beyond standard accuracy measures, particularly in the IoT context (see Table 5). Developing standardized benchmarks that allow for consistent evaluation across these diverse metrics is a key challenge and future direction [97]. Table 5 summarizes the key categories of evaluation, including model utility, efficiency, privacy, fairness, and scalability, each with specific metrics tailored to the constraints and demands of federated IoT settings. For instance, communication and computation efficiency metrics reflect the limited bandwidth, energy, and processing power typical of edge devices. Privacy is evaluated through both theoretical guarantees (such as differential privacy parameters) and empirical attack resistance, while fairness and scalability ensure inclusiveness and robustness across heterogeneous clients. Together, these metrics offer a comprehensive framework for assessing the real-world feasibility and trustworthiness of federated LLM systems deployed across diverse and distributed IoT environments.

Table 5.

Key evaluation metrics for federated LLM systems in IoT contexts.
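Two of the efficiency and fairness metrics discussed above can be computed directly. The sketch below estimates the per-client upload size of LoRA adapters, assuming $r(d+k)$ parameters per adapted matrix and 16-bit values, and summarizes a per-client accuracy distribution by its mean, worst-client value, and spread. The configuration (32 adapted 4096 × 4096 matrices, rank 8) and the accuracy values are illustrative, not measurements, and the function names are hypothetical.

```python
import statistics


def adapter_upload_bytes(d, k, r, num_adapted_matrices, bytes_per_param=2):
    """Bytes one client uploads per round when only LoRA adapters are sent."""
    return num_adapted_matrices * r * (d + k) * bytes_per_param


def fairness_summary(client_accuracies):
    """Average utility plus two fairness indicators across clients."""
    return {
        "mean": statistics.mean(client_accuracies),
        "worst_client": min(client_accuracies),
        "std": statistics.pstdev(client_accuracies),
    }


upload = adapter_upload_bytes(4096, 4096, 8, num_adapted_matrices=32)
full_upload = 32 * 4096 * 4096 * 2  # the same matrices sent in full at 16-bit
summary = fairness_summary([0.91, 0.84, 0.62, 0.88])
```

In this configuration, one adapter upload is 4,194,304 bytes (4 MiB), 1/256 of sending the same matrices in full.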

5. Synergistic Effects of Integrating IoT, LLMs, and Federated Learning

The previous sections have laid the groundwork by introducing the core concepts and individual capabilities of IoT, LLM and FL. While pairwise integrations—such as applying LLMs to IoT data analytics [105], using FL for privacy-preserving IoT applications [20,21], or employing FL to train LLMs [23]—offer significant advancements, they often encounter inherent limitations [10]. Centralized LLM processing of IoT data raises critical privacy and communication bottlenecks [13]; traditional FL models struggle with the complexity and scale of raw IoT data [14]; and federated LLMs without direct access to real-world IoT streams lack crucial grounding and context [7].

This section argues that the true transformative potential lies in the synergistic convergence of all three technologies, IoT, LLMs, and FL, explicitly enhanced by Privacy-Enhancing Technologies [49]. This three-way integration creates a powerful ecosystem where the strengths of each component compensate for the weaknesses of the others, enabling capabilities and solutions that are fundamentally unattainable or significantly less effective otherwise [45]. We posit that this synergy is not merely additive but multiplicative, paving the way for a new generation of advanced, privacy-preserving, context-aware distributed intelligence operating directly at the network edge [15]. We will explore this “1 + 1 + 1 > 3” effect through three core synergistic themes, building upon the motivations discussed in works like [56].

5.1. Theme 1: Privacy-Preserving, Context-Aware Intelligence from Distributed Real-World Data

The Challenge: LLMs thrive on vast, diverse, and timely data to develop nuanced understanding and maintain relevance [8]. IoT environments generate precisely this type of data—rich, real-time, multimodal streams reflecting the complexities of the physical world [7,49]. However, this data is inherently distributed across countless devices and locations [14], and often contains highly sensitive personal, operational, or commercial information, making centralized collection legally problematic (e.g., GDPR, HIPAA compliance [21]), technically challenging (bandwidth costs, latency [13]), and ethically undesirable [5,22]. Relying solely on public datasets limits LLM grounding and domain specificity [10].

The Synergy (IoT + LLM + FL): Federated Learning acts as the crucial enabling mechanism [9] that allows LLMs to tap into the rich, distributed data streams generated by IoT devices without compromising data locality and privacy [15]. IoT provides the continuous flow of real-world, multimodal data (the “what” and “where”) [14]. FL provides the privacy-preserving framework for collaborative learning across these distributed sources (the “how”) [10]. The LLM provides the advanced cognitive capabilities to learn deep representations, understand context, and extract meaningful intelligence from this data (the “why” and “so what?”) [69].

Emergent Capability: This synergy empowers LLMs to maintain robust general capabilities while dynamically adapting to specific real-world contexts. By leveraging fresh, diverse, and privacy-sensitive IoT data, these models achieve continuous grounding in evolving environments. This allows for the following:

- Hyper-Personalization: Training models tailored to individual users or specific environments (e.g., a smart home assistant learning user routines from sensor data via FL [14]).

- Real-time Domain Adaptation: Continuously fine-tuning LLMs (e.g., using PEFT like LoRA [61]) with the latest IoT data to adapt to changing conditions (e.g., adapting a traffic prediction LLM based on real-time sensor feeds from different city zones [106]).

- Enhanced Robustness: Learning from diverse, real-world IoT data sources via FL can make LLMs more robust to noise and domain shifts compared to training solely on cleaner, but potentially less representative, centralized datasets [44].

5.2. Theme 2: Intelligent Interpretation and Action Within Complex IoT Environments

The Challenge: IoT environments produce data that is often complex, noisy, unstructured, and multimodal (e.g., raw sensor time series, machine logs, video feeds, acoustic signals) [14]. Traditional FL, while preserving privacy, often employs simpler models that struggle to extract deep semantic meaning or perform complex reasoning on such data [49]. Conversely, powerful LLMs, while capable of understanding complexity [15], lack the direct connection to the physical world for sensing and actuation and struggle with distributed private data access [107].

The Synergy (IoT + LLM + FL): LLMs bring sophisticated natural language understanding, reasoning, and generation capabilities to the table [1], allowing the system to interpret intricate patterns, correlate information across different IoT modalities, and even generate human-readable explanations or reports [105]. FL provides the means to train these powerful LLMs collaboratively using the relevant complex IoT data distributed across the network [61]. Crucially, IoT devices provide the physical grounding, acting as the sensors collecting the complex data and potentially as actuators executing decisions derived from LLM insights [3]. Furthermore, LLMs can enhance the FL process itself by intelligently guiding client selection based on interpreting the relevance or quality of their IoT data, or even assisting in designing personalized FL strategies [15].

Emergent Capability: The combination allows for systems that can deeply understand complex physical environments and interact intelligently within them. This goes beyond simple data aggregation or pattern matching:

- Contextual Anomaly Detection: Identifying subtle anomalies in IIoT machine behavior by correlating multi-sensor data and unstructured logs, understood and explained by an LLM trained via FL [108].

- Causal Reasoning in Smart Cities: Using FL-trained LLMs to analyze diverse IoT data (traffic, pollution, events) to infer causal relationships and predict cascading effects [14,106].

- Goal-Oriented Dialogue with Physical Systems: Enabling users to interact with complex IoT environments (e.g., a smart factory floor) using natural language, where an LLM interprets the request, queries relevant IoT data (potentially involving FL for aggregation), and generates responses or even commands for actuators [15].

5.3. Theme 3: Scalable and Adaptive Domain Specialization at the Edge

The Challenge: Deploying large, general-purpose LLMs directly onto resource-constrained IoT devices is often infeasible due to their size and computational requirements [62]. While smaller, specialized models can run on the edge, training them from scratch for every specific IoT application or location is inefficient and does not leverage the power of large pre-trained models [15]. Centralized fine-tuning of large models for specific domains requires access to potentially private or distributed IoT data [13].

The Synergy (IoT + LLM + FL): FL combined with PEFT techniques like LoRA [70] provides a highly scalable and resource-efficient way to specialize pre-trained LLMs for diverse IoT domains using distributed edge data [13,60]. IoT devices/edge servers provide the specific local data needed for adaptation [14]. PEFT ensures that only a small fraction of parameters need to be trained and communicated during the FL process, drastically reducing computation and communication overhead [61,82]. The base LLM provides the powerful foundational knowledge, while FL+PEFT enables distributed, privacy-preserving specialization [71].

Emergent Capability: This synergy enables the mass customization and deployment of powerful, specialized AI capabilities directly within diverse IoT environments. Key outcomes include the following:

- Locally Optimized Performance: Models fine-tuned via FL+PEFT on local IoT data will likely outperform generic models for specific edge tasks (e.g., a traffic sign recognition LLM adapted via FL to local signage variations [14]).

- Rapid Adaptation: New IoT devices or locations can quickly join the FL process and adapt the shared base LLM using PEFT without needing massive data transfers or full retraining [10].

- Resource-Aware Deployment: Allows for leveraging powerful base LLMs even when end devices can only handle the computation for small PEFT updates during FL training [79], or optimized inference models (potentially distilled using FL-trained knowledge [86]). Frameworks like Split Federated Learning can further distribute the load [17,18].

5.4. Illustrative Use Case: Predictive Maintenance in Federated Industrial IoT

Consider a scenario involving multiple manufacturing plants belonging to different subsidiaries of a large corporation, or even different collaborating companies [108]. Each plant operates similar types of critical machinery (e.g., CNC machines, robotic arms) equipped with various sensors (vibration, temperature, acoustic, power consumption—the IoT component). The goal is to predict potential machine failures proactively across the entire fleet to minimize downtime and optimize maintenance schedules, while ensuring that proprietary operational data and specific machine performance characteristics from one plant are not shared with others.

Below, we summarize the limitations without synergy.

- IoT only: Basic thresholding or simple local models on sensor data might miss complex failure patterns. No collaborative learning.

- IoT + Cloud LLM: Requires sending massive, potentially sensitive sensor streams and logs to the cloud, incurring high costs, latency, and privacy risks [13].

- IoT + FL (Simple Models): Can learn collaboratively but struggles to interpret unstructured maintenance logs or complex multi-sensor correlations indicative of subtle wear patterns [14].

- LLM + FL (No IoT): Lacks real-time grounding; trained on potentially outdated or generic data, not the specific, current state of the machines [10].

To address the issues highlighted above, a synergistic solution (IoT + LLM + FL) is illustrated next.

- Data Generation: Sensors on machines continuously generate multimodal time-series data and operational logs.

- Model Choice (LLM): A powerful foundation LLM (potentially pre-trained on general engineering texts and machine manuals) is chosen as the base model. It possesses the capability to understand technical language in logs and potentially process time-series data patterns [15].

- Collaborative Fine-Tuning (FL + PEFT): FL is used to fine-tune this LLM across the plants using their local IoT sensor data and maintenance logs [69]. To manage resources and communication, PEFT (e.g., LoRA [16]) is employed. Only the small LoRA adapter updates are shared with a central FL server (or aggregated decentrally [72])—preserving privacy regarding raw data and detailed operational parameters [61].

- Intelligence and Action (LLM + IoT): The fine-tuned LLM (potentially deployed at edge servers within each plant [13]) analyzes incoming IoT data streams and logs in near-real time. It identifies complex failure precursors missed by simpler models, correlates sensor data with log entries, predicts remaining useful life, and generates concise, human-readable alerts and maintenance recommendations for specific machines [108]. These alerts can be directly integrated into the plant’s maintenance workflow system (potentially an IoT actuation).

This integrated system can achieve highly accurate, context-aware predictive maintenance across multiple entities by leveraging diverse operational data (IoT) through privacy-preserving collaborative learning (FL), powered by the deep analytical and interpretive capabilities of LLMs, all achieved efficiently using PEFT. This outcome would be significantly harder, if not impossible, to achieve with only two of the three components.

5.5. Challenges Arising from the Synergy

While powerful, the tight integration of IoT, LLMs, and FL introduces unique challenges beyond those of the individual components:

Cross-Domain Data Alignment and Fusion: Effectively aligning and fusing heterogeneous, multimodal IoT data streams within an FL framework before feeding them to an LLM requires sophisticated alignment and representation techniques [105].

Resource Allocation Complexity: Jointly optimizing computation (LLM inference/training, FL aggregation), communication (IoT data upload, FL updates), and privacy (PET overhead) across heterogeneous IoT devices, edge servers, and potentially the cloud for this integrated task is a complex optimization problem [13].

Model Synchronization vs. Real-time Needs: Balancing the need for FL model synchronization (potentially slow for large LLM updates [10]) with the real-time data processing and decision-making requirements of many IoT applications.

Emergent Security Vulnerabilities: New attack surfaces emerge at the interfaces, e.g., malicious IoT data poisoning FL training specifically to mislead the LLM’s interpretation [109], or FL privacy attacks aiming to reconstruct sensitive IoT context interpreted by the LLM [110]. Verifying the integrity of both IoT data and FL updates becomes critical [15].

5.6. Concluding Remarks on Synergy

The convergence of IoT, Large Language Models, and Federated Learning represents a fundamental paradigm shift in designing intelligent distributed systems. As demonstrated, their synergy unlocks capabilities far exceeding the sum of their individual parts. By enabling powerful LLMs to learn from diverse, real-world, privacy-sensitive IoT data through the secure framework of FL, we can create adaptive, context-aware, and specialized AI solutions deployable at the network edge. This synergy directly addresses the limitations inherent in previous approaches, paving the way for truly intelligent, efficient, and trustworthy applications across critical domains like Industrial IoT, autonomous systems, and smart infrastructure. While unique challenges arise from this tight integration, they also define fertile ground for future research focused on realizing the full, transformative potential of this powerful technological triad.

6. Key Challenges and Mitigation Strategies

In this section, we identify the key challenges of the synergy of IoT, LLM, and FL, and suggest potential mitigation strategies based on relevant techniques found in the open literature. Table 6 summarizes the main challenges and mitigation methods, as elaborated on next.

Table 6.

Major challenges in integrating IoT, LLMs, and FL, with mitigation strategies.

6.1. Resource Constraints

A primary obstacle when deploying LLMs within IoT ecosystems arises from the stark mismatch between the models’ demands and the typically severe resource constraints of edge devices [3]. Edge units often provide limited processing power, small memory capacities (e.g., typically 1–4 GB of RAM), and must operate under strict power budgets (often ≤ 10 W) [24]. Yet even moderately sized models, like a 7-billion-parameter LLM, can require approximately 4 GB of memory just for inference [10], a figure that already assumes 4-bit weights (the same model needs about 14 GB at 16-bit precision), making deployment challenging.
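The memory figures quoted for 7-billion-parameter models follow from simple arithmetic on the weights alone (parameters × bits per parameter / 8). The sketch below ignores activations, the KV cache, and runtime overhead, so real footprints are somewhat larger; the function name is hypothetical.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Memory needed to store the weights only, in decimal gigabytes."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 7e9  # a 7-billion-parameter LLM
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(NUM_PARAMS, bits):.1f} GB")
```

This yields 28.0, 14.0, 7.0, and 3.5 GB for 32-, 16-, 8-, and 4-bit weights, respectively, so a footprint of about 4 GB corresponds to 4-bit weights, and moving from 16-bit to 4-bit removes 75% of the weight memory.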

To bridge this gap and enable on-device LLM adaptation and execution, several mitigation strategies focusing on efficiency can be employed. Model compression techniques, notably quantization (e.g., to 4-bit precision), can significantly slash memory usage by roughly 75% while often preserving a high percentage (e.g., 92–97%) of the original model’s accuracy on tasks like text classification [62]. Another approach is split computing, particularly SFL, which partitions the model layers between the device and a more capable edge server. This can cut on-device memory requirements substantially (e.g., by 40–60%), though it introduces trade-offs such as increased round-trip latency (e.g., 150–300 ms) during operations like federated training iterations [18]. Furthermore, PEFT methods have emerged as a highly promising strategy. Techniques like LoRA drastically reduce the number of trainable parameters by updating only a small fraction (e.g., about 1–2%) of the model’s weights, achieving massive reductions (up to 98%) in parameters needing training and storage [16]. Impressively, this efficiency often comes with only a modest decrease in performance, retaining substantial percentages (e.g., around 89%) of full fine-tuning performance on standard benchmarks.

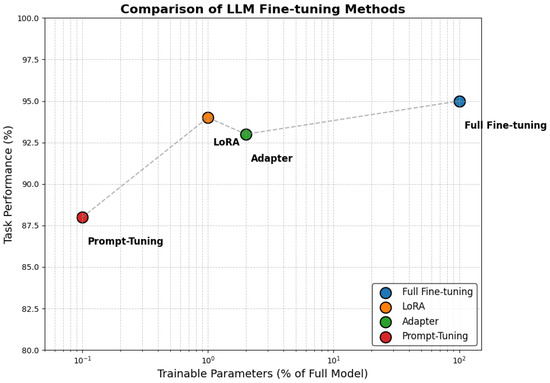

The trade-offs between parameter efficiency and task performance for various PEFT methods, including LoRA, adapter tuning, and prompt tuning compared to full fine-tuning, are clearly visualized in Figure 5. Full fine-tuning involves updating all model parameters, which leads to the highest performance but at a substantial computational and memory cost. In contrast, PEFT methods significantly reduce the number of trainable parameters—LoRA updates approximately 1% of parameters, adapters around 2%, and prompt tuning fewer than 0.1%—while still achieving competitive downstream task performance. As the figure illustrates, these methods strike different balances between efficiency and effectiveness, making them particularly attractive for resource-constrained IoT and Federated Learning settings where full fine-tuning is often impractical. This visual comparison underscores the growing importance of PEFT techniques in scaling LLM applications to diverse, decentralized edge environments.

Figure 5.

Trade-off between trainable parameter ratio and downstream task performance (e.g., typical accuracy observed on natural language understanding tasks from benchmarks like GLUE) for various LLM fine-tuning methods. Full fine-tuning updates 100% of parameters, whereas PEFT approaches such as LoRA (1%), adapter (2%), and prompt tuning (<0.1%) offer large savings in parameter updates at the cost of some performance.

Finally, complementing these model-level optimizations, adaptive distribution techniques employing dynamic workload schedulers can monitor real-time device telemetry (available RAM, CPU load, network bandwidth) to adjust model partitioning or batch sizes on the fly, maximizing the utilization of available resources. Together, these diverse approaches—compression, splitting, parameter-efficient adaptation, and dynamic scheduling—make it increasingly practical to deploy and adapt sophisticated LLMs effectively on resource-constrained IoT hardware.
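A dynamic scheduler of this kind can be sketched as a search over split points: given current telemetry (free RAM, CPU load, uplink bandwidth) and per-layer profiles, it keeps only the splits whose on-device layers fit in memory and picks the one with the lowest estimated latency. Everything here is a hypothetical simplification, including the cost model (device time inflated by CPU load, transfer time equal to data size divided by bandwidth) and all names and numbers; it also requires at least one layer on the device so that raw inputs stay local.

```python
def estimate_latency_ms(n, dev_ms, srv_ms, cut_kb, bandwidth_kb_s, cpu_load):
    """Latency if the first n layers run on the device and the rest on the edge server.
    cut_kb[n] is the data sent at split n (cut_kb[len(dev_ms)] = 0: nothing offloaded)."""
    device = sum(dev_ms[:n]) / max(1e-6, 1.0 - cpu_load)  # a busy CPU slows local layers
    transfer = cut_kb[n] / bandwidth_kb_s * 1000.0         # kB / (kB/s) -> ms
    server = sum(srv_ms[n:])
    return device + transfer + server


def choose_split(free_ram_mb, layer_mem_mb, dev_ms, srv_ms, cut_kb,
                 bandwidth_kb_s, cpu_load, reserve_mb=50, min_layers=1):
    """Return the memory-feasible split point with the lowest estimated latency, or None."""
    budget = free_ram_mb - reserve_mb
    feasible = [n for n in range(min_layers, len(layer_mem_mb) + 1)
                if sum(layer_mem_mb[:n]) <= budget]
    if not feasible:
        return None
    return min(feasible, key=lambda n: estimate_latency_ms(
        n, dev_ms, srv_ms, cut_kb, bandwidth_kb_s, cpu_load))


# Hypothetical 4-layer profile: memory per layer, compute time per layer, data sent at each cut.
profile = dict(layer_mem_mb=[100] * 4, dev_ms=[10] * 4, srv_ms=[1] * 4,
               cut_kb=[500, 400, 50, 50, 0])
best = choose_split(250, bandwidth_kb_s=100, cpu_load=0.0, **profile)  # only 2 layers fit
```

Re-running `choose_split` whenever telemetry changes lets the partition move toward the device when memory frees up, or toward the server when the device is busy.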

6.2. Communication Overhead

The high communication overhead associated with FL poses another significant challenge, particularly in IoT networks characterized by potentially unreliable or low-bandwidth connections [10]. Transmitting large model updates frequently between numerous devices and a central server can saturate the network and consume considerable energy. Several approaches aim to mitigate this communication burden. As mentioned, PEFT methods are highly effective, as only the small adapter updates need to be transmitted [101]. Update compression techniques can further reduce the size of transmitted data, but carry a risk of information loss [111]. Reducing the frequency of communication rounds can save bandwidth, but typically slows down the convergence of the global model [9]. Additionally, asynchronous protocols allow devices to communicate more flexibly based on their availability, alleviating delays caused by stragglers, but they introduce challenges related to model staleness and potential inconsistencies [112].
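One widely used form of update compression is top-k sparsification: the client sends only the k largest-magnitude entries as (index, value) pairs. To limit the information loss noted above, an error-feedback residual can carry the dropped entries into the next round. The sketch below assumes flat update vectors and plain Python lists. It illustrates the general technique and is not the specific scheme of [111].

```python
def top_k_sparsify(update: list[float], k: int) -> tuple[list[int], list[float]]:
    """Keep the k largest-magnitude entries; return (sorted indices, values)."""
    idx = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)[:k]
    idx.sort()
    return idx, [update[i] for i in idx]


class ErrorFeedbackCompressor:
    """Client-side top-k compressor that re-injects untransmitted mass later."""

    def __init__(self, dim: int, k: int):
        self.k = k
        self.residual = [0.0] * dim  # entries dropped in earlier rounds

    def compress(self, update: list[float]) -> tuple[list[int], list[float]]:
        corrected = [u + r for u, r in zip(update, self.residual)]
        idx, vals = top_k_sparsify(corrected, self.k)
        sent = set(idx)
        # Whatever was not sent this round is remembered for the next one.
        self.residual = [0.0 if i in sent else c for i, c in enumerate(corrected)]
        return idx, vals


comp = ErrorFeedbackCompressor(dim=4, k=2)
print(comp.compress([0.1, -2.0, 0.5, 3.0]))  # sends the two largest entries
print(comp.compress([0.0, 0.0, 0.0, 0.0]))   # later flushes the residual
```

The payload drops from `dim` floats to `k` index-value pairs per round. The residual means that small but persistent coordinates are eventually transmitted instead of being discarded forever.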

6.3. Data Heterogeneity and Fairness

The performance and fairness of FL systems are significantly impacted by data heterogeneity, commonly referred to as Non-IID data, which is prevalent in IoT environments [113]. Data distributions often vary substantially across devices due to differing local environments, usage patterns, or sensor types (e.g., label or feature skew). This heterogeneity can slow or destabilize the convergence of standard FL algorithms like FedAvg and yield a global model that performs poorly for specific clients. Furthermore, biases present in local data or even within the pre-trained base LLM can be amplified or unfairly distributed across participants through the FL process, and measuring or mitigating such biases in a decentralized manner remains difficult [37]. Strategies to address Non-IID data and promote fairness include heterogeneity-aware optimization algorithms such as FedProx, which adds a proximal term to each client's local objective to limit drift away from the global model [44], and PFL techniques that tailor parts of the model to local data, although this adds complexity [73,74]. Fairness-aware algorithms explicitly try to balance performance across different client groups, sometimes at the cost of overall average accuracy. Another approach involves augmenting local data with synthetic data (potentially generated by LLMs) or relevant public data, but this requires careful consideration of privacy implications [103,104].
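The FedProx idea can be illustrated in a few lines. Each client minimizes its local loss plus (mu/2)·||w - w_global||², so the gradient step gains a term mu·(w - w_global) that pulls the local model back toward the global one. The server then combines the results with sample-weighted FedAvg. The toy quadratic loss, learning rate and step count below are illustrative assumptions.

```python
def fedprox_local_update(w_global, grad_fn, mu, lr, steps):
    """Gradient descent on local loss + (mu / 2) * ||w - w_global||^2."""
    w = list(w_global)
    for _ in range(steps):
        g = grad_fn(w)
        w = [wi - lr * (gi + mu * (wi - wgi)) for wi, gi, wgi in zip(w, g, w_global)]
    return w


def fedavg(updates, sizes):
    """Sample-size-weighted average of client models."""
    total = sum(sizes)
    return [sum(n * u[j] for u, n in zip(updates, sizes)) / total
            for j in range(len(updates[0]))]


# Toy client whose local optimum is c = [4.0]: loss 0.5 * ||w - c||^2.
c = [4.0]
grad = lambda w: [wi - ci for wi, ci in zip(w, c)]
print(fedprox_local_update([0.0], grad, mu=0.0, lr=0.1, steps=500))  # drifts to ~4.0
print(fedprox_local_update([0.0], grad, mu=1.0, lr=0.1, steps=500))  # held near ~2.0
```

With mu = 1, the local solution settles at (c + mu·w_global)/(1 + mu) = 2.0 rather than at the client's own optimum. This is the drift-limiting behavior that helps under Non-IID data.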

6.4. Privacy and Security Risks

Ensuring robust privacy and security is perhaps the most critical challenge, given the sensitive nature of IoT data and the distributed nature of FL. Key concerns involve balancing model utility against privacy guarantees, protecting against various attacks such as data leakage from model updates [110,114], data or model poisoning by malicious clients [109,115], Byzantine failures [116], and backdoor attacks targeting the models [117,118,119], all while complying with regulatory mandates like GDPR or HIPAA.

A variety of privacy-enhancing technologies (PETs) and robust mechanisms are used to mitigate these risks, each with distinct trade-offs in overhead and utility. DP offers strong, mathematical guarantees against inference attacks by adding calibrated noise. Although it generally has lower computational overhead than cryptographic methods, it introduces a direct privacy–utility trade-off: increasing the noise to strengthen privacy typically degrades model accuracy [49], as illustrated conceptually in Figure 6. Cryptographic approaches like HE allow computations (such as aggregation) on encrypted data, providing strong confidentiality against the server without accuracy loss, but their extremely high computational and communication overhead makes them largely impractical for direct use on most IoT clients [3,53]. Similarly, SMPC enables joint computations without revealing private inputs, offering strong security through distributed trust with no accuracy loss, but it typically requires complex, multi-round interactions that are unsuitable for dynamic IoT environments [55]. Secure aggregation protocols are optimized specifically for the FL summation task. They are far more efficient than general-purpose HE or SMPC and hide individual updates from the server during aggregation, but on their own they do not protect the final aggregated model against inference attacks, so additional measures such as DP are still required [56].
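The core trick behind many secure aggregation protocols is pairwise masking. Every pair of clients shares a random mask, which the lower-id client adds and the higher-id client subtracts, so the masks cancel in the server's sum. The sketch below assumes updates already quantized to integers mod 2^32. It uses Python's non-cryptographic `random.Random`, seeded by a string, as a stand-in for a PRG keyed by a pairwise key agreement. It omits the dropout recovery and authentication that real protocols need.

```python
import random

MODULUS = 2**32  # assumption: updates are quantized to integers mod 2^32


def prg_vector(seed: str, dim: int) -> list[int]:
    # Stand-in for a cryptographic PRG keyed by a secret shared by two clients.
    rng = random.Random(seed)
    return [rng.randrange(MODULUS) for _ in range(dim)]


def mask_update(client: int, update: list[int], clients: list[int], session: str) -> list[int]:
    masked = [x % MODULUS for x in update]
    for other in clients:
        if other == client:
            continue
        lo, hi = min(client, other), max(client, other)
        mask = prg_vector(f"{lo}-{hi}-{session}", len(update))
        sign = 1 if client == lo else -1  # lower id adds, higher id subtracts
        masked = [(m + sign * r) % MODULUS for m, r in zip(masked, mask)]
    return masked


def aggregate(masked_updates: list[list[int]]) -> list[int]:
    # Pairwise masks cancel in the sum, so the server learns only the total.
    return [sum(col) % MODULUS for col in zip(*masked_updates)]


clients = [1, 2, 3]
updates = {1: [5, 7], 2: [1, 2], 3: [10, 0]}
masked = [mask_update(c, updates[c], clients, "round-0") for c in clients]
print(aggregate(masked))  # [16, 9]
```

Each masked vector looks uniformly random to the server, yet the sum is exact. The residual risk noted above remains, because this aggregate itself can still leak information without DP.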

Figure 6.

Illustration of the privacy–utility trade-off under differential privacy for Federated Learning models. The plot compares the model accuracy (%) of two different DP-based optimization methods, DPSGD and DP-Adam, across a range of privacy budgets (ε) on a logarithmic scale. As the privacy guarantee strengthens (smaller ε), model performance consistently degrades, highlighting the inherent trade-off between privacy protection and predictive utility. Key privacy levels (ε = 0.01, 0.1, 1, and 10) are annotated to demonstrate performance sensitivity.
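The noise that drives this trade-off is typically added in two steps. First, each client update is clipped to a maximum L2 norm C, which bounds any single client's influence. Second, Gaussian noise with standard deviation (noise multiplier) × C is added to the sum. The sketch below follows this DP-FedAvg-style pattern with server-side noise. The noise multiplier is left as a free parameter: translating it into a concrete ε for Figure 6's axis requires a privacy accountant, which is outside this sketch.

```python
import math
import random


def clip_update(update: list[float], clip_norm: float) -> list[float]:
    """Scale the update so its L2 norm is at most clip_norm (bounds sensitivity)."""
    norm = math.sqrt(sum(x * x for x in update))
    scale = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in update]


def dp_average(updates: list[list[float]], clip_norm: float,
               noise_multiplier: float, rng: random.Random) -> list[float]:
    """Clip each client update, sum, add Gaussian noise, then average."""
    clipped = [clip_update(u, clip_norm) for u in updates]
    summed = [sum(col) for col in zip(*clipped)]
    std = noise_multiplier * clip_norm  # noise scaled to per-client sensitivity
    noisy = [s + rng.gauss(0.0, std) for s in summed]
    return [x / len(updates) for x in noisy]


rng = random.Random(0)
print(dp_average([[3.0, 4.0], [0.0, 0.5]], clip_norm=1.0, noise_multiplier=1.0, rng=rng))
```

A larger noise multiplier yields a smaller ε (stronger privacy) but a noisier average. This is the mechanism behind the accuracy drop as ε decreases in Figure 6.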

To defend against malicious clients sending faulty updates (poisoning or Byzantine attacks), Robust Aggregation methods like Krum [116], Bulyan [126], coordinate-wise median, or trimmed mean are employed to filter outlier updates. However, their effectiveness can decrease with sophisticated attacks or high Non-IID levels [127,128]. Recent advancements show promise, such as the PEAR mechanism using cosine similarity and trust scores for better robustness in Non-IID settings [120], or techniques like ByzSFL that integrate Byzantine robustness with secure computation using zero-knowledge proofs (ZKPs) for efficient verification without revealing private data [124]. Complementary strategies include explicit attack detection and verification mechanisms [121,122] and leveraging hardware security through Trusted Execution Environments (TEEs) to provide protected enclaves for computation [123].
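The classical robust aggregators named above are compact enough to sketch directly. The coordinate-wise median and trimmed mean discard extreme values per coordinate. Krum selects the single update whose n - f - 2 nearest neighbours (squared L2 distance) are closest, assuming at most f malicious clients. These are minimal reference versions operating on plain lists, not the implementations evaluated in the cited works.

```python
import statistics

Vector = list[float]


def coordinate_median(updates: list[Vector]) -> Vector:
    return [statistics.median(col) for col in zip(*updates)]


def trimmed_mean(updates: list[Vector], trim: int) -> Vector:
    """Drop the `trim` largest and `trim` smallest values in every coordinate."""
    if len(updates) <= 2 * trim:
        raise ValueError("need more than 2 * trim updates")
    out = []
    for col in zip(*updates):
        kept = sorted(col)[trim:len(col) - trim]
        out.append(sum(kept) / len(kept))
    return out


def krum(updates: list[Vector], f: int) -> Vector:
    """Pick the update with the smallest summed distance to its n - f - 2 neighbours."""
    n = len(updates)
    m = n - f - 2
    if m < 1:
        raise ValueError("Krum needs n > f + 2")

    def sq_dist(a: Vector, b: Vector) -> float:
        return sum((x - y) ** 2 for x, y in zip(a, b))

    scores = []
    for i, u in enumerate(updates):
        dists = sorted(sq_dist(u, v) for j, v in enumerate(updates) if j != i)
        scores.append(sum(dists[:m]))
    return updates[scores.index(min(scores))]


benign = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.05]]
updates = benign + [[50.0, -50.0]]  # one poisoned update
print(coordinate_median(updates), trimmed_mean(updates, trim=1), krum(updates, f=1))
```

A plain mean would be pulled to roughly (10.8, -9.2) by the single outlier, while all three rules stay near (1, 1). As noted above, their guarantees weaken when benign updates are themselves highly dispersed under strong Non-IID data.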

6.5. Scalability and On-Demand Deployment

Finally, achieving efficient scalability and supporting on-demand deployment is crucial for applying FL-trained LLMs across massive and dynamic IoT populations [42]. Managing the training process and subsequent inference efficiently requires optimized edge infrastructure, including techniques like caching and optimized model serving [43]. Scalable FL orchestration is also essential, employing architectures like hierarchical, decentralized, or asynchronous FL, each presenting different trade-offs in coordination complexity, robustness to failures or stragglers, and communication latency [101]. Furthermore, effective resource-aware management, incorporating adaptive scheduling, intelligent client selection strategies, and potentially incentive mechanisms, is needed to handle the dynamic nature of device availability and network conditions [125].
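Hierarchical FL can be illustrated with a two-level aggregation. Edge servers first average their own clients, and the cloud then averages the edge models weighted by how many samples each edge represents. With sample weighting at both levels, one round reproduces flat FedAvg exactly, while the cloud receives one message per edge server instead of one per device. The data layout below is an illustrative assumption.

```python
def weighted_average(vectors: list[list[float]], weights: list[float]) -> list[float]:
    total = sum(weights)
    return [sum(w * v[j] for v, w in zip(vectors, weights)) / total
            for j in range(len(vectors[0]))]


def hierarchical_round(edge_groups: list[list[tuple[list[float], int]]]) -> list[float]:
    """edge_groups[e] holds (client_update, num_samples) pairs for edge server e."""
    edge_models, edge_sizes = [], []
    for group in edge_groups:
        updates = [u for u, _ in group]
        sizes = [n for _, n in group]
        edge_models.append(weighted_average(updates, sizes))  # edge-level FedAvg
        edge_sizes.append(sum(sizes))
    return weighted_average(edge_models, edge_sizes)  # cloud-level FedAvg


groups = [[([1.0], 1), ([3.0], 1)],  # edge server A with two clients
          [([10.0], 2)]]             # edge server B with one client
print(hierarchical_round(groups))    # same as flat FedAvg over all three clients
```

In practice, edge servers often run several local aggregation rounds between cloud rounds. This is where the trade-off between coordination complexity and communication latency mentioned above comes in.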

7. Research Gaps and Future Directions

IoT, LLMs, and FL have each advanced rapidly, yet substantial challenges persist at their intersection. This section first briefly summarizes the current state of key domains within this integration, then identifies critical research gaps supported by evidence from recent literature, and finally outlines promising future directions for advancing the synergistic application of these technologies.

Efficiency for Extreme Edge: LLMs are notoriously resource-intensive, whereas the most constrained edge IoT devices (e.g., microcontroller-class hardware) operate on milliwatts of power with kilobytes of RAM. Techniques like QLoRA [62] reduce fine-tuning memory by combining 4-bit quantization of the frozen base model with low-rank adaptation, making LLM fine-tuning tractable on more capable edge hardware. Similarly, SparseGPT prunes billion-parameter models to at least 50% sparsity in one shot, without retraining, at minimal loss of accuracy [67]. SmoothQuant improves post-training quantization by migrating quantization difficulty from activations to weights through a mathematically equivalent per-channel scaling, which enables accurate 8-bit (int8) quantization of both weights and activations [68]. Backpropagation-free training is emerging as a potential direction to eliminate memory-heavy gradient calculations; the survey in [87] reviews biologically inspired and forward–forward alternatives relevant to constrained hardware. These techniques are particularly promising when combined with hardware-aware co-design, as advocated in [3] for FL in 6G IoT networks.
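The SmoothQuant smoothing step can be checked numerically. For activations X (tokens x input channels) and weights W (input channels x outputs), each input channel j gets a scale s_j = max|X[:, j]|^α / max|W[j, :]|^(1-α). Dividing X's columns and multiplying W's rows by s leaves the product XW unchanged while shrinking activation outliers. The sketch below shows only this equivalence transform with α = 0.5 on toy matrices; the subsequent int8 quantization is not included.

```python
def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def smooth_scales(X, W, alpha=0.5, eps=1e-8):
    """s_j = max|X[:, j]|^alpha / max|W[j, :]|^(1 - alpha) per input channel j."""
    scales = []
    for j, w_row in enumerate(W):
        act_max = max(max(abs(row[j]) for row in X), eps)
        w_max = max(max(abs(v) for v in w_row), eps)
        scales.append(act_max ** alpha / w_max ** (1 - alpha))
    return scales


def apply_smoothing(X, W, scales):
    X_s = [[x / s for x, s in zip(row, scales)] for row in X]      # X diag(s)^-1
    W_s = [[s * w for w in w_row] for w_row, s in zip(W, scales)]  # diag(s) W
    return X_s, W_s


X = [[100.0, 1.0], [50.0, -0.5]]  # channel 0 carries an activation outlier
W = [[0.1, 0.2], [1.0, -1.0]]
X_s, W_s = apply_smoothing(X, W, smooth_scales(X, W))
print(matmul(X, W), matmul(X_s, W_s))  # equal up to floating-point rounding
```

After smoothing, the peak magnitude in channel 0 falls from 100 to about 4.47 and is shared evenly with the weights. Both tensors then fit an 8-bit range with less clipping or rounding error.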

Robustness to Heterogeneity and Fairness: Extreme client heterogeneity in IoT-FL, both in data and hardware, poses serious convergence and fairness challenges. Pfeiffer et al. [24] analyze system-level disparities and advocate for client-specific adaptation layers. Carlini et al. [37] further highlight how adversarial alignment in neural networks can propagate biases, underscoring the need for fairness constraints in model design. Multi-prototype FL, as discussed in the Wevolver report [12], enables clients to specialize on subsets of prototypes that better represent their local distributions. Deng et al. [73] propose a hierarchical knowledge transfer scheme that separates global, cluster, and local models, reducing the negative transfer from outlier clients. Formal fairness-aware FL protocols, however, are still lacking.
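A common way to realize client-specific adaptation layers is partial parameter sharing. Shared blocks are averaged across clients, while designated local blocks never leave the device. The sketch below assumes models stored as dictionaries of flat parameter lists. The block names `backbone` and `head` are hypothetical, and this generic scheme is not the method of [24].

```python
def aggregate_shared(client_states: list[dict[str, list[float]]], sizes: list[int],
                     shared_keys: list[str]) -> dict[str, list[float]]:
    """Sample-weighted average of only the shared parameter blocks."""
    total = sum(sizes)
    return {k: [sum(n * s[k][j] for s, n in zip(client_states, sizes)) / total
                for j in range(len(client_states[0][k]))]
            for k in shared_keys}


def personalize(client_state: dict[str, list[float]],
                global_shared: dict[str, list[float]]) -> dict[str, list[float]]:
    """Overwrite shared blocks with the global version; keep local blocks as-is."""
    merged = dict(client_state)
    merged.update(global_shared)
    return merged


clients = [{"backbone": [1.0, 2.0], "head": [0.0]},   # hypothetical block names
           {"backbone": [3.0, 4.0], "head": [9.0]}]
shared = aggregate_shared(clients, sizes=[1, 1], shared_keys=["backbone"])
print(personalize(clients[1], shared))  # global backbone, client-specific head
```

Because only the `backbone` entries are uploaded, each client's head can fit its own label or feature distribution without being averaged away by dissimilar clients.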

Practical Privacy Guarantees: Applying PETs to LLM-based FL is non-trivial. While traditional DP mechanisms such as those in [45,48] remain foundational, Ahmadi et al. [49] show that when applied to LLMs in FL, DP introduces substantial performance degradation unless combined with hybrid masking and adaptive clipping strategies. Liu et al. [70] propose DP-LoRA, which selectively adds noise only to low-rank adaptation matrices, achieving a trade-off between utility and formal privacy. Yet, computational cost remains high. HE and SMPC offer stronger privacy but with significant communication and computational overheads unsuitable for IoT [53,55]. Efficient and scalable PET integration into low-power FL deployments remains an open issue.
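One intuition for why confining noise to LoRA matrices can help utility follows directly from the Gaussian mechanism. This is our illustration, not a claim about the analysis in [70]. For a fixed clipping norm C and noise multiplier σ, the privacy guarantee is unchanged regardless of how many coordinates d are noised. However, the expected noise energy grows linearly in d. The worked example uses an illustrative 4096 x 4096 weight and LoRA rank r = 8.

```latex
z \sim \mathcal{N}\!\left(0,\ \sigma^2 C^2 I_d\right)
\quad\Longrightarrow\quad
\mathbb{E}\!\left[\lVert z \rVert_2^2\right] = d\,\sigma^2 C^2

\frac{\mathbb{E}\lVert z_{\text{full}} \rVert_2^2}{\mathbb{E}\lVert z_{\text{LoRA}} \rVert_2^2}
= \frac{d_{\text{in}}\, d_{\text{out}}}{r\,(d_{\text{in}} + d_{\text{out}})}
= \frac{4096 \cdot 4096}{8 \cdot 8192}
= 256
```

At the same privacy level, the adapter update therefore receives about 256 times less total noise energy than a full-matrix update. The remaining computational cost comes largely from per-example clipping during training.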

Advanced Security and Trust: Foundation models open new attack surfaces in FL. Li et al. [118] demonstrate that compromised foundation models can inject imperceptible backdoors into global models during federated fine-tuning. Wu et al. [119] study adversarial adaptations where model updates mimic benign behavior, bypassing current anomaly detection. Existing aggregation defenses like Krum [116] and Bulyan [126] struggle when attackers use model-aligned poisoning. Fan et al. [124] propose using zero-knowledge proofs for secure update verification in FL, though integration into LLM systems is yet to be tested. Decentralized trust frameworks with verifiable integrity, such as those discussed in [42], could mitigate these threats in IoT federations.

Standardization and Benchmarking: Most existing FL benchmarks are designed for small NLP tasks (e.g., FedNLP [98]), lacking scale and modality diversity. Zhang et al. [97] introduce FederatedGPT to benchmark instruction tuning under FL settings, incorporating metrics like alignment score and robustness. FederatedScope-LLM [96] goes further, providing end-to-end support for parameter-efficient tuning (e.g., LoRA, prompt tuning) across diverse datasets. However, none of these benchmarks covers streaming sensor data or evaluates performance under the network constraints typical of IoT. A comprehensive benchmark must include multimodal tasks, model size variability, privacy/utility/fairness trade-offs, and realistic simulation environments [129].

Multimodal Federated Learning: IoT deployments naturally involve multimodal data. ImageBind [130] learns a single joint embedding space spanning image, text, audio, depth, thermal, and IMU data, but assumes centralized training. Cui et al. [105] highlight the challenges of decentralized multimodal alignment, including inter-client modality mismatch and unbalanced contributions. Communication-efficient multimodal fusion techniques and modality-specific adapters are needed. To be practical in the real world, sensor-based FL must also incorporate asynchronous updates and cross-modal imputation.
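As one illustration of how modality-specific adapters could ease inter-client modality mismatch, the server can average each modality's adapter only over the clients that actually hold that modality. A client without audio then neither dilutes nor stales the audio adapter. This is a hypothetical scheme, not one proposed in [105]. The dictionary layout and the modality names are assumptions.

```python
def aggregate_by_modality(
        client_adapters: list[dict[str, tuple[list[float], int]]]) -> dict[str, list[float]]:
    """Each client maps modality -> (adapter_vector, num_samples) for modalities it has."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = {}
    for adapters in client_adapters:
        for modality, (vec, n) in adapters.items():
            if modality not in sums:
                sums[modality] = [0.0] * len(vec)
                counts[modality] = 0
            sums[modality] = [s + n * v for s, v in zip(sums[modality], vec)]
            counts[modality] += n
    # Average each modality only over the clients that contributed to it.
    return {m: [s / counts[m] for s in sums[m]] for m in sums}


clients = [{"audio": ([1.0], 1), "imu": ([2.0], 1)},  # client with audio and IMU
           {"imu": ([4.0], 3)}]                       # IMU-only client
print(aggregate_by_modality(clients))  # {'audio': [1.0], 'imu': [3.5]}
```

Unbalanced contributions remain visible in the per-modality sample counts, which a fairness-aware server could use to reweight rarely observed modalities.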

Federated Learning for AI Agents: Li et al. [131] envision LLM-based AI agents capable of perception, planning, and actuation across decentralized IoT systems. Such agents require lifelong learning and task adaptation, which traditional FL lacks. PromptFL [80] proposes learning shared prompts instead of entire models, while FedPrompt [81] enhances this with privacy-preserving prompt updates. These methods significantly reduce communication and allow client-specific behavior, but they lack the reasoning and memory modules required by generalist agents. Integration with federated reinforcement learning and safe exploration policies is a promising future direction.

Continual Learning and Adaptability: The temporal nature of IoT data leads to frequent concept drift. Shenaj et al. [132] propose online adaptation techniques but do not consider privacy. Wang et al. [107] review continual FL methods, including regularization-based and rehearsal-based strategies. Xia et al. [108] propose FCLLM-DT, which maintains temporal awareness via digital twins. These approaches should be enhanced with memory-efficient adaptation and with modules that can be selectively forgotten (removed) to meet legal obligations on data deletion.
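Rehearsal-based continual learning usually keeps a small on-device replay buffer of past samples and mixes them into each new local training batch to counter forgetting under drift. Reservoir sampling is one common policy for filling such a buffer. It keeps a uniform random sample of the whole stream in fixed memory. The sketch below is a generic example and is not tied to any method in [107]. Because the buffer stores raw local data, any deletion request must also purge it.

```python
import random


class ReservoirBuffer:
    """Fixed-size uniform sample of a data stream (reservoir sampling, Algorithm R)."""

    def __init__(self, capacity: int, seed: int = 0):
        self.capacity = capacity
        self.items: list = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item) -> None:
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            j = self.rng.randrange(self.seen)  # uniform in [0, seen)
            if j < self.capacity:
                self.items[j] = item           # replace with probability capacity / seen

    def sample(self, k: int) -> list:
        """Draw replay examples to mix into the next local training batch."""
        return self.rng.sample(self.items, min(k, len(self.items)))


buf = ReservoirBuffer(capacity=50)
for reading in range(1000):  # stand-in for a stream of sensor samples
    buf.add(reading)
print(len(buf.items), buf.sample(5))
```

Memory stays at `capacity` items no matter how long the stream runs, which suits constrained IoT clients.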

Legal, Ethical, and Economic Considerations: Federated LLMs operating across jurisdictions must comply with evolving data governance policies. Cheng et al. [10] outline open legal questions in multi-party FL, such as liability for biased decisions and model misuse. Qu et al. [13] emphasize ethical concerns such as disproportionate access to computing resources and biased training data. Witt et al. [43] review incentive mechanisms like token-based payments or fairness-based credit allocation, critical for encouraging client participation. However, these are rarely tested in LLM-specific scenarios, and no consensus exists on equitable reward strategies.

Machine Unlearning and Data Erasure: Hu et al. [133] propose erasing LoRA-tuned knowledge via gradient projection and local retraining to remove specific client data contributions without damaging generalization. Patil et al. [134] leverage influence functions to reduce a sample’s effect on final predictions, but require full access to model internals. Qiu et al. [135] address federated unlearning by designing reverse aggregation schemes, though practical validation on LLMs is absent. Verifiability and efficiency of unlearning remain open problems, especially in decentralized, heterogeneous FL contexts.
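The simplest case of removing a client's contribution from an aggregate is instructive. For a single sample-weighted FedAvg round, the server can exactly reverse the average if it knows the client's update and sample count. The sketch below shows only this one-round arithmetic and is not the reverse aggregation scheme of [135]. Over multiple rounds the removed client has already shaped every later model, which is why practical federated unlearning needs retraining, calibration, or verification on top of this.

```python
def remove_client_contribution(aggregate: list[float], total_samples: int,
                               client_update: list[float],
                               client_samples: int) -> list[float]:
    """Undo one client's share of a single sample-weighted average."""
    remaining = total_samples - client_samples
    if remaining <= 0:
        raise ValueError("no other clients remain in the aggregate")
    return [(total_samples * a - client_samples * u) / remaining
            for a, u in zip(aggregate, client_update)]


# Round with three clients: updates [1.0], [3.0], [5.0] with 1, 1, 2 samples.
agg = [(1 * 1.0 + 1 * 3.0 + 2 * 5.0) / 4]  # 3.5
print(remove_client_contribution(agg, 4, [5.0], 2))  # [2.0], average of the others
```

With LoRA, keeping per-client adapters separate makes this kind of exact removal far cheaper than editing the full base model.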