Abstract

With continuous advancements in autonomous driving technology, systematic and reliable safety verification is becoming increasingly important. However, despite the active development of various X-in-the-loop simulation (XILS) platforms to validate autonomous driving systems (ADSs), standardized evaluation frameworks for assessing the credibility of the simulation platforms themselves remain lacking. Therefore, we propose a novel integrated credibility-assessment methodology that combines dynamics-based fidelity assessment, parameter-based reliability assessment, and scenario-based reliability assessment. These three techniques evaluate the similarity and consistency between XILS and real-world test data based on statistical and mathematical comparisons. The resulting consistency measures are then used to derive a dynamics-based correlation metric for fidelity, along with parameter-based and scenario-based correlation and applicability metrics for reliability. The novel contribution of this paper lies in a geometric similarity analysis methodology that significantly enhances the efficiency of credibility assessment: the metrics derived from the credibility-assessment process are visualized as spider charts, and the resulting shapes are compared using Procrustes, Fréchet, and Hausdorff distances. The results show that speed is not a dominant factor in credibility evaluation, so assessment can be performed with a single representative speed test; the framework thereby simplifies XILS evaluation and enhances ADS validation efficiency.

1. Introduction

The rapid development of autonomous driving systems (ADSs) has led to an exponential increase in software and hardware complexity. This trend is further accelerated by the emergence of autonomous aerial vehicles (AAVs), which extend autonomous technology beyond ground vehicles and introduce even more complex safety challenges [1]. These developments highlight the importance of sophisticated verification methodologies to ensure overall system safety [2,3,4]. As the level of autonomous driving advances, driver intervention progressively diminishes, which inevitably shifts the responsibility for accidents caused by system defects or limitations toward the manufacturers [5,6]. Therefore, ADSs must perform robustly under various driving conditions and unpredictable circumstances, which necessitates simulation-based verification frameworks that can evaluate system behavior quantitatively and reproducibly [7,8].

Real-vehicle testing conducted on actual roads offers clear advantages in terms of directly validating systems in real driving situations and naturally reflecting the many variables and uncertainties inherent in real-world environments. However, such testing involves fundamental constraints, including difficulties in ensuring reproducibility, limitations in implementing extreme situations, substantial costs, and safety concerns [9,10,11]. To overcome these limitations, X-in-the-loop simulation (XILS) has been widely adopted, with representative methodologies including model-in-the-loop simulation (MILS), software-in-the-loop simulation (SILS), and hardware-in-the-loop simulation (HILS) [12,13,14].

However, such simulation-based verification methods cannot perfectly reflect the dynamic characteristics of actual vehicles. This limitation has prompted growing interest in vehicle-in-the-loop simulation (VILS), an XILS approach that integrates simulation environments with real vehicles to achieve higher fidelity [15,16].

VILS is considered highly credible as it enables not only repetitive and safe reproduction of various scenarios, but also precise evaluation based on the physical responses of actual vehicles [17,18]. However, existing research has focused primarily on verification at a simple functional level, with very few systematic methodologies available for quantitatively evaluating the credibility of simulation platforms [19]. Thus, although research is being actively conducted to validate ADSs through XILS, a system for quantitatively evaluating the credibility of the simulation platforms as a virtual tool chain remains to be developed.

Given the critical importance of reliable simulation platforms for ADS validation, standardized evaluation frameworks have become essential. Recognizing these challenges, the United Nations Economic Commission for Europe (UNECE) has included credibility-assessment principles for virtual tool chains in its regulation on SAE Level 2 Driver Control Assistance Systems (DCAS) (ECE/TRANS/WP.29/2024/37) [20]. According to the DCAS regulation, credibility defines whether a simulation is fit for its intended purpose, based on a comprehensive assessment of five key characteristics of modeling and simulation: capability, accuracy, correctness, usability, and fitness for purpose. In this sense, credibility is distinct from reliability alone. Whereas reliability refers to the consistency of results obtained under the same conditions, credibility is a metric that comprehensively quantifies how well a simulation reflects the relevant real-world system. Thus, for a simulation platform to be deemed credible, it must not only be reliable (i.e., capable of providing reproducible results) but also offer fidelity (i.e., the ability to accurately replicate the physical characteristics and behavior of the actual system) [21].

For example, even if a vehicle simulation demonstrates high reliability, its value as a validation tool can be undermined if the vehicle dynamics model is inaccurate or the sensor characteristics are not appropriately represented. In such cases, although reproducibility is ensured, real-world vehicle maneuvers are not accurately reflected.

Therefore, a systematic framework for evaluating XILS platforms based on UNECE’s credibility principles is a critical necessity for ADS validation. By verifying that the XILS results would be valid in real-world driving environments, a systematic credibility-assessment framework can serve as a trustworthy foundation for the development of ADSs. Such a framework is also expected to facilitate the establishment of an efficient development process that can overcome the limitations of real-world testing and reduce development costs and time while enabling safe testing of risk scenarios.

2. Related Work

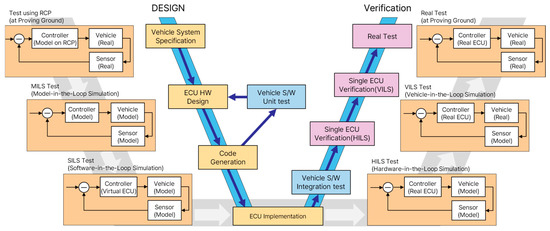

XILS-based techniques have been actively researched and utilized as a systematic approach to verifying ADS safety. As shown in Figure 1, XILS is a key component in the development and validation of ADSs; it is categorized into MILS, SILS, HILS, and VILS according to the development stage and constitutes the final step before real-world validation [22]. Figure 1 illustrates the hierarchical integration of XILS technologies within the V-Model development framework, showing a systematic progression from early-stage MILS for algorithm verification, through SILS for software validation and HILS for real-time hardware integration testing, to VILS for comprehensive vehicle-level validation, culminating in real-vehicle testing. As simulation research has progressed, the characteristics and limitations of each XILS phase have become clearer. Specifically, MILS enables rapid verification of control algorithms and vehicle models within a purely software environment, making it an effective tool for securing algorithm stability during the initial development phase [23]. However, it cannot reflect real hardware characteristics. SILS leverages virtual electronic control unit (ECU) models to systematically validate control software functionality and evaluate software code performance before hardware integration [24]. However, aspects such as actual hardware data structures and computational delays are difficult to emulate perfectly. HILS incorporates real ECUs and hardware for real-time validation and a comprehensive assessment of hardware–software interactions, but it fails to reproduce the complexity of real vehicle dynamics [25,26,27]. To overcome these stage-specific limitations, recent research has focused on VILS, an advanced approach that integrates a real vehicle with a virtual environment, maintaining the vehicle’s dynamic characteristics while enabling safe testing across various virtual scenarios [28].

Figure 1.

XILS technologies integrated with V-Model.

To address the limitations of existing XILS approaches while maintaining vehicle dynamic fidelity, Son et al. [29] proposed a proving ground (PG)-based VILS system that recreates a real proving ground as a high-definition (HD) map-based virtual road. The system comprises four key components: virtual road generation, real-to-virtual synchronization, virtual traffic behavior generation, and perception sensor modeling. This design preserves the vehicle’s true dynamic characteristics while enabling safe, repeatable testing of various scenarios in a virtual environment. Unlike dynamometer-based VILS, it can be implemented with only a proving ground and does not require large-scale dynamometers or over-the-air (OTA) equipment, which significantly reduces initial infrastructure costs and operational risks. Additionally, by utilizing a virtual road derived from an actual test site, it enhances the reproducibility of experiments. Given these advantages, we adopted the PG-based VILS platform for our XILS case study. Simulation platforms for the evaluation and verification of ADSs have been continuously developed and refined. However, before assessing the consistency of results between real-world experiments and simulations, the credibility of the simulation platform itself must be confirmed.

Oh [30,31] proposed a methodology to implement the AD-VILS platform designed for evaluating ADSs and developed a technique to assess the platform’s reliability based on key test parameters relevant to ADSs. These studies quantitatively evaluated consistency by comparing the key parameters between real-vehicle tests and VILS tests using statistical indicators, and proposed a scenario-based reliability evaluation method to verify whether VILS testing could partially replace real-world testing or be effective in specific scenarios. Based on the indices of consistency between real-vehicle and VILS tests, correlation and applicability metrics were derived to evaluate the platform’s overall reliability. However, existing credibility-assessment techniques for XILS platforms still have significant limitations. First, they focus on evaluating advanced driver assistance system (ADAS) functions without sufficiently considering the overall dynamic behavior of the vehicle. Second, most verification frameworks are limited to low-speed scenarios, which hinders reliability verification under higher-speed conditions. Third, the need to repeat independent experiments under different speed conditions represents a significant structural inefficiency.

Therefore, we propose a novel framework for evaluating the credibility of XILS platforms as a virtual tool chain for ADS validation. The strategy involves statistical and mathematical comparisons between the results of XILS tests and real-vehicle tests, with similarity and consistency metrics calculated for each test. These metrics are determined from the perspectives of parameters, scenarios, and dynamics, and the calculated consistency enables evaluation across three aspects: parameter-based reliability, scenario-based reliability, and dynamics-based fidelity. Ultimately, the credibility of the XILS platform is determined based on the results of these three types of analyses. Furthermore, to ensure efficient credibility evaluation, geometric similarity analysis is performed. The credibility evaluation metrics, derived from experimental data obtained through tests conducted under various speed conditions within the same scenario, are visualized on spider charts. The use of geometric shape comparison metrics allows quantitative analysis of the similarity between two shapes, demonstrating that speed is not the dominant factor when assessing scenario credibility. Additionally, the analysis of geometric similarities between different scenarios suggests the possibility of establishing a representativeness assessment framework, where certain scenarios can serve as proxies for the credibility assessment of others.
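The geometric comparison can be sketched with NumPy alone. In this minimal sketch, which is our illustration rather than the authors' implementation, each spider chart is represented by its vertex coordinates (value i placed on the axis at angle 2πi/k). The Procrustes disparity compares shapes after removing translation, scale, and rotation/reflection; the symmetric Hausdorff distance compares the vertex sets; and the discrete Fréchet distance compares the vertices as ordered sequences. The function names and the metric values are hypothetical.

```python
import numpy as np

def spider_polygon(values):
    """Vertex coordinates of a spider chart: value i is placed on the axis at angle 2*pi*i/k."""
    values = np.asarray(values, dtype=float)
    angles = 2.0 * np.pi * np.arange(len(values)) / len(values)
    return np.column_stack((values * np.cos(angles), values * np.sin(angles)))

def procrustes_disparity(p, q):
    """Sum of squared residuals after optimal translation, scaling, and rotation/reflection."""
    a = p - p.mean(axis=0)
    b = q - q.mean(axis=0)
    a = a / np.linalg.norm(a)              # scale each shape to unit Frobenius norm
    b = b / np.linalg.norm(b)
    s = np.linalg.svd(a.T @ b, compute_uv=False).sum()
    return float(max(1.0 - s**2, 0.0))     # clip tiny negative round-off

def hausdorff(p, q):
    """Symmetric Hausdorff distance between two vertex sets."""
    d = np.linalg.norm(p[:, None, :] - q[None, :, :], axis=-1)
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))

def discrete_frechet(p, q):
    """Discrete Frechet distance between two vertex sequences (dynamic programming)."""
    d = np.linalg.norm(p[:, None, :] - q[None, :, :], axis=-1)
    ca = np.zeros_like(d)
    for i in range(d.shape[0]):
        for j in range(d.shape[1]):
            if i == 0 and j == 0:
                prev = 0.0
            elif i == 0:
                prev = ca[0, j - 1]
            elif j == 0:
                prev = ca[i - 1, 0]
            else:
                prev = min(ca[i - 1, j], ca[i - 1, j - 1], ca[i, j - 1])
            ca[i, j] = max(prev, d[i, j])
    return float(ca[-1, -1])

# Illustrative metric values for two speed conditions of one scenario (not measured data).
low_speed = spider_polygon([0.95, 0.90, 0.92, 0.88, 0.93])
high_speed = spider_polygon([0.94, 0.89, 0.93, 0.87, 0.92])
disparity = procrustes_disparity(low_speed, high_speed)
frechet = discrete_frechet(low_speed, high_speed)
haus = hausdorff(low_speed, high_speed)
```

Because the Procrustes disparity ignores overall scale, it captures whether the profile of strengths and weaknesses is preserved across speeds, whereas the Hausdorff and Fréchet distances also respond to uniform shifts in the metric values.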

The systematic credibility evaluation framework proposed herein is expected to efficiently verify whether virtual XILS test results remain valid in real-world driving environments, thereby serving as a dependable foundation for the development of ADSs.

The remainder of this paper is structured as follows: Section 3 explains the proposed credibility-assessment methodology, which is based on an integrated evaluation framework that considers not only reliability but also fidelity while reflecting dynamic consistency. Section 4 introduces the procedures for efficiently verifying the credibility of XILS platforms, which is followed by a test to determine whether the speed condition is the dominant factor when assessing a given scenario. The credibility-assessment results across various speed conditions within the same scenario are visualized through spider charts, and the similarity between shapes is quantitatively analyzed through geometric shape comparison. This similarity analysis serves as the basis for evaluating the efficiency of credibility validation. Furthermore, we extend the geometric shape comparison to scenarios involving different driving maneuvers to establish a methodology for confirming whether certain scenarios can represent others. Section 5 describes the experimental environment created for the real-vehicle tests and VILS tests and discusses the validity of the methods outlined in Section 3 and Section 4 based on experimental results. Finally, Section 6 presents the implications of our findings for the credibility assessment of simulation platforms, states the limitations of this study, and outlines future research directions.

3. Proposed Credibility Evaluation Methodology

Before utilizing XILS environments for ADS validation, the credibility of the XILS platform itself must be rigorously assessed.

It is important to clarify the distinction between credibility, reliability, and fidelity as used in this framework. Following UNECE DCAS regulations, credibility represents a comprehensive assessment that encompasses both reliability (the consistency and repeatability of results under identical conditions) and fidelity (the accuracy with which simulation models reproduce real-world physical characteristics and behaviors). While reliability focuses on reproducibility and consistency between repeated tests, fidelity emphasizes the physical accuracy of dynamic responses. Credibility, therefore, serves as an overarching metric that ensures a simulation platform is both consistent in its outputs and accurate in its representation of real-world phenomena, making it fit for its intended validation purpose.

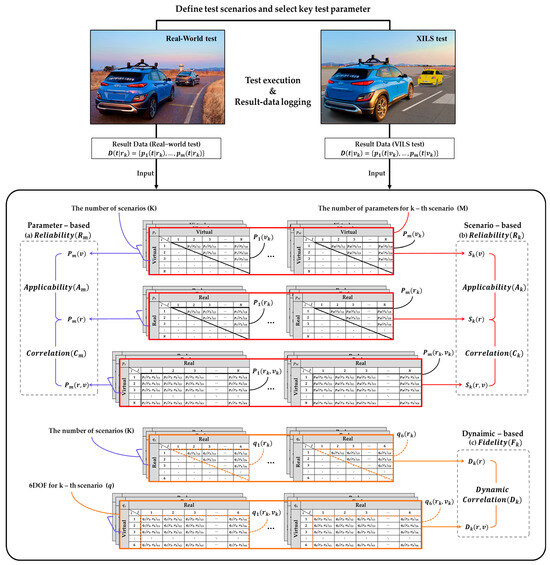

We propose a framework to quantify the credibility of XILS through a comprehensive assessment from parameter-based, scenario-based, and dynamics-based perspectives (Figure 2).

Figure 2.

Overall process flow of proposed methodology for evaluating XILS credibility.

Figure 2 presents the comprehensive three-dimensional credibility evaluation framework for XILS platforms, building upon the reliability assessment methodology established by Oh [31]. The framework illustrates a systematic matrix-based comparison structure that processes similarity calculations between different test configurations (virtual–virtual, real–real, and virtual–real combinations) across multiple dimensions. The core of the methodology centers on structured similarity matrices, in which each table represents a different comparison type indicated by the (virtual, real) labels at the top and left sides. Within each matrix, black-bordered cells represent the similarity comparison results for individual parameters (one of m parameters) between specific test iterations, enabling detailed component-level analysis. Red-bordered horizontal sections encompass the similarity results for all m parameters within a single scenario (one of k scenarios), providing scenario-level aggregated comparisons. Blue-bordered vertical stacks represent similarity results for a single parameter (one of m parameters) across all k scenarios, facilitating parameter-level cross-scenario analysis. These multi-layered matrix structures feed into three parallel evaluation streams: (1) parameter-based reliability assessment utilizes the blue-bordered parameter stacks to calculate correlation indices and applicability indices for each of the m key parameters, identifying specific modeling deficiencies in simulation components; (2) scenario-based reliability assessment leverages the red-bordered scenario sections to compute scenario-level correlation and applicability indices, determining the feasibility of replacing real-world tests; and (3) dynamics-based fidelity assessment employs additional orange-bordered matrices that specifically compare six-degree-of-freedom motion characteristics between virtual and real test configurations, yielding dynamic correlation indices.
Validation test scenarios and key test parameters are defined, and data are then collected by performing repeated real-world and XILS tests under identical conditions. The collected data are analyzed across three core dimensions before being integrated into the final credibility assessment.
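The matrix-based comparison of Figure 2 can be sketched in Python as below. This is a minimal sketch under our own assumptions, not Oh's implementation: each trial is a 1-D NumPy array for one parameter, `similarity` is any pairwise score in [0, 1] (a placeholder for the combined metric described later in this section), and each consistency index is taken as the mean of the off-diagonal entries of the virtual–virtual or real–real matrix, or of all entries of the virtual–real matrix. The helper names and the toy similarity function are hypothetical.

```python
import numpy as np

def pairwise_matrix(trials_a, trials_b, similarity):
    """Similarity of every trial in trials_a against every trial in trials_b."""
    return np.array([[similarity(a, b) for b in trials_b] for a in trials_a])

def off_diagonal_mean(matrix):
    """Mean of a square matrix, excluding self-comparisons on the diagonal."""
    mask = ~np.eye(matrix.shape[0], dtype=bool)
    return float(matrix[mask].mean())

def consistency_indices(virtual_trials, real_trials, similarity):
    """Return (intra-virtual, intra-real, virtual-real) consistency (hypothetical mean aggregation)."""
    vv = pairwise_matrix(virtual_trials, virtual_trials, similarity)
    rr = pairwise_matrix(real_trials, real_trials, similarity)
    vr = pairwise_matrix(virtual_trials, real_trials, similarity)
    return off_diagonal_mean(vv), off_diagonal_mean(rr), float(vr.mean())

# Toy example: identical virtual runs, real runs with growing offsets (illustrative only).
def toy_similarity(a, b):
    return 1.0 / (1.0 + float(np.mean(np.abs(a - b))))

t = np.linspace(0.0, 1.0, 50)
virtual = [np.sin(t) for _ in range(3)]
real = [np.sin(t) + 0.05 * k for k in range(3)]
c_vv, c_rr, c_vr = consistency_indices(virtual, real, toy_similarity)
```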

The parameter-based reliability assessment involves calculating the applicability index and the correlation index for each key test parameter. The applicability index determines whether XILS tests offer better repeatability and reproducibility than real-world tests, quantifying the consistency of individual component models within the XILS platform in maintaining their outputs across multiple test iterations. The correlation index measures the similarity between real-world and XILS tests at the parameter level, reflecting the accuracy of the simulation component models with reference to the respective parameters. The parameter-based reliability is then evaluated based on whether these two indices meet their defined evaluation criteria.

Scenario-based reliability assessment involves calculating the applicability index and the correlation index for each scenario. The scenario applicability index quantifies how consistently XILS test results align with real-world test results in a given scenario, while the scenario correlation index measures the overall similarity between real-world and XILS tests at the scenario level. The scenario-based reliability is then determined based on whether these two indices meet their predefined evaluation criteria.

Dynamics-based fidelity assessment involves calculating a dynamic correlation index centered on the vehicle’s six-degree-of-freedom (6DOF) motion characteristics. This index measures the similarity between real-world tests and XILS tests based on the vehicle’s three translational and three rotational motions. The dynamics-based fidelity is then evaluated based on whether this index meets the defined evaluation criterion.
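One plausible way to compute such an index is sketched below, assuming one similarity score per 6DOF channel, averaged with equal weights. The channel names, the equal weighting, and the use of the Pearson correlation (via `np.corrcoef`) as the per-channel score are our assumptions; the framework itself relies on the combined similarity metric of Oh [31]. The signals are synthetic.

```python
import numpy as np

# Hypothetical channel names for the six motion components (three translational, three rotational).
CHANNELS = ("a_x", "a_y", "a_z", "roll_rate", "pitch_rate", "yaw_rate")

def dynamic_correlation_index(xils, real):
    """Mean per-channel similarity between XILS and real 6DOF signals, in percent.

    Stand-in similarity: Pearson correlation via np.corrcoef, clipped at zero.
    """
    scores = {}
    for ch in CHANNELS:
        r = np.corrcoef(xils[ch], real[ch])[0, 1]
        scores[ch] = max(float(r), 0.0)
    return 100.0 * float(np.mean(list(scores.values()))), scores

# Illustrative signals only: the XILS run adds a small deterministic disturbance.
t = np.linspace(0.0, 2.0 * np.pi, 500)
real = {ch: np.sin(t + k) for k, ch in enumerate(CHANNELS)}
xils = {ch: real[ch] + 0.1 * np.cos(3.0 * t) for ch in CHANNELS}
index, per_channel = dynamic_correlation_index(xils, real)
```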

When the parameter-based reliability, scenario-based reliability, and dynamics-based fidelity criteria are all satisfied, the XILS platform is deemed credible. This multi-dimensional approach minimizes the potential biases arising from single-perspective evaluations and comprehensively verifies the credibility of the XILS platform for ADS validation.

We employed the comparative analysis techniques proposed by Oh [31] to measure the similarity between the datasets from the real-world and XILS tests. Oh [31] proposed a comprehensive similarity evaluation metric that combines the correlation coefficient for integrated comparison, the Zilliacus error (f2) for point-to-point comparison, and the Geers metric to analyze magnitude and phase errors separately.

The correlation coefficient is defined by Equation (1), where the two datasets to be compared contain the same number of sample data points, indexed consecutively from 0 [32]. The correlation coefficient ranges from −1 to 1, where values closer to 1 indicate a stronger positive linear relationship between the dataset trends. The Zilliacus error is calculated using Equation (2), which normalizes the sum of absolute point-wise errors by the sum of absolute values of the reference dataset; lower f2 values indicate better point-to-point agreement between the two datasets [33]. The Geers metric is calculated as the square root of the sum of the squares of the magnitude error and the phase error, as expressed in Equation (3). The magnitude error in Equation (4) quantifies the energy ratio between the datasets through their squared sums, while the phase error in Equation (5) measures temporal alignment differences using normalized cross-correlation [34]. This technique enables separate evaluations of amplitude differences and temporal synchronization between the datasets.
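For reference, the textbook forms of these three measures can be sketched in Python as below. This is our own minimal sketch, assuming equally sampled, time-aligned signals and the classical Geers definitions of the magnitude and phase errors; the function names are ours, and Equations (1)–(5) may differ in normalization details.

```python
import numpy as np

def correlation_coefficient(x, y):
    """Pearson correlation coefficient between two equally sampled signals."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    dx, dy = x - x.mean(), y - y.mean()
    return float(np.sum(dx * dy) / np.sqrt(np.sum(dx**2) * np.sum(dy**2)))

def zilliacus_error(reference, test):
    """Sum of absolute point-wise errors, normalized by the sum of |reference|."""
    reference, test = np.asarray(reference, dtype=float), np.asarray(test, dtype=float)
    return float(np.sum(np.abs(test - reference)) / np.sum(np.abs(reference)))

def geers_metric(reference, test):
    """Geers comprehensive error sqrt(M^2 + P^2); returns (total, magnitude M, phase P)."""
    m, c = np.asarray(reference, dtype=float), np.asarray(test, dtype=float)
    smm, scc, smc = np.sum(m * m), np.sum(c * c), np.sum(m * c)
    magnitude = np.sqrt(scc / smm) - 1.0        # energy ratio of test to reference, minus one
    phase = 1.0 - smc / np.sqrt(smm * scc)      # one minus normalized cross-correlation
    return float(np.hypot(magnitude, phase)), float(magnitude), float(phase)

# Example: a simulated signal with a 10% amplitude error and no phase shift.
t = np.linspace(0.0, 2.0 * np.pi, 200)
real = np.sin(t)
xils = 1.1 * np.sin(t)
rho = correlation_coefficient(real, xils)
f2 = zilliacus_error(real, xils)
geers, mag_err, phase_err = geers_metric(real, xils)
```

In this example the amplitude error appears entirely in the magnitude component (about 0.1) while the phase component is essentially zero, which is the separation of amplitude and timing errors described above.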

Finally, the combined similarity metric is calculated as the weighted sum of these three indicators, as shown in Equation (6), where the three weighting factors reflect the relative importance of each metric. In this study, equal weighting was applied to ensure balanced consideration of all three similarity aspects; however, the weights can be adjusted to suit specific testing requirements and evaluation contexts. Since the complete mathematical derivation of each metric has already been provided by Oh [31], an in-depth discussion is omitted here.
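How the three indicators are brought onto a common scale before weighting is defined in Oh [31] and is not reproduced here, so the sketch below uses a hypothetical mapping: the correlation coefficient is clipped at zero, and each error measure e (where lower is better) is converted to the score max(1 − e, 0). Only the equal default weighting comes from this study; the function name and inputs are illustrative.

```python
def combined_similarity(rho, f2, geers, weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted sum of three scores in [0, 1], where 1 means a perfect match.

    Hypothetical mapping, not taken from Oh [31]: the correlation coefficient is
    clipped at zero, and each error measure e is converted to max(1 - e, 0).
    """
    w_rho, w_f2, w_geers = weights
    if abs(w_rho + w_f2 + w_geers - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    score_rho = max(rho, 0.0)
    score_f2 = max(1.0 - f2, 0.0)
    score_geers = max(1.0 - geers, 0.0)
    return w_rho * score_rho + w_f2 * score_f2 + w_geers * score_geers

# Equal weighting, as used in this study; the input values are illustrative.
s = combined_similarity(rho=1.0, f2=0.1, geers=0.1)
```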

We used this integrated similarity index to evaluate the reliability of XILS from a parameter perspective and a scenario perspective, as explained in Section 3.2 and Section 3.3, respectively. The evaluation of the XILS platform’s fidelity from a dynamics perspective is described in Section 3.4.

3.1. Definitions of Scenarios and Parameters

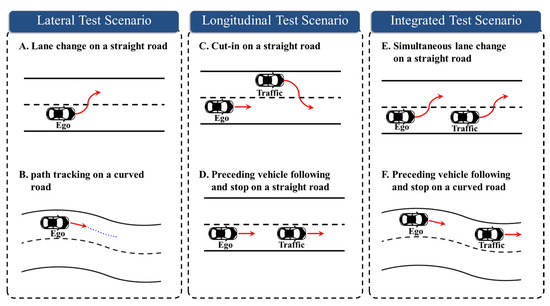

To evaluate the credibility of XILS platforms, the test scenarios and key test parameters must be systematically defined. This section outlines the scenario selection criteria and the key parameters used in the proposed evaluation framework. We constructed six test scenarios based on driving conditions and maneuver types. Based on the control characteristics, these scenarios are categorized into lateral (A and B), longitudinal (C and D), and integrated (E and F) evaluation. Figure 3 illustrates each scenario.

Figure 3.

Test scenarios created based on maneuver types.

The lateral evaluation scenarios were designed to verify the steering performance and lateral stability of the subject vehicle. Scenario A represents a situation where the subject vehicle changes lanes on a straight road without any external environmental influence, while driving at speeds of 30 km/h, 50 km/h, and 70 km/h. Scenario B evaluates the vehicle’s ability to stably follow a trajectory created based on information provided by the global positioning system (GPS) sensor on an S-shaped road, while driving at speeds of 20 km/h, 40 km/h, and 60 km/h.

The longitudinal evaluation scenarios were intended to verify the subject vehicle’s speed control and its interaction with a target vehicle. Scenario C involves a cut-in maneuver on a straight road, designed to evaluate the effectiveness of the adaptive cruise control function in maintaining a safe distance from the vehicle ahead. Scenario D focuses on testing the subject vehicle’s ability to maintain a constant distance behind a slower target vehicle on a straight road. In both scenarios, the subject vehicle’s speeds are 60 km/h, 80 km/h, and 100 km/h, while the target vehicle’s speeds are 40 km/h, 60 km/h, and 80 km/h.

The integrated evaluation scenarios were designed to analyze complex situations requiring simultaneous lateral and longitudinal control. Scenario E evaluates the subject vehicle’s ability to perform steering and speed control while maintaining a stable distance from the vehicle ahead when both vehicles simultaneously change lanes on a straight road. In this scenario, the subject vehicle’s speeds are set at 60 km/h, 80 km/h, and 100 km/h, while the target vehicle’s speeds are set at 40 km/h, 50 km/h, and 80 km/h. Scenario F comprehensively assesses the subject vehicle’s lateral stability and longitudinal following control while it drives behind the target vehicle on a curved road. In this scenario, the subject vehicle’s speeds are 40 km/h, 60 km/h, and 80 km/h, while the target vehicle’s speeds are 30 km/h, 40 km/h, and 50 km/h.

In all six scenarios, the target vehicle utilizes a speed control method to maintain the specified target speeds; however, it may or may not reach the target speeds depending on the situation. By conducting experiments under these diverse scenarios, the performance of the ADS and the credibility of the XILS platform can be comprehensively evaluated. Table 1 lists the main test parameters used in this study, which are defined as internal signals reflecting the core operations of the ADS and consist of vehicle status information, object data based on perception sensors, and control commands.

Table 1.

Classification of key test parameters based on maneuver (scenario) types.

For the lateral evaluation scenarios (A and B), lateral acceleration, yaw rate, and target steering angle were selected as the primary metrics. These parameters enable a precise quantitative assessment of lateral control performance during lane-change maneuvers and curved-road navigation.

For the longitudinal evaluation scenarios (C and D), we employed longitudinal acceleration, vehicle speed, target longitudinal acceleration, relative longitudinal distance, relative lateral distance, and relative speed (RV) as the core evaluation metrics. These measures are essential for evaluating whether a safe following distance is maintained and whether speed is suitably regulated relative to a lead vehicle.

In the integrated assessment scenarios (E and F), the lateral and longitudinal metrics were combined to ensure a holistic assessment framework for analyzing system performance under complex maneuvering conditions.
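For bookkeeping, the test matrix described above can be written down as a small Python configuration. This is an illustrative sketch: the speeds and parameter groups are taken from the text, whereas the parameter identifiers, the pairing of the i-th subject speed with the i-th target speed, the use of None where the text specifies no target-vehicle speed, and the helper functions are our assumptions.

```python
# Test matrix from Section 3.1. Parameter names are descriptive placeholders, and pairing
# the i-th subject speed with the i-th target speed is an assumption of this sketch.
LATERAL = ("lateral_acceleration", "yaw_rate", "target_steering_angle")
LONGITUDINAL = (
    "longitudinal_acceleration", "vehicle_speed", "target_longitudinal_acceleration",
    "relative_longitudinal_distance", "relative_lateral_distance", "relative_speed",
)

SCENARIOS = {
    "A": {"type": "lateral", "subject_kmh": (30, 50, 70), "target_kmh": None, "params": LATERAL},
    "B": {"type": "lateral", "subject_kmh": (20, 40, 60), "target_kmh": None, "params": LATERAL},
    "C": {"type": "longitudinal", "subject_kmh": (60, 80, 100), "target_kmh": (40, 60, 80), "params": LONGITUDINAL},
    "D": {"type": "longitudinal", "subject_kmh": (60, 80, 100), "target_kmh": (40, 60, 80), "params": LONGITUDINAL},
    "E": {"type": "integrated", "subject_kmh": (60, 80, 100), "target_kmh": (40, 50, 80), "params": LATERAL + LONGITUDINAL},
    "F": {"type": "integrated", "subject_kmh": (40, 60, 80), "target_kmh": (30, 40, 50), "params": LATERAL + LONGITUDINAL},
}

def speed_conditions(name):
    """List of (subject, target) speed pairs in km/h; target is None when no speed is specified."""
    s = SCENARIOS[name]
    targets = s["target_kmh"] or (None,) * len(s["subject_kmh"])
    return list(zip(s["subject_kmh"], targets))

def planned_runs(n_trials):
    """Total runs if every speed condition is repeated n_trials times on both platforms."""
    conditions = sum(len(s["subject_kmh"]) for s in SCENARIOS.values())
    return 2 * n_trials * conditions
```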

The simulations in this study were performed in a VILS environment built using IPG CarMaker HIL 10.2, a high-fidelity autonomous driving and vehicle dynamics simulation software program. A realistic test environment was implemented using a high-fidelity vehicle dynamics model and an object list-based perception sensor model. Specifically, we focused on evaluating the accuracy of the relative distance and relative velocity to analyze the effects of sensor noise and uncertainty on the performance of the ADS [35,36,37]. This approach contributed to improving the similarity between simulated and actual driving and enhancing the credibility of the evaluation results.

3.2. Parameter-Based Reliability Evaluation

We adopted the parameter-based reliability evaluation method proposed by Oh [31], which enables the accuracy of the simulation component models used in XILS to be verified indirectly. For each scenario, we conducted N identical trials of both the XILS test and the real-vehicle test and compared the obtained parameter values to calculate three types of consistency indices: intra-XILS consistency, intra-real-vehicle consistency, and XILS–real-vehicle consistency. Based on these indices, the correlation index and the applicability index are calculated as shown in Equations (7) and (8), respectively.

The correlation index represents the similarity between the results of the XILS tests and the real-vehicle tests, with values close to 100% indicating highly accurate simulation models. An applicability index exceeding 100% means that the repeatability and reproducibility of the XILS test are better than those of the real-vehicle test. The XILS platform is considered to achieve parameter-based reliability when all criteria in Equation (9) are met.

Here, the correlation evaluation criterion and the applicability evaluation criterion are defined in Equations (10) and (11), respectively.

Both criteria are built from two statistics of the real-vehicle tests: the minimum value among the maximum consistency indices, and the average deviation between the maximum and minimum consistency indices. These criteria imply that the normalized consistency of the XILS trials must exceed that of the real-vehicle trials to guarantee superior repeatability and reproducibility.

The two evaluation criteria are established based on empirical data analysis to ensure statistical robustness. As detailed in Oh ([31], Equations (19)–(24)), the correlation criterion is derived from the principle that XILS-to-real-world consistency must exceed the normalized consistency observed within real-world tests alone, with the average-deviation term accounting for inherent experimental variability. The applicability criterion assumes ideal simulation repeatability while incorporating the same variability measure, ensuring that XILS demonstrates superior reproducibility compared with real-world testing conditions.

This method enables prior identification of any specific parameters that could undermine the XILS platform’s reliability, whereby the accuracy of the corresponding simulation models can be refined accordingly. As the complete derivation of the parameter-based evaluation equation is provided by Oh [31], it is omitted herein.
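Because Equations (7) to (11) are not reproduced in this text, the sketch below only illustrates the decision logic under stated assumptions. It assumes both indices are ratios to the intra-real-vehicle consistency, which matches the interpretation given above (a correlation index of 100% means XILS–real agreement equals real–real agreement; an applicability index above 100% means XILS repeats better than the real vehicle), and it takes the two criteria as inputs instead of computing Equations (10) and (11). The function names, consistency values, and criterion values are hypothetical.

```python
def reliability_indices(c_xx, c_rr, c_xr):
    """Correlation and applicability indices in percent (hypothetical ratio forms).

    c_xx: intra-XILS consistency, c_rr: intra-real-vehicle consistency,
    c_xr: XILS-real-vehicle consistency, all on the same similarity scale.
    """
    if c_rr <= 0.0:
        raise ValueError("intra-real-vehicle consistency must be positive")
    correlation = 100.0 * c_xr / c_rr     # XILS-real agreement relative to real-real agreement
    applicability = 100.0 * c_xx / c_rr   # XILS repeatability relative to real-vehicle repeatability
    return correlation, applicability

def is_reliable(correlation, applicability, corr_criterion, app_criterion):
    """Reliability is attained only when both indices meet their criteria."""
    return correlation >= corr_criterion and applicability >= app_criterion

# Illustrative consistency values and placeholder criteria (not results from this study).
cor, app = reliability_indices(c_xx=0.98, c_rr=0.90, c_xr=0.88)
```

The same decision logic carries over to the scenario level by substituting the scenario consistency indices and the scenario criteria.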

3.3. Scenario-Based Reliability Evaluation

We employed the scenario-based reliability assessment method proposed by Oh [31], the primary objective of which is to assess whether XILS tests can effectively replace real-vehicle tests in a given scenario. For each scenario, we conducted N trials of both the XILS and real-vehicle tests to calculate three types of scenario consistency indices: intra-XILS consistency, intra-real-vehicle consistency, and XILS–real-vehicle consistency. Based on these indices, the scenario correlation index and the scenario applicability index are calculated as shown in Equations (12) and (13), respectively.

A scenario correlation index close to 100% indicates a very high similarity between the XILS and real-vehicle test results in that scenario, while an applicability index exceeding 100% indicates that the repeatability and reproducibility of the XILS tests are better than those of the real-vehicle tests. The XILS platform is deemed to attain scenario-based reliability when all the criteria in Equation (14) are met.

Here, the scenario correlation evaluation criterion and the scenario applicability evaluation criterion are defined in Equations (15) and (16), respectively.

The scenario-level thresholds follow the same statistical framework as the parameter-based criteria but are applied at the scenario level. Following the methodology detailed in Oh ([31], Equations (34)–(39)), these thresholds are derived from the distribution of consistency indices across all test scenarios, ensuring that the evaluation criteria reflect realistic performance expectations while maintaining statistical validity for scenario-specific assessments.

This method allows for pre-assessing whether XILS can effectively replace real-vehicle testing in a given scenario, thereby enhancing the efficiency of simulation-based validation. The complete derivation of the scenario-based evaluation equation is excluded from this paper as it has been provided by Oh [31].
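At the scenario level, the comparison covers all m key parameters of a scenario at once (the red-bordered sections in Figure 2). A minimal sketch of one way to score a pair of trials over a whole scenario is shown below; equal weighting across parameters, the dictionary layout, and the toy similarity function are our assumptions, not part of Oh's published method.

```python
import numpy as np

def scenario_similarity(trial_a, trial_b, similarity):
    """Equal-weight mean of parameter-level similarities over the parameters both trials record.

    trial_a, trial_b: dicts mapping parameter name -> 1-D signal from one test run.
    """
    shared = sorted(trial_a.keys() & trial_b.keys())
    if not shared:
        raise ValueError("trials share no parameters")
    return float(np.mean([similarity(trial_a[p], trial_b[p]) for p in shared]))

def toy_similarity(a, b):
    """Illustrative score in (0, 1]: 1 for identical signals."""
    return 1.0 / (1.0 + float(np.mean(np.abs(np.asarray(a) - np.asarray(b)))))

real_run = {"yaw_rate": np.zeros(10), "lateral_acceleration": np.ones(10)}
xils_run = {"yaw_rate": np.zeros(10), "lateral_acceleration": 1.5 * np.ones(10)}
score = scenario_similarity(real_run, xils_run, toy_similarity)
```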

3.4. Dynamics-Based Fidelity Evaluation

While the parameter-based and scenario-based evaluations discussed earlier are useful for quantifying the consistency between real-vehicle tests and XILS tests, they cannot fully capture the complex physical phenomena that a vehicle experiences during actual driving. For example, the vertical behavior of a vehicle when driving on irregular surfaces, the interaction between translational and rotational motions due to crosswinds, and the nonlinear lateral dynamics that occur during sudden steering cannot be fully assessed through simple input–output comparisons [38,39,40]. If these vehicle dynamics characteristics under real disturbance conditions are not properly reflected in the simulation model, applying results obtained from the virtual environment to real-world situations can lead to unpredictable errors and compromise safety [41,42,43]. Therefore, we propose a dynamics-based evaluation method to assess how faithfully the simulation reproduces the physical dynamics of the vehicle. Simulations using 3DOF models have been performed to quantitatively analyze the longitudinal and lateral behavior of vehicles, and various control strategies and driving stability techniques have been developed accordingly [44,45]. However, as these models do not fully incorporate the roll, pitch, and yaw variations of the vehicle, they cannot accurately reproduce the dynamic responses observed in real vehicles during high-speed driving, during sudden steering, or under complex road and weather conditions [46,47]. Moreover, with the focus of previous research being on system verification, the physical credibility of simulation platforms themselves has yet to be appropriately assessed.

To overcome these limitations, we introduce a 6DOF model that can precisely evaluate how accurately the simulation platform reproduces the complex dynamic responses of the vehicle body. This method enables objective comparison of the dynamic consistency between simulation results and actual test results under various disturbance conditions. The proposed 6DOF model accounts for the vehicle’s longitudinal ($x$), lateral ($y$), and vertical ($z$) translational motions, along with its roll ($\phi$), pitch ($\theta$), and yaw ($\psi$) rotational motions. By treating the vehicle as a rigid body governed by the Newton–Euler equations, it can describe the vehicle’s complete dynamics [48,49]. However, because Coriolis and gyroscopic effects are negligible for vehicles in contact with the ground, these terms are omitted to simplify the model [50,51]. This simplification significantly reduces the computational load of the simulation while enabling a more intuitive and efficient comparison with experimental data.

The 6DOF model-based dynamics evaluation significantly enhances the physical credibility of the simulation platform by considering both translational and rotational motions, which 3DOF models fail to achieve. Thus, this evaluation method provides an essential foundation for verifying the credibility of autonomous driving simulation platforms and improving the adaptability of ADSs to real-world driving environments.

The equations of a vehicle’s translational motion describe the relationship between the forces acting on the vehicle as it moves in space and the resulting accelerations. Taking $m$ as the vehicle’s mass and $g$ as Earth’s gravitational acceleration, the forces acting on the vehicle can be defined based on Newton’s second law ($F = ma$). Along the vehicle’s X-axis (forward–backward direction) and Y-axis (left–right direction), the force is the product of the vehicle mass and the acceleration along that axis. Along the Z-axis (vertical direction), the influence of gravitational acceleration must additionally be considered. Accordingly, these translational motions can be represented by Equation (17), which expresses the vehicle’s motion in each direction. Here, $a_x$, $a_y$, and $a_z$ represent the vehicle’s accelerations along the X-, Y-, and Z-axes, respectively [52].

The equations of a vehicle’s rotational motion describe the relationship between the moments (torques) acting on the vehicle and its angular accelerations about each axis. Rotational motion is governed by $M = I\alpha$, the rotational analogue of Newton’s second law, where the moment of inertia $I$ about each axis quantifies the body’s resistance to angular acceleration. The rotational motions of a vehicle can be represented by Equation (18), which can be used to predict and control changes in the vehicle’s attitude. Here, $M_x$, $M_y$, and $M_z$ denote the roll, pitch, and yaw moments about the X-, Y-, and Z-axes of the vehicle; $I_{xx}$, $I_{yy}$, and $I_{zz}$ represent the vehicle’s moments of inertia in the roll, pitch, and yaw directions; and $\ddot{\phi}$, $\ddot{\theta}$, and $\ddot{\psi}$ represent the corresponding angular accelerations [53].
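Equations (17) and (18) are not reproduced in this text. A minimal sketch of one form consistent with the description above is given below. It assumes a body-fixed frame whose Z-axis points upward (so gravity acts along −Z) and principal axes of inertia (products of inertia neglected), and, as stated earlier in this section, it omits the Coriolis and gyroscopic coupling terms. Under these assumptions, $F_z$ is the net non-gravitational vertical force; the sign of the gravity term would flip under a Z-down convention.

```latex
\begin{aligned}
F_x &= m\,a_x, \qquad F_y = m\,a_y, \qquad F_z = m\,(a_z + g),\\
M_x &= I_{xx}\,\ddot{\phi}, \qquad M_y = I_{yy}\,\ddot{\theta}, \qquad M_z = I_{zz}\,\ddot{\psi}.
\end{aligned}
```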

To quantitatively assess the dynamic consistency between simulation tests and real-vehicle tests, the normalized root-mean-square error (NRMSE) was adopted as the primary evaluation metric. NRMSE expresses the deviation between two datasets in a standardized form, enabling objective comparison between variables of different scales [54]. In this study, the credibility of the simulation model was evaluated by systematically quantifying the differences between the simulation results and experimental data based on NRMSE. This evaluation methodology is defined by Equations (19) and (20):
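Equations (19) and (20) are not reproduced here, so the exact normalization used in this study cannot be confirmed. The sketch below assumes the common range-normalized form, NRMSE = RMSE / (max(y_ref) − min(y_ref)), computed between two signals already resampled onto a common time base. Normalizing by the mean or the standard deviation of the reference is an equally common alternative. The function name `nrmse` and the sample values are illustrative only.

```python
import numpy as np


def nrmse(reference, candidate):
    """Range-normalized RMSE between two equally sampled signals.

    Assumption: the RMSE is divided by the range (max - min) of the
    reference signal; other normalizers (mean, std) are also common.
    """
    ref = np.asarray(reference, dtype=float)
    cand = np.asarray(candidate, dtype=float)
    if ref.shape != cand.shape:
        raise ValueError("resample both signals to the same length first")
    rmse = np.sqrt(np.mean((cand - ref) ** 2))
    value_range = ref.max() - ref.min()
    if value_range == 0:
        raise ValueError("constant reference: range normalization is undefined")
    return float(rmse / value_range)


# Synthetic yaw-rate samples from one real run and one VILS run
real_run = [0.0, 0.1, 0.3, 0.2, 0.0]
vils_run = [0.0, 0.12, 0.28, 0.21, 0.01]
print(round(nrmse(real_run, vils_run), 4))
```

A smaller value means closer agreement; if a similarity-style index is needed, a transform such as 1 − NRMSE is one possible choice, but that mapping is likewise an assumption here.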

Equation (23) constitutes a multi-valued dynamic similarity index considering the cross-comparison between repeated tests. To represent these values as a single value for each scenario, Equations (24)–(26) are utilized:
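Assuming that every distinct pair of runs is compared, N repeated runs per environment yield N(N − 1)/2 real–real pairs, N(N − 1)/2 XILS–XILS pairs, and N² XILS–real pairs (10, 10, and 25 for N = 5). The sketch below enumerates these three comparison families. The per-pair `similarity` function is supplied by the caller. Collapsing each family by its arithmetic mean is an assumption standing in for Equations (24)–(26), which are not reproduced here; the function names are hypothetical.

```python
from itertools import combinations, product
from statistics import mean


def cross_comparisons(real_runs, xils_runs, similarity):
    """Collect the raw (multi-valued) scores for the three comparison families.

    `similarity` is any caller-supplied function of two runs.
    """
    return {
        "real-real": [similarity(a, b) for a, b in combinations(real_runs, 2)],
        "xils-xils": [similarity(a, b) for a, b in combinations(xils_runs, 2)],
        "xils-real": [similarity(a, b) for a, b in product(xils_runs, real_runs)],
    }


def reduce_to_single(scores):
    """Assumption: collapse each family to one value by its arithmetic mean."""
    return {family: mean(values) for family, values in scores.items()}
```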

The values computed through the three types of comparisons based on Equation (26) represent the dynamic consistency index of the q-th DOF parameter for the k-th scenario. Next, the dynamic consistency indices for all DOFs are weighted and averaged based on Equations (27)–(29) to allow the three types of dynamic consistency indices for the k-th scenario to be expressed as a single value:

The weighting factors for the dynamic consistency indices in Equations (27)–(29) were set to equal values (1/6 for each DOF) to ensure a balanced representation of all six degrees of freedom in the dynamics-based evaluation. These weights can be adjusted to emphasize specific vehicle dynamics characteristics in the evaluation.
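The equal-weight averaging described above can be sketched as follows. The DOF labels and the function name are hypothetical. The default weights are the 1/6 values stated in the text, and passing a custom weight mapping shows how particular DOFs could be emphasized.

```python
DOFS = ("x", "y", "z", "roll", "pitch", "yaw")


def weighted_dof_index(indices, weights=None):
    """Combine per-DOF dynamic consistency indices into one value for a scenario.

    indices: mapping from DOF label to its consistency index.
    weights: optional mapping from DOF label to weight; defaults to 1/6 each.
    """
    if weights is None:
        weights = {dof: 1 / len(DOFS) for dof in DOFS}
    if abs(sum(weights[dof] for dof in DOFS) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    return sum(weights[dof] * indices[dof] for dof in DOFS)
```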

Finally, the dynamic correlation index is defined as the ratio of the dynamic consistency index for the inter-real-vehicle-test comparisons to that for the XILS–real-vehicle comparisons, as expressed in Equation (30):

The dynamic correlation index approaches 100% as the consistency between the two tests increases, indicating that the simulation component models associated with the 6DOF parameters are highly accurate. Finally, the dynamics-based fidelity of the XILS platform is determined by whether the dynamic correlation index satisfies the specified acceptance criterion, as expressed in Equation (31):

where the threshold denotes the evaluation criterion for the dynamic correlation index. As detailed in Equations (32)–(36), this criterion is established on the principle that the dynamic consistency between XILS and real-vehicle tests must be greater than or equal to the dynamic consistency observed between repeated real-vehicle tests.

In Equations (32)–(36), the maximum and minimum terms represent, respectively, the maximum and minimum values of the dynamic consistency index of the q-th DOF parameter in the k-th scenario; the global minimum term is the smallest of these minimum values across all scenarios; and the deviation term is the average, over all scenarios, of the maximum deviations in the consistency indices of the principal DOF parameters.

The dynamic correlation threshold is established using a data-driven approach that accounts for the natural variability inherent in 6DOF vehicle dynamics. As shown in Equations (32)–(36), this threshold is derived from the statistical distribution of the dynamic consistency indices across all degrees of freedom, so that an XILS platform passes only if its dynamic fidelity meets or exceeds the baseline consistency observed in repeated real-world tests, indicating that it adequately captures the complex physical behavior of the vehicle.

If the parameter-based reliability, scenario-based reliability, and dynamics-based fidelity all satisfy their respective evaluation criteria, the XILS implementation under evaluation is considered a credible simulation platform for validating ADSs.

4. Proposed Geometric Similarity Evaluation Methodology

Simulation-based ADS validation is more time-efficient and cost-effective than real-vehicle testing. However, evaluating simulation credibility under multiple speed conditions across numerous scenarios still consumes substantial resources. Given the countless situations in which ADSs must operate, a systematic approach is required to improve validation efficiency.

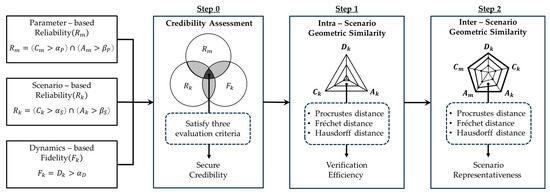

Herein, we propose an efficient credibility verification methodology based on geometric similarity evaluation, as illustrated in Figure 4. In Step 0, three evaluation metrics (parameter-based reliability, scenario-based reliability, and dynamics-based fidelity) are used to determine credibility. In Step 1, we evaluate credibility under different speed conditions (low, medium, and high) within the same scenario and visualize the derived evaluation metrics on a spider chart. The geometric similarity between the resulting shapes is analyzed using the Procrustes, Fréchet, and Hausdorff distances. These distances quantitatively determine whether speed is a dominant factor in the credibility assessment for a given scenario, and thus whether the verification process can be shortened. In Step 2, we additionally evaluate the geometric similarity between different scenarios to verify whether a particular scenario can represent other scenarios during credibility assessment.

Figure 4.

Overall process of proposed methodology for evaluating geometric similarity.

The proposed assessment framework ensures the credibility of the XILS platforms used to test ADSs while significantly reducing the verification time and cost compared with traditional independent verification approaches. The complete methodology is detailed in Section 4.1 and Section 4.2.

The Procrustes distance quantifies the similarity between two sets of corresponding points by finding the translation, rotation, and scaling that best superimpose one set onto the other and then measuring the remaining sum of squared errors between corresponding points [55]. Because the optimal translation aligns the centroids of the two configurations, this least-squares criterion separates differences in position, orientation, and size from differences in shape, enabling a global shape comparison between configurations whose points are in one-to-one correspondence. The optimal rotation and scaling are obtained in closed form from a singular value decomposition (equivalently, an eigen-decomposition) of the cross-covariance matrix between the two centered configurations.

In Equation (37), the Procrustes distance $d_P$ quantifies similarity based on the global alignment between two point sets $X, Y \in \mathbb{R}^{n \times d}$. Here, $n$ denotes the number of points in each shape, $d$ denotes the dimensionality of the space in which each point resides, and each row of a matrix corresponds to a single $d$-dimensional point. Procrustes alignment applies the scaling factor $s$, the rotation matrix $R$, and the translation vector $t$ to $X$, in that order, transforming it into $sXR + \mathbf{1}t^{\top}$. The squared Frobenius norm of the difference between this transformed point set and $Y$ equals the sum of the squared distances between corresponding points, and its minimum over $s$, $R$, and $t$ is defined as the Procrustes distance $d_P$. Here, $\mathbf{1}$ is an $n$-dimensional column vector of ones, and multiplying it by $t^{\top}$ ensures that the same translation vector is applied uniformly to all points. A smaller optimized Frobenius norm indicates that the two point sets are globally well aligned, implying structural similarity.
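The minimization described above has a closed-form solution, sketched below with NumPy under the same conventions (rows are points, and $X$ is transformed toward $Y$). After both sets are centered, the optimal rotation comes from the SVD of $X_c^{\top} Y_c$, and the optimal scale is the trace term divided by $\lVert X_c\rVert_F^2$. By default, reflections are excluded by flipping the sign of the weakest singular direction. This is a generic textbook implementation, not the authors' code, and the function name is illustrative. Note that SciPy's `scipy.spatial.procrustes` first standardizes both sets to unit norm, so its disparity value is not directly comparable with this unnormalized distance.

```python
import numpy as np


def procrustes_distance(X, Y, allow_reflection=False):
    """Minimum of ||s X R + 1 t^T - Y||_F^2 over scale s, rotation R, translation t."""
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    if X.shape != Y.shape:
        raise ValueError("X and Y must both be (n points) x (d dims)")
    # The optimal translation superimposes the centroids, so work with centered copies.
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    x_norm2 = np.sum(Xc ** 2)
    y_norm2 = np.sum(Yc ** 2)
    if x_norm2 == 0:
        return float(y_norm2)  # X is a single repeated point; only translation helps
    # SVD of the d x d cross-covariance matrix; the optimal R is U @ diag(D) @ Vt.
    U, S, Vt = np.linalg.svd(Xc.T @ Yc)
    D = np.ones_like(S)
    if not allow_reflection and np.linalg.det(U @ Vt) < 0:
        D[-1] = -1.0  # keep det(R) = +1 by flipping the weakest direction
    trace = np.sum(S * D)
    # With the optimal scale s = trace / ||Xc||^2, the residual simplifies to:
    return float(max(y_norm2 - trace ** 2 / x_norm2, 0.0))
```

For example, a scaled, rotated, and shifted copy of a triangle gives a distance of (numerically) zero, whereas its mirror image does not unless `allow_reflection=True`.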

The Fréchet distance is often illustrated by the analogy of the shortest leash needed to connect a person and a dog as each walks forward along their own curve [56]. Because this metric accounts for both the ordering of points along the curves and the overall path flow, it is well suited for comparing time-series data or trajectory-based shapes. The discrete Fréchet distance, which approximates the continuous measure, can be computed in O(pq) time by dynamic programming, where p and q are the numbers of points on the two curves.

In Equation (38), $d_F$ is the Fréchet distance, which quantifies the overall path-flow similarity between two point sets $P$ and $Q$. Each point set is treated as a trajectory of sequentially connected points, where $P_i$ and $Q_j$ denote the $i$-th and $j$-th points on the corresponding trajectories. A correspondence between the two sets is an order-preserving path, i.e., a monotone sequence of index pairs $(i, j)$ running from the first points to the last points of both trajectories, drawn from the collection of all such paths. Distances between matched points are computed using the standard Euclidean norm. The Fréchet distance is the smallest value, over all such paths, of the maximum distance between matched points. Hence, a smaller $d_F$ implies that the two trajectories traverse similar shapes in the same order.
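The discrete Fréchet distance can be computed with the classic dynamic-programming recursion, sketched below. Cell `ca[i, j]` holds the smallest achievable maximum matched distance for the prefixes ending at $P_i$ and $Q_j$, and each step may advance along $P$, along $Q$, or along both. The function name is illustrative. When applying it to spider-chart polygons, an assumption worth making explicit is that the vertices of both polygons are listed in the same fixed axis order, so that the comparison starts at the same axis.

```python
import numpy as np


def discrete_frechet(P, Q):
    """Discrete Fréchet distance between two polylines via O(pq) dynamic programming."""
    P = np.asarray(P, dtype=float)
    Q = np.asarray(Q, dtype=float)
    p, q = len(P), len(Q)
    if p == 0 or q == 0:
        raise ValueError("both curves need at least one point")
    # dist[i, j] is the Euclidean distance between P_i and Q_j.
    dist = np.linalg.norm(P[:, None, :] - Q[None, :, :], axis=-1)
    ca = np.empty((p, q))
    for i in range(p):
        for j in range(q):
            if i == 0 and j == 0:
                best_prev = 0.0
            elif i == 0:
                best_prev = ca[0, j - 1]
            elif j == 0:
                best_prev = ca[i - 1, 0]
            else:
                best_prev = min(ca[i - 1, j], ca[i - 1, j - 1], ca[i, j - 1])
            ca[i, j] = max(best_prev, dist[i, j])
    return float(ca[p - 1, q - 1])
```

Because the coupling must preserve order, reversing one curve changes the result: for the points (0,0), (1,0), (2,0) compared with the same points in reverse order, the distance is 2 rather than 0.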

The Hausdorff distance measures, for every point in set A, the distance to its nearest neighbor in set B (and vice versa), and takes the largest of these nearest-neighbor distances over both directions. It therefore quantifies the largest local dissimilarity between two shapes [57]. Because it does not require explicit point-to-point correspondences, it captures shape mismatches reliably even in the presence of small positional errors; therefore, it is widely used in image comparison and pattern recognition. Additionally, algorithms that approximate the Hausdorff distance on a binary raster grid have been proposed to efficiently compute its minimum over all possible translations.

In Equation (39), $d_H$ represents the Hausdorff distance, which quantifies the maximum local discrepancy between two shapes $A$ and $B$, i.e., the degree of structural mismatch. The first term, $\max_{a \in A} \min_{b \in B} \lVert a - b \rVert$, reflects the extent to which $A$ deviates from $B$, while the second term, $\max_{b \in B} \min_{a \in A} \lVert a - b \rVert$, measures the discrepancy in the opposite direction. The larger of these two values is the bidirectional Hausdorff distance. A small $d_H$ indicates that the shapes overlap consistently, whereas a large value suggests a significant local mismatch (e.g., an outlier). All distances are calculated using the Euclidean norm.
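For finite point sets, the two directed terms reduce to a maximum of nearest-neighbor minima, so a brute-force NumPy sketch over the pairwise distance matrix suffices for polygons with a handful of vertices. The function name is illustrative; SciPy also provides `scipy.spatial.distance.directed_hausdorff`, which computes one directed term.

```python
import numpy as np


def hausdorff(A, B):
    """Symmetric Hausdorff distance between two finite point sets (rows are points)."""
    A = np.asarray(A, dtype=float)
    B = np.asarray(B, dtype=float)
    dist = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=-1)
    a_to_b = dist.min(axis=1).max()  # how far A strays from B
    b_to_a = dist.min(axis=0).max()  # how far B strays from A
    return float(max(a_to_b, b_to_a))
```

Unlike the Fréchet distance, the result does not depend on point order: reversing the vertex list leaves it unchanged, while a single far-away point in either set dominates it.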

As shown in Equation (40), the geometric similarity between two shapes in this study was calculated as a weighted sum of the three distance-based metrics described above. This metric was then used to evaluate geometric similarity, as explained in Section 4.1 and Section 4.2.
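The weights in Equation (40) are not reproduced in this text. The sketch below assumes equal weights of 1/3 purely for illustration, and the function name is hypothetical. Because the Procrustes distance is a squared quantity whereas the Fréchet and Hausdorff distances are plain Euclidean lengths, in practice the three terms may need to be brought to comparable scales before they are summed.

```python
def combined_geometric_distance(d_procrustes, d_frechet, d_hausdorff,
                                weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted sum of three precomputed shape distances (hypothetical equal weights)."""
    if len(weights) != 3 or any(w < 0 for w in weights):
        raise ValueError("expected three non-negative weights")
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    distances = (d_procrustes, d_frechet, d_hausdorff)
    return sum(w * d for w, d in zip(weights, distances))
```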

4.1. Geometric Similarity Evaluation

To ensure the credibility of XILS platforms, previous studies introduced parameter-based and scenario-based evaluation metrics; herein, we present a new dynamics-based metric. A simulation is deemed credible only when each of these metrics satisfies its predefined evaluation criterion. However, evaluating a single scenario separately under multiple speed conditions multiplies the number of required tests by the number of speed conditions, which lengthens the evaluation process and raises resource demands. To overcome this challenge, we propose a methodology that quantitatively analyzes the geometric similarity between different speed conditions within the same scenario, enabling the credibility of the platform under untested speed conditions to be predicted from the results of a single-speed trial. By allowing the overall reliability to be inferred from one experiment, this approach significantly reduces the number of tests needed, and thus the associated time and cost, while also revealing factors that could compromise the validity of this inference. Hence, this integrated evaluation framework can enhance both the credibility and the efficiency of XILS assessments.

Geometric similarity evaluation leverages two scenario-based reliability metrics, namely applicability and correlation, together with a dynamics-based fidelity metric, namely dynamic correlation. Parameter-based metrics are deliberately excluded from this analysis because they are unsuitable for inter-scenario comparisons and cannot be used to compare different speed conditions within the same scenario. For each speed condition, the three metrics are plotted on the axes of a spider chart, forming a triangular polygon; this enables a quantitative assessment of geometric similarity across low, medium, and high speeds within a single scenario. By jointly evaluating the structural characteristics of these spider-chart polygons and the interactions among the three metrics, this geometric analysis facilitates a more precise and efficient credibility assessment of XILS platforms. The proposed geometric similarity indices are formulated in Equations (41)–(44):
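One way to turn the three metrics into spider-chart polygons is sketched below. The axis layout (evenly spaced axes, the first pointing straight up, counterclockwise order), the use of fractions with 1.0 = 100%, the metric labels, and the metric values are all hypothetical; only the idea of one triangle per speed condition comes from the text. The resulting vertex lists can then be compared pairwise (low–medium, medium–high, high–low) with the distance measures defined above.

```python
import math


def spider_polygon(metrics):
    """Map metric values (fractions, 1.0 = 100%) to 2-D spider-chart vertices.

    Assumption: axes are evenly spaced, the first axis points straight up,
    axes proceed counterclockwise, and each vertex lies at radius = value.
    """
    n = len(metrics)
    if n < 3:
        raise ValueError("a spider chart needs at least three axes")
    vertices = []
    for k, value in enumerate(metrics):
        angle = math.pi / 2 + 2 * math.pi * k / n
        vertices.append((value * math.cos(angle), value * math.sin(angle)))
    return vertices


# Hypothetical (applicability, correlation, dynamic correlation) values per speed
speeds = {
    "low": (1.05, 0.97, 0.95),
    "medium": (1.03, 0.95, 0.94),
    "high": (1.01, 0.92, 0.90),
}
polygons = {name: spider_polygon(values) for name, values in speeds.items()}
```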

In Equations (46)–(50), the maximum and minimum terms denote, respectively, the maximum and minimum geometric similarity indices across the different speed conditions within the same scenario, and the three pairwise terms denote the geometry-based similarity indices between low and medium speeds, between medium and high speeds, and between high and low speeds. The global minimum term is the smallest of the per-scenario minimum values, and the deviation term is the average, over all scenarios, of the maximum deviation in the geometric similarity indices within each scenario. Accordingly, for every scenario whose similarity index exceeds the resulting criterion, the geometric similarity between speed conditions is considered sufficient, which allows the credibility of that scenario to be assessed without performing separate experiments at each speed level.
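The summary quantities defined above can be computed from the three pairwise similarity indices of each scenario, as sketched below. The input layout and the function name are hypothetical, the maximum deviation is assumed to be the difference between a scenario's maximum and minimum index, and the way Equations (46)–(50) combine these quantities into the final criterion is not reproduced.

```python
SPEED_PAIRS = (("low", "medium"), ("medium", "high"), ("high", "low"))


def speed_similarity_summary(per_scenario):
    """Summarize pairwise geometric similarity indices across speed conditions.

    per_scenario: {scenario: {("low", "medium"): value, ...}} for the three pairs.
    Returns per-scenario max/min, the smallest minimum over all scenarios, and
    the mean over scenarios of (max - min), an assumed deviation definition.
    """
    stats = {}
    for name, sims in per_scenario.items():
        values = [sims[pair] for pair in SPEED_PAIRS]
        stats[name] = {"max": max(values), "min": min(values)}
    global_min = min(s["min"] for s in stats.values())
    mean_max_dev = sum(s["max"] - s["min"] for s in stats.values()) / len(stats)
    return stats, global_min, mean_max_dev
```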

4.2. Scenario Representativeness Evaluation

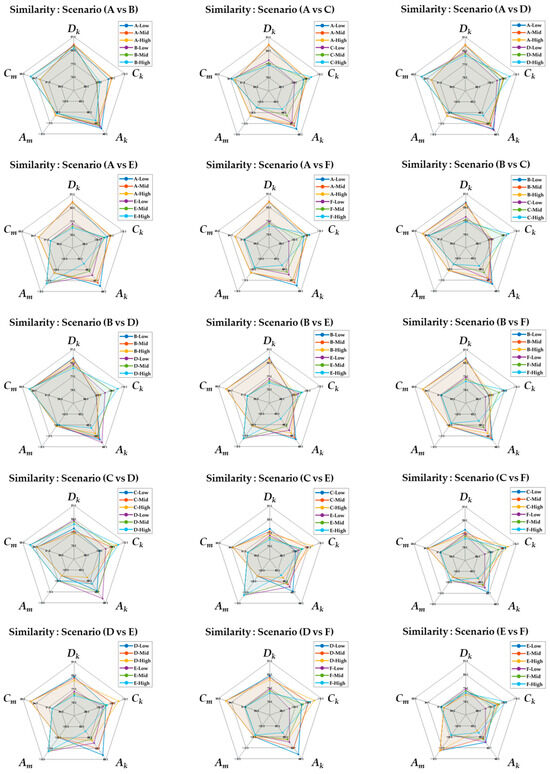

Building on the process described in Section 4.1, we extended our methodology to assess whether a given scenario as a whole can represent other, geometrically similar scenarios. This extension rests on the premise that the credibility of an XILS platform for a scenario can be inferred from the results of a representative scenario for which the platform has already been confirmed to be credible. Accordingly, we propose a scenario representativeness evaluation framework based on geometric similarity calculations between different scenarios.

The evaluation metrics used for the scenario representativeness assessment include parameter-based applicability ($A_m$) and correlation ($C_m$), scenario-based applicability and correlation, and dynamics-based correlation. The two parameter-based metrics ($A_m$ and $C_m$) are averaged across the speed conditions (low, medium, and high) within each scenario to obtain a single value representing the overall parameter applicability and correlation for that scenario. For each k-th scenario, three parameter evaluation scores are calculated for each speed condition. Under the low-speed condition, the first is the applicability and correlation evaluation score for XILS data, the second is the corresponding score for real data, and the third is the score between XILS data and real data. The evaluation scores for the medium-speed and high-speed conditions are derived in the same manner. Accordingly, the average parameter evaluation scores across speed conditions in the k-th scenario are calculated as shown in Equations (51)–(53):
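The averaging over speed conditions described above can be sketched as follows, assuming an arithmetic mean over the low-, medium-, and high-speed scores for each comparison type (XILS data, real data, and XILS–real). The dictionary keys, the function name, and the sample values are hypothetical.

```python
from statistics import fmean

SPEEDS = ("low", "medium", "high")
KINDS = ("xils", "real", "xils_real")


def average_over_speeds(scores):
    """Average one scenario's parameter evaluation scores over the speed conditions.

    scores: {speed: {"xils": ..., "real": ..., "xils_real": ...}}.
    Returns one averaged score per comparison type.
    """
    return {kind: fmean(scores[speed][kind] for speed in SPEEDS) for kind in KINDS}


example = {
    "low": {"xils": 0.90, "real": 0.85, "xils_real": 0.80},
    "medium": {"xils": 0.93, "real": 0.86, "xils_real": 0.79},
    "high": {"xils": 0.96, "real": 0.87, "xils_real": 0.78},
}
averaged = average_over_speeds(example)
```

The averaged scores would then be fed into the parameter-based correlation and applicability formulas to obtain one scenario-level value of each.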

Through this process, a single average value of each parameter-based reliability evaluation score is derived for each scenario. Based on these average values, the correlation and applicability indices are calculated using the parameter-based reliability evaluation formulas presented earlier. This yields new scenario-level correlation and applicability values, which are used to evaluate the representativeness of different scenarios. For each k-th scenario, a scenario representativeness vector is constructed for each speed condition by integrating the previously calculated indicators, as shown in Equations (54)–(56):

Using these representativeness vectors for the three speed conditions, we quantitatively evaluate the representativeness of a scenario based on its geometric similarity to other scenarios. For this purpose, two comparison methods are proposed: comparison under the same speed condition and comparison across different speed conditions.

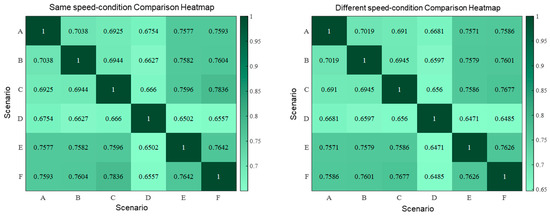

The same-speed comparison calculates the geometric similarity between different scenarios by comparing their representativeness vectors under identical speed conditions. When the number of scenarios is K, the number of possible scenario pairs is K(K − 1)/2, and the comparisons are performed under all three speed conditions for each scenario pair. The geometric similarity under each speed condition is quantified by comparing the representativeness vectors of scenarios i and j, as shown in Equation (57):

The different-speed comparison evaluates whether a specific scenario’s representativeness is maintained across different operating conditions, which is important for determining the versatility of scenarios. The speed-condition set contains six distinct ordered pairs of different speed conditions, namely (low, medium), (low, high), (medium, low), (medium, high), (high, low), and (high, medium), and the geometric similarity assessments are performed for all of these heterogeneous condition pairs for each scenario pair (i, j). The overall different-speed similarity score is then calculated from these comparisons as shown in Equation (58):

Thus, two comparison scores (same-speed and different-speed) can be calculated for each pair of scenarios, so the total number of similarity scores for K scenarios is twice the number of scenario pairs. The scenario representativeness scores calculated in this manner are visualized as a heatmap and serve as the basis for selecting representative scenarios for credibility assessment.
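The bookkeeping for the same-speed and different-speed comparisons, and the assembly of the heatmap matrices, can be sketched as follows. The similarity function passed in is a placeholder for the per-comparison similarity of Equations (57) and (58). Averaging over the three speed conditions (same-speed) or the six ordered speed pairs (different-speed) is an assumption, and the matrices are symmetric only if the supplied similarity is symmetric. All names are hypothetical, and self-comparisons on the diagonal are left as NaN.

```python
from itertools import combinations, permutations

import numpy as np

SPEEDS = ("low", "medium", "high")


def representativeness_matrix(vectors, similarity):
    """Same-speed and different-speed similarity scores for every scenario pair.

    vectors: {scenario: {speed: representativeness vector}}.
    similarity: caller-supplied function of two vectors.
    Returns scenario names and two K x K matrices for a heatmap.
    """
    names = sorted(vectors)
    k = len(names)
    same = np.full((k, k), np.nan)
    diff = np.full((k, k), np.nan)
    hetero_pairs = list(permutations(SPEEDS, 2))  # six ordered (u, v) with u != v
    for a, b in combinations(range(k), 2):  # K(K - 1)/2 unordered scenario pairs
        va, vb = vectors[names[a]], vectors[names[b]]
        s = np.mean([similarity(va[sp], vb[sp]) for sp in SPEEDS])
        d = np.mean([similarity(va[u], vb[v]) for u, v in hetero_pairs])
        same[a, b] = same[b, a] = s
        diff[a, b] = diff[b, a] = d
    return names, same, diff
```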

5. Field Test Configuration and Results

To validate the proposed credibility evaluation methodology, we conducted a case study on VILS, one of the four main XILS approaches. Specifically, among the various VILS techniques, PG-based VILS was adopted to replicate scenarios that are difficult to implement repeatedly in real-vehicle tests and to capture behavioral characteristics similar to those of actual vehicles. To test the credibility evaluation methodology, we performed PG-based VILS across the six scenarios defined in Section 3.1 (K = 6), which comprised lateral evaluation (A and B), longitudinal evaluation (C and D), and combined longitudinal–lateral evaluation (E and F). For each scenario, VILS and real-vehicle tests were each conducted five times (N = 5), and the results were evaluated in terms of the 12 key parameters presented in Table 1 (M = 12). This section describes the vehicle configuration and evaluation environment used for the real-vehicle and VILS tests and explains how the credibility of the VILS platform was evaluated based on test results obtained from both real and virtual environments. The procedures for evaluating verification efficiency and scenario representativeness through geometric similarity calculations are then outlined.

5.1. Experimental Setup

The proposed credibility evaluation methodology for XILS platforms was validated by constructing a VILS environment and comparatively analyzing the VILS results against those from real-world experiments. By repeatedly implementing the same test scenario in both real and virtual environments, we aimed to quantitatively evaluate the credibility and reproducibility of XILS-based verification.

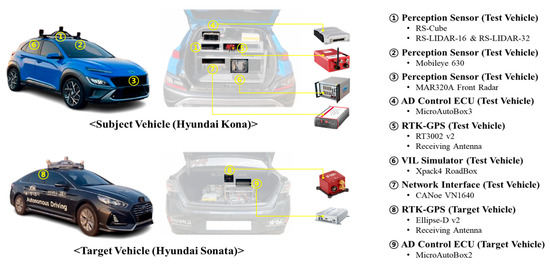

The subject vehicle was a Hyundai Kona (Hyundai Motor Company, Seoul, Republic of Korea) with an ADS built around an integrated controller based on the MicroAutoBox3 (MAB3) real-time control unit (dSPACE GmbH, Paderborn, Germany), on which the autonomous driving algorithm ran. An RT3002-v2 Real-Time Kinematic (RTK) GPS device (Oxford Technical Solutions, Oxfordshire, UK) was used for precise vehicle positioning, and the sensor system for surrounding-object and lane recognition was adapted from previous studies by incorporating a multi-sensor fusion method. Specifically, a RoboSense lidar (RoboSense, Shenzhen, China), a Hyundai Mobis radar (Hyundai Mobis, Seoul, Republic of Korea), and a Mobileye 630 camera (Mobileye, Jerusalem, Israel) were integrated to improve recognition performance. The target vehicle was a Hyundai Sonata equipped with a MicroAutoBox2 real-time controller (dSPACE GmbH, Paderborn, Germany) and RTK-GPS sensors, running the same autonomous driving algorithm. This vehicle was selected to minimize driver intervention and maximize the reproducibility of the scenarios during the repeated experiments, as its cruise control function can consistently maintain a specified route and speed. For precise collection of the experimental data, a Vector VN1640 interface (Vector Informatik GmbH, Stuttgart, Germany) was used to monitor the CAN messages from the real vehicle and the VILS environment in real time. The logged data were stored in the form of CAN database files, which were used for the credibility evaluation. The vehicles and devices and their configuration are shown in Figure 5.

Figure 5.

Test vehicle and target vehicle equipment.

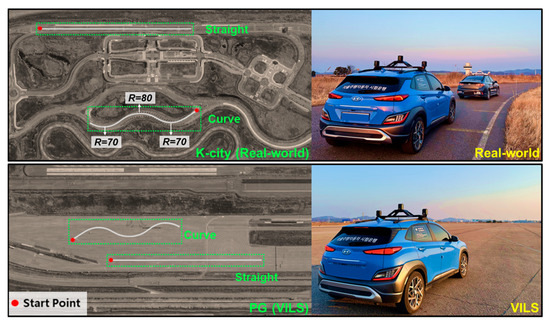

The real-vehicle tests were conducted at K-City, the autonomous driving testbed of the Korea Automotive Technology Institute (KATRI). As shown in Figure 6, the test facility comprises a curved section approximately 320 m long and having curve radii of 70 m, 80 m, and 70 m, together with a one-way, three-lane straight section of approximately 600 m. These road segments are specifically designed to evaluate both the longitudinal and lateral dynamic characteristics of autonomous vehicles. For the simulation (VILS) experiments, a virtual track with an identical geometry was constructed based on HD-map data. These tests were performed in a designated area of the KATRI proving ground designed to accommodate the target road dimensions. By maintaining the same autonomous driving algorithm and scenario conditions across the real and VILS environments, the credibility of the XILS platform could be quantitatively evaluated.

Figure 6.

Test field at KATRI K-City (real) and proving ground (VILS).

5.2. Credibility Evaluation Results

The credibility evaluation methodology outlined in Section 3 was employed to quantitatively analyze and compare the results of the real-vehicle tests (Real) and PG-based VILS tests (Sim). Six test scenarios (A–F), each representing a distinct driving behavior, were evaluated from three perspectives: parameter-based, scenario-based, and dynamics-based. Based on the results, we examined how consistently the XILS platform performed in terms of reliability and fidelity, before integrating the findings to assess its overall credibility. Finally, we reviewed the limitations of the evaluation metrics used and their potential scope for enhancement, gaining insights regarding future research directions.

5.2.1. Results of Key Parameter-Based Evaluation

Following the parameter-based reliability evaluation methodology presented in Section 3.2, we comparatively analyzed the results of the real-vehicle (Real) and VILS (Sim) tests across the 12 key parameters listed in Table 2. All tests were repeated five times, and the graphs of the real-vehicle data (Real) and VILS data (Sim) are represented by dashed and solid lines, respectively, to ensure an intuitive comparison (Figure 7 and Figure 8).

Table 2.

Consistency indices of key parameters.

Figure 7.

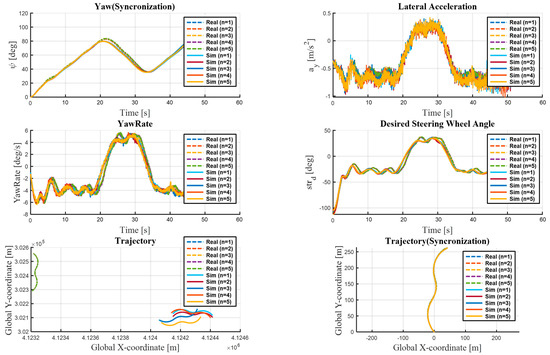

Test results of key parameters related to lateral behavior in Scenario B.

Figure 8.

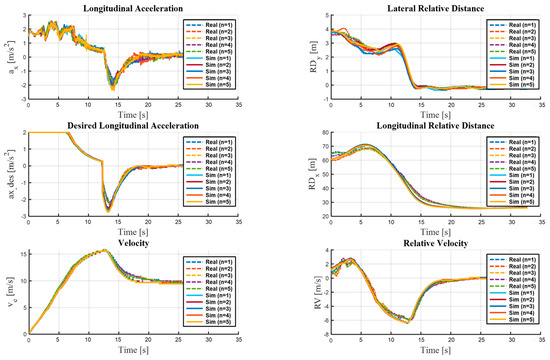

Test results of key parameters related to longitudinal behavior in Scenario C.

In Scenario B, the yaw angle, lateral acceleration, yaw rate, desired steering angle, and driving trajectory were analyzed (Figure 7), with the trajectory data presented in two separate plots [31]. The first plot shows the results of the repeated real-vehicle tests conducted on the actual road, while the second (“synchronized”) plot shows all test runs aligned to the origin (0,0) via rotation and translation. This synchronization is necessary because the VILS tests were performed in multiple areas of the PG environment, each with a different initial position and orientation; therefore, each trajectory was shifted to start at (0,0) and each yaw angle was reset to start at zero. The synchronized yaw angle and trajectory plots for the real-vehicle and VILS tests both show a consistent S-shaped pattern; specifically, the two trajectory patterns are nearly identical, which indicates accurate coordinate synchronization. In contrast, subtle differences are observed between the synchronized yaw angle plots, which we attribute to errors in generating the virtual road model of the actual road geometry from the high-precision map. The lateral acceleration, yaw rate, and desired steering angle plots show minor oscillations within each test iteration, attributable to road-surface irregularities and sensor noise during real-world driving. Notably, the yaw rate peak at around 30 s is almost identical between the real-vehicle and VILS tests, indicating that the VILS system accurately replicated the real vehicle’s dynamic maneuvers.

In Scenario C, the longitudinal acceleration, vehicle speed, desired longitudinal acceleration, relative longitudinal distance, relative lateral distance, and relative velocity were analyzed (Figure 8). The test was conducted from 0 to 35 s, with the subject vehicle and the target vehicle both starting from rest. The subject vehicle reached approximately 15 m/s at around 10 s. The target vehicle performed a cut-in maneuver between 12 and 15 s, causing the relative lateral distance to drop sharply from about 3 m to 0 m. In response, the autonomous driving controller of the subject vehicle issued a deceleration command of about −2.5 m/s². In the desired acceleration plot, the close match between the real-vehicle and VILS results indicates that the control algorithm behaved consistently in both environments. The relative longitudinal distance plot shows the gap between the vehicles widening during the initial 0–5 s period and then gradually narrowing after the cut-in maneuver, while the relative velocity plot exhibits a peak of about −6 m/s at the cut-in point, indicating that the target vehicle entered the subject vehicle’s lane at a lower speed. The relative lateral distance displayed greater variability during the real-vehicle tests owing to initial-position offsets, lane-alignment differences, and sensor-perception errors, whereas the VILS tests yielded more consistent results because of the virtual sensor modeling and automated scenario execution.

Table 2 lists the consistency indices of the main test parameters calculated using equations formulated in previous studies [29,30], while Table 3 presents the correlation and applicability indices calculated using Equations (7) and (8).

Table 3.

Correlation and applicability indices of key parameters, along with evaluation criteria.

The parameter ordering in this study differs from that in the prior study [31]; for example, our p1 (lateral acceleration) corresponds to a differently numbered parameter in the previous study. With this in mind, a particularly noteworthy finding is that, whereas the earlier paper reported a correlation index of 71.8% for lateral acceleration, which failed to meet its evaluation criterion of 76.3%, we achieved an improved correlation index of approximately 88.25% for p1. This improvement stems from enhancements to the simulation logic: unlike in the previous study, we automated event triggering (e.g., lane changes) within fixed segments to minimize manual tester intervention, which markedly improved reproducibility in the lateral performance scenarios. Furthermore, our relative-velocity parameter consolidates two parameters from the prior study into a single parameter. Despite this simplification, it exhibited a high correlation index of 93.89%, which demonstrates the effectiveness of our parameter design. The applicability index exceeded 100% for all parameters, indicating the superior reproducibility and repeatability of the VILS tests. In particular, the relative lateral distance exhibited an applicability index of 119.38%, which we attribute to our improved perception sensor model and virtual traffic generation algorithm.

In summary, the VILS system implemented in this study, which improves on that of the previous research, achieved greater reliability for certain parameters through reduced tester involvement and more refined automation logic. Because all parameters satisfied their respective evaluation criteria, the simulation environment can be considered reliable for ADS verification at the parameter level.

5.2.2. Results of Scenario-Based Evaluation

The six test scenarios shown in Figure 3 were evaluated using the scenario-based reliability assessment methodology introduced in Section 3.3. Table 4 lists the consistency indices, with values closer to 1 indicating a higher similarity between the two experiments compared. Table 5 presents the correlation and applicability indices for each test scenario. According to the data in Table 4, the inter-repetition consistency indices of the VILS tests generally exceeded those of the real-vehicle tests across all scenarios. This indicates that the VILS tests could reproduce the same scenario with higher precision and repeatability, which highlights their advantage over real-vehicle tests in terms of reproducibility and consistency within a simulation environment.

Table 4.

Consistency indices for each scenario.

Table 5.

Correlation and applicability indices for each scenario, along with evaluation criteria.

However, the consistency index between the real-vehicle and VILS tests was slightly lower than the consistency index within each testing environment. This implies that, even under the same experimental configuration, structural differences between the two platforms and input errors reduced the consistency between their results, reflecting inherent differences between real-world and simulation environments. Notably, Scenarios E and F exhibited lower consistency indices than the other scenarios because they combine longitudinal and lateral vehicle behavior, which makes consistent results harder to achieve.

Another important pattern in Table 4 is the dependence of the consistency index on the speed condition. In all scenarios, the consistency indices decreased clearly with increasing speed. Specifically, the inter-repetition consistency index for the real-vehicle tests decreased from 0.922 under the low-speed condition to 0.873 under the high-speed condition in Scenario A, and from 0.846 to 0.806 in Scenario F. This reflects the physical phenomenon whereby consistent vehicle behavior becomes harder to maintain as speed increases: inertial forces, tire dynamics, and steering sensitivity interact in more complex ways, producing greater variability in the system response to identical inputs. Additionally, the consistency indices for Scenarios C and D were lower than those for Scenarios A and B, indicating that the inclusion of the target vehicle increased the structural complexity of the scenarios and made consistent system responses across repeated experiments more difficult to ensure. The consistency index between the real-vehicle and VILS tests was also significantly lower in the integrated-behavior scenarios (E and F) than in the single-behavior scenarios (A–D). Particularly under the high-speed condition, Scenarios E and F recorded the lowest values, 0.681 and 0.676, respectively. This reflects the increasing difficulty of ensuring coherent multi-dimensional system responses when the main parameters used to calculate the consistency index include both longitudinal and lateral terms.
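For reference, the speed-related decreases in the real-vehicle inter-repetition consistency index quoted above amount to the following (the symbols Δ_A and Δ_F are introduced here only for this illustration):

```latex
\begin{aligned}
\Delta_{A} &= 0.922 - 0.873 = 0.049 \quad (\approx 5.3\%\ \text{of } 0.922),\\
\Delta_{F} &= 0.846 - 0.806 = 0.040 \quad (\approx 4.7\%\ \text{of } 0.846).
\end{aligned}
```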

Table 5 presents the correlation and applicability indices for each scenario as percentages; all metrics met the pre-established criteria in every scenario. Notably, the applicability index exceeded 100% in all scenarios and under all speed conditions. This numerically verifies that the VILS tests were more repeatable and reproducible than the real-vehicle tests, indicating that the VILS environment was sufficiently reliable to replace real-vehicle testing. The correlation index exhibited a consistent dependence on speed, decreasing with increasing speed across all scenarios. In Scenario A, it decreased from 95.79% under the low-speed condition to 92.49% under the high-speed condition, whereas in Scenario E it underwent a larger reduction, from 89.53% to 82.81%. This can be interpreted as the dynamic differences between real and simulated vehicles becoming more pronounced at higher speeds. Moreover, the single-behavior scenarios (A–D) showed higher correlation indices than the integrated-behavior scenarios (E and F), which suggests that VILS environments are more coherent in relatively simple scenarios. Conversely, the applicability index tended to be higher in more complex scenarios and under higher-speed conditions. It reached its highest value (110.37%) under the high-speed condition in Scenario D, which suggests that VILS tests can provide more consistent results than real-vehicle tests even in complex and challenging driving situations.
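The low-to-high-speed reductions in the correlation index quoted above work out as follows, confirming that the drop in Scenario E is roughly twice that in Scenario A:

```latex
\begin{aligned}
\text{Scenario A: } & 95.79\% - 92.49\% = 3.30 \text{ percentage points} \quad (\approx 3.4\% \text{ relative}),\\
\text{Scenario E: } & 89.53\% - 82.81\% = 6.72 \text{ percentage points} \quad (\approx 7.5\% \text{ relative}).
\end{aligned}
```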

5.2.3. Results of Dynamics-Based Evaluation

We comparatively analyzed each scenario using the dynamics-based fidelity assessment methodology described in Section 3.4. The similarity of the dynamic motion responses between the VILS and real-vehicle tests was quantified based on the six degrees of freedom (DOFs) that constitute the translational and rotational motions of a vehicle. Table 6 lists the dynamic consistency indices, obtained by weighting and combining the average similarity index of each DOF; values closer to 1 indicate more consistent dynamic responses between the simulated and real vehicles. Table 7 presents the dynamic correlation index for each scenario.
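The weighted combination of per-DOF similarities can be sketched in Python. This is a minimal illustration under stated assumptions: the DOF names, the function `dynamic_consistency_index`, and all weights and similarity values are hypothetical placeholders rather than the weights used in Section 3.4, and each per-DOF similarity is assumed to be already available as a number in [0, 1].

```python
from typing import Mapping

# Six rigid-body DOFs: three translations and three rotations (names assumed).
DOFS = ("longitudinal", "lateral", "vertical", "roll", "pitch", "yaw")


def dynamic_consistency_index(similarity: Mapping[str, float],
                              weights: Mapping[str, float]) -> float:
    """Weighted average of per-DOF similarity indices (hypothetical helper).

    `similarity[dof]` is the average similarity of one DOF in [0, 1]
    (1 = identical responses); `weights[dof]` is a non-negative weight.
    Weights are normalized here, so they need not sum to 1.
    """
    missing = [d for d in DOFS if d not in similarity or d not in weights]
    if missing:
        raise ValueError(f"missing DOFs: {missing}")
    total = sum(weights[d] for d in DOFS)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(weights[d] * similarity[d] for d in DOFS) / total


# Hypothetical example: equal weights except a doubled yaw weight.
sim = {d: 0.9 for d in DOFS}
sim["yaw"] = 0.6
w = {d: 1.0 for d in DOFS}
w["yaw"] = 2.0
print(round(dynamic_consistency_index(sim, w), 4))  # prints 0.8143
```

Normalizing by the weight sum keeps the index on the same 0-to-1 scale as the per-DOF similarities, so values closer to 1 retain the interpretation used for Table 6.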

Table 6.

Dynamics-based consistency index for each scenario.

Table 7.

Dynamics-based correlation for each scenario, along with evaluation criteria.

The dynamic consistency indices and correlation values in Tables 6 and 7 exhibit a clear pattern. Across all scenarios, both decreased gradually and consistently with increasing speed. This is ascribed to the greater influence of disturbances such as crosswinds and road surface irregularities, which are more pronounced at higher speeds, together with amplified nonlinearities in the tire–body dynamics and larger input–output timing differences caused by controller response delays. In terms of comparison type, dynamic consistency was highest among repeated VILS tests and relatively low between the real-vehicle and VILS tests. This is attributed to the structural advantage of the simulation environment, which allows precise control over the initial conditions. Regarding scenario complexity, the lateral-motion scenarios (A and B) exhibited the highest consistency index, the longitudinal-motion scenarios (C and D) displayed moderate consistency, and the integrated scenarios (E and F) showed the lowest consistency. The low consistency in the integrated scenarios results from the complex system response to simultaneous longitudinal and lateral inputs and from the dynamic interaction between the subject and target vehicles.

The foregoing observations underscore the advantages of the proposed dynamics-based fidelity assessment over traditional assessment methods. The first is its ability to reflect disturbances: our method compares the reproducibility of real-world and simulation tests while accounting for disturbance factors such as crosswinds, road surface conditions, and tire characteristics, which traditional parameter-based evaluations often overlook. The second is its multidimensional measurement mechanism: rather than depending on a single parameter or a specific input condition, the dynamics evaluation measures the complex response of the overall system as a whole, thereby mitigating structural inconsistencies. As a result, the dynamics correlation indices are evenly distributed, without extreme values. In terms of practical credibility, the fact that the evaluation criterion was satisfied in all scenarios and under all speed conditions demonstrates that the VILS platform adequately reproduced the real vehicle’s dynamics, even in complex integrated-behavior scenarios.

The dynamics-based fidelity assessment is superior to existing methodologies because it incorporates vehicle dynamics characteristics. Existing evaluation metrics focus only on individual parameters or scenario-level results and do not comprehensively reflect the dynamics of the vehicle, whereas the proposed methodology measures the dynamic response of the entire vehicle system. Another important advantage is the consideration of real-time control feedback: the dynamics-based evaluation captures the feedback loops of the vehicle control system, including their time delays and nonlinear response characteristics. This is particularly important under high-speed conditions and in complex scenarios, because it enables a systematic analysis of these sources of uncertainty.

Despite the merits of the proposed methodology, our evaluation results point to specific aspects of the framework that could be improved to further strengthen the credibility assessment of the VILS platform. The most important enhancement would be a highly accurate tire–road interaction model based on real-world data: a tire model that faithfully simulates the physical properties and behavior of real tires is needed, together with high-resolution road-profile data that reproduce actual road surface conditions more accurately. The control input response in the high-speed range must also be better synchronized, which requires algorithms that compensate for the time delays and nonlinearities arising during high-speed driving. Delay compensation within the controller is another important requirement; compensation techniques should be introduced to minimize the difference in control signal delay between the real and simulated vehicles. The credibility of the evaluation can be further improved by extending the experimental conditions to cover more types of driving situations and by strengthening the statistical analysis with additional scenarios, speed conditions, and experimental trials. In particular, the scope of the evaluation should be expanded to include variables such as extreme weather conditions.

Overall, we comprehensively validated the credibility of the VILS platform through parameter-based and scenario-based reliability evaluations and a dynamics-based fidelity evaluation. In particular, the dynamics-based fidelity evaluation captured the various disturbances and vehicle dynamics characteristics encountered in real-world driving environments, demonstrating the potential of virtual-environment testing to replace real-world testing. Our integrated evaluation methodology can serve as a dependable validation framework during the development of ADSs and ADASs, ultimately helping address the technical limitations and social acceptance issues surrounding the commercialization of autonomous driving technologies.

5.3. Results of Geometric Similarity Evaluation

We analyzed the testing efficiency and scenario representativeness based on the geometric similarity indicators presented in Section 4. For the scenarios that were deemed credible based on the three evaluation criteria discussed earlier, we calculated the geometric similarity between the shapes obtained by visualizing the credibility-assessment results across the three representative speed ranges (low, medium, and high) through spider charts. The aim of this process was to quantify the consistency of shape changes across speed conditions and verify whether the results for a specific speed condition can represent the characteristics across the entire speed range.

The primary aim of this study was to establish objective criteria for determining whether testing under a single representative speed condition can replace individual tests under multiple speed conditions when speed is not a dominant factor in the credibility assessment. If the geometric similarity between speed conditions exceeds a certain threshold, this approach can significantly improve testing efficiency and resource use. Section 5.3.1 and Section 5.3.2 present the results of the geometric similarity assessment and the scenario representativeness assessment, respectively. Based on these results, we discuss the efficiency and validity of the geometry-based verification methodology.
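The decision rule stated above can be sketched as a pairwise check over the speed conditions, assuming (hypothetically) that a single representative speed is accepted only when every pair of conditions reaches the threshold. The function `single_speed_sufficient`, the stand-in `toy_similarity`, the profile values, and the threshold of 0.95 are illustrative placeholders; in the study, the similarity index and its criteria are those listed in Table 9.

```python
from itertools import combinations
from typing import Callable, Dict, Sequence, Tuple

Shape = Sequence[float]  # one metric vector per speed condition


def single_speed_sufficient(
    shapes: Dict[str, Shape],
    similarity: Callable[[Shape, Shape], float],
    threshold: float,
) -> Tuple[bool, Dict[Tuple[str, str], float]]:
    """Return (decision, pairwise scores) over all pairs of speed conditions.

    The decision is True only if every pairwise similarity reaches
    `threshold`, i.e., no speed condition noticeably changes the shape.
    """
    if len(shapes) < 2:
        raise ValueError("need at least two speed conditions to compare")
    scores = {
        (a, b): similarity(shapes[a], shapes[b])
        for a, b in combinations(shapes, 2)
    }
    return all(s >= threshold for s in scores.values()), scores


def toy_similarity(u: Shape, v: Shape) -> float:
    # Hypothetical stand-in for the paper's index: 1 minus the largest
    # absolute difference between metrics expressed in percent.
    return 1.0 - max(abs(x - y) for x, y in zip(u, v)) / 100.0


# Hypothetical metric vectors (percent) for three speed conditions.
profiles = {
    "low": [95.0, 104.0, 96.0],
    "medium": [94.0, 105.0, 95.0],
    "high": [92.0, 106.0, 93.0],
}
ok, scores = single_speed_sufficient(profiles, toy_similarity, threshold=0.95)
print(ok, scores)
```

Requiring all pairs, not just adjacent speeds, is a conservative choice: it prevents small step-by-step changes from accumulating into a large low-to-high difference that would go unnoticed.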

5.3.1. Results of Efficiency Evaluation

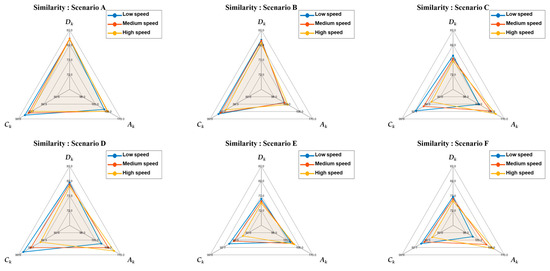

The geometric similarity vectors for each speed condition in each test scenario were defined using Equations (41)–(43). Based on these vectors, we quantitatively analyzed the geometric similarity between pairs of shapes depicted in the spider charts to determine the testing efficiency of the credibility evaluation. To evaluate how consistently the shapes differ across speed conditions, we used as vector components the three previously derived metrics: the dynamics-based correlation, the scenario-based applicability, and the scenario-based correlation.

Table 8 summarizes the values of these three components for Scenarios A–F, which served as input vectors for comparing the geometric shapes on the spider charts. Figure 9 presents the triangular spider charts constructed from these vectors, providing an intuitive visualization of the geometric characteristics and variation patterns for each speed condition in each scenario.
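The construction of a triangular spider chart from a three-component vector can be sketched as follows. The helper `spider_vertices`, the axis orientation (first axis pointing up, then counter-clockwise), and the division of percentage values by 100 are assumptions made for illustration; the vector values in the example are hypothetical and are not taken from Table 8.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def spider_vertices(values: Sequence[float], scale: float = 100.0) -> List[Point]:
    """Map n metric values onto n equally spaced spider-chart axes.

    Axis k points at angle 90 deg + k * 360/n deg (first axis straight up,
    then counter-clockwise); the radius is value / scale, so with the default
    scale a metric of 100% lands on the unit circle.
    """
    n = len(values)
    if n < 3:
        raise ValueError("a spider chart needs at least three axes")
    vertices = []
    for k, value in enumerate(values):
        theta = math.pi / 2 + 2 * math.pi * k / n
        r = value / scale
        vertices.append((r * math.cos(theta), r * math.sin(theta)))
    return vertices


# Hypothetical vector (dynamics-based correlation, scenario-based
# applicability, scenario-based correlation) in percent; not from Table 8.
triangle = spider_vertices([93.0, 104.5, 95.8])
for x, y in triangle:
    print(f"({x:+.3f}, {y:+.3f})")
```

Because shape distances compare corresponding vertices, every chart must use the same axis order; permuting the metrics would change the shape even for identical values.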

Table 8.

Geometric similarity vector components for each scenario.

Figure 9.

Spider charts showing the geometric similarity index for each scenario.

The similarity between these shapes was quantified using the Procrustes, Fréchet, and Hausdorff distances introduced earlier, calculated using Equations (37)–(39), respectively. Because each metric captures different geometric characteristics, together they provide complementary evaluations. The geometric similarity index was then derived as a weighted integration of the three distance measures according to Equation (44). Table 9 lists the resulting scores for each scenario.
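The three distance measures and their combination can be sketched in plain Python as follows. This is not an implementation of Equations (37)–(39) or (44), whose exact forms are given earlier; it is a sketch under these assumptions: the Procrustes step allows translation, uniform scaling, and rotation but excludes reflections (SciPy's `scipy.spatial.procrustes` also permits reflections), the Fréchet distance is the discrete variant computed over the closed vertex sequence, the Hausdorff distance is taken between vertex sets only, and the mapping 1/(1 + d) with equal weights in the hypothetical `geometric_similarity` helper is an assumption.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def procrustes_disparity(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Residual after optimally translating, scaling, and rotating b onto a.

    Both shapes are centered and scaled to unit norm, so the result lies in
    [0, 1]; 0 means identical shapes. Reflections are not allowed.
    """
    if len(a) != len(b) or len(a) < 2:
        raise ValueError("need two equally long lists of corresponding points")
    za = [complex(x, y) for x, y in a]
    zb = [complex(x, y) for x, y in b]
    ca, cb = sum(za) / len(za), sum(zb) / len(zb)
    za = [z - ca for z in za]
    zb = [z - cb for z in zb]
    na = math.sqrt(sum(abs(z) ** 2 for z in za))
    nb = math.sqrt(sum(abs(z) ** 2 for z in zb))
    if na == 0.0 or nb == 0.0:
        raise ValueError("degenerate shape: all points coincide")
    # In complex form, the best rotation + scale of b is one complex factor;
    # the remaining squared error is 1 - |<a, b>|^2 for unit-norm shapes.
    inner = sum(p.conjugate() * q for p, q in zip(za, zb)) / (na * nb)
    return max(0.0, 1.0 - abs(inner) ** 2)


def discrete_frechet(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Discrete Frechet distance between vertex sequences (Eiter-Mannila DP)."""
    n, m = len(a), len(b)
    dp = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(a[i], b[j])
            if i == 0 and j == 0:
                dp[i][j] = d
            elif i == 0:
                dp[i][j] = max(dp[0][j - 1], d)
            elif j == 0:
                dp[i][j] = max(dp[i - 1][0], d)
            else:
                dp[i][j] = max(min(dp[i - 1][j], dp[i - 1][j - 1], dp[i][j - 1]), d)
    return dp[n - 1][m - 1]


def hausdorff(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Symmetric Hausdorff distance between the two vertex sets."""
    d_ab = max(min(math.dist(p, q) for q in b) for p in a)
    d_ba = max(min(math.dist(q, p) for p in a) for q in b)
    return max(d_ab, d_ba)


def geometric_similarity(a: Sequence[Point], b: Sequence[Point],
                         weights: Tuple[float, float, float] = (1 / 3, 1 / 3, 1 / 3)) -> float:
    """Hypothetical weighted combination: each distance d maps to 1 / (1 + d)."""
    closed_a = list(a) + [a[0]]  # close the polygon for the Frechet traversal
    closed_b = list(b) + [b[0]]
    distances = (procrustes_disparity(a, b),
                 discrete_frechet(closed_a, closed_b),
                 hausdorff(a, b))
    return sum(w / (1.0 + d) for w, d in zip(weights, distances)) / sum(weights)


# Hypothetical triangles for two speed conditions (not from Table 8).
low = [(0.0, 0.95), (-0.85, -0.49), (0.82, -0.47)]
high = [(0.0, 0.92), (-0.87, -0.50), (0.80, -0.46)]
print(round(geometric_similarity(low, high), 3))
```

The Procrustes disparity ignores position, size, and orientation, whereas the Fréchet and Hausdorff distances respond to absolute differences in the metric values; combining them is what makes the evaluation complementary.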

Table 9.

Geometric similarity for each scenario, along with evaluation criteria.