Abstract

The semiconductor industry currently lacks a robust, holistic method for detecting parameter drifts in wide-bandgap (WBG) manufacturing, where conventional fault detection and classification (FDC) practices often rely on static thresholds or isolated data modalities. Such legacy approaches cannot fully capture the intricate, multi-modal shifts that either gradually erode product quality or trigger abrupt process disruptions. To surmount these challenges, we present M2D2 (Multi-Machine and Multi-Modal Drift Detection), an end-to-end framework that integrates data preprocessing, baseline modeling, short- and long-term drift detection, interpretability, and a drift-aware federated paradigm. By leveraging self-supervised or unsupervised learning, M2D2 constructs a resilient baseline of nominal behavior across numeric, textual, and categorical features, thereby facilitating the early detection of both rapid spikes and slow-onset deviations. An interpretability layer—using attention visualization or SHAP/LIME—delineates which sensors, logs, or batch identifiers precipitate each drift alert, accelerating root-cause analysis. An active learning loop dynamically refines threshold settings and model parameters in response to real-time feedback, reducing false positives while adapting to evolving production conditions. Crucially, M2D2’s drift-aware federated learning mechanism reweights local updates based on each site’s drift severity, preserving global model integrity at scale. The key scientific breakthrough of this work lies in combining advanced multi-modal processing, short- and long-term anomaly detection, transparent model explainability, and an adaptive federated infrastructure—all within a single, coherent framework. Evaluations of real WBG fabrication data confirm that M2D2 substantially improves drift detection accuracy, broadens anomaly coverage, and offers a transparent, scalable solution for next-generation semiconductor manufacturing.

Keywords:

active learning; anomaly detection; attention-based interpretability; autoencoder; Bayesian threshold; contrastive learning; drift-aware federated learning (FL); graph neural network (GNN); multi-modal data; parameter drift; self-supervised learning; semiconductor manufacturing; transformer

1. Introduction

Wide-bandgap (WBG) materials such as gallium nitride (GaN) and silicon carbide (SiC) have transformed the semiconductor landscape by enabling higher power density, faster switching speeds, and superior thermal stability compared to conventional silicon-based devices [1]. The adoption of WBG technologies is proliferating in applications ranging from power converters and electric vehicles to emerging 5G/6G communication systems. Although these advances promise higher efficiency and performance, the accompanying fabrication and assembly processes have grown markedly more complex. Manufacturing WBG-based devices often involves numerous tightly coupled parameters—such as plasma discharge power, chemical vapor deposition (CVD) conditions, critical temperature points, and wafer handling protocols—that must be maintained within stringent control windows to preserve device quality and yield.

Parameter drift arises when these control windows begin to shift or degrade over time, occurring either slowly due to gradual wear in reactor components or abruptly due to sporadic fluctuations in gas composition or chamber pressure. If left unaddressed, drift can contribute to device performance deterioration, reduce production yield, and in severe cases trigger catastrophic system failures. Detecting and correcting parameter drift at an early stage is therefore a linchpin in ensuring the stable and profitable operation of WBG semiconductor manufacturing lines [2]. To achieve this, many FDC systems aggregate sensor data from multiple process steps (e.g., etching, deposition, cleaning) and leverage statistical process control (SPC) or machine learning algorithms to monitor device health in near real-time.

Despite decades of progress, conventional FDC methods face significant hurdles in the context of modern WBG manufacturing. Early works often utilized simple control charts (e.g., Shewhart, CUSUM, or EWMA) or static thresholds, which proved adequate for smaller-scale processes with relatively stable parameter distributions [3]. However, as processes became more intricate and the number of monitored variables expanded, these classical techniques showed limited sensitivity to subtle or slowly developing drifts. Studies that introduced principal component analysis (PCA) and other linear dimensionality reduction methods [4] helped to some extent, but the prevalent nonlinearity and cross-variable dependencies in WBG device fabrication frequently undermine purely linear models. Similarly, heuristic threshold updates and domain expert fine-tuning—common in many industrial settings—cannot fully address the challenge of capturing dynamic, multi-modal correlations and complex temporal dependencies [5].

In response to these limitations, more advanced anomaly detection and drift-monitoring frameworks have been proposed in recent years. Machine learning- and deep learning-based FDC systems—ranging from random forests and support vector machines to convolutional and recurrent neural networks—have demonstrated a certain level of success in learning normal operational patterns from large archives of historical data [6,7]. Autoencoder-based methods, in which the reconstruction error of incoming data points serves as a proxy for deviations, can detect unexpected shifts in process parameters [8]. Contrastive learning and self-supervised techniques further enhance feature extraction, especially when partial labels or similarities exist among different operation modes [9]. Online learning algorithms and adaptive thresholding strategies aim to keep detection schemes current as processes evolve, sometimes employing Bayesian updates or incremental retraining [10].

Nonetheless, few of these approaches adequately confront the acute challenges that define drift detection in WBG contexts. First, WBG processes typically generate multi-modal data, including numeric sensor readings, unstructured textual logs (e.g., maintenance records or operator notes), and categorical identifiers such as batch or chamber IDs. Most existing solutions specialize in numeric time series but struggle to meaningfully incorporate textual or categorical signals, leading to potential blind spots in the detection mechanism [11]. Second, drift behaviors are not confined to single manufacturing steps. Subtle shifts in an upstream epitaxy (Epi) process, for example, may only manifest downstream in device electrical measurements. Methods focusing on single-machine anomalies may therefore fail to account for cross-machine or cross-step dependencies [12]. Third, the difference between short-term abrupt anomalies (e.g., a sudden spike in gas flow) and long-term gradual drifts (e.g., progressive chamber contamination) cannot be captured by a one-size-fits-all threshold or model. Balancing immediate detection with longer-horizon insights requires a multi-timescale approach [13]. Fourth, the necessity for human oversight remains crucial in high-stakes production environments. Although explainable AI (XAI) techniques like attention maps, SHAP, or LIME have been increasingly applied, many anomaly detection pipelines omit or undervalue the role of interactive feedback loops through which human experts can refine model thresholds and confirm genuine anomalies [14]. Finally, with semiconductor manufacturing often distributed across multiple fabrication facilities and machine clusters, the use of FL and distributed sensor networks is becoming more prevalent. Classical FL methods, however, are ill-equipped to handle heterogeneous or site-specific drifts, risking the dilution of the global model by drifting clients [15].

These gaps highlight the need for an integrated framework capable of unifying multi-modal data preprocessing, robust baseline modeling, interpretable short-term and long-term drift detection, active learning for continuous adaptation, and drift-aware federated coordination. To meet this challenge, we propose the M2D2 framework. M2D2 combines several design objectives that collectively address the shortfalls of existing methods: (1) a systematic approach to combining numeric, textual, and categorical inputs, ensuring that crucial signals are not overlooked; (2) a dual-level drift detection pipeline that flags both acute anomalies in real time and gradual shifts over extended windows; (3) interpretable modules that pinpoint root causes of drift, enhancing trust and transparency for engineers and decision-makers; (4) an active learning loop that refines detection thresholds and model parameters based on timely human or automated feedback; and (5) a drift-aware federated learning strategy that synthesizes local models without being jeopardized by severe parameter mismatches in individual machines.

Although this paper does not demonstrate the direct integration of drift detection outcomes into predictive maintenance (PdM) or digital twin (DT) systems, it is nonetheless important to emphasize that robust detection of parameter drift is pivotal for these advanced manufacturing paradigms. PdM strategies rely on the early detection of equipment wear or process degradation to schedule cost-effective interventions, and DTs depend on timely, accurate process data to maintain high-fidelity virtual replicas of real-world production environments. Failing to capture subtle drifts at the source can undermine both the quality of PdM insights and the reliability of DT simulations, ultimately impacting yield, operational costs, and strategic decision-making in WBG manufacturing.

By consolidating state-of-the-art anomaly detection mechanisms into a holistic architecture, we envision M2D2 not only as a technical innovation but also as a critical enabler of stable, efficient, and reliable WBG semiconductor production. The framework’s agility in handling multi-modal data, its adaptability to short- and long-term changes, and its capacity to operate robustly across multiple facilities all align with the evolving demands of next-generation manufacturing. As the electronics industry continues to expand WBG adoption, an effective and scalable drift detection paradigm will be essential to maintaining high yields, reducing operational costs, and extending device performance to new frontiers.

The remainder of this paper is organized as follows. Section 2 introduces the M2D2 framework, detailing its innovative modules. Section 3 then details the proposed M2D2 methodology, covering its core phases—data preprocessing, baseline modeling, real-time and long-term drift detection, interpretability, active learning, and drift-aware federated coordination. Section 4 provides theoretical justification, presenting formal arguments for short-term and long-term detection consistency as well as federated convergence when confronted with drifting clients. Section 5 describes empirical validation using real-world WBG fabrication data, emphasizing M2D2’s improvements in detection accuracy, drift mitigation, and explainability. Section 6 concludes by synthesizing the major findings and discussing the study’s contributions and limitations. Finally, Section 7 outlines directions for future research, including deeper integration with PdM and DT frameworks.

2. M2D2 System Framework

Modern industrial environments often generate diverse modes of information simultaneously, including continuous numeric sensor readings (e.g., temperature, pressure, current), textual or semantic data (e.g., maintenance logs, operator notes, scheduling instructions), and categorical attributes such as batch codes, machine IDs, or plant locations. When these infrastructures are extended to multi-machine or multi-plant collaborations, the data characteristics become increasingly complex and distributed. To meet the high-dimensional, dynamic, and privacy-conscious requirements of such settings, we propose M2D2, an integrated framework designed to achieve four primary objectives:

- (1) Multi-Modal Data Integration and Baseline Modeling: Uniformly manage numeric sensor readings, textual content, and categorical labels; then learn the baseline distribution of “normal” operating states via self-supervised or unsupervised methods.

- (2) Short- and Long-Term Drift Detection: Capture abrupt or acute drifts in real time, while also identifying latent, incremental deviations through periodic, batch-based analyses.

- (3) Interpretability and Active Learning: Once a warning is issued, offer visualized explanations of relevant sensor dimensions or textual keywords, and leverage human or automated feedback to reduce false alarms while continuously refining the model.

- (4) Drift-Aware Federated Learning: Address large-scale or geographically distributed industrial environments that require integrated knowledge sharing without violating data privacy, and mitigate the impact of severe local drift on the global model using dynamic weighting and micro-round strategies.

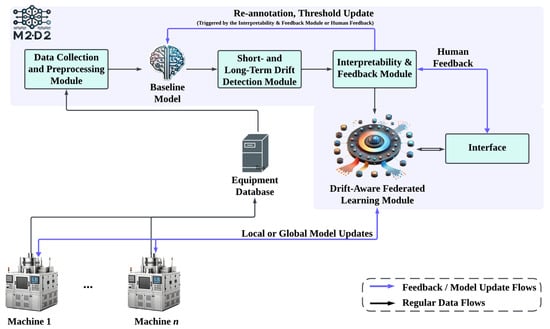

Building on these foundations, M2D2 functions as an end-to-end framework, as illustrated in Figure 1. It integrates data acquisition, preprocessing, detection, explanation, and feedback into a closed-loop system that dynamically adapts to evolving manufacturing processes.

Figure 1. M2D2 system framework.

2.1. Multi-Modal Data Collection and Preprocessing

M2D2 begins by rigorously consolidating numeric, textual, and categorical data, followed by a series of preprocessing and feature engineering tasks to align these variables in a single analytical space. Practically, this involves streaming pipelines or data buses that continually ingest sensor readings and maintenance logs and applying mechanisms to rectify noise and missing values in the raw records. For instance, when temperature or pressure data are absent, linear interpolation or autoencoder-based inpainting can be employed. Textual inputs, including maintenance logs or error messages, may be transformed into embeddings (e.g., via BERT or Word2Vec), supplemented as needed by domain-specific dictionaries or keyword annotation. As for batch IDs or machine category fields, one-hot encoding or learned embeddings can preserve their discriminatory power while averting dimensional explosion. Finally, normalization methods like Z-score or min–max scaling standardize these diverse inputs, yielding multi-modal feature vectors for subsequent baseline modeling.

2.2. Baseline Model: Self-Supervision and Contrastive Learning

Once the data have been preprocessed, M2D2 typically assumes that the collected offline training set is primarily composed of “normal” data. Leveraging self-supervised or unsupervised methods, it then learns a baseline model that represents a “normal operational state”. In contexts where strong correlations or physical proximity exist across multiple machines (e.g., shared pipelines, adjacent production lines), a GNN may be introduced to encode cross-machine dependencies. This baseline model commonly integrates two complementary strategies:

- (1) Reconstruction-Based Learning: Autoencoders and similar architectures reconstruct multi-modal data samples assumed to be normal. The system then derives a distribution of reconstruction errors, such that a high reconstruction error may indicate emerging anomalies or drifts.

- (2) Contrastive Learning: When certain pairs or subsets of samples are known to stem from the same operational regime, contrastive objectives bring their latent representations closer together. Conversely, those from distinct regimes are pushed apart. In this way, the learned embedding space becomes more discriminative for subsequent drift detection, improving generalization to new, unseen variations.

At the conclusion of this training phase, M2D2 acquires one or more baseline distributions—e.g., a “reconstruction error distribution” or a “latent embedding distribution”—which serve as references when evaluating any shifts in newly arriving data.

2.3. Short- and Long-Term Drift Detection

- (1) Short-Term Detection:

Industrial processes often exhibit acute, short-term anomalies—such as sudden pressure spikes or momentary disruptions in gas flow—that can rapidly degrade product yield or threaten equipment safety. To capture these events, M2D2’s short-term drift detection module compares each incoming data point’s reconstruction error or latent distance against an adaptive (e.g., Bayesian) threshold. If the error exceeds this threshold, the system flags a potential drift within seconds or minutes. Unlike rigid fixed-threshold methods, M2D2’s dynamically updated threshold accommodates normal fluctuations in process parameters, ultimately minimizing false alarms and enhancing responsiveness.

- (2) Long-Term Detection:

In addition to acute anomalies, many manufacturing processes undergo gradual performance erosion or chronic changes—for instance, due to mechanical wear or contamination accumulation—that may span days, weeks, or months. Such drifts rarely surpass short-term thresholds but can substantially impair operational efficiency and reliability. Consequently, M2D2 employs periodic batch analysis: at fixed intervals (e.g., weekly or monthly), it gathers recent data and quantifies how their distribution differs from an established baseline or historical reference using metrics like KL divergence or the Wasserstein distance. Once this distance crosses a threshold or displays a sustained upward trajectory, the system designates a long-term drift. If coupled with multi-scale attention, M2D2 can simultaneously capture both immediate irregularities and gradual, low-frequency shifts.

2.4. Interpretability and Active Learning Loop

- (1) Drift Explanation and Root Cause Analysis:

Alerting users to an anomaly is often insufficient by itself; actual practitioners, whether engineers or management personnel, need clarity on which measurements or textual entries triggered the warning. M2D2 addresses this by incorporating interpretability mechanisms. If the underlying model leverages Transformer-based architectures, attention-weight visualizations can highlight which sensor channels or textual tokens contributed most. Alternatively, for more opaque deep neural networks, SHAP can approximate the feature-level contributions behind the drift score. This transparency expedites root-cause analysis—be it “current fluctuations” becoming prominent in maintenance logs or a temperature sensor exhibiting statistically unusual oscillations—and thus leads to more targeted remediation.

- (2) Active Learning and Feedback:

Even the most refined detection model is prone to occasional misclassifications, especially as production environments evolve in unforeseen ways. M2D2 counteracts this problem via an active feedback loop: whenever the system flags a drift, domain experts have the opportunity to label the alert as a genuine anomaly or a false alarm. For false alarms, the model or threshold can be adjusted accordingly, reducing the recurrence of the same misclassification. For newly discovered anomalies, the labeled samples are incorporated into an “anomaly database”, used in future retraining or incremental updates to enhance the coverage of novel drift patterns. In this manner, M2D2 continuously adapts to dynamic manufacturing conditions, reducing the burden of frequent manual inspections and lowering the rate of missed detections.

2.5. Drift-Aware Federated Learning

- (1) Motivation and Mechanism:

Large enterprises or multi-site manufacturing deployments often require a shared global view of production behavior without centralizing proprietary data. FL naturally emerges as a solution, wherein local nodes train models on their respective data, only transmitting model parameters to a central coordinator. However, naive averaging approaches such as FedAvg frequently fail when certain machines undergo severe distributional shifts that skew the global model. M2D2 addresses this shortcoming by infusing a drift-aware component into FL: each site computes a drift score—incorporating short-term reconstruction errors and long-term distributional deviations—and passes this score alongside its model parameters. If the drift score is significantly high for a given site, the central aggregator can deploy additional local micro-rounds to correct local drift or temporarily exclude the site from aggregation, thereby safeguarding the global model’s robustness.

- (2) Multi-Head/Multi-Task and Deployment Strategies:

Given the heterogeneous nature of many industrial processes, M2D2 can adopt a “shared-backbone-plus-local-heads” or multi-task architecture. The central server merges the shared backbone (e.g., front-end layers of a neural network) across all sites, while each site independently fine-tunes local task-specific heads for more nuanced adaptation. In cases of radical “concept drift”, such as hardware upgrades or major process overhauls, M2D2 can dynamically create new heads and preserve older ones, thus enabling continual learning while retaining previously acquired knowledge.

2.6. System Workflow and Visualization Interface

Once multi-modal data start streaming in, M2D2’s preprocessing module merges and cleans them into consistent feature vectors, which are subsequently fed into the baseline model to compute reconstruction errors or latent embedding distances. Short-term detections trigger real-time alerts if these measures exceed the chosen threshold, supported by interpretability cues that help engineers or operators identify potential causes. Meanwhile, long-term analyses are used to periodically compare the evolving data distribution with historical baselines; significant divergence leads to a “long-term drift” alert. In both cases, a user-friendly dashboard provides historical trend graphs, root-cause explanations, and maintenance log cross-referencing. False alarms can be labeled to refine future detection thresholds, whereas confirmed new anomalies are archived in the anomaly repository for eventual retraining. Under multi-site or multi-machine setups, each node periodically transmits updated local parameters and drift scores to the central server, which conducts drift-aware parameter aggregation based on data volume and drift severity, preserving consistency and precision across the network.

2.7. Novelty and Breakthroughs

Compared to existing research, M2D2 is distinguished by its far-reaching approach to multi-modal data usage, from baseline modeling (combining reconstruction and contrastive learning) to dual-scale drift detection (short- and long-term), as well as its built-in interpretability, active learning feedback loop, and drift-aware federated framework. This end-to-end design more comprehensively addresses the complexities of large-scale industrial production lines and accommodates diverse anomalies that emerge at varying frequencies and severities. Moreover, the system transcends mere anomaly signaling by offering in-depth explanations—through attention visualization or SHAP/LIME—allowing on-site personnel to swiftly devise targeted interventions. Extended to multi-site or multi-machine contexts, federated learning incorporates drift scoring to dynamically reweigh local updates or exclude highly drifting clients, thereby ensuring that the global model remains stable in the face of heterogeneous or rapidly evolving conditions.

Overall, M2D2 combines multi-modal feature integration, short- and long-term drift detection, interpretability, active learning, and drift-aware federated coordination into a system with adaptive, self-improving capabilities. This integrated design not only matches the demands of high-complexity domains such as advanced semiconductor manufacturing, Industry 4.0, and smart factories but also establishes a forward-looking foundation for future extensions, including DT integrations, PdM strategies, and beyond.

3. Methodology

Modern industrial environments often deploy numerous machines (e.g., $m = 1, \dots, M$) equipped with diverse sensors, alongside textual or categorical information such as maintenance logs or batch IDs. Over time, these machines can experience drift, that is, a shift from their normal operational parameter distribution to a new, possibly abnormal distribution. Detecting this drift early is paramount to avoiding quality degradation, production downtime, or catastrophic failures [16].

Formally, let $\mathbf{x}_{m,t}$ denote the multi-modal feature vector for machine $m$ at time $t$. Under normal conditions, the data follow the distribution $P_0$. When a drift occurs, the distribution changes to $P_1 \neq P_0$. The key objective is to detect the earliest time $t^{*}$ at which

$$\mathbf{x}_{m,t} \sim P_0$$

no longer holds, thereby generating a drift warning. We seek a methodology that accommodates the following aspects:

- (1) Multi-modal data (e.g., numeric sensors, maintenance logs in text, and categorical features).

- (2) Phase-by-phase processes for data cleaning, baseline modeling, real-time detection, and long-term monitoring.

- (3) Explainability and active learning to continually refine the detection model with new feedback and anomalies.

The following sections describe our proposed M2D2 framework, which organizes the solution into seven phases, from data preprocessing to optional federated learning and maintenance strategies.

3.1. Phase 0: Data Preprocessing

Before any modeling can begin, we must consolidate numeric sensor readings, textual maintenance records, and categorical batch/shift labels into a coherent multi-modal feature set, as shown in Table 1. At the same time, we must filter out noise, address missing values, and standardize the representation [16].

Table 1. Example data table for drift detection.

- (1) Multi-modal Integration: Text (maintenance logs) is converted into embeddings (using Word2Vec, Doc2Vec, or BERT [17]); categorical data (e.g., batch ID) are transformed into one-hot or learned embeddings [18]; numeric sensor readings are then concatenated with these embeddings (as shown in Table 2).

Table 2. Example of feature transformation table.

- (2) Denoising and Imputation: Missing sensor entries or misaligned timestamps are handled via linear interpolation or autoencoder-based inpainting [19].

- (3) Normalization: Each dimension (including text/categorical embeddings) is standardized, for instance via Z-score:

$$\tilde{x}_j = \frac{x_j - \mu_j}{\sigma_j},$$

where $\mu_j$ and $\sigma_j$ are the mean and standard deviation of dimension $j$.

This produces a cleaned and merged multi-modal feature vector, $\mathbf{x}_{m,t}$, ready for baseline modeling in the next phase.
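The three preprocessing steps can be illustrated with a minimal, standard-library sketch. It is not the authors' pipeline: the function names (`interpolate_missing`, `one_hot`, `z_score_columns`, `build_features`) are hypothetical, the text embeddings are assumed to be precomputed by an external encoder, and only linear interpolation (not autoencoder-based inpainting) is shown.

```python
from statistics import mean, pstdev


def interpolate_missing(values):
    """Fill None gaps by linear interpolation; edge gaps copy the nearest known value."""
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    filled = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            filled[i] = values[right]
        elif right is None:
            filled[i] = values[left]
        else:
            frac = (i - left) / (right - left)
            filled[i] = values[left] + frac * (values[right] - values[left])
    return filled


def one_hot(label, vocabulary):
    """Encode a categorical label (e.g., a batch ID) as a one-hot vector."""
    return [1.0 if label == item else 0.0 for item in vocabulary]


def z_score_columns(rows):
    """Standardize every column; constant columns are mapped to 0."""
    columns = list(zip(*rows))
    stats = [(mean(c), pstdev(c)) for c in columns]
    return [
        [(x - mu) / sd if sd > 0 else 0.0 for x, (mu, sd) in zip(row, stats)]
        for row in rows
    ]


def build_features(temperature, batch_ids, text_embeddings, batch_vocab):
    """Concatenate numeric, categorical, and (precomputed) text features, then normalize."""
    temps = interpolate_missing(temperature)
    rows = [
        [t] + one_hot(b, batch_vocab) + list(e)
        for t, b, e in zip(temps, batch_ids, text_embeddings)
    ]
    return z_score_columns(rows)
```

For example, `build_features([350.0, None, 354.0], ["A", "B", "A"], [[0.1], [0.2], [0.3]], ["A", "B"])` returns three standardized rows of four features each, with the missing temperature filled in as 352.0 before scaling.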

3.2. Phase 1: Baseline Model

We assume an offline training set composed predominantly of normal data. Our aim is to learn the baseline representation or distribution of normal operating behavior using self-supervised or unsupervised techniques. If multiple machines are related through adjacency (e.g., shared process lines or spatial proximity), a graph structure can be leveraged.

- (1) Self-Supervision (Reconstruction + Contrastive):

- i. Masked Reconstruction: A portion of $\mathbf{x}_{m,t}$ is masked or noised, and the model $f_\theta$ (e.g., a Transformer) attempts to reconstruct the original input by minimizing a reconstruction loss such as

$$\mathcal{L}_{\mathrm{rec}} = \left\lVert \mathbf{x}_{m,t} - \hat{\mathbf{x}}_{m,t} \right\rVert^2,$$

where $\hat{\mathbf{x}}_{m,t}$ is the model's reconstruction.

- ii. Contrastive Learning: If some pairs are known to be under similar operating conditions and others are not, we include a contrastive loss $\mathcal{L}_{\mathrm{con}}$ [20] defined on the latent embeddings. Here, $\mathbf{z}_{m,t}$ is the latent embedding from $f_\theta$.

- (2) Graph-Structured GNN: If machines form a graph $G = (V, E)$, a GNN can be integrated to capture cross-machine dependencies [21].

- (3) Baseline Distribution: After training, we derive either a reconstruction error distribution, $P_0(e)$, or a latent embedding distribution, $P_0(\mathbf{z})$. These constitute the baseline representations of normal states. The output of this phase is a baseline model $f_\theta$, capturing normal multi-modal data behavior, plus a reference distribution, $P_0(e)$ or $P_0(\mathbf{z})$, to which new data can be compared.
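To make the two self-supervised objectives and the resulting baseline distribution concrete, the sketch below computes a masked reconstruction loss, a pairwise contrastive loss, and an empirical quantile of baseline errors. The margin-based contrastive form is an assumption chosen for illustration (the text does not specify which contrastive loss is used), and all function names are hypothetical.

```python
import math


def masked_reconstruction_loss(x, x_hat, mask):
    """Mean squared error over masked positions only (mask[i] is True where the input was hidden)."""
    idx = [i for i, m in enumerate(mask) if m]
    if not idx:
        return 0.0
    return sum((x[i] - x_hat[i]) ** 2 for i in idx) / len(idx)


def contrastive_pair_loss(z_a, z_b, same_regime, margin=1.0):
    """Margin-based pairwise loss (assumed form): pull same-regime pairs together,
    push different-regime pairs at least `margin` apart."""
    d = math.dist(z_a, z_b)
    if same_regime:
        return d ** 2
    return max(0.0, margin - d) ** 2


def empirical_quantile(errors, q):
    """Linear-interpolation quantile of the baseline reconstruction errors."""
    ordered = sorted(errors)
    pos = q * (len(ordered) - 1)
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (pos - lo) * (ordered[hi] - ordered[lo])
```

The list of reconstruction errors collected on normal training data plays the role of the reference error distribution; `empirical_quantile(errors, 0.95)` gives one simple summary of it that a detector can compare new errors against.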

3.3. Phase 2: Short-Term Detection

Once the baseline model is deployed in real time, each incoming data point must be quickly classified as normal or anomalous. However, a fixed threshold can be insufficient in a changing production environment, so we adopt a Bayesian threshold [22].

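- (1) Forward Inference: Each incoming sample is passed through the baseline model to obtain its reconstruction error (or, alternatively, a latent distance), for example

$$e_{m,t} = \left\lVert \mathbf{x}_{m,t} - f_\theta(\mathbf{x}_{m,t}) \right\rVert^2.$$

- (2) Bayesian Threshold: Let $\tau$ be the threshold with prior $p(\tau)$. Observing $e_{m,t}$ updates the posterior:

$$p(\tau \mid e_{m,t}) \propto p(e_{m,t} \mid \tau)\, p(\tau).$$

We take $\tau_t$ (e.g., the 95% quantile) from the posterior. If $e_{m,t} > \tau_t$, a short-term drift is flagged.

The output of this phase is a drift score and a binary detection label. This can be executed at a second- or minute-level timescale for real-time alerts.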
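One way to realize an adaptive threshold of this kind is sketched below. It is a simplified stand-in, not the paper's exact formulation: instead of placing a prior on the threshold itself, it assumes reconstruction errors are Gaussian with known noise variance, keeps a conjugate normal posterior over the mean error, and takes the 95% quantile of the posterior predictive as the threshold. The class name `BayesianThreshold` and its parameters are hypothetical.

```python
from statistics import NormalDist


class BayesianThreshold:
    """Adaptive threshold from a normal-normal conjugate model (illustrative assumption)."""

    def __init__(self, prior_mean, prior_var, noise_var, quantile=0.95):
        self.mean = prior_mean      # posterior mean of the typical error
        self.var = prior_var        # posterior variance of that mean
        self.noise_var = noise_var  # assumed known observation variance
        self.quantile = quantile

    def threshold(self):
        """Quantile of the posterior predictive distribution of the next error."""
        predictive = NormalDist(self.mean, (self.var + self.noise_var) ** 0.5)
        return predictive.inv_cdf(self.quantile)

    def update(self, error):
        """Standard conjugate update of the mean given one new observation."""
        precision = 1.0 / self.var + 1.0 / self.noise_var
        self.mean = (self.mean / self.var + error / self.noise_var) / precision
        self.var = 1.0 / precision

    def step(self, error):
        """Return True if the error is flagged; only unflagged errors update the posterior."""
        flagged = error > self.threshold()
        if not flagged:
            self.update(error)
        return flagged
```

Skipping the update for flagged samples is a design choice of this sketch: it keeps a burst of anomalous errors from inflating the threshold, at the cost of adapting more slowly to a legitimate change in operating regime.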

3.4. Phase 3: Long-Term Detection

Apart from acute anomalies, machines can exhibit gradual drifts over days or weeks (e.g., air pressure). This phase periodically measures distribution shifts in embeddings or reconstruction errors over a batch window.

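- (1) Distribution Measures (KL/Wasserstein): Gather recent data to form an empirical distribution $\hat{P}_t$. Compare it with the baseline $P_0$ via [16]

$$D_{\mathrm{KL}}(\hat{P}_t \,\|\, P_0) = \sum_{x} \hat{P}_t(x) \log \frac{\hat{P}_t(x)}{P_0(x)} \quad \text{or} \quad W_1(\hat{P}_t, P_0) = \inf_{\gamma \in \Gamma(\hat{P}_t, P_0)} \mathbb{E}_{(x,y) \sim \gamma}\left[\lVert x - y \rVert\right].$$

If this distance grows beyond a threshold $\delta$ (set statistically or via Bayesian update), a long-term drift is declared.

- (2) Multi-Scale Attention: A Transformer can incorporate specialized heads that capture short-range signals vs. long-range temporal trends [23]. This allows the model to learn to separate chronic from acute shifts.

A long-term drift warning is issued if the measured distance exceeds $\delta$. This mechanism catches subtle, slowly accumulating deviations.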
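The batch comparison can be sketched for a one-dimensional statistic such as the reconstruction error. The code below estimates a histogram-based KL divergence and the empirical 1-Wasserstein distance between a recent window and the baseline. The bin edges, smoothing constant, thresholds, and function names are illustrative assumptions, and the Wasserstein routine assumes both windows contain the same number of samples.

```python
import math


def histogram(samples, edges):
    """Count samples per bin; samples outside [edges[0], edges[-1]] are ignored."""
    counts = [0] * (len(edges) - 1)
    for s in samples:
        if s < edges[0] or s > edges[-1]:
            continue
        for i in range(len(counts)):
            if s < edges[i + 1] or i == len(counts) - 1:
                counts[i] += 1
                break
    return counts


def kl_divergence(p_counts, q_counts, eps=1e-6):
    """KL(P || Q) from bin counts, with additive smoothing to avoid log(0)."""
    n = len(p_counts)
    p_total = sum(p_counts) + eps * n
    q_total = sum(q_counts) + eps * n
    total = 0.0
    for pc, qc in zip(p_counts, q_counts):
        p = (pc + eps) / p_total
        q = (qc + eps) / q_total
        total += p * math.log(p / q)
    return total


def wasserstein_1d(a, b):
    """Empirical 1-Wasserstein distance for two equal-size 1-D samples."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal sample sizes")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def long_term_drift(baseline, recent, edges, kl_threshold=0.5, w_threshold=0.25):
    """Flag drift if either distance between recent and baseline exceeds its threshold."""
    kl = kl_divergence(histogram(recent, edges), histogram(baseline, edges))
    w1 = wasserstein_1d(recent, baseline)
    return {"kl": kl, "w1": w1, "drift": kl > kl_threshold or w1 > w_threshold}
```

In practice the two measures complement each other: the KL estimate is sensitive to mass appearing in previously empty bins, while the Wasserstein distance grows smoothly with the size of the shift and does not depend on binning.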

3.5. Phase 4: Interpretability

Once drift is detected (short- or long-term), engineers need clarity about which features or maintenance records caused the model to flag an anomaly. Multi-modal attention can guide the explanation.

- (1) Attention Visualization: In a Transformer-based system, each attention head yields weights $A_{ij}$. By visualizing $A_{ij}$, one can identify which textual or categorical tokens strongly influenced sensor-based anomaly detection.

- (2) SHAP/LIME: Final drift scores can be treated as a function $g(\mathbf{x})$ for Shapley value decomposition or LIME-based perturbation [24]. If textual tokens, e.g., “current fluctuations,” appear with high contribution, the cause is more transparent.

Root-cause analysis or drift explanation can be used to specify “which dimension or which textual clue” triggered the drift verdict, enabling targeted corrective actions.
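The idea of decomposing a drift score into per-feature contributions can be illustrated with an exact Shapley computation on a toy score function. This is not the SHAP library and not the authors' implementation: it enumerates all feature orderings, which is only feasible for a handful of features, and it assumes that an "absent" feature is represented by a baseline (nominal) value. The name `shapley_values` is hypothetical.

```python
from itertools import permutations


def shapley_values(score_fn, x, baseline):
    """Exact Shapley attribution of score_fn(x) - score_fn(baseline) to each feature.

    Features are switched from their baseline value to their observed value one at a
    time, and marginal changes are averaged over every ordering (cost grows as n!).
    """
    n = len(x)
    phi = [0.0] * n
    orders = list(permutations(range(n)))
    for order in orders:
        current = list(baseline)
        prev = score_fn(current)
        for i in order:
            current[i] = x[i]
            new = score_fn(current)
            phi[i] += new - prev
            prev = new
    return [p / len(orders) for p in phi]
```

For a toy drift score `lambda v: 2 * v[0] + v[1] * v[2]` evaluated at `[1, 1, 1]` against a baseline of `[0, 0, 0]`, the attributions are `[2.0, 0.5, 0.5]`: the first feature accounts for most of the score, and the interaction term is split evenly between the other two. The attributions always sum to the difference between the observed and baseline scores.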

3.6. Phase 5: Active Learning

Systems used in production inevitably produce false alarms or encounter novel drift patterns. Incorporating human (engineer) feedback can dynamically reduce false positives and enhance coverage of new anomalies [22].

- (1) Human Feedback: If a flagged sample is deemed normal by experts, it is re-labeled as a “false alarm,” and the threshold prior can be adjusted accordingly. On the other hand, if an anomaly is confirmed, it is added to a “labeled anomaly” set for retraining.

- (2) Retraining with Semi-Supervision: The model is retrained with an objective of the form

$$\mathcal{L} = \mathcal{L}_{\mathrm{self}} + \gamma\, \mathcal{L}_{\mathrm{anom}}.$$

Here, $\mathcal{L}_{\mathrm{self}}$ is the self-supervised loss from Phase 1, and $\mathcal{L}_{\mathrm{anom}}$ is an additional penalty term used to reinforce the model’s discrimination of newly confirmed drift samples.

The output of this phase is an updated model $f_{\theta'}$ and a refined threshold $\tau'$. Over time, the model adapts to evolving operating conditions, reducing false positives and the number of missed detections.
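The feedback loop can be sketched as follows. The specifics are assumptions made for illustration: a false alarm nudges the detection quantile upward by a fixed step, confirmed anomalies are stored for later retraining, and the anomaly penalty is a hinge term that pushes the drift scores of confirmed anomalies above a margin. `FeedbackLoop`, `semi_supervised_loss`, `gamma`, and `margin` are hypothetical names.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackLoop:
    """Tracks expert labels: loosens the threshold on false alarms, archives true anomalies."""

    quantile: float = 0.95
    step: float = 0.01
    max_quantile: float = 0.999
    anomaly_set: list = field(default_factory=list)

    def record(self, sample, is_true_anomaly):
        if is_true_anomaly:
            # Confirmed drift: keep the sample for semi-supervised retraining.
            self.anomaly_set.append(sample)
        else:
            # False alarm: make the detector slightly less sensitive.
            self.quantile = min(self.max_quantile, self.quantile + self.step)


def semi_supervised_loss(self_supervised_loss, anomaly_scores, gamma=1.0, margin=1.0):
    """Self-supervised loss plus gamma times a hinge penalty on confirmed anomalies.

    The penalty is zero once every confirmed anomaly scores at least `margin`.
    """
    if not anomaly_scores:
        return self_supervised_loss
    penalty = sum(max(0.0, margin - s) for s in anomaly_scores) / len(anomaly_scores)
    return self_supervised_loss + gamma * penalty
```

A fixed step is the simplest possible update rule; a deployment would more plausibly fold the feedback into the threshold prior, as the text describes, so that the adjustment shrinks as evidence accumulates.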

3.7. Phase 6: Drift-Aware Federated Learning

In large-scale industrial environments spanning multiple machines or even multiple companies, FL has become a key approach to consolidating distributed knowledge under strict privacy and communication constraints. However, most existing frameworks—such as FedAvg or FedProx—merely average local parameters from each site without considering drift discrepancies across machines (sites). When one or more machines experience significant distributional shifts, the global model can be severely degraded. Moreover, if the federated process solely focuses on parameter updates (e.g., aggregating weights) without incorporating the maintenance and drift detection insights from earlier phases (Phase 2, Phase 3), it cannot deliver true “intelligent operations and maintenance”.

To address these issues, we enhance the original Phase 6 into a drift-aware federated learning and maintenance paradigm. By integrating dynamic aggregation weights, collective drift responses, multi-head/multi-task architectures, and a closed-loop approach with interpretability and maintenance decisions, federated learning becomes the driving force in our M2D2 framework.

- (1)

- Drift Score Calculation and Upload: Building on Phase 2 (short-term detection) and Phase 3 (long-term detection), each machine $i$ in round $t$ computes one or more drift scores, denoted by $D_i^{(t)}$. A straightforward approach is to combine short-term reconstruction errors with long-term distributional distances using a weighted sum:

  $$D_i^{(t)} = \alpha\, D_{i,\mathrm{short}}^{(t)} + \beta\, D_{i,\mathrm{long}}^{(t)},$$

  where $D_{i,\mathrm{short}}^{(t)}$ typically reflects the short-term anomaly or reconstruction error as described in Phase 2; $D_{i,\mathrm{long}}^{(t)}$ can be a KL divergence, Wasserstein distance, or any metric quantifying the long-term shift from a baseline distribution (Phase 3); and $\alpha$ and $\beta$ are hyperparameters regulating the relative importance of short-term versus long-term drift. In practice, if one needs finer distinctions, $D_i^{(t)}$ can be a vector $(D_{i,\mathrm{short}}^{(t)}, D_{i,\mathrm{long}}^{(t)})$, thereby allowing separate policies for immediate vs. gradual shifts.

- (2)

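- Drift-Aware Aggregation Weights: Traditional FL methods, such as FedAvg, compute the global model by weighting local parameters $\theta_i^{(t)}$ using the data size $n_i$:

  $$\theta^{(t+1)} = \sum_{i} \frac{n_i}{\sum_{j} n_j}\, \theta_i^{(t)}.$$

  However, if different machines exhibit heterogeneous drift, a naive averaging scheme can allow heavily drifting clients to “pull” the global parameters off track. To mitigate this, we introduce the drift-aware weight $w_i^{(t)}$:

  $$w_i^{(t)} = \frac{n_i\, f\big(D_i^{(t)}\big)}{\sum_{j} n_j\, f\big(D_j^{(t)}\big)}.$$

  The function $f$ decreases with $D_i^{(t)}$ to downweight clients that are heavily drifting. Common choices for $f$ include

  $$f(D) = \exp(-\lambda D) \quad \text{or} \quad f(D) = \frac{1}{1 + \lambda D},$$

  where $\lambda$ controls how strongly the system penalizes drift. By adjusting $f$ and $\lambda$, the global model remains robust against outliers or heavily drifting sites.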

- (3)

- Adaptive Rounds and Micro-Rounds

- i.

- Micro-Round Trigger: When machine $i$ reports that $D_i^{(t)}$ exceeds threshold $\tau_{\mathrm{micro}}$, the server can trigger $K$ micro-rounds to afford extra local updates before final aggregation. Let $\mathcal{L}_i$ represent the local loss function (e.g., reconstruction or contrastive loss in earlier phases). The micro-round training can be formalized as follows:

  $$\theta_i^{(t,k+1)} = \theta_i^{(t,k)} - \eta\, \nabla \mathcal{L}_i\big(\theta_i^{(t,k)}\big), \quad k = 0, \dots, K-1.$$

  After these extra steps, the updated $\theta_i^{(t,K)}$ is uploaded to the server, potentially reducing the drift gap.

- ii.

- Drop Strategy: If $D_i^{(t)}$ is exceedingly large (i.e., the site is severely out of sync with the global distribution) and cannot be quickly corrected, the server may skip (drop) that site in the current aggregation:

  $$w_i^{(t)} = 0 \quad \text{if } D_i^{(t)} > \tau_{\mathrm{drop}}.$$

  By temporarily excluding extreme outliers, the global model remains stable. Later, once the drifting machine completes more extensive local retraining or maintenance (see below), it can rejoin the FL process.

- (4)

- Multi-Head/Multi-Task FL with Continual Learning:

- i.

- Shared Backbone + Task-Specific Heads: To handle both common features across sites and site-specific variations, one can adopt a shared-backbone-plus-head architecture. Let

  $$\theta_i = \big(\theta_{\mathrm{shared}},\ \theta_{\mathrm{head},i}\big).$$

  The server aggregates the shared backbone $\theta_{\mathrm{shared}}$ across all sites (global update). Each site $i$ fine-tunes its own head $\theta_{\mathrm{head},i}$ based on local drift or anomalies (local update), enabling more extensive adaptation for that site without disrupting the globally shared backbone.

- ii.

- Dynamic Head Generation (Continual Learning): If a site undergoes a radical or “conceptual” shift (e.g., the entire manufacturing process changes or sensor hardware is replaced), a new head can be dynamically created for that site while the old head is preserved. This design merges continual learning with FL, ensuring that previously learned knowledge is retained and the system can accommodate newly emerging distributions.

- (5)

- Collective Drift and Graph-Based Adaptation: In real industrial settings, machines often have upstream–downstream or spatial interdependencies. A drift in a first-stage machine can reverberate downstream. Let us define a site-dependency graph $G = (V, E)$, where each node $v_i \in V$ corresponds to a machine $i$, and the edges in $E$ represent interlinked processes.

- i.

- Group-Based Aggregation: If a subgraph of machines concurrently exhibits high drift, the server can perform more frequent local aggregations within that subgraph and coordinate maintenance for them as a group.

- ii.

- Neighbor-Aware: If a neighbor’s drift scores spike, the system can proactively adjust or warn nearby machines, facilitating a more holistic approach to distributed production lines.

- (6)

- Maintenance Decisions and Closed-Loop Integration:

- i.

- Maintenance Triggers: When $D_i^{(t)}$ surpasses a critical threshold, or when interpretability methods (Phase 4) highlight sensor/log anomalies (e.g., “current fluctuations”), we initiate PdM to prevent imminent failures. In multi-machine scenarios, we can formulate a global scheduling objective balancing maintenance cost $C_i$ and risk $R_i$, e.g.,

  $$\min_{z_1, \dots, z_N \in \{0,1\}} \ \sum_{i=1}^{N} \big[ C_i\, z_i + R_i\, (1 - z_i) \big],$$

  where $z_i = 1$ indicates that site $i$ undergoes immediate maintenance, and $R_i$ represents the expected downtime or failure risk if the site is not repaired.

- ii.

- Active Learning (Phase 5) and Retraining: After maintenance, each site collects new data reflecting the repaired conditions. With an active learning process, it can select or label critical samples to rapidly adapt local parameters. The updated local parameters $\theta_i$ are then transmitted to the server, forming a detect–explain–repair–retrain loop.

- iii.

- Interpretability Feedback (Phase 4): If interpretability methods (e.g., attention weights, SHAP, or LIME) converge on specific features, sensors, or textual signals as causes of anomalies, multiple sites can share these explanations with the server. A recurring pattern (e.g., “Batch ID #123 malfunction”) might prompt a system-wide fix or the replacement of parts across the factory or multiple collaborating factories.

By incorporating these design elements, Phase 6 not only safeguards inter-site privacy but also robustly handles heterogeneous and dynamic drift conditions at scale. As a result (shown in Table 3), our M2D2 framework can more effectively realize intelligent, real-time process monitoring and PdM in large-scale industrial ecosystems.
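The drift-aware aggregation and drop strategy of Phase 6 can be sketched as below. The sketch assumes an exponential penalty $\exp(-\lambda D)$ as one possible decreasing function, plain Python lists as parameter vectors, and made-up data sizes, drift values, and parameters for three sites; the function names are illustrative only.

```python
import math


def drift_score(short_err, long_dist, alpha=0.5, beta=0.5):
    """Weighted sum of short-term error and long-term distributional distance."""
    return alpha * short_err + beta * long_dist


def drift_aware_weights(sizes, drifts, lam=1.0, drop_threshold=None):
    """Normalized weights proportional to n_i * exp(-lam * D_i).

    Sites whose drift exceeds drop_threshold get weight 0 (drop strategy).
    """
    raw = []
    for n, d in zip(sizes, drifts):
        if drop_threshold is not None and d > drop_threshold:
            raw.append(0.0)
        else:
            raw.append(n * math.exp(-lam * d))
    total = sum(raw)
    if total == 0.0:
        raise ValueError("all sites were dropped; nothing to aggregate")
    return [r / total for r in raw]


def aggregate(params, weights):
    """Weighted average of per-site parameter vectors (lists of floats)."""
    dim = len(params[0])
    return [sum(w * p[k] for w, p in zip(weights, params)) for k in range(dim)]


# Three hypothetical sites with equal data sizes; the third is drifting heavily.
sizes = [100, 100, 100]
drifts = [drift_score(0.1, 0.1), drift_score(0.2, 0.0), drift_score(4.0, 6.0)]
params = [[1.0, 2.0], [1.2, 1.8], [9.0, -5.0]]

fedavg = aggregate(params, [n / sum(sizes) for n in sizes])
weights = drift_aware_weights(sizes, drifts, lam=1.0)
robust = aggregate(params, weights)
dropped = drift_aware_weights(sizes, drifts, lam=1.0, drop_threshold=3.0)

print("FedAvg:", fedavg)
print("Drift-aware:", robust)
print("Weights with drop:", dropped)
```

With equal data sizes, FedAvg gives the drifting site a third of the vote, whereas the drift-aware weights shrink its influence smoothly and the drop threshold removes it entirely for the round.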

Table 3. Example of drift detection results.


4. Mathematical Justification

This section provides a mathematical foundation to demonstrate that the proposed M2D2 framework can achieve the following objectives in multi-modal and multi-machine industrial settings:

- (1)

- Short-Term Drift Detection Consistency: When a short-term drift (acute anomaly) occurs, the framework can detect it with high probability given a finite number of observations (corresponding to the Bayesian threshold $\tau$ in Phase 2).

- (2)

- Long-Term Drift Detection Consistency: When a long-term drift (gradual anomaly) develops over time, the framework uses distributional metrics such as the KL divergence, the Wasserstein distance, or other measures to detect the deviation in extended time windows (corresponding to Phase 3).

- (3)

- Federated Aggregation Robustness: In drift-aware federated learning (Phase 6), the system introduces a drift score $D_i^{(t)}$ for each machine to dynamically adjust aggregation weights or trigger micro-rounds/drop strategies. We show that this mechanism ensures that the risk minimization of the global model is not jeopardized by severe local drifts, and that the global model converges, under reasonable assumptions, to (or near) the global optimum.

In the subsections that follow, we present the core theorems and proofs addressing these three objectives.

4.1. Short-Term Drift Detection Consistency

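In Phase 2, for each new data point $x_t$, we compute the reconstruction error,

$$e_t = \lVert x_t - \hat{x}_t \rVert^2,$$

where $\hat{x}_t$ is the baseline model’s reconstruction of $x_t$, and employ a Bayesian threshold mechanism (with prior $p(\tau)$ on the threshold $\tau$) to decide if a sudden drift has occurred [25,26]. Formally, we define

$$y_t^{\mathrm{short}} = \mathbb{1}\{ e_t > \tau \}.$$

If $e_t > \tau$, the system flags an acute anomaly, setting $y_t^{\mathrm{short}} = 1$. The following theorem states that if the underlying data distribution truly shifts abruptly, the detection probability quickly approaches 1, even with a limited number of samples.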

Theorem 1.

(Short-Term Detection with High Confidence). Let $P_0$ be the distribution of the reconstruction error under the normal condition, and $P_1$ be the distribution under a short-term drift event. If

$$D_{\mathrm{KL}}(P_1 \,\|\, P_0) > 0,$$

then there exists a threshold $\tau$ such that, as the number of post-drift observations $n \to \infty$, the probability that $\{e_t > \tau\}$ occurs under $P_1$ converges to 1. In other words, the system can detect acute drift with high confidence in finite time.

Proof.

- i.

- If the supports of $P_0$ and $P_1$ are disjoint, then there is a critical point $\tau^{*}$ such that the probability of exceeding that threshold is 0 under $P_0$ and 1 under $P_1$.

- ii.

- If $D_{\mathrm{KL}}(P_1 \,\|\, P_0) > 0$, Chernoff bounds or Sanov’s theorem imply that, as the sample size grows, the probability of exceeding a certain threshold under $P_1$ approaches 1 exponentially fast [27].

- iii.

- Incorporating the Bayesian threshold mechanism, the posterior over $\tau$ adjusts to favor higher drift likelihood as reconstruction errors increase, and so the detection probability approaches 1 over time.

- iv.

- Consequently, under a genuine acute drift event, the probability of successful detection converges to 1 as $n \to \infty$. □
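A small numerical illustration of Theorem 1 is given below, under the simplifying assumptions that reconstruction errors are i.i.d. Gaussian, that the threshold is fixed, and that detection means at least one of $n$ post-drift errors exceeds it. The means, standard deviation, and threshold are invented for illustration and are not taken from the fabrication data.

```python
import math


def normal_sf(x, mu, sigma):
    """P(X > x) for X ~ Normal(mu, sigma), via the complementary error function."""
    return 0.5 * math.erfc((x - mu) / (sigma * math.sqrt(2)))


def detection_probability(p_exceed, n):
    """P(at least one of n i.i.d. errors exceeds the threshold)."""
    return 1.0 - (1.0 - p_exceed) ** n


tau = 1.5                                       # fixed threshold (assumed)
p_false = normal_sf(tau, mu=1.0, sigma=0.2)     # per-sample exceedance under P0
p_hit = normal_sf(tau, mu=1.4, sigma=0.2)       # per-sample exceedance under P1

sample_sizes = (1, 5, 20, 50)
detect = [detection_probability(p_hit, n) for n in sample_sizes]

for n, p in zip(sample_sizes, detect):
    print(f"n={n:3d}  P(detect under drift) = {p:.6f}")
print(f"per-sample false-alarm rate = {p_false:.5f}")
```

The same formula applied to `p_false` shows that false alarms also accumulate with $n$ under a fixed threshold, which is one reason the per-sample false-alarm rate has to be kept small and the threshold adapted over time.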

4.2. Long-Term Drift Detection Consistency

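In Phase 3, the system periodically collects samples over a window of length $T$ to form the empirical distribution $\hat{P}_T$, and compares it against a baseline distribution $P_0$. The distance measure is defined as follows:

$$d_T = D\big(\hat{P}_T, P_0\big),$$

where $D$ is the chosen distributional distance (e.g., KL divergence or Wasserstein distance). If $d_T$ exceeds the threshold $\delta$, the system declares the existence of a long-term drift [28]. The next theorem shows that if a permanent drift truly occurs, the detection probability converges to 1 as $T \to \infty$.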

Theorem 2.

(Long-Term Detection Consistency). Consider an infinite sequence $\{x_t\}$ originally drawn from $P_0$. Suppose that after some time $t_0$, samples are drawn from a new distribution $P_1 \neq P_0$. Then, for any $\delta$ with $0 < \delta < D(P_1, P_0)$, there almost surely exists $T_0$ such that for all $T > T_0$,

$$D\big(\hat{P}_T, P_0\big) > \delta,$$

meaning that the probability of detecting the drift approaches 1 for sufficiently large $T$.

Proof.

- i.

- Under i.i.d. or weakly stationary assumptions, one can apply the law of large numbers or the Glivenko–Cantelli theorem to show that $\hat{P}_T$ converges to $P_1$ as the sample size grows [29].

- ii.

- If $P_1 \neq P_0$, then $D_{\mathrm{KL}}(P_1 \,\|\, P_0) > 0$ or $W(P_1, P_0) > 0$.

- iii.

- Due to the continuity of the distance measure, for sufficiently large samples, $D(\hat{P}_T, P_0)$ almost surely exceeds any $\delta < D(P_1, P_0)$. Hence, the long-term drift will be detected.

- iv.

- Therefore, under gradual or permanent drift, detection eventually becomes certain as $T \to \infty$. □
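The consistency argument can be illustrated with a histogram-based KL estimate. The sketch below assumes scalar Gaussian data, a unit mean shift after the drift, fixed bin edges, and add-one smoothing to avoid empty bins; all of these are choices made for illustration, and the random seed only makes the run repeatable.

```python
import math
import random


def histogram(samples, edges):
    """Relative bin frequencies with add-one smoothing (avoids log of zero)."""
    counts = [1] * (len(edges) - 1)
    for x in samples:
        for k in range(len(edges) - 1):
            if edges[k] <= x < edges[k + 1]:
                counts[k] += 1
                break
    total = sum(counts)
    return [c / total for c in counts]


def kl_divergence(p, q):
    """Discrete KL divergence D(p || q) for strictly positive histograms."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q))


rng = random.Random(0)
edges = [-4 + 0.5 * k for k in range(21)]          # 20 bins covering [-4, 6)
baseline = histogram([rng.gauss(0.0, 1.0) for _ in range(5000)], edges)


def window_distance(mean, size):
    """KL distance between one window drawn at the given mean and the baseline."""
    window = [rng.gauss(mean, 1.0) for _ in range(size)]
    return kl_divergence(histogram(window, edges), baseline)


no_drift = window_distance(0.0, 2000)
drifted = [window_distance(1.0, n) for n in (50, 500, 5000)]

print("no drift:", round(no_drift, 4))
print("drifted windows:", [round(d, 4) for d in drifted])
```

As the window grows, the estimate for the drifted data settles near the divergence between the two underlying distributions, so any threshold below that value is eventually exceeded, while the no-drift estimate stays close to zero.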

4.3. Federated Aggregation Robustness Under Drift-Aware Weighting

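In Phase 6, we propose a drift-aware FL mechanism. The core idea is to assign dynamic weights

$$w_i^{(t)} = \frac{n_i\, f\big(D_i^{(t)}\big)}{\sum_{j} n_j\, f\big(D_j^{(t)}\big)},$$

where $n_i$ is the data size of machine $i$, $D_i^{(t)}$ is the drift score (combining short- and long-term indicators) for machine $i$ at round $t$, and $f$ is a decreasing function, for example $f(D) = \exp(-\lambda D)$. Then we update the global parameter:

$$\theta^{(t+1)} = \sum_{i} w_i^{(t)}\, \theta_i^{(t)}.$$

This ensures that heavily drifting machines receive smaller weights (or are dropped altogether in extreme cases) to protect the global model from corruption [30,31].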

For simplicity, assume each local loss $\mathcal{L}_i$ is $\mu$-strongly convex and $L$-smooth (i.e., its gradient is $L$-Lipschitz). Then, we can derive the following theorem on convergence.

Theorem 3.

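(Convergence under Drift-Aware FL). If $D_i^{(t)}$ is bounded and $f\big(D_i^{(t)}\big) \ge f_{\min} > 0$ for all $i$ and $t$ (e.g., $f(D) = \exp(-\lambda D)$), then with a suitably small learning rate $\eta$, the iterative updates,

$$\theta^{(t+1)} = \theta^{(t)} - \eta \sum_{i} w_i^{(t)}\, \nabla \mathcal{L}_i\big(\theta^{(t)}\big),$$

converge to a neighborhood of the global optimum $\theta^{*}$, where the neighborhood size depends sub-linearly on the level of drift $D_i^{(t)}$.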

Proof.

- i.

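- Let $F^{(t)}(\theta) = \sum_{i} w_i^{(t)}\, \mathcal{L}_i(\theta)$. Since $F^{(t)}$ is a convex combination of $L$-smooth functions, it is itself $L$-smooth. Then, for any round $t$,

  $$F^{(t)}\big(\theta^{(t+1)}\big) \le F^{(t)}\big(\theta^{(t)}\big) - \eta \Big(1 - \frac{L\eta}{2}\Big) \big\lVert \nabla F^{(t)}\big(\theta^{(t)}\big) \big\rVert^2.$$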

- ii.

- Using the $\mu$-strong convexity and Lipschitz assumptions, we derive a Polyak–Łojasiewicz (PL)-type inequality [32], ensuring that the loss decreases monotonically with the number of rounds.

- iii.

- If $D_i^{(t)}$ becomes too large for a machine $i$, the drop strategy can be employed to exclude that machine from aggregation in the current round. Because only a small fraction of machines is typically dropped, the overall convergence is not compromised.

- iv.

- Combining these results, under a sufficiently small $\eta$ and a strictly positive lower bound $f_{\min}$, $\{\theta^{(t)}\}$ converges to a neighborhood of $\theta^{*}$. □
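The effect described by Theorem 3 can be checked numerically on a toy problem. The sketch below assumes scalar parameters, quadratic local losses $\tfrac{1}{2}(\theta - c_i)^2$ (which are 1-strongly convex and 1-smooth), equal data sizes, and an exponential penalty with $\lambda = 1$; the centers and drift scores are invented so that the third client plays the drifting site.

```python
import math


def run(centers, weights, eta=0.1, rounds=200, theta0=0.0):
    """Gradient descent on the weighted sum of losses 0.5 * (theta - c_i)^2."""
    theta = theta0
    for _ in range(rounds):
        grad = sum(w * (theta - c) for w, c in zip(weights, centers))
        theta -= eta * grad
    return theta


centers = [1.0, 1.2, 10.0]       # local optima; the third client has drifted
drifts = [0.1, 0.1, 5.0]         # hypothetical drift scores D_i

uniform = [1.0 / len(centers)] * len(centers)
raw = [math.exp(-d) for d in drifts]
aware = [r / sum(raw) for r in raw]

theta_uniform = run(centers, uniform)
theta_aware = run(centers, aware)
healthy_optimum = (centers[0] + centers[1]) / 2

print("uniform weights  ->", round(theta_uniform, 4))
print("drift-aware      ->", round(theta_aware, 4))
print("healthy optimum  ->", healthy_optimum)
```

Both runs converge, but the uniformly weighted iterate settles at the plain average of all local optima, while the drift-aware iterate stays in a small neighborhood of the optimum shared by the non-drifting clients.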

4.4. Discussion

From Theorems 1–3, we conclude the following:

- (1)

- As regards short-term drift (Phase 2), the Bayesian threshold reliably detects acute changes in distribution with high confidence given sufficiently distinct data.

- (2)

- As regards long-term drift (Phase 3), through distribution measures (e.g., KL divergence or Wasserstein distance), the approach almost surely detects gradual or permanent deviations over extended windows once enough samples are accumulated.

- (3)

- As regards federated learning (Phase 6), by weighting client updates based on a drift score and allowing for micro-rounds or drop strategies, the global model can still converge, preserving privacy constraints across sites while mitigating the adverse effects of severe local drifts.

These theoretical results show how the M2D2 framework can effectively implement an integrated, closed-loop workflow for use in real-time detection, interpretability, active labeling, federated aggregation, and maintenance decisions in complex industrial environments.

5. Illustrative Example

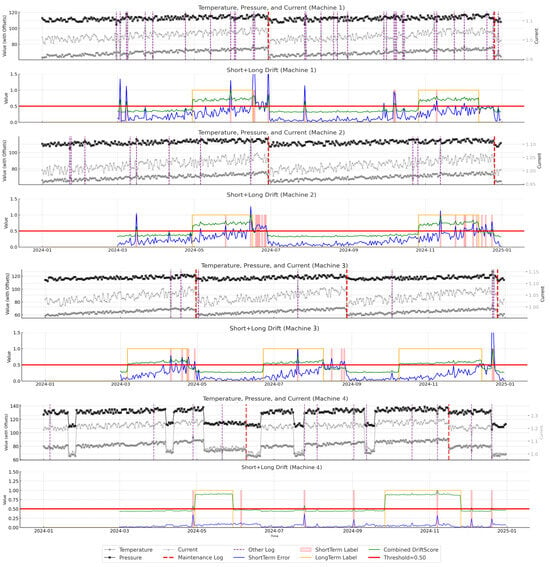

The WBG Epi production line at Infineon Technologies exemplifies a complex semiconductor fabrication environment where multiple machines operate under diverse load conditions and maintenance protocols. Table 4 summarizes key information about the four machines, including their recipes, products, and nominal cycle times. Machine 1 frequently faces minor yet disruptive instabilities that can prompt sudden production pauses. Machine 2 appears broadly stable in day-to-day operations but can drift away from nominal process conditions if regular calibrations are postponed. Machine 3, which handles higher-capacity and more intensive processing, repeatedly reports maintenance activities (e.g., “maintenance cycle”, “part replaced”) reflective of its heavy workload. Machine 4 processes multiple recipes (A and C) and can switch between different products (X and K). This mixed-recipe case tests whether M2D2 can still detect anomalies and drifts when operating modes change, adding another layer of complexity to the production line. Although these varied machine profiles highlight WBG Epi’s versatility, they also complicate data-driven monitoring and PdM. Efforts to achieve consistent yields, rapidly flag anomalies, and optimize machine usage require a consolidated framework capable of handling both acute deviations and slower cumulative shifts. Here, we employ the M2D2 approach (covering multimodal data preprocessing, baseline modeling, real-time anomaly detection, interpretability, active learning, and drift-aware federated learning) to address these challenges in a unified manner. All raw data have been de-identified and range-transformed to uphold Infineon’s confidentiality requirements without sacrificing the statistical fidelity necessary for advanced modeling. The ensuing sections, along with Figures 2–5, reveal the efficacy of M2D2 in this real-world WBG Epi environment.

Table 4.

Machine information.

Figure 2.

The results of M2D2.

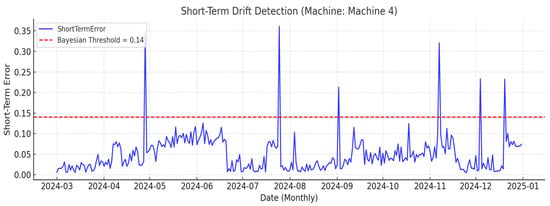

Figure 3.

Short-term drift detection on Machine 4.

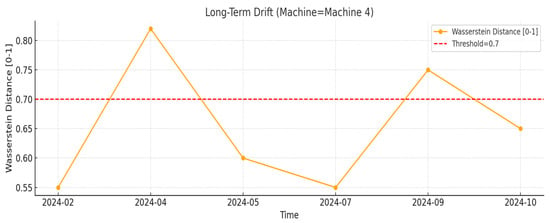

Figure 4.

Long-term drift detection on Machine 4.

Figure 5.

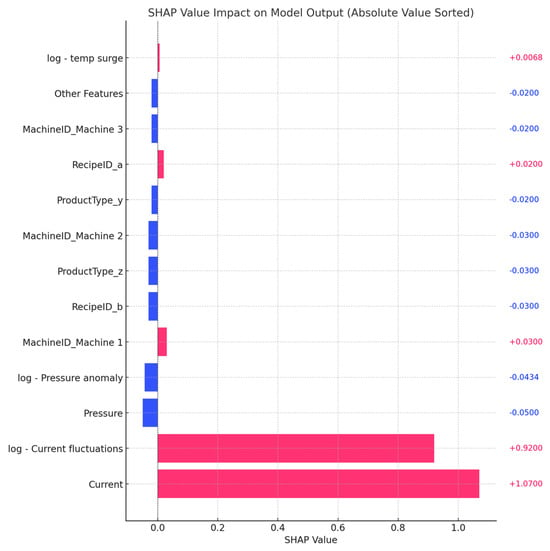

SHAP-based root cause analysis for Machine 1 on 17 June 2024.

5.1. Phase 0: Data Preprocessing

In the preprocessing phase, sensor data (temperature, pressure, current) were integrated with maintenance logs (e.g., “maintenance cycle”, “part replaced”) and categorical attributes (e.g., Recipe A, B; Product Type X, Y, Z) to form a unified multi-modal feature vector at each time point. Missing sensor values and timestamp misalignments were corrected via linear interpolation and autoencoder-based inpainting, followed by Z-score normalization to harmonize feature scales. After this procedure, every time step became a coherent snapshot of numeric readings, text-based log embeddings, and relevant recipe or product labels. In the top section of Figure 2, Machines 1, 2, and 3 display well-synchronized pressure, temperature, and current signals. Machine 3, due to its heavier load and more frequent maintenance schedules, contributed a richer set of textual log entries, which consistently appeared as significant factors in subsequent modeling phases. Machine 4’s mixed-recipe data were similarly integrated, revealing periodic parameter shifts whenever it switched between Recipe A and Recipe C. These shifts further tested M2D2’s preprocessing robustness, especially in terms of handling abrupt transitions between product types X and K.
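The numeric part of this preprocessing step can be sketched as follows. The example covers only linear interpolation of missing readings and Z-score normalization for a single sensor channel; the autoencoder-based inpainting and the log and categorical encodings are not shown, and the temperature values are invented for illustration.

```python
import math


def interpolate_missing(values):
    """Fill None gaps by linear interpolation between the nearest known neighbors.

    Leading or trailing gaps copy the nearest known value.
    """
    known = [i for i, v in enumerate(values) if v is not None]
    if not known:
        raise ValueError("series has no observed values")
    out = list(values)
    for i, v in enumerate(values):
        if v is not None:
            continue
        left = max((k for k in known if k < i), default=None)
        right = min((k for k in known if k > i), default=None)
        if left is None:
            out[i] = values[right]
        elif right is None:
            out[i] = values[left]
        else:
            frac = (i - left) / (right - left)
            out[i] = values[left] + frac * (values[right] - values[left])
    return out


def zscore(values):
    """Standardize to zero mean and unit (population) standard deviation."""
    mean = sum(values) / len(values)
    std = math.sqrt(sum((v - mean) ** 2 for v in values) / len(values))
    if std == 0:
        return [0.0 for _ in values]
    return [(v - mean) / std for v in values]


# Hypothetical temperature channel with three missing readings.
temperature = [500.0, None, 504.0, 506.0, None, None, 512.0]
filled = interpolate_missing(temperature)
normalized = zscore(filled)

print(filled)
print([round(z, 3) for z in normalized])
```

In a multi-sensor setting the same two functions would be applied per channel, with the normalization statistics taken from the baseline period so that later drifts are not normalized away.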

5.2. Phase 1: Baseline Model

Following the comprehensive cleansing of the multi-modal dataset, an offline training corpus predominantly composed of normal or near-normal records was assembled and augmented with 60 previously collected samples to establish the initial baseline model. This baseline was learned using masked reconstruction, requiring the model to infer partially occluded sensor fields and textual tokens, and contrastive learning, which compares similar (identical recipe and product) versus dissimilar samples to sharpen the latent space’s discrimination power. The completed model offers a means to quantify newly arrived data against expected operational norms, typically through a reconstruction error or latent distance metric. This reference distribution underpins both short-term anomaly detection and the identification of longer-term drifts. With Machine 4 included in the baseline training, the model accounts for potential operational mode changes due to recipe switching, ensuring that multi-recipe scenarios are well-represented in the learned latent space.

5.3. Phase 2: Short-Term Anomaly Detection

Once deployed in real time, the system scrutinizes each arriving multi-modal instance for abrupt deviations. A surge in reconstruction error prompts a Bayesian threshold mechanism to decide whether a short-term label is warranted (as shown in Figure 3), thereby adapting the decision boundary to fluctuating production contexts. The bottom portion of Figure 2 features purple dashed lines that denote short-term alerts. Machine 1 exemplifies this phenomenon, generating multiple warnings in the spring of 2024 tied to intermittent current spikes. Machine 3, owing to its high-throughput recipes, occasionally underwent sudden current surges, aptly captured by concurrent log references such as “current fluctuations.” For Machine 4, spikes often occurred during transitions between Recipe A and Recipe C, creating short-term anomalies whenever process parameters briefly deviated from baseline norms. These minute-scale alerts empower operators to intervene preemptively, preventing minor anomalies from amplifying into more serious downtime.

5.4. Phase 3: Long-Term Drift Detection

While immediate alerts address abrupt perturbations, the system also assesses whether machines undergo incremental shifts over extended periods. To accomplish this, data from several days or weeks are aggregated and then compared to the baseline distribution using KL divergence or Wasserstein distance. As shown in Figure 4, the system checks for sustained drift every 30 days; if the measured gap exceeds the designated threshold, the long-term label is set to 1 and the model designates a long-term drift. Figure 2 uses intervals shaded orange (long-term label) and red (short-term label) to indicate drift events, such as those observed in Machine 2 from late spring to early summer 2024. At times, maintenance logs noted “maintenance schedule postponed”, implying that slight, cumulative deviations can escalate without proper calibration or part replacements. By distinguishing chronic drift from short-lived anomalies, the system facilitates well-timed preventative actions.
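The 30-day check can be sketched with a one-dimensional Wasserstein distance. The example assumes one standardized reading per day, non-overlapping 30-day windows, a baseline sample of the same size, and a threshold of 0.5; the synthetic series (a stable stretch followed by a slow upward ramp) and the seed are invented for illustration.

```python
import random


def wasserstein_1d(a, b):
    """Wasserstein-1 distance between two equal-size 1-D samples."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-size samples")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def long_term_labels(series, baseline, window=30, threshold=0.5):
    """Return one 0/1 long-term label per non-overlapping window."""
    labels = []
    for start in range(0, len(series) - window + 1, window):
        chunk = series[start:start + window]
        distance = wasserstein_1d(chunk, baseline)
        labels.append(1 if distance > threshold else 0)
    return labels


rng = random.Random(1)
baseline = [rng.gauss(0.0, 0.2) for _ in range(30)]
stable = [rng.gauss(0.0, 0.2) for _ in range(60)]                # 60 nominal days
drifting = [rng.gauss(0.05 * day, 0.2) for day in range(60)]     # slow upward ramp
series = stable + drifting

labels = long_term_labels(series, baseline)
print(labels)
```

Because each window is compared with the baseline as a whole distribution, a ramp that never produces a single large spike can still set the long-term label once its cumulative shift exceeds the threshold.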

5.5. Phase 4: Multi-Modal Interpretability and Root-Cause Analysis

Anomaly detection alone offers limited practical benefit unless operators can pinpoint the primary causes. Phase 4 implements interpretability techniques—particularly SHAP—to map detected anomalies back to the most impactful features, encompassing both numeric sensor values and textual maintenance logs. In one instance, during the short-term alert on 17 June 2024, SHAP revealed that a sharp rise in “current” alongside repeated mentions of “current fluctuations” in the logs were the main contributors, as shown in Figure 5. This consistent alignment between numeric sensor data and textual entries steered engineers to inspect the electrical supply infrastructure and measurement modules, reducing speculation and the time to resolution. Moreover, if “current fluctuations” repeatedly emerges as a top driver in future alerts, process parameters tied to current regulation can be reevaluated to forestall additional incidents.

5.6. Phase 5: Active Learning and Maintenance Recovery

Any industrial detection system must evolve with real-world feedback. Here, M2D2 leverages active learning to continually refine its thresholds and classification boundaries. If an operator deems a flagged sample to be a false alarm, the system updates its internal parameters to lessen the rate of future false positives under similar conditions. Conversely, when a newly encountered fault is corroborated, the relevant samples join a “labeled anomaly” set, thereby training the model to become more sensitive to this novel deviation. Crucially, M2D2 also accommodates maintenance recovery. Whenever operators complete part swaps or recalibrations, they log messages such as “maintenance done” or “part replaced”, prompting the system to realign its baseline or threshold distributions. For instance, if Machine 3 consistently shows high reconstruction errors due to a faulty vacuum pump, logging “part replaced” allows M2D2 to treat the machine as returned to normal operation, as shown in Figure 2, thus preventing residual pre-maintenance anomaly data from skewing post-maintenance detection. This loop ensures that once physical issues are rectified, false positives do not linger purely because of the historical fault state. For Machine 4, active learning likewise helps the model adapt to recurring recipe changes. Once any maintenance or recalibration is completed post-switch, M2D2 resets its expectations, preventing repeated false alarms around known transition points.

5.7. Phase 6: Drift-Aware Federated Learning

In scenarios involving multiple machines or multiple plants, FL becomes crucial for safeguarding each machine’s sensitive data while enabling shared detection knowledge. However, if the distributions or drift severity among machines differ substantially, a standard federated averaging approach (FedAvg) may allow a single outlier machine to “drag down” the global model. To address this issue, this study introduces drift-aware federated learning (drift-aware FL) in Phase 6, adjusting aggregation weights dynamically in each round based on the short- and long-term drift scores obtained in Phases 2 and 3. For instance, if Machine 3 experiences a surge in reconstruction error and distributional divergence from late June to early July 2024—perhaps due to component aging or overload—the system may reduce its parameter weights or invoke additional micro-round local updates, thus preventing undue disruption to the global model. After Machine 3 completes maintenance and logs “maintenance done”, and its reconstruction error returns to a stable range, its parameters are reintegrated into the global aggregation. As illustrated in the lower portion of Figure 2, this approach ensures that the drift of Machine 3 exerts minimal impact on the detection quality of Machines 1 and 2, ultimately preserving both the stability and strong performance of the global model across multiple machines. Machine 4, with its dual-recipe operations, can sometimes display more frequent distribution shifts. By incorporating drift-aware weighting, M2D2 prevents these recipe-induced changes from disproportionately influencing the federated model, thus preserving its performance for other machines.

5.8. Overall Conclusions and Persuasiveness

Figure 2 and Figure 5 jointly demonstrate M2D2’s proficiency at distinguishing abrupt faults from incremental degradations within Infineon’s real WBG Epi production environment. Short-term alerts capture acute anomalies in near real time, while long-term drift indicators warn of potential process misalignments before they compromise yield. SHAP-based interpretability pinpoints the numeric and textual features (such as “current”, “pressure anomaly”, or “current fluctuations”) that precipitate each alert, enabling swift, evidence-driven maintenance actions. Active learning systematically refines the model in response to new fault patterns and false positives, and the maintenance recovery mechanism ensures that machines, once physically repaired, swiftly revert to normal detection baselines. Finally, drift-aware federated learning extends these benefits across multiple machines, preventing a single drifting device from undermining the collective model.

Taken together, these results affirm that M2D2 effectively integrates multi-modal sensing, advanced anomaly detection, human-in-the-loop feedback, and privacy-sensitive collaboration to sustain high-level monitoring and PdM in WBG Epi fabrication. Ongoing work focuses on broader sensor modalities, prolonged longitudinal observations, and in-depth economic impact evaluations (e.g., reduced downtime or improved resource efficiency), with the goal of reinforcing M2D2’s role as a next-generation enabler of intelligent semiconductor manufacturing.

6. Conclusions

In this study, we proposed the M2D2 framework, which incorporates multi-sensor data, multi-machine coordination, and dual-scale anomaly detection (short-term and long-term), thereby addressing the diverse and dynamic challenges prevalent in advanced manufacturing. By integrating deep self-supervision, contrastive learning, explainability mechanisms, active feedback loops, and drift-aware federated learning, M2D2 provides an end-to-end solution for detecting and mitigating equipment parameter drifts in high-stakes industrial settings such as semiconductor fabrication. The salient contributions of M2D2 can be encapsulated as follows:

- (1)

- Robustness and Sensitivity: By leveraging a self-supervised approach complemented with contrastive learning, M2D2 achieves high detection sensitivity, even in scenarios with extremely limited abnormal samples. Its dual-scale detection architecture effectively identifies both sudden anomalies and gradual drifts, ensuring swift corrective actions and sustained operational reliability.

- (2)

- Multi-Modal Integration and Explainability: Through multi-layer visualization and interpretability techniques (e.g., SHAP, LIME, and transformer attention), M2D2 elucidates the relative contributions of various sensors and textual maintenance logs in generating anomaly scores. This transparency not only streamlines root-cause analysis but also significantly reduces false alarms and missed detections, thereby enhancing user trust and operational efficiency.

- (3)

- Federated Learning and Privacy Preservation: The drift-aware federated learning module allows geographically dispersed machines or multiple production lines to collaboratively update a global model without exposing raw, sensitive data. By adaptively weighting individual machines according to their data shifts, M2D2 retains a high level of overall model consistency and accuracy, even as local environments undergo significant changes.

- (4)

- Local Adaptation and Scalability: M2D2 demonstrates seamless adaptability to diverse, heterogeneous manufacturing devices and processes. Through localized fine-tuning and distributed coordination, the framework retains previously learned knowledge while simultaneously accommodating new hardware introductions or process modifications, thereby underscoring its suitability for large-scale production environments.

The inclusion of mixed-recipe experiments in this study is a notable highlight, showcasing the framework’s applicability in more complex scenarios. By testing Machine 4 with multiple recipes (A and C) and products (X and K), M2D2 effectively demonstrated its capability to handle frequent operational mode changes and abrupt transitions. This extension reinforces the robustness of the framework in managing real-world industrial challenges, further validating its practicality and versatility.

Taken together, these capabilities exemplify M2D2’s comprehensive “data preprocessing → short-term detection → long-term observation → interpretability analysis → active learning and federated coordination” cycle, which positions it as a robust and versatile solution for complex equipment ecosystems. Empirical evaluations and theoretical insights confirm that M2D2’s consistent performance, explainability, and responsiveness not only fulfill the urgent requirement for agile anomaly detection and maintenance but also contribute to the overall safety and high yield of multi-machine industrial production. This achievement marks a significant breakthrough in smart manufacturing, setting a new benchmark for data-driven monitoring and real-time decision support in critical production environments.

7. Future Work

Although M2D2 lays a solid foundation for robust multi-machine, multi-modal drift detection, several critical research directions remain underexplored within the current framework. One significant avenue concerns deeper interpretability and visualization, where the employment of advanced graph-based techniques—such as graph neural networks (GNNs) or knowledge graphs—could reveal latent relationships among sensor signals, textual logs, and high-dimensional data. While M2D2 effectively integrates diverse sensing modalities for anomaly detection, it has yet to thoroughly investigate how these more sophisticated tools could illuminate root causes or interactive effects in a more detailed and intuitive manner.

Another underexamined aspect relates to the adaptability of federated learning within heterogeneous industrial settings. M2D2 demonstrates the feasibility of drift-aware federated learning, but it does not fully delve into dynamic strategies for handling highly variable data distributions across different machines or production lines. Devising new methods—such as hierarchical grouping based on shared process characteristics or variable polling frequencies informed by anomaly rates—could offer more granular control over federated model updates, thereby improving both local customization and global consistency.

Moreover, M2D2 has not yet addressed the integration of DT technology for real-time simulation and parameter tuning. While the framework can detect parameter drifts with high sensitivity, further research is needed to systematically evaluate how a DT environment might model the operational impact of these drifts. By iterating in a virtual space, M2D2 could refine or validate parameter adjustments prior to implementing them in the physical production line, thus reducing risk and downtime.

Additionally, although M2D2 benefits from active learning, it has not fully explored more advanced schemas that incorporate semi-supervised and self-supervised techniques. A more rigorous approach to identifying and prioritizing valuable unlabeled instances for labeling or verification could substantially accelerate the discovery of novel drift patterns. Finally, the potential for PdM has been recognized but not thoroughly integrated into the M2D2 pipeline. By combining real-time anomaly alerts with historical maintenance records and sensor-based health assessments, future extensions could deliver more proactive strategies, estimating Remaining Useful Life (RUL) and scheduling interventions before failures occur. These unexplored avenues collectively point to a rich landscape for future investigation that could further enhance M2D2’s applicability, robustness, and overall impact on smart manufacturing.

Author Contributions

C.-Y.L. was responsible for conceptualization, methodology, software development, validation, formal analysis, investigation, resources, data curation, writing—original draft preparation, writing—review and editing, and visualization; T.-L.T. oversaw supervision and funding acquisition; T.-H.T. handled project administration and validation. All authors have read and agreed to the published version of the manuscript.

Funding

This work was funded in part by the U.S. National Science Foundation under grants ERC-ASPIRE-1941524 and DUE-2216396, and by the U.S. Department of Education under awards #P116S210004 and #P120A220044.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to the confidentiality requirements of the semiconductor companies.

Acknowledgments

The authors sincerely thank Infineon Technologies Austria AG, Villach, for their support of the Wide Bandgap Semiconductor (WBG) Project, including access to real-world data and technical expertise. Special appreciation is extended to colleagues at Infineon Technologies Malaysia, Melaka, for their invaluable assistance and collaboration, which were instrumental in validating the proposed framework and showcasing its practical relevance to the challenges of advanced semiconductor manufacturing.

Conflicts of Interest

The authors declare no conflicts of interest.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).