License Plate Recognition Under the Dual Challenges of Sand and Light: Dataset Construction and Model Optimization

Abstract

1. Introduction

2. Materials and Methods

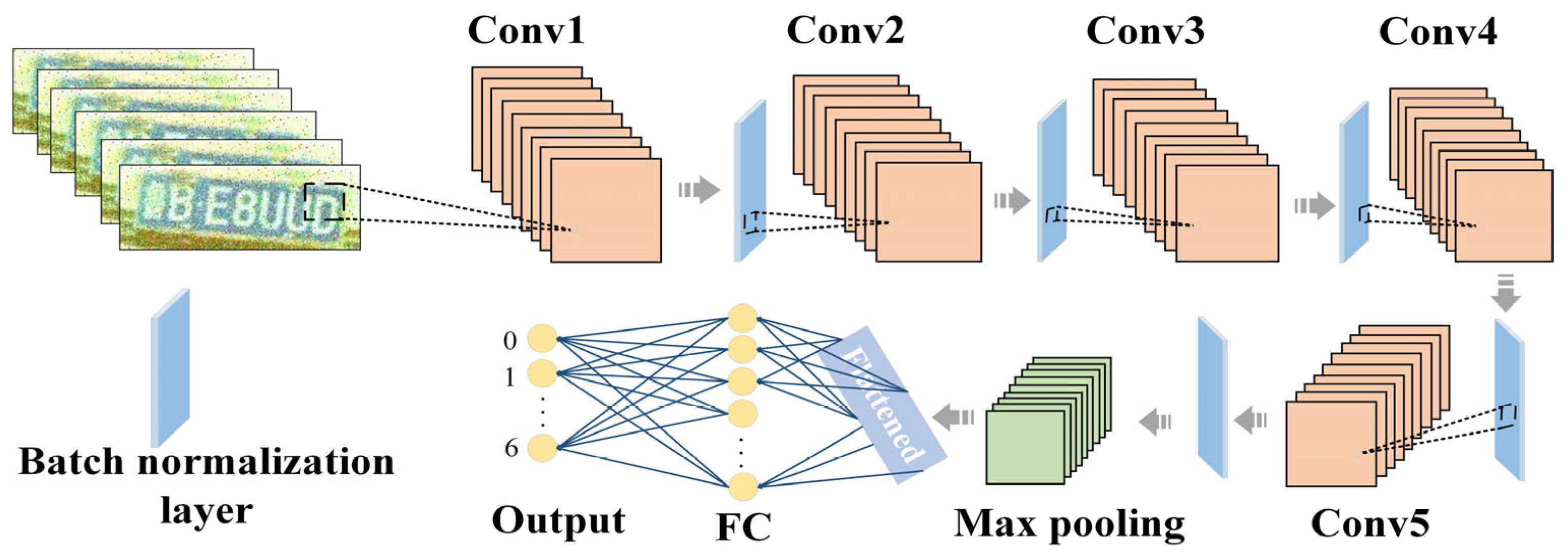

2.1. Introduction to the Model Structure

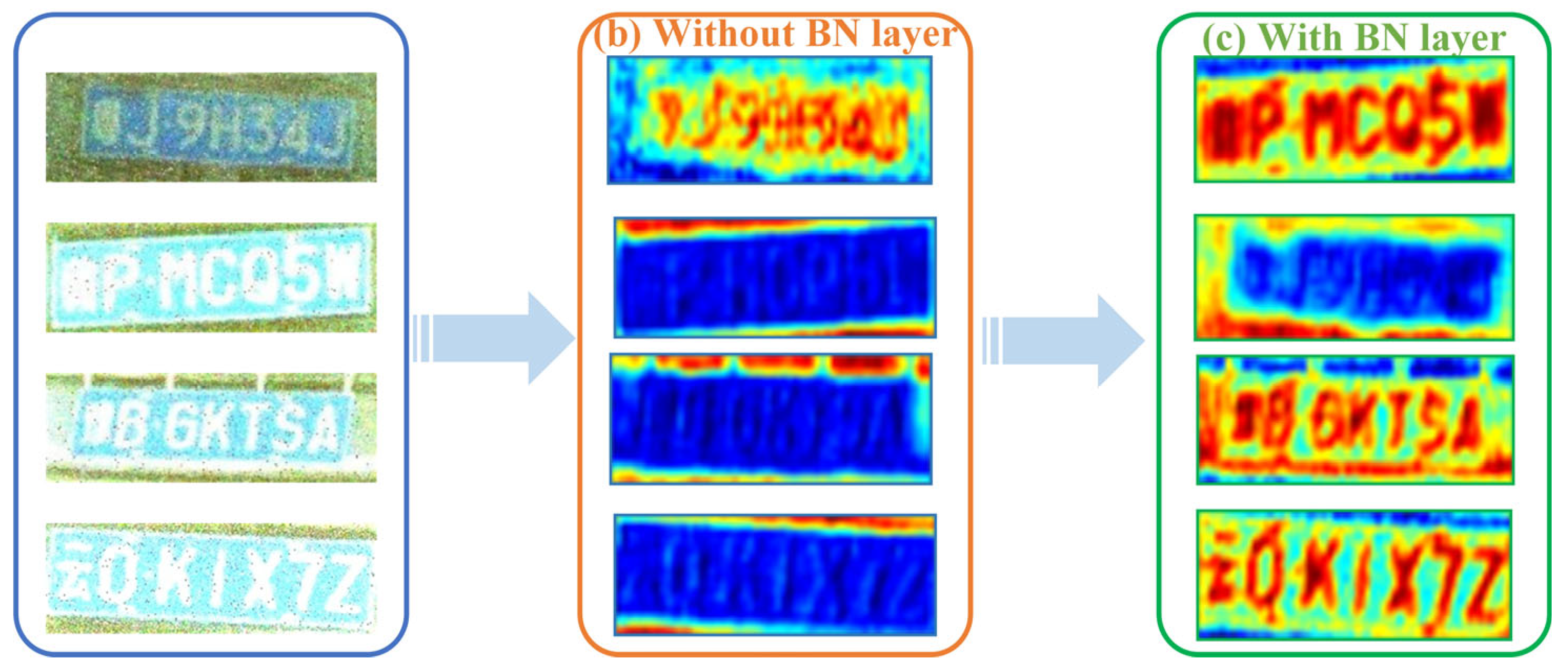

2.2. Analysis of Optimization Principles

3. Results

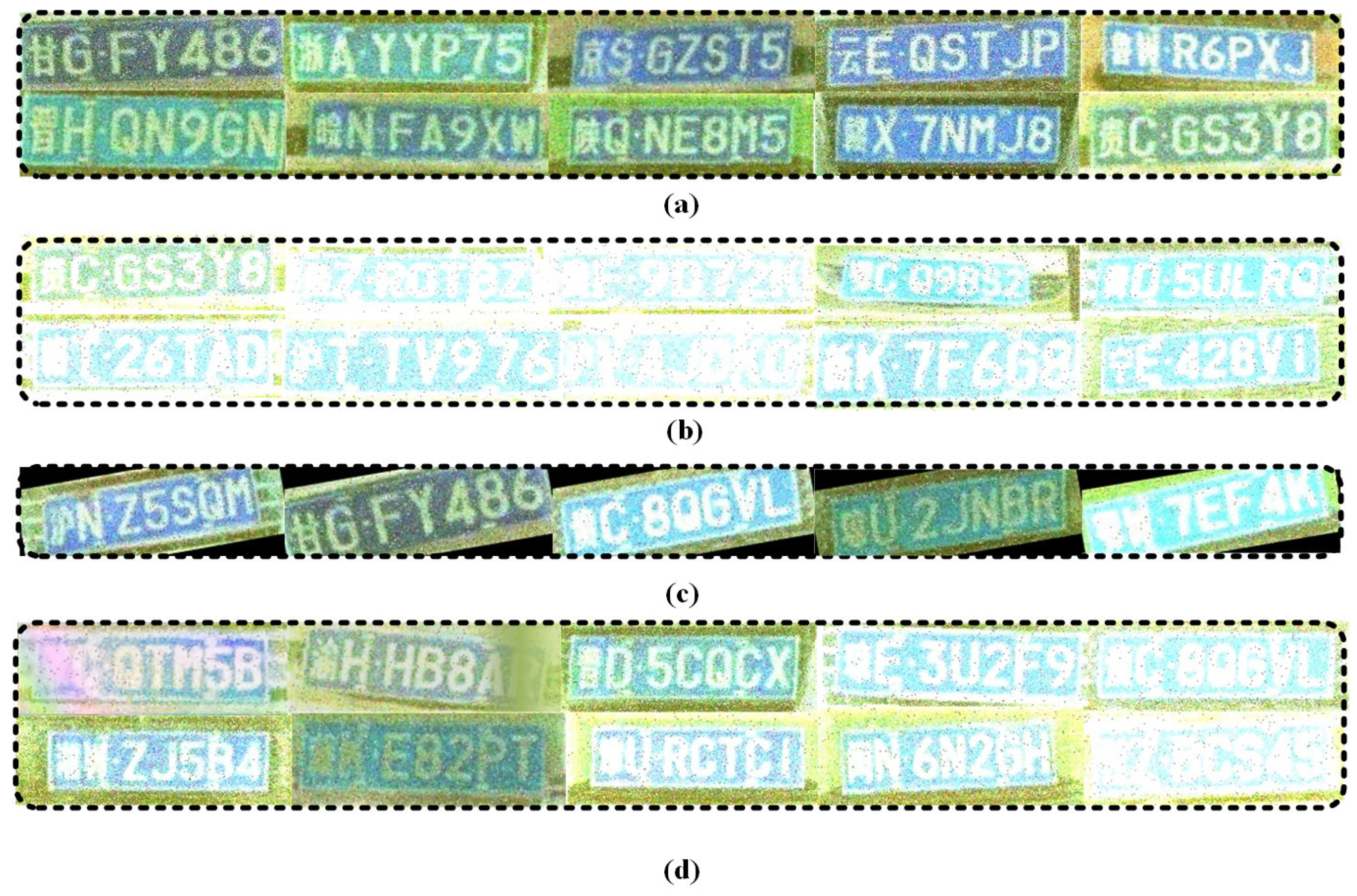

3.1. Construction of the Dataset

- Noise addition: Introducing additional ‘granular’ interference into the image simulates the image-quality problems that can occur in real environments. This trains the model to remain robust under multiple types of noise, so that it maintains stable recognition performance in complex real-world scenes and gains ‘real-world experience’ of uncertain environments.

- Light intensity variations: Simulating different lighting conditions, such as strong sunlight and deep shadow, teaches the model to recognize license plates accurately across diverse lighting environments, which reduces recognition errors caused by lighting changes.

- Rotation and tilting: Rotating and tilting the image simulates how a license plate appears from different viewing angles and perspectives. This helps the model adapt to changes in plate position and orientation, improving its recognition accuracy in real application scenarios and in complex scenes.

- Masking: Adding heavy noise or raising the local brightness in part of the image masks some or all of the plate's characters, simulating plates that are covered by sand and dust or washed out by direct sunlight. This further improves the robustness and adaptability of the model.
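The four augmentation types above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' pipeline: it assumes 8-bit RGB plate crops of 80 × 240 pixels (the CSCL resolution), and every function name, parameter range, and probability is a hypothetical choice. Rotation and tilting are approximated by one affine warp (a horizontal shear followed by a rotation) with nearest-neighbour inverse mapping; a production pipeline would more likely use a library warp with interpolation.

```python
import numpy as np


def add_granular_noise(img, density=0.05, rng=None):
    """Noise addition: overwrite a random fraction `density` of pixels with
    bright, sand-like grains (hypothetical intensity range 150-230)."""
    rng = np.random.default_rng() if rng is None else rng
    out = img.astype(np.float32)
    grains = rng.random(img.shape[:2]) < density
    out[grains] = rng.uniform(150, 230, size=(int(grains.sum()),) + img.shape[2:])
    return np.clip(out, 0, 255).astype(np.uint8)


def adjust_brightness(img, gain=1.0, bias=0.0):
    """Light intensity variation: global linear change. gain > 1 mimics strong
    sunlight, gain < 1 mimics deep shadow."""
    out = img.astype(np.float32) * gain + bias
    return np.clip(out, 0, 255).astype(np.uint8)


def rotate_and_tilt(img, angle_deg=0.0, shear=0.0, fill=0):
    """Rotation and tilting: horizontal shear, then rotation by `angle_deg` about
    the image centre, via inverse mapping with nearest-neighbour sampling.
    Output pixels whose source falls outside the image are set to `fill`."""
    h, w = img.shape[:2]
    t = np.deg2rad(angle_deg)
    rot = np.array([[np.cos(t), -np.sin(t)], [np.sin(t), np.cos(t)]])
    shr = np.array([[1.0, shear], [0.0, 1.0]])
    inv = np.linalg.inv(rot @ shr)                       # output coords -> source coords
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    ys, xs = np.mgrid[0:h, 0:w]
    dst = np.stack([xs.ravel() - cx, ys.ravel() - cy])   # rows: centred x, centred y
    src = inv @ dst
    sx = np.rint(src[0] + cx).astype(int)
    sy = np.rint(src[1] + cy).astype(int)
    inside = (sx >= 0) & (sx < w) & (sy >= 0) & (sy < h)
    out = np.full((h * w,) + img.shape[2:], fill, dtype=img.dtype)
    out[inside] = img[sy[inside], sx[inside]]
    return out.reshape(img.shape)


def mask_region(img, x0, y0, x1, y1, mode="glare", rng=None):
    """Masking: occlude the rectangle [y0:y1, x0:x1] (e.g. one character) with
    local over-exposure ("glare") or dense sand-like noise ("sand")."""
    out = img.astype(np.float32)
    region = out[y0:y1, x0:x1]                           # a view, so edits write into `out`
    if mode == "glare":
        region += 120.0
    elif mode == "sand":
        rng = np.random.default_rng() if rng is None else rng
        region[...] = rng.uniform(120, 220, size=region.shape)
    else:
        raise ValueError(f"unknown mode: {mode}")
    return np.clip(out, 0, 255).astype(np.uint8)


def augment(img, rng):
    """Apply all four augmentations with randomly drawn (hypothetical) strengths."""
    img = add_granular_noise(img, density=rng.uniform(0.0, 0.08), rng=rng)
    img = adjust_brightness(img, gain=rng.uniform(0.4, 1.6))
    img = rotate_and_tilt(img, angle_deg=rng.uniform(-10, 10), shear=rng.uniform(-0.2, 0.2))
    if rng.random() < 0.3:                               # occlude a 30-px-wide band in some samples
        x0 = int(rng.integers(0, img.shape[1] - 30))
        mode = "glare" if rng.random() < 0.5 else "sand"
        img = mask_region(img, x0, 0, x0 + 30, img.shape[0], mode=mode, rng=rng)
    return img


rng = np.random.default_rng(0)
plate = np.full((80, 240, 3), 100, dtype=np.uint8)       # placeholder plate crop
augmented = augment(plate, rng)
print(augmented.shape, augmented.dtype)
```

Because the strengths are drawn from `rng` on every call, each training epoch would see a differently degraded version of every plate; seeding the generator, as with `np.random.default_rng(0)`, makes a run reproducible.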

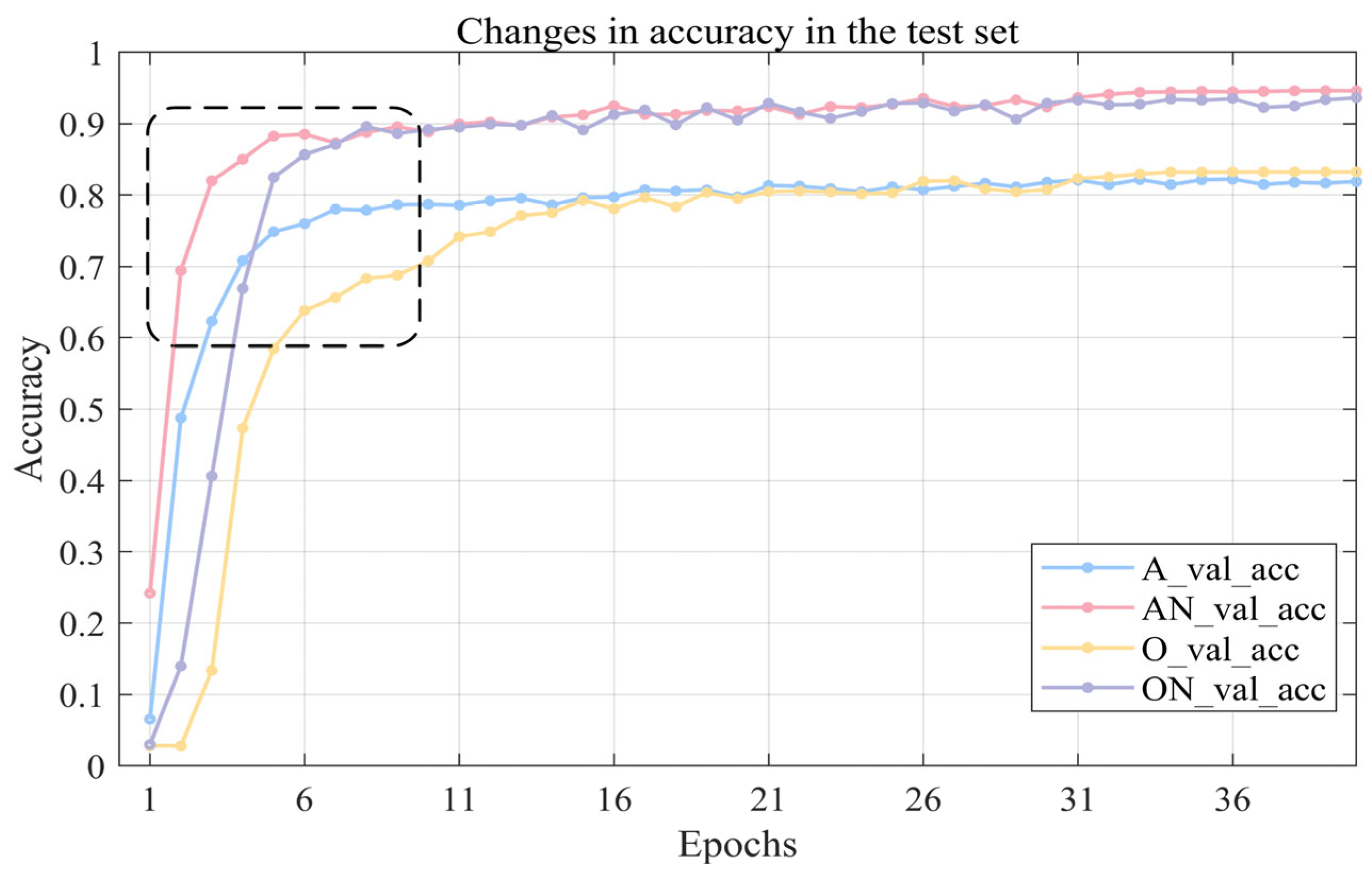

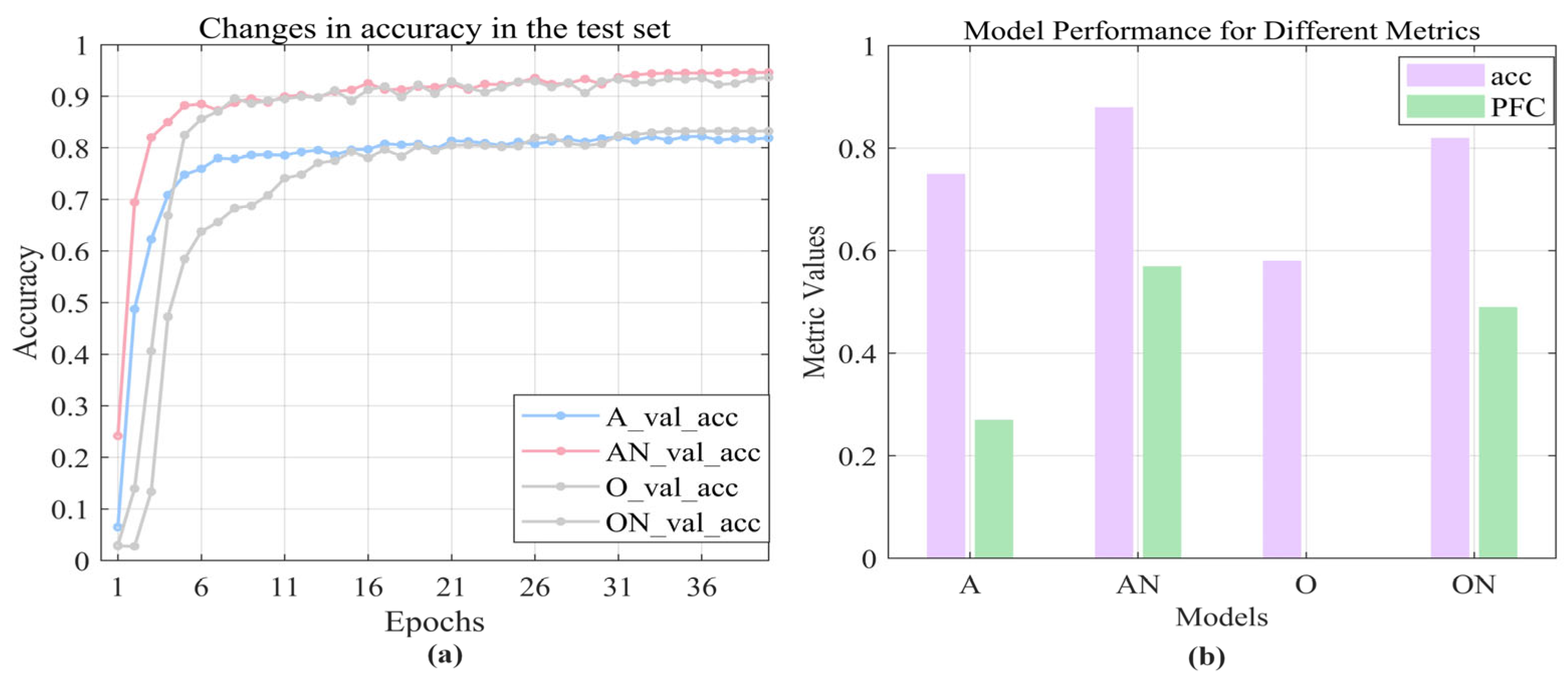

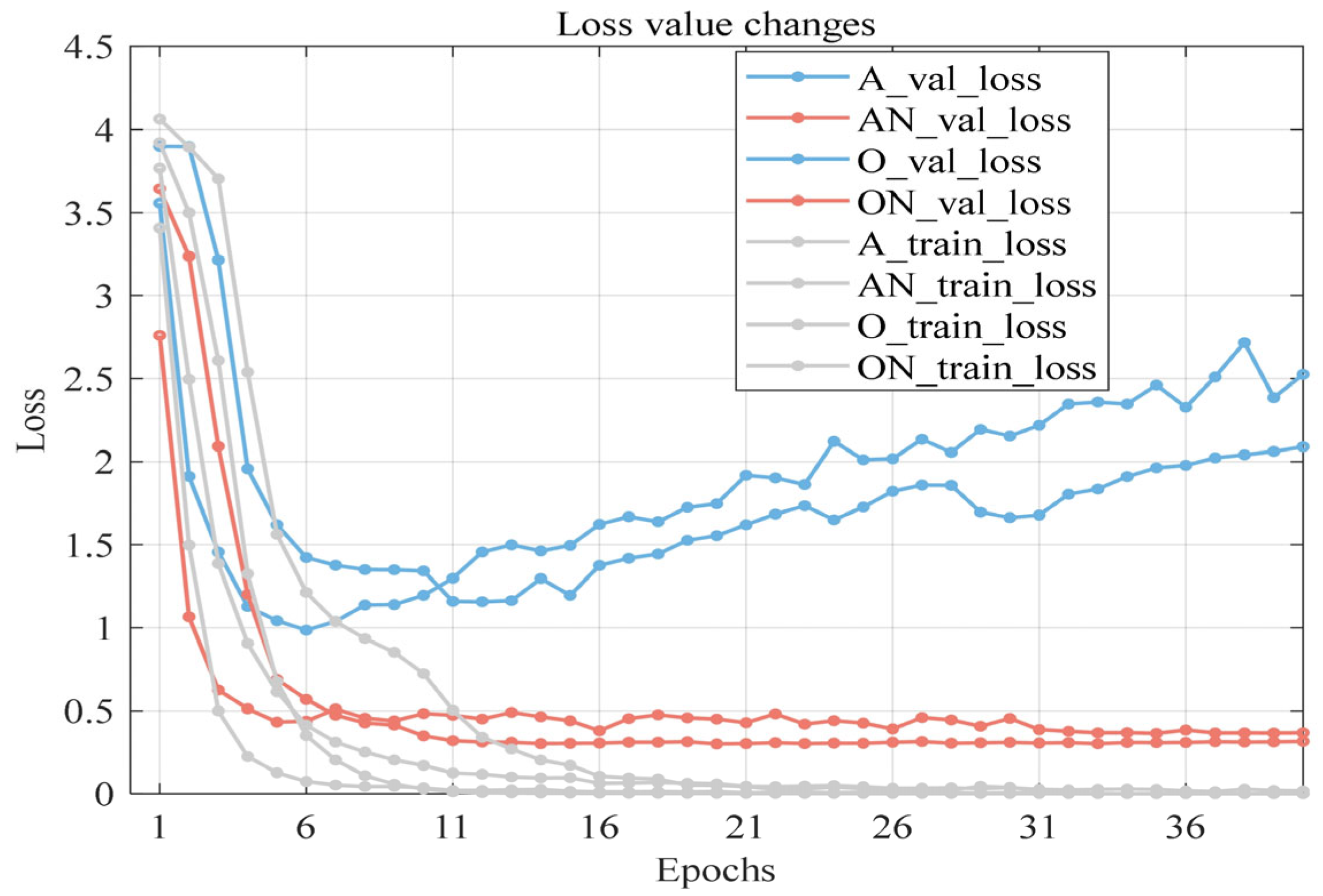

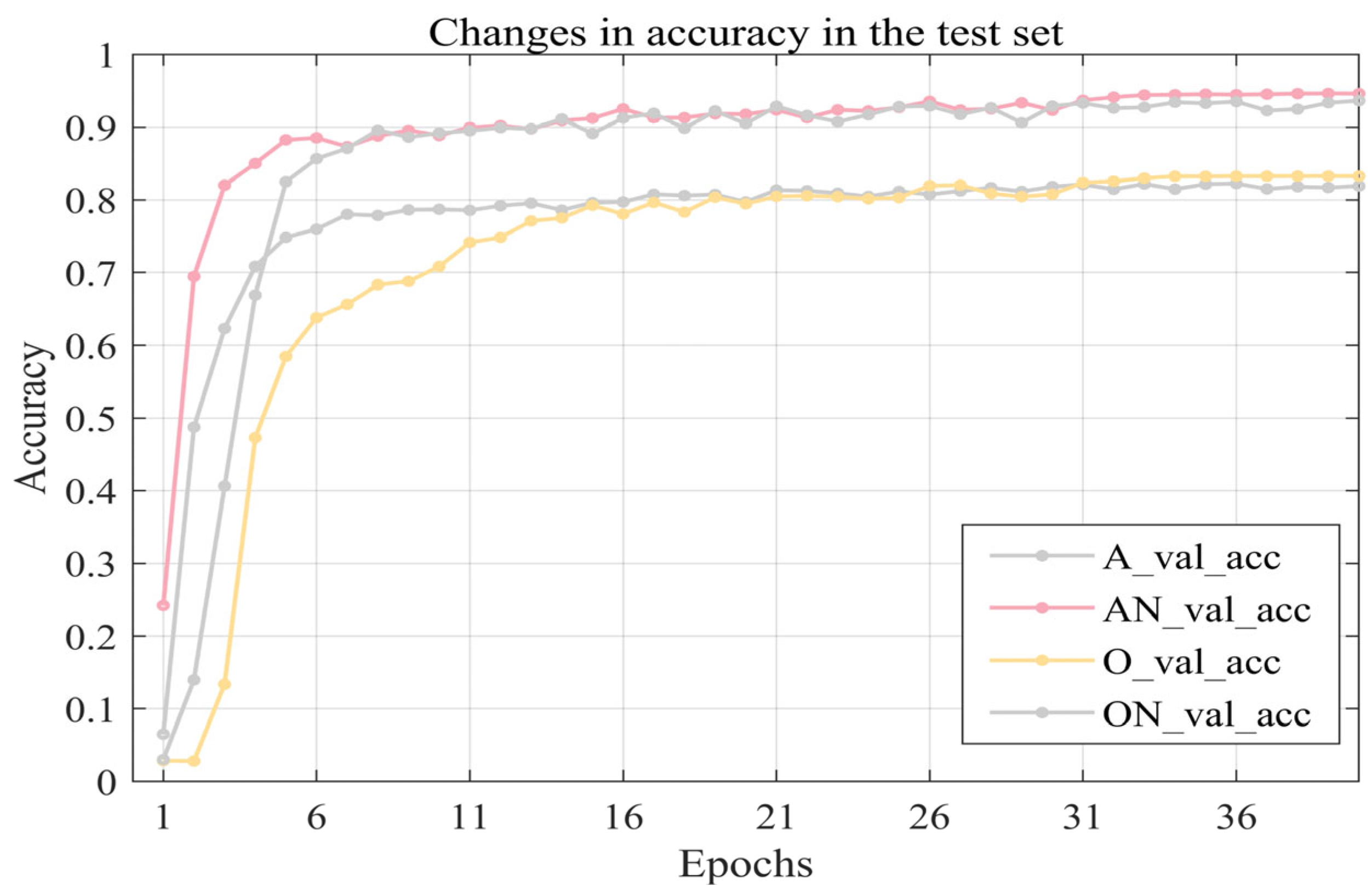

3.2. Experimental Results and Analyses

3.2.1. Comparative Experiments on Datasets

3.2.2. Ablation Experiment

3.2.3. Comparison Experiment Before and After Optimization

4. Discussion

4.1. Conclusions

4.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lu, J.; Chen, Z. Research on multi-sensor fusion target detection algorithm in autonomous driving scenarios. Netw. Secur. Technol. Appl. 2023, 2023, 38–41.

- Fu, G. Research and Application of Dynamic Prediction Model for Urban Intelligent Transportation. Ph.D. Thesis, South China University of Technology, Guangzhou, China, 2014.

- Wang, W.; Fang, Z. A review of sandstorm weather and its research progress. J. Appl. Meteorol. 2004, 15, 366–381.

- Zheng, L.; Yang, Y.; Tian, Q. SIFT meets CNN: A decade survey of instance retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1224–1244.

- Wang, X.; Han, T.X.; Yan, S. An HOG-LBP Human Detector with Partial Occlusion Handling. In Proceedings of the 2009 IEEE 12th International Conference on Computer Vision, Kyoto, Japan, 29 September–2 October 2009; pp. 32–39.

- Zhang, Y.; Wang, H.; Yang, G.; Zhang, J.; Gong, C.; Wang, Y. CSNet: A ConvNeXt-based Siamese network for RGB-D salient object detection. Vis. Comput. 2024, 40, 1805–1823.

- Liu, R.; Meng, G.; Yang, B.; Sun, C.; Chen, X. Dislocated time series convolutional neural architecture: An intelligent fault diagnosis approach for electric machine. IEEE Trans. Ind. Inform. 2016, 13, 1310–1320.

- Hmidani, O.; Alaoui, E.M.I. A comprehensive survey of the R-CNN family for object detection. In Proceedings of the 2022 5th International Conference on Advanced Communication Technologies and Networking (CommNet), Marrakech, Morocco, 12–14 December 2022; pp. 1–6.

- Pang, J.; Chen, K.; Shi, J.; Feng, H.; Ouyang, W.; Lin, D. Libra R-CNN: Towards Balanced Learning for Object Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 821–830.

- Rafique, M.A.; Pedrycz, W.; Jeon, M. Vehicle license plate detection using region-based convolutional neural networks. Soft Comput. 2018, 22, 6429–6440.

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475.

- Wang, G.; Chen, Y.; An, P.; Hong, H.; Hu, J.; Huang, T. UAV-YOLOv8: A small-object-detection model based on improved YOLOv8 for UAV aerial photography scenarios. Sensors 2023, 23, 7190.

- Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. DSSD: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- Rio-Alvarez, A.; de Andres-Suarez, J.; González-Rodríguez, M.; Fernandez-Lanvin, D.; López Pérez, B. Effects of Challenging Weather and Illumination on Learning-Based License Plate Detection in Noncontrolled Environments. Sci. Program. 2019, 2019, 6897345.

- Azam, S.; Islam, M.M. Automatic license plate detection in hazardous condition. J. Vis. Commun. Image Represent. 2016, 36, 172–186.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Zafar, A.; Aamir, M.; Mohd Nawi, N.; Arshad, A.; Riaz, S.; Alruban, A.; Dutta, A.K.; Almotairi, S. A comparison of pooling methods for convolutional neural networks. Appl. Sci. 2022, 12, 8643.

- Basha, S.S.; Dubey, S.R.; Pulabaigari, V.; Mukherjee, S. Impact of fully connected layers on performance of convolutional neural networks for image classification. Neurocomputing 2020, 378, 112–119.

- Liu, W.; Wen, Y.; Yu, Z.; Yang, M. Large-margin softmax loss for convolutional neural networks. arXiv 2016, arXiv:1612.02295.

- Günther, J.; Pilarski, P.M.; Helfrich, G.; Shen, H.; Diepold, K. First steps towards an intelligent laser welding architecture using deep neural networks and reinforcement learning. Procedia Technol. 2014, 15, 474–483.

- Scherer, D.; Müller, A.; Behnke, S. Evaluation of Pooling Operations in Convolutional Architectures for Object Recognition. In International Conference on Artificial Neural Networks; Springer: Berlin/Heidelberg, Germany, 2010; pp. 92–101.

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516.

- Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation functions: Comparison of trends in practice and research for deep learning. arXiv 2018, arXiv:1811.03378.

- Garbin, C.; Zhu, X.; Marques, O. Dropout vs. batch normalization: An empirical study of their impact to deep learning. Multimed. Tools Appl. 2020, 79, 12777–12815.

- Yoshioka, T.; Ito, N.; Delcroix, M.; Ogawa, A.; Kinoshita, K.; Fujimoto, M.; Yu, C.; Fabian, W.J.; Espi, M.; Higuchi, T.; et al. The NTT CHiME-3 System: Advances in Speech Enhancement and Recognition for Mobile Multi-Microphone Devices. In Proceedings of the 2015 IEEE Workshop on Automatic Speech Recognition and Understanding (ASRU), Scottsdale, AZ, USA, 13–17 December 2015; pp. 436–443.

- Si, N.; Zhang, W.; Qu, D.; Luo, X.; Chang, H.; Niu, T. Representation visualization of convolutional neural networks: A survey. Acta Autom. Sin. 2022, 48, 1890–1920.

- Jabeen, F.; Khusro, S.; Anjum, N. Research in Collaborative Tagging Applications: Choosing the Right Dataset. VAWKUM Trans. Comput. Sci. 2023, 11, 1–25.

- Li, H.; Wang, P.; Shen, C. Toward end-to-end car license plate detection and recognition with deep neural networks. IEEE Trans. Intell. Transp. Syst. 2018, 20, 1126–1136.

- Laroca, R.; Severo, E.; Zanlorensi, L.A.; Oliveira, L.S.; Gonçalves, G.R.; Schwartz, W.R.; Menotti, D. A Robust Real-Time Automatic License Plate Recognition Based on the YOLO Detector. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–10.

- Gonçalves, G.R.; da Silva, S.P.G.; Menotti, D.; Schwartz, W.R. Benchmark for license plate character segmentation. J. Electron. Imaging 2016, 25, 053034.

- Hsu, G.S.; Chen, J.C.; Chung, Y.Z. Application-oriented license plate recognition. IEEE Trans. Veh. Technol. 2012, 62, 552–561.

- Xie, L.; Ahmad, T.; Jin, L.; Liu, Y.; Zhang, S. A new CNN-based method for multi-directional car license plate detection. IEEE Trans. Intell. Transp. Syst. 2018, 19, 507–517.

- Liu, X. Research on Face Image Recognition Technology Based on Deep Learning. Ph.D. Thesis, University of Chinese Academy of Sciences (Changchun Institute of Optical Precision Machinery and Physics, Chinese Academy of Sciences), Changchun, China, 2019.

- Yang, G.; Pennington, J.; Rao, V.; Sohl-Dickstein, J.; Schoenholz, S.S. A mean field theory of batch normalization. arXiv 2019, arXiv:1902.08129.

- Yong, H.; Huang, J.; Meng, D.; Hua, X.; Zhang, L. Momentum Batch Normalization for Deep Learning with Small Batch Size. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XII 16. Springer International Publishing: New York, NY, USA, 2020; pp. 224–240.

- Luo, P.; Wang, X.; Shao, W.; Peng, Z. Towards understanding regularization in batch normalization. arXiv 2018, arXiv:1809.00846.

| Dataset | Year | Country/Region | Number of Images | Resolution | Description |
|---|---|---|---|---|---|
| CCPD (2019) [33] | 2019 | China | 250 k | 720 × 1280 | Includes unevenly lit and tilted license plates, etc., but too few such samples |
| UFPR-ALPR [34] | 2018 | Brazil | 4500 | 1920 × 1080 | License plates from different national regions; lacks dusty plates and plates under varying light |
| GAP-LP [34] | 2019 | Tunisia | 9175 | Multiple resolutions | Lacks plates with heavy dust and strong light variation; labels may be inaccurate and inconsistent |
| OpenALPR-EU [35] | 2016 | Europe | 108 | Multiple resolutions | License plates from various EU countries; generalizes poorly in use |
| UCSD-still [36] | 2005 | USA | 291 | 640 × 480 | Multiple plate styles and capture conditions; few plates in complex environments |
| CD-HARD [37] | 2016 | Multiple countries | 102 | Multiple resolutions | Many difficult-to-recognize samples, but lacks photographs of sandy plates and plates under strong light variation |
| CSCL | 2024 | China | 25 k | 240 × 80 | Contains a large number of plate photos from areas with frequent dust storms and drastic lighting changes |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Z.; Yang, Y.; Yang, P.; Zhang, X.; Li, J.; Sun, Y.; Ma, L.; Cui, D. License Plate Recognition Under the Dual Challenges of Sand and Light: Dataset Construction and Model Optimization. Appl. Sci. 2025, 15, 6444. https://doi.org/10.3390/app15126444
