Abstract

This study presents a novel approach to the increasingly important task of distinguishing AI-generated images from authentic photographs. The detection of such synthetic content is critical for combating deepfake misinformation and ensuring the authenticity of digital media in journalism, forensics, and online platforms. A custom-designed Vision Transformer (ViT) model, termed Patch-Based Vision Transformer for Identifying Synthetic Media (PV-ISM), is introduced. Its performance is benchmarked against innovative transfer learning methods using 60,000 authentic images from the CIFAKE dataset, which is derived from CIFAR-10, along with a corresponding collection of images generated using Stable Diffusion 1.4. PV-ISM incorporates patch extraction, positional encoding, and multiple transformer blocks with attention mechanisms to identify subtle artifacts in synthetic images. Following extensive hyperparameter tuning, an accuracy of 96.60% was achieved, surpassing the performance of ResNet50 transfer learning approaches (93.32%) and other comparable methods reported in the literature. The experimental results demonstrate the model’s balanced classification capabilities, exhibiting excellent recall and precision throughout both image categories. The patch-based architecture of Vision Transformers, combined with appropriate data augmentation techniques, proves particularly effective for synthetic image detection while requiring less training time than traditional transfer learning approaches.

1. Introduction

In the last decade, artificial intelligence (AI) research on photographic and visual data has garnered increasing attention, primarily due to the proliferation of deep learning algorithms and their rapidly improving performance. These advancements have enabled a broad spectrum of applications across diverse domains. Notable examples include visual arts [1,2], health sciences [3,4,5,6,7,8], earth sciences [9,10,11,12], criminal investigations [13,14], engineering [15,16,17,18,19,20,21,22], and psychology [23,24]. One reason for this breadth is the widespread use of mobile devices that can take high-quality photographs [25]. In addition, in disciplines where visual data plays a critical role, such as smart cities, space, and health, the steadily improving hardware quality of observation devices motivates AI studies in these fields [26].

In parallel with these developments, recent studies have explored image manipulation techniques for privacy and visual realism. For example, generative models have been used for facial anonymization [27], while advanced filtering methods enhance details and correct illumination in images [28,29]. On the other hand, image filtering has gained attention for its role in robustness and clarity. Studies have introduced low-pass filters to defend against adversarial attacks [30], analyzed filter types for mesh and texture preservation [31], and proposed hybrid filtering methods for noise reduction and structural preservation [32,33].

Another area of research in this context focuses on synthetic media content. Current developments in generative artificial intelligence have significantly reshaped image-producing technologies, empowering computational models to produce highly realistic visuals that often closely resemble or are virtually indistinguishable from genuine imagery. While this progress brings innovation across fields such as art, entertainment, healthcare, and surveillance, it simultaneously poses significant challenges in the detection and authentication of synthetic media. Recent studies have tackled this issue from various angles: Pellicer et al. introduced a robust multimodal prototype-based approach (PUDD) to deepfake detection [34]; Bhinge and Nagpal quantified how differently real and artificial images behave in vision models [35]; Gallagher and Pugsley proposed a dual-input neural architecture to integrate structural and contextual cues [36]; and Bartos and Akyol performed a comparative evaluation of deep learning-based methods for image authentication [37]. Vora et al. explored the classification of various AI-generated content using machine learning and knowledge graphs [38]. Other contributions, such as CIFAKE by Bird and Lotfi, emphasize interpretability in the classification of fake content [39], while Kim et al. leveraged transfer learning for efficient adaptation to novel generative techniques [40]. Together, these approaches reflect a growing consensus in the research community. Identifying artificial intelligence-generated photos is not merely a technical task, but a critical safeguard against misinformation, digital manipulation, and loss of trust in visual images. As generative models become more sophisticated, the urgency to develop reliable, explainable, and adaptive detection methods continues to intensify.

Traditionally, convolutional neural networks (CNNs) and transfer learning approaches have been the preferred methodologies for such detection tasks. Models like ResNet, pre-trained on massive datasets, have offered high performance across various classification problems. However, these architectures carry inherent limitations, particularly in their ability to model long-range dependencies and global context within image data. Transformers, with their self-attention mechanisms, address these shortcomings. Vision Transformers (ViTs), adapted from their successful natural language processing (NLP) counterparts, have shown great promise in image classification tasks; yet their potential for detecting artificially generated images remains underexplored. This study introduces a Vision Transformer (ViT) architecture, termed Patch-Based Vision Transformer for Identifying Synthetic Media (PV-ISM), designed for the binary classification task of separating AI-generated images from genuine ones. The CIFAKE dataset is used to train and assess the model. This dataset comprises real CIFAR-10 images and synthetically generated counterparts produced via Stable Diffusion 1.4. The experimental results show that the proposed model performs well, highlighting the effectiveness of transformer-based architectures in the domain of image authentication. The main contributions of this work are as follows:

- It is demonstrated that Vision Transformers, even without extensive pre-training or external datasets, can outperform conventional transfer learning models (e.g., ResNet50) on the task of synthetic image detection, achieving an accuracy of 96.60% versus 93.32%.

- A comprehensive ablation study is conducted on hyperparameters such as patch size, image resolution, and epoch count, with their tangible influence on detection accuracy and model generalization being identified.

- It is highlighted that, compared to transfer learning models, which require vast datasets and training time, PV-ISM is not only more time-efficient, but also maintains balanced classification performance across classes, as evidenced by a near-symmetric confusion matrix.

What emerges is not just a performance model, but an adaptable framework for identifying synthetic imagery—a task becoming increasingly vital in today’s digital ecosystem. Moreover, our findings suggest that the patch-based architecture of Vision Transformers aligns naturally with the structural patterns present in generated images, providing an edge in subtle feature discrimination.

The paper is structured as follows: Section 2 reviews relevant research on synthetic image detection and transformer-based models. The proposed methodology is described in Section 3, along with the architectural design of the model and training procedures. Section 4 describes the experimental framework, presents the obtained results, and offers an in-depth analysis. Section 5 presents experimental studies with the proposed model using two additional datasets. Finally, Section 6 concludes the study by summarizing the main findings and offering recommendations for further research.

2. Related Works

Our study addresses a specialized classification task: distinguishing AI-generated images from real images. Numerous prior works have addressed this topic using similar or even identical datasets, largely because of the scarcity of reliable benchmark datasets that enable consistent performance evaluation and cross-method comparison. As a result, many studies exhibit similarities, with minor methodological variations. This section reviews current developments in AI-generated image identification.

Several studies have applied deep learning techniques to tackle this classification problem. A significant portion of existing research relies on transfer learning or various configurations of CNNs. These include both simple multi-layered CNNs and more complex architectures with fine-tuned parameters. Transfer learning remains one of the most frequently adopted methods, with many studies comparing different CNN architectures and reporting their relative performance [38,39,41,42,43,44].

For example, one study incorporated activation mapping to highlight the most discriminative features involved in identifying AI-generated images, building on standard CNN architectures [39]. Another work compared Variational Autoencoders (VAEs) with Residual Neural Networks (ResNets), concluding that ResNet significantly outperforms VAEs in this domain [37]. Similarly, a comparative analysis involving pre-trained models such as ResNet, VGGNet, DenseNet, and a custom CNN revealed that DenseNet, enhanced with an additional dense layer, achieved the best results [45].

Additional research examined the performance of various pre-trained models, including VGG16, VGG19, ResNet101, ResNet152, DenseNet121, and DenseNet169, with DenseNet121 performing particularly well when extended with supplementary layers [40]. Other studies have explored novel architectures beyond conventional transfer learning. One such work proposed a dual-input neural network that accepts two versions of the same image, leading to improved classification accuracy [36].

Further comparative studies offer deeper insights. For instance, ResNet50, VGG16, EfficientNetV2B0, and MobileNetV3Small were evaluated on the CIFAKE dataset, with EfficientNetV2B0 delivering the best performance even without fine-tuning [46]. Another study demonstrated the utility of prototype-based classification using similarity scores, improving training efficiency and inference accuracy [34]. Likewise, comparisons between lightweight CNNs and EfficientNetV2B0 highlighted the latter’s superiority [35]. Some studies also implement attention mechanisms, such as those used in PV-ISM, to further enhance detection performance [47].

There have also been investigations into how image properties affect model performance. For example, one study explored the influence of background elements on detection accuracy, noting potential performance variations depending on visual context [48]. Another method used the local binary pattern (LBP) in addition to fast Fourier transform (FFT) features to make use of texture and frequency-based information, thereby enriching the feature space [49].

Moreover, under the domain of digital forensics, several studies have examined the detection of AI-edited images [48,50,51,52]. While this task differs from identifying fully AI-generated content, it shares significant overlap, as both involve artificial manipulations. Such edits often leverage similar generative techniques like GANs, VAEs, or transformers to add virtual elements rather than synthesizing complete images.

Lastly, comparative studies that evaluate models on shared datasets are common, often synthesizing insights across related inquiries [44,52,53,54]. With the exception of more novel approaches—such as FFT-based analysis, multi-input CNNs, and channel-wise classification—most works converge on transfer learning as the most accessible and effective strategy for this classification task. Notably, variants of DenseNet and ResNet frequently surpass alternative models in terms of robustness and accuracy.

From this comprehensive review, it is evident that transfer learning represents the most prevalent and effective method for distinguishing AI-generated images from real ones. Among the various transfer learning strategies, models based on DenseNet and ResNet architectures consistently achieve superior performance. Nonetheless, several unique and emerging techniques show potential for competing with or complementing these dominant approaches [55]. Table 1 shows the accuracy performance of these traditional approaches.

Table 1.

Accuracy comparison between the traditional approaches.

3. Materials and Methods

PV-ISM combines both traditional and modern deep learning techniques, aiming to assess the effectiveness of a ViT model in comparison with a widely recognized pre-trained model, ResNet. Recent research has shown that both ViT and ResNet represent state-of-the-art architectures that compete closely in various image classification tasks [56,57]. Accordingly, our study includes a comparative analysis between these two models to assess their effectiveness in detecting AI-generated images.

The models employed in this study were trained, fine-tuned, and evaluated following the pipeline illustrated in Figure 1. Each model underwent hyperparameter tuning and performance validation to ensure robustness and generalizability.

Figure 1.

Pipeline of model training.

For evaluation, we employed accuracy as the primary metric. To provide a more thorough assessment of the models’ ability to discriminate between real and fake images, precision, recall, and F1 score were also computed. Together, these metrics provide a robust measure of classification efficacy, especially for imbalanced or challenging datasets. The subsections that follow describe the dataset used, the architectural design of the models, and the experimental procedures involved in training and evaluation.

3.1. Dataset

The dataset used in this research is CIFAKE, a curated benchmark specifically designed for evaluating and comparing methods that detect artificial intelligence (AI)-generated images [39]. The use of this dataset offers two primary advantages. First, its adoption in multiple existing studies facilitates direct performance comparison with previously published results. Second, it is especially well suited for training and assessing CNNs and other deep learning models due to its relatively small image dimensions, which reduce training time and computational overhead.

CIFAKE contains two separate categories: “real” and “fake”. The “real” images consist of natural photographs sourced from the CIFAR-10 dataset, where each picture falls into one of the following 10 classes: truck, ship, frog, dog, deer, airplane, car, bird, cat, or horse [58]. In contrast, the “fake” images are synthetically generated using advanced AI image generation models.

The 60,000 media images in the dataset that have been classified as “real” each have dimensions of 32 × 32 × 3 (height × width × RGB channels), as illustrated in Figure 2. These compact yet diverse images make CIFAKE a practical and effective choice for benchmarking classification models in the context of synthetic image detection.

Figure 2.

Sample of CIFAR-10 images.

For the “fake” category, the CIFAKE dataset contains an equal number of artificially generated images. These synthetic images are generated with Stable Diffusion 1.4 (SDM), a latent diffusion model trained on image data drawn from LAION-5B [39,59,60]. Diffusion models such as SDM rely on noising and denoising operations, for example the addition of Gaussian noise, as given in Equation (1) [61].
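Equation (1) is not reproduced in this text. As a point of reference only, the Gaussian forward (noising) step commonly used to define diffusion models can be written as below. The symbols $x_t$ (the image, or its latent representation, after $t$ noising steps), $\beta_t$ (the noise variance at step $t$), and $\mathbf{I}$ (the identity matrix) are notation assumed here and may differ from the original equation.

$$q(x_t \mid x_{t-1}) = \mathcal{N}\!\left(x_t;\ \sqrt{1-\beta_t}\,x_{t-1},\ \beta_t \mathbf{I}\right)$$

Generation runs this process in reverse: a network is trained to remove the noise step by step, starting from pure Gaussian noise.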

Similarly, there are 60,000 images in the “fake” category, all of which are uniformly distributed among ten different classes and have dimensions of 32 × 32 × 3. These classes provide data structure consistency by matching those in the “real” category. The image generation process involves 50 reverse noising steps, using tailored textual prompts for each class to guide the creation of synthetic images. For instance, the “truck” class uses prompts like garbage, tow, and fire, but the “airplane” class uses terms like fighter, jet, and aircraft. Figure 3 shows the flaws and irregularities in a few of the produced images, which are a reflection of the synthesis process’s inherent limits and shed light on the dataset’s unpredictability.

Figure 3.

Cherry-picked sample of synthetic images with abnormal effects, upscaled to make imperfections visible.

3.2. Patch-Based Transformer Model

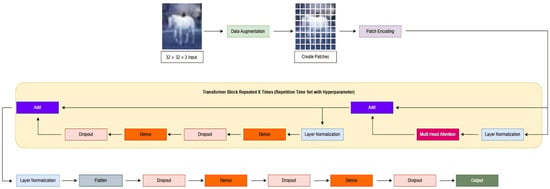

This section presents the methodology used to enhance the model originally proposed by Dosovitskiy et al. [62] to distinguish real images from artificially generated images. The proposed PV-ISM architecture, shown in Figure 4, builds on the Vision Transformer (ViT) framework by integrating data augmentation and a custom patch-based processing pipeline to improve classification accuracy. Unlike traditional convolutional models, ViT employs transformer encoders, which utilize self-attention to capture contextual relationships and long-range dependencies between image patches. This mechanism enables the model to recognize global patterns and structural consistency, which is essential for differentiating real images from synthetic ones.

Figure 4.

Proposed model’s architecture.

3.2.1. Data Augmentation

Initially, in the model training step, the dataset was enhanced by applying various methods for data augmentation to improve the model’s robustness and lessen the chance of overfitting. The augmentation pipeline was implemented using the Keras Sequential application programming interface (API) and included the following transformations.

Normalization, shown in Equation (2), was applied to each pixel in every image channel to bring the mean to zero and the standard deviation (σ) to one, as shown in Equations (3) and (4). This process standardizes the input data to a consistent scale, which is necessary to keep deep learning models stable and performing well.
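Equations (2) to (4) are not reproduced in this text. A standard formulation consistent with the description above is sketched below. The symbols $x_i$ (a pixel value in a given channel), $N$ (the number of pixel values in that channel), $\mu$ (their mean), $\sigma$ (their standard deviation), and $\hat{x}_i$ (the normalized value) are assumed notation, and the original may differ in detail, for example by using $N-1$ in the variance.

$$\hat{x}_i = \frac{x_i - \mu}{\sigma} \tag{2}$$

$$\mu = \frac{1}{N}\sum_{i=1}^{N} x_i \tag{3}$$

$$\sigma = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\left(x_i - \mu\right)^2} \tag{4}$$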

In addition to normalization, we applied a range of data augmentation techniques to enhance the performance and generalization ability of our models. Specifically, random horizontal and vertical flips were employed to alter the spatial orientation of image pixels, thereby increasing data diversity. Furthermore, random rotations were implemented using Keras data augmentation layers, with rotation angles controlled via floating-point parameters that define the maximum permissible degrees for both clockwise and counterclockwise rotation. To further diversify the training data, a random zoom effect was introduced using dedicated Keras augmentation layers, simulating variations in object scale and perspective. These augmentations collectively helped to mitigate overfitting by introducing subtle variations in the input images. By slightly modifying pixel arrangements, the model is encouraged to learn generalizable features rather than memorizing specific training examples.

As illustrated in Figure 5, augmentations such as flipping, rotating, and zooming adjust the input images dynamically to provide a richer and more robust training dataset. While these augmentations increase input variability, normalization plays a crucial complementary role by ensuring that the range of input feature values remains consistent. This prevents disproportionately high or low input values from exerting undue influence on the learning process due to scale imbalances in model parameters.

Figure 5.

Input variability.

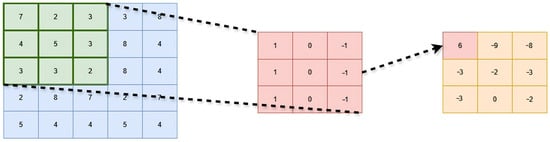

In the contemporary landscape of deep learning, CNNs are widely regarded as the backbone of classification models, primarily due to their extensive usage and remarkable performance across a variety of tasks [63]. CNNs have proven particularly effective in image classification, but their utility extends beyond visual data, with successful applications in domains such as audio and sound classification as well [64,65].

Unlike traditional deep learning models, CNNs process input data in the form of two-dimensional matrices, enabling the application of convolutional filters in localized regions of the input. These filters learn spatial hierarchies of features by sliding across the input matrix, effectively capturing low- to high-level patterns. This process, illustrated in Figure 6, allows CNNs to generate rich feature representations layer by layer, making them highly suitable for tasks involving structured spatial data.

Figure 6.

Applying edge detection filter to matrix.
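The sliding-filter operation described above can be illustrated with a small NumPy sketch. The 5 × 5 input and the Sobel-style kernel are hypothetical values chosen for illustration and are not taken from Figure 6. As in most CNN libraries, the kernel is applied without flipping (cross-correlation).

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 5x5 grayscale input: dark left columns, bright right columns.
image = np.array([[0, 0, 1, 1, 1]] * 5, dtype=float)

# Vertical-edge (Sobel-style) kernel: responds to left-to-right intensity changes.
sobel_x = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

edges = conv2d_valid(image, sobel_x)
print(edges)
```

The response is large where the window straddles the dark-to-bright boundary and zero where the window covers a uniform region, which is the behavior a learned edge filter in an early CNN layer would show.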

Unlike the CNN architecture, implementing the transformer architecture for image classification requires a different approach to input processing. As described in the work by Alexey Dosovitskiy et al. [62], the standard image representation (a 3D matrix with height, width, and channel dimensions) must be transformed into a 2D sequence format suitable for transformer-based models [66].

3.2.2. Patch Encoding and Transformer Block

To adapt the transformer architecture for image classification, the input images must be transformed from their standard 2D spatial format to a 1D sequence format suitable for transformer processing, as originally proposed by Dosovitskiy et al. [62]. This is accomplished through a patch extraction mechanism, which divides each image into fixed-size, non-overlapping patches. Each patch is then flattened and linearly projected into a vector representation. The mathematical formulation of this transformation is given in Equations (5) and (6), and its implementation is visually depicted in Figure 7.

Figure 7.

Encoding image matrix to transformer-processable format.

Each projected patch is augmented with a positional encoding to maintain spatial coherence across the image sequence [62]. These encoded patches serve as inputs to the transformer block, as illustrated in Figure 7.
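Equations (5) and (6) are not reproduced in this text, so the sketch below illustrates only the general ViT-style procedure described above: splitting an image into non-overlapping patches, flattening them, projecting them linearly, and adding a positional embedding. The patch size, projection dimension, and random weights are hypothetical stand-ins for values that would be tuned or learned in the actual model.

```python
import numpy as np

def extract_patches(image, patch_size):
    """Split an (H, W, C) image into flattened, non-overlapping patches.

    Returns an array of shape (num_patches, patch_size * patch_size * C).
    """
    h, w, c = image.shape
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    gh, gw = h // patch_size, w // patch_size
    # (gh, p, gw, p, c) -> (gh, gw, p, p, c) -> (gh * gw, p * p * c)
    patches = image.reshape(gh, patch_size, gw, patch_size, c)
    patches = patches.transpose(0, 2, 1, 3, 4)
    return patches.reshape(gh * gw, patch_size * patch_size * c)

rng = np.random.default_rng(0)
image = rng.random((32, 32, 3))        # CIFAKE-sized input
patch_size, projection_dim = 4, 64     # hypothetical values, not the paper's

patches = extract_patches(image, patch_size)

# Linear projection plus one positional embedding per patch index.
# Both are learned in a real model; random values stand in for them here.
w_proj = rng.normal(size=(patches.shape[1], projection_dim))
pos_embed = rng.normal(size=(patches.shape[0], projection_dim))
tokens = patches @ w_proj + pos_embed

print(patches.shape, tokens.shape)
```

With a 32 × 32 × 3 input and a patch size of 4, the image becomes a sequence of 64 patches of 48 values each, and the projection maps every patch to a token of the chosen dimension.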

To enable the model to capture long-range dependencies between image regions, each transformer block applies a multi-head self-attention mechanism, which computes attention scores based on the query (Q), key (K), and value (V) matrices. The operation is defined by the attention formula in Equation (7) [66]:
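Equation (7) is not reproduced in this text. The scaled dot-product attention defined in [66], to which the sentence above refers, has the standard form:

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{QK^{\top}}{\sqrt{d_k}}\right)V \tag{7}$$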

In Equation (7), $d_k$ denotes the dimensionality of the key vectors. In practice, the original patch embeddings are extended with an additional dimension to support multi-head attention, enabling the model to attend to multiple subspaces of representation in parallel [66]. The resulting shape of the encoded patches, considering the projection dimension and the number of attention heads, is given in Equation (8):

Following the self-attention operation, layer normalization is applied both before and after the attention and feed-forward sublayers, adhering to the pre-normalization strategy, which has been shown to improve model stability and convergence [67]. A residual connection (Add) is used to facilitate better gradient flow, and dropout layers are included to prevent overfitting [68,69].
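The attention operation of Equation (7) can be sketched for a single head in NumPy. The sizes and random inputs below are hypothetical. In the actual model, Q, K, and V would be learned linear projections of the patch tokens, and several heads would run in parallel.

```python
import numpy as np

def softmax(x, axis=-1):
    """Numerically stable softmax along `axis`."""
    shifted = x - np.max(x, axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Single-head attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = k.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)      # (num_patches, num_patches)
    weights = softmax(scores, axis=-1)   # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
num_patches, d_k = 64, 16                # hypothetical sizes
q = rng.normal(size=(num_patches, d_k))
k = rng.normal(size=(num_patches, d_k))
v = rng.normal(size=(num_patches, d_k))

output, weights = scaled_dot_product_attention(q, k, v)
print(output.shape, weights.shape)
```

Each row of `weights` describes how strongly one patch attends to every other patch, which is how the block relates distant image regions in a single step.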

The final layer of the transformer block applies a dense feed-forward network, and the entire sequence of blocks is repeated as per the model’s configuration. After the last transformer block, the sequence is flattened and passed through further dense and dropout layers to refine the representation. For binary classification, a sigmoid-activated output layer is conventionally used, as described in Equation (9), where x denotes the input to the final neuron before activation (i.e., the raw logit produced by the model):
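Equation (9) is not reproduced in this text. The sigmoid (logistic) function it refers to has the standard form:

$$\mathrm{sigmoid}(x) = \frac{1}{1 + e^{-x}} \tag{9}$$

It maps any real-valued logit to the interval (0, 1), so the output can be read as the probability of the positive class.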

Importantly, rather than relying on a conventional binary output, our approach defines two output neurons without an activation function, selecting the neuron with the highest score as the predicted class [70]. This allows for a smoother, probabilistic interpretation of the model’s decision boundaries.
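A minimal sketch of this dual-logit decision rule follows. The logit values are hypothetical, and which output index corresponds to "real" or "fake" is an assumption, since the text does not state it. The softmax function is included only as an optional post-processing step.

```python
import numpy as np

def predict_from_logits(logits):
    """Pick the class whose raw logit is largest (no activation needed)."""
    return np.argmax(logits, axis=-1)

def softmax(logits):
    """Optional post-processing step that turns logits into probabilities."""
    shifted = logits - np.max(logits, axis=-1, keepdims=True)
    exp = np.exp(shifted)
    return exp / np.sum(exp, axis=-1, keepdims=True)

# Hypothetical raw outputs for three images; columns 0 and 1 are the two
# classes (the mapping of columns to "real" and "fake" is an assumption).
logits = np.array([[2.0, -1.0],
                   [0.5, 1.5],
                   [-0.3, -0.2]])

classes = predict_from_logits(logits)
probs = softmax(logits)
print(classes, probs)
```

Because softmax preserves the ordering of the logits, taking the argmax of the raw scores gives the same class as taking the argmax of the probabilities. For two classes, the softmax probability of class 1 equals the sigmoid of the logit difference, so the two-neuron output carries the same information as a single sigmoid neuron while leaving the conversion to probabilities for later.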

A summary of the complete encoding and transformer process is presented in Algorithm 1, which provides a high-level overview of the PV-ISM pipeline. By combining patch-level tokenization with attention-based contextual learning, the PV-ISM architecture efficiently learns global and local features necessary for robust discrimination between real and synthetic imagery.

Algorithm 1.

PV-ISM algorithm.

3.2.3. Architectural Contributions Beyond ViT

While the PV-ISM architecture builds upon the standard Vision Transformer (ViT) framework, several architectural modifications distinguish it from conventional implementations. First, instead of relying solely on transformer blocks followed by a single output layer, PV-ISM incorporates a customized sequence of dense and dropout layers, both within and beyond the transformer stack. This design provides additional non-linearity and regularization, enhancing classification robustness without requiring large-scale pre-training or transfer learning. Second, the model diverges from the typical binary classification setup that applies a sigmoid-activated single-neuron output. Instead, PV-ISM outputs two raw logits representing each class, deferring the softmax computation to post-processing. This structure allows greater flexibility in threshold selection and facilitates more calibrated output analysis.

Overall, the architectural novelty lies in the integration of lightweight dense components within the attention pipeline, a dual-logit output strategy, and training from scratch without transfer learning, achieving comparable or better performance than state-of-the-art ViT-based models in deepfake detection tasks.

3.3. Transfer Learning with ResNet

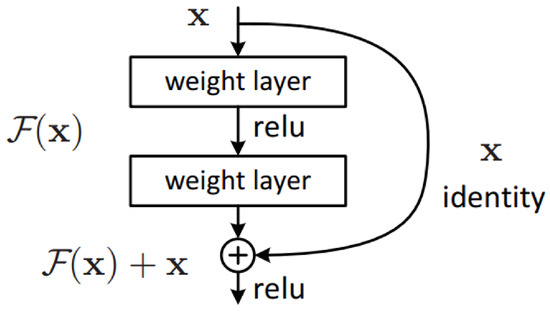

To assess the performance of PV-ISM in distinguishing real images from synthetic counterparts, we utilized transfer learning with ResNet, a deep residual network architecture renowned for achieving exceptionally good results in problems involving image classification. ResNet effectively preserves essential low-level features, including edges, textures, and fundamental patterns, thereby enhancing its applicability and efficacy throughout a variety of computer vision applications [71].

Transfer learning allows for the utilization of a pre-trained model, usually trained on extensive datasets such as ImageNet, which can then be fine-tuned for the specific classification task at hand. This methodology substantially decreases the training time required and improves the model’s ability to generalize across diverse data. The architecture of ResNet is based on residual blocks (illustrated in Figure 8), which incorporate shortcut connections. These connections mitigate the vanishing gradient problem that deep networks frequently face while enabling the network to learn richer feature representations [71]. The primary advantage of residual connections lies in their facilitation of efficient gradient flow through the network, enabling the training of models with greater depth. ResNet has been widely adopted in contemporary classification applications, including facial recognition, breast cancer subtype diagnosis, and malicious software detection, among others [72,73,74].

Figure 8.

Residual block [71].
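The shortcut connection can be illustrated with a minimal NumPy sketch. Dense layers stand in for the convolution and batch normalization layers of an actual ResNet block, and all shapes and weights are hypothetical. The block computes relu(F(x) + x), so the input always has a direct path to the output.

```python
import numpy as np

def relu(x):
    """Element-wise rectified linear unit."""
    return np.maximum(x, 0.0)

def residual_block(x, w1, w2):
    """Compute relu(F(x) + x), with F(x) = relu(x @ w1) @ w2."""
    fx = relu(x @ w1) @ w2   # residual mapping F(x)
    return relu(fx + x)      # shortcut connection adds the input back

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))          # hypothetical batch of 4 feature vectors
w1 = rng.normal(size=(8, 8)) * 0.1
w2 = np.zeros((8, 8))                # F(x) = 0, so the block reduces to relu(x)

y = residual_block(x, w1, w2)
print(np.allclose(y, relu(x)))
```

Setting the second weight matrix to zero shows the key property: when the residual mapping contributes nothing, the block still passes its input through, which is why stacking many such blocks does not block the gradient signal.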

In our implementation, illustrated in Figure 9, we employed ResNet50, a 50-layer deep variant of the ResNet architecture, as a feature extractor [71]. The convolutional layers of ResNet50 were frozen to preserve the learned filters from pre-training on ImageNet, whereas the classifier layers were adjusted to fit our dataset’s unique features. The feature extraction and classification pipeline consisted of the following steps.

Figure 9.

Implementation of ResNet model.

Preprocessing and data augmentation: The input images, originally sized at 32 × 32 × 3, were resized to 224 × 224 × 3 to comply with ResNet50’s input requirements. Normalization was applied in accordance with the procedure described in Section 3.2. Additionally, geometric data augmentation techniques, such as random horizontal flipping and rotations, and color-based augmentations, such as random brightness, contrast, and saturation adjustments, were applied to enhance model generalization and robustness.

Extraction of features using ResNet50: A pre-trained ResNet50 model was loaded without its fully connected top layers. The final convolutional block’s output was used to represent the input images’ extracted features.

Custom classification head: On top of the ResNet50 base, we appended a custom classification head comprising multiple fully connected (dense) layers, dropout layers for regularization, and a final dense output layer with a sigmoid activation function for binary classification of the input images [71].

Hyperparameter tuning: Various hyperparameter configurations (e.g., learning rate, learning rate scheduler, batch size, optimizer, dropout rate) were explored during training to identify the optimal settings for performance maximization.

By integrating transfer learning with ResNet50, we effectively harnessed pre-trained feature representations derived from a large-scale dataset, enabling the model to capture general visual patterns while simultaneously adapting to the specific characteristics of our dataset [71]. This strategy is widely recognized for its ability to improve generalization and training efficiency. Moreover, it serves as a strong baseline for evaluating the efficacy of PV-ISM in distinguishing artificially created images from real ones.

4. Results and Discussion

This section presents the findings of the experiments and the evaluation of PV-ISM. To ensure the reproducibility of our studies, we include detailed specifications of the computational environment used for this research. The experiments were conducted on a system running Ubuntu 22.04.5 LTS (Canonical Ltd., London, UK) as the operating system, equipped with an AMD Ryzen 9 7900X CPU (Advanced Micro Devices, Inc., Santa Clara, CA, USA), and GeForce RTX 3090 24G GPU (NVIDIA Corporation, Santa Clara, CA, USA), supported by 32 GB of RAM. The development environment was based on Python 3.10.12, and the primary deep learning framework utilized was TensorFlow 2.16.0-rc0 (Google LLC, Mountain View, CA, USA). For GPU acceleration, CUDA 12.2 was used as the interface between the TensorFlow framework and the GPU, with the NVIDIA driver version 535.183.01 specifically installed to ensure compatibility and performance.

Throughout the evaluation process, accuracy, defined in Equation (10), is employed as the primary metric for comparison. To guarantee a thorough evaluation of model performance, precision (P), recall (R), and F1 score, given in Equations (11)–(13), are also provided in the results alongside accuracy.

R is the proportion of actual positive cases that the model correctly identified, whereas P is the proportion of cases predicted as positive that are truly positive. The F1 score balances recall and precision by taking their harmonic mean. These metrics are derived from the confusion matrix, which has four elements. Correct predictions are either true negatives (TN), where negative cases are correctly identified, or true positives (TP), where positive cases are correctly identified. Conversely, false positives (FP) and false negatives (FN) are classification errors, where a negative case is mistakenly predicted as positive and a positive case is mistakenly predicted as negative, respectively.
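Equations (10) to (13) are not reproduced in this text. The standard definitions consistent with the description above are given below, where TP, TN, FP, and FN denote the counts of true positives, true negatives, false positives, and false negatives. The assignment of equation numbers to precision, recall, and F1 follows the order in which they are named and is an assumption.

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{10}$$

$$P = \frac{TP}{TP + FP} \tag{11}$$

$$R = \frac{TP}{TP + FN} \tag{12}$$

$$F_1 = \frac{2PR}{P + R} \tag{13}$$

A small Python sketch of the same computation follows. The counts are hypothetical and are not the paper's results.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, precision, recall, and F1 from confusion-matrix counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1

# Hypothetical counts for illustration only; these are not the paper's results.
acc, p, r, f1 = classification_metrics(tp=90, tn=85, fp=15, fn=10)
print(f"accuracy={acc:.4f} precision={p:.4f} recall={r:.4f} f1={f1:.4f}")
```

The guards against zero denominators are a design choice for this sketch; they return 0.0 when a metric is undefined, for example when no case is predicted as positive.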

The analytical outcomes and performance metrics obtained by applying PV-ISM to the CIFAKE dataset are presented below. To cover a wide range of scenarios, the model parameters were systematically adjusted to determine the most effective configuration. The main hyperparameters tuned throughout the study were the learning rate scheduler (LRS), batch size (BS), epoch number (EN), test set percentage (TSP), image resize (IS), patch size (PS), and number of transformer blocks (TB).

The model performed satisfactorily even without fine-tuning. However, to improve its stability and generalizability, parameter optimization was conducted; the resulting improvements are reported in Table 2.

Table 2.

Evaluation results of hyperparameter tuning process.

One of the most evident findings from the fine-tuning process was the significant impact of the training epoch count on total accuracy. When trained with a low number of epochs, the model’s accuracy consistently ranged between 85% and 89%. Conversely, an increased number of epochs resulted in higher accuracy, although this came with a potential risk of overfitting.

To find an appropriate number of epochs, a progressive approach was adopted, starting from lower values and gradually increasing them in light of the dataset size. Subsequently, an early stopping mechanism, which halted training when no improvement was observed for 10 epochs, was integrated into the training process using the estimated optimal parameters. With this mechanism, training typically converged at around 65 epochs, with no significant improvement in accuracy observed beyond this point.
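The early stopping rule can be illustrated with a minimal, framework-independent sketch. The monitored quantity (validation loss), the function name `early_stopping_epoch`, and the loss curve are assumptions made for illustration; the source does not state which metric was monitored, and the curve below is synthetic rather than measured.

```python
def early_stopping_epoch(val_losses, patience=10):
    """Return the 1-based epoch at which training would stop.

    Training stops once `patience` consecutive epochs pass without the
    validation loss improving on the best value seen so far. If that never
    happens, the index of the last epoch is returned.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses)


# Synthetic (hypothetical) curve: improves for 55 epochs, then plateaus.
losses = [1.0 / e for e in range(1, 56)] + [0.05] * 30
stop_epoch = early_stopping_epoch(losses, patience=10)
```

With a patience of 10, the stopping epoch always lies 10 epochs after the last improvement, so the epoch at which training ends slightly overstates the epoch of the best model.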

Another important factor influencing performance was the learning rate scheduling strategy applied during training. To control the training dynamics, a learning rate scheduler was used that multiplied the learning rate by a factor of 0.3 after three consecutive epochs, with the aim of refining the gradient descent process. However, this strategy unexpectedly reduced model performance. Consequently, the scheduler was excluded from the final training procedure, and the widely used AdamW optimizer was employed on its own.
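A scheduler of this kind can be sketched as follows. The sketch assumes a reduce-on-plateau rule, that is, the learning rate is multiplied by 0.3 after three consecutive epochs without improvement in validation loss; the trigger condition, the initial learning rate of 1e-3, and the loss values are assumptions for illustration and are not taken from the experiments.

```python
def reduce_on_plateau(val_losses, initial_lr=1e-3, factor=0.3, patience=3):
    """Return the learning rate in effect during each epoch.

    After `patience` consecutive epochs without a new best validation loss,
    the learning rate is multiplied by `factor` for the following epochs.
    """
    lr = initial_lr
    best = float("inf")
    stale_epochs = 0
    schedule = []
    for loss in val_losses:
        schedule.append(lr)  # rate used for this epoch
        if loss < best:
            best = loss
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                lr *= factor
                stale_epochs = 0
    return schedule


# Hypothetical validation losses with a three-epoch plateau.
losses = [0.9, 0.7, 0.6, 0.6, 0.6, 0.6, 0.5]
schedule = reduce_on_plateau(losses)
```

In this toy run the rate stays at its initial value for the first six epochs and is reduced only for the seventh, which shows how such a rule shrinks the step size as soon as validation progress stalls.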

The next parameters considered were image resize and patch size. Image resize defines the size to which the original image is upscaled, which compensates for the low resolution of the source images. Patch size is the side length of each patch in pixels. A slight decrease in the image resize parameter improved performance, and a similar effect was observed when the patch size was adjusted within a small window.
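The interaction between these two parameters can be made concrete with a short calculation. The sketch below assumes square RGB images and non-overlapping patches that tile the image exactly; the helper names are hypothetical. The example values (224 with patch size 14, and 256 with patch size 16) are the image and patch sizes used in the supplementary experiments in Section 5.

```python
def patch_grid(image_size, patch_size):
    """Return (patches per side, total patches) for a square image."""
    if image_size % patch_size != 0:
        raise ValueError("patch_size must divide image_size exactly")
    per_side = image_size // patch_size
    return per_side, per_side * per_side


def patch_vector_length(patch_size, channels=3):
    """Length of one flattened patch before the linear projection."""
    return patch_size * patch_size * channels


grid_224 = patch_grid(224, 14)
grid_256 = patch_grid(256, 16)
tokens_per_patch_16 = patch_vector_length(16)
```

Both settings produce a 16 × 16 grid, so the transformer processes the same number of tokens in each case; what changes is the amount of pixel information carried by each token.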

Furthermore, the minor percentage differences indicate that reducing the test set percentage has a slight impact on performance. Since the test and evaluation sets are distinct, a smaller test set leaves more images for training, which helps the model identify anomalies more effectively. The number of transformer blocks, a component inspired by Vaswani et al. [66], shows a similar pattern: a moderate number of blocks improves accuracy, while an excessive number may reduce it.

For a comprehensive comparison, a widely used transfer learning baseline, ResNet50 initialized with ImageNet weights, was introduced. ResNet50, which is pre-trained on this large-scale image dataset, is designed for image classification tasks.

Table 3 compares the ResNet50 transfer learning model with PV-ISM. PV-ISM achieves an accuracy of 96.60%, which is 3.28 percentage points higher than ResNet50’s 93.32%, meaning that it classifies a higher proportion of images correctly overall. Based on the precision score, PV-ISM also appears to produce slightly fewer false positives, indicating higher reliability when predicting real images. The most significant improvement is observed in recall, where PV-ISM achieves 96.60% compared to ResNet50’s 90.43%, a substantial increase of 6.17 percentage points. This shows that PV-ISM is considerably better at identifying all relevant instances, leading to fewer false negatives. The balanced performance, also reflected in the F1 score, suggests that PV-ISM’s parameters are well tuned for distinguishing AI-generated images from real ones, whereas the ResNet50 model exhibits more variation across metrics. This comparison demonstrates that while transfer learning with ResNet50 provides a strong baseline, PV-ISM offers significant improvements.

Table 3.

Comparison between ResNet50 and PV-ISM.

The performance of PV-ISM is compared with that of other studies in Table 4. On the same dataset, PV-ISM outperforms the other studies, including those based on large pre-trained transfer learning models. Moreover, when training time is taken into account, the performance gap becomes even more pronounced. While transfer learning models are commonly pre-trained on very large datasets with industrial-grade computational resources, PV-ISM achieves its results without such extensive resources.

Table 4.

Accuracy comparison between PV-ISM and other studies.

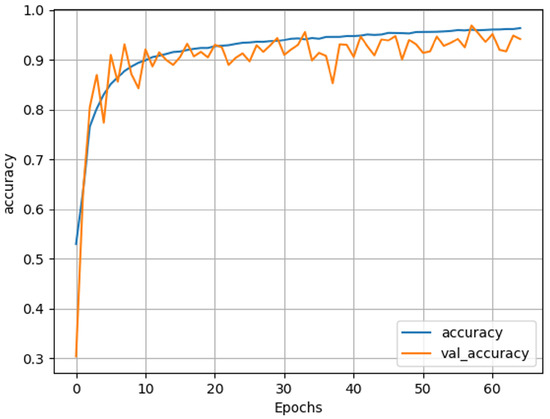

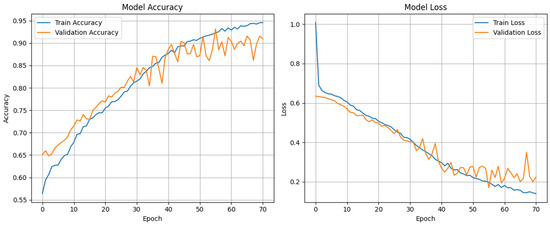

The best-performing model in our experiments, reported as the final result in Table 2, was the flattened transformer architecture, which achieved the highest accuracy on the CIFAKE dataset. The accuracy graph in Figure 10 illustrates how the model’s accuracy changed over 65 training epochs. The training accuracy increased steadily and reached approximately 96% by the end of training. The validation accuracy also remained high, albeit with minor fluctuations after the first few epochs. These findings show that the model maintained good performance on unseen data, successfully learning to distinguish between real and AI-generated images.

Figure 10.

Graph of accuracy vs. epoch for best-performing PV-ISM.

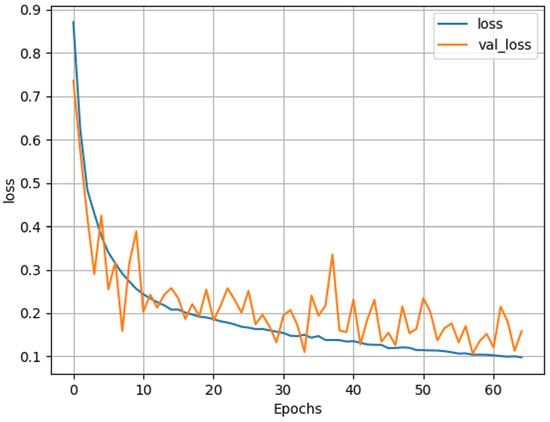

As shown in Figure 10, the training accuracy increased consistently and reached over 96% toward the end of training. The validation accuracy also remained high, albeit with some minor oscillations, indicating that the model maintained strong generalization throughout the training process. This is supported by Figure 11, which demonstrates a smooth and parallel decline in training and validation loss. The narrow gap between the two curves suggests minimal overfitting and confirms that the model is not memorizing the training data, but rather learning meaningful patterns.

Figure 11.

Graph of loss vs. epoch for best-performing PV-ISM.

Figure 11 shows the training and validation loss curves that correspond to the accuracy graph. The training loss decreased smoothly as the number of epochs increased, suggesting that the model continued to improve during the training phase. The validation loss also remained low, despite some slight fluctuations. This behavior is typical and indicates that the model was able to generalize to new data without significant overfitting. The small difference between the training and validation loss further supports this conclusion.

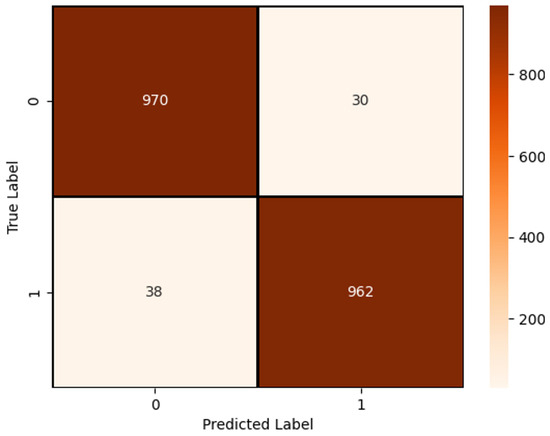

Figure 12 presents the confusion matrix for the evaluation set. The proposed model correctly classified 970 fake images and 962 real images out of 1000 each. Only 30 fake images were misclassified as real, and 38 real images were misclassified as fake. These numbers demonstrate that the model performs well across both classes and does not exhibit a bias toward one class over the other. Thus, the outcomes confirm the model’s accuracy and balance in distinguishing between real and AI-generated images.

Figure 12.

Confusion matrix of best-performing PV-ISM.

The confusion matrix in Figure 12 further illustrates the model’s balanced performance. PV-ISM correctly classified 970 out of 1000 fake images and 962 out of 1000 real images, resulting in 30 false negatives and 38 false positives, respectively. This nearly symmetric outcome suggests that the model does not favor one class over the other. The low number of classification errors across both classes demonstrates the robustness of the model’s decision boundary and its applicability to real-world deepfake detection scenarios. Based on performance trends and model behavior, the patch encoding and transformer-based attention layers appear to be the most impactful. The patch-wise tokenization enables fine-grained feature localization, while the attention mechanism enhances global feature correlation, both essential for distinguishing subtle generative artifacts.
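The reported counts can be turned into metrics with a few lines of arithmetic. The sketch below is illustrative: the function name is hypothetical, and because it is not stated here which class was treated as positive for the reported precision and recall, or whether those values were averaged over classes, both per-class views and their macro-averaged recall are computed.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 from confusion matrix counts."""
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Counts from Figure 12: 970/1000 fake and 962/1000 real classified correctly.
fake_as_positive = binary_metrics(tp=970, fp=38, fn=30, tn=962)
real_as_positive = binary_metrics(tp=962, fp=30, fn=38, tn=970)

# Macro-averaged recall (an assumed aggregation, not stated in the text).
macro_recall = (fake_as_positive["recall"] + real_as_positive["recall"]) / 2
```

Accuracy does not depend on which class is treated as positive: (970 + 962) / 2000 = 0.966, which agrees with the 96.60% reported for the best-performing model.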

5. Supplementary Experimental Studies with PV-ISM

To further validate the generalizability of the proposed PV-ISM model beyond the CIFAKE dataset, two additional experimental studies were conducted using the Real vs. Fake Faces (RVF-10K) dataset and a curated set of art images. These datasets represent distinct visual domains (facial realism and stylistic abstraction), which challenge the model’s ability to detect synthetic content across different contexts. The performance on these datasets offers further insight into the adaptability and robustness of the proposed architecture.

5.1. The RVF-10K Dataset

For additional experiments, the Real vs. Fake Faces (RVF-10K) dataset was utilized—a curated collection of 10,000 facial images specifically designed for binary classification tasks distinguishing authentic human faces from synthetically generated ones. This dataset addresses the growing need for robust deepfake detection systems in an era where photorealistic face generation has become increasingly accessible through advanced generative models.

The RVF-10K dataset maintains a balanced distribution across both training and validation splits to ensure unbiased model evaluation. The complete dataset structure is organized as follows:

- Training set: 7000 images (3500 real, 3500 fake).

- Validation set: 3000 images (1500 real, 1500 fake).

- Total: 10,000 images with perfect class balance (50% real, 50% fake).

The dataset draws from two distinct and well-established sources to ensure high quality and diversity.

Authentic images: Sourced from NVIDIA Research’s Flickr-Faces-HQ (FFHQ) dataset, these images represent authentic human faces captured in natural settings. The FFHQ dataset is renowned for its high resolution, diverse demographic representation, and careful curation process that removes low-quality or problematic images [75].

Synthetic images: These images were generated using the StyleGAN architecture from Bojan Tunguz’s 1 Million Fake Face dataset [76]. They demonstrate state-of-the-art generative capabilities, producing photorealistic faces that challenge traditional detection methods. StyleGAN-generated content was selected to ensure that the dataset reflects current deepfake generation techniques.

The dataset encompasses a wide range of facial characteristics and imaging conditions. Authentic images exhibit natural variations in lighting, pose, expression, age, and ethnicity, reflecting the diversity found in authentic photography. Synthetic images demonstrate sophisticated generation quality, with consistent lighting and composition, while maintaining a photorealistic appearance that poses genuine classification challenges.

The sample images in Figure 13 illustrate the quality and diversity of both classes. Real faces display natural imperfections, varied lighting conditions, and authentic expressions. In contrast, synthetic faces show the high-fidelity output of modern generative models, with subtle artifacts that require sophisticated detection methods.

Figure 13.

Sample images in RVF-10K.

As with the CIFAKE experiments, the same data augmentation techniques were applied. In addition, no limit was set on the number of training epochs, and an early stopping mechanism with a patience of ten epochs was used. Unlike in the CIFAKE experiments, the consecutive dense layers were adjusted to improve performance. The training split ratio was set to 0.2 for all hyperparameter evaluations. The results in Table 5 show that the model’s performance is sensitive to architectural choices, including image size, patch size, transformer depth, MLP layer dimensions, and batch size. Substantial gains in classification performance are observed as these parameters are scaled appropriately.

Table 5.

Evaluation results of the hyperparameter tuning process on the face dataset.

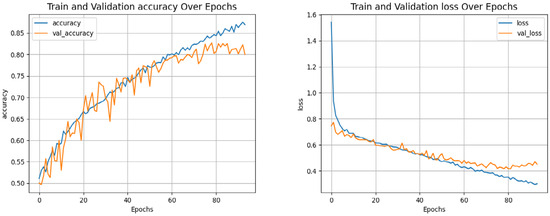

Starting with an image size of 224 × 224, increasing the depth of the transformer blocks and the size of the MLP layers led to notable improvements. For instance, moving from a shallow ViT with four transformer blocks and small MLP layers (accuracy = 69.30%, F1 = 63.20) to deeper models with more expressive MLPs and finer patch sizes resulted in a consistent rise in accuracy and F1 score. Notably, using a 256 × 256 image resolution with a patch size of 16, 32 transformer blocks, and an MLP of (2048, 1024), the model achieved its best performance, with an accuracy of 81.70%, precision of 82.82%, recall of 80.00%, and F1 score of 81.38. Figure 14 shows the overall training progress, demonstrating the accuracy and loss over epochs.

Figure 14.

Accuracy and loss values vs. epoch.

This progression reflects the proposed model’s capacity to better capture spatial hierarchies and long-range dependencies as model depth and input resolution increase. Smaller patch sizes also contribute to finer feature extraction, which benefits performance, especially in high-resolution settings. The improvements are even more pronounced when the proposed model is compared with convolutional baselines such as ResNet50 and ResNet101 in Table 6. ResNet50 achieved an accuracy of 67.27% and an F1 score of 65.57%, while ResNet101 achieved a slightly higher F1 score of 65.96%. In contrast, even the moderately configured proposed model significantly outperformed both ResNet variants across all metrics. The proposed model surpassed ResNet101 by over 14 percentage points in F1 score and over 12 percentage points in accuracy, highlighting the advantage of self-attention-based architectures over traditional convolutional networks in this task.

Table 6.

Comparison between ResNet50, ResNet101, and PV-ISM on face dataset.

5.2. The Art Images Dataset

The dataset employed in this experiment is sourced from various artists and GAN projects [77]. This curated dataset enables the development and evaluation of models for distinguishing between authentic human-created art and artificially synthesized images, including those generated by generative adversarial networks (GANs) and other AI-based image synthesis techniques.

The dataset comprises a total of 21,642 images, evenly divided into two categories.

- Real: This class contains 10,821 authentic artworks from WikiArt, a comprehensive and publicly accessible art database. The artworks span a diverse range of artistic styles, periods, and genres, contributing to the representational richness of the real class.

- Fake: This class includes 10,821 AI-generated images created using various generative models, including GANs. These synthetic images were curated to emulate the visual properties of real artworks, introducing variability in style and technique to challenge classification algorithms and promote generalization.

Each image in the dataset has been resized to 256 × 256 pixels, providing uniformity and reducing preprocessing overhead during model training. Figure 15 illustrates sample images from both categories. Because the dataset contains an equal number of real and fake images, binary classifiers can be evaluated fairly without additional class-balancing techniques. The real artworks encompass many artistic traditions, while the fake class includes outputs from multiple GAN architectures, enhancing the dataset’s variability and suitability for training robust models. All images are stored in a high-resolution format and preprocessed to this standard size, making them directly compatible with deep learning pipelines.

Figure 15.

Sample images in Art Images dataset.

Table 7 presents the performance metrics for various configurations of the Vision Transformer (ViT) architecture, where the input image size (IS), patch size (PS), number of transformer blocks (TBs), and MLP layer dimensions were systematically varied. The performance is reported in terms of accuracy (ACC), precision, recall, and F1 score.

Table 7.

Evaluation results of hyperparameter tuning process on Art Images dataset.

The evaluation in this experiment followed the same procedure as the previous one, including the base data augmentation and an early stopping mechanism with a patience of ten epochs. The consecutive dense layers were also adjusted to improve performance. The test-to-training split ratio was set to 0.2 for all hyperparameter evaluations, with a batch size of 64.

Initial results using an image size of 224 × 224 pixels and a patch size of 14 showed modest performance when only four transformer blocks were used: this configuration achieved an accuracy of 78.42% and an F1 score of 79.20%. Increasing the number of transformer blocks to eight and enlarging the hidden layers of the MLP resulted in a significant performance boost, with an F1 score of 89.60%, illustrating the positive influence of greater depth and model capacity.

Transitioning to a higher image resolution of 256 × 256 pixels and a patch size of 16 led to further improvements. A configuration with eight transformer blocks and a relatively small MLP still matched the previous F1 score of 89.60%, which indicates that increased input resolution enhances feature representation even with reduced feed-forward capacity. The most notable gains were observed in configurations that combined high-resolution inputs with deeper transformer networks. A configuration using eight transformer blocks and an MLP with intermediate capacity achieved an F1 score of 92.40%. Increasing the number of transformer blocks to sixteen and thirty-two led to further gains, with the highest performance reaching an accuracy of 93.16% and an F1 score of 93.66%. These results suggest that deeper transformer architectures can better capture the subtle differences between authentic and AI-generated images.

Along with these high metric values, the steady and robust learning process over the epochs can be observed in Figure 16.

Figure 16.

Accuracy and loss values vs. epoch.

In contrast, increasing the depth without a sufficiently expressive feed-forward layer led to diminishing returns. For example, when thirty-two transformer blocks were used with a smaller MLP, the F1 score dropped to 89.60%. This outcome underscores the importance of balancing transformer depth with adequate feed-forward capacity.

To further evaluate the effectiveness of the proposed PV-ISM architecture, a comparative analysis was conducted against two widely adopted convolutional neural network models: ResNet50 and ResNet101. The models were evaluated on the same dataset under identical training and testing conditions. Table 8 presents the performance metrics, including accuracy, precision, recall, and F1 score, for each model. The ResNet50 model achieved an accuracy of 88.98%, with a precision of 83.50%, a recall of 93.77%, and an F1 score of 88.34%. The deeper ResNet101 model showed improved performance, reaching an accuracy of 90.94%, a precision of 86.46%, a recall of 94.97%, and an F1 score of 90.52%. However, the proposed PV-ISM model, which is based on a Vision Transformer backbone with optimized architectural parameters, outperformed both ResNet variants across all evaluation metrics. Specifically, PV-ISM attained an accuracy of 93.16%, a precision of 88.80%, a recall of 99.09%, and an F1 score of 93.66%. This demonstrates a substantial improvement in classification capability, particularly regarding recall and F1 score, suggesting superior sensitivity and overall model balance.

Table 8.

Comparison between ResNet50, ResNet101, and PV-ISM on Art Images dataset.

6. Conclusions

This study introduced PV-ISM, a Vision Transformer-based framework for distinguishing between real and AI-generated images. Beyond strong performance on the CIFAKE dataset, the results underscore the architectural advantage of patch-wise tokenization and attention-based modeling for capturing subtle generative artifacts.

Architecturally, PV-ISM diverges from conventional ViT models through several design choices. First, it integrates custom dense and dropout layers not only after but also within the transformer stack, improving non-linearity and regularization. Second, the model replaces the typical sigmoid-activated single-neuron output with a dual-logit strategy, outputting raw values per class before applying softmax externally. This offers greater flexibility in thresholding and interpretability. Importantly, PV-ISM achieves high performance without relying on pre-trained backbones or transfer learning, which makes it lightweight, fast, and adaptable for deployment in practical scenarios.
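The relationship between the two output strategies can be illustrated with a small numerical sketch. The class ordering (index 0 for real, index 1 for fake), the function names, and the logit values are hypothetical and chosen only for illustration; they are not taken from the PV-ISM implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw logits."""
    shift = max(logits)
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def classify(logits, fake_threshold=0.5):
    """Apply softmax outside the model, then threshold P(fake).

    Assumed ordering: logits[0] is the real class, logits[1] the fake class.
    """
    p_fake = softmax(logits)[1]
    label = "fake" if p_fake >= fake_threshold else "real"
    return label, p_fake


logits = [0.4, 1.9]  # hypothetical raw outputs for one image
label, p_fake = classify(logits)
strict_label, _ = classify(logits, fake_threshold=0.9)
```

For two classes, the softmax probability of the fake class equals a sigmoid applied to the difference between the two logits, so the two formulations describe the same decision rule. Keeping the raw logits and applying softmax externally mainly makes it straightforward to inspect per-class scores and to move the decision threshold, as in the stricter 0.9 setting above.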

To evaluate generalizability, two additional experiments were conducted using the Real vs. Fake Faces (RVF-10K) and Art Images datasets. The RVF-10K dataset tested the model’s ability to detect facial deepfakes in realistic, high-resolution scenarios, while the Art Images dataset assessed PV-ISM’s robustness in stylistically abstract domains. In both cases, the model maintained strong classification performance, supporting its applicability across varying visual distributions and use cases.

These findings extend the potential impact of PV-ISM beyond technical benchmarks. As generative models continue to evolve, their misuse in journalism, forensics, and digital media poses growing risks. Robust detection systems like PV-ISM are therefore crucial for preserving visual trust in increasingly synthetic digital ecosystems.

Future work could investigate the scalability of PV-ISM to high-resolution datasets and real-time applications, explore its integration with multimodal data (e.g., video or audio), and advance interpretability tools to better understand attention mechanisms in transformer-based architectures.

Author Contributions

O.Ç.: Conceptualization, methodology, investigation, formal analysis, resources, data curation, visualization, and writing—review and editing; Y.D.: data curation, supervision, project administration, and writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The CIFAKE dataset [39] is publicly available in the Kaggle machine learning repository (https://www.kaggle.com/datasets/birdy654/cifake-real-and-ai-generated-synthetic-images, accessed on 12 April 2025). The RVF-10K dataset [76] is publicly available in the Kaggle machine learning repository (https://www.kaggle.com/c/deepfake-detection-challenge/discussion/121173, accessed on 1 June 2025). The Art Images dataset [77] is publicly available in the Kaggle machine learning repository (https://www.kaggle.com/datasets/doctorstrange420/real-and-fake-ai-generated-art-images-dataset, accessed on 1 June 2025).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| ACC | Accuracy |
| AI | Artificial intelligence |
| BS | Batch size |
| CNN | Convolutional neural network |
| CUDA | Compute Unified Device Architecture |
| EN | Epoch number |
| F1 | F1 score |
| FFT | Fast Fourier transform |
| FN | False negative |
| FP | False positive |
| GAN | Generative adversarial network |
| GPU | Graphics processing unit |
| IS | Image resize |
| K | Key |
| LBP | Local binary pattern |
| LRS | Learning rate scheduler |
| NLP | Natural language processing |
| P | Precision |
| PS | Patch size |
| PV-ISM | A Patch-Based Vision Transformer for Identifying Synthetic Media |
| Q | Query |
| R | Recall |
| SDM | Stable Diffusion 1.4 |
| TB | Transformer block |
| TN | True negative |
| TP | True positive |
| TSP | Test set percentage |
| VAE | Variational Autoencoder |
| V | Value |
| ViT | Vision Transformer |

References

- Yao, Q. Application of Artificial Intelligence Virtual Image Technology in Photography Art Creation under Deep Learning. IEEE Access 2025, 10, 101–110. [Google Scholar] [CrossRef]

- Knight, Y.; Eladhari, M.P. Artificial intelligence in an artistic practice: A journey through surrealism and generative arts. Media Pract. Educ. 2025, 1, 1–18. [Google Scholar] [CrossRef]

- Liu, X.; Wang, X.; Qian, R. Application of Machine Learning in Cell Detection. Targets 2025, 3, 2. [Google Scholar] [CrossRef]

- Ramirez-Quintana, J.A.; Salazar-Gonzalez, E.A.; Chacon-Murguia, M.I.; Arzate-Quintana, C. Novel Extreme-Lightweight Fully Convolutional Network for Low Computational Cost in Microbiological and Cell Analysis: Detection, Quantification, and Segmentation. Big Data Cogn. Comput. 2025, 9, 36. [Google Scholar] [CrossRef]

- Lu, Y.; Li, M.; Gao, Z.; Ma, H.; Chong, Y.; Hong, J.; Wu, J.; Wu, D.; Xi, D.; Deng, W. Advances in Whole Genome Sequencing: Methods, Tools, and Applications in Population Genomics. Int. J. Mol. Sci. 2025, 26, 372. [Google Scholar] [CrossRef]

- Câmara, G.B.M.; Coutinho, M.G.F.; Silva, L.M.D.d.; Gadelha, W.V.d.N.; Torquato, M.F.; Barbosa, R.d.M.; Fernandes, M.A.C. Convolutional Neural Network Applied to SARS-CoV-2 Sequence Classification. Sensors 2022, 22, 5730. [Google Scholar] [CrossRef]

- Mondol, R.K.; Millar, E.K.A.; Graham, P.H.; Browne, L.; Sowmya, A.; Meijering, E. hist2RNA: An Efficient Deep Learning Architecture to Predict Gene Expression from Breast Cancer Histopathology Images. Cancers 2023, 15, 2569. [Google Scholar] [CrossRef]

- Anand, V.; Gupta, S.; Gupta, D.; Gulzar, Y.; Xin, Q.; Juneja, S.; Shah, A.; Shaikh, A. Weighted Average Ensemble Deep Learning Model for Stratification of Brain Tumor in MRI Images. Diagnostics 2023, 13, 1320. [Google Scholar] [CrossRef]

- Li, W.; Hsu, C.-Y. GeoAI for Large-Scale Image Analysis and Machine Vision: Recent Progress of Artificial Intelligence in Geography. ISPRS Int. J. Geo-Inf. 2022, 11, 385. [Google Scholar] [CrossRef]

- Xu, W.; Yang, D.; Liu, J.; Li, Y.; Zhou, M. A Visual Navigation Algorithm for UAV Based on Visual-Geography Optimization. Drones 2024, 8, 313. [Google Scholar] [CrossRef]

- Jiang, C.; Abdul Halin, A.; Yang, B.; Abdullah, L.N.; Manshor, N.; Perumal, T. Res-UNet Ensemble Learning for Semantic Segmentation of Mineral Optical Microscopy Images. Minerals 2024, 14, 1281. [Google Scholar] [CrossRef]

- Neranjan, S.; Uchida, T.; Yamakawa, Y.; Hiraoka, M.; Kawakami, A. Geometrical Variation Analysis of Landslides in Different Geological Settings Using Satellite Images: Case Studies in Japan and Sri Lanka. Remote Sens. 2024, 16, 1757. [Google Scholar] [CrossRef]

- Cai, J.; Duan, Q.; Long, M.; Zhang, L.-B.; Ding, X. Feature Interaction-Based Face De-Morphing Factor Prediction for Restoring Accomplice’s Facial Image. Sensors 2024, 24, 5504. [Google Scholar] [CrossRef]

- Wu, B.; Zhang, S.; Gao, W.; Bi, Y.; Hu, X. A Method for Fingerprint Edge Enhancement Based on Radial Hilbert Transform. Electronics 2024, 13, 3886. [Google Scholar] [CrossRef]

- Kim, S.-U.; Kim, J.-Y. The Development and Optimization of a Textile Image Processing Algorithm (TIPA) for Defect Detection in Conductive Textiles. Processes 2025, 13, 486. [Google Scholar] [CrossRef]

- Hassan, S.A.; Beliatis, M.J.; Radziwon, A.; Menciassi, A.; Oddo, C.M. Textile Fabric Defect Detection Using Enhanced Deep Convolutional Neural Network with Safe Human–Robot Collaborative Interaction. Electronics 2024, 13, 4314. [Google Scholar] [CrossRef]

- Rana, S.; Gerbino, S.; Crimaldi, M.; Cirillo, V.; Carillo, P.; Sarghini, F.; Maggio, A. Comprehensive Evaluation of Multispectral Image Registration Strategies in Heterogenous Agriculture Environment. J. Imaging 2024, 10, 61. [Google Scholar] [CrossRef]

- Zhou, B.; Wu, K.; Chen, M. Detection of Gannan Navel Orange Ripeness in Natural Environment Based on YOLOv5-NMM. Agronomy 2024, 14, 910. [Google Scholar] [CrossRef]

- Eum, I.; Kim, J.; Wang, S.; Kim, J. Heavy Equipment Detection on Construction Sites Using You Only Look Once (YOLO-Version 10) with Transformer Architectures. Appl. Sci. 2025, 15, 2320. [Google Scholar] [CrossRef]

- Mariniuc, A.M.; Cojocaru, D.; Abagiu, M.M. Building Surface Defect Detection Using Machine Learning and 3D Scanning Techniques in the Construction Domain. Buildings 2024, 14, 669. [Google Scholar] [CrossRef]

- Wu, F.; Jiang, X.; Fu, T.; Fu, Y.; Xu, D.; Zhao, C. RSTSRN: Recursive Swin Transformer Super-Resolution Network for Mars Images. Appl. Sci. 2024, 14, 9286. [Google Scholar] [CrossRef]

- Ziv, M.; Galanti, E.; Sheffer, A.; Howard, S.; Guillot, T.; Kaspi, Y. NeuralCMS: A Deep Learning Approach to Study Jupiter’s Interior. Astron. Astrophys. 2024, 686, L7. [Google Scholar] [CrossRef]

- Kim, K.Y.; Yang, Y.B.; Kim, M.R.; Kim, J.; Park, J.S. Predicting Early Depression in WZT Drawing Image Based on Deep Learning. Expert Syst. 2025, 42, e13675. [Google Scholar] [CrossRef]

- Wang, M.; Xu, A.; Fan, C.; Sun, X. Machine Learning for Predicting Personality and Psychological Symptoms from Behavioral Dynamics. Electronics 2025, 14, 583. [Google Scholar] [CrossRef]

- Delbracio, M.; Kelly, D.; Brown, M.S.; Milanfar, P. Mobile Computational Photography: A Tour. Annu. Rev. Vis. Sci. 2021, 7, 571–604. [Google Scholar] [CrossRef]

- Flores-Calero, M.; Astudillo, C.A.; Guevara, D.; Maza, J.; Lita, B.S.; Defaz, B.; Ante, J.S.; Zabala-Blanco, D.; Armingol Moreno, J.M. Traffic Sign Detection and Recognition Using YOLO Object Detection Algorithm: A Systematic Review. Mathematics 2024, 12, 297. [Google Scholar] [CrossRef]

- Duszejko, P.; Walczyna, T.; Piotrowski, Z. Detection of Manipulations in Digital Images: A Review of Passive and Active Methods Utilizing Deep Learning. Appl. Sci. 2025, 15, 881. [Google Scholar] [CrossRef]

- Ahmed, S.; Yoon, B.; Sharma, S.; Singh, S.; Islam, S. General Image Manipulation Detection Using Feature Engineering and a Deep Feed-Forward Neural Network. Mathematics 2023, 11, 4537. [Google Scholar] [CrossRef]

- Agarwal, S.; Jung, K.-H. Enhancing Low-Pass Filtering Detection on Small Digital Images Using Convolutional Neural Networks. Electronics 2023, 12, 2637. [Google Scholar] [CrossRef]

- Ziyadinov, V.; Tereshonok, M. Low-Pass Image Filtering to Achieve Adversarial Robustness. Sensors 2023, 23, 9032. [Google Scholar] [CrossRef]

- Zhang, J.; Chen, C.; Chen, K.; Ju, M.; Zhang, D. Local Adaptive Image Filtering Based on Recursive Dilation Segmentation. Sensors 2023, 23, 5776. [Google Scholar] [CrossRef] [PubMed]

- Rendón-Castro, Á.A.; Mújica-Vargas, D.; Luna-Álvarez, A.; Vianney Kinani, J.M. Enhancing Image Quality via Robust Noise Filtering Using Redescending M-Estimators. Entropy 2023, 25, 1176. [Google Scholar] [CrossRef]

- Hartbauer, M. A Simple Denoising Algorithm for Real-World Noisy Camera Images. J. Imaging 2023, 9, 185. [Google Scholar] [CrossRef]

- Pellicer, A.L.; Li, Y.; Angelov, P. PUDD: Towards Robust Multi-modal Prototype-based Deepfake Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 3809–3817.

- Bhinge, S.; Nagpal, P. Quantifying the Performance Gap between Real and AI-Generated Synthetic Images in Computer Vision. SSRN 2024, 1, 1–7.

- Gallagher, J.; Pugsley, W. Development of a Dual-Input Neural Model for Detecting AI-Generated Imagery. arXiv 2024, arXiv:2406.13688.

- Bartos, G.E.; Akyol, S. Deep Learning for Image Authentication: A Comparative Study on Real and AI-Generated Image Classification. In Proceedings of the AIS 2023, 18th International Symposium on Applied Informatics and Related Areas, Szekesfehervar, Hungary, 16 November 2023; pp. 1–5.

- Vora, V.; Savla, J.; Mehta, D.; Gawade, A.; Mangrulkar, R. Classification of Diverse AI Generated Content: An In-Depth Exploration using Machine Learning and Knowledge Graphs. Res. Sq. 2023, 1, 1–24.

- Bird, J.J.; Lotfi, A. CIFAKE: Image Classification and Explainable Identification of AI-Generated Synthetic Images. IEEE Access 2024, 12, 15642–15650.

- Kim, C.; Yoon, S.; Han, M.; Park, M. Transfer Learning-based Generated Synthetic Images Identification Model. J. Converg. Cult. Technol. 2024, 10, 465–470.

- Hossain, M.Z.; Zaman, F.U.; Islam, M.R. Advancing AI-Generated Image Detection: Enhanced Accuracy through CNN and Vision Transformer Models with Explainable AI Insights. In Proceedings of the 2023 26th International Conference on Computer and Information Technology (ICCIT), Dhaka, Bangladesh, 20–22 December 2023; pp. 1–6.

- Hayathunnisa, V.; Kuppusamy, P.; Manimaran, A. Art of Detection: Custom CNN and VGG19 for Accurate Real Vs Fake Image Identification. In Proceedings of the 2023 6th International Conference on Recent Trends in Advance Computing (ICRTAC), Chennai, India, 15–17 December 2023; pp. 306–312.

- Lokner Lađević, A.; Kramberger, T.; Kramberger, R.; Vlahek, D. Detection of AI-Generated Synthetic Images with a Lightweight CNN. AI 2024, 5, 1575–1593.

- Muthaiah, U.; Divya, A.; Swarnalaxmi, T.N.; Vidhyasagar, B.S. A Comparative Review of AI-Generated vs Real Images and Classification Techniques. In Proceedings of the 2024 4th International Conference on Ubiquitous Computing and Intelligent Information Systems (ICUIS), Kyoto, Japan, 9–12 December 2024; pp. 141–147.

- Wang, Y.; Hao, Y.; Cong, A.X. Harnessing Machine Learning for Discerning AI-Generated Synthetic Images. arXiv 2024, arXiv:2401.07358.

- Gupta, A.S.; Shreneter, K.P.; Sehgal, S. Visual Veracity: Advancing AI-Generated Image Detection with Convolutional Neural Networks. In Proceedings of the 2024 11th International Conference on Reliability, Infocom Technologies and Optimization (Trends and Future Directions) (ICRITO), Noida, India, 6–8 March 2024; pp. 1–6.

- Mahameed, A.I.; Kadhim, A.A.; Aiiedane, H.A. Transfer Learning-Based Models for Comparative Evaluation for the Detection of AI-Generated Images. J. Electr. Syst. 2024, 20, 2570–2578.

- Tanfoni, M.; Ceroni, E.G.; Marziali, S.; Pancino, N.; Maggini, M.; Bianchini, M. Generated or Not Generated (GNG): The Importance of Background in the Detection of Fake Images. Electronics 2024, 13, 3161.

- Lanzino, R.; Fontana, F.; Diko, A.; Marini, M.R.; Cinque, L. Faster Than Lies: Real-time Deepfake Detection using Binary Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 17–18 June 2024; pp. 3771–3780.

- Rössler, A.; Cozzolino, D.; Verdoliva, L.; Riess, C.; Thies, J.; Nießner, M. FaceForensics++: Learning to Detect Manipulated Facial Images. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1–11.

- Ali, S.S.; Ganapathi, I.I.; Vu, N.S.; Ali, S.D.; Saxena, N.; Werghi, N. Image Forgery Detection Using Deep Learning by Recompressing Images. Electronics 2022, 11, 403.

- Qazi, E.U.H.; Zia, T.; Almorjan, A. Deep Learning-Based Digital Image Forgery Detection System. Appl. Sci. 2022, 12, 2851.

- Taspinar, Y.S.; Cinar, I. Distinguishing Between AI Images and Real Images with Hybrid Image Classification Methods. In Proceedings of the 2024 13th Mediterranean Conference on Embedded Computing (MECO), Budva, Montenegro, 2–5 June 2024; pp. 1–4.

- Zhu, M.; Chen, H.; Yan, Q.; Huang, X.; Lin, G.; Li, W.; Wang, Y. GenImage: A Million-Scale Benchmark for Detecting AI-Generated Image. Adv. Neural Inf. Process. Syst. 2023, 36, 77771–77782.

- Castillo Camacho, I.; Wang, K. A Comprehensive Review of Deep-Learning-Based Methods for Image Forensics. J. Imaging 2021, 7, 69.

- Maurício, J.; Domingues, I.; Bernardino, J. Comparing Vision Transformers and Convolutional Neural Networks for Image Classification: A Literature Review. Appl. Sci. 2023, 13, 5521.

- Yu, H.; Yang, L.T.; Zhang, Q.; Armstrong, D.; Deen, M.J. Convolutional Neural Networks for Medical Image Analysis: State-of-the-Art, Comparisons, Improvement, and Perspectives. Neurocomputing 2021, 444, 92–110.

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images; University of Toronto: Toronto, ON, Canada, 2009; Available online: https://www.cs.toronto.edu/~kriz/learning-features-2009-TR.pdf (accessed on 1 June 2025).

- Schuhmann, C.; Beaumont, R.; Vencu, R.; Gordon, C.; Wightman, R.; Cherti, M.; Coombes, T.; Katta, A.; Mullis, C.; Wortsman, M.; et al. LAION-5B: An Open Large-Scale Dataset for Training Next Generation Image-Text Models. Adv. Neural Inf. Process. Syst. 2022, 35, 25278–25294.

- Rombach, R.; Blattmann, A.; Lorenz, D.; Esser, P.; Ommer, B. High-Resolution Image Synthesis with Latent Diffusion Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 10684–10695.

- Nachmani, E.; Roman, R.S.; Wolf, L. Non Gaussian Denoising Diffusion Models. arXiv 2021, arXiv:2106.07582.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

- Elngar, A.A.; ElHefnawy, A.A.; El-Bakry, H.M.; Ismail, A.F.; Basyuni, M.; Hammad, M.; Yousif, E.S. Image Classification Based on CNN: A Survey. J. Cybersecur. Inf. Manag. 2021, 6, 18–50.

- Luo, C.; Zhang, Q.; Zhang, S.; Zhang, Y.; Xu, Z.; Li, J.; He, Y. How Does the Data Set Affect CNN-Based Image Classification Performance? In Proceedings of the 2018 5th International Conference on Systems and Informatics (ICSAI), Shanghai, China, 12–14 November 2018; pp. 224–229.

- Xie, J.; Xu, Y.; Zhang, D.; Li, J.; Liu, L.; Li, X. Investigation of Different CNN-Based Models for Improved Bird Sound Classification. IEEE Access 2019, 7, 175353–175361.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. Adv. Neural Inf. Process. Syst. 2017, 30, 6000–6010.

- Xiong, R.; Yang, Y.; He, D.; Zheng, K.; Zheng, S.; Xing, C.; Zhang, H.; Lan, Y.; Wang, L.; Liu, T.-Y. On Layer Normalization in the Transformer Architecture. arXiv 2020, arXiv:2002.04745.

- Josephine, V.H.; Nirmala, A.P.; Alluri, V.L. Impact of Hidden Dense Layers in Convolutional Neural Network to Enhance Performance of Classification Model. In IOP Conference Series: Materials Science and Engineering, Proceedings of the 4th International Conference on Emerging Technologies in Computer Engineering: Data Science & Blockchain Technology (ICETCE 2021), Jaipur, India, 3–4 February 2021; IOP Publishing: Bristol, UK, 2021; Volume 1131, No. 1; p. 012007.

- Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958. Available online: https://jmlr.csail.mit.edu/beta/papers/v15/srivastava14a.html (accessed on 1 June 2025).

- Manaswi, N.K. Regression to MLP in Keras. In Deep Learning with Applications Using Python: Chatbots and Face, Object, and Speech Recognition with TensorFlow and Keras; Apress: Berkeley, CA, USA, 2018; pp. 69–89.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Li, B.; Lima, D. Facial Expression Recognition via ResNet-50. Int. J. Cogn. Comput. Eng. 2021, 2, 57–64.

- Rezende, E.; Ruppert, G.; Carvalho, T.; Ramos, F.; De Geus, P. Malicious Software Classification Using Transfer Learning of ResNet-50 Deep Neural Network. In Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA), Las Vegas, NV, USA, 18–21 December 2017; pp. 1011–1014.

- Al-Haija, Q.A.; Adebanjo, A. Breast Cancer Diagnosis in Histopathological Images Using ResNet-50 Convolutional Neural Network. In Proceedings of the 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS), Barcelona, Spain, 7–9 September 2020; pp. 1–7.

- Karras, T.; Laine, S.; Aila, T. A Style-Based Generator Architecture for Generative Adversarial Networks. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; Volume 2, pp. 4401–4410.

- Bojan, T. 1 Million Fake Faces on Kaggle. 2020. Available online: https://www.kaggle.com/c/deepfake-detection-challenge/discussion/121173 (accessed on 1 June 2025).

- Various Artists. Real and Fake (AI-Generated) Art Images Dataset. 2025. Available online: https://www.kaggle.com/datasets/doctorstrange420/real-and-fake-ai-generated-art-images-dataset (accessed on 1 June 2025).

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).