Abstract

As Industry 4.0 advances, emerging use cases demand 5G NR networks capable of delivering high uplink throughput and ultra-low downlink latency. This study evaluates a 5G link between a LiDAR-equipped unmanned ground vehicle (UGV) and its control unit using a configurable industrial testbed. Based on 3GPP standards and related literature, we identified latency and uplink throughput as key factors for real-time control. Experiments were conducted across different gNB configurations and attenuation levels. The results show that tuning parameters such as CSI, TRS, and SSB significantly improves performance. We provide a practical analysis, supported by real-world measurements, of how these parameters influence key metrics. Furthermore, adjusting the Scheduling Request period and PDCCH candidate settings enhanced uplink reliability. Several configurations supported high LiDAR uplink traffic while maintaining low control latency, meeting the requirements set out in industrial 3GPP standards. The configurations also met the throughput requirements specified in UAV-related 3GPP standards. Under favorable radio link conditions, selected configurations were also sufficient for cooperative driving or even machine control. This work highlights the importance of fine-tuning parameters and performing testbed-based evaluations to bridge the gap between simulation and deployment in Industry 4.0.

1. Introduction

Over the past three decades, we have witnessed a tremendous technological leap in mobile devices. Cellular networks have evolved significantly, with each generation introducing improvements not only in throughput and service quality but also in functionality, culminating in 5G’s support for advanced use cases such as AR/VR, IoT, and M2M communications [1].

The continuous improvement in network capabilities has resulted in 5G being designed not only as a faster solution for traditional services such as internet access, voice calls, and messaging, but also as a platform for new applications. Already at the design stage, the ITU defined three distinct service types in the IMT-2020 recommendation (ITU-R M.2083-0) [2] to support emerging needs across diverse sectors: Ultra Reliable Low Latency Communications (URLLC), Enhanced Mobile Broadband (eMBB), and Massive Machine Type Communications (mMTC).

The authors of [3] provide a clear overview of these three service categories, describing their characteristics and applications. eMBB focuses on high data rates required for services such as ultra-high-definition (UHD) video or immersive experiences. URLLC targets use cases that demand very low latency and extremely high reliability, such as industrial automation, remote surgery, or autonomous driving. mMTC supports the deployment of massive numbers of low-power devices commonly found in smart cities or smart grid systems. The paper also discusses performance expectations, test scenarios, and traffic forecasts for each category.

These three categories translate into specific demands of new services and applications, each characterized by a different set of network quality parameters. Although low-latency requirements have long been known, e.g., from increasingly popular human–robot shared control systems [4] used in remote surgery scenarios [5], the new context of Industry 4.0 systems highlights the importance of two parameters simultaneously: low latency and high uplink throughput.

A comprehensive overview of these applications is presented in [6], where 5G is identified as a key enabler of collaborative and autonomous robotics, remote-controlled machinery, smart production lines, and mobile inspection platforms such as UAVs and unmanned ground vehicles (UGVs). Other recent studies also highlight the importance of edge computing for local low-latency processing [7,8], the use of private 5G networks for security and reliability [9], and the integration of enabling technologies such as AI, blockchain, and virtualization [10,11].

To address these Industry 4.0 use cases, this study focuses on the performance of 5G in asymmetric traffic scenarios involving unmanned vehicles. Many industrial use cases require extremely low latency in the downlink and very high uplink throughput to transmit video streams, mapping data, or sensor information in real time. This is especially important for unmanned vehicles such as UAVs and UGVs used in material handling, inspection, factory automation, and surveillance. These applications often demand latency as low as 10 ms, reliability above 99.9999 percent, and uplink data rates reaching hundreds of megabits per second or more [12]. A high-level representation of such a scenario is illustrated in Figure 1, where a mobile platform equipped with a high-throughput sensor (e.g., LiDAR) communicates with a remote operator or automated control system. While the sensor generates substantial uplink traffic, downlink communication is simultaneously needed for responsive control, often via a human–machine interface such as a VR headset or dashboard.

Figure 1.

Conceptual diagram of the investigated use case: a remotely located operator controls a LiDAR-equipped unmanned ground vehicle (UGV) over a standalone 5G network. This scenario involves asymmetric traffic: high-volume uplink traffic generated by the LiDAR sensor and low-latency downlink traffic used for control.

To meet these challenges, standardization bodies such as 3GPP have proposed reference use cases and detailed performance requirements. However, most published studies focus on simulation environments, which allow for broad experimentation but fail to account for hardware-specific behaviors and real-world radio conditions [13,14,15,16,17,18,19,20,21]. As a result, there is still a clear gap between the theoretical potential and practical performance of 5G networks in industrial deployments.

Our work addresses that gap by jointly evaluating both latency and throughput, which are rarely studied together, using commercial off-the-shelf (COTS) 5G hardware in a real testbed environment.

Our research focused on uplink-intensive applications, such as those involving LiDAR sensors mounted on mobile robots. These sensors generate high-volume uplink traffic, while low-latency downlink is simultaneously required for control signaling. To simulate realistic scenarios, we used configurable attenuation to represent mobility and environmental changes and tested numerous configurations inspired by both the literature and equipment vendor recommendations.

Our goal was to evaluate 5G performance in a realistic industrial scenario involving a LiDAR-equipped ground vehicle, with a focus on achieving both high uplink throughput and low control latency. However, we did not find any publicly available specifications describing LiDAR data traffic for UGVs. What mattered was the service profile itself, not the specific platform type. For this reason, we used UAV-related throughput characteristics, as they include detailed LiDAR traffic profiles. Meanwhile, latency expectations were guided by scenarios involving autonomous ground vehicles. This combination allowed us to realistically reflect the requirements of industrial UGV applications despite the lack of direct standardization references.

This dual-focus approach, combined with the use of deployable COTS infrastructure, allows our findings to be directly applicable in real-world industrial deployments and distinguishes our study from prior simulation-based or single-metric testbed works.

A key contribution of our work is the practical analysis of how selected 5G parameters affect latency and throughput under different conditions. We demonstrate which configurations are capable of supporting demanding applications such as remote drone control via video streaming or LiDAR data transmission. The results are presented in a manner directly applicable to engineers and system designers aiming to deploy wireless connectivity in industrial scenarios.

The remainder of this paper is structured as follows. Section 2 discusses the related literature and standards in more detail. Section 3 outlines our research methodology. Section 4 describes the experimental setup, including hardware and scenarios. Section 5 presents and discusses the measurement results. Finally, Section 6 summarizes the key findings and outlines directions for future research.

2. Related Works

The topic of ensuring high-throughput and low-latency wireless communication, particularly in industrial and mission-critical contexts, has gained increasing attention in both academic and commercial research. Numerous studies have aimed to address these challenges by proposing novel solutions at various layers of the communication stack. However, the landscape remains fragmented, with varying approaches, technologies, and performance assumptions depending on the use case. Therefore, in this section, we aim to organize and contextualize key aspects of the problem. We begin by reviewing relevant use cases and their communication requirements, followed by an overview of related work and promising technical directions. This structured analysis provides the necessary foundation and motivation for our proposed research approach.

Across many fields, we identified numerous use cases that clearly warranted deeper investigation. Since addressing all of them simultaneously was not feasible, we focused on a subset that stood out, particularly those involving industrial drones equipped with components that generate high uplink traffic. For instance, a camera mounted on a remotely operated drone may require continuous 4K streaming, resulting in high-throughput uplink transmission, while control commands require low-throughput but ultra-low-latency communication. This simple setup illustrates the dual nature of such traffic demands.

2.1. Requirements

As a starting point, we established performance requirements based on standardized industrial UAV use cases. Here, the 3GPP TR 22.829 standard [22] proved helpful. It centers on 3GPP’s support for a diverse range of applications and scenarios utilizing low-altitude UAVs in various commercial and government sectors. It addresses service requirements and KPIs associated with command and control (C2), payload functions (e.g., camera), and the operation of on-board radio access nodes. Table 1 presents several specific use cases from the standard. All of them demonstrate the need for higher uplink traffic alongside downlink control traffic with appropriately low throughput.

Table 1.

UAV use case (based on 3GPP TR 22.829 [22]).

Particularly relevant to the context of our research was the S2 use case, which describes laser mapping, as the LiDAR sensor was our central interest. The standard itself is frequently cited in the context of UAV applications across different scenarios. For example, ref. [23] explored how reconfigurable smart surfaces (RSS) can be integrated into aerial platforms to enhance 5G and beyond (B5G) connectivity by intelligently reflecting signals. This study outlined RSS technology, proposed a control architecture, discussed potential use cases, and identified research challenges in this emerging field.

In [24], the authors examined the use of UAV-based communication at millimeter-wave frequencies for on-demand radio access in B5G systems, focusing on dynamic UAV positioning to match fluctuating user traffic and realistic backhaul constraints. The study reviewed recent 3GPP activities, compared static and mobile UAV communication options, and demonstrated that leveraging UAV mobility can enhance overall system performance despite backhaul limitations.

Another example is presented in [25], where the authors studied how terahertz (THz) bands can empower UAVs with ultra-high throughput, low latency, and precise sensing to meet the stringent requirements of the 6G ecosystem. It reviewed potential use cases, design challenges, trade-offs, and regulatory aspects and provided numerical insights through a UAV-to-UAV communication case study.

Bajracharya et al. [26] proposed using wireless infrastructure unmanned aerial vehicles (WI UAVs) equipped with 6G NR in the unlicensed band (NR-U) to create a flexible, flying wireless infrastructure that can serve as a base station, relay, or data collector. The paper discussed how these WI UAVs can overcome the limitations of fixed RANs by adapting to dynamic traffic demands, and it also covered regulatory and standardization aspects, opportunities, and design challenges.

A final interesting example is presented by the authors of [27], who developed an analytical framework to calculate the line-of-sight probability for air-to-ground channels in urban environments. It took into account the operating frequency, transmitter/receiver heights, distance, and urban obstacle characteristics to enable system-level performance evaluation of airborne platform-assisted wireless networks.

Since this study operates within the domain of Industry 4.0, the aforementioned requirements must be expanded. To this end, we referred to the 3GPP TR 22.804 standard [28], which focuses on 5G communication for automation in vertical domains covering production, goods handling, and service delivery. This context typically demands low latency, high reliability, and high availability, although other types of communication can coexist within the same 5G infrastructure. The standard also offers an overview of key communication principles for mapping industrial automation requirements onto 5G systems. Table 2 presents various control scenarios for UGVs (referred to in the standard as Automated Guided Vehicles, AGVs), along with their respective latency constraints. All of them involve UGVs operating in a cyclic interaction model. Requirement P1 describes UGVs controlled by production lines or industrial robots. P2 involves the exchange of information between UGVs to enable cooperative movement. P3 describes a human remotely controlling a UGV using a camera feed. P4 addresses semi-autonomous UGVs where a human operator takes over in exceptional situations using a camera mounted on the vehicle.

Table 2.

UGV use case (based on 3GPP TR 22.804 [28]).

For our study, the P3 case was particularly relevant, as its latency requirements served as a reference point for evaluating our results. Similar research was conducted by Qualcomm, which, in [29], identified robotic motion control as a key example of an application with extreme performance requirements and highlighted how 5G can enhance efficiency, flexibility, and integration with existing and emerging industrial communication standards.

All of this reflects the growing recognition within the industry of the benefits offered by wireless connectivity. For example, throughout 2024, the adoption of such solutions increased by 22%. However, Time-Sensitive Networking (TSN) solutions continue to be dominated by Industrial Ethernet, and the integration of TSN with 5G remains an active area of development [30]. This is evident in works such as [31], where the authors reviewed the integration of 5G and TSN, outlining current 3GPP developments, architectural considerations, and remaining challenges. Similarly, in [32], the authors proposed an AI-driven approach for improved resource management and reliable communication within integrated 5G-TSN networks.

In summary, 3GPP TR 22.829 [22] helped us determine the uplink throughput targets for the LiDAR-equipped vehicle, while 3GPP TR 22.804 [28] provided latency benchmarks for control message delivery in industrial UGV scenarios. Based on this, we derived the requirements for a scenario combining both aspects: specifically, LiDAR data transmission (S2) from an industrial UGV operated manually with the aid of a live video feed (P3).

2.2. Specific Technical Approaches

2.2.1. Simulation-Based Studies

In our research, a key objective was to meet the requirements related to downlink latency while simultaneously achieving sufficiently high throughput. Numerous studies have proposed various strategies to optimize both aspects, with many of them conducted in simulation environments. A common approach to minimizing latency involves modifying the standard resource reservation mechanism—for example, by enabling immediate message transmission at the cost of a higher risk of collision. This technique was explored in [13,14,15,16]. Furthermore, in [14], the authors proposed eliminating queuing to exclude buffering effects on latency and recommended using higher numerologies to shorten the slot duration. In [17], an alternative to traditional network slicing was proposed, where the focus was placed on services and functions rather than resource allocation, specifically in the context of 5G autonomous vehicular networks. In addition to adapting grant-free access, ref. [16] also presented a technique for non-orthogonal resource sharing between URLLC traffic and latency-tolerant services.

To address the second goal, which is ensuring high uplink throughput, several recent studies have proposed innovative physical layer and network management techniques. In [18], the authors introduced a multi-layer non-orthogonal multiple access architecture for 5G NR that enables the simultaneous transmission of several signal layers with reduced transmitter complexity and latency. Their link-level simulations demonstrated a clear throughput gain over traditional orthogonal multiple access schemes, even under imperfect interference cancellation. Other works have focused on intelligent adaptation of modulation and coding. For instance, ref. [19] proposed a machine learning-based adaptive modulation model for MIMO-OFDM systems. Using a deep neural network, the model dynamically adjusts transmission parameters based on channel conditions, leading to significantly better throughput in complex environments when compared to rule-based AMC algorithms. Another notable direction is the use of carrier aggregation and dual connectivity. In [20], the authors developed a reinforcement learning algorithm for energy-aware scheduling of component carriers and uplink power control. This method increased average user throughput while simultaneously minimizing delay and energy consumption, confirming that the combination of CA and DC can be highly effective for uplink-intensive applications. Finally, ref. [21] addressed network-level optimization through deep reinforcement learning-based slicing. The proposed method dynamically allocated frequency resources to different service slices, maximizing overall network throughput while preserving QoS for latency-sensitive applications. This approach outperformed static slicing strategies and demonstrated the benefits of intelligent spectrum sharing in multi-service 5G environments.

2.2.2. Testbed-Based Studies

However, while simulations allow for extensive testing, they cannot easily replicate real-world environmental conditions. Moreover, the required equipment is not always available or capable of supporting the proposed solutions, especially in industrial settings. Therefore, alongside analytical and simulation-based research, work is also being conducted using various testbeds. During our literature review, we found significantly fewer testbed-based studies focusing on latency minimization and throughput maximization. One of them is [33], which investigated the practical challenges of minimizing latency in a controlled experimental environment. The authors analyzed the impact of multiple factors on latency performance, including core network configurations, radio access technologies (RATs), and transport protocols. They also highlighted the importance of parameters such as gain, attenuation, and sampling rate. The test setup in that study consisted of a single-UE (User Equipment) configuration and a 5G core with RAN software (Amarisoft Callbox Classic, version 2023-06-10), hosted on a single PC.

In the context of maximizing throughput, several recent testbed-based works are worth highlighting. In [34], the authors implemented a real-time NOMA transmitter and receiver on SDR hardware compatible with 5G, introducing variable symbol rates for different NOMA streams. Over-the-air experiments showed that this technique achieved significantly higher aggregate throughput than traditional orthogonal multiple access schemes. In another study, ref. [35] described the design and field validation of a full-scale outdoor massive MIMO testbed for 5G, where directional beamforming and multi-user scheduling yielded cell throughputs on the order of several Gbps, confirming the practicality of massive MIMO under real-world conditions. Furthermore, ref. [36] demonstrated how coordinated network slicing, using controlled constructive interference between base stations, can improve overall spectral efficiency. Tested on a live LTE testbed, their solution increased aggregate network throughput by up to 27 percent while simultaneously improving users’ signal-to-noise ratio (SNR).

2.3. gNB Parameters Relevant in Low-Latency, High-Throughput Scenarios

To evaluate and optimize both latency and throughput, we focused on the gNB configuration parameters that are most impactful in industrial 5G scenarios. Our analysis was guided by insights from the literature, as well as suggestions provided directly by the base station manufacturer through their baseline configuration sets.

The channel bandwidth was selected as a key parameter, as doubling it nearly doubles throughput [33]. Subcarrier spacing and numerologies were also included; higher numerologies reduce latency due to a shorter symbol duration [14,15,37,38]. The impact of the transmitter-to-base-station distance on latency, shown in [38], was approximated in our setup using programmable attenuation.
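The numerology and bandwidth effects described above follow from standard NR arithmetic. The sketch below, which is illustrative and not part of the authors' toolchain, computes the subcarrier spacing and slot duration for numerology μ, and an approximate single-carrier peak rate following the structure of the 3GPP TS 38.306 formula. It assumes 30 kHz SCS (μ = 1), which the text does not state, takes the resource-block counts for FR1 from 3GPP TS 38.101-1, and treats the TDD uplink share (`ul_fraction`) as a hypothetical input.

```python
"""Illustrative NR numerology and peak-rate arithmetic (not the authors' code)."""

# Maximum number of resource blocks for FR1 at 30 kHz SCS, keyed by channel
# bandwidth in MHz (3GPP TS 38.101-1). 30 kHz SCS is an assumption here.
N_RB_30KHZ = {20: 51, 40: 106, 60: 162}


def subcarrier_spacing_khz(mu: int) -> int:
    """NR subcarrier spacing scales as 15 kHz * 2^mu."""
    return 15 * 2 ** mu


def slot_duration_ms(mu: int) -> float:
    """A 1 ms subframe holds 2^mu slots, so a higher mu means shorter slots."""
    return 1.0 / 2 ** mu


def approx_peak_rate_mbps(n_rb: int, mu: int, layers: int = 1, qm: int = 8,
                          scaling: float = 1.0, overhead: float = 0.08,
                          ul_fraction: float = 1.0) -> float:
    """Approximate peak rate in Mbit/s for one carrier.

    Follows layers * Qm * f * Rmax * (N_RB * 12) / Ts * (1 - OH) from
    TS 38.306; overhead=0.08 is the FR1 uplink value. ul_fraction is a
    hypothetical share of TDD time resources allocated to the uplink.
    """
    r_max = 948 / 1024
    ts = 1e-3 / (14 * 2 ** mu)  # average OFDM symbol duration in seconds
    rate_bps = layers * qm * scaling * r_max * (n_rb * 12) / ts * (1 - overhead)
    return rate_bps * ul_fraction / 1e6


if __name__ == "__main__":
    for bw, n_rb in N_RB_30KHZ.items():
        print(bw, "MHz:", round(approx_peak_rate_mbps(n_rb, mu=1), 1), "Mbit/s")
```

Going from 20 to 40 MHz raises the resource-block count from 51 to 106, slightly more than double, which is consistent with the near-doubling reported in [33]. Measured uplink throughput will be well below these figures because of the TDD pattern, the selected MCS, and control overhead.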

Amarisoft documentation [39] provided several baseline configurations targeting either low latency or high throughput. Each configuration included a recommended set of tuning parameters selected by the manufacturer as the most influential. We therefore prioritized parameters such as the uplink/downlink symbol allocation, PRACH configuration, defined vs. automatic CSI-RS, Scheduling Request period [40], RX to TX latency, TRS, SSB, and the number of PDCCH candidates in the UE-specific search space (USS) [41], as these were directly derived from those vendor-provided baselines. The importance of these parameters is supported by recent studies. For example, reducing the SR Period directly minimizes uplink latency, which is crucial for URLLC applications [38]. Increasing the number of USS candidates enhances scheduling flexibility and control channel robustness. This supports higher throughput and reliability in dynamic 5G scenarios [42].
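To see why a shorter SR period reduces uplink latency, consider a deliberately simplified model: uplink data arrives at a random instant, the UE waits for its next SR occasion, and the gNB then needs a fixed number of slots to issue a grant and receive the PUSCH. The sketch below assumes uniformly distributed arrivals and hypothetical fixed delays of one slot for SR-to-grant and one slot for grant-to-PUSCH (K2). It ignores TDD pattern constraints, processing times, and retransmissions, so it only illustrates the trend.

```python
from typing import Tuple


def sr_access_delay_ms(sr_period_slots: int, mu: int,
                       sr_to_grant_slots: int = 1,
                       k2_slots: int = 1) -> Tuple[float, float]:
    """Return (mean, worst-case) delay in ms from UL data arrival to PUSCH.

    Simplified model: the wait for the next SR occasion is uniform over one
    SR period; the grant and PUSCH steps add a fixed, hypothetical number of
    slots. TDD restrictions and HARQ retransmissions are ignored.
    """
    slot_ms = 1.0 / 2 ** mu  # slot duration for numerology mu
    fixed_ms = (sr_to_grant_slots + k2_slots) * slot_ms
    mean_ms = sr_period_slots * slot_ms / 2 + fixed_ms
    worst_ms = sr_period_slots * slot_ms + fixed_ms
    return mean_ms, worst_ms


if __name__ == "__main__":
    for period in (1, 10, 20):
        mean_ms, worst_ms = sr_access_delay_ms(period, mu=1)
        print(f"SR period {period:>2} slots: mean {mean_ms:.2f} ms, worst {worst_ms:.2f} ms")
```

With μ = 1, shortening the SR period from 10 slots to 1 slot lowers the modeled worst case from 6 ms to 1.5 ms; real measurements will differ because of the effects the model leaves out.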

This set of parameters served as the foundation for our experimental campaign, which was conducted in the LAB PL5G testbed environment [43]. We evaluated both the original baseline configurations and our own parameter modifications, which were motivated by the preceding literature review and the vendor’s technical documentation, in order to assess their effectiveness and identify the most influential tuning options in practical deployment scenarios.

3. Research Methodology

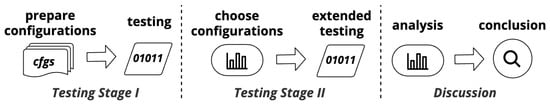

This section presents all stages of the research conducted to obtain reliable results, on which the final conclusions are based. The entire research process is illustrated in Figure 2.

Figure 2.

Overview of the research methodology used in this study, including LiDAR traffic analysis, configuration preparation, two-stage testing, and final evaluation.

This structured, multi-stage approach ensures methodological rigor and relevance. The initial broad testing (Stage I) enables the identification of promising configurations across diverse conditions. By narrowing the focus in Stage II, we validate these selections under more realistic and demanding scenarios, allowing us to observe nuanced system behavior. Finally, the evaluation phase synthesizes these insights to draw reliable, application-driven conclusions. Each step builds upon the previous, ensuring that the final results are both robust and practically meaningful.

3.1. LiDAR Traffic Verification

To ensure the 5G uplink system was tested under realistic and representative traffic conditions, we began by analyzing the actual data output characteristics of the LiDAR sensor. Understanding the volume and variability of traffic generated by different LiDAR configurations was essential for selecting appropriate parameters and validating whether specific base station configurations could meet the performance requirements of industrial use cases.

To this end, we calculated the average throughput of the data stream under various sensor configurations. Each 10-min measurement was divided into 1-min intervals, from which ten average values were obtained and subsequently averaged again. The standard deviation was computed to assess the temporal variability of the generated traffic.
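The averaging procedure can be expressed compactly. The sketch below assumes the capture has already been reduced to per-second byte counts for a 10-min run (the text does not say how traffic was recorded), splits them into ten 1-min windows, and reports the mean of the window averages together with their sample standard deviation; whether the sample or population form was used is not stated in the text.

```python
import statistics
from typing import List, Sequence, Tuple


def window_means_mbps(bytes_per_second: Sequence[int], window_s: int = 60) -> List[float]:
    """Average rate in Mbit/s for each consecutive window of window_s seconds."""
    if len(bytes_per_second) % window_s != 0:
        raise ValueError("trace length must be a whole number of windows")
    means = []
    for start in range(0, len(bytes_per_second), window_s):
        window = bytes_per_second[start:start + window_s]
        means.append(sum(window) * 8 / window_s / 1e6)
    return means


def summarize_trace(bytes_per_second: Sequence[int], window_s: int = 60) -> Tuple[float, float]:
    """Mean of the window averages and their sample standard deviation."""
    means = window_means_mbps(bytes_per_second, window_s)
    return statistics.mean(means), statistics.stdev(means)
```

For a 600-entry trace, `summarize_trace(trace)` yields the averaged throughput and a variability figure comparable across sensor configurations.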

We tested all possible combinations of the following parameter values:

- Profile: Low Data Rate, Single Return, and Dual Return;

- View angle (°): 90, 180, 270, and 360;

- Resolution and frames per second: 512 × 10, 1024 × 10, 2048 × 10, 512 × 20, and 1024 × 20.
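The full test matrix is the Cartesian product of these lists, giving 3 × 4 × 5 = 60 sensor configurations. The sketch below enumerates it and adds a rough pixels-per-second estimate. It assumes the 128 channels of the OS-0-128 and that restricting the view angle scales the number of transmitted columns proportionally, which approximates but may not exactly match the sensor's behavior. The profile mainly changes how much data is carried per pixel, so it is left out of the estimate.

```python
from itertools import product
from typing import List

PROFILES = ["Low Data Rate", "Single Return", "Dual Return"]
VIEW_ANGLES_DEG = [90, 180, 270, 360]
MODES = [(512, 10), (1024, 10), (2048, 10), (512, 20), (1024, 20)]  # columns x fps
CHANNELS = 128  # OS-0-128: 128 vertical channels


def build_test_matrix() -> List[dict]:
    """All combinations of profile, view angle, and resolution/frame rate."""
    return [
        {"profile": profile, "view_angle": angle, "columns": cols, "fps": fps}
        for profile, angle, (cols, fps) in product(PROFILES, VIEW_ANGLES_DEG, MODES)
    ]


def pixels_per_second(cfg: dict) -> float:
    """Rough estimate: channels x columns x fps, scaled by the view angle (an assumption)."""
    return CHANNELS * cfg["columns"] * cfg["fps"] * cfg["view_angle"] / 360
```

Note that 2048 × 10 and 1024 × 20 produce the same pixel rate, so differences between them in measured throughput would come from packetization rather than point count.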

The Ouster OS0 Ultra-Wide View High-Resolution Imaging LiDAR sensor used in our setup supports several configurable data output profiles that allow users to balance between data richness and bandwidth or processing constraints [44]. These profiles include the Single Return, Low Data Rate, and Dual Return modes. The default Single Return profile (RNG19_RFL8_SIG16_NIR16) provides high-resolution measurements, including 19-bit range, 8-bit reflectivity, 16-bit signal intensity, and 16-bit near-infrared (NIR) data. This mode is ideal for applications that require complete measurement fidelity. For scenarios with limited bandwidth or processing capacity, the Low Data Rate profile (RNG15_RFL8_NIR8) reduces each pixel’s data to a single word, containing scaled 15-bit range, 8-bit reflectivity, and 8-bit NIR, which makes it suitable for lightweight systems. The Dual Return profile (RNG19_RFL8_SIG16_NIR16_DUAL) enables the capture of up to two returns per laser pulse, which is useful for detecting partially transparent or multi-layered surfaces. In this mode, the sensor outputs both the strongest and second strongest returns, each with full data fields. These profiles provide flexibility for adapting the LiDAR output to different industrial tasks, such as perception, mapping, and localization [45].
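The profile names encode the bit width of each field, which makes the per-pixel payload easy to check. The sketch below parses a name such as `RNG15_RFL8_NIR8` into its fields; summing the Low Data Rate widths gives 31 bits, consistent with each pixel fitting into a single word if a 32-bit word is assumed. It reports raw field widths only: padding in the actual packet layout is not modeled, and non-numeric suffixes such as `DUAL` are returned as flags rather than interpreted.

```python
import re
from typing import Dict, List, Tuple

FIELD = re.compile(r"([A-Z]+)(\d+)")


def parse_profile(name: str) -> Tuple[Dict[str, int], List[str]]:
    """Split a profile name into {field: bits} plus any non-numeric flag tokens."""
    widths: Dict[str, int] = {}
    flags: List[str] = []
    for token in name.split("_"):
        match = FIELD.fullmatch(token)
        if match:
            widths[match.group(1)] = int(match.group(2))
        else:
            flags.append(token)
    return widths, flags


if __name__ == "__main__":
    for profile in ("RNG19_RFL8_SIG16_NIR16", "RNG15_RFL8_NIR8", "RNG19_RFL8_SIG16_NIR16_DUAL"):
        widths, flags = parse_profile(profile)
        print(profile, sum(widths.values()), "bits", flags)
```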

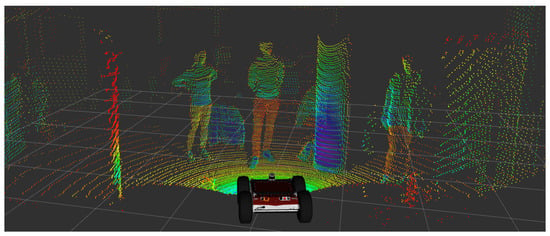

The view angle refers to the area covered by the sensor’s scan. The direction the LiDAR was pointing was not important for measuring throughput. As for resolution and frames per second, all available values were included. Figure 3 demonstrates the typical output of the LiDAR sensor used in the test setup, showing the point cloud produced during operation.

Figure 3.

Visualization of raw LiDAR sensor data. The image shows a point cloud representation of the surrounding environment generated by the sensor’s laser scanning mechanism.

3.2. Testing Stage I

From the configurations provided by the 5G core manufacturer, we selected those specifically recommended for either low-latency or high-throughput performance, as documented in the Amarisoft technical guidelines [39]; we refer to these as baseline configurations. They served as starting points reflecting realistic default setups used in industrial deployments. We then prepared several other gNB configurations. Each was a baseline configuration with one or two parameters changed, selected after careful evaluation of both related work and the technical documentation provided by the core network equipment manufacturer. This kind of modification allowed us to isolate the effects of specific changes and better understand their contribution to end-to-end performance.
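Deriving variants by changing one or two parameters at a time can be kept systematic with a small helper. The sketch below treats a configuration as a flat dictionary with hypothetical parameter names (they are not Amarisoft configuration keys), copies the baseline, applies the overrides, and uses `None` to mean that a parameter is omitted, mirroring the case in which a parameter such as RX to TX latency is left out. A diff function then confirms that each variant differs from its baseline only where intended.

```python
from copy import deepcopy
from typing import Any, Dict, Tuple

Config = Dict[str, Any]


def derive(baseline: Config, **changes: Any) -> Config:
    """Copy a baseline and override one or two parameters; None removes a parameter."""
    if not 1 <= len(changes) <= 2:
        raise ValueError("a derived configuration changes one or two parameters")
    cfg = deepcopy(baseline)
    for key, value in changes.items():
        if value is None:
            cfg.pop(key, None)  # parameter omitted from the configuration
        else:
            cfg[key] = value
    return cfg


def diff(a: Config, b: Config) -> Dict[str, Tuple[Any, Any]]:
    """Parameters whose values differ between two configurations (None = absent)."""
    return {k: (a.get(k), b.get(k)) for k in sorted(set(a) | set(b)) if a.get(k) != b.get(k)}


# Hypothetical baseline values, for illustration only.
low_latency = {"bandwidth_mhz": 20, "rx_to_tx_latency": 2, "sr_period": 1}
wide = derive(low_latency, bandwidth_mhz=60)
no_rx_tx = derive(low_latency, rx_to_tx_latency=None)
```

Copying the baseline rather than mutating it keeps every variant traceable to exactly one or two changes.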

We then conducted two sets of tests, the detailed methodology of which is described in Section 4.2. The first set measured the Round Trip Time (RTT) latency for each prepared configuration. The second used the most demanding sensor configuration to determine the maximum achievable throughput of each configuration.

3.3. Testing Stage II

After analyzing the results from the first stage, we selected six standout configurations for more extensive testing. These tests primarily focused on measuring latency under simultaneous uplink load generated by the LiDAR. This time, however, two sensor configurations were used: one whose entire traffic could be transmitted without loss across all base station configurations, and another whose traffic could only be transmitted without loss by selected configurations. The tests also considered mobility aspects by introducing various levels of additional attenuation, which affected the SNR and consequently influenced the automatic selection of the Modulation and Coding Scheme (MCS). Additionally, different packet sizes were evaluated with respect to their latency.

3.4. Discussion

4. Experimental Setup

4.1. Hardware and Topology

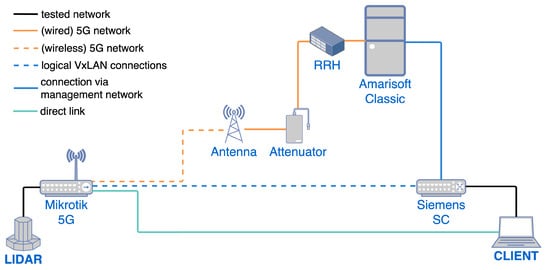

As the 5G core, we used the Amarisoft Callbox Classic base station [46], with a license allowing up to 60 MHz of bandwidth. It is a cost-effective, all-in-one solution (an integrated 5G network core with a gNB), which we selected from among various available options, including carrier-grade telco cores and gNBs, as the one best suited for implementing private 5G networks. Similar testbeds were used in other works, e.g., [47,48,49]. The programmable attenuator was a Vaunix LDA-802Q Lab Brick [50], which enabled us to introduce attenuation in a controlled manner, simulating different radio-link conditions and allowing us to analyze their impact on network performance. The antenna was an Alpha Wireless AW3941-T0-F with a gain of 8.5 dBi and an azimuth beamwidth of 360°. The Remote Radio Head (RRH) was an AW2S Panther, offering a power output of 33 dBm per chain.
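The radio figures above combine by simple decibel arithmetic. Assuming the RRH output of 33 is an absolute power in dBm, it corresponds to about 2 W per chain, and adding the 8.5 dBi antenna gain gives roughly 41.5 dBm EIRP per chain before cable and connector losses, which are not given in the text. To first order, with the receiver noise floor unchanged, every decibel set on the attenuator removes one decibel of SNR. The helpers below capture that reasoning and are illustrative only.

```python
import math


def dbm_to_watts(dbm: float) -> float:
    """Convert absolute power from dBm to watts."""
    return 10 ** ((dbm - 30) / 10)


def watts_to_dbm(watts: float) -> float:
    """Convert absolute power from watts to dBm."""
    return 10 * math.log10(watts) + 30


def eirp_dbm(tx_dbm: float, antenna_gain_dbi: float, losses_db: float = 0.0) -> float:
    """EIRP = transmit power + antenna gain - feeder losses (all in dB units)."""
    return tx_dbm + antenna_gain_dbi - losses_db


def snr_with_attenuation(snr_db: float, attenuation_db: float) -> float:
    """First-order model: added path attenuation lowers SNR dB for dB
    when the receiver noise floor does not change."""
    return snr_db - attenuation_db
```

The lower SNR in turn drives the automatic MCS selection, which is why attenuation serves as a proxy for mobility in the later tests.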

On the sensor side, the 5G router was a MikroTik Chateau 5G ax [51]. On the opposite end, we used a Siemens SCALANCE SC646-2C. Both devices functioned as VxLAN Tunnel Endpoints (VTEPs). The LiDAR sensor was an Ouster OS-0-128, which allows configuration of the field of view, data resolution, and packet format. The sensor was mounted on an industrial unmanned ground vehicle (UGV), the Husarion Panther, which also served as its power source.

The endpoint device was a computer equipped with an Intel Core i7-11800H processor and 32 GB of RAM, running Ubuntu 20.04.6 LTS. This setup enabled a comprehensive evaluation of latency, throughput, logical tunneling mechanisms (VxLAN), and the effects of signal degradation in a controlled 5G industrial communication environment.

The experimental setup is shown in Figure 4. The LiDAR sensor mounted on the UGV was directly connected to a 5G router that encapsulated the traffic using the Virtual Extensible LAN (VxLAN) protocol and sent it to the 5G core. The radio link between the UE and the base station included a programmable attenuator. The core forwarded the traffic to the industrial switch, which decapsulated the VxLAN traffic, a commonly used approach to integrating 5G systems with Industry 4.0 equipment [52]. The data were then delivered to the client, which simultaneously generated traffic simulating control messages for the UGV. Additionally, a direct link was established to the MikroTik 5G router, and downlink traffic was mirrored to this link to facilitate the analysis of end-to-end latency between the client and the UE.
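Because the LiDAR stream is carried inside VxLAN, each encapsulated Ethernet frame grows by a fixed header stack on the 5G link. The sketch below builds and parses the 8-byte VXLAN header defined in RFC 7348 (a flags byte with the I bit set and a 24-bit VNI) and computes the size of the outer packet. It assumes an IPv4 outer header without options and no outer link-layer header on the radio segment (an IPv6 outer header would add 20 more bytes); the VNI values used are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789  # IANA-assigned VXLAN destination port (RFC 7348)
IPV4_HEADER = 20       # bytes, no options
UDP_HEADER = 8
VXLAN_HEADER = 8


def vxlan_header(vni: int) -> bytes:
    """Flags byte (I bit 0x08), 3 reserved bytes, 24-bit VNI, 1 reserved byte."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    return struct.pack("!B3xI", 0x08, vni << 8)


def parse_vni(header: bytes) -> int:
    """Extract the VNI, checking that the I flag marks it as valid."""
    flags, word = struct.unpack("!B3xI", header[:VXLAN_HEADER])
    if not flags & 0x08:
        raise ValueError("VNI flag not set")
    return word >> 8


def outer_packet_size(inner_frame_bytes: int) -> int:
    """Outer IPv4 packet carrying one inner Ethernet frame over VXLAN."""
    return IPV4_HEADER + UDP_HEADER + VXLAN_HEADER + inner_frame_bytes
```

The fixed 36-byte addition matters most for small packets such as control messages, and it also reduces the MTU available to the inner frames.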

Figure 4.

Diagram of the test setup.

Regarding the physical media and underlying technologies connecting the individual components, the 5G core used the eCPRI (evolved Common Public Radio Interface) protocol to connect to the Radio Unit (RU) via a fiber optic cable. The RU was connected to the Remote Radio Head (RRH), with the programmable attenuator placed between them. The RRH communicated wirelessly with the MikroTik 5G device, which was connected to the LiDAR sensor via an Ethernet cable.

4.2. Scenarios

To automate the execution of the experiment, we developed a Python 3.8.10 script that remotely configured both the base station and the attenuator with the appropriate settings. The script used the paramiko SSH module to connect to each testbed component, ensuring secure and reliable communication. After applying the desired configuration (e.g., adjusting gNB parameters and setting attenuation levels), the script verified whether the changes had been correctly applied. Depending on the testing phase, latency, throughput, or both were measured.
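The apply-then-verify pattern described above can be sketched as follows with paramiko's `SSHClient`. The commands and expected strings for the gNB and attenuator are hypothetical placeholders, because the text does not list the vendor CLI. `Step`, `ssh_runner`, and `apply_and_verify` are illustrative names and do not come from the authors' script. The SSH transport is injected as a plain function, so the verification logic can be exercised without a live testbed.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    host: str        # key into the runner table, e.g. "gnb" or "attenuator" (hypothetical)
    apply_cmd: str   # hypothetical command that applies one setting
    verify_cmd: str  # hypothetical command that prints the active value
    expected: str    # substring that must appear in the verification output


def ssh_runner(host: str, user: str, password: str) -> Callable[[str], str]:
    """Return a function that runs one command over SSH and returns its stdout."""
    import paramiko  # imported lazily so the logic below can be tested without it

    def run(cmd: str) -> str:
        client = paramiko.SSHClient()
        # Convenient in an isolated lab; pin known host keys in production.
        client.set_missing_host_key_policy(paramiko.AutoAddPolicy())
        client.connect(host, username=user, password=password)
        try:
            _stdin, stdout, _stderr = client.exec_command(cmd)
            return stdout.read().decode()
        finally:
            client.close()

    return run


def apply_and_verify(steps: List[Step], runners: Dict[str, Callable[[str], str]]) -> List[str]:
    """Apply each setting, read it back, and return the hosts whose check failed."""
    failed = []
    for step in steps:
        run = runners[step.host]
        run(step.apply_cmd)
        if step.expected not in run(step.verify_cmd):
            failed.append(step.host)
    return failed
```

Returning the list of failed hosts, rather than raising an exception on the first failure, lets a measurement loop skip or retry a configuration without aborting the whole campaign.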

To assess latency, the script adjusted the ICMP packet size and started monitoring the traffic exchange between the LiDAR client and the remote controller. Once a stable request–response pattern was detected, indicating a successful and valid test run, a brief warm-up period was triggered. During this time, ping ran continuously to eliminate transient fluctuations that typically occur immediately after connection establishment. This helped to ensure that the collected results were accurate and not distorted by initial anomalies.
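A minimal sketch of the ping-based RTT measurement follows. It assumes Linux `iputils` ping, where `-s` sets the ICMP payload size in bytes and `-c` sets the number of echo requests. The warm-up is simplified to discarding a fixed number of initial replies. The paper describes a time-based warm-up that starts once a stable request–response pattern is observed, so the `warmup` count is an assumption.

```python
import re
import statistics
import subprocess
from typing import List, Tuple

# Matches the per-reply field printed by iputils ping, e.g. "time=29.8 ms".
RTT_RE = re.compile(r"time[=<]([\d.]+) ms")


def ping_command(host: str, payload_bytes: int, count: int) -> List[str]:
    """Build an iputils ping invocation with a given ICMP payload size."""
    return ["ping", "-s", str(payload_bytes), "-c", str(count), host]


def run_ping(host: str, payload_bytes: int, count: int) -> str:
    """Run ping and return its raw stdout (non-zero exit codes are tolerated)."""
    cmd = ping_command(host, payload_bytes, count)
    return subprocess.run(cmd, capture_output=True, text=True, check=False).stdout


def parse_rtts(ping_output: str) -> List[float]:
    """Extract per-reply RTT values in milliseconds."""
    return [float(m.group(1)) for m in RTT_RE.finditer(ping_output)]


def summarize(rtts: List[float], warmup: int) -> Tuple[float, float]:
    """Drop the first `warmup` replies, then return (mean, sample SD) in ms."""
    kept = rtts[warmup:]
    if len(kept) < 2:
        raise ValueError("not enough samples after warm-up")
    return statistics.mean(kept), statistics.stdev(kept)
```

Discarding the first replies removes the inflated RTTs that typically appear while the UE is still being granted resources after connection establishment.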

As mentioned previously, we first ran multiple configurations prepared in advance, based on those proposed by the base station manufacturer and extended with additional parameters. The analysis and results are presented in Section 5.

There were four baseline configurations: one focused on minimizing latency, while the other three aimed at maximizing throughput. Their modifications involved the parameters discussed in Section 2.3. In total, 24 configurations were tested: 4 baseline and 20 derived. Not all parameters were tested with each baseline configuration. The main parameters that were modified include the following:

- Bandwidth: 20 and 60 MHz;

- RX to TX latency (parameter that defines the minimum allowed latency in slots between RX and TX): 1, 2, 4, and without this parameter;

- Scheduling Request Period (the interval between the opportunities in which the User Equipment (UE) can request an uplink (UL) grant): we used the value of 1;

- PRACH (Physical Random Access Channel, which carries the random access preamble from the UE to the next-generation Node B (gNB)): 128 and 160;

- Disabling TRS (Tracking Reference Signal);

- Number of PDCCH candidates in USS (possible scheduling opportunities for the UE on the control channel): we used 8 for Aggregation Level 2 (AL2).
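Deriving variants from a baseline by overriding only the parameters listed above can be sketched with an immutable dataclass. The field names and default values below are hypothetical and do not reflect the gNB vendor's configuration schema. The only value taken from the text is the default of 4 AL2 candidates, which Section 5.4 states was raised to 8.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class GnbConfig:
    """Hypothetical subset of gNB parameters varied in Stage I."""
    name: str
    bandwidth_mhz: int = 60
    rx_to_tx_latency: Optional[int] = None  # None = parameter not set
    sr_period: Optional[int] = None         # None = vendor default
    prach: Optional[int] = None             # None = vendor default
    trs_enabled: bool = True
    uss_al2_candidates: int = 4             # raised to 8 in the tuned variants


def derive(base: GnbConfig, **changes) -> GnbConfig:
    """Copy a baseline and override only the listed parameters."""
    suffix = ", ".join(f"{key}={value}" for key, value in changes.items())
    return replace(base, name=f"{base.name} ({suffix})", **changes)
```

For example, `derive(baseline, sr_period=1, uss_al2_candidates=8)` yields a new configuration and leaves the baseline untouched. This keeps the origin of every derived configuration traceable in the results.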

In Table 3, we specified the parameter values used at both stages. As mentioned in Section 3, Stage I aimed to identify which configurations offer the greatest potential for minimizing latency and maximizing throughput. Due to the time-consuming nature of the tests, we limited the number of parameter values examined, as presented below. In Stage II, we thoroughly examined the capabilities of the selected configurations under varying environmental conditions and with different packet sizes.

Table 3.

The specific parameter values used in each stage are presented.

5. Results and Discussion

This section presents the results of our experimental evaluation and provides an in-depth discussion of the observed behaviors. We begin by verifying the stability of different LiDAR configurations, followed by a two-stage performance analysis of selected 5G base station setups under varying traffic and radio conditions. The final part of the section discusses key insights and implications for practical deployment.

5.1. LiDAR Traffic Verification

Table 4 presents the results we obtained while evaluating the actual throughput required by the sensor in specific profiles. In the configuration including Dual Return, 90-degree field of view, 512 resolution, and 10 frames per second, which represents the worst case, the coefficient of throughput variation (CoTV) equaled . According to [53], CoTV values below 1% typically indicate highly stable throughput conditions. Therefore, no further measurements were necessary.
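The coefficient of throughput variation is the standard coefficient of variation: the standard deviation divided by the mean, expressed as a percentage. The sketch below applies the 1% stability threshold cited above. The text does not state whether the sample or population standard deviation was used, so the choice of the sample standard deviation here is an assumption.

```python
import statistics
from typing import Sequence


def cotv_percent(samples: Sequence[float]) -> float:
    """Coefficient of throughput variation: sample SD / mean, in percent."""
    mean = statistics.mean(samples)
    return 100.0 * statistics.stdev(samples) / mean


def is_stable(samples: Sequence[float], threshold_percent: float = 1.0) -> bool:
    """Treat a throughput series as stable when its CoTV is below the threshold."""
    return cotv_percent(samples) < threshold_percent
```

With two hypothetical samples of 99 and 101 Mibps, the CoTV is about 1.41%, which would not count as stable. Samples of 99.5, 100 and 100.5 Mibps give 0.5%, which would.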

Table 4.

Average throughput and standard deviation (SD) for selected LiDAR configurations.

As we can see, the most demanding configurations used the Dual Return profile and a 360-degree field of view at either 2048 × 10 or 1024 × 20 (resolution × frames per second), requiring around 330 Mibps.
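The 2048 × 10 and 1024 × 20 modes land at the same throughput because both produce 20,480 measurement columns per second. The sketch below turns this into a rough linear estimate. It assumes that throughput scales with the fraction of the field of view that is transmitted and with columns per second. It uses the 165 Mibps reference point reported in Section 5.3 (Dual Return, 360°, 1024 × 10). The model ignores fixed per-packet overhead, so it is only an approximation. It predicts 82.5 Mibps for the 180° case, against the roughly 85 Mibps that was measured.

```python
# Reference point reported in Section 5.3: Dual Return, 360 deg FOV, 1024 columns, 10 fps.
REFERENCE_MIBPS = 165.0
REF_FOV_DEG, REF_COLUMNS, REF_FPS = 360.0, 1024, 10


def columns_per_second(columns: int, fps: int) -> int:
    """Number of measurement columns the sensor emits per second."""
    return columns * fps


def estimate_mibps(fov_deg: float, columns: int, fps: int) -> float:
    """Linear estimate: scale the reference by FOV share and by columns per second."""
    fov_share = fov_deg / REF_FOV_DEG
    rate_ratio = columns_per_second(columns, fps) / columns_per_second(REF_COLUMNS, REF_FPS)
    return REFERENCE_MIBPS * fov_share * rate_ratio
```

Both `estimate_mibps(360, 2048, 10)` and `estimate_mibps(360, 1024, 20)` give 330 Mibps, in line with the measurements in Table 4.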

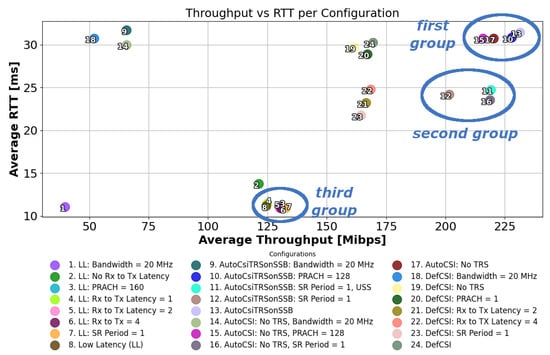

5.2. Stage I

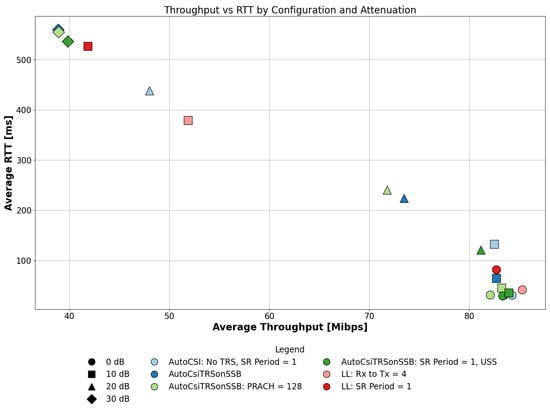

Figure 5 shows the results from Stage I, in which we separately measured RTT latency and throughput for each of the 24 configurations. The latency and throughput values represent the averages of the measured results aggregated across all packet sizes. The legend indicates which parameter was modified for each baseline configuration. We can observe three distinct groups of data points. The first group is characterized by the highest throughput and the highest latency. The second group shows intermediate values of both throughput and latency. The third group exhibits the lowest throughput but, at the same time, the lowest latency.

Figure 5.

Results from separate latency and throughput measurements for each configuration in Stage I.

5.3. Stage II

For further testing, two configurations from each of the three groups described above were selected: 6, 7, 10, 11, 13, and 16. Their detailed results are presented in Table 5. Based on Table 4, we selected two LiDAR configurations. The less demanding one used the Dual Return mode, a 180-degree field of view, 1024 resolution, and 10 frames per second, and required an average throughput of approximately 85 Mibps. The second, more demanding configuration used the same parameters except for a 360-degree field of view, required an average throughput of about 165 Mibps, and met the S2 requirement for laser mapping. As shown in Table 5, all base station configurations were able to transmit traffic generated by the first LiDAR configuration without loss, while the second configuration's traffic exceeded the capabilities of several of them.
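The sensor throughput is reported in Mibps (2^20 bit/s). The 3GPP requirements quoted in this paper, such as the 120 Mbps S2 uplink threshold from Section 5.4, use decimal megabits. The small helper below converts between the two units before the requirements are checked. It shows that 165 Mibps is about 173 Mbps and clears S2, whereas 85 Mibps is about 89 Mbps and does not.

```python
MBPS_PER_MIBPS = 2**20 / 10**6  # 1 Mibit/s = 1,048,576 bit/s = 1.048576 Mbit/s

S2_MIN_MBPS = 120.0  # S2 uplink throughput requirement as stated in Section 5.4


def mibps_to_mbps(mibps: float) -> float:
    """Convert binary megabits per second to decimal megabits per second."""
    return mibps * MBPS_PER_MIBPS


def meets_s2(mibps: float) -> bool:
    """Check a measured Mibps value against the decimal S2 threshold."""
    return mibps_to_mbps(mibps) >= S2_MIN_MBPS
```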

Table 5.

Results of selected base station configurations from Figure 5.

5.3.1. Less Demanding LiDAR Configuration

Figure 6 presents the results of simultaneous latency and throughput measurements using a less demanding LiDAR configuration. For clarity, only average values are shown in the figure; however, the standard deviation (SD) for each measurement is provided in Table 6, which also details the results differentiated by additional attenuation levels. At 30 dB of attenuation, packet loss became so significant that latency reached levels disqualifying the results from any meaningful discussion.

Figure 6.

Results from simultaneous latency and throughput measurements for selected base station configurations with the less demanding LiDAR setup—Stage II.

Table 6.

Results for gNB configurations under the less demanding LiDAR sensor scenario across multiple attenuation levels. Refer to Figure 6 for a graphical summary.

All configurations successfully transmitted the full traffic load under 0 dB of additional attenuation. This is not surprising, considering that each configuration is capable of supporting at least 130 Mibps, as indicated in Table 5. To provide a clearer view of compliance, the final column in Table 6 summarizes which performance targets were met by each configuration based on selected latency and throughput requirements from relevant 3GPP specifications. When a configuration satisfies a given requirement and all less strict ones, it is marked with a + symbol (e.g., P2+ indicates compliance with P2, P3, and P4 thresholds). This form of presentation makes it easier to identify configurations that offer robust performance across different levels of radio degradation, which is particularly useful for those intending to apply such setups in real industrial environments.
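The "+" notation works because the latency classes are nested: any latency that meets a stricter bound also meets every looser one. The sketch below assigns the label using the upper bounds quoted in this paper for P1 (10 ms), P2 (50 ms), and P3 (100 ms). P4 is omitted because its value is given only in Table 2. The function name and the strict "below" comparison are assumptions made for illustration.

```python
from typing import Dict, Optional

# Upper latency bounds (ms) quoted in this paper; P4 is omitted (see Table 2).
LATENCY_CLASSES_MS: Dict[str, float] = {"P1": 10.0, "P2": 50.0, "P3": 100.0}


def latency_label(latency_ms: float,
                  classes: Dict[str, float] = LATENCY_CLASSES_MS) -> Optional[str]:
    """Return the strictest class met, suffixed with '+' (all looser classes are met too)."""
    for name, bound in sorted(classes.items(), key=lambda item: item[1]):
        if latency_ms < bound:
            return name + "+"
    return None  # no listed class is satisfied
```

For example, a 30 ms result maps to "P2+" and a 150 ms result maps to no class. The same pattern applies to the throughput classes (S labels) with the comparison reversed.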

Configurations optimized for high throughput performed significantly better at 0 dB of additional attenuation. All of them exhibited similar levels of throughput and latency, with Configuration 3 achieving slightly better latency results. Nevertheless, all configurations met the P3 and S3 requirements, while only Configuration 6 failed to meet the P2 requirement.

At 10 dB of additional attenuation, the advantage of Configuration 3 becomes much more pronounced. While the throughput remains similar among Configurations 1–4, the latency for Configuration 3 is approximately 10 ms lower compared to its closest counterpart, Configuration 2. It can also be observed that configurations favoring low latency experienced almost a twofold degradation in throughput and a severalfold increase in latency. Here, the impact of packet loss on latency becomes evident. At this point, only Configurations 2 and 3 meet the P2 latency requirement, while Configuration 1 satisfies P3.

To complement the RTT-focused analysis in Table 6, Table 7 presents downlink end-to-end latency values for Configuration 3 across different packet sizes and attenuation levels. Notably, this configuration consistently meets the strictest latency requirement (P1) within the 0–20 dB attenuation range.
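One way to derive downlink end-to-end latency from the mirrored traffic described in the test setup is to match each packet leaving the client with its mirrored copy and subtract the timestamps. The sketch below assumes two things: both timestamps are captured on the client machine, so a single clock is used, and packets can be matched by ICMP identifier and sequence number. Both assumptions and the function name are illustrative and do not describe the authors' tooling. The result also includes the small, roughly constant delay of the wired mirror link.

```python
from typing import Dict, List, Tuple

Key = Tuple[int, int]  # (ICMP identifier, sequence number)


def one_way_latencies_ms(sent: Dict[Key, float], mirrored: Dict[Key, float]) -> List[float]:
    """Downlink latency per packet, in ms, from two captures on the same host.

    `sent` maps each packet key to the time (s) it left the client toward the core;
    `mirrored` maps the same key to the time (s) its mirrored copy came back.
    Packets without a mirrored copy (lost on the radio link) are skipped.
    """
    return [
        (mirrored[key] - t_sent) * 1000.0
        for key, t_sent in sorted(sent.items())
        if key in mirrored
    ]
```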

Table 7.

Downlink end-to-end latency depending on attenuation and packet size, measured using the (3) Auto CSI, TRS, and SSB configuration (SR Period = 1, USS) in the less demanding LiDAR scenario.

It is important to note that the degradation observed in Table 6 was not as severe as the additional attenuation might suggest. This is not unexpected. In 5G, the Uplink Power Control mechanism allows user equipment (UE) to adjust its transmission power based on radio conditions. The primary goal of this feature is energy efficiency: the UE increases its transmission power only when necessary (for example, due to higher attenuation or a greater distance from the base station) and otherwise transmits at lower power levels to conserve battery life. This mechanism is described in more detail in the 3GPP TS 38.213 standard [54].
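The compensation effect can be illustrated with the structure of the PUSCH power control formula in TS 38.213 (clause 7.1.1): P = min{P_CMAX, P_O + 10·log10(2^μ·M_RB) + α·PL + Δ_TF + f}. While the UE is below its maximum power, each additional dB of path loss is offset by α dB of extra transmit power. All numeric defaults below are illustrative assumptions and not parameters of the tested gNB or UE. They include P_O = −90 dBm, α = 1, μ = 1, and 20 resource blocks. P_CMAX = 23 dBm corresponds to power class 3.

```python
import math


def pusch_tx_power_dbm(pathloss_db: float,
                       p_cmax_dbm: float = 23.0,  # power class 3 UE (assumed)
                       p0_dbm: float = -90.0,     # illustrative nominal power
                       alpha: float = 1.0,        # full path-loss compensation
                       mu: int = 1,               # 30 kHz subcarrier spacing
                       n_rb: int = 20,            # allocated resource blocks
                       delta_tf_db: float = 0.0,  # MCS-dependent offset, ignored here
                       f_db: float = 0.0) -> float:  # closed-loop TPC state, ignored here
    """Simplified PUSCH transmit power following TS 38.213, clause 7.1.1."""
    open_loop = (p0_dbm + 10 * math.log10((2 ** mu) * n_rb)
                 + alpha * pathloss_db + delta_tf_db + f_db)
    return min(p_cmax_dbm, open_loop)
```

With these values, raising the path loss from 80 to 90 dB increases the transmit power by exactly 10 dB, so the received uplink level is unchanged. At 100 dB the UE hits the 23 dBm cap, and further attenuation starts to degrade the link. This is consistent with the sharper degradation observed at higher attenuation levels.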

At 20 dB of attenuation, the latency-focused configurations were not included in Table 6, as their latency values were too high, again primarily because of packet loss. Among the remaining configurations, Configuration 3 performed best; however, even Configuration 3 did not meet the P3 latency requirement.

To provide a more detailed view of latency characteristics, we further analyzed the best-performing configuration (Configuration 3, Auto CSI, TRS, SSB, SR Period = 1, USS) by breaking down RTT latency results according to packet size and attenuation. This approach allows us to assess the influence of packet size on average RTT latency and its variability under different radio conditions. Table 8 summarizes these findings.
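A breakdown like Table 8 groups individual RTT samples by packet size and attenuation level and then reports the mean and standard deviation of each group. A minimal standard-library sketch of that aggregation follows. The input tuple layout is an assumption, and the sample standard deviation is used.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def rtt_table(samples: Iterable[Tuple[int, int, float]]) -> Dict[Tuple[int, int], Tuple[float, float]]:
    """Group (packet_size_B, attenuation_dB, rtt_ms) samples; return (mean, SD) per group."""
    groups: Dict[Tuple[int, int], List[float]] = defaultdict(list)
    for size_b, attenuation_db, rtt_ms in samples:
        groups[(size_b, attenuation_db)].append(rtt_ms)
    return {
        key: (statistics.mean(values), statistics.stdev(values) if len(values) > 1 else 0.0)
        for key, values in sorted(groups.items())
    }
```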

Table 8.

Average RTT latency (ms) and standard deviation for Configuration 3, grouped by packet size and attenuation level, in the less demanding LiDAR scenario.

The results in Table 8 show that under low attenuation (0 and 10 dB), the packet size has a negligible effect on average RTT latency, which remains in the 29–32 ms range for all sizes. However, as attenuation increases to 20 dB, both the latency and its variability rise sharply, especially for larger packets. The average RTT latency for the largest packet (1436 B) at 20 dB exceeds 150 ms, with a much higher standard deviation. This indicates that while the configuration is robust under good radio conditions, both packet size and link quality start to significantly impact latency performance as the environment deteriorates. These findings highlight the importance of selecting both configuration and sensor parameters according to the expected channel conditions in practical deployments.

5.3.2. More Demanding LiDAR Configuration

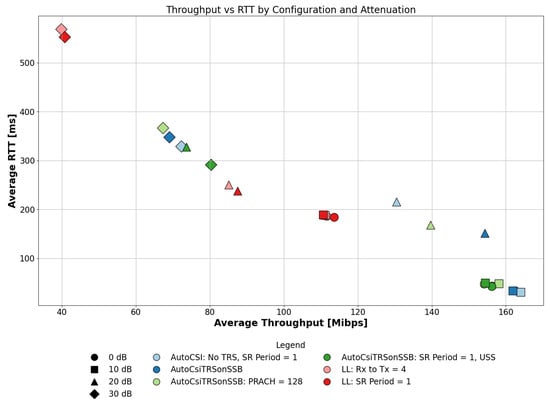

Figure 7 presents the results of simultaneous latency and throughput measurements using the more demanding LiDAR configuration. As in the previous case, only average values are shown in the figure for better readability; the corresponding standard deviations (SD) are provided in Table 9, which also breaks down the results by attenuation level and includes the compliance with latency and throughput requirements, similarly to the previous table.

Figure 7.

Results from simultaneous latency and throughput measurements for selected base station configurations with the more demanding LiDAR setup—Stage II.

Table 9.

Results for configurations optimized for high throughput across different attenuation levels with reference to Figure 7.

Compared with the experiment using the less demanding LiDAR setup, we observe somewhat different behavior here. First, at every level of additional attenuation, the configurations optimized for minimal latency performed significantly worse, which was expected, as the selected LiDAR setup deliberately exceeded their capacity. This behavior stems from packet losses caused by link saturation. Therefore, we focus on the configurations optimized for high throughput.

Table 9 presents the results of the four configurations. At 0 dB of additional attenuation, all of them transmitted all LiDAR-generated traffic without packet loss and maintained latency clearly below 50 ms. This meets not only the P3 requirement from Table 2 for human-operated drone control with a video stream but also the P2 threshold for cooperative driving. It is also worth noting that RTT was measured, whereas most recommendations refer to end-to-end latency. In this scenario, the best performance in terms of throughput and RTT was achieved by Configuration 4 (Auto CSI, TRS, SSB, SR Period = 1, USS), but only in ideal radio conditions without additional attenuation.

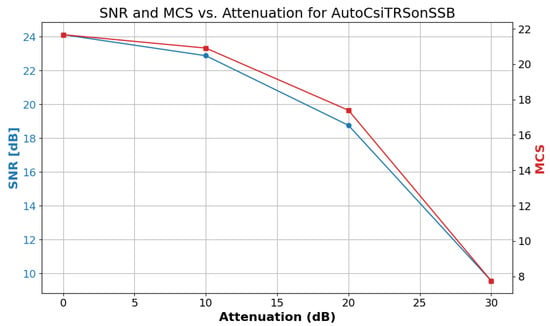

Although a significant performance degradation was expected when the attenuation was increased to 10 dB, Configuration 4 once again delivered the best results, and all configurations remained within the specified latency and throughput recommendations. This could again be a result of Uplink Power Control, described in the previous section. Another important factor is the relationship between signal quality and the Modulation and Coding Scheme (MCS). As attenuation increases, the signal-to-noise ratio (SNR) decreases, which can reduce the achievable MCS and thereby limit throughput or increase latency. Figure 8 illustrates this effect for Configuration 1, which used Auto CSI, TRS, and SSB, and shows a clear non-linear relationship between attenuation and both SNR and MCS under the more demanding LiDAR configuration.
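The Shannon bound C = B·log2(1 + SNR) gives intuition for why the relationship between SNR and achievable rate is non-linear. It is an idealized single-stream upper bound and not the gNB's actual MCS selection, which follows vendor-specific link adaptation based on CQI reports. At high SNR, each additional 3 dB adds roughly 1 bit/s/Hz. At low SNR, capacity falls almost in proportion to the linear SNR, so the same dB loss costs relatively more.

```python
import math


def shannon_capacity_mbps(bandwidth_mhz: float, snr_db: float) -> float:
    """Shannon upper bound C = B * log2(1 + SNR) for one spatial stream, in Mbit/s."""
    snr_linear = 10 ** (snr_db / 10)
    return bandwidth_mhz * math.log2(1 + snr_linear)
```

For a 60 MHz carrier, the bound is 60 Mbit/s at 0 dB SNR and about 598 Mbit/s at 30 dB. Real throughput is further reduced by the TDD uplink share, control overhead, and the discrete MCS table.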

Figure 8.

The impact of attenuation on SNR and MCS for Configuration 1 (Auto CSI, TRS, and SSB) from Stage II under the more demanding LiDAR configuration.

With 20 dB attenuation, the situation changes significantly. At this point, configurations optimized for minimum latency, such as Configuration 4, experience a pronounced drop in throughput and a substantial increase in RTT and its variability. Configuration 3 is affected even more severely, with a notable reduction in throughput and a threefold increase in RTT. In contrast, Configurations 1 and 2 maintain much more stable performance, with only moderate increases in RTT and relatively high throughput preserved. This highlights the greater resilience of Configuration 1 under worsening radio conditions.

When the attenuation reaches 30 dB, Figure 7 clearly shows that none of the tested configurations are able to maintain the required performance. This illustrates the practical boundary for reliable transmission in this setup.

Based on these results, Configuration 1 emerges as the most robust and stable across all considered test conditions. As shown in Table 10, it maintains consistently low end-to-end latency values for all packet sizes, even as the attenuation increases to 10 and 20 dB. Importantly, the latency always remains well within the strict requirements for all defined use cases, including the most demanding scenario, P1. Notably, P1 describes use cases where unmanned ground vehicles (UGVs) are controlled by production lines or industrial robots, highlighting the suitability of Configuration 1 for highly demanding, latency-sensitive industrial automation scenarios, even under challenging radio conditions.

Table 10.

Downlink end-to-end latency depending on attenuation and packet size, measured using Configuration (1) Auto CSI, TRS, and SSB in the more demanding LiDAR scenario.

To further investigate the influence of packet size and channel conditions on latency, we analyzed the best-performing configuration (Configuration 1, Auto CSI, TRS, and SSB) in the more demanding LiDAR scenario, breaking down RTT latency by packet size and attenuation. This detailed breakdown is provided in Table 11.

Table 11.

Average RTT latency (ms) and standard deviation for Configuration 1, grouped by packet size and attenuation level, in the more demanding LiDAR scenario.

The data in Table 11 indicate that under increased attenuation, the average RTT generally increases with packet size, which is consistent with expectations for more challenging radio conditions. However, an exception occurs at 0 dB attenuation, where the smallest packets (16 B) experience noticeably higher average RTT and greater variability compared with larger packets.

To better understand this phenomenon, we examined the downlink retransmission rate, defined as the ratio of retransmitted downlink transport blocks to transmitted downlink blocks without retransmissions, expressed as a percentage. Table 12 presents these values for each packet size and attenuation level.
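The retransmission rate as defined above is a simple ratio of two transport block counters. The sketch below computes it. The counter arguments are hypothetical inputs, since the text does not say which gNB log fields they come from. For example, 403 retransmitted blocks against 10,000 initial transmissions would give 4.03%.

```python
def dl_retransmission_rate(retransmitted_tbs: int, initial_tbs: int) -> float:
    """Retransmitted DL transport blocks / DL blocks sent without retransmission, in percent."""
    if initial_tbs <= 0:
        raise ValueError("no initial transmissions recorded")
    return 100.0 * retransmitted_tbs / initial_tbs
```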

Table 12.

Downlink retransmission rate (%) for different attenuation levels and packet sizes, measured using Configuration (1) Auto CSI, TRS, and SSB in the more demanding LiDAR scenario.

Table 12 shows that for 16 B packets at 0 dB attenuation, the retransmission rate is noticeably high (4.03%), which aligns with the elevated RTT observed for these smallest packets and constitutes a significant outlier compared with larger packet sizes under the same conditions.

5.4. Summary

The previous sections presented the experimental results obtained under various LiDAR and base station configurations. Here, we interpret those results and discuss the key findings, focusing on aspects such as packet loss, environmental influence, packet size sensitivity, configuration flexibility, and the impact of critical parameters.

The selection of an appropriate configuration is primarily determined by the traffic characteristics of the sensor. This relationship becomes evident when comparing Table 5 with Table 6 and Figure 7. Table 5 demonstrates that latency-optimized configurations can achieve up to three times lower latency under idle uplink conditions compared to throughput-optimized alternatives. These configurations satisfy not only the P3 but also the P2 requirements and, in some cases, approach the P1 threshold, which is particularly relevant given that the standard refers to end-to-end latency, whereas our tests were based on RTT. They are also capable of transmitting the full sensor traffic while meeting the S2 requirement. However, Table 6 at 0 dB of additional attenuation and Figure 7, which involves simultaneous sensor data transmission, indicate that even under moderate traffic levels, well below the theoretical capacities of configurations 5 and 6, latency increased nearly threefold and over sevenfold, respectively. In contrast, configurations 1 to 4, which initially exhibited higher latency, showed only moderate degradation (up to 35%) or stable performance. This behavior is attributable to link saturation effects, which result in packet delays and losses. While similar behavior was expected for the more demanding LiDAR setup due to traffic exceeding available throughput, it was not anticipated in less demanding scenarios. This underscores the importance of conducting scenario-specific performance testing prior to deployment.

Another important factor influencing performance was the surrounding radio environment. While the testbed was deployed in a controlled indoor laboratory, we used a programmable attenuator to emulate changing conditions, such as increased signal loss caused by distance or obstacles. This setup enabled repeatable and fine-grained evaluation of how different configurations responded to varying attenuation levels. Across both LiDAR scenarios, a particularly interesting observation was made at the 10 dB attenuation level. Despite the degradation in link conditions, several configurations, especially those optimized for high throughput, maintained stable performance. This effect is partially attributable to mechanisms such as Uplink Power Control, which dynamically adjusts transmission power in response to link degradation. As a result, configurations that might otherwise suffer from increased latency or reduced throughput were able to compensate for moderate signal losses.

Regarding the scenario that was of primary interest to us, namely, the combined industrial use case specified by 3GPP, which requires an uplink throughput of at least 120 Mbps (S2) and an end-to-end latency below 100 ms (P3), the best-performing setup was the configuration using automatic CSI, TRS, and SSB. This configuration maintained acceptable performance even under increased attenuation levels. Notably, it was also capable of supporting more demanding applications, such as cooperative driving, provided the radio conditions did not degrade beyond moderate thresholds.

In controlled environments, this setup demonstrated RTT values low enough that, based on direct end-to-end latency measurements, certain configurations nearly met the P1 requirement outlined in Table 2 even under 30 dB attenuation. Importantly, for the best-performing configuration, end-to-end latency analysis confirmed that the strict P1 latency constraint (below 10 ms) was consistently satisfied for all tested packet sizes and attenuation levels in the less demanding LiDAR scenario. Furthermore, in the more demanding scenario, the same configuration maintained end-to-end latency values below the P1 threshold up to 20 dB attenuation. This demonstrates remarkable robustness even under challenging conditions and confirms that, under favorable circumstances, the most stringent P1 latency requirement is achievable for both less and more demanding sensor applications.

A clear trend observed across all scenarios is that larger packet sizes generally lead to higher latency, especially under increased attenuation. This behavior reflects the expected impact of packetization and transmission scheduling in wireless networks, where larger packets are more susceptible to queuing delays and retransmissions. However, we also observed an interesting exception in the more demanding LiDAR scenario: for the smallest packet size (16 B) at 0 dB attenuation, the average latency was unexpectedly high, accompanied by a significantly increased downlink retransmission rate.

It is also worth emphasizing that we selected two specific LiDAR parameter sets; alternative configurations could be chosen depending on specific needs. Suppose that the generated uplink traffic had to stay at around 85 Mibps, similar to the scenario with the less demanding sensor configuration. If the Dual Return mode were not necessary and the priority were resolution and a full field of view, one could select the Low Data Rate mode with a 360-degree field of view, a resolution of 2048, and 10 frames per second. Similarly, if a higher frame rate were preferred over resolution, instead of using the Dual Return mode with 10 frames per second and a resolution of 1024, one could opt for the Dual Return mode with a 512 resolution and 20 frames per second. These results are not limited to the LiDAR-based scenario: the same conclusions can apply to other use cases defined in Table 1, such as 8K video transmission, or to any application requiring high uplink throughput and appropriately low latency.

Very important conclusions can be drawn regarding the parameters that contributed to the observed performance. Bandwidth is certainly critical for achieving high throughput. In our case, the higher value of 60 MHz proved to be more effective. However, the combination of Auto CSI, TRS (Tracking Reference Signal), and SSB (Synchronization Signal Block) was present in both top-performing configurations in the Stage II scenarios. Notably, under the more demanding sensor configuration, these mechanisms provided the best overall performance in terms of both throughput and latency, regardless of environmental conditions, which is crucial for mobile drones. Auto CSI, understood here as the automatic selection of the optimal channel state information configuration at the initial stage of communication, enables the efficient use of available MIMO modes and enhances precoding, as described in [55]. This feature supports both low- and high-resolution CSI feedback, allowing the system to match the most effective feedback type to the current spatial correlation of the channel, which leads to measurable throughput gains in realistic 5G-NR scenarios. The inclusion of TRS, leveraging the NZP CSI-RS mechanism, further improves channel estimation accuracy and supports reliable link adaptation. Recent studies demonstrate that NZP CSI-RS-based interference measurement, as standardized in 3GPP TS 38.214, enables a more precise assessment of interference and adaptation of transmission parameters, such as modulation and coding rate, to current radio conditions. This process, often managed through a Channel Quality Indicator (CQI) reported by the user device, directly results in increased throughput and improved service quality in interference-limited or cell-edge scenarios [56,57]. SSB plays a key role in ensuring robust synchronization and initial access. 
According to Boiko et al., advanced SSB structures and their integration with beamforming mechanisms improve the signal-to-noise ratio during initial access, directly reducing BER and retransmission rates, which is especially important in high-interference and multipath environments [58].

In the measurements with the less demanding sensor configuration, another very important parameter was the Scheduling Request Period. It defines the periodicity of the physical-layer signaling opportunities in which the User Equipment (UE) can inform the network that it has data ready for uplink transmission. Additionally, the number of PDCCH candidates at Aggregation Level 2 (AL2) was increased from 4 to 8. This change provides the UE with more decoding opportunities, improving the reliability of control message detection, particularly in degraded radio conditions. Although it slightly increases the complexity of blind decoding, it helps maintain low latency and stable uplink performance.
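Why a short SR period matters can be shown with a back-of-the-envelope calculation. If uplink data arrives uniformly in time, the UE waits on average half an SR period, and at most one full period, before it can even request a grant. The sketch assumes the period is counted in slots and uses 30 kHz subcarrier spacing. Neither the unit of the configured value "1" nor the numerology is stated in the text, so both are assumptions. The sketch also leaves out the subsequent grant and PUSCH scheduling delays.

```python
from typing import Tuple


def slot_duration_ms(scs_khz: int) -> float:
    """NR slot length: 1 ms at 15 kHz, halved for each doubling of subcarrier spacing."""
    return 15.0 / scs_khz


def sr_wait_ms(sr_period_slots: int, scs_khz: int) -> Tuple[float, float]:
    """Mean and worst-case wait for the next SR occasion (uniform data arrival assumed)."""
    period_ms = sr_period_slots * slot_duration_ms(scs_khz)
    return period_ms / 2, period_ms
```

Under these assumptions, an SR period of 1 slot at 30 kHz gives a mean wait of 0.25 ms. A period of 10 slots would give 2.5 ms on average and up to 5 ms, before any grant is issued.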

6. Conclusions

This study presented a comprehensive evaluation of 5G performance in an industrial use case involving a LiDAR-equipped unmanned ground vehicle (UGV). Using a real-world testbed and commercial hardware, we assessed how different base station configurations influence both uplink throughput and downlink latency under various radio conditions. The testing process was divided into two stages. First, we identified configurations with the greatest potential, and then we evaluated the selected ones more thoroughly using different attenuation levels and packet sizes.

The results show that configurations using automatic Channel State Information (CSI), Tracking Reference Signal (TRS), and Synchronization Signal Block (SSB) provided the most robust and consistent performance. Automatic CSI enabled the selection of the optimal feedback configuration at the initial stage, ensuring the efficient use of MIMO modes and enhancing precoding. TRS, implemented using NZP CSI-RS, improved the accuracy of channel estimation and supported reliable link adaptation. SSB ensured precise synchronization and initial access, leading to higher signal-to-noise ratios, lower bit error rates, and fewer retransmissions. Together, these mechanisms helped maintain high throughput and minimize latency, even under degraded radio conditions.

We also found that tuning the Scheduling Request (SR) period and increasing the number of PDCCH candidates at Aggregation Level 2 (AL2) from 4 to 8 significantly improved uplink reliability and latency, particularly under degraded radio conditions. This highlights the importance of fine-grained control over uplink signaling parameters in achieving industrial-grade performance.

It is worth noting that the results do not show a clear or consistent relationship between packet size and latency. While it might seem intuitive that larger packets cause more delay, our measurements suggest more complex behavior. This may be influenced by factors such as grant timing, scheduling delays, and auto-configuration mechanisms like Auto CSI, TRS, and SSB. However, these are only some of the possible contributors. Other elements of the scheduling process or resource management may also play a role, and not all of them are exposed or well understood. Therefore, we caution against assuming any fixed trend based solely on the packet size.

For scenarios that require uplink throughput above 120 Mbps (S2) and control latency below 100 milliseconds (P3), such as remotely piloted drones with video feedback, several configurations met the requirements even under added attenuation. However, more demanding applications like cooperative driving typically require latency between 1 and 50 milliseconds (P2). Only a few configurations met these tighter constraints when signal quality was reduced. Notably, in well-controlled radio environments, certain configurations achieved latency performance nearly low enough to support even stricter use cases, such as machine control over UGVs, where end-to-end latency must remain within the 1 to 10 ms (P1) range.

A particularly noteworthy finding is that, under controlled conditions, several configurations achieved end-to-end latency well within the strictest P1 requirement (below 10 ms), and this was maintained across all tested packet sizes and most attenuation levels. This demonstrates the suitability of these solutions for the most demanding industrial use cases, such as real-time robot or vehicle control.

Across both less and more demanding LiDAR scenarios, we observed the expected trend that increasing the packet size tends to result in higher average latency, especially as radio conditions worsen. This relationship was especially pronounced at high attenuation, where both the average delay and its variability increased substantially for the largest packets.

However, a notable exception was observed in the more demanding LiDAR scenario at 0 dB attenuation: the smallest packet size (16 B) exhibited unexpectedly high latency, which was directly correlated with a significantly elevated downlink retransmission rate for these packets.

This study clearly demonstrated that the appropriate configuration depends strongly on both the generated sensor traffic and the surrounding environment. In particular, uplink-intensive applications such as high-resolution LiDAR mapping demand careful matching of 5G parameters to system requirements. Likewise, low-latency-optimized configurations, if deployed under favorable conditions, could enable advanced industrial operations like real-time machine control or drone-based inspections.

These findings emphasize the importance of not only simulation but also real-world experimentation and parameter optimization when deploying 5G for Industry 4.0. Fine-tuning system parameters to match specific use cases and conditions remains crucial for bridging the gap between the theoretical 5G potential and practical industrial performance.

Looking ahead, the presented testbed methodology offers potential scalability to more complex deployment scenarios. Since the testbed utilizes real 5G modems and a wireless connection to the base station, it is well-suited for simulating multiple LiDAR-equipped UGVs. We have also collected full packet traces from the sensor, enabling realistic traffic replay. With these data and the current infrastructure, we are equipped to emulate multi-UGV scenarios, including cooperative applications, provided that an appropriate mobility model is integrated. This makes the setup a strong foundation for future research on scalability and coordination in industrial 5G deployments.

An important direction for future work is improving how networked systems in industrial environments adapt their performance to changing conditions. In our current testbed, changing parameters such as the SR period or the USS candidate configuration requires restarting the gNB, which prevents responding to changing conditions in real time. However, dynamic adaptation is critical for maintaining high throughput and low latency when link quality varies, especially in mobile scenarios. Building on this idea, we plan to investigate adaptive control of the LiDAR's output profile based on link conditions. This approach offers a practical application-layer way to sustain performance in cases where direct reconfiguration of network parameters is not possible.
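One way to realize the planned application-layer adaptation is a controller that picks the richest LiDAR output profile whose data rate fits the currently estimated uplink capacity. The sketch below is an assumption-laden illustration, not the authors' design: the profile names, `bytes_per_point`, the `headroom` factor, and the source of the capacity estimate are all hypothetical, the payload rate is approximated as channels × columns per frame × frame rate × bytes per point × 8, and pushing the chosen profile to the sensor is omitted. It uses asymmetric hysteresis: it degrades immediately when the current profile no longer fits, but upgrades only after several consecutive samples in which the next profile fits, to avoid oscillating on a fluctuating link.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass(frozen=True)
class Profile:
    name: str             # hypothetical label
    channels: int         # vertical channels (beams)
    columns: int          # azimuth columns per frame
    fps: int              # frames per second
    bytes_per_point: int  # assumed payload bytes per measurement

    def rate_mbps(self) -> float:
        # Payload rate only; packet headers and UDP/IP overhead are ignored.
        return self.channels * self.columns * self.fps * self.bytes_per_point * 8 / 1e6

class ProfileController:
    """Asymmetric hysteresis: step down at once, step up only after a stable streak."""

    def __init__(self, profiles: Sequence[Profile], headroom: float = 1.2, up_after: int = 5):
        self.profiles = sorted(profiles, key=lambda p: p.rate_mbps())
        self.headroom = headroom  # required capacity / profile rate
        self.up_after = up_after  # consecutive good samples before upgrading
        self.level = 0            # start with the lightest profile
        self._streak = 0

    def _fits(self, idx: int, capacity_mbps: float) -> bool:
        return self.profiles[idx].rate_mbps() * self.headroom <= capacity_mbps

    def update(self, capacity_mbps: float) -> Profile:
        """Feed one uplink-capacity estimate; return the profile to use next."""
        if not self._fits(self.level, capacity_mbps):
            # Degrade to the richest profile that still fits (or the lightest one).
            while self.level > 0 and not self._fits(self.level, capacity_mbps):
                self.level -= 1
            self._streak = 0
        elif self.level + 1 < len(self.profiles) and self._fits(self.level + 1, capacity_mbps):
            self._streak += 1
            if self._streak >= self.up_after:
                self.level += 1
                self._streak = 0
        else:
            self._streak = 0
        return self.profiles[self.level]
```

For example, two hypothetical 128-channel profiles at 10 frames/s with 512 and 1024 columns and a placeholder 4 bytes per point yield payload rates of about 21 Mbps and 42 Mbps; with `headroom=1.2`, the controller would switch to the larger one only after the capacity estimate stays above roughly 50 Mbps for `up_after` consecutive samples.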

Author Contributions

Conceptualization, J.K. and M.H.; data curation, J.K. and M.H.; investigation, J.K.; methodology, J.K. and M.H.; project administration, J.K.; resources, M.H.; software, J.K. and A.W.; supervision, J.K. and M.H.; validation, J.K. and M.H.; visualization, J.K.; writing—original draft, J.K. and A.W.; writing—review & editing, J.K. and M.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors upon request.

Acknowledgments

The authors would like to thank the Department of Computer Communications, Gdańsk University of Technology, for making the PL5G laboratory available.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Shah, A.F.M.S. A Survey From 1G to 5G Including the Advent of 6G: Architectures, Multiple Access Techniques, and Emerging Technologies. In Proceedings of the 2022 IEEE 12th Annual Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, USA, 26–29 January 2022; Volume 12, pp. 1117–1123.

- ITU-R. Recommendation ITU-R M.2083-0: IMT Vision–Framework and Overall Objectives of the Future Development of IMT for 2020 and Beyond; International Telecommunication Union Radiocommunication Sector. 2015. Available online: https://www.itu.int/dms_pubrec/itu-r/rec/m/r-rec-m.2083-0-201509-i!!pdf-e.pdf (accessed on 28 March 2025).

- Navarro-Ortiz, J.; Romero-Diaz, P.; Sendra, S.; Ameigeiras, P.; Ramos-Munoz, J.J.; Lopez-Soler, J.M. A Survey on 5G Usage Scenarios and Traffic Models. IEEE Commun. Surv. Tutor. 2020, 22, 905–929.

- Zhang, D.; Song, D.; Zhu, Y.; Lu, J.; Liu, X. Human-Robot Shared Control for Surgical Robot Based on Context-Aware Sim-to-Real Adaptation. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022; pp. 7694–7700.

- Moustris, G.; Tzafestas, C.; Konstantinidis, K. A long distance telesurgical demonstration on robotic surgery phantoms over 5G. Int. J. Comput. Assist. Radiol. Surg. 2023, 18, 1577–1587.

- Varga, P.; Peto, J.; Franko, A.; Balla, D.; Haja, D.; Janky, F.; Soos, G.; Ficzere, D.; Maliosz, M.; Toka, L. 5G Support for Industrial IoT Applications—Challenges, Solutions, and Research Gaps. Sensors 2020, 20, 828.

- Zou, X.; Li, K.; Zhou, J.T.; Wei, W.; Chen, C. Robust Edge AI for Real-Time Industry 4.0 Applications in 5G Environment. IEEE Commun. Stand. Mag. 2023, 7, 64–70.

- Qi, W.; Xu, X.; Qian, K.; Schuller, B.W.; Fortino, G.; Aliverti, A. A Review of AIoT-Based Human Activity Recognition: From Application to Technique. IEEE J. Biomed. Health Inform. 2025, 29, 2425–2438.

- Wen, M.; Wu, Y.; Zhang, J.; Yuan, D.; Wang, T.; Guo, W.; Zhang, J.; Lin, X.; Zhang, R.; Chen, J.; et al. Private 5G Networks: Concepts, Architectures, and Research Landscape. IEEE J. Sel. Top. Signal Process. 2022, 16, 7–25.

- Nahum, C.V.; Garcia, L.A.; Busson, A.; Vaillancourt, R.; Li, Z.; Chowdhury, K.R. Testbed for 5G Connected Artificial Intelligence on Virtualized Networks. IEEE Access 2020, 8, 223202–223213.

- Tahir, M.; Habaebi, M.H.; Dabbagh, M.; Mughees, A.; Ahad, A.; Ahmed, K.I. A Review on Application of Blockchain in 5G and Beyond Networks: Taxonomy, Field-Trials, Challenges and Opportunities. IEEE Access 2020, 8, 115876–115904.

- Fellan, A.; Schellenberger, C.; Zimmermann, M.; Schotten, H.D. Enabling Communication Technologies for Automated Unmanned Vehicles in Industry 4.0. In Proceedings of the 2018 International Conference on Information and Communication Technology Convergence (ICTC), Jeju, Republic of Korea, 17–19 October 2018; pp. 171–176.

- Singh, B.; Tirkkonen, O.; Li, Z.; Uusitalo, M.A. Contention-Based Access for Ultra-Reliable Low Latency Uplink Transmissions. IEEE Wirel. Commun. Lett. 2018, 7, 182–185.

- Lezzar, M.Y.; Mehmet-Ali, M. Optimization of Ultra-Reliable Low-Latency Communication Systems. Comput. Netw. 2021, 197, 108332.

- Larrañaga, A.; Lucas-Estañ, M.C.; Lagén, S.; Ali, Z.; Martinez, I.; Gozalvez, J. An Open-Source Implementation and Validation of 5G NR Configured Grant for URLLC in ns-3 5G LENA: A Scheduling Case Study in Industry 4.0 Scenarios. J. Netw. Comput. Appl. 2023, 215, 103638.

- Doğan, S.; Tusha, A.; Arslan, H. NOMA With Index Modulation for Uplink URLLC Through Grant-Free Access. IEEE J. Sel. Top. Signal Process. 2019, 13, 1249–1257.

- Ge, X. Ultra-Reliable Low-Latency Communications in Autonomous Vehicular Networks. IEEE Trans. Veh. Technol. 2019, 68, 5005–5016.

- Islam, M.S.; Abozariba, R.; Mi, D.; Patwary, M.N.; He, D.; Asyhari, A.T. Design and Evaluation of Multi-Layer NOMA on NR Physical Layer for 5G and Beyond. IEEE Trans. Broadcast. 2024, 70, 42–56.

- Ha, C.-B.; You, Y.-H.; Song, H.-K. Machine Learning Model for Adaptive Modulation of Multi-Stream in MIMO-OFDM System. IEEE Access 2019, 7, 5141–5152.

- Khoramnejad, F.; Joda, R.; Sediq, A.B.; Boudreau, G.; Erol-Kantarci, M. AI-Enabled Energy-Aware Carrier Aggregation in 5G New Radio With Dual Connectivity. IEEE Access 2023, 11, 74768–74783.

- Suh, K.; Kim, S.; Ahn, Y.; Ju, H.; Shim, B. Deep Reinforcement Learning-Based Network Slicing for Beyond 5G. IEEE Access 2022, 10, 7384–7395.

- 3GPP. Enhancement for Unmanned Aerial Vehicles; Stage 1 (Release 17); Technical Report TR 22.829 V17.1.0; 3rd Generation Partnership Project (3GPP), Technical Specification Group Services and System Aspects: 2019. Available online: https://www.3gpp.org/ftp//Specs/archive/22_series/22.829/22829-h10.zip (accessed on 4 March 2025).

- Alfattani, S.; Jaafar, W.; Hmamouche, Y.; Yanikomeroglu, H.; Yongaçoglu, A.; Đào, N.D.; Zhu, P. Aerial Platforms with Reconfigurable Smart Surfaces for 5G and Beyond. IEEE Commun. Mag. 2021, 59, 96–102.

- Tafintsev, N.; Moltchanov, D.; Gerasimenko, M.; Gapeyenko, M.; Zhu, J.; Yeh, S.P.; Himayat, N.; Andreev, S.; Koucheryavy, Y.; Valkama, M. Aerial Access and Backhaul in mmWave B5G Systems: Performance Dynamics and Optimization. IEEE Commun. Mag. 2020, 58, 93–99.

- Azari, M.M.; Solanki, S.; Chatzinotas, S.; Bennis, M. THz-Empowered UAVs in 6G: Opportunities, Challenges, and Trade-offs. IEEE Commun. Mag. 2022, 60, 24–30.

- Bajracharya, R.; Shrestha, R.; Kim, S.; Jung, H. 6G NR-U Based Wireless Infrastructure UAV: Standardization, Opportunities, Challenges and Future Scopes. IEEE Access 2022, 10, 30536–30555.

- Hmamouche, Y.; Benjillali, M.; Saoudi, S. Fresnel Line-of-Sight Probability With Applications in Airborne Platform-Assisted Communications. IEEE Trans. Veh. Technol. 2022, 71, 5060–5072.

- 3GPP. Study on Communication for Automation in Vertical Domains (Release 16); Technical Report TR 22.804 V16.3.0; 3rd Generation Partnership Project (3GPP), Technical Specification Group Services and System Aspects: 2020. Available online: https://www.3gpp.org/ftp//Specs/archive/22_series/22.804/22804-g30.zip (accessed on 1 March 2025).

- Brown, G. Ultra-Reliable Low-Latency 5G for Industrial Automation; Heavy Reading White Paper Produced for Qualcomm Inc. 2018. Available online: https://www.qualcomm.com/content/dam/qcomm-martech/dm-assets/documents/ultra-reliable-low-latency-5g-for-industrial-automation.pdf (accessed on 24 March 2025).

- HMS Networks. Industrial Ethernet in Focus—Overview of the Most Common Standards. 2025. Available online: https://www.hms-networks.com/tech-blog/blogpost/hms-blog/2025/01/27/industrial-ethernet-in-focus_overview-of-the-most-common-standards (accessed on 10 April 2025).

- Striffler, T.; Michailow, N.; Bahr, M. Time-Sensitive Networking in 5th Generation Cellular Networks—Current State and Open Topics. In Proceedings of the 2019 IEEE 2nd 5G World Forum (5GWF), Dresden, Germany, 30 September–2 October 2019; pp. 547–552.

- Guan, W.; Zhang, H.; Sun, L.; Fu, M.; Wen, X.; Leung, V.C.M. RAN Slicing Toward Coexistence of Time-Sensitive Networking and Wireless Networking. IEEE Commun. Mag. 2024, 62, 96–102.

- Amini, M.; El-Ashmawy, A.; Rosenberg, C.; Khandani, A.K. 5G DIY: Impact of Different Elements on the Performance of an E2E 5G Standalone Testbed. In Proceedings of the 2023 IEEE Global Communications Conference (GLOBECOM), Kuala Lumpur, Malaysia, 4–8 December 2023; pp. 6377–6382.

- Qi, Y.; Zhang, X.; Vaezi, M. Over-the-Air Implementation of NOMA: New Experiments and Future Directions. IEEE Access 2021, 9, 135828–135844.

- Bisoyi, S.; Muralimohan, P.; Dureppagari, H.K.; Manne, P.R.; Kuchi, K. Outdoor Massive MIMO Testbed With Directional Beams: Design, Implementation, and Validation. IEEE Access 2025, 13, 22230–22242.

- D’Oro, S.; Bonati, L.; Restuccia, F.; Melodia, T. Coordinated 5G Network Slicing: How Constructive Interference Can Boost Network Throughput. IEEE/ACM Trans. Netw. 2021, 29, 1881–1894.

- Zhang, T.; Wang, J.; Hu, X.S.; Han, S. Real-Time Flow Scheduling in Industrial 5G New Radio. In Proceedings of the 2023 IEEE Real-Time Systems Symposium (RTSS), Taipei, Taiwan, 5–8 December 2023; pp. 371–384.

- Zhao, Y.; Wei, M.; Hu, C.; Xie, W. Latency Analysis and Field Trial for 5G NR. In Proceedings of the 2022 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Bilbao, Spain, 15–17 June 2022; pp. 1–5.

- Amarisoft. TDD Pattern. Available online: https://tech-academy.amarisoft.com/NR_TDD_Pattern.html (accessed on 21 May 2025).

- Amarisoft. Improve RTT Latency in NR. Available online: https://tech-academy.amarisoft.com/improve_rtt_latency_in_nr.wiki (accessed on 21 May 2025).

- Amarisoft. Maximizing Uplink Speed in 5G NR TDD. Available online: https://tech-academy.amarisoft.com/maximizing_uplink_speed_in_5_nr_tdd.wiki (accessed on 21 May 2025).

- Takeda, K.; Xu, H.; Kim, T.; Schober, K.; Lin, X. Understanding the Heart of the 5G Air Interface: An Overview of Physical Downlink Control Channel for 5G New Radio (NR). IEEE Commun. Stand. Mag. 2020, 4, 82–90.

- Projekt PL-5G. Available online: https://eti.pg.edu.pl/node/39/nauka-i-badania/projekt-pl-5g (accessed on 24 March 2025).

- Ouster. OS0-128 Rev 7 Data Sheet (v3.1). Available online: https://data.ouster.io/downloads/datasheets/datasheet-rev7-v3p1-os0.pdf (accessed on 2 May 2025).

- Ouster. Configurable Data Packet Format (v2.x). Available online: https://static.ouster.dev/sensor-docs/image_route1/image_route2/sensor_data/sensor-data.html#configurable-data-packet-format-v2-x (accessed on 28 March 2025).

- Amarisoft. AMARI Callbox Classic–Overview. Available online: https://www.amarisoft.com/test-and-measurement/device-testing/device-products/amari-callbox-classic (accessed on 2 May 2025).

- Snyder, J.; Hoffer, L.; Martin, B.; Rogers, D.; Kanth, V. Comparing 5G Network Latency Utilizing Native Security Algorithms. In Proceedings of the Joint European Conference on Networks and Communications & 6G Summit (EuCNC/6G Summit), Gothenburg, Sweden, 6–9 June 2023; pp. 532–537.

- Singh, B.C.; Shetty, S.; Chivate, P.; Wright, D.; Alenberg, A.; Woodward, P. Performance Analysis of Indoor 5G NR Systems. In Proceedings of the IEEE 21st Consumer Communications & Networking Conference (CCNC), Las Vegas, NV, USA, 6–9 January 2024; pp. 632–633.

- Kahvazadeh, S.; Khalili, H.; Silab, R.N.; Bakhshi, B.; Mangues-Bafalluy, J. Vertical-oriented 5G Platform-as-a-Service: User-Generated Content Case Study. In Proceedings of the IEEE Future Networks World Forum (FNWF), Montreal, QC, Canada, 10–14 October 2022; pp. 706–711.

- Vaunix. LDA-802Q Programmable Digital Attenuator. Available online: https://vaunix.com/lda-802q-programmable-digital-attenuator/ (accessed on 2 May 2025).

- MikroTik. Chateau 5G ax. Available online: https://mikrotik.com/product/chateau_5g_ax (accessed on 2 May 2025).

- Siemens AG. Configuration of a VxLAN Tunnel with SCALANCE Devices, Manual, Version 1.1. Available online: https://support.industry.siemens.com/cs/attachments/109805209/109805209_VXLAN_MUM85x_to_SC600_DOC_V1_1_en.pdf (accessed on 2 May 2025).

- Minhas, T.N.; Fiedler, M.; Arlos, P. Quantification of Packet Delay Variation through the Coefficient of Throughput Variation. In Proceedings of the 6th International Wireless Communications and Mobile Computing Conference (IWCMC), Caen, France, 28 June–2 July 2010; pp. 336–340.