Augmented Reality for PCB Component Identification and Localization

Abstract

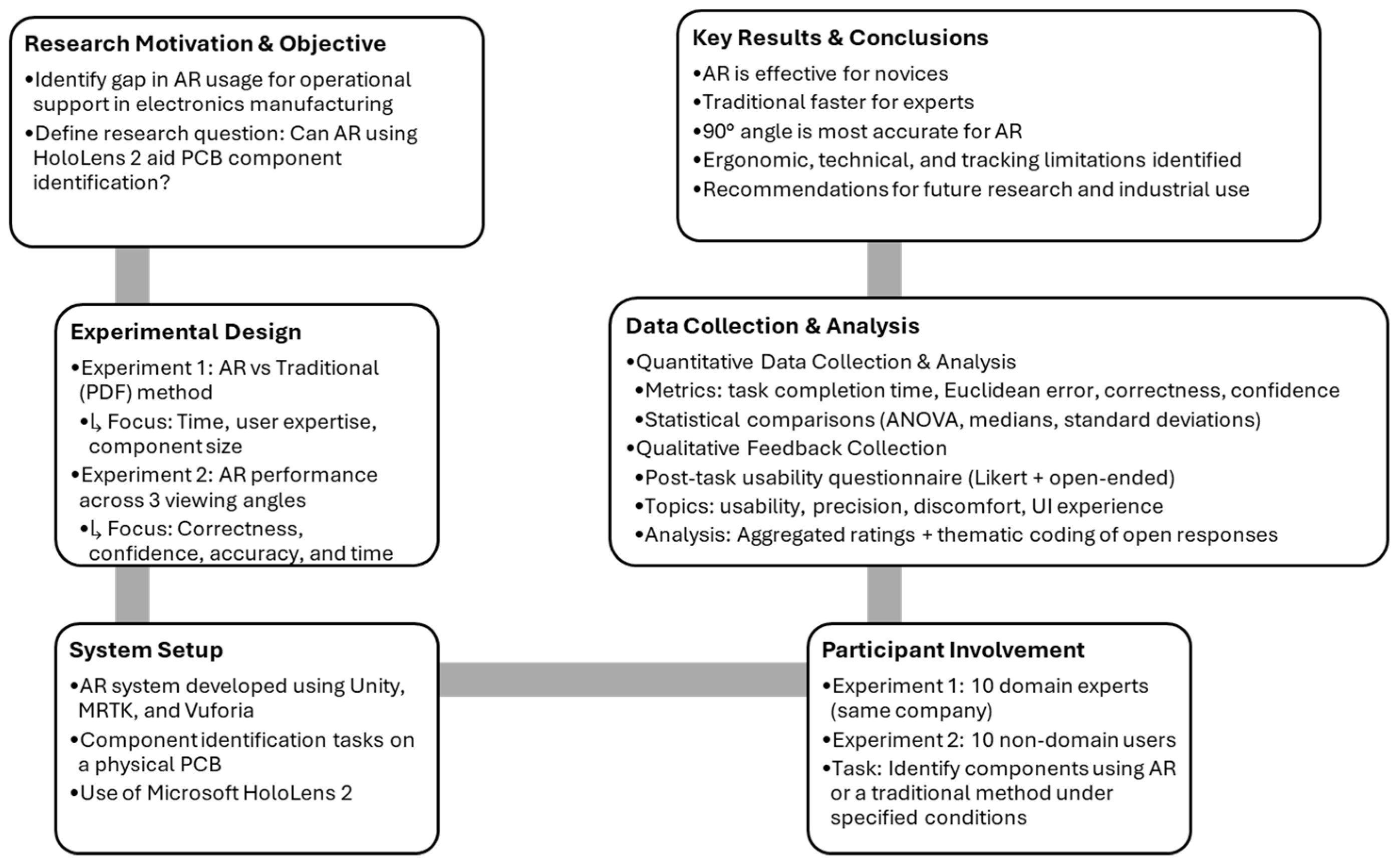

1. Introduction

2. Literature Review and Technical Framework

3. Research Aims

4. Materials and Methods

4.1. AR System Architecture

- Hardware: Microsoft™ HoloLens™ 2;

- Development Environment: Unity™ 2021.3.9f1;

- AR Libraries: Mixed Reality Toolkit (MRTK) for spatial interaction and UI integration;

- Tracking Engine: Vuforia™ Engine for image-based marker tracking.
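In this architecture, the tracking engine locates an image target and each virtual component marker is rendered at a fixed offset from that target. The sketch below illustrates only the underlying geometry and is not the authors' Unity implementation or any Vuforia API. It assumes that the tracker reports the target pose as a 3×3 rotation matrix and a translation in world coordinates, and that each component center is known as an offset in the target's local frame (taken from the PCB layout). The function names, component identifiers and offsets are hypothetical.

```python
"""Hypothetical sketch: place AR component markers relative to a tracked image target.

Assumptions (not from the article): the tracker reports the target pose as a
3x3 rotation matrix R and a translation t in world coordinates, and each
component center is known as an offset in the target's local frame.
"""
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def target_to_world(rotation: Mat3, translation: Vec3, p_local: Vec3) -> Vec3:
    """Apply the rigid transform p_world = R * p_local + t."""
    x, y, z = (
        sum(rotation[row][col] * p_local[col] for col in range(3)) + translation[row]
        for row in range(3)
    )
    return (x, y, z)


def place_overlays(rotation: Mat3, translation: Vec3,
                   layout: Dict[str, Vec3]) -> Dict[str, Vec3]:
    """Return the world position at which each component's marker is rendered."""
    return {name: target_to_world(rotation, translation, offset)
            for name, offset in layout.items()}


# Hypothetical layout: component centers in metres, measured from the target origin.
LAYOUT = {"SD_CARD": (0.030, 0.015, 0.0), "R12": (-0.012, 0.040, 0.0)}

if __name__ == "__main__":
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(place_overlays(identity, (0.0, 0.0, 0.5), LAYOUT))
```

Because every marker is tied to the same target pose, any tracking error in that pose shifts all overlays together, which is what the system alignment accuracy in Experiment 2 is designed to capture.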

4.2. Experiment 1: Methods

4.2.1. Technical Setup

4.2.2. Procedure

4.2.3. Data Collection

4.2.4. Expected Outcomes

4.3. Experiment 2: Methods

4.3.1. Technical Setup

4.3.2. Procedure

4.3.3. Data Collection and Analysis

4.3.4. Study Overview

4.3.5. Experimental Setup

4.3.6. Position Definitions

- Reference Position: The reference position represented the true 2D coordinates of the center of each component on the PCB. These positions were predefined using the original PCB layout or schematic (Figure 7), serving as the baseline for comparison. In Figure 7, yellow squares and circles represent the mapped boundaries of selected components, and the reference position corresponds to the geometric center of each boundary.

- Augmented Position: The augmented position (Figure 8) refers to the 2D coordinates of the component center as displayed in the AR interface. It reflects the technical performance of the system, specifically marker tracking, spatial co-registration accuracy, and the fidelity of the digital twin. Here, the augmented position is given by the coordinates of the red dot rendered on each component. These coordinates were extracted from recorded video frames using image-analysis techniques such as centroid detection, and were computed for each component, viewing angle, and participant.

- Identified Position: The identified position indicates where users manually pointed to a component with a stylus. It captures the combined influence of human visual perception and motor coordination, providing insight into real-world usability. Here, the coordinates correspond to the stylus tip, which was located within the AR display in the recorded video frames (Figure 9) using image-processing methods similar to those described above.
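Centroid detection, as used for the augmented position, can be sketched as thresholding the frame for the red dot's color and averaging the coordinates of the matching pixels. This is a minimal stand-in, not the authors' pipeline: it assumes frames arrive as rows of 8-bit (R, G, B) tuples and that a fixed color threshold isolates the dot; the function name and threshold values are hypothetical. Locating the stylus tip and converting pixel coordinates into the PCB coordinate frame are outside this sketch.

```python
"""Hypothetical sketch: locate the rendered red dot in a video frame by centroid detection.

Assumptions (not from the article): frames are given as rows of (R, G, B) tuples
with 8-bit channels, and a fixed color threshold isolates the red dot.
"""
from typing import Optional, Sequence, Tuple

Pixel = Tuple[int, int, int]
Frame = Sequence[Sequence[Pixel]]


def red_dot_centroid(frame: Frame, r_min: int = 200,
                     gb_max: int = 80) -> Optional[Tuple[float, float]]:
    """Return the (x, y) pixel centroid of all 'red' pixels, or None if none match."""
    xs, ys = [], []
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            # A pixel counts as part of the dot if red is strong and green/blue are weak.
            if r >= r_min and g <= gb_max and b <= gb_max:
                xs.append(x)
                ys.append(y)
    if not xs:
        return None
    return (sum(xs) / len(xs), sum(ys) / len(ys))


if __name__ == "__main__":
    frame = [[(0, 0, 0)] * 5 for _ in range(5)]
    for x, y in [(2, 1), (3, 1), (2, 2), (3, 2)]:
        frame[y][x] = (255, 20, 20)
    print(red_dot_centroid(frame))  # (2.5, 1.5)
```

A plain color threshold is sensitive to lighting and display brightness, so in practice the thresholds would need tuning per recording setup.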

4.3.7. Accuracy Calculations

- System Alignment Accuracy: Measures the Euclidean distance between the reference position and the augmented position to quantify the accuracy of AR marker tracking.

- User Interaction Accuracy: Assesses the user’s ability to interpret and interact with the AR display by measuring the distance between the augmented position and the identified position. These distances are calculated using the Euclidean distance formula, providing a straightforward assessment of accuracy for both system alignment and user interactions.
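Both measures reduce to the 2D Euclidean distance d(p, q) = sqrt((x_p − x_q)² + (y_p − y_q)²), applied to different pairs of positions. The following minimal sketch assumes that the reference, augmented and identified positions are already expressed in the same 2D coordinate frame and units; the function names are hypothetical.

```python
"""Hypothetical sketch: the two accuracy measures as Euclidean distances in 2D."""
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def euclidean(p: Point, q: Point) -> float:
    """d(p, q) = sqrt((x_p - x_q)^2 + (y_p - y_q)^2)."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def accuracy_measures(reference: Point, augmented: Point,
                      identified: Point) -> Dict[str, float]:
    return {
        # Tracking/co-registration error: where the overlay is vs. where the part is.
        "system_alignment": euclidean(reference, augmented),
        # Interaction error: where the user pointed vs. where the overlay was drawn.
        "user_interaction": euclidean(augmented, identified),
    }


if __name__ == "__main__":
    print(accuracy_measures((10.0, 10.0), (13.0, 14.0), (13.0, 15.0)))
    # {'system_alignment': 5.0, 'user_interaction': 1.0}
```

Keeping the two distances separate lets tracking error and human pointing error be analyzed independently.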

4.3.8. Repetition Across Angles

4.3.9. Data Organization

4.3.10. Participant Feedback

5. Results

5.1. Experiment 1

5.1.1. Impact of Method Sequence on Localization Times (Run 1)

- In Group A, where participants started with the traditional method (Figure 10b), localization times were significantly shorter with the traditional method (p = 0.003), indicating an advantage when beginning with this method.

- Group B, where participants started with the HoloLens™ method (Figure 10c), showed the reverse pattern: median times were slightly shorter with the HoloLens™ method. However, these differences were not statistically significant (p = 0.544), suggesting that method sequence had minimal impact on task completion times in this group.

- Interpretation: Across the entire population (Figure 10a), there is a weak effect (p = 0.068) suggesting that the traditional method is more efficient than the HoloLens™-based method. For the subgroup that started with the traditional method, this effect is statistically significant. On average, subjects performed better in their first attempt, regardless of which method they used. This suggests a loss of attention or possible fatigue during the experiment.

| Group | Method | Mean (Seconds) | Median (Seconds) | Wilcoxon Test (Statistic, p-Value) |
|---|---|---|---|---|
| Entire Population (n = 10) | Traditional | 17.20 | 17.00 | (342.00, 0.068) |
| | HoloLens™ | 25.75 | 20.50 | |
| Group A (n = 5) | Traditional | 13.70 | 13.00 | (65.00, 0.003) |
| | HoloLens™ | 31.50 | 30.50 | |
| Group B (n = 5) | HoloLens™ | 20.00 | 15.50 | (113.50, 0.544) |
| | Traditional | 20.70 | 22.00 | |
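The table reports Wilcoxon test statistics for paired localization times. The article does not state which software or test variant was used, so the following is only a minimal sketch of the signed-rank test under stated assumptions: zero differences are discarded, tied absolute differences receive average ranks, the reported statistic is T = min(W+, W−), and the two-sided p-value uses the normal approximation with a tie correction and no continuity correction. For small samples, exact p-values are often preferred and can differ from this approximation, so the sketch is not expected to reproduce the tabulated values. The example times are made up.

```python
"""Hypothetical sketch: Wilcoxon signed-rank test for paired localization times.

Assumptions (not from the article): zero differences are discarded, tied
absolute differences receive average ranks, the reported statistic is
T = min(W+, W-), and the two-sided p-value uses the normal approximation with a
tie correction but no continuity correction.
"""
import math
from collections import Counter
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n in ascending order; tied values share their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # sorted positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Return (T, two-sided p) for paired samples x and y."""
    if len(x) != len(y):
        raise ValueError("x and y must be paired (same length)")
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_diffs = [abs(d) for d in diffs]
    ranks = average_ranks(abs_diffs)
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    t = min(w_plus, w_minus)
    mean = n * (n + 1) / 4
    ties = sum(c ** 3 - c for c in Counter(abs_diffs).values())  # tie correction term
    var = n * (n + 1) * (2 * n + 1) / 24 - ties / 48
    z = (t - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return t, p


if __name__ == "__main__":
    # Illustrative, made-up paired times in seconds (not the study's data).
    traditional = [12, 15, 9, 20, 14, 11, 18, 13]
    hololens = [25, 19, 16, 31, 14, 22, 27, 15]
    print(wilcoxon_signed_rank(traditional, hololens))
```

A paired test fits this design because each participant located components with both methods, so differences are taken within participant before ranking.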

5.1.2. Localization Times for Different Component Sizes (Run 2)

- Comparison of Methods: As illustrated in Figure 11, the traditional method resulted in shorter localization times than the HoloLens™ method:

  - SD Card: The traditional method recorded a median time of 3 s, while the HoloLens™ method recorded a median time of 32.9 s.

  - Resistor: The traditional method had a median time of 17 s, compared to 22.5 s for the HoloLens™ method.

- Variability in the HoloLens™ Method: Analysis of the HoloLens™ method revealed increased variability, most prominently for the SD card component, as evidenced by the wider interquartile range in the boxplot. This suggests potential limitations related to unstable marker tracking and subjective differences in user interpretation of the AR interface, which collectively contribute to greater inconsistency in task execution times.

5.1.3. Impact of User Expertise on Localization Times (Run 3)

- Comparison of Methods

5.1.4. Usability and User Experience

- Increasing marker size and contrast.

- Adding features like blinking or brighter colors for easier identification.

- “The contrast of the designated area should be higher”.

- “The marker should blink or shine brighter”.

- “It was difficult to place windows exactly where needed”.

- “The biggest challenge was getting used to the system. Once familiar, it felt more manageable”.

- Better marker visibility (e.g., improved size, contrast, and dynamic visual cues like blinking).

- Refinements to the interface for smoother navigation and interaction.

- Improved tools for managing AR elements like windows and input mechanisms.

5.2. Experiment 2

- Accuracy denotes the spatial precision of component localization, measured by the Euclidean distance between augmented/indicated positions and the reference position.

- Correctness refers to a binary classification of whether the user-indicated position falls within the target component’s footprint.

- Confidence reflects the average proportion of correct identifications made by participants per component size category and angle.
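Correctness and confidence as defined above can be sketched as a point-in-footprint test followed by a proportion per (size category, viewing angle) cell. This is an illustration under assumptions: footprints are modeled as axis-aligned rectangles or circles (mirroring the squares and circles used to map component boundaries), points on the boundary count as inside, and all names are hypothetical. Pooling trials within a cell equals the average of per-participant proportions only when every participant contributes the same number of trials to that cell.

```python
"""Hypothetical sketch: correctness (point-in-footprint) and confidence (proportion correct)."""
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple, Union

Point = Tuple[float, float]


@dataclass(frozen=True)
class RectFootprint:
    """Axis-aligned rectangular footprint given by its center and size."""
    cx: float
    cy: float
    width: float
    height: float

    def contains(self, p: Point) -> bool:
        return abs(p[0] - self.cx) <= self.width / 2 and abs(p[1] - self.cy) <= self.height / 2


@dataclass(frozen=True)
class CircleFootprint:
    """Circular footprint given by its center and radius."""
    cx: float
    cy: float
    radius: float

    def contains(self, p: Point) -> bool:
        return (p[0] - self.cx) ** 2 + (p[1] - self.cy) ** 2 <= self.radius ** 2


Footprint = Union[RectFootprint, CircleFootprint]


def is_correct(indicated: Point, footprint: Footprint) -> bool:
    """Binary correctness: does the user-indicated point fall inside the footprint?"""
    return footprint.contains(indicated)


def confidence(trials: Iterable[Tuple[str, int, bool]]) -> Dict[Tuple[str, int], float]:
    """Proportion of correct identifications per (size category, viewing angle)."""
    hits: Dict[Tuple[str, int], int] = defaultdict(int)
    totals: Dict[Tuple[str, int], int] = defaultdict(int)
    for size, angle, correct in trials:
        totals[(size, angle)] += 1
        hits[(size, angle)] += int(correct)
    return {key: hits[key] / totals[key] for key in totals}


if __name__ == "__main__":
    pad = RectFootprint(cx=0.0, cy=0.0, width=2.0, height=1.0)
    trials = [("small", 90, is_correct((0.9, 0.4), pad)),
              ("small", 90, is_correct((1.2, 0.0), pad)),
              ("medium", 45, True)]
    print(confidence(trials))  # {('small', 90): 0.5, ('medium', 45): 1.0}
```

Rotated component outlines would need a rotated-rectangle or polygon test instead of the axis-aligned check used here.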

5.2.1. Positional Accuracy Analysis

5.2.2. Component Identification Correctness Analysis

5.2.3. Component Identification Accuracy and Confidence

5.2.4. Time Analysis Across Viewing Angles

5.2.5. Usability and User Experience

- Very Poor (1): Completely inadequate or unsatisfactory.

- Poor (2): Significant issues or limitations.

- Neutral (3): Acceptable, neither positive nor negative.

- Good (4): Effective with minor limitations.

- Very Good (5): Highly effective and satisfactory.

Physical and Visual Discomfort

Precision of 3D Markers

- Increasing marker contrast and size to enhance visibility.

- Reducing flickering for more stable positioning.

Learning and Ease of Use

Overall Feedback and Limitations

Results Summary

6. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Attribute | Description |
|---|---|
| Participant ID | Unique identifier for each participant (P1–P10) |
| Viewing Angle | One of the three predefined viewing angles |
| Component | Identifier for the PCB component being located |
| Time Taken | Time (in seconds) to complete the identification task |
| Screenshot Filename | File name of the screenshot captured |
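The attribute table above maps naturally onto one record per identification trial. The sketch below is a hypothetical illustration, not the authors' data format: the field names, the CSV column names and the component identifier in the example are assumptions, and the allowed viewing angles are taken from the 90°, 60° and 45° angles reported for Experiment 2.

```python
"""Hypothetical sketch: one record per identification trial, mirroring the attribute table."""
import csv
import io
from dataclasses import dataclass
from typing import List

VIEWING_ANGLES = (90, 60, 45)  # degrees, the three angles reported for Experiment 2


@dataclass(frozen=True)
class TrialRecord:
    participant_id: str       # e.g. "P1" ... "P10"
    viewing_angle: int        # one of VIEWING_ANGLES
    component: str            # PCB component identifier
    time_taken_s: float       # seconds to complete the identification task
    screenshot_filename: str  # screenshot captured for the trial

    def __post_init__(self) -> None:
        # Reject values that cannot come from the described protocol.
        if self.viewing_angle not in VIEWING_ANGLES:
            raise ValueError(f"unexpected viewing angle: {self.viewing_angle}")
        if self.time_taken_s < 0:
            raise ValueError("time_taken_s must be non-negative")


def load_records(csv_text: str) -> List[TrialRecord]:
    """Parse CSV text with one row per trial (column names are hypothetical)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        TrialRecord(
            participant_id=row["participant_id"],
            viewing_angle=int(row["viewing_angle"]),
            component=row["component"],
            time_taken_s=float(row["time_taken_s"]),
            screenshot_filename=row["screenshot_filename"],
        )
        for row in reader
    ]


if __name__ == "__main__":
    sample = ("participant_id,viewing_angle,component,time_taken_s,screenshot_filename\n"
              "P1,90,C7,4.2,p1_90_c7.png\n")
    print(load_records(sample))
```

Validating each record on construction catches mistyped angles or negative times before any statistics are computed.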

| Run | Focus | Groups | Participants | Variable | Analysis Focus |
|---|---|---|---|---|---|
| Run 1 | 10 Components (All) | Group A: Traditional First, HoloLens™ Second (n = 5) | 5 | Method Sequence: Traditional → HoloLens™ | Impact of method sequence on localization time. |
| | | Group B: HoloLens™ First, Traditional Second (n = 5) | 5 | Method Sequence: HoloLens™ → Traditional | Impact of method sequence on localization time. |
| Run 2 | 2 Components (SD Card and Resistor) | Same Participants (n = 10) | 10 | Component Size: SD Card vs. Resistor | Effect of component size on localization time. |
| Run 3 | 10 Components (All) | Experts (≥8 years, n = 6) and Non-Experts (<8 years, n = 4) | 10 | Expertise: Experts vs. Non-Experts | Effect of expertise on localization time. |

| Group | Method | Mean (s) | Median (s) | Wilcoxon Test (Statistic, p-Value) |
|---|---|---|---|---|
| Entire Population (n = 10) | Traditional | 16.61 | 10 | (3210.5, 0.0013) |
| | HoloLens™ | 21.57 | 13.5 | |
| Experts (n = 6) | Traditional | 14.87 | 8 | (1480, 1.30 × 10⁻⁶) |
| | HoloLens™ | 23.60 | 16.5 | |
| Non-Experts (n = 4) | Traditional | 19.23 | 16.5 | (323, 0.4908) |
| | HoloLens™ | 18.53 | 10 | |

| Viewing Angle | Mean | Std Dev | Variance | Min | 25th Percentile | Median | 75th Percentile | Max |
|---|---|---|---|---|---|---|---|---|
| 90° | 3.26 | 1.81 | 3.28 | 1.26 | 2.16 | 2.59 | 3.40 | 8.46 |
| 60° | 2.48 | 0.69 | 0.47 | 1.36 | 1.96 | 2.46 | 2.83 | 4.22 |
| 45° | 2.45 | 1.25 | 1.57 | 0.97 | 1.31 | 2.28 | 3.10 | 6.25 |
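Each row of the table above is a standard descriptive summary of one viewing angle. The rows are internally consistent with variance = (std dev)², for example 1.81² ≈ 3.28 at 90°. The table does not state its units or whether sample or population estimators were used, so this sketch assumes sample estimators (n − 1) and linear interpolation between order statistics for percentiles (Python's `"inclusive"` method, which corresponds to NumPy's default `linear` percentile method). The function names are hypothetical.

```python
"""Hypothetical sketch: per-angle descriptive statistics matching the table columns."""
import statistics
from typing import Dict, Mapping, Sequence


def describe(values: Sequence[float]) -> Dict[str, float]:
    """Summary statistics for one viewing angle (needs at least two values)."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return {
        "mean": statistics.mean(values),
        "std": statistics.stdev(values),          # sample standard deviation (n - 1)
        "variance": statistics.variance(values),  # sample variance, equal to std ** 2
        "min": min(values),
        "p25": q1,
        "median": median,
        "p75": q3,
        "max": max(values),
    }


def describe_by_angle(data: Mapping[int, Sequence[float]]) -> Dict[int, Dict[str, float]]:
    """Apply describe() to the measurements recorded at each viewing angle."""
    return {angle: describe(vals) for angle, vals in data.items()}


if __name__ == "__main__":
    # Illustrative, made-up values (not the study's data).
    print(describe_by_angle({90: [1.3, 2.2, 2.6, 3.4, 8.5], 45: [1.0, 1.3, 2.3, 3.1, 6.3]}))
```

Reporting the median and quartiles alongside the mean is useful here because the maxima (for example 8.46 at 90°) indicate right-skewed distributions in which a few large values inflate the mean and standard deviation.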

| Experiment | Focus | Key Variables | Main Findings |
|---|---|---|---|
| Exp. 1 | AR vs. Traditional Method | Method, Component Size, User Expertise | Traditional method was faster overall, especially for experts. AR slightly better for non-experts. Component size and method order affected outcomes. |
| | Usability and Qualitative Feedback | AR usability, marker precision, interface | Mixed usability ratings. Issues included marker flicker, interface learning curve, and headset discomfort. Suggestions made for improving UI and comfort. |
| Exp. 2 | Accuracy at Different Viewing Angles | Viewing Angle (90°, 60°, 45°), Position Error | Highest accuracy at 90°. Significant decline in AR augmentation accuracy at 45°. Users compensated for AR drift using spatial cues. |
| | Component Identification Correctness | Correct/Incorrect identification, Position | Correctness highest at 90°, lowest at 45°. Larger components and edge placement improved results. Geometric bias noted for smaller components. |
| | Confidence and Component Size | Component Size (Small, Medium, Large) | Medium components identified with highest confidence. Small components most affected by viewing angle. Limited generalizability for large components. |
| | Time Analysis by Angle | Task Completion Time at 3 Angles | No significant difference. Slight variability across angles. Highest SD at 90° likely due to learning curve. |
| | Usability Feedback | Physical, visual, interface, learning curve | Participants cited headset discomfort, visual strain, and usability limitations. Training and ergonomic improvements recommended. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chandel, K.; Seipel, S.; Åhlén, J.; Roghe, A. Augmented Reality for PCB Component Identification and Localization. Appl. Sci. 2025, 15, 6331. https://doi.org/10.3390/app15116331
