Abstract

In the context of autonomous driving, predicting pedestrian behavior is a critical component for enhancing road safety. Currently, the focus of such predictions extends beyond accuracy and reliability, placing increasing emphasis on the explainability and interpretability of the models. This research presents a novel neuro-symbolic approach that integrates deep learning with fuzzy logic to develop a pedestrian behavior predictor. The proposed model leverages a set of explainable features and utilizes a fuzzy inference system to determine whether a pedestrian is likely to cross the street. The pipeline was trained and evaluated using both the Pedestrian Intention Estimation (PIE) and Joint Attention for Autonomous Driving (JAAD) datasets. The results provide experimental insights into achieving greater explainability in pedestrian behavior prediction. Additionally, the proposed method was applied to assess the data selection process through a series of experiments, leading to a set of guidelines and recommendations for data selection, feature engineering, and explainability.

1. Introduction and Related Work

Pedestrians are among the most Vulnerable Road Users (VRUs) and are one of the groups most severely affected by traffic incidents on European Union roads. According to the European Road Safety Observatory, pedestrians accounted for approximately 20% of all road fatalities in 2022, that is, roughly one in five road deaths [1]. Although road fatalities declined in 2023 and 2024 [2], progress remains insufficient to achieve a truly safe environment for all road users. These figures underscore the need for advances in pedestrian behavior prediction (also referred to as crossing action prediction) and in road safety measures to protect this vulnerable group and reduce accidents. Accordingly, many research groups have developed machine learning (ML) models and methods to build more robust prediction systems and to address the related challenges.

For a long time, most of these models relied on algorithms that can be considered ‘black boxes’ because they lack the ability to explain the reasoning behind their predictions. These approaches include models based on Recurrent Neural Networks (RNNs), Convolutional Neural Networks (ConvNets), Long Short-Term Memory networks (LSTMs), and, more recently, Transformer-based architectures. For example, SingleRNN leverages contextual features and employs an encoder–decoder architecture powered by RNNs [3]. In [4], the authors use a graph-based model and 2D human pose estimation to predict whether a pedestrian is going to cross the street. CapFormer [5] uses a self-attention alternative based on the Transformer architecture and focuses primarily on bounding boxes, pose estimation, and AV speed. Additionally, a 3D convolutional model (C3D) is employed, along with cropped regions of pedestrian bounding boxes from RGB video sequences, to facilitate spatiotemporal feature learning [6]. Another study [7] proposes a Stacked Fusion Gated Recurrent Unit (SF-GRU) approach composed of a five-layer stacked recurrent neural network that incrementally fuses multimodal features regarding pedestrian appearance, surrounding context, 2D body joint coordinates, bounding boxes over time, and ego-vehicle speed. Finally, the authors of [8] propose a Convolutional LSTM (ConvLSTM) network to capture spatiotemporal dependencies. This architecture extends the traditional LSTM by incorporating convolutional operations in both the input-to-state and state-to-state transitions. For pedestrian behavior prediction, the model takes a stack of images as input, processes them with a CNN to extract spatial features, and then feeds these features into the ConvLSTM to model temporal dynamics.

Furthermore, it is worth mentioning the benchmark developed by York University, which extensively assessed the performance of various ML approaches in the pedestrian behavior task. This benchmark not only standardized the evaluation criteria for the task but also introduced a model that combines the power of RNNs and 3D convolutions [9].

Despite the growing number of models and research efforts dedicated to pedestrian behavior prediction, only a limited subset explicitly addresses explainability or is designed with interpretability as a core focus. Understanding why a machine learning model makes a specific prediction remains both a significant challenge and a critical requirement, particularly in safety-critical domains where interpretability builds trust and supports informed decision-making.

Recent studies have begun to address this gap. For example, ref. [10] highlights that Transformer-based architectures offer advantages in interpretability due to their attention mechanisms, which inherently reveal which parts of the input the model focuses on during prediction. Similarly, ref. [11] introduces a dynamic Bayesian network that models the influence of interactions and social signals. This approach combines visual information with probabilistic inference techniques to provide transparent explanations, particularly by quantifying the relative importance of individual characteristics in shaping the predicted likelihood of pedestrian actions.

It is also worth noting that there is no universally accepted definition of interpretability and explainability within the machine learning community, and these terms are often used interchangeably [12]. However, some authors such as [13] emphasize the distinction between these concepts by framing them as questions: interpretability raises the question of ‘How does the model work?’ while explainability attempts to answer ‘What additional insights can the model provide?’ [12]. Additionally, the term interpretability is often associated with the degree to which a human can comprehend the rationale behind a decision, while explanation refers to the response to a why question [14]. In this paper, we use both terms complementarily to explain why the model predicts specific pedestrian behavior.

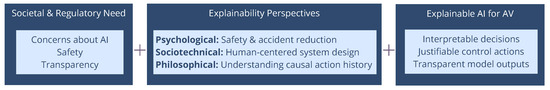

In the context of autonomous driving, explainability has gained prominence because of the need to clarify every decision made by autonomous vehicles (AVs). This need is raised from several disciplinary perspectives and centers on growing concerns about artificial intelligence (AI) systems, which in turn call for regulations and standards that ensure control over the data used and the predictions made by the AV. The need for explainability is summarized in Figure 1, which highlights the primary concerns related to societal and regulatory demands, the expectations from explainable AI, and various perspectives on explainability [15].

Figure 1. Explainability and perspectives under AV context.

On the other hand, the success of machine learning projects is significantly influenced by the quality and relevance of the datasets used for model training and testing. A well-chosen dataset can enhance the accuracy and efficiency of the model, whereas a poor selection can yield unfavorable results. Furthermore, dataset characteristics shape the behavior of a model: its performance in real-world scenarios may be compromised if the deployment context differs significantly from the training and evaluation datasets [16]. A well-chosen dataset can also facilitate the extraction of explainable features from pedestrian behavior data. Several studies aim to document the creation and use of datasets through datasheets, which record the motivation behind dataset creation, composition details, collection processes, preprocessing techniques, distribution methods, and data usage and maintenance guidelines [16]. Similarly, research efforts address other aspects of datasets, such as tracking and controlling dataset versions [17] and exploring data provenance [18]. Some data selection methods focus on choosing the most informative training examples for ML tasks within a specific dataset [19]. Nevertheless, dataset selection remains relatively under-discussed within the ML research community.

Regarding the pedestrian behavior datasets, there are numerous datasets which include pedestrian annotations. A dataset like Targeted Action Priors Network (TITAN) [20] concentrates on a range of pedestrian actions, including motion, communicative, and contextual actions but does not explicitly incorporate the crossing action in their dataset approach. Conversely, datasets such as Stanford-TRI Intent Prediction (STIP) [21], JAAD [22], and PIE [23] explicitly include crossing actions within their dataset approach. The crossing action labels are crucial to this study, as they enable the identification of pedestrian behavior in specific road situations. These labels are used as the ground truth in this work.

We discarded STIP for this research, as the dataset could not be shared by its owners at the time of the study. Therefore, we focus on two widely used and publicly available datasets: JAAD, available at https://data.nvision2.eecs.yorku.ca/JAAD_dataset/ (accessed on 10 May 2024), and PIE, available at https://data.nvision2.eecs.yorku.ca/PIE_dataset/ (accessed on 10 May 2024).

- JAAD Dataset: The JAAD dataset is a richly annotated collection consisting of 348 short video clips. It features a diverse range of road users across various driving environments, including different locations, traffic scenarios, and weather conditions. The dataset’s annotations are categorized into spatial, behavioral, contextual, and pedestrian-specific information. Approximately 72% of the pedestrians in the dataset actively engage in street crossing, while the remaining 28% do not.

- PIE Dataset: The creators of JAAD later released PIE, which contains over 300K labeled video frames recorded in Toronto in clear weather. In addition to annotations similar to those in JAAD, PIE incorporates ego-vehicle information obtained from on-board diagnostics (OBD) sensors. Unlike JAAD, which primarily focuses on pedestrians intending to cross, PIE provides annotations for all pedestrians in close proximity to the road, irrespective of whether they attempt to cross in front of the ego vehicle. PIE is also imbalanced, but in the opposite direction to JAAD: approximately 39.2% of pedestrians cross the street, whereas the remaining 60.8% do not.

Building on this context, and given the importance of both explainability and dataset selection in autonomous driving, this work proposes a novel neuro-symbolic approach that integrates symbolic reasoning with neural perception to enhance transparency and interpretability. We aim to develop an explainable pedestrian behavior predictor and to evaluate the data selection process through a series of experiments conducted on this predictor. The proposed method employs multiple explainable features extracted from the JAAD and PIE datasets, which are used for mining fuzzy rules. These rules are then used to define a fuzzy inference system that provides the pedestrian behavior prediction. This approach is particularly novel, as it not only focuses on accurate prediction but also establishes a baseline for explainability.

The rest of the paper proceeds as follows: Details about the proposed pipeline for the Neuro-symbolic approach are introduced in Section 2. Section 3 describes the implementation, and the experimental setup is presented in Section 4. The results and discussions are presented in Section 5. The guidelines and recommendations regarding feature selection, dataset selection, and explainable prediction are introduced in Section 6. Finally, Section 7 presents the conclusions and future work.

2. Proposed Method

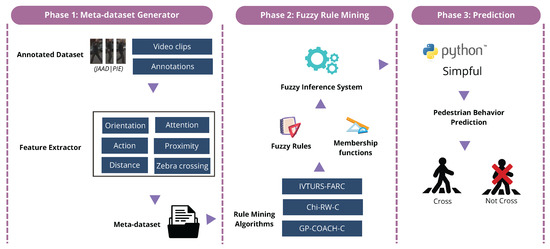

In this work, we propose a neuro-symbolic model predictor which is based on fuzzy logic and deep learning for feature extraction and requires a pipeline of three main steps: (1) meta-dataset generator, (2) fuzzy rule mining, and (3) crossing prediction. As can be observed in Figure 2, the pipeline starts with the annotated meta-dataset and ends with the pedestrian behavior prediction (whether the pedestrian will cross the road or not) including the probability of the crossing behavior.

Figure 2. Neuro-symbolic approach pipeline.

The following subsections define the three phases.

2.1. Phase 1: Meta-Dataset Generator

The first phase performs feature extraction. It begins with the pedestrian action datasets (JAAD and PIE), which consist of videos, images, and annotations. A feature extractor component based on deep neural networks then extracts the features, creating a meta-dataset that contains the extracted features for each pedestrian. The meta-dataset maintains a balance between the number of pedestrians who cross the street and those who do not, which ensures that the extracted fuzzy rules are equally informed by both scenarios.

As mentioned previously, our approach focuses on providing insights into the explainability of the pedestrian behavior predictor, with an emphasis on considering the overall processing pipeline. In particular, we aim to ensure that the pedestrian-related inputs are inherently explainable. To support this goal, we identified a set of features that offer meaningful contextual information and contribute to a comprehensive representation of road user behavior.

The selection of these features was guided by a contextual analysis of various road scenarios, interpreted from both the driver’s and the pedestrian’s perspectives. This analysis allowed us to capture the key elements that influence pedestrian behavior and driver decision-making, ensuring that the model is grounded in real-world dynamics. By incorporating features that reflect how road users perceive and respond to their environment, we enhance the interpretability and relevance of the predictive model in practical applications.

Therefore, in this study, we selected six features to serve as inputs for the pedestrian behavior predictor. Five of these features are derived using neural networks, while one is obtained directly from the pedestrian crossing datasets. The following list outlines the selected features:

- Body Orientation. This describes the pedestrian posture through an angle from 0° to 360°.

- Attention. This describes the attention of the pedestrian, indicating whether the pedestrian is looking at the ego vehicle.

- Action. This describes the motion state of the pedestrian, classifying between the following actions: stand, walk, wave, run, or undefined (used when the pedestrian action is not clear).

- Proximity to the road. This describes if the pedestrian is near the road. This feature is classified into three levels according to the pedestrian closeness to the road: near, medium distance, or far.

- Zebra Crossing. This represents the presence of a zebra crossing in the scene.

- Distance to ego vehicle. This represents the estimated distance between the pedestrian and the ego vehicle.

2.2. Phase 2: Fuzzy Rule Mining

During this phase, the meta-dataset was utilized to extract fuzzy rules and membership functions using fuzzy rule learning algorithms. Several algorithms were evaluated, including Chi-RW-C [24], GP-COACH-C [25], and IVTURS-FARC [26]. Among these, IVTURS-FARC was selected due to its superior performance and promising preliminary results in generating fuzzy rules from the meta-dataset.

IVTURS-FARC is a linguistic fuzzy rule-based classification algorithm that employs interval-valued restricted equivalence functions to enhance rule relevance during the inference process. Its fuzzy rule learning process is based on the FARC-HD algorithm [27]. The extracted fuzzy rules take the following form as outlined in ref. [26]:

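$$\text{Rule } R_j: \text{ If } x_1 \text{ is } A_{j1} \text{ and } \ldots \text{ and } x_n \text{ is } A_{jn} \text{ then Class} = C_j \text{ with } RW_j \qquad (1)$$

where $R_j$ represents the label of the $j$th rule, $x = (x_1, \ldots, x_n)$ is an $n$-dimensional pattern vector corresponding to pedestrian features in this study, $A_{ji}$ denotes an antecedent fuzzy set representing a linguistic term, $C_j$ is the class label, and $RW_j$ is the rule weight [28].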

During the fuzzy rule mining process, membership functions were generated to define the shape and characteristics of the fuzzy sets. Then, the fuzzy inference system was established using the Takagi–Sugeno (TS) fuzzy inference model [29], which represents nonlinear systems through a set of fuzzy rules whose consequent parts are linear state equations. In this work, zero-order (constant) output values are used.

To provide a clearer understanding of the fuzzy inference procedure, the behavior prediction output is defined according to
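A zero-order TS system is conventionally evaluated as a firing-strength-weighted average of constant rule consequents. The expression below states that standard form as a sketch. The notation is assumed for illustration and may differ from the exact formulation used with IVTURS-FARC: $M$ is the number of rules, $z_j$ is the constant consequent of rule $j$ (for example, 1 for crossing and 0 for not crossing), $\mu_{A_{ji}}$ is the membership function of the $i$th antecedent of rule $j$, $RW_j$ is the rule weight, and $T$ is a t-norm such as the minimum or the product.

$$\hat{y}(x) = \frac{\sum_{j=1}^{M} w_j(x)\, z_j}{\sum_{j=1}^{M} w_j(x)}, \qquad w_j(x) = RW_j \cdot T\big(\mu_{A_{j1}}(x_1), \ldots, \mu_{A_{jn}}(x_n)\big)$$

With consequents restricted to 0 and 1, $\hat{y}(x)$ lies in $[0, 1]$ and can be read as a crossing score.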

2.3. Phase 3: Prediction

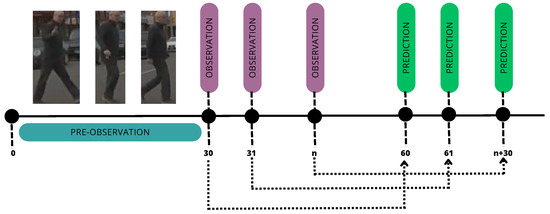

The pedestrian behavior prediction system takes the pedestrian features defined in Section 2.1 as input and applies the TS fuzzy inference system together with the fuzzy rules and membership functions established in Phase 2 of this pipeline. The predictor analyzes 30 frames as the pre-observation period and then uses a single frame as the observation time to predict pedestrian behavior for the upcoming second (see Figure 3).

Figure 3. Pedestrian behavior predictor time.

3. Implementation Details

The implementation of the neuro-symbolic pipeline comprises two main components: the generation of the meta-dataset and the implementation of the remaining two phases of the described pipeline. All learnable methods were trained on a system equipped with an AMD Ryzen 5 5600X 6-core CPU and an NVIDIA GeForce RTX 3080 GPU. The neuro-symbolic approach was executed on an ASUS Zephyrus laptop featuring an AMD Ryzen 7 CPU and an NVIDIA GeForce RTX 3050 GPU.

3.1. Phase 1: Meta-Dataset Generation

The meta-dataset generation involves feature extraction, starting with JAAD and PIE datasets, which consist of videos, images, and annotations. This process results in a meta-dataset containing the extracted features for each pedestrian, considering the following aspects:

- Frame-by-frame analysis: The extracted features capture the pedestrian’s state on a per-frame basis. This means that each frame is independently analyzed, providing a detailed understanding of pedestrian behavior over time.

- Data filtering: Videos with poor visibility and low quality were excluded to ensure reliable data. As a result, we carefully selected 284 videos from JAAD and 53 videos from PIE.

- Balancing crossing vs. non-crossing pedestrians: To mitigate the imbalance between pedestrians who cross the street and those who do not, two rules were defined to guide the data selection process and reduce noise:

  - Do not consider more than 60 frames after the pedestrian crosses;

  - Do not consider more than 90 frames when the pedestrian will not cross.
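The two frame restrictions can be illustrated with a small helper. This is only a sketch under stated assumptions: the function name `select_frames`, the `crossing_frame` argument, and the choice to keep the most recent 90 frames of a non-crossing track are hypothetical, since the text does not specify which 90 frames are retained.

```python
def select_frames(frames, crossing_frame=None, max_after_cross=60, max_non_cross=90):
    """Apply the two frame restrictions to one pedestrian track.

    frames: frame indices of the track, in ascending order.
    crossing_frame: index at which the pedestrian crosses, or None if the
        pedestrian never crosses (hypothetical annotation format).
    """
    if crossing_frame is None:
        # Assumption: keep the most recent frames of a non-crossing track.
        return frames[-max_non_cross:]
    # Keep everything up to the crossing event plus at most 60 frames after it.
    return [f for f in frames if f <= crossing_frame + max_after_cross]


track = list(range(200))
print(len(select_frames(track)))                      # non-crossing track
print(len(select_frames(track, crossing_frame=100)))  # crossing track
```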

The feature extraction was developed in Python 3 with the PyTorch 2.7.0 framework. It is composed of six modules, each responsible for extracting one feature, as described below.

3.1.1. Pedestrian Orientation

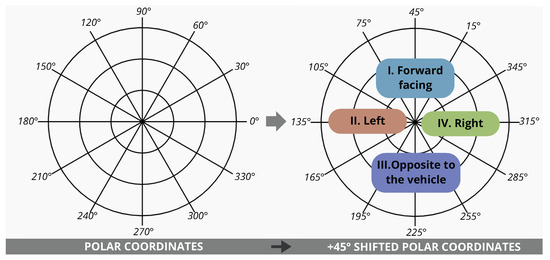

To calculate pedestrian body orientation, we used the pre-trained PedRecNet network [30], developed by Reutlingen University. This multi-task neural network supports various pedestrian detection functions based on 2D and 3D human pose estimation. By analyzing joint positions, the network estimates human body orientation, producing two key angles: the polar angle $\theta$ and the azimuthal angle $\varphi$.

- The polar angle $\theta$ ranges from 0° to 180°.

- The azimuthal angle $\varphi$ ranges from 0° to 360°, determining whether the pedestrian is oriented left, right, toward the vehicle, or opposite to the vehicle.

However, the right-facing orientation is discontinuous, as its angles span 0–45° and 315–360°. To standardize this representation, we applied a +45° shift to the final orientation (see Figure 4), so that the right-facing range becomes contiguous (0–90°). This adjustment is particularly relevant for the neuro-symbolic approach, where a fuzzy set is characterized by a membership function $\mu$ that takes continuous truth values within an interval. Since the original discontinuous range cannot be effectively represented as a single fuzzy set, this shift ensures a more coherent and interpretable classification.

Figure 4. Shifted polar coordinates to represent pedestrian orientation.
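The effect of the +45° shift can be checked with a few lines of Python. This is a minimal sketch: the function name `shift_orientation` is hypothetical, and angles are assumed to be given in degrees.

```python
def shift_orientation(azimuth_deg, offset_deg=45.0):
    """Rotate the azimuthal angle by +45 degrees and wrap it into [0, 360)."""
    return (azimuth_deg + offset_deg) % 360.0


# The right-facing range 315-360 and 0-45 maps onto the contiguous range 0-90.
for angle in (315.0, 350.0, 0.0, 44.0):
    print(angle, "->", shift_orientation(angle))
```

After the shift, a single membership function defined over a contiguous interval can cover the right-facing orientation.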

3.1.2. Attention and Zebra Crossing

The 2D body pose estimated by PedRecNet [30] is used to determine whether the pedestrian is looking toward the vehicle. Pedestrian attention is determined from the positions of the nose, left eye, and right eye keypoints [31].

Then, the presence of a zebra crossing in the road scene is determined based on the dataset annotations. For the fuzzy rules, if a zebra crossing is present, the crossing is considered to have an “easy” level of difficulty; otherwise, it is classified as “hard”.

3.1.3. Action

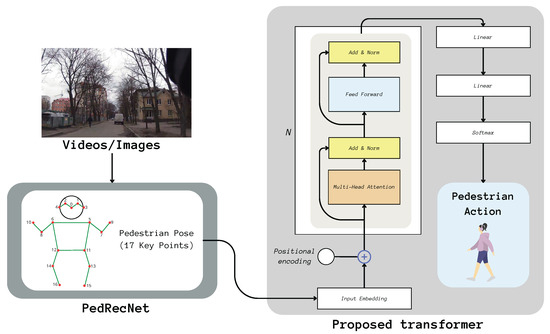

To determine the pedestrian’s action, a neural network was implemented using the pedestrian pose data provided by the pre-trained PedRecNet network [30] as input. The architecture of this neural network was inspired by the Action Transformer approach [32]. Our implementation focuses exclusively on the encoder component, enabling efficient feature extraction and classification, as illustrated in Figure 5.

Figure 5. Pedestrian action detection pipeline.

To train the proposed Transformer, we used the MPOSE2021 dataset [32], which establishes a relationship between human skeleton data and corresponding human actions. This dataset consists of 20 distinct actions and is derived from human pose data detected by OpenPose [33] and PoseNet [34] on widely used datasets. However, since the dataset includes a broad range of general human actions, we refined the classification to focus specifically on actions relevant to pedestrian crossing predictions. To achieve this, we reduced the number of action classes to five, grouping them as illustrated in Table 1.

Table 1. Pedestrian actions classification for our approach.

3.1.4. Pedestrian Proximity to the Road

To determine the pedestrian’s proximity to the road, we utilized the YOLOPv2 pre-trained network [35]. YOLOPv2 is a multi-task learning network capable of performing traffic object detection, drivable road area segmentation, and lane detection. For extracting pedestrian proximity to the road, we followed these steps:

- Step 1—Input Processing: Images or video frames are provided as input.

- Step 2—Segmentation and Detection: YOLOPv2 extracts the drivable road area segmentation and lane detection.

- Step 3—Distance Estimation: The pedestrian’s proximity is determined based on a minimum pixel distance threshold, which varies depending on the video resolution.

- Step 4—Proximity Classification: The pedestrian’s position is analyzed to determine if, when displaced by the estimated pixel threshold, they intersect the drivable road area or a lane, using the segmentation mask provided by YOLOPv2.
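Steps 3 and 4 can be sketched in plain Python once a binary road mask is available. The sketch makes several assumptions that are not stated in the text: the mask is a 2D list in which truthy values mark drivable-area or lane pixels, the pedestrian is displaced horizontally along the bottom edge of the bounding box, and two hypothetical thresholds (`near_px`, `far_px`) map the result onto the three proximity levels. It does not call YOLOPv2 itself.

```python
def proximity_to_road(bbox, road_mask, near_px, far_px):
    """Classify pedestrian proximity as 'near', 'medium', or 'far'.

    bbox: (x1, y1, x2, y2) pedestrian bounding box in pixels.
    road_mask: road_mask[y][x] is truthy for drivable-area or lane pixels.
    near_px, far_px: pixel margins (hypothetical, resolution dependent).
    """
    x1, _, x2, y2 = bbox
    height, width = len(road_mask), len(road_mask[0])
    foot_y = min(max(int(y2), 0), height - 1)  # row of the pedestrian's feet

    def touches_road(margin):
        # Widen the foot line by `margin` pixels on both sides and look for road.
        lo = max(int(x1) - margin, 0)
        hi = min(int(x2) + margin, width - 1)
        return any(road_mask[foot_y][x] for x in range(lo, hi + 1))

    if touches_road(near_px):
        return "near"
    if touches_road(far_px):
        return "medium"
    return "far"


# Toy mask: 5 rows, 20 columns, road occupying columns 10-19.
mask = [[1 if x >= 10 else 0 for x in range(20)] for _ in range(5)]
print(proximity_to_road((7, 0, 8, 4), mask, near_px=2, far_px=6))
```

Because the thresholds are expressed in pixels, they would need to be rescaled for each video resolution, as noted in Step 3.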

3.1.5. Distance to Ego Vehicle

The pedestrian distance was estimated using triangle similarity, as expressed in Equation (3), where $D$ is the estimated distance, $W$ is a known pedestrian width, $F$ is the focal length, and $P$ is the pedestrian width in pixels:

$$D = \frac{W \times F}{P} \qquad (3)$$

A separate estimation had to be computed for each dataset because the camera parameters differ between the recordings.
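The triangle similarity estimate is a one-line computation. The sketch below uses hypothetical function names and illustrative numbers (a 0.5 m pedestrian width and a 1000-pixel focal length), which are assumptions and not values from the datasets. The calibration helper shows how a focal length could be obtained from one reference measurement at a known distance.

```python
def calibrate_focal_length(pixel_width, known_distance_m, known_width_m):
    """Focal length in pixels from one reference object at a known distance."""
    return pixel_width * known_distance_m / known_width_m


def estimate_distance(known_width_m, focal_length_px, pixel_width):
    """Triangle similarity: distance = (W * F) / P."""
    if pixel_width <= 0:
        raise ValueError("pixel_width must be positive")
    return known_width_m * focal_length_px / pixel_width


focal = calibrate_focal_length(pixel_width=50, known_distance_m=10, known_width_m=0.5)
print(estimate_distance(known_width_m=0.5, focal_length_px=focal, pixel_width=25))
```

Since the focal length depends on the camera, one calibration per dataset would be needed in this scheme.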

3.2. Phase 2: Fuzzy Rule Mining

The fuzzy rule mining process was conducted using KEEL software 3.0 [36], which is publicly available at http://www.keel.es/ (accessed on 10 May 2024). KEEL is an open-source Java framework designed for machine learning and data mining tasks. Specifically, we employed the IVTURS-FARC algorithm, which was trained on the pedestrian meta-dataset to generate fuzzy rules and membership functions. The use of KEEL software involves three main steps:

- Uploading the dataset to be used for training.

- Designing the experiment by linking the dataset with the IVTURS-FARC algorithm.

- Executing the experiment to obtain the generated fuzzy rules and membership functions.

This process was repeated for each experiment conducted within the neuro-symbolic pedestrian prediction approach, particularly when changes were made to the training data or the fuzzy rule extraction process.

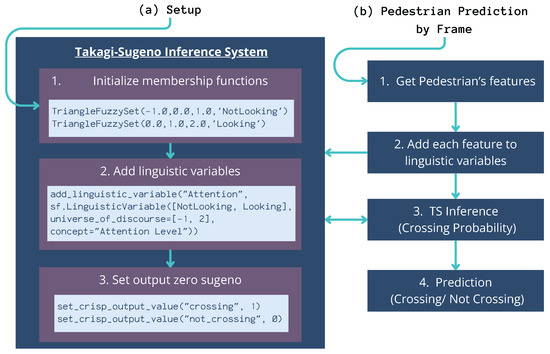

3.3. Phase 3: Prediction

To develop the pedestrian behavior predictor, we utilized Python along with the Simpful library [37]. Simpful is a Python-based library for fuzzy logic reasoning, designed to offer a simple and lightweight API that closely resembles natural language, making it intuitive and efficient for defining and applying fuzzy logic rules.

To implement the predictor, we developed a generic module capable of integrating the outputs generated by fuzzy rule mining, including membership functions and fuzzy rules. This module processes pedestrian features extracted from a single frame and predicts pedestrian behavior for the following second, enabling a dynamic and interpretable decision-making process. As shown in Figure 6, the first step of the algorithm (part a) is to set up the Takagi–Sugeno (TS) inference system, initialize all membership functions, and define the linguistic variables corresponding to the six pedestrian features identified in Phase 1. Then, for each frame (part b), the algorithm makes a prediction. In this step, the pedestrian features are obtained and inputted into the TS inference system. The system then produces an inference in the form of a pedestrian crossing probability. If this probability is greater than 0.5, the prediction is classified as Crossing; otherwise, it is classified as Not Crossing.

Figure 6. Pedestrian behavior prediction algorithm. (a) Setup of the Takagi–Sugeno inference system and (b) frame-by-frame pedestrian prediction.
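The two parts of the algorithm can be illustrated with a dependency-free sketch. It is written in plain Python rather than with Simpful, and everything in it is hypothetical: the two variables, their triangular membership functions, the three rules, and the use of the minimum as the AND operator are assumptions chosen for illustration, not the rules mined by IVTURS-FARC.

```python
def triangular(a, b, c):
    """Triangular membership function with feet a and c and peak b."""
    def mu(x):
        if x == b:
            return 1.0
        if x <= a or x >= c:
            return 0.0
        return (x - a) / (b - a) if x < b else (c - x) / (c - b)
    return mu


# (a) Setup: linguistic variables and weighted rules (all values hypothetical).
VARIABLES = {
    "distance_m": {"close": triangular(0, 0, 20), "far": triangular(10, 40, 40)},
    "proximity": {"near": triangular(0, 0, 1), "far": triangular(0, 1, 1)},
}
# Each rule: (antecedents, crisp consequent, rule weight); 1.0 means crossing.
RULES = [
    ({"distance_m": "close", "proximity": "near"}, 1.0, 1.0),
    ({"distance_m": "far"}, 0.0, 1.0),
    ({"proximity": "far"}, 0.0, 1.0),
]


def crossing_probability(features):
    """Zero-order Takagi-Sugeno output: weighted average of rule consequents."""
    num = den = 0.0
    for antecedents, consequent, weight in RULES:
        degrees = [VARIABLES[v][t](features[v]) for v, t in antecedents.items()]
        strength = weight * min(degrees)  # AND modelled as the minimum
        num += strength * consequent
        den += strength
    return num / den if den > 0 else 0.0


# (b) Per-frame prediction with the 0.5 decision threshold.
def predict(features, threshold=0.5):
    p = crossing_probability(features)
    return ("Crossing" if p > threshold else "Not Crossing"), p


print(predict({"distance_m": 15, "proximity": 0.0}))
print(predict({"distance_m": 35, "proximity": 1.0}))
```

Inputs are assumed to lie within each variable's universe of discourse. Because every rule and membership function is visible, the rules that fired for a given frame can be listed alongside the prediction.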

4. Experimental Setup

To evaluate the performance of the pedestrian behavior predictor within the neuro-symbolic framework, we established a well-structured experimental setup. This setup prioritizes data distribution as a key strategy and defines a testing methodology that enables the creation of diverse experiments. These experiments aim to provide deeper insights into the impact of dataset characteristics and data selection on the predictor’s performance. The following section details the distribution of training and testing data, along with the testing methodology.

4.1. Data Distribution

Based on the selected videos used for meta-dataset generation in Section 3.1, we define the following key aspects for structuring the training and testing sets.

- Training data prioritization: During the training phase, videos were carefully sorted based on their quality and relevance, prioritizing the most representative ones at the beginning of the training set. This strategy aims to enhance the learning process by focusing on the most informative samples.

- Testing data organization: The testing phase is structured into four distinct groups: (1) $JAAD_{all}$, (2) $JAAD_{beh}$, (3) $PIE_{all}$, and (4) $PIE_{beh}$. The *all* versions include all pedestrians annotated in the testing videos from the JAAD and PIE datasets, while the *beh* groups exclude videos where pedestrians are annotated as irrelevant.

- Training and testing distribution: The selected videos are divided into training and testing sets as detailed in Table 2.

Table 2. Dataset sampling for neuro-symbolic approach.


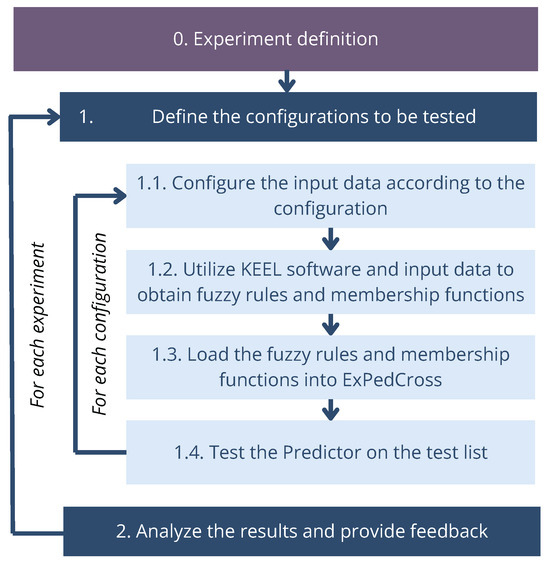

4.2. Testing Methodology

To conduct the experiments, various data configurations were employed, focusing on five key aspects. Each experiment was designed to analyze specific aspects of dataset selection and configuration.

- Experiment 1—Dataset quantity: Varying the amount of data used for training.

- Experiment 2—Data preprocessing: Applying different preprocessing techniques to assess their impact.

- Experiment 3—Randomness: Evaluating the influence of random selection in data sampling.

- Experiment 4—Features ablation: Testing the effect of excluding specific features.

- Experiment 5—Incorporation of diverse datasets: Combining data from both JAAD and PIE for a mixed dataset evaluation.

Based on the meta-dataset and the sorted list of videos from JAAD and PIE, the test methodology is applied across five experiments. As illustrated in Figure 7, each experiment begins by defining the configurations to be tested. For each configuration, the input data is preprocessed and adjusted before being loaded into the KEEL software, which generates the fuzzy rules and membership functions. These outputs are then integrated into the predictor module, which is tested using the JAAD and PIE test datasets. Once all configurations have been tested, the results are analyzed to extract meaningful insights and refine the methodology. This structured approach ensures a thorough evaluation of the pedestrian behavior predictor, allowing for iterative improvements based on the observed outcomes.

Figure 7. Test methodology sequence for neuro-symbolic approach.

All experiments were evaluated using the F1 score (F1), which provides a balanced measure by calculating the harmonic mean of precision and recall.
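For reference, the F1 score for the crossing class can be computed as follows. This is a minimal sketch that assumes binary labels with 1 denoting crossing; the function name and the toy labels are illustrative.

```python
def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0  # precision and recall are both zero or undefined
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


print(f1_score([1, 1, 1, 0, 0], [1, 1, 0, 1, 0]))
```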

5. Experimental Results and Discussion

The following section presents the experimental results from the five previously mentioned experiments, with a focus on the pedestrian behavior prediction task. Additionally, key guidelines and recommendations derived from these experiments are provided.

5.1. Experiment 1—Dataset Quantity

In the context of ML, one of the most crucial decisions in model training is determining the optimal amount of data to use. The first experiment aimed to answer the following question: Does increasing the amount of training data improve the prediction performance?

To investigate this, we designed a set of incremental data configurations for both PIE and JAAD, gradually increasing the number of data rows. Each row represents the state and pedestrian features for one frame.

As can be observed in Table 3, the process began with 2000 rows (J2K and P2K), evenly balanced between pedestrians who cross and those who do not. The dataset size was then progressively expanded to include 4000 rows (J4K and P4K), 8000 rows (J8K and P8K), 10,000 rows (J10K and P10K), and then 14,000 rows (J14K and P14K). Since the PIE dataset is larger, we conducted additional experiments with 20,000 rows (P20K) and 80,000 rows (P80K).
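A balanced configuration such as J2K can be assembled by taking half of the rows from each class. The sketch below assumes that the meta-dataset rows are already in priority order and that each row is a `(features, label)` pair with label 1 for crossing and 0 for not crossing. The function name and the row format are hypothetical.

```python
def build_configuration(rows, n_rows):
    """Return the first n_rows/2 crossing and n_rows/2 non-crossing rows."""
    half = n_rows // 2
    crossing = [r for r in rows if r[1] == 1][:half]
    not_crossing = [r for r in rows if r[1] == 0][:half]
    if len(crossing) < half or len(not_crossing) < half:
        raise ValueError("not enough rows of one class for a balanced subset")
    return crossing + not_crossing


# Toy meta-dataset: ten rows with alternating labels.
rows = [({"frame": i}, i % 2) for i in range(10)]
subset = build_configuration(rows, 4)
print(len(subset), sum(label for _, label in subset))
```

Taking rows in their existing order preserves the prioritization of the most representative videos described in Section 4.1.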

Table 3. Experiment 1: dataset quantity results. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.

By systematically expanding the dataset, we observed that increasing the amount of data within the same dataset can enhance the predictor’s performance. However, this improvement does not necessarily translate to better results across different datasets. According to the accuracy and F1 score results, better performance is observed in configurations that do not include the maximum amount of data. This finding suggests that, for a pedestrian behavior predictor based on fuzzy logic, simply increasing the dataset size does not guarantee better generalization. Moreover, as shown in Table 3, a higher number of fuzzy rules does not always lead to better performance. Notably, P80K, one of the best-performing configurations on the PIE dataset, achieved its results with only 36 rules, the fewest among all tested configurations.

In addition, it is important to highlight that the J14K configuration, based on JAAD, demonstrated the best average performance across both datasets. This is particularly noteworthy because J14K contains 66,000 fewer records than the P80K configuration and provides similar performance over the PIE dataset. A possible explanation for this outcome is that JAAD videos contain a more diverse set of scenarios and pedestrian behaviors, enabling a better understanding of the factors influencing the crossing decision.

5.2. Experiment 2—Data Preprocessing

This experiment aimed to assess the impact and significance of data selection and filtering in achieving accurate results from the pedestrian behavior predictor. The primary objective was to validate the hypothesis that careful data filtering is essential to generate meaningful fuzzy rules.

To test this hypothesis, we explored various configurations with incremental dataset sizes both with and without frame restrictions defined in Section 3.1:

- For JAAD:

  - J14K: The filtered 14,000-row configuration from Experiment 1.

  - J14Kn: The 14,000-row configuration without data filtering.

  - J30Kn: The 30,000-row configuration without data filtering.

- For PIE:

  - P80K: The filtered 80,000-row configuration from Experiment 1.

  - P80Kn: The 80,000-row configuration without data filtering.

  - P160K: The 160,000-row configuration without data filtering.

By comparing these configurations, we aimed to determine whether unfiltered data introduce noise that negatively impacts the extraction of reliable fuzzy rules, ultimately affecting predictor performance.

As shown in Table 4, data selection and filtering played a crucial role in the generation of effective fuzzy rules. The results indicate that rules derived from datasets with frame restrictions consistently outperformed those generated from unfiltered data, leading to improved performance of the pedestrian behavior predictor.

Table 4.

Experiment 2—data preprocessing results. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.

5.3. Experiment 3—Randomness

The objective of this experiment was to determine whether incorporating human reasoning in the selection of videos for fuzzy rule generation could enhance the performance of the pedestrian behavior predictor or if random selection would be sufficient to achieve accurate results. To evaluate this hypothesis, we compared the results against the J8K and P14K configurations from Experiment 1—dataset quantity.

As outlined in the data distribution (see Section 4.1), before selecting input data for the fuzzy rule mining process, videos were systematically ranked based on their quality and relevance to pedestrian behavior prediction. This experiment introduced six additional configurations, each containing the same number of records as J8K (8000 records) or P14K (14,000 records) but with randomly selected videos contributing to the training set used for mining the fuzzy rules.

According to the results presented in Table 5, random data selection can enhance the performance of the explainable predictor within its own dataset, taking into account both accuracy and F1 score. Notably, the J8KR3 and P14KR1 configurations achieved the best results for JAAD and PIE, respectively. However, the process of analyzing and systematically ranking videos based on relevance, quality, and contextual suitability proved to be beneficial. This structured approach not only improved the predictor’s overall performance but also contributed to its generalization capabilities across different datasets.

Table 5.

Experiment 3—randomness results. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.
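The difference between ranked and random video selection can be illustrated with a small sketch. Everything here is hypothetical: the `select_videos` helper, the video metadata fields (`id`, `rank`, `n_rows`), and the synthetic video list are assumptions introduced for illustration, and the paper's actual ranking criteria are those of Section 4.1.

```python
import random

def select_videos(videos, target_rows, order="ranked", seed=0):
    """Pick videos until at least target_rows rows are gathered.

    videos: list of dicts with 'id', 'rank' (1 = most relevant), and 'n_rows'.
    order:  'ranked' uses the curated ranking, 'random' shuffles the videos.
    """
    if order == "ranked":
        candidates = sorted(videos, key=lambda v: v["rank"])
    elif order == "random":
        candidates = list(videos)
        random.Random(seed).shuffle(candidates)
    else:
        raise ValueError(f"unknown order: {order}")

    chosen, total = [], 0
    for video in candidates:
        if total >= target_rows:
            break
        chosen.append(video["id"])
        total += video["n_rows"]
    return chosen, total

# Synthetic metadata: 40 hypothetical videos with 500 usable rows each.
videos = [{"id": f"video_{i:04d}", "rank": i + 1, "n_rows": 500} for i in range(40)]

ranked_ids, ranked_total = select_videos(videos, 8000, order="ranked")
# Three random draws, mirroring the R1..R3 naming used for the random configurations.
random_runs = {f"R{s}": select_videos(videos, 8000, order="random", seed=s) for s in (1, 2, 3)}
```

Keeping the row budget identical across both modes isolates the effect of which videos are chosen from the effect of how much data is used.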

5.4. Experiment 4—Features Ablation

This experiment aimed to explore the relationship between different pedestrian features and their impact on prediction performance. It built upon the best performing configurations from Experiment 1, specifically J14K and P80K.

To assess the significance of each feature, the input data for the fuzzy rule mining process was systematically modified by removing one feature at a time from J14K and P80K. This approach allowed us to evaluate how the absence of each feature influenced the predictor’s accuracy. Table 6 presents the results of the different configurations tested:

Table 6.

Experiment 4—features ablation results. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.

According to the results, the most influential feature for JAAD was Proximity, while for PIE, the most critical features were Distance and Action. This conclusion is based on the drop in prediction performance observed when these features were removed from the input data during the fuzzy rule learning process (i.e., the J14K-NotProximity and P80K-NotDistance configurations). The significant decline in accuracy highlights the essential role these features play in pedestrian behavior prediction.

Additionally, during cross-testing, it was observed that Orientation and the Zebra crossing label from JAAD and the Distance from PIE had a negative impact on the results. This suggests that these features are not consistently represented across datasets. Variability in how these features are defined and labeled in different datasets can introduce inconsistencies, reducing the predictor’s ability to generalize across different environments.
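The leave-one-feature-out procedure can be sketched as follows. This is a minimal illustration under assumptions: the `FEATURES` list contains only the feature names mentioned in this section and may not match the full feature set defined earlier in the paper, and the helper names are hypothetical. The rule mining and evaluation steps are deliberately left out.

```python
# Feature names mentioned in this section (assumed, possibly incomplete).
FEATURES = ["Proximity", "Distance", "Action", "Orientation", "Attention", "CrossLevel"]

def ablation_configs(base_name, features):
    """Map each ablated configuration name to the list of features it keeps."""
    return {
        f"{base_name}-Not{removed}": [f for f in features if f != removed]
        for removed in features
    }

def drop_feature(rows, removed):
    """Return a copy of the rows without the removed feature column."""
    return [{k: v for k, v in row.items() if k != removed} for row in rows]

configs = ablation_configs("J14K", FEATURES)

# Hypothetical single row, only to show the effect of dropping one column.
sample_rows = [{"Proximity": 1.5, "Distance": 12.0, "Action": 1, "crossing": 1}]
without_proximity = drop_feature(sample_rows, "Proximity")
```

Each ablated row set would then be passed to the fuzzy rule mining step, and the drop in accuracy relative to the full configuration indicates how much the removed feature contributes.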

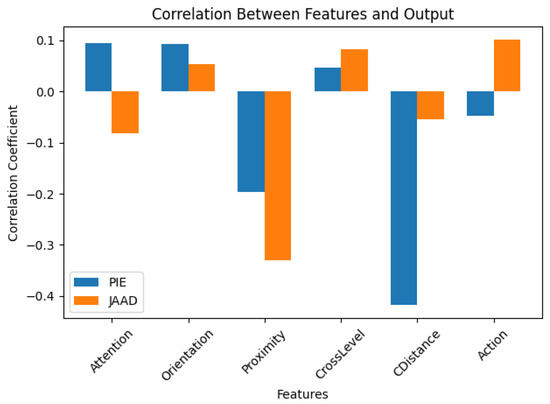

To further investigate the relationship between features and pedestrian behavior, a correlation analysis was conducted using data from the J14K and P80K configurations. As illustrated in Figure 8, the pedestrian behavior exhibits a slight positive correlation with the pedestrian action in JAAD. This suggests that as the pedestrian’s action class increases (see Table 1), the likelihood of crossing also increases.

Figure 8.

Features correlation.

Moreover, a moderate negative correlation was found between proximity to the road and crossing likelihood in both JAAD and PIE. This indicates that pedestrians closer to the road are more likely to cross. Similarly, in the PIE dataset, a moderate negative correlation was identified between crossing action and pedestrian distance, implying that pedestrians closer to the ego vehicle have a higher probability of crossing.

These findings underscore the importance of carefully selecting and interpreting features to ensure that the predictor captures meaningful patterns across different datasets. Conversely, the remaining pedestrian features do not show a strong correlation with the pedestrian’s decision to cross. This indicates that, on their own, the evaluated features are not sufficiently representative to accurately predict pedestrian crossing behavior. Instead, they should be complemented with additional features that provide more contextual and informative cues, enhancing the overall predictive capability of the model.
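As an illustration of the correlation analysis in this subsection, the sketch below computes a Pearson coefficient between one feature and the crossing label. The choice of the Pearson coefficient is an assumption, since the text does not name the coefficient used, and the sample values are invented for illustration only.

```python
import math

def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)

# Hypothetical values: proximity as a distance to the road, crossing as 0/1.
proximity = [0.5, 1.0, 2.0, 4.0, 6.0, 8.0]
crossing = [1, 1, 1, 0, 0, 0]

r = pearson(proximity, crossing)  # negative: smaller distance, more crossing
```

A negative coefficient with this encoding matches the interpretation given above, where pedestrians closer to the road are more likely to cross.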

5.5. Experiment 5—Incorporation of Diverse Datasets

The primary objective of this experiment was to determine whether the performance of the explainable predictor improves when training data from multiple datasets is combined. To investigate this, three training configurations were designed, integrating data from both JAAD and PIE during the fuzzy rule mining process. These configurations are defined as follows:

- J8K-P8K: Consists of 8000 records from each dataset.

- J10K-P10K: Includes 10,000 records from each dataset.

- J14K-P80K: Incorporates 14,000 records from JAAD and 80,000 records from PIE.

These configurations were selected to analyze the impact of different dataset proportions on the model’s ability to generalize across diverse pedestrian behavior scenarios.

As shown in Table 7, combining datasets to generate the training input results in only a marginal improvement in performance during cross-testing. On the JAAD dataset, there is no clear improvement in either accuracy or F1 score, while on the PIE dataset there are improvements. This outcome suggests that the limited performance gain is likely due to the datasets containing similar scenarios, reducing the potential benefits of data fusion.

Table 7.

Experiment 5—diverse datasets results. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.

5.6. Analysis of the Explainability of the Experimental Results

Based on the results obtained from the five experiments, we have summarized the best performing configurations of the pedestrian behavior predictor. These results are presented in Table 8 and include fuzzy rules trained with the following datasets: (1) 14,000 records from ordered JAAD videos (J14K), (2) 8000 records from randomly selected JAAD videos (J8KR3), and (3) a combined dataset of 10,000 records from PIE and 10,000 records from JAAD (J10K-P10K).

Table 8.

Best results over all experiments. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.

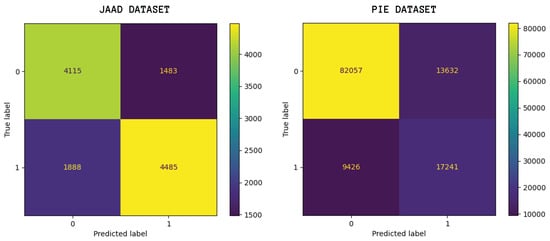

It is important to note that, for these configurations, mispredictions are balanced between the two pedestrian behaviors (crossing or not crossing), as can be observed in the confusion matrix shown in Figure 9. This confusion matrix was built from the results obtained with the J10K-P10K configuration.

Figure 9.

Confusion matrix for J10K-P10K configuration.

In the JAAD dataset, mispredictions occur in 26% of cases where the model predicts a pedestrian will cross when they actually will not, and in 29% of cases where the model predicts a pedestrian will not cross when they actually will. In contrast, for the PIE dataset, 14% of predictions incorrectly classify a pedestrian as crossing when they are not, while 35% of predictions fail to detect actual crossing behavior. This analysis suggests that the F1 score remains unaffected because the predictor achieves high accuracy in at least one of the classification categories, balancing the overall performance despite specific misclassification trends.
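To make the link between a confusion matrix and the reported metrics concrete, the sketch below derives accuracy, precision, recall, and F1 score from the four cells of a binary confusion matrix. The counts are hypothetical and are not taken from Figure 9; the function name and the convention that "crossing" is the positive class are assumptions.

```python
def binary_metrics(tp, fp, fn, tn):
    """Accuracy, precision, recall, and F1 with 'crossing' as the positive class."""
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("empty confusion matrix")
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = 2 * tp + fp + fn
    f1 = 2 * tp / denom if denom else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical counts: 100 crossing and 100 non-crossing samples.
metrics = binary_metrics(tp=70, fp=20, fn=30, tn=80)
```

Because F1 combines precision and recall, a model that misses many crossings (low recall) can still keep a moderate F1 score when its false alarms are rare (high precision), which is the effect discussed above.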

Table 9 presents a comparison between the best results we obtained and some state-of-the-art approaches based on the benchmark [9]. According to the table, although the neuro-symbolic approach does not show a significant improvement in terms of accuracy and F1 score, the key advantage of our method lies in producing explainable predictions, from the input to the final result. Nevertheless, our model J8KR3 provides more consistent results on the JAAD dataset.

Table 9.

Comparative results with state-of-the-art models. Accuracy (A) and F1 score. Bold values indicate the best performance for each evaluation metric across the different configurations.

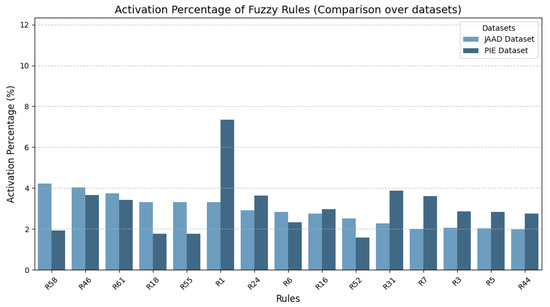

Regarding explainability, we analyzed the best performing configuration, J10K-P10K, which comprises 64 rules, to examine the fuzzy rules activated by the pedestrian behavior predictor when assessing pedestrian crossing behavior in the JAAD and PIE test sets. As shown in Figure 10, in one test set three primary rules (Rule 58, Rule 46, and Rule 61) are activated most frequently, while the remaining rules display a relatively similar activation percentage. In contrast, in the other test set there is a significant disparity between the most frequently activated rule and the other fourteen. Rule 1 was the most frequently activated, occurring 7.34% of the time.

Figure 10.

Top 15 fuzzy rules activation.
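A rule activation ranking of this kind can be computed with a simple counter. The sketch below assumes that each test sample is attributed to a single "winning" rule (for example, the rule with the highest firing strength); that attribution scheme, the function name, and the sample rule indices are assumptions for illustration.

```python
from collections import Counter

def top_rule_activations(winning_rules, k=15):
    """Return the k most frequently activated rules as (rule_id, percentage) pairs."""
    if not winning_rules:
        return []
    counts = Counter(winning_rules)
    total = len(winning_rules)
    return [(rule, 100.0 * count / total) for rule, count in counts.most_common(k)]

# Hypothetical winning-rule indices, one per test sample.
winning_rules = [58, 58, 46, 61, 58, 46, 1, 3]
top = top_rule_activations(winning_rules, k=15)
```

Comparing these percentages across test sets shows whether predictions rely on a handful of dominant rules or are spread over many rules.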

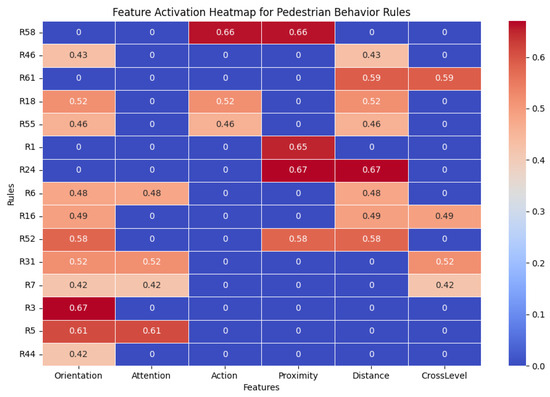

Figure 11 presents the distribution and impact of pedestrian features on the most activated fuzzy rules. From an explainability perspective, the most frequently activated rules offer preliminary insights into why a pedestrian chooses to cross and how explainable features support decision-making transparency. Proximity and Distance emerge as the most influential factors as evidenced by the high weights in rules where these features are present.

Figure 11.

Feature activation heatmap over the most activated fuzzy rules.

Orientation plays a significant role in decision-making, but contradictory rules indicate that it must be analyzed in combination with other features. While orientation provides directional context, its influence varies depending on additional conditions. Pedestrians facing the road are more likely to cross, whereas those walking forward or away tend to refrain from crossing.

Pedestrian Action serves as a strong supporting feature but is not a standalone predictor. Some pedestrians may walk near the road without actually crossing, highlighting the need for additional contextual cues.

Finally, Attention and Zebra Crossing Presence (CrossLevel) contribute to the prediction but act as secondary factors. Rather than determining pedestrian behavior outright, they refine and enhance the accuracy of predictions, complementing the primary features.
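To illustrate how weighted fuzzy rules over Proximity and Orientation can yield an explainable crossing score, the following sketch implements a tiny zero-order Takagi-Sugeno-style inference in plain Python. It is not the authors' rule base: the membership functions, the three rules, their weights, the units (meters and degrees), and the aggregation by weighted average are all assumptions chosen for illustration.

```python
def clamp01(value):
    """Clip a value to the interval [0, 1]."""
    return max(0.0, min(1.0, value))

# Hypothetical membership functions (not the ones used in the paper).
def proximity_near(meters):        # 1 at the curb, 0 from 4 m onward
    return clamp01((4.0 - meters) / 4.0)

def proximity_far(meters):         # 0 at the curb, 1 from 4 m onward
    return clamp01(meters / 4.0)

def orientation_facing(angle_deg):  # 0 degrees = facing the road
    return clamp01((90.0 - angle_deg) / 90.0)

def orientation_away(angle_deg):    # 1 from 90 degrees onward
    return clamp01(angle_deg / 90.0)

# Each rule: (name, antecedent, consequent, weight); consequent 1.0 = cross, 0.0 = not cross.
RULES = [
    ("R1", lambda p, a: min(proximity_near(p), orientation_facing(a)), 1.0, 0.9),
    ("R2", lambda p, a: proximity_far(p), 0.0, 0.8),
    ("R3", lambda p, a: orientation_away(a), 0.0, 0.6),
]

def crossing_score(proximity_m, angle_deg):
    """Weighted average of rule consequents, plus per-rule strengths for explanation."""
    strengths = {
        name: weight * antecedent(proximity_m, angle_deg)
        for name, antecedent, _, weight in RULES
    }
    total = sum(strengths.values())
    if total == 0.0:
        return 0.5, strengths  # no rule fired: undecided
    score = sum(strengths[name] * consequent for name, _, consequent, _ in RULES) / total
    return score, strengths

score_near, fired_near = crossing_score(0.5, 10.0)   # close to the road, facing it
score_far, fired_far = crossing_score(6.0, 170.0)    # far from the road, facing away
```

Returning the per-rule strengths alongside the score is what makes the prediction traceable: the dominant rule names the linguistic conditions that drove the decision.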

To visually illustrate the model’s output, Figure 12 presents an example prediction from the JAAD test set. In this example, the pedestrian is about to cross the road, and the figure showcases how the neuro-symbolic approach predicts the probability of crossing across different frames. Additionally, it highlights the most activated rules and the key features that influenced the prediction, providing valuable insight into the model’s decision-making process.

Figure 12.

Visual example test of the model over the JAAD video.

For each frame, the linguistic values of each explainable feature are displayed, along with a bar indicating the feature’s relevance based on the activated rules. The impact of proximity, distance, and orientation is evident throughout the example. Notably, proximity becomes particularly significant when the probability of crossing is high, reinforcing its crucial role in pedestrian behavior prediction.

6. Guidelines and Recommendations

We provide guidelines and recommendations derived from the five experiments. These recommendations focus on key aspects such as dataset selection and feature selection, aiming to enhance the reliability and interpretability of future models.

6.1. Dataset Selection

During the experiments, we identified the critical importance of carefully selecting and analyzing datasets. As a result, we have established the following guidelines for choosing an appropriate dataset for an ML project, particularly in the context of autonomous driving and explainability:

- Define what you expect from a dataset: Identify the task you want to achieve and what you expect in detail from the dataset. We recommend defining a checklist of criteria to save time when selecting the dataset. Establishing clear criteria for dataset selection can prevent the choice of inappropriate datasets, which could otherwise lead to erroneous feature selection and methodological approaches, ultimately resulting in incorrect conclusions.

- Study the dataset in depth: Take considerable time to identify, analyze, and understand the dataset, its data, properties, videos, images, and what it is composed of. Examine how the dataset is labeled and read the documentation in detail.

- Evaluate quality vs. quantity: In terms of explainability, a large amount of data for the pedestrian crossing task does not on its own guarantee that the fuzzy rules generalize. Therefore, it is important to evaluate the quality of the dataset and the diversity of its scenes and actors, which can ultimately be more relevant than the quantity of data.

- Each dataset is different: Datasets vary significantly in annotation standards and scene distribution, and a unified preprocessing pipeline may lead to inconsistencies in feature extraction. Using different datasets with the same preprocessing process without accounting for their differences can lead to unexpected results.

6.2. Feature Selection

Regarding feature selection for the pedestrian crossing prediction task, we gained valuable insights from this study. We organized these insights into key guidelines to help optimize feature selection for improved model performance and explainability.

- Group the features: One feature by itself will not be enough to identify the pedestrian crossing behavior. It is highly recommended to combine two or more features. The best strategy is to start with a few features and add the others progressively.

- Identify the generic features: Some features can be used only with a specific dataset, others need changes in the preprocessing phase, and others are independent of the dataset. Carefully analyze the use of these features because it can affect the cross-dataset evaluation.

- Preprocess the features: To improve the interpretability and explainability of pedestrian crossing action prediction, it is important to analyze each feature in detail and identify whether it needs any processing or whether its extraction has to account for any variable that can affect the system or introduce noise into it. Transforming raw data into more meaningful representations can enhance explainability. This may involve creating new features, normalizing data, or encoding categorical variables in ways that preserve their semantic meaning.

- Evaluate feature importance: Understanding the influence of each feature on the model’s predictions helps assess whether the selected features align with domain knowledge.

- Data Cleaning and Consistency: Ensuring that the data is free from inconsistencies, missing values, or biases is critical. Explainable models rely on well-structured, high-quality data to generate reliable insights.
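As a small illustration of the data cleaning guideline, the sketch below audits per-frame rows for missing feature values, invalid labels, and class balance before they are used for rule mining. The row layout, the `crossing` label key, and the `audit_rows` helper are hypothetical and not part of the described pipeline.

```python
def audit_rows(rows, required, label_key="crossing"):
    """Count rows with missing required features, invalid labels, and per-class totals."""
    report = {"missing": 0, "bad_label": 0, "class_counts": {0: 0, 1: 0}}
    for row in rows:
        # A required feature is missing if the key is absent or its value is None.
        if any(row.get(key) is None for key in required):
            report["missing"] += 1
        label = row.get(label_key)
        if label in (0, 1):
            report["class_counts"][label] += 1
        else:
            report["bad_label"] += 1
    return report

# Hypothetical rows: one clean, one with a missing feature, one with an invalid label.
rows = [
    {"Proximity": 1.2, "Action": 1, "crossing": 1},
    {"Proximity": None, "Action": 0, "crossing": 0},
    {"Proximity": 3.0, "Action": 1, "crossing": 2},
]
report = audit_rows(rows, required=["Proximity", "Action"])
```

Running such an audit on every dataset before training makes differences in annotation quality visible early, rather than after the rules have been mined.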

7. Conclusions and Future Work

In this work, we have presented a novel, interpretable, and explainable approach to pedestrian behavior prediction. The approach is based on a neuro-symbolic model using fuzzy logic. The experiments addressed several evaluation factors, from which we derived guidelines and recommendations on data selection and feature selection in an explainable and interpretable context. We emphasize that selecting the right dataset, one that is suitable, accurate, and comprehensive, is a challenging task in ML.

These insights show that, for an explainable predictor, a large amount of data does not necessarily lead to better results and does not on its own guarantee improved generalization. Additionally, because the goal is explainable predictions, it is crucial to analyze the videos and annotations in depth before using them. Data selection and filtering strategies are also important for creating meaningful fuzzy rules.

Furthermore, the features must be selected carefully. One feature by itself may not be enough to obtain accurate results; it needs to be complemented with additional features that convey more representative information. Likewise, the divergent representation of these features across datasets may lead to inconsistencies in prediction performance when the explainable predictor is applied; therefore, each feature has to be analyzed and adapted for each dataset.

Regarding the features extracted from the JAAD and PIE datasets in an explainable approach, proximity, orientation, action, and distance emerge as strong features that can reveal meaningful information about pedestrian behavior.

In the future, we will explore more complex strategies and approaches to develop an explainable and interpretable pedestrian behavior predictor. In addition, we will work on feature extraction, including features that capture the pedestrian’s motion or behavior history.

Author Contributions

Conceptualization, A.N.M.C., C.S.M. and M.Á.S.; methodology, A.N.M.C.; software, A.N.M.C.; validation, A.N.M.C.; formal analysis, A.N.M.C.; investigation, A.N.M.C.; resources, A.N.M.C.; data curation, A.N.M.C.; writing—original draft preparation, A.N.M.C.; writing—review and editing, A.N.M.C., C.S.M. and M.Á.S.; visualization, A.N.M.C.; supervision, C.S.M. and M.Á.S.; project administration, M.Á.S.; funding acquisition, C.S.M. and M.Á.S. All authors have read and agreed to the published version of the manuscript.

Funding

The work of Angie Nataly Melo Castillo has been funded by the HEIDI project of the European Commission under Grant Agreement 101069538 and the SEGVAUTO5G-CM project of the Regional Government of Madrid under reference 2024/ECO-277.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Acknowledgments

We acknowledge the use of AI-assisted tools in the preparation of this manuscript. Specifically, we used OpenAI’s ChatGPT (Version 4) to support writing and language refinement.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
| --- | --- |
| AI | Artificial Intelligence |
| AV | Autonomous Vehicle |
| CNN | Convolutional Neural Network |
| F1 | F1 Score |
| FIS | Fuzzy Inference System |
| ITS | Intelligent Transportation Systems |
| JAAD | Joint Attention for Autonomous Driving |
| ML | Machine Learning |
| PIE | Pedestrian Intention Estimation |
| RNN | Recurrent Neural Network |
| SFRNN | Stacked with multilevel fusion RNN |
| STIP | Stanford-TRI Intent Prediction |
| TITAN | Targeted Action Priors Network |
| TS | Takagi–Sugeno |
| VRU | Vulnerable Road User |
| YOLO | You Only Look Once |

References

1. Slootmans, F. European Road Safety Observatory; Technical Report; European Commission: Brussels, Belgium, 2022.

2. World Health Organization. Global Status Report on Road Safety 2023; World Health Organization: Geneva, Switzerland, 2023.

3. Kotseruba, I.; Rasouli, A.; Tsotsos, J.K. Do They Want to Cross? Understanding Pedestrian Intention for Behavior Prediction. In Proceedings of the 2020 IEEE Intelligent Vehicles Symposium (IV), Las Vegas, NV, USA, 19 October–13 November 2020; pp. 1688–1693.

4. Cadena, P.R.G.; Yang, M.; Qian, Y.; Wang, C. Pedestrian Graph: Pedestrian Crossing Prediction Based on 2D Pose Estimation and Graph Convolutional Networks. In Proceedings of the 2019 IEEE Intelligent Transportation Systems Conference (ITSC), Auckland, New Zealand, 27–30 October 2019; pp. 2000–2005.

5. Lorenzo, J.; Alonso, I.P.; Izquierdo, R.; Ballardini, A.L.; Saz, A.H.; Llorca, D.F.; Sotelo, M.A. Capformer: Pedestrian Crossing Action Prediction Using Transformer. Sensors 2021, 21, 5694.

6. Tran, D.; Bourdev, L.; Fergus, R.; Torresani, L.; Paluri, M. Learning Spatiotemporal Features with 3D Convolutional Networks. arXiv 2015, arXiv:1412.0767.

7. Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Pedestrian Action Anticipation Using Contextual Feature Fusion in Stacked RNNs. arXiv 2020, arXiv:2005.06582.

8. Shi, X.; Chen, Z.; Wang, H.; Yeung, D.-Y.; Wong, W.-K.; Woo, W.-C. Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting. Adv. Neural Inf. Process. Syst. 2015, 28. Available online: https://proceedings.neurips.cc/paper_files/paper/2015/file/07563a3fe3bbe7e3ba84431ad9d055af-Paper.pdf (accessed on 18 July 2024).

9. Kotseruba, I.; Rasouli, A.; Tsotsos, J.K. Benchmark for Evaluating Pedestrian Action Prediction. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2021; pp. 1257–1267.

10. Achaji, L.; Moreau, J.; Aioun, F.; Charpillet, F. Analysis Over Vision-Based Models for Pedestrian Action Anticipation. arXiv 2023, arXiv:2305.17451.

11. Muscholl, N.; Klusch, M.; Gebhard, P.; Schneeberger, T. Emidas: Explainable Social Interaction-Based Pedestrian Intention Detection Across Street. In Proceedings of the 36th Annual ACM Symposium on Applied Computing, Virtual, 22–26 March 2021.

12. Marcinkevičs, R.; Vogt, J.E. Interpretability and Explainability: A Machine Learning Zoo Mini-Tour. arXiv 2023, arXiv:2012.01805.

13. Lipton, Z.C. The Mythos of Model Interpretability. arXiv 2017, arXiv:1606.03490.

14. Molnar, C. Interpretable Machine Learning. 2022. Available online: https://christophm.github.io/interpretable-ml-book (accessed on 18 July 2024).

15. Atakishiyev, S.; Salameh, M.; Yao, H.; Goebel, R. Explainable Artificial Intelligence for Autonomous Driving: A Comprehensive Overview and Field Guide for Future Research Directions. arXiv 2023, arXiv:2112.11561.

16. Gebru, T.; Morgenstern, J.; Vecchione, B.; Vaughan, J.W.; Wallach, H.; Daumé, H., III; Crawford, K. Datasheets for Datasets. arXiv 2018, arXiv:1803.09010.

17. Bhardwaj, A.P.; Bhattacherjee, S.; Chavan, A.; Deshpande, A.; Elmore, A.J.; Madden, S.; Parameswaran, A.G. Datahub: Collaborative Data Science & Dataset Version Management at Scale. In Proceedings of the Seventh Biennial Conference on Innovative Data Systems Research, CIDR 2015, Asilomar, CA, USA, 4–7 January 2015; Available online: http://cidrdb.org/cidr2015/Papers/CIDR15_Paper18.pdf (accessed on 18 July 2024).

18. Cheney, J.; Chiticariu, L.; Tan, W.-C. Provenance in Databases: Why, How, and Where. Found. Trends Databases 2009, 1, 379–474.

19. Coleman, C.; Yeh, C.; Mussmann, S.; Mirzasoleiman, B.; Bailis, P.; Liang, P.; Leskovec, J.; Zaharia, M. Selection via Proxy: Efficient Data Selection for Deep Learning. In Proceedings of the International Conference on Learning Representations, ICLR 2020, Addis Ababa, Ethiopia, 30 April 2020; Available online: https://openreview.net/forum?id=HJg2b0VYDr (accessed on 18 July 2024).

20. Malla, S.; Dariush, B.; Choi, C. Titan: Future Forecast Using Action Priors. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 11186–11196.

21. Liu, B.; Adeli, E.; Cao, Z.; Lee, K.-H.; Shenoi, A.; Gaidon, A.; Niebles, J.C. Spatiotemporal Relationship Reasoning for Pedestrian Intent Prediction. IEEE Robot. Autom. Lett. 2020, 5, 3485–3492.

22. Rasouli, A.; Kotseruba, I.; Tsotsos, J.K. Are They Going to Cross? A Benchmark Dataset and Baseline for Pedestrian Crosswalk Behavior. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV 2017), Venice, Italy, 22–29 October 2017; pp. 206–213.

23. Rasouli, A.; Kotseruba, I.; Kunic, T.; Tsotsos, J. PIE: A Large-Scale Dataset and Models for Pedestrian Intention Estimation and Trajectory Prediction. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6261–6270.

24. Ishibuchi, H.; Yamamoto, T. Rule Weight Specification in Fuzzy Rule-Based Classification Systems. IEEE Trans. Fuzzy Syst. 2005, 13, 428–435.

25. Berlanga, F.; Rivera, A.; del Jesus, M.; Herrera, F. GP-COACH: Genetic Programming Based Learning of Compact and Accurate Fuzzy Rule Based Classification Systems for High Dimensional Problems. Inf. Sci. 2010, 180, 1183–1200.

26. Sanz, J.; Fernandez, A.; Bustince, H.; Herrera, F. IVTURS: A Linguistic Fuzzy Rule-Based Classification System Based on a New Interval-Valued Fuzzy Reasoning Method with Tuning and Rule Selection. IEEE Trans. Fuzzy Syst. 2013, 21, 399–411.

27. Alcala-Fdez, J.; Alcala, R.; Herrera, F. A Fuzzy Association Rule-Based Classification Model for High-Dimensional Problems with Genetic Rule Selection and Lateral Tuning. IEEE Trans. Fuzzy Syst. 2011, 19, 857–872.

28. Ishibuchi, H.; Nakashima, T. Effect of Rule Weights in Fuzzy Rule-Based Classification Systems. IEEE Trans. Fuzzy Syst. 2001, 9, 506–515.

29. Takagi, T.; Sugeno, M. Fuzzy Identification of Systems and Its Applications to Modeling and Control. IEEE Trans. Syst. Man Cybern. 1985, SMC-15, 116–132.

30. Burgermeister, D.; Curio, C. PedRecNet: Multi-Task Deep Neural Network for Full 3D Human Pose and Orientation Estimation. In Proceedings of the 2022 IEEE Intelligent Vehicles Symposium (IV), Aachen, Germany, 4–9 June 2022; pp. 441–448.

31. Gonzalo, R.I.; Maldonado, C.S.; Ruiz, J.A.; Alonso, I.P.; Llorca, D.F.; Sotelo, M.A. Testing Predictive Automated Driving Systems: Lessons Learned and Future Recommendations. IEEE Intell. Transp. Syst. Mag. 2022, 14, 77–93.

32. Mazzia, V.; Angarano, S.; Salvetti, F.; Angelini, F.; Chiaberge, M. Action Transformer: A Self-Attention Model for Short-Time Pose-Based Human Action Recognition. Pattern Recognit. 2022, 124, 108487.

33. Cao, Z.; Simon, T.; Wei, S.-E.; Sheikh, Y. Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7291–7299.

34. Papandreou, G.; Zhu, T.; Chen, L.-C.; Gidaris, S.; Tompson, J.; Murphy, K. PersonLab: Person Pose Estimation and Instance Segmentation with a Bottom-Up, Part-Based, Geometric Embedding Model. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 269–286.

35. Han, C.; Zhao, Q.; Zhang, S.; Chen, Y.; Zhang, Z.; Yuan, J. YOLOPv2: Better, Faster, Stronger for Panoptic Driving Perception. arXiv 2022, arXiv:2208.11434.

36. Triguero, I.; González, S.; Moyano, J.M.; García, S.; Alcalá-Fdez, J.; Luengo, J.; Fernández, A.; del Jesús, M.J.; Sánchez, L.; Herrera, F. KEEL 3.0: An Open Source Software for Multi-Stage Analysis in Data Mining. Int. J. Comput. Intell. Syst. 2017, 10, 1238–1249.

37. Spolaor, S.; Fuchs, C.; Cazzaniga, P.; Kaymak, U.; Besozzi, D.; Nobile, M.S. Simpful: A User-Friendly Python Library for Fuzzy Logic. Int. J. Comput. Intell. Syst. 2020, 13, 1687–1698.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).