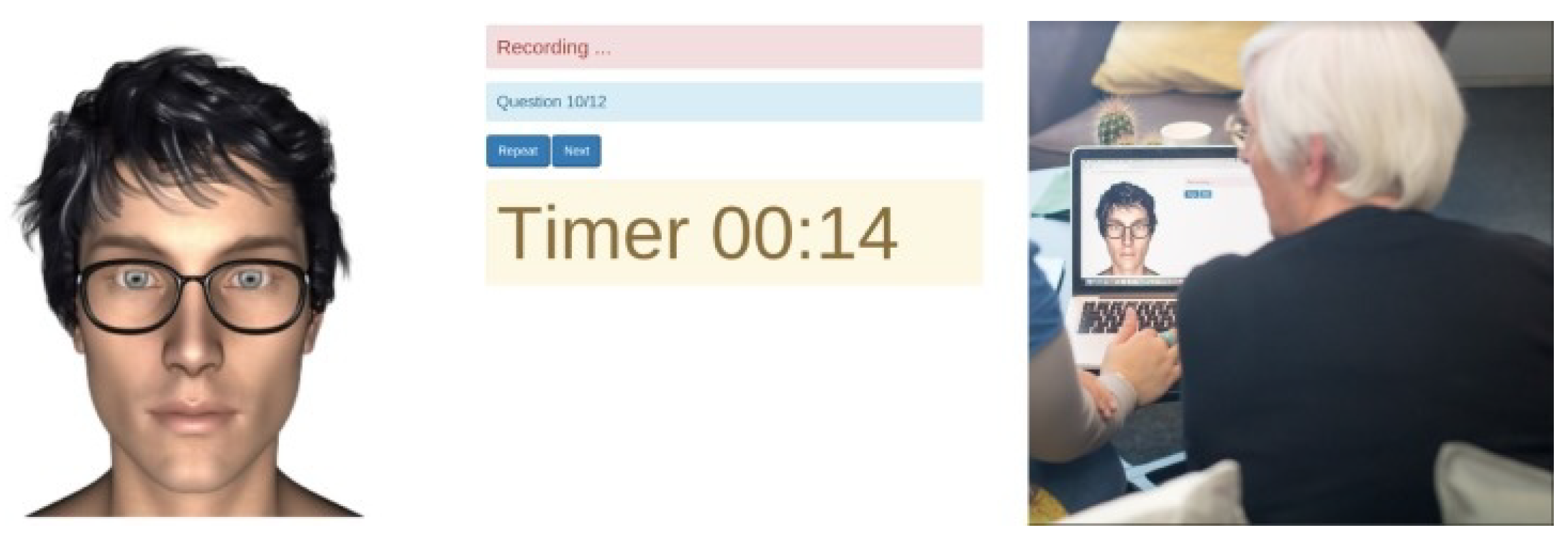

Figure 1.

A screenshot of the IVA in use [29].

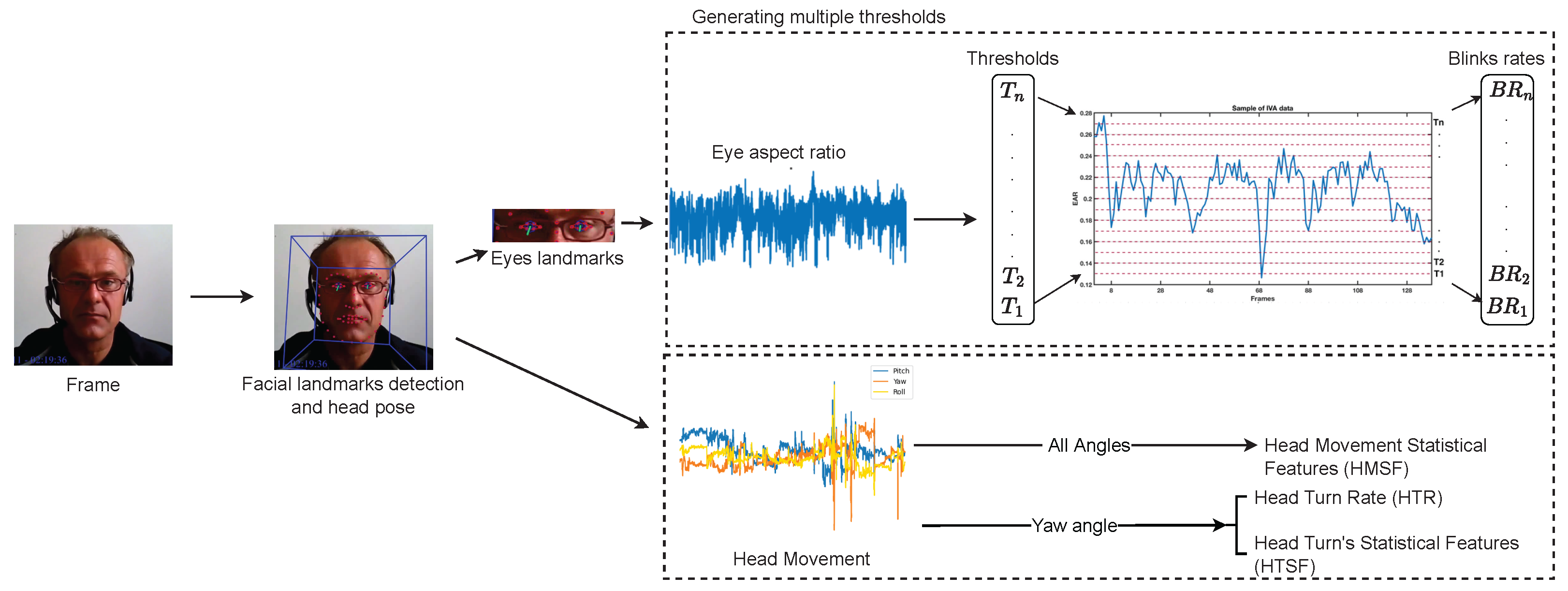

Figure 2.

Pipeline of the visual feature extraction approach. The top part of the figure shows the calculation of the eye aspect ratio (EAR), followed by the calculation of the eye blink rates (EBRs) at different thresholds over the whole video. The lower part illustrates how the three head orientation angles are extracted and used for two different visual features.
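
The EBR step in the top half of the pipeline can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation. A blink is assumed to be any run of consecutive frames whose EAR falls below the threshold, and the EBR is assumed to be expressed in blinks per minute using the video frame rate. The names `count_blinks` and `blink_rates` and the toy EAR trace are hypothetical.

```python
from typing import List, Sequence


def count_blinks(ear: Sequence[float], threshold: float, min_frames: int = 1) -> int:
    """Count runs of at least `min_frames` consecutive frames with EAR below `threshold`.

    Assumption: each such run is treated as one blink.
    """
    blinks = 0
    run = 0
    for value in ear:
        if value < threshold:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # a blink still in progress when the video ends
        blinks += 1
    return blinks


def blink_rates(ear: Sequence[float], thresholds: Sequence[float], fps: float) -> List[float]:
    """Eye blink rate (blinks per minute over the whole video) for each threshold."""
    minutes = len(ear) / fps / 60.0
    return [count_blinks(ear, t) / minutes for t in thresholds]


# Toy EAR trace (8 frames at a hypothetical 4 fps), for illustration only.
ear = [0.30, 0.31, 0.12, 0.10, 0.29, 0.30, 0.15, 0.28]
print(blink_rates(ear, thresholds=[0.05, 0.11, 0.20], fps=4))
```

Each threshold yields one EBR value, so a grid of 70 thresholds yields one 70-dimensional EBR feature vector per video.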

Figure 3.

Detected eye landmarks.
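
The EAR computed from these landmarks can be sketched with the widely used six-landmark definition. The figure does not state the landmark ordering, so the ordering below (corners p1 and p4, upper lid p2 and p3, lower lid p6 and p5) is an assumption, as is averaging the two eyes; the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def eye_aspect_ratio(eye: Sequence[Point]) -> float:
    """EAR for one eye from six landmarks p1..p6.

    Assumed ordering: p1 and p4 are the eye corners, p2/p3 lie on the upper
    lid and p6/p5 on the lower lid, so EAR = (|p2-p6| + |p3-p5|) / (2 |p1-p4|).
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def mean_ear(left_eye: Sequence[Point], right_eye: Sequence[Point]) -> float:
    """Average the EAR of both eyes (a common choice, assumed here)."""
    return (eye_aspect_ratio(left_eye) + eye_aspect_ratio(right_eye)) / 2.0


# Toy landmark sets: an open eye and a fully closed eye (lids touching).
open_eye = [(0, 0), (1, 1), (2, 1), (3, 0), (2, -1), (1, -1)]
closed_eye = [(0, 0), (1, 0), (2, 0), (3, 0), (2, 0), (1, 0)]
print(eye_aspect_ratio(open_eye), eye_aspect_ratio(closed_eye))
```

The EAR stays roughly constant while the eye is open and drops toward zero during a blink, which is what makes thresholding it a blink detector.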

Figure 4.

The mean and the third standard deviation (SD) for every participant in both datasets (left and right panels).

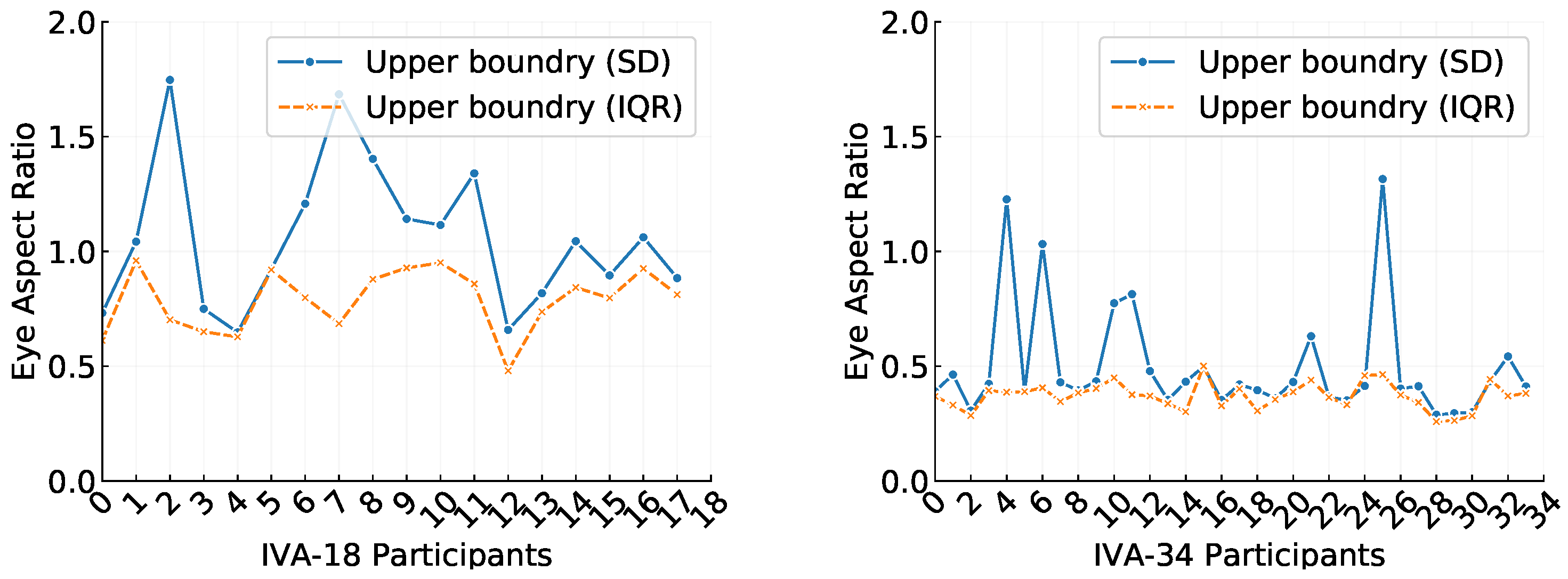

Figure 5.

Comparison of the third standard deviation (SD) and interquartile range (IQR) upper boundaries used to remove outliers from the eye aspect ratio (EAR) data of individual participants in the two datasets (left and right panels).
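
The two upper boundary (UB) rules can be sketched as follows, using the factor names from Tables 4 to 6 (a for SD, default 3; b for IQR, default 1.5). The use of the sample standard deviation, the "inclusive" quartile method, and evenly spaced thresholds from the minimum EAR to the UB (one reading of the "Min-UB" label) are assumptions, and all function names are hypothetical.

```python
import statistics
from typing import List, Sequence


def upper_boundary_sd(ear: Sequence[float], a: float = 3.0) -> float:
    """UB = mean + a * SD (sample standard deviation assumed)."""
    return statistics.mean(ear) + a * statistics.stdev(ear)


def upper_boundary_iqr(ear: Sequence[float], b: float = 1.5) -> float:
    """UB = Q3 + b * (Q3 - Q1), i.e. Tukey's upper fence when b = 1.5."""
    q1, _, q3 = statistics.quantiles(ear, n=4, method="inclusive")
    return q3 + b * (q3 - q1)


def remove_outliers(ear: Sequence[float], ub: float) -> List[float]:
    """Drop EAR values above the upper boundary (low values, i.e. blinks, are kept)."""
    return [v for v in ear if v <= ub]


def threshold_grid(ear: Sequence[float], ub: float, count: int = 70) -> List[float]:
    """`count` evenly spaced thresholds from min(EAR) to UB (spacing assumed)."""
    low = min(ear)
    step = (ub - low) / (count - 1)
    return [low + i * step for i in range(count)]


# Toy EAR values; 0.95 stands in for a spurious spike, 0.12 for a blink.
ear = [0.28, 0.30, 0.29, 0.31, 0.12, 0.30, 0.95]
ub = upper_boundary_iqr(ear, b=2.0)
clean = remove_outliers(ear, ub)
grid = threshold_grid(clean, ub)
print(ub, clean, len(grid))
```

Because the IQR rule relies on quartiles rather than the mean and SD, a single extreme spike inflates it far less than it inflates mean + a * SD, which is one plausible reason the two rules behave differently in Figure 5.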

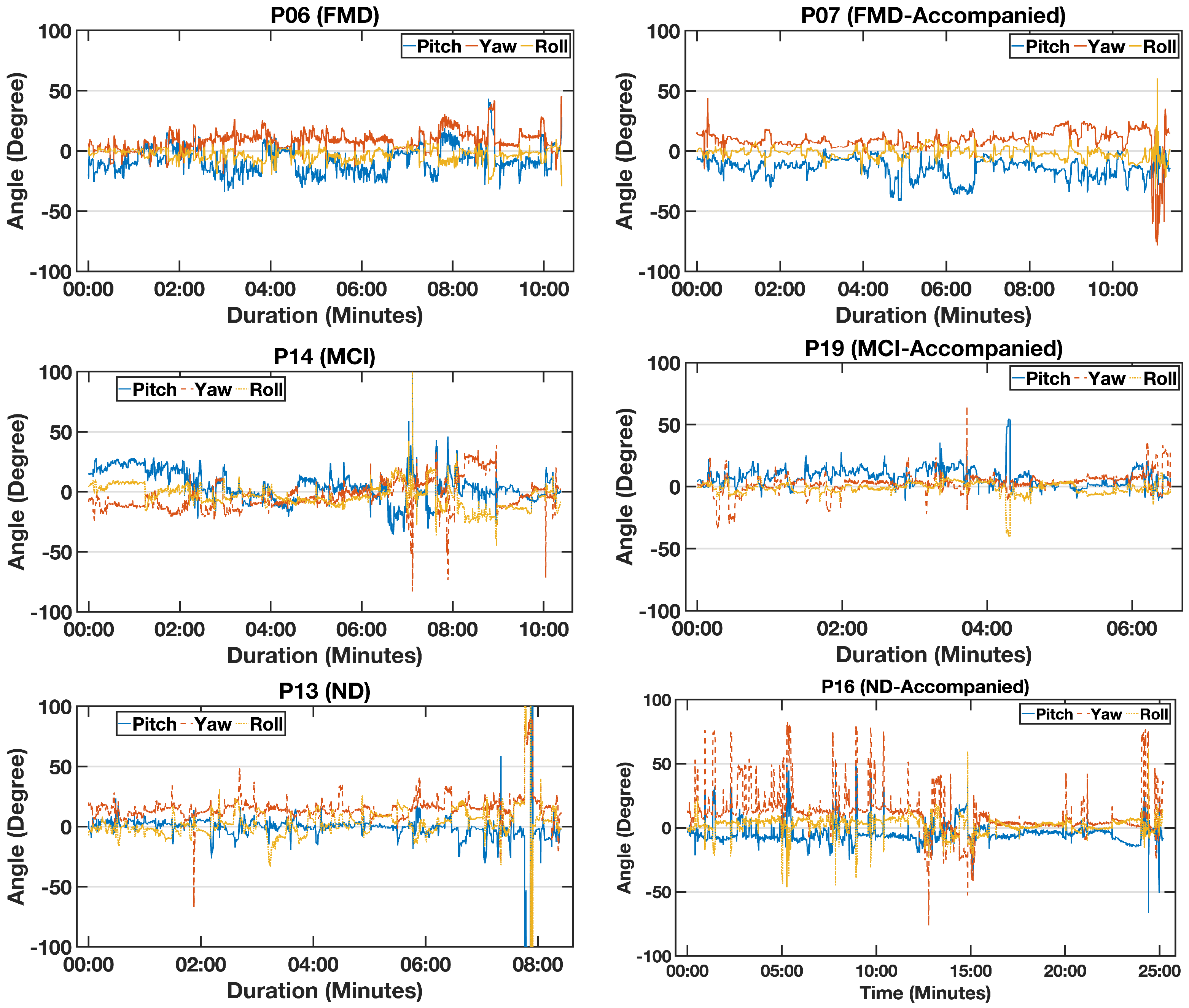

Figure 6.

An example of the calculated head angles (pitch in blue, yaw in orange, and roll in yellow) for six participants with functional memory disorder (FMD), mild cognitive impairment (MCI), and neurodegenerative disorder (ND) (who both came with a partner).
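
The source does not say which tracker or angle convention produced these curves. As a hedged sketch, the code below assumes a head rotation matrix R = Rz(roll) · Ry(yaw) · Rx(pitch) and recovers the three angles from it; both the convention and the function names are assumptions, and other trackers may assign axes differently.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def rotation_from_angles(pitch: float, yaw: float, roll: float) -> Matrix:
    """R = Rz(roll) @ Ry(yaw) @ Rx(pitch), angles in radians (assumed convention)."""
    cx, sx = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cz, sz = math.cos(roll), math.sin(roll)
    return [
        [cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx],
        [sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx],
        [-sy, cy * sx, cy * cx],
    ]


def angles_from_rotation(r: Matrix) -> Tuple[float, float, float]:
    """Recover (pitch, yaw, roll) in degrees from R under the same convention.

    Valid away from gimbal lock, i.e. for |yaw| < 90 degrees.
    """
    pitch = math.atan2(r[2][1], r[2][2])
    yaw = math.atan2(-r[2][0], math.hypot(r[2][1], r[2][2]))
    roll = math.atan2(r[1][0], r[0][0])
    return math.degrees(pitch), math.degrees(yaw), math.degrees(roll)


# Round trip: build R from known angles, then decompose it again.
r = rotation_from_angles(math.radians(10.0), math.radians(-20.0), math.radians(5.0))
print(angles_from_rotation(r))
```

Applying `angles_from_rotation` frame by frame would give three time series like the pitch, yaw, and roll curves in Figure 6.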

Figure 7.

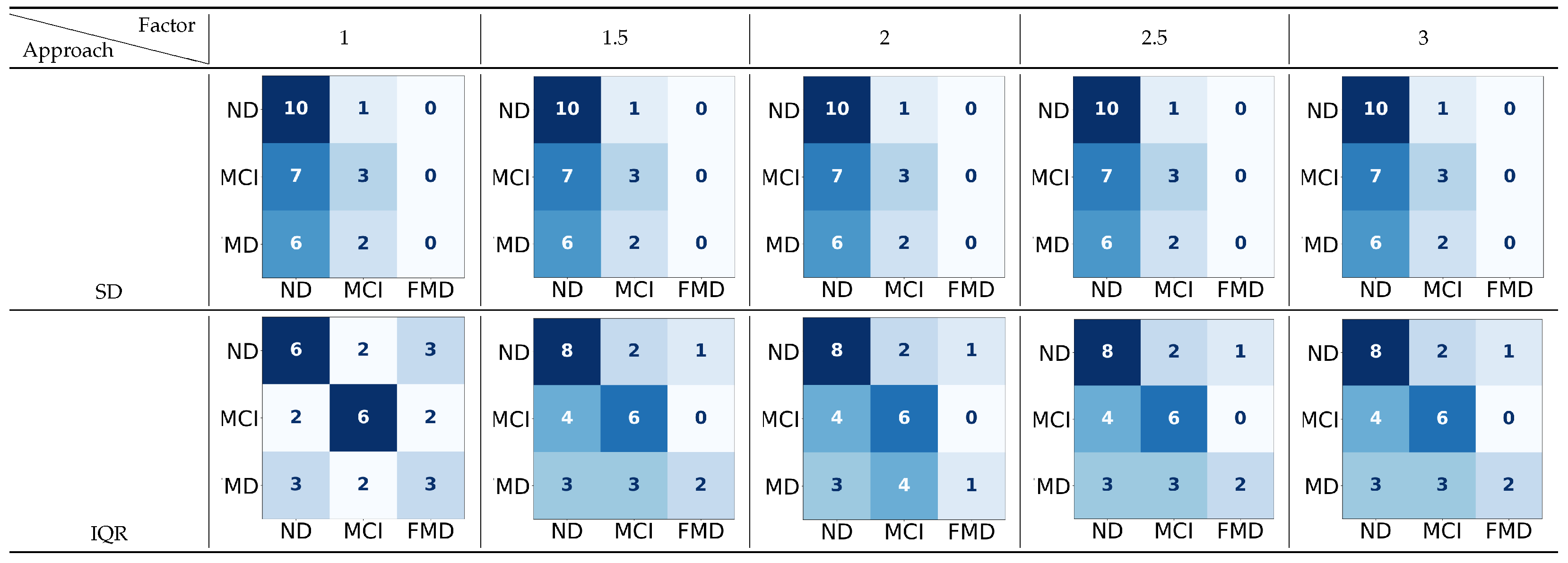

The confusion matrices for the three-way classification—neurodegenerative disorder (ND) vs. mild cognitive impairment (MCI) vs. functional memory disorder (FMD)—using two approaches to detect the upper boundary (UB), with a range of factors applied to 70 thresholds. In

the confusion matrix, darker colors indicate higher true predicted values, while lighter colors indicate lower predictions (rows: true labels; columns: classified labels).
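
The accuracy, precision, recall, and F-measure reported in the tables can be derived from confusion matrices laid out as in these figures (rows: true labels; columns: classified labels). The sketch below assumes macro averaging over classes, which the tables do not state; the function name is hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def metrics_from_confusion(cm: List[List[int]]) -> Tuple[float, float, float, float]:
    """Accuracy and macro-averaged precision, recall and F-measure.

    cm[i][j] counts samples with true label i classified as label j
    (rows: true labels; columns: classified labels).
    Macro averaging is an assumption; the tables do not state the averaging.
    """
    k = len(cm)
    total = sum(sum(row) for row in cm)
    accuracy = sum(cm[i][i] for i in range(k)) / total
    precisions, recalls, f_scores = [], [], []
    for i in range(k):
        tp = cm[i][i]
        predicted = sum(cm[row][i] for row in range(k))  # column sum
        actual = sum(cm[i])  # row sum
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        precisions.append(p)
        recalls.append(r)
        f_scores.append(2 * p * r / (p + r) if p + r else 0.0)
    return accuracy, mean(precisions), mean(recalls), mean(f_scores)


print(metrics_from_confusion([[5, 1], [2, 2]]))
```

For instance, `metrics_from_confusion([[9, 0], [7, 0]])` returns a recall of 0.5: under macro averaging, a binary classifier that assigns every sample to one class always has 50% recall.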

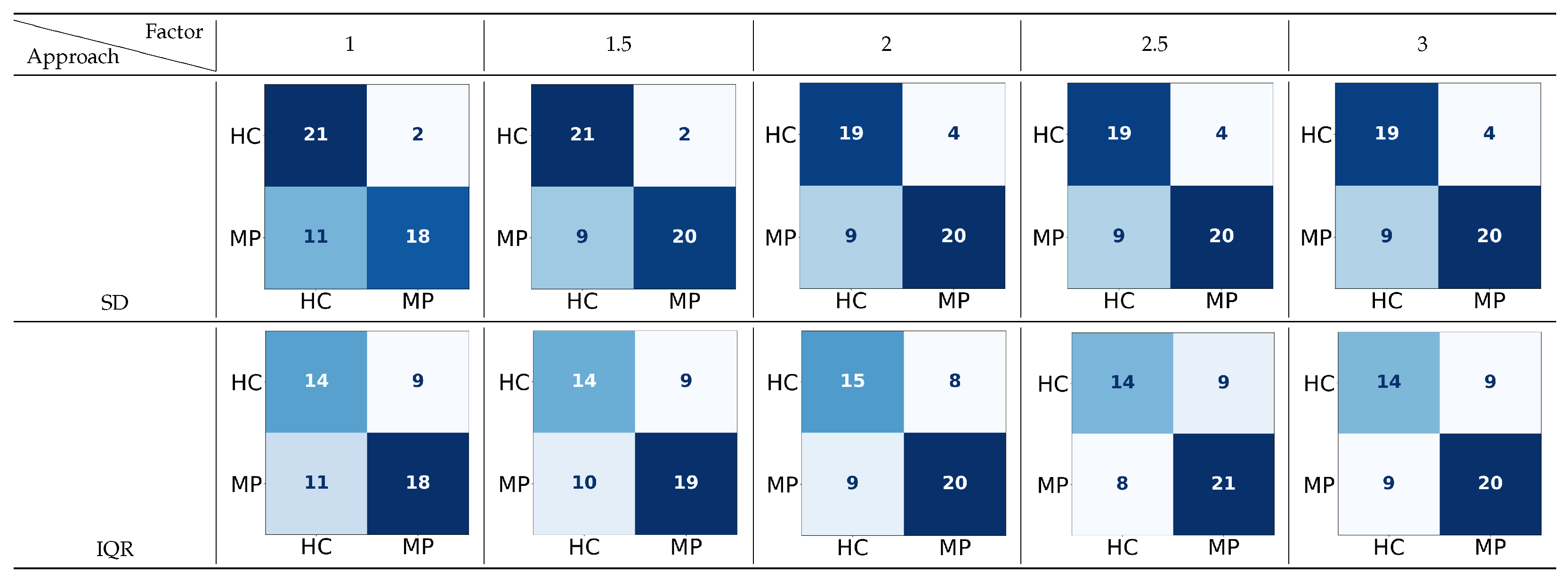

Figure 8.

The confusion matrices for the two-way classification of dementia (D) vs. non-dementia (Non-D), using two approaches to detect the upper boundary (UB) with a range of factors applied to 70 features or thresholds. In each confusion matrix, darker colors indicate higher values and lighter colors lower values (rows: true labels; columns: classified labels).

Figure 9.

The confusion matrices for the two-way classification of healthy controls (HCs) vs. memory problems (MPs), using two approaches to detect the upper boundary (UB) with a range of factors applied to 70 features or thresholds. In each confusion matrix, darker colors indicate higher values and lighter colors lower values (MP includes ND, MCI, and FMD; rows: true labels; columns: classified labels).

Figure 10.

The confusion matrices for the two-way classifications of neurodegenerative disorder (ND) vs. mild cognitive impairment (MCI), ND vs. functional memory disorder (FMD), and MCI vs. FMD, for both the standard deviation (SD) and interquartile range (IQR) approaches, using 70 features or thresholds. In each confusion matrix, darker colors indicate higher values and lighter colors lower values (rows: true labels; columns: classified labels).

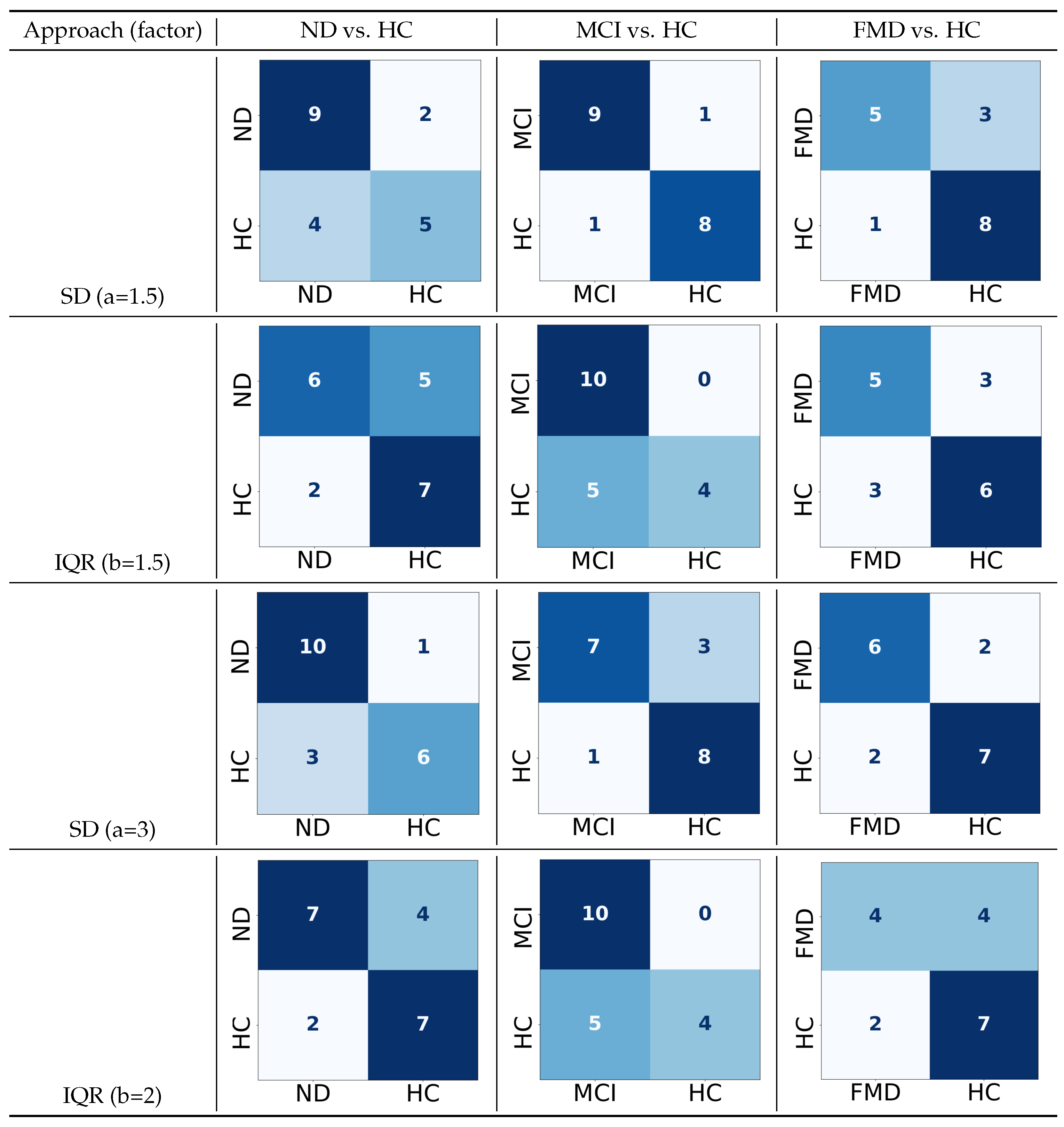

Figure 11.

The confusion matrices for the two-way classifications of neurodegenerative disorder (ND) vs. healthy controls (HCs), mild cognitive impairment (MCI) vs. HCs, and functional memory disorder (FMD) vs. HCs, for both the standard deviation (SD) and interquartile range (IQR) approaches with their default factors and with the factors that gave the highest performance, using 70 features or thresholds. In each confusion matrix, darker colors indicate higher values and lighter colors lower values (rows: true labels; columns: classified labels).

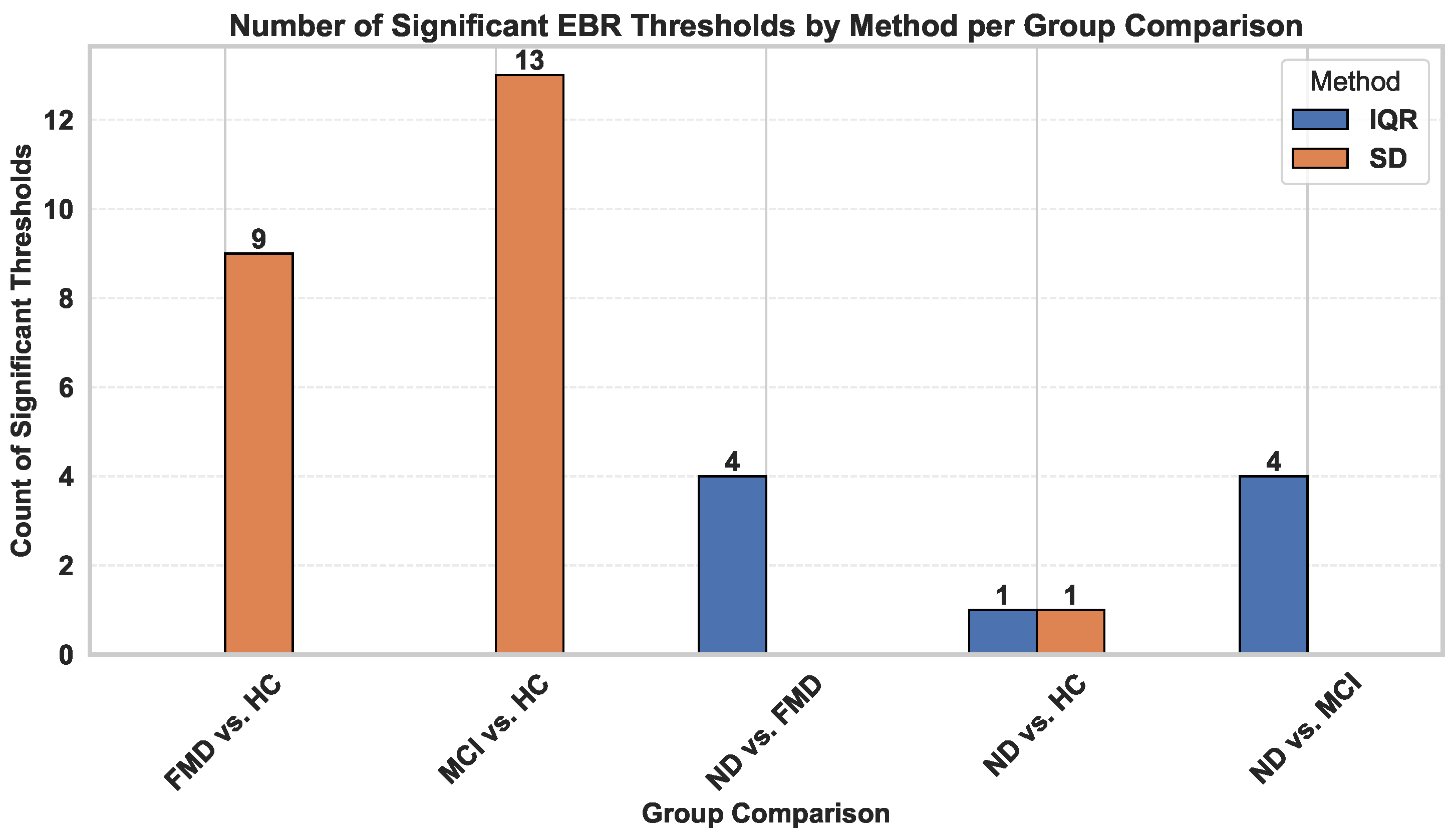

Figure 12.

An illustration of the number of statistically significant eye blink rate (EBR) thresholds identified using standard deviation (SD) and interquartile range (IQR) across different group pairs.

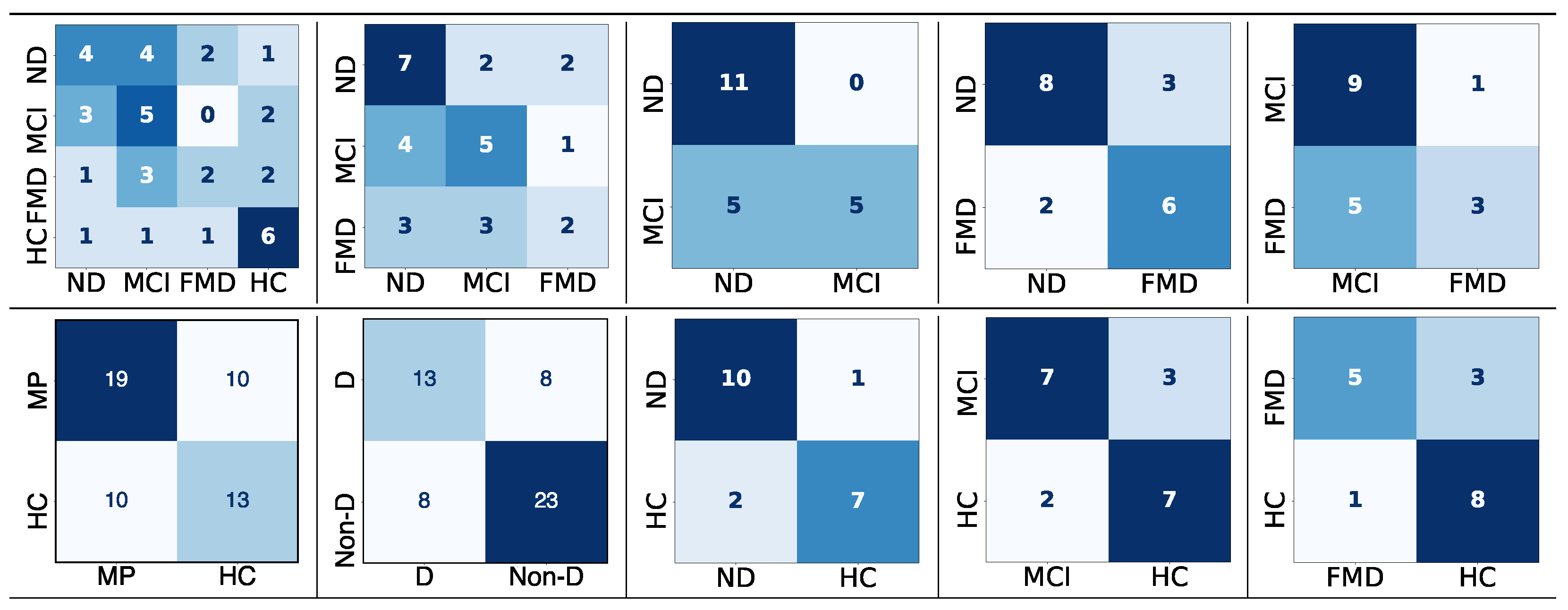

Figure 13.

The confusion matrices for the different classification tasks using the interquartile range (IQR) approach to detect the upper boundary (UB) with a range of factors applied to 70 features or thresholds. Darker colors indicate higher values and lighter colors lower values (rows: true labels; columns: classified labels).

Table 1.

Classification results for the three-way problem of neurodegenerative disorder (ND) vs. mild cognitive impairment (MCI) vs. functional memory disorder (FMD), using a range of factor values for SD and IQR to find the factor with the highest performance. These approaches are tested on 70 thresholds.

| Factor | Approach | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| 1 | SD (Min-UB) | 44% | 31% | 40% | 32% |
| 1 | IQR | 49% | 51% | 51% | 51% |
| 1.5 | SD (Min-UB) | 44% | 31% | 40% | 32% |
| 1.5 | IQR | 53% | 58% | 53% | 52% |
| 2 | SD (Min-UB) | 44% | 31% | 40% | 32% |
| 2 | IQR | 50% | 51% | 48% | 45% |
| 2.5 | SD (Min-UB) | 44% | 31% | 40% | 32% |
| 2.5 | IQR | 53% | 58% | 53% | 52% |
| 3 | SD (Min-UB) | 44% | 31% | 40% | 32% |
| 3 | IQR | 53% | 58% | 53% | 52% |

Table 2.

Classification results for the two-way problem of dementia (D) vs. non-dementia (Non-D), using a range of factor values for the standard deviation (SD) and interquartile range (IQR) approaches to identify the factor that yields the highest performance. These approaches were evaluated on 70 thresholds or features using k-nearest neighbor (KNN) classification with uniform weighting (D includes MCI and ND).

| Factor | Approach | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| 1 | SD (Min-UB) | 63% | 62% | 59% | 58% |
| 1 | IQR | 62% | 64% | 63% | 63% |
| 1.5 | SD (Min-UB) | 67% | 66% | 63% | 63% |
| 1.5 | IQR | 71% | 72% | 71% | 71% |
| 2 | SD (Min-UB) | 64% | 64% | 62% | 62% |
| 2 | IQR | 74% | 74% | 73% | 73% |
| 2.5 | SD (Min-UB) | 67% | 66% | 65% | 65% |
| 2.5 | IQR | 71% | 72% | 71% | 71% |
| 3 | SD (Min-UB) | 64% | 64% | 61% | 61% |
| 3 | IQR | 69% | 70% | 68% | 69% |
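
KNN with uniform weighting, as named in the captions of Tables 2 and 3, gives each of the k nearest neighbors one equal vote. The sketch below is a dependency-free illustration; Euclidean distance, k = 3, and leave-one-out evaluation are assumptions (the captions do not give k, the distance metric, or the evaluation protocol), the toy 2-D vectors stand in for the 70 EBR features, and the function names are hypothetical. scikit-learn's `KNeighborsClassifier(weights="uniform")` applies the same voting rule.

```python
import math
from collections import Counter
from typing import List, Sequence


def knn_predict(train_x: Sequence[Sequence[float]], train_y: Sequence[str],
                query: Sequence[float], k: int = 3) -> str:
    """Uniform-weight KNN: each of the k nearest neighbours (Euclidean) casts one vote.

    Counter.most_common keeps first-encountered order among equal counts, so
    ties go to the label whose first voter is closest to the query.
    """
    ranked = sorted(zip(train_x, train_y), key=lambda pair: math.dist(pair[0], query))
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


def leave_one_out_accuracy(x: List[List[float]], y: List[str], k: int = 3) -> float:
    """Hold out each sample in turn and classify it with the remaining ones."""
    hits = 0
    for i in range(len(x)):
        rest_x = x[:i] + x[i + 1:]
        rest_y = y[:i] + y[i + 1:]
        hits += knn_predict(rest_x, rest_y, x[i], k) == y[i]
    return hits / len(x)


# Synthetic, well-separated toy data (not from the study).
features = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [5.0, 5.0], [5.0, 6.0], [6.0, 5.0]]
labels = ["D", "D", "D", "Non-D", "Non-D", "Non-D"]
print(knn_predict(features, labels, [0.2, 0.2]), leave_one_out_accuracy(features, labels))
```

Because KNN relies on raw distances, features on larger numeric scales dominate the vote unless they are rescaled first.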

Table 3.

Classification results for the two-way problem of healthy controls (HC) vs. memory problems (MP), using a range of factor values for the standard deviation (SD) and interquartile range (IQR) approaches to find the factor with the highest performance. These approaches are tested on 70 thresholds or features using KNN with uniform weighting (MP includes ND, MCI, and FMD).

| Factor | Approach | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| 1 | SD (Min-UB) | 77% | 78% | 77% | 75% |
| 1 | IQR | 63% | 61% | 61% | 61% |
| 1.5 | SD (Min-UB) | 81% | 80% | 80% | 79% |
| 1.5 | IQR | 65% | 63% | 63% | 63% |
| 2 | SD (Min-UB) | 78% | 76% | 76% | 75% |
| 2 | IQR | 69% | 67% | 67% | 67% |
| 2.5 | SD (Min-UB) | 78% | 76% | 76% | 75% |
| 2.5 | IQR | 68% | 67% | 67% | 67% |
| 3 | SD (Min-UB) | 78% | 76% | 76% | 75% |
| 3 | IQR | 68% | 68% | 67% | 67% |

Table 4.

Classification results for the four-way classification of neurodegenerative disorder (ND) vs. mild cognitive impairment (MCI) vs. functional memory disorder (FMD) vs. healthy controls (HCs), using two factors per approach: for the standard deviation (SD), a = 3 (default) and a = 1.5 (highest performance); for the interquartile range (IQR), b = 1.5 (default) and b = 2 (highest performance). These approaches are tested on 70 thresholds (features) using a linear SVM.

| Factor | Approach | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| a, b = 1.5 | SD (Min-UB) | 44% | 35% | 43% | 38% |
| a, b = 1.5 | IQR | 49% | 53% | 49% | 49% |
| a = 3, b = 2 | SD (Min-UB) | 42% | 32% | 41% | 35% |
| a = 3, b = 2 | IQR | 49% | 54% | 49% | 49% |

Table 5.

Classification results for the two-way classifications of neurodegenerative disorder (ND) vs. mild cognitive impairment (MCI), ND vs. functional memory disorder (FMD), and MCI vs. FMD, using two factors per approach: for the standard deviation (SD), a = 3 (default) and a = 1.5 (highest performance); for the interquartile range (IQR), b = 1.5 (default) and b = 2 (highest performance). These approaches are tested on 70 thresholds or features using a linear SVM.

| Classes | Approach | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| ND vs. MCI | SD (Min-UB) | 67% | 68% | 67% | 66% |
| ND vs. MCI | IQR | 72% | 72% | 72% | 71% |
| ND vs. FMD | SD (Min-UB) | 56% | 29% | 50% | 37% |
| ND vs. FMD | IQR | 69% | 68% | 66% | 66% |
| MCI vs. FMD | SD (Min-UB) | 54% | 28% | 50% | 36% |
| MCI vs. FMD | IQR | 67% | 70% | 64% | 63% |

Table 6.

Classification results for the two-way classifications of neurodegenerative disorder (ND) vs. healthy controls (HCs), mild cognitive impairment (MCI) vs. HCs, and functional memory disorder (FMD) vs. HCs, using two factors per approach: for the standard deviation (SD), a = 3 (default) and a = 1.5 (highest performance); for the interquartile range (IQR), b = 1.5 (default) and b = 2 (highest performance). These approaches are tested on 70 thresholds or features using a linear SVM (P: precision; R: recall; F1: F-measure).

| Factor | Approach | ND vs. HC Accuracy | ND vs. HC P | ND vs. HC R | ND vs. HC F1 | MCI vs. HC Accuracy | MCI vs. HC P | MCI vs. HC R | MCI vs. HC F1 | FMD vs. HC Accuracy | FMD vs. HC P | FMD vs. HC R | FMD vs. HC F1 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| a, b = 1.5 | SD (Min-UB) | 69% | 70% | 69% | 69% | 89% | 89% | 89% | 89% | 77% | 78% | 76% | 76% |
| a, b = 1.5 | IQR | 69% | 67% | 66% | 65% | 72% | 83% | 72% | 71% | 67% | 65% | 65% | 65% |
| a = 3, b = 2 | SD (Min-UB) | 78% | 81% | 79% | 79% | 78% | 80% | 79% | 79% | 77% | 76% | 76% | 76% |
| a = 3, b = 2 | IQR | 72% | 71% | 71% | 70% | 72% | 83% | 72% | 71% | 67% | 65% | 64% | 64% |

Table 7.

For each classification task, the upper boundary (UB) approach that achieved the highest performance, and the p-value of the t-test for the difference between the best results obtained with the standard deviation (SD) and interquartile range (IQR) approaches (*: statistically significant; **: extremely statistically significant).

| Classification Task | Best Approach (IQR or SD) | p-Value |
|---|---|---|
| ND vs. MCI vs. FMD vs. HC | IQR | 0.04 * |
| ND vs. MCI vs. FMD | IQR | 0.02 * |
| ND vs. MCI | IQR | 0.0003 ** |
| ND vs. FMD | IQR | 0.02 * |
| MCI vs. FMD | IQR | 0.03 * |
| ND vs. HC | SD | 0.002 * |
| MCI vs. HC | SD | 0.01 * |
| FMD vs. HC | SD | 0.0004 ** |
| MP vs. HC | SD | 0.0001 ** |
| D vs. Non-D | IQR | 0.0001 ** |
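
The caption does not say which t-test variant was used or which samples were compared. As a hedged sketch, assuming a paired test over matched scores (for example, per-fold results of the SD and IQR runs), the statistic is t = mean(d) / (sd(d) / sqrt(n)) with n - 1 degrees of freedom, where d are the pairwise differences. The function name and the toy inputs are hypothetical; `scipy.stats.ttest_rel(a, b)` returns both this statistic and the two-sided p-value, while the version below stays dependency-free and computes only the statistic.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t(scores_a: Sequence[float], scores_b: Sequence[float]) -> Tuple[float, int]:
    """Paired t statistic and degrees of freedom for matched score pairs.

    t = mean(d) / (sd(d) / sqrt(n)), with d the pairwise differences and df = n - 1.
    Raises ZeroDivisionError if all differences are identical (zero spread).
    """
    if len(scores_a) != len(scores_b) or len(scores_a) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    t = statistics.mean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


# Toy matched scores, for illustration only.
print(paired_t([3.0, 4.0, 5.0], [2.0, 2.0, 2.0]))
```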

Table 8.

Classification results of the four-way, three-way, and two-way classification tasks, measuring system performance with individual features and the KNN classifier. The number of features is given in parentheses.

| Classification Task | Feature | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| ND/MCI/FMD/HC | HTR (1) | 45% | 46% | 42% | 59% |
| ND/MCI/FMD/HC | HTR + HTSF (13) | 48% | 50% | 46% | 53% |
| ND/MCI/FMD/HC | HMSF (54) | 44% | 45% | 44% | 44% |
| ND/MCI/FMD | HTR (1) | 45% | 31% | 46% | 37% |
| ND/MCI/FMD | HTR + HTSF (13) | 59% | 60% | 58% | 58% |
| ND/MCI/FMD | HMSF (54) | 53% | 59% | 55% | 53% |
| ND/MCI | HTR (1) | 53% | 51% | 51% | 46% |
| ND/MCI | HTR + HTSF (13) | 52% | 52% | 50% | 69% |
| ND/MCI | HMSF (54) | 62% | 62% | 62% | 62% |
| ND/FMD | HTR (1) | 73% | 76% | 70% | 71% |
| ND/FMD | HTR + HTSF (13) | 92% | 89% | 89% | 89% |
| ND/FMD | HMSF (54) | 69% | 68% | 66% | 66% |
| MCI/FMD | HTR (1) | 71% | 72% | 73% | 72% |
| MCI/FMD | HTR + HTSF (13) | 67% | 71% | 69% | 66% |
| MCI/FMD | HMSF (54) | 90% | 92% | 88% | 88% |
| MP/HC | HTR (1) | 72% | 71% | 71% | 71% |
| MP/HC | HTR + HTSF (13) | 64% | 63% | 62% | 62% |
| MP/HC | HMSF (54) | 73% | 73% | 72% | 72% |
| D/Non-D | HTR (1) | 69% | 66% | 64% | 64% |
| D/Non-D | HTR + HTSF (13) | 75% | 72% | 71% | 72% |
| D/Non-D | HMSF (54) | 73% | 70% | 70% | 70% |
| ND/HC | HTR (1) | 83% | 85% | 84% | 85% |
| ND/HC | HTR + HTSF (13) | 72% | 77% | 73% | 73% |
| ND/HC | HMSF (54) | 74% | 75% | 74% | 74% |
| MCI/HC | HTR (1) | 74% | 74% | 74% | 74% |
| MCI/HC | HTR + HTSF (13) | 69% | 68% | 68% | 68% |
| MCI/HC | HMSF (54) | 69% | 68% | 68% | 68% |
| FMD/HC | HTR (1) | 67% | 65% | 64% | 64% |
| FMD/HC | HTR + HTSF (13) | 45% | 25% | 44% | 32% |
| FMD/HC | HMSF (54) | 58% | 59% | 58% | 58% |

Table 9.

Classification results of the four-way, three-way, and two-way classification tasks using the interquartile range (IQR) approach for the upper boundary (UB), with fused and with selected features and the KNN classifier. The number of features is given in parentheses.

| Classification Task | Feature | Accuracy | Precision | Recall | F-Measure |
|---|---|---|---|---|---|
| ND/MCI/FMD/HC | Feature fusion (137) | 44% | 44% | 46% | 44% |
| ND/MCI/FMD/HC | Feature selection (5) | 32% | 26% | 30% | 28% |
| ND/MCI/FMD | Feature fusion (137) | 48% | 47% | 46% | 46% |
| ND/MCI/FMD | Feature selection (5) | 43% | 50% | 45% | 42% |
| ND/MCI | Feature fusion (137) | 75% | 84% | 75% | 74% |
| ND/MCI | Feature selection (6) | 63% | 63% | 61% | 60% |
| ND/FMD | Feature fusion (137) | 73% | 73% | 74% | 73% |
| ND/FMD | Feature selection (20) | 76% | 78% | 78% | 78% |
| MCI/FMD | Feature fusion (137) | 65% | 70% | 64% | 63% |
| MCI/FMD | Feature selection (7) | 52% | 49% | 49% | 49% |
| MP/HC | Feature fusion (137) | 62% | 61% | 61% | 61% |
| MP/HC | Feature selection (116) | 59% | 67% | 57% | 57% |
| D/Non-D | Feature fusion (137) | 70% | 68% | 68% | 68% |
| D/Non-D | Feature selection (37) | 62% | 59% | 59% | 59% |
| ND/HC | Feature fusion (137) | 85% | 85% | 84% | 85% |
| ND/HC | Feature selection (38) | 60% | 65% | 64% | 63% |
| MCI/HC | Feature fusion (137) | 72% | 74% | 74% | 74% |
| MCI/HC | Feature selection (38) | 60% | 65% | 64% | 63% |
| FMD/HC | Feature fusion (137) | 79% | 78% | 76% | 76% |
| FMD/HC | Feature selection (2) | 47% | 47% | 47% | 47% |
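
The 137 fused features equal 70 EBR thresholds + 13 HTR + HTSF features + 54 HMSF features, which suggests, although the tables do not state it, that fusion concatenates the three feature groups. The sketch below illustrates such early fusion under that assumption; the group keys, function names, and the optional z-score step are hypothetical and not taken from the source. In a real evaluation the standardization statistics would be computed on the training folds only.

```python
import statistics
from typing import Dict, List, Sequence

# Hypothetical group names; sizes inferred from the feature counts in Tables 8 and 9.
GROUP_SIZES = {"EBR": 70, "HTR+HTSF": 13, "HMSF": 54}


def fuse(groups: Dict[str, Sequence[float]]) -> List[float]:
    """Early fusion: concatenate the feature groups in a fixed order."""
    fused: List[float] = []
    for name, size in GROUP_SIZES.items():
        values = groups[name]
        if len(values) != size:
            raise ValueError(f"{name}: expected {size} values, got {len(values)}")
        fused.extend(values)
    return fused


def standardize(rows: List[List[float]]) -> List[List[float]]:
    """Z-score each feature column across participants (zero-spread columns become 0)."""
    columns = list(zip(*rows))
    stats = [(statistics.mean(c), statistics.pstdev(c)) for c in columns]
    return [[(v - m) / s if s else 0.0 for v, (m, s) in zip(row, stats)] for row in rows]


participant = {name: [0.0] * size for name, size in GROUP_SIZES.items()}
print(len(fuse(participant)))  # 137
```

Standardizing before KNN keeps the 70 EBR columns, which share one scale, from outweighing the head-movement columns purely because of their units.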

Table 10.

Classification results (%) for dementia detection compared to previous work.

| Study | Participants | Data Settings | Modality | Classifier | Performance |
|---|---|---|---|---|---|
| [10] | 18 (9 with dementia) | Lab-controlled | Audiovisual | SVM | 84% |
| [7] | 29 (14 with dementia, including NPH, AD, DLB, and MCI) | Lab-controlled | Audiovisual | LR | 93% |
| [8] | 24 (12 with dementia) | Lab-controlled | Visual | LR | 82% |
| [12] | 6 ND, 6 MCI, and 6 FMD | In-the-wild | Visual | SVM | 89% |
| [25] | 6 ND, 6 MCI, and 6 FMD | In-the-wild | Visual | SVM | 78% |
| [11] | 32 videos (MCI and HCs) | Semi-in-the-wild | Visual | DL | 87% |
| Ours | 29 MP, 23 HCs | In-the-wild | Visual | KNN | 59% |
| Ours (subset) | 11 ND, 10 MCI, and 8 FMD | In-the-wild | Visual | KNN | 81% |
| Ours (subset) | 10 MCI, 9 HCs | In-the-wild | Visual | KNN | 89% |