Deep Learning Techniques for Fault Diagnosis in Interconnected Systems: A Comprehensive Review and Future Directions

Abstract

1. Introduction

2. Multimodal Learning Techniques for Fault Diagnosis

2.1. Deep Learning Approaches

2.2. Transfer Learning

2.3. Hybrid Models and Ensemble Learning

3. Data Fusion and Multimodal Integration

- Early fusion: This method integrates data from multiple modalities at the input level and feeds them into a single unified model. Inputs may consist of raw signals, handcrafted features, or deep features. Common techniques for early fusion include concatenation, element-wise summation, element-wise multiplication (Hadamard product), and bilinear pooling (Kronecker product). One major advantage of early fusion is the simplicity of working with a single model; however, it assumes that the model can effectively handle all modalities. The inputs must also be synchronized, which is difficult when data are captured at different time points. In healthcare, early fusion is applied in tasks such as combining ultrasound imagery for breast cancer diagnosis or merging imaging data with electronic medical records (EMRs) for applications like skin lesion classification and cervical dysplasia prediction. It is also used to integrate genomics with histology or radiology data for cancer classification, survival prediction, and treatment response analysis. As shown in Figure 2, early fusion integrates multiple inputs at the raw level, allowing a unified model to process them jointly; data synchronization is its main practical obstacle.

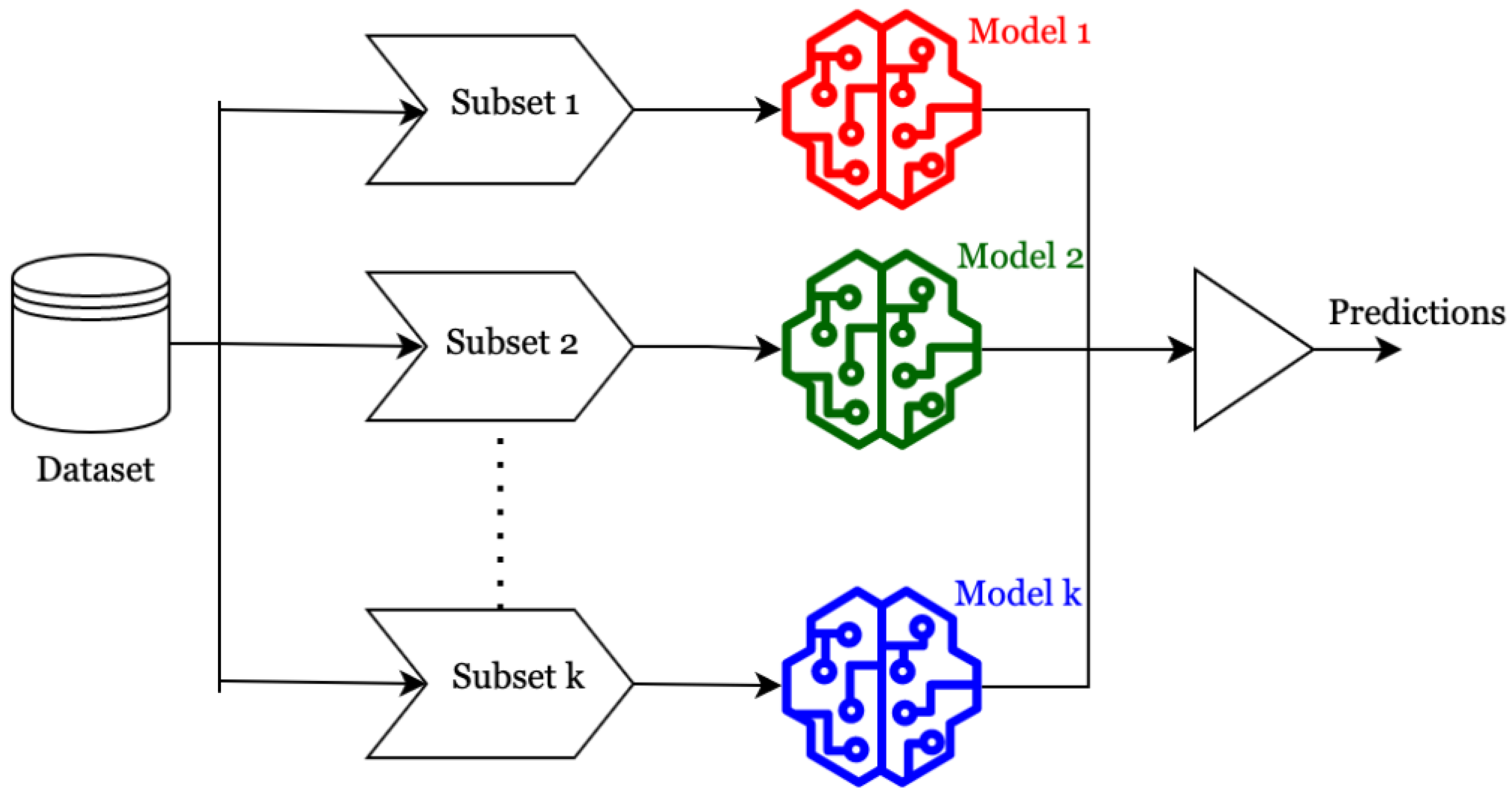

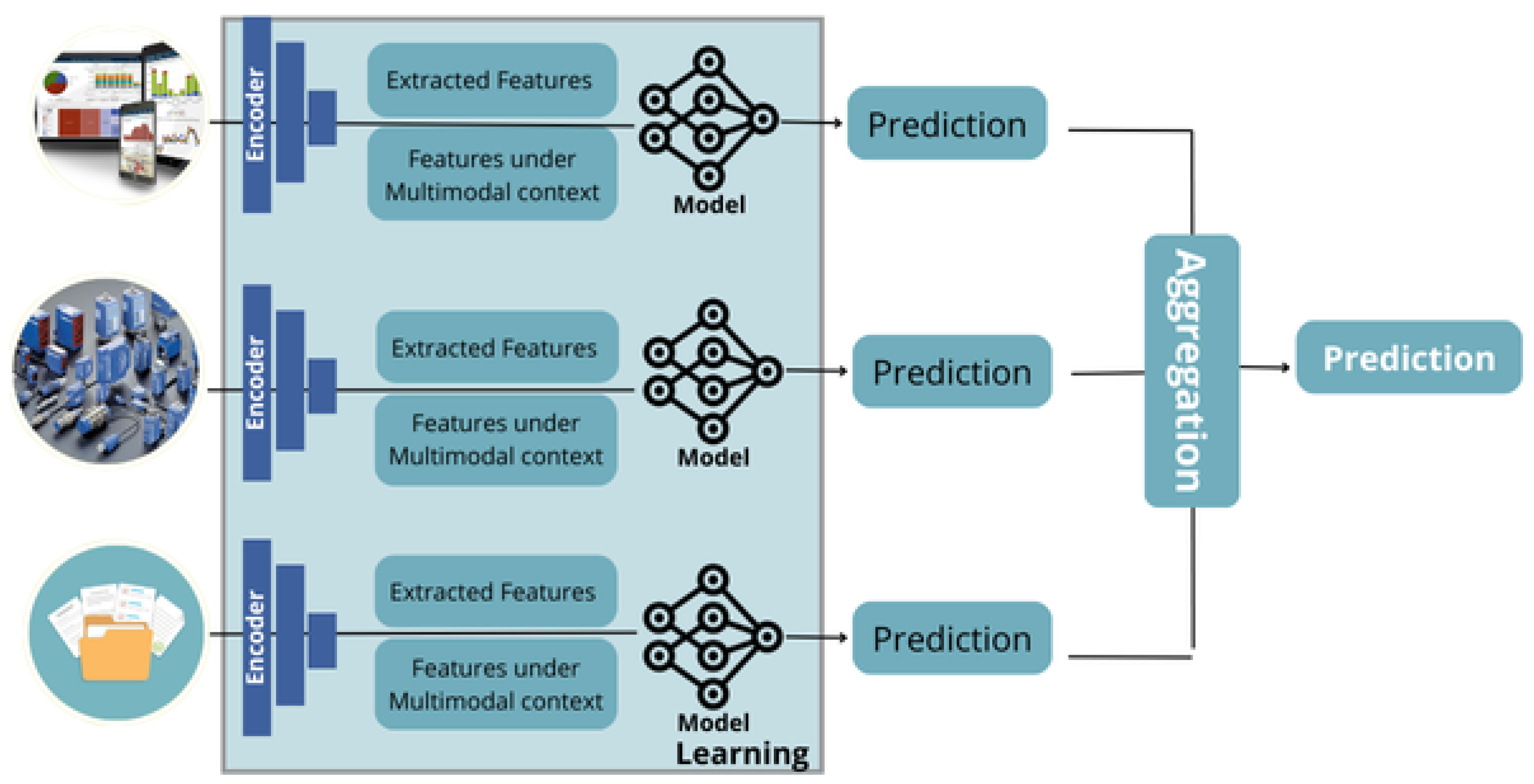

- Late fusion: Also referred to as decision-level fusion, this approach trains a separate model for each modality and then combines their predictions. Methods such as averaging, majority voting, or Bayesian inference are commonly used. Synchronization is not required, and different architectures can be applied independently to each modality. This is especially beneficial in scenarios involving heterogeneous or incomplete data. Furthermore, new modalities can be added without retraining the entire model. Late fusion is well suited to cases where modalities are loosely related. In healthcare, it is used in applications like combining MRI data with PSA blood tests for prostate cancer detection or integrating genomics with histology data for survival prediction. Figure 3 illustrates the late fusion technique, where separate models process each modality independently and then combine their results into a final decision. This approach is particularly effective when the modalities differ greatly from one another.

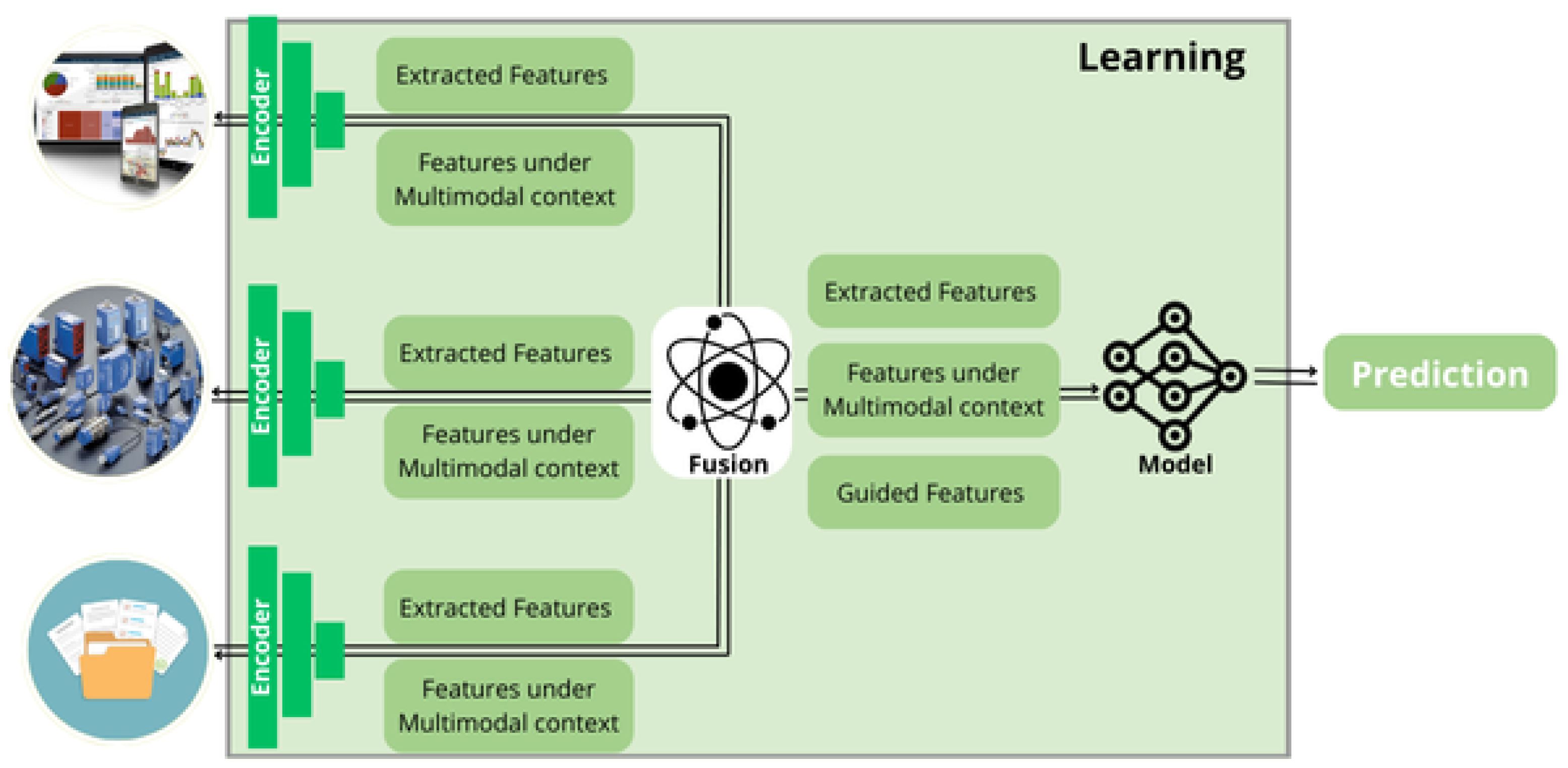

- Intermediate fusion: Positioned between early and late fusion, intermediate fusion combines features at various abstraction levels, enhancing the model’s ability to learn cross-modal relationships. Unlike early or late fusion, intermediate fusion allows the loss function to influence feature extraction, enabling each modality to improve its own representations in a multimodal setting. Fusion may occur simultaneously or progressively, starting with strongly correlated modalities before incorporating less-related data. Guided fusion can refine this process by letting one modality influence feature extraction in another (e.g., using genomics data to guide histology feature selection). Applications include lung cancer detection through PET and CT fusion, prostate cancer classification with MRI and ultrasound, and multi-omics cancer subtyping for survival analysis. Figure 4 illustrates how intermediate fusion strikes a balance between early and late fusion by combining features during the learning process. This helps capture deeper relationships between different modalities.
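The early-fusion operators and the voting rule named in the list above can be illustrated with a short NumPy sketch. It is only a toy example under stated assumptions: the feature vectors, the label matrix, and the helper name `majority_vote` are hypothetical and do not come from the reviewed works.

```python
import numpy as np

# Hypothetical deep features for one sample from two modalities (assumed values).
a = np.array([1.0, 2.0, 3.0])
b = np.array([0.5, -1.0, 2.0])

# Early fusion: combine the inputs before a single model sees them.
concatenated = np.concatenate([a, b])   # concatenation, length 6
summed = a + b                          # element-wise summation
hadamard = a * b                        # element-wise (Hadamard) product
bilinear = np.kron(a, b)                # Kronecker product, length 9


def majority_vote(labels):
    """Late fusion: most frequent label per sample across modality-specific models.

    `labels` has shape (n_models, n_samples); ties go to the lowest label.
    """
    labels = np.asarray(labels)
    n_classes = labels.max() + 1
    # Count votes per class for every sample (column).
    counts = np.apply_along_axis(np.bincount, 0, labels, minlength=n_classes)
    return counts.argmax(axis=0)


# Hypothetical predictions of three per-modality classifiers on three samples.
votes = majority_vote([[0, 1, 1],
                       [0, 0, 1],
                       [1, 1, 1]])
```

Concatenation keeps every feature but grows the input dimension linearly, whereas the Kronecker product grows it multiplicatively, which is one reason bilinear pooling is usually applied to compact features.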

3.1. Data Fusion Techniques

3.1.1. Understanding Data Fusion in Multimodal Learning

3.1.2. Methods for Integrating Diverse Data Sources

- Low-level fusion (sensor level): This approach integrates raw sensor outputs before any feature extraction. It is the most direct form of fusion and often uses simple mathematical operations. A typical formulation is a weighted sum, $x_{\text{fused}} = \sum_{i=1}^{n} w_i x_i$, where $x_i$ is the output of the $i$-th sensor and $w_i$ are weights assigned based on sensor reliability or data quality [35].

- Mid-level fusion (feature level): In this method, features are first extracted from each modality, then combined into a single set before classification. This is effective when different modalities provide complementary insights. For instance, combining thermal and vibration features enhances diagnostic coverage. Principal component analysis (PCA) is a common technique here, $F_{\text{fused}} = \mathrm{PCA}\left([F_1, F_2, \ldots, F_n]\right)$, where $F_i$ represents features from the $i$-th modality [36].

- High-level fusion (decision level): This method combines outputs from separate models trained on individual modalities. Techniques such as weighted voting or ensemble averaging are used to generate the final decision, $D = \sum_{i=1}^{n} w_i D_i$, where $D_i$ is the output from the $i$-th classifier, and $w_i$ is its corresponding weight [37].
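The three fusion levels above can be sketched in NumPy as follows. This is a minimal illustration rather than the formulation of [35], [36], or [37]: the function names, toy signals, and weights are hypothetical, and PCA is computed here through an SVD of the centered, concatenated feature matrix.

```python
import numpy as np


def low_level_fusion(signals, weights):
    """Sensor level: weighted sum of raw, time-aligned signals of equal length."""
    weights = np.asarray(weights, dtype=float)
    return np.tensordot(weights, np.asarray(signals, dtype=float), axes=1)


def mid_level_fusion(feature_sets, n_components):
    """Feature level: concatenate per-modality features, then project with PCA."""
    stacked = np.hstack(feature_sets)                 # (n_samples, total_features)
    centered = stacked - stacked.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T             # (n_samples, n_components)


def high_level_fusion(scores, weights):
    """Decision level: weighted sum of per-classifier class scores, then argmax."""
    weights = np.asarray(weights, dtype=float)
    combined = np.tensordot(weights, np.asarray(scores, dtype=float), axes=1)
    return combined.argmax(axis=-1)


# Assumed toy inputs, for illustration only.
raw_fused = low_level_fusion([[1.0, 2.0, 3.0], [3.0, 2.0, 1.0]], [0.75, 0.25])

rng = np.random.default_rng(0)
thermal_features = rng.normal(size=(6, 3))
vibration_features = rng.normal(size=(6, 2))
feature_fused = mid_level_fusion([thermal_features, vibration_features], n_components=2)

classifier_scores = [[[0.6, 0.4], [0.3, 0.7]],   # classifier 1: two samples, two classes
                     [[0.2, 0.8], [0.4, 0.6]]]   # classifier 2
decisions = high_level_fusion(classifier_scores, [0.8, 0.2])
```

In practice the weights would be derived from sensor reliability or validation accuracy; here they are fixed by hand.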

3.1.3. Challenges in Real-Time Data Fusion

- Latency: Low latency is crucial for detecting faults in real-time systems. However, the fusion process, particularly when handling large datasets from various modalities, can create delays. This latency can be reduced by optimizing the fusion algorithms and using high-performance computing techniques [38]. The end-to-end delay can be written as $T_{\text{total}} = T_{\text{acq}} + T_{\text{fusion}} + T_{\text{diag}}$, where the terms denote the durations of sensor data collection, the fusion process, and fault diagnosis, respectively.

- Data synchronization: Data from various sensors may not always be synchronized, complicating the fusion process. Time-stamping, interpolation, and synchronization algorithms are essential to align the data streams before fusion can occur.

- Noisy data: Sensor data are often noisy in real-world environments, which can impact the accuracy of the fusion process. Filtering or denoising techniques are essential to ensuring that the data remain clean and reliable [39].

- Scalability: As the number of sensors or modalities increases, the complexity of the fusion system also grows. Efficient fusion algorithms are necessary to manage large datasets and ensure real-time processing capabilities.
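Two of the challenges above, synchronization and latency, lend themselves to a small worked example. The sketch below assumes two hypothetical streams sampled at different rates and aligns them by linear interpolation on timestamps, then checks an end-to-end latency budget; the sampling times, stage durations, and the 25 ms budget are illustrative assumptions only.

```python
import numpy as np


def align_to_reference(ref_times, times, values):
    """Linearly interpolate an asynchronous stream onto the reference timestamps."""
    return np.interp(ref_times, times, values)


def total_latency(acquisition_s, fusion_s, diagnosis_s):
    """End-to-end delay as the sum of the three pipeline stages (in seconds)."""
    return acquisition_s + fusion_s + diagnosis_s


# Hypothetical streams: vibration sampled every 0.1 s, temperature every 0.2 s.
vibration_times = np.array([0.0, 0.1, 0.2, 0.3])
temperature_times = np.array([0.0, 0.2, 0.4])
temperature_values = np.array([20.0, 22.0, 24.0])

# Temperature resampled onto the vibration time base so both can be fused.
temperature_aligned = align_to_reference(
    vibration_times, temperature_times, temperature_values
)

# Assumed stage durations and budget for a real-time check.
latency = total_latency(acquisition_s=0.010, fusion_s=0.004, diagnosis_s=0.006)
within_budget = latency <= 0.025
```

Linear interpolation is only one option; for slowly varying quantities a zero-order hold (last known value) may be more appropriate, and for noisy streams filtering should precede alignment.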

3.2. Multimodal Data Representation

3.2.1. Approaches for Representing Data from Different Modalities

- Feature concatenation: This approach combines the features from different modalities into a single vector, which the model then processes. It is particularly effective when the features from various modalities are similar in size and scale.

- Canonical correlation analysis (CCA): CCA is a statistical technique that investigates the relationship between two sets of variables. It identifies highly correlated linear combinations of features from each modality [41]. This method is well suited to cases where the modalities provide complementary information, helping to align them effectively. The objective is $\max_{w_x, w_y} \operatorname{corr}\left(X w_x, Y w_y\right)$, where $X$ and $Y$ are data matrices from the two modalities, and $w_x$ and $w_y$ are the corresponding weight vectors.

- Multimodal autoencoders: These specialized neural networks are designed to compress data from different modalities into a lower-dimensional space [42]. Each modality is processed via a separate encoder, and the encoded outputs are combined before decoding. This allows the model to learn a shared latent representation of the multimodal data, $z = f(h_1, h_2, \ldots, h_n)$, where $h_i$ represents the encoded features from modality $i$, and $z$ is the resulting fused representation.
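To make the CCA item above concrete, the following sketch computes the first pair of canonical weight vectors with plain NumPy by whitening each modality and taking an SVD of the whitened cross-covariance. The helper names, the small ridge term `reg`, and the synthetic data are assumptions for illustration; a library implementation would normally be preferred in practice.

```python
import numpy as np


def inv_sqrt(mat):
    """Inverse square root of a symmetric positive-definite matrix."""
    vals, vecs = np.linalg.eigh(mat)
    return vecs @ np.diag(1.0 / np.sqrt(vals)) @ vecs.T


def first_canonical_pair(X, Y, reg=1e-8):
    """Return weight vectors (w_x, w_y) and the first canonical correlation."""
    Xc = X - X.mean(axis=0)
    Yc = Y - Y.mean(axis=0)
    n = X.shape[0]
    # Covariances, with a small ridge (assumed) for numerical stability.
    cxx = Xc.T @ Xc / (n - 1) + reg * np.eye(X.shape[1])
    cyy = Yc.T @ Yc / (n - 1) + reg * np.eye(Y.shape[1])
    cxy = Xc.T @ Yc / (n - 1)
    kx, ky = inv_sqrt(cxx), inv_sqrt(cyy)
    # Singular values of the whitened cross-covariance are the canonical correlations.
    u, s, vt = np.linalg.svd(kx @ cxy @ ky)
    return kx @ u[:, 0], ky @ vt[0], s[0]


# Synthetic modalities sharing one latent factor z (illustrative data only).
rng = np.random.default_rng(0)
z = rng.normal(size=500)
X = np.column_stack([z + 0.1 * rng.normal(size=500), rng.normal(size=500)])
Y = np.column_stack([rng.normal(size=500), z + 0.1 * rng.normal(size=500)])

w_x, w_y, rho = first_canonical_pair(X, Y)
projected_corr = np.corrcoef(X @ w_x, Y @ w_y)[0, 1]
```

The projections `X @ w_x` and `Y @ w_y` are the aligned one-dimensional views of the two modalities; further canonical pairs correspond to the remaining singular vectors.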

3.2.2. Strategies for Handling Complex, Noisy, and High-Dimensional Data

- Dimensionality reduction: Techniques such as principal component analysis (PCA), t-distributed stochastic neighbor embedding (t-SNE), and autoencoders are commonly used to reduce the dimensionality of data. By decreasing the number of features, these methods simplify the fusion process and mitigate challenges like the curse of dimensionality, which can hinder model performance [40]. The reduction can be written as a mapping $Z = f(X)$, where $X$ represents the original high-dimensional data, and $Z$ is the smaller, reduced feature set.

- Data augmentation: This includes techniques such as adding synthetic noise and generating new data points to enhance the robustness of the multimodal system. These methods enable the system to manage noise and variability in real-world data more effectively.

- Noise filtering: To enhance the quality of sensor data before fusion, noise filtering methods such as Kalman filters, wavelet transforms, or deep learning-based denoising autoencoders can be used.
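The augmentation and filtering strategies above can be tried on a toy signal. The sketch below injects synthetic Gaussian noise into a constant signal and then smooths it with a scalar Kalman filter that assumes a random-walk state model; the variances, the signal, and the function names are hypothetical choices, not parameters taken from the cited studies.

```python
import numpy as np


def augment_with_noise(signal, noise_std, rng):
    """Data augmentation: return a copy of the signal with additive Gaussian noise."""
    return signal + rng.normal(scale=noise_std, size=signal.shape)


def kalman_denoise(measurements, process_var=1e-4, measurement_var=0.25):
    """Scalar Kalman filter with a random-walk state model (assumed)."""
    estimate, error_var = measurements[0], 1.0
    filtered = []
    for z in measurements:
        error_var += process_var                       # predict step
        gain = error_var / (error_var + measurement_var)
        estimate += gain * (z - estimate)              # update step
        error_var *= 1.0 - gain
        filtered.append(estimate)
    return np.array(filtered)


# Illustrative data: a constant sensor reading of 5.0 corrupted by noise.
rng = np.random.default_rng(42)
truth = np.full(200, 5.0)
noisy = augment_with_noise(truth, noise_std=0.5, rng=rng)
filtered = kalman_denoise(noisy)

# Compare errors after the filter has settled (second half of the record).
mse_noisy = np.mean((noisy[100:] - truth[100:]) ** 2)
mse_filtered = np.mean((filtered[100:] - truth[100:]) ** 2)
```

A small `process_var` makes the filter trust its own estimate and smooth aggressively; a fault that shifts the true level quickly would call for a larger value so the estimate can follow the change.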

4. Applications of Multimodal Learning for Fault Detection and Diagnosis

4.1. Applications in Manufacturing Systems

4.2. Applications in Renewable Energy Systems

4.2.1. Wind Turbine Fault Diagnosis

4.2.2. Photovoltaic (PV) System Fault Detection

4.2.3. Integrating Multiple Data Sources for Better Fault Detection

4.3. Applications in Automotive Systems

4.3.1. Autonomous Vehicle Fault Detection

4.3.2. Electric Vehicle (EV) Battery Health Monitoring

4.3.3. Vehicle Engine Fault Detection

4.3.4. Integrating Multiple Data Sources for Automotive Fault Detection

| Application | Paper | Year | Technique Used |
|---|---|---|---|
| Autonomous Vehicle Fault Detection | Javed et al. | 2020 | MSALSTM-CNN: Hybrid Learning (CNN + LSTM) [79] |
| Electric Vehicle Battery Health Monitoring | Deng et al. | 2022 | Support Vector Machine (SVM) [80] |
| Engine Fault Diagnosis | Auran et al. | 2024 | Decision Trees (DT) [77] |
| Battery Monitoring for EVs | Sulaiman et al. | 2024 | Random Forest (RF) [81] |
| Autonomous Vehicle Sensor Fusion | Wang et al. | 2022 | Multimodal Learning (Fusion of Radar + Lidar) [82] |
| Predictive Maintenance for EV Batteries | Naresh et al. | 2024 | Deep Neural Networks (DNNs) [83] |
| Autonomous Vehicle Fault Detection | Safavi et al. | 2021 | Recurrent Neural Networks (RNNs) [84] |
| Engine Performance Monitoring | Okumuş et al. | 2023 | Gradient Boosting Machines (GBMs) [85] |
| Battery Fault Detection in EVs | Trivedi et al. | 2022 | Convolutional Neural Networks (CNNs) [86] |
| Vehicle System Health Monitoring | Rahman et al. | 2022 | Multilayer Perceptron (MLP) [87] |
| Autonomous Vehicle Navigation Faults | Jeong et al. | 2023 | Long Short-Term Memory (LSTM) [88] |
| EV Battery Fault Diagnosis | Shah et al. | 2024 | K-Nearest Neighbor (KNN) [89] |
| Vehicle Diagnostics in Autonomous Driving | Chen et al. | 2020 | Transfer Learning [90] |
| Electric Vehicle Fault Detection | Shen et al. | 2024 | Naive Bayes Classifier (NBC) [91] |
| Autonomous Vehicle Fault Classification | Kuutti et al. | 2020 | Deep Learning (DL) [92] |
| Engine Fault Classification | Zhao et al. | 2022 | Extreme Learning Machine (ELM) [93] |
| Battery Performance Degradation Detection | Valladares et al. | 2022 | Gaussian Process (GP) [94] |
| Vehicle Engine Health Monitoring | Fotias et al. | 2021 | Multiscale Learning (MSL) [95] |
| Vehicle Fault Detection using Sensor Data | Cui et al. | 2022 | Multi-Task Learning (MTL) [96] |
| Autonomous Vehicle Sensor Reliability | Anyanwu et al. | 2023 | Random Forest (RF) [97] |

4.4. Applications in Aerospace Systems

4.5. Trends in Multimodal Learning for Fault Diagnosis

4.5.1. Integration of Advanced Deep Learning Models

4.5.2. End-to-End Learning Systems

4.5.3. Multiscale and Multi-Resolution Approaches

4.6. Challenges in Scaling Up to Large, Real-Time Systems

4.6.1. Data Volume and Storage

4.6.2. Real-Time Data Processing

4.6.3. Sensor Fusion and Synchronization

4.7. Promising Research Directions

4.7.1. Uncertainty Handling in Fault Diagnosis

4.7.2. Edge Computing for Fault Diagnosis

4.7.3. Real-Time Data Fusion

4.8. Enhancing Data Preprocessing in Multimodal Systems

- Improving data preprocessing with multiscale representation: Raw data are often noisy and autocorrelated. Multiscale representation using high-pass and low-pass filters can isolate noise and highlight relevant features, improving fault detection accuracy.

- Addressing uncertainty with interval-valued representation and dimensionality reduction: Interval-valued techniques account for environmental uncertainty. Combined with dimensionality reduction (e.g., Euclidean distance-based filtering), they enhance robustness and reduce computational load.

- Simplifying multimodel design: Multimodel systems benefit from domain knowledge and data-driven optimization. Methods like PSO and GA can optimize parameters such as hidden layers and activation functions to reduce complexity and improve adaptability.

- Improving decision-making in multimodel systems: Combining techniques like PCA, KPCA, and Fourier analysis in a unified framework enhances interpretability and decision-making in noisy or rapidly changing environments.

- Building enhanced multimodel systems with multiple learners: Integrated learning systems that combine multiple algorithms can handle temporal dependencies more effectively. Dynamic kernel PCA and ensemble learners improve classification and prediction speed while ensuring robustness.
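The multiscale representation mentioned in the first item of this list can be illustrated with a one-level Haar decomposition, which is the simplest low-pass/high-pass filter pair. The sketch is an assumption-laden example: the Haar wavelet, the toy signal, and the choice to zero out all detail coefficients are illustrative and are not prescribed by the text.

```python
import numpy as np


def haar_decompose(signal):
    """One-level Haar split into low-pass (approximation) and high-pass (detail) parts."""
    signal = np.asarray(signal, dtype=float)
    if signal.size % 2:
        signal = signal[:-1]                  # drop the last sample to get an even length
    even, odd = signal[0::2], signal[1::2]
    approx = (even + odd) / np.sqrt(2.0)      # low-pass branch: slow trend
    detail = (even - odd) / np.sqrt(2.0)      # high-pass branch: fast fluctuations
    return approx, detail


def haar_reconstruct(approx, detail):
    """Invert haar_decompose; exact when both coefficient sets are unchanged."""
    even = (approx + detail) / np.sqrt(2.0)
    odd = (approx - detail) / np.sqrt(2.0)
    out = np.empty(even.size * 2)
    out[0::2], out[1::2] = even, odd
    return out


# Hypothetical sensor record.
signal = np.array([4.0, 6.0, 10.0, 12.0, 8.0, 8.0, 2.0, 0.0])
approx, detail = haar_decompose(signal)

# Perfect reconstruction, and a crude denoised version with the details removed.
restored = haar_reconstruct(approx, detail)
smoothed = haar_reconstruct(approx, np.zeros_like(detail))
```

Applying `haar_decompose` again to `approx` yields the next coarser scale, so features can be extracted per scale before fusion; thresholding the detail coefficients instead of zeroing them preserves sharp fault signatures better.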

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Ji, C.; Sun, W. A review on data-driven process monitoring methods: Characterization and mining of industrial data. Processes 2022, 10, 335. [Google Scholar] [CrossRef]

- Saberironaghi, A.; Ren, J.; El-Gindy, M. Defect detection methods for industrial products using deep learning techniques: A review. Algorithms 2023, 16, 95. [Google Scholar] [CrossRef]

- Jamil, F.; Verstraeten, T.; Nowé, A.; Peeters, C.; Helsen, J. A deep boosted transfer learning method for wind turbine gearbox fault detection. Renew. Energy 2022, 197, 331–341. [Google Scholar] [CrossRef]

- Shakiba, F.M.; Azizi, S.M.; Zhou, M.; Abusorrah, A. Application of machine learning methods in fault detection and classification of power transmission lines: A survey. Artif. Intell. Rev. 2023, 56, 5799–5836. [Google Scholar] [CrossRef]

- Yu, W.; Liu, Y.; Dillon, T.; Rahayu, W. Edge computing-assisted IoT framework with an autoencoder for fault detection in manufacturing predictive maintenance. IEEE Trans. Ind. Inform. 2022, 19, 5701–5710. [Google Scholar] [CrossRef]

- Deng, W.; Nguyen, K.T.; Gogu, C.; Medjaher, K.; Morio, J. Enhancing prognostics for sparse labeled data using advanced contrastive self-supervised learning with downstream integration. Eng. Appl. Artif. Intell. 2024, 138, 109268. [Google Scholar] [CrossRef]

- Liu, X.; Ma, H.; Liu, Y. A novel transfer learning method based on conditional variational generative adversarial networks for fault diagnosis of wind turbine gearboxes under variable working conditions. Sustainability 2022, 14, 5441. [Google Scholar] [CrossRef]

- Braei, M.; Wagner, S. Anomaly detection in univariate time-series: A survey on the state-of-the-art. arXiv 2020, arXiv:2004.00433. [Google Scholar]

- Pergeline, H. État des Lieux des Pratiques et Connaissances des Intervenants de Première Ligne Concernant les Difficultés Alimentaires des Enfants de Moins de 3 ans. DUMAS—Dépôt Universitaire de Mémoires Après Soutenance. Master’s Thesis, Aix Marseille Université, Marseille, France, 2022. [Google Scholar]

- Chaillet-Antoine, A. Contributionsa L’analyse de la Stabilité et de la Robustesse des Systemes Non-Linéaires Interconnectés et Applications. Ph.D. Thesis, Université Paris Sud-Paris XI, Sceaux, Paris, 2012. [Google Scholar]

- Momen, S. Smart Maintenance Framework Development Using Blockchain in an Industry 4.0 Context. Ph.D. Thesis, Polytechnique = Montréal, Montréal, QC, Canada, 2024. [Google Scholar]

- Nawaz, A.; Umar, M.A.; Shuaib, K.; Ahmad, A.; Belkacem, A.N. Autoencoder-based Arrhythmia Detection using Synthetic ECG Generation Technique. In Proceedings of the 2024 46th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 15–19 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–7. [Google Scholar]

- Khanam, R.; Hussain, M.; Hill, R.; Allen, P. A comprehensive review of convolutional neural networks for defect detection in industrial applications. IEEE Access 2024, 12, 94250–94295. [Google Scholar] [CrossRef]

- Liu, B.; Chen, D.; Qi, X. YOLO-pdd: A Novel Multi-scale PCB Defect Detection Method Using Deep Representations with Sequential Images. arXiv 2024, arXiv:2407.15427. [Google Scholar]

- Mera, M.; Michida, N.; Honda, M.; Sakamoto, K.; Tamada, Y.; Mikami, T.; Nakaji, S. A Study of the Relationship between Driving and Health based on Large-scale Data Analysis using PLSA and t-SNE. IEEE Access 2024, 12, 99614–99659. [Google Scholar] [CrossRef]

- Pal, K.; Bau, D.; Miller, R.J. Model Lakes. arXiv 2024, arXiv:2403.02327. [Google Scholar]

- Yang, T.; Yu, X.; Ma, N.; Zhang, Y.; Li, H. Deep representation-based transfer learning for deep neural networks. Knowl.-Based Syst. 2022, 253, 109526. [Google Scholar] [CrossRef]

- Zhou, Q.; Pan, S.J. Convergence Analysis of Inexact Over-relaxed ADMM via Dissipativity Theory. In Proceedings of the 16th Asian Conference on Machine Learning (Conference Track), Hanoi, Vietnam, 5–8 December 2024. [Google Scholar]

- Jiang, J.; Shu, Y.; Wang, J.; Long, M. Transferability in deep learning: A survey. arXiv 2022, arXiv:2201.05867. [Google Scholar]

- Shimada, S.; Golyanik, V.; Pérez, P.; Theobalt, C. Decaf: Monocular deformation capture for face and hand interactions. ACM Trans. Graph. 2023, 42, 1–16. [Google Scholar] [CrossRef]

- Chen, H.; Luo, H.; Huang, B.; Jiang, B.; Kaynak, O. Transfer learning-motivated intelligent fault diagnosis designs: A survey, insights, and perspectives. IEEE Trans. Neural Netw. Learn. Syst. 2023, 35, 2969–2983. [Google Scholar] [CrossRef]

- Berghout, T.; Benbouzid, M. Diagnosis and Prognosis of Faults in High-Speed Aeronautical Bearings with a Collaborative Selection Incremental Deep Transfer Learning Approach. Appl. Sci. 2023, 13, 10916. [Google Scholar] [CrossRef]

- Kumar, S.; Kaur, P.; Gosain, A. A comprehensive survey on ensemble methods. In Proceedings of the 2022 IEEE 7th International conference for Convergence in Technology (I2CT), Mumbai, India, 7–9 April 2022; pp. 1–7. [Google Scholar]

- Chen, M.S.; Lin, J.Q.; Li, X.L.; Liu, B.Y.; Wang, C.D.; Huang, D.; Lai, J.H. Representation learning in multi-view clustering: A literature review. Data Sci. Eng. 2022, 7, 225–241. [Google Scholar] [CrossRef]

- Patil, P.; Du, J.H.; Kuchibhotla, A.K. Bagging in overparameterized learning: Risk characterization and risk monotonization. J. Mach. Learn. Res. 2023, 24, 1–113. [Google Scholar]

- Kunapuli, G. Ensemble Methods for Machine Learning; Simon and Schuster: New York, NY, USA, 2023. [Google Scholar]

- Qian, G.; Liu, J. A comparative study of deep learning-based fault diagnosis methods for rotating machines in nuclear power plants. Ann. Nucl. Energy 2022, 178, 109334. [Google Scholar] [CrossRef]

- Attouri, K.; Dhibi, K.; Mansouri, M.; Hajji, M.; Bouzrara, K.; Nounou, H. Enhanced fault diagnosis of wind energy conversion systems using ensemble learning based on sine cosine algorithm. J. Eng. Appl. Sci. 2023, 70, 56. [Google Scholar] [CrossRef]

- Dahesh, A.; Tavakkoli-Moghaddam, R.; Wassan, N.; Tajally, A.; Daneshi, Z.; Erfani-Jazi, A. A hybrid machine learning model based on ensemble methods for devices fault prediction in the wood industry. Expert Syst. Appl. 2024, 249, 123820. [Google Scholar] [CrossRef]

- Hu, J.; Szymczak, S. A review on longitudinal data analysis with random forest. Briefings Bioinform. 2023, 24, bbad002. [Google Scholar] [CrossRef] [PubMed]

- Kullu, O.; Cinar, E. A deep-learning-based multi-modal sensor fusion approach for detection of equipment faults. Machines 2022, 10, 1105. [Google Scholar] [CrossRef]

- Kibrete, F.; Woldemichael, D.E.; Gebremedhen, H.S. Multi-Sensor data fusion in intelligent fault diagnosis of rotating machines: A comprehensive review. Measurement 2024, 232, 114658. [Google Scholar] [CrossRef]

- Lei, Y.; Li, N.; Li, X. Big Data-Driven Intelligent Fault Diagnosis and Prognosis for Mechanical Systems; Springer: Singapore, 2023. [Google Scholar]

- Afrasiabi, S.; Allahmoradi, S.; Afrasiabi, M.; Liang, X.; Chung, C.; Aghaei, J. A Robust Multi-modal Deep Learning-Based Fault Diagnosis Method for PV Systems. IEEE Open Access J. Power Energy 2024, 11, 583–594. [Google Scholar] [CrossRef]

- Basani, D.K.R.; Gudivaka, B.R.; Gudivaka, R.L.; Gudivaka, R.K. Enhanced Fault Diagnosis in IoT: Uniting Data Fusion with Deep Multi-Scale Fusion Neural Network. Internet Things 2024, 101361. [Google Scholar] [CrossRef]

- Gao, T.; Yang, J.; Zhang, B.; Li, Y.; Zhang, H. A fault diagnosis method based on feature-level fusion of multi-sensor information for rotating machinery. Meas. Sci. Technol. 2023, 35, 036109. [Google Scholar] [CrossRef]

- Chaleshtori, A.E. Data fusion techniques for fault diagnosis of industrial machines: A survey. arXiv 2022, arXiv:2211.09551. [Google Scholar]

- Zhang, H.; Yang, W.; Yi, W.; Lim, J.B.; An, Z.; Li, C. Imbalanced data based fault diagnosis of the chiller via integrating a new resampling technique with an improved ensemble extreme learning machine. J. Build. Eng. 2023, 70, 106338. [Google Scholar] [CrossRef]

- Tang, S.; Ma, J.; Yan, Z.; Zhu, Y.; Khoo, B.C. Deep transfer learning strategy in intelligent fault diagnosis of rotating machinery. Eng. Appl. Artif. Intell. 2024, 134, 108678. [Google Scholar] [CrossRef]

- Feng, X.; Liu, J. Method of Multi-Mode Sensor Data Fusion with an Adaptive Deep Coupling Convolutional Auto-Encoder. J. Sens. Technol. 2023, 13, 69–85. [Google Scholar] [CrossRef]

- Chen, Z.; Deng, Q.; Zhao, Z.; Tang, P.; Luo, W.; Liu, Q. Application of just-in-time-learning CCA to the health monitoring of a real cold source system. IFAC-PapersOnLine 2022, 55, 23–30. [Google Scholar] [CrossRef]

- Faysal, A.; Boushine, T.; Rostami, M.; Roshan, R.G.; Wang, H.; Muralidhar, N.; Sahoo, A.; Yao, Y.D. DenoMAE: A Multimodal Autoencoder for Denoising Modulation Signals. arXiv 2025, arXiv:2501.11538. [Google Scholar]

- Huang, T.; Zhang, Q.; Tang, X.; Zhao, S.; Lu, X. A novel fault diagnosis method based on CNN and LSTM and its application in fault diagnosis for complex systems. Artif. Intell. Rev. 2022, 55, 1289–1315. [Google Scholar] [CrossRef]

- Ko, J.U.; Lee, J.; Kim, T.; Kim, Y.C.; Youn, B.D. Deep-learning-based fault detection and recipe optimization for a plastic injection molding process under the class-imbalance problem. J. Comput. Des. Eng. 2023, 10, 694–710. [Google Scholar] [CrossRef]

- Alsaif, K.M.; Albeshri, A.A.; Khemakhem, M.A.; Eassa, F.E. Multimodal Large Language Model-Based Fault Detection and Diagnosis in Context of Industry 4.0. Electronics 2024, 13, 4912. [Google Scholar] [CrossRef]

- Choudhary, A.; Mishra, R.K.; Fatima, S.; Panigrahi, B.K. Multi-input CNN based vibro-acoustic fusion for accurate fault diagnosis of induction motor. Eng. Appl. Artif. Intell. 2023, 120, 105872. [Google Scholar] [CrossRef]

- Wang, J.; Wang, X.; Wang, Y.; Sun, Y.; Sun, G. Intelligent joint actuator fault diagnosis for heavy-duty industrial robots. IEEE Sens. J. 2024, 24, 15292–15301. [Google Scholar] [CrossRef]

- Zhang, X.; Wang, H.; Wu, B.; Zhou, Q.; Hu, Y. A novel data-driven method based on sample reliability assessment and improved CNN for machinery fault diagnosis with non-ideal data. J. Intell. Manuf. 2023, 34, 2449–2462. [Google Scholar] [CrossRef]

- Hussain, A.; Yadav, A.; Ravikumar, G. Anomaly detection using bi-directional long short-term memory networks for cyber-physical electric vehicle charging stations. IEEE Trans. Ind. Cyber-Phys. Syst. 2024, 2, 508–518. [Google Scholar] [CrossRef]

- Wang, W.; Li, Y.; Song, Y. Fault diagnosis method of vehicle engine via HOSVD–HOALS hybrid algorithm-based multi-dimensional feature extraction. Appl. Soft Comput. 2022, 116, 108293. [Google Scholar] [CrossRef]

- Hsu, S.H.; Lee, C.H.; Wu, W.F.; Lee, C.W.; Jiang, J.A. Machine learning-based online multi-fault diagnosis for ims using optimization techniques with stator electrical and vibration data. IEEE Trans. Energy Convers. 2024, 39, 2412–2424. [Google Scholar] [CrossRef]

- Zhang, Z.; Dong, S.; Li, D.; Liu, P.; Wang, Z. Prediction and Diagnosis of Electric Vehicle Battery Fault Based on Abnormal Voltage: Using Decision Tree Algorithm Theories and Isolated Forest. Processes 2024, 12, 136. [Google Scholar] [CrossRef]

- Abed, N.K.; Abed, F.T.; Al-Yasriy, H.F.; ALRikabi, H.T.S. Detection of power transmission lines faults based on voltages and currents values using K-nearest neighbors. Int. J. Power Electron. Drive Syst. 2023, 14, 1033–1043. [Google Scholar] [CrossRef]

- Vasan, V.; Sridharan, N.V.; Prabhakaranpillai Sreelatha, A.; Vaithiyanathan, S. Tire condition monitoring using transfer learning-based deep neural network approach. Sensors 2023, 23, 2177. [Google Scholar] [CrossRef]

- Xie, S.; Li, Y.; Tan, H.; Liu, R.; Zhang, F. Multi-scale and multi-layer perceptron hybrid method for bearings fault diagnosis. Int. J. Mech. Sci. 2022, 235, 107708. [Google Scholar] [CrossRef]

- Vinothini, K.; Harshavardhan, K.; Amerthan, J.; Harish, M. Fault detection of electric vehicle using machine learning algorithm. In Proceedings of the 2022 3rd International Conference on Electronics and Sustainable Communication Systems (ICESC), Coimbatore, India, 17–19 August 2022; pp. 878–881. [Google Scholar]

- Akcan, E.; Kuncan, M.; Kaplan, K.; Kaya, Y. Diagnosing bearing fault location, size, and rotational speed with entropy variables using extreme learning machine. J. Braz. Soc. Mech. Sci. Eng. 2024, 46, 4. [Google Scholar] [CrossRef]

- Matthaiou, I.; Khandelwal, B.; Antoniadou, I. Vibration monitoring of gas turbine engines: Machine-learning approaches and their challenges. Front. Built Environ. 2017, 3, 54. [Google Scholar] [CrossRef]

- Ahmed, R.; El Sayed, M.; Gadsden, S.A.; Tjong, J.; Habibi, S. Automotive internal-combustion-engine fault detection and classification using artificial neural network techniques. IEEE Trans. Veh. Technol. 2014, 64, 21–33. [Google Scholar] [CrossRef]

- Xiao, D.; Huang, Y.; Zhao, L.; Qin, C.; Shi, H.; Liu, C. Domain adaptive motor fault diagnosis using deep transfer learning. IEEE Access 2019, 7, 80937–80949. [Google Scholar] [CrossRef]

- Liu, H.; Zhou, J.; Zheng, Y.; Jiang, W.; Zhang, Y. Fault diagnosis of rolling bearings with recurrent neural network-based autoencoders. ISA Trans. 2018, 77, 167–178. [Google Scholar] [CrossRef] [PubMed]

- Shen, D.; Lyu, C.; Yang, D.; Hinds, G.; Wang, L. Connection fault diagnosis for lithium-ion battery packs in electric vehicles based on mechanical vibration signals and broad belief network. Energy 2023, 274, 127291. [Google Scholar] [CrossRef]

- Sun, L.; Zhan, W.; Tomizuka, M. Probabilistic prediction of interactive driving behavior via hierarchical inverse reinforcement learning. In Proceedings of the 2018 IEEE International Conference on Intelligent Transportation Systems (ITSC), Maui, HI, USA, 4–7 November 2018; pp. 2111–2117. [Google Scholar]

- Band, S.S.; Ardabili, S.; Sookhak, M.; Chronopoulos, A.T.; Elnaffar, S.; Moslehpour, M.; Csaba, M.; Torok, B.; Pai, H.T.; Mosavi, A. When smart cities get smarter via machine learning: An in-depth literature review. IEEE Access 2022, 10, 60985–61015. [Google Scholar] [CrossRef]

- Chen, Y.; Zhao, D.; Lv, L.; Zhang, Q. Multi-task learning for dangerous object detection in autonomous driving. Inf. Sci. 2018, 432, 559–571. [Google Scholar] [CrossRef]

- Lee, H.J.; Kim, K.T.; Park, J.H.; Bere, G.; Ochoa, J.J.; Kim, T. Convolutional neural network-based false battery data detection and classification for battery energy storage systems. IEEE Trans. Energy Convers. 2021, 36, 3108–3117. [Google Scholar] [CrossRef]

- Mansouri, M.; Hajji, M.; Trabelsi, M.; Harkat, M.F.; Al-khazraji, A.; Livera, A.; Nounou, H.; Nounou, M. An effective statistical fault detection technique for grid connected photovoltaic systems based on an improved generalized likelihood ratio test. Energy 2018, 159, 842–856. [Google Scholar] [CrossRef]

- Ng, E.Y.K.; Lim, J.T. Machine learning on fault diagnosis in wind turbines. Fluids 2022, 7, 371. [Google Scholar] [CrossRef]

- Dhanagopal, V. Fault Detection and Prognosis of Aerospace Systems Using Long Short-Term Memory Based Recurrent Neural Networks. Ph.D. Thesis, Toronto Metropolitan University, Toronto, ON, Canada, 2020. [Google Scholar]

- Chen, Z.; Han, F.; Wu, L.; Yu, J.; Cheng, S.; Lin, P.; Chen, H. Random forest based intelligent fault diagnosis for PV arrays using array voltage and string currents. Energy Convers. Manag. 2018, 178, 250–264. [Google Scholar] [CrossRef]

- Fentaye, A.D.; Ul-Haq Gilani, S.I.; Baheta, A.T.; Li, Y.G. Performance-based fault diagnosis of a gas turbine engine using an integrated support vector machine and artificial neural network method. Proc. Inst. Mech. Eng. Part J. Power Energy 2019, 233, 786–802. [Google Scholar] [CrossRef]

- Realpe, M.; Vintimilla, B.; Vlacic, L. Sensor fault detection and diagnosis for autonomous vehicles. In Proceedings of the 2015 4th International Conference on Material Science and Engineering Technology (ICMSET 2015), Singapore, 26–28 October 2015; Volume 30, p. 04003. [Google Scholar]

- Ren, J.; Ren, R.; Green, M.; Huang, X. A deep learning method for fault detection of autonomous vehicles. In Proceedings of the 2019 14th International Conference on Computer Science & Education (ICCSE), Toronto, ON, Canada, 19–21 August 2019; pp. 749–754. [Google Scholar]

- Kosuru, V.S.R.; Kavasseri Venkitaraman, A. A smart battery management system for electric vehicles using deep learning-based sensor fault detection. World Electr. Veh. J. 2023, 14, 101. [Google Scholar] [CrossRef]

- Cavus, M.; Dissanayake, D.; Bell, M. Next Generation of Electric Vehicles: AI-Driven Approaches for Predictive Maintenance and Battery Management. Energies 2025, 18, 1041. [Google Scholar] [CrossRef]

- Vernekar, K.; Kumar, H.; KV, G. Engine gearbox fault diagnosis using machine learning approach. J. Qual. Maint. Eng. 2018, 24, 345–357. [Google Scholar] [CrossRef]

- Arun Balaji, P.; Sugumaran, V. Fault detection of automobile suspension system using decision tree algorithms: A machine learning approach. Proc. Inst. Mech. Eng. Part J. Process Mech. Eng. 2024, 238, 1206–1217. [Google Scholar] [CrossRef]

- Pattipati, K.; Kodali, A.; Luo, J.; Choi, K.; Singh, S.; Sankavaram, C.; Mandal, S.; Donat, W.; Namburu, S.M.; Chigusa, S.; et al. An integrated diagnostic process for automotive systems. In Computational Intelligence in Automotive Applications; Springer: Berlin/Heidelberg, Germany, 2008; pp. 191–218. [Google Scholar]

- Javed, A.R.; Usman, M.; Rehman, S.U.; Khan, M.U.; Haghighi, M.S. Anomaly detection in automated vehicles using multistage attention-based convolutional neural network. IEEE Trans. Intell. Transp. Syst. 2020, 22, 4291–4300. [Google Scholar] [CrossRef]

- Deng, L.; Cheng, Y.; Shi, Y. Fault detection and diagnosis for liquid rocket engines based on long short-term memory and generative adversarial networks. Aerospace 2022, 9, 399. [Google Scholar] [CrossRef]

- Sulaiman, M.H.; Mustaffa, Z. State of charge estimation for electric vehicles using random forest. Green Energy Intell. Transp. 2024, 3, 100177. [Google Scholar] [CrossRef]

- Wang, L.; Zhang, X.; Li, J.; Xv, B.; Fu, R.; Chen, H.; Yang, L.; Jin, D.; Zhao, L. Multi-modal and multi-scale fusion 3D object detection of 4D radar and LiDAR for autonomous driving. IEEE Trans. Veh. Technol. 2022, 72, 5628–5641. [Google Scholar] [CrossRef]

- Naresh, V.S.; Ratnakara Rao, G.V.; Prabhakar, D. Predictive machine learning in optimizing the performance of electric vehicle batteries: Techniques, challenges, and solutions. Data Min. Knowl. Discov. 2024, 14, e1539. [Google Scholar] [CrossRef]

- Safavi, S.; Safavi, M.A.; Hamid, H.; Fallah, S. Multi-sensor fault detection, identification, isolation and health forecasting for autonomous vehicles. Sensors 2021, 21, 2547. [Google Scholar] [CrossRef]

- Okumuş, F.; Sönmez, H.İ.; Safa, A.; Kaya, C.; Kökkülünk, G. Gradient boosting machine for performance and emission investigation of diesel engine fueled with pyrolytic oil–biodiesel and 2-EHN additive. Sustain. Energy Fuels 2023, 7, 4002–4018. [Google Scholar] [CrossRef]

- Trivedi, M.; Kakkar, R.; Gupta, R.; Agrawal, S.; Tanwar, S.; Niculescu, V.C.; Raboaca, M.S.; Alqahtani, F.; Saad, A.; Tolba, A. Blockchain and deep learning-based fault detection framework for electric vehicles. Mathematics 2022, 10, 3626.

- Rahman, M.A.; Rahim, M.A.; Rahman, M.M.; Moustafa, N.; Razzak, I.; Ahmad, T.; Patwary, M.N. A secure and intelligent framework for vehicle health monitoring exploiting big-data analytics. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19727–19742.

- Jeong, Y. Fault detection with confidence level evaluation for perception module of autonomous vehicles based on long short term memory and Gaussian Mixture Model. Appl. Soft Comput. 2023, 149, 111010.

- Shah, U. Fault Detection and Diagnosis in Electric Vehicle Systems using IoT and Machine Learning: A Support Vector Machine Approach. J. Electr. Syst. 2024, 20, 990–999.

- Chen, Z.; Mauricio, A.; Li, W.; Gryllias, K. A deep learning method for bearing fault diagnosis based on cyclic spectral coherence and convolutional neural networks. Mech. Syst. Signal Process. 2020, 140, 106683.

- Shen, X.; Lun, S.; Li, M. Multi-Fault Diagnosis of Electric Vehicle Power Battery Based on Double Fault Window Location and Fast Classification. Electronics 2024, 13, 612.

- Kuutti, S.; Bowden, R.; Jin, Y.; Barber, P.; Fallah, S. A survey of deep learning applications to autonomous vehicle control. IEEE Trans. Intell. Transp. Syst. 2020, 22, 712–733.

- Zhao, Y.P.; Chen, Y.B. Extreme learning machine based transfer learning for aero engine fault diagnosis. Aerosp. Sci. Technol. 2022, 121, 107311.

- Valladares, H.; Li, T.; Zhu, L.; El-Mounayri, H.; Hashem, A.M.; Abdel-Ghany, A.E.; Tovar, A. Gaussian process-based prognostics of lithium-ion batteries and design optimization of cathode active materials. J. Power Sources 2022, 528, 231026.

- Fotias, N.; Bao, R.; Niu, H.; Tiller, M.; McGahan, P.; Ingleby, A. A Modelica Library for Modelling of Electrified Powertrain Digital Twins. In Proceedings of the Modelica Conferences, Linköping, Sweden, 20–24 September 2021; pp. 249–261.

- Cui, J.; Xie, P.; Wang, X.; Wang, J.; He, Q.; Jiang, G. M2FN: An end-to-end multi-task and multi-sensor fusion network for intelligent fault diagnosis. Measurement 2022, 204, 112085.

- Anyanwu, G.O.; Nwakanma, C.I.; Lee, J.M.; Kim, D.S. Novel hyper-tuned ensemble random forest algorithm for the detection of false basic safety messages in internet of vehicles. ICT Express 2023, 9, 122–129.

- Das, K.; Kumar, R.; Krishna, A. Analyzing electric vehicle battery health performance using supervised machine learning. Renew. Sustain. Energy Rev. 2024, 189, 113967.

- Khan, K.; Sohaib, M.; Rashid, A.; Ali, S.; Akbar, H.; Basit, A.; Ahmad, T. Recent trends and challenges in predictive maintenance of aircraft’s engine and hydraulic system. J. Braz. Soc. Mech. Sci. Eng. 2021, 43, 403.

- Wang, H.; Xu, D.; Wen, X.; Song, J.; Li, L. Flight test sensor fault diagnosis based on data-fusion and machine learning method. IEEE Access 2022, 10, 120013–120022.

- Liu, J.; Lei, F.; Pan, C.; Hu, D.; Zuo, H. Prediction of remaining useful life of multi-stage aero-engine based on clustering and LSTM fusion. Reliab. Eng. Syst. Saf. 2021, 214, 107807.

- Fentaye, A.D.; Zaccaria, V.; Kyprianidis, K. Aircraft engine performance monitoring and diagnostics based on deep convolutional neural networks. Machines 2021, 9, 337.

| Technique | Key Features | Advantages | Drawbacks |
|---|---|---|---|
| Deep learning | Automatically extracts complex features from raw data using models like CNNs, RNNs, and YOLO. | Performs well with complex data (images, time series) and needs little feature engineering. | Needs large labeled datasets, can be computationally expensive, and lacks interpretability. |
| Transfer learning | Transfers knowledge from a source task to a related target task, requiring minimal retraining. | Works well with limited labeled data and reduces training time. | Performance drops if the source and target tasks are too different. |
| Hybrid models | Combines different machine learning models (e.g., SVM and Neural Networks). | Improves robustness and combines the strengths of different models. | Designing and tuning can be complex, and training time may increase. |
| Ensemble learning | Combines predictions from multiple models (e.g., bagging, boosting, stacking). | Helps reduce overfitting and improves accuracy. | Can be computationally expensive and less transparent. |
| Fusion strategies | Integrates data from multiple sources at different levels (input, features, or decisions). | Leads to richer representations and more accurate diagnostics. | Requires careful data synchronization and choosing the right fusion method. |
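As a rough illustration of the fusion and ensemble rows in the table above, the following minimal Python sketch contrasts input-level fusion (concatenating per-modality features) with decision-level fusion (averaging class probabilities or taking a majority vote). The function names, the sensor features, and the probability values are all hypothetical and chosen only for illustration; they are not taken from any of the reviewed papers.

```python
from collections import Counter
from typing import Sequence


def early_fusion(features_by_modality: Sequence[Sequence[float]]) -> list[float]:
    """Input-level fusion: concatenate per-modality feature vectors into one."""
    fused: list[float] = []
    for features in features_by_modality:
        fused.extend(features)
    return fused


def late_fusion_average(probs_by_model: Sequence[Sequence[float]]) -> list[float]:
    """Decision-level fusion: average class probabilities across models."""
    n_models = len(probs_by_model)
    n_classes = len(probs_by_model[0])
    return [
        sum(p[c] for p in probs_by_model) / n_models
        for c in range(n_classes)
    ]


def majority_vote(labels: Sequence[str]) -> str:
    """Decision-level fusion: return the most frequently predicted label."""
    return Counter(labels).most_common(1)[0][0]


# Hypothetical features for one sample from two modalities.
vibration = [0.12, 0.87]
temperature = [71.5]
fused_input = early_fusion([vibration, temperature])

# Hypothetical per-model probabilities for the classes ("healthy", "faulty").
model_probs = [[0.7, 0.3], [0.4, 0.6], [0.1, 0.9]]
avg_probs = late_fusion_average(model_probs)
vote = majority_vote(["healthy", "faulty", "faulty"])
```

In this sketch the fused vector would feed a single model, whereas the averaged probabilities and the vote combine outputs of models that were trained separately, which is why decision-level fusion does not need the modalities to be synchronized.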

| Application | Paper | Year | Technique Used |
|---|---|---|---|
| Vehicle Fault Detection | Zhang et al. [48] | 2023 | Convolutional Neural Networks (CNNs) |
| Automotive System Monitoring | Hussain et al. [49] | 2024 | Long Short-Term Memory (LSTM) |
| Engine Fault Diagnosis | Wang et al. [50] | 2022 | Support Vector Machines (SVMs) |
| Brake System Failure Detection | Hsu et al. [51] | 2024 | Random Forest (RF) |
| Vehicle Battery Health Monitoring | Zhang et al. [52] | 2020 | Decision Trees (DTs) |
| Transmission Fault Detection | Abed et al. [53] | 2020 | K-Nearest Neighbors (KNN) |
| Vehicle Condition Monitoring | Vasan et al. [54] | 2022 | Deep Neural Networks (DNNs) |
| Hybrid Vehicle Fault Diagnosis | Xie et al. [55] | 2021 | Multi-Layer Perceptron (MLP) |
| Fault Detection in Electric Vehicles | Vinothini et al. [56] | 2020 | Naive Bayes Classifier (NBC) |
| Electric Motor Fault Diagnosis | Akcan et al. [57] | 2021 | Extreme Learning Machine (ELM) |
| Engine Vibration Monitoring | Matthaiou et al. [58] | 2020 | Gaussian Process (GP) |
| Vehicle Powertrain Fault Detection | Ahmed et al. [59] | 2021 | Artificial Neural Networks (ANNs) |
| Hybrid Electric Vehicle Fault Diagnosis | Xiao et al. [60] | 2022 | Transfer Learning (TL) |
| Automotive Sensor Fault Detection | Liu et al. [61] | 2020 | Recurrent Neural Networks (RNNs) |
| Vehicle Vibration Diagnosis | Shen et al. [62] | 2021 | Deep Belief Networks (DBNs) |
| Autonomous Vehicle Fault Detection | Chen et al. [41] | 2021 | Multimodal Feature Fusion |
| Driver Behavior Prediction | Sun et al. [63] | 2020 | Reinforcement Learning (RL) |
| Vehicle Monitoring in Smart Cities | Band et al. [64] | 2020 | Hybrid Machine Learning Models |
| Fault Detection in Autonomous Cars | Chen et al. [65] | 2022 | Multi-Task Learning (MTL) |
| Battery Management System Faults | Lee et al. [66] | 2020 | Deep Convolutional Networks (DCNs) |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Said, N.; Mansouri, M.; Al Hmouz, R.; Khedher, A. Deep Learning Techniques for Fault Diagnosis in Interconnected Systems: A Comprehensive Review and Future Directions. Appl. Sci. 2025, 15, 6263. https://doi.org/10.3390/app15116263
