Assessing the Selection of Digital Learning Materials: A Facet of Pre-Service Teachers’ Digital Competence

Abstract

1. Introduction

2. Theoretical Background

2.1. Teacher Competence in Teacher Education

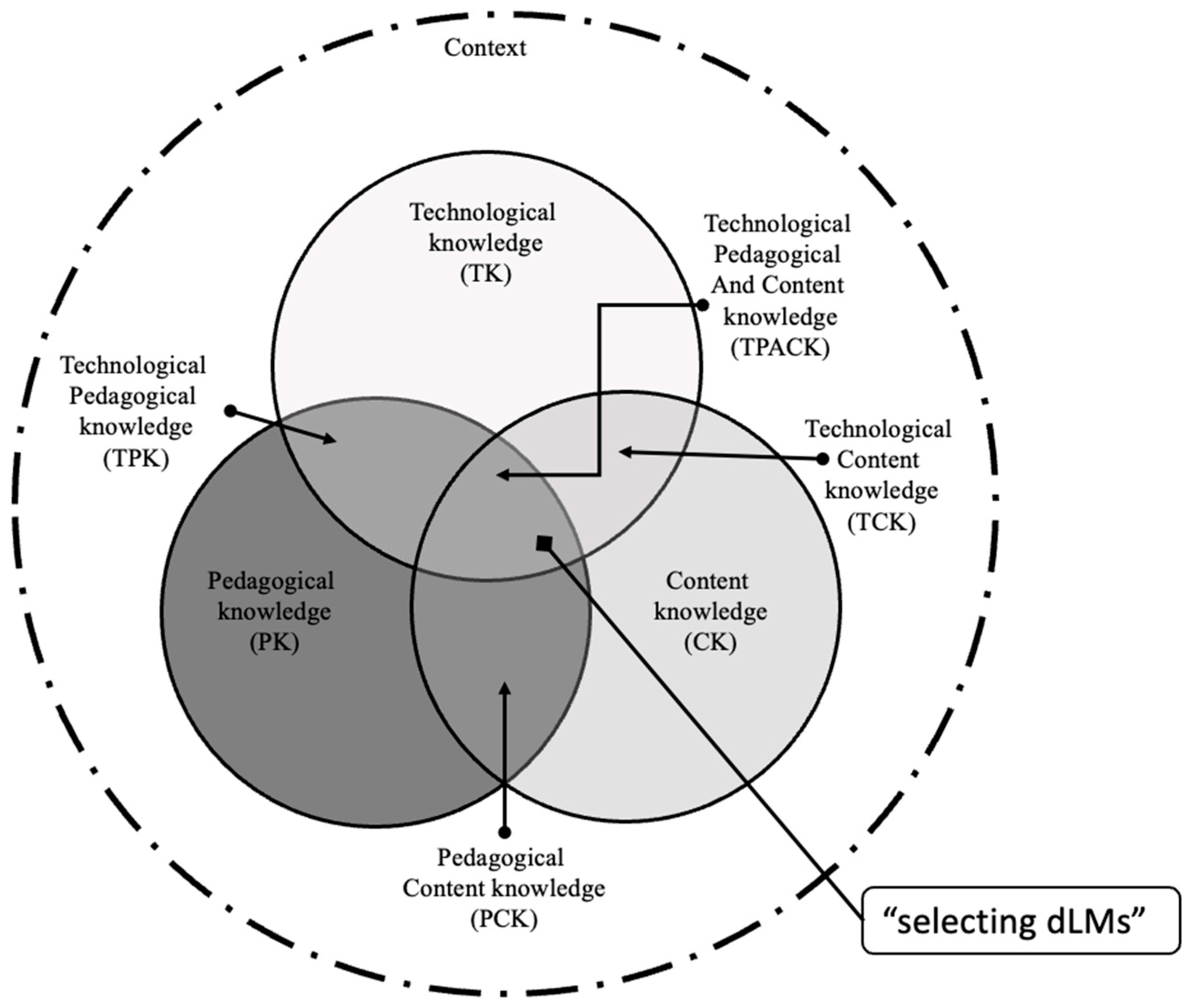

2.2. TPACK Framework

2.2.1. TCK-Reasoning

2.2.2. TPK-Reasoning

3. Materials and Methods

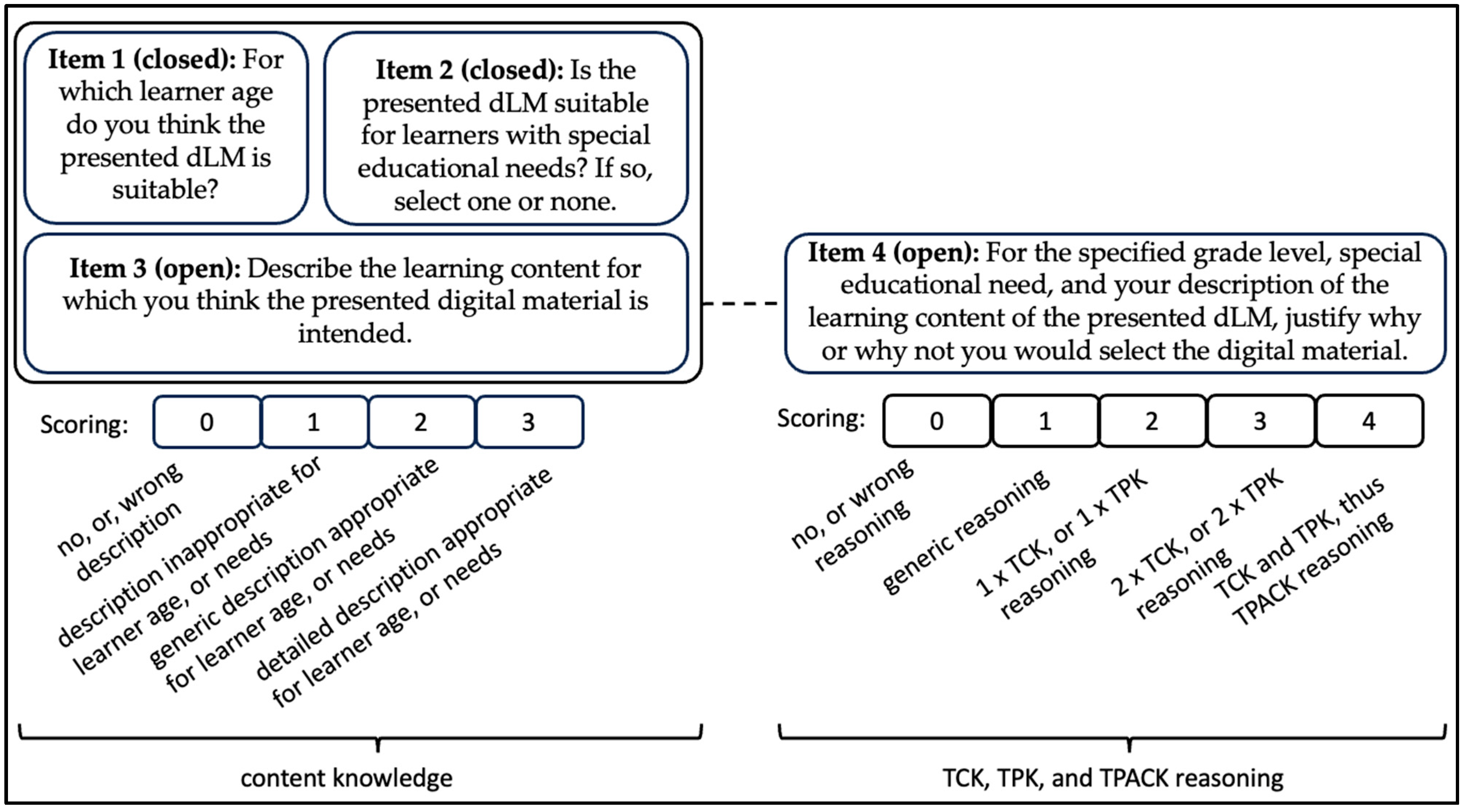

3.1. Research Objective One: Design of Open-Text Items for Assessing “Selecting dLMs”

3.2. Research Objective One: Scoring of the Designed Open-Text Items for Assessing “Selecting dLMs”

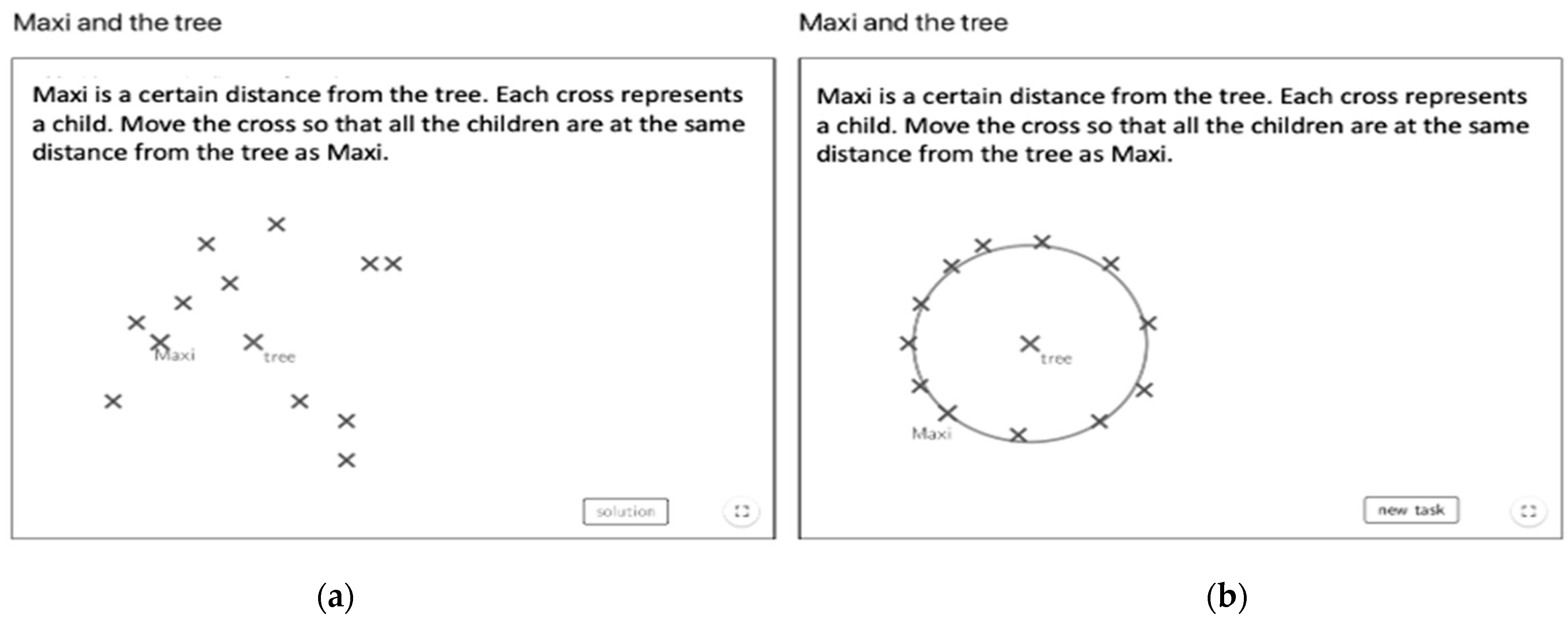

3.3. Description of the dLMs Used in the Studies for Evaluating the Designed Instrument

3.4. Participants and Design of Study 1 and Study 2

3.4.1. Design of Study 1 for Research Objective One: Design of Open-Text Items for Assessing “Selecting dLMs”

3.4.2. Design of Study 2 for Research Objective Two: Empirical Investigation of Pre-Service Mathematics Teachers Using the New Instrument to Assess “Selecting dLMs”

4. Results

4.1. Results for Research Objective One: Design of Open-Text Items for Assessing “Selecting dLMs”

4.2. Results for Research Objective Two: Empirical Investigation of Pre-Service Mathematics Teachers Using the New Instrument to Assess “Selecting dLMs”

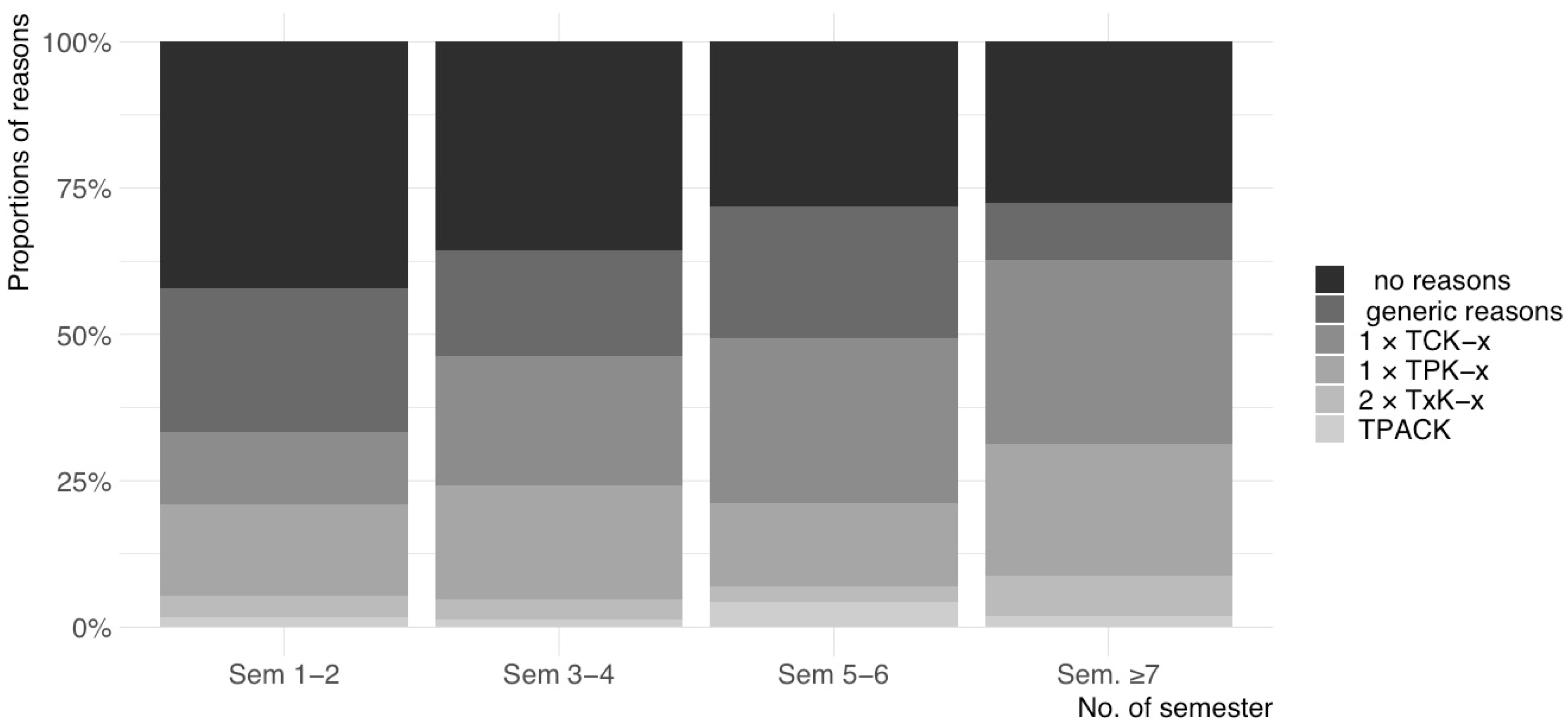

4.2.1. RQ 2.1: Can the Designed Instrument for “Selecting dLMs” Assess Different Levels Regarding the Number of Semesters of Study, and Are the Results Distinguishable from TPACK Self-Report Results?

4.2.2. RQ 2.2: What Is the Relationship Between the Designed Items?

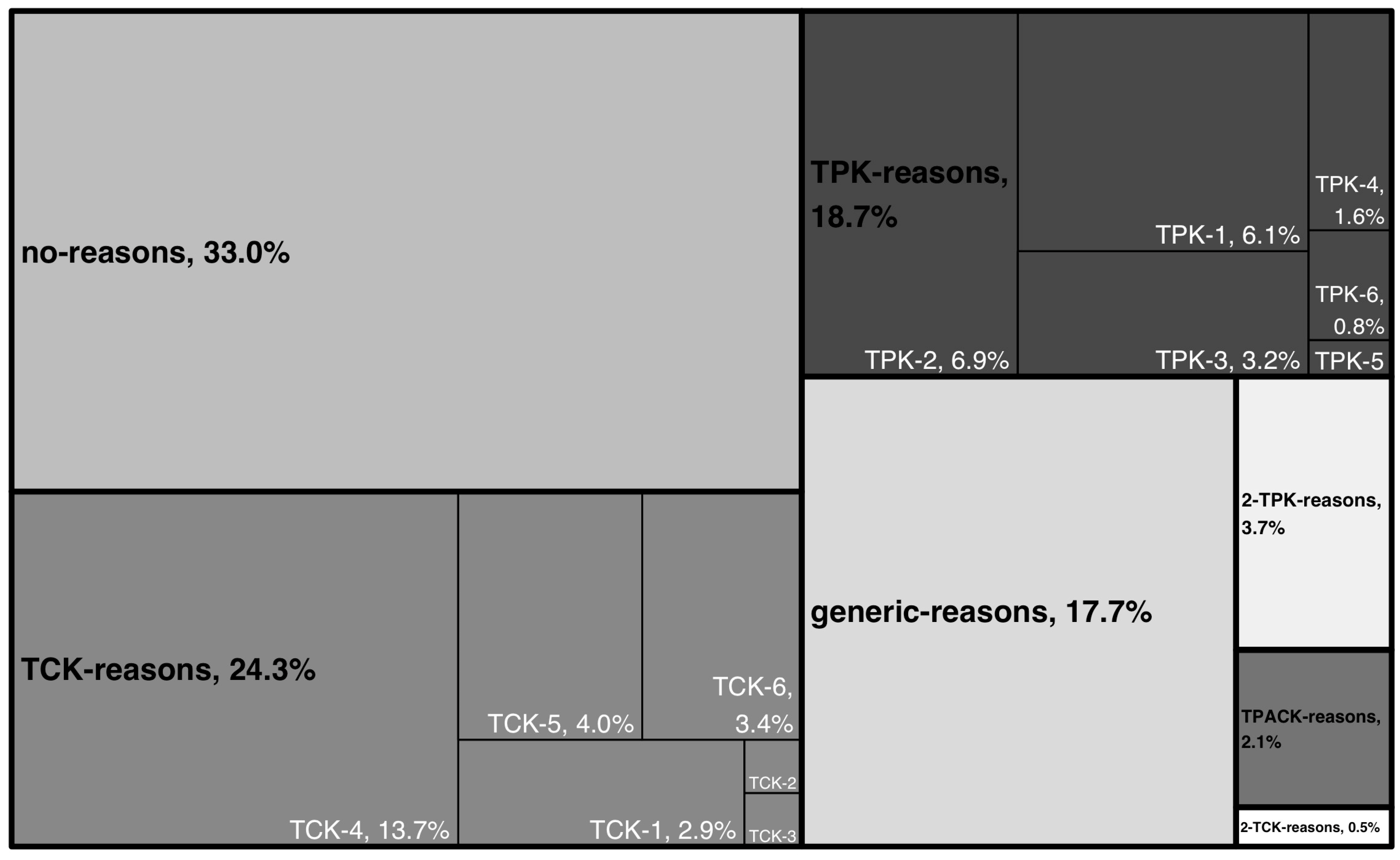

4.2.3. RQ 2.3: What Specific Reasoning Is Considered When “Selecting dLMs”?

5. Discussion

5.1. Discussion Research Objective One: Design of Open-Text Items for Assessing “Selecting dLMs”

5.2. Discussion Research Objective Two: Empirical Investigation of Pre-Service Mathematics Teachers Using the New Instrument to Assess “Selecting dLMs”

5.3. Implications for Teacher Education and the Development of “Selecting dLMs”

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Description of dLM1 Used in Studies 1 and 2

Appendix A.2. Sample Responses for dLM1–dLM4 for Items 1–3

| Score | dLM1 (Circle and Property of Radius) [65] | dLM2 (Symmetry and Axis of Symmetry) [66] | dLM3 (Generate Bar Charts) [67] | dLM4 (Arithmetic Mean) [68] |
|---|---|---|---|---|
| No or wrong description (score 0) | “geometry, shapes, figures” | “spatial thinking” | “visualization, sizes and quantities” | “probability” |
| Description inappropriate for learner age or needs (score 1) | 7–8th grade and no special educational needs; “circles and the properties of circles (radius...)” | 7–8th grade and no special educational needs; “symmetry” | 1–2nd grade and learning disabilities; “bar charts” | 7–8th grade and hearing impairment; “arithmetic mean” |
| Generic description appropriate for learner age or needs (score 2) | “the content is useful for introducing circles and their radius” | “axis mirroring” | “bar charts” | “arithmetic mean” |
| Detailed description appropriate for learner age or needs (score 3) | “To introduce the circle. Pupils should be made aware that every point on the circle is exactly the same distance from the center.” | “the material presents that the dimensions of the mirrored object remain the same size when mirrored at a straight line.” | “It is about absolute frequencies and the creation of bar charts.” | “The dLM evaluates students’ understanding of how to calculate the arithmetic mean, ... helping students to grasp the underlying principles of the calculation.” |

Appendix A.3. Sample Responses for dLM1–dLM4 for Item 4

| Score | dLM1 (Circle and Property of Radius) [65] | dLM2 (Symmetry and Axis of Symmetry) [66] | dLM3 (Generate Bar Charts) [67] | dLM4 (Arithmetic Mean) [68] |
|---|---|---|---|---|
| No or wrong reasoning (score 0) | “I don’t see much point in the dLM” | “There are better visual examples” | “Would rather do it analogue” | “I don’t know” |
| Generic reasoning (score 1) | “a good concept that combines math with technology” | “good representation of the principle of symmetry” | “simple and nice” | “a good task for calculating” |
| 1 × TCK or 1 × TPK reasoning (score 2) | “I wouldn’t use the learning environment in a setting where students need support. It requires a lot of cognitive skills to comprehend the task and be able to visualize it...” (TCK-6) | “It is fun and motivating for the children to watch how the butterfly can move its wings.” (TPK-1) | “It is a good activity to check whether students understand the representation of the bar chart without having to draw a chart themselves (saves time).” (TCK-4) | “...learners can all work self-regulated, there are solution hints...” (TPK-2) |
| 2 × TCK or 2 × TPK reasoning (score 3) | “Learners self-regulate how it is possible to solve the task and thus learn an important property of the circle (radius) in a playful way” (TPK-1, TPK-3) | “Students learn about symmetries through play, students can learn about the properties of symmetries through experimentation, which would be more difficult without digital media” (TPK-1, TPK-3) | “...motivational, context accessible to all learners...” (TPK-1, TPK-4) | “...as everyone can work on the tasks at their own pace and it can be a motivating factor for the children to work digitally and see results immediately...” (TPK-1, TPK-2) |
| TCK and TPK, thus TPACK reasoning (score 4) | “I would use this learning environment because it is enactive and visual learning that actively engages students in the learning process. Through the concrete task of positioning x in a circle around the tree, the children experience geometric concepts such as radius, center, and circle shape. This not only promotes an understanding of abstract mathematical concepts, [...] the ability to recognize connections” (TCK-2, TPK-1) | “I wouldn’t use the learning environment... For example, the task is too abstract for learners or offers too few differentiated approaches to understand the core of axial symmetry. If there’s no way to adapt the task to different learning levels, some students might be overwhelmed or under-challenged.” (TCK-6, TPK-4) | “...without requiring learners to do a lot of drawing. Learners can easily experiment and check their solutions independently. Doing this on paper would waste lesson time and verification of results is time-consuming for teachers...” (TCK-4, TPK-3) | “It assesses students’ understanding of calculating the arithmetic mean. Students are forced to rethink their learned knowledge of calculation and can thus better reflect on the arithmetic mean calculation. However, I view this learning environment more as a test to determine the extent to which students have internalized the subject matter they have learned.” (TCK-4, TPK-2) |
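The Item 4 rubric in Appendix A.3 can be read as a simple rule over the TCK-x and TPK-x codes that a rater assigns to a response. The following Python sketch is illustrative only and is not the authors' scoring procedure. The function name `score_item4` and the `generic_reasoning` flag are hypothetical; the distinction between scores 0 and 1 is left to the human rater, and treating more than two codes of a single type as score 3 is an assumption, since the rubric does not address that case.

```python
from typing import Iterable


def score_item4(codes: Iterable[str], generic_reasoning: bool = False) -> int:
    """Map the codes assigned to one Item 4 response onto the 0-4 rubric score.

    codes: labels such as "TCK-4" or "TPK-1" assigned by a human rater.
    generic_reasoning: rater judgment used only when no code was assigned
        (hypothetical flag; separates score 1 from score 0).
    """
    # Count each distinct code once, ignoring case and surrounding spaces.
    unique = {code.strip().upper() for code in codes}
    n_tck = sum(1 for code in unique if code.startswith("TCK-"))
    n_tpk = sum(1 for code in unique if code.startswith("TPK-"))

    if n_tck and n_tpk:
        return 4  # TCK and TPK together, thus TPACK reasoning
    if max(n_tck, n_tpk) >= 2:
        return 3  # two (assumed: or more) codes of a single type
    if n_tck + n_tpk == 1:
        return 2  # exactly one TCK or one TPK code
    return 1 if generic_reasoning else 0  # no codes: rater decides 1 vs 0


if __name__ == "__main__":
    print(score_item4(["TCK-6", "TPK-4"]))           # dLM2 sample, score 4 row
    print(score_item4(["TPK-1", "TPK-3"]))           # dLM1 sample, score 3 row
    print(score_item4(["TCK-4"]))                    # dLM3 sample, score 2 row
    print(score_item4([], generic_reasoning=True))   # generic reasoning
```

Keeping the rater's judgment as an explicit input, rather than inferring it from the response text, reflects that the codes in the sample responses were assigned by human raters.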

References

1. Drijvers, P.; Sinclair, N. The Role of Digital Technologies in Mathematics Education: Purposes and Perspectives. ZDM Math. Educ. 2023, 56, 239–248.
2. Roblyer, M.D.; Hughes, J.E. Integrating Educational Technology into Teaching: Transforming Learning Across Disciplines, 8th ed.; Pearson Education, Inc.: New York, NY, USA, 2019; ISBN 978-0-13-474641-8.
3. Weinhandl, R.; Houghton, T.; Lindenbauer, E.; Mayerhofer, M.; Lavicza, Z.; Hohenwarter, M. Integrating Technologies Into Teaching and Learning Mathematics at the Beginning of Secondary Education in Austria. Eurasia J. Math. Sci. Technol. Educ. 2021, 17, 1–15.
4. Engelbrecht, J.; Llinares, S.; Borba, M.C. Transformation of the Mathematics Classroom with the Internet. ZDM Math. Educ. 2020, 52, 825–841.
5. OECD. The Future of Education and Skills: Education 2030; OECD: Paris, France, 2018.
6. Weigand, H.-G.; Trgalova, J.; Tabach, M. Mathematics Teaching, Learning, and Assessment in the Digital Age. ZDM Math. Educ. 2024, 56, 525–541.
7. Heine, S.; König, J.; Krepf, M. Digital Resources as an Aspect of Teacher Professional Digital Competence: One Term, Different Definitions—A Systematic Review. Educ. Inf. Technol. 2022, 28, 3711–3738.
8. Clark-Wilson, A.; Robutti, O.; Thomas, M. Teaching with Digital Technology. ZDM Math. Educ. 2020, 52, 1223–1242.
9. Gonscherowski, P.; Rott, B. How Do Pre-/In-Service Mathematics Teachers Reason for or against the Use of Digital Technology in Teaching? Mathematics 2022, 10, 2345.
10. Lindenbauer, E.; Infanger, E.-M.; Lavicza, Z. Enhancing Mathematics Education through Collaborative Digital Material Design: Lessons from a National Project. Eur. J. Sci. Math. Educ. 2024, 12, 276–296.
11. Gonscherowski, P.; Rott, B. Selecting Digital Technology: A Review of TPACK Instruments. In Proceedings of the 46th Conference of the International Group for the Psychology of Mathematics Education, Haifa, Israel, 16–21 July 2023; Ayalon, M., Koichu, B., Leikin, R., Rubel, L., Tabach, M., Eds.; PME: Haifa, Israel, 2023; Volume 2, pp. 378–386.
12. König, J.; Heine, S.; Kramer, C.; Weyers, J.; Becker-Mrotzek, M.; Großschedl, J.; Hanisch, C.; Hanke, P.; Hennemann, T.; Jost, J.; et al. Teacher Education Effectiveness as an Emerging Research Paradigm: A Synthesis of Reviews of Empirical Studies Published over Three Decades (1993–2023). J. Curric. Stud. 2023, 56, 371–391.
13. Schmidt, W.H.; Xin, T.; Guo, S.; Wang, X. Achieving Excellence and Equality in Mathematics: Two Degrees of Freedom? J. Curric. Stud. 2022, 54, 772–791.
14. Handal, B.; Campbell, C.; Cavanagh, M.; Petocz, P. Characterising the Perceived Value of Mathematics Educational Apps in Preservice Teachers. Math. Educ. Res. J. 2016, 28, 199–221.
15. Valtonen, T.; Leppänen, U.; Hyypiä, M.; Sointu, E.; Smits, A.; Tondeur, J. Fresh Perspectives on TPACK: Pre-Service Teachers’ Own Appraisal of Their Challenging and Confident TPACK Areas. Educ. Inf. Technol. 2020, 25, 2823–2842.
16. Mishra, P.; Koehler, M.J. Technological Pedagogical Content Knowledge: A Framework for Teacher Knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054.
17. Koehler, M.J.; Shin, T.S.; Mishra, P. How Do We Measure TPACK? Let Me Count the Ways. In A Research Handbook on Frameworks and Approaches; Ronau, R.N., Rakes, C.R., Niess, M.L., Eds.; IGI Global: Hershey, PA, USA, 2011; pp. 16–31. ISBN 978-1-60960-750-0.
18. Schmid, M.; Brianza, E.; Mok, S.Y.; Petko, D. Running in Circles: A Systematic Review of Reviews on Technological Pedagogical Content Knowledge (TPACK). Comput. Educ. 2024, 214, 105024.
19. Karakaya Cirit, D.; Canpolat, E. A Study on the Technological Pedagogical Contextual Knowledge of Science Teacher Candidates across Different Years of Study. Educ. Inf. Technol. 2019, 24, 2283–2309.
20. von Kotzebue, L. Beliefs, Self-Reported or Performance-Assessed TPACK: What Can Predict the Quality of Technology-Enhanced Biology Lesson Plans? J. Sci. Educ. Technol. 2022, 31, 570–582.
21. Fabian, A.; Fütterer, T.; Backfisch, I.; Lunowa, E.; Paravicini, W.; Hübner, N.; Lachner, A. Unraveling TPACK: Investigating the Inherent Structure of TPACK from a Subject-Specific Angle Using Test-Based Instruments. Comput. Educ. 2024, 217, 105040.
22. Lachner, A.; Fabian, A.; Franke, U.; Preiß, J.; Jacob, L.; Führer, C.; Küchler, U.; Paravicini, W.; Randler, C.; Thomas, P. Fostering Pre-Service Teachers’ Technological Pedagogical Content Knowledge (TPACK): A Quasi-Experimental Field Study. Comput. Educ. 2021, 174, 104304.
23. Jin, Y.; Harp, C. Examining Preservice Teachers’ TPACK, Attitudes, Self-Efficacy, and Perceptions of Teamwork in a Stand-Alone Educational Technology Course Using Flipped Classroom or Flipped Team-Based Learning Pedagogies. J. Digit. Learn. Teach. Educ. 2020, 36, 166–184.
24. Mouza, C.; Nandakumar, R.; Yilmaz Ozden, S.; Karchmer-Klein, R. A Longitudinal Examination of Preservice Teachers’ Technological Pedagogical Content Knowledge in the Context of Undergraduate Teacher Education. Action Teach. Educ. 2017, 39, 153–171.
25. Pekkan, Z.T.; Ünal, G. Technology Use: Analysis of Lesson Plans on Fractions in an Online Laboratory School. In Proceedings of the 45th Conference of the International Group for the Psychology of Mathematics Education, Alicante, Spain, 18–23 July 2022; Fernández, C., Llinares, S., Gutiérrez, A., Planas, N., Eds.; PME: Alicante, Spain, 2022; Volume 4, p. 410, ISBN 978-84-1302-178-2.
26. McCulloch, A.; Leatham, K.; Bailey, N.; Cayton, C.; Fye, K.; Lovett, J. Theoretically Framing the Pedagogy of Learning to Teach Mathematics with Technology. Contemp. Issues Technol. Teach. Educ. (CITE J.) 2021, 21, 325–359.
27. Tseng, S.-S.; Yeh, H.-C. Fostering EFL Teachers’ CALL Competencies through Project-Based Learning. Educ. Technol. Soc. 2019, 22, 94–105.
28. Revuelta-Domínguez, F.-I.; Guerra-Antequera, J.; González-Pérez, A.; Pedrera-Rodríguez, M.-I.; González-Fernández, A. Digital Teaching Competence: A Systematic Review. Sustainability 2022, 14, 6428.
29. Yeh, Y.; Hsu, Y.; Wu, H.; Hwang, F.; Lin, T. Developing and Validating Technological Pedagogical Content Knowledge—Practical TPACK through the Delphi Survey Technique. Br. J. Educ. Technol. 2014, 45, 707–722.
30. Grimm, P. Social Desirability Bias. In Wiley International Encyclopedia of Marketing; Sheth, J., Malhotra, N., Eds.; Wiley: Hoboken, NJ, USA, 2010; ISBN 978-1-4051-6178-7.
31. Safrudiannur. Measuring Teachers’ Beliefs Quantitatively: Criticizing the Use of Likert Scale and Offering a New Approach; Springer Fachmedien Wiesbaden: Wiesbaden, Germany, 2020.
32. Schmid, M.; Brianza, E.; Petko, D. Self-Reported Technological Pedagogical Content Knowledge (TPACK) of Pre-Service Teachers in Relation to Digital Technology Use in Lesson Plans. Comput. Hum. Behav. 2021, 115, 106586.
33. Kaiser, G.; König, J. Competence Measurement in (Mathematics) Teacher Education and Beyond: Implications for Policy. High. Educ. Policy 2019, 32, 597–615.
34. Blömeke, S.; Gustafsson, J.-E.; Shavelson, R.J. Beyond Dichotomies: Competence Viewed as a Continuum. Z. Psychol. 2015, 223, 3–13.
35. Deng, Z. Powerful Knowledge, Educational Potential and Knowledge-Rich Curriculum: Pushing the Boundaries. J. Curric. Stud. 2022, 54, 599–617.
36. Yang, X.; Deng, J.; Sun, X.; Kaiser, G. The Relationship between Opportunities to Learn in Teacher Education and Chinese Preservice Teachers’ Professional Competence. J. Curric. Stud. 2024, 1–19.
37. Schleicher, A. PISA 2022 Insights and Interpretations; OECD: Paris, France, 2023.
38. Hattie, J. Visible Learning, the Sequel: A Synthesis of over 2,100 Meta-Analyses Relating to Achievement, 1st ed.; Routledge: New York, NY, USA, 2023; ISBN 978-1-0719-1701-5.
39. Koehler, M.J.; Mishra, P.; Cain, W. What Is Technological Pedagogical Content Knowledge (TPACK)? J. Educ. 2013, 193, 13–19.
40. Shulman, L.S. Those Who Understand: Knowledge Growth in Teaching. Educ. Res. 1986, 15, 4–14.
41. Reinhold, F.; Leuders, T.; Loibl, K.; Nückles, M.; Beege, M.; Boelmann, J.M. Learning Mechanisms Explaining Learning with Digital Tools in Educational Settings: A Cognitive Process Framework. Educ. Psychol. Rev. 2024, 36, 14.
42. Mishra, P.; Warr, M. Contextualizing TPACK within Systems and Cultures of Practice. Comput. Hum. Behav. 2021, 117, 106673.
43. Tabach, M.; Trgalová, J. Teaching Mathematics in the Digital Era: Standards and Beyond. In STEM Teachers and Teaching in the Digital Era; Ben-David Kolikant, Y., Martinovic, D., Milner-Bolotin, M., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 221–242. ISBN 978-3-030-29395-6.
44. Redecker, C.; Punie, Y. Digital Competence of Educators; Publications Office of the European Union: Seville, Spain, 2017.
45. Grossman, P.L. Teaching Core Practices in Teacher Education; Core Practices in Education Series; Harvard Education Press: Cambridge, MA, USA, 2018; ISBN 978-1-68253-187-7.
46. Anderson, S.; Griffith, R.; Crawford, L. TPACK in Special Education: Preservice Teacher Decision Making While Integrating iPads into Instruction. Contemp. Issues Technol. Teach. Educ. (CITE J.) 2017, 17, 97–127.
47. Bonafini, F.C.; Lee, Y. Investigating Prospective Teachers’ TPACK and Their Use of Mathematical Action Technologies as They Create Screencast Video Lessons on iPads. TechTrends Link. Res. Pract. Improv. Learn. 2021, 65, 303–319.
48. Hillmayr, D.; Ziernwald, L.; Reinhold, F.; Hofer, S.I.; Reiss, K.M. The Potential of Digital Tools to Enhance Mathematics and Science Learning in Secondary Schools: A Context-Specific Meta-Analysis. Comput. Educ. 2020, 153, 103897.
49. Morgan, C.; Kynigos, C. Digital Artefacts as Representations: Forging Connections between a Constructionist and a Social Semiotic Perspective. Educ. Stud. Math. 2014, 85, 357–379.
50. Moreno-Armella, L.; Hegedus, S.J.; Kaput, J.J. From Static to Dynamic Mathematics: Historical and Representational Perspectives. Educ. Stud. Math. 2008, 68, 99–111.
51. Sweller, J.; van Merriënboer, J.J.G.; Paas, F. Cognitive Architecture and Instructional Design: 20 Years Later. Educ. Psychol. Rev. 2019, 31, 261–292.
52. Pusparini, F.; Riandi, R.; Sriyati, S. Developing Technological Pedagogical Content Knowledge (TPACK) in Animal Physiology. J. Phys. Conf. Ser. 2017, 895, 012052.
53. Solvang, L.; Haglund, J. How Can GeoGebra Support Physics Education in Upper-Secondary School—A Review. Phys. Educ. 2021, 56, 055011.
54. Turan, Z.; Karabey, S.C. The Use of Immersive Technologies in Distance Education: A Systematic Review. Educ. Inf. Technol. 2023, 28, 16041–16064.
55. Rüth, M.; Breuer, J.; Zimmermann, D.; Kaspar, K. The Effects of Different Feedback Types on Learning with Mobile Quiz Apps. Front. Psychol. 2021, 12, 665144.
56. Drijvers, P. Digital Technology in Mathematics Education: Why It Works (Or Doesn’t). In Selected Regular Lectures from the 12th International Congress on Mathematical Education; Cho, S.J., Ed.; Springer International Publishing: Cham, Switzerland, 2015; pp. 135–151. ISBN 978-3-319-17186-9.
57. Gerhard, K.; Jäger-Biela, D.J.; König, J. Opportunities to Learn, Technological Pedagogical Knowledge, and Personal Factors of Pre-Service Teachers: Understanding the Link between Teacher Education Program Characteristics and Student Teacher Learning Outcomes in Times of Digitalization. Z. Erzieh. 2023, 26, 653–676.
58. Drijvers, P.; Ball, L.; Barzel, B.; Heid, M.K.; Cao, Y.; Maschietto, M. Uses of Technology in Lower Secondary Mathematics Education: A Concise Topical Survey; Kaiser, G., Ed.; ICME-13 Topical Surveys; Springer International Publishing: Cham, Switzerland, 2016; ISBN 978-3-319-33665-7.
59. Molenaar, I.; Boxtel, C.; Sleegers, P. Metacognitive Scaffolding in an Innovative Learning Arrangement. Instr. Sci. 2011, 39, 785–803.
60. Rott, B. Inductive and Deductive Justification of Knowledge: Epistemological Beliefs and Critical Thinking at the Beginning of Studying Mathematics. Educ. Stud. Math. 2021, 106, 117–132.
61. Guillén-Gámez, F.D.; Mayorga-Fernández, M.J.; Bravo-Agapito, J.; Escribano-Ortiz, D. Analysis of Teachers’ Pedagogical Digital Competence: Identification of Factors Predicting Their Acquisition. Technol. Knowl. Learn. 2021, 26, 481–498.
62. Gonscherowski, P.; Rott, B. Measuring Digital Competencies of Pre-Service Teachers-a Pilot Study. In Proceedings of the 44th Conference of the International Group for the Psychology of Mathematics Education, Khon Kaen, Thailand, 19–22 July 2021; Volume 1, p. 143.
63. Gonscherowski, P.; Rott, B. Instrument to Assess the Knowledge and the Skills of Mathematics Educators’ Regarding Digital Technology. In Proceedings of the Beiträge zum Mathematikunterricht 2022; WTM: Frankfurt, Germany, 2022; Volume 3, p. 1424.
64. GeoGebra Team. Classroom Resources. Available online: https://www.geogebra.org/materials (accessed on 23 May 2023).
65. FLINK. Maxi und der Baum—GeoGebra. Available online: https://www.geogebra.org/m/a4pppe7a (accessed on 10 July 2021).
66. Schüngel, M. Bewege Den Schmetterling—GeoGebra. Available online: https://www.geogebra.org/m/zrj2zcam (accessed on 28 February 2022).
67. FLINK. Lieblingssport—GeoGebra. Available online: https://www.geogebra.org/m/v4xuvmhf (accessed on 28 February 2023).
68. FLINK. Welche Zahl Fehlt?—GeoGebra. Available online: https://www.geogebra.org/m/qqv3kxt6 (accessed on 28 February 2023).
69. Cohen, J. Quantitative Methods in Psychology: A Power Primer. Psychol. Bull. 1992, 112, 155–159.
70. Cicchetti, D.V. Guidelines, Criteria, and Rules of Thumb for Evaluating Normed and Standardized Assessment Instruments in Psychology. Psychol. Assess. 1994, 6, 284–290.
71. Landis, J.R.; Koch, G.G. The Measurement of Observer Agreement for Categorical Data. Biometrics 1977, 33, 159–174.
72. Taber, K.S. The Use of Cronbach’s Alpha When Developing and Reporting Research Instruments in Science Education. Res. Sci. Educ. 2018, 48, 1273–1296.
73. Janssen, N.; Knoef, M.; Lazonder, A.W. Technological and Pedagogical Support for Pre-Service Teachers’ Lesson Planning. Technol. Pedagog. Educ. 2019, 28, 115–128.
74. König, J.; Heine, S.; Jäger-Biela, D.; Rothland, M. ICT Integration in Teachers’ Lesson Plans: A Scoping Review of Empirical Studies. Eur. J. Teach. Educ. 2022, 47, 821–849.
75. Brianza, E.; Schmid, M.; Tondeur, J.; Petko, D. Uncovering Relations between Self-Reported TPACK and Objective Measures: Accounting for Experience. In Proceedings of Society for Information Technology & Teacher Education International Conference; Cohen, R.J., Ed.; Association for the Advancement of Computing in Education (AACE): Orlando, FL, USA, 2025; pp. 3114–3123.
76. Thurm, D.; Barzel, B. Teaching Mathematics with Technology: A Multidimensional Analysis of Teacher Beliefs. Educ. Stud. Math. 2022, 109, 41–63.
77. Chen, W.; Tan, J.S.H.; Pi, Z. The Spiral Model of Collaborative Knowledge Improvement: An Exploratory Study of a Networked Collaborative Classroom. Int. J. Comput. Support. Collab. Learn. 2021, 16, 7–35.
78. Purwaningsih, E.; Nurhadi, D.; Masjkur, K. TPACK Development of Prospective Physics Teachers to Ease the Achievement of Learning Objectives: A Case Study at the State University of Malang, Indonesia. J. Phys. Conf. Ser. 2019, 1185, 012042.
79. Curran, P.G. Methods for the Detection of Carelessly Invalid Responses in Survey Data. J. Exp. Soc. Psychol. 2016, 66, 4–19.
80. Gonzalez, M.J.; González-Ruiz, I. Behavioural Intention and Pre-Service Mathematics Teachers’ Technological Pedagogical Content Knowledge. Eurasia J. Math. Sci. Technol. Educ. 2017, 13, 601–620.
81. Knievel, I.; Lindmeier, A.M.; Heinze, A. Beyond Knowledge: Measuring Primary Teachers’ Subject-Specific Competences in and for Teaching Mathematics with Items Based on Video Vignettes. Int. J. Sci. Math. Educ. 2015, 13, 309–329.
82. Weyers, J.; Kramer, C.; Kaspar, K.; König, J. Measuring Pre-Service Teachers’ Decision-Making in Classroom Management: A Video-Based Assessment Approach. Teach. Teach. Educ. 2024, 138, 104426.
83. Baumanns, L.; Pohl, M. Leveraging ChatGPT for Problem Posing: An Exploratory Study of Pre-Service Teachers’ Professional Use of AI. In Proceedings of the Mathematics Education in the Digital Age 4 (MEDA4), Bari, Italy, 3–6 September 2024.
84. Cai, J.; Rott, B. On Understanding Mathematical Problem-Posing Processes. ZDM Math. Educ. 2024, 56, 61–71.
85. Trixa, J.; Kaspar, K. Information Literacy in the Digital Age: Information Sources, Evaluation Strategies, and Perceived Teaching Competences of Pre-Service Teachers. Front. Psychol. 2024, 15, 1336436.
86. Sen, M.; Demirdögen, B. Seeking Traces of Filters and Amplifiers as Pre-Service Teachers Perform Their Pedagogical Content Knowledge. Sci. Educ. Int. 2023, 34, 58–68.
87. Brungs, C.L.; Buchholtz, N.; Streit, H.; Theile, Y.; Rott, B. Empirical Reconstruction of Mathematics Teaching Practices in Problem-Solving Lessons: A Multi-Method Case Study. Front. Educ. 2025, 10, 1555763.

| TPACK Component | Code # | Description of the Code |
|---|---|---|
| TCK-x | Different (TCK-1), real-world (TCK-2), or dynamic representation (TCK-3) | Reasoning that includes the selection of dLMs enabling new, real-world, and dynamic ways of presenting learning content that would not be possible with traditional material. |
| TCK-x | Decrease in (TCK-4), modification of (TCK-5), or increase in (TCK-6) extraneous cognitive load | Reasoning that dLMs support learning (decreasing extraneous cognitive load), change learning (modifying extraneous cognitive load), or hinder learning (increasing extraneous cognitive load). |
| TPK-x | Motivation (TPK-1) | Reasoning that dLMs increase learners’ motivation or engagement. |
| TPK-x | Self-regulated learning (TPK-2) | Reasoning that dLMs support self-regulated learning. |
| TPK-x | Try out, explore, discover (TPK-3) | Reasoning that dLMs support exploration of learning content or discovery learning. |
| TPK-x | Differentiation and inclusion (TPK-4) | Reasoning that dLMs support inclusion and differentiation. |
| TPK-x | Teacher efficiency (TPK-5) | Reasoning that dLMs lead to lecture time savings or increase the efficiency of teachers by automating assessment or feedback. |
| TPK-x | Distraction of learners (TPK-6) | Reasoning that dLMs distract learners from the intended learning objective. |
| TPACK | Combination of TCK-x and TPK-x | Reasoning that includes both TCK-x and TPK-x reasoning. |

| Item No. | TPACK Component | Item Wording | Type of Item |
|---|---|---|---|
| 1 | Content knowledge | For which learner age do you think the presented digital learning material is suitable? | single-choice selection of a grade level ¹ |
| 2 | Content knowledge | In your opinion, is the presented digital learning material suitable for learners with special educational needs? If so, select one or none. | single-choice selection of (no) special need ² |
| 3 | Content knowledge | Describe the learning content for which you think the presented digital learning material is intended. | open-text item |
| 4 | TCK-x, TPK-x, and/or TPACK reasoning | For the specified grade level, special educational needs, and your description of the learning content of the presented dLM, justify why or why not you would select the presented digital learning material. | open-text item |

| Study | RQs | Size of Sample | Sample Size Per University (Austria/Germany) ¹ | dLMs Used in Study | Mean Processing Time of Task in Minutes |
|---|---|---|---|---|---|
| 1 | RQ 1.1 | 164 | 61/103 | dLM1–4 | 9.84 |
| 2 | RQ 2.x | 379 | 55/324 | dLM1 | 4.29 ² |

| Type of Assessment | Sem. 1, 2 (n = 57): M | Sem. 1, 2 (n = 57): SD | Sem. 3, 4 (n = 149): M | Sem. 3, 4 (n = 149): SD | Sem. 5, 6 (n = 71): M | Sem. 5, 6 (n = 71): SD | Sem. ≥ 7 (n = 102): M | Sem. ≥ 7 (n = 102): SD | Test Statistic | Effect Size |
|---|---|---|---|---|---|---|---|---|---|---|
| external ¹ | 1.37 ᵃ | 1.43 | 2.12 ᵇ | 1.60 | 2.27 ᵇ | 1.55 | 2.65 ᵇ | 1.51 | F(3, 375) = 8.51 | ηp² = 0.06 |
| self-report ² | 3.12 ᶜ | 0.86 | 3.27 ᶜ | 0.73 | 3.44 | 0.72 | 3.55 ᵈ | 0.76 | χ²(3) = 11.99 | |
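As a plausibility check on the effect size reported for the external assessment, partial eta squared can be recovered from the F statistic and its degrees of freedom through the standard one-way ANOVA identity ηp² = (F · df1) / (F · df1 + df2). The Python sketch below assumes the statistic is read as F with 3 and 375 degrees of freedom, which is what the four group sizes in the table (total 379) imply. The function name `partial_eta_squared` is hypothetical, and the check is not part of the authors' analysis.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Recover partial eta squared from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Group sizes as given in the table header (semesters 1-2, 3-4, 5-6, >= 7).
group_sizes = [57, 149, 71, 102]
n_total = sum(group_sizes)                 # total sample in the comparison
df_effect = len(group_sizes) - 1           # between-groups degrees of freedom
df_error = n_total - len(group_sizes)      # within-groups degrees of freedom

eta_p2 = partial_eta_squared(8.51, df_effect, df_error)

if __name__ == "__main__":
    print(n_total, df_effect, df_error)    # 379 3 375
    print(round(eta_p2, 2))                # 0.06
```

The recovered value agrees with the tabulated effect size to two decimals, and the degrees of freedom agree with the per-group sample sizes.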


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gonscherowski, P.; Lindenbauer, E.; Kaspar, K.; Rott, B. Assessing the Selection of Digital Learning Materials: A Facet of Pre-Service Teachers’ Digital Competence. Appl. Sci. 2025, 15, 6024. https://doi.org/10.3390/app15116024
