Abstract

The compiler serves as a bridge between hardware architecture and application software, converting source code into executable files and optimizing it. Fuzz testing is an automated testing technique that evaluates software reliability by feeding large volumes of random or mutated input to the target system in order to trigger abnormal program behavior. When existing fuzz testing methods are applied to compilers, they can detect common defects such as lexical and syntax errors, but they suffer from poorly targeted input corpus construction, limited support for manipulating structured Intermediate Representation (IR) nodes, and immature mutation strategies. This study proposes BoostPolyGlot, a deep fuzz testing framework for GCC compiler frontend IR generation. It covers more code-execution paths and improves code coverage by constructing an input corpus, translating programs with a master–slave IR translator, operating on IR nodes that carry structured program characteristics, and applying an IR mutation strategy with dynamic weight adjustment. The fuzz testing capability of BoostPolyGlot is evaluated with respect to dependency relationships, loop structures, and their synergistic effect. The experimental results show that, on five key performance indicators (total paths, count coverage, favored paths rate, new edges on rate, and level), BoostPolyGlot achieves statistically significant improvements over American Fuzzy Lop (AFL) and PolyGlot. These findings validate the effectiveness and practicality of the proposed framework.

1. Introduction

In the domain of computer science, compilers stand as pivotal enablers of code optimization and cross-platform programming. Compiler construction is a multifaceted process encompassing stages such as lexical analysis, syntax analysis, and semantic analysis. Lexical analysis converts the source program’s character stream into lexical units, whereas syntax analysis constructs an Abstract Syntax Tree (AST) from these units. Semantic analysis ensures that syntactically valid code adheres to language specifications at the semantic level, verifying proper variable usage and type consistency. Following these analyses, the compiler performs code optimization to enhance the performance of the generated target code, including reducing execution time and memory footprint. The optimization process employs numerous techniques, including constant folding, loop optimization, and function inlining. Notable compilers such as GCC not only translate source code into machine-executable files but also offer a suite of optimization options [1]. With ongoing advancements in compilation technology, compilers have achieved substantial breakthroughs in enhancing computing performance, security, and reliability. Nevertheless, due to the intricate nature of compiler architectures and the diversity of functional modules, improper handling of operations such as lexical analysis, syntax analysis, and semantic analysis may introduce compilation errors into the final executable program. These errors can precipitate critical security vulnerabilities during software execution, including memory leaks, buffer overflows, and logical flaws [2].

Fuzz testing [3,4] is a technique for uncovering software vulnerabilities by providing unintended inputs to a target system and monitoring for anomalous outcomes. Compilers take a large volume of directly generated or mutated source code as input, and runtime crashes or memory-detection errors often indicate compilation defects, including those inherent in the compiler itself. In recent years, the field of fuzz testing has witnessed significant progress. Generation-based fuzz testing methods have been developed for programs with highly structured input formats. These methods focus on generating test cases that are valid in terms of syntax and semantics, often using techniques such as input models, grammar-based generation, or machine learning-driven approaches. Mutation-based fuzz testing, on the other hand, typically starts with an initial corpus of test cases and generates new ones through random mutations. Modern mutation-based fuzzers have integrated more advanced techniques like symbolic execution and taint analysis to improve their effectiveness.

However, current compiler-focused fuzz testing faces three critical challenges. First, generating syntactically correct test cases remains problematic: every programming language adheres to fundamental grammatical rules, and syntax errors in source code trigger “syntax error” diagnostics from the compiler’s frontend parser, halting compilation. Second, ensuring semantic correctness is equally challenging: semantic analysis verifies context-dependent properties of syntactically valid code, such as variable-usage relationships and compliance with language specifications. Existing fuzzing frameworks lack contextual awareness during mutation, which can cause compilation to terminate. Both challenges lead to test cases being rejected during frontend processing, which prevents them from reaching the deeper optimization stages of compilation. Third, even when test cases pass lexical, syntactic, and semantic analysis, form an AST, are converted to Intermediate Representation (IR), and enter the optimization pipeline, current tools give little consideration to the characteristics of the optimization phase during generation and mutation. As a result, test cases fail to cover critical code effectively.

To address the above challenges, this study proposes a deep fuzzing framework named BoostPolyGlot, which is tailored for GCC compiler frontend IR generation and grounded in program feature analysis, IR node correlation, and dynamic feedback principles. By analyzing structured program characteristics, the framework employs a master–slave IR translator to establish IR node connections between programs and test cases. It dynamically adjusts the testing process through real-time evaluation and feedback from the fuzzer regarding test case performance and code coverage. Experimental results demonstrate that BoostPolyGlot effectively enhances code coverage during fuzz testing and explores more code-execution paths. The primary contributions of this work are as follows:

- A fuzz testing framework, BoostPolyGlot, is proposed for GCC compiler frontend IR generation. Programs with specific structured characteristics and initial test cases are taken as inputs. The framework constructs an input corpus, translates the inputs with a master–slave IR translator, extracts and inserts IR nodes with structured program characteristics, and applies an IR mutation strategy. Consequently, it can explore more code-execution paths and improve code coverage.

- The extraction and insertion operations of IR nodes with structured program characteristics are implemented. By conducting a detailed analysis of node attributes such as type, operator, and operand, suitable IR nodes are strategically selected and integrated into the IR structure of test cases, thereby constructing novel test cases that can more effectively trigger code-execution paths during the compilation process.

- An IR mutation strategy based on dynamic weight adjustment is proposed. Initial weights are assigned according to the program features and code-coverage rates of different test cases. The fuzzer monitors and adjusts the mutation strategy in real time, guides the mutation operations to be more inclined to the structures of test cases with high coverage rates, and effectively improves the effectiveness of test cases and the code-coverage rate.

- This study evaluates the fuzz testing performance of BoostPolyGlot by analyzing dependency relationships, loop structures, and their combined interactions. Empirical results show that compared with AFL and PolyGlot, BoostPolyGlot has achieved significant improvements in five key performance indicators, validating the effectiveness of the proposed framework.

The remainder of this manuscript is structured as follows. Section 2 provides a detailed review of related work, including different fuzz testing methods and their applications. Section 3 presents the materials and methods used in this study, including the construction of the input corpus, the design of the master–slave IR translator, IR node extraction and insertion, and the IR mutation strategy. Section 4 focuses on the results and discussion, evaluating the performance of BoostPolyGlot through experiments and analyzing the impact of dependency relationships, loop structures, and their synergistic effects. Finally, Section 5 concludes the study, summarizes the research findings, and outlines directions for future work.

2. Related Work

Fuzz testing is a widely used vulnerability-discovery technique. Randomly generated and mutated data are input into the program under test while monitoring its running state. Once a crash or other abnormal conditions occur, the relevant data are saved, and program vulnerabilities are identified through data analysis. Compared with other software security detection techniques such as code auditing, taint analysis, and symbolic execution, fuzz testing offers advantages of high automation, wide application scope, and ease of deployment and implementation. Moreover, tests can be conducted even without access to the source code. The progress of fuzz testing technology is primarily reflected in the advancements of generation-based and mutation-based fuzz testing methodologies. Existing research continues to enhance the efficiency and depth of vulnerability discovery through diverse strategies and algorithms.

2.1. Fuzz Testing Method Based on Generation

Generation-based fuzz testing is primarily applied to target programs with highly structured input formats, such as network protocols and JavaScript engines. These programs impose extensive format checks, in which input file fields adhere to specific syntax and semantics. Generation-based approaches efficiently generate test cases that are validated grammatically and semantically by parsers. For instance, MoWF [5] uses file format information as an input model, which enables test cases to pass the parser and probe deeper code paths. Jueon et al. [6] combined large language models with coverage-guided reinforcement learning for JavaScript engines to construct weighted coverage graphs via term frequency–inverse document frequency, compute fuzzing rewards, and generate test cases targeting novel coverage regions. Wang et al. [7] introduced a data-driven seed-generation method, which analyzes existing samples to learn probabilistic context-sensitive grammars for describing the syntax and semantics of structured inputs, thereby producing well-distributed seeds to enhance fuzzing effectiveness for such programs. Godefroid et al. [8] addressed the automated input grammar-generation challenge in grammar-based fuzzing by leveraging neural statistical learning. They constructed a PDF object-generation model using seq2seq networks for Microsoft Edge’s PDF parser, generating new test cases via diverse sampling strategies. Fan et al. [9] proposed an automated test case-generation method tailored for black-box fuzz testing of proprietary network protocols. The approach first captures the traffic of the proprietary network protocol, then uses LSTM and seq2seq models to learn the protocol format, and finally generates new test cases based on the learned format. 
The GANFuzz framework [10] employed generative adversarial networks to train generative models that learn real-world protocol grammars, thereby producing diverse and format-compliant test cases to address the protocol grammar-dependency issue in traditional industrial network protocol fuzzing.

When the program under test is closed-source, it may be difficult to obtain the syntax structure and construct an input-generation model using the methods mentioned above. However, static analysis, dynamic taint analysis, and machine learning techniques can be used to infer the input structure from the initial seed corpus. Osbert et al. [11] constructed a context-free grammar of the language through a set of test cases and black-box access to the binary file under test, and used this grammar to fuzz-test programs with structured inputs. However, during corpus construction, they did not optimize the initial corpus to adapt to different program characteristics. Viide et al. [12] proposed a model inference technique to assist in fuzz testing, which can be applied to any sample input and automatically generate test cases in a black-box manner. This technique has discovered multiple critical vulnerabilities in the fuzz testing of multi-file formats. Matthias et al. [13] used dynamic taint analysis to generate a readable and structurally accurate input grammar. This grammar not only allows for simple reverse-engineering of the input format but can also serve as the input for a test generator. KLEE [14] applies symbolic execution to programs compiled into LLVM bytecode. During execution, it records path conditions and uses a constraint solver to generate test cases. It also employs compact state representation, query optimization, and state scheduling to check complex programs in depth.

Enhancing the semantic correctness of generated test cases empowers fuzzers to uncover deeper errors in language processors. HyungSeok et al. [15] developed a semantics-aware program assembly approach to generate JavaScript test cases that are both syntactically and semantically valid. Csmith [16] generated fully correct C test cases by analyzing language-specific features. Lidbury et al. [17] built upon Csmith to develop CLsmith, a tool compliant with OpenCL syntax. While retaining the semantic generation model of Csmith, CLsmith modifies the original strategy by inserting or deleting dead code. Unlike Csmith, YARPGen [18] generates code snippets using pre-defined grammar-generation rules. Dewey et al. [19] employed constraint logic programming to specify grammatical and behavioral semantics for the generation of test cases, yielding higher-quality test cases compared to random generation. Xia et al. [20] utilized large language models as input generation and mutation engines, leveraging automated prompting and LLM-supported fuzzing loops to generate diverse and semantically compliant test cases.

2.2. Fuzz Testing Method Based on Mutation

Unlike generation-based fuzzers, mutation-based fuzzers typically require an initial corpus to operate, generating new test cases through random mutations of existing ones. When a test case triggers a novel execution path that uncovers previously unknown program behavior, it is retained for further mutation. This iterative process enables fuzzers to rapidly explore deeper program states and uncharted execution paths.
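The retain-on-new-coverage loop described above can be illustrated with a minimal sketch. It is not the implementation of any particular fuzzer: `run_target` is a hypothetical callback that returns the set of edge identifiers an input exercises, and the single bit flip stands in for a real mutation stage.

```python
import random


def flip_random_bit(data: bytes, rng: random.Random) -> bytes:
    """Return a copy of data with one randomly chosen bit flipped."""
    if not data:
        return data
    buf = bytearray(data)
    pos = rng.randrange(len(buf) * 8)
    buf[pos // 8] ^= 1 << (pos % 8)
    return bytes(buf)


def fuzz_loop(seeds, run_target, iterations, rng=None):
    """Mutate corpus entries and keep mutants that reach unseen edges.

    run_target(data) is an assumed interface: it returns a set of
    hashable edge IDs covered when the target processes `data`.
    """
    rng = rng or random.Random(0)
    corpus = list(seeds)
    seen = set()
    for seed in corpus:
        seen |= run_target(seed)
    for _ in range(iterations):
        parent = rng.choice(corpus)
        child = flip_random_bit(parent, rng)
        edges = run_target(child)
        if not edges <= seen:  # at least one edge is new
            seen |= edges
            corpus.append(child)
    return corpus, seen
```

With a toy target such as `run_target = set` (each distinct byte value counts as one edge), the corpus grows only when a mutant contains a byte value not seen before.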

The fuzzing tool American Fuzzy Lop (AFL) [21] employed coverage feedback to guide random bit-flipping mutations. However, simple bit-flipping often failed grammatical, semantic, and logical checks, resulting in discarded inputs and reduced effectiveness. To address this, modern fuzzers have integrated symbolic execution or taint analysis. Driller [22] generated new test cases from valid inputs and used symbolic execution to explore novel code paths at strategic locations. QSYM [23] optimized hybrid execution through instruction-level symbolic execution, relaxed constraint solving, and basic block trimming to reduce overhead while adjusting inputs to trigger vulnerabilities. Angora [24] solved path constraints without symbolic execution via scalable byte-level taint analysis, context-sensitive branch counting, and gradient descent-based search, and generated high-quality mutants. VUzzer [25] used dynamic taint analysis to identify mutation targets, directing exploration of deeper code paths but lacking runtime-adaptive mutation strategies. To enhance efficiency, researchers have developed optimized feedback-guided approaches. AFLGo [26] introduced directed greybox fuzzing, prioritizing seeds with minimal distance between basic blocks and target points, and outperformed directed whitebox and undirected greybox methods. Ijon [27] annotated internal program state data to guide test case mutations. Peng et al. [28] proposed T-Fuzz, a fuzz testing tool that generates test cases based on genetic algorithms. It selects a subset of existing test cases as “parents” and generates “offspring” through operations such as crossover and mutation.

However, in scenarios requiring highly structured input data, for example, adherence to specific file formats or protocol specifications, the aforementioned mutation-based fuzzers may fail to generate valid test cases that meet the requirements. Current fuzzer research emphasizes structural analysis of target programs to produce test cases aligned to such formats or specifications. GRIMOIRE [29] employed combinatorial operations resembling program syntax structures for large-scale mutations, generating highly structured test cases. Nevertheless, large-scale mutations risk overlooking internal program structural correlations, compromising mutation effectiveness for complex programs. Superion [30] is a grammar-aware coverage-based greybox fuzzing approach that segments test cases prior to mutation while preserving input validity, facilitating deeper fuzzing exploration. Samuel et al. [31] constructed JavaScript-specific IRs and used Fuzzilli to generate semantically valid test cases. Squirrel [32] designed SQL-specific IRs for mutation and semantic analysis, supporting operations such as statement insertion, deletion, and replacement. PolyGlot [33] eliminated syntactic and semantic disparities across programming languages via a unified IR, thereby performing IR mutation and semantic validation to ensure correctness.

In generation-based fuzz testing, existing approaches are beset by inherent limitations. For instance, some tools are constrained by specific syntactic rules, thereby lacking adaptability to diverse scenarios and complex operations, and struggling to generate test cases that accommodate varied program characteristics and edge cases. Inadequate consideration of dynamic program states and intricate logic further restricts test case diversity. In contrast, mutation-based methods rely heavily on initial corpora for random mutations, leading to inflexible mutation strategies that fail to adapt to runtime program behavior or overlook internal structural correlations. These issues compromise mutation effectiveness for complex programs and hinder the generation of valid test cases for highly structured inputs. Additionally, current fuzzing techniques inadequately account for the program structural characteristics of GCC compiler frontend IR generation phase, with limited focus on IR translators, insertion, and extraction operations. This deficiency results in frequent syntactic errors during the generation of test cases, thereby undermining the validity of test cases and the precision of targeting, and ultimately restricting improvements in code coverage and the depth of path exploration.

3. Materials and Methods

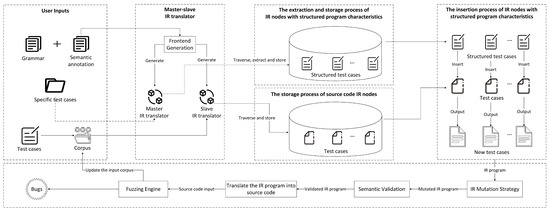

Existing fuzz testing methods predominantly focus on the compiler frontend, effectively detecting issues in syntax and semantic analysis. However, research on GCC compiler frontend IR generation, a crucial part of the compilation process, remains relatively scarce. Although the frontend is well explored, overlooking the intricacies of IR generation can lead to vulnerabilities. If flaws exist in frontend IR generation, malicious actors could exploit them. For example, they might manipulate function call relationships, alter data structures, or insert hidden logic in the generated IR. This could cause the program to perform malicious actions at runtime, such as data theft, disruption of system functions, or execution of remote attack commands. Based on the characteristics of programming languages and the requirements of frontend IR generation, this study proposes BoostPolyGlot, a structured IR generation-based fuzz testing framework for the GCC compiler frontend, as depicted in Figure 1.

Figure 1. A structured IR generation-based fuzz testing framework BoostPolyGlot.

First, the Backus-Naur Form (BNF) grammar and semantic annotations of the target programming language are provided. Additionally, a set of C programs with structured program characteristics and the initial test cases stored in the input corpus are supplied as inputs. The C programs with structured program characteristics contain various dependency relationships and loop structures, while the initial test cases encompass multiple types of C programs, including conditional statements and function calls. Second, this study designs a master–slave IR translator. This translator is responsible for translating the source code of C programs with structured program characteristics and test cases into corresponding programs composed of several IR nodes. Subsequently, the converted IR nodes are classified and stored. The IR nodes with structured program characteristics converted by the master IR translator are extracted and stored in one container, and the test case IR nodes converted by the slave IR translator are stored in another container. This separate storage mechanism allows the IR nodes in the two containers to be processed independently. Because the IR nodes share a common format and are therefore compatible, insertion positions can be determined according to the structure and logical relationships of the test case IR nodes. This enables the insertion of IR nodes with structured program characteristics into the test case IR nodes. The new test cases after insertion have more complex program characteristics and can cover a wider range of code paths. Then, the IR undergoes IR mutation and semantic correction in sequence. In the IR mutation phase, initial weights are assigned according to the code-coverage rates corresponding to test cases with different program characteristics. The fuzzer monitors and analyzes the test cases being mutated in real time. 
Then, it adjusts the mutation strategy in real time based on the characteristics of test cases with higher code-coverage rates, increasing the weight of generating test cases with such structural characteristics. In the semantic correction phase, a semantic checking tool conducts a semantic check on the mutated IR program to detect and correct semantic errors that may be caused by the mutation. Finally, the IR program of the new test case is converted back into source code form and passed as input to the fuzz testing engine for testing and analysis. During the fuzz testing process, test cases that trigger new code paths and have high coverage rates will be added to the input corpus. This process is executed iteratively until the preset test termination conditions, such as limited test time, are met.
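To make the dynamic weight adjustment concrete, the following is a minimal sketch rather than the framework's actual scheduler. The class name, the feature labels, and the exponential-moving-average update rule are all assumptions introduced for illustration; the text only states that weights start from coverage rates and shift toward structures with higher coverage.

```python
import random


class WeightedMutationScheduler:
    """Choose which structural feature to mutate toward, favoring
    features whose test cases have achieved higher coverage."""

    def __init__(self, initial_coverage, learning_rate=0.5, floor=0.01):
        # initial_coverage maps a feature name to a coverage rate in [0, 1].
        # The floor keeps every feature selectable (an assumed design choice).
        self.weights = {f: max(c, floor) for f, c in initial_coverage.items()}
        self.learning_rate = learning_rate
        self.floor = floor

    def choose(self, rng):
        """Sample one feature with probability proportional to its weight."""
        features = list(self.weights)
        weights = [self.weights[f] for f in features]
        return rng.choices(features, weights=weights)[0]

    def feedback(self, feature, coverage):
        """Move the feature's weight toward the latest observed coverage
        (exponential moving average, assumed update rule)."""
        old = self.weights[feature]
        new = (1 - self.learning_rate) * old + self.learning_rate * coverage
        self.weights[feature] = max(new, self.floor)
```

For example, starting from equal weights for hypothetical features `"loop"` and `"dependency"`, a call to `feedback("loop", 0.8)` raises the weight of `"loop"`, so later calls to `choose` select loop-oriented mutations more often.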

3.1. Construction of Input Corpus

During the fuzz testing process, the input corpus is the initial step and plays a crucial role in determining the depth and breadth of subsequent testing procedures. Constructing an appropriate input corpus exposes the compiler to diverse inputs during testing, thus enhancing the testing efficiency and code-coverage rate. The input corpus refers to the set of initial test cases constructed prior to conducting fuzz testing. It encompasses representative data types, different data scales, and diverse input formats. During the fuzz testing process, initially, various initial test cases from the input corpus are used as inputs for the compiler. Test cases that pass both syntax analysis and semantic analysis will be retained in the input corpus as qualified initial test cases, whereas those that fail the analysis will be discarded.
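The retention rule in this paragraph can be sketched as a simple filter. This is an illustrative assumption about how such a check could be wired up, not the authors' tooling: `gcc_accepts` shells out to GCC with the `-fsyntax-only` option and treats a zero exit status as acceptance, and `filter_corpus` takes the check as a parameter so that another frontend check can be substituted.

```python
import subprocess
import tempfile
from pathlib import Path


def gcc_accepts(source: str, compiler: str = "gcc") -> bool:
    """Return True if the compiler frontend accepts the C source.

    Assumes `compiler` is on PATH; -fsyntax-only stops after the
    frontend checks, so no object file is produced.
    """
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "case.c"
        path.write_text(source)
        result = subprocess.run(
            [compiler, "-fsyntax-only", str(path)],
            capture_output=True,
        )
    return result.returncode == 0


def filter_corpus(cases, accepts=gcc_accepts):
    """Keep only the test cases that the frontend check accepts."""
    return [case for case in cases if accepts(case)]
```

Passing a different `accepts` callable makes the filter usable without a compiler installed, for instance in unit tests.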

When initially performing fuzz testing on all test cases in the input corpus, we comprehensively collect their coverage information and calculate the coverage rate C. We adopt a coverage collection method based on code instrumentation, inserting specific code snippets into the compiler to record the code regions covered during the execution of test cases. These instrumentation points are distributed at key locations in the program, including function entry and exit points, conditional branches, and loop bodies, to accurately capture the code-execution status.

We then compare the coverage rate C with a preset threshold T. When C < T, the input corpus has not met the expected requirements. In this case, we adjust the scale of the input corpus or replace test cases, and then conduct the next round of fuzz testing and re-collect the coverage information. The adjustment is driven by the cause of the shortfall: if certain types of test cases are missing and this results in a low coverage rate, we supplement those types in a targeted manner. For example, if operations on a specific data structure, such as multi-dimensional arrays, are rarely exercised by the test cases, we add test cases that include such operations. Conversely, when C ≥ T, the input corpus has met the requirements and can be used as the input corpus for subsequent fuzz testing.
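The comparison of the coverage rate C with the threshold T can be sketched as follows. The function names, the `measure` callback, and the ordered list of supplementary test cases are hypothetical; the text does not prescribe how supplements are chosen, so this sketch simply adds them one at a time and re-measures.

```python
def coverage_rate(covered_points, all_points):
    """C = fraction of instrumentation points hit by the corpus."""
    all_points = set(all_points)
    if not all_points:
        return 0.0
    return len(set(covered_points) & all_points) / len(all_points)


def build_corpus(corpus, measure, all_points, threshold, supplements):
    """Extend the corpus until C >= T or no supplements remain.

    measure(corpus) is an assumed callback returning the set of
    instrumentation points covered when the corpus is executed.
    """
    corpus = list(corpus)
    pending = list(supplements)
    rate = coverage_rate(measure(corpus), all_points)
    while rate < threshold and pending:
        corpus.append(pending.pop(0))  # targeted supplement
        rate = coverage_rate(measure(corpus), all_points)
    return corpus, rate
```

The loop stops as soon as the threshold is reached, so the corpus is not grown beyond what the coverage requirement calls for.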

3.2. Master–Slave IR Translator

Chen et al. [33] utilized a frontend generator to create an IR translator, enabling the conversion of source programs into IR programs. Inspired by this approach, this study designs a master–slave IR translator to separately translate the source code of C programs with structured characteristics and test case programs into IR programs. This design facilitates the insertion of IR nodes with structured program characteristics into test case IRs at the IR level, thereby supporting deeper exploration of the GCC compiler frontend IR generation phase.

The generation of a master–slave IR translator must integrate the BNF grammar and semantic annotations of the target programming language to ensure accurate parsing of syntactic structures and semantic information during translation. First, the frontend generator treats different symbols in the BNF grammar as distinct objects, forming the basic elements of the language’s syntactic rules. These symbols, categorized by priority, data type, and other attributes into keywords, operators, constants, and more, collectively describe the programming language’s syntax. Next, by analyzing semantic annotations, the frontend generator determines the semantic attributes of each symbol, including the data type it represents, variable scopes, and semantic relationships between symbols. Finally, based on syntactic and semantic rules, the frontend generator produces unique parsing and translation strategies for each object. These strategies strictly follow predefined code conversion rules, error-handling mechanisms, and performance-optimization protocols to generate the master–slave IR translator, enabling accurate translation of source programs.

Compared to traditional IR translators, the master–slave IR translator demonstrates higher translation efficiency in handling specific data structures such as multidimensional arrays. For instance, in the case of multidimensional arrays, the master translator optimizes memory allocation based on their unique memory layout, ensuring compact storage of array elements to reduce memory access span and latency. By analyzing memory mapping patterns during array access, it categorizes and optimizes access modes under different data characteristics, adjusting data-storage structures and read orders to minimize computation time and enhance processing efficiency. Concurrently, the slave translator verifies boundary conditions across all dimensions of multidimensional arrays to prevent out-of-bounds access errors during testing. It validates array initialization and assignment operations to ensure data correctness and conducts random input testing on programs involving multidimensional arrays. This process records program stability and correctness under diverse inputs, addresses potential edge cases, and improves test coverage and reliability.

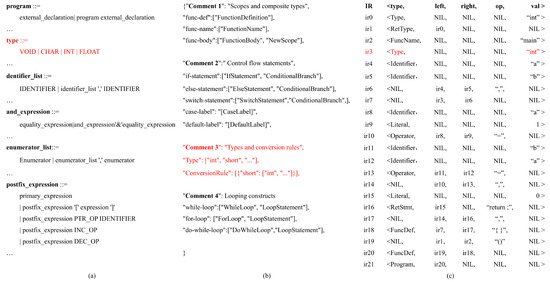

In this study, a master–slave IR translator is utilized to translate the source program into IR program nodes, which include sequence (IR), type (type), no more than two operands (left, right), operator (op), and value (val). Here, the IR sequence and type correspond to the statement sequence of the source code and the symbols in the BNF grammar respectively. The IR operator and IR value store the corresponding source code of the program. All IRs are connected by IR operands. The two operands, left and right, serve as bridges to connect different IRs, enabling the accurate expression of the structure and logical relationships of the source program. Figure 2 shows an example of the BNF grammar, semantic annotations, and the IR program when the source program is , demonstrating how to construct the IR of a program using the BNF grammar and semantic annotations. Figure 2a presents the BNF grammar of the C language. When converting the source program according to the BNF grammar rules, each symbol generates a unique type of IR, and these symbols represent keywords, operators, identifiers, constants, etc. For example, when processing the variable declaration statement using the rule “<type>::=VOID|CHAR|INT|FLOAT|…”, will be recognized as the “INT” type in “<type>” and generate the corresponding IR. Figure 2b shows the semantic annotations of the BNF grammar rules. For example, “Type”: [“int”, “short”, “...”] explains the “type” defined in the BNF grammar, where “int” represents the integer type, “short” represents the short-integer type, etc. “ConversionRule” [“short”: [“int”, “...”]] gives the information that, under specific context constraints, the “short” type can be converted to types such as “int”. Figure 2c shows the IR program converted by the master–slave IR translator based on the BNF grammar rules and semantic annotations. 
The “Type” of ir3 corresponds to the grammar rules in Figure 2a and the semantic annotations in Figure 2b, and the value (val) of ir3 is “int”. That is, the “int” in ir3 conforms to a legal type definition in the BNF grammar “<type>::=VOID|CHAR|INT|FLOAT|…” and is also one of the valid types in the semantic annotation “Type”: [“int”, “short”, “...”].

Figure 2. An example IR program with its corresponding BNF grammar and semantic annotations. (a) Part of the BNF grammar for C programs. (b) Part of semantic annotations for the BNF grammar. (c) An example IR program after conversion by master–slave IR translation.
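The IR node layout described in this section (sequence, type, at most two operands, operator, and value) can be sketched as a small data structure. The field names follow the text, but the class, the `to_source` helper, and the example declaration `int a = 1` are assumptions for illustration and are not taken from Figure 2.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class IRNode:
    seq: int                        # position in the IR sequence
    type: str                       # BNF symbol this node was built from
    left: Optional[IRNode] = None   # first operand (link to another IR)
    right: Optional[IRNode] = None  # second operand (link to another IR)
    op: str = ""                    # source text joining the operands
    val: str = ""                   # source text held by a leaf node


def to_source(node: Optional[IRNode]) -> str:
    """Rebuild source text by walking left operand, operator, right operand."""
    if node is None:
        return ""
    if node.val:
        return node.val
    parts = [to_source(node.left), node.op, to_source(node.right)]
    return " ".join(p for p in parts if p)


# Hypothetical IR for the declaration `int a = 1`.
type_node = IRNode(1, "type", val="int")
name_node = IRNode(2, "identifier", val="a")
const_node = IRNode(3, "constant", val="1")
init_node = IRNode(4, "init_declarator", left=name_node, right=const_node, op="=")
decl_node = IRNode(5, "declaration", left=type_node, right=init_node)
```

Because every node links to at most two others, inserting an extracted subtree into a test case reduces to re-pointing one `left` or `right` operand, and `to_source(decl_node)` converts the result back to source text.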

3.3. Structured Program Characteristics IR Extraction

To enhance the code coverage of test cases, this study uses the IR node set obtained by translating structured program cases with the master IR translator as the foundational data source. It systematically extracts IR nodes with structured program characteristics, including loop structures and dependency relationships, which serve as insertion nodes for test cases.

When extracting IR nodes with loop structures, the following steps are carried out. First, the IR nodes marking the start and end of the loop are identified to determine the loop scope. Then, for the expression nodes of the loop condition, by examining the changes of variables in the expression and the types of conditional operators, the boundary values and changing trends of the number of loop executions under different test scenarios are determined. Meanwhile, an analysis is conducted to check if there is a potential risk of an infinite loop caused by complex conditions. Regarding the loop variable nodes, it is necessary to track their definition nodes, initialization nodes, and update nodes in the program. The definition node represents the position and basic type information of the loop variable in the program structure. The initialization node indicates the initial value of the loop variable participating in operations, and the update node reflects its changing pattern in each loop iteration. Through the analysis of these nodes, the changing range of the loop variable is determined. Finally, the nodes performing operations inside the loop body are analyzed to identify the relevant nodes that change according to the loop variable or the loop condition. These nodes related to the loop structure are then extracted.

Assume that the IR node set translated by the main IR translator is denoted as . Each () represents an independent IR node, encompassing various semantic and structural information of the program at the IR level. Meanwhile, the ancestor node of all IR nodes with structured program characteristics (which need to be extracted) is defined as . The feature judgment function for each node in the set IR is defined, as shown in Equation (1):

When extracting IR nodes with dependency relationships, the following steps are taken. First, program variables and their operational logic are determined by analyzing the roles of function parameters in variable assignment and expression construction, as well as the usage patterns of function return values, thereby identifying dependency relationships among program elements. Second, a dependency relationship graph is constructed using program nodes as vertices and dependency relationships as edges, which intuitively presents the program’s dependency structure. Finally, nodes on dependency paths are extracted based on the dependency relationship graph. These nodes include those directly embodying dependency relationships and those related to variable type declarations and initialization value settings, ensuring the complete extraction of all elements associated with dependency relationships. The extraction algorithm for IR nodes with structured program characteristics is shown in Algorithm 1.

| Algorithm 1 Extract structured program characteristics IR. |

| Input: Array |

| Output: The array to be extracted |

| 1. begin |

| 2. int ;// Statistical array length |

| 3. ;// is the entry of the node to be extracted |

| 4. ;// is the end node of the node to be extracted |

| 5. for to |

| 6. //Find the entry of the node to be extracted |

| 7. if == then |

| 8. return ; |

| 9. end if |

| 10. end for |

| 11. end |
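As a rough illustration of the extraction idea, the sketch below marks every node in the subtree rooted at a chosen ancestor node with the value 1 and collects those nodes. The `IRNode` class, the node names, and the tree shape are hypothetical. The feature judgment function is assumed to return 1 exactly for the ancestor node and its descendants, which is one plausible reading of the text rather than the exact definition of Equation (1).

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class IRNode:
    name: str
    children: List["IRNode"] = field(default_factory=list)


def preorder(node: IRNode) -> List[IRNode]:
    """Return the node and all of its descendants in pre-order."""
    nodes = [node]
    for child in node.children:
        nodes.extend(preorder(child))
    return nodes


def find(root: IRNode, name: str) -> Optional[IRNode]:
    """Locate the entry (ancestor) node of the fragment to be extracted."""
    for node in preorder(root):
        if node.name == name:
            return node
    return None


def extract(root: IRNode, ancestor_name: str) -> List[IRNode]:
    """Collect every node whose (assumed) feature judgment value is 1."""
    ancestor = find(root, ancestor_name)
    if ancestor is None:
        return []
    selected = {id(n) for n in preorder(ancestor)}

    def feature(node: IRNode) -> int:
        return 1 if id(node) in selected else 0

    return [n for n in preorder(root) if feature(n) == 1]


# Hypothetical tree: a function containing a declaration and a loop.
tree = IRNode("func", [
    IRNode("decl"),
    IRNode("loop", [IRNode("cond"), IRNode("body")]),
])
extracted_names = [n.name for n in extract(tree, "loop")]
```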

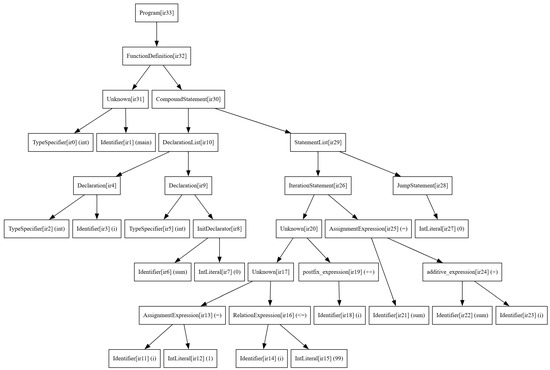

Figure 3 illustrates the source code of a structured program case and the abstract syntax tree generated by the master–slave IR translator. IR nodes are derived from the syntactic structure and semantic information of the source code, forming an IR node set denoted as . For example, the left and right children of constitute the initial representation structure of the function at the IR level, reflecting the transformation logic from the function definition in the source code to IR nodes. The left child of defines the variable , which is an abstract representation of the variable declaration part in the source code. The left child of contains IR nodes related to the loop structure . Within the loop body, the value of changes based on the result of the previous loop iteration, and each iteration may lead to different execution paths. When extracting IR nodes with structured program characteristics, first, determine that , which includes the loop structure range and loop content, is the loop representation node. Then, analyze the loop condition expression , where i is the loop variable, and defines the number of iterations. The definition node of the loop variable i is in the left child node of , determining its relative position and type information in the abstract syntax tree. The initialization node indicates the starting value, and the update node reflects its change pattern in each iteration. Finally, analyze in the loop body. The node of this calculation expression is directly related to the loop variable i. Since sum is updated with the change of i, these nodes are closely related to the loop structure. Through node screening and analysis, the ancestor node to be extracted is determined. Combined with the feature judgment function, finally, assign the value 1 to the IR nodes – that meet the conditions and extract them.

Figure 3.

The abstract syntax tree of a structured program case after being translated by the master–slave IR translator.

3.4. Structured Program Characteristics IR Insertion

When conducting fuzz testing, based on the extracted IR nodes with structured program characteristics, performing insertion operations at the IR level can introduce new IR nodes, explore more potential program behaviors, and further enhance code coverage.

For node insertion into IR with structured program characteristics, the following steps are taken: First, analyze the test cases and IR nodes with structured characteristics. Second, traverse the abstract syntax tree of test cases to identify potential insertion nodes based on semantic matching, control flow, and data dependencies. Then, carry out a weighted comprehensive evaluation of the relevant characteristics of multiple insertion nodes to select the final insertion node and execute the insertion operation. The specific procedures are as follows:

Step 1: Analyze the test case and IR nodes with structured characteristics. Denote the abstract syntax tree of the test case as . Each IR node represents a grammatical structure element, with leaf nodes marked as . The relevant attributes of leaf nodes are as follows: the grammatical structure level indicates the node’s depth in the abstract syntax tree, the data type denotes the type involved in the node, and the predecessor node set and successor node set are used to determine the leaf node’s position in the control flow. Denote the abstract syntax tree composed of IR nodes with structured characteristics as , with the entry node and exit node labeled as and , respectively.

Step 2: Conduct depth-first traversal starting from the root node, recursively visiting each IR node. When traversing to a node satisfying , perform the following checks to determine whether it is a suitable candidate insertion point:

Semantic matching check. Construct the semantic feature vector of the leaf node , denoted as , where each represents a semantic dimension. The semantic feature vector of the entry node is . Measure the semantic difference between them using the semantic distance function , as shown in Equation (2):

If its value is less than the preset semantic threshold , it indicates that the leaf node and the entry node are compatible.

Control flow check. For the leaf node , calculate the control flow path lengths and from the root node to each predecessor node and successor node , respectively. Determine the longest control flow path length of and the original control flow path length from to . Define the control flow insertion impact function , which evaluates the impact on the control flow of based on the structure of and the changes in the control flow after insertion. If , then is a potential insertion point in terms of control flow.

Data-dependency check. The data-dependency set of the leaf node is denoted as , and the data-dependency set of IR nodes with structured program characteristics is denoted as . For each data element pair , the conflict judgment function is defined, as shown in Equation (3). If there is a read-write operation conflict between and , then ; otherwise, it is 0.

When its value is 0, it indicates that the insertion requirement is satisfied. All leaf nodes that pass the check are added to the leaf node insertion point set.
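The three checks can be sketched as a filter over leaf nodes. Several details here are assumptions made only for illustration: the semantic distance is taken to be Euclidean, the control-flow impact is treated as a precomputed number compared against an upper limit, and a conflict is defined as one side reading a variable that the other side writes. The names `Leaf`, `candidate_leaves`, `theta`, and `flow_limit` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Set, Tuple

Access = Tuple[str, str]  # (variable name, "r" for read or "w" for write)


@dataclass
class Leaf:
    name: str
    features: Sequence[float]  # semantic feature vector (hypothetical encoding)
    flow_impact: float         # precomputed control-flow impact of inserting here
    deps: Set[Access]          # data-dependency set of the leaf


def semantic_distance(u: Sequence[float], v: Sequence[float]) -> float:
    # Euclidean distance is an assumed choice of distance function.
    return math.dist(u, v)


def conflict(a: Access, b: Access) -> int:
    # 1 when both accesses touch the same variable and one reads while the other writes.
    return 1 if a[0] == b[0] and {a[1], b[1]} == {"r", "w"} else 0


def candidate_leaves(leaves: List[Leaf], entry_features: Sequence[float],
                     fragment_deps: Set[Access], theta: float,
                     flow_limit: float) -> List[Leaf]:
    """Keep only the leaves that pass all three checks."""
    passed = []
    for leaf in leaves:
        if semantic_distance(leaf.features, entry_features) >= theta:
            continue  # semantic matching check failed
        if leaf.flow_impact > flow_limit:
            continue  # control flow check failed
        if any(conflict(a, b) for a in leaf.deps for b in fragment_deps):
            continue  # data-dependency check failed
        passed.append(leaf)
    return passed
```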

Step 3: When there exist multiple leaf-node insertion points, a comprehensive evaluation of these insertion points is required. Specifically, semantic matching ensures the logical correctness of the program. If the semantics of the insertion point do not match the context, it may lead to abnormal program execution. Abnormal control flow can trigger issues such as infinite loops and disordered program-execution order. Data dependency imposes associative constraints on data. If the data-dependency relationship is disrupted by unexpected factors, it will cause data errors or loss, and in severe cases, lead to abnormal program functionality or system crashes.

Therefore, this section calculates the comprehensive evaluation score for each , and selects the node with the highest Score value as the final insertion point, as shown in Equation (4):

where , and Q and R are the number of elements in and , respectively. Through this calculation method, a weighted comprehensive evaluation of semantic matching degree, control-flow stability, and data-dependency security can be achieved.
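A weighted evaluation of this kind might look like the following sketch. The exact form of the score is not recoverable from the text, so the version here is an assumption: each of the three criteria is mapped to a value where larger is better, the conflict count is normalized by the number of element pairs Q × R, and the three terms are combined with hypothetical weights `(0.4, 0.3, 0.3)`.

```python
from typing import Dict, Tuple


def insertion_score(distance: float, theta: float,
                    flow_impact: float, flow_limit: float,
                    conflicts: int, q: int, r: int,
                    weights: Tuple[float, float, float] = (0.4, 0.3, 0.3)) -> float:
    """Combine semantic, control-flow, and data-dependency terms (assumed form)."""
    w_sem, w_cf, w_dep = weights
    semantic = 1.0 - distance / theta          # closer semantics score higher
    control = 1.0 - flow_impact / flow_limit   # smaller control-flow impact scores higher
    pairs = q * r
    dependency = 1.0 - (conflicts / pairs if pairs else 0.0)
    return w_sem * semantic + w_cf * control + w_dep * dependency


def pick_best(candidates: Dict[str, dict]) -> Tuple[str, float]:
    """Return the candidate with the highest score."""
    scored = {name: insertion_score(**args) for name, args in candidates.items()}
    best = max(scored, key=scored.get)
    return best, scored[best]
```

For two hypothetical candidates, the one with the smaller semantic distance and no conflicts wins even though its control-flow impact is slightly larger, which shows how the weights trade the three criteria against one another.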

Step 4: Execute the insertion of IR nodes with structured characteristics into the test case. Establish control-flow connections between the entry node of and the final insertion point . Meanwhile, connect the exit node of to the successor node of the final insertion point based on control-flow logic and data-dependency relationships. This ensures the syntactic and semantic correctness of the new abstract syntax tree, thus achieving the insertion of IR nodes with structured characteristics and improving code coverage.

3.5. IR Mutation Strategy

During the IR mutation phase, a mutation strategy based on dynamic weight adjustment is adopted to generate test cases with stronger code-coverage capabilities. This strategy first calculates code coverage based on program characteristics and determines the initial weights of mutation types. Subsequently, it employs a fuzzer to monitor and evaluate test cases in real time during the mutation process, constructing a new weight table. Finally, optimal weight values are screened out based on the normalization results of the initial weight table and the outcomes of the new weight table, enabling effective adjustment of the mutation strategy. The specific procedures are as follows:

First, initial weight allocation is carried out according to the code coverage corresponding to test cases with different program characteristics. Let the test case set be . For each test case , the calculation of code coverage is shown in Equation (5):

Here, is the statement-coverage ratio, which represents the ratio of the number of covered statements during the execution of to the total number of statements in the program. denotes the branch-coverage ratio, namely the ratio of the number of branches covered by to the total number of branches in the program. is the condition-coverage ratio, serving as a measurement for the coverage of conditional expressions. are corresponding weight coefficients, with , which are used to adjust the weights of different coverage types in the calculation. For each in the mutation type set , the calculation of its initial weight is shown in Equation (6):
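The coverage calculation described above is a weighted sum of three ratios whose coefficients add up to 1. The sketch below follows that description. The function name, the parameter names, and the default coefficients `(0.5, 0.3, 0.2)` are hypothetical, since the original symbols and values are not available.

```python
from typing import Tuple


def coverage(stmt_hit: int, stmt_total: int,
             branch_hit: int, branch_total: int,
             cond_hit: int, cond_total: int,
             weights: Tuple[float, float, float] = (0.5, 0.3, 0.2)) -> float:
    """Weighted sum of statement, branch, and condition coverage ratios."""
    alpha, beta, gamma = weights
    if abs(alpha + beta + gamma - 1.0) > 1e-9:
        raise ValueError("weight coefficients must sum to 1")

    def ratio(hit: int, total: int) -> float:
        return hit / total if total else 0.0

    return (alpha * ratio(stmt_hit, stmt_total)
            + beta * ratio(branch_hit, branch_total)
            + gamma * ratio(cond_hit, cond_total))
```

For instance, with 80 of 100 statements, 30 of 60 branches, and 10 of 40 conditions covered, the default coefficients give 0.5 × 0.8 + 0.3 × 0.5 + 0.2 × 0.25 = 0.6.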

Here, is an indicator function, used to establish the association between the test case and the mutation type . When the test case contains the characteristics of the mutation type, ; otherwise, . The numerator is a weighted sum of the code-coverage rates of all test cases that contain the characteristics of the mutation type . Here, the indicator function serves as a screening mechanism. Only when the test case contains the characteristics of the mutation type , the code-coverage rate of this test case will be included in this summation operation. This means that when calculating the weight of the mutation type , only the coverage situations of those test cases that have actually applied this mutation type are taken into account. The denominator represents a comprehensive summation of the code-coverage rates of test cases corresponding to all mutation types. It traverses all mutation types , as well as all test cases under each mutation type, and accumulates the code-coverage rates of test cases that meet the conditions of the corresponding indicator function. Through this calculation method, the resulting initial weight reflects the relative importance of the mutation type among all mutation types. In practical testing, if a mutation type is applied in test cases that can achieve a relatively high code-coverage rate, then the corresponding weighted sum in the numerator will be large. Given a fixed denominator, the initial weight of this mutation type will be relatively high. By determining the initial weights of mutation types based on the code coverage of test cases, subsequent tests can preferentially execute mutation operations with high weights according to the weight relationships, effectively optimizing test paths.
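The initial weight allocation can be sketched directly from this description: the numerator sums the coverage of the test cases that exhibit a mutation type, and the denominator sums the same quantity over all mutation types. The dictionary layout and the names `initial_weights`, `coverage`, and `features` are assumptions for illustration.

```python
from typing import Dict, Set


def initial_weights(coverage: Dict[str, float],
                    features: Dict[str, Set[str]]) -> Dict[str, float]:
    """Map each mutation type to its share of the total indicator-filtered coverage."""
    types = sorted(set().union(*features.values()))
    # Numerator per type: coverage of the test cases whose indicator is 1.
    totals = {
        m: sum(cov for case, cov in coverage.items()
               if m in features.get(case, set()))
        for m in types
    }
    denominator = sum(totals.values())
    if denominator == 0:
        return {m: 1.0 / len(types) for m in types}  # fall back to equal weights
    return {m: totals[m] / denominator for m in types}


# Hypothetical test cases: coverage values and the mutation types they exhibit.
example = initial_weights(
    {"t1": 0.6, "t2": 0.3, "t3": 0.1},
    {"t1": {"loop"}, "t2": {"loop", "dep"}, "t3": {"dep"}},
)
```

In this example the `loop` type appears in the two highest-coverage cases, so it receives the larger initial weight, and the weights sum to 1.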

Then, the fuzzer performs real-time monitoring and analysis on the test cases undergoing mutation, measuring the mutation effect through a comprehensive evaluation function. For in , the calculation of its comprehensive evaluation score is shown in Equation (7):

Here, denotes the absolute change in code coverage caused by the mutation operation. The fuzzer first records the initial code-coverage rate of each test case before the test case is mutated. This process typically relies on code instrumentation technology, that is, inserting specific monitoring code snippets into the code of the target program. These snippets can record which code regions are accessed during program execution, and then the initial code-coverage rate can be statistically calculated. After the mutation operation is performed on the test case , the fuzzer again uses code instrumentation to obtain the mutated code-coverage rate . By calculating the difference , the absolute change in code coverage is obtained. This method of obtaining coverage data based on code instrumentation can accurately capture the direct impact of the mutation operation on the code-coverage scope, providing basic data for subsequent evaluations. reflects the relative proportion of this change based on the original code coverage. It is calculated based on the absolute change and in combination with the pre-mutation code-coverage baseline. This calculation step is implemented to establish a unified comparison standard among test cases with different initial coverage rates. Since the initial code-coverage rates of different test cases can vary significantly, relying solely on the absolute change amount makes it difficult to accurately measure the actual effect of the mutation operation. By introducing the calculation of relative change, even for a test case with a low initial coverage rate, a small absolute change amount after mutation can, through the calculation of relative change, reflect the significant degree to which the mutation operation has improved its own coverage rate. Through this calculation method, the comprehensive evaluation score for each mutation type is obtained. 
This score reflects the comprehensive contribution degree of the mutation type in improving the code-coverage rate. The comprehensive evaluation score enables the screening of the mutation operation sequence, thus enhancing the code coverage during the fuzz testing process.
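The following sketch shows one way to combine the absolute and relative coverage changes. The mixing coefficient `lam` and the linear combination are assumptions; the text states only that both quantities contribute to the comprehensive evaluation score.

```python
def evaluation_score(before: float, after: float, lam: float = 0.5) -> float:
    """Mix the absolute and relative coverage change of one mutation (assumed form)."""
    absolute = after - before
    # Relative change uses the pre-mutation coverage as its baseline.
    relative = absolute / before if before > 0 else 0.0
    return lam * absolute + (1.0 - lam) * relative


# The same absolute gain of 0.05 on a low and on a high baseline.
low_baseline = evaluation_score(0.05, 0.10)
high_baseline = evaluation_score(0.50, 0.55)
```

With these hypothetical inputs, the low-baseline case scores higher because its relative change is 100% rather than 10%, which is the effect the relative term is meant to capture.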

Finally, conduct normalization processing on the scores of each mutation type, and form key-value pairs with each normalized score and the corresponding mutation type to construct a new weight table. Let , where m represents the length of the mutation type set. For , the normalization is performed using the formula . The essence of normalization processing is to map the scores of different mutation types onto the same measurement scale. Since the original scores of different mutation types may vary significantly due to differences in calculation methods and data characteristics, directly comparing these original scores can be misleading. Through normalization, the scores of all mutation types can be measured under a unified standard, which facilitates subsequent comparison and analysis. For example, if the original score of mutation type A is 10 and that of mutation type B is 5, without normalization, one might intuitively think that A performs better than B. However, this may not take into account the differences in their testing environments or calculation backgrounds. After normalization, their relative advantages and disadvantages can be reflected more accurately, providing a reliable basis for subsequent decision-making.

Using the above IR mutation algorithm, the normalized initial weight of each mutation type is compared with the corresponding normalized score in the new weight table, and the better of the two is selected to adjust the mutation strategy. This adjustment steers subsequent mutation operations toward test cases whose features have already proven effective in improving code coverage during previous testing. For example, if mutating a specific type of function call was found to significantly increase code coverage during prior testing, the system will be more inclined to mutate similar function calls in subsequent operations. Through continuous iterative adjustment of the mutation strategy, the efficiency and quality of code coverage achieved by fuzz testing can be continuously improved.
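A minimal sketch of the normalization and selection step follows. Two points are assumptions: scores are normalized by dividing by their sum, because the original formula is not available, and "optimal" is read as the larger of the two values. The function names are hypothetical.

```python
from typing import Dict


def normalize(scores: Dict[str, float]) -> Dict[str, float]:
    """Scale scores so that they sum to 1 (assumed normalization)."""
    total = sum(scores.values())
    if total == 0:
        return {m: 1.0 / len(scores) for m in scores}
    return {m: s / total for m, s in scores.items()}


def adjust_weights(initial: Dict[str, float],
                   scores: Dict[str, float]) -> Dict[str, float]:
    """Keep, per mutation type, the larger of the initial weight and the new weight."""
    new_table = normalize(scores)
    types = set(initial) | set(new_table)
    return {m: max(initial.get(m, 0.0), new_table.get(m, 0.0)) for m in types}


# Raw scores from the example in the text: type A scores 10, type B scores 5.
adjusted = adjust_weights({"A": 0.5, "B": 0.5}, {"A": 10.0, "B": 5.0})
```

Here type A moves up to its normalized score of 2/3, while type B keeps its initial weight of 0.5 because its normalized score of 1/3 is lower.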

4. Results and Discussion

This section evaluates the proposed BoostPolyGlot fuzz testing framework. It first introduces the experimental setup and then compares BoostPolyGlot with other relevant tools to evaluate its performance in GCC compiler frontend IR generation testing in terms of dependency relationships, loop structures, and their synergistic effects.

4.1. Experimental Setup

The experimental setup serves as the bedrock for ensuring the accuracy and reliability of experiments. It encompasses multiple crucial aspects, including the experimental environment, test sets, evaluation metrics, and experimental design. A rational experimental setup can effectively control variables, minimize errors, and provide a solid foundation for the subsequent analysis of experimental results.

In terms of the experimental environment, all experiments were conducted in a single unified environment to ensure consistent and accurate results. The detailed configuration is shown in Table 1.

Table 1.

Experimental environment.

In terms of the experimental test set, for the input corpus, test cases from the testsuite-gcc.dg of the GCC 8.4.0 test suite were selected. For structured program cases, typical C programs from the Polybench benchmark suite were adopted. Consequently, a multi-level initial corpus was constructed. This design effectively addresses the limitations of traditional test cases in covering such complex paths. Meanwhile, the newly constructed test cases enable targeted testing of critical aspects, such as loop optimization and dependence analysis. This not only enhances the specificity and effectiveness of the testing process but also reduces invalid test cases resulting from blind mutation, thereby improving the utilization efficiency of testing resources.

In terms of evaluation metrics, five indicators are selected to comprehensively measure the effect of BoostPolyGlot on code coverage: total paths, count coverage, new edges on rate, favored paths rate, and level. Total paths directly reflects BoostPolyGlot’s capability to explore program paths; higher values indicate that more code-execution branches are reached. Count coverage reflects both the degree to which test cases cover code byte regions and the triggering frequency of critical paths. New edges on rate evaluates the extent to which test cases explore the program’s control flow, reflecting the ability to uncover potential code-execution paths. Favored paths rate demonstrates the accuracy of BoostPolyGlot in screening paths. Level reflects the complexity of test cases throughout the mutation and testing processes; higher values signify deeper levels of covered code paths.

In terms of the experimental design, the cc1 frontend component of the GCC compiler was selected as the target program for testing. Three fuzz testing tools were compared: AFL, PolyGlot, and BoostPolyGlot. To verify the reliability of the results, each experiment was independently repeated three times for each of the three testing durations of 1 h, 4 h, and 8 h. The average of the three runs was used as the evaluation basis, aiming to reduce the interference of random factors and ensure the stability and validity of the data.

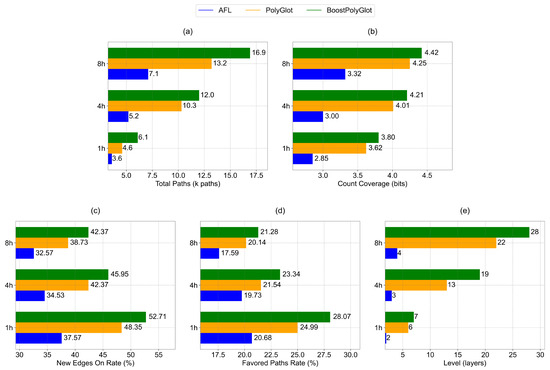

4.2. Fuzz Testing Capability Evaluation Based on Dependency Relationship

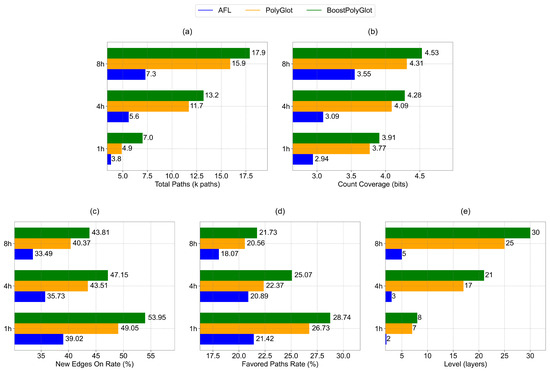

In fuzz testing, analyzing dependency relationships helps identify diverse program paths and boundary conditions, clarify intrinsic data correlations, and expand code-execution paths and conditional judgment characteristics. The dependencies selected in this experiment include true dependencies, anti-dependencies, loop-carried dependencies, and loop-independent dependencies. Figure 4 illustrates the comparison of AFL, PolyGlot, and BoostPolyGlot across five metrics: total paths, count coverage, new edges on rate, favored paths rate, and level for three testing durations of 1 h, 4 h, and 8 h.

Figure 4.

Comparison of evaluation metrics based on dependency relationships among AFL, PolyGlot, and BoostPolyGlot. (a) Total paths comparison. (b) Count coverage comparison. (c) New edges on rate comparison. (d) Favored paths rate comparison. (e) Level comparison.

The experimental results demonstrate that BoostPolyGlot exhibits significant performance advantages in dependency-based testing scenarios. For example, during an 8-h testing period, BoostPolyGlot reaches a total of 16.9 k paths (Figure 4a). The number of paths is 138.02% more than that of AFL and 28.03% more than that of PolyGlot, which shows its superior efficiency in exploring program-dependency paths. Moreover, its count coverage is 4.42 bits (Figure 4b). This value is 33.13% higher than that of AFL and 4% higher than that of PolyGlot, indicating better effectiveness in triggering core dependency-related code. In terms of new edges on rate, BoostPolyGlot achieves 42.37% (Figure 4c). This percentage is 9.8 percentage points higher than that of AFL and 3.64 percentage points higher than that of PolyGlot, reflecting its stronger ability to navigate complex program branches caused by dependencies. Furthermore, BoostPolyGlot’s favored paths rate is 21.28% (Figure 4d). This rate is 3.69 percentage points higher than that of AFL and 1.14 percentage points higher than that of PolyGlot, highlighting its dependency-driven advantage in identifying high-value paths. Finally, BoostPolyGlot reaches a maximum level of 28 layers (Figure 4e). This level is 600% higher than that of AFL and 27.28% greater than that of PolyGlot, validating its ability to analyze deep dependency logic and thoroughly explore program internal structures.

BoostPolyGlot’s introduction of dependency relationships facilitates the identification of diverse program paths and boundary conditions. In traditional testing, the absence of systematic consideration for dependency relationships results in incomplete coverage of code blocks under various special boundary conditions. Conversely, BoostPolyGlot generates highly tailored test inputs for these boundary conditions, effectively steering the program to execute target paths. During IR mutation, it integrates dependency relationships to produce logically consistent array inputs, ensuring the mutated program executes efficiently and expanding the scope of program path exploration. Meanwhile, dependency relationships define data associations, which are collectively established through variable definition patterns, assignment operation workflows, and computational logic systems, thereby maintaining program logic and coherence. Traditional random mutation strategies, which arbitrarily alter judgment conditions, may disrupt program-execution logic. By contrast, BoostPolyGlot’s dependency-driven targeted mutation modifies judgment conditions rationally and adjusts test cases to cover new boundary values and ranges, ensuring the program executes properly under mutated logic branches and enhancing code coverage effectively. Additionally, dependency relationships introduce more execution paths and conditional judgment features, uncovering latent execution logic deep within the program. For example, in the code snippet “while (flag && value > 0) {value--; if (value % 3 == 0) {action(); }}”, data dependencies and control dependencies interact during looping. Traditional random mutation, if it adds invalid conditional judgments arbitrarily, would disrupt the original dependency interactions and reduce the number of valid execution paths. With dependency relationships considered, mutations add conditions such as “value % 5 == 0” after “value--” based on dependency characteristics, cooperating with the existing dependencies. This triggers more dependency-based code-execution paths and enriches conditional judgment features.

4.3. Fuzz Testing Capability Evaluation Based on Loop Structure

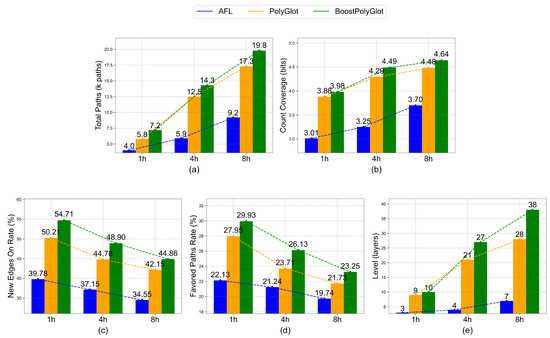

In fuzz testing, the introduction of a loop-structure-coverage improvement strategy serves multiple purposes. The iterative execution feature of loop structures allows the program to continuously change execution paths during loops. By reasonably constructing test cases, more code-execution paths can be explored. Regarding the control of the number of iterations, the loop-structure-coverage improvement strategy is closely related to data changes and boundary conditions, which determines the program’s running status under different data scales. Meanwhile, loop structures are also crucial for uncovering potential dependency relationships. Changing the iteration mode of loop variables can reveal the hidden dependency relationships within. The loop structures selected in this experiment include single-layer forward-order loop structures, double-layer forward-order loop structures, triple-layer forward-order loop structures, single-layer reverse-order loop structures, double-layer reverse-order loop structures, triple-layer reverse-order loop structures, perfectly nested loop structures, and non-perfectly nested loop structures. Figure 5 illustrates the comparison of AFL, PolyGlot, and BoostPolyGlot across five metrics: total paths, count coverage, new edges on rate, favored paths rate, and level for three testing durations of 1 h, 4 h, and 8 h.

Figure 5.

Comparison of evaluation metrics based on loop structures among AFL, PolyGlot, and BoostPolyGlot. (a) Total paths comparison. (b) Count coverage comparison. (c) New edges on rate comparison. (d) Favored paths rate comparison. (e) Level comparison.

The experimental results demonstrate that BoostPolyGlot exhibits comprehensive performance advantages in loop-structure testing scenarios. Taking the 8-h testing cycle as an example, it achieves a total of 17.9 k paths (Figure 5a). The number of paths is 145.2% more than that of AFL and 12.6% more than that of PolyGlot, which highlights its enhanced ability to explore the program branch space. The count coverage reaches 4.53 bits (Figure 5b). This value is 27.6% more than that of AFL and 5.1% more than that of PolyGlot, indicating that test cases can trigger core code more efficiently. In control flow analysis, its new edges on rate reaches 43.81% (Figure 5c). This percentage is 10.32 percentage points higher than that of AFL and 3.44 percentage points higher than that of PolyGlot, verifying its stronger ability to explore complex loop branches. The favored paths rate reaches 28.74% (Figure 5d). This rate is 3.66 percentage points higher than that of AFL and 1.17 percentage points higher than that of PolyGlot, reflecting the effectiveness of the high-value path screening mechanism. Additionally, the maximum level increases to 30 layers (Figure 5e). This level is 500% higher than that of AFL and 20% greater than that of PolyGlot. The specific analysis is as follows.

BoostPolyGlot analyzes key factors such as the range of loop variables and the boundaries of conditional judgments, and leverages the loop iteration mechanism to explore more code-execution paths. This enables the program to reach deeply hidden branches that can only be triggered by specific combinations of conditions under complex logic. In the regular fuzz testing process, if the key factors in loop structures are not fully considered, a large number of potential code-execution paths are likely to be overlooked. Traditional mutation methods often disrupt the program logic, making it difficult to effectively improve code coverage. In contrast, BoostPolyGlot can not only adjust loop conditions but also construct appropriate test data to guide the program to explore new paths and enhance code coverage. There is a close relationship between the number of iterations, data changes, and boundary conditions in loop structures. Dynamic data changes lead to adjustments in the number of iterations, while boundary conditions limit the scope of iteration. Their combined effect ensures the program can execute effectively under different data-scale scenarios. If this relationship is ignored in testing and loop conditions are changed randomly, the program may fail to comprehensively test its behavior under various data scales. Based on the characteristics of loop structures, BoostPolyGlot constructs diverse test cases that cover different data scales and boundary values. During IR mutation, it reasonably expands the value range of triggering conditions to ensure that the program can operate as expected under complex data scenarios.

4.4. Fuzz Testing Capability Evaluation Based on Synergy

In fuzz testing, the synergistic effect of loop structures and dependency relationships plays multiple roles in coverage improvement. Loop structures enable the repeated execution of code blocks, while dependency relationships guide the direction of code execution. Together, they provide fuzz testing tools with clearer and more in-depth guidance for path exploration. In addition, analyzing dependency relationships makes it possible to effectively define the range of variation for loop conditions. Figure 6 illustrates the comparison of AFL, PolyGlot, and BoostPolyGlot across five metrics: total paths, count coverage, new edges on rate, favored paths rate, and level for three testing durations of 1 h, 4 h, and 8 h.

Figure 6.

Comparison of evaluation metrics based on synergistic effects among AFL, PolyGlot, and BoostPolyGlot. (a) Total paths comparison. (b) Count coverage comparison. (c) New edges on rate comparison. (d) Favored paths rate comparison. (e) Level comparison.

In the test scenario where loops and dependencies interact synergistically, BoostPolyGlot shows clear advantages over AFL and PolyGlot on all five metrics. For total paths, BoostPolyGlot reaches 19.8 k paths after 8 h (Figure 6a), which is 115.2% more than the 9.2 k paths of AFL and 14.5% more than the 17.3 k paths of PolyGlot. This indicates that BoostPolyGlot can leverage the synergistic relationship between loops and dependencies to explore a wider range of program-execution paths and cover the path variations derived from dependencies within loop structures. For count coverage, BoostPolyGlot increases from 3.88 bits at 1 h to 4.64 bits at 8 h (Figure 6b). The latter value is 25.4% higher than that of AFL and 3.6% higher than that of PolyGlot. This suggests that its test cases trigger the core code associated with loops and dependencies more precisely, improving the coverage efficiency of the program’s critical logic. For new edges on rate, BoostPolyGlot leads at all time points (Figure 6c). This reflects its stronger exploration and coverage capabilities when dealing with the complex control flows caused by loop branches and dependencies, enabling a more comprehensive traversal of the program’s execution branches. For favored paths rate, BoostPolyGlot maintains the lead throughout the period from 1 h to 8 h (Figure 6d). This suggests that the paths selected based on the synergistic relationship between loops and dependencies are more valuable and focus on the program’s critical execution logic. For the maximum level, BoostPolyGlot rises from 10 levels at 1 h to 38 levels at 8 h (Figure 6e), which is 442.9% more than the 7 levels of AFL and 35.7% more than the 28 levels of PolyGlot. This shows that it can use the synergistic effect of loops and dependencies to explore the program’s underlying logical structure in depth, breaking through the exploration depth limitations of traditional tools.
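The relative gains quoted above follow from a simple ratio. The short sketch below recomputes them from the 8 h values given in the text for total paths and maximum level; the function and dictionary names are illustrative only and are not part of BoostPolyGlot.

```python
def relative_gain(value: float, baseline: float) -> float:
    """Percentage by which `value` exceeds `baseline`."""
    return (value - baseline) / baseline * 100.0


# 8 h values quoted in the text (total paths are in thousands).
total_paths = {"AFL": 9.2, "PolyGlot": 17.3, "BoostPolyGlot": 19.8}
max_level = {"AFL": 7, "PolyGlot": 28, "BoostPolyGlot": 38}


def gains_over_baselines(metric: dict) -> dict:
    """Gain of BoostPolyGlot over every other tool, rounded to one decimal."""
    ours = metric["BoostPolyGlot"]
    return {
        tool: round(relative_gain(ours, value), 1)
        for tool, value in metric.items()
        if tool != "BoostPolyGlot"
    }


if __name__ == "__main__":
    print("total paths:", gains_over_baselines(total_paths))
    print("max level:  ", gains_over_baselines(max_level))
```

Count coverage is omitted because the text reports only BoostPolyGlot’s absolute values for that metric, not those of AFL and PolyGlot.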

In program execution, dependency relationships determine the prerequisite conditions that code execution must satisfy, such as variable values and function return results. These conditions are interconnected, forming a complex logical network. Loop structures, on the other hand, enable the repeated execution of the same code block across iterations, with each iteration generating distinct execution paths due to variations in input data and conditional judgment outcomes. The interplay between these two elements collectively shapes the program’s execution trajectory. During the IR mutation phase, when conditional logic or loop conditions are altered, traditional fuzz testing often fails to reconcile changes in dependencies and loops, leading to chaotic program execution and leaving numerous potential paths unexplored. In contrast, BoostPolyGlot dynamically adjusts test case inputs based on new dependency conditions and loop boundaries. For instance, when a mutation modifies a dependency condition, it not only adjusts relevant variable values but also integrates loop structures to ensure that code under the new conditions has execution opportunities in each iteration. If loop conditions are altered, it constructs data inputs according to new boundaries while considering dependency relationships, enabling the program to trigger corresponding operations as expected during iterations. BoostPolyGlot fully exploits the potential of dependency relationships and loop structures to expand code-execution paths and enhance code coverage. By doing so, it ensures comprehensive and in-depth testing of programs across diverse scenarios involving complex dependencies and loop combinations, safeguarding the reliability of program behavior, effectively uncovering latent issues, and providing robust support for improving program quality.
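The idea of rebuilding inputs after a mutation can be illustrated with a toy model. The sketch below is not BoostPolyGlot’s implementation: it assumes a single counted loop whose body is guarded by a hypothetical dependency condition `x + i > k`, and it picks the smallest input `x` that lets the guarded code run in every iteration after the loop bounds or the threshold `k` have been mutated. The names `Loop`, `input_for_every_iteration`, and `guarded_hits` are invented for the illustration.

```python
from dataclasses import dataclass


@dataclass
class Loop:
    start: int
    stop: int  # exclusive bound, as in Python's range()
    step: int


def iterations(loop: Loop) -> range:
    """Values taken by the loop variable i."""
    return range(loop.start, loop.stop, loop.step)


def input_for_every_iteration(loop: Loop, k: int) -> int:
    """Smallest x such that the guard `x + i > k` holds in every iteration."""
    its = iterations(loop)
    if len(its) == 0:
        raise ValueError("loop never executes")
    # The iteration with the smallest i is the binding constraint.
    return k - min(its) + 1


def guarded_hits(loop: Loop, x: int, k: int) -> int:
    """Number of iterations in which the guarded body actually executes."""
    return sum(1 for i in iterations(loop) if x + i > k)


if __name__ == "__main__":
    original = Loop(0, 8, 1)
    mutated = Loop(-4, 8, 2)  # mutation changed the loop boundary and step
    k = 10
    old_x = input_for_every_iteration(original, k)
    new_x = input_for_every_iteration(mutated, k)
    print("stale input hits:  ", guarded_hits(mutated, old_x, k), "of", len(iterations(mutated)))
    print("rebuilt input hits:", guarded_hits(mutated, new_x, k), "of", len(iterations(mutated)))
```

In this toy setting, reusing the input built for the original loop leaves some iterations of the mutated loop without an execution opportunity for the guarded code, whereas the rebuilt input reaches it in every iteration.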

4.5. Vulnerability-Reproduction Efficiency Comparison

In software security testing, vulnerability-reproduction efficiency is a key indicator of a testing tool’s performance, because it directly reflects how effectively the tool uncovers software vulnerabilities. To verify the effectiveness of BoostPolyGlot, this experiment systematically compared the vulnerability-reproduction efficiency of BoostPolyGlot, AFL, and PolyGlot on three typical vulnerabilities: CVE-2018-12886, CVE-2020-13844, and CVE-2021-20231. The specific data are detailed in Table 2.

Table 2. Vulnerability-reproduction efficiency comparison.

Analysis of the table data shows that BoostPolyGlot reproduces all three vulnerabilities markedly faster than AFL and PolyGlot. In the reproduction of the CVE-2018-12886 buffer overflow vulnerability, BoostPolyGlot took 871 s, which is 29.5% less than AFL’s 1235 s and 19.2% less than PolyGlot’s 1078 s. It was the only one of the three tools to keep the reproduction time under 1000 s. This vulnerability originated from a defect in the handling of negative loop step sizes in the tree-ssa-loop-niter.c module of the GCC compiler, which caused loop variables to access the buffer out of bounds. BoostPolyGlot pre-constructed loop test cases containing negative step sizes and boundary values in the input corpus. Combined with static loop structure analysis, these test cases accurately covered the unhandled negative step size logic in the module and reduced the ineffective attempts caused by random mutations.
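To make the idea of pre-constructed loop seeds concrete, the following sketch generates small C programs whose loops count downward with negative step sizes chosen at and around the buffer length. The template, the chosen steps, and the function name `negative_step_seeds` are assumptions made for illustration; the actual corpus used by BoostPolyGlot is not reproduced here. Every generated program stays within bounds, since a seed corpus is meant to exercise the compiler’s loop analysis with valid input.

```python
import itertools

# Hypothetical seed template: a downward-counting loop over a fixed buffer.
TEMPLATE = """\
#include <stdio.h>
int main(void) {{
    int buf[{size}] = {{0}};
    long sum = 0;
    for (int i = {start}; i >= {stop}; i += {step}) {{
        sum += buf[i];
    }}
    printf("%ld\\n", sum);
    return 0;
}}
"""


def negative_step_seeds(size: int = 16) -> list:
    """Return C sources combining negative steps with boundary start/stop values."""
    steps = [-1, -2, -(size - 1), -size]  # small steps, and steps near the buffer length
    starts = [size - 1]                   # last valid index
    stops = [0, 1]                        # lowest valid index, and one above it
    return [
        TEMPLATE.format(size=size, start=a, stop=b, step=s)
        for a, b, s in itertools.product(starts, stops, steps)
    ]


if __name__ == "__main__":
    seeds = negative_step_seeds()
    print(len(seeds), "seeds generated")
    print(seeds[0])
```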

For the CVE-2020-13844 dead code elimination error, BoostPolyGlot took 2439 s for reproduction, which is 34.5% less than AFL’s 3724 s and 23.7% less than PolyGlot’s 3197 s, so its efficiency advantage widened further. This vulnerability was caused by the tree-ssa-dce.c module misjudging code within a loop as unreachable, which resulted in the incorrect removal of critical boundary-checking logic. BoostPolyGlot performed extraction and insertion operations on IR nodes. Specifically, by changing the loop conditions, it enabled constant variables to break through their initial limitations, allowing test cases to reach the compiler defect quickly.

In the reproduction of the CVE-2021-20231 vectorization-optimization memory-alignment error, BoostPolyGlot took 9775 s. This is 47.8% less than AFL’s 18,731 s, the largest margin over AFL among the three vulnerabilities, and 18.9% less than PolyGlot’s 12,057 s. This vulnerability was caused by the failure of the tree-vect-stmts.c module to verify the 16-byte memory-alignment constraint when generating the MOVDQA vectorization instruction. BoostPolyGlot employed a dynamic weight adjustment strategy to monitor the code coverage of test cases in real time. It preferentially mutated the IR nodes related to vectorization instructions, effectively targeting the code paths with missing alignment constraints during testing. This demonstrated its ability to explore the vectorization logic in depth.
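A coverage-driven weight adjustment of this kind can be sketched as follows. This is a minimal illustration, not the update rule used by BoostPolyGlot, which is not specified in this section: it assumes a multiplicative reward when a mutation produces new coverage edges and a multiplicative decay with a lower floor otherwise. The class name, the operator names, and the parameters `reward`, `decay`, and `floor` are all hypothetical.

```python
import random


class WeightedMutatorPool:
    """Toy pool of mutation targets whose selection weights follow coverage feedback."""

    def __init__(self, operators, reward=1.5, decay=0.9, floor=0.05, seed=None):
        self.weights = {op: 1.0 for op in operators}  # start from uniform weights
        self.reward = reward
        self.decay = decay
        self.floor = floor
        self.rng = random.Random(seed)

    def pick(self) -> str:
        """Select an operator with probability proportional to its weight."""
        ops = list(self.weights)
        return self.rng.choices(ops, weights=[self.weights[o] for o in ops], k=1)[0]

    def update(self, op: str, new_edges: int) -> None:
        """Raise the weight if the mutation found new edges, otherwise decay it."""
        if new_edges > 0:
            self.weights[op] *= self.reward
        else:
            self.weights[op] = max(self.floor, self.weights[op] * self.decay)

    def probabilities(self) -> dict:
        """Current selection probability of each operator."""
        total = sum(self.weights.values())
        return {op: w / total for op, w in self.weights.items()}


if __name__ == "__main__":
    pool = WeightedMutatorPool(["vector_stmt", "loop_bound", "dependency"], seed=0)
    pool.update("vector_stmt", new_edges=3)  # this mutation reached new code
    pool.update("loop_bound", new_edges=0)   # this one did not
    print(pool.probabilities())
    print("next operator:", pool.pick())
```

The floor keeps rarely successful operators selectable, so the fuzzer can still return to them if the coverage landscape changes later in a run.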

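In summary, BoostPolyGlot reproduced all three vulnerabilities faster than both AFL and PolyGlot, with time reductions of about 30% to 48% relative to AFL and about 19% to 24% relative to PolyGlot, which underscores its effectiveness.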
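The efficiency figures in this subsection are relative reductions in reproduction time. The sketch below recomputes them from the reproduction times quoted in the text; the dictionary layout and function names are illustrative only.

```python
def time_reduction(baseline_s: float, ours_s: float) -> float:
    """Percentage of the baseline reproduction time that is saved."""
    return (baseline_s - ours_s) / baseline_s * 100.0


# Reproduction times in seconds, as quoted in the text.
REPRO_SECONDS = {
    "CVE-2018-12886": {"AFL": 1235, "PolyGlot": 1078, "BoostPolyGlot": 871},
    "CVE-2020-13844": {"AFL": 3724, "PolyGlot": 3197, "BoostPolyGlot": 2439},
    "CVE-2021-20231": {"AFL": 18731, "PolyGlot": 12057, "BoostPolyGlot": 9775},
}


def reductions(times: dict) -> dict:
    """Time reduction of BoostPolyGlot versus each baseline, per vulnerability."""
    result = {}
    for cve, row in times.items():
        ours = row["BoostPolyGlot"]
        result[cve] = {
            tool: round(time_reduction(seconds, ours), 1)
            for tool, seconds in row.items()
            if tool != "BoostPolyGlot"
        }
    return result


if __name__ == "__main__":
    for cve, row in reductions(REPRO_SECONDS).items():
        print(cve, row)
```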

5. Conclusions and Future Work

To enhance the coverage of code-execution paths and the efficiency of fuzz testing, this study proposes a deep fuzz testing framework named BoostPolyGlot for GCC compiler frontend IR generation. Firstly, a diverse input corpus is constructed based on structured program characteristics. Secondly, the designed master–slave IR translator is utilized to convert the source code of structured program cases and test cases into IRs. Then, a method for extracting and inserting IR nodes based on structured program characteristics is proposed to generate test cases. Finally, an IR mutation strategy based on dynamic weight adjustment is put forward to guide the generation of test cases with high code coverage. Experimental results demonstrate that BoostPolyGlot significantly improves five core evaluation metrics compared with AFL and PolyGlot, validating its feasibility and effectiveness.

However, the existing work still has limitations. In future work, a multi-granularity mutation location selection strategy can be designed to dynamically adjust the mutation granularity according to the characteristics of the target program. For example, coarse-grained mutations can be adopted at the initial stage to quickly cover the main code paths, and the granularity can be refined at the in-depth stage to explore complex logic. Moreover, the most suitable mutation locations can be selected by combining control flow, data flow, and semantic analysis. This approach can further improve the targeting and effectiveness of mutations, enhance the ability to cover diverse paths and behaviors of the program, and provide support for the application of fuzz testing in complex scenarios.

Author Contributions

Methodology, H.G. and P.L.; validation, T.H.; formal analysis, H.G.; data curation, H.G. and T.H.; writing—original draft preparation, H.G.; writing—review and editing, H.L. and P.L.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Henan Province Science and Technology Key Project “Research on Deep Fuzz Testing Methods for GCC Compiler Backend Optimization” under Grant No. 242102211094 and the Henan Province Key Research, Development and Promotion Special Project “Research on Address Translation and Cache Coherence for New Memory-Computing Integrated Architectures” under Grant No. 232102210185.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- Gough, B.J.; Stallman, R. An Introduction to GCC; Network Theory Limited: Bristol, UK, 2004.

- Mogensen, T.Æ. Introduction to Compiler Design; Springer Nature: Berlin/Heidelberg, Germany, 2024.

- Manès, V.J.; Han, H.; Han, C.; Cha, S.K.; Egele, M.; Schwartz, E.J.; Woo, M. The art, science, and engineering of fuzzing: A survey. IEEE Trans. Softw. Eng. 2019, 47, 2312–2331.

- Miller, B.P.; Fredriksen, L.; So, B. An empirical study of the reliability of UNIX utilities. Commun. ACM 1990, 33, 32–44.

- Pham, V.T.; Böhme, M.; Roychoudhury, A. Model-based whitebox fuzzing for program binaries. In Proceedings of the 31st IEEE/ACM International Conference on Automated Software Engineering, Singapore, 3–7 September 2016; pp. 543–553.

- Eom, J.; Jeong, S.; Kwon, T. Covrl: Fuzzing javascript engines with coverage-guided reinforcement learning for llm-based mutation. arXiv 2024, arXiv:2402.12222.

- Wang, J.; Chen, B.; Wei, L.; Liu, Y. Skyfire: Data-driven seed generation for fuzzing. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 579–594.

- Godefroid, P.; Peleg, H.; Singh, R. Learn&fuzz: Machine learning for input fuzzing. In Proceedings of the 2017 32nd IEEE/ACM International Conference on Automated Software Engineering (ASE), Urbana, IL, USA, 30 October–3 November 2017; pp. 50–59.

- Fan, R.; Chang, Y. Machine learning for black-box fuzzing of network protocols. In International Conference on Information and Communications Security, Proceedings of the Information and Communications Security 19th International Conference, Beijing, China, 6–8 December 2017; Springer: Berlin/Heidelberg, Germany, 2017; pp. 621–632.

- Hu, Z.; Shi, J.; Huang, Y.; Xiong, J.; Bu, X. GANFuzz: A GAN-based industrial network protocol fuzzing framework. In Proceedings of the 15th ACM International Conference on Computing Frontiers, Ischia, Italy, 8–10 May 2018; pp. 138–145.

- Bastani, O.; Sharma, R.; Aiken, A.; Liang, P. Synthesizing program input grammars. Acm Sigplan Not. 2017, 52, 95–110.

- Viide, J.; Helin, A.; Laakso, M.; Pietikäinen, P.; Seppänen, M.; Halunen, K.; Puuperä, R.; Röning, J. Experiences with Model Inference Assisted Fuzzing. WOOT 2008, 2, 1–2.

- Höschele, M.; Zeller, A. Mining input grammars from dynamic taints. In Proceedings of the 31st IEEE/ACM International Conference on Automated Software Engineering, Singapore, 3–7 September 2016; pp. 720–725.

- Cadar, C.; Dunbar, D.; Engler, D.R. Klee: Unassisted and automatic generation of high-coverage tests for complex systems programs. In Proceedings of the OSDI, San Diego, CA, USA, 8–10 December 2008; Volume 8, pp. 209–224.

- Han, H.; Oh, D.; Cha, S.K. CodeAlchemist: Semantics-aware code generation to find vulnerabilities in JavaScript engines. In Proceedings of the NDSS, San Diego, CA, USA, 24–27 February 2019.

- Yang, X.; Chen, Y.; Eide, E.; Regehr, J. Finding and understanding bugs in C compilers. In Proceedings of the 32nd ACM SIGPLAN Conference on Programming Language Design and Implementation, San Jose, CA, USA, 4–8 June 2011; pp. 283–294.

- Lidbury, C.; Lascu, A.; Chong, N.; Donaldson, A.F. Many-core compiler fuzzing. ACM Sigplan Not. 2015, 50, 65–76.

- Livinskii, V.; Babokin, D.; Regehr, J. Random testing for C and C++ compilers with YARPGen. Proc. ACM Program. Lang. 2020, 4, 1–25.

- Dewey, K.; Roesch, J.; Hardekopf, B. Language fuzzing using constraint logic programming. In Proceedings of the 29th ACM/IEEE International Conference on Automated Software Engineering, Vasteras, Sweden, 15–19 September 2014; pp. 725–730.

- Xia, C.S.; Paltenghi, M.; Le Tian, J.; Pradel, M.; Zhang, L. Fuzz4all: Universal fuzzing with large language models. In Proceedings of the IEEE/ACM 46th International Conference on Software Engineering, Lisbon, Portugal, 14–20 April 2024; pp. 1–13.

- Zalewski, M. american fuzzy lop (2.52 b). Retrieved April 2019, 10, 2020.

- Stephens, N.; Grosen, J.; Salls, C.; Dutcher, A.; Wang, R.; Corbetta, J.; Shoshitaishvili, Y.; Kruegel, C.; Vigna, G. Driller: Augmenting fuzzing through selective symbolic execution. In Proceedings of the NDSS, San Diego, CA, USA, 21–24 February 2016; Volume 16, pp. 1–16.

- Yun, I.; Lee, S.; Xu, M.; Jang, Y.; Kim, T. QSYM: A practical concolic execution engine tailored for hybrid fuzzing. In Proceedings of the 27th USENIX Security Symposium (USENIX Security 18), Baltimore, MD, USA, 15–17 August 2018; pp. 745–761.

- Chen, P.; Chen, H. Angora: Efficient fuzzing by principled search. In Proceedings of the 2018 IEEE Symposium on Security and Privacy (SP), San Francisco, CA, USA, 21–23 May 2018; pp. 711–725.

- Rawat, S.; Jain, V.; Kumar, A.; Cojocar, L.; Giuffrida, C.; Bos, H. Vuzzer: Application-aware evolutionary fuzzing. In Proceedings of the 2017 Network and Distributed System Security (NDSS) Symposium, San Diego, CA, USA, 26 February–1 March 2017; Internet Society. pp. 1–14.

- Böhme, M.; Pham, V.T.; Nguyen, M.D.; Roychoudhury, A. Directed greybox fuzzing. In Proceedings of the 2017 ACM SIGSAC Conference on Computer and Communications Security, Dallas, TX, USA, 30 October–3 November 2017; pp. 2329–2344.