Abstract

Although significant progress has been made in obtaining high dynamic range (HDR) images by using deep learning algorithms to fuse multiple exposure images, challenges remain, such as image artifacts and distortion in saturated high-brightness and low-brightness areas. To this end, we propose a more detailed high dynamic range (MDHDR) method. First, the proposed method uses super-resolution to enhance the details of the long-exposure and short-exposure images and fuses each of them into the medium-exposure image. Then, the HDR image is reconstructed by fusing the original medium-exposure image with the two enhanced medium-exposure images. Extensive experimental results show that the proposed method reconstructs HDR images with better image clarity in qualitative tests and improves HDR-VDP-2 by 4.8% in quantitative tests.

1. Introduction

General-purpose photography equipment on the market cannot preserve the full dynamic range of a real scene within a single frame [1]. Reconstructed HDR images present a dynamic range closer to that of the real scene. As HDR image reconstruction has developed, many HDR image datasets have been established as the basis for evaluating results, and these datasets also provide momentum for continuous progress in this field. Many methods for reconstructing HDR images have been proposed, including alignment-based [2,3,4], rejection-based [5,6,7], patch-based [8,9], and CNN-based [10,11,12,13,14,15,16,17,18,19,20,21] methods. With the rapid development of deep learning, HDR image reconstruction has made great progress. However, it still faces challenges: reconstructed HDR images are prone to defects such as artifacts, loss of details, and image blur.

The purpose of this paper is to propose a method that avoids these three major problems when reconstructing HDR images. We assume that solving them requires the following capabilities:

- Ability to enhance image details;

- Ability to extract image features;

- Ability to accurately align the poses of objects in images with different exposures;

- Ability to accurately fuse multiple exposure images.

To solve the above three problems, we propose a solution called more detailed HDR (MDHDR) to reconstruct HDR images. MDHDR is inspired by many technologies, such as multi-exposure image fusion and super-resolution [8,11,22,23,24,25,26,27,28,29,30,31,32,33]. The MDHDR solution integrates the network architectures of papers [28,32] and is divided into three main functional blocks. The first block enhances the details of the long-exposure and short-exposure LDR images. This part adopts the network architecture of paper [28]. The second block captures the image details of the highlight area of the short-exposure image and of the low-light area of the long-exposure image, respectively, and fuses them into the medium-exposure image. This part adopts the network architecture of papers [30,31,32]. The third block uses a residual network to fuse the long- and short-exposure images and the medium-exposure image after detail enhancement. This part mainly adopts the network architecture of papers [26,32,34]. Evaluated on a public dataset, the experimental results confirm that the HDR images reconstructed by our proposed MDHDR solution are highly competitive in terms of image clarity. The main difference between MDHDR and the network in paper [32] is that MDHDR uses a super-resolution network to enhance the details of the long-exposure and short-exposure images, compensating for the loss of details in the HDR image reconstruction process and thereby reconstructing a better-quality HDR image.
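The data flow through the three functional blocks can be sketched as follows. This is only an illustration of how the blocks connect: the function `mdhdr_pipeline`, its parameter names, and the toy stand-in callables are hypothetical and do not represent the trained networks of papers [28,32].

```python
from typing import Callable, List

Image = List[List[float]]  # toy grayscale image: rows of floats in [0, 1]


def mdhdr_pipeline(
    short: Image,
    medium: Image,
    long: Image,
    enhance: Callable[[Image], Image],
    align_to_medium: Callable[[Image, Image], Image],
    fuse: Callable[[Image, Image, Image], Image],
) -> Image:
    """Connect the three blocks; every callable is a placeholder (assumption)."""
    # Block 1: enhance details of the short- and long-exposure images.
    short_enh = enhance(short)
    long_enh = enhance(long)
    # Block 2: carry highlight / low-light details onto the medium exposure.
    medium_from_short = align_to_medium(short_enh, medium)
    medium_from_long = align_to_medium(long_enh, medium)
    # Block 3: fuse both enhanced medium images with the original medium image.
    return fuse(medium_from_long, medium_from_short, medium)


# Toy stand-ins so the sketch runs; real blocks would be neural networks.
def identity(img: Image) -> Image:
    return [row[:] for row in img]


def blend(src: Image, ref: Image) -> Image:
    return [[(a + b) / 2 for a, b in zip(rs, rr)] for rs, rr in zip(src, ref)]


def mean3(a: Image, b: Image, c: Image) -> Image:
    return [[(x + y + z) / 3 for x, y, z in zip(ra, rb, rc)]
            for ra, rb, rc in zip(a, b, c)]


result = mdhdr_pipeline([[0.1]], [[0.5]], [[0.9]], identity, blend, mean3)
```

Passing the blocks as callables keeps the sketch independent of any particular network implementation.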

2. Related Work

2.1. Datasets

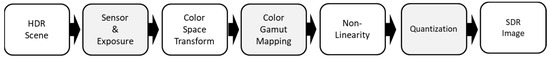

General-purpose camera devices on the market cannot preserve the full dynamic range of a real scene within a single frame [1]. The dynamic range of the images output by camera devices is limited because the imaging process undergoes many transformations [1]. The imaging process requires sensor and exposure adjustment, color space conversion, tone mapping, nonlinear conversion, digital quantization, and other conversions [1,35,36,37].

Figure 1 outlines the conversion process that imaging must go through [1,35,36,37]. After the block named Sensor & Exposure, the dynamic range of the high dynamic range (HDR) image is reduced by sensor noise and by the exposure setting. After the block named Color Gamut Mapping, the HDR image pixels are truncated by the color gamut mapping. After the block named Quantization, the HDR image is further degraded by conversion to 8-bit digital image data and by compression. The final standard dynamic range (SDR) image is the original HDR scene after multiple degradations.

Figure 1.

The conversion from an HDR scene to an SDR image.
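To make the degradation chain concrete, the following minimal sketch passes linear scene radiance through a toy version of the pipeline: exposure scaling, truncation, a nonlinear encoding, and 8-bit quantization. The function name `hdr_to_sdr`, the gamma value of 2.2, and the normalization to [0, 1] are assumptions for illustration; sensor noise, color gamut mapping, and compression are omitted.

```python
def hdr_to_sdr(radiance, exposure=1.0, gamma=2.2, bits=8):
    """Toy HDR-to-SDR conversion for a list of linear radiance values."""
    levels = (1 << bits) - 1  # 255 for 8-bit output
    sdr = []
    for value in radiance:
        exposed = value * exposure             # sensor and exposure scaling
        clipped = min(max(exposed, 0.0), 1.0)  # truncation to the displayable range
        encoded = clipped ** (1.0 / gamma)     # nonlinear conversion
        sdr.append(round(encoded * levels))    # digital quantization
    return sdr


# Radiance above 1.0 is truncated, so 1.0 and 4.0 map to the same code value.
codes = hdr_to_sdr([0.0, 0.25, 1.0, 4.0])
```

In this toy model, lowering `exposure` brings bright radiance back into range at the cost of pushing dark values toward code 0, which is the trade-off that multi-exposure fusion exploits.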

Since general-purpose camera devices cannot capture the full dynamic range of a scene, the limited dynamic range of the image affects the image quality.

For example, Figure 2e shows the full wide dynamic range and displays all brightness levels. Figure 2a–d represent the parts of the dynamic range of the scene captured by the camera, so the brightness levels that can be displayed are truncated. By observing Figure 2, we can more clearly understand the difference between an image with limited dynamic range and the original scene at the same exposure time. The higher dynamic range image fully preserves the details in both high-brightness and low-brightness areas. In contrast, a limited dynamic range image cannot preserve image details in the high-brightness area and the low-brightness area at the same time. In order to record more image details, people usually set the camera to retain the middle dynamic range, such as Figure 2b or Figure 2c. Another factor that can affect the dynamic range of an image is exposure time. We take the 145th scene in the Tursen dataset [38] as an example, as illustrated in Figure 3.

Figure 2.

The illustration of dynamic range.

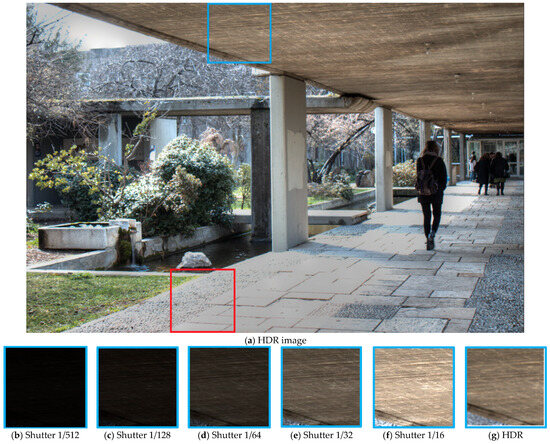

Figure 3.

The illustration of a high dynamic range image.

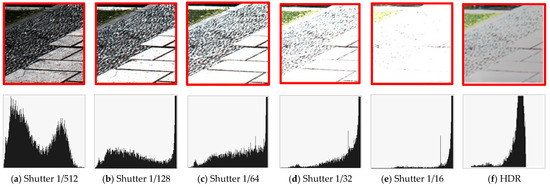

Figure 3a is the HDR image generated with the free version of Photomatix [39] by fusing LDR images with shutter times of 1/512, 1/128, 1/64, 1/32, and 1/16. Figure 3b–f and Figure 3h–l are taken from the LDR images with shutter times of 1/512, 1/128, 1/64, 1/32, and 1/16, respectively. Figure 3g and Figure 3m are taken from the fused HDR image. By observing Figure 3b–m, we can easily understand that different exposure times produce different dynamic ranges and affect the preservation of image details [40,41,42]. Generally speaking, a longer exposure time results in a brighter overall image, and more image details in the dark areas are retained. Conversely, when the exposure time is shorter, the overall image is darker, and more image details in the bright areas are retained. The histograms of Figure 3b–g are shown in Figure 4a–f, respectively, and the histograms of Figure 3h–m are shown in Figure 5a–f, respectively.

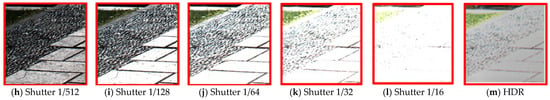

Figure 4.

Images and histograms of the low light area at different shutter speeds.

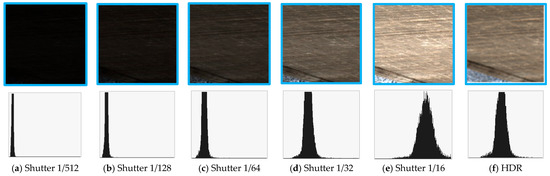

Figure 5.

Images and histograms of the high light area at different shutter speeds.

Figure 4a–e illustrates an area with relatively low brightness in the HDR image of Figure 3. Since the shutter time of Figure 4a is shorter than that of Figure 4e, Figure 4a can hardly retain any image details. We call this phenomenon low-light saturation. The histogram of Figure 4a shows that all pixels are close to the minimum brightness. Low-brightness LDR images cannot retain low-brightness image details. In other words, the low-brightness areas in LDR images are prone to detail distortion.

Figure 5a–e illustrates an area with relatively high brightness in Figure 3a. Since the shutter time of Figure 5e is longer than that of Figure 5a, Figure 5e can hardly preserve any image details. We call this phenomenon highlight saturation. The histogram of Figure 5e shows that all pixels are close to the maximum brightness. High-brightness LDR images cannot retain high-brightness image details. In other words, the high-brightness areas in LDR images are prone to detail distortion. Comparing the histograms of Figure 4a,f and of Figure 5a,f shows that the HDR image retains more image details in both the high-brightness area and the low-brightness area than an LDR image.
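Low-light and highlight saturation can be quantified as the fraction of pixels that pile up at either end of the histogram. The sketch below is a toy model only: the function `saturation_fractions`, the thresholds 0.02 and 0.98, and the radiance values are hypothetical and are not taken from the Tursen dataset.

```python
def saturation_fractions(radiance, shutter_time, low=0.02, high=0.98):
    """Return (dark, bright): fractions of pixels saturated near black and near white."""
    # Toy exposure model: pixel value = radiance * shutter time, clipped to [0, 1].
    exposed = [min(max(r * shutter_time, 0.0), 1.0) for r in radiance]
    n = len(exposed)
    dark = sum(1 for v in exposed if v <= low) / n
    bright = sum(1 for v in exposed if v >= high) / n
    return dark, bright


scene = [0.5, 2.0, 8.0, 64.0, 256.0]  # hypothetical linear radiance samples
short_exposure = saturation_fractions(scene, 1 / 512)
long_exposure = saturation_fractions(scene, 1 / 16)
```

In this toy scene the short exposure saturates only in the dark end and the long exposure only in the bright end, mirroring the histograms discussed above.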

Based on the above, scholars intuitively fuse multi-exposure LDR images to reconstruct HDR images. The advantage is that the reconstructed HDR image contains image detail information from areas of different brightness. Establishing HDR image databases is very important, because public datasets provide an objective benchmark for evaluating the performance of HDR image reconstruction. To provide such a benchmark, scholars have successively established HDR datasets. As of 2024, the well-known datasets are the HDREye dataset [43], Tursen dataset [38], Kalantari dataset [10], Prabhakar dataset [44], Liu dataset [36], and NTIRE 2021 dataset [45]. Some datasets contain single-exposure images, and some contain multi-exposure images. Some datasets have ground truth, and some do not. Some datasets consist of real images, and some of synthetic images. The differences between these well-known datasets are summarized in Table 1.

Table 1.

Comparison of datasets.

The fusion of multi-exposure LDR images to generate HDR images is prone to artifacts. Artifacts are caused by the misalignment of moving objects across the LDR images taken at different exposures. This makes HDR image reconstruction challenging. Over time, alignment-based, rejection-based, patch-based, and CNN-based methods have been proposed in sequence to improve HDR image reconstruction.

2.2. Alignment-Based Method

Before 2010, many scholars used alignment-based methods to overcome the artifact problem [2,3,4]. In 2000, Bogoni aligned the source images with the reference image by estimating flow vectors [2]. In 2003, Kang et al. aligned the source images in the luminance domain by using optical flow to reduce ghosting artifacts [3]. In 2007, Tomaszewska et al. performed global alignment by using SIFT features [4]. These methods are prone to alignment errors when large movements or occlusions occur.

2.3. Rejection-Based Method

From 2010 to 2015, many scholars used rejection-based methods to overcome the artifact problem [5,6,7]. In 2011, Zhang et al. used quality measurement on image gradients to obtain a motion weighting map [7]. In 2014 and 2015, respectively, Lee et al. [5] and Oh et al. [6] detected motion regions by rank minimization. These methods lose the image content of the moving object after alignment.

2.4. Patch-Based Method

During the same period, from 2010 to 2015, another group of scholars used patch-based methods to reconstruct HDR images [8,9]. In 2012, Sen et al. [8] optimized alignment and reconstruction simultaneously by proposing a patch-based energy minimization approach. In 2013, Hu et al. [9] produced aligned images by utilizing a patch-match mechanism. These methods require a large amount of computation, which limits their practicality.

2.5. CNN-Based Method

After 2015, due to the rapid development of neural networks, many scholars used CNN-based methods to reconstruct HDR images [10,11,12,13,14,15,16,17,18,19,20,21]. In 2017, Kalantari et al. aligned LDR images with optical flow and then used a CNN to fuse them [10]. In 2018, Wu et al. aligned the camera motion by homography and used a CNN to reconstruct HDR images [11]. In 2019, Yan et al. suppressed motion and saturation by proposing an attention mechanism [12]. In 2020, Yan et al. relaxed the constraint of the local receptive field with a nonlocal block to merge HDR images globally [13]. In 2021, Niu et al. proposed a generative adversarial network, named HDR-GAN, to recover missing content [14]. In 2021, Ye et al. generated ghost-free images with a proposed multi-step feature fusion [15]. In 2021, Liu et al. removed ghosts by utilizing a pyramid, cascading, and deformable alignment subnetwork [16]. These methods require a huge number of labeled samples, which are not easy to prepare.

2.6. Deblurring

Although in recent years many scholars have made great progress in reconstructing HDR images and overcoming artifacts by using deep learning methods [10,11,12,13,14,15,16], details easily disappear and the image becomes blurred if smoothing is used to suppress artifacts when fusing multi-exposure LDR images into an HDR image. Scholars realized that solving the problem of reconstructing HDR images requires not only removing artifacts but also dealing with the blur of the reconstructed HDR images [46,47,48,49,50]. In 2018, Yang et al. reconstructed the missing details of an input LDR image in the HDR domain and performed tone mapping on the predicted HDR material to produce an output image with restored details [46]. In 2018, Vasu et al. produced a high-quality image by harnessing the complementarity of sensor exposure and blur [47]. In 2022, Cogalan et al. provided an approach that improves both the quality and the dynamic range of reconstructed HDR video by capturing a mid exposure as a spatial and temporal reference for denoising, deblurring, and upsampling tasks and by merging learned optical flow to handle saturated or disoccluded image regions [48]. In 2023, Kim et al. addressed mosaiced multiple exposures and registered the spatial displacements of exposures and colors with a feature-extraction module and a multiscale transformer, respectively, to achieve high-quality HDR images [49]. In 2023, Conde et al. proposed LPIENet to handle noise, blur, and overexposure for perceptual image enhancement [50].

2.7. Super-Resolution

An HDR image fused from multi-exposure images is composed of LDR images: the LDR images contribute pixel values, which are then fused. Fusion inevitably distorts the pixel values in the resulting image. In order to restore the clarity of the image, many scholars have studied how to improve the resolution of an image from a single image. In 2015, Dong et al. proposed a method to learn an end-to-end mapping between images of different resolutions and extended the network to process three color channels simultaneously, improving reconstruction quality [22]. In 2016, Kim et al. used a deep convolutional network that exploits information over a large image region by cascading filters multiple times to achieve high-precision single-image super-resolution [23]. In 2016, Shi et al. proposed a CNN architecture that introduced an efficient sub-pixel convolution layer to learn a series of upsampling filters that replace the bicubic filter and upscale the low-resolution input image to the high-resolution space [24]. In 2018, Wang et al. proposed the enhanced SRGAN (ESRGAN) to obtain better visual quality and more realistic and natural textures by improving the network architecture, adversarial loss, and perceptual loss [25]. In 2018, Zhang et al. extracted local features through densely connected convolutional layers and then used global feature fusion to learn global hierarchical features to achieve image super-resolution [26]. In 2019, Qiu et al. proposed an embedded block residual network (EBRN) in which different modules recover texture at different frequencies to achieve image super-resolution [27]. In 2021, Wang et al. proposed Real-ESRGAN, which takes into account the common ringing and overshoot artifacts, introduces a modeling process from ESRGAN to simulate complex degradation, and extends it to practical restoration applications [28]. In 2021, Ayazoglu proposed a hardware limitation-aware real-time super-resolution network (XLSR) that uses Clipped ReLU in the last layer of the network and maintains the quality of the super-resolved image with less running time [29].

We have briefly introduced the methods proposed for overcoming artifacts and loss of details when reconstructing HDR images, together with their shortcomings. In the Methods section, we introduce the MDHDR solution and the evaluation methods used in this paper step by step. In the Results section, we compare the MDHDR solution with various state-of-the-art models for reconstructing HDR images and show experimental results on artifacts, detail loss, and image blur. In the Discussion section, we explain the performance of our model according to the experimental results. Finally, in the Conclusions section, we summarize the contribution of the proposed model to the reconstruction of HDR images.

3. Methods

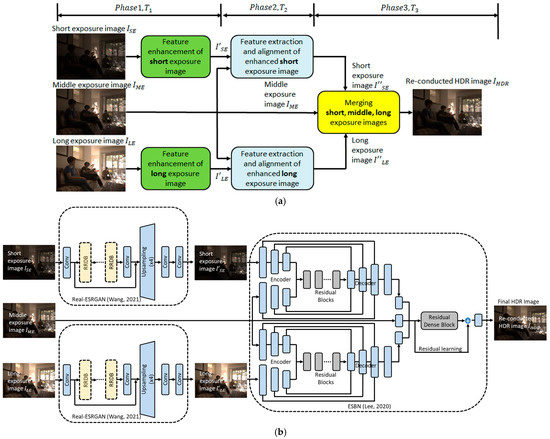

Figure 6.

The architecture of MDHDR: (a) the concept of MDHDR; (b) the blocks of MDHDR [28,32].

3.1. Enhance Image Details

We use the network proposed in paper [28], with the long-exposure and short-exposure LDR images as input images. We directly use the pre-trained model provided by the authors of paper [28]. After processing by the super-resolution network, we obtain a detail-enhanced long-exposure LDR image and a detail-enhanced short-exposure LDR image.

3.2. Capture Highlight Details of Short-Exposure LDR Image

We use the alignment network of paper [32], trained with the loss function described in Section 3.6. The input images are the short-exposure LDR image and the medium-exposure LDR image. An encoder network is used to capture highlight details in the short-exposure LDR image. The difference between the short-exposure LDR image and the medium-exposure LDR image is minimized through nine layers of residual blocks. Similar to the alignment network in paper [32], a three-layer decoder network is used after the residual network to decode the feature data extracted from the short-exposure image. After decoding, we obtain a medium-exposure LDR image that has the highlight area details of the short-exposure LDR image and is aligned with the short-exposure image.

3.3. Capture Low-Light Details of Long-Exposure LDR Image

We use the alignment network of paper [32], trained with the loss function described in Section 3.6. The input images are the long-exposure LDR image and the medium-exposure LDR image. An encoder network is used to capture low-light details in the long-exposure LDR image. The difference between the long-exposure LDR image and the medium-exposure LDR image is minimized through nine layers of residual blocks. Similar to the alignment network in paper [32], a three-layer decoder network is used after the residual network to decode the feature data extracted from the long-exposure image. After decoding, we obtain a medium-exposure LDR image that has the low-light area details of the long-exposure LDR image and is aligned with the long-exposure image.

3.4. Fusion Images

Referring to the training strategy in paper [32], we use the loss function to minimize the difference between the ground truth HDR image and the tone-mapped HDR image in the LDR domain. The fusion network has three input images. The first is the medium-exposure LDR image aligned with the enhanced long-exposure image. The second is the medium-exposure LDR image aligned with the enhanced short-exposure image. The third is the original medium-exposure LDR image. The output of the fusion network is the tone-mapped HDR image reconstructed from these three inputs. This HDR image has enhanced details in both low-brightness and high-brightness areas. As mentioned in [32], the differentiable tone-mapper, the μ-law, is defined as follows, with μ set to 5000:

$$T(I) = \frac{\log(1 + \mu I)}{\log(1 + \mu)}$$

where $I$ is the HDR image in the linear domain and $T(I)$ is the tone-mapped image.
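The μ-law tone mapper can be sketched in a few lines. This is a minimal sketch, assuming the commonly used form log(1 + μI) / log(1 + μ) applied to linear HDR values normalized to [0, 1]; the function name `mu_law` is chosen here for illustration.

```python
import math

MU = 5000.0  # compression parameter stated in the text


def mu_law(value, mu=MU):
    """Compress one linear HDR value in [0, 1] into the tone-mapped domain."""
    return math.log(1.0 + mu * value) / math.log(1.0 + mu)


# Dark values are expanded strongly: 1% of full scale maps to roughly 0.46.
samples = [mu_law(v) for v in (0.0, 0.01, 0.1, 1.0)]
```

Because the mapping is smooth and monotonic, a loss computed on tone-mapped values remains differentiable while giving dark regions more weight than a loss on linear values would.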

3.5. Evaluation Metrics

The evaluation metrics used in this paper include PSNR-μ, SSIM-μ, PSNR-L, SSIM-L, and HDR-VDP-2 [51]. PSNR-μ, SSIM-μ, PSNR-L, and SSIM-L show the quality of the HDR image reconstructed after alignment of the multi-exposure LDR images. Specifically, PSNR-μ and SSIM-μ evaluate the quality of the HDR images after tone mapping with the μ-law, and PSNR-L and SSIM-L evaluate the quality of the HDR images in the linear space. HDR-VDP-2 [51] evaluates the difference, as perceived by the human eye, between the reconstructed HDR image and the ground truth image. The formula of PSNR is expressed as follows:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{\mathrm{MAX}^2}{\mathrm{MSE}}\right)$$

where $\mathrm{MAX}$ is the maximum possible pixel value of the image and $\mathrm{MSE}$ is the mean squared error, expressed as follows:

$$\mathrm{MSE} = \frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W}\left(\hat{I}(i,j) - I(i,j)\right)^2$$

where $H$ and $W$ represent the height and width of the image, respectively, and $\hat{I}$ and $I$ represent the reconstructed HDR image and the ground truth HDR image, respectively. The SSIM is expressed as follows:

$$\mathrm{SSIM}(x, y) = \frac{(2\mu_x\mu_y + c_1)(2\sigma_{xy} + c_2)}{(\mu_x^2 + \mu_y^2 + c_1)(\sigma_x^2 + \sigma_y^2 + c_2)}$$

where $x$ and $y$ represent the reconstructed HDR image and the ground truth HDR image, $\mu_x$ and $\mu_y$ represent the mean values of $x$ and $y$, $\sigma_x^2$ and $\sigma_y^2$ represent the variances of $x$ and $y$, and $\sigma_{xy}$ represents the covariance of $x$ and $y$, respectively. The constants $c_1$ and $c_2$ are small positive values that stabilize the division.
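The metrics of this section can be sketched as follows, assuming the standard definitions PSNR = 10 log10(MAX² / MSE) and SSIM with the conventional constants c1 = (0.01 MAX)² and c2 = (0.03 MAX)². For brevity the SSIM here treats the whole image as a single window, whereas practical implementations average over local windows; all function names are chosen for illustration, and images are nested lists of values in [0, 1].

```python
import math


def mse(x, y):
    """Mean squared error between two images of equal size."""
    n = len(x) * len(x[0])
    return sum((a - b) ** 2 for rx, ry in zip(x, y) for a, b in zip(rx, ry)) / n


def psnr(x, y, max_value=1.0):
    """PSNR in dB; this is PSNR-L when x and y are linear HDR images."""
    err = mse(x, y)
    return math.inf if err == 0 else 10.0 * math.log10(max_value ** 2 / err)


def mu_law(value, mu=5000.0):
    return math.log(1.0 + mu * value) / math.log(1.0 + mu)


def psnr_mu(x, y):
    """PSNR-mu: PSNR computed after mu-law tone mapping of both images."""
    def tone_map(img):
        return [[mu_law(v) for v in row] for row in img]
    return psnr(tone_map(x), tone_map(y))


def global_ssim(x, y, max_value=1.0):
    """Single-window SSIM (a simplification of the windowed metric)."""
    fx = [v for row in x for v in row]
    fy = [v for row in y for v in row]
    n = len(fx)
    mx, my = sum(fx) / n, sum(fy) / n
    vx = sum((v - mx) ** 2 for v in fx) / n
    vy = sum((v - my) ** 2 for v in fy) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(fx, fy)) / n
    c1, c2 = (0.01 * max_value) ** 2, (0.03 * max_value) ** 2
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2))


truth = [[0.0, 0.5], [0.5, 1.0]]
recon = [[0.1, 0.5], [0.5, 1.0]]  # one dark pixel is off by 0.1
scores = (psnr(recon, truth), psnr_mu(recon, truth), global_ssim(recon, truth))
```

In this toy case the same small error in a dark pixel is penalized far more by PSNR-μ than by PSNR-L, because the μ-law expands dark values before the error is measured.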

3.6. Loss Functions

In this paper, we follow the paper [32] to use loss and loss as the loss functions for training alignment network and merging network, respectively. The and are expressed as follows:

where and represent the trained value and ground truth value, respectively.
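As an illustration of pixel-wise training losses, the sketch below computes the mean absolute error (often called the L1 loss) and the mean squared error (often called the L2 loss) between a predicted image and a ground truth image. That these are the two losses used here, and which one trains which network, is an assumption of this sketch; the function names are hypothetical.

```python
def _flatten(img):
    """Flatten a nested-list image into a single list of pixel values."""
    return [v for row in img for v in row]


def l1_loss(pred, target):
    """Mean absolute error between prediction and ground truth (assumed L1 form)."""
    p, t = _flatten(pred), _flatten(target)
    return sum(abs(a - b) for a, b in zip(p, t)) / len(p)


def l2_loss(pred, target):
    """Mean squared error between prediction and ground truth (assumed L2 form)."""
    p, t = _flatten(pred), _flatten(target)
    return sum((a - b) ** 2 for a, b in zip(p, t)) / len(p)


pred = [[0.0, 1.0]]
target = [[0.5, 0.5]]
losses = (l1_loss(pred, target), l2_loss(pred, target))
```

The absolute error grows linearly with the deviation, while the squared error penalizes large deviations more heavily, which is the usual reason for choosing one over the other.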

4. Results

4.1. Qualitative

To validate our hypotheses, we conduct qualitative tests on MDHDR and other state-of-the-art networks. In the qualitative comparison, we use different datasets, such as Kalantari dataset [10], Tursen dataset [38], and Prabhakar dataset [44], to compare the HDR images generated by MDHDR and other state-of-the-art models in terms of image alignment and image details.

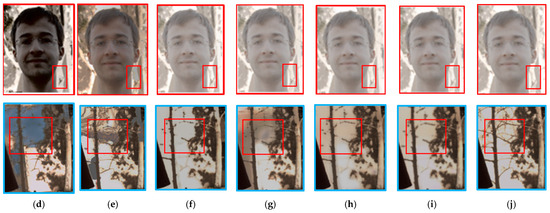

In Figure 7, we focus on the foreground and background of the reconstructed HDR image. In the foreground, all models align the LDR images well to generate HDR images. However, in the background, the models of Sen [8], Kalantari [10], and Niu [14] show serious artifacts. In addition, in the area of tree shadows, the HDR images reconstructed by Wu [11] and our MDHDR retain more image details and show no serious ghosting artifacts.

Figure 7.

Results of various models on HDR images reconstructed by using the Kalantari dataset [10]: (a) the patch of input multi-exposure LDR images; (b) the patch of input multi-exposure LDR images; (c) the ground truth HDR image; (d) result of Sen [8] model; (e) result of Kalantari [10] model; (f) result of Wu [11] model; (g) result of Niu [14] model; (h) result of Lee [32] model; (i) result of Ours; (j) the ground truth HDR image.

Because the Tursen dataset has no ground truth HDR image, we use the free version of the Photomatix tool to generate the tone-mapped HDR image that serves as HDR GT in Figure 8 [39]. In the high-brightness background, the Sen [8] and Kalantari [10] models produce obvious artifacts. Like the Lee [32] model, our MDHDR fuses images mainly based on the medium-exposure image component, so the wall and the tree have similar colors, but our result has more details than that of the Lee [32] model. In the low-brightness foreground of the reconstructed HDR image, most models perform well in alignment, even though the multi-exposure LDR images have different poses. In the medium-brightness foreground of the reconstructed HDR image, the Sen [8] and Kalantari [10] models produce artifacts, while the other models reconstruct the HDR image well. It is worth noting that, compared with the other models, our MDHDR reconstructs an HDR image that is clearer and has more details.

Figure 8.

Results of various models on HDR images reconstructed by using the Tursen dataset [38]: (a) the patch of input multi-exposure LDR images; (b) the patch of input multi-exposure LDR images; (c) the ground truth HDR image; (d) result of Sen [8] model; (e) result of Kalantari [10] model; (f) result of Wu [11] model; (g) result of Niu [14] model; (h) result of Lee [32] model; (i) result of Ours; (j) the ground truth HDR image.

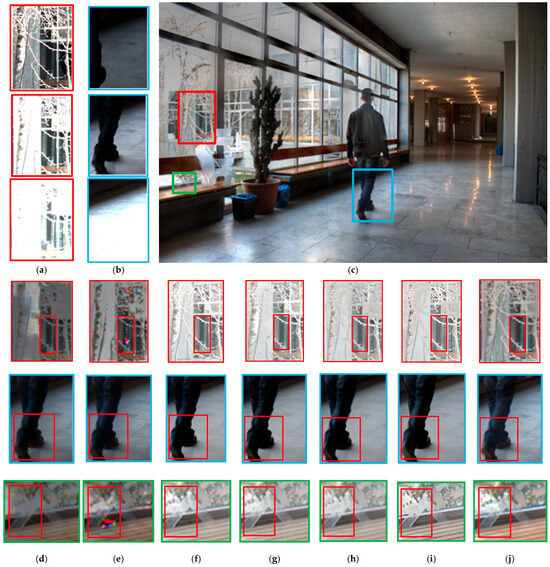

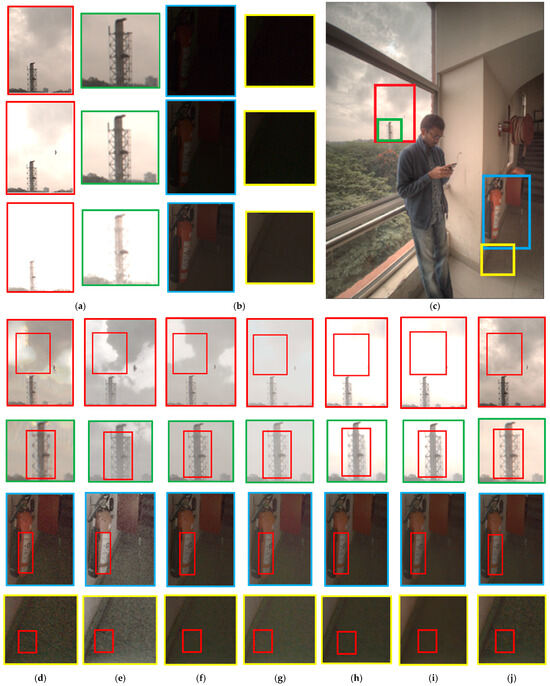

In Figure 9, Sen [8] and Kalantari [10] produce artifacts in the high-brightness background. The Wu [11] model retains the details of image objects with lower brightness but loses the details in the high-brightness area. The Niu [14] model loses the low-contrast details of the image. Like the Lee [32] model, our MDHDR fuses images mainly based on the medium-exposure image component, so the reconstructed HDR image is similar to the medium-exposure LDR image, but our MDHDR retains more details in the high-brightness, high-contrast area than the Lee [32] model. In Figure 9, all models perform well in alignment in low-brightness areas. It is worth noting that our MDHDR significantly reduces noise in low-light areas. According to Figure 7, Figure 8 and Figure 9, the HDR image reconstructed by our proposed MDHDR not only has no ghosts and retains the image details in the highlight and low-light areas but also reduces the image noise in the low-light area and has better clarity than the ground truth image.

Figure 9.

Results of various models on HDR images reconstructed by using the Prabhakar dataset [44]: (a) the patch of input multi-exposure LDR images; (b) the patch of input multi-exposure LDR images; (c) the ground truth HDR image; (d) result of Sen [8] model; (e) result of Kalantari [10] model; (f) result of Wu [11] model; (g) result of Niu [14] model; (h) result of Lee [32] model; (i) result of Ours; (j) the ground truth HDR image.

4.2. Quantitative

To be objective, we conduct quantitative tests on MDHDR and other advanced models after the qualitative experiments. In the quantitative comparison, we use the Kalantari dataset [10] to test all models and calculate their PSNR-L, SSIM-L, PSNR-μ, SSIM-μ, and HDR-VDP-2 [51]. The results of the quantitative tests are recorded in Table 2.

Table 2.

Quantitative comparison on public Kalantari datasets [10].

Table 2 focuses on PSNR-L, PSNR-μ, SSIM-L, SSIM-μ, and HDR-VDP-2. Higher values of PSNR-L, PSNR-μ, SSIM-L, and SSIM-μ mean that the reconstructed HDR image is more similar to the ground truth. A higher HDR-VDP-2 value means that the visual quality of the reconstructed HDR image is better. In general, the main reason for a decrease in PSNR-L, PSNR-μ, SSIM-L, and SSIM-μ is image artifacts and loss of details, and the reason for a decrease in the HDR-VDP-2 value is that the image becomes blurred. Since the architecture of our proposed solution is based on Lee [32], we directly compare the results of our solution with those of Lee [32]. Table 2 shows that our solution has lower PSNR-L, PSNR-μ, SSIM-L, and SSIM-μ than Lee [32], which means that the HDR image reconstructed by our solution differs more from the ground truth. Table 2 also shows that our solution achieves a higher HDR-VDP-2 score than Lee [32], which means that the HDR image reconstructed by our solution is better in visual perception.

In addition, the results of the qualitative experiments in Figure 7, Figure 8 and Figure 9 confirm that the HDR images reconstructed by our solution do not show obvious artifacts or loss of details and have better clarity than the ground truth. Therefore, our interpretation of the decrease in PSNR-L, PSNR-μ, SSIM-L, and SSIM-μ together with the increase in HDR-VDP-2 is that the improvement in image clarity leads to a gap with the ground truth. This result is consistent with our expectations. In other words, Table 2 confirms that our proposed HDR image reconstruction solution can avoid artifacts, detail loss, and image blur simultaneously.

We also list the running time and parameter size of the models, as shown in Table 3. Our testing environment uses the Windows 10 OS, an Intel Core i5-4570 CPU, and one GTX 1070 Ti GPU. The codes of Sen [8] and Kalantari [10] were run on the CPU; the others were run on the GPU.

Table 3.

Comparison of the average runtime on the public Kalantari dataset [10].

In Table 3, the focus is on the network size and the inference time. Our proposed solution is based on Lee [32] and super-resolution [28]; it yields better HDR image quality but has more parameters, at the expense of a longer inference time. The large number of parameters, Para (M), and the long inference time indicate that simplifying the super-resolution model will be the major task of our future research.

4.3. Ablation Study

In order to better understand our proposed solution, we conducted an ablation study that contains the following situations:

- Use super-resolution to only enhance long-exposure test images;

- Use super-resolution to only enhance middle-exposure test images;

- Use super-resolution to only enhance short-exposure test images.
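The three ablation settings differ only in which exposure passes through the super-resolution step. A minimal configuration sketch is given below; the helper `enhance_selected`, the dictionary keys, and the toy `enhance` function are hypothetical names used for illustration and are not part of the experimental code.

```python
def enhance_selected(images, enhance, targets):
    """Apply `enhance` only to the exposures whose names appear in `targets`."""
    return {name: enhance(img) if name in targets else img
            for name, img in images.items()}


# One entry per ablation setting: the set of exposures that are enhanced.
ABLATION_VARIANTS = {
    "long_only": {"long"},
    "medium_only": {"medium"},
    "short_only": {"short"},
}

# Toy stand-ins: integers instead of images, scaling instead of super-resolution.
images = {"short": 1, "medium": 2, "long": 3}
variant_outputs = {
    name: enhance_selected(images, lambda v: v * 10, targets)
    for name, targets in ABLATION_VARIANTS.items()
}
```

Keeping the settings in one table makes it easy to run every variant through the same downstream alignment and fusion steps.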

The screenshots of the ablation study results are shown in Figure 10. In Figure 10b–d, by comparing the clarity of the HDR images reconstructed with the enhanced long-exposure image, the enhanced medium-exposure image, and the enhanced short-exposure image, we find that the HDR image reconstructed using the enhanced medium-exposure image has higher clarity. In Figure 10e–g, by comparing the details of the HDR images reconstructed with the enhanced long-exposure image, the enhanced medium-exposure image, and the enhanced short-exposure image, we find that the HDR image reconstructed using the enhanced medium-exposure image retains fewer details.

Figure 10.

Ablation study: (a) HDR ground truth; (b) Use super-resolution to only enhance long-exposure test images; (c) Use super-resolution to only enhance middle-exposure test images; (d) Use super-resolution to only enhance short-exposure test images; (e) Use super-resolution to only enhance long-exposure test images; (f) Use super-resolution to only enhance middle-exposure test images; (g) Use super-resolution to only enhance short-exposure test images.

In Table 4, we find that the HDR image reconstructed with the enhanced medium-exposure image has lower PSNR-L, PSNR-μ, SSIM-L, and SSIM-μ. The result of the ablation study shows that, to retain more details and clarity in the reconstructed HDR image through the super-resolution network, the super-resolution network should be placed on the paths other than that of the medium-exposure image.

Table 4.

Ablation study results on public Kalantari datasets [10].

5. Discussion

Because they use the patch method, the Sen [8] and Kalantari [10] models are not robust in aligning multi-exposure LDR images and are likely to introduce artifacts into the reconstructed HDR images, especially when the foreground poses differ across the source LDR images. The Wu [11] model uses homography to compensate for camera motion and therefore aligns multi-exposure LDR images well. However, its reconstructed HDR images lose some image details and become slightly blurred. The HDR images reconstructed by the Niu [14] model, HDR-GAN, have slight artifacts and are also slightly blurred. The Lee [32] model aligns multi-exposure LDR images well, but its reconstructed HDR images lose details in the highlight areas because the highlights in the long-exposure LDR images are saturated.

In contrast, the HDR image reconstructed by our proposed MDHDR model has no artifacts and retains the details in highlight and low-light areas that other models tend to lose. There are two main reasons why MDHDR reconstructs an HDR image well. First, mapping the short-exposure image and the long-exposure image to the medium-exposure image separately, and then fusing them, effectively avoids the artifacts caused by differing foreground poses. Second, the corresponding details of the short-exposure and long-exposure images are enhanced in advance, which counters the blurring of the fused image. The fused HDR image can therefore retain details that are closer to the ground truth. It is worth noting that, because these details are enhanced in advance, the final reconstructed HDR image becomes smoother than the ground truth. Any difference from the ground-truth image lowers PSNR-L, PSNR-μ, SSIM-L, and SSIM-μ, even though our reconstructed HDR image has better clarity. This is consistent with our expectations and can be observed in Table 2.
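The effect of a deviation from the ground truth on the PSNR scores can be illustrated with a minimal sketch. It assumes HDR values normalized to [0, 1] and the μ-law tone-mapping curve with μ = 5000 that is common in the HDR literature; the constant, the function names, and the synthetic data are assumptions for illustration and are not taken from our evaluation code.

```python
import numpy as np

MU = 5000.0  # assumed compression constant, not taken from the paper


def mu_law(hdr, mu=MU):
    """Tone-map linear HDR values in [0, 1] with the mu-law curve."""
    return np.log1p(mu * hdr) / np.log1p(mu)


def psnr(pred, target, peak=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical inputs."""
    mse = np.mean((pred - target) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)


def psnr_l(pred, target):
    """PSNR in the linear HDR domain."""
    return psnr(pred, target)


def psnr_mu(pred, target):
    """PSNR after mu-law tone mapping of both images."""
    return psnr(mu_law(pred), mu_law(target))


# Synthetic stand-ins: a random "ground truth" and two hypothetical
# reconstructions that deviate from it by a small and a larger amount.
rng = np.random.default_rng(0)
ground_truth = rng.uniform(0.0, 1.0, size=(64, 64, 3))
deviation = rng.normal(0.0, 0.01, size=ground_truth.shape)
small_diff = np.clip(ground_truth + deviation, 0.0, 1.0)
large_diff = np.clip(ground_truth + 3.0 * deviation, 0.0, 1.0)

print("PSNR-L :", psnr_l(small_diff, ground_truth), psnr_l(large_diff, ground_truth))
print("PSNR-mu:", psnr_mu(small_diff, ground_truth), psnr_mu(large_diff, ground_truth))
```

Both scores depend only on the pixel-wise distance to the ground truth, so the reconstruction with the larger deviation scores lower regardless of whether that deviation is perceived as added detail or as degradation.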

In addition, the qualitative experiments revealed two potential limitations of our solution. First, when the over-bright area appears at the same position in both the long-exposure image and the medium-exposure image, it is difficult to restore the image details lost in that area of the long-exposure image using only the image textures of the short-exposure image. Second, when the dark area appears at the same position in both the short-exposure image and the medium-exposure image, it is difficult to restore the image details lost in that area of the short-exposure image using only the image textures of the long-exposure image.
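The two limiting cases can be made concrete with a minimal sketch that marks the pixels where both conditions hold. It assumes LDR images stored as arrays normalized to [0, 1]; the function names and the thresholds 0.95 and 0.05 are hypothetical choices for illustration and are not part of MDHDR.

```python
import numpy as np


def shared_saturation_mask(long_exp, medium_exp, threshold=0.95):
    """True where both exposures are over-bright at the same pixel."""
    long_sat = long_exp.max(axis=-1) >= threshold
    medium_sat = medium_exp.max(axis=-1) >= threshold
    return long_sat & medium_sat


def shared_dark_mask(short_exp, medium_exp, threshold=0.05):
    """True where both exposures are under-exposed at the same pixel."""
    short_dark = short_exp.max(axis=-1) <= threshold
    medium_dark = medium_exp.max(axis=-1) <= threshold
    return short_dark & medium_dark


# Tiny 2x2 RGB stand-ins for the three exposures (values are made up).
long_exp = np.full((2, 2, 3), 0.5)
long_exp[0, 0] = 1.0   # saturated in the long exposure
long_exp[0, 1] = 1.0   # saturated in the long exposure only

medium_exp = np.full((2, 2, 3), 0.4)
medium_exp[0, 0] = 0.98  # also saturated in the medium exposure
medium_exp[1, 1] = 0.02  # dark in the medium exposure

short_exp = np.full((2, 2, 3), 0.3)
short_exp[1, 0] = 0.01  # dark in the short exposure only
short_exp[1, 1] = 0.0   # dark in the short exposure

bright_mask = shared_saturation_mask(long_exp, medium_exp)
dark_mask = shared_dark_mask(short_exp, medium_exp)

print("shared over-bright fraction:", bright_mask.mean())
print("shared dark fraction:", dark_mask.mean())
```

Pixels flagged by the first mask can draw texture only from the short-exposure image, and pixels flagged by the second mask only from the long-exposure image, which is the situation described in the two limitations above.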

6. Conclusions

With the help of continuously improving deep learning models, considerable progress has been made in reconstructing HDR images by fusing multi-exposure LDR images. Many challenges remain, however, especially ghosting, blurring, noise, and detail distortion. Qualitative and quantitative comparisons with state-of-the-art models confirm that the MDHDR model proposed in this paper performs well in reconstructing HDR images from multi-exposure LDR images. The HDR image reconstructed by MDHDR has no ghosting, retains the details that are easily lost in high-brightness and low-brightness areas, and has better clarity. MDHDR therefore provides a practical and effective solution for reconstructing an HDR image from multi-exposure LDR images.

Our future research focuses on two main tasks. The first task is to adjust the network architecture to recover lost image details, based on two points: (1) using the short- and medium-exposure images to compensate for image details lost in the long-exposure image, and (2) using the long- and medium-exposure images to compensate for image details lost in the short-exposure image. The second task is to simplify the super-resolution model to reduce the number of network parameters and the inference time.

Author Contributions

Conceptualization, K.-C.H., S.-F.L. and C.-H.L.; Methodology, K.-C.H.; validation, K.-C.H.; writing—original draft preparation, K.-C.H. and S.-F.L.; writing—review and editing, K.-C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Acknowledgments

Thanks to the friends who are keen on reconstructing HDR images for their suggestions on this study. Thanks also to all scholars who provide open-source code at https://github.com/xinntao/Real-ESRGAN-ncnn-vulkan.git (accessed on 29 January 2025) and https://github.com/tkd1088/ESBN.git (accessed on 15 October 2024).

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Guo, C.; Jiang, X. Lhdr: Hdr reconstruction for legacy content using a lightweight dnn. In Proceedings of the Asian Conference on Computer Vision, Macau SAR, China, 4–8 December 2022.

- Bogoni, L. Extending dynamic range of monochrome and color images through fusion. In Proceedings of the 15th International Conference on Pattern Recognition, ICPR-2000, Barcelona, Spain, 3–7 September 2000; IEEE: Piscataway, NJ, USA, 2000.

- Kang, S.B.; Uyttendaele, M.; Winder, S.; Szeliski, R. High dynamic range video. ACM Trans. Graph. (TOG) 2003, 22, 319–325.

- Tomaszewska, A.; Mantiuk, R. Image Registration for Multi-Exposure High Dynamic Range Image Acquisition. 2007. Available online: https://scispace.com/pdf/image-registration-for-multi-exposure-high-dynamic-range-4tym59cxpm.pdf (accessed on 2 February 2025).

- Lee, C.; Li, Y.; Monga, V. Ghost-free high dynamic range imaging via rank minimization. IEEE Signal Process. Lett. 2014, 21, 1045–1049.

- Oh, T.-H.; Lee, J.-Y.; Tai, Y.-W.; Kweon, I.S. Robust high dynamic range imaging by rank minimization. IEEE Trans. Pattern Anal. Mach. Intell. 2014, 37, 1219–1232.

- Zhang, W.; Cham, W.-K. Gradient-Directed Multiexposure Composition. IEEE Trans. Image Process. 2011, 21, 2318–2323.

- Sen, P.; Kalantari, N.K.; Yaesoubi, M.; Darabi, S.; Goldman, D.B.; Shechtman, E. Robust patch-based hdr reconstruction of dynamic scenes. ACM Trans. Graph. 2012, 31, 203:1–203:11.

- Hu, J.; Gallo, O.; Pulli, K.; Sun, X. HDR deghosting: How to deal with saturation? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

- Kalantari, N.K.; Ramamoorthi, R. Deep high dynamic range imaging of dynamic scenes. ACM Trans. Graph. 2017, 36, 144:1–144:12.

- Wu, S.; Xu, J.; Tai, Y.-W.; Tang, C.-K. Deep high dynamic range imaging with large foreground motions. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018.

- Yan, Q.; Gong, D.; Shi, Q.; van den Hengel, A.; Shen, C.; Reid, I.; Zhang, Y. Attention-guided network for ghost-free high dynamic range imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019.

- Yan, Q.; Zhang, L.; Liu, Y.; Zhu, Y.; Sun, J.; Shi, Q.; Zhang, Y. Deep HDR imaging via a non-local network. IEEE Trans. Image Process. 2020, 29, 4308–4322.

- Niu, Y.; Wu, J.; Liu, W.; Guo, W.; Lau, R. Hdr-gan: Hdr image reconstruction from multi-exposed ldr images with large motions. IEEE Trans. Image Process. 2021, 30, 3885–3896.

- Ye, Q.; Xiao, J.; Lam, K.-m.; Okatani, T. Progressive and selective fusion network for high dynamic range imaging. In Proceedings of the 29th ACM International Conference on Multimedia, Chengdu, China, 20–24 October 2021.

- Liu, Z.; Lin, W.; Li, X.; Rao, Q.; Jiang, T.; Han, M.; Fan, H.; Sun, J.; Liu, S. ADNet: Attention-guided deformable convolutional network for high dynamic range imaging. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Chen, Y.; Jiang, G.; Yu, M.; Jin, C.; Xu, H.; Ho, Y.-S. HDR light field imaging of dynamic scenes: A learning-based method and a benchmark dataset. Pattern Recognit. 2024, 150, 110313.

- Guan, Y.; Xu, R.; Yao, M.; Huang, J.; Xiong, Z. EdiTor: Edge-guided transformer for ghost-free high dynamic range imaging. ACM Trans. Multimed. Comput. Commun. Appl. 2024.

- Hu, T.; Yan, Q.; Qi, Y.; Zhang, Y. Generating content for hdr deghosting from frequency view. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–21 June 2024.

- Li, H.; Yang, Z.; Zhang, Y.; Tao, D.; Yu, D. Single-image HDR reconstruction assisted ghost suppression and detail preservation network for multi-exposure HDR imaging. IEEE Trans. Comput. Imaging 2024, 10, 429–445.

- Zhu, L.; Zhou, F.; Liu, B.; Goksel, O. HDRfeat: A feature-rich network for high dynamic range image reconstruction. Pattern Recognit. Lett. 2024, 184, 148–154.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26–30 June 2016.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016.

- Wang, X.; Yu, K.; Wu, S.; Gu, J.; Liu, Y.; Dong, C.; Qiao, Y.; Change Loy, C. Esrgan: Enhanced super-resolution generative adversarial networks. In Proceedings of the European Conference on Computer Vision (ECCV) Workshops, Munich, Germany, 8–14 September 2018.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Qiu, Y.; Wang, R.; Tao, D.; Cheng, J. Embedded block residual network: A recursive restoration model for single-image super-resolution. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27–28 October 2019.

- Wang, X.; Xie, L.; Dong, C.; Shan, Y. Real-esrgan: Training real-world blind super-resolution with pure synthetic data. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

- Ayazoglu, M. Extremely lightweight quantization robust real-time single-image super resolution for mobile devices. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; Proceedings, Part III. Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

- Johnson, J.; Alahi, A.; Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part II. Springer: Berlin/Heidelberg, Germany, 2016; pp. 694–711.

- Lee, S.-H.; Chung, H.; Cho, N.I. Exposure-structure blending network for high dynamic range imaging of dynamic scenes. IEEE Access 2020, 8, 117428–117438.

- Yang, K.; Hu, T.; Dai, K.; Chen, G.; Cao, Y.; Dong, W.; Wu, P.; Zhang, Y.; Yan, Q. CRNet: A Detail-Preserving Network for Unified Image Restoration and Enhancement Task. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Seattle, WA, USA, 17–18 June 2024.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

- Karaimer, H.C.; Brown, M.S. A software platform for manipulating the camera imaging pipeline. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I. Springer: Berlin/Heidelberg, Germany, 2016; pp. 429–444.

- Liu, Y.-L.; Lai, W.-S.; Chen, Y.-S.; Kao, Y.-L.; Yang, M.-H.; Chuang, Y.-Y.; Huang, J.-B. Single-image HDR reconstruction by learning to reverse the camera pipeline. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020.

- Ramanath, R.; Snyder, W.E.; Yoo, Y.; Drew, M.S. Color image processing pipeline. IEEE Signal Process. Mag. 2005, 22, 34–43.

- Tursun, O.T.; Akyüz, A.O.; Erdem, A.; Erdem, E. An objective deghosting quality metric for HDR images. In Computer Graphics Forum; Wiley Online Library: Hoboken, NJ, USA, 2016.

- Try Photomatix Pro. Available online: https://www.hdrsoft.com/download/photomatix-pro.html (accessed on 23 March 2025).

- Boitard, R.; Pourazad, M.T.; Nasiopoulos, P. High dynamic range versus standard dynamic range compression efficiency. In Proceedings of the 2016 Digital Media Industry & Academic Forum (DMIAF), Santorini, Greece, 4–6 July 2016; IEEE: Piscataway, NJ, USA, 2016.

- BT.709-6 (06/2015); Recommendation, Parameter Values for the HDTV Standards for Production and International Programme Exchange. ITU: Geneva, Switzerland, 2002.

- ITU-R BT.2020-1 (06/2014); Parameter Values for Ultra-High Definition Television Systems for Production and International Programme Exchange. ITU-T, Bt. 2020; ITU: Geneva, Switzerland, 2012.

- Nemoto, H.; Korshunov, P.; Hanhart, P.; Ebrahimi, T. Visual attention in LDR and HDR images. In Proceedings of the 9th International Workshop on Video Processing and Quality Metrics for Consumer Electronics (VPQM), Chandler, AZ, USA, 5–6 February 2015.

- Prabhakar, K.R.; Arora, R.; Swaminathan, A.; Singh, K.P.; Babu, R.V. A fast, scalable, and reliable deghosting method for extreme exposure fusion. In Proceedings of the 2019 IEEE International Conference on Computational Photography (ICCP), Tokyo, Japan, 5–17 May 2019; IEEE: Piscataway, NJ, USA.

- Pérez-Pellitero, E.; Catley-Chandar, S.; Leonardis, A.; Timofte, R. NTIRE 2021 challenge on high dynamic range imaging: Dataset, methods and results. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021.

- Yang, X.; Xu, K.; Song, Y.; Zhang, Q.; Wei, X.; Lau, R.W. Image correction via deep reciprocating HDR transformation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

- Vasu, S.; Shenoi, A.; Rajagopalan, A. Joint hdr and super-resolution imaging in motion blur. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; IEEE: Piscataway, NJ, USA.

- Cogalan, U.; Bemana, M.; Myszkowski, K.; Seidel, H.-P.; Ritschel, T. Learning HDR video reconstruction for dual-exposure sensors with temporally-alternating exposures. Comput. Graph. 2022, 105, 57–72.

- Kim, J.; Kim, M.H. Joint demosaicing and deghosting of time-varying exposures for single-shot hdr imaging. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023.

- Conde, M.V.; Vasluianu, F.; Vazquez-Corral, J.; Timofte, R. Perceptual image enhancement for smartphone real-time applications. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 2–7 January 2023.

- Mantiuk, R.; Kim, K.J.; Rempel, A.G.; Heidrich, W. HDR-VDP-2: A calibrated visual metric for visibility and quality predictions in all luminance conditions. ACM Trans. Graph. (TOG) 2011, 30, 1–14.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).