Comparison of ECG Between Gameplay and Seated Rest: Machine Learning-Based Classification

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants

2.2. Experimental Protocol

2.3. ECG Data Collection and HRV Analysis

- Mean RR interval (ms): The average interval between consecutive R-peaks; it varies inversely with mean heart rate (heart rate in bpm ≈ 60,000 / mean RR in ms).

- SDRR (ms): The standard deviation of RR intervals, reflecting overall HRV magnitude.

- VLF (very-low-frequency power, ln ms², 0.003–0.04 Hz): Associated with long-term autonomic regulation and possibly thermoregulatory mechanisms.

- LF (low-frequency power, ln ms², 0.04–0.15 Hz): Represents a combination of sympathetic and parasympathetic nervous system activity.

- HF (high-frequency power, ln ms², 0.15–0.40 Hz): Primarily reflects parasympathetic (vagal) activity and respiratory influences.

- LF/HF ratio: An indicator of sympathovagal balance, with higher values suggesting increased sympathetic dominance.

- HF peak frequency (Hz): The dominant frequency within the HF band, associated with respiratory modulation of heart rate.
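The indices above can be sketched in Python as follows. This is a minimal illustration, not the authors' documented pipeline: it assumes an artifact-free RR series in milliseconds, resamples it to an evenly spaced 4 Hz tachogram with a cubic spline, and estimates the spectrum with a single Hann-windowed Welch segment; the resampling rate, interpolation method, SD convention, and spectral estimator are all assumptions. Band powers are integrated in ms² and then natural-log transformed, while LF/HF is taken on the linear powers. Note that a 5-min segment resolves frequencies only down to about 1/300 s ≈ 0.0033 Hz, so the VLF estimate rests on very few spectral bins.

```python
import numpy as np
from scipy.interpolate import CubicSpline
from scipy.signal import welch

# Frequency bands (Hz) as defined in the list above
BANDS = {"VLF": (0.003, 0.04), "LF": (0.04, 0.15), "HF": (0.15, 0.40)}


def hrv_indices(rr_ms, fs=4.0):
    """Illustrative HRV indices for one RR segment (RR intervals in ms).

    Assumptions: artifact-free input, cubic-spline resampling to `fs` Hz,
    and a single Hann-windowed Welch segment spanning the whole record.
    """
    rr = np.asarray(rr_ms, dtype=float)
    out = {"mean_rr_ms": rr.mean(), "sdrr_ms": rr.std(ddof=1)}  # sample SD (assumption)

    # Evenly resampled tachogram: beat end times (s) -> RR value (ms)
    t = np.cumsum(rr) / 1000.0
    grid = np.arange(t[0], t[-1], 1.0 / fs)
    tachogram = CubicSpline(t, rr)(grid)
    tachogram -= tachogram.mean()

    # Power spectral density in ms^2/Hz
    f, psd = welch(tachogram, fs=fs, window="hann", nperseg=len(tachogram))
    df = f[1] - f[0]

    power = {}
    for name, (lo, hi) in BANDS.items():
        in_band = (f >= lo) & (f < hi)
        power[name] = psd[in_band].sum() * df  # band power in ms^2
        out[f"{name}_ln_ms2"] = np.log(power[name])  # natural log, as in the tables

    out["lf_hf"] = power["LF"] / power["HF"]  # ratio of linear (not log) powers
    hf = (f >= BANDS["HF"][0]) & (f < BANDS["HF"][1])
    out["hf_peak_hz"] = f[hf][np.argmax(psd[hf])]  # dominant HF frequency
    return out


# Synthetic 5-min RR series: 800 ms baseline plus 0.1 Hz (LF) and 0.25 Hz (HF) oscillations
rr_demo, t_now = [], 0.0
while t_now < 300.0:
    rr_i = 800 + 30 * np.sin(2 * np.pi * 0.10 * t_now) + 40 * np.sin(2 * np.pi * 0.25 * t_now)
    rr_demo.append(rr_i)
    t_now += rr_i / 1000.0

result = hrv_indices(rr_demo)
print({k: round(float(v), 3) for k, v in result.items()})
```

With these synthetic oscillations (amplitudes 30 ms and 40 ms), the LF/HF ratio should come out near (30/40)² ≈ 0.56 and the HF peak near 0.25 Hz.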

2.4. Machine Learning Classification

- Logistic Regression (LGR): A linear model for binary classification that outputs class-membership probabilities.

- Random Forest (RF): An ensemble method that trains many decision trees on bootstrap samples and aggregates their predictions (majority vote or averaged class probabilities).

- XGBoost (XGB): A regularized gradient-boosting implementation that builds decision trees sequentially and performs well on structured (tabular) data.

- One-Class SVM (OCS): A support vector machine variant that learns a boundary enclosing a single class; samples falling outside the boundary are treated as outliers.

- Isolation Forest (ILF): An unsupervised anomaly-detection algorithm that isolates samples with random tree splits; anomalous samples tend to be isolated in fewer splits.

- Local Outlier Factor (LOF): A density-based anomaly detection algorithm that compares local densities of data points.
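A sketch of how the six models could be instantiated with scikit-learn (and the separate xgboost package, when it is installed). The hyperparameters, the feature scaling, and the choice to fit the three anomaly detectors (OCS, ILF, LOF) on rest samples only, treating detected outliers as gaming, are illustrative assumptions rather than the study's settings, and the feature matrix is synthetic. If xgboost is unavailable, scikit-learn's `GradientBoostingClassifier` is substituted; it is a different gradient-boosting implementation, not XGBoost itself.

```python
import numpy as np
from sklearn.ensemble import IsolationForest, RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.neighbors import LocalOutlierFactor
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import OneClassSVM


def make_boosted():
    """XGBoost if installed; otherwise scikit-learn's gradient boosting as a stand-in."""
    try:
        from xgboost import XGBClassifier
        return XGBClassifier(n_estimators=200, max_depth=3)
    except ImportError:
        from sklearn.ensemble import GradientBoostingClassifier
        return GradientBoostingClassifier(random_state=0)


def build_models():
    # Supervised models: trained on both classes (1 = gaming, 0 = rest)
    supervised = {
        "LGR": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
        "RF": RandomForestClassifier(n_estimators=200, random_state=0),
        "XGB": make_boosted(),
    }
    # Anomaly detectors: trained on rest samples only (assumption)
    one_class = {
        "OCS": make_pipeline(StandardScaler(), OneClassSVM(nu=0.1, gamma="scale")),
        "ILF": IsolationForest(random_state=0),
        "LOF": make_pipeline(StandardScaler(), LocalOutlierFactor(novelty=True)),
    }
    return supervised, one_class


def predict_gaming(detector, X):
    """Map a detector's +1 (inlier) / -1 (outlier) output to 0 = rest, 1 = gaming."""
    return (detector.predict(X) == -1).astype(int)


rng = np.random.default_rng(0)


def synthetic_hrv(n):
    """Hypothetical stand-in for 7 HRV features: rest ~ N(0, 1), gaming ~ N(2, 1)."""
    X_rest = rng.normal(0.0, 1.0, size=(n, 7))
    X_game = rng.normal(2.0, 1.0, size=(n, 7))
    y = np.r_[np.zeros(n, dtype=int), np.ones(n, dtype=int)]
    return np.vstack([X_rest, X_game]), y, X_rest


X_train, y_train, X_train_rest = synthetic_hrv(60)
X_test, y_test, _ = synthetic_hrv(60)

supervised, one_class = build_models()
preds = {}
for name, model in supervised.items():
    model.fit(X_train, y_train)
    preds[name] = np.asarray(model.predict(X_test)).astype(int)
for name, detector in one_class.items():
    detector.fit(X_train_rest)
    preds[name] = predict_gaming(detector, X_test)

accuracy = {name: float((p == y_test).mean()) for name, p in preds.items()}
print(accuracy)
```

Accuracy is used here only as a quick sanity check on held-out synthetic data; the metrics defined in Section 2.5 would be computed from the same `preds`.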

2.5. Dataset Preparation and Model Evaluation

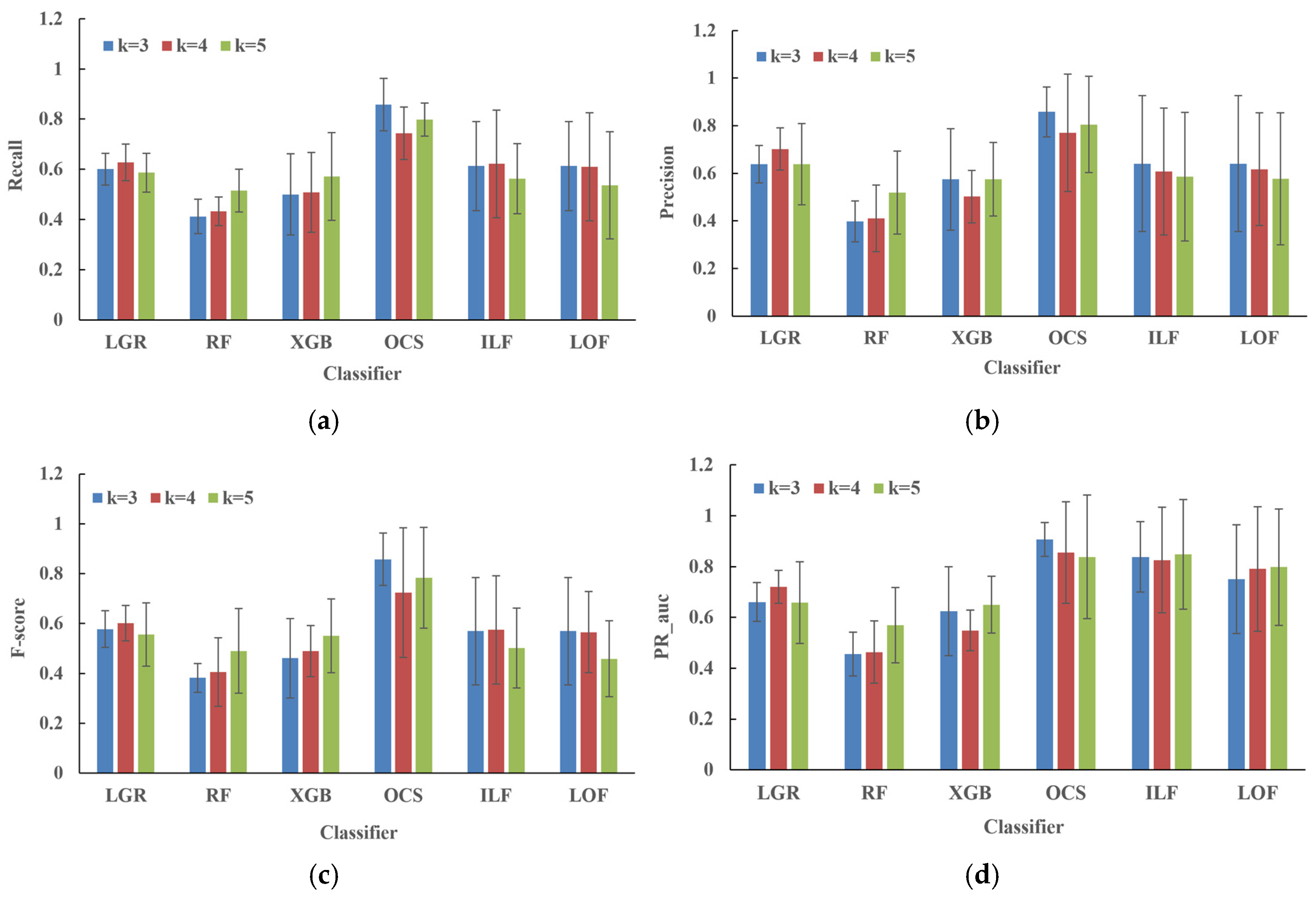

- Precision: The proportion of samples predicted as gaming that are actually gaming samples. Higher precision indicates fewer false positives.

- Recall: The sensitivity of the model, i.e., the proportion of actual gaming samples that it correctly identifies.

- F-score: The harmonic mean of precision and recall, which balances false positives and false negatives in a single measure of a model’s effectiveness.

- PR-AUC (precision–recall area under the curve): Summarizes the trade-off between precision and recall across all decision thresholds; it is particularly informative for imbalanced datasets.
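The four metrics can be computed from labels and scores as below, treating gaming as the positive class (label 1). PR-AUC is estimated here as average precision, the sum over descending thresholds of precision weighted by the increase in recall (the same definition used by scikit-learn's `average_precision_score`); whether the study used this estimator or a trapezoidal approximation is not stated, so treat that choice as an assumption. The labels, scores, and 0.5 threshold in the example are made up.

```python
def confusion_counts(y_true, y_pred):
    """True positives, false positives and false negatives (gaming = 1)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, fp, fn


def precision_recall_f(y_true, y_pred):
    tp, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f_score


def average_precision(y_true, scores):
    """PR-AUC as average precision: sum of (R_n - R_{n-1}) * P_n over descending thresholds."""
    pairs = sorted(zip(scores, y_true), reverse=True)
    n_pos = sum(y_true)
    tp = fp = 0
    ap = prev_recall = 0.0
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        # Samples tied at the same score share a single threshold
        while i < len(pairs) and pairs[i][0] == threshold:
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        recall = tp / n_pos
        precision = tp / (tp + fp)
        ap += (recall - prev_recall) * precision
        prev_recall = recall
    return ap


# Hypothetical labels (1 = gaming, 0 = rest) and classifier scores
y_true = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
y_pred = [int(s >= 0.5) for s in scores]

print(precision_recall_f(y_true, y_pred))
print(average_precision(y_true, scores))
```

For this toy example the 0.5 threshold gives precision 0.75, recall 1.0 and F-score 6/7 ≈ 0.857, and the average precision is 11/12 ≈ 0.917.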

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Dataset | 5-min segments | 10-min segments |
|---|---|---|
| Game | 67 | 33 |
| Rest | 78 | 38 |
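One way to obtain 5-min and 10-min datasets from a continuous recording is to cut the RR series into consecutive, non-overlapping windows. This is a hypothetical sketch: the helper name `segment_rr`, the window placement (no overlap, trailing partial window dropped), and the synthetic recording are assumptions, not the documented procedure.

```python
import numpy as np


def segment_rr(rr_ms, window_s):
    """Hypothetical helper: split an RR series (ms) into consecutive,
    non-overlapping windows of `window_s` seconds.

    Assumptions: each beat is assigned to the window in which its RR interval
    ends, and an incomplete trailing window is discarded.
    """
    rr = np.asarray(rr_ms, dtype=float)
    t_end = np.cumsum(rr) / 1000.0                # end time (s) of each RR interval
    window_idx = (t_end // window_s).astype(int)  # window index of each beat
    n_full = int(t_end[-1] // window_s)           # number of complete windows
    return [rr[window_idx == k] for k in range(n_full)]


# Synthetic recording of about 30.7 min with a mean RR near 800 ms
rng = np.random.default_rng(1)
rr_record = rng.normal(800.0, 40.0, size=2300)

five_min = segment_rr(rr_record, 300)
ten_min = segment_rr(rr_record, 600)
print(len(five_min), len(ten_min))
```

Under this scheme each 10-min window spans two 5-min windows, so roughly half as many 10-min segments are expected, which is consistent with the counts in the table.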

| Participant | Mean RR [ms] | SDRR [ms] | VLF [ln ms²] | LF [ln ms²] | HF [ln ms²] | LF/HF [ratio] | HF peak freq. [Hz] |
|---|---|---|---|---|---|---|---|
| G1 | 803 | 91 | 8.30 | 6.89 | 5.02 | 6.44 | 0.228 |
| G2 | 724 | 78 | 7.94 | 7.19 | 5.58 | 5.01 | 0.233 |
| G3 | 722 | 90 | 8.01 | 7.42 | 6.20 | 3.38 | 0.243 |
| G4 | 637 | 33 | 5.61 | 5.71 | 4.33 | 3.97 | 0.246 |
| G5 | 511 | 44 | 6.35 | 6.23 | 4.71 | 4.56 | 0.228 |
| G6 | 776 | 74 | 7.30 | 7.14 | 6.18 | 2.61 | 0.234 |
| Mean ± S.D. | 696 ± 98 | 69 ± 22 | 7.25 ± 0.97 | 6.76 ± 0.60 | 5.34 ± 0.71 | 4.33 ± 1.22 | 0.235 ± 0.007 |
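Because LF and HF are tabulated as natural logarithms while LF/HF is a plain ratio, the ratio column can be cross-checked with LF/HF = exp(ln LF - ln HF). The sketch below applies this to the gameplay rows. The recomputed ratios agree with the reported ones to within about 1%, the size of error expected from rounding the ln values to two decimals, which indicates that the ratio was formed from linear (ms²) powers rather than from the log values.

```python
import math

# (ln LF, ln HF, reported LF/HF) for each gameplay participant, copied from the table above
GAME_ROWS = {
    "G1": (6.89, 5.02, 6.44),
    "G2": (7.19, 5.58, 5.01),
    "G3": (7.42, 6.20, 3.38),
    "G4": (5.71, 4.33, 3.97),
    "G5": (6.23, 4.71, 4.56),
    "G6": (7.14, 6.18, 2.61),
}


def lf_hf_from_ln(ln_lf, ln_hf):
    """LF/HF on the linear scale: exp(ln LF) / exp(ln HF) = exp(ln LF - ln HF)."""
    return math.exp(ln_lf - ln_hf)


def relative_errors(rows):
    """Relative difference between the recomputed and the reported ratio, per participant."""
    return {pid: abs(lf_hf_from_ln(a, b) - r) / r for pid, (a, b, r) in rows.items()}


for pid, err in relative_errors(GAME_ROWS).items():
    recomputed = lf_hf_from_ln(*GAME_ROWS[pid][:2])
    print(f"{pid}: recomputed {recomputed:.2f}, relative error {err:.2%}")
```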

| Participant | Mean RR [ms] | SDRR [ms] | VLF [ln ms²] | LF [ln ms²] | HF [ln ms²] | LF/HF [ratio] | HF peak freq. [Hz] |
|---|---|---|---|---|---|---|---|
| R1 | 571 | 21 | 5.16 | 4.90 | 3.42 | 4.38 | 0.296 |
| R2 | 694 | 57 | 6.85 | 7.10 | 6.28 | 2.27 | 0.215 |
| R3 | 769 | 47 | 6.84 | 6.79 | 5.72 | 2.92 | 0.219 |
| R4 | 1055 | 113 | 8.44 | 7.38 | 6.16 | 3.40 | 0.247 |
| R5 | 575 | 28 | 5.66 | 4.75 | 3.47 | 3.59 | 0.253 |
| R6 | 706 | 45 | 6.71 | 5.88 | 5.68 | 1.23 | 0.268 |
| R7 | 775 | 38 | 6.19 | 5.74 | 5.14 | 1.82 | 0.234 |
| Mean ± S.D. | 735 ± 151 | 50 ± 28 | 6.55 ± 0.973 | 6.08 ± 0.970 | 5.12 ± 1.12 | 2.80 ± 1.02 | 0.247 ± 0.026 |
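The Mean ± S.D. row can be recomputed from the per-participant rows. This sketch assumes the S.D. is the population form (NumPy's default `ddof=0`); with that choice the recomputed Mean RR (735 ± 151 ms), SDRR (50 ± 28 ms) and HF (5.12 ± 1.12 ln ms²) reproduce the tabulated values.

```python
import numpy as np

# Rest-condition rows (R1-R7) copied from the table above
COLUMNS = ["Mean RR", "SDRR", "VLF", "LF", "HF", "LF/HF", "HF peak"]
rest = np.array([
    [571, 21, 5.16, 4.90, 3.42, 4.38, 0.296],
    [694, 57, 6.85, 7.10, 6.28, 2.27, 0.215],
    [769, 47, 6.84, 6.79, 5.72, 2.92, 0.219],
    [1055, 113, 8.44, 7.38, 6.16, 3.40, 0.247],
    [575, 28, 5.66, 4.75, 3.47, 3.59, 0.253],
    [706, 45, 6.71, 5.88, 5.68, 1.23, 0.268],
    [775, 38, 6.19, 5.74, 5.14, 1.82, 0.234],
])

means = rest.mean(axis=0)
sds = rest.std(axis=0)                 # population S.D. (ddof=0), assumed to match the table
sds_sample = rest.std(axis=0, ddof=1)  # sample S.D., shown for comparison

for name, m, s in zip(COLUMNS, means, sds):
    print(f"{name}: {m:.3f} ± {s:.3f}")
```

Switching to the sample S.D. (`ddof=1`) would give about 163 ms for Mean RR instead of 151 ms, which is why the population form is assumed here.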

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yuda, E.; Edamatsu, H.; Yoshida, Y.; Ueno, T. Comparison of ECG Between Gameplay and Seated Rest: Machine Learning-Based Classification. Appl. Sci. 2025, 15, 5783. https://doi.org/10.3390/app15105783
