1. Introduction

The increasing reliance on pharmaceutical treatments has significantly improved global health outcomes, enabling the effective management of various diseases. However, medication misidentification and misuse remain critical healthcare challenges, contributing to adverse drug reactions (ADRs) and patient safety concerns [1]. According to the World Health Organization (WHO), medication errors lead to substantial morbidity and mortality, with one-third of global fatalities linked to substance misuse rather than the primary disease itself [2,3]. The risks are particularly pronounced among the elderly, who often experience cognitive decline and rely on caregivers for medication management. Misidentification of pills due to similar appearances, unfamiliar formulations, or inadequate labeling can result in serious health consequences, including drug interactions, overdoses, and ineffective treatment.

Traditional pharmaceutical information systems primarily rely on manual input, requiring users to enter drug names or descriptions, which can be impractical for elderly patients or those with limited pharmaceutical knowledge. However, visual recognition of diverse pharmaceutical preparations is inherently challenging [4,5], particularly for aging populations who may have difficulty remembering medication names and prescription details [5]. Many elderly individuals rely on medications provided by family members or acquaintances without verifying their accuracy, increasing the risk of medication misuse and adverse reactions [6].

Deep learning-based automated pharmaceutical identification systems have shown potential in addressing these issues by enabling real-time and accurate pill recognition through image classification. Existing research has explored various machine learning models for pill classification, demonstrating notable advancements [4,7,8]. However, limitations persist in terms of accuracy, robustness, and adaptability to real-world conditions, including variations in lighting, occlusions, and diverse pill designs. Recent studies have proposed integrating object detection techniques with convolutional neural networks (CNNs) for enhanced accuracy, with some systems achieving classification rates of 85–90% on standardized datasets [2,4]. Despite these improvements, real-world implementation remains a challenge, as many systems are designed for laboratory-controlled environments and struggle with real-time processing [2].

To address these challenges, we propose an advanced deep learning-based pharmaceutical recognition system optimized for mobile applications. Our system employs ResNet101, a state-of-the-art CNN architecture, which demonstrated superior classification accuracy (98.51%) compared to other widely used architectures such as DenseNet, EfficientNet, MobileNet, SqueezeNet, and VGGNet. The system is designed to process medication images captured in diverse environments while integrating real-time inference capabilities suitable for mobile deployment. Additionally, heatmap-based visualizations enhance model interpretability, improving trust in the system’s classification decisions.

This research aims to develop a robust and practical medication identification system that ensures high-precision classification of pharmaceutical compounds. A comparative evaluation of multiple CNN architectures is conducted to determine the most effective deep learning model for real-time pill recognition. Furthermore, the system is implemented as a user-friendly mobile application, specifically designed for accessibility, particularly for elderly individuals who may struggle with conventional medication management. Ultimately, this study seeks to enhance patient safety by preventing medication errors and supporting healthcare providers in drug administration [1,9].

By integrating advanced deep learning techniques with practical mobile applications, this study contributes to the growing field of AI-powered healthcare solutions, offering a scalable, efficient, and highly accurate medication recognition system. The findings suggest significant potential for reducing medication-related errors and enhancing patient outcomes, particularly in home healthcare, hospitals, and emergency settings.

2. Related Work

2.1. Deep Learning-Based Approaches for Pharmaceutical Identification

Recent advancements in deep learning have significantly enhanced pharmaceutical identification systems, addressing the limitations of traditional manual pill recognition methods. Deep learning models, particularly convolutional neural networks (CNNs), have been widely adopted for image-based medication classification due to their high accuracy and adaptability. Various research studies have explored the efficacy of CNN architectures in distinguishing pharmaceutical compounds, with notable contributions in the field.

Heo et al. [4] developed a pill identification system integrating YOLOv5 for object detection, ResNet32 for image recognition, and RNN models for text-based character recognition. Their system achieved an accuracy of 85.65% on Korean datasets (MFDS) and 74.46% on American datasets (NLM), demonstrating the potential of combining deep learning with natural language processing (NLP) for comprehensive pill recognition. However, the system struggled with variations in pill imprints, environmental lighting conditions, and real-world image distortions, leading to misclassification in certain cases.

Similarly, Lee et al. [4] introduced an automated pill recognition framework utilizing YOLOv5 and ResNet-32, achieving 85.65% and 74.46% accuracy on standard datasets, with 78% accuracy for consumer-captured images. Their approach incorporated a character correction module, improving accuracy by 3.3–11.3%. Despite its efficiency, the system exhibited limitations in processing lowercase characters and special symbols on pill imprints, affecting classification performance in real-world scenarios.

Nguyen et al. [2] highlighted the deficiencies of conventional pill identification models, which primarily rely on limited datasets such as ePillID and CURE, making them less adaptable to real-world environmental variations. To overcome these constraints, they developed PGPNet (priori graph-assisted pill detection network), which integrates graph neural networks (GNNs) with multimodal data fusion techniques. This model demonstrated enhanced context-aware medication recognition, improving classification accuracy in diverse illumination conditions and backgrounds.

While these studies contribute valuable insights into pharmaceutical image classification, existing models still encounter practical challenges in real-time deployment, accuracy under varying conditions, and user accessibility. Building on these advancements, our research aims to develop a mobile-based medication identification system leveraging ResNet101, achieving a classification accuracy of 98.51%, outperforming other CNN architectures such as DenseNet, EfficientNet, MobileNet, SqueezeNet, and VGGNet. The proposed system integrates real-time inference capabilities, heatmap-based visual explanations for model interpretability, and an optimized user interface tailored for elderly users, ensuring practical usability and patient safety in homecare and clinical environments.

2.2. Deep Learning Models for Pharmaceutical Image Classification

The success of pharmaceutical image classification depends on the selection and optimization of convolutional neural network (CNN) architectures. Various CNN models have been extensively studied for their effectiveness in medical and pharmaceutical imaging, each offering unique advantages in terms of accuracy, computational efficiency, and scalability. In this study, we conducted a comparative analysis of six widely recognized CNN architectures—DenseNet, EfficientNet, ResNet, MobileNet, SqueezeNet, and VGG—to determine the most suitable model for real-time medication identification in a mobile application.

2.2.1. DenseNet

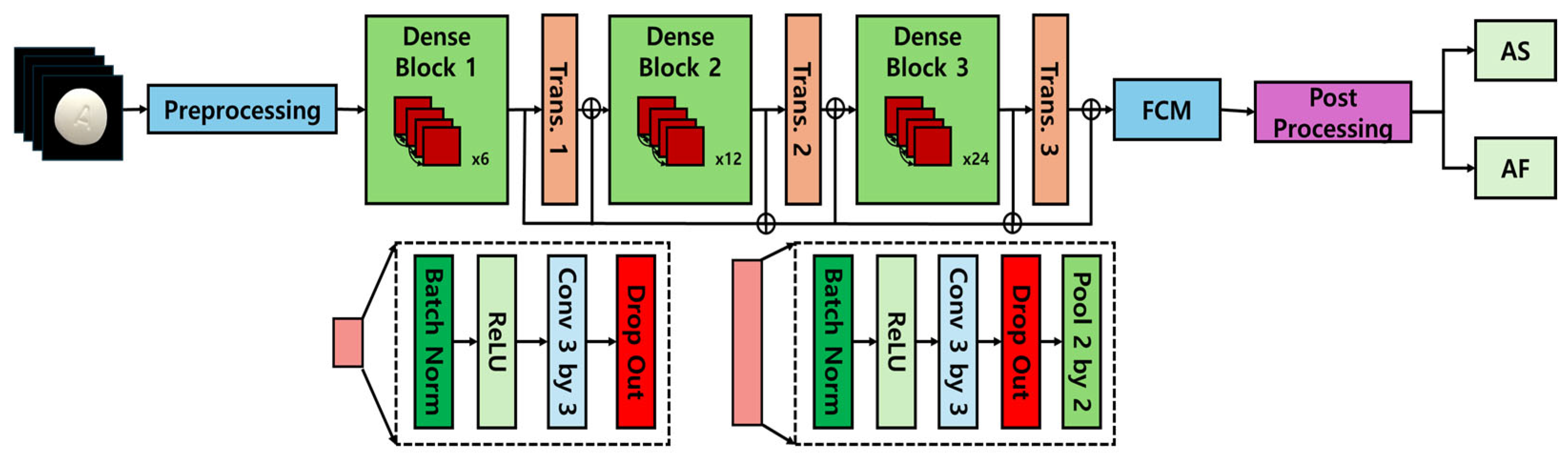

DenseNet (densely connected convolutional network), introduced by Huang et al. in 2017 [10], represents a significant advancement in convolutional neural network (CNN) architectures through its dense block structure, which establishes direct connections between all layers in the network. This design allows feature maps from each layer to be propagated to all subsequent layers, effectively mitigating the vanishing gradient problem that commonly affects deep networks while simultaneously enhancing feature reuse across the network. Due to this efficient connectivity pattern, DenseNet achieves superior performance with reduced parametric complexity, leading to enhanced computational efficiency and optimized resource utilization. The architectural framework of DenseNet is depicted in Figure 1 [11,12], illustrating its distinctive connectivity structure and the dense feature propagation mechanism that underpins its performance advantages.

DenseNet implements a dense block architecture in which outputs from each layer are directly transmitted to all subsequent layers, effectively alleviating feature reuse challenges and mitigating the vanishing gradient phenomenon. This architectural characteristic provides significant advantages for processing pharmaceutical images that require the analysis of complex morphological feature combinations (such as tablet engravings, colorimetric properties, and surface textures). In particular, the network’s ability to integrate localized image information across multiple resolution scales substantially contributes to improved classification performance when distinguishing between visually similar pharmaceutical images.
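As a rough illustration of the dense connectivity described above, the following sketch tracks how many feature maps each layer of a dense block receives when every layer's output is concatenated onto the inputs of all later layers. The function names, the 64 input channels, and the growth rate of 32 are illustrative assumptions, not values reported in this study.

```python
def dense_block_input_channels(in_channels: int, growth_rate: int, num_layers: int) -> list[int]:
    """Return the number of input channels seen by each layer of a dense block.

    Layer l receives the block input concatenated with the outputs of all
    earlier layers, so its input width is in_channels + l * growth_rate.
    """
    return [in_channels + layer * growth_rate for layer in range(num_layers)]


def dense_block_output_channels(in_channels: int, growth_rate: int, num_layers: int) -> int:
    """Channels leaving the block: the input plus one growth_rate slice per layer."""
    return in_channels + num_layers * growth_rate


# Illustrative values only (assumed, not taken from the paper).
widths = dense_block_input_channels(in_channels=64, growth_rate=32, num_layers=6)
print(widths)
print(dense_block_output_channels(64, 32, 6))
```

Because each layer only adds a narrow slice of new feature maps, earlier features stay available to later layers without being recomputed, which is the feature reuse the text refers to.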

2.2.2. EfficientNet

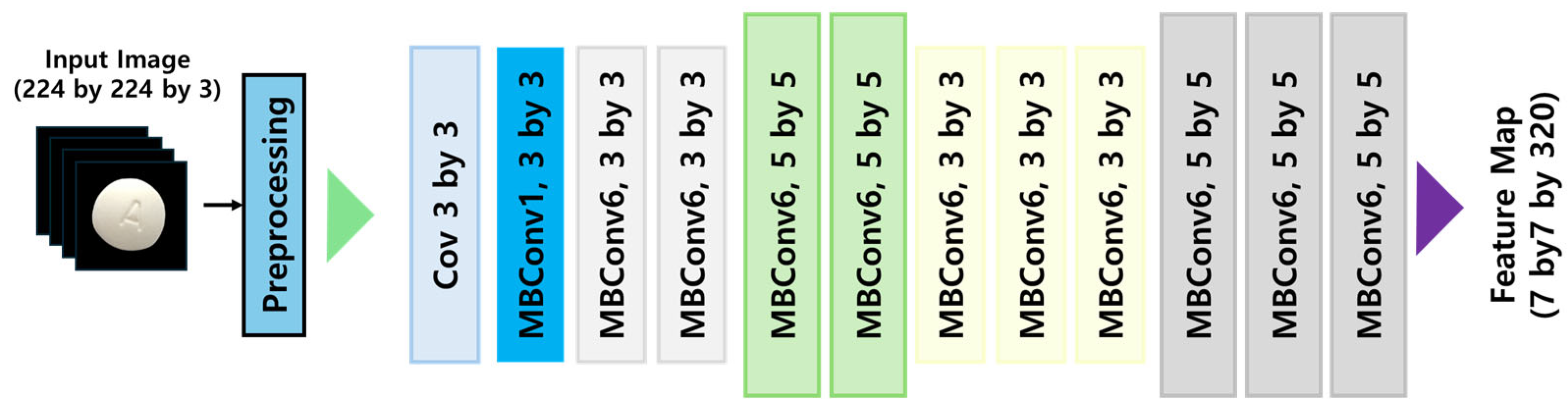

EfficientNet, developed by Tan & Le at Google Brain in 2019 [13], is a highly optimized CNN architecture that utilizes neural architecture search (NAS) to automate the design of efficient deep learning models. The distinguishing feature of EfficientNet is its compound scaling methodology, which systematically scales network depth, width, and input resolution simultaneously, thereby maximizing accuracy while maintaining computational efficiency. For this study, we implemented the EfficientNet B2 variant, which is particularly suited for high-resolution feature extraction in pharmaceutical image classification. The compound scaling approach facilitates progressive network expansion while incorporating regularization techniques to prevent overfitting and enhance generalization performance. The architectural configuration of EfficientNet is visually represented in Figure 2 [12], highlighting its scaled layers and interconnection patterns that optimize performance.

EfficientNet employs a compound scaling methodology to concurrently optimize dimensional parameters—depth, width, and resolution—achieving enhanced performance with minimal parametric overhead. Nevertheless, applications within specialized domains like pharmaceutical image analysis necessitate the identification of extremely subtle visual cues (such as delicate imprint configurations or slight chromatic differentiations), where the diminished representational capabilities consequent to model lightweighting may adversely affect classification accuracy. Although MBConv blocks demonstrate exceptional computational parsimony, they fundamentally struggle to retain the granular visual information essential for these highly specialized analytical contexts.
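The compound scaling rule can be sketched numerically as follows. The base coefficients (1.2, 1.1, 1.15) are those reported in the original EfficientNet paper; the function names are hypothetical, and the released B-variants use rounded multipliers, so this sketch is not expected to reproduce the B2 configuration used in this study exactly.

```python
# Depth, width, and resolution bases from the EfficientNet paper.
ALPHA, BETA, GAMMA = 1.2, 1.1, 1.15


def compound_scale(phi: float) -> dict[str, float]:
    """Multipliers for depth, width, and resolution at compound coefficient phi."""
    return {
        "depth": ALPHA ** phi,
        "width": BETA ** phi,
        "resolution": GAMMA ** phi,
    }


def flops_multiplier(phi: float) -> float:
    """Approximate FLOPs growth: depth * width^2 * resolution^2."""
    s = compound_scale(phi)
    return s["depth"] * s["width"] ** 2 * s["resolution"] ** 2


for phi in (0, 1, 2):
    print(phi, compound_scale(phi), round(flops_multiplier(phi), 2))
```

The bases are chosen so that each unit increase of phi roughly doubles the computational cost, which is why a single coefficient suffices to trade accuracy against efficiency.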

2.2.3. ResNet

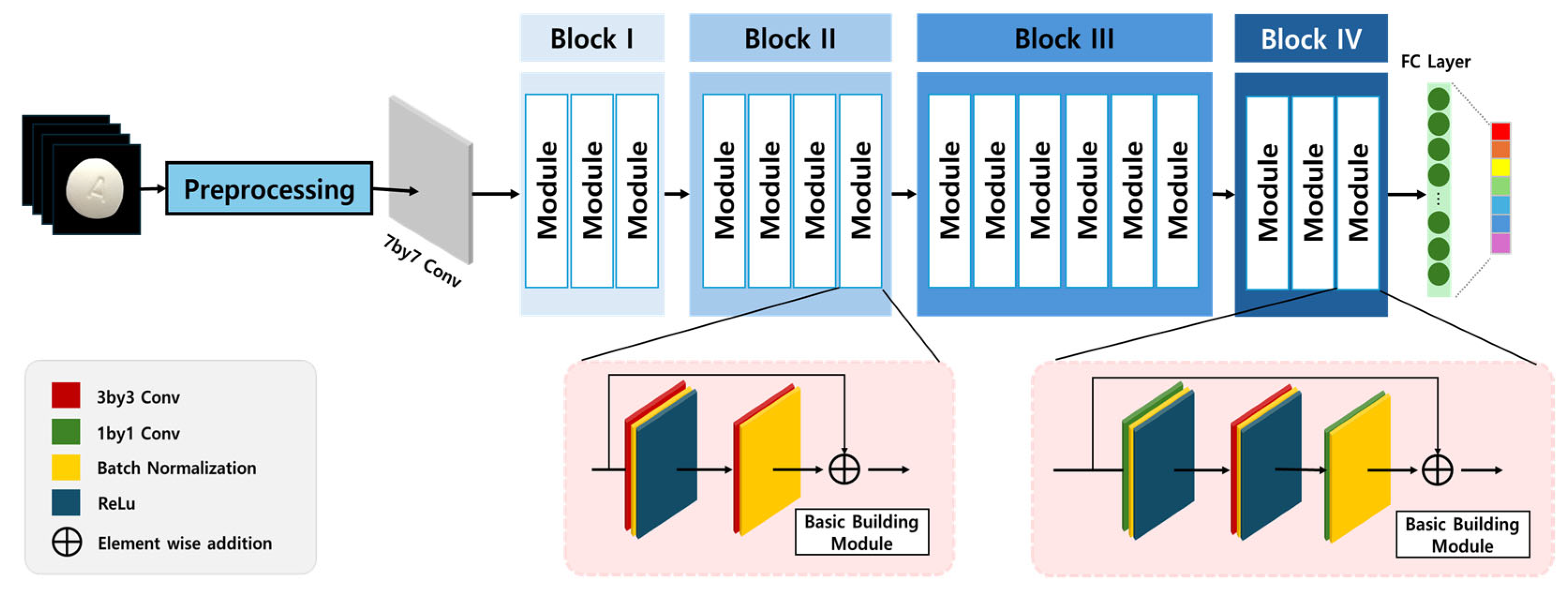

ResNet (residual network), proposed by He et al. in 2015 [14], was specifically designed to address the gradient vanishing problem, which becomes increasingly significant in deep networks. The key innovation of ResNet is the Residual Learning framework, which introduces identity shortcut connections that allow input signals to bypass intermediate layers, ensuring consistent gradient propagation throughout the network. This architecture significantly enhances training stability, enabling deep networks to learn effectively without degradation in performance. The implementation of residual connections not only facilitates information flow but also prevents feature degradation, making ResNet particularly well-suited for complex image classification tasks. The structural composition of ResNet is illustrated in Figure 3 [12,15], demonstrating its skip connections and residual blocks, which contribute to its superior performance in pharmaceutical image classification.

Within pharmaceutical image classification contexts, ResNet’s architectural framework shows exceptional compatibility with domain-specific requirements. Pharmaceutical tablets frequently present subtle visual differentiators—including graduated morphological variations, debossed identifiers, perimeter configurations, or delicate chromatic surface properties—necessitating comprehensive hierarchical feature extraction capabilities. The distinctive residual connectivity structure enables concurrent acquisition of both fundamental spatial attributes and advanced semantic representation while preserving critical discriminative information throughout successive transformational processes.

Additionally, the characteristic skip connection methodology prevents discriminative feature degradation across network depth, thereby ensuring preservation and amplification of essential tablet characteristics (including impressed alphanumeric identifiers and boundary definition) throughout the learning process. This enhanced representational precision proves particularly valuable in pharmaceutical classification work characterized by exceptional inter-class visual homogeneity. Consequently, ResNet’s residual architecture not only enhances computational learning efficiency but also significantly improves generalization capacity within a domain where classification accuracy directly affects medication safety and the reliability of pharmaceutical identification.
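The effect of the identity shortcut on gradient flow can be written out for a single residual block. The notation here is an assumption introduced for illustration: x is the block input, F the stacked layers with weights W, y the block output, and L the training loss.

```latex
\begin{aligned}
\mathbf{y} &= \mathcal{F}(\mathbf{x}; W) + \mathbf{x}, \\
\frac{\partial \mathcal{L}}{\partial \mathbf{x}}
  &= \frac{\partial \mathcal{L}}{\partial \mathbf{y}}
     \left( \frac{\partial \mathcal{F}}{\partial \mathbf{x}} + I \right).
\end{aligned}
```

The identity term I passes the upstream gradient through unchanged, so the signal reaching earlier layers does not vanish even when the Jacobian of F is small.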

2.2.4. MobileNet

MobileNet V2, developed by Google’s research team, is a lightweight CNN architecture optimized for mobile and edge computing environments [16,17]. The architecture is designed for efficient computational resource utilization, making it well-suited for applications requiring low-latency inference on mobile devices. The key innovation in MobileNet is its depthwise separable convolution operation, which decomposes standard convolutions into depthwise and pointwise convolutions, significantly reducing computational complexity while preserving feature representation capacity. This architectural optimization enables MobileNet to deliver high-speed performance in image classification and object detection tasks while maintaining compatibility with transfer learning and domain-specific fine-tuning. The architectural framework of MobileNet is illustrated in Figure 4 [12], highlighting its specialized convolution operations and compact layer organization.

MobileNet represents a lightweight architecture that maximizes computational efficiency through depthwise separable convolutions, making it particularly suitable for real-time inference in mobile environments. However, this architectural configuration inherently constrains representational capacity, resulting in limitations when capturing high-resolution localized information required for pharmaceutical tablet imagery (specifically engraving details and surface texture characteristics). This constraint potentially diminishes identification accuracy between tablets exhibiting simple and morphologically similar configurations.
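The saving from depthwise separable convolutions can be made concrete by counting weights. The sketch below compares a standard convolution with its depthwise plus pointwise factorization; the function names and the layer sizes (3 × 3 kernel, 128 input and 256 output channels) are illustrative assumptions, and bias terms are ignored.

```python
def standard_conv_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a standard kernel x kernel convolution (bias ignored)."""
    return kernel * kernel * c_in * c_out


def depthwise_separable_params(kernel: int, c_in: int, c_out: int) -> int:
    """Weights in a depthwise convolution followed by a 1 x 1 pointwise convolution."""
    depthwise = kernel * kernel * c_in   # one spatial filter per input channel
    pointwise = c_in * c_out             # 1 x 1 convolution mixing channels
    return depthwise + pointwise


# Illustrative layer sizes (assumed).
standard = standard_conv_params(3, 128, 256)
separable = depthwise_separable_params(3, 128, 256)
print(standard, separable, round(standard / separable, 1))
```

For these sizes the factorized layer needs roughly one ninth of the weights, which is the efficiency gain that comes at the cost of the reduced representational capacity noted above.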

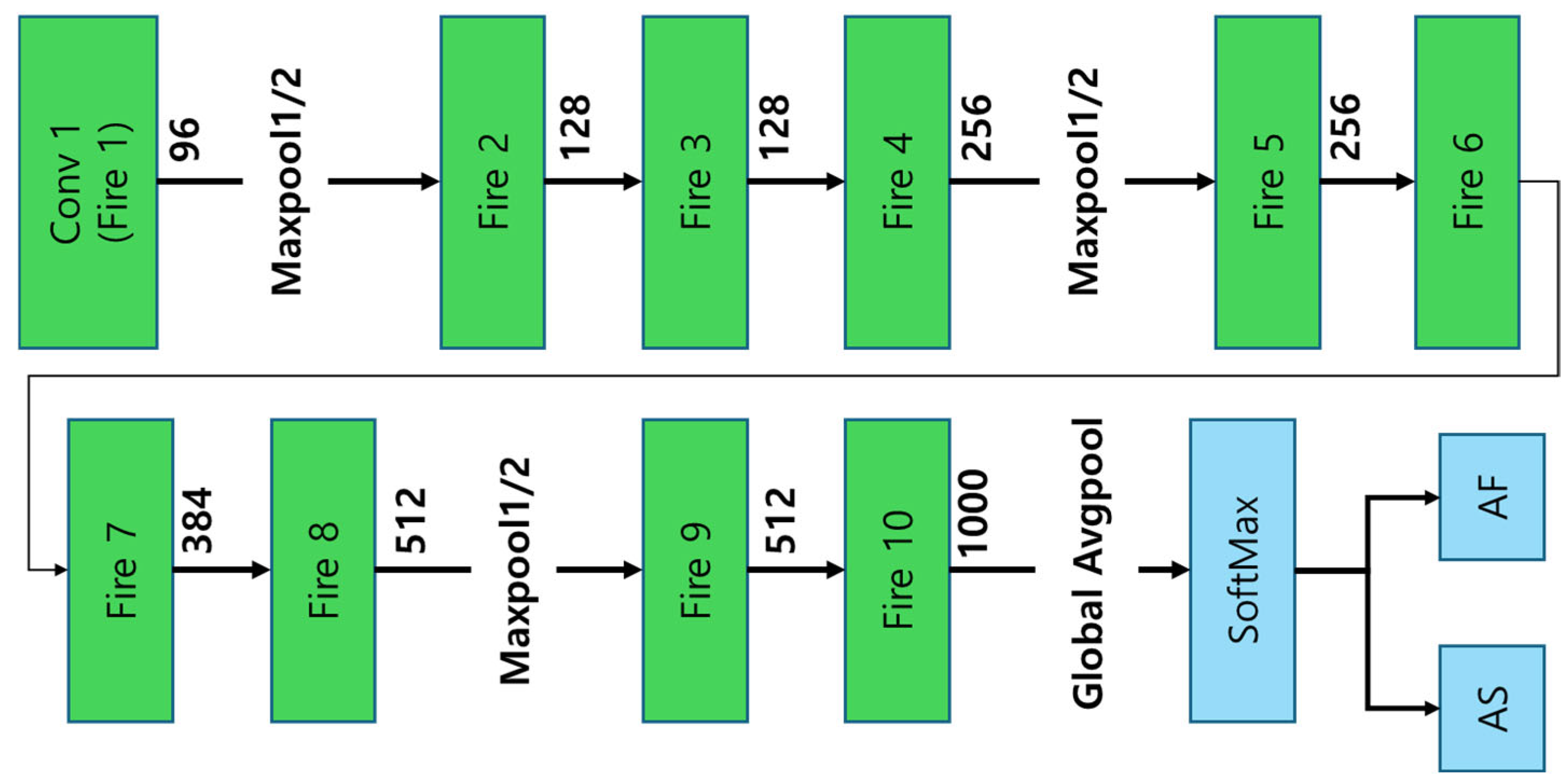

2.2.5. SqueezeNet

SqueezeNet, introduced by Iandola et al. in 2016 [18], is a highly compact CNN architecture designed to achieve AlexNet-level accuracy while dramatically reducing parameter count by a factor of 50. This results in an extremely small model footprint, making it particularly well-suited for resource-constrained environments. A key innovation in SqueezeNet is the Fire Module, which strategically combines 1 × 1 and 3 × 3 convolution operations to minimize computational cost while preserving classification accuracy. However, despite these optimizations, SqueezeNet’s reduced feature extraction capacity limits its ability to process complex pharmaceutical images, leading to lower classification accuracy compared to deeper architectures such as ResNet and EfficientNet. The architectural structure of SqueezeNet is depicted in Figure 5 [12], illustrating its Fire Module implementation and overall network organization.
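A Fire Module first squeezes the input with 1 × 1 filters and then expands it with parallel 1 × 1 and 3 × 3 filters whose outputs are concatenated. The sketch below counts the weights of such a module and compares it with a plain 3 × 3 convolution producing the same number of output channels. The function names and layer sizes are illustrative assumptions, and bias terms are ignored.

```python
def fire_module_params(c_in: int, squeeze: int, expand_1x1: int, expand_3x3: int) -> int:
    """Weights in a Fire Module: 1x1 squeeze, then parallel 1x1 and 3x3 expand layers."""
    squeeze_weights = c_in * squeeze
    expand_1x1_weights = squeeze * expand_1x1
    expand_3x3_weights = 3 * 3 * squeeze * expand_3x3
    return squeeze_weights + expand_1x1_weights + expand_3x3_weights


def plain_3x3_params(c_in: int, c_out: int) -> int:
    """Weights in a single 3x3 convolution with the same input and output widths."""
    return 3 * 3 * c_in * c_out


# Illustrative sizes (assumed): 96 input channels, 16 squeeze filters, 64 + 64 expand filters.
fire = fire_module_params(96, 16, 64, 64)
plain = plain_3x3_params(96, 64 + 64)
print(fire, plain)
```

The squeeze layer is what keeps the 3 × 3 filters cheap: they operate on 16 channels instead of 96.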

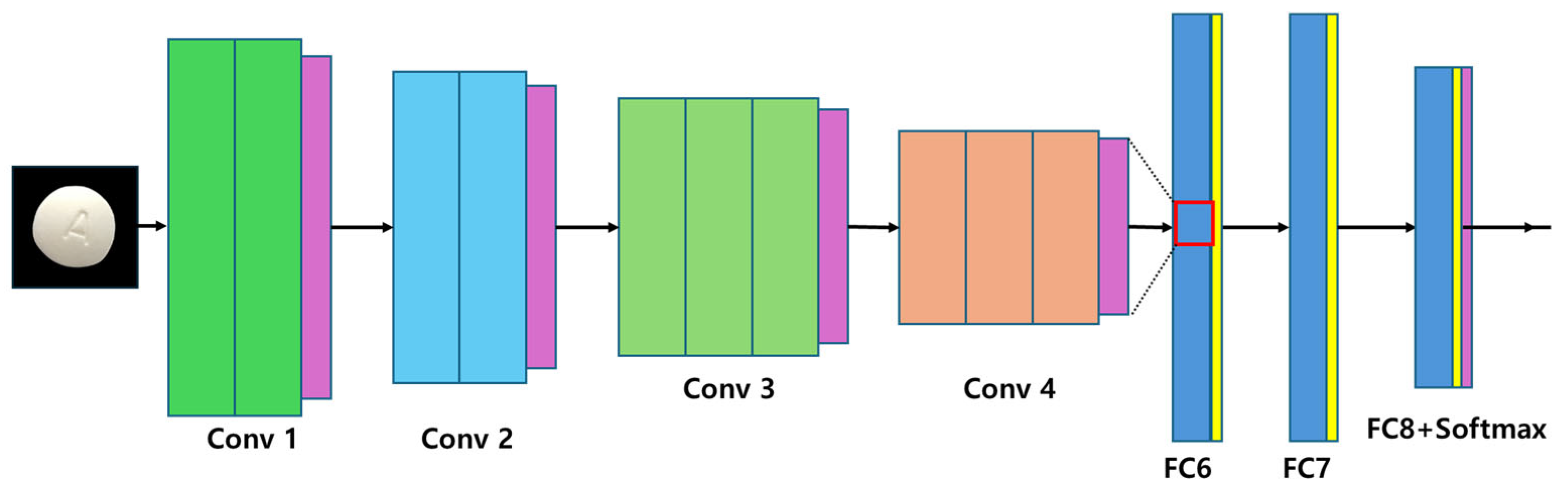

2.2.6. VGGNet

VGGNet (very deep convolutional network), developed by Simonyan & Zisserman in 2014 [19], is characterized by its stacked 3 × 3 convolutional filters, which facilitate hierarchical feature extraction. This approach expands receptive fields while simultaneously reducing the number of parameters, allowing for more refined feature learning in deep architectures. Despite its advantages in accuracy, VGGNet suffers from high computational demands, particularly in the fully connected layers, which account for a large proportion of the network’s parameters. This results in increased memory consumption and extended training cycles, making VGGNet less suitable for mobile-based pharmaceutical image classification. The architectural framework of VGGNet is presented in Figure 6 [12], illustrating its deep structure and characteristic filter arrangement.
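The claim that stacked 3 × 3 filters widen the receptive field while using fewer parameters can be checked with a short calculation. The sketch below assumes stride-1 convolutions with the same channel count C at input and output; the function names and C = 64 are illustrative assumptions.

```python
def stacked_receptive_field(num_layers: int) -> int:
    """Receptive field of num_layers stacked 3x3, stride-1 convolutions."""
    return 1 + 2 * num_layers


def stacked_3x3_params(num_layers: int, channels: int) -> int:
    """Weights in num_layers stacked 3x3 convolutions with constant channel width."""
    return num_layers * 3 * 3 * channels * channels


def single_large_kernel_params(kernel: int, channels: int) -> int:
    """Weights in one kernel x kernel convolution covering the same receptive field."""
    return kernel * kernel * channels * channels


channels = 64  # illustrative width (assumed)
for layers in (2, 3):
    field = stacked_receptive_field(layers)
    print(layers, field, stacked_3x3_params(layers, channels),
          single_large_kernel_params(field, channels))
```

Two stacked 3 × 3 layers cover a 5 × 5 region with 18C² weights instead of 25C², and three cover a 7 × 7 region with 27C² instead of 49C².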

In this study, a range of CNN architectures—including ResNet101, DenseNet, EfficientNet, MobileNet, SqueezeNet, and VGGNet—was systematically examined to evaluate their applicability to pharmaceutical image classification tasks. The selection of each model was grounded in its architectural characteristics and theoretical suitability for capturing domain-specific visual features.

ResNet101 was designated as the principal model due to its robust residual learning framework, which facilitates the effective preservation of fine-grained spatial features such as tablet imprints, edge contours, and subtle morphological distinctions. DenseNet improves gradient flow, offering advantages for datasets with moderate inter- and intra-class variability. EfficientNet was included to assess the trade-off between accuracy and computational efficiency, given its compound scaling strategy that balances depth, width, and resolution.

MobileNet and SqueezeNet, both lightweight architectures optimized for deployment on low-resource environments, were evaluated for their potential applicability in mobile or embedded pharmaceutical systems. VGGNet, despite its relatively shallow and straightforward architecture, was employed as a comparative baseline to assess the influence of network depth and parameter complexity on classification performance.

The inclusion of these architectures was not incidental but rather intended to encompass a broad spectrum of CNN design philosophies, ranging from deep residual learning to compact, parameter-efficient designs. This comprehensive selection enabled a balanced evaluation of how architectural variation impacts classification accuracy, generalization capability, and practical deployment feasibility in the context of high-resolution pharmaceutical imaging.

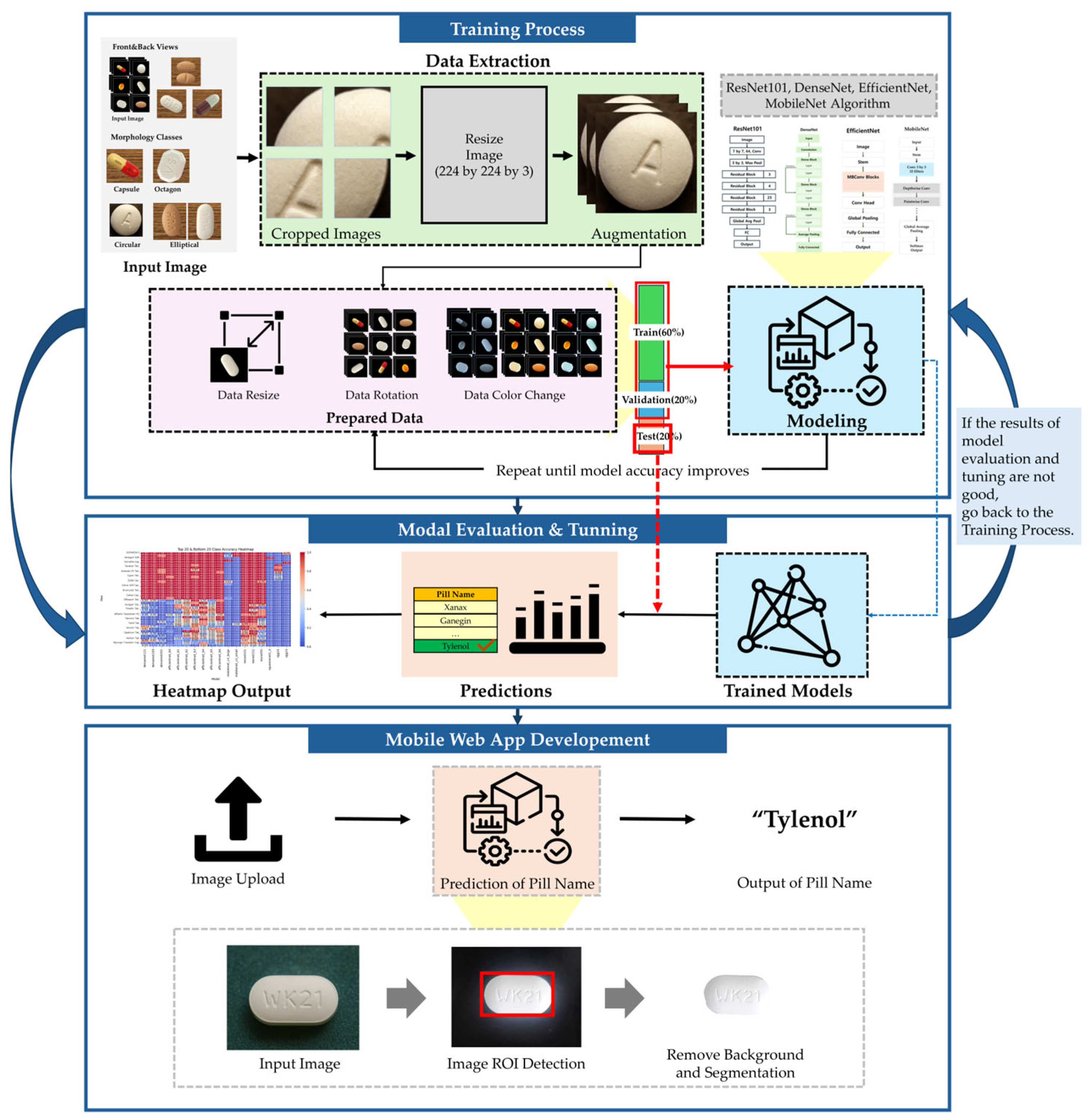

3. Materials and Methods

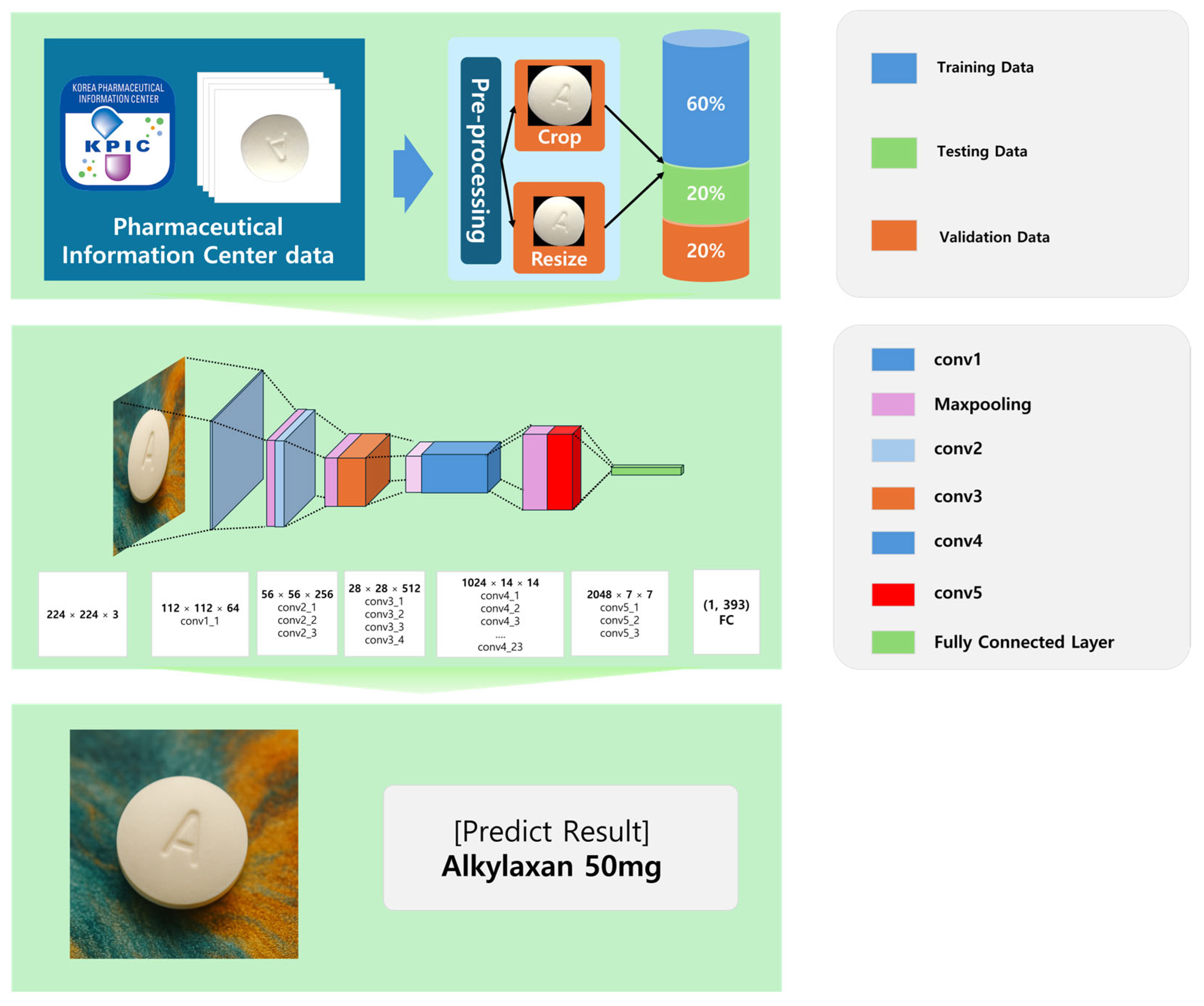

This section outlines the methodology employed in developing a deep learning-based pharmaceutical classification system, including dataset preparation, preprocessing, model selection, training strategy, and evaluation. The overall workflow of the proposed methodology is illustrated in Figure 7.

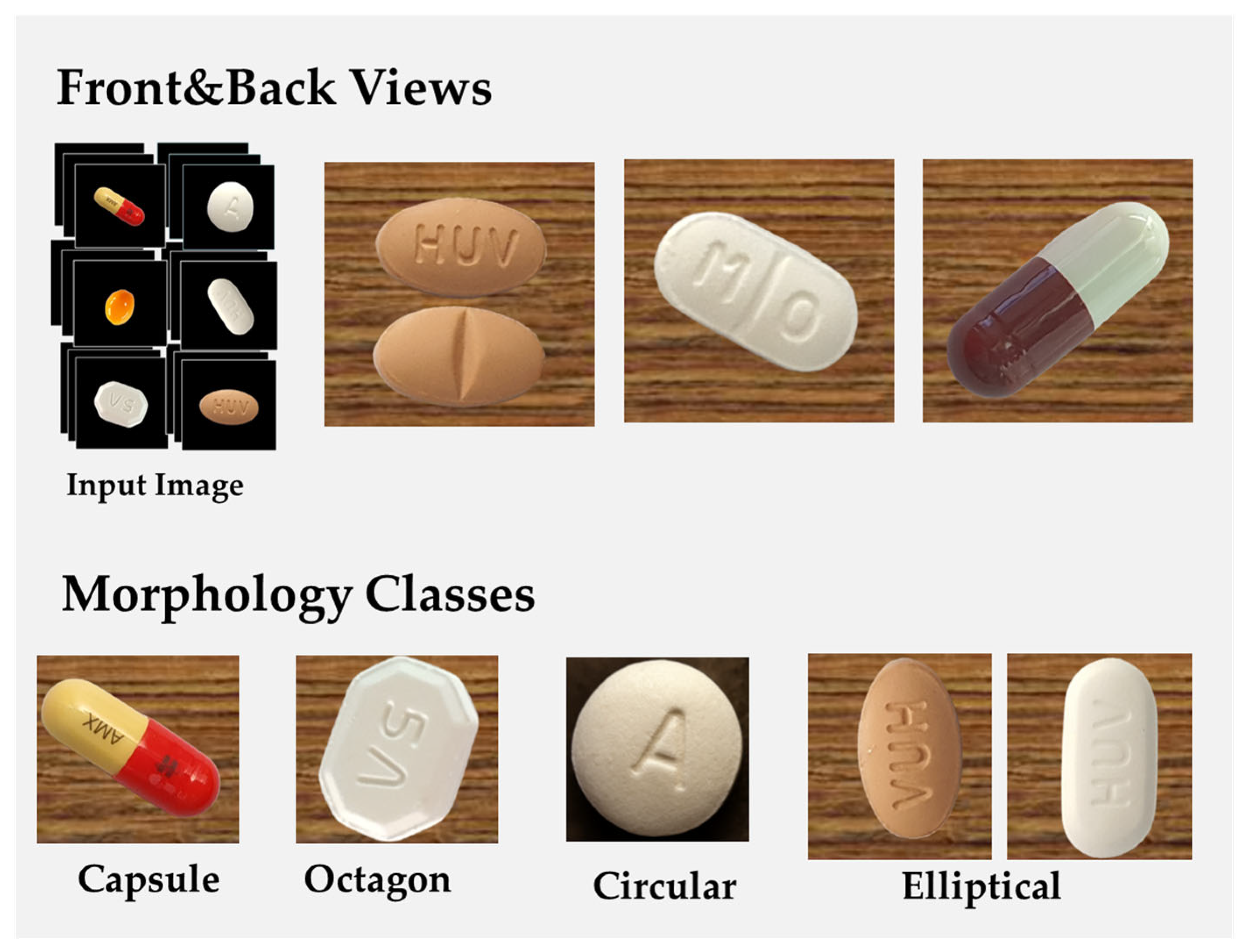

3.1. Datasets

A large-scale pharmaceutical image dataset was used to train and evaluate the proposed system. The dataset, sourced from the Korea Pharmaceutical Information Center, comprises 5088 distinct images of various pharmaceutical tablets, occupying a total storage volume of 168 GB. These images were systematically categorized based on tablet morphology, imprints, and colors to ensure accurate classification.

Table 1 presents a summary of the pharmaceutical image dataset. The dataset was partitioned into training (60%), validation (20%), and testing (20%) subsets, ensuring an even distribution of anterior and posterior tablet images across all categories to mitigate potential biases.
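A split of this kind can be sketched as a stratified partition, in which each group is shuffled and divided separately so that the 60/20/20 proportions hold within every group. The function below is a minimal standard-library sketch; the function name, the seed, and the example labels ("front" and "back" for the two tablet faces) are assumptions for illustration and do not describe the authors' actual tooling.

```python
import random
from collections import defaultdict


def stratified_split(labels, fractions=(0.6, 0.2, 0.2), seed=0):
    """Split sample indices into train/val/test while keeping each label's proportions."""
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val, test = [], [], []
    for indices in by_label.values():
        rng.shuffle(indices)
        n_train = round(fractions[0] * len(indices))
        n_val = round(fractions[1] * len(indices))
        train += indices[:n_train]
        val += indices[n_train:n_train + n_val]
        test += indices[n_train + n_val:]  # remainder goes to the test subset
    return train, val, test


# Hypothetical labels: the tablet face shown in each of twenty images.
labels = ["front"] * 10 + ["back"] * 10
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))
```

In practice the grouping key would combine the tablet category with the face shown, so that both are balanced across the three subsets.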

Figure 8 presents the morphological variations of tablets in the dataset, categorizing them based on their geometric configurations. This classification framework facilitated a structured approach to training the deep learning model, ensuring that it could differentiate between tablets with similar visual features but distinct pharmaceutical compositions.

The images in the dataset were captured under diverse environmental conditions, incorporating both background-removed images and contextual images with original backgrounds to improve the model’s adaptability to real-world scenarios. The classification criteria for organizing the dataset were based on tablet geometry (e.g., circular, oblong, oval, and quadrilateral shapes) and distinctive manufacturer markings such as embossed or debossed imprints.

To maintain consistency across all images, a standardized preprocessing pipeline was implemented. Each image was centered and cropped to retain only the tablet region, effectively removing background noise. The images were then resized to a uniform 512 × 512 pixels, ensuring compatibility with deep learning models while preserving morphological integrity. Contrast enhancement techniques, such as histogram equalization, were applied to improve the visibility of tablet imprints and surface details. Additionally, data augmentation methods, including random rotation, flipping, brightness adjustment, and Gaussian noise addition, were employed to improve the model’s generalization capabilities against real-world variations in tablet appearance.

Because our laboratory environment made it physically difficult to capture images directly under varied conditions (e.g., variable lighting, clinical settings, and public image repositories), we simulated such diversity through an extensive data augmentation strategy.

To evaluate the dataset’s reliability and ensure high-quality pharmaceutical image classification, multiple measures were taken. Specifically, image rotation, flip, brightness adjustment, and noise injection were used to simulate different viewing angles, illumination variations, and background elements to reduce visual imbalance between different domains. The dataset was also cross-referenced with official pharmaceutical records to confirm label accuracy. Furthermore, duplicate image detection algorithms were applied to eliminate redundant samples, preventing overfitting during model training.

3.2. Data Preprocessing and Augmentation

To ensure optimal model performance and robustness, a standardized data preprocessing and augmentation pipeline was implemented. The primary objective of preprocessing was to enhance image quality, normalize input dimensions, and increase dataset variability to improve the generalization capability of the deep learning model.

Each image in the dataset underwent several preprocessing steps, beginning with background removal to isolate the tablet from its surroundings. To standardize input dimensions, all images were resized to 512 × 512 pixels, ensuring consistency while maintaining the morphological integrity of each pharmaceutical tablet. Additionally, grayscale conversion was applied where necessary to reduce computational complexity, particularly in models that prioritize shape-based feature extraction.

To further enhance the visibility of pill imprints and surface details, contrast normalization techniques such as histogram equalization were employed. This adjustment ensured that subtle features, such as engraved text or embossed symbols, remained distinguishable under varying lighting conditions. Moreover, to mitigate potential biases from illumination differences, adaptive brightness and contrast adjustments were applied.
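Histogram equalization remaps intensities so that their cumulative distribution becomes approximately uniform, which stretches the contrast of faint imprints. The sketch below implements the global variant on a flat list of 8-bit grayscale values using only the standard library. The text does not state whether a global or adaptive variant was used, so this is an assumed simplification, and the function name is hypothetical.

```python
def equalize_histogram(pixels: list[int], levels: int = 256) -> list[int]:
    """Global histogram equalization for a flat list of grayscale values in [0, levels)."""
    hist = [0] * levels
    for value in pixels:
        hist[value] += 1

    # Cumulative distribution of intensities.
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)

    cdf_min = next(c for c in cdf if c > 0)
    total = len(pixels)
    if total == cdf_min:  # constant image: nothing to stretch
        return list(pixels)

    scale = (levels - 1) / (total - cdf_min)
    return [round((cdf[value] - cdf_min) * scale) for value in pixels]


# A low-contrast patch: values crowded into a narrow band.
patch = [100, 100, 101, 102, 102, 103]
print(equalize_histogram(patch))
```

After equalization the darkest value maps to 0 and the brightest to 255, so small intensity differences around an engraving become easier to separate.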

To improve model robustness against real-world image variations, data augmentation techniques were incorporated during training. The augmentation process introduced controlled modifications to the dataset, ensuring the model could generalize well to different orientations, distortions, and imaging conditions. The following augmentation strategies were applied:

Random rotation (±20°): Introduced minor rotational variations to account for non-perfectly aligned pill images.

Horizontal and vertical flipping: Applied selectively to balance asymmetric pill distributions in the dataset.

Gaussian noise addition: Simulated real-world noise conditions, improving the model’s ability to classify pills under suboptimal imaging scenarios.

Shearing and scaling: Applied to introduce perspective variations, ensuring robustness to variations in camera angles.
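The augmentation strategies listed above can be thought of as drawing a random configuration for each training image. The sketch below samples such a configuration and applies the noise step to a list of pixel values in [0, 1]. Only the ±20° rotation range comes from the text; the flip probabilities, noise level, shear range, and scale range are assumed values, and the function names are hypothetical.

```python
import random


def sample_augmentation(rng: random.Random) -> dict:
    """Draw one random augmentation configuration."""
    return {
        "rotation_deg": rng.uniform(-20.0, 20.0),  # matches the stated ±20° range
        "flip_horizontal": rng.random() < 0.5,      # assumed probability
        "flip_vertical": rng.random() < 0.5,        # assumed probability
        "noise_sigma": rng.uniform(0.0, 0.05),      # assumed, on a [0, 1] pixel scale
        "shear_deg": rng.uniform(-10.0, 10.0),      # assumed range
        "scale": rng.uniform(0.9, 1.1),             # assumed range
    }


def add_gaussian_noise(pixels, sigma, rng):
    """Add zero-mean Gaussian noise to pixels in [0, 1] and clip back into range."""
    return [min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in pixels]


rng = random.Random(42)  # fixed seed for reproducibility
config = sample_augmentation(rng)
noisy = add_gaussian_noise([0.0, 0.5, 1.0], config["noise_sigma"], rng)
print(config)
print(noisy)
```

The geometric transforms themselves (rotation, shear, scaling) would normally be delegated to an image library rather than written by hand.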

A critical aspect of data preprocessing involved ensuring class balance within the dataset. To prevent the model from overfitting to dominant classes, synthetic image generation techniques, such as oversampling underrepresented classes, were employed. This ensured that all pharmaceutical categories were adequately represented in the training dataset, minimizing class imbalance issues that could skew model predictions.

Once preprocessing and augmentation were completed, the dataset was normalized using mean subtraction and scaling, aligning all pixel values within a range of [0, 1]. This standardization improved convergence speed during model training by ensuring that all images were processed on a consistent scale.

By implementing this comprehensive preprocessing and augmentation strategy, the dataset was transformed into a robust, well-balanced input source, ensuring high classification accuracy and real-world applicability of the deep learning model in pharmaceutical image recognition.

3.3. Deep Learning Model Design

The development of a deep learning-based pharmaceutical classification system required a carefully structured model selection, training strategy, and network architecture. This section describes the rationale behind selecting ResNet101, the details of the training process, and the architectural design of the model implemented in the proposed mobile-based medication recognition system.

3.3.1. Model Selection and Training Strategy

The selection of an appropriate deep learning model is crucial for achieving high accuracy in pharmaceutical image classification. In this study, we conducted a comparative evaluation of multiple convolutional neural network (CNN) architectures, including DenseNet, EfficientNet, ResNet, MobileNet, SqueezeNet, and VGGNet, to determine the optimal model for real-time medication identification.

Each model was evaluated based on classification accuracy, inference speed, and computational efficiency. ResNet101 emerged as the best-performing model, achieving an accuracy of 98.51%, outperforming the other architectures in both precision and recall metrics. Its residual learning framework mitigates gradient vanishing, enabling deeper networks to learn effectively while retaining computational feasibility.

The training process was optimized to enhance model generalization and real-time deployment capabilities. Transfer learning was employed, leveraging pretrained ResNet101 weights from ImageNet to initialize the model for pharmaceutical classification. Fine-tuning was then applied to adapt the model to tablet-specific morphological details, including shape, color, and imprint text.

To prevent overfitting and improve robustness, batch normalization and dropout regularization techniques were incorporated. The hyperparameters were systematically optimized to maximize performance, as outlined below:

Learning rate: Initially set to 0.001, with a step decay strategy reducing it by a factor of 0.1 every 10 epochs.

Batch size: 32 images per batch to balance computational efficiency and training stability.

Optimization algorithm: Adam optimizer, selected for its adaptive learning rate and improved convergence speed.

Loss function: Categorical cross-entropy, ensuring accurate multi-class classification.

Number of epochs: 50, with early stopping applied when the validation loss plateaued to prevent unnecessary computation.
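The learning-rate schedule and stopping rule listed above can be sketched in a few lines. The base rate, decay factor, and step size follow the stated hyperparameters; the patience of five epochs and the function names are assumptions, since the text does not specify how a plateau was detected.

```python
def step_decay_lr(epoch: int, base_lr: float = 0.001, gamma: float = 0.1,
                  step_size: int = 10) -> float:
    """Learning rate at a given epoch: multiplied by gamma every step_size epochs."""
    return base_lr * gamma ** (epoch // step_size)


def early_stop_epoch(val_losses, patience: int = 5, min_delta: float = 0.0):
    """Return the epoch index at which training would stop, or None if it never stops.

    Training stops once the validation loss has failed to improve on its best
    value by more than min_delta for `patience` consecutive epochs.
    """
    best = float("inf")
    stale = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best = loss
            stale = 0
        else:
            stale += 1
            if stale >= patience:
                return epoch
    return None


for epoch in (0, 9, 10, 25, 49):
    print(epoch, step_decay_lr(epoch))
```

With these settings the rate drops from 0.001 to 0.0001 at epoch 10 and continues to shrink tenfold every ten epochs over the 50-epoch budget.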

3.3.2. Network Architecture of ResNet101

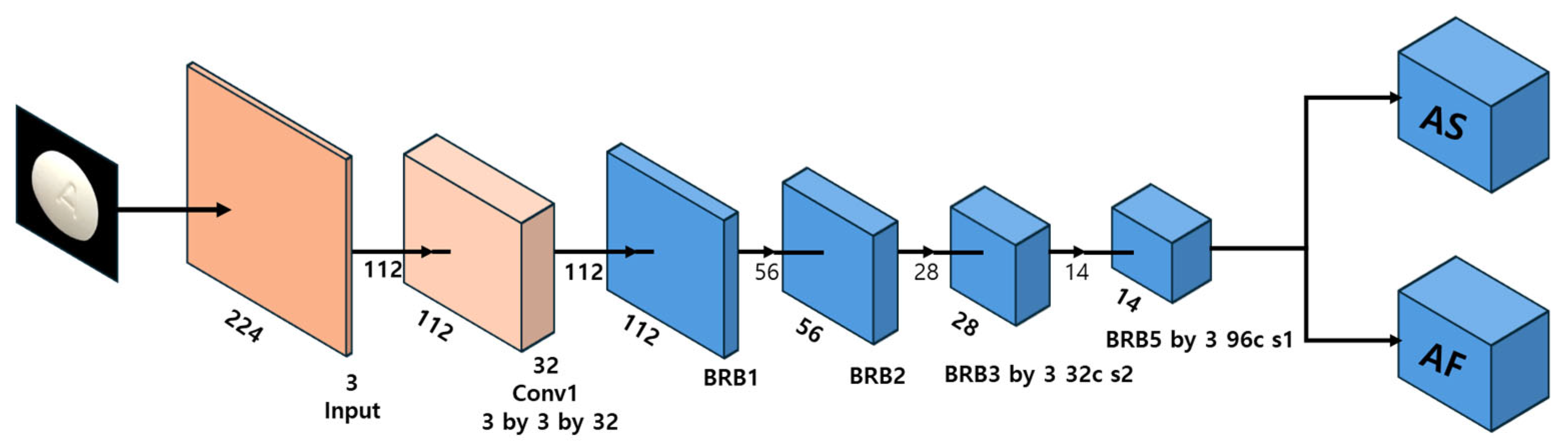

The ResNet101 model used in this study was specifically designed to handle pharmaceutical image classification by extracting tablet-specific visual features through a hierarchical deep learning framework. The architecture is depicted in Figure 9.

The ResNet101 model is structured into three primary components, facilitating hierarchical feature extraction and classification:

Convolutional layers (feature extraction stage): The input images (224 × 224 × 3) undergo initial feature extraction through a convolutional layer employing 64 filters, capturing low-level visual attributes such as edges, textures, and color gradients. Max pooling operations are applied to reduce spatial dimensions while retaining high-impact features.

Deep feature learning (residual block stacking): Residual learning blocks (conv2-conv5) progressively build hierarchical representations of tablet images, capturing increasingly complex visual patterns such as imprint shapes, color variations, and texture details. Identity mappings (skip connections) enable direct feature propagation, preventing gradient degradation and improving model optimization.

Fully connected layers (classification stage): The final feature maps from the residual blocks are converted into a 2048-dimensional feature vector. A fully connected layer maps this vector to one score per pharmaceutical category, and the softmax activation function normalizes these scores into probabilistic classification outputs.
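The three components above can be cross-checked against the standard ResNet-101 layout. The sketch below counts the weighted layers and traces the spatial size and channel width through each stage for a 224 × 224 input. The stage configuration (3, 4, 23, 3 bottleneck blocks) is that of the standard architecture, and it is an assumption that the model in this study keeps that backbone unchanged; the function names are hypothetical.

```python
# Bottleneck blocks per stage in the standard ResNet-101 layout.
BLOCKS_PER_STAGE = {"conv2": 3, "conv3": 4, "conv4": 23, "conv5": 3}
CONVS_PER_BOTTLENECK = 3  # 1x1, 3x3, 1x1


def weighted_layer_count() -> int:
    """conv1 + all bottleneck convolutions + the final fully connected layer."""
    return 1 + CONVS_PER_BOTTLENECK * sum(BLOCKS_PER_STAGE.values()) + 1


def stage_shapes(input_size: int = 224) -> dict:
    """Spatial size and channel count after each stage for a square input."""
    size = input_size // 2            # conv1 with 64 filters, stride 2
    shapes = {"conv1": (size, 64)}
    size //= 2                        # max pooling, stride 2
    channels = 256
    for i, stage in enumerate(BLOCKS_PER_STAGE):
        if i > 0:
            size //= 2                # conv3 to conv5 each halve the resolution
            channels *= 2             # and double the channel width
        shapes[stage] = (size, channels)
    return shapes


print(weighted_layer_count())
print(stage_shapes())
```

The final stage yields 7 × 7 maps with 2048 channels, which is where the 2048-dimensional feature vector in the classification stage comes from.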

3.4. Implementation and System Deployment

To ensure the efficient deployment of the pharmaceutical classification system, we implemented a robust deep learning framework utilizing Python (version 3.9.20) as the primary programming language. The system was designed to leverage Python’s extensive ecosystem of scientific computing libraries and deep learning frameworks, enabling optimized model training, evaluation, and real-time inference.

For the web server implementation, we employed Flask (version 3.1.0), a lightweight WSGI web application framework, which facilitated the seamless integration of the pharmaceutical classification model into a networked environment. This ensured real-time processing capabilities, making the system suitable for mobile-based deployment.

The classification model development was based on the Fastai library (version 2.7.18), a high-level deep learning API built on PyTorch (version 2.6.0). Fastai provided sophisticated tools for model construction and fine-tuning, advanced data augmentation techniques, discriminative learning rates for optimized training, and progressive resizing to enhance generalization performance.

These features were critical in optimizing the classification model’s accuracy while ensuring efficient computation, making it suitable for real-world healthcare applications.

To systematically validate the system, we implemented rigorous evaluation protocols to assess the classification precision across various pharmaceutical compounds. Through quantitative performance analysis, we established reliable classification benchmarks that could translate effectively into clinical and pharmaceutical applications.

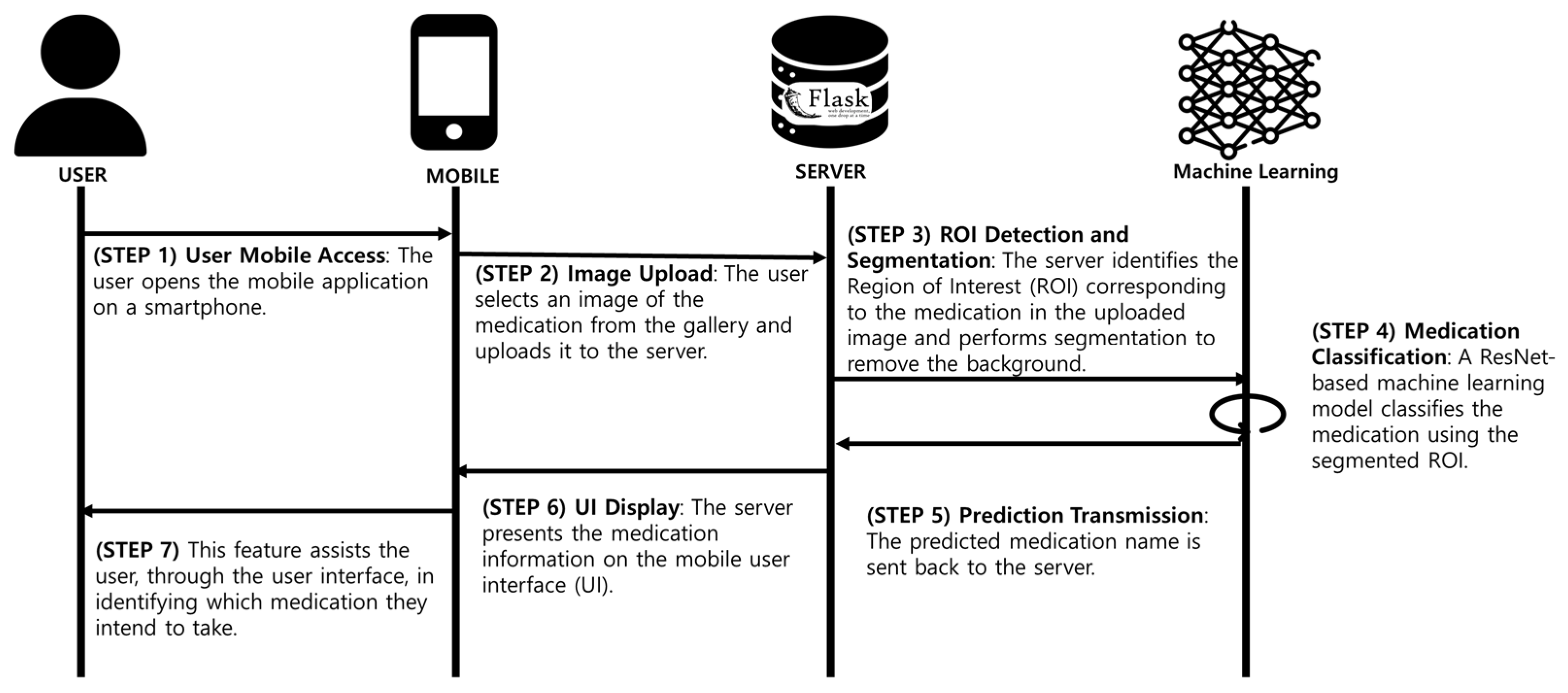

Figure 10 presents an overview of the implementation workflow, illustrating the sequential process from data acquisition to model training, validation, and deployment. The pharmaceutical classification system follows a structured seven-step process, ensuring an accurate, efficient, and user-friendly experience:

- (1) User access (STEP 1): The user launches the mobile application on their smartphone.

- (2) Image upload (STEP 2): The user selects or captures an image of a pharmaceutical tablet, uploading it to the Flask-based backend server.

- (3) Region of interest (ROI) detection (STEP 3): The server applies advanced computer vision algorithms to detect the tablet region and eliminate unnecessary background elements.

- (4) Deep learning classification (STEP 4): The isolated pill image is classified using ResNet101, which processes the segmented image through multiple convolutional layers, extracting hierarchical feature representations.

- (5) Prediction generation (STEP 5): The system generates a classification label along with confidence metrics, which are transmitted back to the server.

- (6) Result transmission (STEP 6): The classified medication information is sent to the mobile device via an API request.

- (7) User interface display (STEP 7): The app displays the identified pill name, dosage, and confidence score, enabling users to recognize and verify their medication.

This structured pipeline ensures that the system delivers accurate and fast medication classification, addressing critical challenges in pharmaceutical safety.
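The server-side portion of this workflow (Steps 3 to 6) can be sketched without any framework dependencies. The code below is a minimal illustration under stated assumptions, not the deployed implementation: `crop_to_roi` uses a simple brightness threshold on a dark background as a stand-in for the unspecified ROI algorithm, the logits are placeholder values in place of real ResNet101 outputs, and the function names, class names, and JSON field names are hypothetical.

```python
import json
import math
from typing import List, Sequence


def crop_to_roi(gray: Sequence[Sequence[int]], background_max: int = 30) -> List[List[int]]:
    """Crop a grayscale image to the bounding box of pixels brighter than the background."""
    rows = [r for r, row in enumerate(gray) if any(p > background_max for p in row)]
    cols = [c for c in range(len(gray[0])) if any(row[c] > background_max for row in gray)]
    if not rows or not cols:
        return [list(row) for row in gray]  # nothing detected: keep the full frame
    return [list(row[cols[0]:cols[-1] + 1]) for row in gray[rows[0]:rows[-1] + 1]]


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw model scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def build_response(logits: Sequence[float], class_names: Sequence[str]) -> str:
    """Pick the most probable class and serialize label and confidence as JSON."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return json.dumps({"label": class_names[best], "confidence": round(probs[best], 4)})


# Toy 4x4 image: a bright 2x2 "tablet" on a dark background.
image = [
    [0, 0, 0, 0],
    [0, 200, 210, 0],
    [0, 190, 205, 0],
    [0, 0, 0, 0],
]
roi = crop_to_roi(image)
logits = [0.2, 3.1, -1.0]  # placeholder for the classifier output on the ROI
response = build_response(logits, ["pill_a", "pill_b", "pill_c"])
print(roi)
print(response)
```

In a Flask deployment, a route handler would decode the uploaded image, call functions of this kind, and return the JSON string as the response body.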

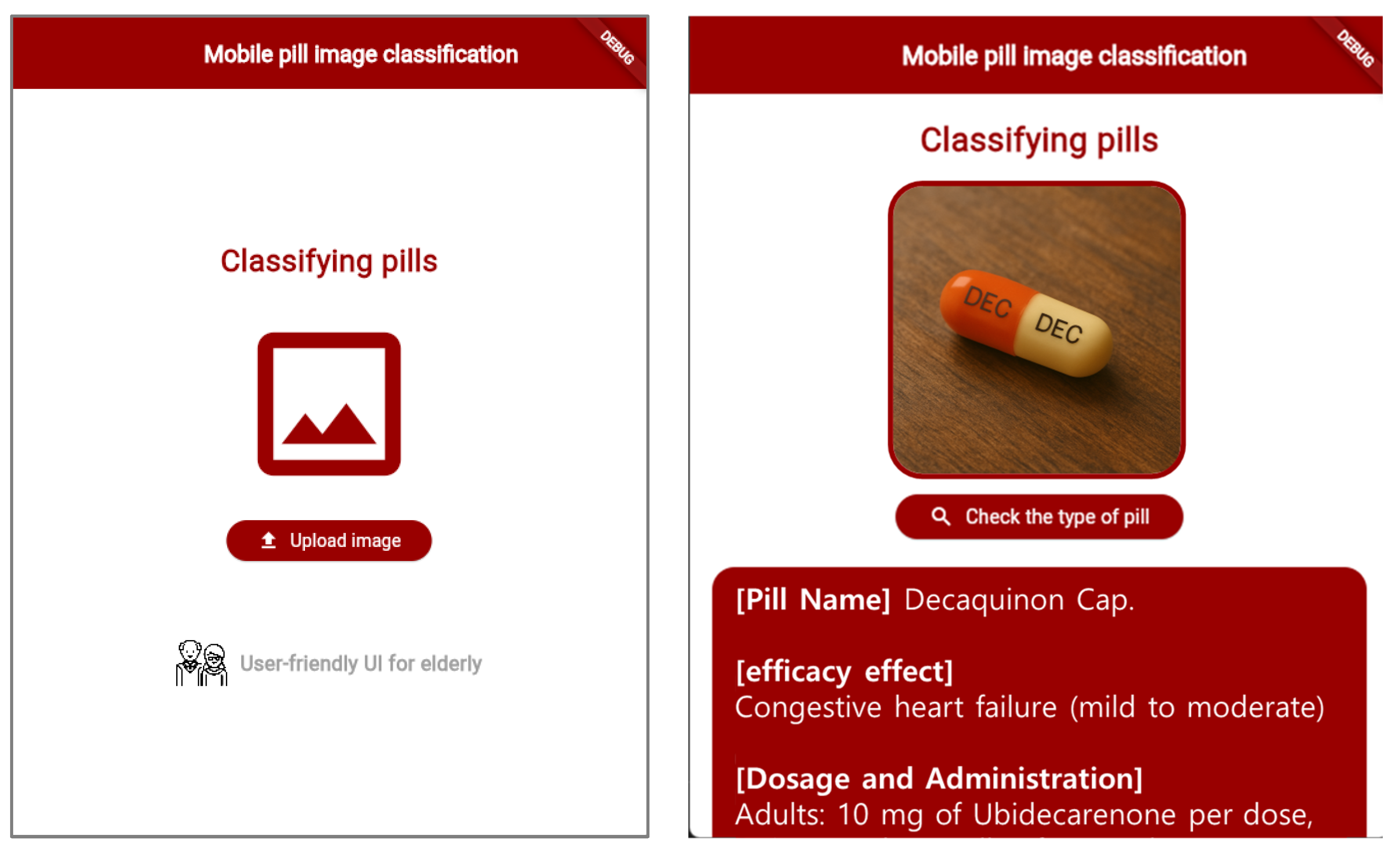

To enhance accessibility, the mobile application interface was designed with a user-centric approach, prioritizing ease of use, clarity, and functionality. The primary target demographic includes elderly users, who may require simplified navigation and clear feedback. Key design considerations included the following:

- A minimalist interface to reduce cognitive load.

- Streamlined navigation for effortless interaction.

- Large fonts and high-contrast UI elements for improved readability.

- Intuitive workflow to guide users through the medication classification process.

The mobile application’s user interface is shown in Figure 11, demonstrating its functionality and layout. By providing instantaneous medication identification capabilities, the system significantly reduces the risk of pharmaceutical misidentification and improves medication safety, particularly for elderly users managing multiple prescriptions.

4. Experimental Results

This section presents a comprehensive evaluation of the pharmaceutical classification model, focusing on its classification accuracy, computational efficiency, inference speed, and real-world applicability. The experimental results provide empirical insights into the model’s generalization capabilities, robustness, and performance across different pharmaceutical types.

4.1. Comparative Model Accuracy Performance Analysis

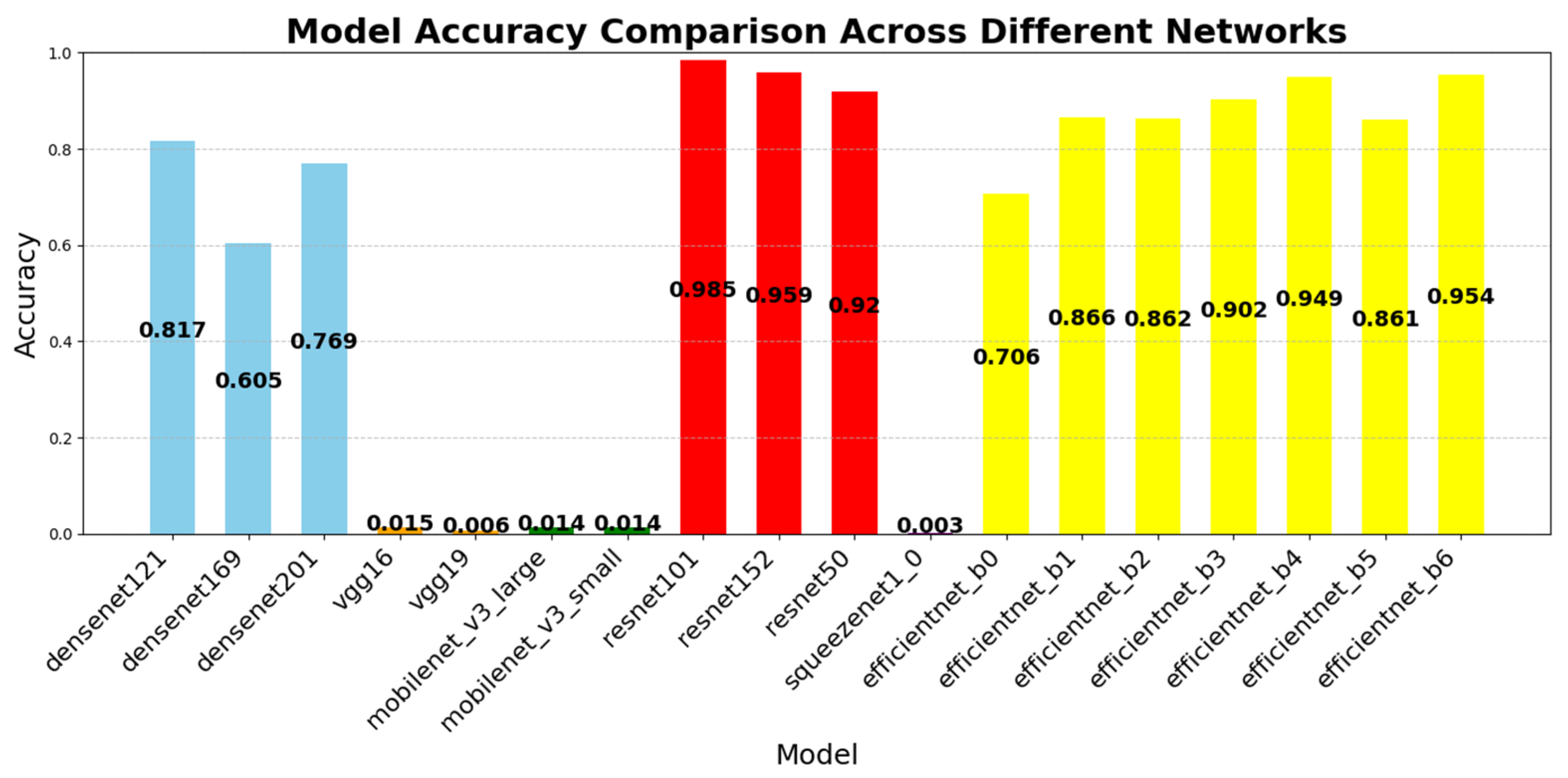

To assess the classification accuracy of the proposed ResNet101-based model, we conducted a comparative analysis with other CNN architectures, including DenseNet, EfficientNet, MobileNet, SqueezeNet, and VGGNet. Accuracy values were rounded to four decimal places and are reported as percentages (e.g., 0.9851 is reported as 98.51%).

Given that precise medication classification is critical for preventing pharmaceutical misuse, our evaluation prioritized models that could avoid ambiguous classifications where a single tablet might be categorized under multiple medication types. Therefore, classification accuracy was used as the principal performance metric.
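For reference, single-label (top-1) accuracy is the fraction of test images whose predicted class matches the ground-truth class. The sketch below shows the computation with rounding to four decimal places; the label values are hypothetical and serve only to illustrate the arithmetic.

```python
from typing import Sequence


def top1_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions equal to the true label, rounded to 4 decimals."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label sequences must be non-empty and of equal length")
    correct = sum(truth == pred for truth, pred in zip(y_true, y_pred))
    return round(correct / len(y_true), 4)


# Hypothetical labels: 6 of 7 predictions are correct.
y_true = ["a", "b", "c", "a", "b", "c", "a"]
y_pred = ["a", "b", "c", "a", "c", "c", "a"]
acc = top1_accuracy(y_true, y_pred)
print(acc)  # 6 / 7 rounded to four decimals: 0.8571
```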

Each deep learning model was initialized with pre-trained weights and fine-tuned on the pharmaceutical image dataset. The evaluation process used consistent data partitioning and hyperparameter tuning across all models.

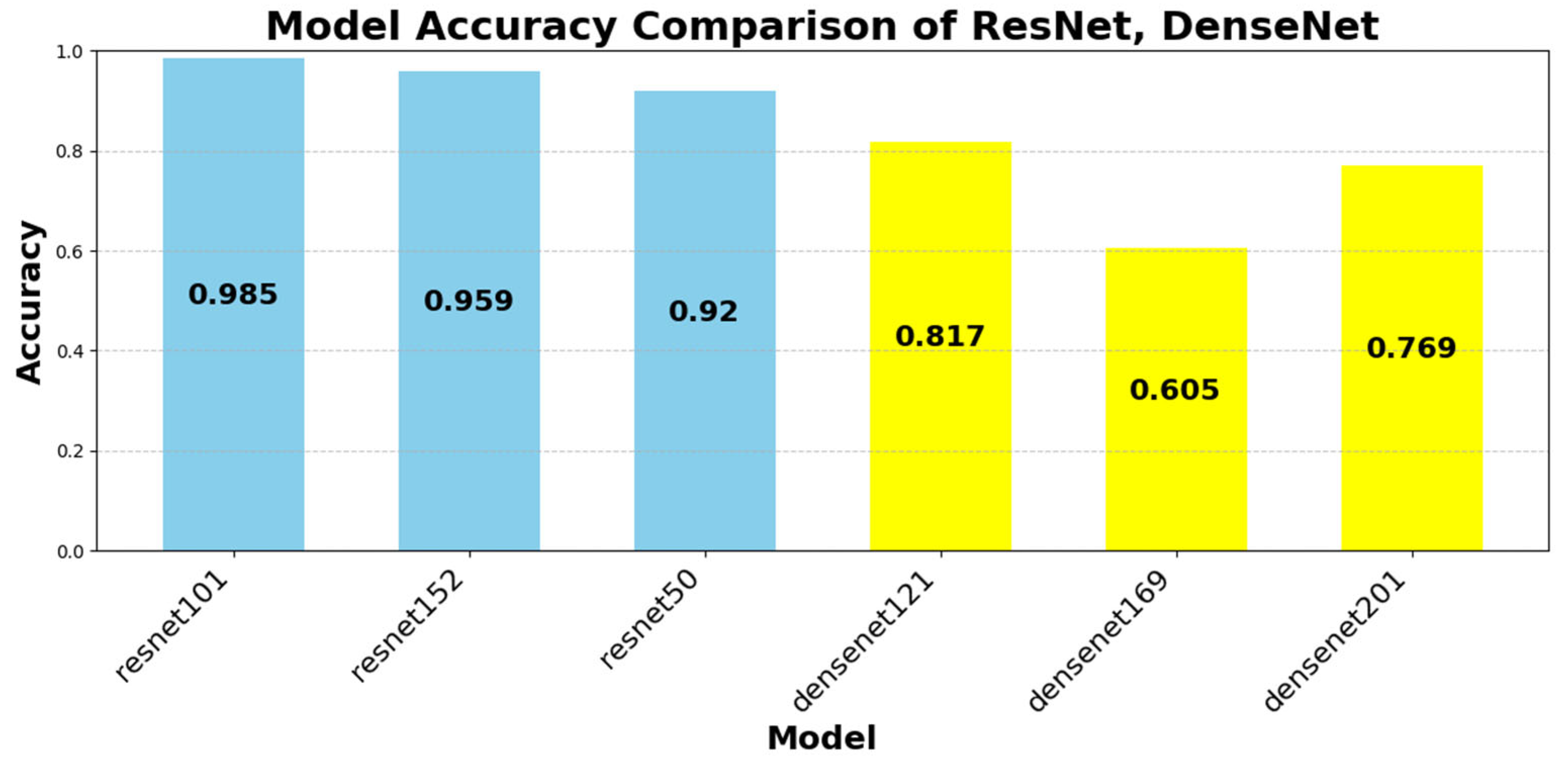

Figure 12 illustrates the classification accuracy of the evaluated CNN architectures. ResNet101 achieved the highest accuracy (98.51%), followed by ResNet152 (95.94%) and EfficientNet B6 (95.40%). In contrast, SqueezeNet1_0 (0.25%) and MobileNet V3 Small (1.37%) demonstrated significantly lower performance.

ResNet101’s superior accuracy can be attributed to its residual learning architecture, which effectively prevents gradient vanishing and facilitates stable gradient propagation through skip connections. This structure allows the model to retain and transmit both low-level and high-level visual features, thereby enabling fine-grained recognition of subtle tablet characteristics—such as engravings, imprints, and minor color variations—that are critical for accurately distinguishing visually similar pharmaceutical samples.

In contrast, EfficientNet’s compound scaling approach prioritizes computational efficiency using MBConv blocks and parameter reduction. While this design is effective for general-purpose tasks (e.g., ImageNet classification), it may limit feature resolution and expressive capacity in domain-specific applications such as pharmaceutical classification, where fine-grained local features are essential.

DenseNet architectures achieved moderate accuracy, with DenseNet121 (81.75%) and DenseNet201 (76.92%) benefiting from dense connectivity and feature reuse, which enhanced gradient propagation and facilitated the learning of tablet-specific features.

In contrast, SqueezeNet, MobileNet, and VGGNet recorded lower accuracy, which can be attributed to their design priorities:

- SqueezeNet and MobileNet focus on model compression and parameter reduction, limiting their representational depth and feature extraction capacity.

- VGGNet employs a simple architecture that lacks the deep-layer optimizations of modern CNNs.

- EfficientNet, while optimized for compound scaling, offers limited depth and feature resolution, making it less suitable for complex pharmaceutical classification tasks.

To further analyze performance trends, Figure 13 provides a breakdown of ResNet and DenseNet variants, demonstrating how architectural modifications impact accuracy.

Despite the general assumption that deeper networks yield better accuracy, our findings reveal a diminishing return effect in certain cases:

- ResNet101 (98.51%) outperformed ResNet152 (95.94%), highlighting that network depth alone does not guarantee improved accuracy.

- DenseNet121 (81.75%) outperformed DenseNet201 (76.92%), suggesting that deeper DenseNet models may experience overfitting in pharmaceutical image classification.

These findings emphasize that model efficiency and architectural balance play a more crucial role than raw parameter count in pharmaceutical classification tasks.

4.2. Model Accuracy Performance by Pharmaceutical Type

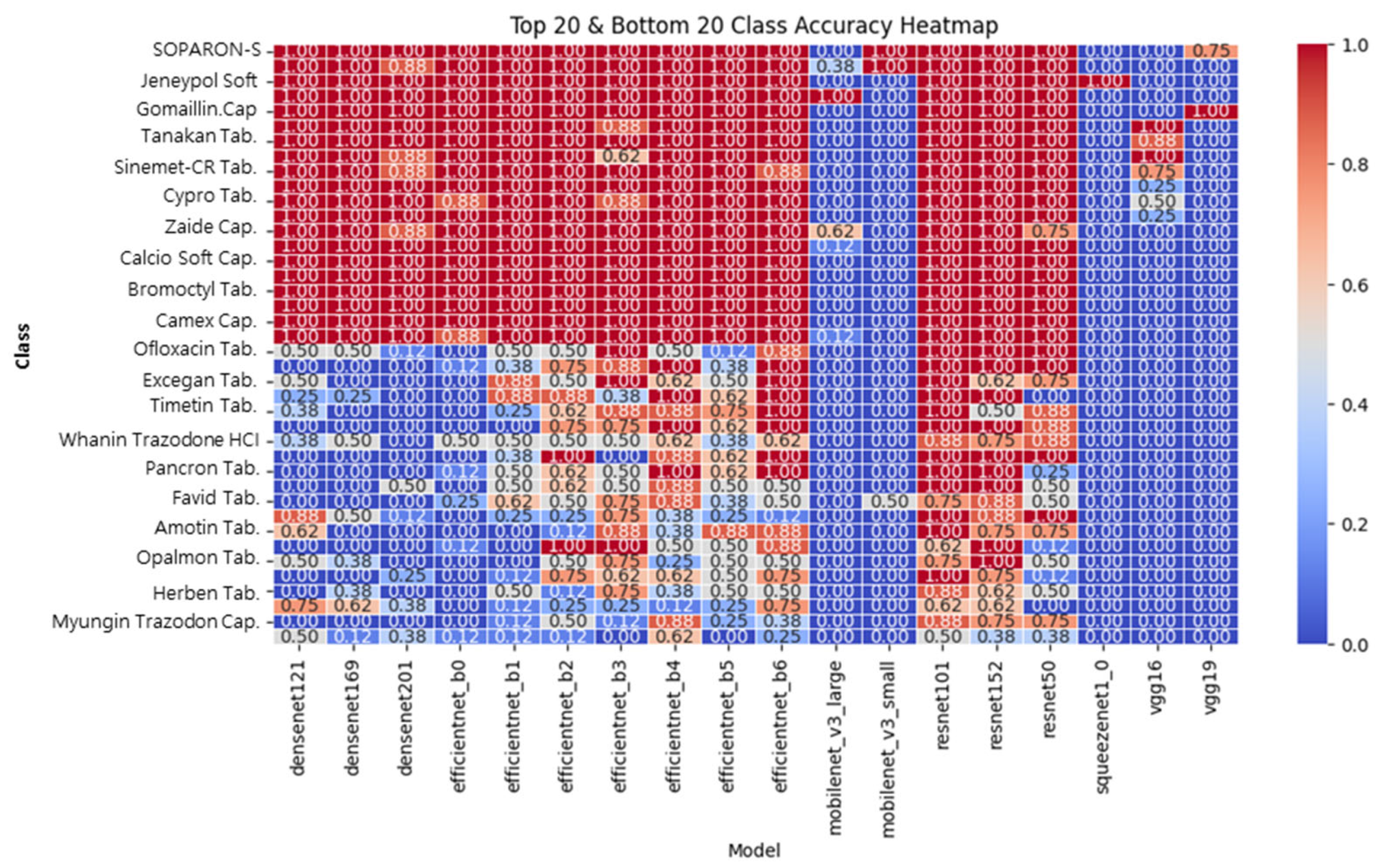

To evaluate how different models classify specific pharmaceutical compounds, a heatmap analysis was performed. Specifically, ResNet101 and ResNet152 demonstrated consistently high classification accuracy (above 0.90) across most medication types, including those with faint imprints or minimal color contrast. This robustness is attributed to the residual learning mechanism, which facilitates the effective propagation of both low-level visual details and high-level semantic representations.

In contrast, the EfficientNet models exhibit fluctuating accuracy patterns across classes. While certain medication types are accurately classified, others—particularly those with subtle morphological differences—show sharp performance degradation. This inconsistency likely stems from the MBConv-based compression mechanism, which reduces feature resolution and limits the model’s ability to preserve fine-grained visual cues essential in pharmaceutical classification.

Lightweight models such as MobileNet and SqueezeNet further accentuate this trend, showing significant misclassifications across visually complex categories. Their parameter-efficient designs, while beneficial for resource-limited environments, are less suited for tasks requiring high expressive capacity.

These heatmap-based visualizations play a critical role in enhancing model transparency and explainability. By offering a qualitative and class-specific perspective, they complement quantitative accuracy metrics and support a more granular evaluation of model behavior. Thus, the use of class-wise heatmaps contributes not only to performance reporting but also to the validation of architectural suitability in real-world domain-specific applications, such as pharmaceutical image classification.

Figure 14 illustrates classification accuracy across different medication categories. The results indicate:

- ResNet101 and ResNet152 maintain consistently high classification accuracy across multiple pharmaceutical categories.

- MobileNet and SqueezeNet struggle with complex pharmaceutical features, demonstrating reduced accuracy in tablets with faint imprints or similar color patterns.

- EfficientNet models exhibit variable performance, excelling in some medication types but performing poorly in others, suggesting potential bias toward specific feature distributions.

These results confirm that deep architectures with strong feature extraction capabilities (e.g., ResNet) are better suited for pharmaceutical classification than lightweight models optimized for mobile efficiency.
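A class-wise heatmap of this kind is built from a matrix with one row per model and one column per medication class, where each cell holds the per-class accuracy. The following sketch shows one way to compute such a matrix; the model names, class names, and predictions are hypothetical and do not reproduce the values in Figure 14.

```python
from collections import defaultdict
from typing import Dict, List, Mapping, Sequence


def per_class_accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> Dict[str, float]:
    """Fraction of samples of each true class that were predicted correctly."""
    totals: Dict[str, int] = defaultdict(int)
    hits: Dict[str, int] = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        totals[truth] += 1
        hits[truth] += int(truth == pred)
    return {cls: hits[cls] / totals[cls] for cls in sorted(totals)}


def heatmap_rows(
    y_true: Sequence[str], preds_by_model: Mapping[str, Sequence[str]]
) -> Dict[str, List[float]]:
    """One row per model, one column per class (classes in sorted order)."""
    return {
        model: list(per_class_accuracy(y_true, preds).values())
        for model, preds in preds_by_model.items()
    }


# Hypothetical labels and predictions, for illustration only.
y_true = ["A", "A", "B", "B", "C", "C"]
preds_by_model = {
    "model_x": ["A", "A", "B", "B", "C", "C"],
    "model_y": ["A", "B", "B", "B", "C", "A"],
}
rows = heatmap_rows(y_true, preds_by_model)
for model, accuracies in rows.items():
    print(model, accuracies)
```

The resulting rows can be passed to any plotting library to render the heatmap; cells with low values identify the classes on which a given architecture is unreliable.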


4.3. Comparison of Accuracy, Computational Load, and Inference Speed

To evaluate the practical feasibility of deploying deep learning models for pharmaceutical classification, we compared multiple architectures in terms of accuracy, inference speed, and computational efficiency. These factors are critical in determining the suitability of a model for real-time mobile applications, where both classification precision and processing latency must be optimized.

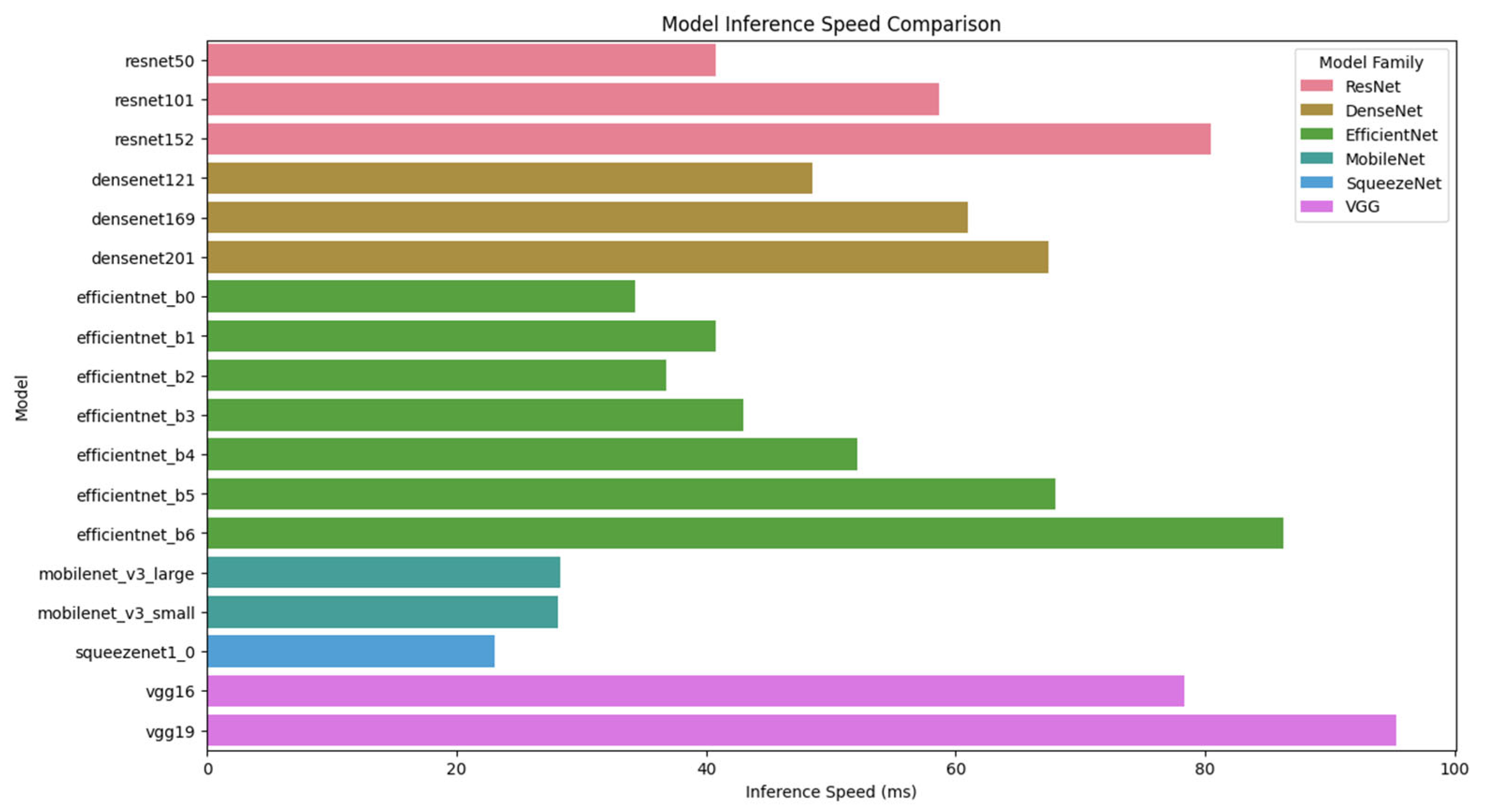

Figure 15 illustrates the inference speed comparison of different deep learning models. As shown in the figure, MobileNet and SqueezeNet exhibit the fastest inference times, making them highly efficient for real-time applications. However, their low classification accuracy (below 2%) makes them unsuitable for high-precision pharmaceutical identification. In contrast, ResNet101, despite having a slightly higher inference time (58.72 ms), offers the highest classification accuracy (98.51%). Therefore, ResNet101 provides an optimal balance between accuracy and computational efficiency, making it the best candidate for real-time mobile-based pharmaceutical classification.
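The measurement protocol behind these timings is not detailed here, so the following is only a sketch of a common approach: discard a few warm-up calls, then average the wall-clock time of repeated calls. The function name, the warm-up and run counts, and the `fake_inference` stand-in (which simply sleeps) are assumptions made for illustration.

```python
import time
from statistics import mean
from typing import Callable, List


def mean_latency_ms(fn: Callable[[], object], warmup: int = 3, runs: int = 20) -> float:
    """Average wall-clock time of fn() in milliseconds, after warm-up calls."""
    for _ in range(warmup):
        fn()  # warm-up calls are not timed (caches, lazy initialization)
    samples: List[float] = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000.0)
    return mean(samples)


def fake_inference() -> None:
    """Stand-in for a model forward pass; sleeps for about 2 ms."""
    time.sleep(0.002)


latency = mean_latency_ms(fake_inference, warmup=1, runs=5)
print(f"{latency:.2f} ms")
```

When timing a model on a GPU, the device should be synchronized before each clock reading, since kernel launches are asynchronous and would otherwise be under-counted.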

To complement the analysis presented in Figure 15, Table 2 provides a detailed comparison of model accuracy, inference speed, and model size. The results indicate that ResNet101 outperforms all other architectures in classification accuracy (98.51%), while maintaining a reasonable inference time (58.72 ms) and a manageable model size (181 MB). Although EfficientNet B6 and ResNet152 show competitive accuracy levels (95.40% and 95.94%, respectively), they require longer inference times (86.26 ms and 80.49 ms), making them less efficient for real-time deployment.

On the other hand, DenseNet models demonstrate moderate accuracy levels (60.48–81.75%) but exhibit inferior inference speeds compared to ResNet architectures, highlighting the limitations of densely connected feature propagation for large-scale image classification tasks. Meanwhile, MobileNet and SqueezeNet models, despite their lightweight structure and rapid inference times (23.07–28.35 ms), fail to achieve adequate classification accuracy (<2%), rendering them impractical for pharmaceutical identification.

These results further emphasize that ResNet101 provides the optimal trade-off between classification accuracy and computational cost, making it the most suitable architecture for real-time pharmaceutical classification on mobile platforms. The findings also highlight the limitations of lightweight CNNs, such as MobileNet and SqueezeNet, which, despite their efficiency, fail to meet classification accuracy requirements for pharmaceutical identification.
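The selection logic implied by this trade-off can be expressed as a simple rule: among the models that meet a minimum accuracy requirement, choose the one with the lowest inference time. The sketch below applies that rule to the three accuracy and latency pairs quoted in the text; the 95% threshold and all identifiers are hypothetical choices for illustration.

```python
from typing import List, NamedTuple, Optional


class ModelResult(NamedTuple):
    name: str
    accuracy_pct: float
    latency_ms: float


# Accuracy and latency pairs as quoted in the text (latencies read "respectively").
RESULTS: List[ModelResult] = [
    ModelResult("ResNet101", 98.51, 58.72),
    ModelResult("ResNet152", 95.94, 80.49),
    ModelResult("EfficientNet B6", 95.40, 86.26),
]


def fastest_above(results: List[ModelResult], min_accuracy_pct: float) -> Optional[ModelResult]:
    """Return the lowest-latency model meeting the accuracy threshold, if any."""
    eligible = [r for r in results if r.accuracy_pct >= min_accuracy_pct]
    return min(eligible, key=lambda r: r.latency_ms) if eligible else None


choice = fastest_above(RESULTS, 95.0)  # 95% is a hypothetical requirement
print(choice.name if choice else "no model meets the threshold")
```

With these values, ResNet101 is both the most accurate and the fastest of the three, so it is selected for any threshold that it satisfies.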

5. Conclusions

In this study, we proposed a deep learning-based pharmaceutical recognition system designed for real-time medication identification on mobile devices. Our system leverages ResNet101, which demonstrated superior classification accuracy (98.51%) compared to other widely used CNN architectures, including DenseNet, EfficientNet, MobileNet, SqueezeNet, and VGGNet. Through extensive experimentation, we validated the effectiveness of residual learning in enhancing model stability and interpretability. Additionally, the integration of heatmap-based visual explanations improved transparency in automated medication recognition. The proposed system was implemented in a mobile environment, ensuring accessibility and usability, particularly for elderly individuals who are at higher risk of medication errors. By optimizing real-time inference capabilities and developing a user-friendly interface, our research contributes to the growing field of AI-powered healthcare solutions. The results suggest significant potential for reducing medication-related errors and enhancing patient safety in clinical and home care settings.

Recognizing the operational limitations of mobile deployment, we emphasize the importance of algorithmic efficiency and hardware resource management. Our current system uses a ResNet101 model stored in .pkl format for inference; future iterations will incorporate model optimization techniques, including precision-aware training methods and conversion to interoperable formats (specifically TensorFlow Lite and ONNX Runtime), to reduce computational latency and memory use on resource-constrained devices. These strategies are currently under empirical evaluation and will be integrated into subsequent releases to ensure effectiveness in computationally limited healthcare environments.
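A rough estimate indicates how much reduced numerical precision could lower the memory footprint. The calculation assumes that the stored model has approximately the parameter count of the standard ImageNet ResNet-101 (about 44.5 million) and that storage is dominated by the weights; both are assumptions, and the figures are estimates rather than measured results.

```latex
\begin{align*}
\text{size} &\approx N_{\text{params}} \times b \\
\text{FP32 } (b = 4\ \text{bytes}) &: \; 44.5 \times 10^{6} \times 4 \approx 178\ \text{MB} \\
\text{FP16 } (b = 2\ \text{bytes}) &: \; 44.5 \times 10^{6} \times 2 \approx 89\ \text{MB} \\
\text{INT8 } (b = 1\ \text{byte}) &: \; 44.5 \times 10^{6} \times 1 \approx 44.5\ \text{MB}
\end{align*}
```

The FP32 estimate is close to the 181 MB reported for the current model, which suggests that 8-bit quantization could reduce storage by roughly a factor of four, subject to verification of its effect on accuracy.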

Future research will focus on expanding the dataset to include a broader range of pharmaceutical variations, improving the model’s robustness against real-world imaging conditions, and exploring the integration of multimodal data sources, such as textual drug information and barcode recognition, to further enhance system performance. By continuously refining deep learning-based pharmaceutical recognition, we aim to contribute to the advancement of safe and efficient medication management.