Abstract

Smart farms refer to spaces and technologies that utilize networks and automation to monitor and manage the environment and livestock without the constraints of time and space. As various devices installed on farms are connected to a network and automated, farm conditions can be observed remotely anytime and anywhere via smartphones or computers. These smart farms have evolved into smart livestock farming, which involves collecting, analyzing, and sharing data across the entire process from livestock production and growth to post-shipment distribution and consumption. This data-driven approach aids decision-making and creates new value. However, in the process of evolving smart farm technology into smart livestock farming, challenges remain in the essential requirements of data collection and intelligence. Many livestock farms face difficulties in applying intelligent technologies. In this paper, we propose an intelligent monitoring system framework for smart livestock farms using artificial intelligence technology and implement deep learning-based intelligent monitoring. To detect cattle lesions and inactive individuals within the barn, we apply the RT-DETR method instead of the traditional YOLO model. YOLOv5 and YOLOv8 are representative models in the YOLO series, both of which utilize Non-Maximum Suppression (NMS). NMS is a postprocessing technique used to eliminate redundant bounding boxes by calculating the Intersection over Union (IoU) between all predicted boxes. However, this process can be computationally intensive and may negatively impact both speed and accuracy in object detection tasks. In contrast, RT-DETR (Real-Time Detection Transformer) is a Transformer-based real-time object detection model that does not require NMS and achieves higher accuracy compared to the YOLO models. 
In environments where large-scale datasets can be obtained via CCTV, Transformer-based detection methods such as RT-DETR are expected to outperform traditional YOLO approaches in detection performance. RT-DETR reduces computational costs and optimizes query initialization, making it more suitable for the real-time detection of cattle maintenance behaviors and related abnormal behaviors. A comparative analysis with existing YOLO techniques confirms that RT-DETR achieves higher performance than YOLOv8. This research helps address the low accuracy and high redundancy of traditional YOLO models in behavior recognition, and it contributes to more efficient livestock management and improved productivity by applying deep learning to the smart monitoring of livestock farms.

1. Introduction

Agriculture holds significant importance for purposes such as food supply and the preservation of environmental ecosystems. Additionally, the livestock industry, as a high-value-added sector, is expected to account for a substantial portion of agricultural demand. The ongoing decline in rural populations and the reluctance to engage in agricultural work are expected to exacerbate labor shortages and cause management crises in the livestock industry. In Europe, the livestock equipment industry is transitioning from hardware-based to software-integrated operations, with the active development of products using farm integration management technologies and advanced sensing systems. By incorporating software functions, it is possible to maximize hardware performance, reduce farm labor, and manage precise specifications. European livestock equipment manufacturers are increasingly developing integrated management technologies to enhance the usability and applicability of their products across farming operations.

In line with the smart era, efforts are being undertaken to improve productivity and increase income in the livestock industry by integrating advanced ICT convergence technologies and optimizing livestock management [1]. As part of the construction of intelligent livestock management systems, research is being conducted to develop smart farm models that are tailored to each livestock type [2,3].

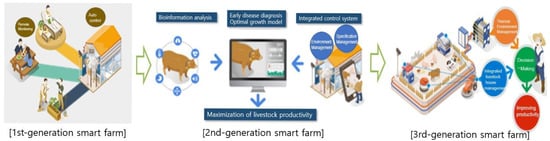

Smart livestock farming leverages technologies such as the Internet of Things (IoT) and artificial intelligence (AI) to simultaneously improve agricultural productivity and optimize the required workforce [2]. In these applications, research on the situational awareness of animals plays a critical role. The domestic and international smart farm markets are growing at annual rates of over 10%, and the smart farm market in the livestock sector is also expected to grow significantly. Smart agriculture, based on ICT convergence technologies, is entering the stage of foundational technology development and growth. Figure 1 illustrates the three generations of domestic livestock smart farms categorized by the National Institute of Animal Science: first-generation basic models, second-generation advanced models, and third-generation cutting-edge models. Currently, most domestic livestock farms implementing smart farm technologies are at the first-generation level or at an intermediate 1.5-generation level. This indicates a pressing need for progress toward second-generation and third-generation livestock smart farms to fully realize the benefits of these advanced technologies.

Figure 1.

Domestic smart livestock farm model (National Institute of Animal Science in Korea, 2022).

As the adoption of smart livestock systems continues to expand among livestock farms, research is being conducted to establish data use frameworks that enhance the competitiveness of farms. These frameworks focus on standard-based data collection and analysis, as well as data-driven livestock management, enabling diverse forms of data application. However, compared to European nations that are advanced in livestock management, such as the Netherlands, Denmark, and Belgium, which have established the concept of precision livestock farming similar to smart farming and are actively pursuing research and dissemination, domestic efforts remain in the early stages.

In Europe, research has been actively performed to monitor and control environmental factors within livestock facilities, such as temperature, humidity, and harmful gases. Using ubiquitous technology, automated systems have been developed to regulate lighting and the opening or closing of roofs during rainy conditions. Advanced systems have been created to allow large-scale farms to control their facilities in real time while viewing video feeds. These technologies also include fuzzy systems to reason about environmental changes, control ventilation systems, and trigger alarms in hazardous situations caused by environmental changes, thereby promoting advanced livestock practices.

In the Netherlands, sensors attached to cattle collect approximately 200 MB of data per cow annually. These data are combined with external information, such as climate change, to enable farmers to continuously monitor the movements and health of their livestock. Additionally, the Smart Dairy Farming program, implemented from 2015 to 2017, achieved smart livestock farming using sensors, indicators, and decision-making models. Across various applications, deep learning, machine learning, and big data are increasingly used to maximize productivity and improve management efficiency. ICT convergence and intelligence are essential to achieving the unmanned, integrated livestock management system that livestock smart farms ultimately pursue. Smart livestock technology should therefore be developed to support not only feeding management but also productivity improvement and more convenient and efficient farm management, through data collection, data-based control systems, and automated monitoring systems that reflect the functional requirements shown in Figure 1.

In this paper, we propose an AI-based intelligent monitoring framework for livestock farms by applying the RT-DETR model as an alternative to the commonly used CNN-based YOLO models. Using collected video footage, which is already available through CCTV systems commonly installed on most cattle farms, we were able to collect a training dataset and test dataset to detect lesions and identify abnormal behaviors in Hanwoo cattle without the need for additional equipment. To ensure high recognition accuracy, the RT-DETR model was applied to construct the AI model. The objective of this study is to apply AI technologies to focus on detecting abnormal behaviors and situational awareness related to the individual maintenance behaviors of Hanwoo cattle. This differentiates this study from existing smart livestock systems, which primarily focus on environmental control and the monitoring of livestock environments. Therefore, this research is expected to enhance the efficiency of livestock management while contributing to improved productivity in the industry.

The rest of this paper is organized as follows: Section 2 reviews the research on smart farm monitoring and deep learning models. Section 3 proposes a framework for a smart livestock monitoring system using AI. Section 4 describes dataset collection and preprocessing and AI model construction. Section 5 describes the experiments and the verification of the constructed model, and the conclusions and future work are presented in Section 6.

2. Related Work

Animals express their condition and responses to external environmental stimuli through their behavior. Most livestock facilities are equipped with CCTV, and by collecting video footage to analyze livestock behavior patterns, it is possible to detect abnormal or irregular behaviors in real time. A rapid response to such behaviors enables precise livestock management, improving overall efficiency and animal welfare [2,3,4].

In the early stages of smart farming, accelerometers and gyroscopic sensors were attached to livestock to observe their movements. However, this approach caused discomfort to the animals while failing to yield positive results. As ICT technologies and livestock industry innovations have progressed, more advanced integration has enabled the collection of information about livestock conditions and barn environments. Utilizing these data, research is actively being conducted on applications such as growth measurement, animal condition recognition, individual behavior classification, animal welfare assessment, and disease detection.

In 2018, M.F. Hansen and colleagues conducted research utilizing visible light and thermal imaging cameras installed on the ceiling of livestock barns to recognize livestock behavior. However, traditional image processing techniques had limitations in fully identifying animal behaviors. Subsequently, simple object-tracking research evolved into more advanced motion recognition studies using deep learning-based CNN feature extractors, enabling the recognition of complex movements [5,6,7,8]. These techniques have since been applied to areas such as facial recognition and basic behavior classification. Additionally, research has been conducted on utilizing CCTV footage not only to monitor livestock behavior but also to integrate spatiotemporal metadata. This approach aims to notify farm managers of specific events in real time, enhancing farm management efficiency.

Another study proposed a method to convert a hierarchical structure into domain-specific information by structuring it as graph nodes. By mapping spatiotemporal data and behavioral recognition—such as individual and group behaviors—along with graph-structured interrelated information, an identification number was assigned to each simple action level [6,7]. This allowed for the simultaneous processing of each object’s behavior based on body parts, simple actions, and identification numbers. The method was applied to monitor both the individual and group behaviors of Hanwoo cattle, enabling object tracking through spatiotemporal analysis. However, notwithstanding the depth and sophistication of these studies, practical implementation in actual Hanwoo livestock farms remains challenging. High costs and the need for additional ICT equipment pose significant barriers, making it difficult to effectively improve animal welfare conditions in current livestock environments [8].

Recent studies in real-time object detection have focused prominently on CNN-based single-stage algorithms such as the YOLO (You Only Look Once) series, which has demonstrated outstanding performance in real-time object detection tasks over the past few years. The YOLO series includes both anchor-based and anchor-free approaches [9,10,11,12,13]. Since its introduction, the YOLO approach has undergone continuous development that has significantly improved its performance, and it has become synonymous with real-time object detection. YOLOv3 addressed limitations of previous versions, in particular by detecting small objects more effectively [5,6,7,8]. YOLO-based models have been actively used to detect and monitor cattle behavior and body parts. One study automatically identified livestock using YOLOv5 and improved the detection accuracy by applying mosaic augmentation to partial images such as the cow’s mouth [2]. Another study identified the feeding behavior of dairy cows in a feeding environment using the DRN-YOLO technique, which is based on YOLOv4 [3]. YOLOv5 has also been used to detect the major body parts of cows, and the YOLOv5-EMA model was used to detect the appearance of cows, improving the detection of small parts such as the head or legs [9,10]. Another study identified abnormal conditions of cows’ legs by detecting movement characteristics such as head shaking and by measuring posture duration [11]. A further study monitored behaviors such as the lying down and standing of dairy cows on a farm using the Res-Dense YOLO technique [12].

However, these methods often generate a large number of overlapping bounding boxes, requiring a postprocessing step known as Non-Maximum Suppression (NMS) to eliminate redundancies. This step can become a bottleneck that reduces the overall detection speed, which is problematic for applications requiring both high speed and accuracy. To address this issue, DETR (Detection Transformer) was introduced, which eliminates the need for manually crafted anchors and NMS [13,14,15,16,17]. Instead, DETR adopts an end-to-end Transformer-based architecture that uses bipartite matching and directly predicts a one-to-one set of objects. Despite these architectural advantages, DETR faces significant limitations such as slow training convergence, high computational cost, and challenges in query optimization [18]. Subsequent improvements such as Deformable-DETR, DAB-DETR, DN-DETR, and Group-DETR have been proposed to address these issues, but these Transformer-based models still tend to have high computational requirements and are not fully optimized for real-time performance. RT-DETR (Real-Time Detection Transformer) has emerged as a more suitable alternative for real-time object detection. Its advantages include improved query initialization, reduced computational cost, and detection performance suitable for real-time use [18,19,20].
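To make the NMS postprocessing step concrete, the following is a minimal sketch of greedy NMS in plain Python. It is an illustration only, not the implementation used by any YOLO release; the function names `iou` and `nms`, the `(x1, y1, x2, y2)` box layout, and the default threshold of 0.5 are assumptions made for this example.

```python
from typing import List, Tuple

# Assumed box layout for this sketch: (x1, y1, x2, y2) in pixels.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes: List[Box], scores: List[float],
        iou_threshold: float = 0.5) -> List[int]:
    """Greedy NMS: visit boxes from highest to lowest score and keep a box
    only if it does not overlap an already kept box by more than the threshold.
    Returns the indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep


# Two heavily overlapping boxes and one separate box.
example_boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
example_scores = [0.9, 0.8, 0.7]
kept = nms(example_boxes, example_scores)
```

Because every candidate box is compared against the boxes already kept, the cost grows with the number of predictions, which is the overhead that NMS-free detectors such as DETR and RT-DETR avoid.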

In this paper, we propose an AI-based intelligent monitoring system framework and implement a deep learning-based AI model to detect lesions and identify inactive Hanwoo cattle within the barn environment. We apply the RT-DETR model which enables the intelligent recognition of abnormal behaviors and situational awareness related to the individual maintenance behaviors of cattle. To validate the performance of RT-DETR, a comparative analysis was conducted against traditional YOLO-based methods.

This research is the first application of RT-DETR to livestock behavior recognition, and it will contribute to enhancing the efficiency and productivity of livestock management by integrating intelligent technologies into existing smart farm systems that are primarily focused on environmental control and monitoring.

3. AI-Based Monitoring Framework for Smart Livestock Farms

To establish a welfare-friendly livestock facility, it is essential to provide appropriate levels of care across several aspects, including environmental conditions, physical health, behavioral patterns, and animal–human interactions. From an environmental perspective, the continuous monitoring of temperature, humidity, carbon dioxide levels, and the cleanliness of bedding materials is necessary. Additionally, the physical appearance of cattle can serve as an important indicator of their health and welfare. It is critical to look for signs of illness, injury, or abnormal behaviors and to promptly recognize and respond to such conditions. To this end, an AI model can be developed to enhance the intelligence of livestock monitoring using CCTV systems, which are already commonly installed on most livestock farms. By applying AI to analyze real-time CCTV footage, it is possible to detect lesions, abnormal behaviors, and other signs of distress in Hanwoo cattle, without needing additional equipment. This approach enables continuous and efficient health and welfare monitoring within the barn, supporting the development of smarter, welfare-oriented livestock management systems.

Many studies have been conducted on livestock farms to quickly identify abnormal behavior in cattle using cameras and image recognition programs. Image processing techniques that allow programs to identify lesions in cattle and notify managers, without requiring staff to continuously observe the livestock and the barn, have recently produced many results. In particular, there is a shift toward applying deep learning-based AI to object recognition.

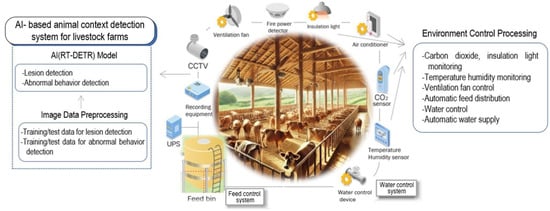

In this study, we design an AI-based situational awareness system architecture for the behavior of Hanwoo cattle in a barn, which forms the basis for intelligent barn automation. Figure 2 shows a schematic of the AI-based smart livestock framework we developed that enables early response to the abnormal situations and behavior of Hanwoo cattle in a barn through AI-based smart monitoring. Recently, livestock farms have been able to install CCTVs in barns to obtain constant barn video data, and these can be used as a learning dataset to build an AI model.

Figure 2.

A Framework for the AI-based smart monitoring of livestock farms.

4. Data Inspection and RT-DETR Model

4.1. Data Inspection

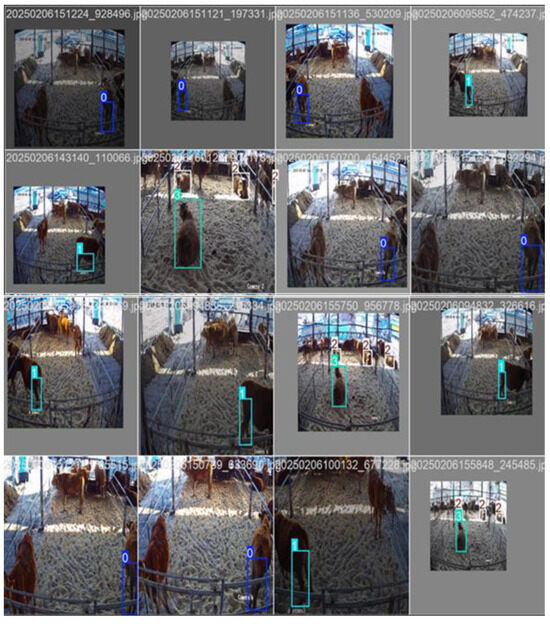

In this study, a cow with many instances of contaminants on its body was judged to have a suspicious lesion and was labeled accordingly. Figure 3 shows an example in which the legs and torso are marked as suspicious lesion areas. A cow that sat motionless for a certain period of time was also classified as a suspected lesion case: the change in the bounding box of the object was calculated, and if there was no movement for more than an hour, the cow was identified as a suspicious case. Figure 3 also shows the shape of a motionless cow.
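The motionless-cow rule described above can be sketched as follows. This is a hypothetical illustration, not the procedure used in the study: the observation layout, the function names, the pixel tolerance of 10, and the assumption that each track belongs to a single, already identified animal are all choices made for this example. Only the one-hour duration comes from the text.

```python
from typing import List, Tuple

# Assumed layout: (timestamp in seconds, x1, y1, x2, y2) for one tracked cow.
Observation = Tuple[float, float, float, float, float]


def box_change(a: Observation, b: Observation) -> float:
    """Largest absolute change in any box coordinate between two observations."""
    return max(abs(a[i] - b[i]) for i in range(1, 5))


def is_motionless(track: List[Observation],
                  min_duration_s: float = 3600.0,
                  tolerance_px: float = 10.0) -> bool:
    """True if the box has stayed within tolerance_px of its latest position
    for more than min_duration_s seconds (one hour by default)."""
    if not track:
        return False
    latest = track[-1]
    still_since = latest[0]
    # Walk backwards in time until the box differs noticeably from the latest one.
    for obs in reversed(track):
        if box_change(obs, latest) > tolerance_px:
            break
        still_since = obs[0]
    return latest[0] - still_since > min_duration_s


# A cow observed every 10 minutes for 70 minutes without moving.
resting = [(float(t), 100.0, 100.0, 200.0, 200.0) for t in range(0, 4201, 600)]
# A cow that shifted by 50 px at the 50-minute mark.
moved = [(float(t), 100.0, 100.0, 200.0, 200.0) for t in range(0, 3000, 600)]
moved += [(float(t), 150.0, 100.0, 250.0, 200.0) for t in range(3000, 4201, 600)]
```

A tolerance is used rather than an exact equality check because detector outputs jitter by a few pixels from frame to frame even when the animal does not move.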

Figure 3.

Images of suspected lesions and the shape of a cow that is motionless.

A total of 8142 images were used for model training. Table 1 presents the number of instances for each of the four classes of abnormal behavior, each annotated with bounding boxes. Although a test dataset was secured, it was excluded from the experiments in this study due to its high similarity in distribution to the validation dataset. Each object was labeled with a class and a rectangular bounding box using the LabelImg tool; Figure 4 shows examples of the data and annotations, and Figure 5 shows examples of the class labeling results. At present, the dataset includes only postural data obtained under the current CCTV recording setup. In future work, we plan to augment the dataset by incorporating data captured from multiple angles to improve the robustness and generalizability of the model.

Table 1.

Composition of dataset.

Figure 4.

Data example and annotation.

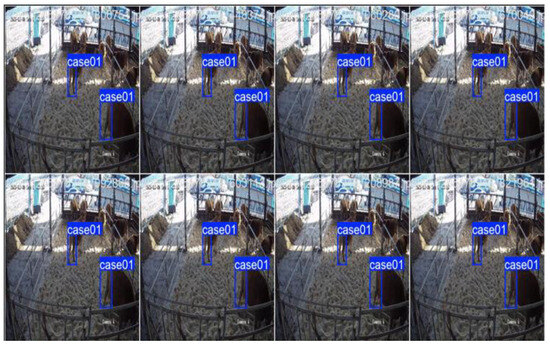

Figure 5.

Examples of data classes.

4.2. RT-DETR Model

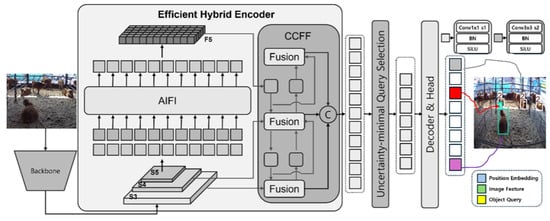

Recent advancements in Transformer-based object detection have led to models such as DETR and Deformable DETR, which formulate object detection as a direct set prediction problem, eliminating the need for hand-designed anchors and Non-Maximum Suppression (NMS). However, the slow convergence and inference speed of these models have hindered their practical deployment in real-time applications [16,17,18,19]. To address these limitations, RT-DETR (Real-Time Detection Transformer) was proposed, which is a real-time object detection framework that maintains the end-to-end simplicity of DETR while significantly improving the inference speed and computational efficiency. RT-DETR introduces a hybrid encoder that processes multi-scale feature maps extracted from a CNN backbone [19,20]. Within the encoder, two novel modules are employed:

AIFI (Attention-based Intra-scale Feature Interaction) applies lightweight self-attention exclusively to the highest-level feature map (S5), which has the lowest spatial resolution, to enhance intra-scale contextual information with minimal computational cost.

CCFF (CNN-based Cross-scale Feature Fusion) efficiently fuses adjacent scale features (S3, S4, S5) using convolutional operations, enabling effective multi-scale feature integration without relying on attention mechanisms.

RT-DETR (Real-Time Detection Transformer) extends DETR and, like DETR, does not require NMS (Non-Maximum Suppression), which negatively affects real-time object detection [20]. An effective real-time model needs to reduce computational costs, optimize query initialization, and deliver high detection performance.

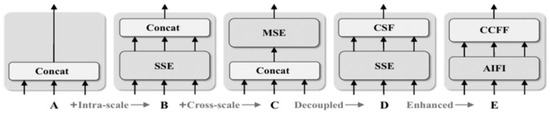

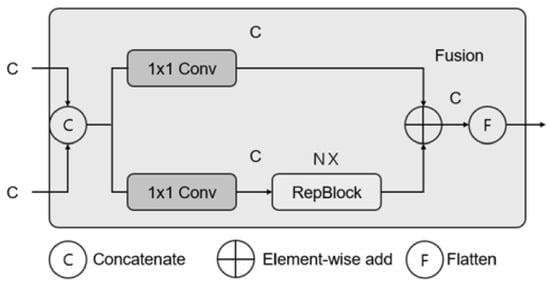

Figure 6 shows the RT-DETR pipeline, in which the features from the last three stages of the backbone, {S3, S4, S5}, are used as inputs to the encoder. An efficient hybrid encoder converts the multi-scale features into a sequence of image features through intra-scale feature interaction and cross-scale feature fusion. Then, uncertainty-minimal query selection is used to select a fixed number of encoder features to serve as the initial object queries for the decoder. Finally, the decoder, equipped with auxiliary prediction heads, iteratively optimizes the object queries to generate classifications and bounding boxes. AIFI performs intra-scale interaction only at the S5 level using a single-scale Transformer encoder, which significantly reduces computational cost while maintaining performance. CCFF is an optimized cross-scale fusion module that uses fusion blocks composed of multiple convolutional layers. As shown in Figure 8, each fusion block fuses two adjacent features into a new representation, effectively integrating information across different scales. Because high-level features containing meaningful information related to objects are extracted from low-level features, performing feature interaction on all of the multi-scale features involves redundant computation. To show that performing intra-scale and cross-scale feature interaction simultaneously is inefficient, various types of encoders that take the multi-scale features {S3, S4, S5} as input were designed, as shown in Figure 7.

Figure 6.

RT-DETR model overview.

Figure 7.

The encoder structure for each variant.

Figure 8.

Fusion block in CCFF.

The hybrid encoder process can be mathematically formulated as follows:
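A sketch of this formulation, consistent with the AIFI and CCFF descriptions above, is given below. The symbols $Q$, $K$, $V$, $F_5$, and $O$ are notation assumed here for the attention inputs, the refined S5 feature, and the encoder output, respectively.

$$
\begin{aligned}
Q = K = V &= \mathrm{Flatten}(S_5), \\
F_5 &= \mathrm{Reshape}\big(\mathrm{AIFI}(Q, K, V)\big), \\
O &= \mathrm{CCFF}\big(\{S_3, S_4, F_5\}\big),
\end{aligned}
$$

Here $\mathrm{Flatten}$ turns the S5 feature map into a sequence of vectors for self-attention, and $\mathrm{Reshape}$ restores the result to the spatial shape of $S_5$ so that it can be fused with $S_3$ and $S_4$ by the convolutional fusion blocks.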

5. Analysis of Experimental Results

The experiment was conducted with an Intel® Xeon® CPU @ 2.20 GHz, 8 GB of memory, and an NVIDIA A100-SXM4-40GB GPU. The dataset was divided into training, validation, and testing sets of 7991, 151, and 150 images, respectively, and the RT-DETR model was trained on it. The hyperparameters for training were an image size of 640 × 640 pixels, a momentum of 0.937, a learning rate of 0.00125, a batch size of 16, a training period of 10, and the AdamW optimizer.

The dataset used for training and validation consisted of 8142 livestock images at a resolution of 640 × 640, and the details of the identified labeling classes are shown in Table 2. The hyperparameters used for training were as follows:

Table 2.

Dataset partitioning.

Learning rate: 0.00125;

Batch size: 16;

Optimizer: AdamW.

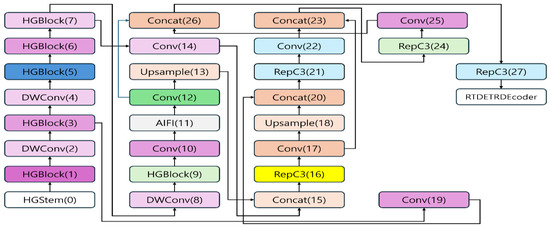

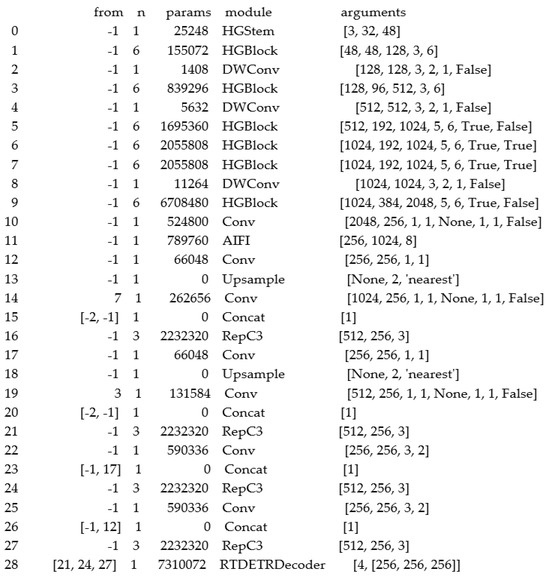

Figure 9 shows the layer-wise network diagram, detailing the dimensions and parameters of each layer.

Figure 9.

Network structure diagram (layer summary: 32,814,296 parameters, 108.0 GFLOPs).

5.1. Training Results

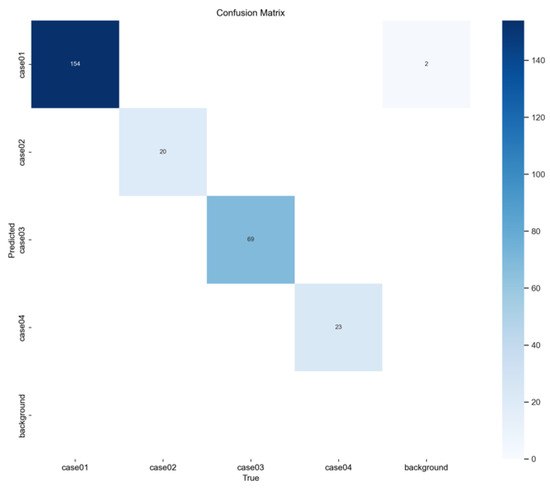

Figure 10 shows the confusion matrix, where the four suspected lesion classes are denoted as case01, case02, case03, and case04, with each class showing a near-ideal result. The confusion matrix summarizes the number of detected and undetected instances. The “background” column indicates cases where an object was not present but was detected. Specifically, for case01, the model achieved 154 true positive detections and 2 false positives.

Figure 10.

Confusion matrix.

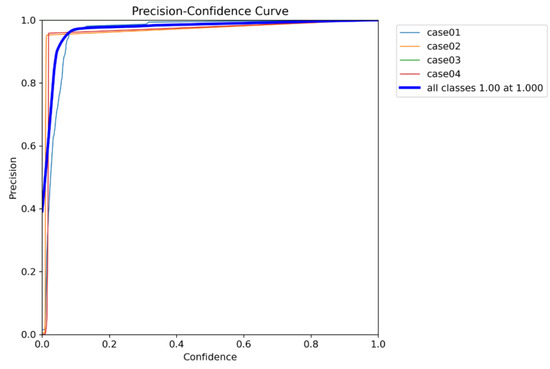

Figure 11 shows the ideal trend in the accuracy for the four lesion-suspected classes. The precision curve presents the proportion of true positive predictions among all predicted instances. All classes achieve a precision score of 1.0, indicating perfect accuracy in identifying the respective lesion-suspected categories.

Figure 11.

Graph of precision.

Figure 12 illustrates the proportion of true positive predictions among all actual positive objects. Each curve shows an ideal trend across all categories, indicating that the model is effectively detecting most of the lesion-suspected areas present in the cattle images. This demonstrates the model’s strong capability to identify relevant regions of interest related to potential health concerns.

Figure 12.

Recall curve.

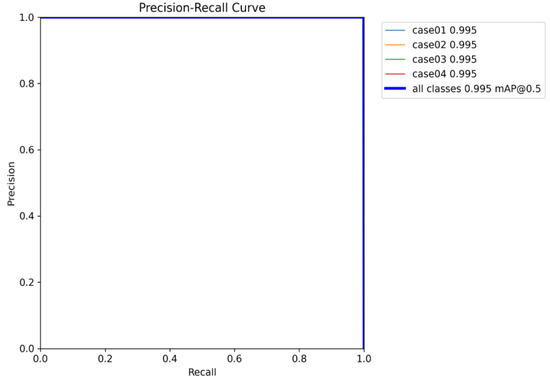

Figure 13 simultaneously plots the precision and recall, providing insights into the balance between the two metrics for each class. The area under the precision–recall curve (AUC-PR) serves as an indicator of the model performance. A larger AUC-PR value signifies that the model maintains a well-balanced trade-off between precision and recall, confirming its effectiveness across the different classes of suspected lesions.

Figure 13.

Precision–recall curve.

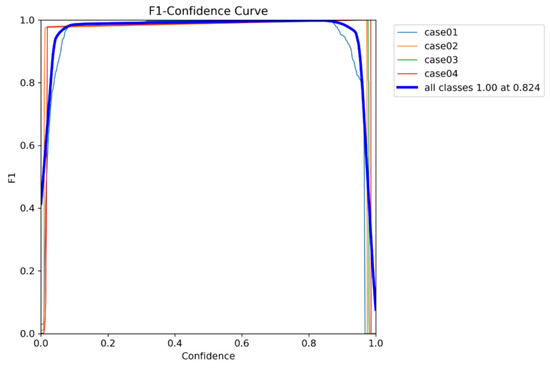

Figure 14 presents the F1 score, which is the harmonic mean of the precision and recall. The model demonstrates high F1 scores across all classes, indicating that it accurately detects lesion-suspected images while maintaining a strong balance between precision and recall. This confirms the model’s robustness in identifying and classifying potential abnormalities in cattle images.
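For reference, with $TP$, $FP$, and $FN$ denoting the numbers of true positives, false positives, and false negatives, the standard definitions behind these curves are:

$$
P = \frac{TP}{TP + FP}, \qquad
R = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \, P \, R}{P + R}.
$$

Because the harmonic mean is dominated by the smaller of its two arguments, a high $F_1$ score is only possible when precision and recall are both high.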

Figure 14.

F1-score curve.

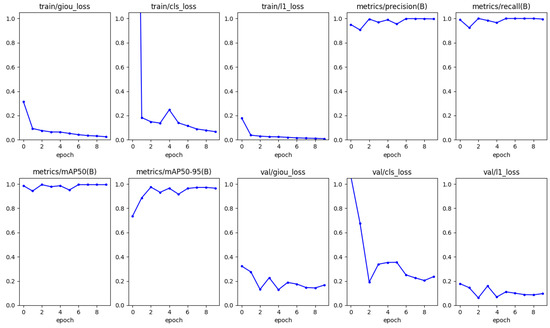

Figure 15 shows the model’s performance during the training and validation stages. It presents metrics such as the bounding box loss, object loss, classification loss, precision, recall, and mAP, all of which indicate high performance. As shown in Figure 15, the model achieves a notably high mAP@50 of 0.995, demonstrating excellent accuracy in object detection, particularly in detecting lesion-suspected areas in cattle images.

Figure 15.

Model performance at each learning and validation stage.

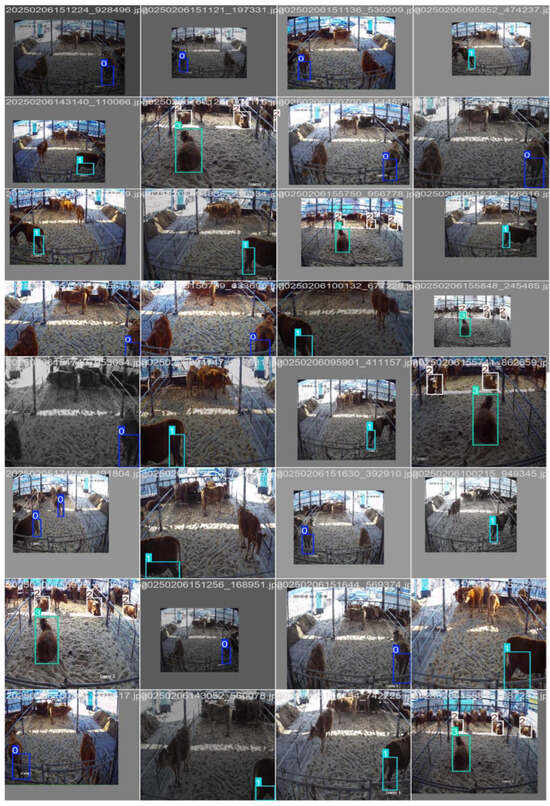

Figure 16 confirms that the lesion-suspected images learned by the RT-DETR model were successfully detected. All four types of images shown in the validation images were detected.

Figure 16.

Validation results for RT-DETR.

5.2. Comparison of RT-DETR and YOLO Models

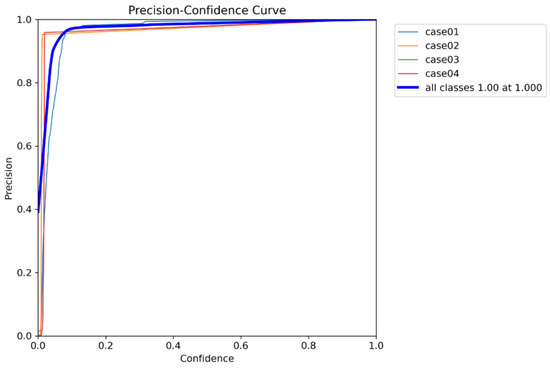

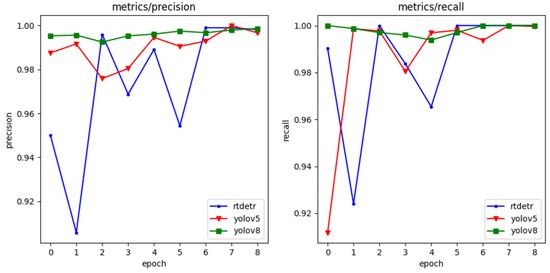

In this study, the performance of the proposed RT-DETR method was evaluated by comparing it with other object detection techniques from the YOLO series. The methods used for comparison were YOLOv5 and YOLOv8. The left graph in Figure 17 shows the precision curves for the RT-DETR, YOLOv5, and YOLOv8 methods. Precision refers to the proportion of true positive predictions among all objects predicted as positive. All three methods demonstrate similar results with high precision, indicating accurate detection.

Figure 17.

Results of precision curves and recall curves.

The right graph in Figure 17 presents the recall curves for RT-DETR, YOLOv5, and YOLOv8. Recall is defined as the proportion of actual positive objects that were correctly predicted as positive. The three methods show excellent recall performance, highlighting their effectiveness in detecting relevant objects. The left graph in Figure 18 compares the mAP@50 (mean Average Precision at an IoU threshold of 0.5) for the RT-DETR, YOLOv5, and YOLOv8 models. AP@50 refers to the Average Precision when the Intersection over Union (IoU) is 0.5 or higher, and mAP@50 is the mean of AP@50 across all object classes. In this context, predictions with an IoU ≥ 0.5 are classified as true positives (TPs), and predictions with an IoU < 0.5 are classified as false positives (FPs). False negatives (FNs) refer to objects that should have been detected but were not. Precision and recall are calculated from the TP, FP, and FN counts, and they typically have a trade-off relationship that is controlled by the confidence threshold. Lowering the threshold increases FPs and decreases FNs, resulting in lower precision but higher recall. Raising the threshold decreases FPs and increases FNs, leading to higher precision but lower recall.
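The trade-off described above can be illustrated with a small sketch. It is a simplification made for illustration: each prediction is assumed to be already matched to at most one ground-truth object, so that only its confidence and its IoU with that object are needed. The function name, the data layout, and the toy values are hypothetical.

```python
from typing import List, Tuple

# Assumed layout: (confidence, IoU with the matched ground-truth object).
Prediction = Tuple[float, float]


def precision_recall(preds: List[Prediction], num_ground_truth: int,
                     conf_threshold: float,
                     iou_threshold: float = 0.5) -> Tuple[float, float]:
    """Precision and recall at a given confidence threshold."""
    kept = [p for p in preds if p[0] >= conf_threshold]
    tp = sum(1 for _, overlap in kept if overlap >= iou_threshold)
    fp = len(kept) - tp
    fn = num_ground_truth - tp
    # With no kept predictions precision is undefined; 1.0 is a convention
    # assumed for this sketch.
    precision = tp / (tp + fp) if kept else 1.0
    recall = tp / (tp + fn) if num_ground_truth else 0.0
    return precision, recall


# Four toy predictions for an image set containing four objects.
toy_preds = [(0.9, 0.8), (0.8, 0.7), (0.6, 0.3), (0.4, 0.6)]
strict = precision_recall(toy_preds, num_ground_truth=4, conf_threshold=0.7)
loose = precision_recall(toy_preds, num_ground_truth=4, conf_threshold=0.3)
```

In this toy case the stricter threshold keeps only the two most confident predictions, which gives a precision of 1.0 and a recall of 0.5, while the looser threshold admits one false positive and one more true positive, which gives 0.75 for both.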

Figure 18.

Results of mAP50 and mAP50-95 curves.

The AP (Average Precision) is calculated as the area under the precision–recall curve, and the mAP is the average of the APs across multiple object classes. The right graph in Figure 18 shows the mAP50-95, which follows the evaluation method used in MS COCO: the AP is calculated at IoU thresholds ranging from 0.5 to 0.95 in 0.05 increments and then averaged. Under this evaluation, RT-DETR shows superior performance compared to YOLOv5 and YOLOv8.
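As a rough sketch of these definitions, AP can be computed as the area under a precision–recall curve, and the COCO-style score as the mean over ten IoU thresholds. The all-point interpolation used below is one common convention and is an assumption of this example; evaluation toolkits differ in the exact interpolation they apply. All function names are hypothetical.

```python
from typing import List, Sequence


def average_precision(recalls: Sequence[float],
                      precisions: Sequence[float]) -> float:
    """Area under a precision-recall curve using all-point interpolation.
    `recalls` must be sorted in increasing order."""
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Replace each precision by the maximum precision at any higher recall,
    # which makes the curve monotonically non-increasing.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def coco_iou_thresholds() -> List[float]:
    """IoU thresholds from 0.5 to 0.95 in steps of 0.05."""
    return [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_over(values: Sequence[float]) -> float:
    """Plain average, used over classes (mAP) or over IoU thresholds."""
    return sum(values) / len(values)


# Toy curve: precision 1.0 up to recall 0.5, then 0.5 up to recall 1.0.
toy_ap = average_precision([0.5, 1.0], [1.0, 0.5])
thresholds = coco_iou_thresholds()
```

Averaging over stricter IoU thresholds rewards boxes that are tightly localized, which is why mAP50-95 usually separates models more clearly than mAP@50 alone.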

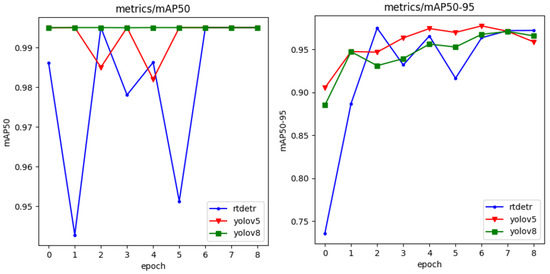

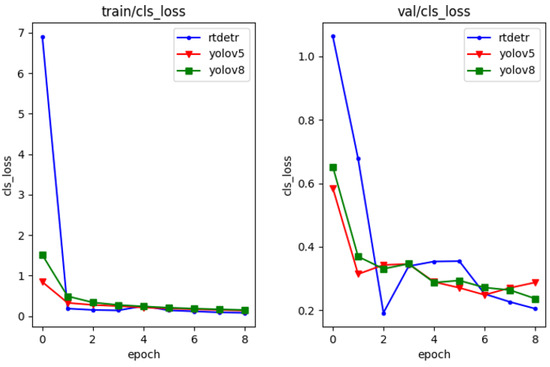

To validate the performance of the proposed model, a comparative analysis was conducted by examining its class loss against that of YOLOv5 and YOLOv8. Table 3 presents the final class loss values for the validation dataset. RT-DETR achieves the lowest loss among the evaluated models, further supporting its superior detection performance.

Table 3.

Performance comparison of different models on class loss.

As shown in Figure 19, which presents the classification loss curves for both the training and validation sets, the RT-DETR method outperforms both YOLOv5 and YOLOv8. Classification loss in this context is calculated as the sum of squared errors of the predicted class probabilities, and lower values indicate better performance. The right-hand graph in Figure 19 shows the class loss for the validation dataset, where RT-DETR again exhibits a lower loss than YOLOv5 and YOLOv8. The consistently lower loss observed with RT-DETR indicates more accurate classification and better generalization for lesion-suspected behaviors in Hanwoo cattle.

Figure 19.

Classification loss curves.

6. Conclusions

Many challenges remain in the dissemination of intelligent solutions that support smart livestock farming. In this paper, we proposed an intelligent monitoring system framework that applies artificial intelligence technology and implemented the RT-DETR model for smart livestock farms. To achieve real-time detection more efficiently, we adopted the RT-DETR method rather than the commonly used CNN-based YOLO models. RT-DETR reduces computational costs and optimizes query initialization, making it more suitable for real-time object detection. We defined four classes to detect cattle lesions and abnormal behaviors, collected images from CCTV footage of real ranches, and performed preprocessing and labeling to build a high-quality training dataset for deep learning. An AI model was constructed by designing an RT-DETR network architecture, and its performance was evaluated using a test dataset and a validation dataset.

The experimental results confirmed that the RT-DETR model achieves high recognition accuracy. To verify the effectiveness of the proposed AI model, we also conducted a comparative analysis with YOLO models. The class loss values of RT-DETR, YOLOv5, and YOLOv8 were analyzed, and RT-DETR outperformed both YOLO models, demonstrating superior classification performance.

The proposed RT-DETR-based smart livestock monitoring framework is expected to be highly practical because it uses the CCTV systems already installed on livestock farms and therefore requires no additional devices. In future work, we plan to further enhance the model and develop an integrated solution that includes a user-friendly interface.

Author Contributions

Conceptualization, M.S. and B.K.; methodology, S.H. and M.S.; investigation, S.H., M.S. and B.K.; validation, M.S. and S.H.; writing—original draft, S.H. and M.S.; writing—review and editing, B.K. and M.S.; funding acquisition, M.S. All authors have read and agreed to the published version of this manuscript.

Funding

This paper was funded by Konkuk University in 2023.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Dataset available on request from the authors.

Acknowledgments

Moonsun Shin and Seonmin Hwang are the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).