Research on Vehicle Target Detection Method Based on Improved YOLOv8

Abstract

1. Introduction

2. Preliminary

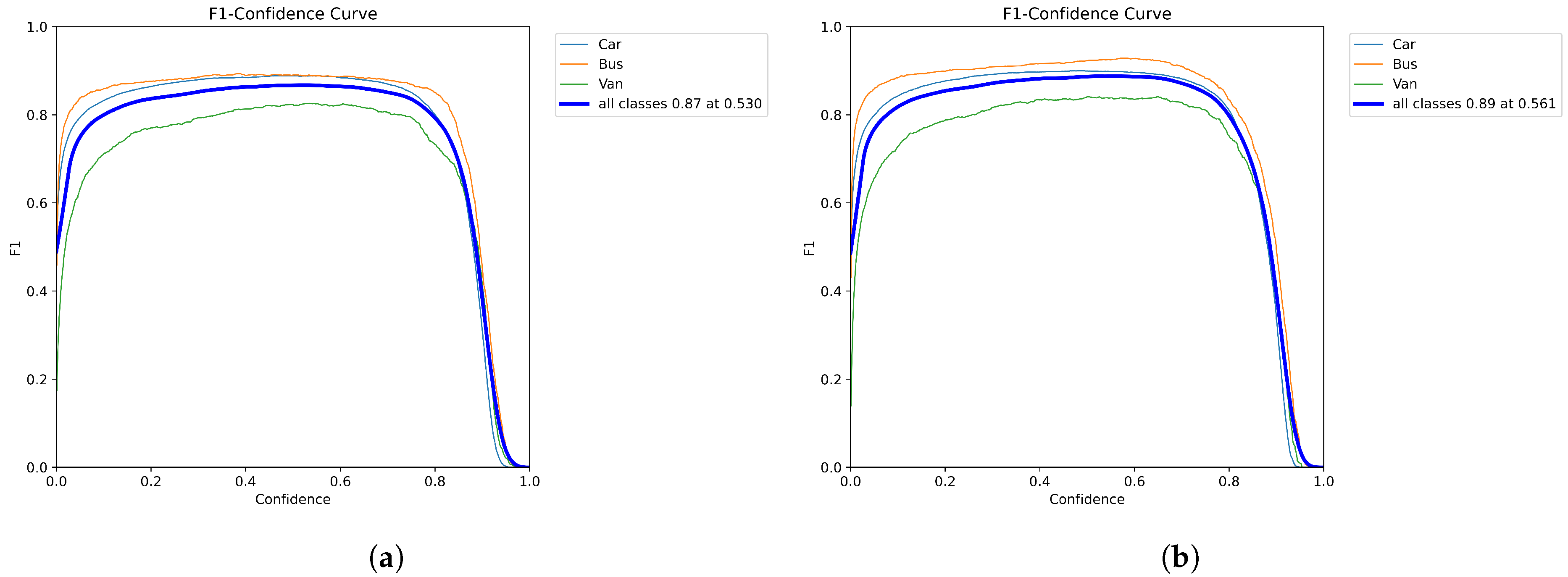

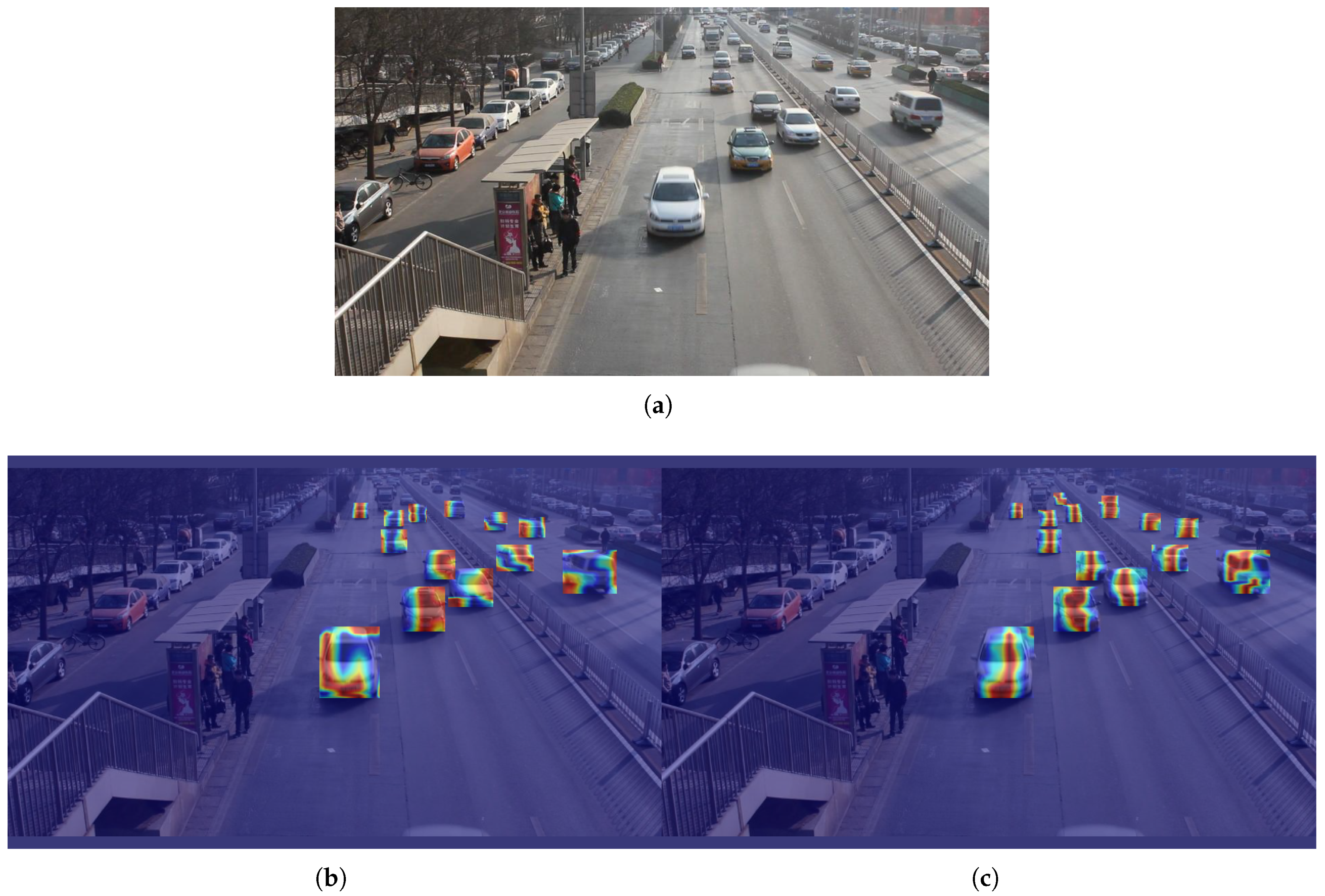

3. Methods

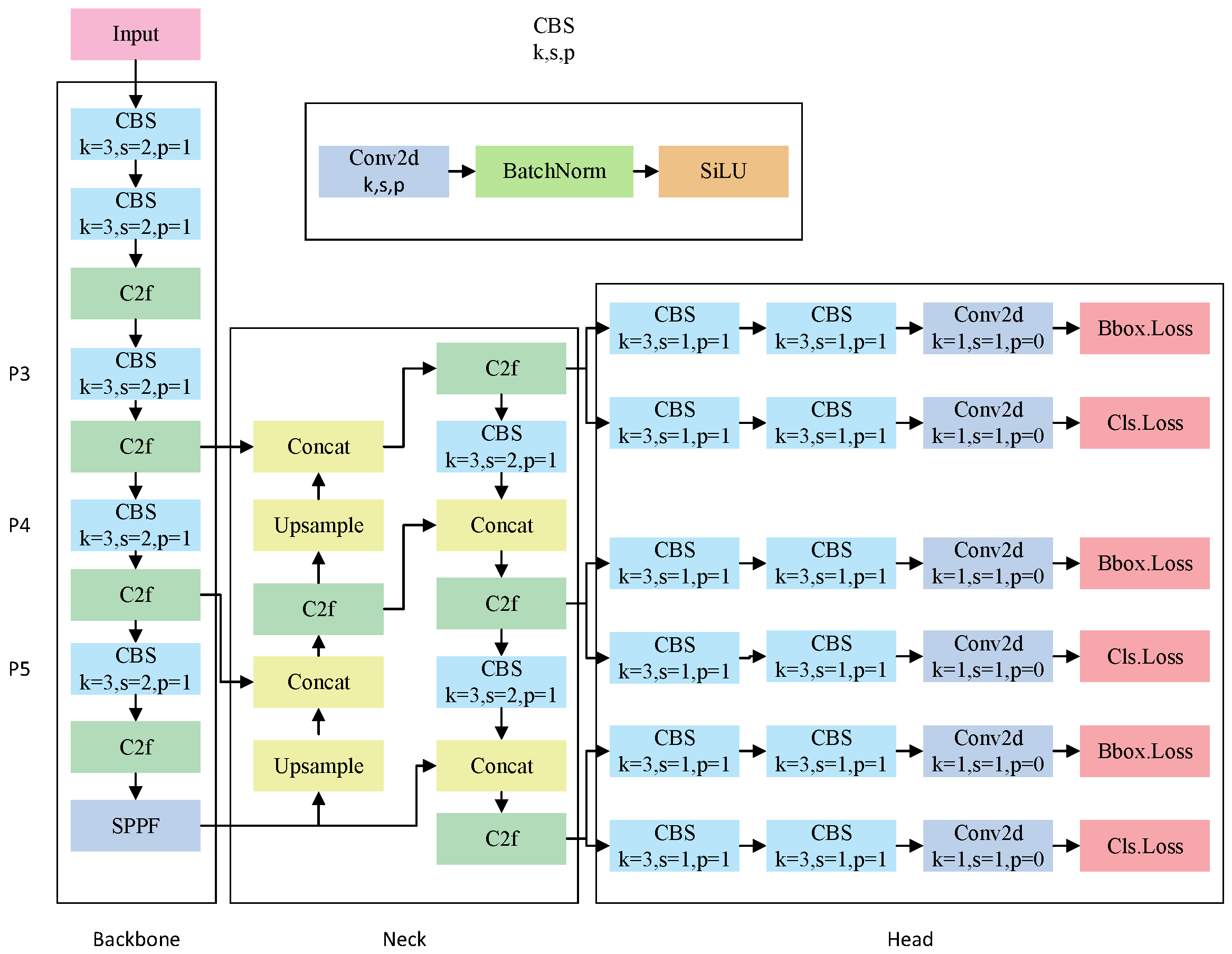

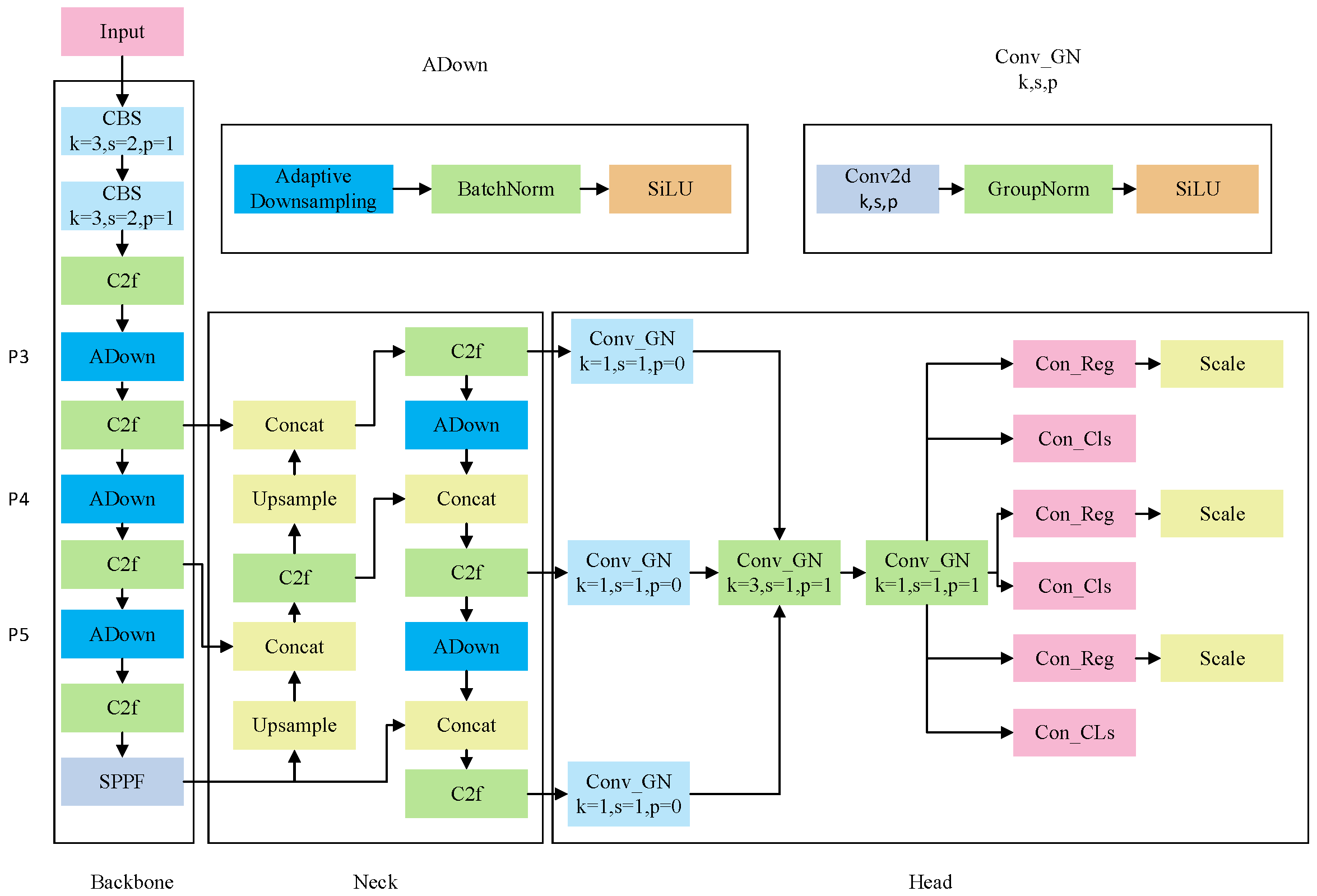

3.1. Lightweight Vehicle Target Detection Algorithm YOLO-AL

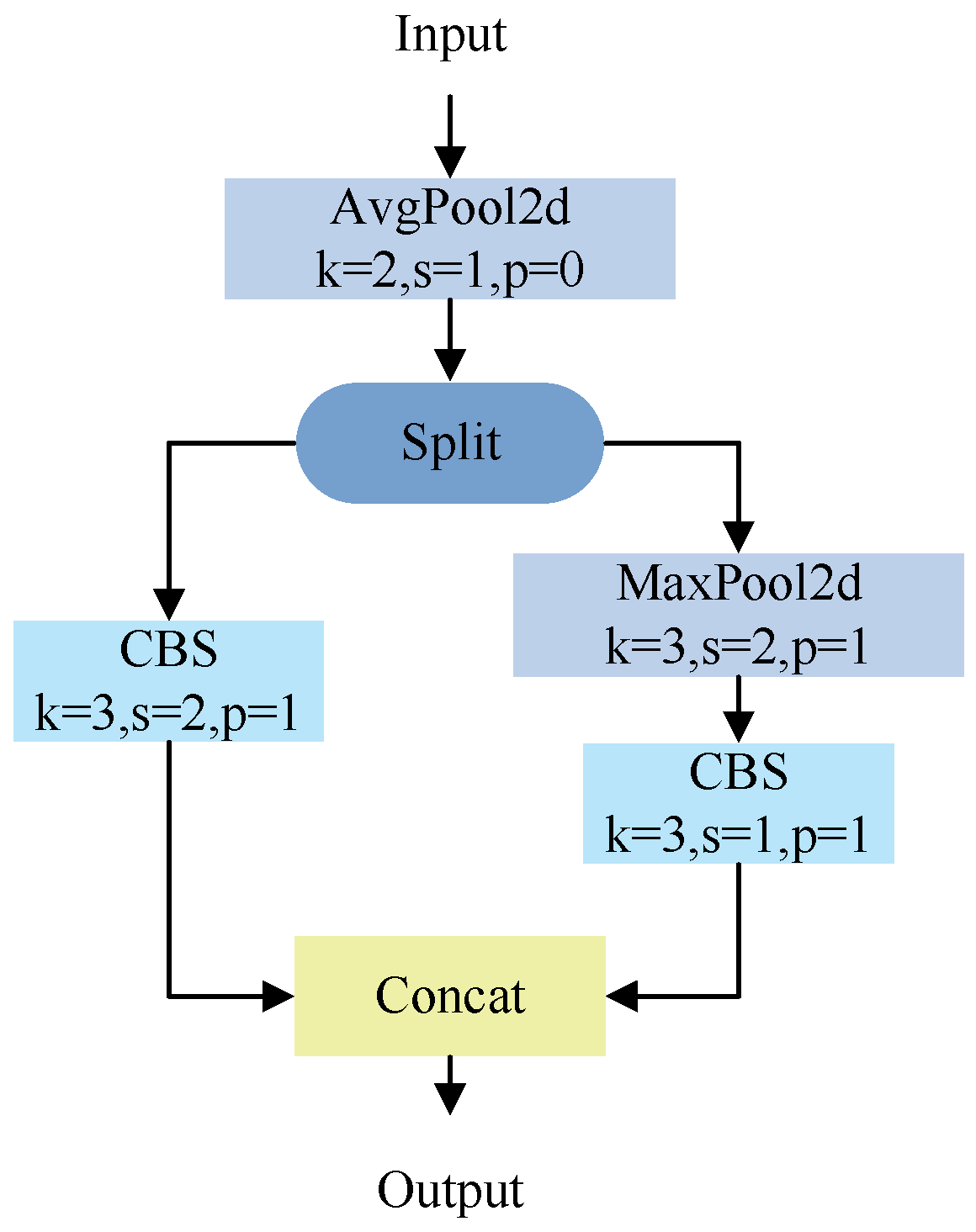

3.2. Adaptive Downsampling Module

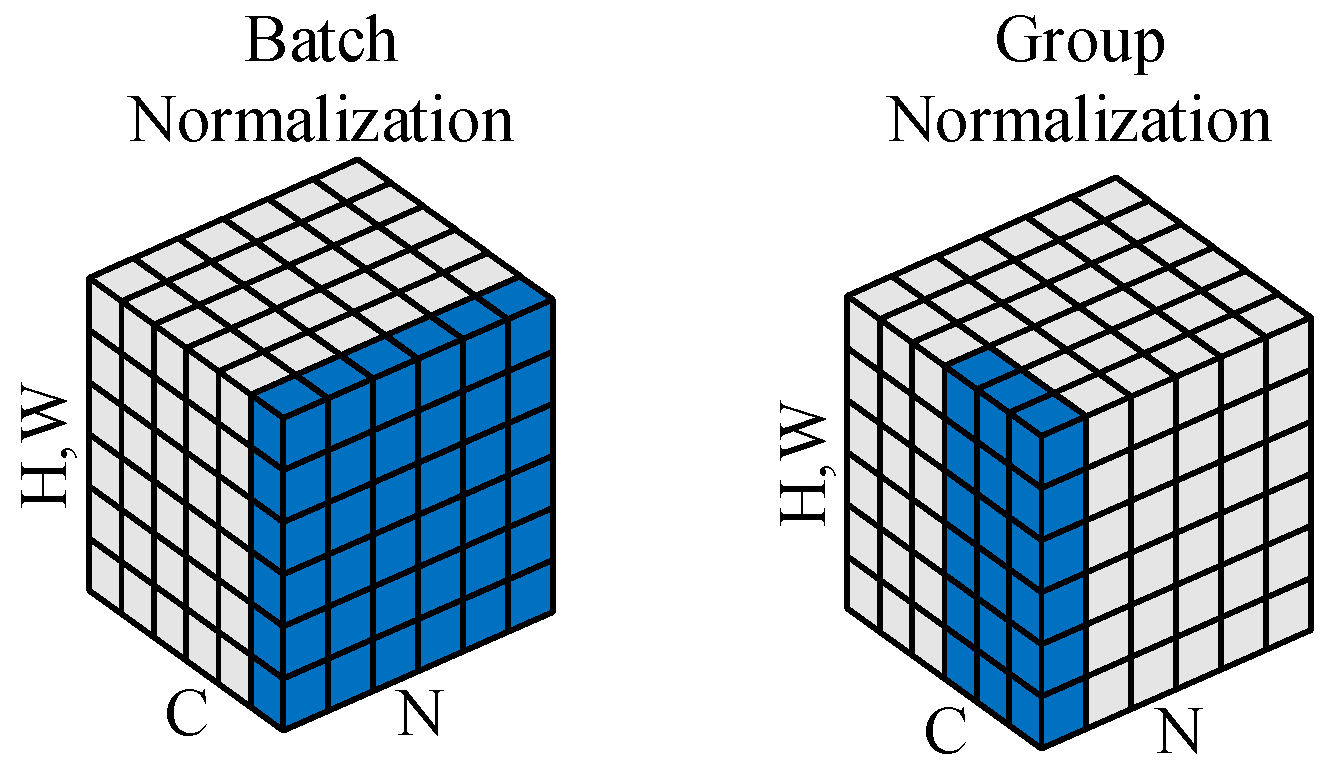

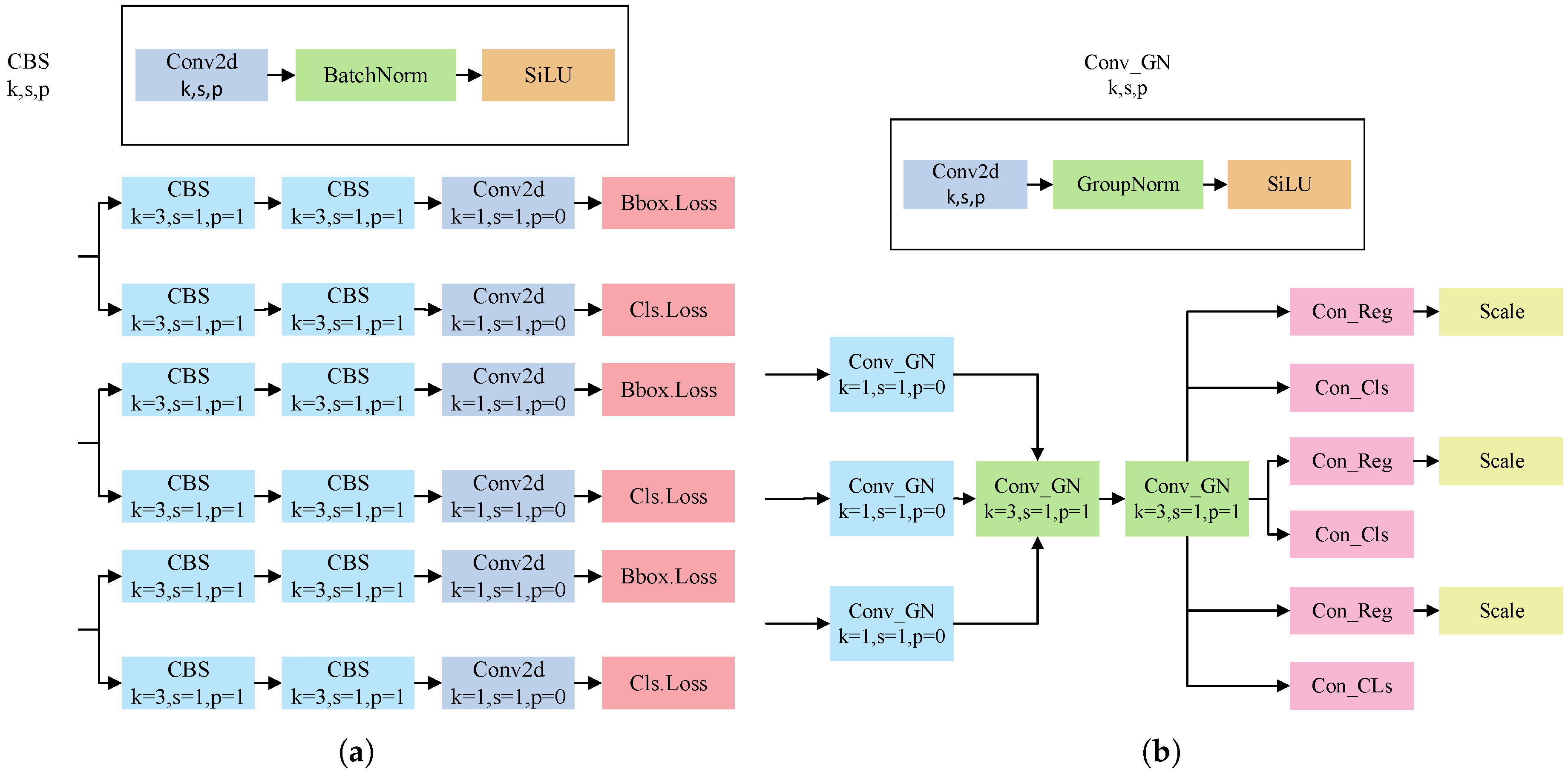

3.3. Lightweight Shared Convolutional Detection Head

4. Experiments

4.1. Evaluation Indicators for Target Detection

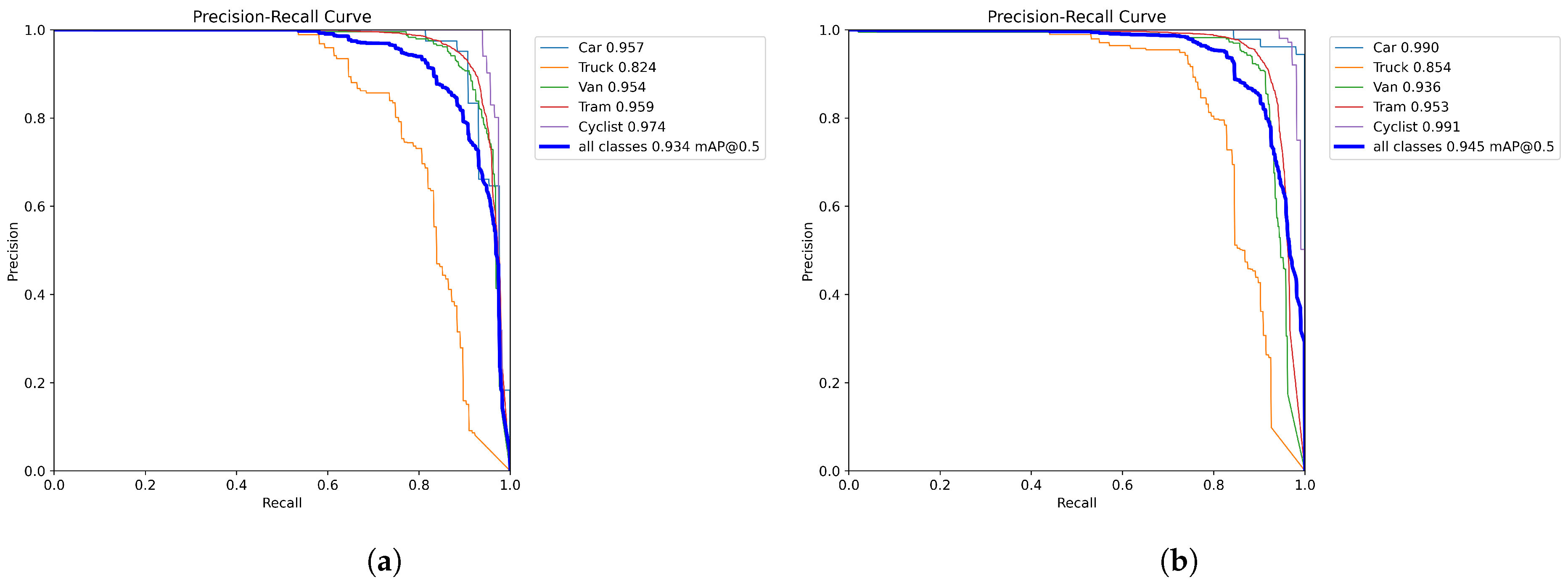

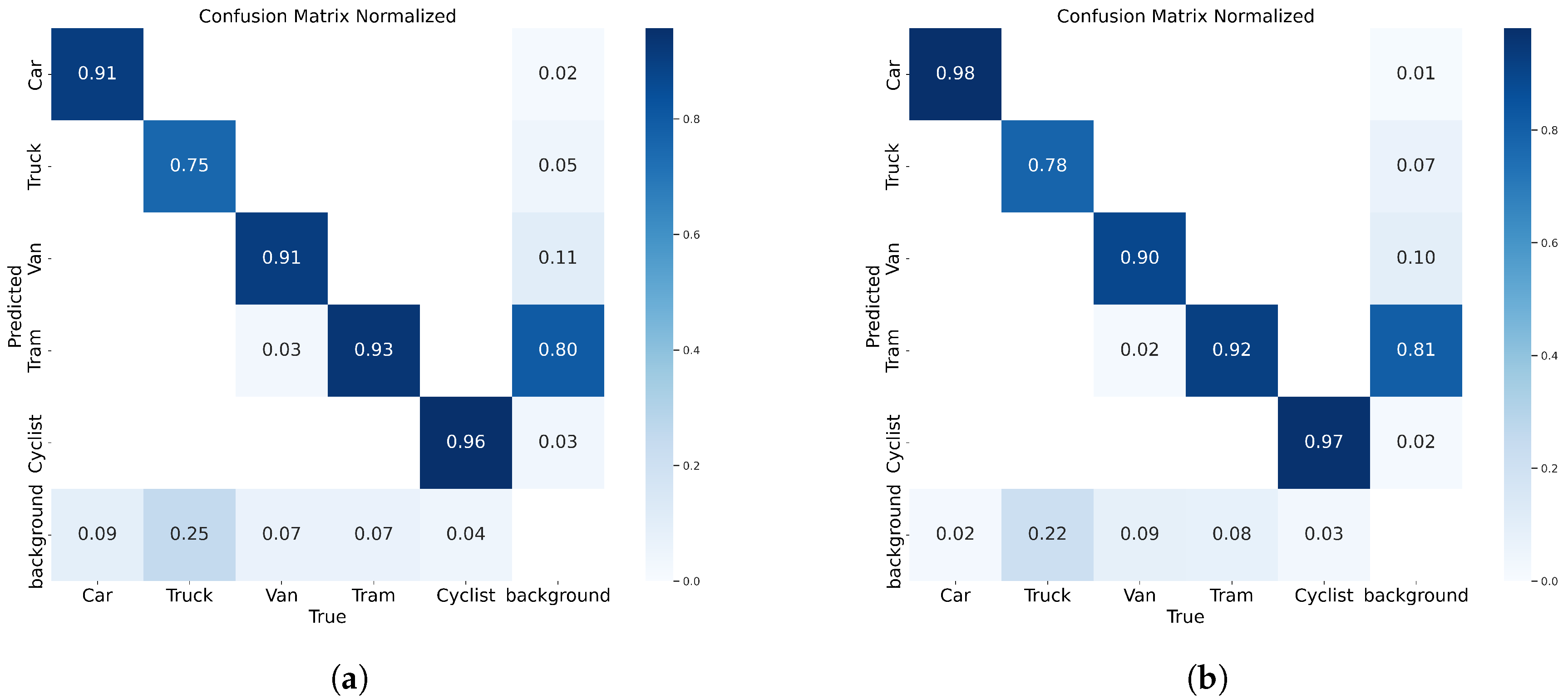

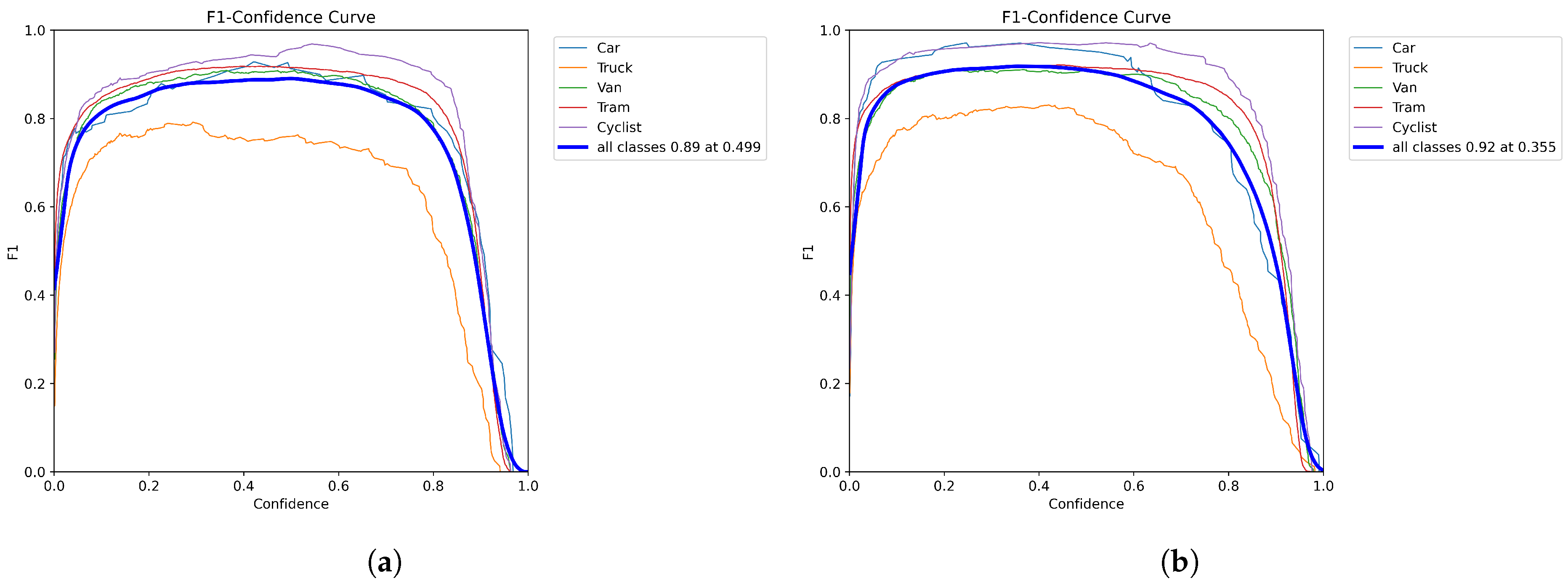

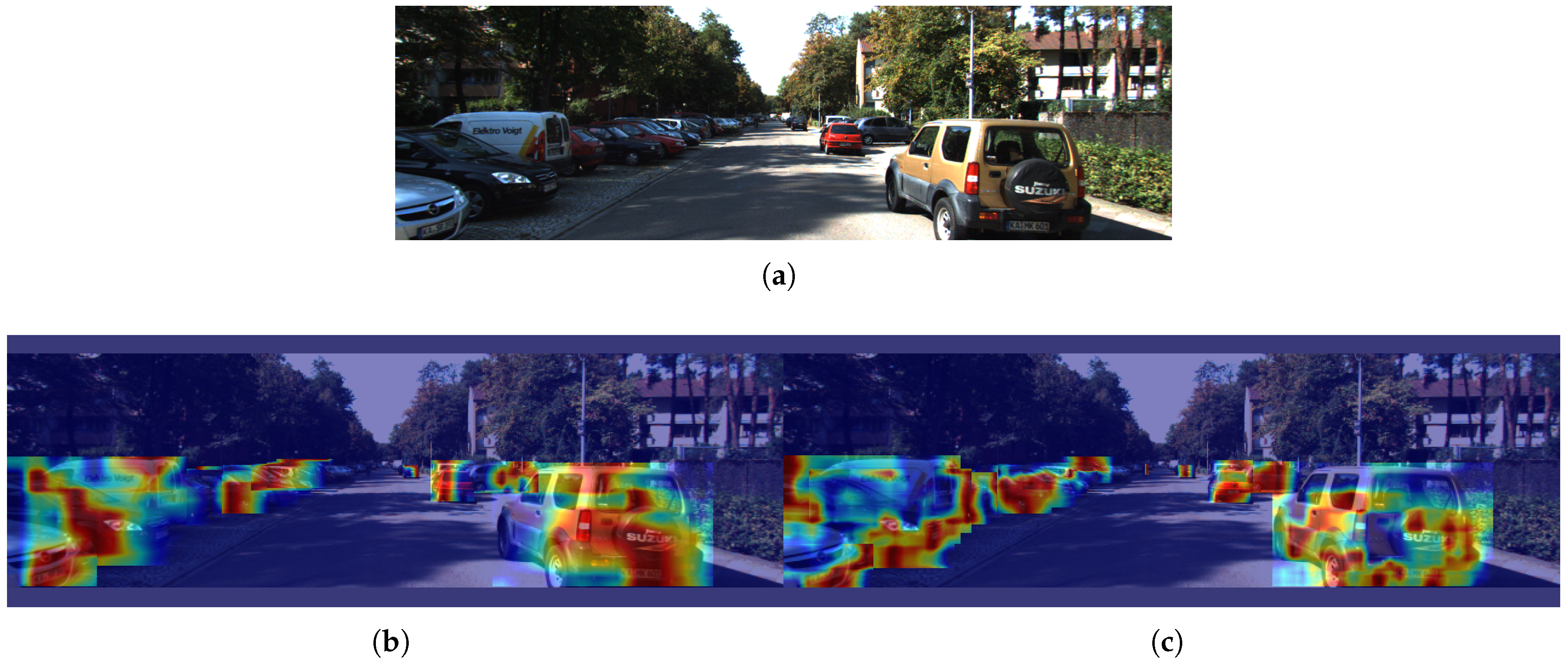

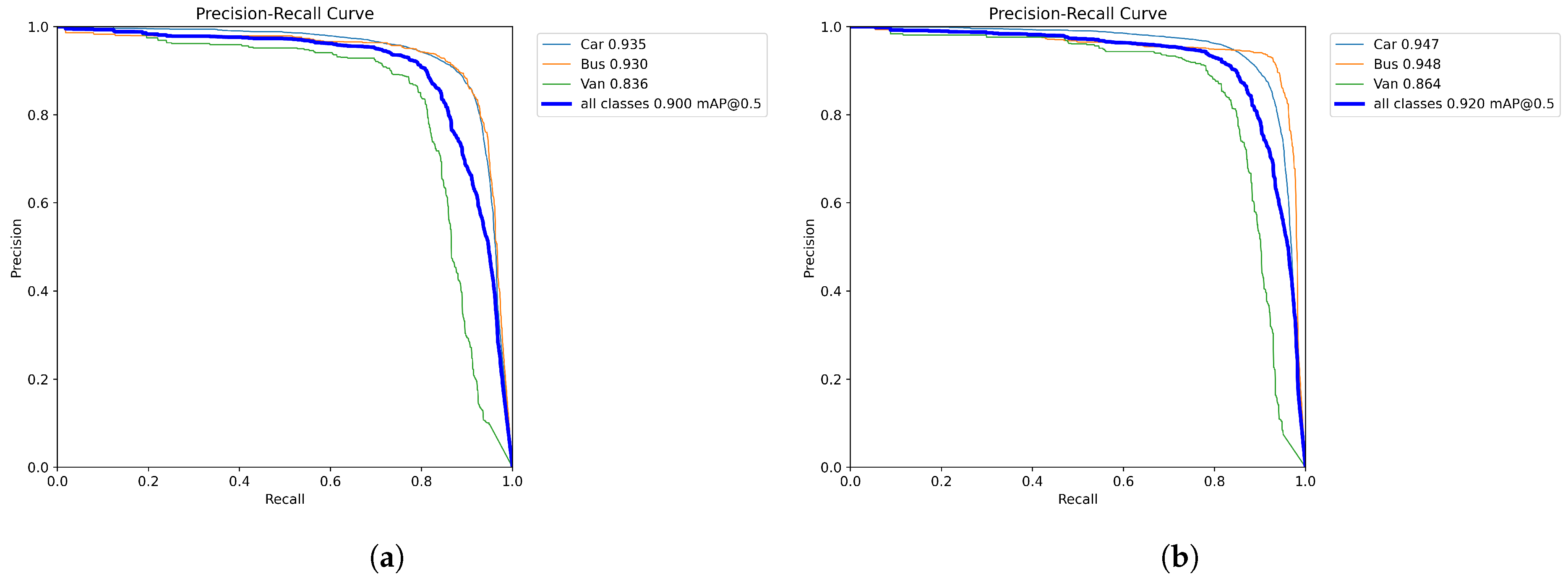

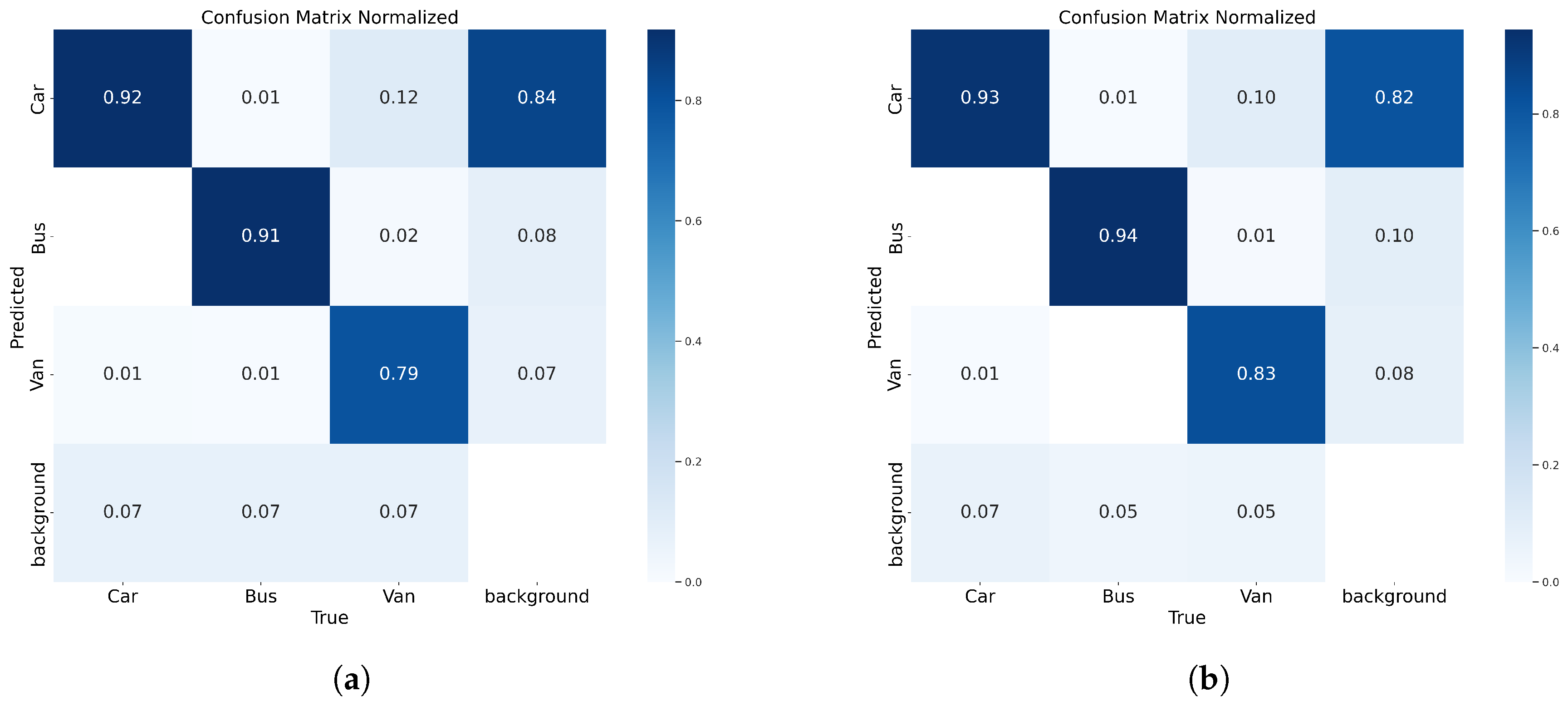
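The indicator formulas in this section survive only as figures, so the sketch below assumes the standard definitions rather than reproducing the authors' exact equations. It shows how the IoU threshold of 0.5 behind the mAP@0.5 column turns predicted and ground-truth boxes into precision and recall. The corner box format `(x1, y1, x2, y2)`, the greedy matching rule, and the function names are illustrative assumptions.

```python
from typing import List, Tuple

# Assumed box format: (x1, y1, x2, y2) corner coordinates.
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall(preds: List[Box], gts: List[Box],
                     thr: float = 0.5) -> Tuple[float, float]:
    """Greedy one-to-one matching at an IoU threshold.

    `preds` is assumed to be sorted by descending confidence. Each
    prediction claims the unmatched ground-truth box with the highest
    IoU, provided that IoU is at least `thr`.
    """
    matched = set()
    tp = 0
    for p in preds:
        best_j, best_iou = -1, thr
        for j, g in enumerate(gts):
            if j in matched:
                continue
            v = iou(p, g)
            if v >= best_iou:
                best_j, best_iou = j, v
        if best_j >= 0:
            matched.add(best_j)
            tp += 1
    fp = len(preds) - tp  # predictions with no matching ground truth
    fn = len(gts) - tp    # ground-truth boxes that were missed
    precision = tp / (tp + fp) if preds else 0.0
    recall = tp / (tp + fn) if gts else 0.0
    return precision, recall
```

Average precision for one class is then the area under the precision-recall curve obtained by sweeping the confidence threshold, and mAP@0.5 is the mean of that value over all classes.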

4.2. KITTI 2D

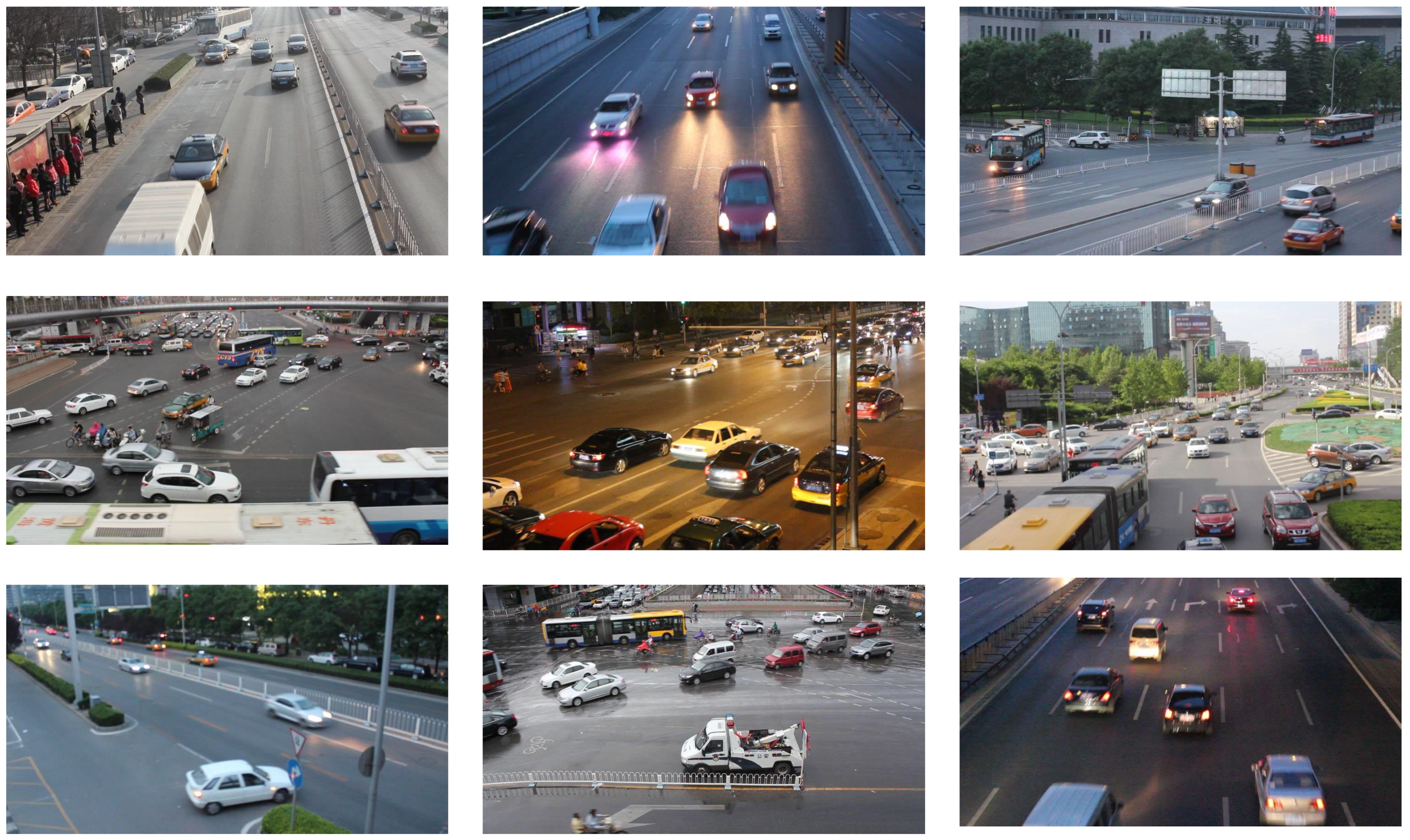

4.3. UA-DETRAC

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Name | Configuration |
|---|---|
| CPU | 16 vCPU Intel(R) Xeon(R) Platinum 8481C |
| GPU | RTX 4090D (24 GB) |
| Operating system | Ubuntu 20.04 |
| Memory | 80 GB RAM |
| Deep learning framework | PyTorch 1.11.0 |
| Programming language | Python 3.8 |

| Parameter | Value |
|---|---|
| Epochs | 200 |
| Train batch size | 32 |
| Test batch size | 1 |
| Optimizer | SGD |
| Initial learning rate | 0.01 |
| Momentum | 0.937 |
| Weight decay coefficient | 0.0005 |
| Numerical precision | FP32 |
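As a minimal sketch, the training parameters listed above can be collected into a single configuration object. The class name `TrainConfig`, its field names, and the helper `sgd_kwargs` are hypothetical and chosen for illustration; only the values come from the table.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class TrainConfig:
    """Hypothetical container; values are copied from the parameter table."""
    epochs: int = 200
    train_batch_size: int = 32
    test_batch_size: int = 1
    optimizer: str = "SGD"
    initial_lr: float = 0.01
    momentum: float = 0.937
    weight_decay: float = 0.0005
    precision: str = "FP32"


def sgd_kwargs(cfg: TrainConfig) -> dict:
    """Return the keyword arguments an SGD optimizer would need."""
    return {
        "lr": cfg.initial_lr,
        "momentum": cfg.momentum,
        "weight_decay": cfg.weight_decay,
    }


cfg = TrainConfig()
print(asdict(cfg))
print(sgd_kwargs(cfg))
```

With PyTorch installed, the optimizer could then be built as `torch.optim.SGD(model.parameters(), **sgd_kwargs(cfg))`, where `model` stands for whichever detector is being trained.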

| Method | mAP@0.5/% | Params/M | FLOPs/G | FPS |
|---|---|---|---|---|
| Faster R-CNN | 91.1 | 41 | 180 | 13.5 |
| YOLOv5n | 92.1 | 2.5 | 7.1 | 207.8 |
| YOLOv6n | 91.3 | 4.2 | 11.8 | 280.5 |
| YOLOv7-tiny | 92.7 | 6.0 | 13.1 | 212.3 |
| YOLOv8n (baseline) | 93.4 | 3.0 | 8.1 | 259.8 |
| YOLOv9-tiny | 92.9 | 2.8 | 4.5 | 280.5 |
| YOLOv10n | 93.2 | 2.3 | 6.5 | 228.7 |
| MobileNetV4-YOLO | 92.7 | 5.7 | 22.5 | 311.7 |
| EfficientDet-D0 | 92.3 | 3.9 | 3.2 | 93.5 |
| RT-DETR-L | 93.2 | 32.0 | 103.5 | 25.9 |
| YOLO-AL | 94.5 | 2.0 | 5.8 | 290.9 |

| Method | mAP@0.5/% | mAP@0.5 gain/% | Params/M | FLOPs/G | FPS |
|---|---|---|---|---|---|
| YOLOv8n | 93.4 | - | 3.0 | 8.1 | 259.8 |
| YOLOv8n + ADown | | 0.4 | 2.6 | 7.4 | 265.6 |
| YOLOv8n + LSCD | | 0.7 | 2.4 | 6.5 | 276.3 |
| YOLO-AL | 94.5 | 1.1 | 2.0 | 5.8 | 290.9 |

| Method | mAP@0.5/% | Params/M | FLOPs/G | FPS |
|---|---|---|---|---|
| Faster R-CNN | 86.4 | 41 | 180 | 13.1 |
| YOLOv5n | 87.6 | 2.5 | 7.1 | 201.6 |
| YOLOv6n | 86.8 | 4.2 | 11.8 | 272.1 |
| YOLOv7-tiny | 89.3 | 6.0 | 13.1 | 205.9 |
| YOLOv8n (baseline) | 90.0 | 3.0 | 8.1 | 252.0 |
| YOLOv9-tiny | 88.7 | 2.0 | 7.7 | 272.1 |
| YOLOv10n | 88.3 | 2.3 | 6.5 | 221.7 |
| MobileNetV4-YOLO | 88.2 | 5.7 | 22.5 | 302.4 |
| EfficientDet-D0 | 87.6 | 3.9 | 3.2 | 90.7 |
| RT-DETR-L | 88.8 | 32.0 | 103.5 | 25.2 |
| YOLO-AL | 92.0 | 2.0 | 5.8 | 289.6 |

| Method | mAP@0.5/% | mAP@0.5 gain/% | Params/M | FLOPs/G | FPS |
|---|---|---|---|---|---|
| YOLOv8n | 90.0 | - | 3.0 | 8.1 | 252.0 |
| YOLOv8n + ADown | | 0.7 | 2.6 | 7.4 | 267.2 |
| YOLOv8n + LSCD | | 0.5 | 2.4 | 6.5 | 278.1 |
| YOLO-AL | 92.0 | 2.0 | 2.0 | 5.8 | 289.6 |
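The Params, FLOPs, and FPS figures reported for YOLOv8n and YOLO-AL can be turned into relative changes with a few lines of arithmetic. The sketch below does only that; the helper name `relative_change` and the dictionary keys are illustrative, and the numbers are copied from the two ablation tables.

```python
def relative_change(baseline: float, new: float) -> float:
    """Percentage change of `new` relative to `baseline`."""
    return (new - baseline) / baseline * 100.0


# Values copied from the ablation tables (YOLOv8n vs. YOLO-AL).
# Params and FLOPs are identical in both tables; FPS differs per table.
baseline = {"params_m": 3.0, "flops_g": 8.1, "fps_first": 259.8, "fps_second": 252.0}
yolo_al = {"params_m": 2.0, "flops_g": 5.8, "fps_first": 290.9, "fps_second": 289.6}

for key in baseline:
    change = relative_change(baseline[key], yolo_al[key])
    print(f"{key}: {change:+.1f}%")
```

On these figures YOLO-AL has about one third fewer parameters and roughly 28% fewer FLOPs than the baseline, while FPS rises by about 12% in the first table and about 15% in the second.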

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, M.; Zhang, Z. Research on Vehicle Target Detection Method Based on Improved YOLOv8. Appl. Sci. 2025, 15, 5546. https://doi.org/10.3390/app15105546
