Harnessing Semantic and Trajectory Analysis for Real-Time Pedestrian Panic Detection in Crowded Micro-Road Networks

Abstract

1. Introduction

2. Related Work

2.1. Crowd Density and Movement Analysis

2.2. Panic Behavior Detection

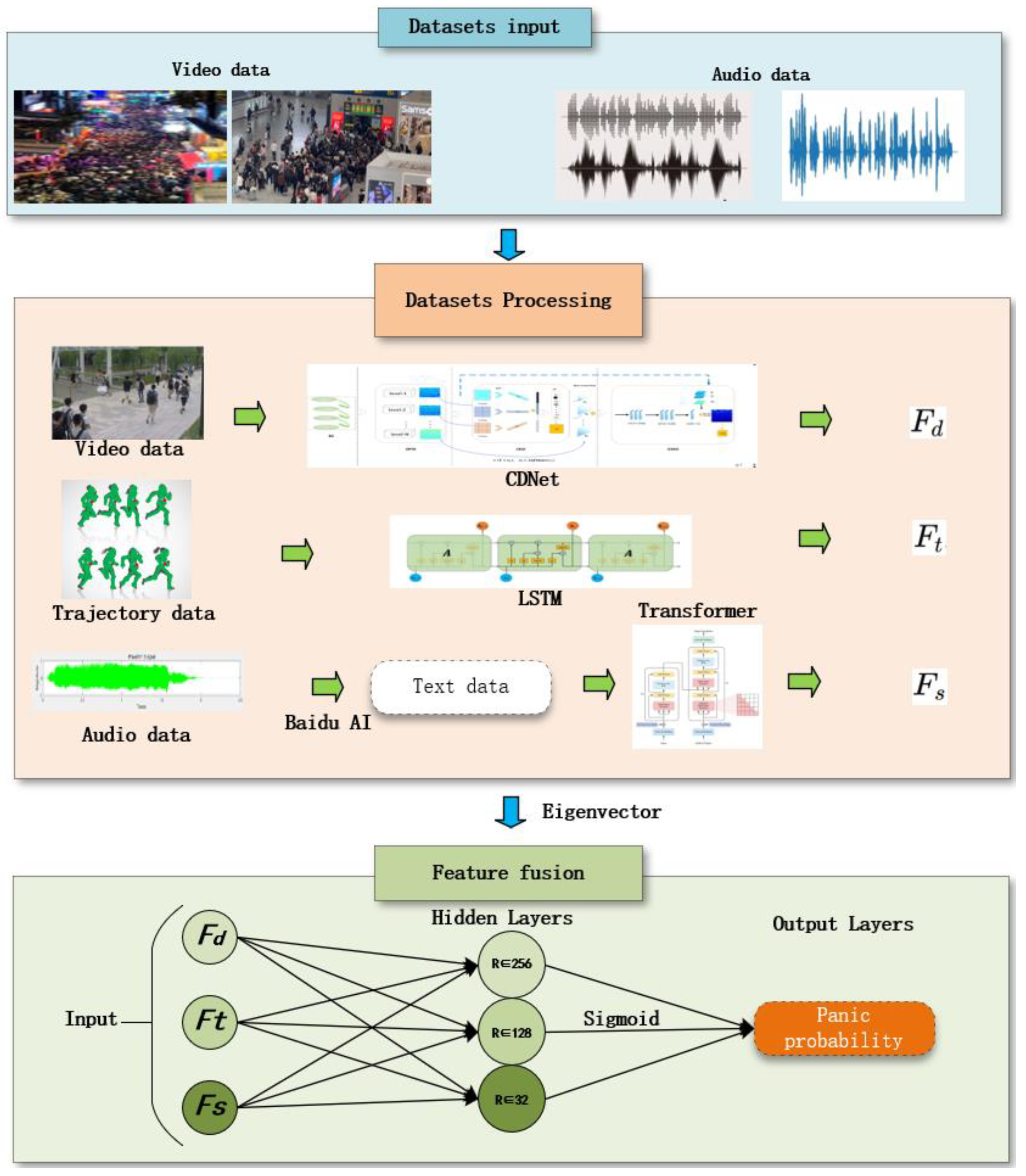

3. Methodology

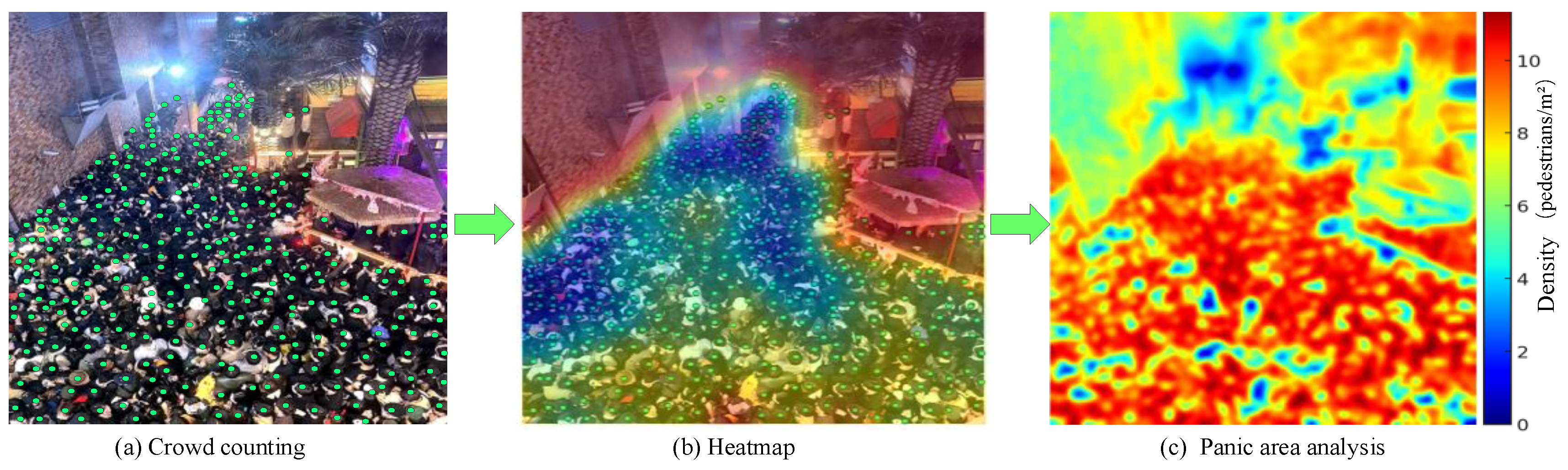

3.1. Crowd Density Measures Panic Risk

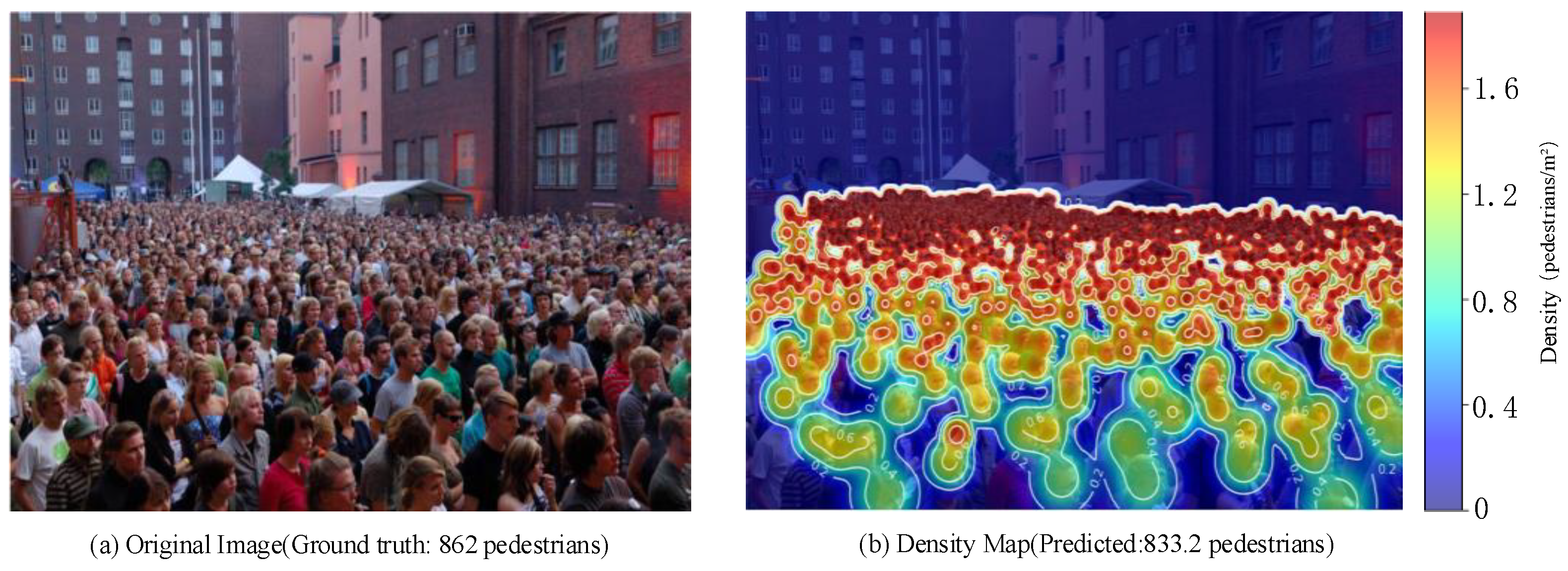

3.1.1. CDNet Framework

3.1.2. Abnormal Change of Contour Line

- (1) Mathematical Description of Contour Features.

- (2) Evaluation Rules of Contour Line.

- Rule 1: (Abnormal Change in Contour Quantity).

- Rule 2: (Abnormal Change in Contour Area).

3.2. Panic Trajectory Recognition Criterion

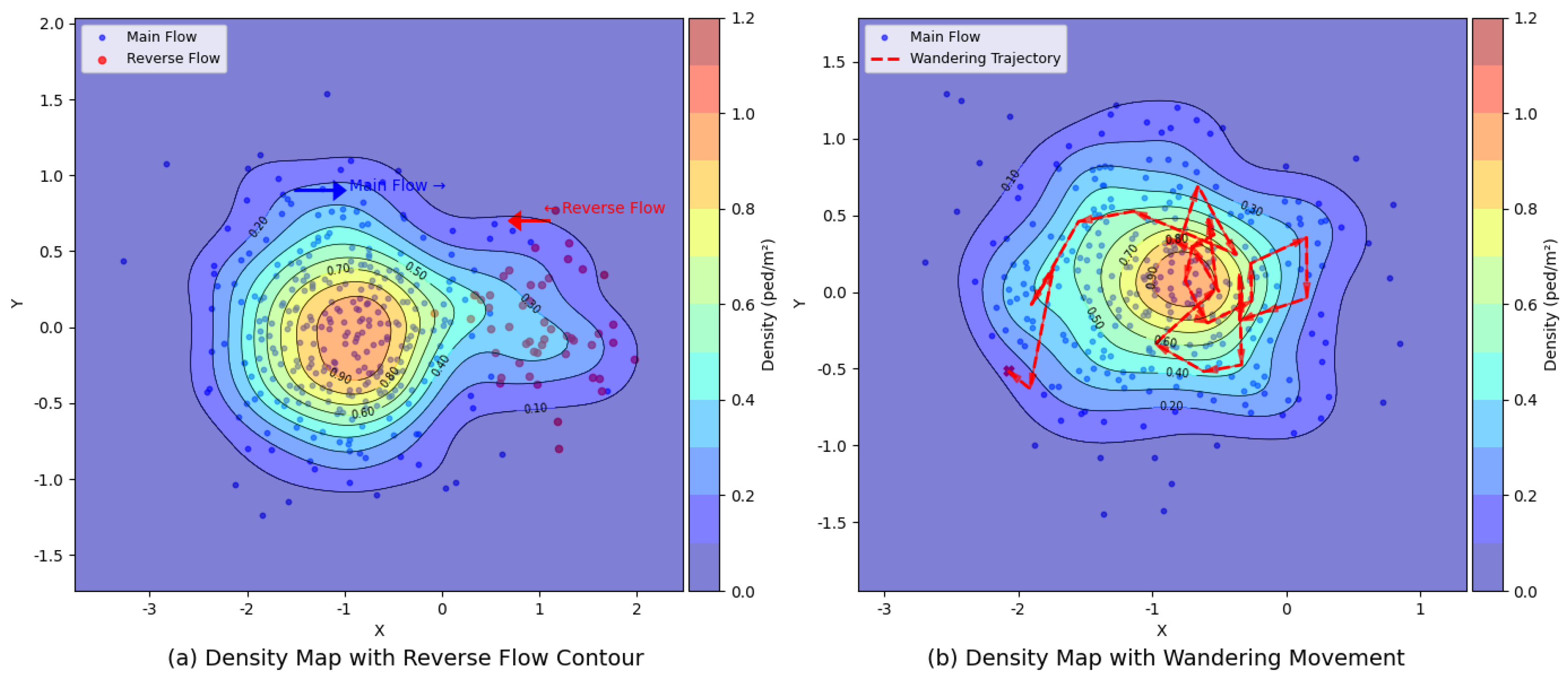

3.2.1. Countercurrent Trajectory Criterion

3.2.2. Nonlinear Motion Trajectory Criterion

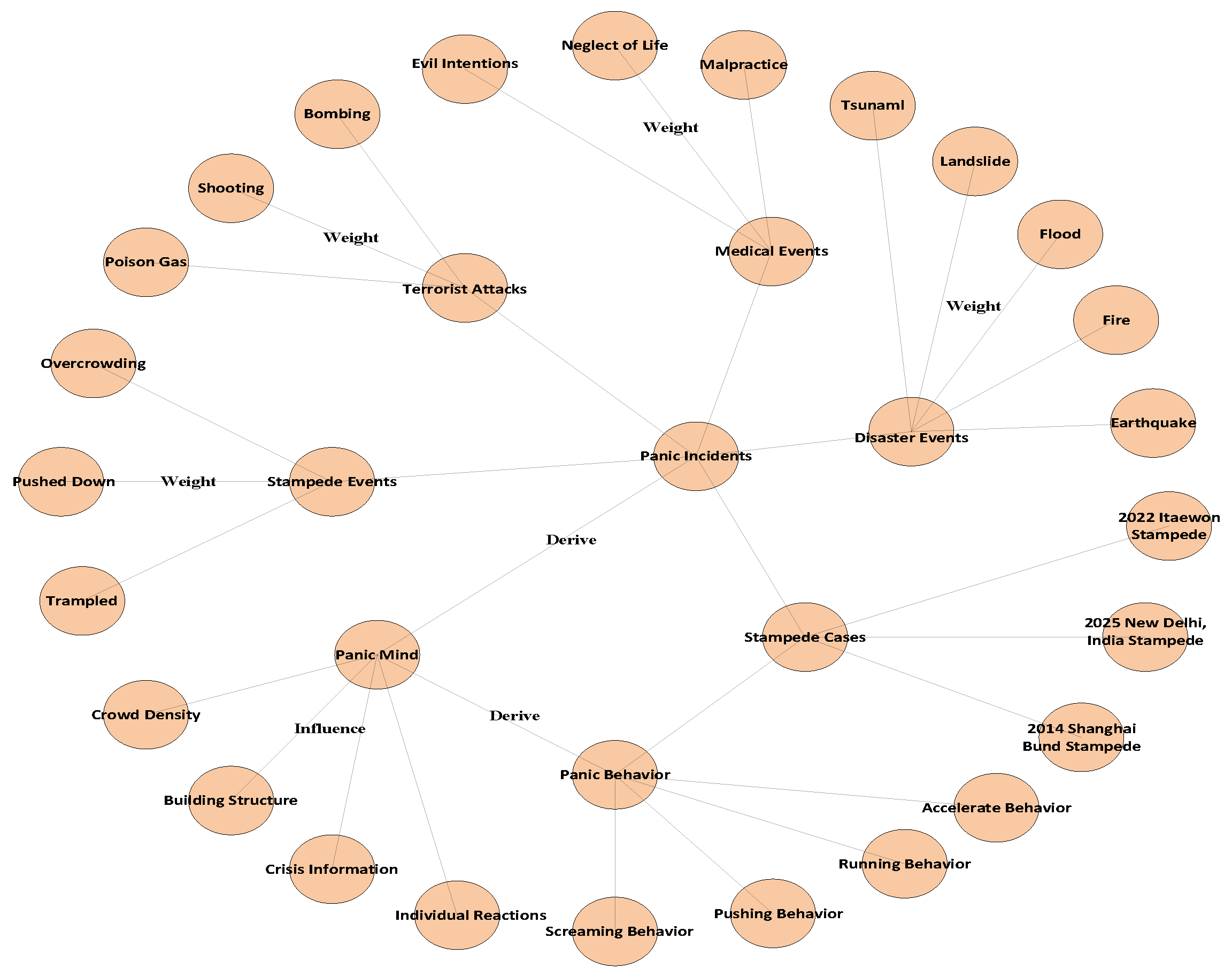

3.3. Panic Semantic Recognition Criterion

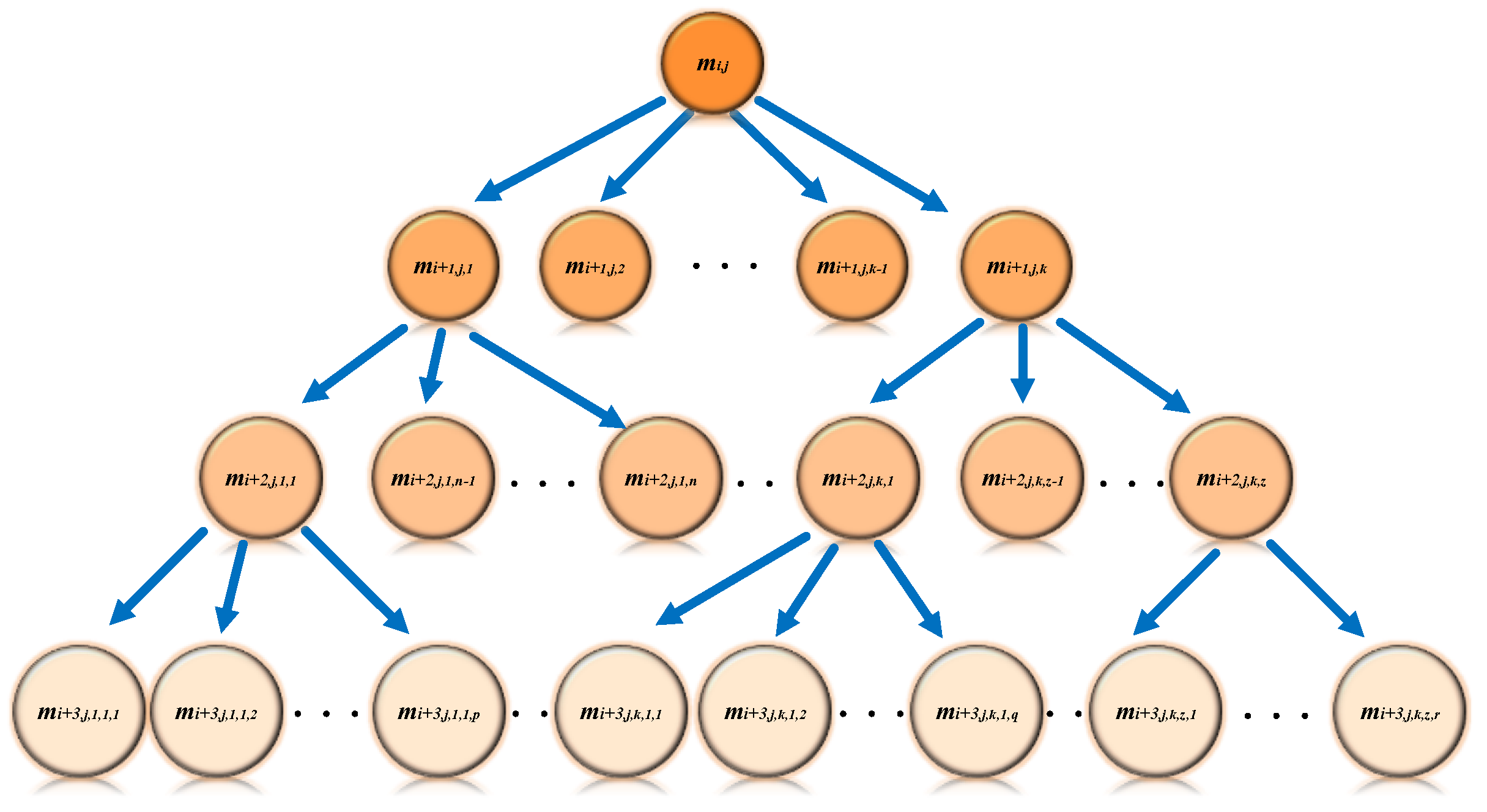

3.4. Fusion-Based Multi-Feature Method for Pedestrian Panic Recognition

4. Experiments

4.1. Experimental Setup

- Traffic simulation;

- Traffic panic trigger;

- Visual rendering.

Ethical and Privacy Compliance

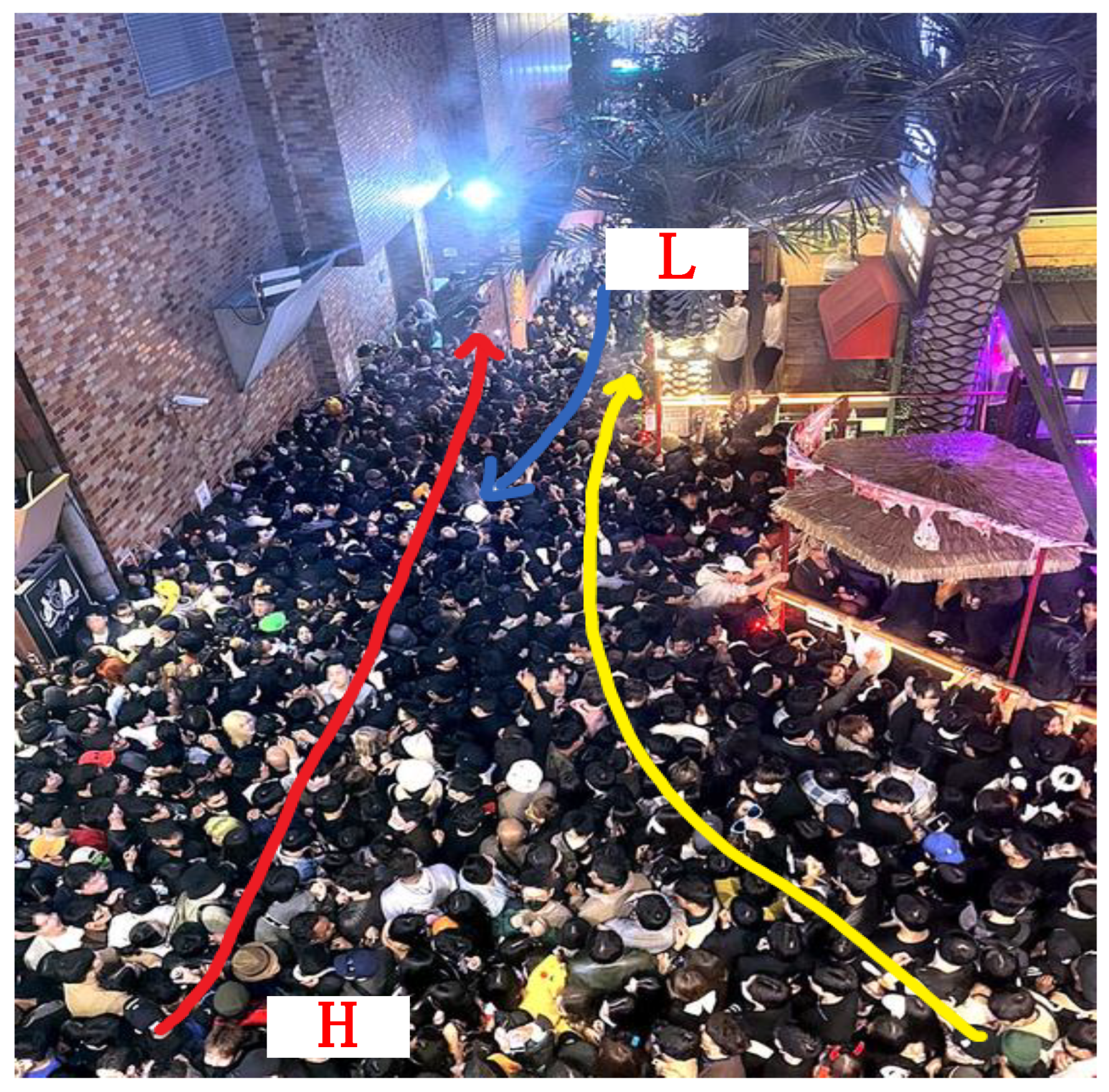

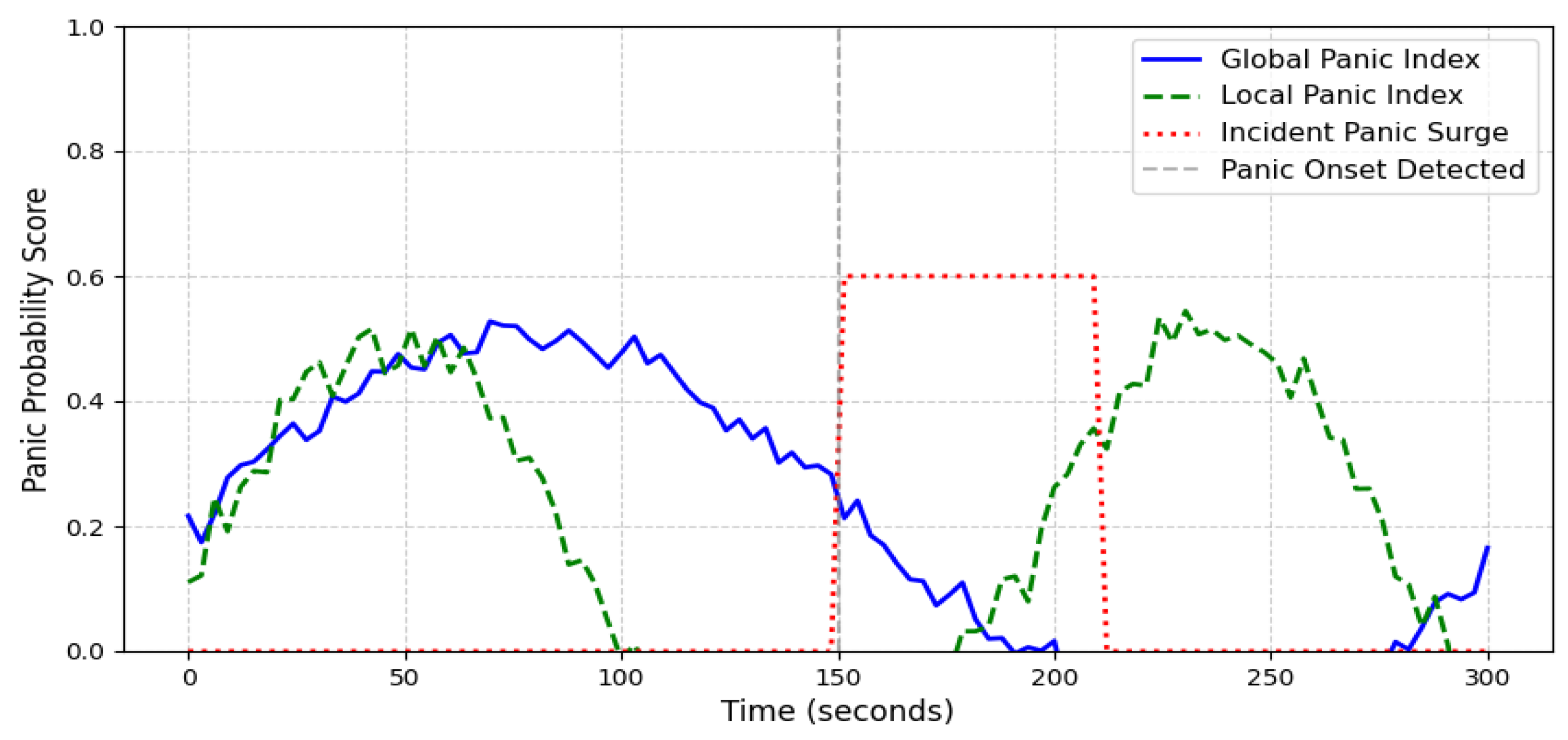

4.2. Case Analysis

4.3. Evaluation Metrics

4.3.1. Performance Metrics

4.3.2. Ablation Study

4.3.3. Robustness and Error Case Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Hu, Y.; Bi, Y.; Ren, X.; Huang, S.; Gao, W. Experimental study on the impact of a stationary pedestrian obstacle at the exit on evacuation. Phys. A Stat. Mech. Its Appl. 2023, 626, 129062.
2. Altamimi, A.B.; Ullah, H. Panic Detection in Crowded Scenes. Eng. Technol. Appl. Sci. Res. 2020, 10, 5412–5418.
3. De Iuliis, M.; Battegazzorre, E.; Domaneschi, M.; Cimellaro, G.P.; Bottino, A.G. Large scale simulation of pedestrian seismic evacuation including panic behavior. Sustain. Cities Soc. 2023, 904, 104527.
4. Jain, D.K.; Zhao, X.; González-Almagro, G.; Gan, C.; Kotecha, K. Multimodal pedestrian detection using metaheuristics with deep convolutional neural network in crowded scenes. Inf. Fusion 2023, 95, 401–414.
5. López, A.M. Pedestrian Detection Systems. In Wiley Encyclopedia of Electrical and Electronics Engineering; Wiley-Interscience: Hoboken, NJ, USA, 2018.
6. Ganga, B.; Lata, B.T.; Venugopal, K.R. Object detection and crowd analysis using deep learning techniques: Comprehensive review and future directions. Neurocomputing 2024, 597, 127932.
7. Hamid, A.A.; Monadjemi, S.A.; Bijan, S. ABDviaMSIFAT: Abnormal Crowd Behavior Detection Utilizing a Multi-Source Information Fusion Technique. IEEE Access 2024, 13, 75000–75019.
8. Lazaridis, L.; Dimou, A.; Daras, P. Abnormal behavior detection in crowded scenes using density heatmaps and optical flow. In Proceedings of the 2018 26th European Signal Processing Conference (EUSIPCO), Rome, Italy, 3–7 September 2018.
9. Sharma, V.K.; Mir, R.N.; Singh, C. Scale-aware CNN for crowd density estimation and crowd behavior analysis. Comput. Electr. Eng. 2023, 106, 108569.
10. Zhou, S.; Shen, W.; Zeng, D.; Zhang, Z. Unusual event detection in crowded scenes by trajectory analysis. In Proceedings of the 2015 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), South Brisbane, QLD, Australia, 19–24 April 2015.
11. Su, H.; Qi, W.; Hu, Y.; Sandoval, J.; Zhang, L.; Schmirander, Y.; Chen, G.; Aliverti, A.; Knoll, A.; Ferrigno, G.; et al. Towards Model-Free Tool Dynamic Identification and Calibration Using Multi-Layer Neural Network. Sensors 2019, 19, 3636.
12. Miao, Y.; Yang, J.; Alzahrani, B.; Lv, G.; Alafif, T.; Barnawi, A.; Chen, M. Abnormal behavior learning based on edge computing toward a crowd monitoring system. IEEE Netw. 2022, 36, 90–96.
13. Tyagi, B.; Nigam, S.; Singh, R. A review of deep learning techniques for crowd behavior analysis. Arch. Comput. Methods Eng. 2022, 29, 5427–5455.
14. Qi, W.; Xu, X.; Qian, K.; Schuller, B.W.; Fortino, G.; Aliverti, A. A Review of AIoT-based Human Activity Recognition: From Application to Technique. IEEE J. Biomed. Health Inform. 2024, 29, 2425–2438.
15. Baidu, A.I. Baidu AI Open Platform n.d. Available online: https://ai.baidu.com/ (accessed on 20 August 2024).
16. Khan, M.A.; Menouar, H.; Hamila, R. LCDnet: A lightweight crowd density estimation model for real-time video surveillance. J. Real-Time Image Process. 2023, 20, 29.
17. Luo, L.; Xie, S.; Yin, H.; Peng, C.; Ong, Y.-S. Detecting and Quantifying Crowd-level Abnormal Behaviors in Crowd Events. IEEE Trans. Inf. Forensics Secur. 2024, 19, 6810–6823.
18. Alashban, A.; Alsadan, A.; Alhussainan, N.F.; Ouni, R. Single convolutional neural network with three layers model for crowd density estimation. IEEE Access 2022, 10, 63823–63833.
19. Zhao, R.; Wang, Y.; Jia, P.; Zhu, W.; Li, C.; Ma, Y.; Li, M. Abnormal behavior detection based on dynamic pedestrian centroid model: Case study on u-turn and fall-down. IEEE Trans. Intell. Transp. Syst. 2023, 24, 8066–8078.
20. Korbmacher, R.; Dang, H.-T.; Tordeux, A. Predicting pedestrian trajectories at different densities: A multi-criteria empirical analysis. Phys. A Stat. Mech. Its Appl. 2024, 634, 129440.
21. Xie, C.-Z.T.; Xu, J.; Zhu, B.; Tang, T.-Q.; Lo, S.; Zhang, B.; Tian, Y. Advancing crowd forecasting with graphs across microscopic trajectory to macroscopic dynamics. Inf. Fusion 2024, 106, 102275.
22. Sen, A.; Rajakumaran, G.; Mahdal, M.; Usharani, S.; Rajasekharan, V.; Vincent, R.; Sugavanan, K. Live event detection for people’s safety using NLP and deep learning. IEEE Access 2024, 12, 6455–6472.
23. Li, N.; Hou, Y.-B.; Huang, Z.-Q. Implementation of a Real-time Fall Detection Algorithm Based on Body’s Acceleration. J. Chin. Comput. Syst. 2012, 33, 2410–2413. (In Chinese)
24. Pan, D.; Liu, H.; Qu, D.; Zhang, Z. Human Falling Detection Algorithm Based on Multisensor Data Fusion with SVM. Mob. Inf. Syst. 2020, 2020, 8826088.
25. Li, J.; Huang, Q.; Du, Y.; Zhen, X.; Chen, S.; Shao, L. Variational Abnormal Behavior Detection with Motion Consistency. IEEE Trans. Image Process. 2022, 31, 275–286.
26. Guo, S.; Bai, Q.; Gao, S.; Zhang, Y.; Li, A. An Analysis Method of Crowd Abnormal Behavior for Video Service Robot. IEEE Access 2019, 7, 169577–169585.
27. Huo, F.Z. Research on evacuation of people in panic state considering rush behavior. J. Saf. Sci. Technol. 2022, 18, 203–209. (In Chinese)
28. Zhong, S. Panic Crowd Behavior Detection Based on Intersection Density of Motion Vector. Comput. Syst. Appl. 2017, 26, 210–214. (In Chinese)
29. Chang, C.-W.; Chang, C.-Y.; Lin, Y.-Y. A hybrid CNN and LSTM-based deep learning model for abnormal behavior detection. Multimed. Tools Appl. 2022, 81, 11825–11843.
30. Qiu, J.; Yan, X.; Wang, W.; Wei, W.; Fang, K. Skeleton-Based Abnormal Behavior Detection Using Secure Partitioned Convolutional Neural Network Model. IEEE J. Biomed. Health Inform. 2022, 26, 5829–5840.
31. Vinothina, V.; George, A. Recognizing Abnormal Behavior in Heterogeneous Crowd using Two Stream CNN. In Proceedings of the 2024 Asia Pacific Conference on Innovation in Technology (APCIT), Mysore, India, 26–27 July 2024.
32. Zhang, Y.; Zhou, D.; Chen, S.; Gao, S.; Ma, Y. Single-Image Crowd Counting via Multi-Column Convolutional Neural Network. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.
33. Ha, K.M. Reviewing the Itaewon Halloween crowd crush, Korea 2022: Qualitative content analysis. F1000Research 2023, 12, 829.
34. Idrees, H.; Tayyab, M.; Athrey, K.; Zhang, D.; Al-Maadeed, S.; Rajpoot, N.; Shah, M. Composition Loss for Counting, Density Map Estimation and Localization in Dense Crowds. arXiv 2018, arXiv:1808.01050.

| No. | Reference | Method | Data Source | Feature | Susceptible Factor |
|---|---|---|---|---|---|
| 1 | Zhao et al. [19] | OpenPose, dynamic centroid model | Experiment volunteers, a set of falling activity records | Acceleration, mass inertia of human body subsegments, and internal constraints | Simple group behavior patterns |
| 2 | Li et al. [23] | Decision tree classifier | Experiment volunteers, a set of falling activity records | Acceleration, tilting angle, and still time | Environment |
| 3 | Pan et al. [24] | Multisensor data fusion with Support Vector Machine (SVM) | Experiments with 100 volunteers | Acceleration | Multi-noise or multi-source environments |
| 4 | Li et al. [25] | Variational abnormal behavior detection (VABD) | UCSD, CUHK, Corridor, ShanghaiTech | Motion consistency | Sensitivity |
| 5 | Guo et al. [26] | Improved k-means | UMN | Velocity vector | Sensitivity |
| 6 | Huo [27] | Simulation | / | Move probability | Sensitivity |
| 7 | Zhong [28] | LK optical flow method | UMN | Intersection density | Environment |
| 8 | Chang et al. [29] | CNN and LSTM | Fall Detection Dataset | Fall, down | Real-time |
| 9 | Qiu et al. [30] | Partitioned Convolutional Neural Network | / | Cognitive impairment | Simple group behavior patterns |
| 10 | Vinothina et al. [31] | Two-stream CNN | Avenue Dataset | Racing, tossing objects, and loitering | False positives |

| No. | Event Type | Keyword | Turnout | Weight | No. | Event Type | Keyword | Turnout | Weight |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Medical accident | Murder | 152 | 0.51 | 2 | Stampede | Let me out | 13 | 0.04 |
| 3 | Medical accident | Stabbing | 76 | 0.25 | 4 | Stampede | Don’t push me | 32 | 0.11 |
| 5 | Medical accident | Help | 21 | 0.07 | 6 | Stampede | Someone fell | 57 | 0.19 |
| 7 | Medical accident | Pay with your life | 38 | 0.13 | 8 | Stampede | Trampled to death | 73 | 0.24 |
| 9 | Medical accident | Black-hearted | 2 | 0.01 | 10 | Stampede | Crushed to death | 53 | 0.18 |
| 11 | Medical accident | Disregard for human life | 7 | 0.02 | 12 | Stampede | Can’t breathe | 50 | 0.17 |
| 13 | Medical accident | Misdiagnosis | 4 | 0.01 | 14 | Stampede | Help | 22 | 0.07 |
| 15 | Disaster event | Landslide | 32 | 0.11 | 16 | Terrorist attack | Kidnapping | 42 | 0.14 |
| 17 | Disaster event | Earthquake | 57 | 0.19 | 18 | Terrorist attack | Explosion | 36 | 0.12 |
| 19 | Disaster event | Fire | 28 | 0.09 | 20 | Terrorist attack | Bomb | 48 | 0.16 |
| 21 | Disaster event | Mudslide | 23 | 0.08 | 22 | Terrorist attack | Gun | 47 | 0.16 |
| 23 | Disaster event | Flood | 45 | 0.15 | 24 | Terrorist attack | Poison gas | 35 | 0.11 |
| 25 | Disaster event | Tornado | 42 | 0.14 | 26 | Terrorist attack | Dead body | 26 | 0.09 |
| 27 | Disaster event | Tsunami | 73 | 0.24 | 28 | Terrorist attack | Murder | 66 | 0.22 |
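
The weights in the keyword table appear consistent with a simple normalization: each keyword's turnout divided by the total turnout of its event type (300 for every event type listed). The sketch below reproduces the Stampede column under that assumption. The rule itself and the names `ROWS` and `keyword_weights` are illustrative assumptions and are not taken from the paper. Under two-decimal rounding this rule matches the printed weights except for "Poison gas" (35 of 300), which it would give as 0.12 rather than the printed 0.11.

```python
from collections import defaultdict

# (event type, keyword, turnout) rows copied from the Stampede half of the table.
ROWS = [
    ("Stampede", "Let me out", 13),
    ("Stampede", "Don't push me", 32),
    ("Stampede", "Someone fell", 57),
    ("Stampede", "Trampled to death", 73),
    ("Stampede", "Crushed to death", 53),
    ("Stampede", "Can't breathe", 50),
    ("Stampede", "Help", 22),
]


def keyword_weights(rows):
    """Assumed rule: weight = keyword turnout / total turnout of its event type."""
    totals = defaultdict(int)
    for event, _keyword, count in rows:
        totals[event] += count
    return {
        (event, keyword): round(count / totals[event], 2)
        for event, keyword, count in rows
    }


weights = keyword_weights(ROWS)
for (event, keyword), weight in weights.items():
    print(f"{event:10s} {keyword:20s} {weight:.2f}")
```

Keying the result by (event type, keyword) keeps repeated keywords such as "Help", which appears under two event types, from overwriting each other.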

| Category | Parameter (Symbol) | Value/Range | Note |
|---|---|---|---|
| Arrival process | Arrival rate/ | 0.8–1.2 ped/s | 47 |
| Normal walking | Desired speed/ | 1.8 m/s | 24 |
| Panic trigger | Density threshold | 4 ped/m² | 15 |
| Panic walking | Desired speed/ | 2.8 m/s | Helbing social-force |
| Rendering | Frame rate | 25 FPS | H.264 export |
| Domain randomization | Speed noise | ±10% | Applied per agent |
| Output | Video clips | 160 | Final simulation dataset |
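
One way to read the parameter table is as a configuration for a per-agent speed rule: an agent walks at the normal desired speed until local density reaches the panic threshold, then switches to the panic desired speed, with ±10% noise applied per agent. The sketch below encodes that reading. The class and function names, the `>=` comparison at the threshold, and the uniform noise distribution are assumptions made for illustration; the table gives only the values.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class SimParams:
    # Values taken from the parameter table; field names are hypothetical.
    arrival_rate_range: tuple = (0.8, 1.2)  # ped/s
    normal_speed: float = 1.8               # m/s
    panic_speed: float = 2.8                # m/s
    density_threshold: float = 4.0          # ped/m^2
    speed_noise: float = 0.10               # +/-10 %, applied per agent
    frame_rate: int = 25                    # FPS of rendered clips


def desired_speed(local_density, params, rng):
    """Return a noisy desired speed for one agent.

    Assumption: panic starts when density is at or above the threshold,
    and the noise is uniform in [-speed_noise, +speed_noise].
    """
    if local_density >= params.density_threshold:
        base = params.panic_speed
    else:
        base = params.normal_speed
    return base * (1.0 + rng.uniform(-params.speed_noise, params.speed_noise))


params = SimParams()
rng = random.Random(0)  # fixed seed so runs are repeatable
calm = desired_speed(1.0, params, rng)
panicked = desired_speed(5.0, params, rng)
print(f"calm: {calm:.2f} m/s, panicked: {panicked:.2f} m/s")
```

Passing the random generator in explicitly keeps the rule deterministic under a fixed seed, which is convenient when regenerating a simulated dataset.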

| Dataset | Videos | ) | Panic Events |
|---|---|---|---|
| Stampede in Itaewon | 30 | 8.7 | 47 |
| UCF Crowd | 150 | 2.8 | 24 |
| Simulated Data | 160 | 4.0 | 15 |

| Metric | Value |
|---|---|
| Accuracy | 0.917 |
| Precision | 0.892 |
| Recall | 0.873 |
| F1-score | 0.882 |
| AUC-ROC | 0.920 |
| Mean end-to-end latency | 71 ms |
| 99th-percentile latency | 96 ms |
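
As a quick consistency check on the metrics table, the F1-score can be recomputed from the reported precision and recall using the standard harmonic-mean definition. The function name `f1_score` below is a local helper written for this check, not a library call.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the metrics table.
f1 = f1_score(0.892, 0.873)
print(round(f1, 3))  # 0.882
```

The recomputed value agrees with the reported F1-score of 0.882 to three decimals.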

| Model Configuration | Accuracy (%) | F1-Score (%) | Inference Speed (FPS) |
|---|---|---|---|
| Density-Only | 74.5 | 76.3 | 50 |
| Trajectory-Only | 77.2 | 79.1 | 48 |
| Semantic-Only | 72.8 | 74.9 | 53 |
| Density + Trajectory | 81.6 | 83.4 | 45 |
| Density + Semantic | 78.9 | 80.7 | 47 |
| Trajectory + Semantic | 76.5 | 78.2 | 49 |
| Full Model | 91.7 | 88.2 | 40 |
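
The ablation table can be summarized with a few lines of arithmetic: the gain of the full model over the best partial configuration, and the per-frame processing time implied by each inference speed. The sketch below assumes that the Inference Speed column is a throughput figure comparable to the 25 FPS frame rate of the rendered clips; the table does not state this explicitly, and the variable names are illustrative.

```python
# (accuracy %, F1 %, inference speed FPS) copied from the ablation table.
ABLATION = {
    "Density-Only": (74.5, 76.3, 50),
    "Trajectory-Only": (77.2, 79.1, 48),
    "Semantic-Only": (72.8, 74.9, 53),
    "Density + Trajectory": (81.6, 83.4, 45),
    "Density + Semantic": (78.9, 80.7, 47),
    "Trajectory + Semantic": (76.5, 78.2, 49),
    "Full Model": (91.7, 88.2, 40),
}
RENDER_FPS = 25  # frame rate of the rendered clips (assumed reference rate)


def per_frame_ms(fps):
    """Average processing time per frame implied by a throughput in FPS."""
    return 1000.0 / fps


# Best configuration that does not use all three feature groups.
best_partial = max(
    (name for name in ABLATION if name != "Full Model"),
    key=lambda name: ABLATION[name][0],
)
accuracy_gain = ABLATION["Full Model"][0] - ABLATION[best_partial][0]
f1_gain = ABLATION["Full Model"][1] - ABLATION[best_partial][1]

# Configurations whose throughput keeps up with the clip frame rate.
keeps_up = [name for name, (_, _, fps) in ABLATION.items() if fps >= RENDER_FPS]

print(f"best partial configuration: {best_partial}")
print(f"accuracy gain: {accuracy_gain:.1f} points, F1 gain: {f1_gain:.1f} points")
print(f"full model: {per_frame_ms(ABLATION['Full Model'][2]):.1f} ms per frame")
print(f"{len(keeps_up)} of {len(ABLATION)} configurations reach {RENDER_FPS} FPS")
```

Under this reading, the full model trades about 5 FPS against the best two-feature configuration for roughly 10 accuracy points while still processing frames faster than they are rendered.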

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhao, R.; Han, L.; Cai, Y.; Wei, B.; Rahman, A.; Li, C.; Ma, Y. Harnessing Semantic and Trajectory Analysis for Real-Time Pedestrian Panic Detection in Crowded Micro-Road Networks. Appl. Sci. 2025, 15, 5394. https://doi.org/10.3390/app15105394
