Abstract

This work presents an alternative method for defining feasible joint-space boundaries and their corresponding geometric workspace in a planar robotic system. Instead of relying on traditional numerical approaches that require extensive sampling and collision detection, the proposed method constructs a continuous boundary by identifying the key intersection points of boundary functions. The feasibility region is further refined through centroid-based scaling, addressing singularity issues and ensuring a well-defined trajectory. Comparative analyses demonstrate that the final robot pose and reachability depend on the selected traversal path, highlighting the nonlinear nature of the workspace. Additionally, an evaluation of traditional numerical methods reveals their limitations in generating continuous boundary trajectories. The proposed approach provides a structured method for defining feasible workspaces, improving trajectory planning in robotic systems.

1. Introduction

The determination of feasible workspaces in robotic manipulators presents a fundamental challenge in robot design and control, requiring precise mathematical characterization of operational limits while ensuring collision-free configurations. Traditional methodologies primarily rely on numerical approaches that, although effective in estimating workspaces, often suffer from inefficiencies and a lack precision when dealing with complex kinematic constraints. To address these issues, this work introduces a rigorous mathematical framework based on q-bound theorems, integrating geometric and kinematic constraints to improve accuracy and computational efficiency in workspace determination.

Several numerical methods have been extensively studied for workspace analysis, including algorithms designed to map manipulator workspace boundaries, probabilistic numerical analysis approaches [1], and Monte Carlo-based techniques [2]. These methodologies have been widely used to approximate workspace limits and singularity regions, demonstrating effectiveness in various robotic configurations. For instance, the Monte Carlo method proposes to enhance precision by leveraging Gaussian growth to refine workspace estimations. However, despite improving accuracy, its reliance on iterative random sampling limits its ability to produce continuous boundary trajectories. Similarly, the numerical algorithms developed by Haug et al. [3] employ a continuation method to systematically trace workspace boundaries, yet they require significant computational resources, particularly for kinematically redundant manipulators. Alternative strategies have been proposed to overcome these limitations, such as algebraic approaches for kinematic analysis [4] and probabilistic mapping methods for workspace determination [5].

To overcome these limitations, Ref. [6] proposed a mathematical formulation to define feasible, collision-free workspaces through boundary functions that account for both geometric constraints and internal link interactions. This approach is particularly relevant for mechanisms with parallelogram structures, where geometric modeling plays a crucial role in improving workspace representation and ensuring the accurate detection of feasible regions, thereby mitigating the inefficiencies associated with numerical sampling methods.

In addition, hybrid techniques have been explored to address workspace characterization. Li et al. [1] introduced a probabilistic approximation to generate boundary curves in workspaces; however, its dependence on randomization introduces potential inaccuracies, especially for manipulators with complex nonlinear constraints. Meanwhile, workspace determination methods for redundant manipulators, such as those proposed by Ginnante et al. [7], have been developed to reduce computational redundancy, but they often struggle to precisely define workspace boundaries when joint configurations exceed a certain degree of redundancy.

Structural optimization techniques have also been investigated to enhance workspace determination. Cervantes-Sánchez et al. [8] formulated workspace generation as a direct kinematic problem, reducing computational complexity while maintaining accuracy in identifying singularity curves. Similarly, topology optimization methods [9] have been utilized to improve workspace efficiency, though they introduce additional complexities in parametric system optimization. Algebraic formulations [10] further support workspace evaluation, providing efficient solutions for robotic design in constrained environments.

Apart from geometric and numerical considerations, the feasibility of a workspace is influenced by control strategies. The workspace observer method introduced by Oda et al. [11] presents a robust control strategy for redundant manipulators, focusing on real-time workspace regulation. However, such control-based approaches do not inherently define feasible workspaces and must be supplemented with mathematical workspace constraints, as examined in this study.

Alternative geometric transformations have also been proposed to tackle workspace determination challenges. The coverings transformation concept, explored by Rybak et al. [12], introduces an optimization-based method for approximating solutions to nonlinear inequalities that describe workspace constraints. Likewise, numerical approaches developed for parallel mechanisms [13] demonstrate that direct mapping techniques provide reliable workspace estimations but often involve significant computational loads. Structural synthesis methods [14] have also been introduced to systematically refine parallel robot architectures for improved workspace feasibility.

Another challenge in workspace determination involves identifying singularity curves and assembly configurations [8]. The characterization of singular configurations is essential for ensuring continuous, collision-free motion within the workspace, a concept that aligns with prior findings [3] where singular points were employed to construct precise workspace boundaries. Several studies have emphasized the role of Jacobian-based analysis in detecting singularities, particularly in complex parallel mechanisms, as demonstrated in [15], where a post-processing strategy was developed to improve control performance near singularities. Further studies on parasitic motion analysis [16] have provided insights into workspace constraints by leveraging constraint-embedded Jacobian formulations.

Furthermore, numerical ray-based methods, such as the one introduced by Ginnante et al. [7], offer an alternative approach for defining workspace limits in kinematically redundant manipulators. However, as noted by Ceccarelli [10], algebraic formulations provide more efficient methods for workspace evaluation and design.

In summary, the primary contribution of this work is the introduction of a rigorous mathematical framework for defining feasible, collision-free workspaces via the formulation of boundary functions that integrate kinematic, collision avoidance, and geometric constraints. Unlike traditional numerical methods—which rely on extensive iterative sampling and often yield fragmented boundaries—the proposed approach yields continuous and analytically tractable workspace boundaries. This study provides a clear and structured alternative to existing methods, thereby enhancing both the theoretical understanding and the practical efficiency of workspace determination.

To clarify the structure of the proposed methodology, a step-by-step process is followed throughout the manuscript. First, a standardized convention is established to define reference frames and spatial relationships in robotic manipulators. Then, a general model is developed to identify the conditions under which two parts of a robot may come into contact. Based on this, a set of constraints is formulated to determine whether a given robot configuration is valid and free of collisions. These constraints are categorized and evaluated to identify the limits of safe operation. A geometric region of feasible configurations is then constructed and adjusted to ensure safety margins and avoid singularities. Finally, the method is applied to a planar robot example, where different motion alternatives are analyzed and compared.

2. Vector Labeling Standard

To ensure clarity and consistency in the mathematical formulation of the proposed method, a structured vector labeling standard is adopted throughout this work. This convention enables the explicit representation of positions, directions, and reference frames involved in the kinematic chains, which are essential for formulating and evaluating boundary functions under geometric and collision constraints.

The notation employed here is aligned with the vectorial formalism introduced in [17], where directional vectors and reference frames are systematically labeled to support the construction of transformation matrices and constraint expressions. This standard has been particularly useful in the current study for expressing relative positions and orientations in a modular and traceable manner.

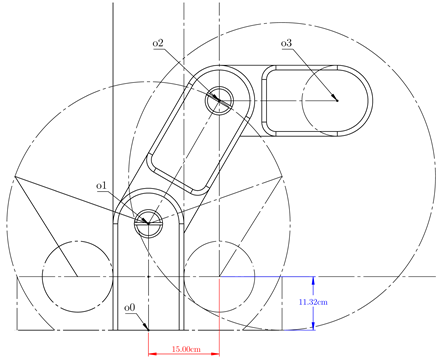

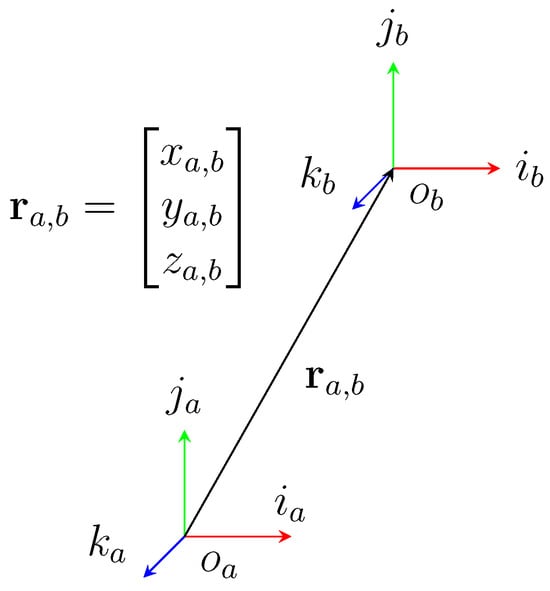

A graphical representation of the labeling convention is provided in Figure 1.

Figure 1.

Graphical representation of the vector labeling standard.

The following definitions summarize the adopted labeling scheme:

- A relative reference frame is denoted bywhere represents the orientation and position of frame b as observed from frame a. The matrix contains the orthonormal unit vectors (directional cosines) , and denotes the position vector of the origin of frame b with respect to frame a.When only one subscript is used, such as , the frame is considered to be self-referenced. In this case, the expression corresponds towhere , and corresponds to the canonical orientation of frame a with respect to itself.

- The vectors represent the directional cosines of frame a, forming an orthonormal basis. Orthonormality implies that the vectors are mutually perpendicular and of unit length.

- The vectors i, j, and k are visually distinguished in illustrations using the colors red, green, and blue, respectively, as shown in Figure 1.

- A position vector is represented aswhere a and b are the initial (origin) and the terminal (destination) points of the vector, respectively, while c is the observer frame from which the vector is expressed.The notation is interpreted as “the vector that connects the frame a to b, observed from frame c”. If the observer frame is omitted, it is assumed that all vectors are observed in a common reference frame.When only one subscript is provided, such as , the vector is interpreted as being self-referenced, i.e., , which by convention is equal to the zero vector:

- If all vectors are expressed with respect to a common observer, the superscript c may be omitted for simplicity.

- A kinematic chain is defined as an ordered sequence of reference frames that are linked by physical or geometric constraints. The relation between frames is denoted by the precedence operator:which indicates that frame a precedes frame b, and frame b precedes frame c in the kinematic structure.

- Subscripts a, b, and c may represent alphanumeric labels associated with a kinematic sequence and are not limited to numeric indices.

- The Cartesian components of a vector are denoted as

- The canonical orientation of a frame with respect to itself is defined asThis is referred to as the canonical orientation or canonical convention.

- Directional cosines of a frame b, as observed from frame a, are defined as

- The unit vector corresponding to is expressed as

This convention supports a rigorous and scalable construction of geometric constraints and kinematic relationships and has been applied throughout this manuscript to express boundary functions and configuration-dependent vector equations in a concise and interpretable form.

3. Theoretical Framework and Methodology

This section presents the theoretical framework and methodology for analyzing kinematic configurations and constraints in robotic manipulators. To ensure clarity in notation, vectors in will be represented as , while rotation matrices in will be denoted as R. Mathematical conditions are derived to prevent internal collisions, forming the basis for defining feasible workspaces across robotic systems.

In the context of kinematic chains for robotic manipulators, each link is represented as a set of points. The principal nodes a and b define the positions of the joints that connect one link to the next. Any point on the current link can be labeled as , where n denotes the index of the point relative to the node a. Similarly, the node b connects to the subsequent link, and its points are labeled as . The purpose of this work is to establish the conditions under which a collision occurs between a point on the current link and a point on the subsequent link. An example of this kinematic configuration is illustrated in Figure 2.

Figure 2.

Illustration of a kinematic chain is presented as a simplified example of a planar robot. The nodes a and b indicate the joint positions connecting consecutive links, while the points and are identified as collision points between adjacent links.

To describe the feasible workspace, we define a family of boundary functions denoted as , where each scalar function evaluates the feasibility of the manipulator’s configuration with respect to specific constraints.

Each scalar component is interpreted as

In addition, each boundary function component may be bounded using a three-index notation , where the following are defined:

- i indicates the index of the boundary function;

- j refers to the vector component (e.g., x, y, or z);

- denotes the type of bound: 1 for the upper (right) bound and 2 for the lower (left) bound.

These functions encapsulate three main types of constraints:

- Kinematic Constraints: These ensure that the joint variables respect their operational limits:

- Collision Avoidance Constraints: These prevent intersections between links by requiring a minimum separation distance between key points on different bodies:

- Geometric Constraints: These define the external spatial boundaries of the workspace imposed by the robot’s design or environment:

To organize these constraints, we distinguish between two categories of boundary functions:

- Point Boundary Functions, which evaluate spatial relationships between pairs of points (e.g., potential contact or collision points between links). These are typically defined through vector differences between transformed local positions and used to ensure separation between mechanical components.

- Geometric Boundary Functions, which impose spatial limits on the manipulator’s motion by constraining the position of specific links or end effectors relative to predefined geometric boundaries. These are often formulated using projection matrices and fixed spatial references.

Using these definitions, the boundary functions serve as mathematical tools for systematically evaluating whether a given configuration satisfies the required motion constraints while remaining within a collision-free, feasible workspace.

The first step in ensuring that the manipulator operates safely and within a feasible workspace is to define the mathematical conditions under which collisions between links occur. These conditions are crucial for analyzing and understanding the interactions between the kinematic elements of the manipulator. The subsequent collision model generalizes these conditions, providing a foundational framework for identifying all potential collision scenarios. Finally, these generalized insights are used to construct boundary functions, which incorporate kinematic, collision avoidance, and geometric constraints to define the feasible workspace free of collisions.

To formalize the analysis, the following theorem generalizes the collision model for points within a kinematic chain.

Theorem 1.

Let the kinematic chain be defined, where 0 represents the base system, a and b are systems with variable position and orientation relative to each other, and and denote points with constant position and orientation within their respective local systems a and b.

A collision between the points and is said to occur if and only if the relative vector between these points equals a null vector:

wherein this condition is equivalent to the norm of the relative vector being null:

and under this condition, the relative position between the points is expressed as

where is the rotation matrix that transforms the coordinates from b to a, represents the relative position vector between a and b, denotes the local position of relative to a, and refers to the local position of relative to b. The condition for collision can also be expressed in terms of the boundary function , which depends on the joint variables . This boundary function is defined as

A collision occurs if and only if

The collision condition implies that all components of the vector are null, ensuring the positional coincidence of the points involved.

Proof of Theorem 1.

To derive the condition for a collision, we begin with the general formula for relative positions in a kinematic chain:

where is the relative vector between systems a and b, is the vector between a and , is the vector between and b, and is the rotation matrix that transforms coordinates from to a. While this relationship can be recursively extended for longer chains, we focus here on the consecutive points and for simplicity.

The relative position between and can be expressed as the difference between their global positions:

Using the transformations for global positions, we write

Substituting these expressions into the relative position equation yields

Simplifying this expression, we have

Recognizing that can be rewritten as , we substitute and simplify to yield

Factoring out , we obtain

The collision condition implies that the term in parentheses must also be null and defined as follows:

We now define the boundary function that encapsulates the condition for collision in terms of the joint variables :

Thus, a collision occurs if and only if

This formulation ensures that the collision condition is expressed as a function of the joint variables, linking the geometry of the system to the kinematic configuration. Since the null vector ensures positional coincidence, the theorem is validated. □

Note that this approach facilitates a straightforward implementation of collision checks and the definition of boundaries, which are critical in the modeling of feasible workspaces and the optimization of manipulator trajectories. A significant simplification is achieved in the analysis of relative kinematic chains by avoiding the inherent complexity associated with homogeneous transformations and the Denavit–Hartenberg (DH) formalism. By focusing exclusively on the geometric relationships between consecutive systems, the need for complete transformation matrices is eliminated. Consequently, a more intuitive and computationally efficient model is provided, which is particularly advantageous in scenarios requiring rapid calculations and analytical clarity.

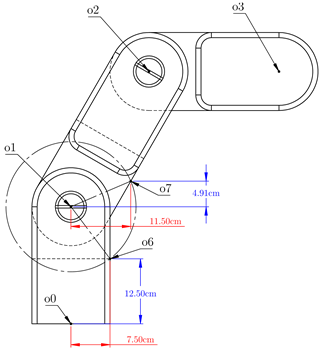

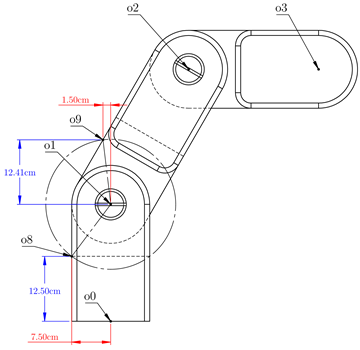

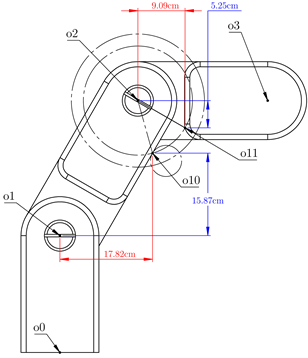

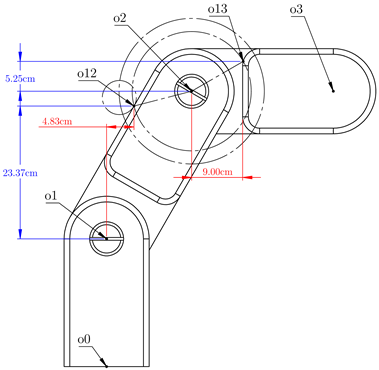

Case Study: Planar Robot

The planar robot was analyzed as a case study to illustrate the kinematic and collision constraints in robotic manipulators. Its structure consists of a two-degree-of-freedom mechanism, where and correspond to rotations about the y axis. This configuration allows for a simplified representation of kinematic interactions while preserving essential characteristics for evaluating workspace feasibility and self-collision conditions. Figure 2 presents an isometric view of the robot, depicting its geometric arrangement and joint distribution.

This model is particularly useful for demonstrating the role of boundary conditions in defining feasible workspaces. The kinematic relationships between its links provide a structured approach for verifying self-collision criteria, as they depend on the relative positioning of consecutive elements in the manipulator’s chain. In this context, the feasible workspace is determined by considering the relative position vector between key reference points along the structure.

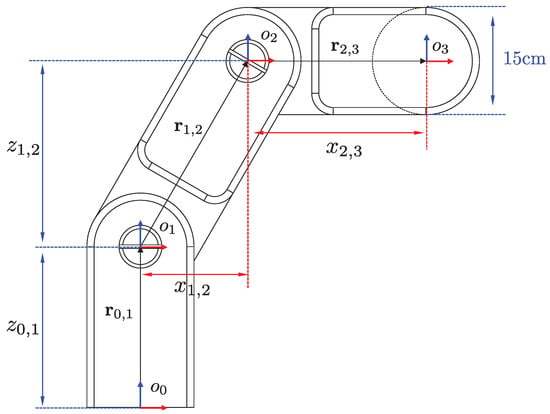

The kinematic chain of the planar robot, depicted in Figure 3, provides a structured representation of the positional relationships and rotational axes of its joints. The degrees of freedom are defined as follows:

Figure 3.

Representation of the kinematic chain for MinervaBotV2.

- : Represents a rotational motion about the y axis at the base.

- : Represents an additional rotation about the y axis at the second joint, determining the final positioning of the end effector.

By formulating the manipulator’s motion in terms of relative position vectors and transformation matrices, it is possible to define a set of constraints that delineate the boundary of the feasible workspace. The self-collision condition is verified through a function that depends on the joint variables , ensuring that the relative displacement between reference points remains within allowable limits. The analysis of this system provides insight into the general methodology used to evaluate collision-free trajectories in robotic mechanisms. To support this analysis, Table 1 presents the geometric parameters that define the relative positioning of the robot’s joints, while Table 2 details the recursive formulation of the direct kinematics equations, describing the position and orientation of each joint in terms of preceding transformations.

Table 1.

Geometric offsets of planar robot.

Table 2.

Direct kinematics equations in recursive notation.

4. Boundary Functions for Workspace Definition

The feasible workspace of the planar robot is determined by analyzing its geometric and kinematic constraints. To accurately characterize this workspace, boundary functions are derived to define the permissible motion range while preventing self-collisions. These functions capture the spatial relationships imposed by the robot’s structure, joint limits, and possible internal interferences. The formulation of these boundary conditions is guided by the theoretical framework established in Theorem 1, which provides the necessary conditions for detecting collisions within the kinematic chain. However, this theorem is not the sole tool employed in the analysis. Additional kinematic formulations, geometric considerations, and spatial transformations are integrated to comprehensively define the feasible workspace. The combination of these methods ensures a robust characterization of motion constraints, allowing for a detailed evaluation of collision-free configurations.

To systematically define these constraints, four tables are presented, each illustrating the computation of boundary functions along with a diagram marking critical collision points in the planar robot. These functions correspond to what are referred to as point boundary functions, where each function represents a specific constraint associated with a given collision point. In this type of boundary function, all components indexed by j must be satisfied simultaneously to confirm the occurrence of a collision. This simultaneous fulfillment is necessary because each corresponds to a different coordinate component, meaning that the collision is only valid when the spatial conditions for all coordinates hold true at the same time. By enforcing these constraints, it is possible to determine precise conditions under which the manipulator self-intersects at distinct locations in its configuration space.

In addition to these point boundary functions, a final table is included to introduce a different category of constraints known as geometric boundary functions. Unlike point boundary functions, these functions do not necessarily require all components to be satisfied simultaneously, as they do not define collisions at a discrete location but rather establish broader geometric constraints. Geometric boundary functions define spatial limits that the manipulator must respect, preventing it from exceeding predefined regions in its workspace. Since these constraints refer to entire geometric boundaries rather than discrete points, the conditions imposed by each function may be satisfied independently. This distinction highlights the difference between collision detection at specific points and the enforcement of global geometric restrictions that ensure safe operation.

The tables presenting the first four point boundary functions are labeled as Table 3 and Table 4. The final table, labeled as Table 5, provides the geometric boundary functions that define broader workspace constraints. Together, these boundary functions offer a structured method for computing the complete feasible workspace, ensuring that the manipulator operates within a collision-free region while supporting trajectory planning and operational reliability.

Table 3.

Point boundary functions 1, 2, and 3.

Table 4.

Point boundary function 4.

Table 5.

Geometric boundary functions 5, 6, and 7.

The boundary functions obtained from the previous analysis are presented as follows, encapsulating the constraints derived from the kinematic and geometric relationships of the planar robot. These functions define the conditions that must be satisfied to ensure a collision-free workspace while maintaining the feasible motion range of the manipulator, and they are presented below:

To further characterize the motion constraints imposed by these boundary functions, the next step involves defining upper and lower bounds for each function. These bounds, denoted as , represent the k-th constraint imposed on the j-th component of the boundary function associated with the i-th collision condition. By establishing these bounds, the feasible motion range of the planar robot is precisely quantified, ensuring a structured approach to workspace determination.

Several of the boundary functions obtained in the analysis are expressed as linear combinations of cosine and sine terms. Therefore, it is necessary to establish a general method for determining the range of values that the variable q can assume while satisfying these constraints. The following theorem provides an explicit formulation for these bounds, enabling a systematic computation of the feasible joint configurations.

Theorem 2.

Given the equation of the form

where and c are real numbers, and q is the unknown variable to be determined, it follows that the solution for q is bounded by

where accounts for the periodic nature of the trigonometric functions.

Proof of Theorem 2.

The given equation

is rewritten using the trigonometric identity

where d and are defined as

by dividing both sides of the equation by d, it follows that

for real solutions to exist, the term must satisfy the condition

taking the inverse cosine function on both sides, the expression

is obtained. Solving for q, the result is

thus, it is concluded that q is bounded by

this completes the proof. □

The upper and lower bounds for each boundary function are defined in Table 6. These bounds are established to determine the feasible range within which the functions operate, ensuring consistency in constraint evaluation. The right-bounded functions correspond to the upper limits, whereas the left-bounded functions define the lower limits, allowing for a comprehensive characterization of the solution space.

Table 6.

Boundary function bounds.

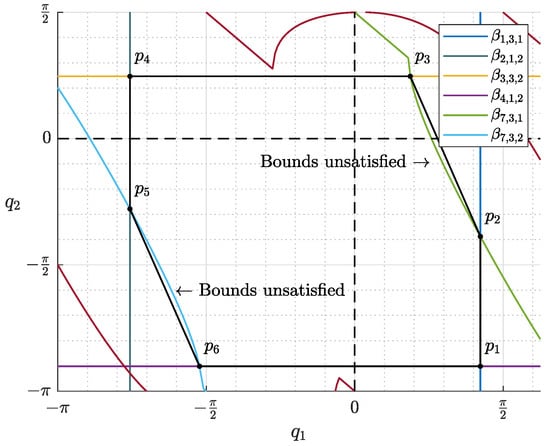

Figure 4 illustrates the graphical representation of the boundary functions. The red lines correspond to functions that are not considered in the construction of the feasible region, whereas the cyan lines represent the functions that define this region.

Figure 4.

Graphical representation of the boundary functions. Red lines indicate functions that are not considered in defining the feasible region, while cyan lines represent the functions used to construct it. The selected feasible region is determined by the boundary functions , , , , , and .

Although multiple feasible regions exist, reaching them from the home pose of the planar robot would require crossing or making contact with boundary functions, which would impose constraints on the motion. For this reason, the selection of the feasible region shown in the figure is justified.

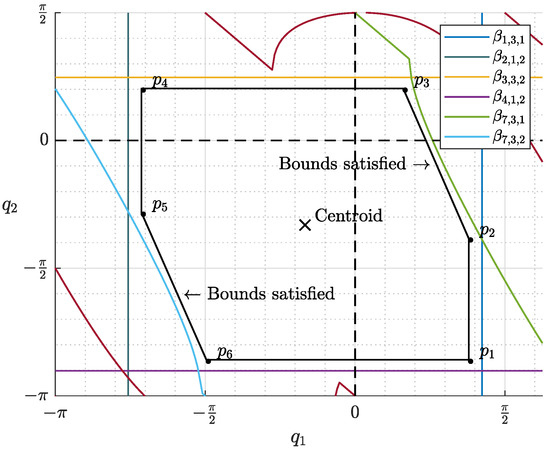

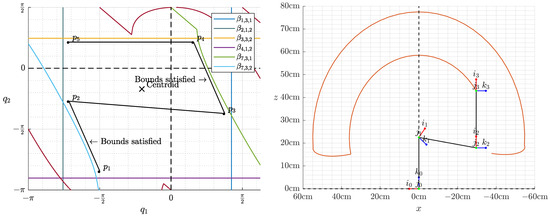

As observed in Figure 4, the presence of multiple boundary functions complicates the identification of a unique solution. However, a feasible region can be constructed by identifying the intersection points of the selected boundary functions, forming a continuous boundary, as illustrated in Figure 5. This boundary is defined by six intersection points.

Figure 5.

Construction of the feasible region by identifying the intersection points of the selected boundary functions. The continuous boundary is formed by six intersection points, ensuring a well-defined feasible area for the robot’s motion.

Nevertheless, the nonlinearities of certain functions prevent their respective constraints from being fully satisfied. This issue can be addressed through two possible approaches. The first approach involves incorporating additional tangent points along the sections of the functions where the constraints are not met. The second approach, as depicted in Figure 6, consists of computing the centroid of the feasible region and scaling it with respect to this point.

Figure 6.

Scaling of the feasible region with respect to its centroid. This approach ensures that the boundary functions do not directly imply collision while introducing a safety margin for the robot’s operation. The scaling factor acts as a safeguard to maintain a collision-free workspace.

The latter approach also resolves an additional issue: while the boundary functions define the safe region, they inherently imply potential collisions. By applying a scaling factor, a safety margin is introduced between the robot and the boundary functions. This scaling coefficient acts as a safety factor, ensuring a collision-free operational space.

Figure 6 not only presents the closed and continuous boundary of the joint limits but also proposes a sequence for traversing these limits. However, Figure 7 illustrates the trajectory of these six intersection points in the geometric space, along with a simplified diagram of the planar robot in its final pose after completing the trajectory. It is important to note that, although Figure 6 defines an ordered sequence for the boundary traversal, this does not inherently guarantee a structured or sequential trajectory in the geometric space.

Figure 7.

Trajectory of the six intersection points in the geometric space, including the simplified diagram of the planar robot in its final pose.

Figure 8 presents two plots. The first illustrates a nonclosed sequence of five points, while the second depicts the simplified diagram of the planar robot in its final pose after completing the trajectory. In this case, the trajectory is structured but remains open.

Figure 8.

Nonclosed trajectory consisting of five points. The first plot represents the joint space sequence, while the second illustrates the planar robot in its final pose after completing the trajectory. Despite following an ordered sequence, the trajectory remains open.

This phenomenon can be explained as follows: the workspace with the greatest reach is directly linked to the robot’s links when they become collinear. However, this configuration results in a joint singularity. This issue was indirectly resolved by scaling the feasible joint region with respect to its centroid, ensuring a structured path.

As a result, two distinct approaches exist for traversing the feasible geometric space along the right or the left side. This choice determines the final pose of the robot as well as its reach. Due to the limited workspace, the final link cannot transition to its mirrored pose within the same trajectory.

This second alternative is illustrated in Figure 9, which contains two plots. The first represents a joint trajectory consisting of five points, while the second shows the corresponding geometric trajectory. These paths are opposite to those presented in Figure 8.

Figure 9.

Alternative feasible trajectory. The first plot depicts a five-point joint space path, while the second shows its corresponding geometric trajectory.

The feasible geometric regions from Figure 8 and Figure 9 are overlaid in Figure 10. Notably, these regions do not exhibit symmetry, which is a result of the scaling applied relative to the centroid and the nonlinear properties inherent to the trajectory.

Figure 10 highlights the application of traditional numerical methods for solving this type of problem. Initially, a set of n random joint variables was generated. Following a conventional approach, each detected collision was marked with a red point, while noncolliding configurations were represented by blue points. These methods, however, entail a high computational cost and do not produce a continuous boundary trajectory in the joint space. Their efficiency is directly dependent on the volume of analyzed data.

Figure 11 presents two plots from this experiment: the first in the joint space and the second in the geometric space. In the latter, overlapping regions containing both collisions and noncollisions can be observed. This phenomenon is associated with the poses and trajectory patterns described in Figure 8 and Figure 9. Additionally, the lateral shapes of the geometric workspace, as seen in Figure 7, are also visible.

Figure 11.

Numerical collision detection results. The first plot shows joint space sampling with red points for collisions and blue points for noncollisions. The second plot illustrates the corresponding geometric space, highlighting overlapping regions and the lateral shapes of the feasible workspace.

Although the methodology presented in this work is exemplified using rotational joints in planar mechanisms, the proposed boundary function formulation is not limited to revolute joints. In the case of translational (prismatic) joints, the joint variable affects the position vectors linearly instead of trigonometrically. As a result, the same structure of boundary functions remains applicable, and the constraints reduce to affine relationships in the configuration space.

Building on this foundation, the method is being extended to more complex manipulator configurations. Its applicability to spatial mechanisms, including those incorporating hybrid kinematic structures and parallelogram-based linkages, is currently under investigation. In such cases, boundary functions defined as represent implicit hypersurfaces in high-dimensional configuration spaces. These surfaces can be visualized using established computational techniques, such as isosurface extraction (e.g., marching cubes or adaptive grid subdivision), which are suitable for feasibility analysis in spatial domains.

To maintain computational efficiency, the evaluation of boundary functions can be restricted to lower-dimensional joint subspaces or slices, avoiding the need for exhaustive random sampling. In this way, the deterministic and symbolic character of the formulation is preserved while enabling scalability to more complex robotic systems. Moreover, data-driven parameterization of boundary surfaces is currently being explored as part of a broader strategy to generalize the framework while retaining its analytical structure.

5. Conclusions

This study introduced a structured mathematical framework for defining feasible joint-space boundaries and their corresponding geometric workspaces through the formulation of boundary functions . Unlike traditional numerical methods that rely on extensive sampling and collision detection, the proposed approach constructs continuous workspace boundaries by identifying and connecting critical intersection points derived from geometric and kinematic constraints. The boundary function formulation provides an explicit representation of geometric and collision constraints, offering analytical insights that complement existing numerical approaches.

The effectiveness of the methodology was demonstrated using a planar robot case study, showing how the feasible joint-space region can be scaled with respect to its centroid to resolve singularities and generate structured trajectories. The analysis of different traversal paths revealed that the final pose and reachability of the manipulator are highly dependent on the selected trajectory and that such paths are generally asymmetric due to the inherent nonlinearities of the system and the scaling process. This formulation contrasts with traditional numerical approaches by offering an explicit, constraint-driven modeling alternative.

A broader set of applications is being investigated in a subsequent study, in which the present formulation serves as the mathematical foundation. In particular, the extension to spatial manipulators and hybrid architectures is being considered, incorporating data-driven parameterization and visualization of boundary surfaces to support higher-dimensional configurations. These developments build upon the theoretical contributions introduced in this work, establishing a foundation for generalizing the proposed method to a wider class of robotic systems.

Author Contributions

Conceptualization, J.A.L., L.F.L.-V. and H.A.G.-O.; methodology, J.A.L., E.L.-N. and M.E.M.-R.; software, D.M.N. and J.A.L.; validation, L.F.L.-V., L.E.G.-J. and S.C.-T.; formal analysis, J.A.L. and R.C.-N.; investigation, D.M.N., S.C.-T. and M.E.M.-R.; resources, J.A.L.; writing—original draft preparation, D.M.N. and J.A.L.; writing—review and editing, L.E.G.-J., L.F.L.-V. and H.A.G.-O.; visualization, D.M.N. and R.C.-N.; supervision, J.A.L., S.C.-T. and M.E.M.-R.; project administration, M.E.M.-R. and J.A.L.; funding acquisition, J.A.L. and E.L.-N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The data presented in this study are available upon request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Li, X.J.; Cao, Y.; Yang, D.Y. A Numerical-Analytical Method for the Computation of Robot Workspace. In Proceedings of the Multiconference on “Computational Engineering in Systems Applications”, Beijing, China, 4–6 October 2006; Volume 1, pp. 1082–1086. [Google Scholar] [CrossRef]

- Peidró, A.; Reinoso, Ó.; Gil, A.; Marín, J.M.; Payá, L. An improved Monte Carlo method based on Gaussian growth to calculate the workspace of robots. Eng. Appl. Artif. Intell. 2017, 64, 197–207. [Google Scholar] [CrossRef]

- Haug, E.J.; Luh, C.M.; Adkins, F.A.; Wang, J.Y. Numerical Algorithms for Mapping Boundaries of Manipulator Workspaces. J. Mech. Des. 1996, 118, 228–234. [Google Scholar] [CrossRef]

- Gallardo-Alvarado, J. Kinematic Analysis of Parallel Manipulators by Algebraic Screw Theory; Springer: Berlin/Heidelberg, Germany, 2016. [Google Scholar]

- Kucner, T.P.; Lilienthal, A.J.; Magnusson, M.; Palmieri, L.; Swaminathan, C.S. Probabilistic Mapping of Spatial Motion Patterns for Mobile Robots. IEEE Robot. Autom. Lett. 2020, 5, 1500–1507. [Google Scholar] [CrossRef]

- Merlet, J.P. Parallel Robots; Springer: Berlin/Heidelberg, Germany, 2006. [Google Scholar]

- Ginnante, A.; Caro, S.; Simetti, E.; Leborne, F. Workspace Determination of Kinematic Redundant Manipulators Using a Ray-Based Method. In Proceedings of the ASME 2023 International Design Engineering Technical Conferences and Computers and Information in Engineering Conference (IDETC-CIE), Boston, MA, USA, 20–23 August 2023; Volume 8: 47th Mechanisms and Robotics Conference. p. V008T08A016. [Google Scholar] [CrossRef]

- Cervantes-Sánchez, J.; Hernández-Rodríguez, J.; Rendón-Sánchez, J. On the workspace, assembly configurations and singularity curves of the RRRRR-type planar manipulator. Mech. Mach. Theory 2000, 35, 1117–1139. [Google Scholar] [CrossRef]

- Wang, X.; Zhang, D.; Zhao, C.; Zhang, P.; Zhang, Y.; Cai, Y. Optimal design of lightweight serial robots by integrating topology optimization and parametric system optimization. Mech. Mach. Theory 2019, 132, 48–65. [Google Scholar] [CrossRef]

- Ceccarelli, M. Workspace analysis and design of open-chain manipulators. AIP Conf. Proc. 1998, 437, 388–405. [Google Scholar] [CrossRef]

- Oda, N.; Murakami, T.; Ohnishi, K. A Robust Control Strategy of Redundant Manipulator by Workspace Observer. J. Robot. Mechatron. 1996, 8, 235–242. [Google Scholar] [CrossRef]

- Rybak, L.A.; Behera, L.; Averbukh, M.A.; Sapryka, A.V. A novel approach for coverings transformation to define a robot workspace. IOP Conf. Ser. Mater. Sci. Eng. 2020, 945, 012075. [Google Scholar] [CrossRef]

- Zhou, Y.; Niu, J.; Liu, Z.; Zhang, F. A novel numerical approach for workspace determination of parallel mechanisms. J. Mech. Sci. Technol. 2017, 31, 3005–3015. [Google Scholar] [CrossRef]

- Gogu, G. Structural Synthesis of Parallel Robots: Part 1: Methodology. In Solid Mechanics and Its Applications; Springer: Dordrecht, The Netherlands, 2008; Volume 149. [Google Scholar] [CrossRef]

- Wang, D.; Wu, J.; Wang, L.; Liu, Y. A Postprocessing Strategy of a 3-DOF Parallel Tool Head Based on Velocity Control and Coarse Interpolation. IEEE Trans. Ind. Electron. 2018, 65, 6333–6342. [Google Scholar] [CrossRef]

- Nigatu, H.; Choi, Y.H.; Kim, D. Analysis of Parasitic Motion with the Constraint Embedded Jacobian for a 3-PRS Parallel Manipulator. Mech. Mach. Theory 2021, 157, 104181. [Google Scholar] [CrossRef]

- Lizarraga, J.A.; Garnica, J.A.; Ruiz-Leon, J.; Munoz-Gomez, G.; Alanis, A.Y. Advances in the Kinematics of Hexapod Robots: An Innovative Approach to Inverse Kinematics and Omnidirectional Movement. Appl. Sci. 2024, 14, 8171. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).