FLE-YOLO: A Faster, Lighter, and More Efficient Strategy for Autonomous Tower Crane Hook Detection

Abstract

1. Introduction

- (1)

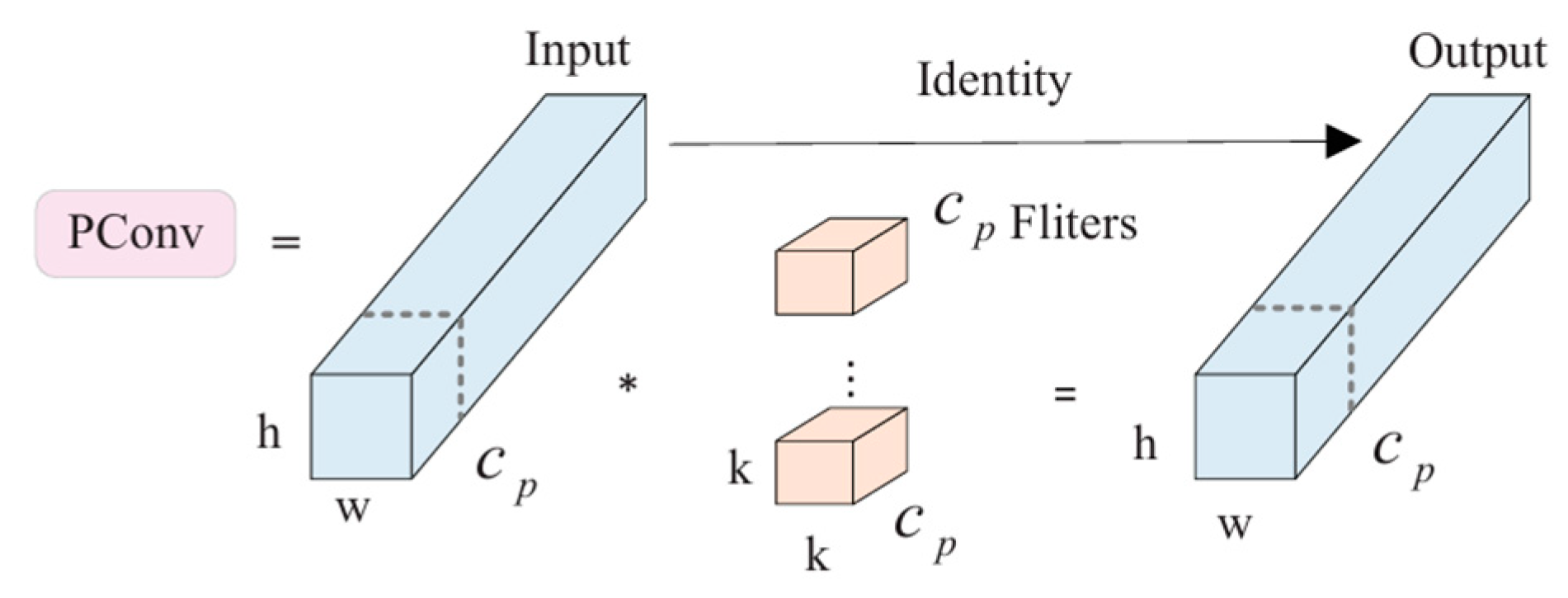

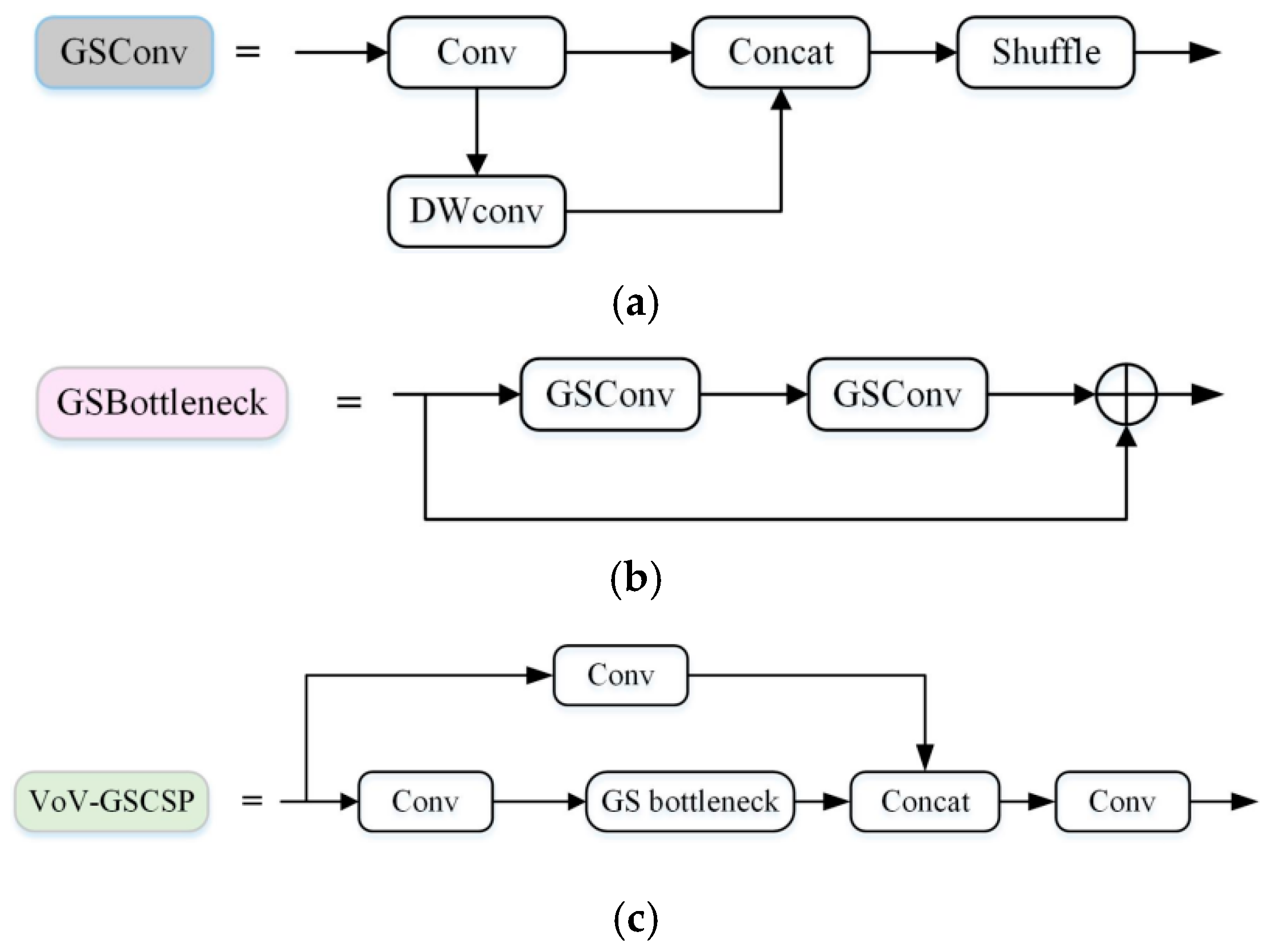

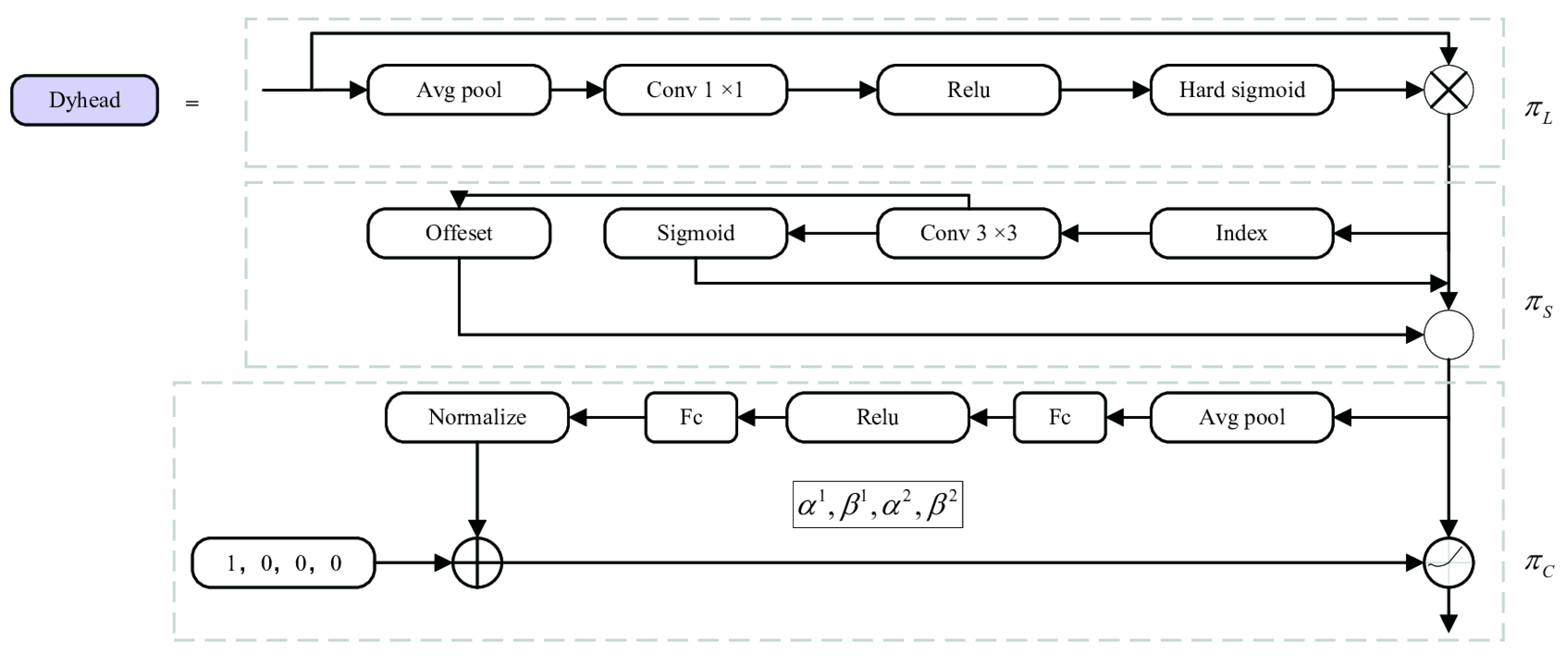

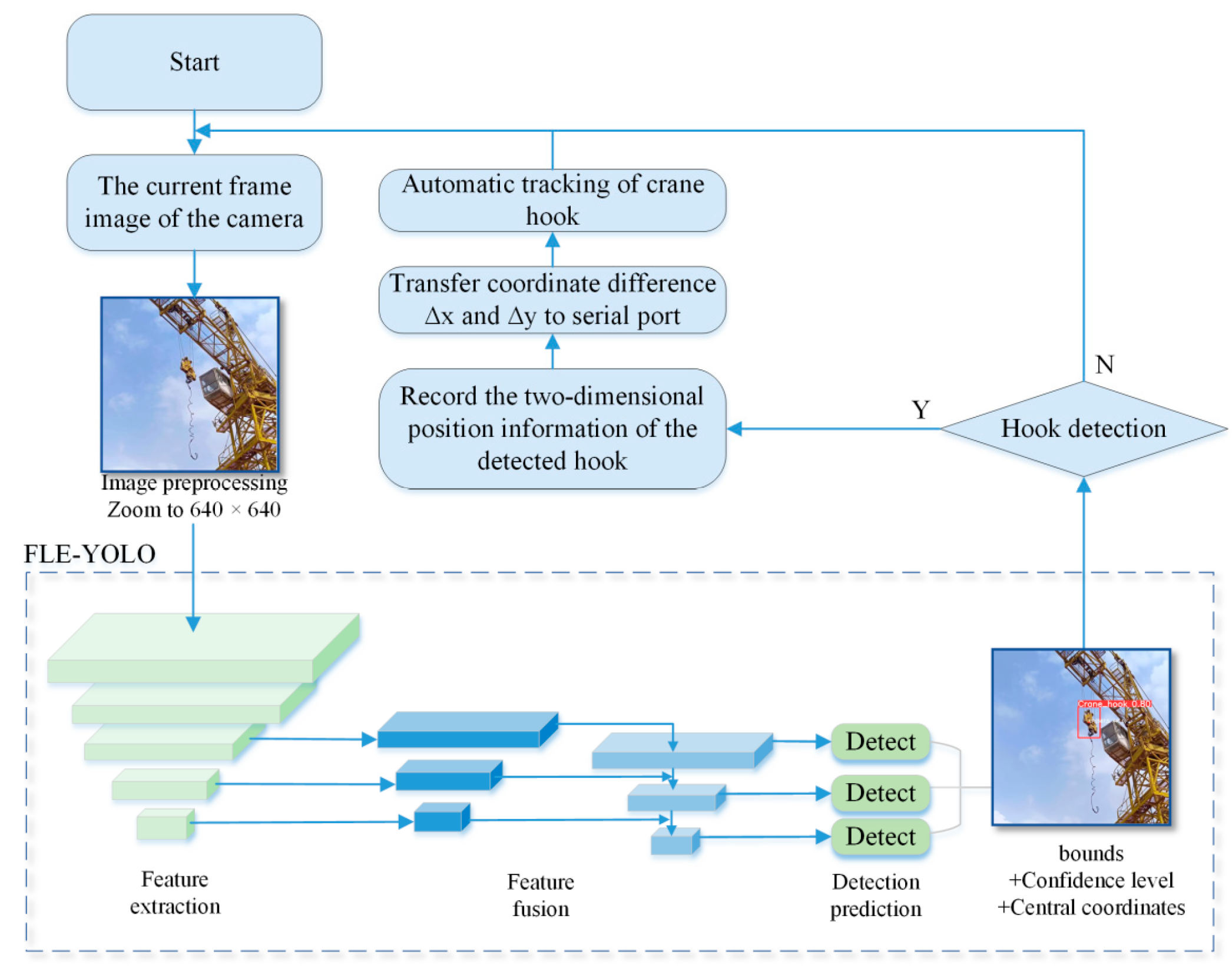

- A novel object detection model FLE-YOLO is proposed, which adopts the FasterNet lightweight backbone network and introduces the Triplet Attention module. By focusing on the triplet interaction of spatial dimensions H, W, and channel dimension C, the feature expression of key areas of the hook is strengthened. Simultaneously, Slimneck is utilized to embed VoV-GSCSP and GSConv into the neck structure for bottom-up and top-down feature fusion. Introducing Dyhead into the head module, its unified structure of spatial, scale, and task perception can comprehensively learn target features, balancing detection accuracy and lightness.

- (2) To validate the effectiveness of each module, heat maps are generated to assess the effect of the Triplet Attention and Dyhead attention mechanisms on crane hook detection. The activations in the FLE-YOLO heat maps are more concentrated on the hook region than those of the original algorithm. Ablation studies further quantify the contribution of each module to the overall network using standard performance metrics.

- (3) Extensive experiments on the large-scale COCO2017 and VOC2012 datasets show that FLE-YOLO reduces computational complexity and parameter count and increases detection speed relative to the original network. On a self-collected tower crane hook dataset, compared with the original algorithm, FLE-YOLO reduces computational complexity from 28.4 to 19.4 GFLOPs, raises detection speed from 95.238 to 142.857 FPS, keeps precision unchanged at 97.3%, improves AP50 to 98.3% (an increase of 0.6 percentage points), and reduces the parameter count to 7.588 M (a reduction of 3.538 M).

- (4) For on-site testing at a construction site, a visual detection and tracking scheme is proposed: a visual monitoring interface is established, and hooks are identified and tracked efficiently, improving both the efficiency and the safety of tower crane operations.

2. Techniques and Approaches

2.1. Object Detection Algorithms

2.2. Tower Crane Safety Status Monitoring System

3. Dataset Processing

3.1. Collection of Tower Crane Hook Datasets

3.2. Dataset Construction

4. The Proposed Method

4.1. Backbone Feature Extraction Network

4.1.1. Adding FasterNet Backbone
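
FasterNet [19] is built around partial convolution (PConv), which applies a regular convolution to only a fraction of the input channels and passes the remaining channels through unchanged. The sketch below is a back-of-the-envelope calculation, not the authors' code: it compares the multiply-accumulate count of a dense 3 × 3 convolution with that of a PConv using the partial ratio of 1/4 suggested in the FasterNet paper. The 80 × 80 × 128 feature-map size is an arbitrary example, and the helper names are illustrative.

```python
def conv_flops(h, w, k, c_in, c_out):
    """Multiply-accumulates of a dense k x k convolution with stride 1 and
    'same' padding on an h x w feature map (bias ignored)."""
    return h * w * k * k * c_in * c_out


def pconv_flops(h, w, k, c, ratio=0.25):
    """Partial convolution: a regular k x k conv on the first c_p = ratio * c
    channels only; the other channels are copied through at no FLOP cost."""
    c_p = int(c * ratio)
    return conv_flops(h, w, k, c_p, c_p)


dense = conv_flops(80, 80, 3, 128, 128)
partial = pconv_flops(80, 80, 3, 128)
print(dense, partial, dense / partial)  # prints: 943718400 58982400 16.0
```

Because the cost scales with c_p², a ratio of 1/4 gives a 16-fold reduction for the convolved layer alone; in FasterNet the PConv is followed by pointwise convolutions, so the saving for a whole block is smaller.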

4.1.2. Triplet Attention Mechanism
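
Triplet Attention [20] uses three branches. Each branch rotates the C × H × W input so that a different pair of dimensions interacts, compresses the remaining dimension with a Z-pool (the concatenated max and mean along that dimension), and then applies a k × k convolution, batch normalization, and a sigmoid gate; the three re-weighted outputs are averaged. The pure-Python sketch below illustrates only the rotation and Z-pool stage on a nested-list tensor, plus a parameter count that assumes, as in the Triplet Attention paper, a bias-free 7 × 7 convolution and one batch-normalization channel with learnable scale and shift per branch. It is not the FLE-YOLO implementation, and the function names are illustrative.

```python
from statistics import mean


def z_pool(maps):
    """Z-pool: given a list of equally sized 2-D maps (the dimension being
    compressed), return [max_map, mean_map], i.e. a 2 x A x B descriptor."""
    rows, cols = len(maps[0]), len(maps[0][0])
    max_map = [[max(m[i][j] for m in maps) for j in range(cols)] for i in range(rows)]
    mean_map = [[mean(m[i][j] for m in maps) for j in range(cols)] for i in range(rows)]
    return [max_map, mean_map]


def triplet_descriptors(x):
    """x is a C x H x W nested list. Returns the Z-pooled input of each branch."""
    C, H, W = len(x), len(x[0]), len(x[0][0])
    # Branch 1: rotate so H leads, then pool over H -> 2 x C x W (C-W interaction).
    h_first = [[[x[c][h][w] for w in range(W)] for c in range(C)] for h in range(H)]
    # Branch 2: rotate so W leads, then pool over W -> 2 x H x C (H-C interaction).
    w_first = [[[x[c][h][w] for c in range(C)] for h in range(H)] for w in range(W)]
    # Branch 3: no rotation, pool over C -> 2 x H x W (ordinary spatial attention).
    return {"C-W": z_pool(h_first), "H-C": z_pool(w_first), "H-W": z_pool(x)}


def triplet_attention_params(kernel=7, branches=3):
    """Learnable parameters: per branch, a bias-free 2 -> 1 channel k x k conv
    plus the scale and shift of one batch-norm channel."""
    return branches * (2 * kernel * kernel + 2)


x = [[[c * 100 + h * 10 + w for w in range(4)] for h in range(3)] for c in range(2)]
d = triplet_descriptors(x)
print(d["H-W"][0][0][0], d["H-W"][1][0][0])  # max and mean over C at (h=0, w=0)
print(triplet_attention_params())
```

With only a few hundred learnable parameters under these assumptions, the module adds almost nothing to a detector's size; its cost lies in the extra memory traffic of the rotations.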

4.2. Neck Feature Fusion Network

Slim-Neck
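
Slim-Neck [21] is built from GSConv: a standard convolution produces half of the output channels, a depthwise convolution is applied to that half, and the two halves are concatenated and shuffled so that information from the dense branch mixes into the depthwise one; VoV-GSCSP stacks GSConv inside a cross-stage partial block. The sketch below counts convolution weights (batch-norm parameters ignored) to show why GSConv costs roughly half as much as a standard convolution of the same width. The 5 × 5 depthwise kernel follows the reference GSConv design as we understand it, the shuffle is a generic ShuffleNet-style two-group shuffle whose channel ordering may differ from the reference implementation, and the helper names are illustrative.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weights of a bias-free k x k convolution (BatchNorm parameters ignored)."""
    return k * k * (c_in // groups) * c_out


def gsconv_params(c_in, c_out, k=3, dw_k=5):
    """Standard conv to c_out/2 channels plus a depthwise conv on those channels."""
    half = c_out // 2
    dense = conv_params(c_in, half, k)
    depthwise = conv_params(half, half, dw_k, groups=half)
    return dense + depthwise


def channel_shuffle(channels, groups=2):
    """ShuffleNet-style shuffle: view as groups x (n / groups), transpose, flatten."""
    per_group = len(channels) // groups
    return [channels[g * per_group + i] for i in range(per_group) for g in range(groups)]


std = conv_params(256, 256, 3)
gs = gsconv_params(256, 256)
print(std, gs, round(gs / std, 3))
print(channel_shuffle(list(range(8))))  # dense-branch channels 0-3 interleave with depthwise 4-7
```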

4.3. Head Prediction Network

Dynamic Head
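
Dynamic Head [22] treats the output of an L-level feature pyramid, resized to a common resolution, as a tensor F of shape L × S × C with S = H × W, and applies scale-aware (π_L), spatial-aware (π_S), and task-aware (π_C) attention in sequence. For reference, the formulation given by Dai et al. is restated below.

```latex
\begin{aligned}
W(\mathcal{F}) &= \pi_C\Big(\pi_S\big(\pi_L(\mathcal{F})\cdot\mathcal{F}\big)\cdot\mathcal{F}\Big)\cdot\mathcal{F} \\
\pi_L(\mathcal{F})\cdot\mathcal{F} &= \sigma\Big(f\Big(\frac{1}{SC}\sum_{S,C}\mathcal{F}\Big)\Big)\cdot\mathcal{F},
\qquad \sigma(x) = \max\Big(0, \min\Big(1, \frac{x+1}{2}\Big)\Big) \\
\pi_S(\mathcal{F})\cdot\mathcal{F} &= \frac{1}{L}\sum_{l=1}^{L}\sum_{k=1}^{K} w_{l,k}\,
\mathcal{F}(l;\, p_k + \Delta p_k;\, c)\cdot\Delta m_k \\
\pi_C(\mathcal{F})\cdot\mathcal{F} &= \max\big(\alpha^1(\mathcal{F})\cdot\mathcal{F}_c + \beta^1(\mathcal{F}),\;
\alpha^2(\mathcal{F})\cdot\mathcal{F}_c + \beta^2(\mathcal{F})\big)
\end{aligned}
```

Here f(·) is a linear function implemented as a 1 × 1 convolution, σ is a hard sigmoid, K is the number of sparse sampling locations of a deformable convolution with learned offsets Δp_k and importance scalars Δm_k, and α¹, α², β¹, β² are learned per-channel coefficients (a dynamic ReLU) that switch feature channels on or off for each task.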

4.4. The Coordinate Positioning and Tracking of Crane Hooks
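
One common way to turn per-frame detections into a tracking signal is sketched below as a hypothetical illustration, not the authors' implementation; the `Box` type, the functions `hook_center` and `tracking_error`, and the 0.5 confidence threshold are all assumptions for this example. The sketch selects the most confident hook box, takes its centre in pixel coordinates, and expresses the centre's offset from the image centre normalized to [-1, 1], a quantity that a pan/tilt or zoom controller could drive toward zero to keep the hook in view.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Box:
    """A detected box in pixel coordinates with its confidence score (hypothetical type)."""
    x1: float
    y1: float
    x2: float
    y2: float
    conf: float


def hook_center(boxes: Sequence[Box], min_conf: float = 0.5) -> Optional[Tuple[float, float]]:
    """Centre of the most confident box at or above min_conf, or None if no box qualifies."""
    candidates = [b for b in boxes if b.conf >= min_conf]
    if not candidates:
        return None
    best = max(candidates, key=lambda b: b.conf)
    return ((best.x1 + best.x2) / 2, (best.y1 + best.y2) / 2)


def tracking_error(center: Tuple[float, float], width: int, height: int) -> Tuple[float, float]:
    """Offset of `center` from the image centre, scaled so the frame edges map to -1 and +1."""
    cx, cy = center
    half_w, half_h = width / 2, height / 2
    return ((cx - half_w) / half_w, (cy - half_h) / half_h)


detections = [Box(1000, 600, 1100, 700, 0.91), Box(200, 100, 260, 150, 0.30)]
center = hook_center(detections)
print(center, tracking_error(center, 1920, 1080))
```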

5. Experimental Results and Discussion

5.1. Hardware Configuration and Hyper-Parameter Settings

5.2. Comparative Analysis of Experimental Results

5.2.1. Regarding the Attention Mechanism

5.2.2. Performance Comparison of Different Networks

5.2.3. Ablation Experiment

5.3. Experimental Systems and Testing in Construction Sites

5.3.1. The Construction of Experimental Platforms

5.3.2. Visualization Effect of Object Detection Algorithm

5.3.3. The Implementation of Automatic Hook Tracking

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- [1] Han, Z.; Weng, W.; Zhao, Q.; Ma, X.; Liu, Q.; Huang, Q. Investigation on an Integrated Evacuation Route Planning Method Based on Real-Time Data Acquisition for High-Rise Building Fire. IEEE Trans. Intell. Transp. Syst. 2013, 14, 782–795.

- [2] Xiong, H.; Xiong, Q.; Zhou, B.; Abbas, N.; Kong, Q.; Yuan, C. Field vibration evaluation and dynamics estimation of a super high-rise building under typhoon conditions: Data-model dual driven. J. Civ. Struct. Health Monit. 2023, 13, 235–249.

- [3] Hung, W.-H.; Kang, S.-C. Configurable model for real-time crane erection visualization. Adv. Eng. Softw. 2013, 65, 1–11.

- [4] Gutierrez, R.; Magallon, M.; Hernandez, D.C. Vision-Based System for 3D Tower Crane Monitoring. IEEE Sens. J. 2021, 21, 11935–11945.

- [5] Tong, Z.; Wu, W.; Guo, B.; Zhang, J.; He, Y. Research on vibration damping model of flat-head tower crane system based on particle damping vibration absorber. J. Braz. Soc. Mech. Sci. Eng. 2023, 45, 557.

- [6] Zhang, D. Statistical analysis of safety accident cases of tower cranes from 2014 to 2022. Constr. Saf. 2025, 40, 81–85.

- [7] Chen, Y.; Zeng, Q.; Zheng, X.; Shao, B.; Jin, L. Safety supervision of tower crane operation on construction sites: An evolutionary game analysis. Saf. Sci. 2022, 152, 105578.

- [8] Shapira, A.; Rosenfeld, Y.; Mizrahi, I. Vision System for Tower Cranes. J. Constr. Eng. Manag. 2008, 134, 320–332.

- [9] Zhang, M.; Zhang, Y.; Ji, B.; Ma, C.; Cheng, X. Adaptive sway reduction for tower crane systems with varying cable lengths. Autom. Constr. 2020, 119, 103342.

- [10] Zhong, D.; Lv, H.; Han, J.; Wei, Q. A Practical Application Combining Wireless Sensor Networks and Internet of Things: Safety Management System for Tower Crane Groups. Sensors 2014, 14, 13794–13814.

- [11] Postigo, J.A.; Garaigordobil, A.; Ansola, R.; Canales, J. Topology optimization of Shell–Infill structures with enhanced edge-detection and coating thickness control. Adv. Eng. Softw. 2024, 189, 103587.

- [12] Xiong, X.; Zhang, Y.; Zhou, Q.; Zhao, J. Swing angle detection system of bridge crane based on YOLOv3. Hoisting Conveying Mach. 2021, 4, 30–33.

- [13] Liang, G.; Li, X.; Rao, Y.; Yang, L.; Shang, B. A Transformer Guides YOLOv5 to Identify Illegal Operation of High-altitude Hooks. Electr. Eng. 2023, 10, 1–4.

- [14] Lu, X.; Sun, X.; Tian, Z.; Wang, Y. Research on Dangerous Area Identification Method of Tower Crane Based on Improved YOLOv5s. Water Power 2023, 49, 68–77.

- [15] Sun, X.; Lu, X.; Wang, Y.; He, T.; Tian, Z. Development and Application of Small Object Visual Recognition Algorithm in Assisting Safety Management of Tower Cranes. Buildings 2024, 14, 3728.

- [16] Pang, Y.; Li, Z.; Liu, W.; Li, T.; Wang, N. Small target detection model in overlooking scenes on tower cranes based on improved real-time detection Transformer. J. Comput. Appl. 2024, 44, 3922–3929.

- [17] Pei, J.; Wu, X.; Liu, X.; Gao, L.; Yu, S.; Zheng, X. SGD-YOLOv5: A Small Object Detection Model for Complex Industrial Environments. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; pp. 1–10.

- [18] Xia, J.; Ouyang, H.; Li, S. Fixed-time observer-based back-stepping controller design for tower cranes with mismatched disturbance. Nonlinear Dyn. 2023, 111, 355–367.

- [19] Chen, J.; Kao, S.-h.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 18–22 June 2023; pp. 12021–12031.

- [20] Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to Attend: Convolutional Triplet Attention Module. In Proceedings of the 2021 IEEE Winter Conference on Applications of Computer Vision (WACV), Virtual, 5–9 January 2021; pp. 3138–3147.

- [21] Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 2024, 21, 62.

- [22] Dai, X.; Chen, Y.; Xiao, B.; Chen, D.; Liu, M.; Yuan, L.; Zhang, L. Dynamic Head: Unifying Object Detection Heads with Attentions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 7373–7382.

- [23] Li, J.; Chen, J.; Sheng, B.; Li, P.; Yang, P.; Feng, D.D.; Qi, J. Automatic Detection and Classification System of Domestic Waste via Multimodel Cascaded Convolutional Neural Network. IEEE Trans. Ind. Inform. 2022, 18, 163–173.

- [24] Nguyen, D.H.; Abdel Wahab, M. Damage detection in slab structures based on two-dimensional curvature mode shape method and Faster R-CNN. Adv. Eng. Softw. 2023, 176, 103371.

- [25] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich Feature Hierarchies for Accurate Object Detection and Semantic Segmentation. In Proceedings of the 2014 IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [26] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- [27] Jiang, Q.; Jia, M.; Bi, L.; Zhuang, Z.; Gao, K. Development of a core feature identification application based on the Faster R-CNN algorithm. Eng. Appl. Artif. Intell. 2022, 115, 105200.

- [28] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [29] Li, Y.-l.; Feng, Y.; Zhou, M.-l.; Xiong, X.-c.; Wang, Y.-h.; Qiang, B.-h. DMA-YOLO: Multi-scale object detection method with attention mechanism for aerial images. Vis. Comput. 2024, 40, 4505–4518.

- [30] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. arXiv 2016, arXiv:1512.02325v5.

- [31] Lin, T.-Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2999–3007.

- [32] Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020; pp. 10778–10787.

- [33] Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

- [34] Kumar, D.; Zhang, X. Improving More Instance Segmentation and Better Object Detection in Remote Sensing Imagery Based on Cascade Mask R-CNN. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 4672–4675.

- [35] Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. Int. J. Comput. Vis. 2020, 128, 642–656.

- [36] Zhou, B.; Zi, B.; Qian, S. Dynamics-based nonsingular interval model and luffing angular response field analysis of the DACS with narrowly bounded uncertainty. Nonlinear Dyn. 2017, 90, 2599–2626.

- [37] Zhang, M.; Zhang, Y.; Ouyang, H.; Ma, C.; Cheng, X. Adaptive integral sliding mode control with payload sway reduction for 4-DOF tower crane systems. Nonlinear Dyn. 2020, 99, 2727–2741.

- [38] Zhou, Q.; Ding, S.; Qing, G.; Hu, J. UAV vision detection method for crane surface cracks based on Faster R-CNN and image segmentation. J. Civ. Struct. Health Monit. 2022, 12, 845–855.

- [39] He, J.; He, Z.; Wang, W.; Wang, S.; Li, Z. Visual system design of tower crane based on improved YOLO. In Proceedings of the 2023 4th International Conference on Computing, Networks and Internet of Things (CNIOT ’23), Xiamen, China, 27 July 2023; pp. 798–802.

- [40] Sun, H.; Dong, Y.; Liu, Z.; Sun, L.; Yu, H. Design and implementation of a smart site supervision system based on Internet of Things. In Proceedings of the 2023 IEEE 3rd International Conference on Information Technology, Big Data and Artificial Intelligence (ICIBA), Chongqing, China, 26–28 May 2023; pp. 1331–1337.

- [41] Sleiman, J.-P.; Zankoul, E.; Khoury, H.; Hamzeh, F. Sensor-Based Planning Tool for Tower Crane Anti-Collision Monitoring on Construction Sites. In Proceedings of the Construction Research Congress, San Juan, Puerto Rico, 31 May–2 June 2016; pp. 2624–2632.

- [42] Jiang, W.; Ding, L.; Zhou, C. Digital twin: Stability analysis for tower crane hoisting safety with a scale model. Autom. Constr. 2022, 138, 104257.

- [43] Yu, J.-L.; Zhou, R.-F.; Miao, M.-X.; Huang, H.-Q. An Application of Artificial Neural Networks in Crane Operation Status Monitoring. In Proceedings of the 2015 Chinese Intelligent Automation Conference, Fuzhou, China, 8–10 May 2015; pp. 223–231.

- [44] Wang, J.; Zhang, Q.; Yang, B.; Zhang, B. Vision-Based Automated Recognition and 3D Localization Framework for Tower Cranes Using Far-Field Cameras. Sensors 2023, 23, 4851.

- [45] Wu, D.; Jiang, S.; Zhao, E.; Liu, Y.; Zhu, H.; Wang, W.; Wang, R. Detection of Camellia oleifera Fruit in Complex Scenes by Using YOLOv7 and Data Augmentation. Appl. Sci. 2022, 12, 11318.

- [46] Xiong, J.; Wu, J.; Tang, M.; Xiong, P.; Huang, Y.; Guo, H. Combining YOLO and background subtraction for small dynamic target detection. Vis. Comput. 2024, 41, 481–490.

- [47] Zhang, G.; Tian, Y.; Hao, J.; Zhang, J. A Mongolian-Chinese neural machine translation model based on Transformer’s two-branch gating structure. In Proceedings of the 2022 4th International Conference on Intelligent Information Processing (IIP), Guangzhou, China, 14–16 October 2022; pp. 374–377.

- [48] Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

- [49] Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- [50] Zhou, B.; Khosla, A.; Lapedriza, A.; Oliva, A.; Torralba, A. Learning Deep Features for Discriminative Localization. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2921–2929.

| Algorithm | Backbone | Advantage | Weakness | Applicable Scene | FPS |
|---|---|---|---|---|---|
| R-CNN [25] | AlexNet / VGG16 | Combines a CNN with the candidate-box (region proposal) method | Slow, time-consuming detection; fixed input image size | Object detection | 0.03 / 0.5 |
| Fast R-CNN [26] | VGG16 | Uses RoI Pooling to extract features, saving time | The candidate region selection method is computationally expensive | Object detection | 7 |
| Faster R-CNN [27] | VGG16 / ResNet | Replaces the region proposal method with an RPN, speeding up training and improving accuracy | Complex model | Object detection | 7 / 5 |
| YOLO [28] | Darknet | The YOLO series is real-time, simple, efficient, and widely used | Low accuracy on small targets; struggles with complex backgrounds; the initial model is complex and hard to deploy on resource-limited devices | Object detection, real-time video analysis | 46 |
| SSD [30] | VGG16 | Multi-scale anchor box detection; efficient | Difficult to converge; low detection accuracy | Multi-scale object detection, real-time video analysis | 59 |
| RetinaNet [31] | ResNet | Focal Loss addresses class imbalance | Sample imbalance still arises during dense-sample training | Multi-scale object detection | 5.4 |
| CornerNet [35] | Hourglass-52/104 | Anchor-free; reformulates detection as corner-point detection with good localization | Lacks internal object features; corner points of the same object must be grouped, which raises computational complexity | Object detection | 300 |
| CenterNet [33] | Hourglass-52/104 | Anchor-free; reformulates detection as keypoint-triplet detection with fast inference | Relies on pre- and post-processing; center-point localization of small targets is not accurate enough; limited ability in complex scenes | Object detection | 270 |
| Cascade R-CNN [34] | ResNet + FPN | The cascade structure improves detection | Large computational overhead | Object detection | 0.41 |
| EfficientDet D1~D7 [32] | EfficientNet | Compound scaling and the BiFPN network give excellent multi-scale detection performance | Complex model; long training time | Mobile devices, embedded devices | 24 |

| Category | Approach | Application Scenarios | Advantage | Weakness |
|---|---|---|---|---|
| Sensor-based safety monitoring | Dynamic modeling with uncertain interval parameters | Prediction of the luffing (pitch) response for dual-tower operation | Precisely determines the upper and lower bounds of the luffing angle response, reducing collision risk | Only applicable to narrow sites with two towers; computationally complex |
| | Adaptive integral sliding mode control | Anti-sway control of tower crane loads | Continuous, chattering-free control with strong disturbance rejection | Complex control algorithm with a heavy computational burden |
| | Ultrasonic sensor positioning | Real-time monitoring of hook position | Real-time and easy to deploy | Strongly affected by environmental interference; limited accuracy |
| Vision-based safety monitoring | UAV image-based detection | Crack detection on crane surfaces | Detects cracks in hard-to-reach areas against complex backgrounds | Reliance on drone equipment is costly |
| | Variable-focus industrial camera | Video surveillance, zoom-based hook tracking | High precision; adapts to different scenarios | Expensive |
| | Digital twin technology | Crane status monitoring | Visualization; real-time state reflection | Complex implementation; relies on multi-source data |
| | Vision algorithms | Real-time identification and automatic positioning of crane hooks | High-precision crane attitude estimation meets construction requirements | Geometric features are hard to extract when the boom occludes large areas, degrading detection |
| | FLE-YOLO (ours) | Real-time detection and tracking of crane hooks | Lightweight network; efficient small-target detection; suitable for hardware deployment | Detection performance degrades under extreme occlusion |

| Name | Experimental Configuration |
|---|---|
| Operating system | Windows 11 / Ubuntu 22.04.1 |
| Deep learning framework | PyTorch 1.13.1 |
| CPU | Intel(R) Core(TM) i7-13700H |
| GPU | NVIDIA GeForce RTX 3090 (24 GB) × 2 |
| Programming language | Python 3.8 |
| CUDA | 11.6 |
| Platform | PyCharm 2022 |
| Optimizer | SGD |
| Batch size | 4 |
| Epochs | 100 |
| Learning rate | 0.01 |
| Momentum | 0.937 |
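
The configuration table lists the training hyper-parameters but not the training framework. Assuming an Ultralytics-style pipeline (an assumption suggested only by the YOLOv8 baseline, not stated in the source), the settings could be expressed as keyword arguments as in the sketch below. The dataset file `hook.yaml`, the default model file name, and the `train` helper are hypothetical, and FLE-YOLO's custom modules would need their own model definition.

```python
# Training settings from the configuration table, keyed by the argument names of
# the Ultralytics `model.train()` API (assumed framework, not stated in the source).
TRAIN_ARGS = {
    "data": "hook.yaml",  # hypothetical dataset description file
    "epochs": 100,
    "batch": 4,
    "optimizer": "SGD",
    "lr0": 0.01,          # initial learning rate
    "momentum": 0.937,
    "device": "0,1",      # the two RTX 3090 GPUs
}


def train(model_cfg="yolov8s.yaml"):
    """Start training; needs the `ultralytics` package, imported lazily so this
    module can be loaded without it. `model_cfg` is a placeholder: FLE-YOLO
    would need a custom model YAML that registers its extra modules."""
    from ultralytics import YOLO
    return YOLO(model_cfg).train(**TRAIN_ARGS)
```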

| Dataset | Classes | Model | P | mAP50 | FPS | FLOPs/G | Parameters/M |
|---|---|---|---|---|---|---|---|
| VOC2012 | 20 | YOLOv8 | 0.781 | 0.741 | 111.11 | 28.5 | 11.133 |
| | 20 | YOLOv12s | 0.791 | 0.756 | 208.33 | 21.3 | 9.238 |
| | 20 | FLE-YOLO | 0.801 | 0.789 | 114.942 | 19.4 | 8.889 |
| COCO2017 | 80 | YOLOv8 | 0.663 | 0.5776 | 102.618 | 28.6 | 11.157 |
| | 80 | YOLOv12s | 0.689 | 0.606 | 208.33 | 24.1 | 9.261 |
| | 80 | FLE-YOLO | 0.672 | 0.5777 | 106.382 | 19.6 | 8.809 |

| Model | Backbone | P | mAP50 | FPS | FLOPs/G | Parameters/M |
|---|---|---|---|---|---|---|
| YOLOv3-SPP | Darknet-53 | 0.949 | 0.945 | 29.069 | 293.1 | 104.71 |
| YOLOv5s | CSP-Darknet-53 | 0.957 | 0.935 | 101.01 | 23.8 | 9.112 |
| YOLOv6s | RepVGG | 0.966 | 0.946 | 62.5 | 44 | 16.297 |
| YOLOv8s | C2f-SPPF-Darknet-53 | 0.973 | 0.977 | 95.238 | 28.4 | 11.125 |
| YOLOv9s | GELAN | 0.966 | 0.952 | 136.986 | 26.7 | 7.167 |
| YOLOv10s | CSP-Darknet-53 | 0.970 | 0.971 | 208.333 | 21.4 | 7.218 |
| YOLOv11s | C3k2 | 0.962 | 0.964 | 161.29 | 21.3 | 9.413 |
| YOLOv12s | R-ELAN | 0.951 | 0.960 | 147.058 | 21.2 | 9.231 |
| FLE-YOLO | FasterNet | 0.973 | 0.983 | 142.857 | 19.4 | 7.588 |
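
The headline figures in contribution (3) can be recomputed from the YOLOv8s and FLE-YOLO rows of the table above. The short sketch below copies those values, reports relative changes for FLOPs, parameters, and FPS, and reports the mAP50 gain in percentage points because that metric is already a proportion; the helper name `pct_change` is illustrative.

```python
# (FLOPs in G, parameters in M, FPS, mAP50) copied from the table above.
yolov8s = {"flops": 28.4, "params": 11.125, "fps": 95.238, "map50": 0.977}
fle_yolo = {"flops": 19.4, "params": 7.588, "fps": 142.857, "map50": 0.983}


def pct_change(old, new):
    """Relative change in percent (negative values are reductions)."""
    return 100.0 * (new - old) / old


for key in ("flops", "params", "fps"):
    print(f"{key}: {pct_change(yolov8s[key], fle_yolo[key]):+.1f}%")
# mAP50 is already a proportion, so report its gain in percentage points.
print(f"map50: {100 * (fle_yolo['map50'] - yolov8s['map50']):+.1f} pp")
```

On the rounded table entries this gives roughly a 32% reduction in both FLOPs and parameters and a 50% increase in FPS.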

| FasterNet | Triplet Attention | Slim-Neck | Dyhead | P | AP50 | FPS | FLOPs/G | Parameters/M |
|---|---|---|---|---|---|---|---|---|
| - | - | - | - | 0.973 | 0.977 | 95.238 | 28.4 | 11.125 |
| √ | - | - | - | 0.948 | 0.970 | 151.515 | 21.7 | 8.616 |
| - | √ | - | - | 0.983 | 0.974 | 192.307 | 28.4 | 11.126 |
| - | - | √ | - | 0.975 | 0.977 | 136.98 | 25.1 | 10.265 |
| - | - | - | √ | 0.977 | 0.963 | 125 | 28.1 | 10.851 |
| √ | √ | √ | √ | 0.973 | 0.983 | 142.041 | 19.4 | 7.588 |
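
Most FPS entries in these tables appear to be reciprocals of per-image latencies rounded to 0.1 ms (for example, 1000/10.5 ≈ 95.238 and 1000/5.2 ≈ 192.307). The sketch below converts the ablation table's FPS column back to milliseconds, which can be easier to compare; the row labels are descriptive names chosen for this example.

```python
def latency_ms(fps):
    """Per-image latency in milliseconds implied by a frames-per-second figure."""
    return 1000.0 / fps


# FPS column of the ablation table (baseline first, full model last).
ablation_fps = {
    "baseline (YOLOv8s)": 95.238,
    "+ FasterNet": 151.515,
    "+ Triplet Attention": 192.307,
    "+ Slim-Neck": 136.98,
    "+ Dyhead": 125,
    "FLE-YOLO (all four)": 142.041,
}

for name, fps in ablation_fps.items():
    print(f"{name:>20}: {latency_ms(fps):.1f} ms")
```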

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Hu, X.; Wang, X.; Chang, Y.; Xiao, J.; Cheng, H.; Abdelhad, F. FLE-YOLO: A Faster, Lighter, and More Efficient Strategy for Autonomous Tower Crane Hook Detection. Appl. Sci. 2025, 15, 5364. https://doi.org/10.3390/app15105364

Hu X, Wang X, Chang Y, Xiao J, Cheng H, Abdelhad F. FLE-YOLO: A Faster, Lighter, and More Efficient Strategy for Autonomous Tower Crane Hook Detection. Applied Sciences. 2025; 15(10):5364. https://doi.org/10.3390/app15105364

Chicago/Turabian Style: Hu, Xin, Xiyu Wang, Yashu Chang, Jian Xiao, Hongliang Cheng, and Firdaousse Abdelhad. 2025. "FLE-YOLO: A Faster, Lighter, and More Efficient Strategy for Autonomous Tower Crane Hook Detection" Applied Sciences 15, no. 10: 5364. https://doi.org/10.3390/app15105364

APA Style: Hu, X., Wang, X., Chang, Y., Xiao, J., Cheng, H., & Abdelhad, F. (2025). FLE-YOLO: A Faster, Lighter, and More Efficient Strategy for Autonomous Tower Crane Hook Detection. Applied Sciences, 15(10), 5364. https://doi.org/10.3390/app15105364