1. Introduction

Human Action Recognition (HAR) is currently widely applied to recognize complex movements of athletes, for example to predict the accuracy of movements or to detect objects in images or videos [1]. Owing to the large amount of data gathered via YouTube, traffic cameras, social media, and motion capture systems, as well as data stored in publicly available datasets, this area of Computer Vision (CV) has sufficient sources to apply deep learning (DL) models to enhance athletes' performance. These modern techniques may also encourage people to learn and master sports skills. Recognition of sports movements plays a pivotal role in monitoring athletes during training, matches, and competitions. Another aspect of HAR is verifying how sport movements are performed. For these tasks, the recognition of specific moves is essential. The development of vision systems allows increasingly accurate data to be obtained, which is of great importance in sports analysis.

The main challenges of HAR lie in detecting sport actions and activities, which are further used to monitor a player's performance. Recent studies have focused on fusing the skeleton structure from sensor-based data with other representations, such as joint positions and velocities [2], kinematic data [3], and trajectories [4,5,6,7]. These approaches provide topological relations that are further utilized in the recognition process, and the fusion of motion capture data provides a better representation of this kind of data. This study therefore focuses on the fundamental issues of high-accuracy tennis movement recognition. DL models have difficulty accurately identifying both short-term and long-term dynamic characteristics, as well as extracting joint information at different scales from motion capture data, especially for very dynamic movements. In response to these challenges, the authors propose a new model for recognizing the main tennis movements. The main contributions are as follows:

Three motion capture datasets containing basic tennis strokes were selected: THETIS, Tennis-Mocap, and 3DTennisDS. The first contains data recorded with a markerless system, while the latter two were recorded with optical systems using retro-reflective markers. The data contain the positions of participants performing individual tennis movements. The 3DTennisDS dataset additionally contains the position of the tennis racket.

Participant data obtained from the three datasets were unified and simplified to the THETIS skeleton model in order to create consistent input data for the model.

The following tennis movements were chosen for recognition: forehand, backhand, volley forehand, and volley backhand.

The authors propose a new model to recognize these tennis moves: the Feature Fusion Graph Consecutive-Attention Network (FFGCAN). It comprises seven basic blocks built from two types of module: the Adaptive Consecutive Attention Module and Graph Self-Attention. Additionally, a temporal convolutional network within the model captures short-term temporal dependencies.

The FFGCAN was evaluated using the following metrics: accuracy, precision, recall, F1-score, and confusion matrices. The training process was also analyzed using learning and loss curves.

To visualize which features are most essential for tennis movement recognition, Gradient-weighted Class Activation Mapping (Grad-CAM) was applied. This CV technique indicates the input data most relevant to the model's predictions.

2. Related Works

Tennis stroke recognition has attracted considerable interest recently. Many studies on tennis movement detection have been performed using various types of data, such as motion capture, images, video, and sensor data. For these purposes, both publicly available datasets and data captured by the authors themselves are used. Many Machine Learning and Deep Learning models have been proposed for tennis stroke recognition.

Motion capture data of movements have been obtained using various systems, both markerless and marker-based. The THETIS dataset was created using the Microsoft Kinect system. It contains twelve tennis moves, including forehand, backhand, volley, service, and smash, together with their variants. Many studies on tennis stroke recognition have used this dataset. A five-layer deep historical Long Short-Term Memory (LSTM) network was combined with the Inception V3 model to classify tennis moves from video sequences [8]. All captured moves were detected using InceptionResNetV2 and ResNet152V2. A CNN-LSTM network was applied for feature extraction from RGB video, with Xception used for spatial feature extraction [9]. A three-layer LSTM model was also proposed to classify these moves. In [10,11], the Inception network was chosen for feature extraction from RGB videos. An LSTM model with channel and attention modules was also applied to recognize six tennis moves [12]. Linear-Chain Conditional Random Fields and a Support Vector Machine (SVM) were also used to classify tennis moves [13]. The level of tennis players was distinguished on the basis of twelve strokes using k-NN classification with Dynamic Time Warping (DTW) [14]. Tennis forehand and backhand swings were detected using a time-series CNN based on data from an MPU9250 sensor [15]. A Hilbert-embedding-based framework (EHECCO) was used to extract nonlinear dependencies for time-series classification of motion capture data from the Tennis-Mocap, CMU, and HDM05 datasets [16]. A multimodal solution, the Adaptive Semantic-Enhanced Convolutional Neural Network, was proposed for complex action classification from tennis data using the THETIS and Tennis-Mocap datasets [17]. The model integrates a Large Language Model to provide an action semantic enhancement mechanism.

Apart from the available datasets, many studies have used data captured specifically for the research. Forehand and backhand swings were analyzed using a Hidden Markov Model based on hand positions [18]. Forehand, backhand, serve, and volley were detected from inertial sensor data from the PIQ Robot sports tracker using Learning Vector Quantization [19]. A Deep Neural Network was applied for serve, backhand, and forehand recognition using tennis racket orientation gathered from a wearable SensorTile [20]. Decision Tree (DT), SVM, Neural Network (NN), and k-NN methods were applied to classify six tennis movements [21]. An SVM with a radial basis function kernel and k-NN methods were chosen for tennis serve, forehand, and backhand recognition [22]. That study involved data gathered from an eight-camera video system and an IMU sensor.

A series of studies on the recognition of forehand, backhand, volley forehand, and volley backhand strokes from motion capture data, recorded from tennis players holding a tennis racket, can be found in [23,24,25,26,27]. The movements, performed with and without a tennis ball, were gathered into the 3DTennisDS dataset [24]. An adjusted Spatial-Temporal Graph Convolutional Network (ST-GCN) with active features was applied to images presenting the model of a tennis player holding a tennis racket, reflecting the silhouette and the racket position for these moves [23]. Moreover, fuzzification of the input data with a trapezoidal function improved the classification accuracy. The same model was applied for forehand, backhand, and volley recognition based on data from three datasets: 3DTennisDS, THETIS, and Tennis-Mocap [24]. An Attention Temporal Graph Convolutional Network, combining an attention model defined as an encoder–decoder, a Bidirectional Recurrent Neural Network for extracting temporal features, and Graph Convolutional Networks for calculating spatial features, was proposed to recognize these tennis strokes from the 3DTennisDS dataset [25]. Another modification of the Attention Temporal Graph Convolutional Network, with a Gated Recurrent Unit for extracting temporal attention, was described in [26] for classifying forehand and backhand strokes together with the phases of these moves. A Dual Attention Graph Convolutional Network combining two attention modules, a Graph Convolutional Network for extracting spatial features and an LSTM for temporal features, was applied to four tennis strokes from the 3DTennisDS dataset [27]. All of these ST-GCN-based models achieved high performance.

3. Problem Definition

Tennis stroke classification involves examining continuous time-series recordings of a tennis player's body movements in order to assign each recording to one of the defined stroke categories. The 2D poses of a person with J joints over T frames can be expressed mathematically as in Equation (1) [28]:

$$P = \{p_1, p_2, \ldots, p_T\}, \quad p_i \in \mathbb{R}^{J \times 2} \tag{1}$$

Here, each $p_i$ corresponds to the 2D coordinates of all J joints at frame i. Given these observed sequences of 2D player poses, the primary goal in tennis motion classification is to develop a parametric model capable of accurately assigning each sequence to one of several predefined action or stroke classes. Formally, this can be represented by Equation (2):

$$\hat{y} = \arg\max_{y \in \mathcal{Y}} \; p(y \mid P; \theta) \tag{2}$$

where $\hat{y}$ is the predicted stroke class, θ denotes the model parameters, and $\mathcal{Y}$ represents the set of all possible stroke classes. We use spatio-temporal graphs to represent the observed skeleton poses at J joints for one person in T frames. At each frame t, we characterize a spatio-temporal graph $G_t = (V_t, E_t)$. Here, the set of vertices $V_t = \{v_{t,1}, \ldots, v_{t,J}\}$ indicates the skeletal joints, and the set of edges $E_t$ shows the connections between these joints. Positional information for each node at time t is stored. The edges are defined using an adjacency matrix $A \in \{0, 1\}^{J \times J}$, where entries $A_{ij} = 1$ denote connected joints and $A_{ij} = 0$ denote disconnected ones. Thus, the input to the classification model is represented as $(X, A)$, with node features $X \in \mathbb{R}^{T \times J \times 2}$.
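As an illustration of the adjacency matrix A with entries in {0, 1}, the following minimal sketch builds it from a list of bones. The joint names and bone list are hypothetical, modeled on a Kinect/OpenNI-style 15-joint layout; the actual THETIS joint ordering may differ.

```python
# Hypothetical 15-joint layout (Kinect/OpenNI style); the real THETIS order may differ.
JOINTS = [
    "head", "neck", "torso",
    "l_shoulder", "l_elbow", "l_hand",
    "r_shoulder", "r_elbow", "r_hand",
    "l_hip", "l_knee", "l_foot",
    "r_hip", "r_knee", "r_foot",
]

# Assumed bone list: pairs of physically connected joints.
BONES = [
    ("head", "neck"), ("neck", "torso"),
    ("neck", "l_shoulder"), ("l_shoulder", "l_elbow"), ("l_elbow", "l_hand"),
    ("neck", "r_shoulder"), ("r_shoulder", "r_elbow"), ("r_elbow", "r_hand"),
    ("torso", "l_hip"), ("l_hip", "l_knee"), ("l_knee", "l_foot"),
    ("torso", "r_hip"), ("r_hip", "r_knee"), ("r_knee", "r_foot"),
]


def build_adjacency(joints, bones):
    """Return a symmetric J x J matrix with A[i][j] = 1 for connected joints, 0 otherwise."""
    index = {name: i for i, name in enumerate(joints)}
    size = len(joints)
    A = [[0] * size for _ in range(size)]
    for a, b in bones:
        i, j = index[a], index[b]
        A[i][j] = 1
        A[j][i] = 1  # skeleton edges are undirected
    return A


A = build_adjacency(JOINTS, BONES)
print(sum(map(sum, A)))  # 14 undirected bones, 2 entries each -> 28
```

Storing each undirected bone twice keeps A symmetric, which is what the normalized graph convolutions discussed later assume.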

4. Preliminaries

In skeleton-based action classification, human skeletal data are usually represented as a graph $G = (V, E)$. Here, $V = \{v_1, \ldots, v_N\}$ denotes a set containing N joint nodes. Each node has C features (for example, spatial coordinates or velocities of joints). The edge set E represents connections between the nodes and can be expressed through an adjacency matrix $A \in \mathbb{R}^{N \times N}$. Graph Convolutional Networks (GCNs) have become popular in recent papers because they perform well in skeleton-based action classification [26,29,30,31]. Usually, a skeleton sequence used for classification is represented as a 3D tensor $X \in \mathbb{R}^{C \times T \times N}$, where C denotes the channel dimension, T the number of frames, and N the number of joint nodes. The fundamental relationship for classic GCNs is defined by Equation (3):

$$X_{\text{out}} = \sigma\left(\hat{A} X W\right) \tag{3}$$

Here, $\hat{A} = D^{-\frac{1}{2}} A D^{-\frac{1}{2}}$ denotes the adjacency matrix A normalized using the diagonal degree matrix D, σ(·) is any nonlinear activation function, such as ReLU or sigmoid, and W is a learnable weight matrix that combines features across channels [31,32].
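A single graph convolution can be sketched in plain Python for one frame. This is a minimal illustration, assuming the symmetric normalization $D^{-1/2} A D^{-1/2}$ and ReLU as the nonlinearity; in the model, the same operation is applied to every frame of the C × T × N tensor, and W would be learned rather than fixed.

```python
import math


def normalize_adjacency(A):
    """Return D^{-1/2} A D^{-1/2}, where D is the diagonal degree matrix of A."""
    deg = [sum(row) for row in A]
    inv_sqrt = [1.0 / math.sqrt(d) if d > 0 else 0.0 for d in deg]
    n = len(A)
    return [[inv_sqrt[i] * A[i][j] * inv_sqrt[j] for j in range(n)] for i in range(n)]


def matmul(X, Y):
    """Plain nested-list matrix product X @ Y."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def gcn_layer(A, X, W):
    """One graph convolution for a single frame: ReLU(A_hat @ X @ W).

    A: N x N adjacency, X: N x C_in joint features, W: C_in x C_out weights.
    """
    A_hat = normalize_adjacency(A)
    H = matmul(matmul(A_hat, X), W)
    return [[max(0.0, v) for v in row] for row in H]  # ReLU nonlinearity


# Toy 3-joint chain (e.g., shoulder-elbow-hand) with one feature per joint.
A = [[0, 1, 0], [1, 0, 1], [0, 1, 0]]
X = [[1.0], [2.0], [3.0]]
W = [[1.0]]
out = gcn_layer(A, X, W)  # approximately [[1.414], [2.828], [1.414]]
```

Without self-loops, each joint aggregates only its neighbors' features; many GCN variants add the identity to A so that a joint also keeps its own features.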

5. Materials and Methods

In the proposed model, feature fusion is described as combining multiple complementary elements of a single skeleton into a richer description. In practice, three feature families are combined: absolute joint coordinates, bone vectors defined as differences between neighboring joints, and motion vectors calculated as differences in position between subsequent frames. Each of these modalities is first passed through a separate lightweight convolutional projection, which allows patterns specific to each description space to be encoded without mutual suppression of information. The resulting tensors are then concatenated along the channel dimension, normalized with BatchNorm, and fed into the subsequent graph-attention blocks.
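The three feature families can be derived from raw joint coordinates as sketched below. This is a minimal illustration under stated assumptions: the parent list (mapping each joint to a neighboring joint, with the root pointing to itself) is hypothetical, the last frame's motion vector is zero-padded so that all streams keep T frames, and the per-stream convolutional projections and BatchNorm used in the model are omitted, so only the raw channel concatenation is shown.

```python
def bone_vectors(seq, parents):
    """seq: T x J x D joint coordinates -> T x J x D bone vectors (joint minus its parent)."""
    return [[[c - p for c, p in zip(frame[j], frame[parents[j]])] for j in range(len(frame))]
            for frame in seq]


def motion_vectors(seq):
    """Frame-to-frame displacement; the last frame is zero-padded so the length stays T."""
    T = len(seq)
    out = []
    for t in range(T):
        if t + 1 < T:
            out.append([[b - a for a, b in zip(seq[t][j], seq[t + 1][j])]
                        for j in range(len(seq[t]))])
        else:
            out.append([[0.0] * len(joint) for joint in seq[t]])
    return out


def fuse_channels(*streams):
    """Concatenate the per-joint feature vectors of all streams along the channel axis."""
    T, J = len(streams[0]), len(streams[0][0])
    return [[[v for s in streams for v in s[t][j]] for j in range(J)] for t in range(T)]


# Toy example: 2 frames, 2 joints in 2D; joint 0 is the root (its own parent).
seq = [[[0.0, 0.0], [1.0, 0.0]], [[0.0, 1.0], [1.0, 2.0]]]
parents = [0, 0]
fused = fuse_channels(seq, bone_vectors(seq, parents), motion_vectors(seq))
# fused[0][1] == [1.0, 0.0, 1.0, 0.0, 0.0, 2.0]  (joint | bone | motion)
```

With 2D input, each joint ends up with 6 channels (2 per stream) before any learned projection.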

The model produces two topology (adjacency) maps: a static one, learned globally, and a dynamic one, computed for each frame using Adaptive Consecutive Attention. Both maps are weighted by learned coefficients and summed, yielding a map of the current, motion-dependent connections between joints. The features are then multiplied by their corresponding topology maps, so that the result preserves the time–node alignment and takes the context of whole-body motion into account. This scheme allows the network to treat the same pose differently depending on the direction and speed of motion.

5.1. Datasets

This study incorporates three datasets containing tennis movements, registered using markerless and marker-based motion capture systems. The data for the study were unified and simplified according to the THETIS dataset, in order to standardize the input data to the model.

5.1.1. THETIS

The THETIS (Three dimEnsional TennIs Shot) dataset was created in 2013 [

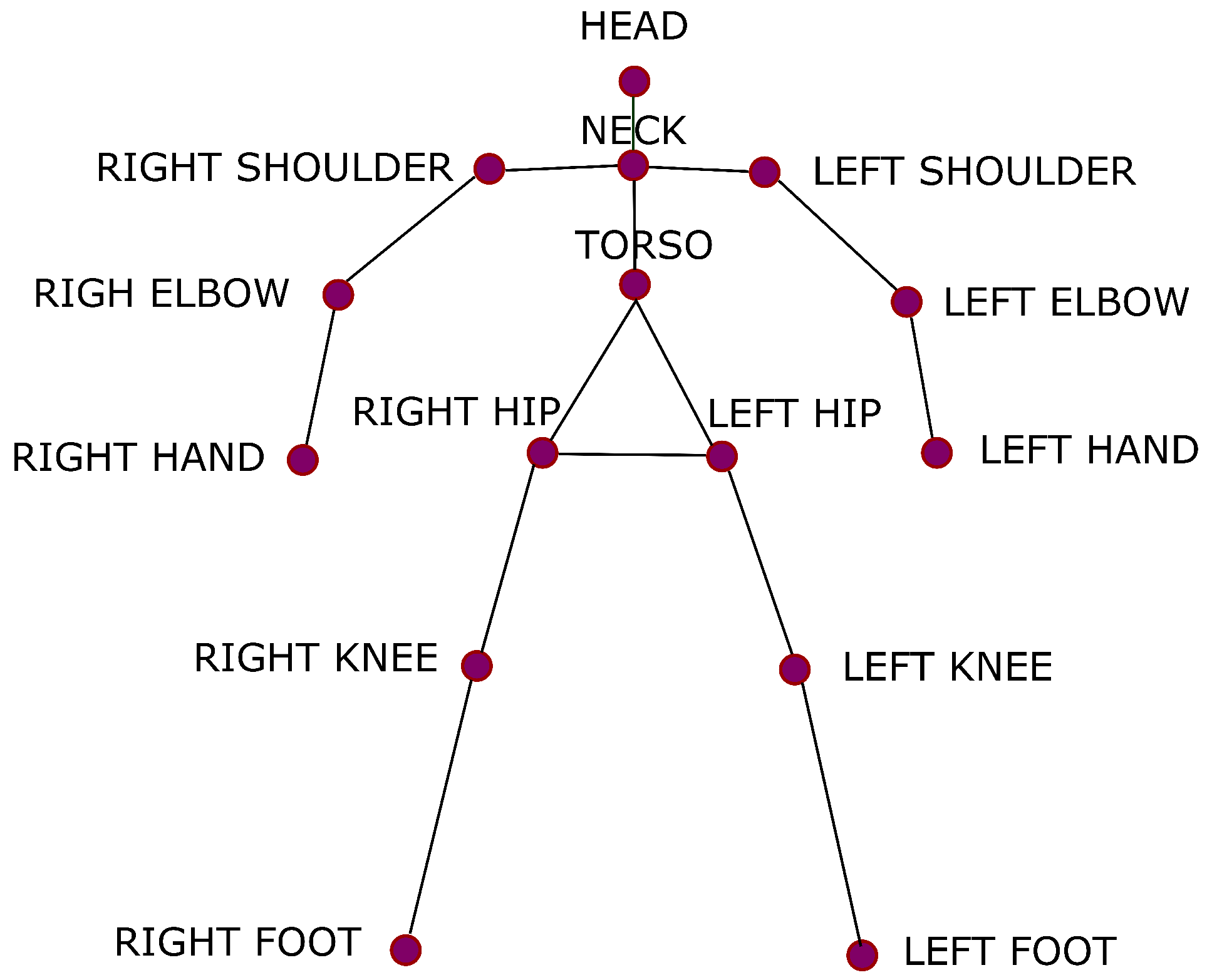

33]. Twelve tennis strokes were registered by Microsoft’s Kinect motion capture system. They were performed by thirty-one amateurs and twenty-four professional tennis players. The data collection included twelve movements: backhand (one-handed, two-handed, slice), volley (backhand and forehand), forehand (flat, open stands, slice), service (flat, kick, slice) and smash. The following data formats are available in the dataset: ONI files, depth and RGB videos, silhouette, skeleton 2D and 3D videos, and skeleton joints. THETIS is a very well-known dataset that has been incorporated in many papers recently. In this study, the authors focus on ONI files that reflect a model of the tennis player. The model involves 15 points (

Figure 1).

5.1.2. Tennis-Mocap

The Tennis-Mocap dataset was created in 2020 [16]. It consists of motion capture data of 17 athletes from the Caldas-Colombia tennis league. Five of the participants were classified as high performers and the others as regular performers. Their movements were recorded using an Optitrack Flex V100 system with six cameras at a frequency of 100 Hz. Thirty-four markers, defined by the Arena software, were attached to collect information about their body joints. The recordings follow the Biovision Hierarchy (BVH) motion capture format. All participants were instructed to hit the ball for thirty seconds as if they were playing a match. Each tennis movement was recorded separately. They performed a series of the following strokes: groundstrokes (forehand and backhand), serve, volleys, and smash.

5.1.3. 3DTennisDS

The 3DTennisDS dataset was created in 2024 [24]. It consists of the motion data of ten tennis players. Tennis movements, including forehand, backhand, volley forehand, and volley backhand, were recorded using an 8-camera Vicon motion capture system at a frequency of 100 Hz. Both the movements of the tennis players and the orientation of the tennis racket during the strokes were recorded. Thirty-nine retro-reflective markers were attached to each participant's body according to the Plug-in Gait biomechanical model. The participants, former professional tennis players, performed the moves while constantly moving, running around a bollard placed on the floor, so that their movements were as similar as possible to those on a tennis court. The motion data were stored in .csv files. Tennis racket data were also incorporated into this study.

5.2. Graph Self-Attention

According to [34], attention is defined in terms of Query (Q), Key (K), and Value (V) matrices. The first step is to derive these matrices through trainable linear transformations (Figure 2). In self-attention, Q, K, and V are linear projections of the same input feature X.

The core of the self-attention mechanism is its ability to model correlations among distinct parts of the input. Formally, the self-attention procedure applied to input data X can be expressed as in Equation (4):

$$\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\left(\frac{Q K^{\top}}{\sqrt{d_k}}\right) V \tag{4}$$

where $\sqrt{d_k}$ is the scaling factor and $d_k$ is the dimension of the key vectors.
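Scaled dot-product self-attention can be sketched over a small set of tokens, which in the graph self-attention setting would be the skeletal joints. This is a plain-Python illustration of the standard formula, not the authors' implementation; the projection matrices Wq, Wk, and Wv are fixed here but learned in practice.

```python
import math


def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def transpose(M):
    return [list(col) for col in zip(*M)]


def softmax(row):
    m = max(row)  # subtract the maximum for numerical stability
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X, Wq, Wk, Wv):
    """Scaled dot-product self-attention over N tokens (e.g., joints).

    X: N x C input, Wq/Wk: C x d_k, Wv: C x d_v.
    Returns (output N x d_v, attention weights N x N).
    """
    Q, K, V = matmul(X, Wq), matmul(X, Wk), matmul(X, Wv)
    d_k = len(Q[0])
    scores = matmul(Q, transpose(K))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights


X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
I2 = [[1.0, 0.0], [0.0, 1.0]]
out, weights = self_attention(X, I2, I2, I2)
```

Each row of the weight matrix sums to one, so every output token is a convex combination of the value vectors; the $\sqrt{d_k}$ division keeps the softmax from saturating as the key dimension grows.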

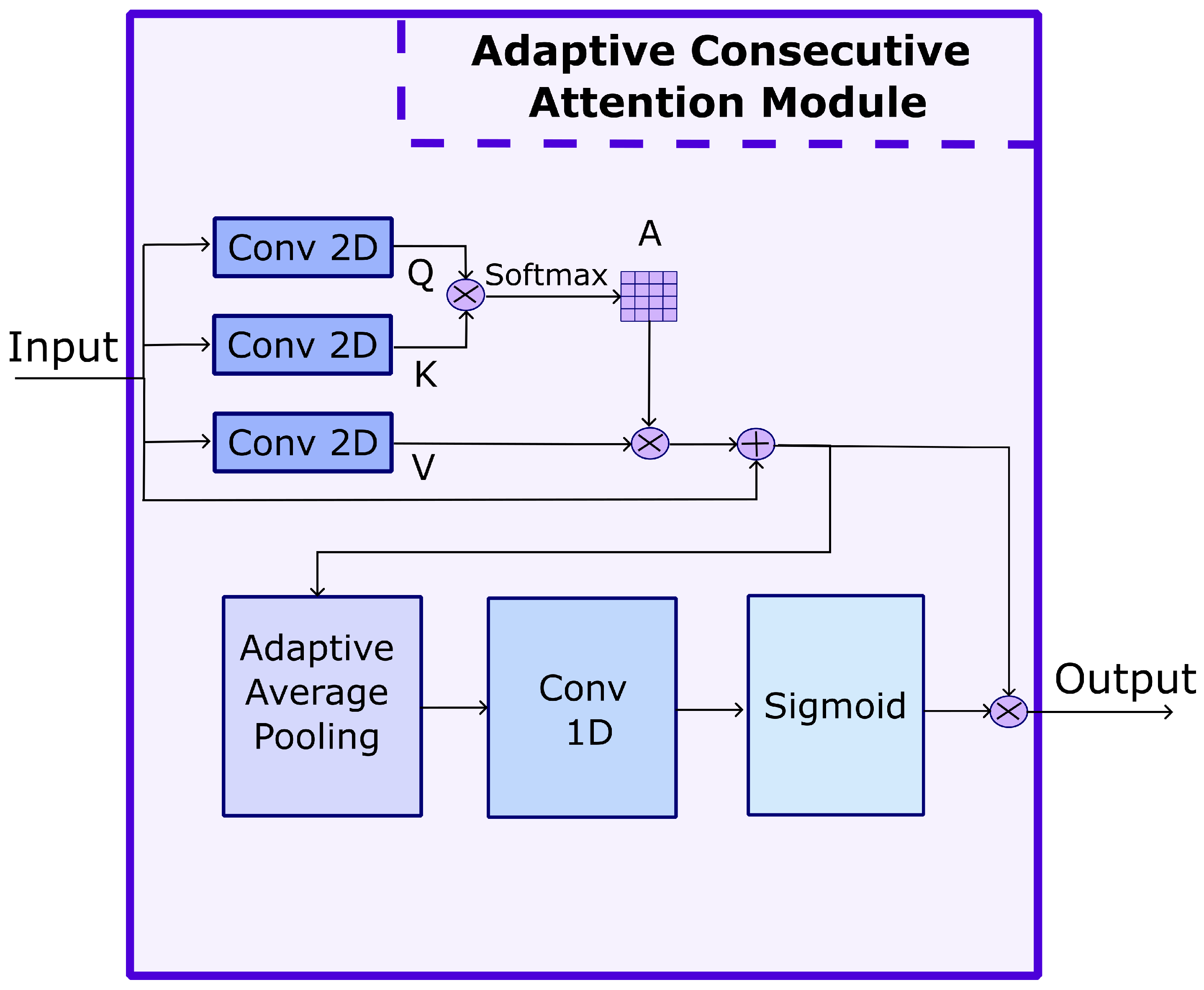

5.3. Adaptive Consecutive Attention

Positional information, that is, where features are located in the feature map of the input tensor, is crucial for understanding the context and structure of pose sequences, while the feature channels, which encapsulate dependencies between different channels, are essential for decoding complex patterns and relationships. Inspired by [29,35,36], a dedicated adaptive consecutive attention module (ACAM) is proposed. The ACAM captures both positional and channel-related information of the input features. The overall goal of this module is to systematically integrate attention mechanisms to enhance the representational capacity of the network, focusing on spatial relationships and channel dependencies. The overall structure of the ACAM is depicted in Figure 3.

In the ACAM, the input $I \in \mathbb{R}^{C \times T \times L}$ first passes through three $1 \times 1$ convolution layers $f_Q$, $f_K$, and $f_V$ to obtain new feature maps for the query (Q), key (K), and value (V), as in Equation (5):

$$Q = f_Q(I), \quad K = f_K(I), \quad V = f_V(I) \tag{5}$$

It should be emphasized that $Q, K, V \in \mathbb{R}^{C \times T \times L}$, where L denotes the learned features of the joints in the skeleton data. Because the attention matrix is determined in two-dimensional space, the ACAM input is reshaped to $\mathbb{R}^{C \times M}$, where $M = T \times L$. Based on the module input, the attention map $A \in \mathbb{R}^{M \times M}$ is defined as in Equation (6):

$$A_{nm} = \frac{\exp\left(Q_m \cdot K_n\right)}{\sum_{m'=1}^{M} \exp\left(Q_{m'} \cdot K_n\right)} \tag{6}$$

where $Q_m, K_n \in \mathbb{R}^{C}$ are columns of the reshaped Q and K. $A_{nm}$ measures the impact of position m on position n. Then, V is multiplied by the transpose of A, and the result is reshaped to $\mathbb{R}^{C \times T \times L}$. This result is multiplied by a scale parameter α, and an element-wise sum with the input features I yields $O \in \mathbb{R}^{C \times T \times L}$, as in Equation (7):

$$O_n = \alpha \sum_{m=1}^{M} A_{nm} V_m + I_n \tag{7}$$

Then, an adaptive gate is added that dynamically scales the attention mask, allowing the model to adjust the impact of the attention mechanism, as in Equation (8).

Finally, the gated result $\tilde{O}$ is multiplied element-wise (⊙) with the original input I to obtain the final output Y, as in Equation (9):

$$Y = \tilde{O} \odot I \tag{9}$$

The consecutive attention module allows the network to focus on the relevant components of the motion capture data. By applying two different attention mechanisms consecutively, the module operates across both spatial and channel dimensions, exploiting the relationships between features from different channels. This contextual integration enriches the network's understanding of tennis motion data and yields a more informative representation.
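The position-attention step of the ACAM (the attention map over the M = T × L positions followed by the α-scaled residual sum) can be sketched as follows. This is a minimal illustration, assuming that the Q, K, and V projections are 1 × 1 convolutions, which act as channel-mixing matrices applied at every position. The adaptive gate and the final element-wise product are not sketched because their exact form is not specified here.

```python
import math


def conv1x1(W, X):
    """A 1 x 1 convolution on a C_in x M map is the channel-mixing product W (C_out x C_in) @ X."""
    return [[sum(W[o][c] * X[c][m] for c in range(len(X))) for m in range(len(X[0]))]
            for o in range(len(W))]


def position_attention(I, Wq, Wk, Wv, alpha):
    """I: C x M input (M = T * L positions). Wv must be C x C so the residual sum is defined.

    Returns (O, A) with A[n][m] = softmax_m(Q_m . K_n) and O_n = alpha * sum_m A[n][m] V_m + I_n.
    """
    Q, K, V = conv1x1(Wq, I), conv1x1(Wk, I), conv1x1(Wv, I)
    C, M = len(I), len(I[0])
    A = []
    for n in range(M):
        # Logits for target position n over all source positions m.
        logits = [sum(Q[c][m] * K[c][n] for c in range(len(Q))) for m in range(M)]
        peak = max(logits)
        exps = [math.exp(v - peak) for v in logits]
        total = sum(exps)
        A.append([e / total for e in exps])
    O = [[alpha * sum(A[n][m] * V[c][m] for m in range(M)) + I[c][n] for n in range(M)]
         for c in range(C)]
    return O, A


I = [[1.0, 2.0, 3.0], [0.5, 0.0, -1.0]]
W_id = [[1.0, 0.0], [0.0, 1.0]]
O, A = position_attention(I, W_id, W_id, W_id, alpha=0.0)  # alpha = 0 leaves O equal to I
```

With α = 0 the module reduces to the identity, so a learned α lets the network blend in the attention-weighted context gradually.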

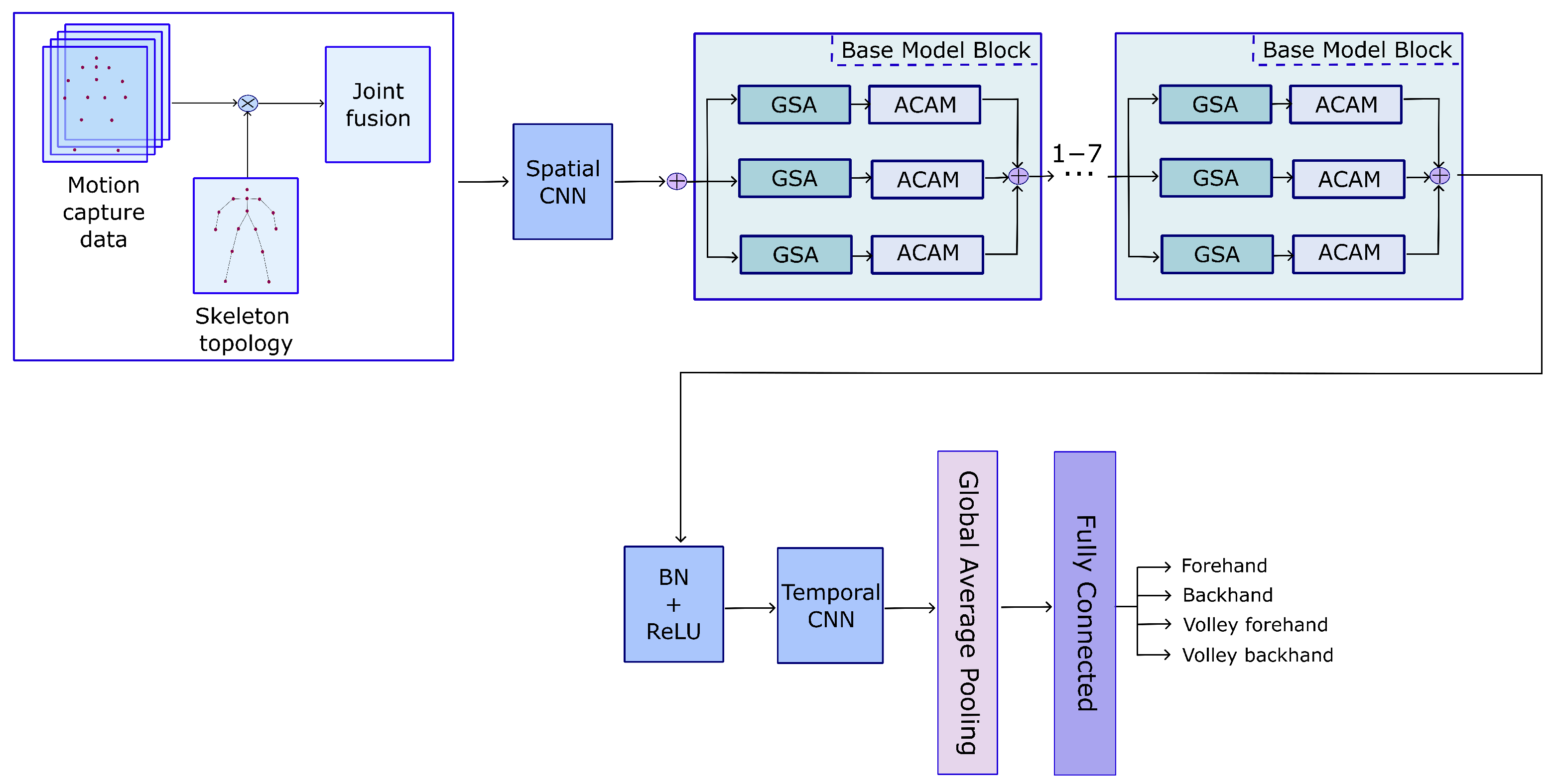

5.4. Model Architecture

Three specialized modules are introduced for skeleton-based tennis stroke classification. They can be arranged either sequentially or in parallel to form the basic building blocks of the proposed model. The graph self-attention module captures and extracts spatial as well as long-term temporal information from the skeletal joints. Moreover, the adaptive consecutive attention module adds information about the relationships between features from different channels. Short-term temporal dependencies are modeled at multiple scales by a temporal convolutional network that contributes complementary temporal cues. In addition, the Joint Motion module identifies which joints are in motion and which remain stationary, guiding the graph self-attention to emphasize "salient" joints. Although these modules function independently, they are closely interrelated. The complete model comprises seven stacked basic blocks, and the model architecture is outlined in Table 1 and Figure 4. In Table 1, each layer is described by its input channels, temporal stride, and output channels. Each basic block contains three graph self-attention modules, three adaptive consecutive attention modules, and one temporal modeling module. In blocks 3 and 6, the number of channels is doubled and the temporal dimension is reduced. Each block begins with either a 1 × 1 convolution or a motion fusion operation that increases the dimensionality of the input channels. Three parallel graph self-attention modules, each connected with an adaptive consecutive attention module, are employed to extract joint information at different scales. Their outputs are summed to produce the final feature representation.

Moreover, the temporal modeling module integrates multi-scale temporal information, and the reduction of the time dimension is accomplished via depth-wise convolution. Finally, after all basic blocks, average pooling compresses the feature map, and the resulting features are classified using a fully connected layer.
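How tensor shapes evolve through the seven stacked blocks can be traced with a small helper. All concrete numbers here are hypothetical placeholders rather than the values in Table 1: 6 input channels (joint, bone, and motion streams of 2D coordinates), 64 base channels, 64 input frames, and a temporal stride of 2 in the blocks where the channels are doubled.

```python
def block_shapes(in_channels=6, base_channels=64, frames=64, num_blocks=7,
                 downsample_blocks=(3, 6), num_classes=4):
    """Trace (block, c_in, c_out, temporal_stride, frames_out) for each block.

    All default values are illustrative assumptions, not the published configuration.
    Returns the per-block list and the (features, classes) size of the final FC layer.
    """
    layers = []
    c_in, c_out, t = in_channels, base_channels, frames
    for b in range(1, num_blocks + 1):
        stride = 1
        if b in downsample_blocks:
            c_out *= 2                       # channels doubled in blocks 3 and 6
            stride = 2                       # assumed temporal stride for the reduction
            t = (t + stride - 1) // stride   # ceil division keeps odd lengths valid
        layers.append((b, c_in, c_out, stride, t))
        c_in = c_out
    # Global average pooling over time and joints leaves c_out features for the classifier.
    return layers, (c_out, num_classes)


layers, fc = block_shapes()
for row in layers:
    print(row)
print("fc:", fc)
```

Under these assumptions, the classifier receives a 256-dimensional pooled vector and maps it to the four stroke classes.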

5.5. Fusion Strategy

To produce the value of the input feature $X \in \mathbb{R}^{C \times T \times N}$, a feature transformation $\mathcal{T}(\cdot)$ is defined. The objective of this transformation is to map shallow representations onto more advanced ones, formally expressed as in Equation (10):

$$V = \mathcal{T}(X) = W X \tag{10}$$

where $W \in \mathbb{R}^{C \times C}$ is a learnable weight matrix, C denotes the channels, and $V \in \mathbb{R}^{C \times T \times N}$ is the resulting 3D value matrix. Because human skeletons are highly compact data representations, a conventional self-attention mechanism can easily overfit. To mitigate this issue, we enhance the processes for extracting the query and key, adapting them more effectively to skeleton data. A linear projection is employed to reduce the channel dimensionality, thereby limiting redundancy and computational complexity. This operation is denoted by $\psi(\cdot)$ and described as in Equation (11):

$$Q = \psi_Q(X) = \mathrm{BN}(W_Q X), \quad K = \psi_K(X) = \mathrm{BN}(W_K X) \tag{11}$$

Here, BN denotes batch normalization, which ensures a consistent data distribution. The learnable matrices $W_Q, W_K \in \mathbb{R}^{C_r \times C}$ project the input X onto $Q \in \mathbb{R}^{C_r \times T \times N}$ and $K \in \mathbb{R}^{C_r \times T \times N}$, where $C_r$ denotes the reduced channel dimension of a single layer (see Section 5.2 and Figure 2). Moreover, the projection of Q is divided by the number of channels to enable the modeling of temporal dependencies. Furthermore, a correlation modeling function $\mathcal{M}(\cdot)$ is introduced. Its task is to aggregate the temporal information within each channel, making it possible to capture time dependencies in the skeleton sequences. $\bar{Q}$ and $\bar{K}$ are obtained by applying $\mathcal{M}$ to Q and K, as in Equation (12):

$$\bar{q}_i = \sum_{t=1}^{T} w_{i,t}\, q_{i,t}, \quad \bar{k}_i = \sum_{t=1}^{T} w_{i,t}\, k_{i,t} \tag{12}$$

where $q_{i,t}, k_{i,t} \in \mathbb{R}^{N}$ are the query and key vectors of channel i at frame t. Each channel i is associated with a learnable parameter set $w_i = \{w_{i,1}, \ldots, w_{i,T}\}$, which fuses skeletal joint features from multiple time frames. After this temporal aggregation, the input features become $\bar{Q} \in \mathbb{R}^{C_r \times N}$ and $\bar{K} \in \mathbb{R}^{C_r \times N}$. In addition, we employ an adaptive parameter map (APM) $P \in \mathbb{R}^{C \times N \times N}$ that captures static joint relationships per channel, similarly to an attention map indicating how strongly joints are connected. Next, the APM is fused with the dynamic topology $S \in \mathbb{R}^{C \times N \times N}$ derived from graph self-attention to form an updated topological structure among joints, as in Equation (13):

$$A = P + \lambda \cdot S \tag{13}$$

Here, λ is a learnable parameter that regulates the impact of the graph self-attention map S. This fusion generates a channel-specific topology map for each output channel, each reflecting how the joints are connected when the body is in motion. Finally, the value and fused topology maps are aggregated channel by channel by splitting them into $V_1, \ldots, V_C$ and $A_1, \ldots, A_C$, where $V_i \in \mathbb{R}^{T \times N}$ and $A_i \in \mathbb{R}^{N \times N}$. Each pair of channels is then aggregated as in Equation (14) [29]:

$$Z = \left[\, V_1 \cdot A_1 \;\Vert\; V_2 \cdot A_2 \;\Vert\; \cdots \;\Vert\; V_C \cdot A_C \,\right] \tag{14}$$

Here, · denotes matrix multiplication, ‖ denotes concatenation along the channel dimension, and $Z \in \mathbb{R}^{C \times T \times N}$ represents the final output features.
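The fusion of a static, per-channel adaptive parameter map with a dynamic self-attention topology, followed by channel-wise aggregation with the value features, can be sketched as below. This is an illustration under assumptions: the fused map is taken as P + λ·S, and each channel's T × N value matrix is right-multiplied by its own N × N topology, so entry (m, n) of a topology acts as the weight from joint m to joint n. The names `fuse_topology` and `channelwise_aggregate` are hypothetical.

```python
def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def fuse_topology(P, S, lam):
    """A_i = P_i + lam * S_i for every channel i. P, S: C x N x N."""
    return [[[p + lam * s for p, s in zip(p_row, s_row)] for p_row, s_row in zip(P_i, S_i)]
            for P_i, S_i in zip(P, S)]


def channelwise_aggregate(V, A):
    """Z_i = V_i . A_i for each channel, stacked along the channel axis.

    V: C x T x N values, A: C x N x N topologies -> Z: C x T x N.
    """
    return [matmul(V_i, A_i) for V_i, A_i in zip(V, A)]


# One channel, two joints, two frames.
P = [[[1.0, 0.0], [0.0, 1.0]]]   # static map: each joint keeps only itself
S = [[[0.0, 1.0], [1.0, 0.0]]]   # dynamic map: the two joints attend to each other
A = fuse_topology(P, S, lam=0.5)  # [[[1.0, 0.5], [0.5, 1.0]]]
V = [[[1.0, 2.0], [3.0, 4.0]]]
Z = channelwise_aggregate(V, A)   # [[[2.0, 2.5], [5.0, 5.5]]]
```

Because every channel owns its topology, different channels can encode different joint connectivity for the same frame.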

5.6. Temporal Convolutional Network

Previous works have used Temporal Convolutional Networks (TCNs) extensively in state-of-the-art (SOTA) models for temporal feature extraction [37,38,39]. According to [29], this module has two main limitations. First, it has difficulty detecting temporal dependencies. Second, the applied convolutions can produce temporal features that are discontinuous with respect to the smooth motion represented in skeleton data. Therefore, taking inspiration from [29], we use a design that combines 3D spatial feature extraction with a 1D temporal convolution module for feature representation derived from skeleton-based joint data. Its structure is depicted in Figure 5.

The TCN is introduced as a novel 1D temporal convolution module that smoothly models short-term dependencies across different scales. The TCN consists of four branches and a convolution branch. The first two branches halve the number of channels through convolutions to reduce the computational load. Depth-wise convolutions are then applied to extract multi-scale temporal features. In the third branch, the number of channels is halved again, and an additional layer follows the convolution. Finally, all four branches are concatenated to match the original input–output dimensions. Crucially, the applied TCN does not increase the overall complexity.
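The idea of multi-scale depth-wise temporal convolution can be illustrated with dilated 1D kernels. This is a simplified sketch, not the exact branch layout described above: instead of 1 × 1 channel reductions, the input channels are split evenly across branches, each slice is filtered with its own dilation, and the slices are re-concatenated so that the output keeps the input shape C × T. Zero padding preserves the sequence length, assuming odd kernel sizes.

```python
def depthwise_temporal_conv(x, kernel, dilation=1):
    """x: C x T sequence, kernel: C x K (one kernel per channel, i.e., depth-wise).

    Zero padding keeps the output length equal to T (odd K assumed).
    """
    T, K = len(x[0]), len(kernel[0])
    pad = (K - 1) // 2 * dilation
    out = []
    for c in range(len(x)):
        row = []
        for t in range(T):
            acc = 0.0
            for k in range(K):
                src = t + k * dilation - pad
                if 0 <= src < T:
                    acc += kernel[c][k] * x[c][src]
            row.append(acc)
        out.append(row)
    return out


def multi_scale_tcn(x, kernels, dilations):
    """Split the C channels evenly across branches, filter each slice with its own
    dilation, and concatenate the results; the output shape equals the input shape."""
    branches = len(dilations)
    assert len(x) % branches == 0, "channels must divide evenly across branches"
    step = len(x) // branches
    out = []
    for b, d in enumerate(dilations):
        out.extend(depthwise_temporal_conv(x[b * step:(b + 1) * step],
                                           kernels[b * step:(b + 1) * step], d))
    return out


x = [[1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 4.0]]
y = multi_scale_tcn(x, kernels=[[1.0, 1.0, 1.0]] * 2, dilations=[1, 2])
# y[0] == [3.0, 6.0, 9.0, 7.0]  (dilation 1: neighboring frames)
# y[1] == [4.0, 6.0, 4.0, 6.0]  (dilation 2: frames two steps apart)
```

Larger dilations widen the temporal receptive field without adding kernel weights, which is one way such a module can cover several time scales at a fixed cost.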

5.7. Grad-CAM

To generate a Grad-CAM visualization, a specific target class is selected to interpret the model's decision. Grad-CAM uses the gradients of the class score (the output for the selected class) with respect to the feature maps of a chosen convolutional layer, often the last one, computed during a backward pass. The derivative of the class score with respect to these feature maps indicates how the final prediction changes when the feature map values are adjusted, thus revealing each feature map's contribution to the output. The gradients are then globally averaged (pooled) across the height and width of the feature maps, producing a weight for each feature map. These weights form the basis for creating a coarse localization map, as in Equation (15) [40]:

$$\alpha_i^c = \frac{1}{N} \sum_{x} \sum_{y} \frac{\partial y^c}{\partial A_{xy}^i} \tag{15}$$

In Equation (15), $\alpha_i^c$ indicates the weight for feature map i, N is the number of pixels in the map, $y^c$ represents the score for class c, and $A_{xy}^i$ denotes the activation of the i-th feature map at location (x, y). Each weight represents the importance of its feature map for the target class. Each feature map is multiplied by its respective weight, and the weighted feature maps are summed to obtain a single two-dimensional heatmap, as in Equation (16) [40]:

$$L^c_{\text{Grad-CAM}} = \mathrm{ReLU}\left(\sum_i \alpha_i^c A^i\right) \tag{16}$$

A ReLU activation function is used to keep only positive contributions, generating a heatmap that highlights the key regions for the target class. This heatmap can be normalized to the range [0, 1] to improve its visualization. Afterward, the heatmap is resized to the dimensions of the original input image. Finally, it is superimposed onto the original image, often using a color map to represent areas of greater significance.
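Given the activations of a chosen layer and the gradients of the class score with respect to them, the Grad-CAM weights and heatmap reduce to a few lines. In this sketch the gradients are supplied as plain arrays, whereas in practice they come from a backward pass in the training framework; normalization divides by the maximum, which maps the non-negative ReLU output to [0, 1]. For skeleton data, the "height × width" map could be the time × joint grid.

```python
def grad_cam(activations, gradients):
    """activations, gradients: lists of K feature maps, each H x W.

    Returns (weights, heatmap) where weights[k] is the spatially averaged gradient of
    map k and the heatmap is ReLU(sum_k weights[k] * A^k) normalized to [0, 1].
    """
    K = len(activations)
    H, W = len(activations[0]), len(activations[0][0])
    N = H * W
    # Global average pooling of the gradients gives one weight per feature map.
    weights = [sum(sum(row) for row in gradients[k]) / N for k in range(K)]
    # Weighted sum of the feature maps followed by ReLU.
    heat = [[max(0.0, sum(weights[k] * activations[k][i][j] for k in range(K)))
             for j in range(W)] for i in range(H)]
    peak = max(max(row) for row in heat)
    if peak > 0:
        heat = [[v / peak for v in row] for row in heat]
    return weights, heat


acts = [[[1.0, 0.0], [0.0, 2.0]], [[0.0, 1.0], [1.0, 0.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
weights, heat = grad_cam(acts, grads)
# weights == [1.0, -1.0]; heat == [[0.5, 0.0], [0.0, 1.0]]
```

The second map has a negative weight, so the locations it activates are suppressed by the ReLU, illustrating why Grad-CAM highlights only evidence in favor of the target class.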

6. Experiments and Results

To verify the potential of the proposed model, a series of experiments was performed comparing the FFGCAN with the ST-GCN classifier. The well-known THETIS, Tennis-Mocap, and 3DTennisDS datasets were compared to verify how different types of data acquisition affect the accuracy of human action recognition. In this study, all ONI files from THETIS, all BVH files from Tennis-Mocap, and all C3D data from 3DTennisDS containing forehand, backhand, volley forehand, and volley backhand were taken into consideration. The experiment was as follows:

Create predefined classes.

Create simplified models of all motion capture data, divided into four strokes (corresponding to the classes).

Create training, validation, and test sets, containing 70%, 15%, and 15% of all data, respectively.

Train the network based on the training and validation sets.

Test the network in five trials.

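Create a confusion matrix and compute the selected measures, Equations (17)–(20) [24]:

$$\mathrm{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{17}$$

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{18}$$

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{19}$$

$$\mathrm{F1} = 2 \cdot \frac{\mathrm{Precision} \cdot \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{20}$$

where TP refers to the true positive fraction, TN to the true negative fraction, FP to the false positive fraction, and FN to the false negative fraction.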
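The per-class measures can be read off a multi-class confusion matrix in a one-vs-rest fashion. The sketch below is illustrative: the macro-averaged F1 and the overall accuracy (correct predictions divided by all samples) are assumptions about how per-class values would be summarized, since the averaging scheme is not stated here. The per-class accuracy follows the TP/TN/FP/FN definition.

```python
def confusion_metrics(cm):
    """cm[i][j]: number of samples of true class i predicted as class j.

    Returns (per-class metrics, overall accuracy, macro-averaged F1).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    per_class = []
    for c in range(n):
        tp = cm[c][c]
        fp = sum(cm[r][c] for r in range(n)) - tp  # predicted c, true class differs
        fn = sum(cm[c]) - tp                       # true class c, predicted otherwise
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        per_class.append({"accuracy": (tp + tn) / total, "precision": precision,
                          "recall": recall, "f1": f1})
    overall_accuracy = sum(cm[c][c] for c in range(n)) / total
    macro_f1 = sum(m["f1"] for m in per_class) / n
    return per_class, overall_accuracy, macro_f1


# Toy two-class matrix (not data from this study).
per_class, acc, macro_f1 = confusion_metrics([[8, 2], [1, 9]])
```

For the four stroke classes, the same function applies unchanged to a 4 × 4 matrix.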

To assess the robustness and generalization capability of the proposed model, five independent experiments were conducted. Each involved a random and player-independent division of the dataset into training, validation, and test subsets. Following each run, the performance metrics were recorded. The standard deviation was computed as the square root of the variance, reflecting the statistical dispersion of individual results around the mean value. This procedure quantifies the variability in model performance attributable to different random splits of the data. No uncertainty matrix based on initial or boundary conditions was employed. The evaluation of uncertainty was restricted to a statistical analysis of repeated independent experiments. Thus, the reported standard deviations characterize the consistency of the model’s performance across various random samplings, rather than the uncertainty within a single experimental condition.
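A player-independent split assigns whole players, rather than individual samples, to the training, validation, and test subsets, so that no player appears in more than one subset. The sketch below is a minimal illustration with hypothetical function names; because players contribute different numbers of samples, the 70%/15%/15% ratios hold for players exactly and for samples only approximately. The summary uses the sample standard deviation (n − 1 in the denominator), which is an assumption; `statistics.pstdev` would give the population version.

```python
import random
import statistics


def player_independent_split(sample_players, train_ratio=0.70, val_ratio=0.15, seed=0):
    """sample_players: the player ID of each sample.

    Shuffles players (not samples) and returns sample indices for each subset.
    """
    players = sorted(set(sample_players))
    random.Random(seed).shuffle(players)
    n_train = round(train_ratio * len(players))
    n_val = round(val_ratio * len(players))
    groups = {
        "train": set(players[:n_train]),
        "val": set(players[n_train:n_train + n_val]),
        "test": set(players[n_train + n_val:]),  # the remaining players
    }
    return {name: [i for i, p in enumerate(sample_players) if p in ids]
            for name, ids in groups.items()}


def summarize(values):
    """Mean and sample standard deviation of a metric over repeated runs."""
    return statistics.mean(values), statistics.stdev(values)


# Hypothetical setup: 20 players with 10 samples each; a new seed per run gives a new split.
players = [f"p{i % 20}" for i in range(200)]
splits = player_independent_split(players, seed=1)
```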

The proposed model was implemented in PyTorch 2.2. Training and testing were conducted on a workstation with an AMD Ryzen Threadripper PRO 7965WX CPU and a single NVIDIA RTX 4090 GPU. The momentum was SGD, and the Adam optimizer was set to , , . The number of epochs was set to 60, with a warm-up over the first 5 epochs. Early stopping was applied after 5 epochs without improvement. The initial learning rate was 0.1 and was decayed by a factor of 0.1 at epoch 40. The batch size was 64.
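The reported schedule (60 epochs, 5 warm-up epochs, base learning rate 0.1 decayed by a factor of 0.1 at epoch 40, and early stopping after 5 epochs without improvement) can be sketched framework-independently. The linear shape of the warm-up and the use of validation loss as the monitored quantity are assumptions, as are the helper names.

```python
def learning_rate(epoch, base_lr=0.1, warmup_epochs=5, decay_epoch=40, decay_factor=0.1):
    """Learning rate for a 0-indexed epoch: linear warm-up, then a single step decay."""
    if epoch < warmup_epochs:
        return base_lr * (epoch + 1) / warmup_epochs
    if epoch >= decay_epoch:
        return base_lr * decay_factor
    return base_lr


class EarlyStopping:
    """Signal a stop after `patience` consecutive epochs without improvement."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True when training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


schedule = [learning_rate(e) for e in range(60)]
```

In a training loop, `learning_rate(epoch)` would be written into the optimizer before each epoch, and the loop would break once `step` returns True.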

Computational Efficiency

The proposed FFGCAN has only 1.33 M parameters and requires 2.746 GFLOPs per sequence. On the GPU (RTX 4090), it achieved a latency of 0.96 ms, which corresponds to ≈1042 sequences per second and allows smooth real-time inference. Compared with the baseline ST-GCN, the FFGCAN requires ≈22.65% fewer FLOPs and ≈45.5% less processing time, while achieving substantially higher accuracy. The obtained computational efficiency results are presented in Table 10 and Table 11.
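The efficiency figures can be related by simple arithmetic. This sketch assumes that the 0.96 ms latency refers to one sequence processed at a time and that the percentage reductions are measured relative to the ST-GCN values. The baseline numbers it prints are implied by the stated percentages, not values taken from Tables 10 and 11.

```python
def throughput_per_second(latency_ms):
    """Sequences per second when one sequence is processed at a time."""
    return 1000.0 / latency_ms


def implied_baseline(value, reduction_pct):
    """Recover a baseline from a value that is `reduction_pct` percent smaller than it."""
    return value / (1.0 - reduction_pct / 100.0)


print(round(throughput_per_second(0.96)))        # 1042 sequences per second
print(round(implied_baseline(2.746, 22.65), 3))  # 3.55 GFLOPs implied for ST-GCN
print(round(implied_baseline(0.96, 45.5), 3))    # 1.761 ms implied for ST-GCN
```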

7. Discussion

In this study, a new model, the Feature Fusion Graph Consecutive-Attention Network (FFGCAN), was proposed for tennis movement recognition. The basic strokes, namely forehand, backhand, volley forehand, and volley backhand, were recognized. The model incorporates seven basic blocks built from two types of module: an Adaptive Consecutive Attention module and a Graph Self-Attention module. They are employed to extract joint information at different scales from motion capture data. The consecutive attention module allows the network to focus on relevant components, which enriches the network's understanding of tennis motion data and yields a more informative representation. The graph self-attention module captures and extracts spatial and long-term temporal information from the skeletal joints. The temporal convolutional network is applied to identify short-term temporal dependencies. The FFGCAN fuses motion capture features to generate a channel-specific topology map for each output channel, reflecting how the joints are connected when the tennis player is moving.

The proposed model was verified using three well-known motion capture datasets containing basic tennis moves. They contain the positions of the player's silhouette while performing strokes, with different representations and varying amounts of three-dimensional data. Therefore, the Tennis-Mocap and 3DTennisDS data were simplified to the THETIS model. It should be noted that the 3DTennisDS dataset contains additional tennis racket data, which were also incorporated in this study.

The proposed Feature Fusion Graph Consecutive-Attention Network was compared with a Spatial-Temporal Graph Convolutional Network (ST-GCN), which is widely used for human action recognition to model motion and appearance patterns from both video and three-dimensional data. The accuracy results obtained for the ST-GCN show that this type of model is suitable for tennis movement recognition (Table 2). Network performance clearly depended on the type of input data. The lowest accuracy was obtained for motion capture data registered with the markerless system, i.e., the THETIS dataset, ranging from 73.21% to 75.44%. Recognition based on the Tennis-Mocap dataset was slightly more effective, from 76.98% to 78.13%. The highest ST-GCN accuracy, slightly above 80%, was obtained for the 3DTennisDS dataset. The precision results indicate that the ST-GCN produced relatively few false positives (Table 3), and its recall shows that it usually identified the actual positive samples correctly (Table 4). Its F1-scores exceeded 74%, indicating good predictive performance (Table 5).

The proposed FFGCAN network recognized tennis strokes very efficiently. Its accuracy exceeded 90% for every analyzed dataset, regardless of the type of input data (Table 6). Both the ST-GCN and the new model had very low standard deviations, which indicates that both are stable. The FFGCAN achieved precision above 91%, showing that it rarely produced false positives (Table 7). Its recall also reached very high values, exceeding 89.68% (Table 8), so the network correctly recognized most positive samples. The F1-scores (the harmonic mean of precision and recall) for the FFGCAN were higher than 91%, confirming its strong predictive performance (Table 9).
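For reference, the metrics reported in Tables 2 to 9 can be derived from a confusion matrix. The sketch below assumes rows are true labels and uses macro averaging over classes; the averaging scheme used in the paper is not stated here, so treat this as one plausible convention. Note that the macro F1 computed this way averages per-class F1 values, which is not identical to the harmonic mean of macro precision and macro recall.

```python
import numpy as np

def metrics_from_confusion(cm):
    """cm[i, j] = number of samples of true class i predicted as class j.
    Returns accuracy and macro-averaged precision, recall, and F1.
    Assumes every class is both present and predicted at least once
    (otherwise a division by zero occurs)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    precision = tp / cm.sum(axis=0)   # column sums: all predictions of each class
    recall = tp / cm.sum(axis=1)      # row sums: all true samples of each class
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return accuracy, precision.mean(), recall.mean(), f1.mean()

# Toy two-class matrix (not data from this study).
acc, prec, rec, f1 = metrics_from_confusion([[8, 2], [1, 9]])
```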

The confusion matrices obtained for the ST-GCN and the FFGCAN give more comprehensive insight into the performance of the compared models. Forehand and backhand strokes were misclassified as their volley equivalents and vice versa (Figure 6). Notably, the proposed model reduced the confusion between these tennis shots by up to 70%.
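A relative reduction in confusion such as the one quoted above can be computed cell by cell from two confusion matrices. In the sketch below, the class order, the helper names, and both toy matrices are hypothetical; they are chosen only to show the arithmetic, not to reproduce Figure 6.

```python
import numpy as np

# Hypothetical class order; the layout of Figure 6 may differ.
CLASSES = ["forehand", "backhand", "volley_forehand", "volley_backhand"]
# (true, predicted) cells that confuse a groundstroke with its volley equivalent
PAIRS = [(0, 2), (2, 0), (1, 3), (3, 1)]

def pair_confusions(cm):
    cm = np.asarray(cm)
    return np.array([cm[t, p] for t, p in PAIRS], dtype=float)

def confusion_reduction(cm_baseline, cm_proposed):
    """Relative reduction of each groundstroke/volley confusion cell,
    1 - proposed / baseline (only meaningful where the baseline count > 0)."""
    return 1.0 - pair_confusions(cm_proposed) / pair_confusions(cm_baseline)

# Toy matrices (rows: true class, columns: predicted class).
baseline = [[80, 0, 10, 0], [0, 80, 0, 10], [10, 0, 80, 0], [0, 10, 0, 80]]
proposed = [[87, 0, 3, 0], [0, 85, 0, 5], [5, 0, 85, 0], [0, 4, 0, 86]]
reductions = confusion_reduction(baseline, proposed)
```

An "up to" figure corresponds to the maximum of the per-cell reductions, while the average over cells summarizes the overall improvement.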

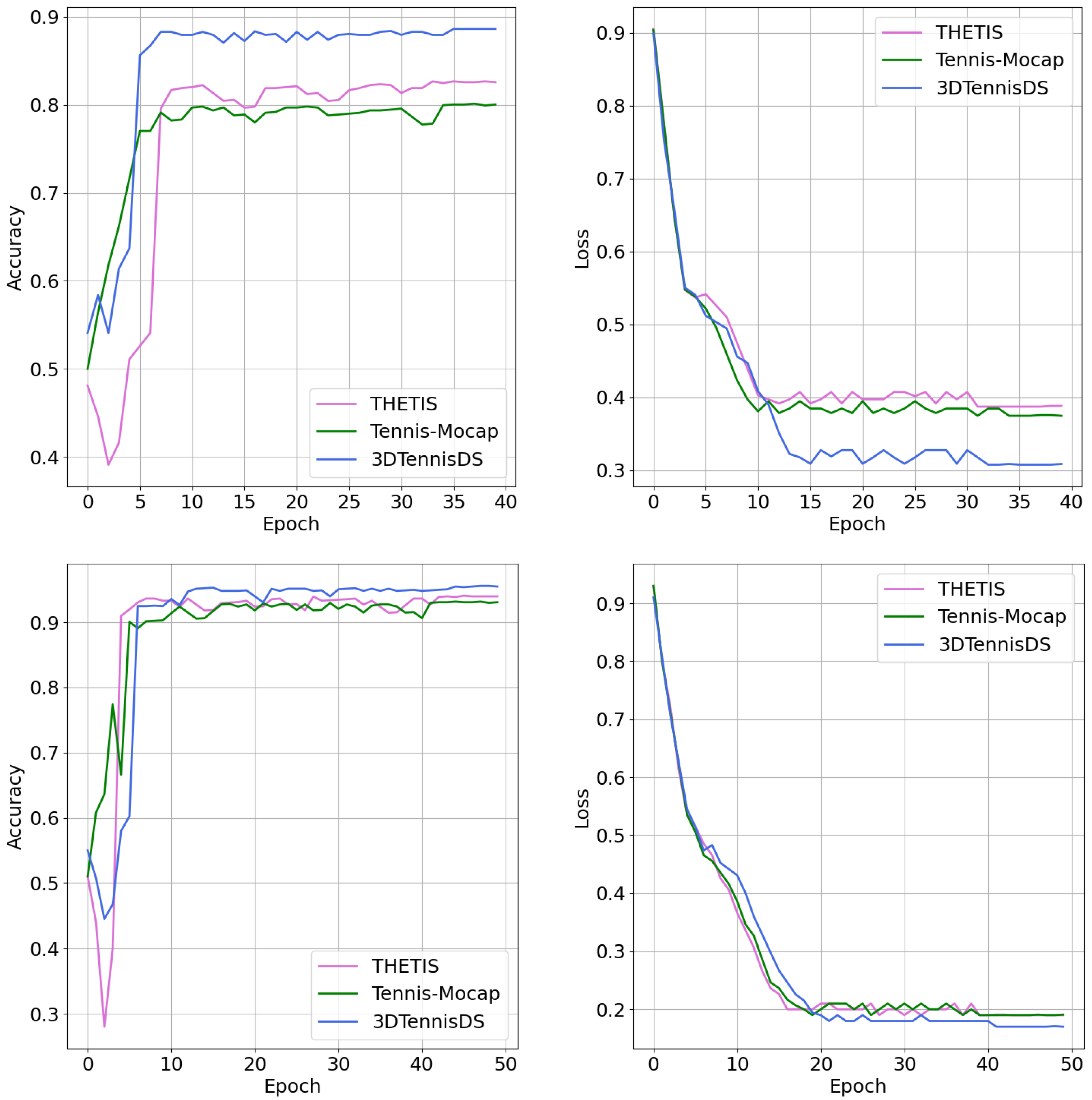

The learning curves and loss functions of the ST-GCN and FFGCAN models show that both solutions trained stably (Figure 7). The ST-GCN network stabilized after 40 epochs, whereas the proposed network needed slightly longer, 50 epochs; these numbers are comparable. Notably, the FFGCAN achieved comparable effectiveness on all analyzed datasets, whereas the ST-GCN showed substantial differences between datasets. The FFGCAN model also achieved more accurate predictions.
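The text does not specify how the epoch of stabilization was determined. One simple, hypothetical criterion is the first epoch after which the loss changes by less than a tolerance for several consecutive epochs. The function name, `window`, and `tol` below are illustrative assumptions, not the authors' settings.

```python
def convergence_epoch(losses, window=5, tol=1e-3):
    """Return the first (1-based) epoch after which the loss changes by less
    than `tol` between every pair of consecutive epochs in the next `window`
    epochs; return None if the curve never settles."""
    for e in range(len(losses) - window):
        steps = [abs(losses[e + k + 1] - losses[e + k]) for k in range(window)]
        if max(steps) < tol:
            return e + 1
    return None
```

Because the result depends on `window` and `tol`, epoch counts such as 40 and 50 are only comparable when the same criterion is applied to both models.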

Taking all the results into account, the developed FFGCAN proved highly effective in recognizing tennis strokes from three-dimensional data acquired with different types of motion capture systems. This general-purpose tool can improve the effectiveness of recognizing basic tennis strokes.

7.1. Comparison with the State of the Art

Spatial-temporal dependencies in motion capture data are crucial for action recognition performance. Vision-based sequence data may suffer from inconsistent trajectories, which can lead to misclassification [41,42]. Many deep learning models disregard the relationships between frames in dynamic movements, so long-term temporal dependencies are not sufficiently captured [43,44]. In many studies, motion capture data were split into sets of frames of equal length, which may cause a loss of key information [45]. Moreover, such data are usually registered with systems calibrated for specific types of movements. To address these weaknesses, the Feature Fusion Graph Consecutive-Attention Network (FFGCAN) was proposed.
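The information loss caused by equal-length splitting can be illustrated with a toy comparison between cropping a stroke to a fixed window and linearly resampling the whole stroke to the same number of frames. Linear resampling is shown only as one common alternative; the text does not state that the FFGCAN uses it, and both helper functions are hypothetical.

```python
import numpy as np

def crop_fixed(seq, length):
    """Naive fixed-length windowing: keeps only the first `length` frames,
    so later phases of the stroke (e.g. follow-through) are discarded."""
    return seq[:length]

def resample_fixed(seq, length):
    """Linearly resample a (T, V, 3) sequence to `length` frames, keeping the
    first and last frames so the whole stroke is still represented."""
    T = seq.shape[0]
    src = np.arange(T)
    dst = np.linspace(0, T - 1, length)
    flat = seq.reshape(T, -1)
    out = np.stack([np.interp(dst, src, flat[:, j]) for j in range(flat.shape[1])],
                   axis=1)
    return out.reshape(length, *seq.shape[1:])

# Toy stroke: 10 frames, 2 joints; every coordinate equals the frame index.
seq = np.arange(10, dtype=float)[:, None, None] * np.ones((1, 2, 3))
cropped = crop_fixed(seq, 4)
resampled = resample_fixed(seq, 4)
```

The cropped sequence ends at frame 3, while the resampled one still reaches the final frame, at the cost of lower temporal resolution.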

It was compared with other models applied to the three tennis motion capture datasets, THETIS, Tennis-Mocap, and 3DTennisDS (Table 12). The comparison confirms that the proposed model was highly effective in recognizing tennis strokes regardless of the type of input data, whether gathered by markerless or passive optical systems. The FFGCAN achieved much higher performance, outperforming not only DL models such as LSTMs but also ST-GCNs. Its accuracy is also higher than that of graph neural network classifiers using fuzzified input data. Notably, the proposed solution outperformed graph models equipped with attention modules.

7.2. Explainable AI

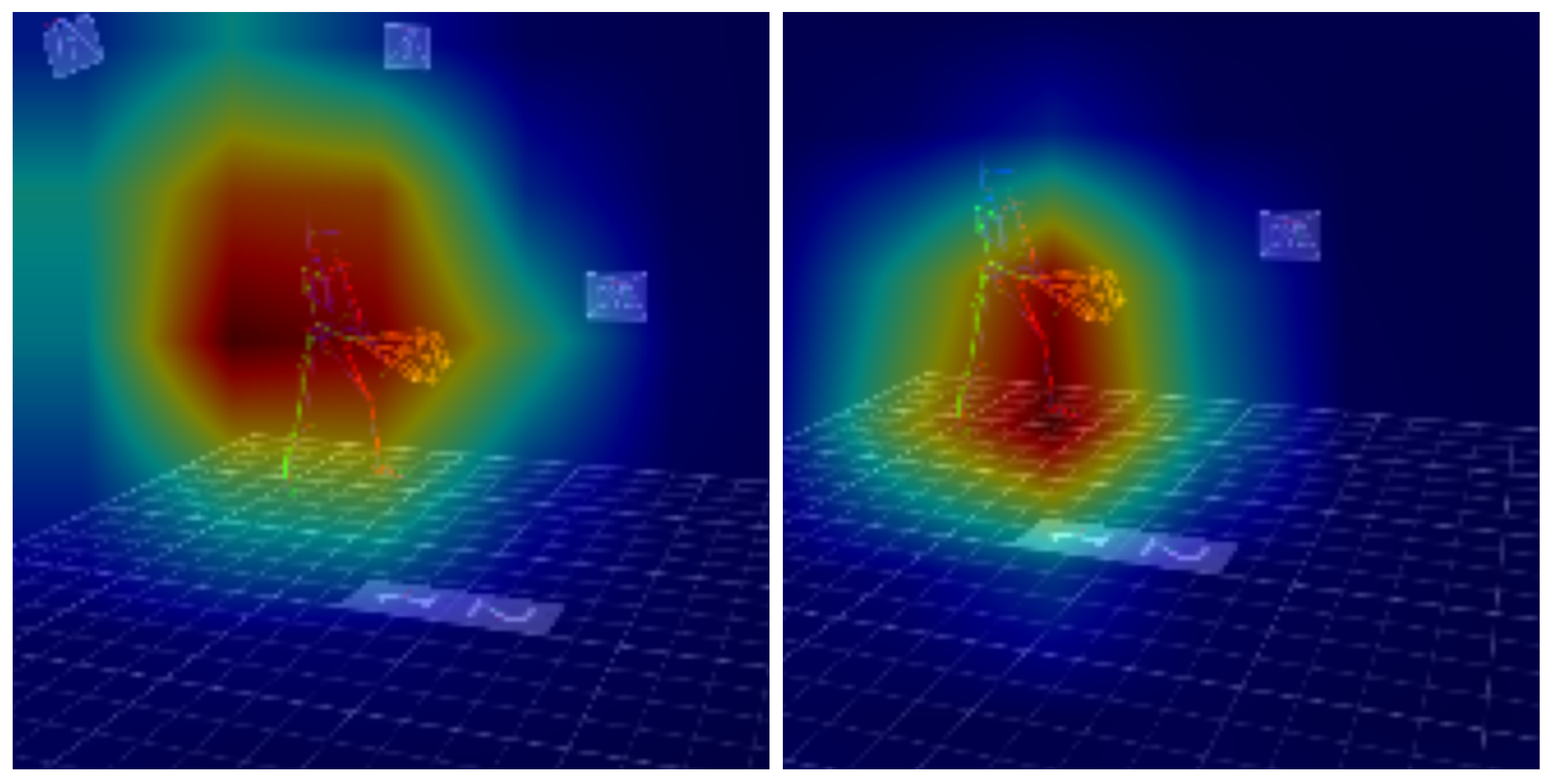

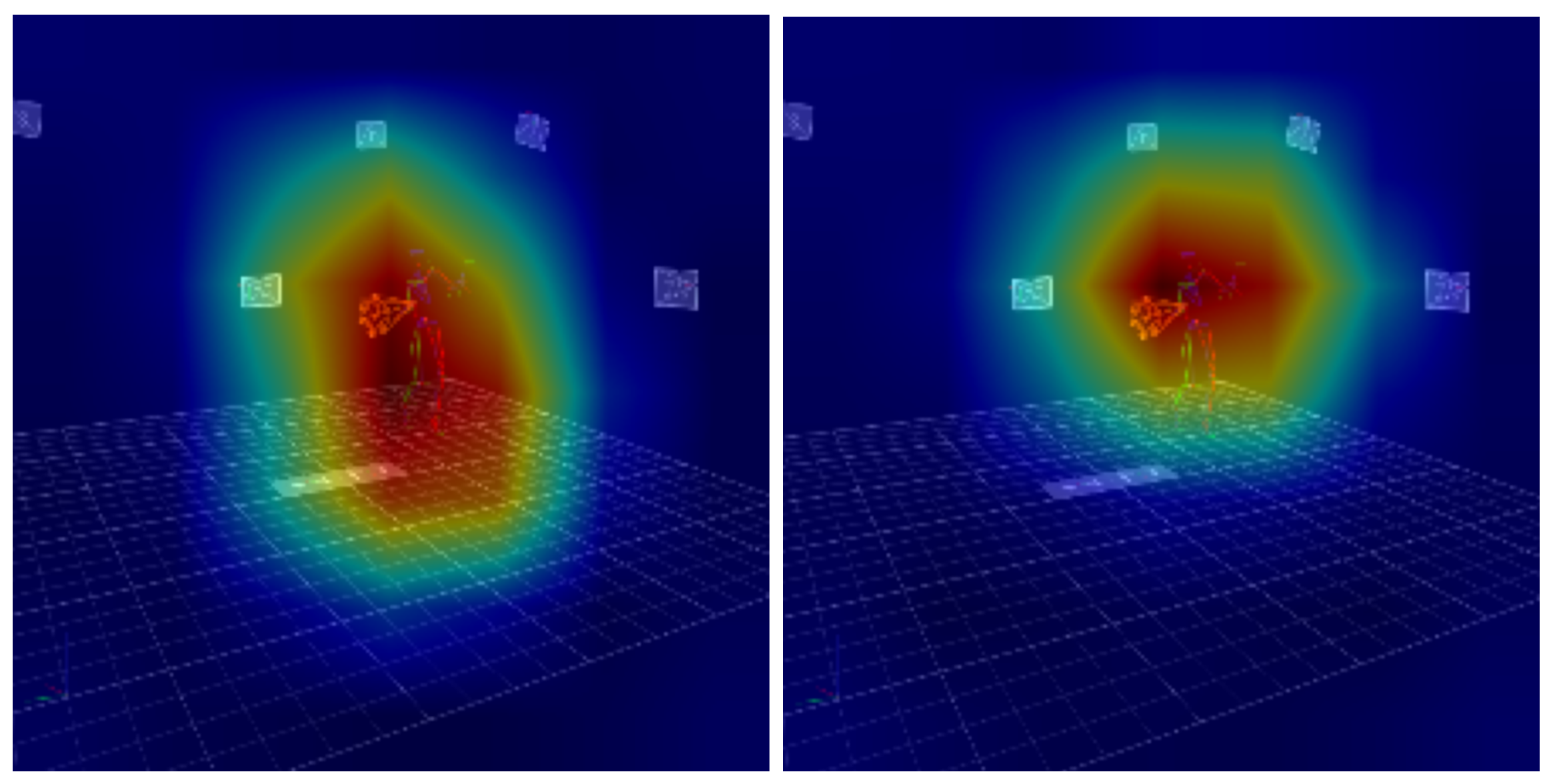

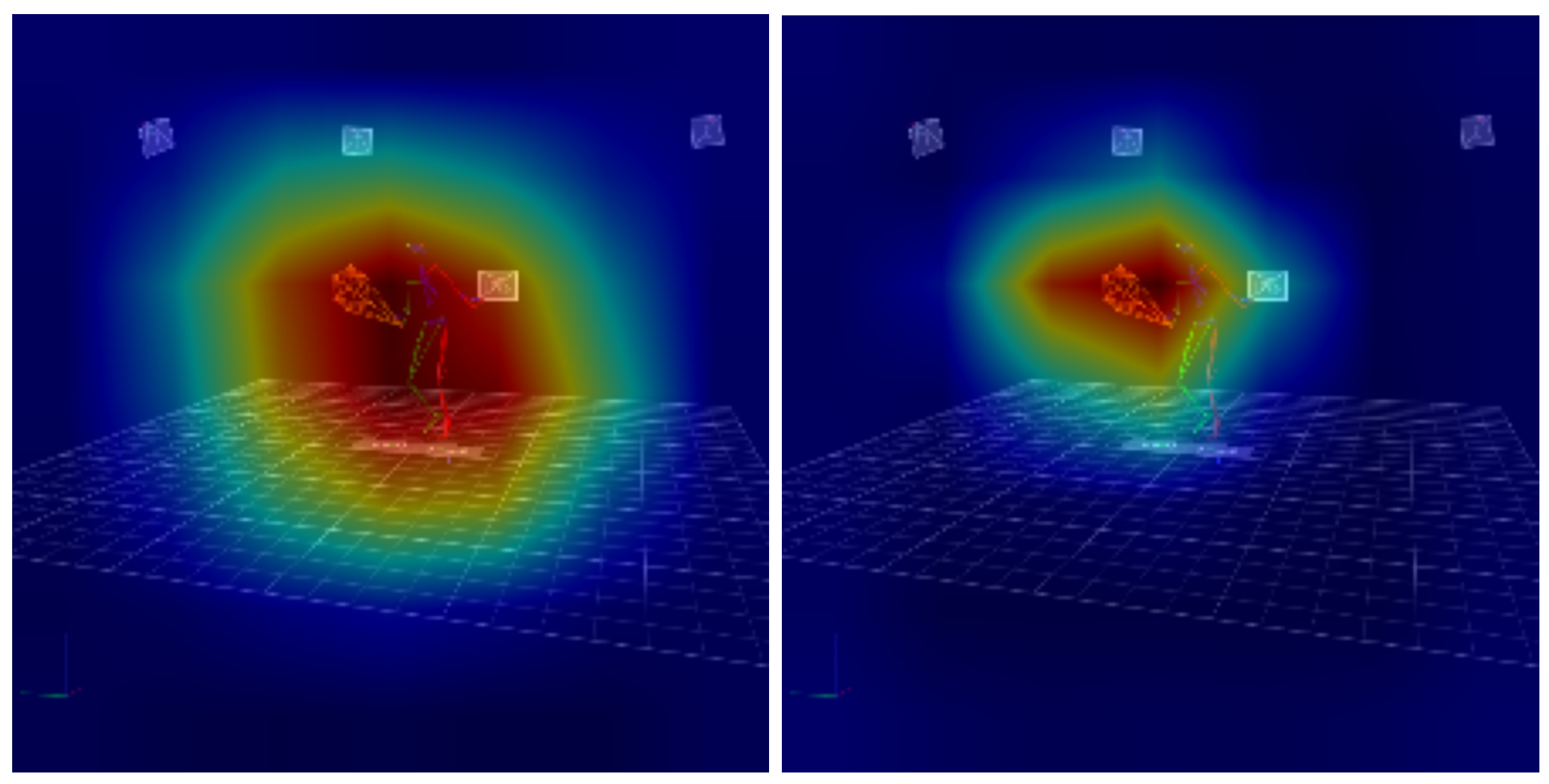

To visualize which elements of the input the models take into account when classifying tennis strokes, the Grad-CAM technique was used. Because the model operates only on point-cloud data, the corresponding images from the VICON system are displayed as the background for the generated heat maps.

It should be emphasized that the background images in Figures 8, 9, 10, and 11, depicting a player executing a specific type of stroke, serve purely illustrative purposes and do not convey the dynamic nature of the recorded motion. The model under discussion was built from motion capture data, which record the spatial coordinates of markers in three-dimensional space over time. As a result, the Grad-CAM visualizations may appear to suggest substantial errors. However, these discrepancies arise from the natural variability of the player's movement rather than from inaccuracies in the model itself. Grad-CAM identifies the regions of the input data that are most critical for classifying the entire stroke, not a single frame, whereas the background image corresponds to a single frame extracted from the analyzed stroke. Warmer colors (red, orange, yellow) mark regions that most increased the model's confidence in the chosen class, while cooler colors (blue, green) had marginal or no positive influence.
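For skeleton models, Grad-CAM is typically adapted by weighting the feature maps of the last graph layer with their gradients averaged over time and joints. The sketch below assumes that the activations and the gradients of the class score have already been extracted (for example with framework hooks) as arrays of shape (K, T, V). Pooling the map over time reproduces the sequence-level behavior described above, in which one value per joint summarizes the whole stroke. The function name is hypothetical.

```python
import numpy as np

def skeleton_grad_cam(activations, gradients):
    """activations, gradients: (K, T, V) feature maps of the last graph layer
    and d(class score)/d(activations). Returns per-joint importance in [0, 1]."""
    weights = gradients.mean(axis=(1, 2))            # alpha_k: pooled gradients
    cam = np.einsum("k,ktv->tv", weights, activations)
    cam = np.maximum(cam, 0.0)                       # ReLU keeps positive evidence only
    joint_map = cam.mean(axis=0)                     # pool over time: whole-stroke map
    peak = joint_map.max()
    return joint_map / peak if peak > 0 else joint_map

# Toy example: only joint 1 is active, and gradients are uniformly positive.
acts = np.zeros((2, 3, 4))
acts[0, :, 1] = 1.0
heat = skeleton_grad_cam(acts, np.ones((2, 3, 4)))
```

Because the time axis is averaged away, a joint that matters only briefly during the stroke can still appear warm, which explains why a single background frame may look misaligned with the heat map.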

The Grad-CAM visualizations for the ST-GCN and FFGCAN models show that the two models tracked the player's movement during tennis strokes differently. The visualization for the FFGCAN model shows a much more concentrated spatial activation, located mainly in the lower body and at the point of contact between the racket and the ball (as before, the background presents a single static frame rather than the movement sequence). This suggests that the FFGCAN model primarily attends to the movements of the legs and racket that generate the stroke. In contrast, the visualization for the ST-GCN model shows a rather broad activation covering almost the entire silhouette of the player, including the surroundings. This suggests that the ST-GCN integrates a wide movement context, taking into account the dynamics of the whole body and wider spatial relationships. Since footwork is crucial in performing tennis strokes, it can be assumed that the adaptive spatio-temporal attention modules used in the FFGCAN allow it to capture more complex relationships between the individual biomechanical elements of tennis movement.

8. Conclusions and Future Works

Although the proposed model achieved state-of-the-art performance on three publicly available motion-capture datasets, several factors may currently limit its robustness.

Sensitivity to missing or occluded data

All graph-based skeleton models implicitly assume complete and correctly connected joint graphs. Frames in which markers are either lost or merged in the 2-D projection (overlapping nodal lines) degrade the quality of the adjacency matrix and cannot be recovered solely by the attention mechanism. In such cases, the model inherits the errors of the upstream pose-estimation pipeline, rather than compensating for them.
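The effect of a lost marker on the graph can be made concrete with the commonly used symmetric normalization $D^{-1/2}(A + I)D^{-1/2}$. In the sketch below, which is an assumption about how occlusions might be masked rather than the authors' pipeline, every edge touching an invisible joint is removed. A lost elbow therefore disconnects the shoulder from the wrist, and graph convolution can no longer pass information along that path.

```python
import numpy as np

def normalized_adjacency(A, visible):
    """Symmetric normalisation D^-1/2 (A + I) D^-1/2 restricted to visible joints.

    A:       (V, V) binary skeleton adjacency
    visible: (V,) boolean mask for one frame (False = marker lost)
    """
    m = visible.astype(float)
    A_hat = (A + np.eye(A.shape[0])) * np.outer(m, m)  # drop edges touching lost joints
    deg = A_hat.sum(axis=1)
    safe = np.where(deg > 0, deg, 1.0)                 # avoid dividing by zero
    inv_sqrt = np.where(deg > 0, 1.0 / np.sqrt(safe), 0.0)
    return A_hat * inv_sqrt[:, None] * inv_sqrt[None, :]

# Toy chain: shoulder (0) - elbow (1) - wrist (2).
chain = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]], dtype=float)
full = normalized_adjacency(chain, np.array([True, True, True]))
occluded = normalized_adjacency(chain, np.array([True, False, True]))
```

With the elbow occluded, the shoulder and wrist keep only their self-loops, so no learned weighting inside the graph convolution can restore the missing connection.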

Dataset-specific topology inconsistencies

The FFGCAN uses an additional graph node representing the racket (available in 3DTennisDS), whereas THETIS and Tennis-Mocap lack this information. Although the fusion strategy learns channel-specific topologies, cross-dataset transfer still requires retraining or domain adaptation, because the underlying joint sets differ.
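One way to see the topology mismatch is to extend the skeleton adjacency with a racket node attached to the hand joint. The helper below and the toy three-joint chain are hypothetical. Any parameters tied to the number of joints (for example a learned (V, V) adjacency term) have a different shape for V and V + 1 nodes, which is one reason why cross-dataset transfer requires retraining or adaptation.

```python
import numpy as np

def add_racket_node(A, hand_index):
    """Extend a (V, V) skeleton adjacency with one extra node (the racket)
    connected only to the hand joint; returns a (V + 1, V + 1) matrix."""
    V = A.shape[0]
    A_ext = np.zeros((V + 1, V + 1), dtype=A.dtype)
    A_ext[:V, :V] = A
    A_ext[V, hand_index] = 1   # racket -> hand
    A_ext[hand_index, V] = 1   # hand -> racket (undirected edge)
    return A_ext

chain = np.array([[0, 1, 0], [1, 0, 1], [0, 1, 0]])  # toy shoulder-elbow-hand chain
A_ext = add_racket_node(chain, hand_index=2)
```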

Limited kinematic diversity

The training corpora mainly include amateur and sub-elite players performing a short list of strokes under controlled conditions. Movement variations unseen in the training process may therefore lie outside the training distribution and elicit unpredictable model behaviors.

Computational constraints on edge hardware

Despite the relatively small model size (1.33 M parameters, 2.7 GFLOPs per sequence), real-time deployment on low-power devices still demands further optimization (pruning, quantization, or knowledge distillation). The latency measurements presented in Section 6 correspond to a high-end RTX 4090 GPU and do not directly translate to mobile or embedded platforms.
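The reported parameter count allows a quick estimate of the weight storage at different precisions: 1.33 M parameters occupy 5.32 MB at 32 bits and 1.33 MB at 8 bits. The sketch below computes these figures and shows a symmetric per-tensor int8 post-training quantization as one illustrative option. The helper names are hypothetical, and the estimate covers weights only, not activations or runtime buffers, so it says nothing about latency on a given device.

```python
import numpy as np

N_PARAMS = 1.33e6  # trainable parameters reported above

def weight_memory_mb(n_params, bits_per_weight):
    """Storage needed for the weights alone (activations and buffers excluded)."""
    return n_params * bits_per_weight / 8 / 1e6

def quantize_int8(w):
    """Symmetric per-tensor post-training quantisation of a weight array."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    return q.astype(np.float64) * scale

for bits in (32, 16, 8):
    print(f"{bits:>2}-bit weights: {weight_memory_mb(N_PARAMS, bits):.2f} MB")
```

Rounding bounds the reconstruction error of each weight by half the quantization step, which is why accuracy after quantization still has to be verified empirically.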

Explaining decisions beyond coarse Grad-CAM maps

Current interpretability relies on sequence-level Grad-CAM heatmaps, which highlight joint clusters but offer limited temporal resolution. Single-frame visualizations may appear misaligned with the heatmap because the latter integrates evidence across the entire stroke. Finer-grained temporal roll-out or attention-flow analyses may reveal how the network reasons over consecutive motion phases.
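A temporal roll-out of attention could complement the sequence-level Grad-CAM maps. The sketch follows the common attention roll-out recipe: each layer's attention map is averaged with the identity to account for residual connections, re-normalized, and multiplied with the maps of earlier layers. It assumes, hypothetically, that the Graph Self-Attention module exposes one head-averaged (T, T) temporal attention matrix per layer; the function name is illustrative.

```python
import numpy as np

def attention_rollout(attn_layers):
    """Attention roll-out over temporal self-attention.

    attn_layers: list of (T, T) row-stochastic attention matrices
                 (head-averaged), ordered from the first layer to the last.
    Returns a (T, T) matrix whose row i shows how strongly output frame i
    draws on each input frame.
    """
    T = attn_layers[0].shape[0]
    identity = np.eye(T)
    rollout = identity
    for A in attn_layers:
        A_res = 0.5 * A + 0.5 * identity                  # account for residual connections
        A_res = A_res / A_res.sum(axis=1, keepdims=True)  # keep rows stochastic
        rollout = A_res @ rollout                         # compose with earlier layers
    return rollout
```

Summing a row of the result over frame windows that correspond to stroke phases (preparation, swing, contact, follow-through) would indicate which phase dominates a given decision.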

In conclusion, the proposed FFGCAN model showed significant promise for tennis action recognition, outperforming the compared models. Future work should focus on improving data diversity, computational efficiency, and interpretability, to fully unlock its potential in real-world applications.

Despite the promising results, this study has several inherent limitations. The datasets used (THETIS, Tennis-Mocap, and 3DTennisDS) offer very limited variability in player skill level, environmental conditions, and game scenarios. Such restricted diversity might limit the generalization of the model to tennis scenarios at large. In addition, the simplification procedures used to align the Tennis-Mocap and 3DTennisDS datasets with the THETIS framework inevitably discarded some detailed features. Finally, beyond the GPU measurements in Section 6, inference times and computational resource requirements have not been analyzed for real-time or resource-constrained platforms, which could affect practical deployment.

To address these limitations, future studies could explore enhancing the dataset diversity by including a broader selection of player data, as well as testing the model on other motion capture datasets. Investigating methods to optimize computational efficiency through pruning, quantization, or knowledge distillation could also substantially enhance real-world applicability. Furthermore, enhancing interpretability beyond Grad-CAM by employing attention visualization methods would deepen our understanding of the underlying decision mechanisms.

The results show that the proposed model is highly effective and provide new insights into HAR for tennis movement classification.