Deep Learning-Enhanced Spectrogram Analysis for Anatomical Region Classification in Biomedical Signals

Abstract

1. Introduction

Key Contributions

- A deep learning framework for anatomical region classification of biomedical spectrograms is introduced, the first of its kind, with an emphasis on interpretability and clinical relevance.

- The fine-tuned ResNet50 model was adapted for grayscale spectrogram input, effectively capturing spectral and spatial features of palpation and percussion signals.

- The model achieved a high classification accuracy (93.37%), surpassing conventional models and demonstrating robustness in anatomical region-based biomedical signal classification.

- A comprehensive preprocessing pipeline incorporating spectrogram normalization, augmentation, and optimized feature extraction improves model generalization and robustness.
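As a rough illustration of the preprocessing steps named in the last contribution, the sketch below applies min-max normalization and a simple time-masking augmentation to a spectrogram stored as a list of rows (frequency bins by time frames). Both techniques, the function names `minmax_normalize` and `time_mask`, and the toy values are assumptions for illustration; the text here does not specify which normalization or augmentation was used.

```python
def minmax_normalize(spec):
    """Scale all values of a 2-D spectrogram into [0, 1]."""
    flat = [v for row in spec for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:  # constant input: avoid division by zero
        return [[0.0 for _ in row] for row in spec]
    return [[(v - lo) / (hi - lo) for v in row] for row in spec]


def time_mask(spec, start, width, fill=0.0):
    """Return a copy with time frames [start, start + width) set to `fill`."""
    return [
        [fill if start <= t < start + width else v for t, v in enumerate(row)]
        for row in spec
    ]


# Toy 2 x 4 spectrogram in decibels (hypothetical values).
spec_db = [[-80.0, -40.0, -20.0, 0.0],
           [-60.0, -60.0, -30.0, -10.0]]
normalized = minmax_normalize(spec_db)                # assumed normalization
augmented = time_mask(normalized, start=1, width=2)   # assumed augmentation
```

Masking is applied after normalization here so that the fill value of 0.0 corresponds to the quietest level in the normalized range.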

2. Related Work

2.1. Advancements in Deep Learning for Biomedical Signal Classification

2.2. Challenges in Existing Biomedical Signal Classification Methods

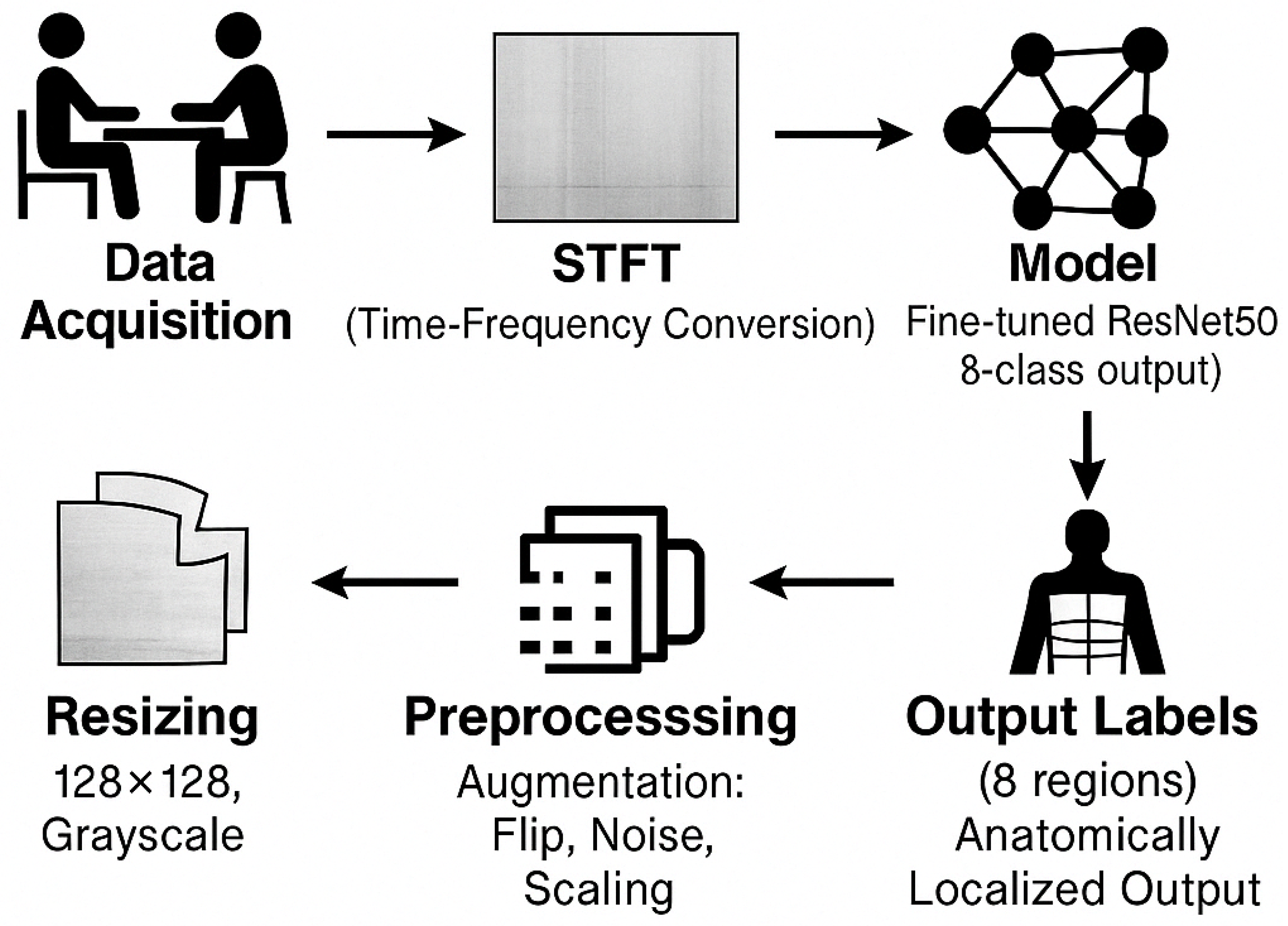

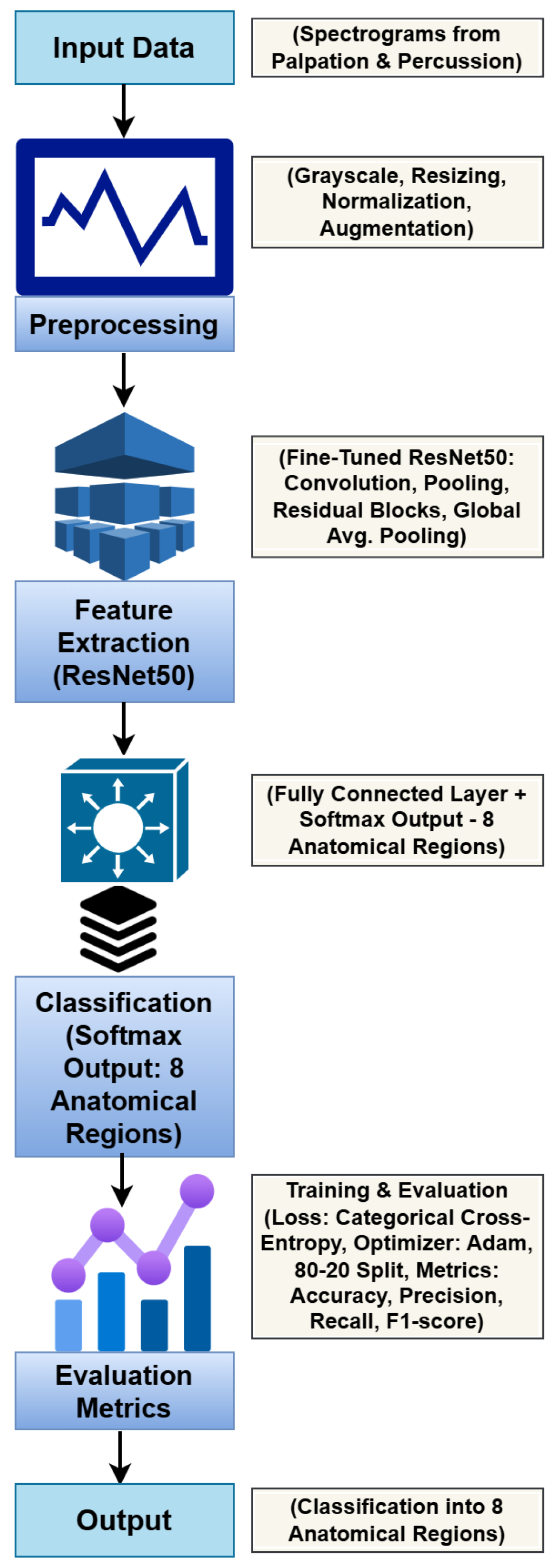

3. Methodology

3.1. Problem Statement

3.2. Dataset and Preprocessing

3.3. ResNet50-Based Deep Learning Model

Algorithm 1. Training Procedure for ResNet50 Model
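A minimal sketch of how a ResNet50 backbone might be set up for grayscale spectrograms and eight region classes in TensorFlow/Keras is given below. The input size, the channel-replication step, the optimizer, the learning rate, and the `build_model` helper are all assumptions for illustration, not the authors' configuration.

```python
NUM_REGIONS = 8               # regions R1..R8
INPUT_SHAPE = (224, 224, 1)   # assumed spectrogram size; 1 channel = grayscale


def build_model(num_classes=NUM_REGIONS, input_shape=INPUT_SHAPE):
    """Build a ResNet50 classifier that accepts single-channel input."""
    import tensorflow as tf  # imported lazily; requires TensorFlow to be installed

    inputs = tf.keras.Input(shape=input_shape)
    # Repeat the grayscale channel three times so the stock ResNet50 stem accepts it.
    x = tf.keras.layers.Concatenate(axis=-1)([inputs, inputs, inputs])
    base = tf.keras.applications.ResNet50(
        include_top=False,
        weights=None,  # pass "imagenet" instead to start from pretrained weights
        input_shape=input_shape[:2] + (3,),
    )
    x = base(x)
    x = tf.keras.layers.GlobalAveragePooling2D()(x)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(x)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(1e-4),   # assumed optimizer and rate
        loss="sparse_categorical_crossentropy",     # integer labels 0..7
        metrics=["accuracy"],
    )
    return model
```

Replicating the channel keeps the backbone compatible with three-channel pretrained weights; an alternative would be to replace the first convolution with a single-channel one, at the cost of losing its pretrained filters.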

3.4. Training and Optimization

3.5. Evaluation Metrics

3.6. Implementation Details

- Processor: Intel Core i9-13900K

- GPU: NVIDIA RTX 3060

- RAM: 128 GB

- Software: Python 3.8, TensorFlow 2.12.0, Scikit-learn 1.3.1, and Matplotlib 3.7.1

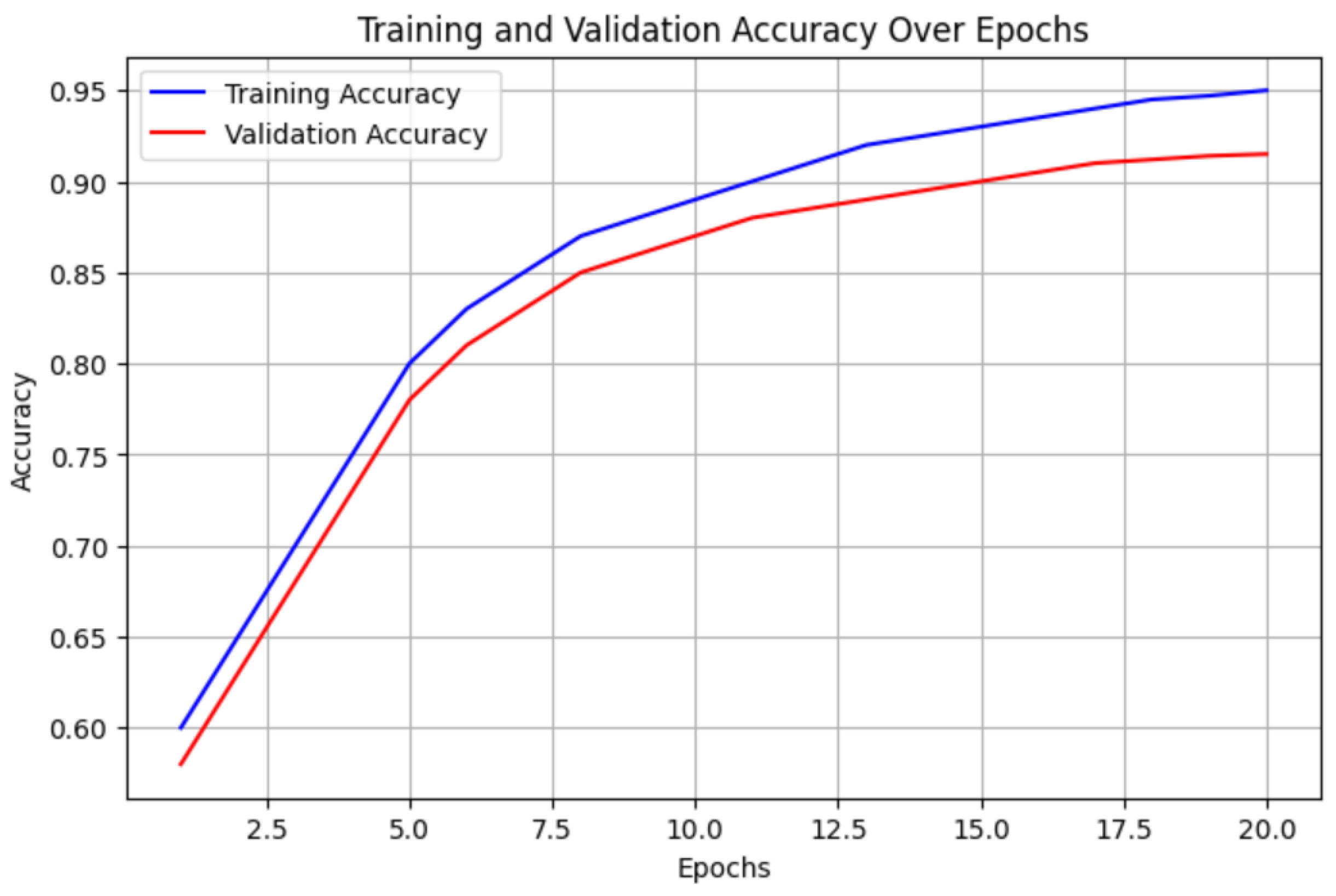

4. Results

4.1. Performance Metrics

4.2. Confusion Matrix Analysis

4.3. Comparison with Existing Methods

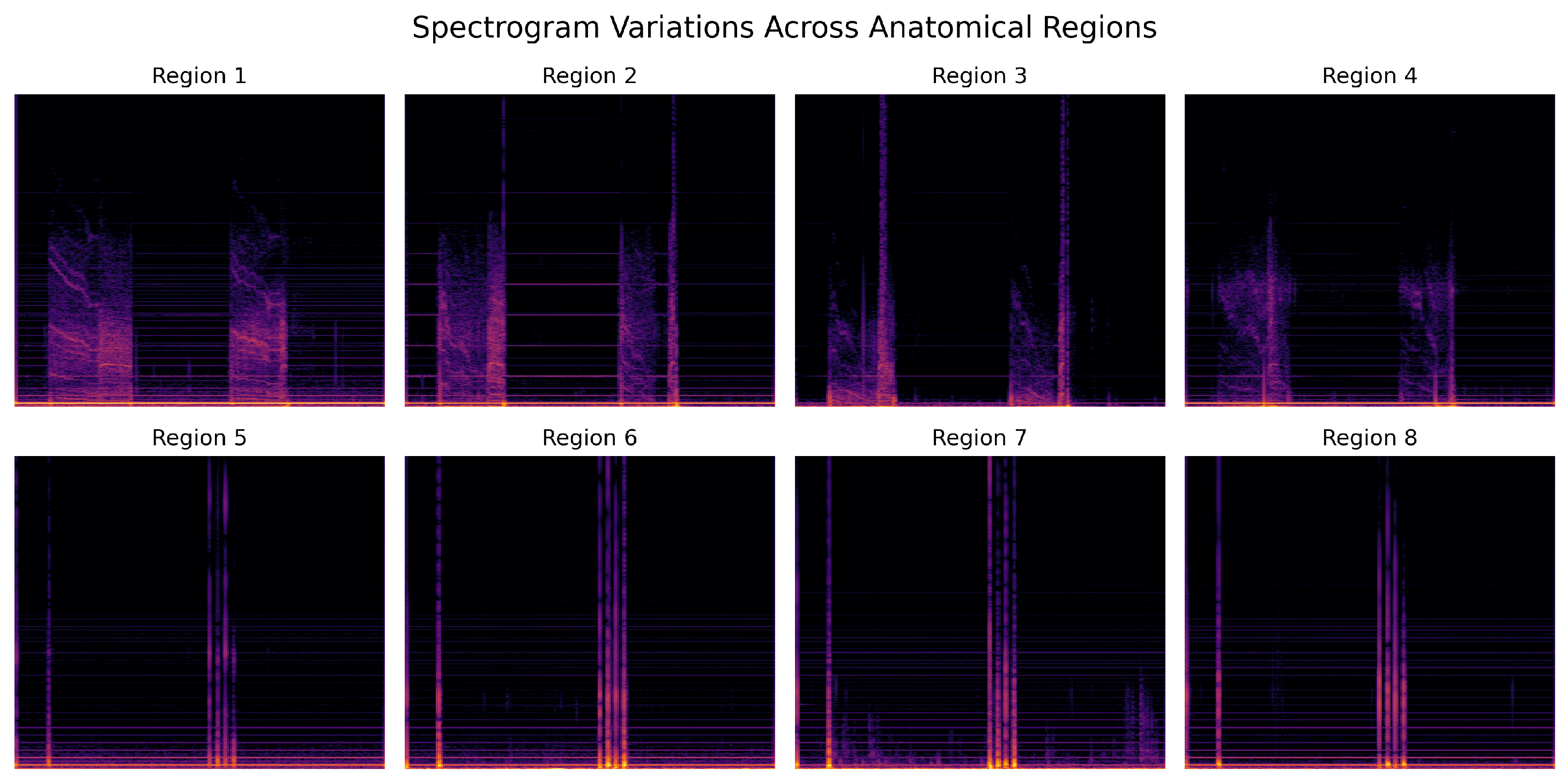

4.4. Spectrogram-Based Analysis Across Anatomical Regions

5. Discussion

5.1. Clinical Relevance and Comparison with Traditional Methods

- Remote Monitoring for Pulmonary Disorders: Patients with chronic obstructive pulmonary disease (COPD) or asthma may benefit from continuous, AI-assisted classification of auscultation and percussion data to identify deteriorating conditions [42].

- Telemedicine and Primary Care: Physicians in rural or underserved areas can use automated signal classification to support initial diagnoses and improve access to healthcare.

- Early Detection of Respiratory Infections: AI-based spectrogram analysis can assist in the early screening of pneumonia or post-COVID-19 lung complications, reducing hospital admissions by enabling timely intervention.

- Objective Assessment of Disease Progression: Longitudinal monitoring of spectrogram fluctuations across anatomical regions can facilitate the assessment of progressive lung disorders, such as interstitial lung disease or pulmonary fibrosis, offering quantitative insights that surpass subjective clinical evaluations [43].

5.2. Comparison with Existing Deep Learning Approaches

- More precise localization of abnormal signals, aiding in targeted diagnosis and treatment planning.

- Objective assessment of signal variability across different anatomical locations, reducing the inter-observer variability of manual auscultation [44].

- Potential integration with multi-modal diagnostic tools, where regional classification can enhance disease identification by correlating spatial signal variations with pathological findings [45].

5.3. Challenges and Limitations

5.4. Future Directions

- Real-time Deployment: Implementing the model on mobile and embedded systems for real-time signal classification in clinical and remote settings [54].

- Multi-modal Learning: Combining spectrogram-based classification with additional physiological data such as lung sound recordings, ECG, or respiratory motion signals to improve diagnostic accuracy [55].

- Explainable AI (XAI): Integrating model interpretation techniques to enhance transparency and support clinician trust in AI-driven decision-making [56].

- Federated Learning for Privacy-Preserving Training: Implementing decentralized learning techniques to train models across multiple institutions while preserving patient data privacy [57].
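To make the federated learning direction more concrete, the sketch below shows the aggregation step of federated averaging (FedAvg), one common way to combine locally trained parameters without sharing raw data. The choice of FedAvg, the `federated_average` helper, and the toy numbers are assumptions for illustration; the text does not commit to a particular scheme.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client parameter vectors, weighted by local sample count."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]


# Three hypothetical institutions with different amounts of local data.
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
sizes = [100, 100, 200]
global_weights = federated_average(weights, sizes)
print(global_weights)  # [3.5, 4.5]
```

Only the parameter vectors and sample counts leave each site, which is what allows patient recordings to stay local.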

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Williamson, E.J.; Walker, A.J.; Bhaskaran, K.; Bacon, S.; Bates, C.; Morton, C.; Curtis, H.J.; Mehrkar, A.; Evans, D.; Inglesby, P.; et al. Factors associated with COVID-19 related death using OpenSAFELY. Nature 2020, 584, 430–436.

- Miotto, R.; Wang, F.; Wang, S.; Jiang, X.; Dudley, J.T. Deep learning for healthcare: Review, opportunities and challenges. Brief. Bioinform. 2018, 19, 1236–1246.

- Shickel, B.; Tighe, P.J.; Bihorac, A.; Rashidi, P. Deep EHR: A survey of recent advances in deep learning techniques for electronic health record (EHR) analysis. IEEE J. Biomed. Health Inform. 2018, 22, 1589–1604.

- Singh, D.; Agusti, A.; Anzueto, A.; Barnes, P.J.; Bourbeau, J.; Celli, B.R.; Criner, G.J.; Frith, P.; Halpin, D.M.G.; Han, M.; et al. Chronic obstructive lung disease: The GOLD science committee report 2019. Eur. Respir. J. 2019, 53, 1900164.

- Wu, K.; Jelfs, B.; Ma, X.; Ke, R.; Tan, X.; Fang, Q. Weakly supervised lesion analysis with a CNN-based framework for COVID-19. Phys. Med. Biol. 2021, 66, 245027.

- Landge, K.; Kidambi, B.R.; Singhal, A.; Basha, A. Electronic stethoscopes: Brief review of clinical utility, evidence, and future implications. J. Pract. Cardiovasc. Sci. 2018, 4, 65.

- Palaniappan, R.; Sundaraj, K.; Sundaraj, S. A comparative study of the SVM and k-NN machine learning algorithms for the diagnosis of respiratory pathologies using pulmonary acoustic signals. BMC Bioinform. 2014, 15, 223.

- Sakai, T.; Kato, M.; Miyahara, S.; Kiyasu, S. Robust detection of adventitious lung sounds in electronic auscultation signals. In Proceedings of the 21st International Conference on Pattern Recognition (ICPR2012), Tsukuba, Japan, 11–15 November 2012; pp. 1993–1996.

- Oweis, R.J.; Abdulhay, E.W.; Khayal, A.; Awad, A. An alternative respiratory sounds classification system utilizing artificial neural networks. Biomed. J. 2015, 38, 152–161.

- Huang, D.; Wang, L.; Wang, W. A multi-center clinical trial for wireless stethoscope-based diagnosis and prognosis of children community-acquired pneumonia. IEEE Trans. Biomed. Eng. 2023, 70, 2215–2226.

- Emmanouilidou, D.; McCollum, E.D.; Park, D.E.; Elhilali, M. Adaptive noise suppression of pediatric lung auscultations with real applications to noisy clinical settings in developing countries. IEEE Trans. Biomed. Eng. 2015, 62, 2279–2288.

- Mills, G.A.; Nketia, T.A.; Oppong, I.A.; Kaufmann, E.E. Wireless digital stethoscope using Bluetooth technology. Int. J. Eng. Sci. Technol. 2012, 4, 3961–3969.

- Acharya, J.; Basu, A. Deep neural network for respiratory sound classification in wearable devices enabled by patient-specific model tuning. IEEE Trans. Biomed. Circuits Syst. 2020, 14, 535–544.

- Nguyen, T.; Pernkopf, F. Lung sound classification using co-tuning and stochastic normalization. IEEE Trans. Biomed. Eng. 2022, 69, 2872–2882.

- Pham, L.; McLoughlin, I.; Phan, H.; Tran, M.; Nguyen, T.; Palaniappan, R. Robust Deep Learning Framework for Predicting Respiratory Anomalies and Diseases. arXiv 2020, arXiv:2002.03894.

- Perna, D.; Tagarelli, A. Deep Auscultation: Predicting Respiratory Anomalies and Diseases via Recurrent Neural Networks. arXiv 2019, arXiv:1907.05708.

- Altan, G.; Kutlu, Y.; Pekmezci, A.Ö.; Nural, S. Deep learning with 3D-second order difference plot on respiratory sounds. Biomed. Signal Process. Control 2018, 45, 58–69.

- Pramono, R.X.A.; Bowyer, S.; Rodriguez-Villegas, E. Automatic adventitious respiratory sound analysis: A systematic review. PLoS ONE 2017, 12, e0177926.

- Palaniappan, R.; Sundaraj, K.; Ahamed, N.U.; Arjunan, A.; Sundaraj, S. Computer-based respiratory sound analysis: A systematic review. IETE Tech. Rev. 2013, 30, 248–256.

- Jácome, C.; Marques, A. Computerized respiratory sounds in patients with COPD: A systematic review. J. Chronic Obstr. Pulm. Dis. 2015, 12, 104–112.

- Rao, A.; Huynh, E.; Royston, T.J.; Kornblith, A.; Roy, S. Acoustic methods for pulmonary diagnosis. IEEE Rev. Biomed. Eng. 2018, 12, 221–239.

- Ansari, M.I.; Hasan, T. SpectNet: End-to-End Audio Signal Classification Using Learnable Spectrograms. arXiv 2022, arXiv:2211.09352.

- Chang, G.C.; Lai, Y.F. Performance evaluation and enhancement of lung sound recognition system in two real noisy environments. Comput. Methods Programs Biomed. 2010, 97, 141–150.

- Li, F.; Zhang, Z.; Wang, L.; Liu, W. Heart Sound Classification Based on Improved Mel-Frequency Spectral Coefficients and Deep Residual Learning. Front. Physiol. 2022, 13, 1084420.

- Bardou, D.; Zhang, K.; Ahmad, S.M. Lung sounds classification using convolutional neural networks. Artif. Intell. Med. 2018, 88, 58–69.

- Pasterkamp, H.; Kraman, S.S.; Wodicka, G.R. Respiratory sounds: Advances beyond the stethoscope. Am. J. Respir. Crit. Care Med. 1997, 156, 974–987.

- Bohadana, A.; Izbicki, G.; Kraman, S.S. Fundamentals of lung auscultation. N. Engl. J. Med. 2014, 370, 744–751.

- Sharma, A.K.; Nandal, A.; Dhaka, A.; Alhudhaif, A.; Polat, K.; Sharma, A. Diagnosis of cervical cancer using CNN deep learning model with transfer learning approaches. Biomed. Signal Process. Control 2025, 105, 107639.

- Olson, D.E.; Hammersley, J.R. Mechanisms of lung sound generation. Semin. Respir. Crit. Care Med. 1985, 6, 171–179.

- Sarkar, M.; Madabhavi, I.; Niranjan, N.; Dogra, M. Auscultation of the respiratory system. Ann. Thorac. Med. 2015, 10, 158–168.

- Ryu, S.; Kim, S.-C.; Won, D.-O.; Bang, C.S.; Koh, J.-H.; Jeong, I. iApp: An Autonomous Inspection, Auscultation, Percussion, and Palpation Platform. Front. Physiol. 2022, 13, 825612.

- Zhou, G.; Chen, Y.; Chien, C. On the Analysis of Data Augmentation Methods for Spectral Imaged Based Heart Sound Classification Using Convolutional Neural Networks. BMC Med. Inform. Decis. Mak. 2022, 22, 226.

- Bao, X.; Xu, Y.; Lam, H.-K.; Trabelsi, M.; Chihi, I.; Sidhom, L.; Kamavuako, E.N. Time-Frequency Distributions of Heart Sound Signals: A Comparative Study Using Convolutional Neural Networks. arXiv 2022, arXiv:2208.03128.

- Kraman, S.S. Vesicular (normal) lung sounds: How are they made, where do they come from, and what do they mean? Semin. Respir. Crit. Care Med. 1985, 6, 183–191.

- Monaco, A.; Amoroso, N.; Bellantuono, L.; Pantaleo, E.; Tangaro, S.; Bellotti, R. Multi-time-scale features for accurate respiratory sound classification. Appl. Sci. 2020, 10, 8606.

- Jayalakshmy, S.; Sudha, G.F. Scalogram-based prediction model for respiratory disorders using optimized convolutional neural networks. Artif. Intell. Med. 2020, 103, 101809.

- Becker, K.; Scheffer, C.; Blanckenberg, M.; Diacon, A. Analysis of adventitious lung sounds originating from pulmonary tuberculosis. In Proceedings of the 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Osaka, Japan, 3–7 July 2013; pp. 4334–4337.

- Kahya, Y.P.; Guler, E.C.; Sahin, S. Respiratory disease diagnosis using lung sounds. In Proceedings of the 19th Annual International Conference of the IEEE Engineering in Medicine and Biology Society. ‘Magnificent Milestones and Emerging Opportunities in Medical Engineering’ (Cat. No. 97CH36136), Chicago, IL, USA, 30 October–2 November 1997; Volume 5, pp. 2051–2053.

- Cinyol, F.; Baysal, U.; Köksal, D.; Babaoğlu, E.; Ulaşlı, S.S. Incorporating support vector machine to the classification of respiratory sounds by convolutional neural network. Biomed. Signal Process. Control 2023, 79, 104093.

- Gemmeke, J.F.; Ellis, D.P.; Freedman, D.; Jansen, A.; Lawrence, W.; Moore, R.C.; Plakal, M.; Ritter, M. Audio set: An ontology and human-labeled dataset for audio events. In Proceedings of the 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), New Orleans, LA, USA, 5–9 March 2017; pp. 776–780.

- Rocha, B.M.; Filos, D.; Mendes, L.; Serbes, G.; Ulukaya, S.; Kahya, Y.P.; Jakovljevic, N.; Loncar-Turukalo, T.; Vogiatzis, I.M.; Perantoni, E.; et al. An open access database for the evaluation of respiratory sound classification algorithms. Physiol. Meas. 2019, 40, 035001.

- Fraiwan, M.; Fraiwan, L.; Khassawneh, B.; Ibnian, A. A dataset of lung sounds recorded from the chest wall using an electronic stethoscope. Data Brief 2021, 35, 106913.

- Hsu, F.S.; Huang, S.R.; Huang, C.W.; Huang, C.J.; Cheng, Y.R.; Chen, C.C.; Hsiao, J.; Chen, C.-W.; Chen, L.-C.; Lai, Y.-C.; et al. Benchmarking of eight recurrent neural network variants for breath phase and adventitious sound detection on a self-developed open-access lung sound database-HF_Lung_V1. PLoS ONE 2021, 16, e0254134.

- Hsu, F.S.; Huang, S.R.; Huang, C.W.; Cheng, Y.R.; Chen, C.C.; Hsiao, J.; Chen, C.-W.; Lai, F. An update on a progressively expanded database for automated lung sound analysis. arXiv 2021, arXiv:2102.04062.

- Altan, G.; Kutlu, Y.; Garbİ, Y.; Pekmezci, A.Ö.; Nural, S. Multimedia respiratory database (RespiratoryDatabase@TR): Auscultation sounds and chest X-rays. Nat. Eng. Sci. 2017, 2, 59–72.

- Roy, A.; Satija, U. A novel mel-spectrogram snippet representation learning framework for severity detection of chronic obstructive pulmonary diseases. IEEE Trans. Instrum. Meas. 2023, 72, 1–11.

- Emmanouilidou, D.; McCollum, E.D.; Park, D.E.; Elhilali, M. Computerized lung sound screening for pediatric auscultation in noisy field environments. IEEE Trans. Biomed. Eng. 2018, 65, 1564–1574.

- Pouyani, M.F.; Vali, M.; Ghasemi, M.A. Lung sound signal denoising using discrete wavelet transform and artificial neural network. Biomed. Signal Process. Control 2022, 72, 103329.

- Haider, N.S.; Behera, A.K. Respiratory sound denoising using sparsity-assisted signal smoothing algorithm. Biocybern. Biomed. Eng. 2022, 42, 481–493.

- Singh, D.; Singh, B.K.; Behera, A.K. Comparative analysis of lung sound denoising techniques. In Proceedings of the 2020 First International Conference on Power, Control and Computing Technologies (ICPC2T), Raipur, India, 3–5 January 2020; pp. 406–410.

- Syahputra, M.; Situmeang, S.; Rahmat, R.; Budiarto, R. Noise reduction in breath sound files using wavelet transform-based filter. In IOP Conference Series: Materials Science and Engineering; IOP Publishing: Semarang, Indonesia, 2017; Volume 190, p. 012040.

- Sangeetha, B.; Periyasamy, R. Performance metrics analysis of adaptive threshold empirical mode decomposition denoising method for suppression of noise in lung sounds. In Proceedings of the 2021 Seventh International Conference on Bio Signals, Images, and Instrumentation (ICBSII), Chennai, India, 25–27 March 2021; pp. 1–6.

- Gupta, S.; Agrawal, M.; Deepak, D. Gammatonegram-based triple classification of lung sounds using deep convolutional neural network with transfer learning. Biomed. Signal Process. Control 2021, 70, 102947.

- Haider, N.S. Respiratory sound denoising using empirical mode decomposition, hurst analysis, and spectral subtraction. Biomed. Signal Process. Control 2021, 64, 102313.

- Nersisson, R.; Noel, M.M. Heart sound and lung sound separation algorithms: A review. J. Med. Eng. Technol. 2017, 41, 13–21.

- Ayari, F.; Ksouri, M.; Alouani, A.T. Lung sound extraction from mixed lung and heart sounds fastica algorithm. In Proceedings of the 2012 16th IEEE Mediterranean Electrotechnical Conference, Yasmine Hammamet, Tunisia, 25–28 March 2012; pp. 339–342.

- Lozano, M.; Fiz, J.A.; Jané, R. Automatic differentiation of normal and continuous adventitious respiratory sounds using ensemble empirical mode decomposition and instantaneous frequency. IEEE J. Biomed. Health Inform. 2016, 20, 486–497.

- Datta, S.; Choudhury, A.D.; Deshpande, P.; Bhattacharya, S.; Pal, A. Automated lung sound analysis for detecting pulmonary abnormalities. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Republic of Korea, 11–15 July 2017; pp. 4594–4598.

- Gavriely, N.; Palti, Y.; Alroy, G. Spectral characteristics of normal breath sounds. J. Appl. Physiol. 1981, 50, 307–314.

| Study | Methodology | Dataset | Key Findings | Limitations |
|---|---|---|---|---|
| Palaniappan et al. [19] | Systematic review of respiratory sound analysis | Multiple datasets | Discussed computer-based classification approaches | No experimental validation of classification accuracy |
| Jácome & Marques [20] | Analysis of computerized respiratory sounds in COPD patients | Clinical COPD sound dataset | Identified potential of computerized auscultation for COPD detection | Lack of standardized classification techniques |
| Rao et al. [21] | Acoustic signal processing methods for pulmonary diagnosis | Public respiratory dataset | Explored various feature extraction techniques for lung sounds | Limited focus on deep learning-based classification |
| Pasterkamp et al. [26] | Review on advances in respiratory sound analysis | Multiple studies reviewed | Discussed acoustic characteristics and clinical importance of lung sounds | No implementation of machine learning models |
| Bohadana et al. [27] | Fundamentals of lung auscultation, including classification methods | No dataset used (review study) | Provided insights into the interpretation of auscultation signals | Lacks experimental validation of classification approaches |
| Sharma et al. [28] | CNN deep learning model with transfer learning | Cervical cancer diagnosis dataset | Improved classification accuracy using CNN | Not focused on biomedical sound classification |

| Region | Training Samples | Testing Samples |
|---|---|---|
| R1 | 1824 | 456 |
| R2 | 1824 | 456 |
| R3 | 1824 | 456 |
| R4 | 1824 | 456 |
| R5 | 1824 | 456 |
| R6 | 1824 | 456 |
| R7 | 1824 | 456 |
| R8 | 1824 | 456 |
| Total | 14,592 | 3648 |
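The per-region counts in the table are consistent with a balanced 80/20 train/test split. The short check below reproduces the totals and the test fraction from the tabulated values; the variable names are illustrative only.

```python
REGIONS = [f"R{i}" for i in range(1, 9)]  # R1..R8
TRAIN_PER_REGION = 1824
TEST_PER_REGION = 456

total_train = TRAIN_PER_REGION * len(REGIONS)
total_test = TEST_PER_REGION * len(REGIONS)
# Fraction of each region's samples held out for testing.
test_fraction = TEST_PER_REGION / (TRAIN_PER_REGION + TEST_PER_REGION)

print(total_train, total_test, test_fraction)  # 14592 3648 0.2
```

Because every region contributes the same number of samples, overall accuracy and the unweighted mean of per-region results should agree closely.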

| Region | Accuracy (%) | Precision | Recall | F1-Score |
|---|---|---|---|---|
| R1 | 92.5 | 0.93 | 0.92 | 0.92 |
| R2 | 94.1 | 0.94 | 0.93 | 0.93 |
| R3 | 93.8 | 0.94 | 0.94 | 0.94 |
| R4 | 92.9 | 0.92 | 0.93 | 0.92 |
| R5 | 94.3 | 0.95 | 0.94 | 0.94 |
| R6 | 93.1 | 0.93 | 0.92 | 0.92 |
| R7 | 92.7 | 0.92 | 0.91 | 0.91 |
| R8 | 93.5 | 0.93 | 0.93 | 0.93 |
| Overall | 93.37 | 0.93 | 0.93 | 0.93 |
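As a consistency check on the table, the F1-score can be recomputed from each row's precision and recall using the standard harmonic-mean definition, F1 = 2PR / (P + R). The sketch below does this for the tabulated values and also takes the unweighted mean of the per-region accuracies; it works only from the rounded figures shown above, so small discrepancies are expected.

```python
# region: (accuracy %, precision, recall, reported F1), copied from the table
rows = {
    "R1": (92.5, 0.93, 0.92, 0.92),
    "R2": (94.1, 0.94, 0.93, 0.93),
    "R3": (93.8, 0.94, 0.94, 0.94),
    "R4": (92.9, 0.92, 0.93, 0.92),
    "R5": (94.3, 0.95, 0.94, 0.94),
    "R6": (93.1, 0.93, 0.92, 0.92),
    "R7": (92.7, 0.92, 0.91, 0.91),
    "R8": (93.5, 0.93, 0.93, 0.93),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


recomputed = {r: round(f1_score(p, rec), 2) for r, (_, p, rec, _) in rows.items()}
reported = {r: f1 for r, (_, _, _, f1) in rows.items()}
mean_accuracy = sum(acc for acc, _, _, _ in rows.values()) / len(rows)

print(recomputed == reported)    # True
print(round(mean_accuracy, 2))   # 93.36
```

The recomputed F1-scores match the reported ones, and the mean per-region accuracy (about 93.36) is close to the reported overall 93.37, a gap that is presumably due to rounding of the per-region values.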

| Study | Methodology | Dataset | Accuracy (%) | Key Findings | Limitations |
|---|---|---|---|---|---|
| Sharma et al. [28] | CNN deep learning model with transfer learning | Cervical cancer diagnosis dataset | 94.2 | Demonstrated effectiveness of deep learning in medical imaging classification | Study is specific to cervical cancer, not respiratory sound analysis |
| Ryu et al. [31] | Deep multi-modal learning with auscultation, percussion, and palpation | Collected multi-modal abdominal sound dataset (8 regions) | 89.46 | Achieved high accuracy in classifying abdominal divisions | Requires further testing on unseen patient data |
| Monaco et al. [35] | Multi-time-scale feature extraction for respiratory sound classification | Publicly available respiratory sound datasets | 91.3 | Improved feature representation for robust respiratory disease classification | Dependence on high-quality labeled datasets for training |
| Jayalakshmy et al. [36] | Scalogram-based CNN for respiratory disorder classification | Respiratory sound datasets from clinical sources | 92.5 | Demonstrated efficiency of scalogram-based feature extraction for classification | Limited generalization for unseen respiratory conditions |
| Becker et al. [37] | Analysis of adventitious lung sounds for tuberculosis detection | Clinical lung sound recordings from TB patients | 87.8 | Effective identification of TB-related acoustic anomalies | Requires further validation with diverse population groups |
| Proposed Model | Fine-tuned ResNet50 on spectrogram dataset | Spectrogram dataset (8 anatomical regions) | 93.37 | High classification accuracy, robust feature extraction | Requires further testing on unseen patient data |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Karim, A.; Ryu, S.; Jeong, I.c. Deep Learning-Enhanced Spectrogram Analysis for Anatomical Region Classification in Biomedical Signals. Appl. Sci. 2025, 15, 5313. https://doi.org/10.3390/app15105313
