Improvement of Dung Beetle Optimization Algorithm Application to Robot Path Planning

Abstract

1. Introduction

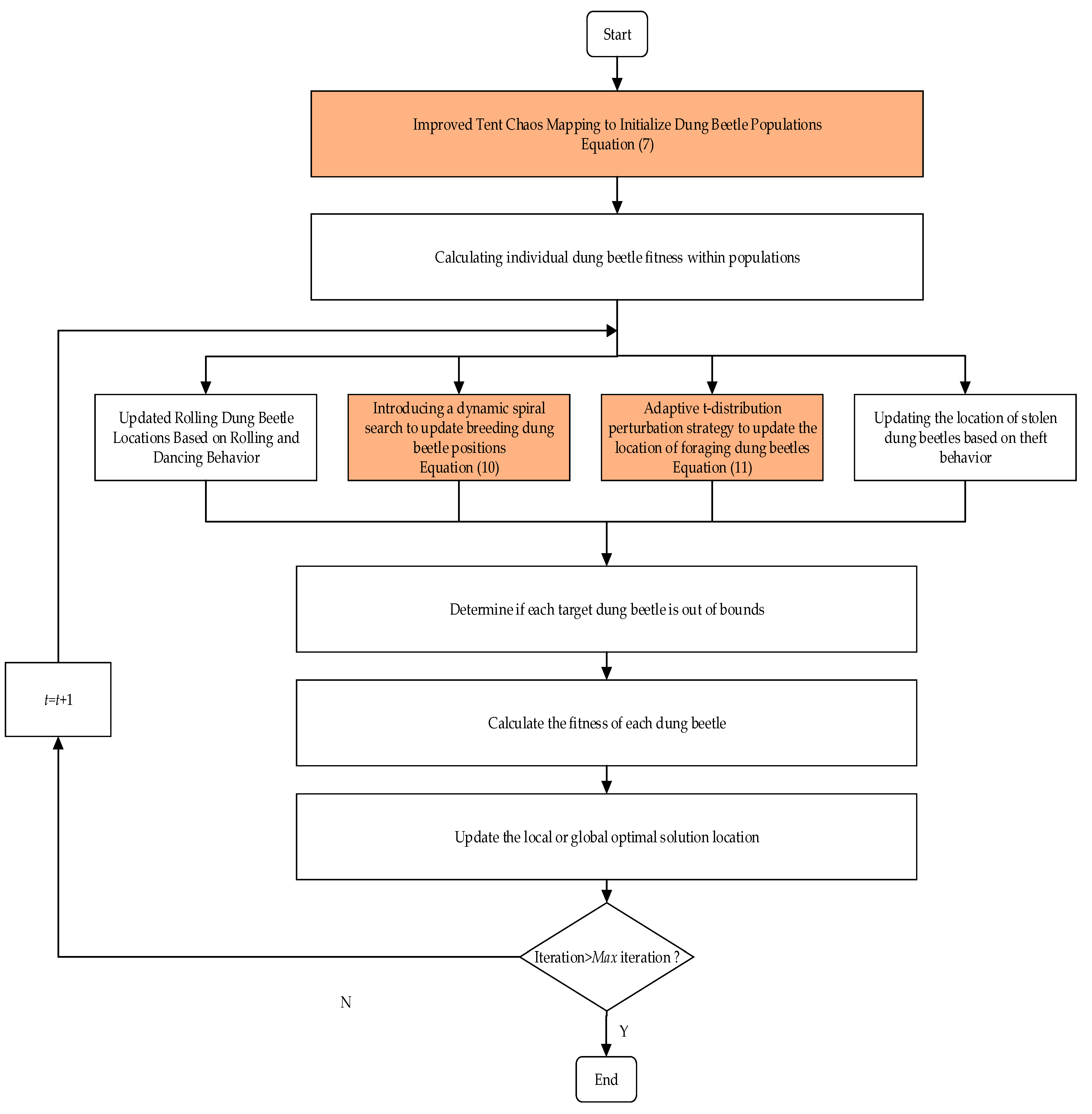

- The TSDBO algorithm incorporates an improved Tent chaotic mapping in the initialization phase to enhance the distribution of dung beetles in the solution space. A dynamic spiral search strategy is introduced during the reproduction phase to boost global search capability and efficiency. In the foraging phase, an adaptive t-distribution perturbation strategy helps the small dung beetles escape local optima and accelerates the convergence rate.

- To assess TSDBO, twelve benchmark functions were chosen from the CEC2005 set. In this evaluation, TSDBO performs better overall than the compared algorithms.

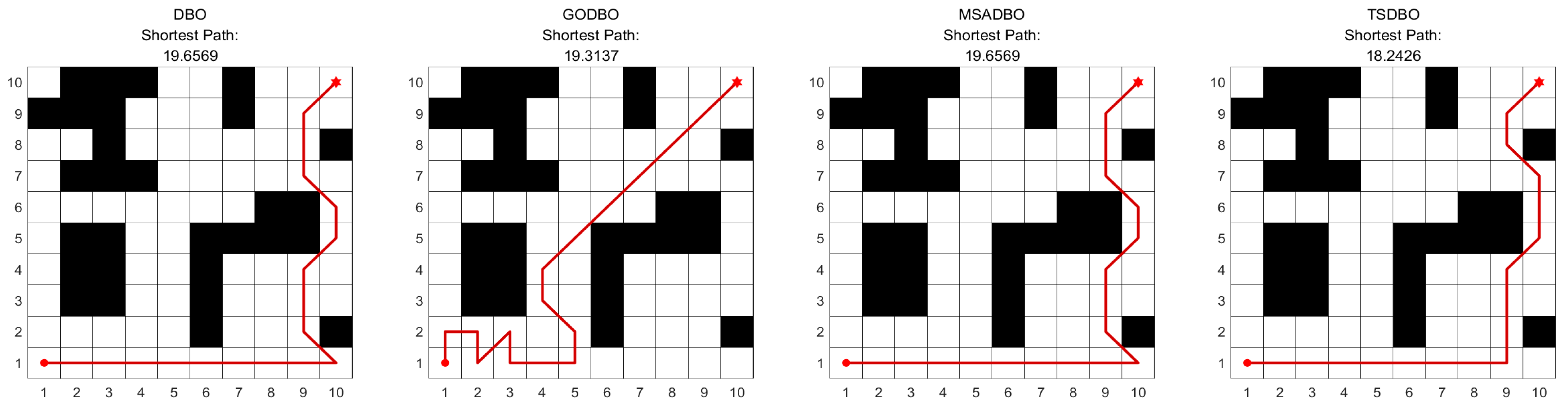

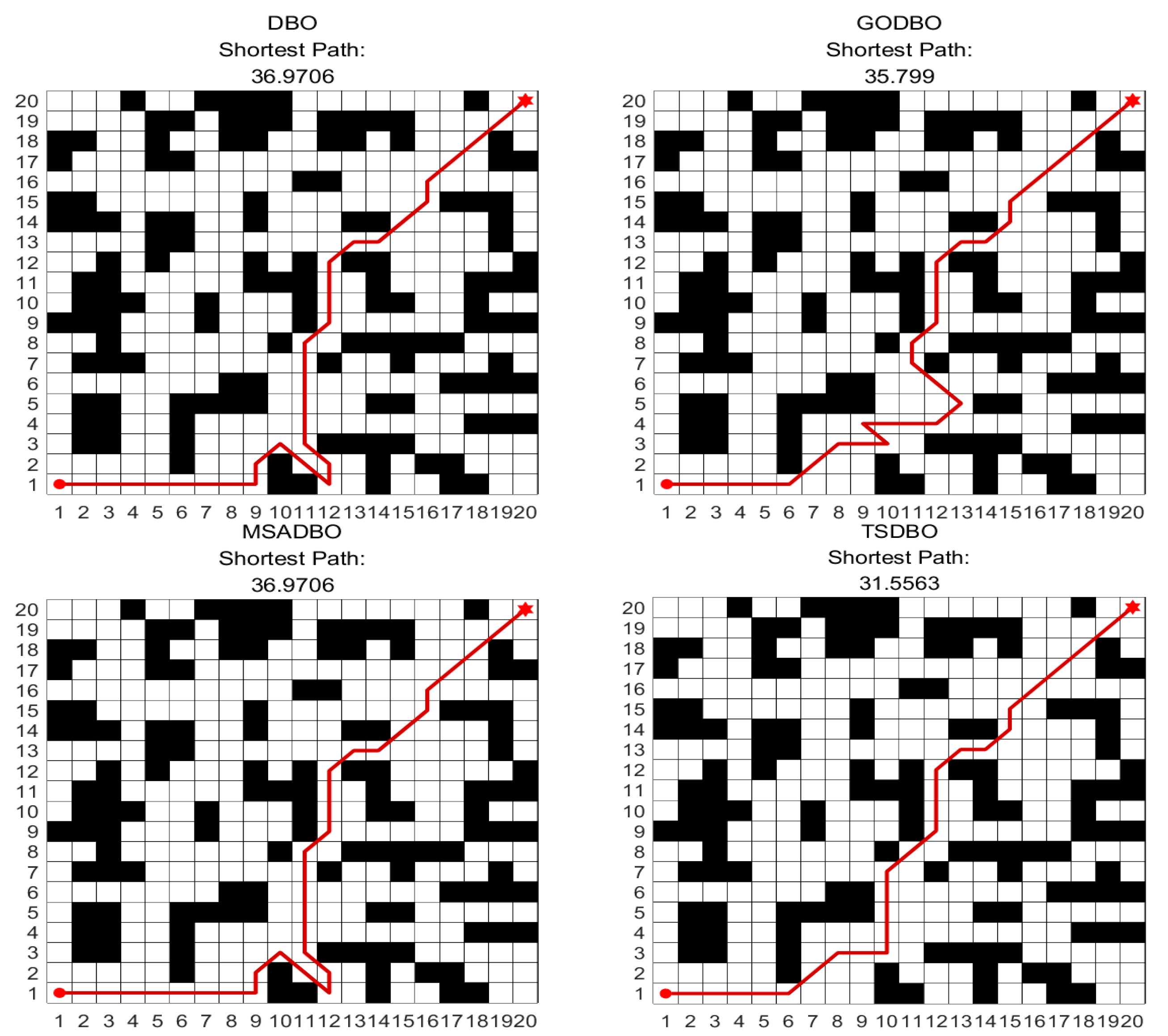

- TSDBO demonstrated its efficacy in generating shorter paths in comparison to alternative algorithms when applied to the 2D robot path planning challenge.

2. Related Works and Method

2.1. Related Works

2.2. Method

- (1) Rolling behavior

- (2) Reproductive behavior

- (3) Foraging behavior

- (4) Stealing behavior

3. The Proposed Algorithm

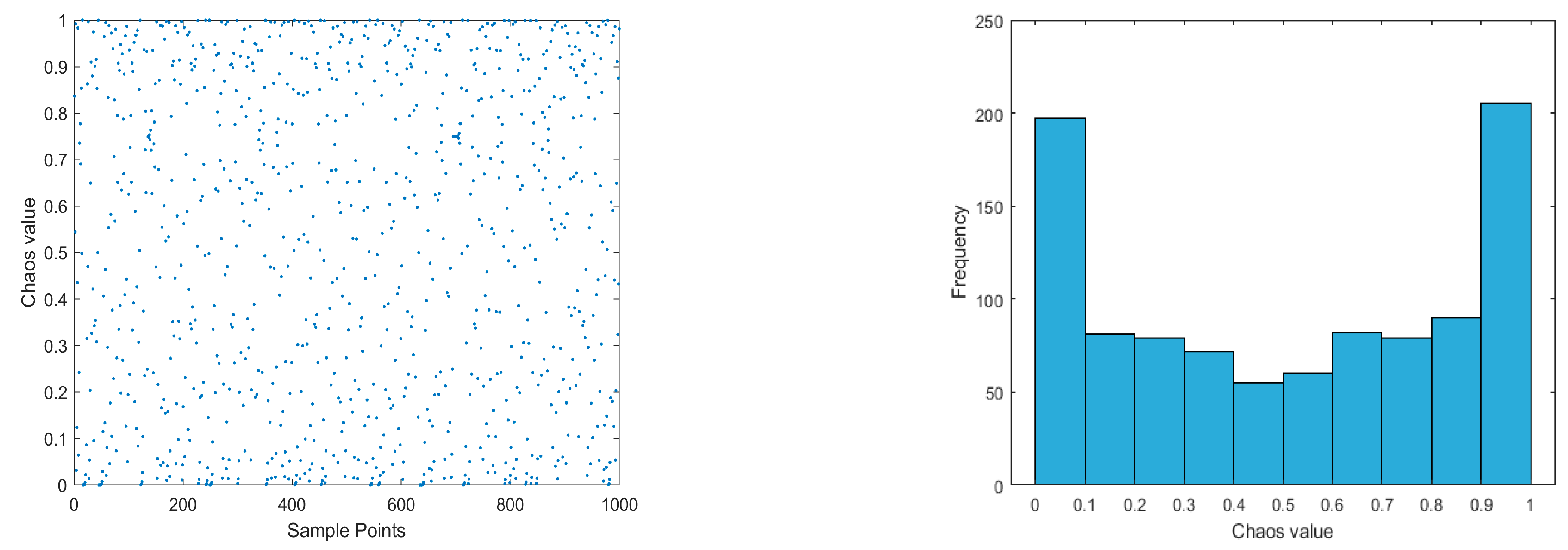

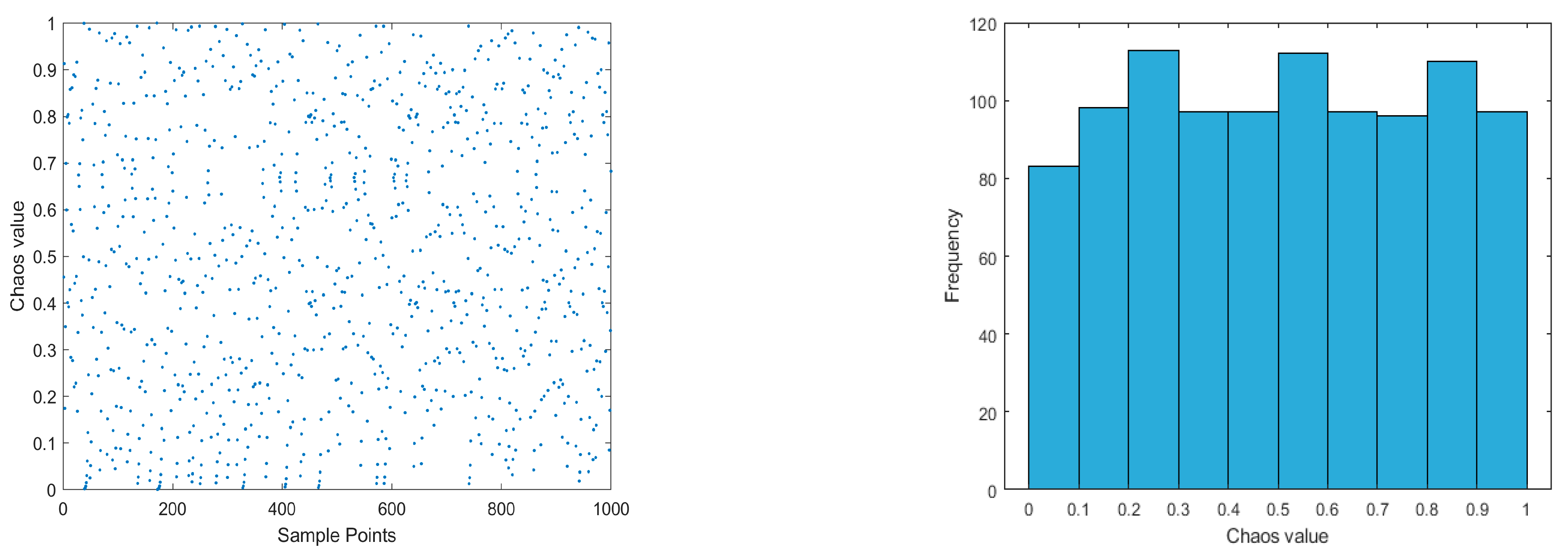

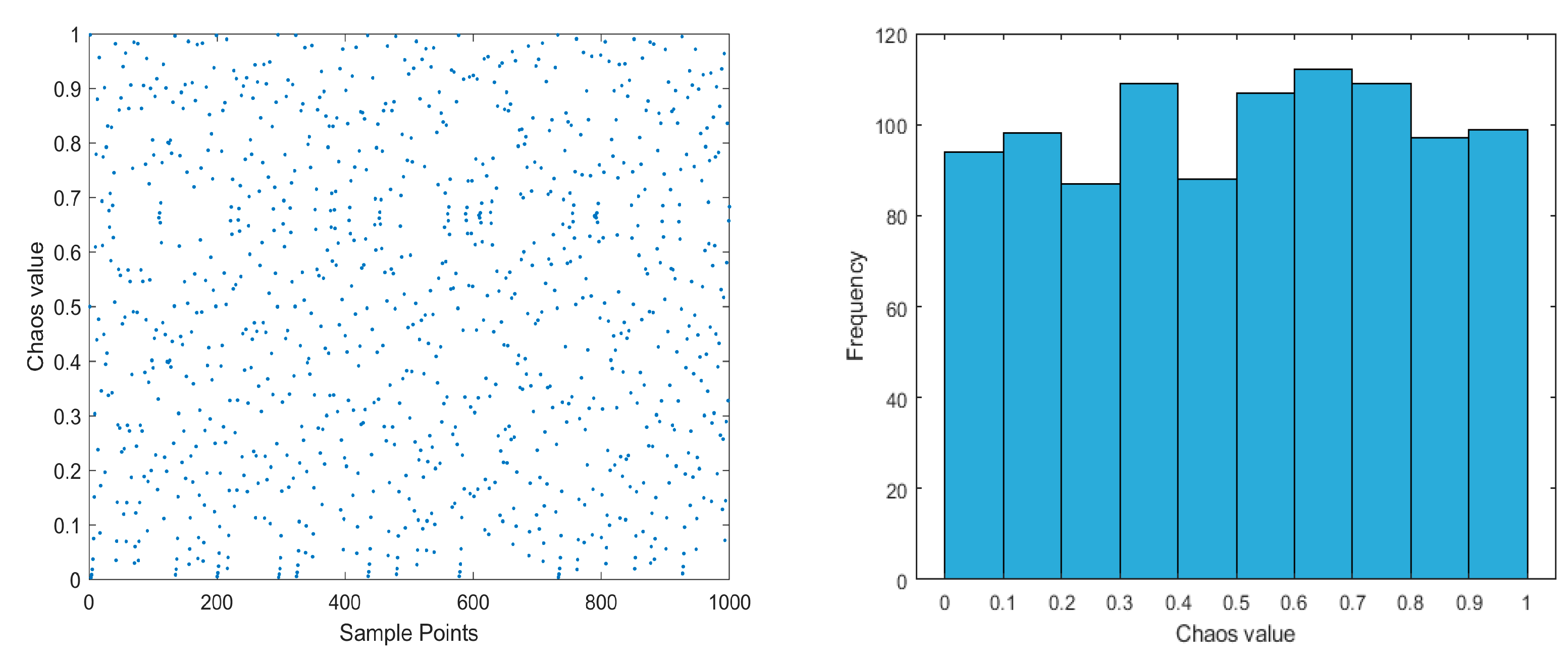

3.1. Improved Tent Chaos Mapping Strategy
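The introduction states that TSDBO initializes the population with an improved Tent chaotic mapping so that the dung beetles are spread more evenly over the solution space. The exact improved mapping is not reproduced in this text, so the following is only a minimal sketch that swaps in a plain skew Tent map. The breakpoint `a = 0.7`, the reseeding guard, and the names `tent_map` and `tent_init` are assumptions made for illustration.

```python
import random

def tent_map(z, a=0.7):
    """One step of a skew Tent map on [0, 1]; a is the breakpoint (assumed value)."""
    return z / a if z < a else (1.0 - z) / (1.0 - a)

def tent_init(n, dim, lower, upper, seed=0):
    """Build n positions of length dim by scaling a chaotic sequence into [lower, upper]."""
    rng = random.Random(seed)
    z = rng.uniform(0.01, 0.99)  # random starting point of the chaotic orbit
    population = []
    for _ in range(n):
        position = []
        for _ in range(dim):
            z = tent_map(z)
            # Guard (assumption): reseed if the orbit hits the boundary values 0 or 1.
            if z <= 0.0 or z >= 1.0:
                z = rng.uniform(0.01, 0.99)
            position.append(lower + z * (upper - lower))
        population.append(position)
    return population

# Example: 30 individuals in 5 dimensions on [-100, 100].
population = tent_init(n=30, dim=5, lower=-100.0, upper=100.0)
```

A skew breakpoint is used here because the symmetric Tent map with slope 2 collapses to zero after a few dozen steps in binary floating point.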

3.2. Dynamic Spiral Search Strategy
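The introduction describes a dynamic spiral search added to the reproduction phase to strengthen global search. The update formula itself is not reproduced in this text. As a rough illustration only, the sketch below borrows the logarithmic spiral of the Whale Optimization Algorithm and lets the spiral shape parameter change with the iteration count. The linear schedule for `b` and the name `spiral_step` are hypothetical and should not be read as the paper's equation.

```python
import math
import random

def spiral_step(x, best, t, t_max, rng):
    """Move x around best along a logarithmic spiral whose shape depends on t."""
    b = 1.0 - t / t_max          # hypothetical schedule: wide spiral early, tight late
    l = rng.uniform(-1.0, 1.0)   # random position along the spiral
    factor = math.exp(b * l) * math.cos(2.0 * math.pi * l)
    # WOA-style spiral: new = best + e^(b*l) * cos(2*pi*l) * |best - x|
    return [bi + factor * abs(bi - xi) for xi, bi in zip(x, best)]

rng = random.Random(0)
candidate = spiral_step([0.0, 0.0], [1.0, 1.0], t=5, t_max=10, rng=rng)
```

An individual that already sits on the best position stays there, because the distance term is zero.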

3.3. Adaptive T-Distribution Perturbation Strategy
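The introduction states that an adaptive t-distribution perturbation helps the small dung beetles escape local optima in the foraging phase. The paper's formula is not reproduced in this text. A common scheme, assumed here, uses the iteration number as the degrees of freedom: with few degrees of freedom the Student-t distribution has heavy, Cauchy-like tails (large jumps), and with many it approaches a Gaussian (fine local steps). The names `student_t` and `t_perturb` are hypothetical.

```python
import math
import random

def student_t(df, rng):
    """Draw one Student-t sample with df degrees of freedom (df >= 1)."""
    z = rng.gauss(0.0, 1.0)
    chi2 = rng.gammavariate(df / 2.0, 2.0)  # chi-square(df) equals Gamma(shape=df/2, scale=2)
    return z / math.sqrt(chi2 / df)

def t_perturb(x, t, rng):
    """Perturb each coordinate of x; degrees of freedom = iteration t (assumption)."""
    return [xi + xi * student_t(t, rng) for xi in x]

rng = random.Random(0)
early = t_perturb([1.0, -2.0, 3.0], t=1, rng=rng)    # heavy tails, exploratory
late = t_perturb([1.0, -2.0, 3.0], t=500, rng=rng)   # near-Gaussian, local
```

In practice the perturbed candidate would be kept only if its fitness improves, which matches the greedy update in Algorithm 1.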

3.4. Algorithm Steps and Algorithm Flow

| Algorithm 1 The framework of the TSDBO algorithm |

| Require: The maximum iterations TMAX, the size of individuals in the population N, obtain an initialized population X of dung beetles using the improved Tent chaos mapping strategy. |

| Ensure: Optimal position and its fitness value |

| 1: while do |

| 2: for to Number of rolling dung beetles do |

| 3: |

| 4: if then |

| 5: Update rolling dung beetle location by Equation (1). |

| 6: else |

| 7: Update the rolling dung beetle location when it encounters an obstacle by Equation (2). |

| 8: end if |

| 9: end for |

| 10: The value of the nonlinear convergence factor is calculated by . |

| 11: for to Number of breeding dung beetles do |

| 12: Update the breeding dung beetles by Equation (10). |

| 13: end for |

| 14: for to Number of Foraging dung beetles do |

| 15: Update the foraging dung beetles by Equation (11). |

| 16: end for |

| 17: for to Number of Stealing dung beetles do |

| 18: Update the stealing dung beetles by Equation (5). |

| 19: end for |

| 20: Determine if each target dung beetle is out of bounds. |

| 21: Calculate the fitness of each dung beetle. |

| 22: if the newly generated position is better than before then |

| 23: Update it. |

| 24: end if |

| 25: |

| 26: end while |

| 27: return and its fitness value |
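Steps 20 to 24 of Algorithm 1 (bounds check, fitness evaluation, and keeping a new position only if it is better) can be sketched as follows. This is a generic skeleton under stated assumptions, not the TSDBO implementation: out-of-bounds coordinates are clamped to the boundary, the problem is treated as minimization, and `random_walk` is a placeholder move that stands in for the role-specific update equations, which are not reproduced in this text.

```python
import random

def clamp(position, lower, upper):
    """Step 20 (assumed handling): pull out-of-bounds coordinates back to the boundary."""
    return [min(max(v, lower), upper) for v in position]

def optimize(fitness, population, lower, upper, t_max, propose, seed=0):
    """Loop: propose -> clamp -> evaluate -> keep only if better (minimization)."""
    rng = random.Random(seed)
    scores = [fitness(p) for p in population]
    for t in range(1, t_max + 1):
        best = population[scores.index(min(scores))]
        for i, current in enumerate(population):
            candidate = clamp(propose(current, best, t, t_max, rng), lower, upper)
            score = fitness(candidate)          # step 21
            if score < scores[i]:               # steps 22-24: greedy replacement
                population[i], scores[i] = candidate, score
    i_best = scores.index(min(scores))
    return population[i_best], scores[i_best]

def random_walk(x, best, t, t_max, rng):
    """Placeholder move, NOT one of the paper's update equations."""
    return [xi + rng.uniform(-1.0, 1.0) * (abs(bi - xi) + 1.0) for xi, bi in zip(x, best)]

def sphere(x):
    """Simple test objective with minimum 0 at the origin."""
    return sum(v * v for v in x)

rng0 = random.Random(1)
start = [[rng0.uniform(-100.0, 100.0) for _ in range(3)] for _ in range(10)]
initial_best = min(sphere(p) for p in start)
best_pos, best_val = optimize(sphere, start, -100.0, 100.0, 50, random_walk)
```

Because a position is replaced only when its fitness improves, the best value in the population can never get worse from one iteration to the next.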

4. Results of the Experiment and Discussion

4.1. Description of Test Functions

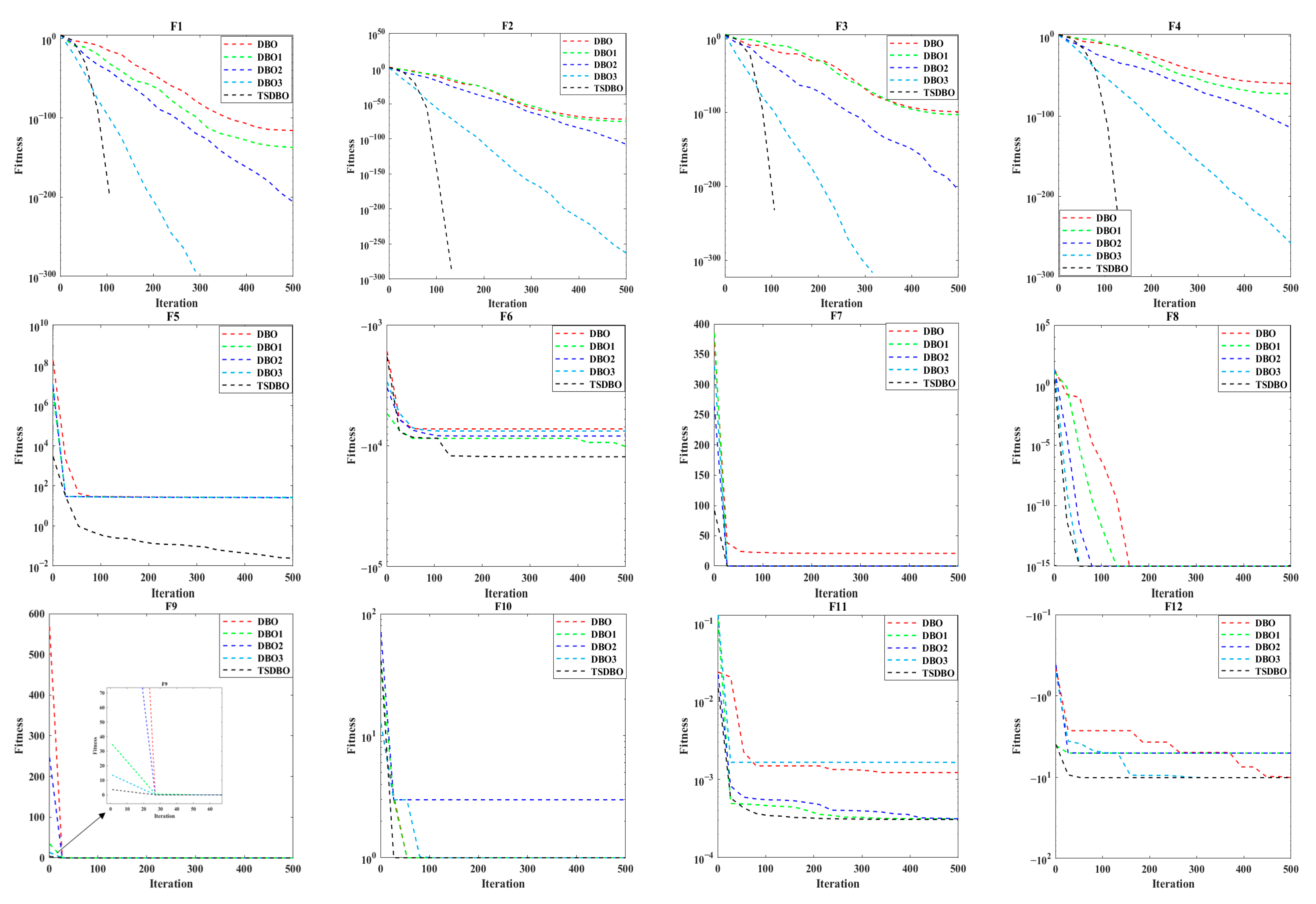

4.2. Analysis of the Effectiveness of Improvement Strategies

- (1) Adding the improved Tent chaotic mapping to initialize the population in DBO to obtain DBO1;

- (2) Introducing the dynamic spiral search strategy in DBO to obtain DBO2;

- (3) Applying the adaptive t-distribution perturbation strategy in DBO to obtain DBO3.

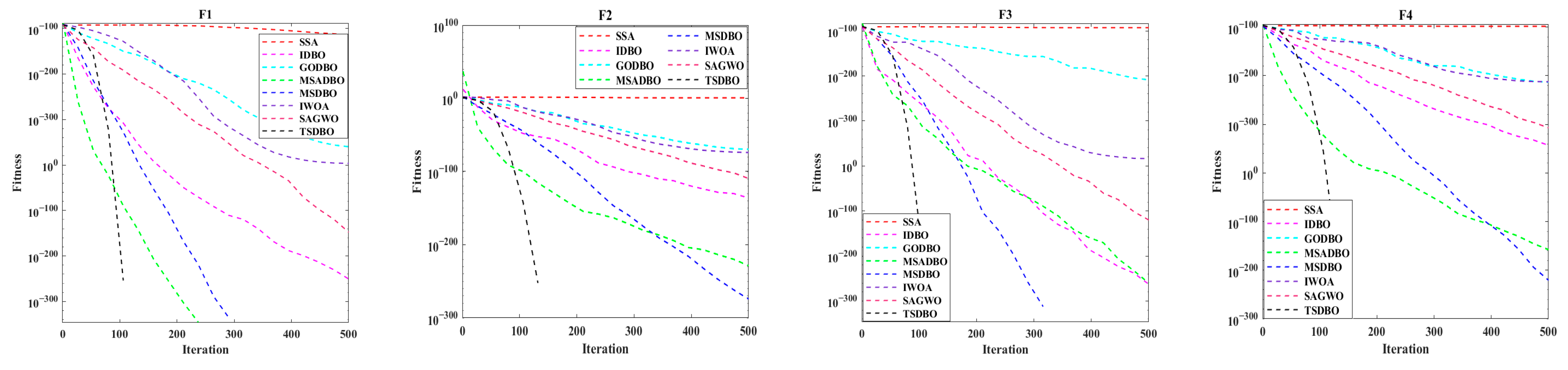

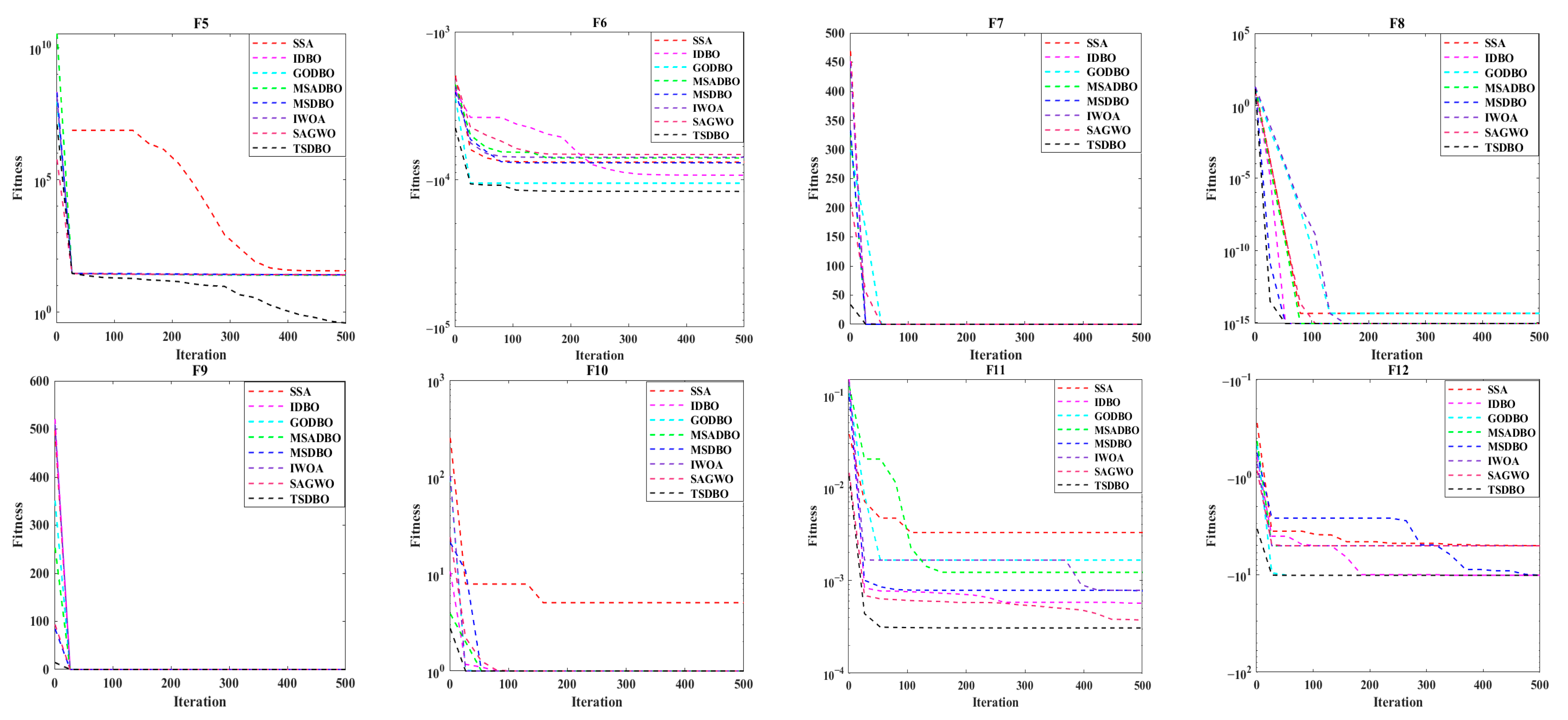

4.3. Comparison with Other Algorithms

- (1) Comparison between TSDBO and SSA: SSA is highly adaptive and finds solutions on all 12 test functions. However, its best values are far from those of TSDBO, and its mean values are much larger. The standard deviation of SSA is also larger than that of TSDBO. Because the standard deviation reflects the stability of an algorithm, a large value indicates that the results vary widely between runs. Taken together, these results indicate that TSDBO also has good adaptive performance.

- (2) Comparison between TSDBO and IDBO: IDBO finds the optimal value on F7 and F9. The data show that, although IDBO improves the performance of the algorithm, it easily falls into local optima and does not match TSDBO.

- (3) Comparison between TSDBO and GODBO: The performance of GODBO on the 12 test functions is average, and on some functions it even degrades the results (negative optimization). It has no competitive advantage over TSDBO.

- (4) Comparison between TSDBO and MSADBO: On F1 and F3, the average convergence accuracy of TSDBO reaches the theoretical optimal solution, whereas that of MSADBO does so only on F1. On F5, where the optimum is harder to find, MSADBO, like most of the other algorithms, falls into a local optimum. In contrast, the best value of TSDBO approaches the theoretical optimum of 0, and its average convergence accuracy reaches 2.34E−01, which is better than that of MSADBO. On the multi-peak functions F6–F9, TSDBO shows superior performance in searching for the optimal solution, which indicates that it is good at both escaping local optima and searching globally. On the hybrid benchmark test functions F10–F12, TSDBO and MSADBO reach the same best values, but TSDBO is better in both average convergence accuracy and standard deviation. Overall, TSDBO's optimization performance on the test functions is stronger than that of MSADBO.

- (5) Comparison between TSDBO and MSDBO: Compared with IDBO and GODBO, MSDBO performs better overall and finds the theoretical best value on F7 and F10. However, its Mean and Std are worse than those of TSDBO, and its results on the other functions are less accurate than those of TSDBO.

- (6) Comparison between TSDBO and IWOA: IWOA performs similarly to GODBO on the test functions and has no competitive advantage over TSDBO.

- (7) Comparison between TSDBO and SAGWO: SAGWO outperforms GODBO and IWOA on F1–F4, performs well on F6, and finds the theoretical optimal value on F7, F9, and F10. However, its Mean and Std do not match those of TSDBO, and its overall performance is not as good.
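The comparisons above rest on three indices per function: Best, Mean, and Std of the final fitness over repeated independent runs. A minimal sketch of how such indices can be computed is shown below. The run values are made up for illustration and are not taken from the paper, and the use of the population standard deviation is an assumption, since the text does not say which estimator was used.

```python
import statistics

def summarize(final_values):
    """Best, mean, and standard deviation of final fitness values (minimization)."""
    return {
        "best": min(final_values),                # smaller is better
        "mean": statistics.mean(final_values),
        "std": statistics.pstdev(final_values),   # population std (assumption)
    }

runs = [0.25, 0.20, 0.30, 0.25]  # made-up final fitness values from four runs
stats = summarize(runs)
```

A small Std means the runs end close to one another, which is why the text treats it as an indicator of stability.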

4.4. Convergence Curve Analysis

- (1) For functions F1–F4, the TSDBO algorithm is the most accurate and the fastest. On F1 and F3 it reaches the theoretical optimal values, and the curves show how many iterations it needs to do so.

- (2) For function F5, while the other algorithms become trapped in local optima, the convergence curve shows that the TSDBO algorithm outperforms them in both speed and accuracy. Moreover, the best value achieved by the TSDBO algorithm is close to the theoretical optimal value.

- (3) The convergence curves of functions F6–F9 show that the TSDBO algorithm converges faster while maintaining high accuracy on the multi-peak benchmark functions. Unlike the comparison algorithms, it avoids repeated entrapment in local optima, which demonstrates its strong ability to escape local solutions.

- (4)

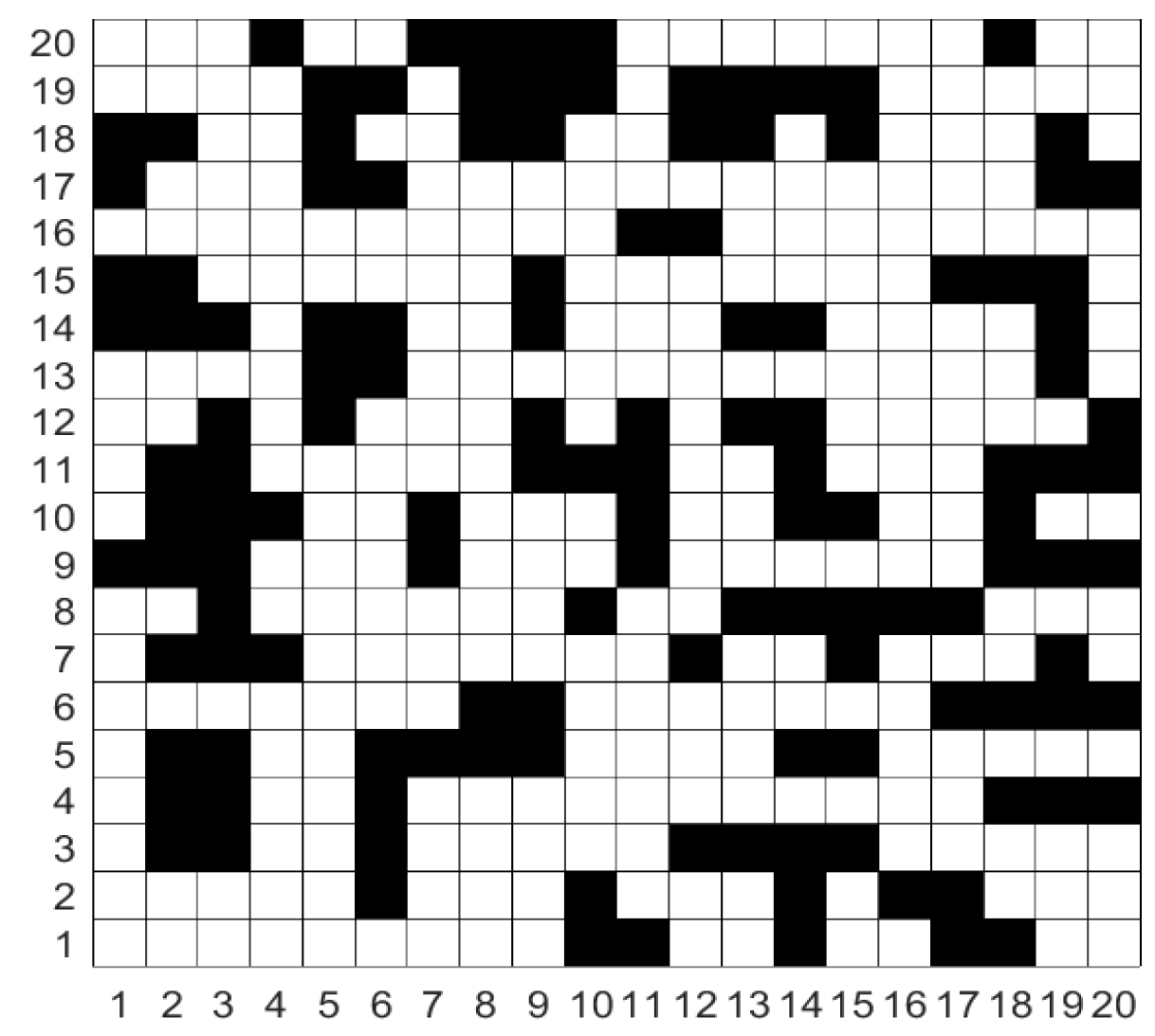

4.5. Robot Path Planning Based on TSDBO Algorithm

4.5.1. Environmental Modeling

4.5.2. Constraints and Single-Objective Fitness Functions

- (1) Path continuity. The robot must not move backwards. That is, if the robot is at (X1, Y1) at some point after leaving the start, its next position (X2, Y2) must satisfy X2 > X1 or Y2 > Y1.

- (2) Obstacle and boundary constraints. The planned path must not cross the map boundary or pass through grid cells that contain obstacles.

- (3) Shortest path. Among the paths that satisfy conditions (1) and (2), the shortest one is selected as the optimal path.
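The three conditions above can be combined into a single-objective fitness function. The sketch below is one possible reading under stated assumptions: the map is a grid with `0` for free cells and `1` for obstacles, a path is a list of `(x, y)` cell coordinates, infeasible paths receive an infinite penalty, and only the waypoint cells are checked (not the segments between them). The function names are hypothetical and the paper's actual fitness function is not reproduced in this text.

```python
import math

def path_length(path):
    """Sum of Euclidean distances between consecutive waypoints."""
    return sum(math.dist(p, q) for p, q in zip(path, path[1:]))

def is_feasible(path, grid):
    """Check conditions (1) and (2) on a grid where 1 marks an obstacle."""
    rows, cols = len(grid), len(grid[0])
    for x, y in path:
        if not (0 <= x < cols and 0 <= y < rows):   # boundary constraint
            return False
        if grid[y][x] == 1:                         # obstacle constraint
            return False
    for (x1, y1), (x2, y2) in zip(path, path[1:]):
        if not (x2 > x1 or y2 > y1):                # path continuity
            return False
    return True

def fitness(path, grid):
    """Condition (3): path length if feasible, otherwise an infinite penalty (assumption)."""
    return path_length(path) if is_feasible(path, grid) else math.inf

grid = [
    [0, 0, 0],
    [0, 1, 0],   # obstacle in the centre cell (x=1, y=1)
    [0, 0, 0],
]
ok_path = [(0, 0), (1, 0), (2, 1), (2, 2)]   # goes around the obstacle
blocked_path = [(0, 0), (1, 1), (2, 2)]      # passes through the obstacle
ok_cost = fitness(ok_path, grid)
blocked_cost = fitness(blocked_path, grid)
```

An optimizer that minimizes this function then prefers the shortest path among the feasible ones, since any infeasible path evaluates to infinity.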

4.5.3. Two-Dimensional Map Model of 10 × 10

4.5.4. Two-Dimensional Map Model of 20 × 20

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Function | D (dimension) | S (search range) | Theoretical optimum |
|---|---|---|---|
| F1 | 30 | [−100, 100] | 0 |
| F2 | 30 | [−10, 10] | 0 |
| F3 | 30 | [−100, 100] | 0 |
| F4 | 30 | [−100, 100] | 0 |
| F5 | 30 | [−30, 30] | 0 |
| F6 | 30 | [−500, 500] | −12,569.5 |
| F7 | 30 | [−5.12, 5.12] | 0 |
| F8 | 30 | [−32, 32] | 0 |
| F9 | 30 | [−600, 600] | 0 |
| F10 | 2 | [−65.536, 65.536] | 1 |
| F11 | 4 | [−5, 5] | 0.0003075 |
| F12 | 4 | [0, 10] | −10.1532 |

| Function | Index | DBO | DBO1 | DBO2 | DBO3 | TSDBO |
|---|---|---|---|---|---|---|
| F1 | Best | 5.00E−173 | 1.30E−179 | 1.11E−248 | 0 | 0 |
| | Mean | 7.19E−108 | 6.50E−116 | 4.32E−206 | 0 | 0 |
| | Std | 3.94E−107 | 3.56E−115 | 0 | 0 | 0 |
| F2 | Best | 1.66E−81 | 4.27E−84 | 4.57E−121 | 9.59E−280 | 2.48E−288 |
| | Mean | 2.04E−54 | 1.53E−65 | 2.74E−105 | 9.23E−245 | 1.17E−245 |
| | Std | 1.12E−53 | 8.36E−54 | 1.50E−104 | 0 | 0 |
| F3 | Best | 4.35E−142 | 3.21E−156 | 1.18E−224 | 0 | 0 |
| | Mean | 2.82E−88 | 4.75E−85 | 3.87E−182 | 0 | 0 |
| | Std | 1.02E−87 | 2.58E−84 | 0 | 0 | 0 |
| F4 | Best | 5.06E−88 | 7.92E−83 | 6.28E−120 | 1.30E−285 | 1.38E−289 |
| | Mean | 3.21E−49 | 8.81E−56 | 1.10E−103 | 4.31E−237 | 4.08E−244 |
| | Std | 1.76E−48 | 4.70E−55 | 5.92E−103 | 0 | 0 |
| F5 | Best | 2.47E+01 | 2.46E+01 | 2.40E+01 | 2.42E+01 | 3.11E−03 |
| | Mean | 2.51E+01 | 2.51E+01 | 2.44E+01 | 2.48E+01 | 3.52E−01 |
| | Std | 2.20E−01 | 1.81E−01 | 1.67E−01 | 1.81E−01 | 1.02E−01 |
| F6 | Best | −1.19E+04 | −1.25E+04 | −1.25E+04 | −1.25E+04 | −1.26E+04 |
| | Mean | −9.32E+03 | −1.06E+04 | −9.52E+03 | −9.41E+03 | −1.20E+04 |
| | Std | 1.35E+03 | 1.60E+03 | 1.45E+03 | 1.57E+03 | 7.60E+02 |
| F7 | Best | 0 | 0 | 0 | 0 | 0 |
| | Mean | 1.99E+01 | 1.26E+00 | 0 | 0 | 0 |
| | Std | 4.80E+01 | 6.03E+00 | 0 | 0 | 0 |
| F8 | Best | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 |
| | Mean | 8.88E−16 | 1.01E−15 | 8.88E−16 | 8.88E−16 | 8.88E−16 |
| | Std | 0 | 6.94E−16 | 0 | 0 | 0 |
| F9 | Best | 0 | 0 | 0 | 0 | 0 |
| | Mean | 2.74E−04 | 3.24E−03 | 0 | 0 | 0 |
| | Std | 1.53E−03 | 1.62E−02 | 0 | 0 | 0 |
| F10 | Best | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 |
| | Mean | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 |
| | Std | 1.17E−16 | 1.01E−16 | 9.22E−17 | 1.30E−16 | 1.17E−18 |
| F11 | Best | 3.07E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 |
| | Mean | 7.36E−04 | 7.84E−04 | 6.19E−04 | 7.97E−04 | 3.37E−04 |
| | Std | 3.36E−04 | 3.94E−04 | 3.93E−04 | 4.67E−04 | 6.40E−05 |
| F12 | Best | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 |
| | Mean | −6.77E+00 | −7.09E+00 | −6.92E+00 | −9.98E+00 | −1.02E+01 |
| | Std | 2.43E+00 | 2.54E+00 | 2.50E+00 | 1.37E+00 | 6.45E−15 |

| Function | Index | SSA | IDBO | GODBO | MSADBO | MSDBO | IWOA | SAGWO | TSDBO |
|---|---|---|---|---|---|---|---|---|---|
| F1 | Best | 1.34E−08 | 1.43E−150 | 0 | 0 | 2.89E−201 | 5.92E−179 | 2.35E−225 | 0 |
| | Mean | 2.24E−08 | 3.56E−114 | 2.34E−164 | 0 | 3.02E−185 | 1.85E−111 | 1.34E−214 | 0 |
| | Std | 6.79E−09 | 1.95E−113 | 0 | 0 | 0 | 1.01E−110 | 0 | 0 |
| F2 | Best | 2.60E−02 | 1.19E−81 | 8.42E−28 | 2.71E−268 | 2.64E−232 | 1.32E−84 | 2.01E−126 | 6.30E−279 |
| | Mean | 8.12E−01 | 4.47E−65 | 5.43E−13 | 2.97E−171 | 2.85E−198 | 5.95E−61 | 1.04E−107 | 1.40E−245 |
| | Std | 7.24E−01 | 2.43E−64 | 1.71E−12 | 0 | 0 | 3.21E−60 | 5.43E−107 | 0 |
| F3 | Best | 9.76E+01 | 2.78E−93 | 1.13E−21 | 2.45E−173 | 2.63E−162 | 3.44E−148 | 1.75E−226 | 0 |
| | Mean | 5.47E+02 | 1.48E−46 | 8.54E−11 | 8.81E−111 | 7.89E−132 | 5.70E−27 | 5.21E−178 | 0 |
| | Std | 4.56E+02 | 7.28E−46 | 3.69E−10 | 4.83E−110 | 5.62E−142 | 3.12E−26 | 0 | 0 |
| F4 | Best | 2.60E+00 | 4.19E−73 | 5.85E−19 | 9.06E−259 | 8.54E−245 | 7.02E−75 | 2.98E−126 | 2.30E−286 |
| | Mean | 6.59E+00 | 3.74E−14 | 2.31E−10 | 3.24E−181 | 6.78E−201 | 6.14E−46 | 2.21E−107 | 9.50E−236 |
| | Std | 2.51E+00 | 1.62E−13 | 1.23E−09 | 0 | 9.97E−145 | 3.36E−45 | 1.19E−106 | 0 |
| F5 | Best | 2.42E+01 | 2.50E+01 | 2.50E+01 | 2.48E+01 | 2.46E+01 | 2.44E+01 | 3.94E−01 | 6.89E−02 |
| | Mean | 1.44E+02 | 2.58E+01 | 2.56E+01 | 2.55E+01 | 2.57E+01 | 2.50E+01 | 2.36E+01 | 2.34E−01 |
| | Std | 3.50E+02 | 3.47E−01 | 2.30E−01 | 3.33E−01 | 3.50E−01 | 2.17E−01 | 4.38E+00 | 7.04E−02 |
| F6 | Best | −9.01E+03 | −1.03E+04 | −1.23E+04 | −1.23E+04 | −1.22E+04 | −1.14E+04 | −1.25E+04 | −1.26E+04 |
| | Mean | −7.57E+03 | −8.86E+03 | −8.34E+03 | −9.98E+03 | −9.99E+03 | −9.13E+03 | −1.00E+04 | −1.20E+04 |
| | Std | 7.28E+02 | 1.32E+03 | 1.52E+03 | 1.73E+03 | 1.05E+03 | 1.25E+03 | 1.32E+03 | 9.07E+02 |
| F7 | Best | 1.39E+01 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | Mean | 3.87E+01 | 1.56E+00 | 1.85E+00 | 9.45E−01 | 0 | 4.88E+00 | 0 | 0 |
| | Std | 1.43E+01 | 3.24E+00 | 1.83E+00 | 5.63E−01 | 0 | 2.14E+01 | 0 | 0 |
| F8 | Best | 2.89E−05 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 |
| | Mean | 1.97E+00 | 8.88E−16 | 4.32E−15 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 | 8.88E−16 |
| | Std | 9.20E−01 | 1.00E−31 | 6.49E−16 | 1.00E−31 | 9.32E−30 | 4.35E−28 | 9.36E−23 | 0 |
| F9 | Best | 9.82E−06 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| | Mean | 1.06E−02 | 1.94E−03 | 2.05E−04 | 1.98E−04 | 1.95E−04 | 2.01E−04 | 2.55E−04 | 0 |
| | Std | 1.03E−02 | 4.58E−03 | 5.21E−03 | 6.32E−04 | 5.36E−04 | 3.26E−03 | 5.32E−04 | 0 |
| F10 | Best | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 | 9.98E−01 |
| | Mean | 1.23E+00 | 2.57E+00 | 9.98E−01 | 1.20E+00 | 1.54E+00 | 1.06E+00 | 1.26E+00 | 9.98E−01 |
| | Std | 5.01E−01 | 2.92E+00 | 4.52E−01 | 6.05E−01 | 5.32E−05 | 3.62E−01 | 3.26E−02 | 1.37E−15 |
| F11 | Best | 3.08E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 | 3.07E−04 |
| | Mean | 1.50E−03 | 4.69E−04 | 1.53E−03 | 4.27E−04 | 5.32E−04 | 6.61E−04 | 3.48E−04 | 3.18E−04 |
| | Std | 3.58E−03 | 2.04E−04 | 2.33E−03 | 2.82E−04 | 3.68E−03 | 2.57E−04 | 9.11E−05 | 9.35E−05 |
| F12 | Best | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 | −1.02E+01 |
| | Mean | −2.34E+00 | −1.00E+01 | −9.64E+00 | −4.42E+00 | −3.56E+00 | −6.69E+00 | −9.98E+00 | −1.02E+01 |
| | Std | 3.56E+01 | 3.90E−01 | 1.56E+00 | 1.70E+00 | 2.10E+00 | 2.53E+00 | 9.31E−01 | 6.56E−15 |

| Index | DBO | GODBO | MSADBO | TSDBO |
|---|---|---|---|---|
| Shortest path | 19.6569 | 19.3137 | 19.6569 | 18.2426 |
| Longest path | 19.6569 | 24.4853 | 19.6569 | 19.6569 |
| Average path | 19.6569 | 20.8752 | 19.6569 | 19.3740 |
| Shortest path time | 3.95 s | 3.45 s | 8.99 s | 5.02 s |
| Average time | 4.88 s | 3.62 s | 9.44 s | 5.41 s |

| Index | DBO | GODBO | MSADBO | TSDBO |
|---|---|---|---|---|
| Shortest path | 36.9706 | 35.799 | 36.9706 | 31.5563 |
| Longest path | 36.9706 | 49.2132 | 36.9706 | 36.9706 |
| Average path | 36.9706 | 42.9647 | 36.9706 | 32.8436 |
| Shortest path time | 11.87 s | 10.38 s | 26.20 s | 11.30 s |
| Average time | 13.24 s | 10.99 s | 28.15 s | 11.98 s |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, K.; Dai, Y.; Liu, H. Improvement of Dung Beetle Optimization Algorithm Application to Robot Path Planning. Appl. Sci. 2025, 15, 396. https://doi.org/10.3390/app15010396
