Abstract

This paper proposes a novel data fusion technique for a wearable multi-sensory patch that integrates an accelerometer and a flexible resistive pressure sensor to accurately capture breathing patterns. It utilizes an accelerometer to detect breathing-related diaphragmatic motion and other body movements, and a flex sensor for muscle stretch detection. The proposed sensor data fusion technique combines inertial and pressure sensors to eliminate nonbreathing body motion-related artifacts, ensuring that the filtered signal exclusively conveys information pertaining to breathing. The fusion technique mitigates the limitations of relying solely on one sensor’s data, providing a more robust and reliable solution for continuous breath monitoring in clinical and home environments. The sensing system was tested against gold-standard spirometry data from multiple participants for various breathing patterns. Experimental results demonstrate the effectiveness of the proposed approach in accurately monitoring breathing rates, even in the presence of nonbreathing-related body motion. The results also demonstrate that the multi-sensor patch presented in this paper can accurately distinguish between varying breathing patterns both at rest and during body movements.

1. Introduction

Changes in breathing rate are a key indicator of deteriorating health, and wearable sensing systems that monitor and quantify these changes are emerging as powerful tools for the early detection of deteriorating conditions. Irregularities or abnormalities in breathing patterns can be early indicators of underlying health issues, such as respiratory disorders, cardiovascular problems, sleep apnea, or even stress-related conditions [1]. Sensor systems with continuous monitoring, facilitated by remote connectivity, provide valuable medical insights into a patient’s respiratory signals and help in monitoring the patient’s status [2,3,4,5,6,7]. By closely observing historical breathing data, clinicians can detect early signs of potential health issues and implement timely interventions. Wearable breathing monitoring systems also have applications beyond clinical healthcare, including stress management, mindfulness, athletic performance optimization, and general well-being. Such devices offer a means to gather data consistently and monitor the breathing rate, even during routine activities.

Various wearable breathing sensors have been suggested in the literature for detecting and identifying breathing parameters. These sensors employ diverse mechanisms including capturing breathing-related chest movements, analyzing exhaled gases, and monitoring changes in blood oxygenation. Wearable sensors used for breath monitoring often incorporate various electronic components. These components may be directly [8,9] or indirectly [10,11,12] attached to the body. Dinh et al. (2020) provided an overview of recent developments in stretchable sensors and wearable technologies for real-time and portable healthcare systems [13]. This review focuses on the sensing mechanisms, design concepts, and integration of variable sensing components of these sensors for breath detection. In [14], a wearable healthcare device made with lightweight and flexible materials was introduced. The device can stick to the skin and track breathing.

Different sensor types, including acoustic sensors, humidity sensors, pressure sensors, and inertial measurement units (IMUs), can be employed to monitor breathing rates, each of which has unique advantages and disadvantages [15,16,17,18]. For instance, acoustic sensors may be negatively impacted by background noise. Despite their greater robustness to environmental conditions than other sensors, humidity sensors may not be ideal for prolonged use. Additionally, although accelerometers and pressure sensors are cost-effective, they are susceptible to non-breathing-related movement artifacts.

Wearable pressure sensors can be used to capture diaphragmatic contraction and relaxation. When attached to the chest or abdomen using a belt, these sensors can measure the force applied during expansion [11]. Capacitive and resistive (flex) sensors are two distinct types of pressure sensors that have been used in many wearable body and muscle movement detection tasks [19]. A comparative analysis of capacitive and resistive (flex) sensors for wearable human joint and motion sensing applications revealed subtle distinctions in their performance characteristics [20]. The study showed that both sensor types exhibit good static and dynamic capabilities. Capacitive sensors are superior in terms of linearity and long-term repeatability but exhibit greater signal noise, whereas resistive sensors exhibit greater sensitivity and better signal anti-interference capabilities. The optimal choice between capacitive and resistive sensors ultimately depends on the specific requirements of the application.

Resistive flexible sensors used in breathing detection are commonly applied on the chest walls since they detect deformations related to respiratory movements [21]. Several studies have been conducted to explore the use of piezoresistive sensors for breath monitoring [22,23,24,25,26,27,28,29]. Ref. [30] showed that placing piezoresistive sensors on the upper thorax mitigates abdominal motion during daily activities and improves performance. In [29], a wearable device employing a piezoresistive transducer was utilized to monitor the breathing rate. The algorithm used to calculate the breathing rate operates based on the number of zero crossings within a specific time window. In [25], a neural network trained on data collected from ten users successfully predicts respiration patterns. In [24], a wearable device utilized six piezoresistive sensors to detect the motion of the pulmonary rib cage, abdominal rib cage, and abdomen for breath monitoring. Additionally, other types of wearable breathing sensors attached to the diaphragmatic area or around the mouth that utilize piezoelectric, triboelectric, resistive, and capacitive methods showed promising results in detecting breathing [31,32].

In the realm of wearable devices for breath monitoring, IMUs can be mounted around the diaphragmatic area to detect inhalation- and exhalation-related motions [13]. IMU sensors include accelerometers and gyroscopes that measure accelerations and angular velocities [33]. They are typically positioned on the chest or abdomen because they can directly measure respiration-related motion [34,35]. However, body movement not related to breathing can significantly affect the recorded IMU data, which restricts the applicability of these sensors and requires additional algorithms or filtering to separate out non-breathing-related signals.

Several investigations have explored the application of inertial measurement units (IMUs) in breath monitoring. In [4], a nine-axis IMU along with a MEMS microphone was applied to calculate the breathing rate and coughing frequency. In [36], an accelerometer was used to collect chest movement data. After filtering the raw data, wavelet analysis with multiscale peak detection was applied to determine the number of breath cycles.

Sensor data fusion, which combines data from multiple sensors, plays a crucial role in enhancing the accuracy and reliability of breath detection systems. Several research investigations have examined the use of multiple sensors for this purpose [37,38,39,40,41]. The main disadvantage of IMUs and pressure or resistive sensors is their sensitivity to body movement that does not correspond to breathing. This sensitivity can be mitigated by developing hybrid systems that include more than one of these sensors and operate by fusing the sensors’ data. In [16], a comprehensive examination of the use of wearable piezoresistive and inertial sensors for monitoring respiration rates was provided. The authors explored the advancements, challenges, and potential applications of these sensor technologies, offering valuable insights into the evolving landscape of wearable health monitoring devices. In [42], the authors introduced a data fusion algorithm designed to eliminate motion artifacts from respiratory signals obtained using four piezoresistive textile sensors and one IMU sensor. Piezoresistive sensors primarily capture breathing activity, while IMU sensors are highly influenced by physical activity. Independent component analysis (ICA) was used to blindly separate the various sources of activity and derive distinct independent components. By comparing the power spectral densities (PSDs) of the identified independent components and the IMU signal, the authors reconstructed the signals from the IMU and piezoresistive sensors with the effects of physical activity removed. In [43], three six-axis IMU sensors were employed for breath monitoring. Two of them are positioned on the chest and abdomen, while the third is placed where it is not affected by breathing. This third IMU sensor is used to filter out motions not related to breathing from the first two IMU sensors.
For this purpose, quaternions are computed to determine the changes in the orientation of the chest and abdomen relative to the third sensor, which serves as the reference. The first principal component is then derived through Principal Component Analysis (PCA) of the quaternions. Subsequently, breathing information is obtained by analyzing the power spectral density of this component. In [44], an IMU was combined with a capacitive pressure sensor to reduce the error from the IMU sensor; when positioned near the diaphragm, the multi-sensor system was able to capture breathing patterns. In contrast to the capacitive sensor, which requires active components, the flexible resistive sensor used in this paper does not require active electronic components.

In addition to utilizing different sensors, several studies have investigated the fusion of sensor data through the application of machine learning techniques to increase breathing pattern identification capability [45,46]. In [47], a deep learning approach was devised, leveraging data from both inertial measurement unit (IMU) sensors and respiratory sound audio captured by earbuds to count exercise repetitions. Wearable breathing sensors and human activity recognition technologies benefit from the extensive research and development that is being reported using various methods for multi-sensor data fusion. A vast body of literature explores wearable sensor networks and fusion algorithms, employing diverse sensor data fusion approaches across different levels of sensor integration [48,49,50,51]. Previous reviews, such as those reported in [45,48,49,50,51], thoroughly examine and compare the advantages and disadvantages of various traditional, advanced, and emerging sensor fusion techniques. Although machine learning and AI techniques are powerful tools for fusing sensor data, they require access to a large amount of data and computation, and their performance highly depends on the quality of the data. Therefore, researchers are also seeking simple but effective data fusion techniques.

To address this need, in this paper, we develop a simple fusion technique aimed at isolating breathing information from sensory data, effectively mitigating the impact of body movement due to non-breathing activities. Sensor fusion is used in human activity detection to combine information from multiple sensors and thereby improve the reliability and accuracy of detection. In this work, we used information from IMU and flex sensors to improve the performance and accuracy of breathing pattern detection. This work led to the creation of a wearable multisensory patch employing an accelerometer and a flex sensor for accurate capture of motion and muscle stretching under both stationary and body movement conditions. Here, we used IMU sensor data to remove non-breathing-related artifacts from flex sensor data to achieve accurate breathing detection during body motion. Through the analysis of various breathing motions, the sensing system demonstrated high accuracy, providing a more reliable method for identifying breathing types with promising applications in the early detection of respiratory changes. In the present work, we integrate, for the first time, resistive flex sensor and inertial measurement unit (IMU) sensor data to detect breathing rates. The method leverages IMU sensor data to extract information about body motion resulting from physical activities. Subsequently, a filtering process is applied to the flex sensor data to eliminate motion-related artifacts, ensuring that the filtered signal exclusively conveys information pertaining to breathing.

The main contributions of this paper are as follows:

- A sensor data fusion technique was developed to eliminate non-respiratory information from flex sensor data through the integration of IMU sensor data, thereby attaining a signal that exclusively contains breathing-related information.

- The inherent sensitivity of flex sensor data to non-breathing activities, a primary drawback when body motion is present, was mitigated, enhancing the device’s reliability in diverse usage scenarios.

- We introduced a cost-effective wearable device consisting of an off-the-shelf IMU and flex sensor that can accurately measure breathing rates, even in the presence of body motion resulting from physical activity.

- The system was tested against gold-standard spirometry data across six participants (three males and three females) and showed consistent accuracy across various breathing patterns.

- The noninvasive and compact nature of this device ensures improved patient compliance and comfort, making it a valuable tool for continuous monitoring in both clinical and home environments.

2. Methodology

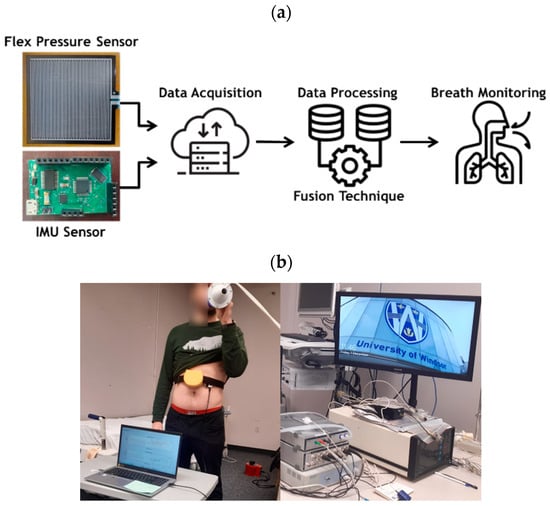

The proposed breath monitoring system works based on noninvasive measurements. This system primarily comprises three subsystems: the sensor subsystem, the microcontroller subsystem, and the data acquisition and analysis subsystem. A wearable breathing sensor system as shown in Figure 1 consisting of an inertial sensor (IMU), flex sensor, and data acquisition system is attached in proximity to the diaphragmatic area. Throughout the breathing process, the periodic motion of the thoracic and abdominal areas can be captured by different sensors, such as IMU sensors and flex sensors. Inhalation involves the diaphragm contracting, pushing the abdominal organs downward, and consequently reducing intrathoracic pressure. Conversely, during exhalation, as the diaphragm relaxes, the chest and abdomen return to their resting positions, leading to a reduction in lung volume. The inhalation and exhalation processes are sensed and waveform data are provided by the inertial and flex sensors.

Figure 1.

(a) Conceptual flow of our wearable multi-sensor system consisting of an inertial measurement unit (IMU) and a flexible resistive sensor connected to a data acquisition module, (b) picture showing our testing process and data collection and analysis experimental setup for breath monitoring.

The experimental setup for data collection, validation, and analysis of our breath monitoring system is illustrated in Figure 1 and is constructed entirely from commercially available components. In this setup, IMU and flex sensors are used to detect and monitor breathing. For comparison and validation, spirometry is used to obtain the actual breathing signal (ground truth) against which the performance of the approach is evaluated.

The breathing sensor testing was conducted in the breathing testing lab of the Faculty of Human Kinetics, where each participant first completed a familiarization session. In this session, participants were familiarized with the three lying positions: supine, rolled to the right, and rolled to the left. On the trial day, after their body measurements and age were recorded, participants were asked to lie down. Our wearable breathing sensor was mounted on the participant’s skin around the diaphragmatic area (the same placement location was used for all participants), and data from the sensor were recorded on a PC. The participants also received a spirometer mouthpiece attached to a filter and turbine to monitor their ventilation, and wore a nose clip. The data from the spirometer were also recorded on a PC. In each position, different breathing patterns were performed, and data were recorded. The sequence and duration of each breathing pattern remained the same across all participants. Participants were given 2 min of rest before and between breathing patterns or position changes. All experimental steps, protocols, and conditions remained the same across participants.

Six young (mean age: 23.2 years, SD: 2.4) adults (3 male and 3 female) were recruited for testing the device. The study procedure was approved by the University of Windsor’s Research Ethics Board (REB #22-166) and was in accordance with the Declaration of Helsinki, except for registration in a clinical database. The IMU sensor, flex sensor, and spirometer used are described below.

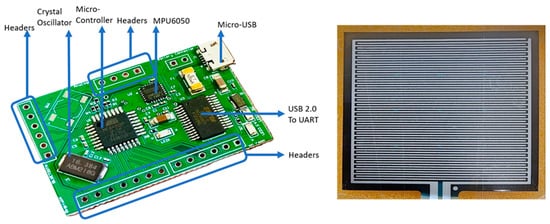

The basic hardware consists of an IMU and a resistive flex sensor. A data acquisition system and controller are needed to collect and process the sensor data; therefore, a data acquisition board was designed. Figure 2 shows the data acquisition hardware used for the wearable breathing sensor, which was designed and developed in-house using only commercially available electronic components and sensors. The board is a four-layer printed circuit board (PCB) with two main components: a microcontroller (ATmega328P, Microchip, Chandler, AZ, USA) and an IMU (MPU6050, TDK InvenSense, San Jose, CA, USA), which can measure 3-axis acceleration and 3-axis rotational motion. The board is clocked by a 16.384 MHz oscillator. The ATmega328P has 2 KB of SRAM, 32 KB of Flash, and 1 KB of EEPROM. The Flash, which stores the program, and the EEPROM are nonvolatile memories, meaning that their contents are retained even when the power is off. Hence, once the board is programmed, it does not need to be programmed again. The MPU6050 communicates with the microcontroller via the I2C protocol and detects inertial movement due to both breathing- and non-breathing-related body movement. Flex sensor data are collected via one of the analog pins on the board.

Figure 2.

Breathing sensor system including IMU and flex sensors.

The flex sensor is connected to an analog pin on the header. The headers expose various analog and digital pins of the microcontroller. The IMU sensor is connected to the microcontroller via electrical traces on the board. The Micro USB port is used to communicate with a laptop on two occasions. First, the breathing sensor was programmed to read all the acceleration and rotation data from the IMU using the Arduino IDE (version 2.2.1). The programming is performed only once, as the board retains the code in memory. Second, the breathing sensor was used to read and plot the data continuously via MATLAB-based code (version R2023b, Update 4). At the end of each test session with participants, the data were saved in an Excel file. This second step is the main data acquisition event and was repeated for every test during the experiment.

For the spirometry setup, the participants were instrumented with a mouthpiece and nose clip, and data from the spirometer were collected using the PC, as shown in Figure 1b (the picture of the spirometer and its data acquisition system). The mouthpiece was connected to a calibrated respiratory flow head and high-precision spirometer (ADInstruments®, Colorado Springs, CO, USA). The respiratory rates and volumes were determined in real-time using PowerLab and LabChart Pro software version 8 (ADInstruments®).

3. Sensor Data Fusion

The main challenge addressed in this research is the isolation of breathing-related motion from non-breathing-related body movement through sensor data fusion. The inertial sensor, when attached to the diaphragmatic area, will respond and provide signals corresponding to both breathing- and non-breathing-related body movement. Similarly, the flex sensor, when attached to the skin near the diaphragmatic area, will respond to breathing-related muscle stretch as well as muscle stretch due to body twists or another non-breathing-related signal from muscle movement. The challenge is to isolate the non-breathing-related signals from both sensor signals, which requires a proper sensor fusion technique. To understand the importance of sensor data fusion and challenges in the sensor signal due to non-breathing-related sensor responses, and to determine the appropriate method for performing the fusion, it is necessary to evaluate the performance of the IMU and flex sensors individually. For this purpose, the ability of both sensors to measure breathing signals was studied first. Flex sensors can capture muscle stretching and relaxation at the location to which they are attached. Moreover, IMU sensors can capture breathing-related motion as well as various body movements, such as rolling, bending, walking, and running. To detect abdominal movement using the IMU sensor, accelerometer data in the normal direction were utilized.

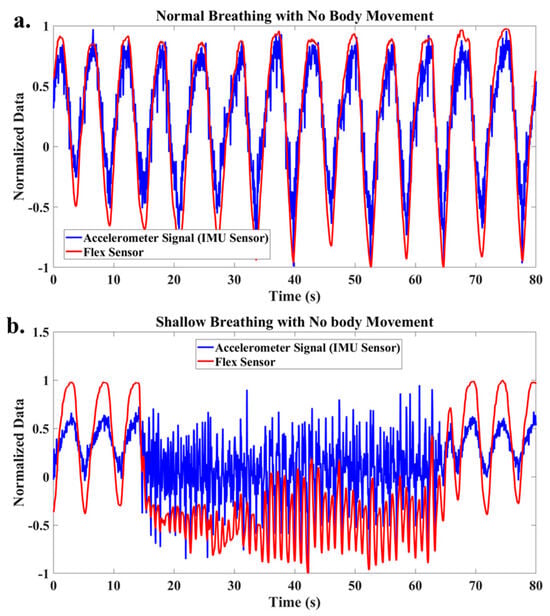

Figure 3 shows the accelerometer data in the normal direction and the flex sensor data during normal (Figure 3a) and shallow breathing (Figure 3b). Notably, there was no body movement during these breathing tests. This shows that without the body movement, both sensors can capture the breathing signal. Therefore, after data processing, it is possible to find the breathing rate and type from these data without sensor fusion when the participant is completely stationary and there is no body movement.

Figure 3.

Raw data of accelerometer and flex signals for (a) normal breathing, (b) shallow breathing.

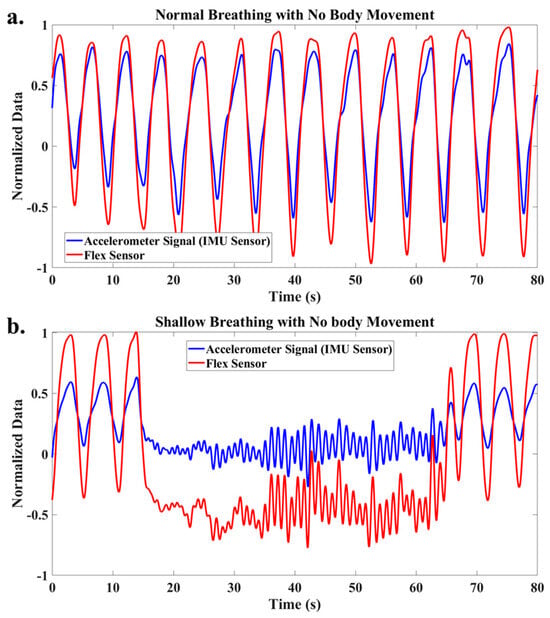

After applying a low-pass filter to address the high noise-to-signal ratio of the accelerometer data, the filtered accelerometer signals for normal and shallow breathing are depicted in Figure 4. After filtering, it is demonstrated that both signals provide consistent information, and monitoring breathing is possible based on the measurements of each sensor when the participant is stationary and in the absence of body motion.

Figure 4.

Filtered accelerometer and flex signals for (a) normal breathing, (b) shallow breathing.
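As an illustration of the filtering step, the following Python sketch applies a simple FFT-based low-pass filter to a synthetic accelerometer-like signal. The filter type, order, and cutoff used in this work are not stated here, so the brick-wall design, the 50 Hz sampling rate, the 1 Hz cutoff, and the function name `lowpass_fft` are assumptions made for illustration only.

```python
import numpy as np

def lowpass_fft(signal, fs, cutoff_hz):
    """Brick-wall low-pass: zero every frequency bin above cutoff_hz."""
    x = np.asarray(signal, dtype=float)
    mean = x.mean()                      # keep the DC offset (e.g., gravity)
    spectrum = np.fft.rfft(x - mean)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    spectrum[freqs > cutoff_hz] = 0.0
    return np.fft.irfft(spectrum, n=x.size) + mean

# Synthetic stand-in for normal-direction accelerometer data (not measured data)
fs = 50.0                                # assumed sampling rate in Hz
t = np.arange(int(60 * fs)) / fs         # 60 s recording
breathing = 0.05 * np.sin(2 * np.pi * 0.25 * t)
clean = 1.0 + breathing                  # constant offset plus breathing motion
rng = np.random.default_rng(0)
noisy = clean + 0.05 * rng.standard_normal(t.size)

filtered = lowpass_fft(noisy, fs, cutoff_hz=1.0)
```

Because breathing occupies only the lowest part of the spectrum, any cutoff comfortably above the fastest expected breathing frequency should preserve the breathing waveform while suppressing broadband sensor noise.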

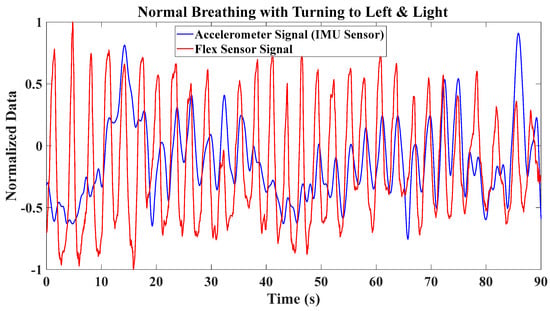

To assess the performance of these sensor signals in the presence of body movement, a normal breathing test was conducted while the participant’s body was gently turning to the left and right. As depicted in Figure 5, the results highlight the significant impact of body motion on the accelerometer data, whereas the flex sensor data exhibit only minor variation. Consequently, the flex sensor is more robust to body motion than the IMU sensor. It is evident that if the accelerometer and flex sensor data are fused, the fusion does not yield additional information compared to using only the flex sensor data in the presence of body motion. This observation leads to the question of whether relying solely on a flex sensor is adequate for breath monitoring.

Figure 5.

Accelerometer and flex sensor signals during normal breathing while body motion is present (turning left and right).

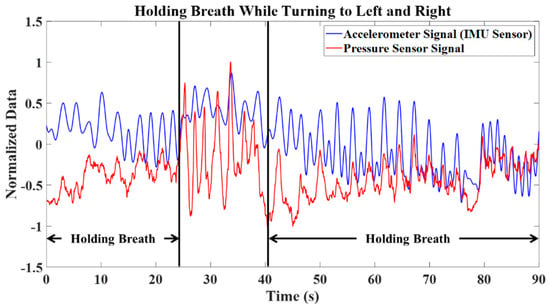

To address this issue, a test involving breath holding during body motion was conducted. Figure 6 shows a breathing test that includes two intervals of breath-holding while the participant turns to the left and right. During these intervals, it was anticipated that the flex sensor data would remain nearly constant. However, due to muscle motion during turns to the right and left, the flex sensor data exhibited cyclic behavior corresponding to the body’s motion. This cyclical pattern introduces the risk of false alarms when detecting breathing. Consequently, relying solely on a flex sensor is insufficient. Therefore, flex sensor data must be integrated with other sensory information.

Figure 6.

Accelerometer and flex sensor signals for breath holding while body motion is present (turning left and right).

The previously mentioned false alarms stem from the contraction and relaxation of abdominal muscles induced by the body’s rotational movement. Consequently, information about the body’s rotational motion must be extracted and used to isolate the non-breathing-related component of the flex sensor data, so that breathing signals can be properly identified during body motion. This concept constitutes the primary framework of the sensor fusion approach developed in this research.

4. Results and Discussion

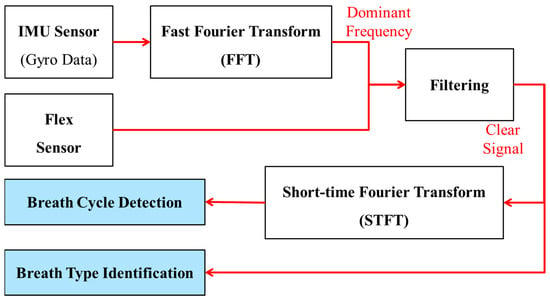

The fusion technique developed in this research is based on excluding information regarding body rotation from flex sensor data to achieve a signal that purely includes breath-related information. An overview of the proposed method is depicted in Figure 7. To capture information about the body’s rotational motion along three axes (x, y, z), the tri-axis gyro data from the IMU are utilized. To exclude the body movement information from the flex sensor data, a simple signal processing technique is used to identify the frequency content of the gyro signals. The Fast Fourier Transform (FFT) converts a signal from the time domain to the frequency domain, providing a spectrum that shows the frequency components present in that signal. Employing the FFT allows the identification of the dominant frequencies associated with the body’s rotational motion. Once these dominant frequencies are determined, they are removed from the flex sensor data through filtering. As a result, the filtered flex sensor data exclusively contain information related to breathing, making them suitable for breath monitoring.

Figure 7.

Proposed sensor fusion algorithm to utilize both flex sensor and accelerometer data to accurately capture breathing signals by compensating for non-breathing-related artifacts from the signals.
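The fusion idea described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used in this work: the band-selection rule (bins exceeding half of the peak gyro magnitude), the FFT-domain band removal, the 0.05 Hz margin, the 50 Hz sampling rate, and the function names `dominant_band` and `remove_band` are all hypothetical, and the signals are synthetic.

```python
import numpy as np

def dominant_band(gyro_xyz, fs, rel_threshold=0.5, f_min=0.05):
    """Frequency range where the summed tri-axis gyro spectrum is strongest."""
    g = np.asarray(gyro_xyz, dtype=float)            # shape (3, n_samples)
    g = g - g.mean(axis=1, keepdims=True)
    mag = np.abs(np.fft.rfft(g, axis=1)).sum(axis=0)
    freqs = np.fft.rfftfreq(g.shape[1], d=1.0 / fs)
    mag[freqs < f_min] = 0.0                         # ignore drift near DC
    strong = freqs[mag >= rel_threshold * mag.max()]
    return strong.min(), strong.max()

def remove_band(signal, fs, f_lo, f_hi, margin_hz=0.05):
    """Remove the band [f_lo - margin, f_hi + margin] from the signal."""
    x = np.asarray(signal, dtype=float)
    mean = x.mean()
    spectrum = np.fft.rfft(x - mean)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    stop = (freqs >= f_lo - margin_hz) & (freqs <= f_hi + margin_hz)
    spectrum[stop] = 0.0
    return np.fft.irfft(spectrum, n=x.size) + mean

# Synthetic example: slow breathing plus body rotation at 0.5 Hz
fs = 50.0                                            # assumed sampling rate
t = np.arange(int(60 * fs)) / fs
breathing = np.sin(2 * np.pi * 0.15 * t)
rng = np.random.default_rng(1)
gyro = np.vstack([
    np.sin(2 * np.pi * 0.5 * t),                     # x-axis rotation
    0.5 * np.cos(2 * np.pi * 0.5 * t),               # y-axis rotation
    0.01 * rng.standard_normal(t.size),              # z-axis: noise only
])
flex = 2.0 + breathing + 0.8 * np.sin(2 * np.pi * 0.5 * t + 0.3)

f_lo, f_hi = dominant_band(gyro, fs)
flex_clean = remove_band(flex, fs, f_lo, f_hi)
```

In this sketch the gyro data serve only to decide which frequencies to suppress; the breathing waveform itself is taken from the flex sensor. One consequence of this design is that the approach relies on body motion and breathing occupying different frequency ranges, since a motion band that overlaps the breathing frequency would be removed together with it.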

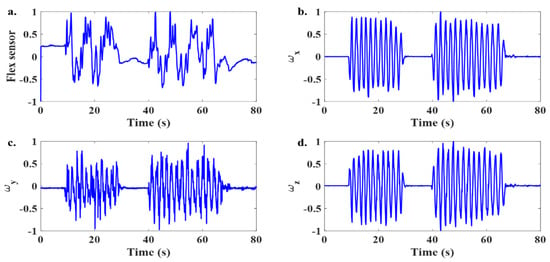

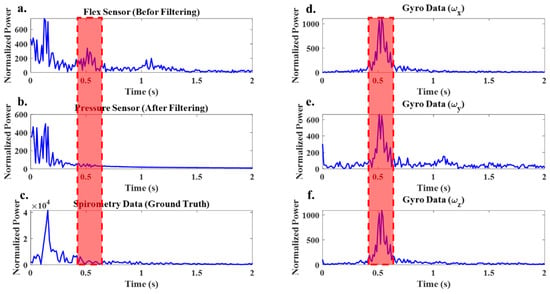

To assess the effectiveness of the developed approach, an experimental test involving deep breathing with motion was conducted. Figure 8 shows the flex sensor data and gyro signals along the three axes. The Fast Fourier Transform (FFT) of these signals is shown in Figure 9. Based on the FFT of the gyro data, the region of dominant frequencies corresponding to the body motion is identified and highlighted in this figure.

Figure 8.

Time domain signal from the sensors during deep breathing with the presence of body movement. (a) Flex sensor data and (b–d) tri-axis gyro signals from the IMU sensor during a deep breathing test in the presence of body motion.

Figure 9.

(a) FFT of the flex sensor signal before filtering, (b) FFT of the flex sensor signal after filtering, (c) FFT of the spirometer signal and (d–f) FFT of the tri-axis gyro signals of the IMU sensor. Red area showing dominant frequency in gyro data.

For this specific experimental test, the dominant frequencies fall within the range of 0.45 to 0.6 Hz. The FFT analysis of the flex sensor data also revealed the presence of these frequencies (Figure 9a), but they were not dominant in the spirometer data (Figure 9c). By applying the developed approach, these frequencies are removed from the flex sensor data. As shown in Figure 9b, the FFT of the filtered flex sensor data closely resembles the FFT of the spirometer data, indicating the absence of frequencies corresponding to body motion in this range. Obtaining a signal that contains only breath-related information allows us to determine the number of breaths or the breathing rate and to determine the breathing type.

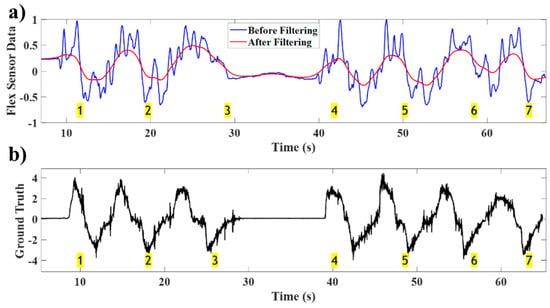

5. Counting the Number of Breath Cycles and Calculating the Breathing Rate

The flex sensor data before and after filtering are shown in Figure 10a, and the spirometer signal, serving as the ground truth, is illustrated in Figure 10b. Before applying the developed approach, the flex sensor data had 31 peaks, indicating 31 breath cycles. However, after applying the developed fusion technique, the number of breath cycles extracted from the filtered flex sensor data is seven, which is consistent with the count obtained using the ground truth. This demonstrates that, in this test, body motion leads to a false alarm rate of more than 300% in counting the breath cycles when the developed approach is not applied. The results highlight the effectiveness of the developed approach and its ability to avoid counting cycles corresponding to body motion as breath cycles.

Figure 10.

(a) Flex sensor data before and after filtering, (b) spirometer data of the deep breathing test in the presence of body motion showing the filtered flex sensor waveform follows that of the ground truth (spirometer). Breath cycles are shown in highlighted (yellow) numbers.
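The counting step can be illustrated with a short Python sketch. The peak-detection rule used in this work is not specified here, so the simple local-maximum detector, the 1.5 s minimum peak spacing, the 50 Hz sampling rate, and the function names below are assumptions; the signals are synthetic, and the clean breathing waveform stands in for the output of the fusion filter.

```python
import numpy as np

def find_breath_peaks(signal, fs, min_interval_s=1.5):
    """Indices of local maxima above the signal mean, spaced by min_interval_s."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()
    is_peak = (x[1:-1] > x[:-2]) & (x[1:-1] >= x[2:]) & (x[1:-1] > 0.0)
    candidates = np.flatnonzero(is_peak) + 1
    min_gap = int(min_interval_s * fs)
    peaks = []
    for idx in candidates:
        if peaks and idx - peaks[-1] < min_gap:
            if x[idx] > x[peaks[-1]]:
                peaks[-1] = int(idx)     # keep the taller of two close peaks
        else:
            peaks.append(int(idx))
    return peaks

def breathing_rate_bpm(n_breaths, duration_s):
    """Breaths per minute from a cycle count over a recording."""
    return 60.0 * n_breaths / duration_s

fs = 50.0                                # assumed sampling rate in Hz
duration_s = 60.0
t = np.arange(int(duration_s * fs)) / fs
breathing = np.sin(2 * np.pi * (7 / 60) * t)      # 7 breath cycles in 60 s
motion = 0.6 * np.sin(2 * np.pi * 0.5 * t)        # body-motion artifact
raw_flex = breathing + motion
filtered_flex = breathing                # stand-in for the fusion output

raw_count = len(find_breath_peaks(raw_flex, fs))
filtered_count = len(find_breath_peaks(filtered_flex, fs))
rate = breathing_rate_bpm(filtered_count, duration_s)

# Overcount implied by the reported counts (31 peaks vs. 7 true cycles)
false_alarm_pct = 100.0 * (31 - 7) / 7   # about 343%
```

With the reported counts, the 24 spurious peaks relative to 7 true cycles correspond to roughly 343%, consistent with the "more than 300%" figure stated above.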

6. Identifying the Breath Type

The Short-Time Fourier Transform (STFT) is used to analyze and understand the temporal frequency characteristics of a signal. In the realm of breathing analysis, the STFT has emerged as a valuable resource. The procedure is initiated by segmenting the respiratory data into brief time intervals, with each window depicting a small segment of the total signal duration. The rationale behind selecting these short time intervals is to capture the dynamic variations in the breathing pattern as it evolves over time. Upon segmenting the signal, the Fast Fourier Transform (FFT) is applied to each window individually. This process allows insights to be gained regarding the evolution of the frequency content of the breathing signal over time. To avoid overlooking vital information, overlapping windows are employed. This approach ensures comprehensive coverage of the entire signal duration, accurately capturing the nuances and variations in the breathing pattern.

In the context of determining breathing types, the STFT facilitates a thorough exploration of frequency variations over short intervals. This method enables the identification of specific patterns or characteristics associated with different breathing types. This comprehensive analysis serves to differentiate between various breathing patterns and enhances the overall accuracy of breath monitoring systems.

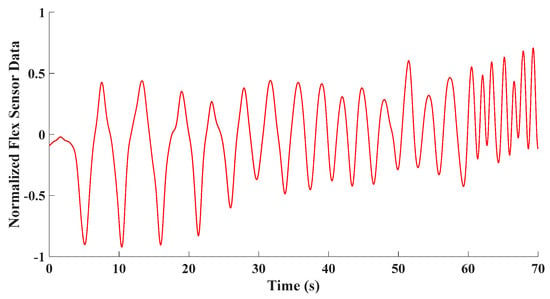

To assess how the developed approach can identify the type of breathing, a test involving deep, normal, and shallow breathing was considered. After the developed fusion technique is applied and nonrespiratory information is filtered from the flex sensor data depicted in Figure 11, the signal is segmented into 6 s time intervals with a 3 s overlap. Subsequently, the FFT is applied to each time interval to extract information concerning the frequencies present within that interval.

Figure 11.

A time signal showing sensor capturing various breathing types in a breathing test including deep, normal, and shallow breathing.
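The windowing scheme described above (6 s windows with a 3 s overlap, followed by an FFT of each window) can be sketched in Python as shown below. The Hann taper, the zero-padding factor, the 0.1 Hz lower frequency limit, the 50 Hz sampling rate, and the function name `stft_dominant_frequency` are assumptions for illustration, and the test signal is synthetic with hypothetical breathing frequencies.

```python
import numpy as np

def stft_dominant_frequency(signal, fs, win_s=6.0, overlap_s=3.0,
                            f_min=0.1, pad_factor=8):
    """Dominant frequency in each overlapping window of the signal."""
    x = np.asarray(signal, dtype=float)
    win = int(round(win_s * fs))
    hop = int(round((win_s - overlap_s) * fs))
    n_fft = pad_factor * win                 # zero-padding refines the grid
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    taper = np.hanning(win)
    centers, dominant = [], []
    for start in range(0, x.size - win + 1, hop):
        seg = x[start:start + win]
        seg = (seg - seg.mean()) * taper     # remove offset, reduce leakage
        mag = np.abs(np.fft.rfft(seg, n=n_fft))
        mag[freqs < f_min] = 0.0             # ignore residual drift
        dominant.append(freqs[np.argmax(mag)])
        centers.append((start + win / 2) / fs)
    return np.array(centers), np.array(dominant)

# Synthetic test: 30 s each of deep, normal, and shallow breathing
fs = 50.0                                    # assumed sampling rate in Hz
seg_t = np.arange(int(30 * fs)) / fs
signal = np.concatenate(
    [np.sin(2 * np.pi * f * seg_t) for f in (0.2, 0.4, 0.8)]  # hypothetical
)
centers, dom = stft_dominant_frequency(signal, fs)
```

A 6 s window has a native frequency spacing of 1/6 Hz (about 0.17 Hz). Zero-padding only interpolates the spectrum and does not improve the true resolution, so the window length sets a practical limit on how finely slow breathing rates can be distinguished.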

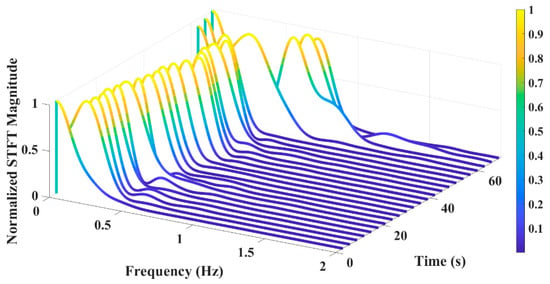

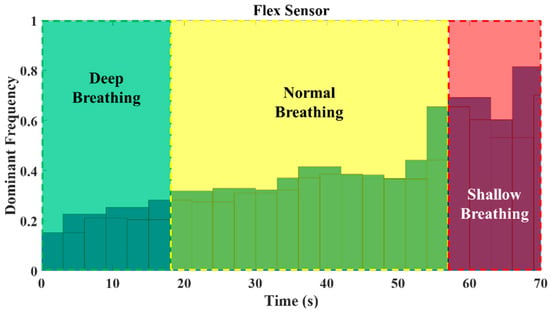

Figure 12 displays the STFTs of the experimental tests and reveals the dominant frequencies within each time interval. The most dominant frequency in each interval corresponds to the maximum magnitude of the FFT within that interval. By determining thresholds as conditions for switching between breath types and utilizing the dominant frequency in each time window, breath types can be classified.

Figure 12.

STFT of the breathing test including deep, normal, and shallow breathing.
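Picking the dominant frequency of a window and mapping it to a breath type can be sketched as follows. The threshold values, the sampling rate, and the helper names are hypothetical. The sketch also assumes that deep breathing occupies the lowest frequency band and shallow breathing the highest, an ordering the text does not state explicitly.

```python
import numpy as np

def dominant_frequency(segment, fs):
    """Return the frequency (Hz) of the largest FFT magnitude, ignoring the DC bin."""
    segment = np.asarray(segment, dtype=float)
    segment = segment - segment.mean()
    spectrum = np.abs(np.fft.rfft(segment))
    freqs = np.fft.rfftfreq(len(segment), d=1.0 / fs)
    peak = 1 + int(np.argmax(spectrum[1:]))   # skip bin 0 (DC)
    return float(freqs[peak])

def classify_breath(freq_hz, deep_normal_hz, normal_shallow_hz):
    """Map a dominant frequency to a breath type using two thresholds.

    Assumes deep < normal < shallow in frequency (an assumption of this sketch).
    """
    if freq_hz < deep_normal_hz:
        return "deep"
    if freq_hz < normal_shallow_hz:
        return "normal"
    return "shallow"

# Hypothetical sampling rate and thresholds, for illustration only.
fs = 50.0
t = np.arange(int(6 * fs)) / fs               # one 6 s window
window = np.sin(2 * np.pi * 0.5 * t)          # synthetic 0.5 Hz breathing
f_dom = dominant_frequency(window, fs)
label = classify_breath(f_dom, deep_normal_hz=0.2, normal_shallow_hz=0.4)
print(f_dom, label)
```

Applying the two functions to every time window yields one dominant frequency and one breath-type label per window.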

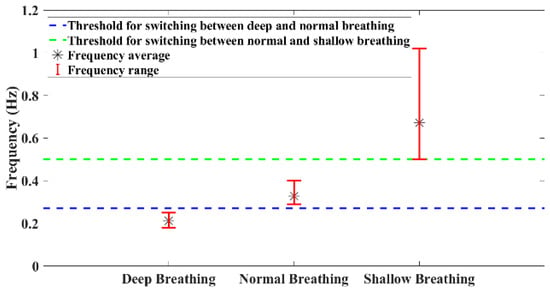

To determine distinct thresholds for breath type identification, experimental tests were conducted in which each participant was instructed to perform deep, normal, and shallow breathing. Figure 13 illustrates the ranges of dominant frequencies observed during these tests. Based on the results, the threshold between two adjacent breathing types was calculated as the average of the mean dominant frequencies of those two types. Figure 14 shows the most dominant frequency within each time interval of the experimental test, extracted from the STFT in Figure 12. Using the defined thresholds, it is possible to identify the types of breathing present in the test and the duration of each type. Based on the classification results, the number of breath cycles for each type can then be determined.

Figure 13.

Frequency ranges of the different breathing types for six participants (three males and three females).

Figure 14.

Histogram of the most dominant frequency in each window of time for the breathing test including deep, normal, and shallow breathing.
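The threshold rule described above, taking the average of the mean frequencies of two adjacent breathing types, can be sketched as follows. The function name `midpoint_thresholds` and the calibration values are placeholders chosen for illustration and are not measurements from the study.

```python
import numpy as np

def midpoint_thresholds(freqs_by_type):
    """Return thresholds halfway between the mean frequencies of adjacent types."""
    means = {name: float(np.mean(vals)) for name, vals in freqs_by_type.items()}
    ordered = sorted(means, key=means.get)    # order types by mean frequency
    return {
        (a, b): (means[a] + means[b]) / 2.0
        for a, b in zip(ordered, ordered[1:])
    }

# Placeholder dominant frequencies (Hz) per instructed breath type, NOT study data.
calibration = {
    "deep": [0.10, 0.12, 0.14],
    "normal": [0.25, 0.30, 0.35],
    "shallow": [0.50, 0.55, 0.60],
}
thresholds = midpoint_thresholds(calibration)
for pair, value in thresholds.items():
    print(pair, round(value, 3))
```

Running the same calculation on one wearer's own calibration recordings would give thresholds specific to that person.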

Table 1 compares the number of breath cycles estimated by the proposed wearable sensor with the number recorded by a gold-standard spirometer, which is treated as the ground truth. Data were captured with both the spirometer and the wearable sensor while each participant performed the various breathing exercises. Participants inhaled and exhaled through a mouthpiece connected to the spirometer, which detects the flow of air, calculates the respiration rate, and provides a real-time breathing signal. Comparing the wearable sensor against this reference validates the sensing system and shows how consistently and accurately it captures the respiratory rate.

Table 1.

Comparison between the proposed sensor system and gold-standard spirometer data of the number of breath cycles in each breath type.
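A comparison of this kind can be expressed as a per-type absolute and percentage error against the reference counts. The sketch below is illustrative only: the function name `count_errors` is hypothetical, and the counts are placeholders, not the values reported in Table 1.

```python
def count_errors(sensor_counts, reference_counts):
    """Return per-type (absolute error, percentage error) against the reference."""
    report = {}
    for breath_type, ref in reference_counts.items():
        est = sensor_counts[breath_type]
        abs_err = abs(est - ref)
        pct_err = 100.0 * abs_err / ref if ref else float("nan")
        report[breath_type] = (abs_err, pct_err)
    return report

# Placeholder breath-cycle counts, NOT the values reported in Table 1.
sensor = {"deep": 4, "normal": 10, "shallow": 19}
spirometer = {"deep": 4, "normal": 10, "shallow": 20}
errors = count_errors(sensor, spirometer)
for breath_type, (abs_err, pct_err) in errors.items():
    print(f"{breath_type}: abs error = {abs_err}, error = {pct_err:.1f}%")
```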

The results showed that the developed approach can determine the number of cycles for each breathing type. Because the signal is analyzed in 6 s time intervals with a 3 s overlap, the identification of breath types can be in error by 3 s. A further source of slight mismatches between the calculated and actual results is the identification of breath types during the transition from one breath type to another.
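One way to turn per-window classifications into a duration and a cycle count for each breath type is sketched below. The text does not spell out the counting rule, so this is an assumption: each window is credited with the 3 s by which consecutive windows advance, and cycles are estimated as dominant frequency multiplied by time. The function name and the input values are placeholders.

```python
from collections import defaultdict

def summarize_breath_types(labels, dominant_hz, hop_s=3.0):
    """Accumulate approximate duration (s) and cycle count per breath type.

    Each window is credited with `hop_s` seconds, the advance between
    consecutive windows, so overlapping spans are not double counted.
    """
    if len(labels) != len(dominant_hz):
        raise ValueError("labels and dominant_hz must have the same length")
    duration = defaultdict(float)
    cycles = defaultdict(float)
    for label, f in zip(labels, dominant_hz):
        duration[label] += hop_s
        cycles[label] += f * hop_s            # cycles = frequency x time
    return dict(duration), dict(cycles)

# Placeholder per-window results, NOT data from the study.
labels = ["deep", "deep", "normal", "normal", "shallow"]
dominant_hz = [0.125, 0.125, 0.25, 0.25, 0.5]
duration, cycles = summarize_breath_types(labels, dominant_hz)
print(duration)
print(cycles)
```

Under this scheme a change of breath type can only be placed on the 3 s grid of window starts, which is consistent with the 3 s identification error noted above.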

These thresholds can vary among individuals and may be influenced by body type, which highlights the importance of personalizing wearable devices. By instructing an individual to perform the deep, normal, and shallow breathing types and then calibrating the device to the thresholds obtained, the device can be personalized to that person's characteristics.

7. Conclusions

This paper introduces an approach for monitoring breathing rates by integrating data from a flexible resistive sensor and an inertial measurement unit (IMU). The developed sensor fusion technique removes nonrespiratory information from the flex and IMU data to extract breath-related information, addressing the sensitivity of flex sensors to motion-related artifacts. Experiments validated the approach: after the fusion technique was applied, nonrespiratory information was effectively filtered out, leaving a signal that carries the breathing information and from which breath cycles can be counted accurately. Moreover, segmenting this signal into short time intervals and analyzing the dominant frequency in each interval made it possible to identify different breath types.

The system can be further enhanced with wireless connectivity and with automated thresholding mechanisms that account for various body types. Artificial intelligence (AI) and machine learning algorithms can be leveraged to train and calibrate the sensor system to identify breathing patterns based on demographics and body types. The wearable breathing sensor system presented in this paper can be used for prolonged monitoring of breathing metrics, including the rate, depth, and pattern of breathing, across health, wellness, sports, and safety domains. Applications include monitoring breathing-related sleep disorders, managing chronic respiratory conditions, performance monitoring in sports, breathing monitoring of climbers and miners, detecting breathing hazards for worker safety, health and wellness, and meditation. The ease of fitting, lower cost, higher accuracy, and small footprint make the system suitable for wearable applications.

Author Contributions

Conceptualization, M.Z. and M.J.A.; Methodology, M.Z., B., P.P., B.R.S., N.J.L. and A.R.B.; Software, P.P.; Validation, M.Z.; Formal analysis, M.Z. and B.; Investigation, S.R.-G.; Resources, N.J.L. and S.A.; Data curation, B.R.S., N.J.L. and A.R.B.; Writing—original draft, M.Z.; Writing—review & editing, B., P.P., B.R.S., N.J.L., S.A., A.R.B., S.R.-G. and M.J.A.; Visualization, P.P.; Supervision, S.A., A.R.B., S.R.-G. and M.J.A.; Project administration, M.J.A.; Funding acquisition, A.R.B. and S.R.-G. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the WE-SPARK Health Institute through an Igniting Discovery Grant and by the Faculty of Engineering Innovating Sustainability Grant.

Institutional Review Board Statement

This work involved human subjects in its research. Approval of all ethical and experimental procedures and protocols was granted by the University of Windsor’s Research Ethics Board under Application No. REB 22-166 and performed in line with the Declaration of Helsinki.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data used in this study are available from the corresponding authors upon reasonable request.

Acknowledgments

The work of SRG was supported by the Natural Sciences and Engineering Research Council of Canada (NSERC) through the Discovery Grant RGPIN-2022-04428. The work of MJA was supported by NSERC through the Discovery Grant RGPIN-2023-03423.

Conflicts of Interest

The authors declare no conflicts of interest.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).