Modified Joint Source–Channel Trellises for Transmitting JPEG Images over Wireless Networks

Abstract

1. Introduction

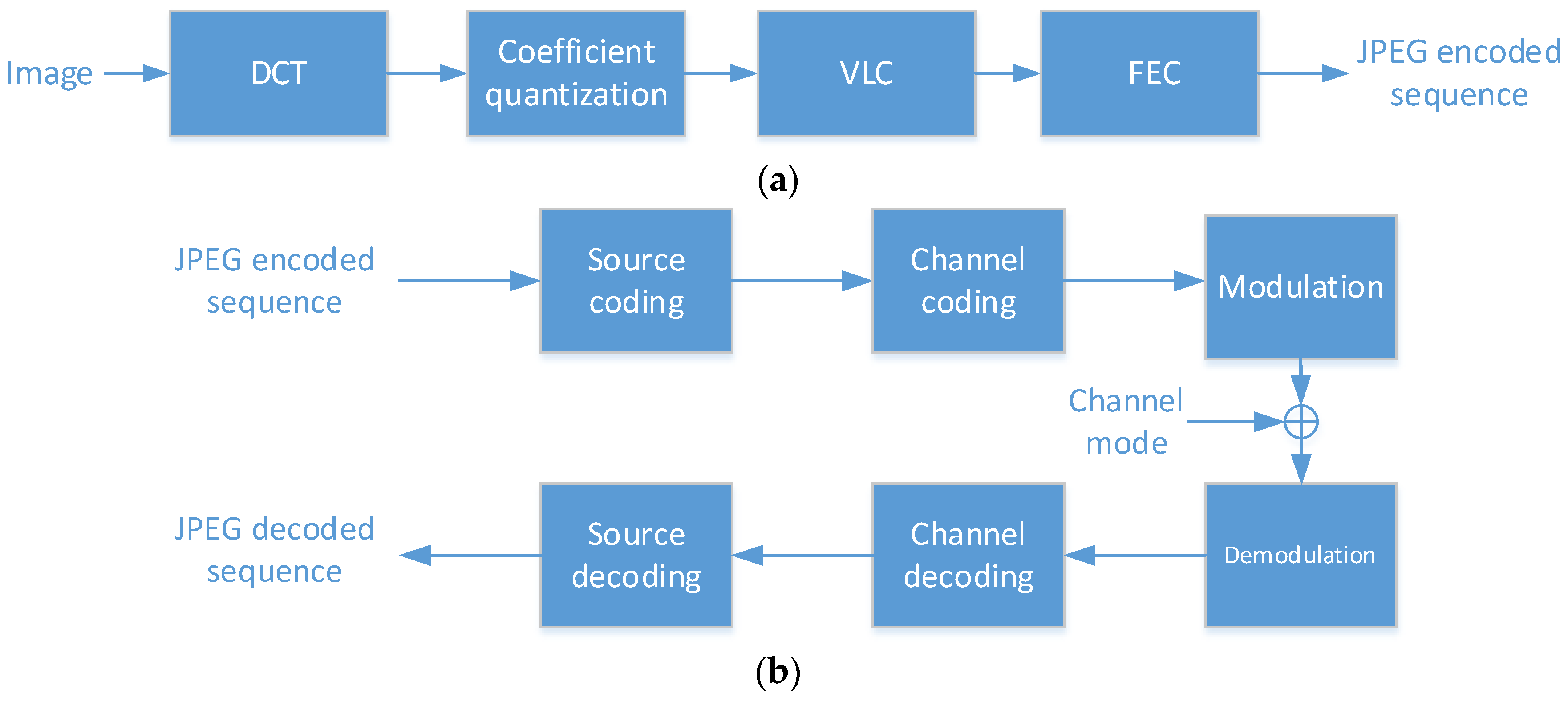

2. The Wireless JPEG Transmission Model

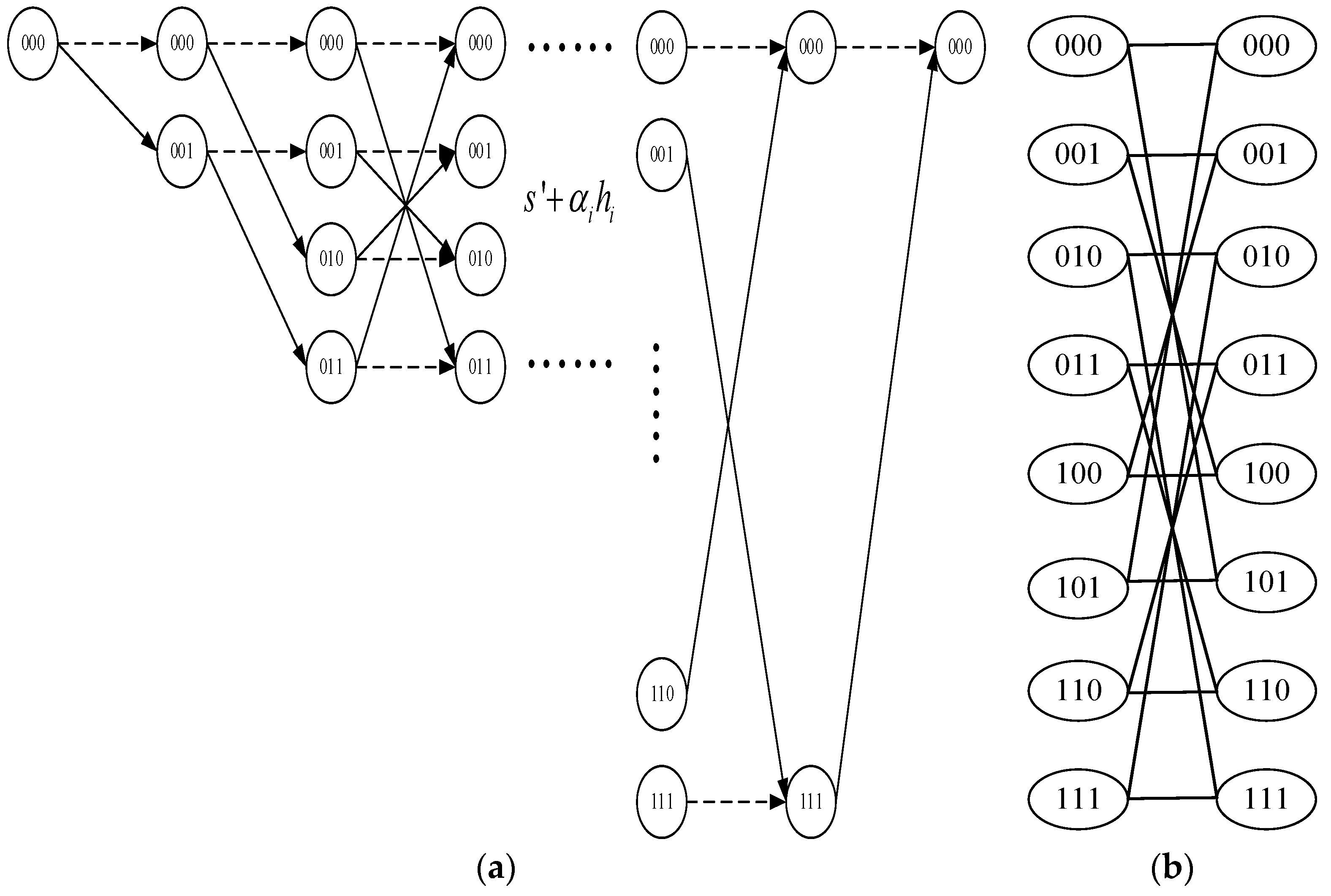

3. Construction of the Combined Source–Channel Trellis

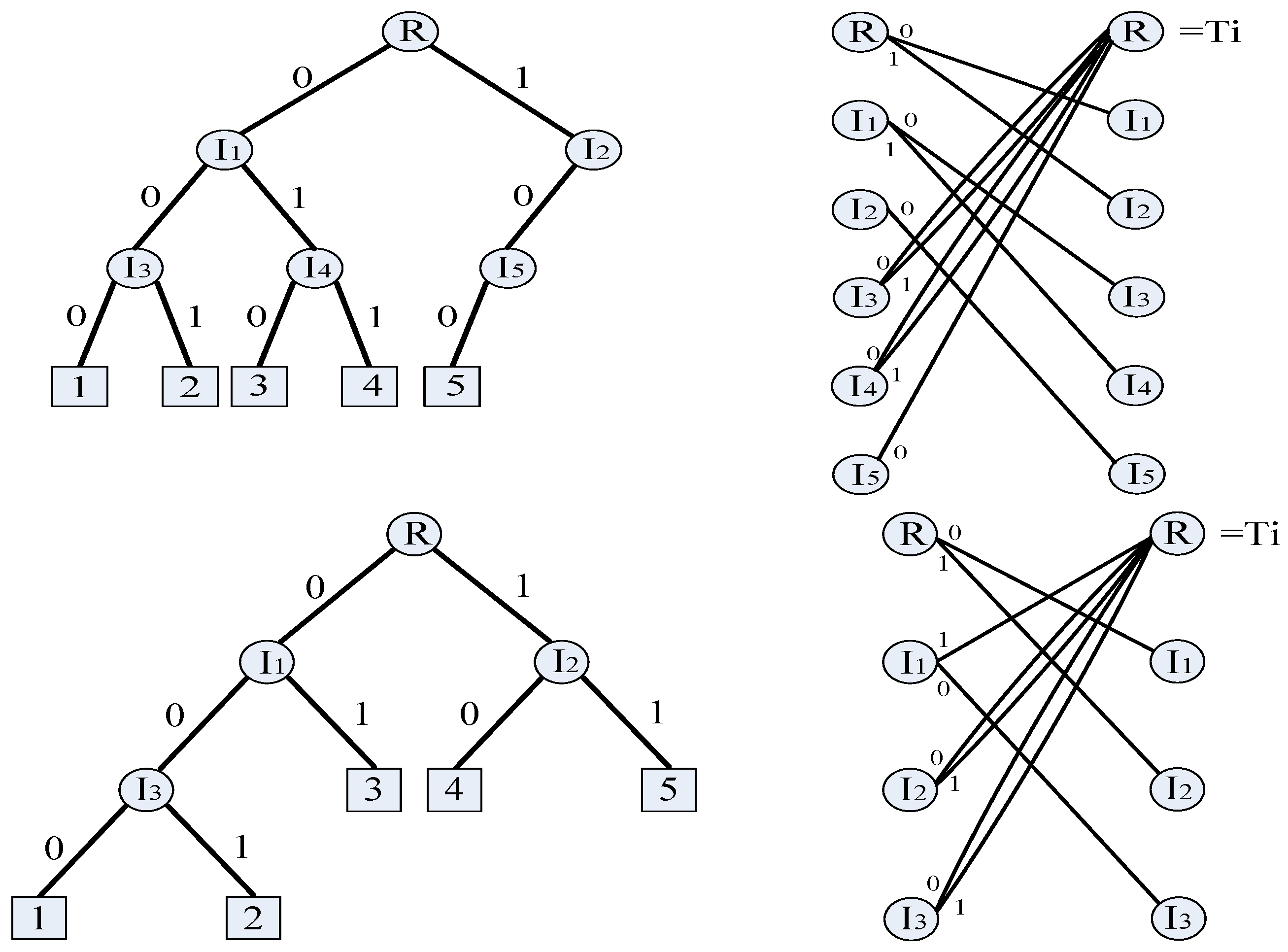

3.1. Tier 1: Combined VLC–FLC Trellis Encoder

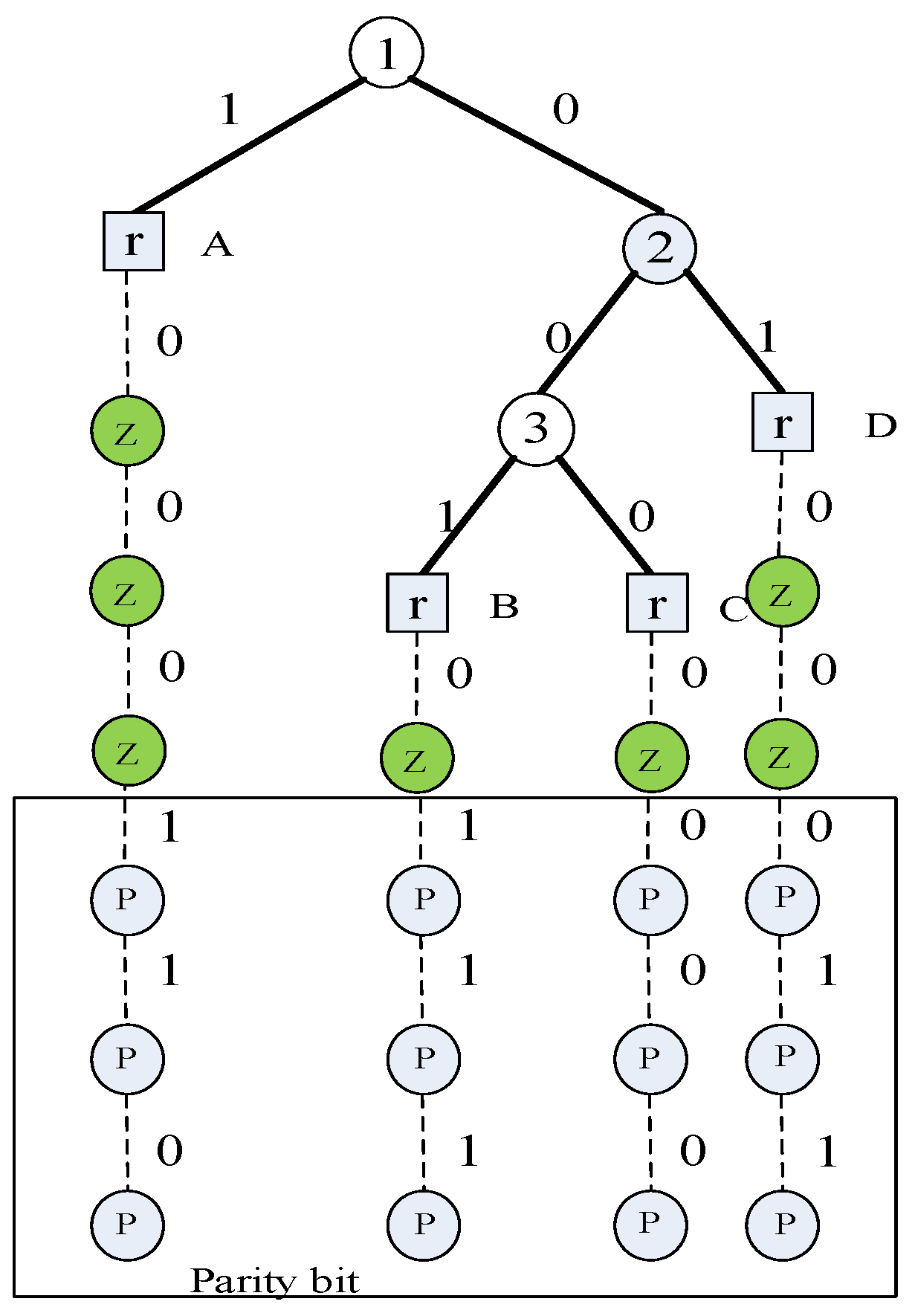

3.2. Tier 2: Modified Nonlinear Block Code Decoder Trellis

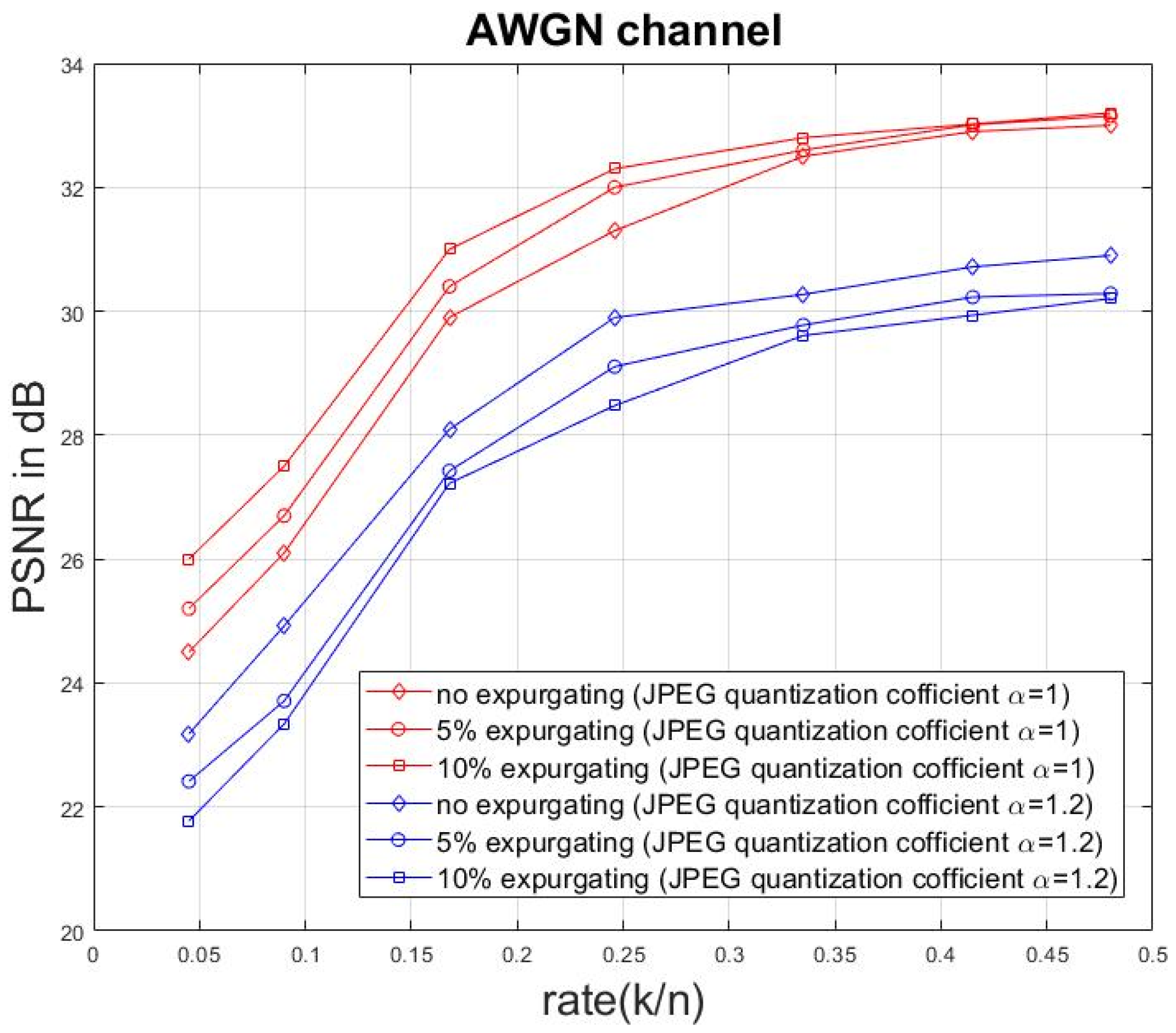

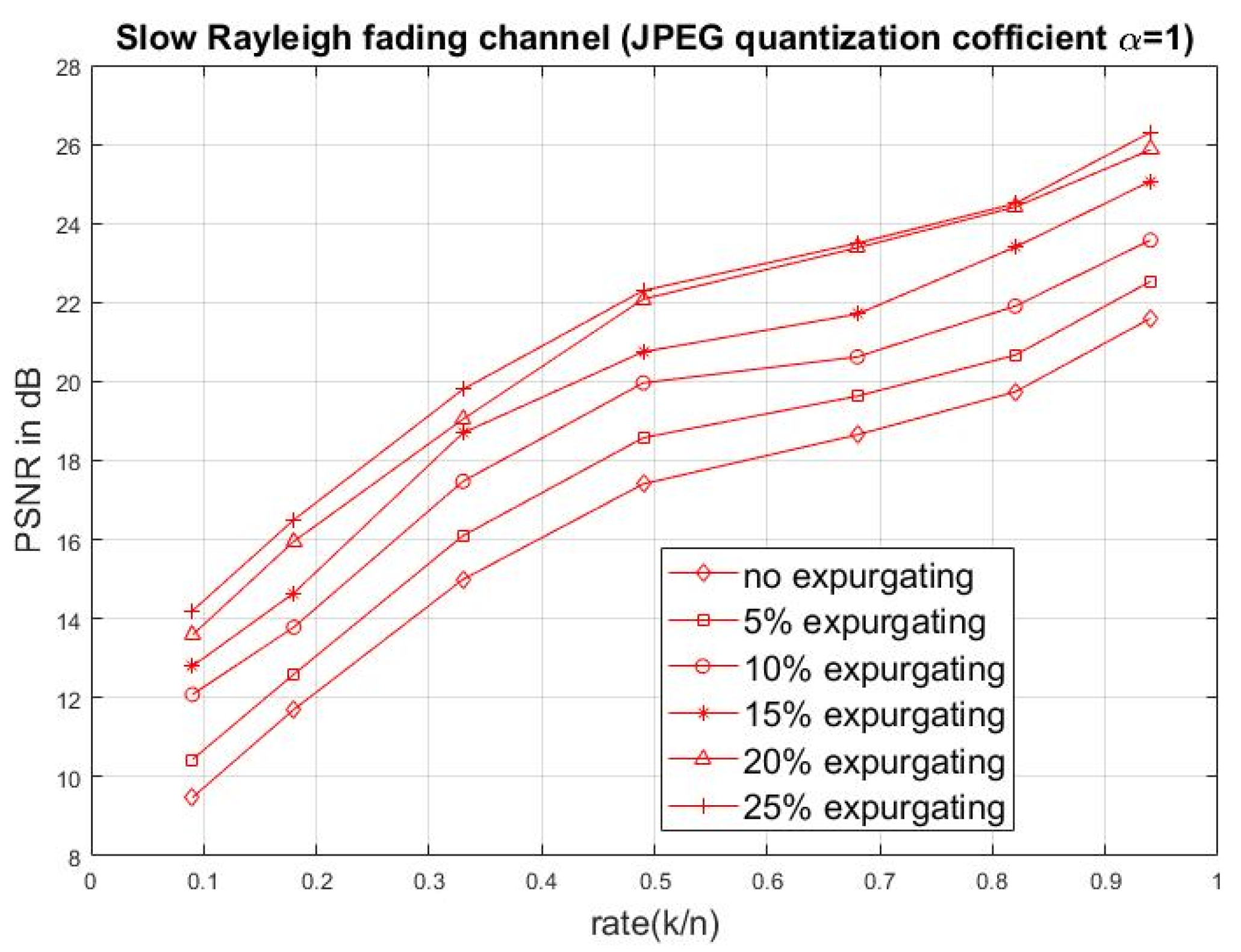

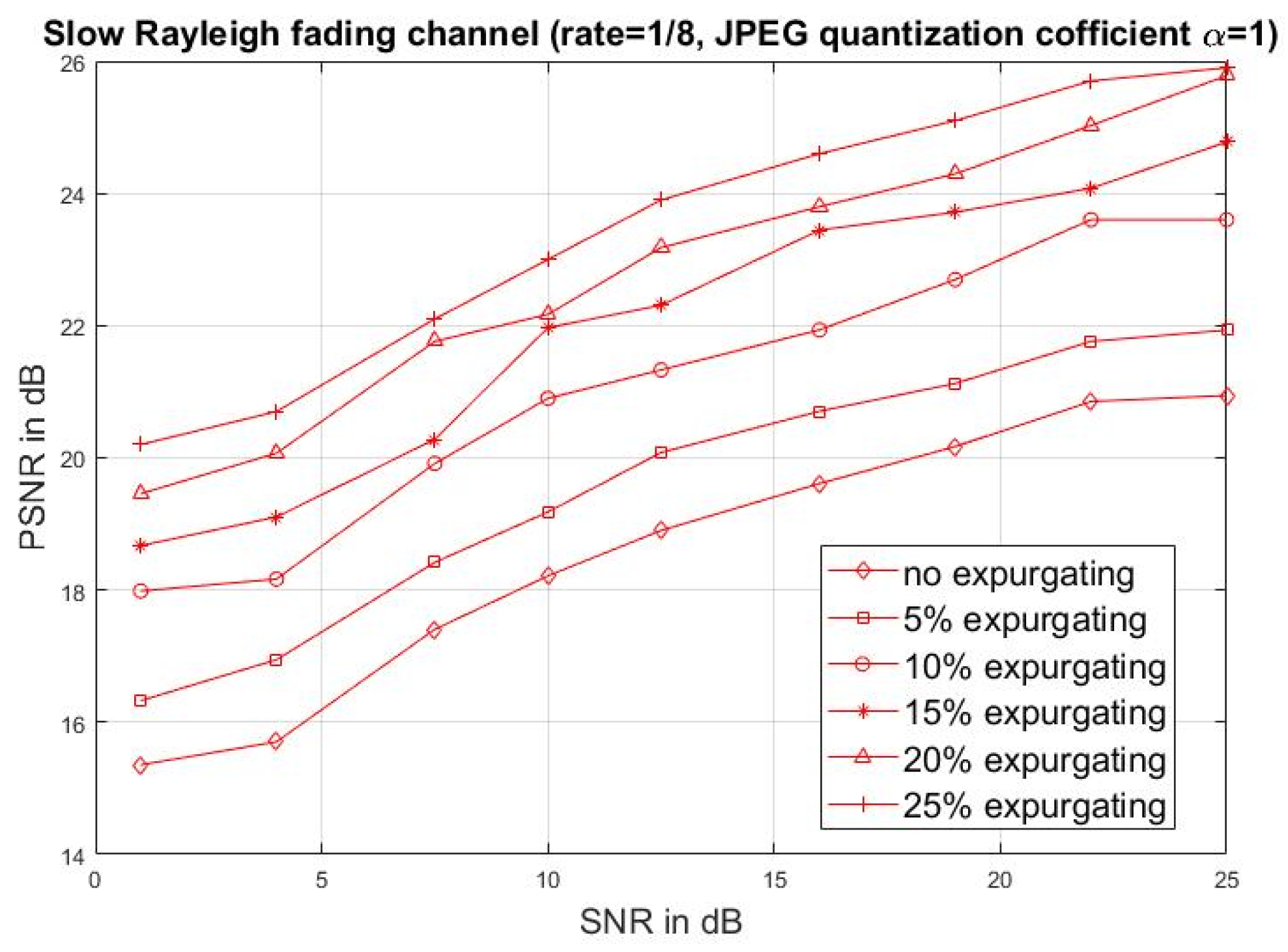

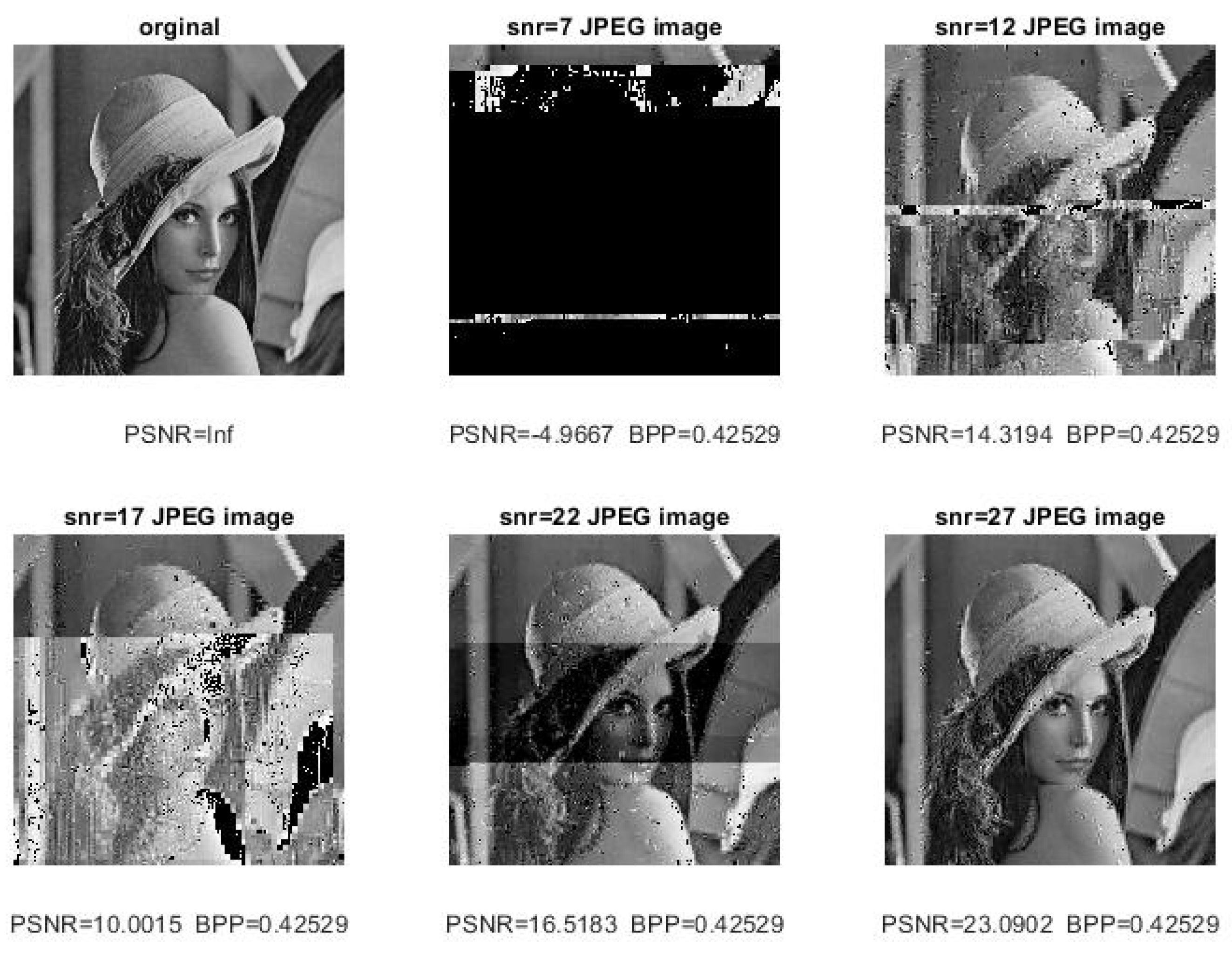

4. Simulated Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest



© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, Y.-C.; Wang, J.-J.; Yang, S.-C.; Chen, C.-C. Modified Joint Source–Channel Trellises for Transmitting JPEG Images over Wireless Networks. Appl. Sci. 2024, 14, 2578. https://doi.org/10.3390/app14062578
