Mobile Robot for Security Applications in Remotely Operated Advanced Reactors

Abstract

1. Introduction

2. Research Methodology

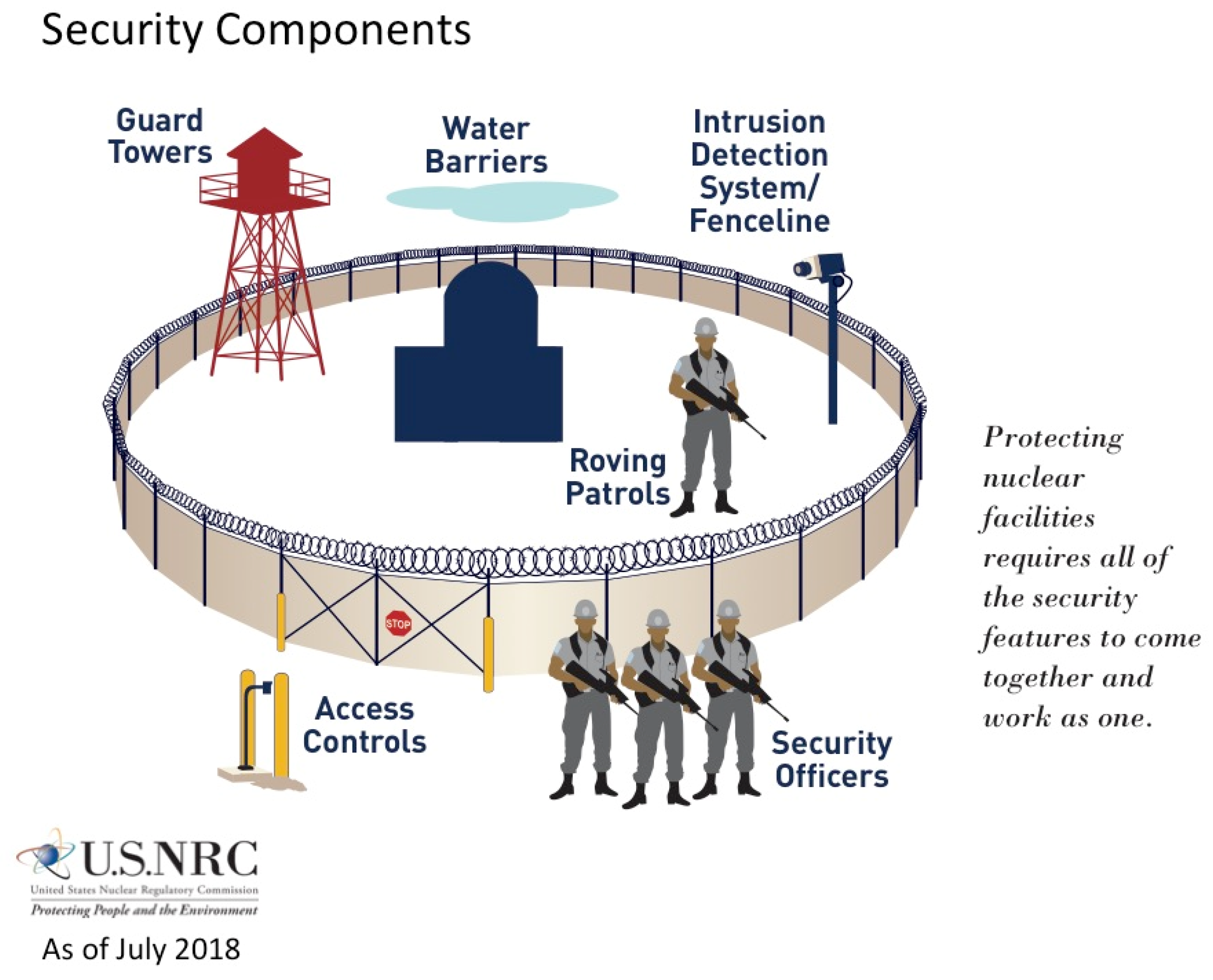

3. Performance Requirements for Physical Security

- Establishment and maintenance of a security organization;

- Usage of security equipment and technology;

- Proper training and qualification of security personnel;

- Implementation of predetermined response plans and strategies;

- Protection of digital computer and communication systems and networks.

- Prevent unauthorized access of persons and vehicles to material access areas and vital areas;

- Permit only authorized activities and conditions within protected areas, material access areas, and vital areas;

- Permit only authorized placement and movement of strategic special nuclear material within material access areas;

- Permit the removal of only authorized and confirmed forms and amounts of strategic special nuclear material from material access areas;

- Provide authorized access and assure detection of and response to unauthorized penetrations of the protected area to satisfy the general performance requirements of 10 CFR 73.20(a);

- Ensure a prompt response by the response force under the physical protection program.
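The access-control requirements above can be made concrete for a patrol robot. The Python sketch below checks each person or vehicle the robot detects against a clearance list and flags the ones that should be reported. It is a minimal illustration under stated assumptions. The area names, the numeric clearance ladder, and the `Detection`, `is_authorized`, and `alarms` names are all hypothetical. Neither 10 CFR 73.45 nor any robot vendor API defines them.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional

# Hypothetical clearance ladder (an assumption, not regulatory text),
# ordered from least to most restricted area.
AREA_LEVELS = {"protected_area": 1, "material_access_area": 2, "vital_area": 3}


@dataclass(frozen=True)
class Detection:
    """A person or vehicle observed by a patrol robot (illustrative record)."""

    badge_id: Optional[str]  # None when no credential could be read
    area: str  # one of the keys in AREA_LEVELS


def is_authorized(det: Detection, clearances: dict[str, int]) -> bool:
    """True if the detected badge holds a clearance level for the detected area."""
    if det.badge_id is None:
        return False  # unidentified entities are treated as unauthorized
    # Unknown badges default to level 0, i.e. cleared for nothing.
    return clearances.get(det.badge_id, 0) >= AREA_LEVELS[det.area]


def alarms(detections: list[Detection], clearances: dict[str, int]) -> list[Detection]:
    """Return the detections that should be reported to the response force."""
    return [d for d in detections if not is_authorized(d, clearances)]


if __name__ == "__main__":
    clearances = {"B-101": 3, "B-202": 1}  # hypothetical badge -> highest cleared level
    observed = [
        Detection("B-101", "vital_area"),
        Detection("B-202", "vital_area"),
        Detection(None, "protected_area"),
    ]
    for d in alarms(observed, clearances):
        print("ALARM:", d)
```

The sketch treats an unreadable credential as unauthorized. This is a deliberately conservative choice: in a protected area, a missed alarm costs more than a false one.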

4. Mobile Robot Categories

4.1. Stationary (Arm/Manipulator)

4.2. Land-Based

4.2.1. Wheeled Mobile Robot (WMR)

4.2.2. Walking (Legged) Mobile Robot

4.2.3. Tracked Slip/Skid Locomotion

4.2.4. Hybrid

4.3. Air-Based/Unmanned Aerial Vehicles

4.4. Water-Based/Unmanned Water Vehicles

4.5. Other

5. Basic Functionality and Capability of a Robot

6. Robots’ Way of Formalizing Problems

7. Qualitative and Quantitative Performance Assessment of a Robot

8. Parameterization of the Performance of a Robot

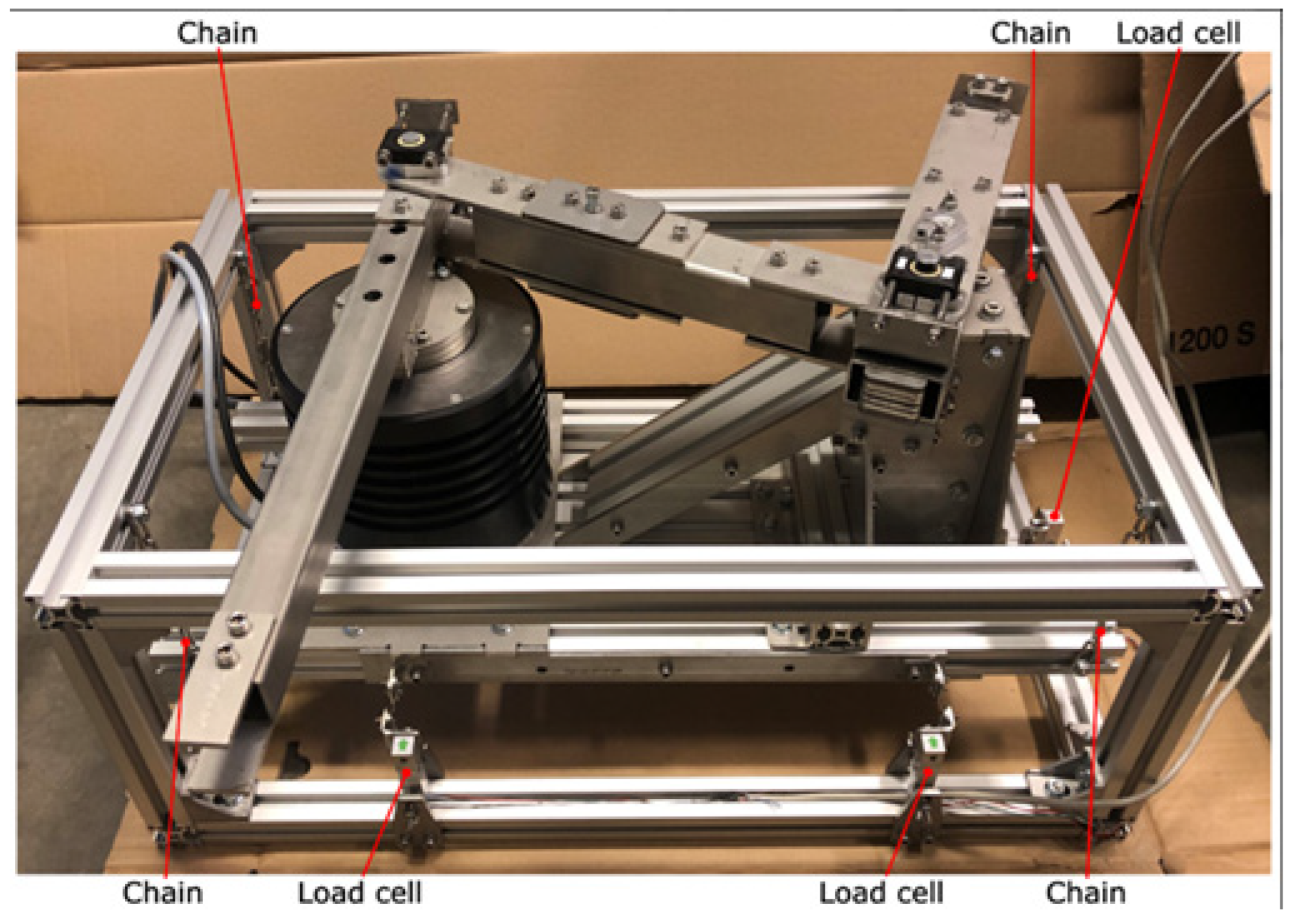

8.1. Robot Performance

8.1.1. Self-Awareness

8.1.2. Human Awareness

8.2. System Performance

8.3. Autonomy

8.4. Human–Robot Interaction (HRI)

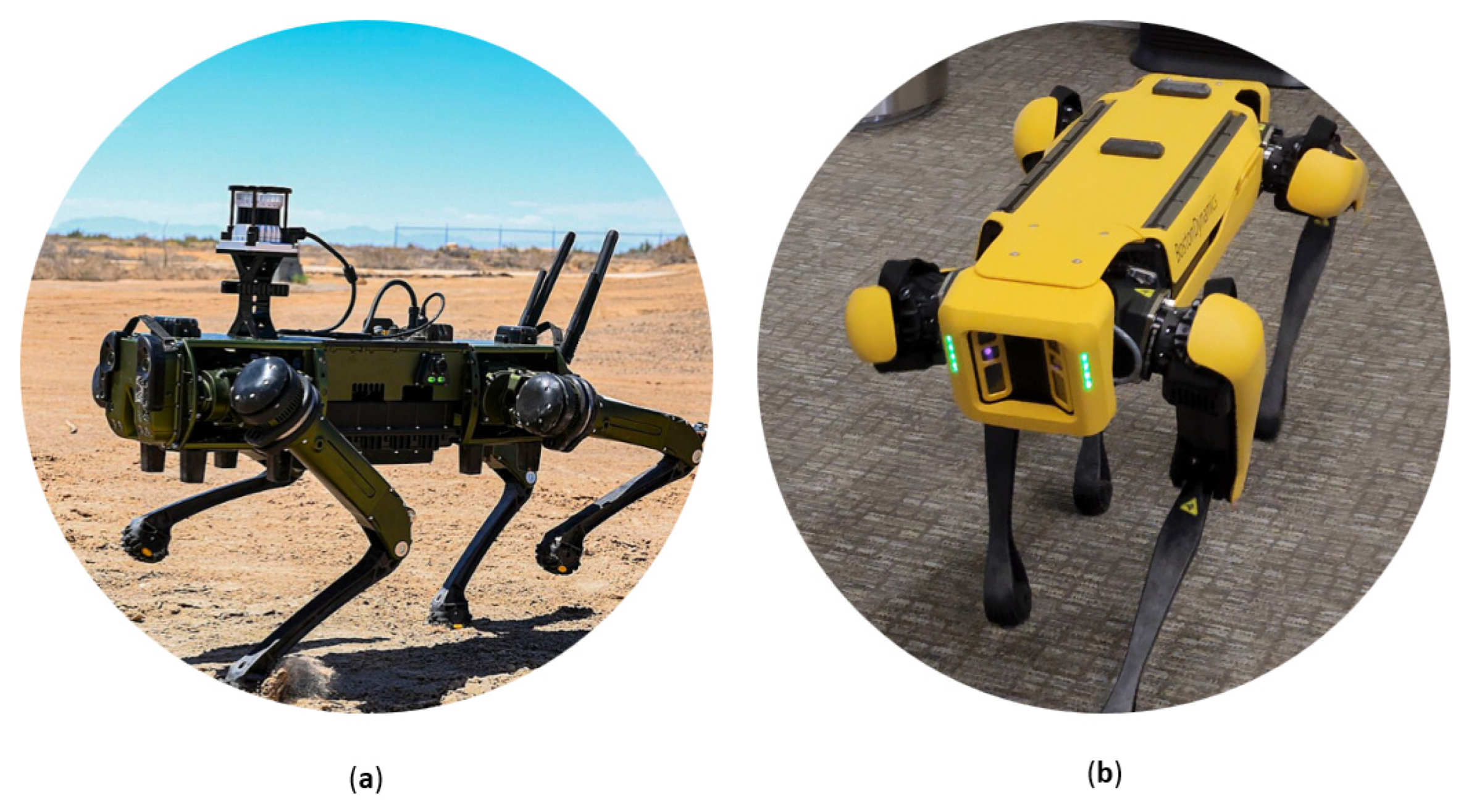

9. Introduction to Mobile Dog Robots

9.1. Operational Capabilities

9.1.1. Payload and Tracking

9.1.2. Speed and Distance

9.1.3. Battery Capabilities

9.1.4. Safety and Protection

9.1.5. Environmental Conditions

9.2. Sensory Capabilities

9.2.1. Rad Sensors

9.2.2. Navigation and Object Detection

9.3. Technical Capabilities

9.3.1. Computing Power

9.3.2. Communication

9.3.3. Integration

9.4. Dexterity Capabilities

9.4.1. Manipulator

9.4.2. Blind and Inverted Locomotion

9.5. Software Capabilities

Simulation

10. Research Roadmap

11. Discussions

12. Conclusions and Future Works

- (a) Utilizing the Robot Operating System (ROS) to create a simulation environment for object detection;

- (b) Programming a robot with ROS and using it for object detection;

- (c) Introducing different sensors on an existing robot to observe how each sensor behaves, then collecting and analyzing the resulting data.
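The third item above amounts to logging each added sensor's output and characterizing its behavior. One simple starting point for that analysis is a per-sensor summary, sketched below in Python. The sketch assumes the readings have already been collected into one list per sensor. The sensor names and expected ranges in the example are hypothetical placeholders, not values from this study.

```python
from __future__ import annotations

from statistics import mean, pstdev


def summarize(
    readings: dict[str, list[float]],
    expected: dict[str, tuple[float, float]],
) -> dict[str, dict[str, float]]:
    """Per-sensor statistics plus the fraction of samples outside an expected range.

    Sensors without an entry in `expected` are treated as having no bounds.
    Sensors with no samples are skipped (a silent sensor is a finding in itself).
    """
    summary: dict[str, dict[str, float]] = {}
    for sensor, values in readings.items():
        if not values:
            continue
        low, high = expected.get(sensor, (float("-inf"), float("inf")))
        outside = sum(1 for v in values if v < low or v > high)
        summary[sensor] = {
            "n": len(values),
            "mean": mean(values),
            "std": pstdev(values),  # population standard deviation
            "min": min(values),
            "max": max(values),
            "frac_out_of_range": outside / len(values),
        }
    return summary


if __name__ == "__main__":
    # Hypothetical sensors and readings, for illustration only.
    data = {
        "dose_rate_uSv_h": [0.10, 0.12, 0.11, 0.35],
        "surface_temp_C": [21.0, 21.4, 20.9],
    }
    limits = {"dose_rate_uSv_h": (0.0, 0.3)}
    for name, stats in summarize(data, limits).items():
        print(name, stats)
```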

- (a) Setting up the experimental facility required to perform the research;

- (b) Performing a pilot demonstration with a dog robot at an existing or future nuclear power plant (NPP) site;

- (c) Engaging with physical security stakeholders at currently operating plants and at advanced reactor developers;

- (d) Developing a table-top model of a typical NPP protected area, indicating key elements of the target set and the physical security posture.

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Capabilities | Spot | Ghost | ANYmal |
|---|---|---|---|
| Basic Information | | | |
| Base Price | USD 160,000 | USD 207,600 | USD 150,000 |
| Maintenance/service life | No maintenance plan; only battery changes needed. Two filter meshes (check their condition). Foot pads (single bolt). 1-year warranty service plan; self-charging | Not available (maintenance is per contract). V60 Q-year PREMIUM Maintenance + Preventive Care + Software Maintenance, version number 8.6.4 | Not available |
| Repairable; components available in the U.S. | From Boston Dynamics | Available to maximum extent | No |
| Operational Capabilities | | | |
| Payload and Tracking | | | |
| Payload | Up to 14 kg | Approx. 9 kg (20 lb) with base battery | 10 kg (nominal), 15 kg (reduced performance) |
| Location tracking | HR-GPS, Orion RTLS tag, robot odometry | Integrated GPS waypoint navigation and playback from the operator control unit (OCU) | High-precision autonomous navigation with centimeter accuracy |
| Speed and Distance | | | |
| Speed, agility | 1.6 m/s (9 km in 1.5 h) | Standard walk: 1 m/s (3.3 ft/s); fast walk: 1.6 m/s (5.2 ft/s); run: 2.2 m/s (7 ft/s); sprint: 3 m/s (10 ft/s) | Max. speed: 1.3 m/s; safe and efficient operation: 0.75 m/s |
| Maximum distance | 17 km on full charge; 1.5 h if standing or walking, 3 h if lying down | 10 km; 3 h at fast speed | 4 km at 1.3 m/s |
| Battery Capabilities | | | |
| Type and capacity | 564 Wh | 1250 Wh Li-ion | Swappable Li-ion battery, UN 38.3 certified, 932.4 Wh |
| Running time and range | Range on full charge: 17 km; operating time: 1.5 h (standing/walking), 3 h (lying down) | 6.8–12.8 km (4.2–8 mi) per charge; 8–10 h of mixed use; 21 h of standby | Battery life: 90–120 min; range: 4 km on full charge, up to 2 km for a typical inspection; speed may be reduced on rough or slippery terrain |
| Recharge time | 60 min | Not available | 3 h for full charge; 100 min for 70% quick charge |
| Dimensions | 380 × 315 × 178 mm (15 × 12.4 × 7 in), L × W × H | Not available | 466 × 136 × 78 mm (18.35 × 5.35 × 3.07 in) |
| Weight | 5.2 kg (11.5 lb) | 7.4 kg (16.3 lb) | 5.5 kg (12.13 lb) |
| Ingress protection | IP54 | IP67 | IP67 |
| Safety and Protection | | | |
| Emergency stop | Not available | Not available | Push button on robot to disconnect the power supply |
| Remote-control emergency stop | Not available | Not available | Push button on remote control to disable the actuators |
| Compliance | Designed according to ISO 12100 [84] (risk assessment and reduction methodology) and IEC 60204-1 [85] (electrical safety) | Not available | CE marked, complying with Machinery Directive 2006/42/EC (MD), EMC Directive 2014/30/EU (EMCD) [86], Low Voltage Directive 2014/35/EU, Radio Equipment Directive 2014/53/EU (RED) [87], and Restriction of Hazardous Substances in Electrical and Electronic Equipment (RoHS) Directive 2011/65/EU [88] |
| Environmental Conditions | | | |
| Temperature | −20 °C to 45 °C | −40 °C to 55 °C (−40 °F to 131 °F) | Specified: 0–40 °C (32–104 °F); typical: −10–50 °C (14–122 °F) |
| Lighting conditions | Ambient light required | Not available | No light required for autonomous operation and inspection; low light (min. 20 lux) needed for automatic docking and teleoperation |
| Sensory Capabilities | | | |
| Rad Sensors | | | |
| Dosimetry | RDS-32 m + supported probes for RDS-32; AccuRad PRD; SPIR-Explorer; internal GMs | No dosimetry; interface package; open source; ROS/ROS2 API | Not available |
| Extra sensory | Measurement capabilities: alpha/beta CAM; environmental sensors; toxic chemical sensing | CBRN (chemical, biological, radiological, and nuclear) | Ultrasonic microphone: sampling frequency of 0–384 kHz; spotlight: 189 lm continuous, 3790 lm for a short time; range of motion: pan ±165°, tilt ±90°/+180°, speed 340°/s |
| Spectroscopy | SPIR-Explorer NaI/LaBr3; Data Analyst with detector, e.g., GR-1 CZT; H3D M100/M400; Osprey MCA; MicroGe | Raman spectroscopy | Not available |
| Navigation and Detection | | | |
| Vision | HD video; FLIR/IR video; 3D depth imaging and mapping | RGB camera; thermal sensors; PTZ camera | Zoom camera; default: 1080 × 1920 px, 15 FPS; maximum: 2160 × 3840 px (QFHD/4K), 30 FPS; 20× optical zoom; 70.2° to 4.1° FOV (horizontal) |
| LIDAR | 3D LIDAR scanning station used for surveying and 3D digital reconstruction; drawback: requires an entire payload space | Ouster LIDAR | 16 channels, 300k pts/s, 10 Hz sweep; range: 0.4–100 m, 3 cm accuracy; FOV: 360° (H) × +15.0° to −15.0° (V); laser: 905 nm, eye-safe Class 1 |
| Depth camera | Not available | 87° × 58° FOV, 640 × 480 at 90 FPS (fore, aft, and belly) | 0.3–3 m range; 87.3° × 58.1° × 95.3° depth FOV (horizontal/vertical/diagonal); Class 1 laser product under EN/IEC 60825-1, Edition 3 (2014) |
| Thermal camera | Price: USD 55k; 30× zoom; 360° view; built-in lighting. Thermal sensor: 640 × 512 resolution, 69° × 56° FOV, −5 °C temp. | Thermal (IR) surround sensor: 4 × 34° FOV lenses, 640 × 512 resolution, 30 Hz, NVIDIA GPU; waterproof | Thermal camera: −40 to +550 °C (radiometry), 336 × 256 px, 46° FOV (horizontal) |
| Technical Capabilities | | | |
| Computing power | Intel Core i5, 8th Gen (Whiskey Lake-U), CCG lifecycle | NVIDIA Xavier edge computing (32 GB RAM), as used in autonomous vehicles to deploy AI and automation applications | 2 × 8th Gen Intel Core i7 (6-core) CPU with 2 × 8 GB RAM |
| RAM and data storage | 16 GB DDR4 2666 MHz; 512 GB SSD | NVIDIA Xavier 32 GB RAM; 2 TB NVMe SSD | 2 × 8 GB RAM; 2 × 240 GB SSD |
| Communication and Integration | | | |
| Communication | Gigabit connection to the robot for real-time communication; Ethernet connector; low-voltage communications connector (TTL serial); GPS antenna; USB | Wi-Fi 6, 4G, 5G, and FirstNet radios | Wi-Fi: built-in module, 2.4/5 GHz, 802.11ac Wave 2, access point or client mode; 4G LTE: add-on module, LTE Cat. 12 |
| Integration (low-level/high-level API) | Manipulator integration and API options (joint-space control and end-effector control); third-party ROS support available | Integrate any sensor, radio, or electronics using the industry-standard ROS/ROS2 framework; C/C++, ROS, ROS2, MAVLink-compatible, with 1:1 Gazebo simulation support | ANYmal API: gRPC-based API for mission control data integration |
| Dexterity Capabilities | | | |
| Manipulator | | | |
| Arm/manipulator | Yes; separate purchase (USD 80k), with constraints. Gripper with 4K camera, 28 in in front of the robot. Grasping, picking, and placing; constrained manipulation; door opening | HDT Global customizable arm | Not available |
| Blind and Inverted Locomotion | | | |
| Blind locomotion (in dark, snow, or tall grass) | Dark: manual control with ground-floor detection turned off; snow: can navigate | Blind-mode operation in difficult terrain, at high speed, in tall grass, and in confined spaces | Can operate autonomously under no-light conditions |
| Operates in inverted motion/immobilization | Yes | Yes | Yes |
| Software Capabilities | | | |
| Simulator | CAD model; used in ROS simulator | Yes | No |
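Appendix A lists battery capacities, runtimes, speeds, and ranges. Simple arithmetic on those entries shows whether they agree with one another. The Python sketch below performs these back-of-the-envelope checks. It assumes constant speed and full use of the nominal listed capacity, and it ignores conversion losses, reserve charge, terrain, and payload. The results are therefore rough averages, not measured values.

```python
# Back-of-the-envelope checks on nominal Appendix A figures.
# Assumes constant speed and full use of the listed capacity, so these are
# rough averages under stated assumptions, not measured performance.

FT_PER_M = 1 / 0.3048  # international foot is defined as exactly 0.3048 m


def avg_power_w(capacity_wh: float, runtime_h: float) -> float:
    """Mean electrical draw implied by draining the full capacity in runtime_h."""
    return capacity_wh / runtime_h


def distance_km(speed_m_s: float, time_h: float) -> float:
    """Distance covered at constant speed for time_h hours."""
    return speed_m_s * time_h * 3600 / 1000


def time_min(dist_km: float, speed_m_s: float) -> float:
    """Minutes needed to cover dist_km at constant speed."""
    return dist_km * 1000 / speed_m_s / 60


if __name__ == "__main__":
    print(f"Spot: {avg_power_w(564, 1.5):.0f} W average over 1.5 h")
    print(f"ANYmal: {avg_power_w(932.4, 2.0):.0f} to {avg_power_w(932.4, 1.5):.0f} W over 90-120 min")
    print(f"Spot at 1.6 m/s for 1.5 h: {distance_km(1.6, 1.5):.2f} km")
    print(f"ANYmal, 4 km at 1.3 m/s: {time_min(4, 1.3):.0f} min")
    print(f"Ghost sprint, 3 m/s: {3 * FT_PER_M:.1f} ft/s")
```

Under these assumptions, Spot's 564 Wh over 1.5 h implies an average draw of about 376 W. Walking at 1.6 m/s for 1.5 h covers about 8.6 km. This agrees with the "9 km in 1.5 h" entry, but it means the 17 km full-charge range cannot describe continuous walking at that speed. Ghost's 8–10 h figure covers mixed use that includes standby, so its implied average draw is not directly comparable with the other two robots.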

References

- Yadav, V.; Biersdorf, J. Utilizing FLEX Equipment for Operations and Maintenance Cost Reduction in Nuclear Power Plants; INL/EXT-19-55445; Idaho National Laboratory: Idaho Falls, ID, USA, 2019.

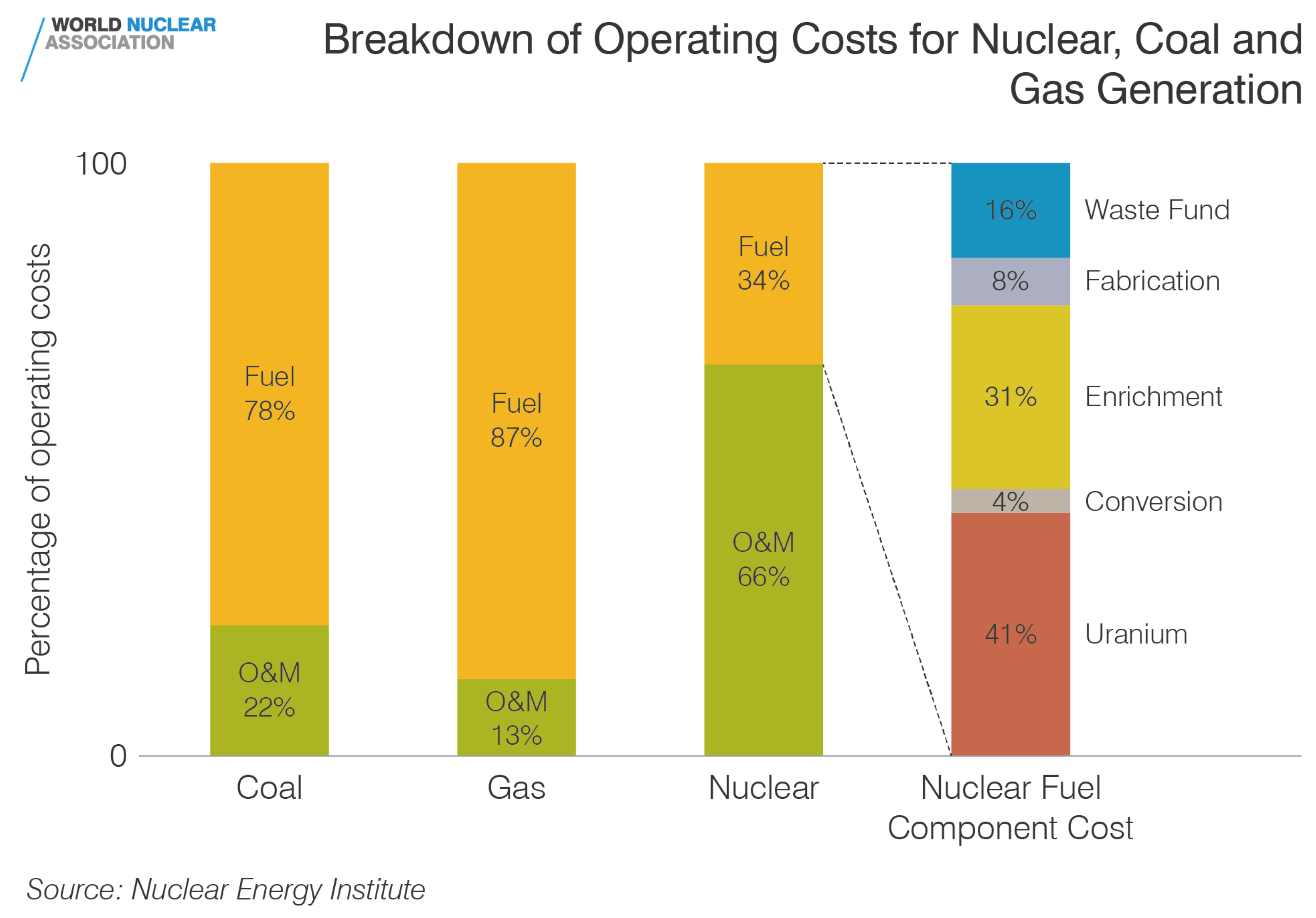

- Gallery—World Nuclear Association. Available online: https://world-nuclear.org/gallery/nuclear-power-economics-and-project-structuring-re/breakdown-of-operating-costs-for-nuclear,-coal-and.aspx (accessed on 2 March 2024).

- Garcia, M.L. Vulnerability Assessment of Physical Protection Systems; Elsevier: Amsterdam, The Netherlands, 2005.

- ISO 8373:2012; Robots and Robotic Devices—Vocabulary. International Organization for Standardization: Geneva, Switzerland, 2012.

- Kurfess, T.R. (Ed.) Robotics and Automation Handbook; CRC Press: Boca Raton, FL, USA, 2005; Volume 414.

- Zereik, E.; Bibuli, M.; Mišković, N.; Ridao, P.; Pascoal, A. Challenges and future trends in marine robotics. Annu. Rev. Control 2018, 46, 350–368.

- Zhang, F.; Marani, G.; Smith, R.N.; Choi, H.T. Future trends in marine robotics [tc spotlight]. IEEE Robot. Autom. Mag. 2015, 22, 14–122.

- Flores-Abad, A.; Ma, O.; Pham, K.; Ulrich, S. A review of space robotics technologies for on-orbit servicing. Prog. Aerosp. Sci. 2014, 68, 1–26.

- Yoshida, K. Achievements in space robotics. IEEE Robot. Autom. Mag. 2009, 16, 20–28.

- Hirzinger, G.; Brunner, B.; Dietrich, J.; Heindl, J. Sensor-based space robotics-ROTEX and its telerobotic features. IEEE Trans. Robot. Autom. 1993, 9, 649–663.

- Dario, P.; Guglielmelli, E.; Allotta, B.; Carrozza, M.C. Robotics for medical applications. IEEE Robot. Autom. Mag. 1996, 3, 44–56.

- Davies, B. A review of robotics in surgery. Proc. Inst. Mech. Eng. Part H J. Eng. Med. 2000, 214, 129–140.

- Taylor, R.H.; Menciassi, A.; Fichtinger, G.; Fiorini, P.; Dario, P. Medical robotics and computer-integrated surgery. In Handbook of Robotics; Springer: Berlin/Heidelberg, Germany, 2016; pp. 1657–1684.

- Fu, K.S.; Gonzalez, R.C.; Lee, C.G.; Freeman, H. Robotics: Control, Sensing, Vision, and Intelligence; McGraw-Hill: New York, NY, USA, 1987; Volume 1.

- Moore, T. Robots for nuclear power plants. IAEA Bull. 1985, 27, 31–38.

- Iqbal, J.; Tahir, A.M.; ul Islam, R. Robotics for nuclear power plants—Challenges and future perspectives. In Proceedings of the 2012 2nd International Conference on Applied Robotics for the Power Industry (CARPI), Zurich, Switzerland, 11–13 September 2012; pp. 151–156.

- Chen, Z.; Li, J.; Wang, S.; Wang, J.; Ma, L. Flexible gait transition for six wheel-legged robot with unstructured terrains. Robot. Auton. Syst. 2022, 150, 103989.

- Chen, Z.; Li, J.; Wang, J.; Wang, S.; Zhao, J.; Li, J. Towards hybrid gait obstacle avoidance for a six wheel-legged robot with payload transportation. J. Intell. Robot. Syst. 2021, 102, 60.

- Wang, S.; Chen, Z.; Li, J.; Wang, J.; Li, J.; Zhao, J. Flexible motion framework of the six wheel-legged robot: Experimental results. IEEE/ASME Trans. Mechatron. 2021, 27, 2246–2257.

- Haynes, G.C.; Rizzi, A.A. Gaits and gait transitions for legged robots. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation ICRA 2006, Orlando, FL, USA, 15–19 May 2006; pp. 1117–1122.

- Wermelinger, M.; Fankhauser, P.; Diethelm, R.; Krüsi, P.; Siegwart, R.; Hutter, M. Navigation planning for legged robots in challenging terrain. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 1184–1189.

- Moeller, R.; Deemyad, T.; Sebastian, A. Autonomous navigation of an agricultural robot using RTK GPS and Pixhawk. In Proceedings of the 2020 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 2–3 October 2020; pp. 1–6.

- Deemyad, T.; Moeller, R.; Sebastian, A. Chassis design and analysis of an autonomous ground vehicle (AGV) using genetic algorithm. In Proceedings of the 2020 Intermountain Engineering, Technology and Computing (IETC), Orem, UT, USA, 2–3 October 2020; pp. 1–6.

- Moderator, A. Security and Nuclear Power Plants: Robust and Significant. In U.S. NRC Blog; 23 August 2013. Available online: https://public-blog.nrc-gateway.gov/2013/08/23/security-and-nuclear-power-plants-robust-and-significant/ (accessed on 12 March 2024).

- U.S. Nuclear Regulatory Commission. Code of Federal Regulations, Title 10, Part 73, Section 45. Performance Capabilities for Fixed Site Physical Protection Systems. Available online: https://www.nrc.gov/reading-rm/doc-collections/cfr/part073/part073-0045.html (accessed on 12 March 2024).

- Siegwart, R.; Nourbakhsh, I.R.; Scaramuzza, D. Introduction to Autonomous Mobile Robots, 2nd ed.; The MIT Press: Cambridge, MA, USA; London, UK, 2011.

- López, J.; Pérez, D.; Zalama, E.; Gómez-García-Bermejo, J. Bellbot—A hotel assistant system using mobile robots. Int. J. Adv. Robot. Syst. 2013, 10, 40.

- Nagatani, K.; Kiribayashi, S.; Okada, Y.; Otake, K.; Yoshida, K.; Tadokoro, S.; Nishimura, T.; Yoshida, T.; Koyanagi, E.; Fukushima, M.; et al. Emergency response to the nuclear accident at the Fukushima Daiichi Nuclear Power Plants using mobile rescue robots. J. Field Robot. 2013, 30, 44–63.

- Rubio, F.; Valero, F.; Llopis-Albert, C. A review of mobile robots: Concepts, methods, theoretical framework, and applications. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419839596.

- Ajwad, S.A.; Iqbal, J.; Ullah, M.I.; Mehmood, A. A systematic review of current and emergent manipulator control approaches. Front. Mech. Eng. 2015, 10, 198–210.

- Zomerdijk, M.J.J.; van der Wijk, V. Structural Design and Experiments of a Dynamically Balanced Inverted Four-Bar Linkage as Manipulator Arm for High Acceleration Applications. Actuators 2022, 11, 131.

- Surati, S.; Hedaoo, S.; Rotti, T.; Ahuja, V.; Patel, N. Pick and place robotic arm: A review paper. Int. Res. J. Eng. Technol. 2021, 8, 2121–2129.

- Yudha, H.M.; Dewi, T.; Risma, P.; Oktarina, Y. Arm robot manipulator design and control for trajectory tracking; a review. In Proceedings of the 2018 5th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Malang, Indonesia, 16–18 October 2018; pp. 304–309.

- Gao, X.; Li, J.; Fan, L.; Zhou, Q.; Yin, K.; Wang, J.; Song, C.; Huang, L.; Wang, Z. Review of Wheeled Mobile Robots’ Navigation Problems and Application Prospects in Agriculture. IEEE Access 2018, 6, 49248–49268.

- Bae, B.; Lee, D.H. Design of a Four-Wheel Steering Mobile Robot Platform and Adaptive Steering Control for Manual Operation. Electronics 2023, 12, 3511.

- Mittal, S.; Rai, J.K. Wadoro: An autonomous mobile robot for surveillance. In Proceedings of the 2016 IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES), Delhi, India, 4–6 July 2016; pp. 1–6.

- Ortigoza, R.S.; Marcelino-Aranda, M.; Ortigoza, G.S.; Guzman, V.M.H.; Molina-Vilchis, M.A.; Saldana-Gonzalez, G.; Herrera-Lozada, J.C.; Olguin-Carbajal, M. Wheeled Mobile Robots: A review. IEEE Lat. Am. Trans. 2012, 10, 2209–2217.

- De Luca, A.; Oriolo, G.; Vendittelli, M. Control of Wheeled Mobile Robots: An Experimental Overview. In Ramsete; Lecture Notes in Control and Information Sciences; Nicosia, S., Siciliano, B., Bicchi, A., Valigi, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; Volume 270.

- Kim, S.; Wensing, P.M. Design of Dynamic Legged Robots. Found. Trends® Robot. 2017, 5, 117–190.

- Liu, J.; Tan, M.; Zhao, X. Legged robots—An overview. Trans. Inst. Meas. Control 2007, 29, 185–202.

- Roscia, F.; Cumerlotti, A.; Del Prete, A.; Semini, C.; Focchi, M. Orientation control system: Enhancing aerial maneuvers for quadruped robots. Sensors 2023, 23, 1234.

- Biswal, P.; Mohanty, P.K. Development of quadruped walking robots: A review. Ain Shams Eng. J. 2021, 12, 2017–2031.

- Bruzzone, L.; Nodehi, S.E.; Fanghella, P. Tracked locomotion systems for ground mobile robots: A review. Machines 2022, 10, 648.

- Wang, C.; Zhang, H.; Ma, H.; Wang, S.; Xue, X.; Tian, H.; Liu, P. Simulation and Validation of a Steering Control Strategy for Tracked Robots. Appl. Sci. 2023, 13, 11054.

- Dong, P.; Wang, X.; Xing, H.; Liu, Y.; Zhang, M. Design and control of a tracked robot for search and rescue in nuclear power plant. In Proceedings of the 2016 International Conference on Advanced Robotics and Mechatronics (ICARM), Macau, China, 18–20 August 2016; pp. 330–335.

- Ducros, C.; Hauser, G.; Mahjoubi, N.; Girones, P.; Boisset, L.; Sorin, A.; Jonquet, E.; Falciola, J.M.; Benhamou, A. RICA: A tracked robot for sampling and radiological characterization in the nuclear field. J. Field Robot. 2017, 34, 583–599.

- Tadakuma, K.; Tadakuma, R.; Maruyama, A.; Rohmer, E.; Nagatani, K.; Yoshida, K.; Ming, A.; Shimojo, M.; Higashimori, M.; Kaneko, M. Mechanical design of the wheel-leg hybrid mobile robot to realize a large wheel diameter. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Taipei, Taiwan, 18–22 October 2010; pp. 3358–3365.

- Vargas, G.A.; Gómez, D.J.; Mur, O.; Castillo, R.A. Simulation of a wheel-leg hybrid robot in Webots. In Proceedings of the IEEE Colombian Conference on Robotics and Automation (CCRA), Bogota, Colombia, 29–30 September 2016; pp. 1–5.

- Yokota, S.; Kawabata, K.; Blazevic, P.; Kobayashi, H. Control law for rough terrain robot with leg-type crawler. In Proceedings of the IEEE International Conference on Mechatronics and Automation, Luoyang, China, 25–28 June 2006; pp. 417–422.

- Kim, J.; Kim, Y.G.; Kwak, J.H.; Hong, D.H.; An, J. An wheel & track hybrid robot platform for optimal navigation in an urban environment. In Proceedings of the SICE Annual Conference 2010, Taipei, Taiwan, 18–21 August 2010; pp. 881–884.

- Michaud, F.; Letourneau, D.; Arsenault, M.; Bergeron, Y.; Cadrin, R.; Gagnon, F.; Legault, M.A.; Millette, M.; Paré, J.F.; Tremblay, M.C.; et al. Multi-modal locomotion robotic platform using leg-track-wheel articulations. Auton. Robot. 2005, 18, 137–156.

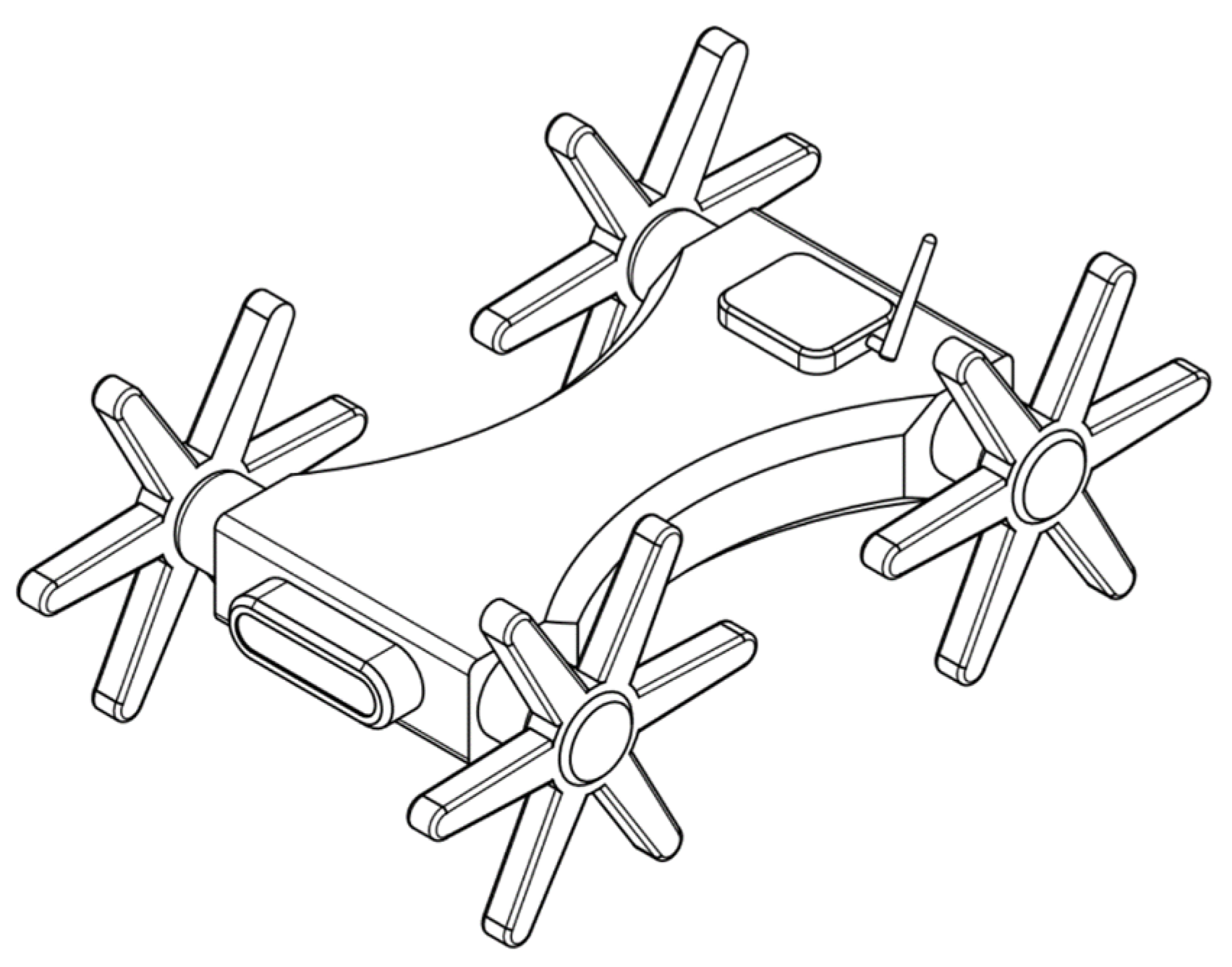

- Ordoñez-Avila, J.L.; Moreno, H.A.; Perdomo, M.E.; Calderón, I.G.C. Designing Legged Wheels for Stair Climbing. Symmetry 2023, 15, 2071.

- Arjomandi, M.; Agostino, S.; Mammone, M.; Nelson, M.; Zhou, T. Classification of Unmanned Aerial Vehicles; Report for Mechanical Engineering Class; University of Adelaide: Adelaide, Australia, 2006; pp. 1–48.

- Ahmed, F.; Mohanta, J.C.; Keshari, A.; Yadav, P.S. Recent advances in unmanned aerial vehicles: A review. Arab. J. Sci. Eng. 2022, 47, 7963–7984.

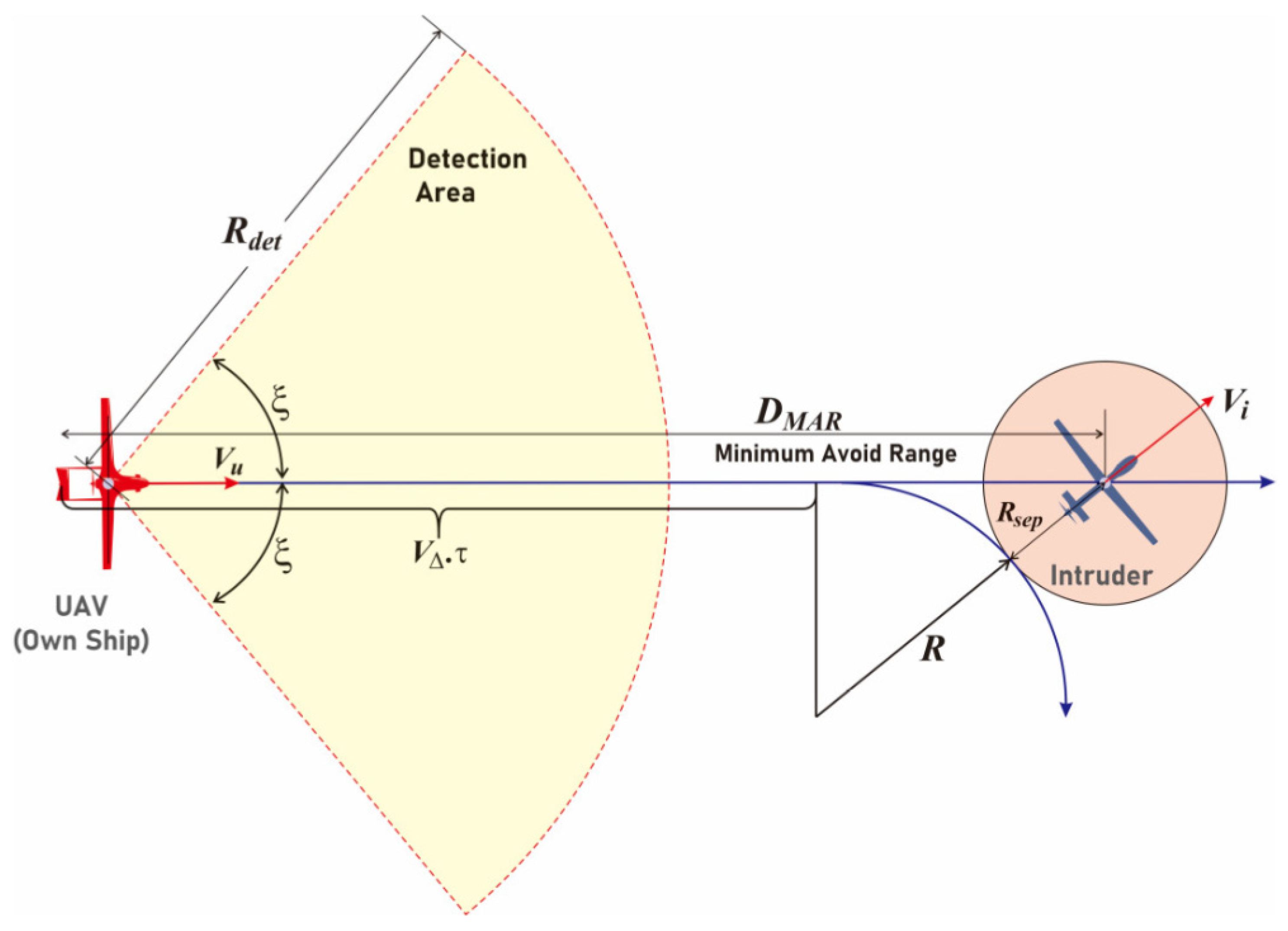

- Fitrikananda, B.P.; Jenie, Y.I.; Sasongko, R.A.; Muhammad, H. Risk Assessment Method for UAV’s Sense and Avoid System Based on Multi-Parameter Quantification and Monte Carlo Simulation. Aerospace 2023, 10, 781.

- Luukkonen, T. Modelling and control of quadcopter. Independent research project in applied mathematics. Espoo 2011, 22, 22.

- Neira, J.; Sequeiros, C.; Huamani, R.; Machaca, E.; Fonseca, P.; Nina, W. Review on unmanned underwater robotics, structure designs, materials, sensors, actuators, and navigation control. J. Robot. 2021, 2021, 5542920.

- Jorge, V.A.; Granada, R.; Maidana, R.G.; Jurak, D.A.; Heck, G.; Negreiros, A.P.; Dos Santos, D.H.; Gonçalves, L.M.; Amory, A.M. A survey on unmanned surface vehicles for disaster robotics: Main challenges and directions. Sensors 2019, 19, 702.

- Liu, G.; Zhang, S.; Ma, G.; Pan, Y. Path Planning of Unmanned Surface Vehicle Based on Improved Sparrow Search Algorithm. J. Mar. Sci. Eng. 2023, 11, 2292.

- Akib, A.; Tasnim, F.; Biswas, D.; Hashem, M.B.; Rahman, K.; Bhattacharjee, A.; Fattah, S.A. Unmanned floating waste collecting robot. In Proceedings of the TENCON 2019–2019 IEEE Region 10 Conference (TENCON), Kochi, India, 17–20 October 2019; pp. 2645–2650.

- Xu, X.; Wang, C.; Xie, H.; Wang, C.; Yang, H. Dual-Loop Control of Cable-Driven Snake-like Robots. Robotics 2023, 12, 126.

- Soto, F.; Wang, J.; Ahmed, R.; Demirci, U. Medical micro/nanorobots in precision medicine. Adv. Sci. 2020, 7, 2002203.

- Martinez-Sanchez, D.E.; Sandoval-Castro, X.Y.; Cruz-Santos, N.; Castillo-Castaneda, E.; Ruiz-Torres, M.F.; Laribi, M.A. Soft Robot for Inspection Tasks Inspired on Annelids to Obtain Peristaltic Locomotion. Machines 2023, 11, 779.

- Khatib, O.; Yokoi, K.; Brock, O.; Chang, K.; Casal, A. Robots in human environments: Basic autonomous capabilities. Int. J. Robot. Res. 1999, 18, 684–696.

- Khatib, O.; Roth, B. New robot mechanisms for new robot capabilities. In Proceedings of the IROS, Osaka, Japan, 3–5 November 1991; pp. 44–49.

- Arbib, M.A. (Ed.) The Handbook of Brain Theory and Neural Networks, 2nd ed.; MIT Press: Cambridge, MA, USA, 2002.

- Nebel, B.; Dornhege, C.; Hertle, A. How much does a household robot need to know in order to tidy up. In Proceedings of the AAAI Workshop on Intelligent Robotic Systems, Bellevue, WA, USA, 14–15 July 2013.

- Ivaldi, S.; Lyubova, N.; Droniou, A.; Gerardeaux-Viret, D.; Filliat, D.; Padois, V.; Sigaud, O.; Oudeyer, P.Y. Learning to recognize objects through curiosity-driven manipulation with the iCub humanoid robot. In Proceedings of the 2013 IEEE Third Joint International Conference on Development and Learning and Epigenetic Robotics (ICDL), Osaka, Japan, 18–22 August 2013; pp. 1–8.

- Messina, E.R.; Jacoff, A.S. Measuring the performance of urban search and rescue robots. In Proceedings of the 2007 IEEE Conference on Technologies for Homeland Security, Woburn, MA, USA, 16–17 May 2007; pp. 28–33.

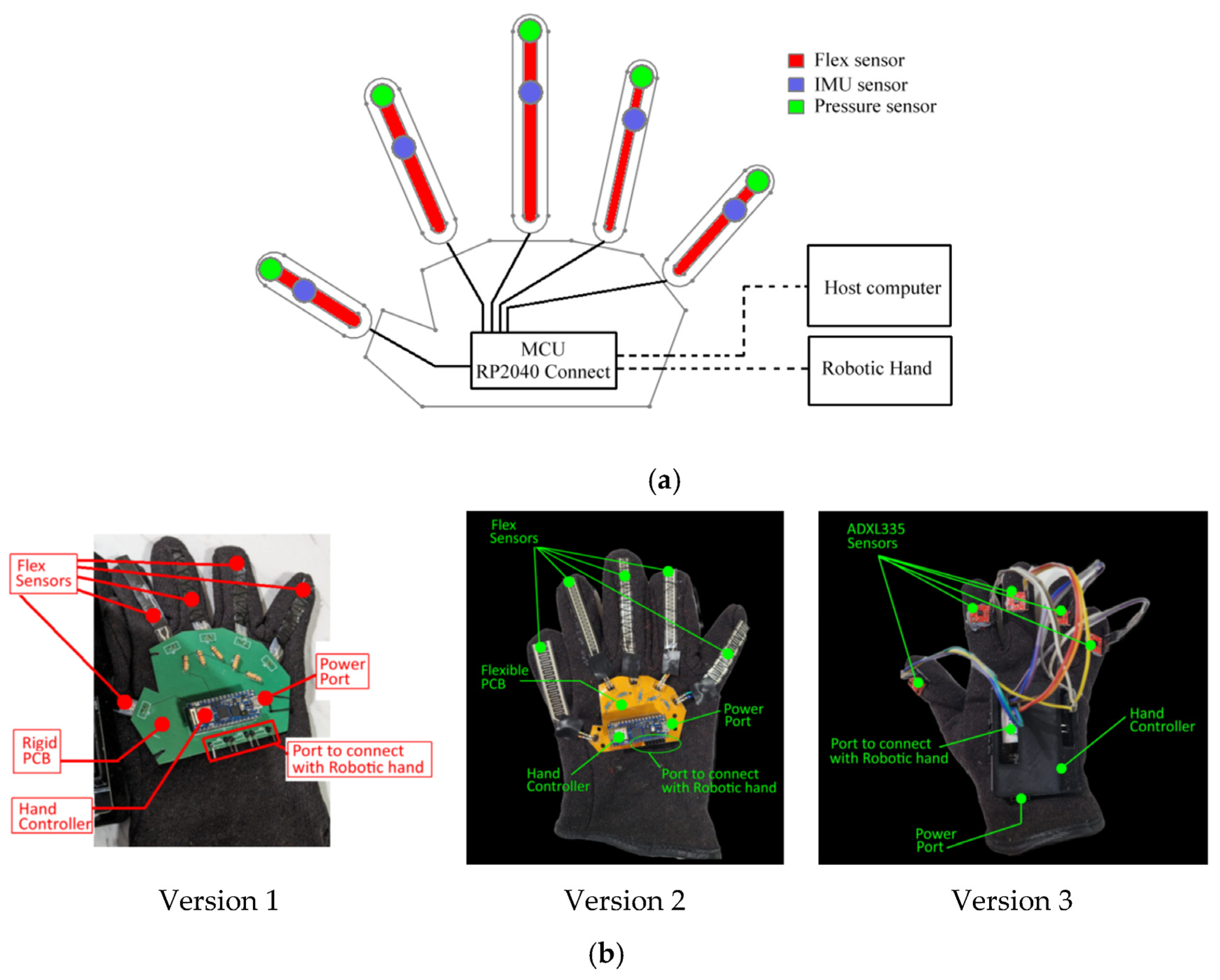

- Singh, R.; Mozaffari, S.; Akhshik, M.; Ahamed, M.J.; Rondeau-Gagné, S.; Alirezaee, S. Human–Robot Interaction Using Learning from Demonstrations and a Wearable Glove with Multiple Sensors. Sensors 2023, 23, 9780.

- Smithers, T. On quantitative performance measures of robot behaviour. Robot. Auton. Syst. 1995, 15, 107–133.

- Madhavan, R.; Lakaemper, R.; Kalmár-Nagy, T. Benchmarking and standardization of intelligent robotic systems. In Proceedings of the 2009 International Conference on Advanced Robotics, Munich, Germany, 22–26 June 2009; pp. 1–7.

- Khouja, M.J. An options view of robot performance parameters in a dynamic environment. Int. J. Prod. Res. 1999, 37, 1243–1257.

- Lampe, A.; Chatila, R. Performance measure for the evaluation of mobile robot autonomy. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation ICRA 2006, Orlando, FL, USA, 15–19 May 2006; pp. 4057–4062.

- Huang, H.M.; Messina, E.; Wade, R.; English, R.; Novak, B.; Albus, J. Autonomy measures for robots. In Proceedings of the ASME International Mechanical Engineering Congress and Exposition, Anaheim, CA, USA, 13–19 November 2004; Volume 47063, pp. 1241–1247.

- Steinfeld, A.; Fong, T.; Kaber, D.; Lewis, M.; Scholtz, J.; Schultz, A.; Goodrich, M. Common metrics for human-robot interaction. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction, Salt Lake City, UT, USA, 2–3 March 2006; pp. 33–40.

- Schaefer, K.E. Measuring trust in human robot interactions: Development of the “trust perception scale-HRI”. In Robust Intelligence and Trust in Autonomous Systems; Springer: Boston, MA, USA, 2016; pp. 191–218.

- Olsen, D.R.; Goodrich, M.A. Metrics for evaluating human-robot interactions. In Proceedings of the PERMIS, Gaithersburg, MD, USA, 16–18 September 2003; Volume 2003, p. 4.

- Kress-Gazit, H.; Eder, K.; Hoffman, G.; Admoni, H.; Argall, B.; Ehlers, R.; Heckman, C.; Jansen, N.; Knepper, R.; Křetínský, J.; et al. Formalizing and guaranteeing human-robot interaction. Commun. ACM 2021, 64, 78–84.

- Crandall, J.W.; Cummings, M.L. Developing performance metrics for the supervisory control of multiple robots. In Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction, Arlington, VA, USA, 8–11 March 2007; pp. 33–40.

- Boston Dynamics. Spot Specifications. Boston Dynamics Support Center. 26 October 2022. Available online: https://support.bostondynamics.com/s/article/Robot-specifications (accessed on 26 October 2022).

- Ghost Robotics. Vision 60: Ghost Robotics. Home. Available online: https://www.ghostrobotics.io/vision-60 (accessed on 12 March 2024).

- ANYbotics. Anymal. ANYbotics. 1 September 2023. Available online: https://www.anybotics.com/robotics/anymal/#:~:text=ANYmal%20technical%20specifications%201%20360%C2%B0%20vision%20ANYmal%20navigates,ruggedized%20tablet%20for%20remote%20control%2C%20transportation%20box%20 (accessed on 12 March 2024).

- ISO 12100:2010; Safety of Machinery—General Principles for Design—Risk Assessment and Risk Reduction. International Organization for Standardization: Geneva, Switzerland, 2010.

- IEC 60204-1:2016; Safety of Machinery—Electrical Equipment of Machines—Part 1: General Requirements. International Electrotechnical Commission: Geneva, Switzerland, 2016.

- European Commission. Directive 2014/30/EU of the European Parliament and of the Council of 26 February 2014 on the harmonization of the laws of the Member States relating to electromagnetic compatibility (recast). Off. J. Eur. Union 2014, L 96, 79–107.

- European Commission. Directive 2014/53/EU of the European Parliament and of the Council of 16 April 2014 on the harmonisation of the laws of the Member States relating to the making available on the market of radio equipment and repealing Directive 1999/5/EC. Off. J. Eur. Union 2014, L 153, 62–112.

- European Commission. Directive 2011/65/EU of the European Parliament and of the Council of 8 June 2011 on the restriction of the use of certain hazardous substances in electrical and electronic equipment (recast). Off. J. Eur. Union 2011, L 174, 88–110.

| Tracking Source | Keywords |
|---|---|
| Google Scholar | “mobile robot” “basic functionality of robot” “basic capability of robot” “quantitative performance of robot” “wadoro” “human-robot interaction” “dog robot” “manipulator robot arm” “types of mobile robot” “history of robotics” “robot formalization of problem” “security robot formalization of problem” “unmanned aerial vehicles” “DRONE” “unmanned water vehicle” |

| Publisher | No. of Papers |
|---|---|
| Idaho National Laboratory | 2 |
| Elsevier | 1 |
| CRC Press | 1 |
| Annual Reviews in Control | 1 |
| IEEE Robotics & Automation Magazine | 3 |
| Progress in Aerospace Sciences | 1 |
| IEEE Transactions on Robotics and Automation | 1 |
| Journal of Engineering in Medicine | 1 |
| McGraw-Hill | 1 |
| 2nd International Conference on Applied Robotics for the Power Industry (CARPI) | 1 |
| U.S. Nuclear Regulatory Commission (NRC) | 2 |
| The MIT Press | 1 |
| International Journal of Advanced Robotic Systems | 2 |
| Journal of Field Robotics | 2 |
| Frontiers of Mechanical Engineering | 1 |
| International Research Journal of Engineering and Technology | 1 |
| 5th International Conference on Electrical Engineering, Computer Science, and Informatics (EECSI), IEEE | 1 |
| IEEE Access | 1 |
| IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES) | 1 |
| IEEE Latin America Transactions | 1 |
| Springer | 3 |
| Foundations and Trends® in Robotics | 1 |
| Transactions of the Institute of Measurement and Control | 1 |
| Ain Shams Engineering Journal | 1 |
| MDPI—Machines | 2 |
| International Conference on Advanced Robotics and Mechatronics (ICARM), IEEE | 1 |
| Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems | 1 |
| IEEE Colombian Conference on Robotics and Automation (CCRA) | 1 |
| Proceedings of the IEEE International Conference on Mechatronics and Automation | 1 |
| Proceedings of the SICE Annual Conference | 1 |
| Autonomous Robots | 1 |
| University of Adelaide | 1 |
| Arabian Journal for Science and Engineering | 1 |
| Independent Research Project in Applied Mathematics | 1 |
| Journal of Robotics | 2 |
| MDPI—Sensors | 5 |
| TENCON 2019—2019 IEEE Region 10 Conference (TENCON) | 1 |
| Advanced Science | 1 |
| The International Journal of Robotics Research | 1 |
| IROS | 2 |
| Proceedings of the AAAI Workshop on Intelligent Robotic Systems | 1 |
| 2013 IEEE Third Joint International Conference on Development and Learning and Epigenetic Robotics (ICDL), IEEE | 1 |
| IEEE Conference on Technologies for Homeland Security | 1 |
| International Conference on Advanced Robotics | 1 |
| International Journal of Production Research | 1 |
| Proceedings 2006 IEEE International Conference on Robotics and Automation | 2 |
| ASME International Mechanical Engineering Congress and Exposition | 1 |
| Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction | 1 |
| Communications of the ACM | 1 |
| Proceedings of the ACM/IEEE International Conference on Human-Robot Interaction | 1 |
| Boston Dynamics | 1 |
| Ghost Robotics | 1 |
| ANYbotics | 1 |
| MDPI—Actuators | 1 |
| MDPI—Applied Sciences | 1 |
| MDPI—Symmetry | 1 |
| MDPI—Aerospace | 1 |
| Journal of Marine Science and Engineering | 1 |
| MDPI—Robotics | 1 |
| Robotics and Autonomous Systems | 2 |
| Journal of Intelligent & Robotic Systems | 1 |
| Intermountain Engineering, Technology and Computing (IETC), IEEE | 2 |
| International Conference on Intelligent Robots and Systems (IROS), IEEE | 1 |
| IEEE/ASME Transactions on Mechatronics | 1 |
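As a quick consistency check on the publisher table, its counts can be tallied programmatically. The sketch below hard-codes the "No. of Papers" column in row order (a manual transcription, so any later change to the table would have to be mirrored here) and reports the total number of papers, the number of venues, and how many venues contributed one, two, or more papers.

```python
from collections import Counter

# "No. of Papers" column of the publisher table, transcribed in row order
# (64 rows, 16 values per line).
PAPERS_PER_VENUE = [
    2, 1, 1, 1, 3, 1, 1, 1, 1, 1, 2, 1, 2, 2, 1, 1,
    1, 1, 1, 1, 3, 1, 1, 1, 2, 1, 1, 1, 1, 1, 1, 1,
    1, 1, 2, 5, 1, 1, 1, 2, 1, 1, 1, 1, 1, 2, 1, 1,
    1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 1, 2, 1, 1,
]


def summarize(counts):
    """Return the total paper count, the venue count, and a histogram
    mapping 'papers per venue' to 'number of venues with that count'."""
    return {
        "total_papers": sum(counts),
        "venues": len(counts),
        "venues_by_paper_count": dict(sorted(Counter(counts).items())),
    }


if __name__ == "__main__":
    print(summarize(PAPERS_PER_VENUE))
```

Keeping the data as a plain list in table order makes it easy to compare line by line against the table when checking the transcription.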

| S.N. | Measures | Description |
|---|---|---|
| (a) | Understanding of intrinsic limitations | Recognizing inherent functional limitations. |
| (b) | Effectiveness | Detecting, isolating, and recovering from faults. |
| (c) | Self-monitoring capacity | Ability to monitor health, state, task progress, etc., and to detect deviations from normal operation. |
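The three self-awareness measures can be illustrated with a minimal threshold-based self-check. This is a hypothetical sketch, not the authors' method: the channel names (`battery_pct`, `motor_temp_c`, `task_progress_rate`), their nominal ranges, and the recovery actions are all assumptions chosen for illustration. Known intrinsic limits are encoded as nominal ranges (a); a reading outside its range is detected, isolated to that channel, and mapped to a recovery action (b); and every channel is checked on each call, so a missing reading is also flagged as a deviation (c).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NominalRange:
    """Hypothetical operating limits for one monitored channel."""
    low: float
    high: float

    def contains(self, value: float) -> bool:
        return self.low <= value <= self.high


# Hypothetical channels and limits (illustrative values only).
NOMINAL = {
    "battery_pct": NominalRange(20.0, 100.0),
    "motor_temp_c": NominalRange(-10.0, 70.0),
    "task_progress_rate": NominalRange(0.5, 2.0),
}

# Hypothetical recovery action for each channel when it leaves its range.
RECOVERY = {
    "battery_pct": "return_to_dock",
    "motor_temp_c": "reduce_speed",
    "task_progress_rate": "replan_route",
}


def self_check(readings):
    """Detect out-of-range or missing channels and isolate each one to a
    recovery action. An empty result means all channels are nominal."""
    actions = {}
    for channel, limits in NOMINAL.items():
        value = readings.get(channel)
        if value is None:
            # Missing telemetry is itself a deviation from normal operation.
            actions[channel] = "flag_missing_telemetry"
        elif not limits.contains(value):
            actions[channel] = RECOVERY[channel]
    return actions


if __name__ == "__main__":
    # Low battery, and no task-progress reading was reported.
    print(self_check({"battery_pct": 15.0, "motor_temp_c": 45.0}))
```

A fixed-range check is the simplest possible detector; a fielded system would more likely compare readings against a model of expected behavior, but the detect, isolate, and recover structure stays the same.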

| S.N. | Primary Groupings | Sets of Metrics (in Each Grouping) |
|---|---|---|
| (a) | Mission complexity | Tactical behavior; coordination and collaboration; performance; sensory processing/world modeling. |
| (b) | Environmental difficulty | Static environment; dynamic environment; electronic/electromagnetic environment; mobility; mapping and navigation; urban environment; rural environment; operational environment. |
| (c) | Level of HRI [60,61,62] | Human intervention; operator workload; operator skill level; operator to unmanned system (UMS) ratio. |
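One way to turn these metric groupings into numbers is to have an evaluator rate each metric, average the ratings within each grouping, and then combine the grouping scores. The sketch below is hypothetical: it assumes a 0 to 10 rating scale, oriented so that higher means a more demanding mission or environment (or, for the HRI grouping, less reliance on the operator), and equal grouping weights by default. None of these choices come from the table itself, and the snake_case metric keys are names introduced here for illustration.

```python
from statistics import mean

# Metric keys mirror the table rows; the names are illustrative.
GROUPINGS = {
    "mission_complexity": [
        "tactical_behavior", "coordination_and_collaboration",
        "performance", "sensory_processing_world_modeling",
    ],
    "environmental_difficulty": [
        "static_environment", "dynamic_environment",
        "electronic_electromagnetic_environment", "mobility",
        "mapping_and_navigation", "urban_environment",
        "rural_environment", "operational_environment",
    ],
    "level_of_hri": [
        "human_intervention", "operator_workload",
        "operator_skill_level", "operator_to_ums_ratio",
    ],
}


def grouping_scores(ratings):
    """Average the (assumed 0-10) ratings of the metrics in each grouping."""
    scores = {}
    for grouping, metrics in GROUPINGS.items():
        missing = [m for m in metrics if m not in ratings]
        if missing:
            raise KeyError(f"{grouping}: missing ratings for {missing}")
        scores[grouping] = mean(ratings[m] for m in metrics)
    return scores


def composite_score(scores, weights=None):
    """Weighted mean of the grouping scores; equal weights by default
    (an assumption, not something the table prescribes)."""
    weights = weights or {g: 1.0 for g in scores}
    total_weight = sum(weights[g] for g in scores)
    return sum(scores[g] * weights[g] for g in scores) / total_weight


if __name__ == "__main__":
    # Hypothetical uniform ratings per grouping, for demonstration only.
    ratings = {}
    for grouping, level in [("mission_complexity", 6),
                            ("environmental_difficulty", 4),
                            ("level_of_hri", 8)]:
        ratings.update({m: level for m in GROUPINGS[grouping]})
    scores = grouping_scores(ratings)
    print(scores, composite_score(scores))
```

Unequal weights can be passed to `composite_score`, for example to emphasize environmental difficulty for an outdoor perimeter patrol; choosing them is a judgment for the evaluator rather than part of the grouping itself.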

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


MDPI and ACS Style: Sharma, U.; Medasetti, U.S.; Deemyad, T.; Mashal, M.; Yadav, V. Mobile Robot for Security Applications in Remotely Operated Advanced Reactors. Appl. Sci. 2024, 14, 2552. https://doi.org/10.3390/app14062552

AMA Style: Sharma U, Medasetti US, Deemyad T, Mashal M, Yadav V. Mobile Robot for Security Applications in Remotely Operated Advanced Reactors. Applied Sciences. 2024; 14(6):2552. https://doi.org/10.3390/app14062552

Chicago/Turabian Style: Sharma, Ujwal, Uma Shankar Medasetti, Taher Deemyad, Mustafa Mashal, and Vaibhav Yadav. 2024. "Mobile Robot for Security Applications in Remotely Operated Advanced Reactors" Applied Sciences 14, no. 6: 2552. https://doi.org/10.3390/app14062552

APA Style: Sharma, U., Medasetti, U. S., Deemyad, T., Mashal, M., & Yadav, V. (2024). Mobile Robot for Security Applications in Remotely Operated Advanced Reactors. Applied Sciences, 14(6), 2552. https://doi.org/10.3390/app14062552