Low-Light Mine Image Enhancement Algorithm Based on Improved Retinex

Abstract

1. Introduction

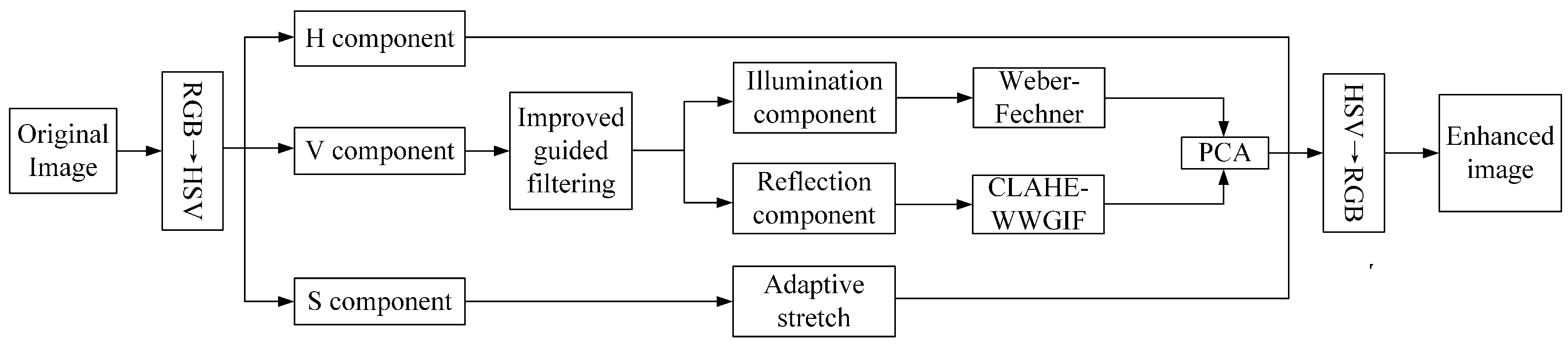

- (1)

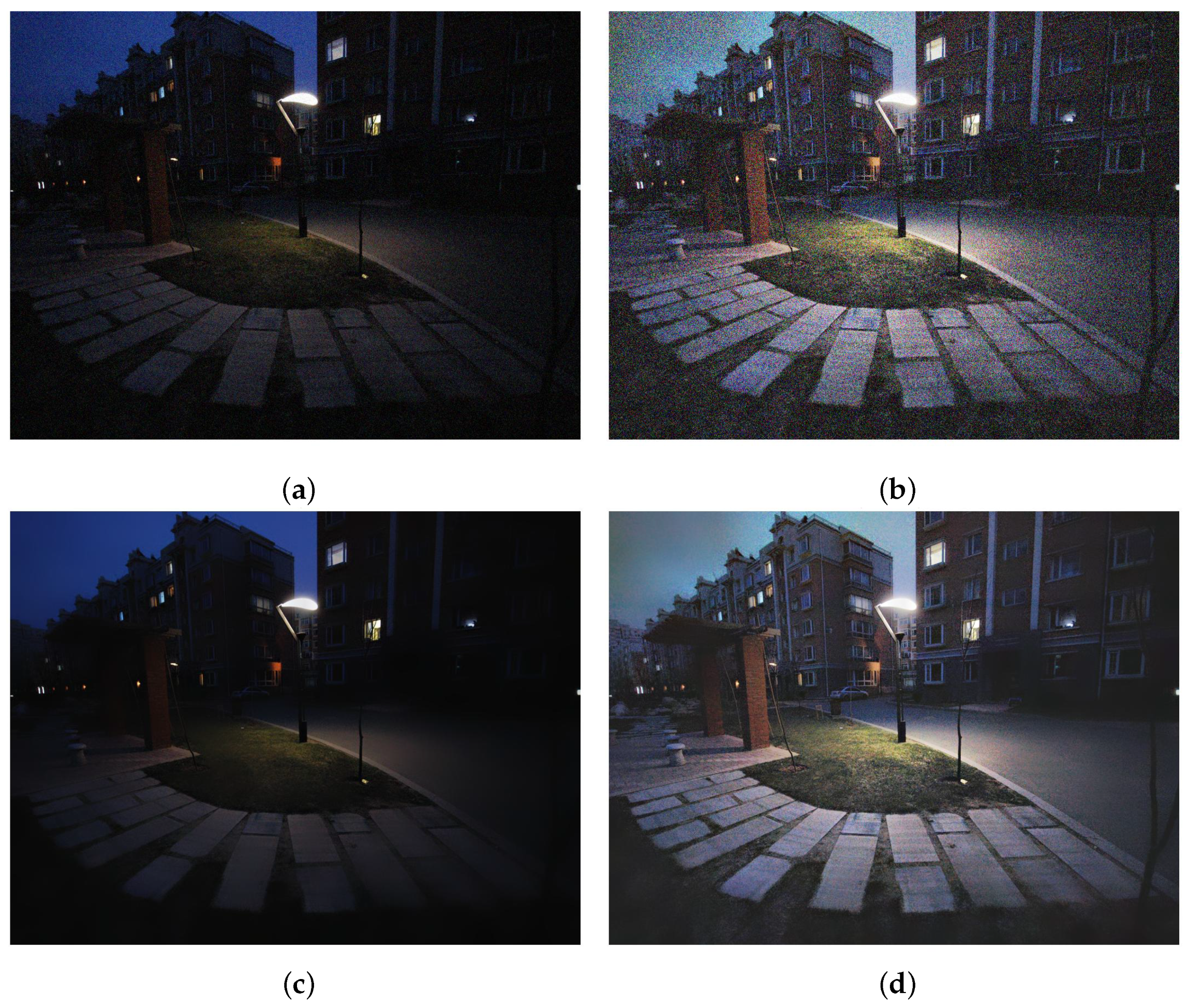

- An improved guided filtering algorithm is proposed to replace the Gaussian filter in the Retinex algorithm to more accurately estimate the illumination component and reflection component from the brightness component.

- (2)

- Fusion of the contrast-limited adaptive histogram equalization algorithm and the improved guided filtering algorithm to process the reflection component to achieve brightness enhancement and denoising at the same time.

- (3)

- Propose an improved adaptive stretching method to process the saturation component to avoid color distortion.
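The first contribution above can be illustrated with a minimal NumPy sketch. It uses the standard guided filter of He et al., not the improved filter proposed in this paper, as an edge-preserving replacement for the Gaussian surround when estimating illumination from the brightness channel. The function names, the window radius `r`, the regularization `eps`, and the clipping floor `tiny` are all illustrative assumptions rather than values taken from the paper.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, replicating edge pixels at the border."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    ii = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    ii[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    total = (ii[k:k + h, k:k + w] - ii[:h, k:k + w]
             - ii[k:k + h, :w] + ii[:h, :w])
    return total / (k * k)


def guided_filter(guide, src, r=8, eps=1e-2):
    """Standard guided filter (He et al.); r and eps are assumed values."""
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    var_i = box_mean(guide * guide, r) - mean_i * mean_i
    a = cov_ip / (var_i + eps)      # local linear coefficient
    b = mean_p - a * mean_i         # local offset
    return box_mean(a, r) * guide + box_mean(b, r)


def retinex_decompose(v, r=8, eps=1e-2, tiny=1e-3):
    """Split a brightness channel v in [0, 1] into illumination and log-reflectance.

    Illumination is the self-guided filtered brightness; the reflection
    component follows from the Retinex relation log R = log S - log L.
    """
    illum = np.clip(guided_filter(v, v, r, eps), tiny, 1.0)
    reflect = np.log(np.maximum(v, tiny)) - np.log(illum)
    return illum, reflect
```

On a uniformly lit image the estimated illumination equals the input and the log-reflectance is zero, which is a quick sanity check for the decomposition. The CLAHE fusion and the saturation stretching of contributions (2) and (3) are not sketched here because their exact formulation is not given in this part of the text.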

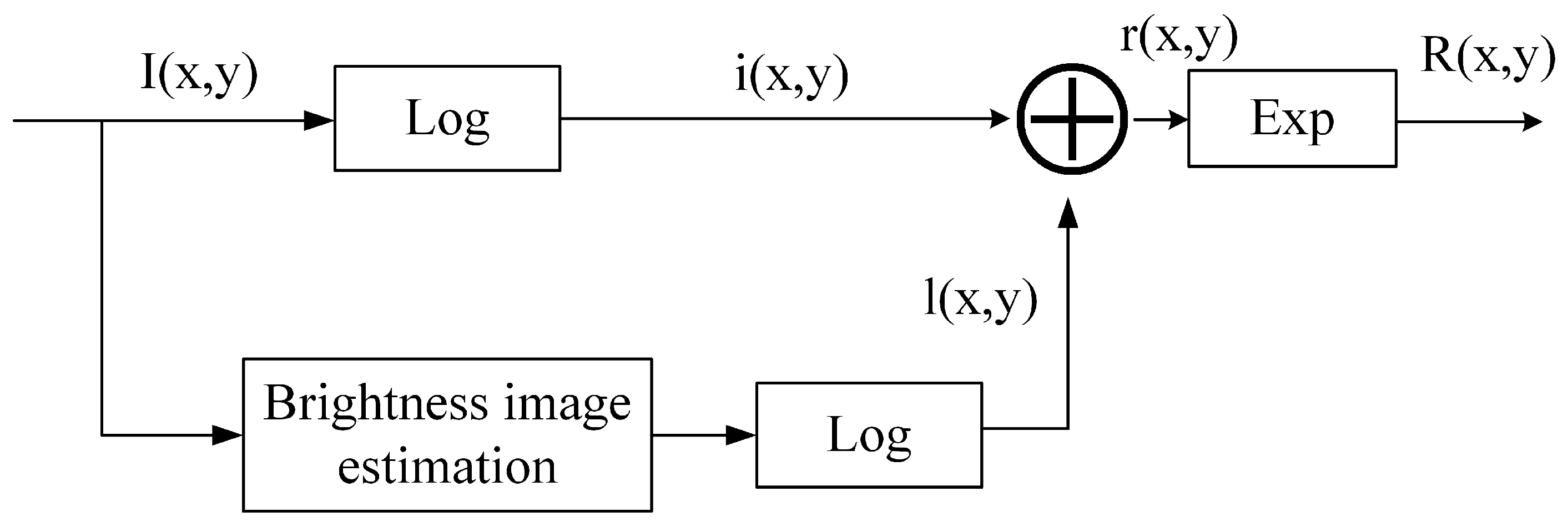

2. Retinex Theory

3. Proposed Method

3.1. Improved Guided Filtering

3.2. Extraction and Enhancement of Illumination Component

3.3. Extraction and Enhancement of Reflection Component

3.4. Enhancement of Saturation Component

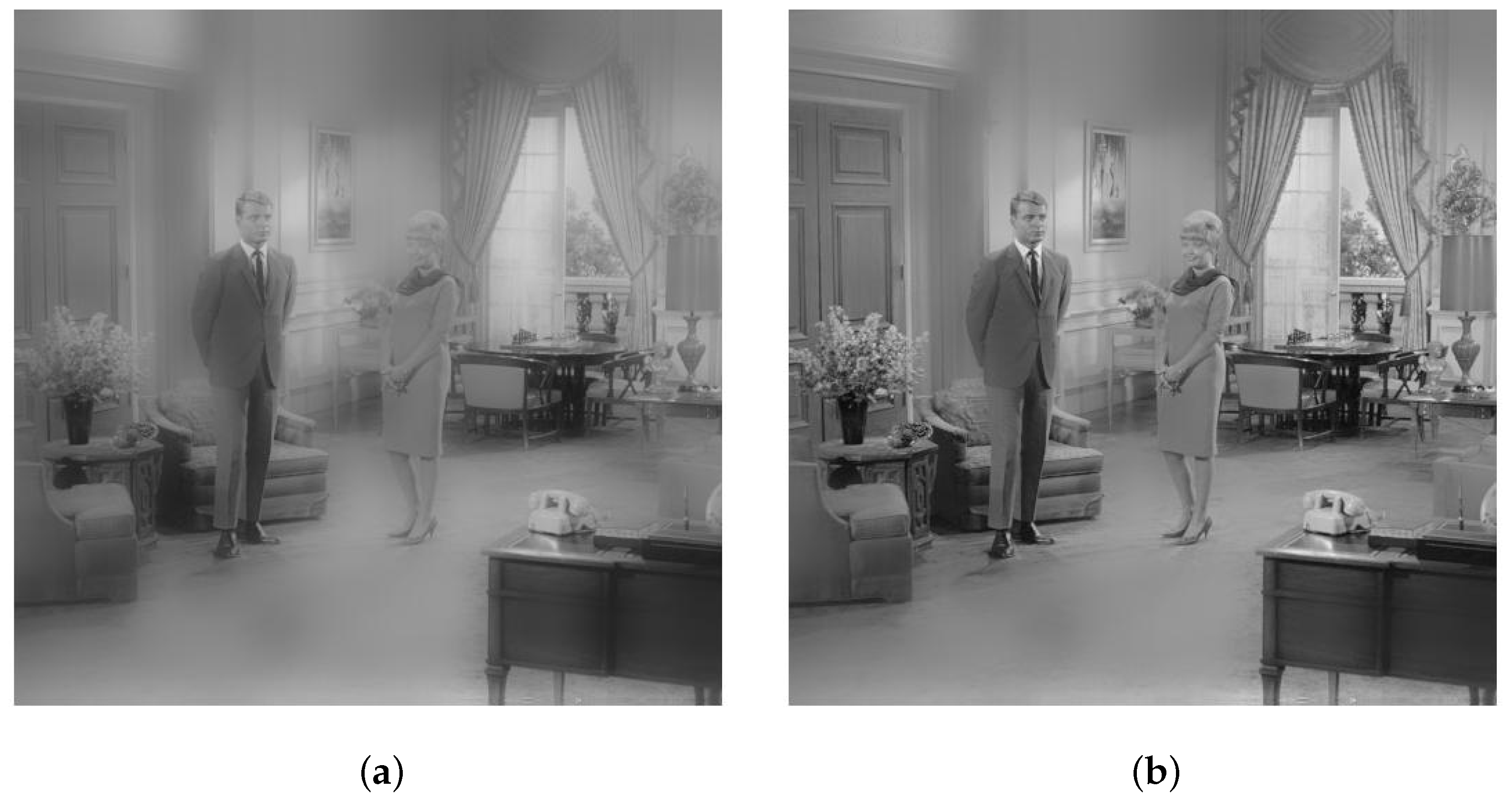

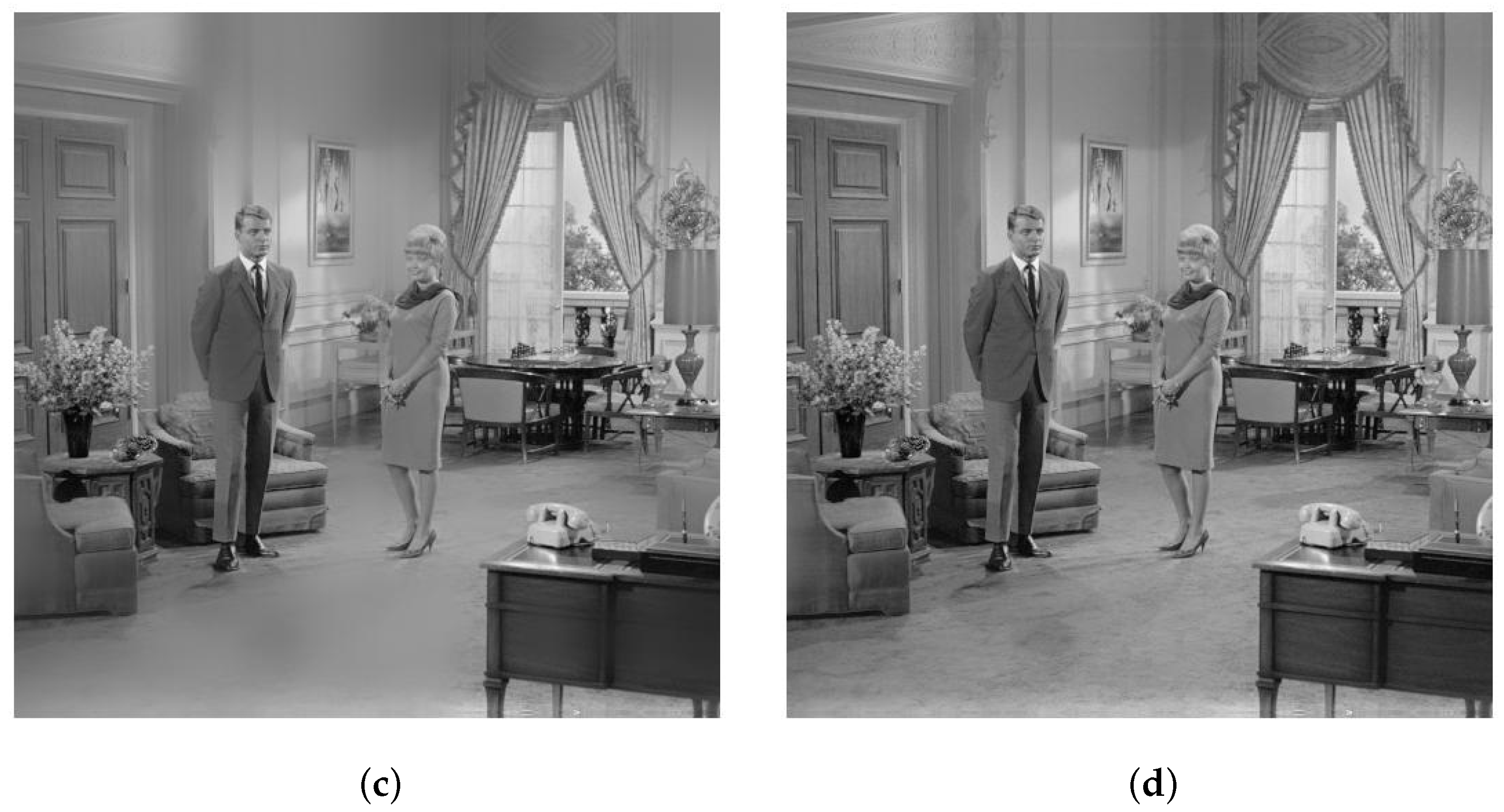

4. Experiment and Analysis

1. Mean

2. Standard deviation

3. Information entropy

4. Average gradient

5. Peak signal-to-noise ratio

6. Structural similarity
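As a rough illustration of how the first five metrics listed above are commonly computed for 8-bit grayscale images, the following NumPy sketch may help. The function names are hypothetical, the average gradient uses one common forward-difference definition that may differ from the one used in the paper, and structural similarity is omitted because it needs a windowed computation that is usually taken from an image-processing library.

```python
import numpy as np


def mean_and_sd(img):
    """Mean brightness and standard deviation (a contrast indicator)."""
    img = img.astype(np.float64)
    return float(img.mean()), float(img.std())


def information_entropy(img):
    """Shannon entropy in bits of the 8-bit gray-level histogram."""
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]                     # skip empty bins so log2 is defined
    return float(-(p * np.log2(p)).sum())


def average_gradient(img):
    """Mean of sqrt((dx^2 + dy^2) / 2) using forward differences."""
    img = img.astype(np.float64)
    dx = img[:-1, 1:] - img[:-1, :-1]
    dy = img[1:, :-1] - img[:-1, :-1]
    return float(np.sqrt((dx ** 2 + dy ** 2) / 2.0).mean())


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two same-sized images."""
    diff = reference.astype(np.float64) - test.astype(np.float64)
    mse = np.mean(diff ** 2)
    if mse == 0:
        return float("inf")
    return float(10.0 * np.log10(peak ** 2 / mse))
```

Higher values of all five quantities are generally read as brighter, higher-contrast, more detailed, sharper, and less distorted results, respectively, which is how the comparison tables in this section are interpreted.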

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, J.; Wang, H.; Sun, Y.; Yang, J. Improved Retinex-Theory-Based Low-Light Image Enhancement Algorithm. Appl. Sci. 2023, 13, 8148.

- Huang, S.; Li, D.; Zhao, W.; Liu, Y. Haze Removal Algorithm for Optical Remote Sensing Image Based on Multi-Scale Model and Histogram Characteristic. IEEE Access 2019, 7, 104179–104196.

- Guo, Y.; Ke, X.; Ma, J.; Zhang, J. A Pipeline Neural Network for Low-Light Image Enhancement. IEEE Access 2019, 7, 13737–13744.

- Zhang, W.; Dong, L.; Pan, X.; Zhou, J.; Qin, L.; Xu, W. Single Image Defogging Based on Multi-Channel Convolution MSRCR. IEEE Access 2019, 7, 72492–72504.

- Qu, J.; Li, Y.; Du, Q.; Xia, H. Hyperspectral and Panchromatic Image Fusion via Adaptive Tensor and Multi-Scale Retinex Algorithm. IEEE Access 2020, 8, 30522–30532.

- Wang, W.; Chen, Z.; Yuan, X.; Wu, X. Adaptive image enhancement method for correcting low-illumination images. Inf. Sci. 2019, 496, 25–41.

- Liu, S.; Long, W.; He, L.; Li, Y.; Ding, W. Retinex-Based Fast Algorithm for Low-Light Image Enhancement. Entropy 2021, 23, 746.

- Wang, P.; Wang, Z.; Lv, D.; Zhang, C.; Wang, Y. Low illumination color image enhancement based on Gabor filtering and Retinex theory. Multimed. Tools Appl. 2021, 80, 17705–17719.

- Lin, C.; Zhou, H.F.; Chen, W. Improved bilateral filtering for a Gaussian pyramid structure-based image enhancement algorithm. Algorithms 2019, 12, 258.

- Shang, D.; Yang, Z.; Zhang, X.; Zheng, L.; Lv, Z. Research on low illumination coal gangue image enhancement based on improved Retinex algorithm. Int. J. Coal Prep. Util. 2023, 43, 999–1015.

- Yang, L.; Mu, D.; Xu, Z.; Huang, K.; Zhang, C.; Gao, P.; Purves, R. Apple Surface Defect Detection Based on Gray Level Co-Occurrence Matrix and Retinex Image Enhancement. Appl. Sci. 2023, 13, 12481.

- Xu, J.; Hou, Y.; Ren, D.; Liu, L.; Zhu, F.; Yu, M.; Wang, H.; Shao, L. STAR: A Structure and Texture Aware Retinex Model. IEEE Trans. Image Process. 2020, 29, 5022–5037.

- He, K.; Sun, J.; Tang, X. Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1397–1409.

- Li, M.; Liu, J.; Yang, W.; Sun, X.; Guo, Z. Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans. Image Process. 2018, 27, 2828–2841.

- Ochotorena, C.N.; Yamashita, Y. Anisotropic Guided Filtering. IEEE Trans. Image Process. 2019, 29, 1397–1412.

- Li, Z.; Zheng, J.; Zhu, Z.; Yao, W.; Wu, S. Weighted Guided Image Filtering. IEEE Trans. Image Process. 2015, 24, 120–129.

- Sun, Y.; Zhao, Z.; Jiang, D.; Tong, X.; Tao, B.; Jiang, G.; Kong, J.; Yun, J.; Liu, Y.; Liu, X.; et al. Low-Illumination Image Enhancement Algorithm Based on Improved Multi-Scale Retinex and ABC Algorithm Optimization. Front. Bioeng. Biotechnol. 2022, 10, 865820.

- Kou, F.; Chen, W.; Wen, C.; Li, Z. Gradient Domain Guided Image Filtering. IEEE Trans. Image Process. 2015, 24, 4528–4539.

- Maes, C. Statistical Mechanical Foundation of Weber–Fechner Laws. J. Stat. Phys. 2021, 182, 49.

- Ji, X.; Guo, S.; Zhang, H.; Xu, W. Non-Uniform-Illumination Image Enhancement Algorithm Based on Retinex Theory. Appl. Sci. 2023, 13, 9535.

- Pisano, E.D.; Zong, S.; Hemminger, B.M.; DeLuca, M.; Johnston, R.E.; Muller, K.; Braeuning, M.P.; Pizer, S.M. Contrast limited adaptive histogram equalization image processing to improve the detection of simulated spiculations in dense mammograms. J. Digit. Imaging 1998, 11, 193–200.

- Yuan, Z.; Zeng, J.; Wei, Z.; Jin, L.; Zhao, S.; Liu, X.; Zhang, Y.; Zhou, G. CLAHE-Based Low-Light Image Enhancement for Robust Object Detection in Overhead Power Transmission System. IEEE Trans. Power Deliv. 2023, 38, 2240–2243.

- Tang, H.; Zhu, H.; Tao, H.; Xie, C. An Improved Algorithm for Low-Light Image Enhancement Based on RetinexNet. Appl. Sci. 2022, 12, 7268.

- Wang, T.S.; Kim, G.T.; Kim, M.; Jang, J. Contrast Enhancement-Based Preprocessing Process to Improve Deep Learning Object Task Performance and Results. Appl. Sci. 2023, 13, 10760.

- Cheon, B.W.; Kim, N.H. Enhancement of Low-Light Images Using Illumination Estimate and Local Steering Kernel. Appl. Sci. 2023, 13, 11394.

| Metric | GIF | WGIF | GDGIF | WWGIF |
|---|---|---|---|---|
| PSNR (dB) | 23.0908 | 27.3744 | 29.2473 | 34.9946 |
| SSIM | 0.9501 | 0.9529 | 0.9893 | 0.9973 |

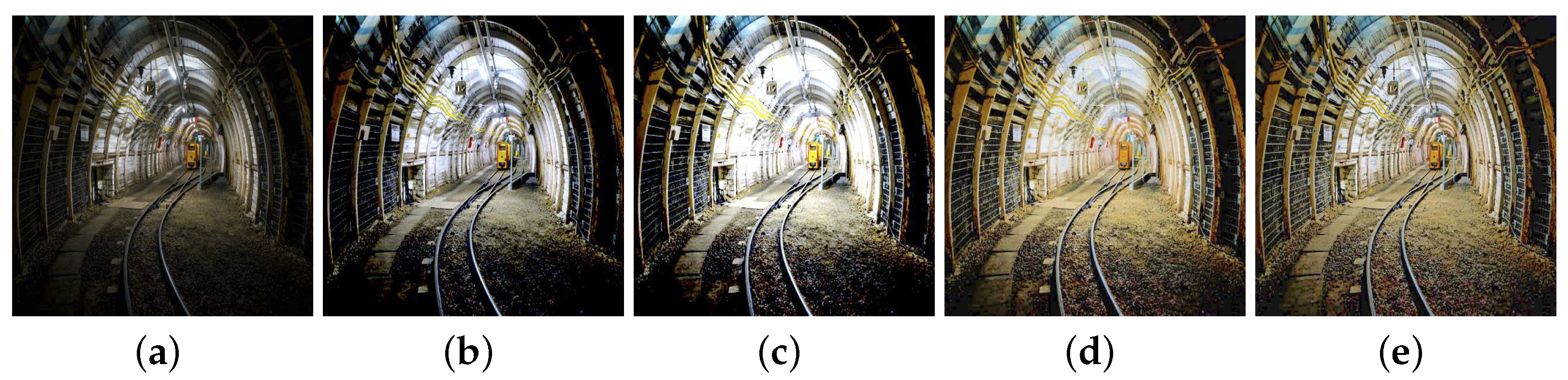

| Image 1 | Mean | SD | IE | AG | PSNR (dB) | SSIM |
|---|---|---|---|---|---|---|
| Original | 39.1851 | 47.3219 | 6.5405 | 9.8552 | - | - |
| SSR | 54.7889 | 55.1060 | 7.2588 | 11.9823 | 10.2724 | 0.6128 |
| MSR | 62.5176 | 58.3479 | 7.2629 | 15.1629 | 10.6629 | 0.6515 |
| Ref. [6] | 67.3683 | 60.4638 | 7.2838 | 15.9618 | 12.1916 | 0.6587 |
| Ours | 98.7722 | 73.1727 | 7.6195 | 19.6732 | 14.4768 | 0.7539 |

| Image 2 | Mean | SD | IE | AG | PSNR (dB) | SSIM |
|---|---|---|---|---|---|---|
| Original | 34.6971 | 35.0741 | 6.2112 | 6.4716 | - | - |
| SSR | 73.5088 | 36.0588 | 7.2658 | 9.6218 | 10.6560 | 0.6096 |
| MSR | 86.5889 | 38.3281 | 7.2851 | 10.7908 | 11.4824 | 0.6263 |
| Ref. [6] | 89.7056 | 40.1476 | 7.3253 | 11.7218 | 11.4985 | 0.6463 |
| Ours | 110.5771 | 44.4188 | 7.4199 | 12.9321 | 13.0189 | 0.7835 |

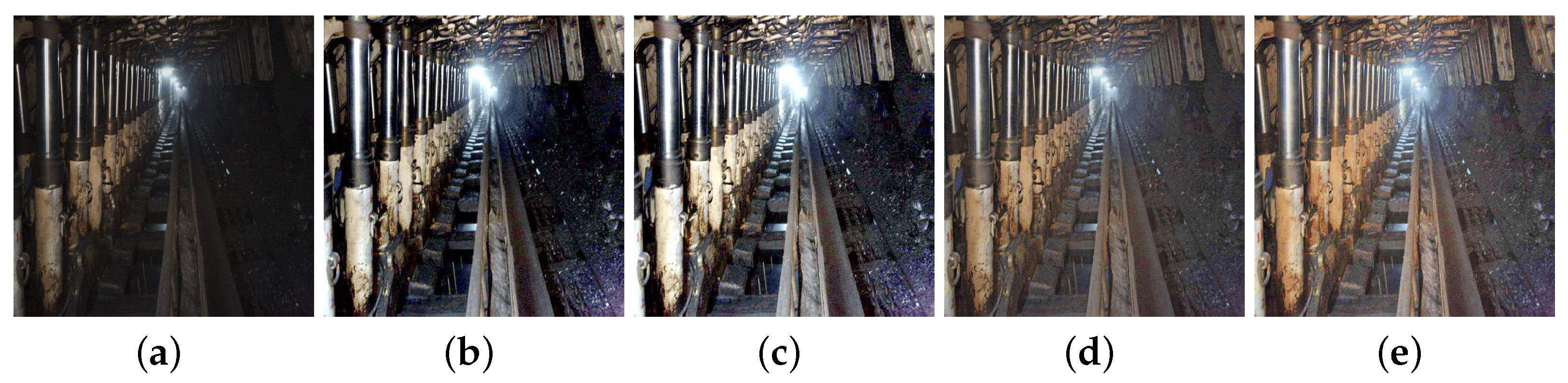

| Image 3 | Mean | SD | IE | AG | PSNR (dB) | SSIM |
|---|---|---|---|---|---|---|
| Original | 76.8960 | 34.8910 | 7.2031 | 9.7077 | - | - |
| SSR | 98.9119 | 43.6048 | 7.2848 | 10.9226 | 12.0918 | 0.6156 |
| MSR | 117.0049 | 44.2004 | 7.3035 | 11.5720 | 12.2162 | 0.6397 |
| Ref. [6] | 124.9763 | 45.6719 | 7.3149 | 11.1557 | 14.8119 | 0.7322 |
| Ours | 161.9878 | 46.8571 | 7.4639 | 12.3482 | 15.9980 | 0.7718 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tian, F.; Wang, M.; Liu, X. Low-Light Mine Image Enhancement Algorithm Based on Improved Retinex. Appl. Sci. 2024, 14, 2213. https://doi.org/10.3390/app14052213
