Design of a Trusted Content Authorization Security Framework for Social Media

Abstract

1. Introduction

- (1) This study examines the issues raised by the rapid development of artificial intelligence-generated content (AIGC), focusing on the management of content published on social media. It seeks practical solutions that address these issues, protect personal rights, and maintain data privacy.

- (2) To bind content to a unique user identity, the framework extracts the multimodal information that users publish on social media and integrates it with user identity information, a timestamp, an address label, and network IP address information into a new token. Verifying the token's digital signature ensures the integrity of content spread on social media: a file is confirmed as untampered only if the hash value in the token matches the recalculated hash value.

- (3) The proposed framework adopts digital signatures, OAuth 2.0, and other mechanisms, and introduces the zero-trust security concept to build a reliable authentication and authorization system that secures user identity and content.
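The integrity check described in contribution (2) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: it assumes SHA-256 as the hash function and a hypothetical token field named `content_hash`, neither of which is specified in the text.

```python
import hashlib
import hmac


def content_hash(data: bytes) -> str:
    """Return the SHA-256 hex digest of the published content (assumed hash function)."""
    return hashlib.sha256(data).hexdigest()


def is_untampered(data: bytes, token: dict) -> bool:
    """Recompute the content hash and compare it with the value stored in the token."""
    recalculated = content_hash(data)
    # compare_digest compares in constant time, which avoids timing side channels
    return hmac.compare_digest(recalculated, token["content_hash"])


original = b"example post body"
token = {"content_hash": content_hash(original)}  # hypothetical token field

print(is_untampered(original, token))                       # unchanged content
print(is_untampered(b"example post body (edited)", token))  # modified content
```

The hash comparison alone only detects changes to the file; it is the signature over the token that prevents an attacker from replacing the stored hash as well.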

2. Related Work

3. SecToken Framework

- UserIss (publisher).

- Sub (content topic and abstract).

- Exp (Token life cycle).

- Situation (publisher timestamp, address label, network IP information).

3.1. Integration Process and Challenges

3.2. Identity Management

3.3. Privacy Layer

3.4. Summarizing

4. Safety Analysis and Experimentation

4.1. Security Analysis

4.2. Experiments

Token Generation

- (1)

- Extracting critical information summary from audio

- (2)

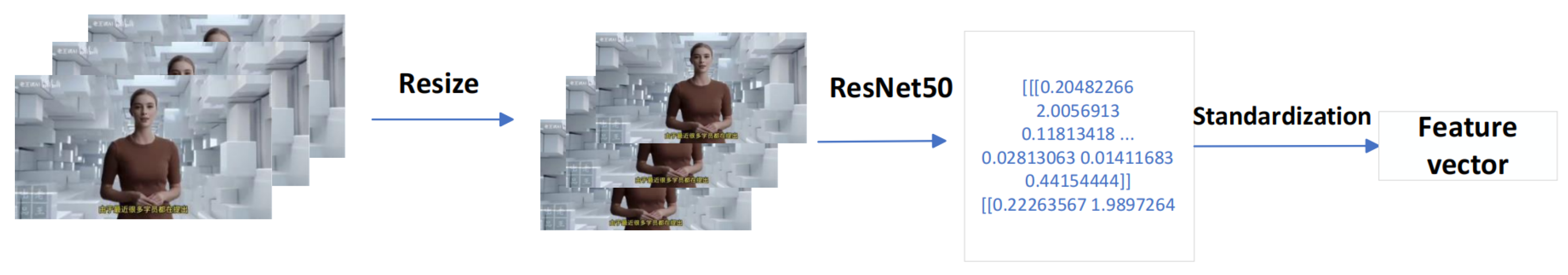

- Extracting critical information summary from video

- (3)

- Multi-factor Token Generation

5. Discussion

5.1. User Experience Impact

5.2. Ethical Considerations

5.3. Privacy Protection

6. Summary and Outlook

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Field | Name | Description |
|---|---|---|
| Header | type | Parameter type |
| Header | alg | Signature algorithm |
| Payload | userIss | Issuer |
| Payload | sub | Abstract |
| Payload | Exp | Expiration date |
| Payload | situation | Time stamp, address label, network IP |
| Sign | secret | Use base64 encoding and add encryption key storage to the server. |
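The three-part layout in the table above resembles a JSON Web Token, so a token of this shape could be assembled and checked roughly as shown here. This is a sketch under stated assumptions, not the paper's implementation: HMAC-SHA256 stands in for the unspecified signature algorithm, `SERVER_KEY` is a hypothetical server-side key, the header values are invented for illustration, and all field values are placeholders.

```python
import base64
import hashlib
import hmac
import json
import time

SERVER_KEY = b"demo-key-kept-on-the-server"  # hypothetical; never hard-code a real key


def b64url(raw: bytes) -> str:
    """Base64url-encode without padding, as JWT-style tokens do."""
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


def sign(message: str, key: bytes) -> str:
    """HMAC-SHA256 over the encoded header and payload (assumed algorithm)."""
    return b64url(hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest())


def make_token(user_iss, sub, situation, ttl_seconds, key=SERVER_KEY, now=None):
    """Build header.payload.signature from the fields listed in the table."""
    now = time.time() if now is None else now
    header = {"type": "SecToken", "alg": "HS256"}  # assumed values
    payload = {
        "userIss": user_iss,             # issuer (publisher)
        "sub": sub,                      # content abstract
        "Exp": int(now) + ttl_seconds,   # expiration time
        "situation": situation,          # timestamp, address label, network IP
    }
    body = ".".join(b64url(json.dumps(part, sort_keys=True).encode("utf-8"))
                    for part in (header, payload))
    return body + "." + sign(body, key)


def verify_token(token, key=SERVER_KEY, now=None):
    """Return the payload if the signature is valid and the token is unexpired, else None."""
    now = time.time() if now is None else now
    parts = token.split(".")
    if len(parts) != 3:
        return None
    body = parts[0] + "." + parts[1]
    # Compare as bytes so arbitrary (even non-ASCII) input cannot raise
    if not hmac.compare_digest(sign(body, key).encode("utf-8"), parts[2].encode("utf-8")):
        return None
    padded = parts[1] + "=" * (-len(parts[1]) % 4)  # restore stripped padding
    payload = json.loads(base64.urlsafe_b64decode(padded))
    return payload if payload["Exp"] >= now else None


situation = {"timestamp": 1000, "address": "example-label", "ip": "192.0.2.10"}
token = make_token("alice", "short abstract of the post", situation, ttl_seconds=60, now=1000)
print(verify_token(token, now=1010) is not None)  # within the token life cycle
print(verify_token(token, now=2000) is not None)  # after expiry
```

The signature is verified before the payload is decoded, so a modified payload is rejected without being parsed.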

| Aspect | SecToken Framework | Traditional Framework |
|---|---|---|
| Performance | Combines deep learning to achieve high performance. | Based on rules and signatures; performance on multimodal data is low. |
| Scalability | Easy to extend and to integrate new authentication mechanisms. | Relatively fixed; integrating new technologies is cumbersome. |
| Effectiveness against AI-generated content | Digital signatures and other methods effectively reduce the risk of fraud. | Lacks specialized mechanisms to counter the risks of AI-generated content. |

| Steps | Specific Measures |
|---|---|
| Establishing filtering criteria | Based on objective and impartial principles, such as community norms, laws and regulations or industry standards. |
| | Clearly define standards |
| Ensure transparent filtering mechanisms | Users understand the principles and operation of filtering decisions. |
| | Provide channels for user participation and feedback. |
| Differentiating between different types of content | Strict censorship of illegal content |
| | A light touch on political views or controversial topics |
| Establish an independent monitoring and review mechanism | Third-party organizations or committees are responsible for reviewing filtering decisions periodically |
| Continuous evaluation and improvement of the filtering framework | Regular audit standards |
| | Gathering user feedback |
| | Monitoring the effect |
| Raise user awareness through education | Users understand how to identify false information or inappropriate content |
| | Users understand their rights and responsibilities on social media platforms |


© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Han, J.; Li, Q.; Xu, Y.; Zhu, Y.; Wu, B. Design of a Trusted Content Authorization Security Framework for Social Media. Appl. Sci. 2024, 14, 1643. https://doi.org/10.3390/app14041643
