Prediction of Leaf Break Resistance of Green and Dry Alfalfa Leaves by Machine Learning Methods

Abstract

1. Introduction

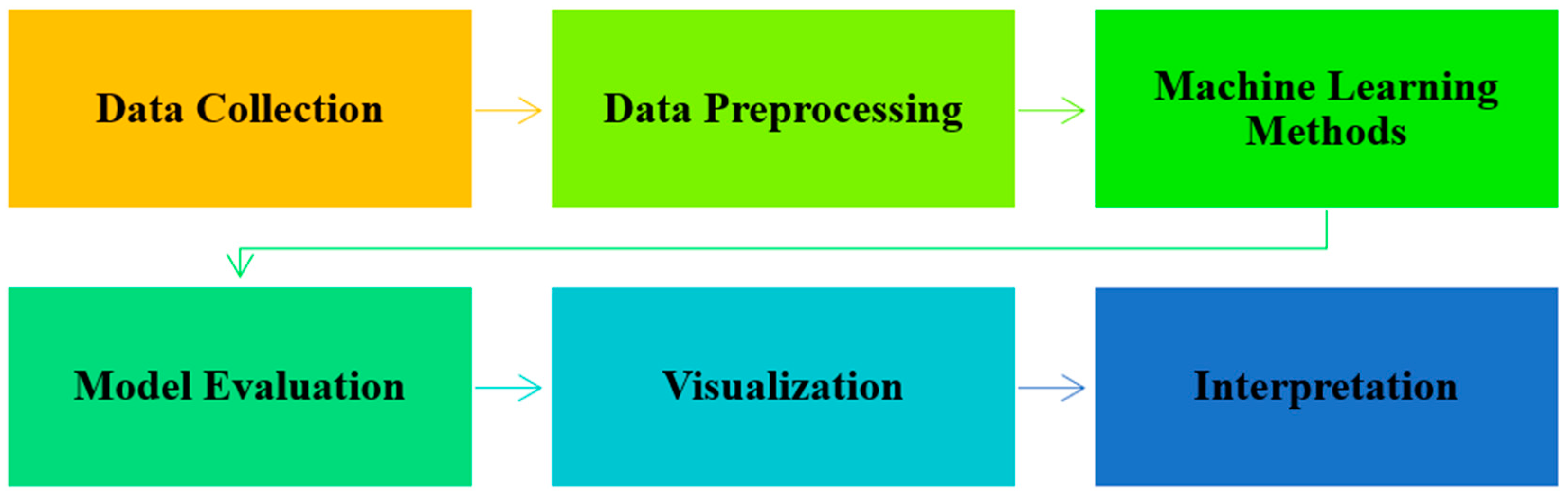

2. Materials and Methods

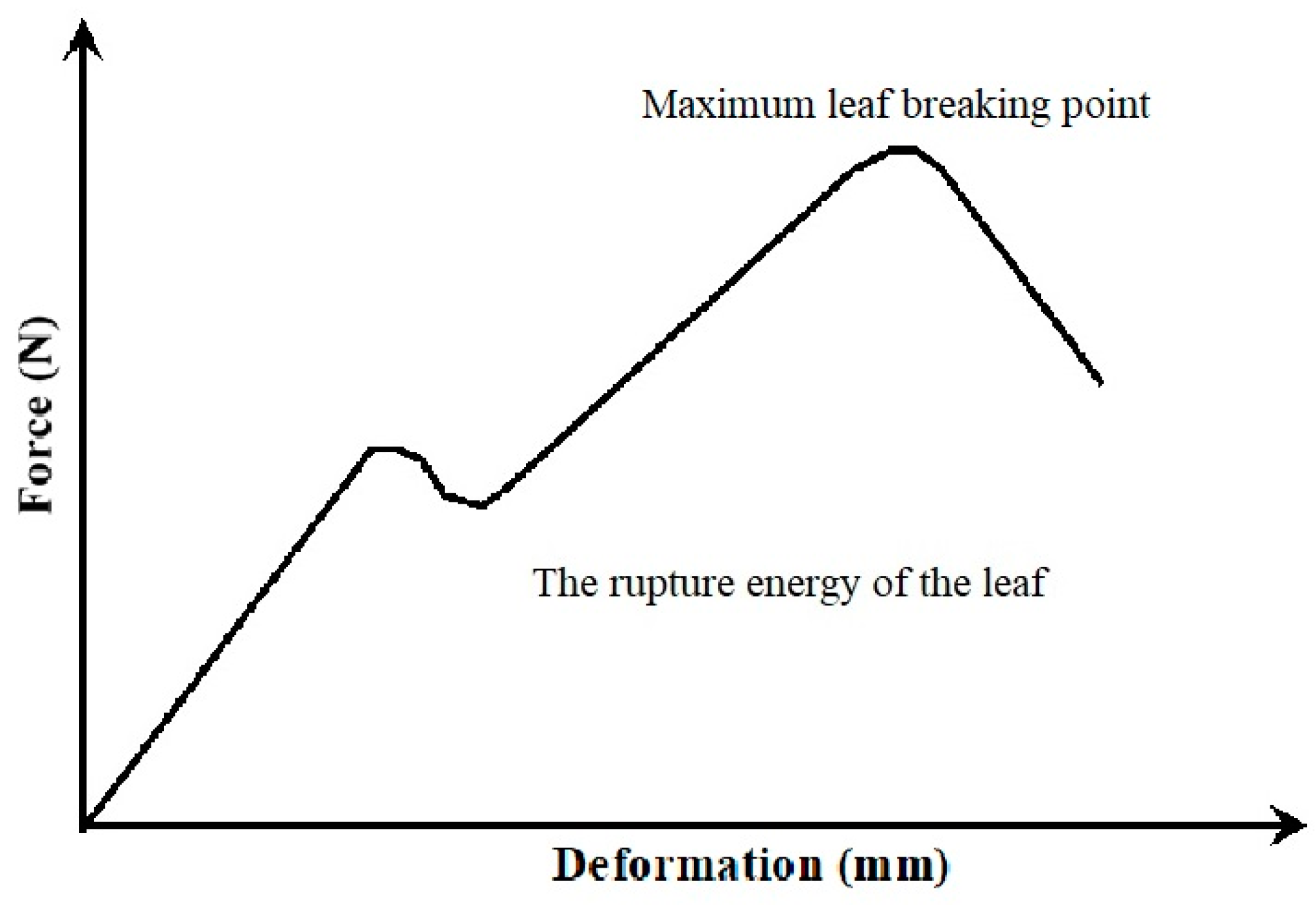

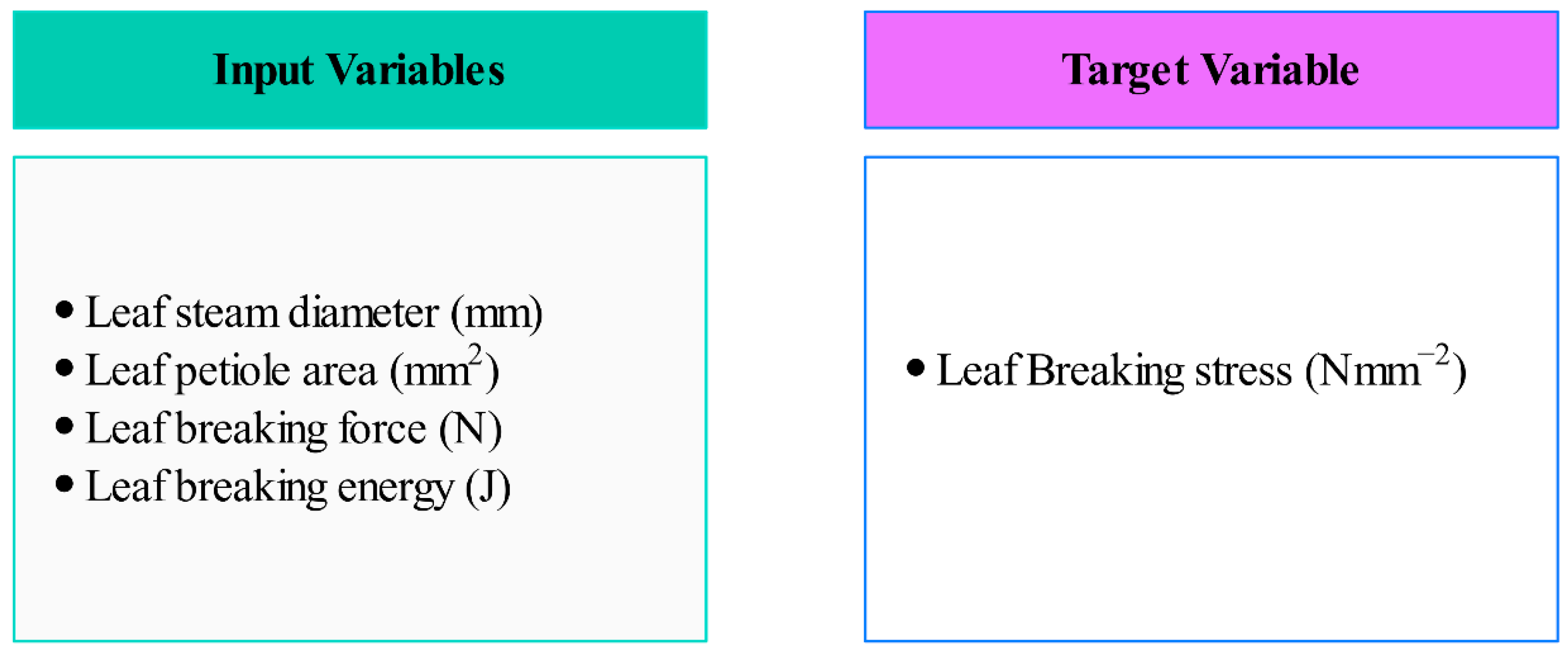

2.1. Data Set

2.2. Machine Learning Methods

2.3. Extra Trees

2.4. CatBoost

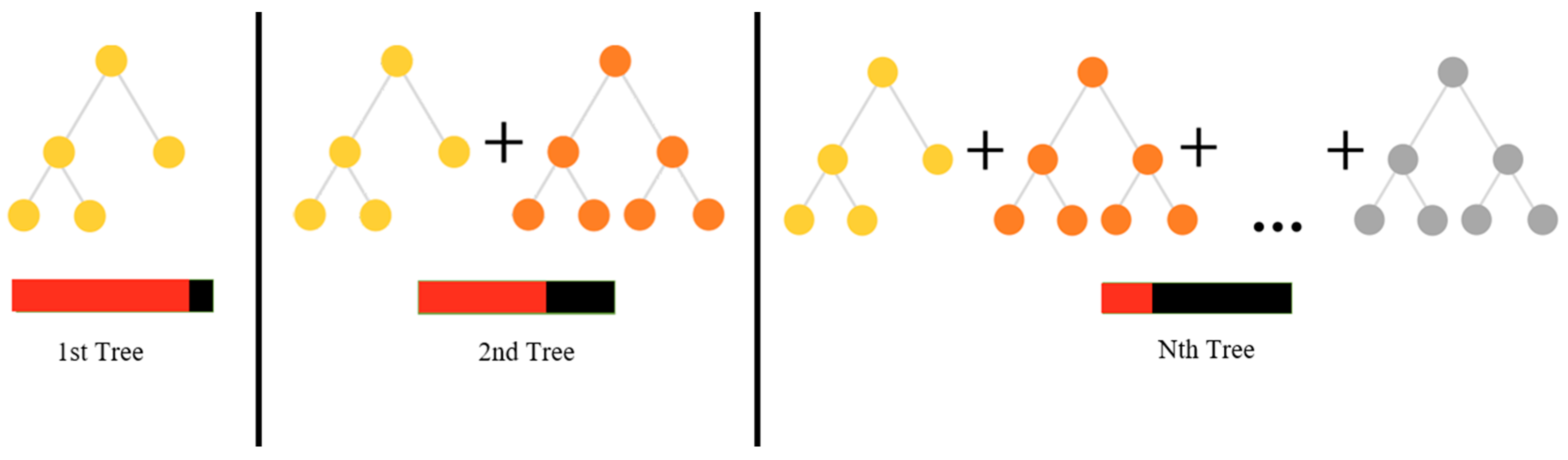

2.5. Gradient Boosting

2.6. Extreme Gradient Boosting

2.7. Random Forest

2.8. Model Evaluation Criteria
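The four criteria listed in the Nomenclature (RMSE, MAPE, MAE and R²) can be computed from paired measured values and predictions. This is a minimal sketch that assumes their standard textbook definitions; the authors' exact formulas are not reproduced here. The helper name `regression_metrics` is hypothetical. MAPE is returned as a fraction rather than a percentage. R² is computed as the coefficient of determination, 1 − SS_res/SS_tot, rather than as a correlation coefficient.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return RMSE, MAPE, MAE and R^2 for paired observations (hypothetical helper).

    Assumes standard definitions: MAPE is a fraction (multiply by 100 for %)
    and requires every observed value to be non-zero; R^2 = 1 - SS_res / SS_tot.
    """
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    # Residuals: observed minus predicted.
    errors = [t - p for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    rmse = math.sqrt(ss_res / n)
    mae = sum(abs(e) for e in errors) / n
    mape = sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    # Total sum of squares around the mean of the observations.
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all observed values are equal")
    r2 = 1.0 - ss_res / ss_tot
    return {"RMSE": rmse, "MAPE": mape, "MAE": mae, "R2": r2}


if __name__ == "__main__":
    # Made-up leaf breaking forces (N), for illustration only.
    measured = [0.02, 0.03, 0.05, 0.04]
    predicted = [0.021, 0.028, 0.049, 0.043]
    print(regression_metrics(measured, predicted))
```

On these illustrative values, the sketch gives R² ≈ 0.97 and MAE ≈ 0.00175. RMSE squares each residual before averaging, so it penalizes large individual errors more heavily than MAE does.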

3. Results and Discussion

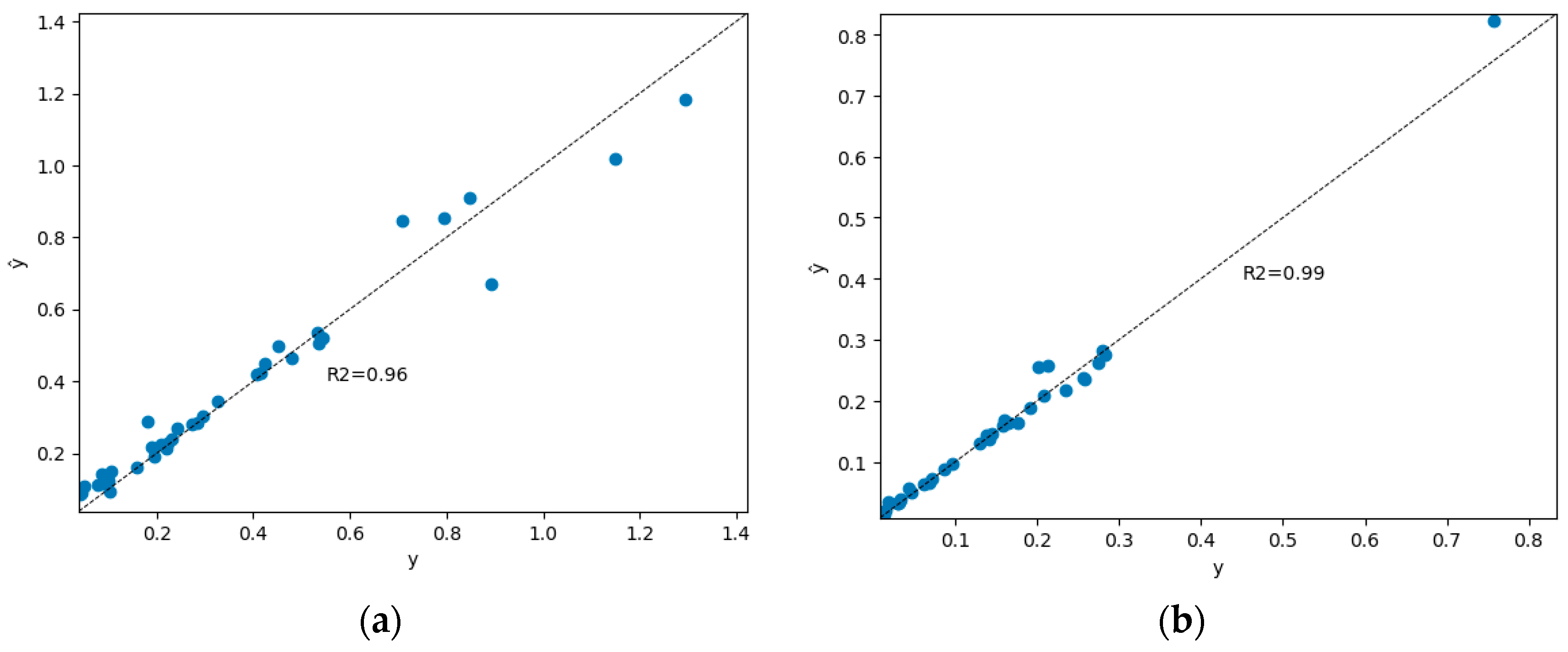

3.1. Interpretation of Modeling Results of Dried Alfalfa

3.2. Interpretation of Modeling Results of Green Alfalfa

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Nomenclature

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| CB | CatBoost |
| DT | Decision Tree |
| ET | Extra Trees |
| GB | Gradient Boosting |
| GPa | Gigapascal |
| Hz | Hertz |
| J | Joule |
| MAE | Mean Absolute Error |
| MAPE | Mean Absolute Percentage Error |
| ML | Machine Learning |
| MPa | Megapascal |
| N | Newton |
| RF | Random Forest |
| R² | Coefficient of Determination |
| RMSE | Root Mean Square Error |
| XGB | Extreme Gradient Boosting |


| Plant Type | Variable | Mean ± SD |
|---|---|---|
| Dried | Leaf stem diameter (mm) | 0.561 ± 0.157 |
| | Leaf petiole area (mm²) | 0.261 ± 0.152 |
| | Leaf breaking force (N) | 0.031 ± 0.023 |
| | Leaf breaking energy (J) | 0.037 ± 0.028 |
| | Leaf breaking stress (N mm⁻²) | 0.158 ± 0.204 |
| Green | Leaf stem diameter (mm) | 0.580 ± 0.157 |
| | Leaf petiole area (mm²) | 0.280 ± 0.152 |
| | Leaf breaking force (N) | 0.087 ± 0.055 |
| | Leaf breaking energy (J) | 0.104 ± 0.066 |
| | Leaf breaking stress (N mm⁻²) | 0.412 ± 0.371 |

| Plant Type | Model | RMSE | MAPE | MAE | R² |
|---|---|---|---|---|---|
| Dried | Extra Trees | 0.0171 | 0.0969 | 0.0099 | 0.9853 |
| | CatBoost | 0.0174 | 0.1068 | 0.0105 | 0.9838 |
| | Gradient Boosting | 0.0265 | 0.1936 | 0.0178 | 0.9624 |
| | Random Forest | 0.0306 | 0.2163 | 0.0191 | 0.9499 |
| | Extreme Gradient Boosting | 0.0223 | 0.1224 | 0.0124 | 0.9736 |
| Green | Extra Trees | 0.0707 | 0.1604 | 0.0340 | 0.9472 |
| | CatBoost | 0.0850 | 0.1806 | 0.0387 | 0.9239 |
| | Gradient Boosting | 0.1194 | 0.1447 | 0.0621 | 0.8497 |
| | Random Forest | 0.0616 | 0.2135 | 0.0363 | 0.9600 |
| | Extreme Gradient Boosting | 0.1026 | 0.1750 | 0.0542 | 0.8889 |
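The evaluation table is easier to read criterion by criterion. The sketch below only transcribes the reported values. It then selects, for each plant type, the model with the lowest RMSE, MAPE and MAE and the highest R². The names `RESULTS` and `best_model_per_criterion` are illustrative and do not come from the study.

```python
# Values transcribed from the evaluation table: (RMSE, MAPE, MAE, R2).
RESULTS = {
    "Dried": {
        "Extra Trees": (0.0171, 0.0969, 0.0099, 0.9853),
        "CatBoost": (0.0174, 0.1068, 0.0105, 0.9838),
        "Gradient Boosting": (0.0265, 0.1936, 0.0178, 0.9624),
        "Random Forest": (0.0306, 0.2163, 0.0191, 0.9499),
        "Extreme Gradient Boosting": (0.0223, 0.1224, 0.0124, 0.9736),
    },
    "Green": {
        "Extra Trees": (0.0707, 0.1604, 0.0340, 0.9472),
        "CatBoost": (0.0850, 0.1806, 0.0387, 0.9239),
        "Gradient Boosting": (0.1194, 0.1447, 0.0621, 0.8497),
        "Random Forest": (0.0616, 0.2135, 0.0363, 0.9600),
        "Extreme Gradient Boosting": (0.1026, 0.1750, 0.0542, 0.8889),
    },
}
CRITERIA = ("RMSE", "MAPE", "MAE", "R2")
# Error measures are minimized; the coefficient of determination is maximized.
HIGHER_IS_BETTER = {"R2"}


def best_model_per_criterion(results):
    """Return {plant type: {criterion: best model name}} (illustrative helper)."""
    best = {}
    for plant, models in results.items():
        best[plant] = {}
        for i, criterion in enumerate(CRITERIA):
            pick = max if criterion in HIGHER_IS_BETTER else min
            best[plant][criterion] = pick(models, key=lambda name: models[name][i])
    return best


if __name__ == "__main__":
    for plant, picks in best_model_per_criterion(RESULTS).items():
        print(plant, picks)
```

On the transcribed values, Extra Trees is selected for every criterion on dried leaves. For green leaves, the choice depends on the criterion. Random Forest has the lowest RMSE and the highest R², Gradient Boosting has the lowest MAPE, and Extra Trees has the lowest MAE.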

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ercan, U.; Kabas, O.; Moiceanu, G. Prediction of Leaf Break Resistance of Green and Dry Alfalfa Leaves by Machine Learning Methods. Appl. Sci. 2024, 14, 1638. https://doi.org/10.3390/app14041638

Ercan U, Kabas O, Moiceanu G. Prediction of Leaf Break Resistance of Green and Dry Alfalfa Leaves by Machine Learning Methods. Applied Sciences. 2024; 14(4):1638. https://doi.org/10.3390/app14041638

Chicago/Turabian Style: Ercan, Uğur, Onder Kabas, and Georgiana Moiceanu. 2024. "Prediction of Leaf Break Resistance of Green and Dry Alfalfa Leaves by Machine Learning Methods" Applied Sciences 14, no. 4: 1638. https://doi.org/10.3390/app14041638

APA Style: Ercan, U., Kabas, O., & Moiceanu, G. (2024). Prediction of Leaf Break Resistance of Green and Dry Alfalfa Leaves by Machine Learning Methods. Applied Sciences, 14(4), 1638. https://doi.org/10.3390/app14041638