Dual Hybrid Attention Mechanism-Based U-Net for Building Segmentation in Remote Sensing Images

Abstract

1. Introduction

- (1)

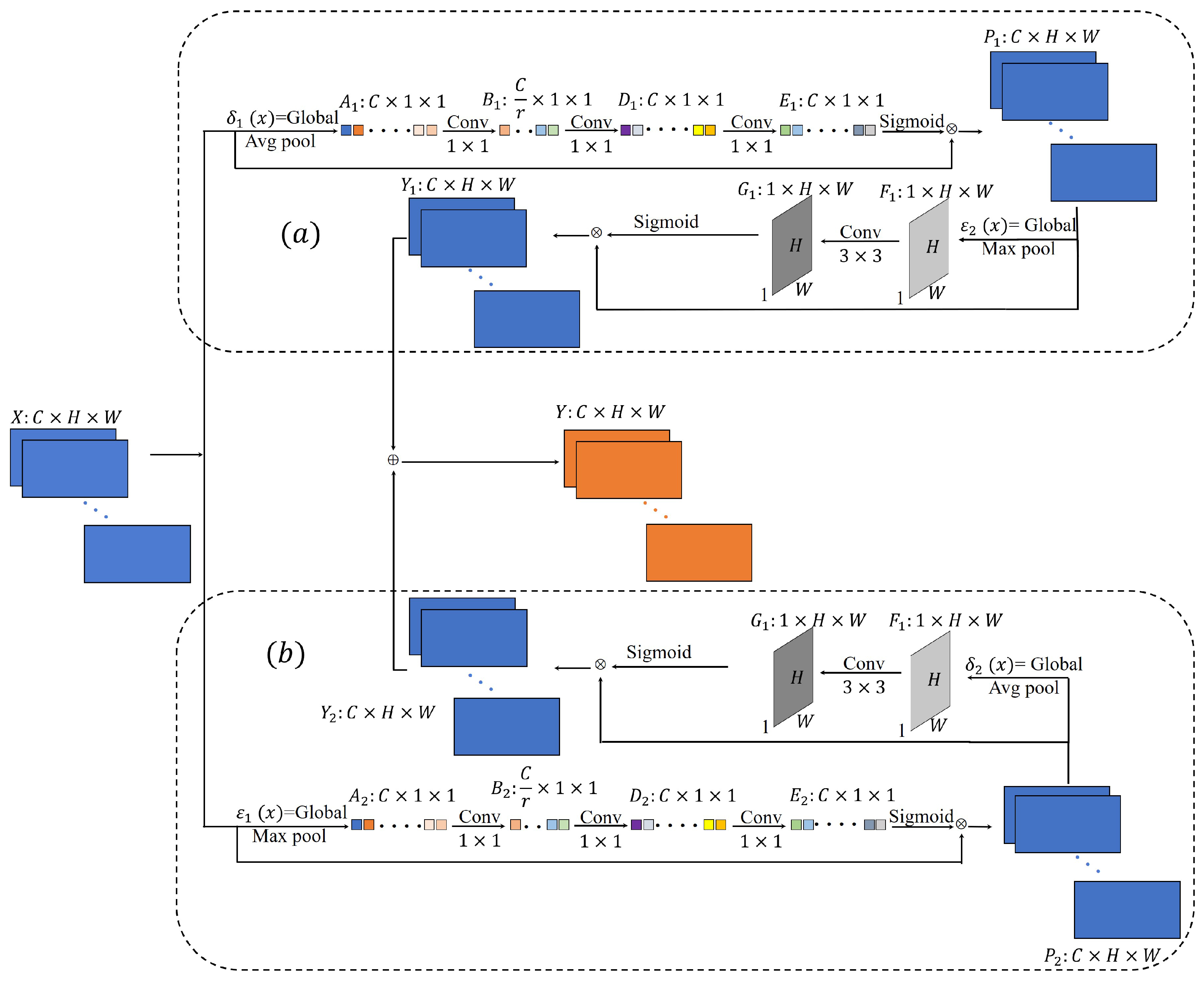

- Taking advantage of the attention mechanism in both the channel and spatial domains, this paper designs a novel feature extraction module named DHA. DHA combines the channel and spatial attention, processing feature maps separately through distinct attention mechanisms. This effectively harnesses the strengths of each attention mechanism, boosting the information screening capability. The result is a more precise and comprehensive feature extraction.

- (2) We introduce DHAU-Net, which improves the skip connections of U-Net to address the disparity between low-level and high-level feature maps. In U-Net, low-level feature maps contain detailed spatial location information but weak semantic information, whereas high-level feature maps carry strong semantic information but less precise spatial location. To bridge this gap, we replace the original plain skip connection with the DHA module: the shallow feature maps are processed by DHA before being fused with the deeper feature maps. As a result, the network focuses more on foreground buildings and suppresses background interference. This refinement reduces the intra-class differences among buildings and improves segmentation accuracy.

2. Methodology

2.1. DHA Module

2.2. DHAU-Net Structure

3. Experiment

3.1. Experimental Data

3.2. Experimental Environment

3.3. Evaluation Indicators

4. Results and Analysis

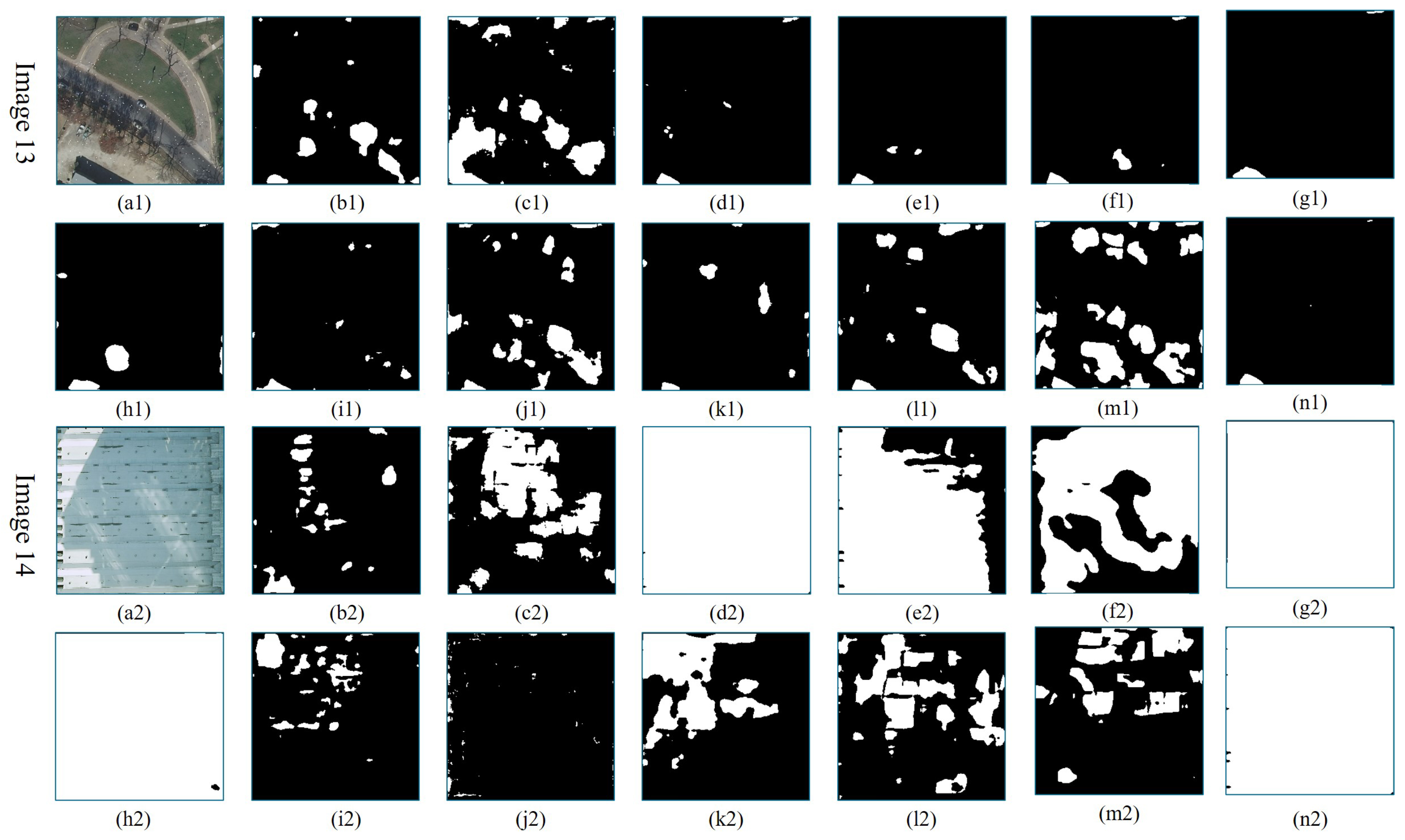

4.1. Massachusetts Dataset

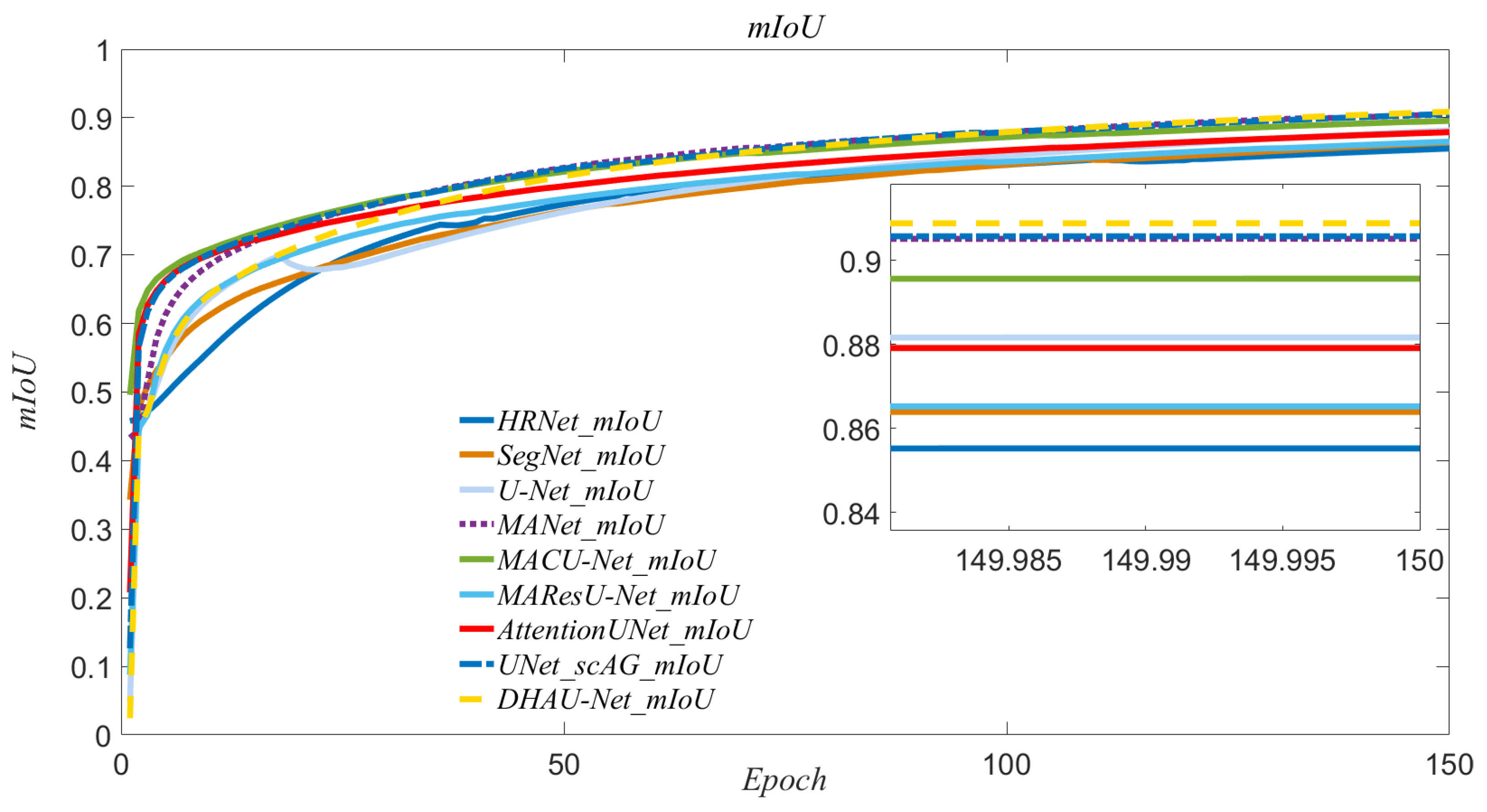

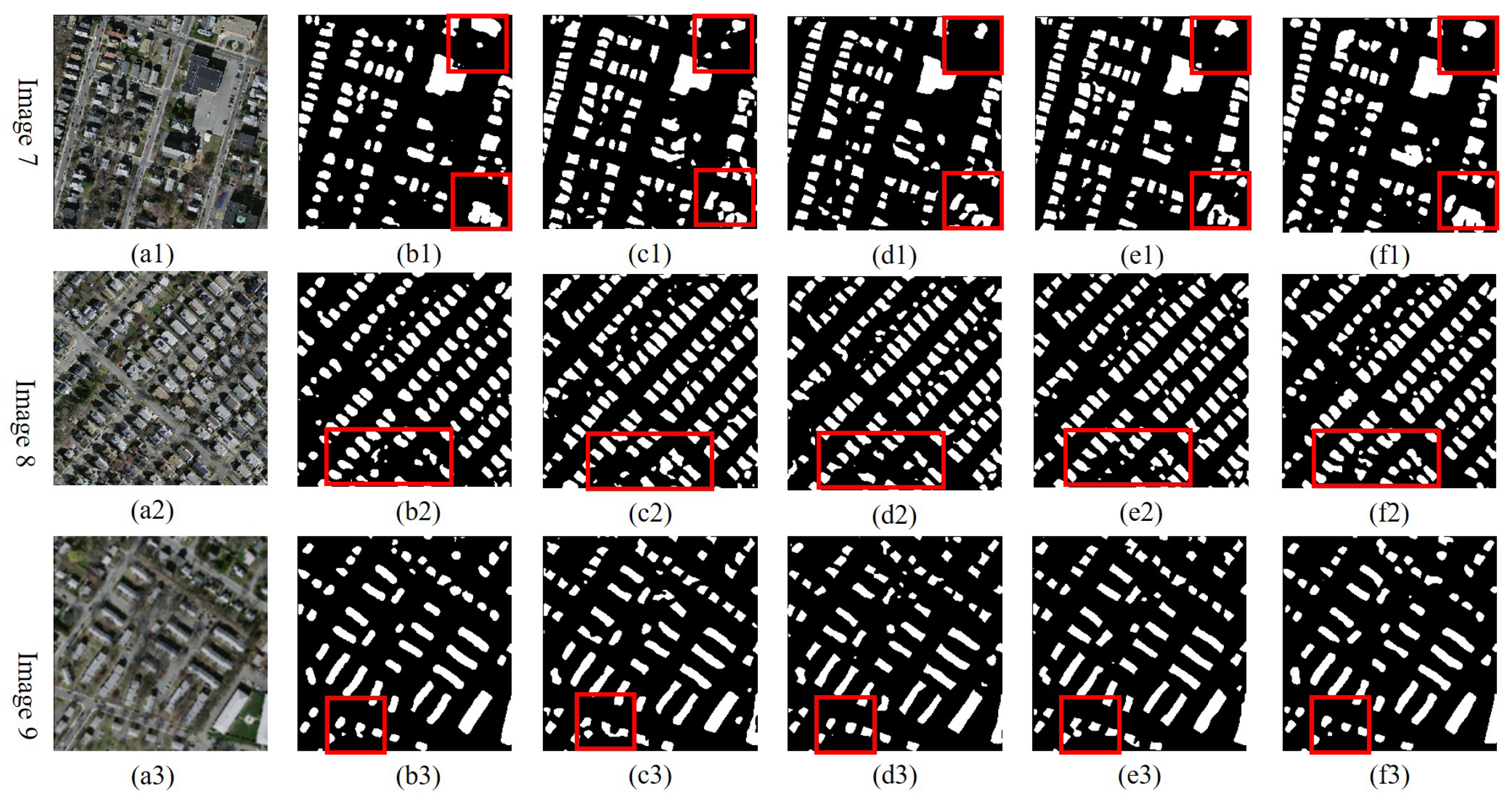

4.1.1. Model Comparison Experiment

4.1.2. Comparison Experiment for the Attention Module

4.1.3. Attention Module-Based Transfer Learning Experiment

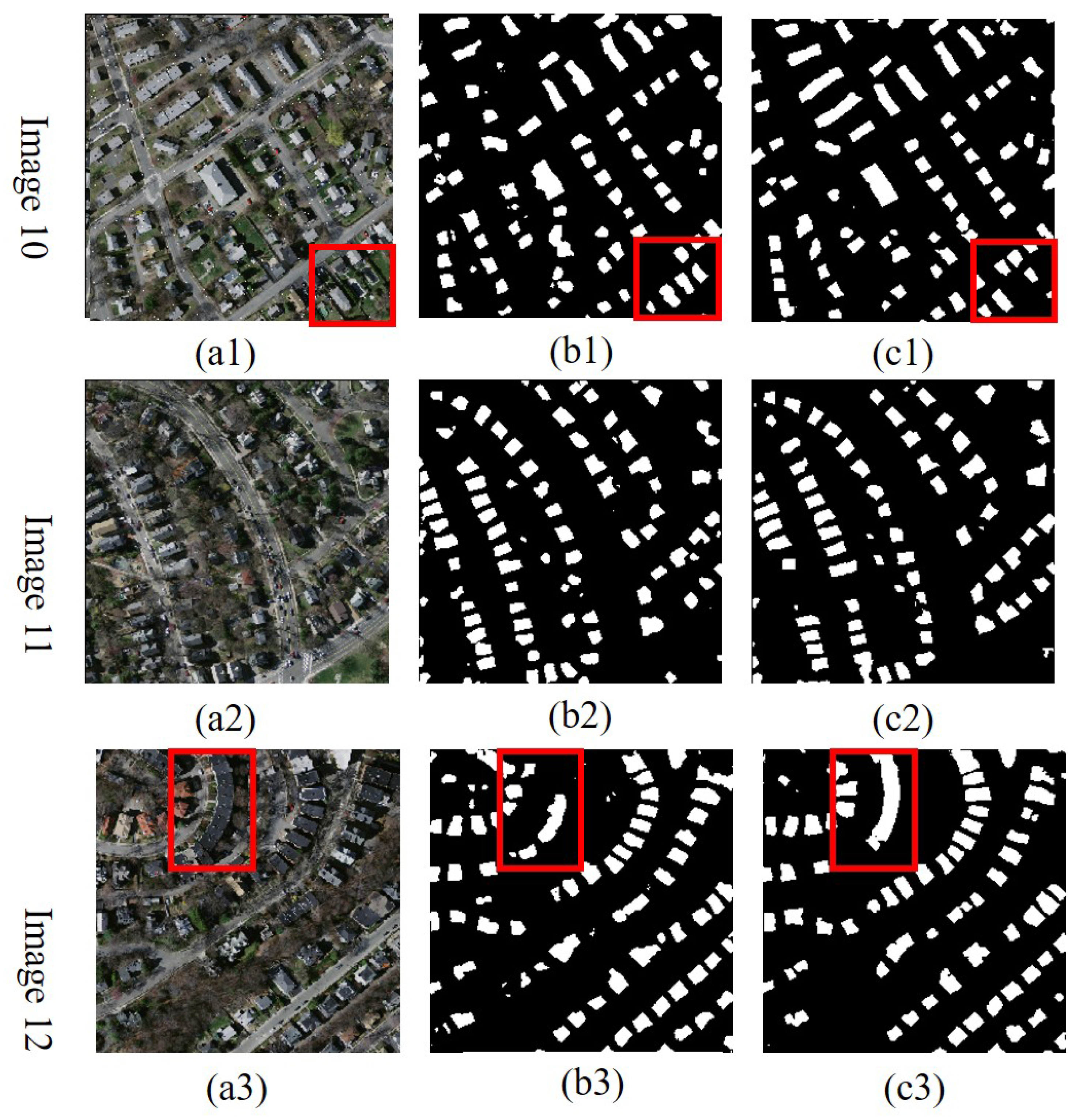

4.2. Inria Aerial Image Labeling Dataset

4.3. Time Complexity Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Mostafa, E.; Li, X.; Sadek, M.; Dossou, J.F. Monitoring and Forecasting of Urban Expansion Using Machine Learning-Based Techniques and Remotely Sensed Data: A Case Study of Gharbia Governorate, Egypt. Remote Sens. 2021, 13, 4498.

- Ünlü, R.; Kiriş, R. Detection of Damaged Buildings after an Earthquake with Convolutional Neural Networks in Conjunction with Image Segmentation. Vis. Comput. 2022, 38, 685–694.

- Reksten, J.H.; Salberg, A.B. Estimating Traffic in Urban Areas from Very-High Resolution Aerial Images. Int. J. Remote Sens. 2021, 42, 865–883.

- Guo, R.; Xiao, P.; Zhang, X.; Liu, H. Updating land cover map based on change detection of high-resolution remote sensing images. J. Appl. Remote Sens. 2021, 15, 044507.

- Wang, J.; Yang, X.; Qin, X.; Ye, X.; Qin, Q. An Efficient Approach for Automatic Rectangular Building Extraction From Very High Resolution Optical Satellite Imagery. IEEE Geosci. Remote Sens. Lett. 2015, 12, 487–491.

- Zhang, X.P.; Desai, M. Segmentation of bright targets using wavelets and adaptive thresholding. IEEE Trans. Image Process. 2001, 10, 1020–1030.

- Felzenszwalb, P.F.; Huttenlocher, D.P. Efficient graph-based image segmentation. Int. J. Comput. Vis. 2004, 59, 167–181.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. In Advances in Neural Information Processing Systems; Pereira, F., Burges, C., Bottou, L., Weinberger, K., Eds.; NIPS’12; Curran Associates, Incorporated: Red Hook, NY, USA, 2012; Volume 25, pp. 1097–1105.

- Li, K.; Cheng, G.; Bu, S.; You, X. Rotation-Insensitive and Context-Augmented Object Detection in Remote Sensing Images. IEEE Trans. Geosci. Remote Sens. 2018, 56, 2337–2348.

- Xia, G.S.; Bai, X.; Ding, J.; Zhu, Z.; Belongie, S.; Luo, J.; Datcu, M.; Pelillo, M.; Zhang, L. DOTA: A Large-Scale Dataset for Object Detection in Aerial Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 3974–3983.

- Wei, Z.; Huang, Y.; Chen, Y.; Zheng, C.; Gao, J. A-ESRGAN: Training Real-World Blind Super-Resolution with Attention U-Net Discriminators. In Proceedings of the PRICAI 2023: Trends in Artificial Intelligence: 20th Pacific Rim International Conference on Artificial Intelligence, PRICAI 2023, Jakarta, Indonesia, 15–19 November 2023; Proceedings, Part III. Springer: Berlin/Heidelberg, Germany, 2023; pp. 16–27.

- Rehman, M.U.; Ryu, J.; Nizami, I.F.; Chong, K.T. RAAGR2-Net: A brain tumor segmentation network using parallel processing of multiple spatial frames. Comput. Biol. Med. 2023, 152, 106426.

- Ryu, J.; Rehman, M.U.; Nizami, I.F.; Chong, K.T. SegR-Net: A deep learning framework with multi-scale feature fusion for robust retinal vessel segmentation. Comput. Biol. Med. 2023, 163, 107132.

- Kemker, R.; Salvaggio, C.; Kanan, C. Algorithms for semantic segmentation of multispectral remote sensing imagery using deep learning. ISPRS J. Photogramm. Remote Sens. 2018, 145, 60–77.

- Li, G.; Jiang, S.; Yun, I.; Kim, J.; Kim, J. Depth-Wise Asymmetric Bottleneck with Point-Wise Aggregation Decoder for Real-Time Semantic Segmentation in Urban Scenes. IEEE Access 2020, 8, 27495–27506.

- Zhang, C.; Sargent, I.; Pan, X.; Li, H.; Gardiner, A.; Hare, J.; Atkinson, P.M. Joint Deep Learning for land cover and land use classification. Remote Sens. Environ. 2019, 221, 173–187.

- Chen, B.; Liu, Y.; Zhang, Z.; Lu, G.; Kong, A.W.K. TransAttUnet: Multi-Level Attention-Guided U-Net with Transformer for Medical Image Segmentation. IEEE Trans. Emerg. Top. Comput. Intell. 2024, 8, 55–68.

- Liu, Y.; Yao, J.; Lu, X.; Xia, M.; Wang, X.; Liu, Y. RoadNet: Learning to Comprehensively Analyze Road Networks in Complex Urban Scenes from High-Resolution Remotely Sensed Images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 2043–2056.

- Samie, A.; Abbas, A.; Azeem, M.M.; Hamid, S.; Iqbal, M.A.; Hasan, S.S.; Deng, X. Examining the impacts of future land use/land cover changes on climate in Punjab province, Pakistan: Implications for environmental sustainability and economic growth. Environ. Sci. Pollut. Res. 2020, 27, 25415–25433.

- Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. arXiv 2014, arXiv:1411.4038.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015, Munich, Germany, 5–9 October 2015; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Sun, K.; Zhao, Y.; Jiang, B.; Cheng, T.; Xiao, B.; Liu, D.; Mu, Y.; Wang, X.; Liu, W.; Wang, J. High-Resolution Representations for Labeling Pixels and Regions. arXiv 2019, arXiv:1904.04514.

- Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

- Wei, Y.; Liu, X.; Lei, J.; Yue, R.; Feng, J. Multiscale feature U-Net for remote sensing image segmentation. J. Appl. Remote Sens. 2022, 16, 016507.

- Dong, R.; Pan, X.; Li, F. DenseU-Net-Based Semantic Segmentation of Small Objects in Urban Remote Sensing Images. IEEE Access 2019, 7, 65347–65356.

- Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.C.H.; Heinrich, M.P.; Misawa, K.; Mori, K.; McDonagh, S.G.; Hammerla, N.Y.; Kainz, B.; et al. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.

- Li, R.; Duan, C.; Zheng, S.; Zhang, C.; Atkinson, P.M. MACU-Net for Semantic Segmentation of Fine-Resolution Remotely Sensed Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8007205.

- Lu, M.; Zhang, Y.X.; Du, X.; Chen, T.; Liu, S.; Lei, T. Attention-Based DSM Fusion Network for Semantic Segmentation of High-Resolution Remote-Sensing Images. In Proceedings of the International Conference on Natural Computation, Fuzzy Systems and Knowledge Discovery, Xi’an, China, 1–3 August 2020; Volume 88, pp. 610–618.

- Khanh, T.L.B.; Dao, D.P.; Ho, N.H.; Yang, H.J.; Baek, E.T.; Lee, G.; Kim, S.H.; Yoo, S.B. Enhancing U-Net with Spatial-Channel Attention Gate for Abnormal Tissue Segmentation in Medical Imaging. Appl. Sci. 2020, 10, 5729.

- Li, R.; Zheng, S.; Duan, C.; Su, J.; Zhang, C. Multistage Attention ResU-Net for Semantic Segmentation of Fine-Resolution Remote Sensing Images. IEEE Geosci. Remote Sens. Lett. 2022, 19, 8009205.

- Yu, M.; Chen, X.; Zhang, W.; Liu, Y. AGs-Unet: Building Extraction Model for High Resolution Remote Sensing Images based on Attention Gates U Network. Sensors 2022, 22, 2932.

- Xu, L.; Liu, Y.; Yang, P.; Chen, H.; Zhang, H.; Wang, D.; Zhang, X. HA U-Net: Improved Model for Building Extraction From High Resolution Remote Sensing Imagery. IEEE Access 2021, 9, 101972–101984.

- Mnih, V. Machine Learning for Aerial Image Labeling. Ph.D. Thesis, University of Toronto, Toronto, ON, Canada, 2013.

- Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes Dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

| Reference \ Prediction | Positive | Negative |
|---|---|---|
| Positive | True Positive (TP) | False Negative (FN) |
| Negative | False Positive (FP) | True Negative (TN) |
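The pixel-wise metrics reported in the result tables can be derived from these four counts. The sketch below assumes the usual binary definitions, with building as the positive class and mIoU taken as the mean of the building IoU and the background IoU; the exact formulas of Section 3.3 are not reproduced here, and the counts in the example are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # building pixels predicted as building
    fp: int  # background pixels predicted as building
    fn: int  # building pixels predicted as background
    tn: int  # background pixels predicted as background


def segmentation_metrics(c: Confusion) -> dict:
    """Binary segmentation metrics; assumes every denominator is non-zero."""
    total = c.tp + c.fp + c.fn + c.tn
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    iou_building = c.tp / (c.tp + c.fp + c.fn)
    iou_background = c.tn / (c.tn + c.fp + c.fn)
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        # Assumed definition: mean IoU over the two classes.
        "miou": (iou_building + iou_background) / 2,
    }


# Illustrative counts for a 1000-pixel tile (not taken from the paper).
m = segmentation_metrics(Confusion(tp=80, fp=10, fn=20, tn=890))
print({name: round(value, 4) for name, value in m.items()})
```

With these counts, accuracy is 970/1000 = 0.97 and F1 equals 2TP/(2TP + FP + FN) = 160/190. Because background pixels usually dominate remote sensing tiles, accuracy and background IoU tend to be high, which is why precision, recall, F1 and mIoU are more informative for comparing building segmentation models.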

| Method | Accuracy | mIoU | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| HRNet | 0.9688 | 0.8552 | 0.6493 | 0.6254 | 0.6371 |
| SegNet | 0.9704 | 0.8639 | 0.8903 | 0.8391 | 0.8639 |
| U-Net | 0.9748 | 0.8816 | 0.9152 | 0.8536 | 0.8833 |
| MANet | 0.9799 | 0.9051 | 0.9252 | 0.8926 | 0.9086 |
| MACU-Net | 0.9778 | 0.8956 | 0.9191 | 0.8789 | 0.8985 |
| AttentionUNet | 0.9740 | 0.8791 | 0.9064 | 0.8564 | 0.8807 |
| MAResU-Net | 0.9707 | 0.8652 | 0.8897 | 0.8425 | 0.8655 |
| UNet_scAG | 0.9799 | 0.9057 | 0.9161 | 0.9028 | 0.9094 |
| DHAU-Net | 0.9808 | 0.9088 | 0.9300 | 0.8932 | 0.9112 |
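F1 is the harmonic mean of precision and recall, so the last column of the Massachusetts table can be cross-checked against the two before it. The snippet below copies those three columns and recomputes F1; assuming F1 was computed from the same precision and recall that are listed, the recomputed values agree with the reported ones to within about 1e-4, consistent with rounding the inputs to four decimals.

```python
# (precision, recall, reported F1) copied from the Massachusetts comparison table
MASSACHUSETTS = {
    "HRNet": (0.6493, 0.6254, 0.6371),
    "SegNet": (0.8903, 0.8391, 0.8639),
    "U-Net": (0.9152, 0.8536, 0.8833),
    "MANet": (0.9252, 0.8926, 0.9086),
    "MACU-Net": (0.9191, 0.8789, 0.8985),
    "AttentionUNet": (0.9064, 0.8564, 0.8807),
    "MAResU-Net": (0.8897, 0.8425, 0.8655),
    "UNet_scAG": (0.9161, 0.9028, 0.9094),
    "DHAU-Net": (0.9300, 0.8932, 0.9112),
}


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


for name, (p, r, reported) in MASSACHUSETTS.items():
    computed = f1_score(p, r)
    print(f"{name:<14} computed={computed:.4f} reported={reported:.4f} diff={computed - reported:+.5f}")
```

The check is a quick sanity test for transcription errors in results tables: a row whose recomputed F1 differed noticeably from the reported one would point to a typo in one of the three columns.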

| Method | Accuracy | mIoU | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| U-Net + CBAM | 0.9525 | 0.7876 | 0.8378 | 0.7132 | 0.7705 |
| U-Net + SE | 0.9763 | 0.8885 | 0.9204 | 0.8631 | 0.8909 |
| U-Net + HAM-1 | 0.9725 | 0.8721 | 0.9037 | 0.8426 | 0.8720 |
| U-Net + HAM-2 | 0.9785 | 0.8984 | 0.9236 | 0.8799 | 0.9012 |
| DHAU-Net | 0.9808 | 0.9088 | 0.9300 | 0.8932 | 0.9112 |

| Methods∖EI | Accuracy | mIoU | Precision | Recall | F1 Score |

|---|---|---|---|---|---|

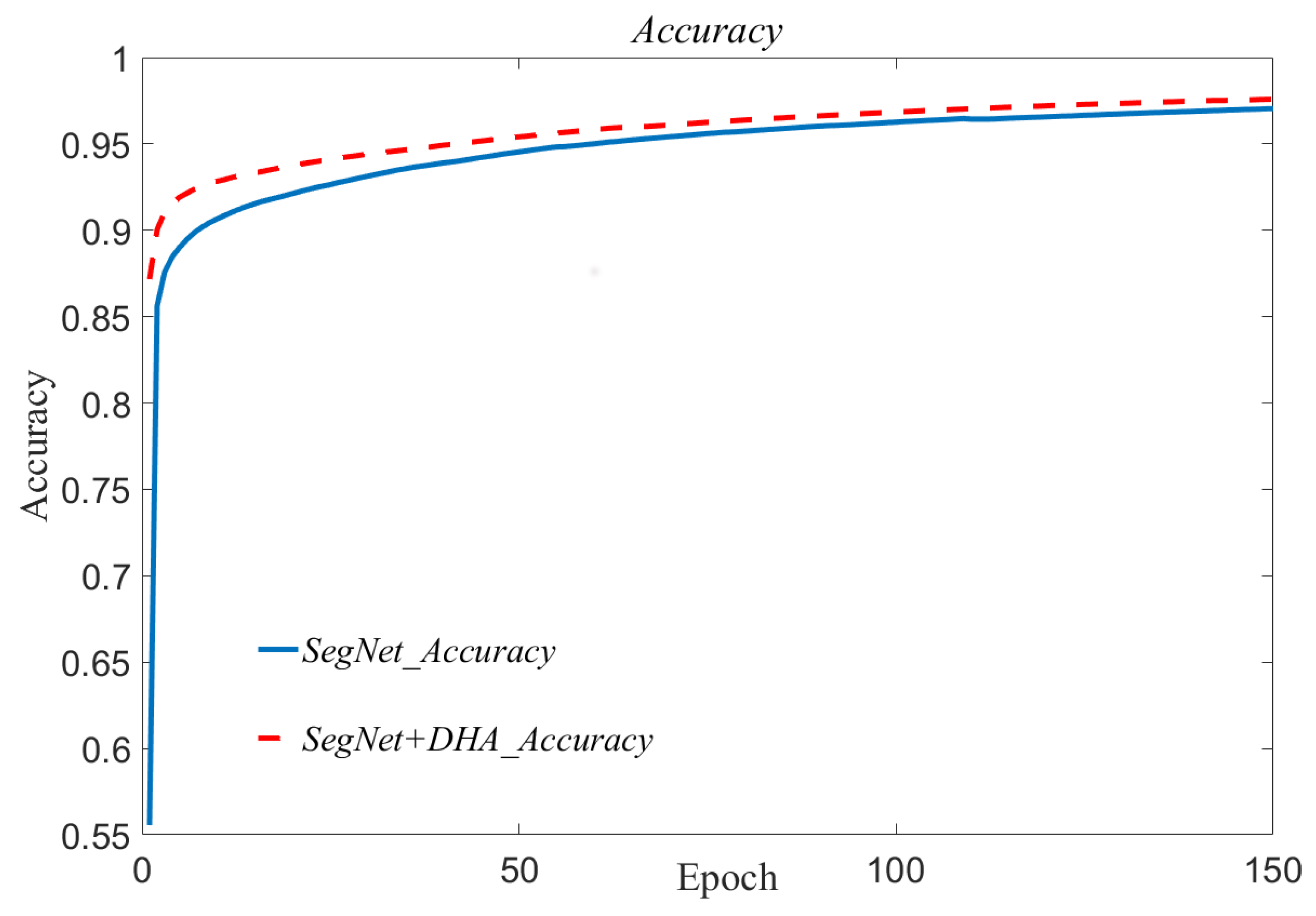

| SegNet | 0.9704 | 0.8639 | 0.8903 | 0.8391 | 0.8639 |

| SegNet + DHA | 0.9759 | 0.8884 | 0.9007 | 0.8817 | 0.8911 |
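The effect of adding DHA to SegNet in the transfer learning experiment can be summarized as per-metric absolute gains. The snippet copies the two SegNet rows from the table; every metric improves, and recall shows the largest increase (+0.0426), followed by F1 (+0.0272) and mIoU (+0.0245).

```python
METRICS = ("Accuracy", "mIoU", "Precision", "Recall", "F1 Score")
SEGNET = (0.9704, 0.8639, 0.8903, 0.8391, 0.8639)
SEGNET_DHA = (0.9759, 0.8884, 0.9007, 0.8817, 0.8911)

# Absolute gain per metric, rounded to the table's four decimals.
gains = {m: round(after - before, 4) for m, before, after in zip(METRICS, SEGNET, SEGNET_DHA)}

for metric, gain in gains.items():
    print(f"{metric:<10} +{gain:.4f}")
print("largest gain:", max(gains, key=gains.get))
```

Absolute differences are used rather than relative ones because all five metrics already lie on the same 0 to 1 scale.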

| Method | Accuracy | mIoU | Precision | Recall | F1 Score |
|---|---|---|---|---|---|
| HRNet | 0.9477 | 0.8520 | 0.9116 | 0.8287 | 0.8682 |
| SegNet | 0.9569 | 0.8775 | 0.9168 | 0.8715 | 0.8936 |
| U-Net | 0.9668 | 0.9033 | 0.9503 | 0.8834 | 0.9156 |
| MANet | 0.9612 | 0.8892 | 0.9253 | 0.8848 | 0.9046 |
| MACU-Net | 0.9574 | 0.8793 | 0.9131 | 0.8786 | 0.8955 |
| AttentionUNet | 0.9501 | 0.8618 | 0.9075 | 0.8509 | 0.8782 |
| MAResU-Net | 0.9494 | 0.8579 | 0.9001 | 0.8507 | 0.8747 |
| UNet_scAG | 0.9776 | 0.8802 | 0.9110 | 0.8823 | 0.8964 |
| U-Net + HAM-1 | 0.9548 | 0.8695 | 0.9358 | 0.8356 | 0.8829 |
| U-Net + HAM-2 | 0.9467 | 0.8488 | 0.9124 | 0.8193 | 0.8633 |
| U-Net + CBAM | 0.9684 | 0.9079 | 0.9480 | 0.8909 | 0.9185 |
| U-Net + SE | 0.9551 | 0.8710 | 0.9295 | 0.8449 | 0.8851 |
| DHAU-Net | 0.9688 | 0.9089 | 0.9519 | 0.8897 | 0.9197 |
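No single method wins every column of the Inria table, so it can help to list the top scorer per metric. The snippet copies the values from that table; it shows DHAU-Net leading on mIoU, precision and F1, while UNet_scAG has the highest accuracy and U-Net + CBAM the highest recall.

```python
METRICS = ("Accuracy", "mIoU", "Precision", "Recall", "F1 Score")

# Rows copied from the Inria Aerial Image Labeling results table.
INRIA = {
    "HRNet": (0.9477, 0.8520, 0.9116, 0.8287, 0.8682),
    "SegNet": (0.9569, 0.8775, 0.9168, 0.8715, 0.8936),
    "U-Net": (0.9668, 0.9033, 0.9503, 0.8834, 0.9156),
    "MANet": (0.9612, 0.8892, 0.9253, 0.8848, 0.9046),
    "MACU-Net": (0.9574, 0.8793, 0.9131, 0.8786, 0.8955),
    "AttentionUNet": (0.9501, 0.8618, 0.9075, 0.8509, 0.8782),
    "MAResU-Net": (0.9494, 0.8579, 0.9001, 0.8507, 0.8747),
    "UNet_scAG": (0.9776, 0.8802, 0.9110, 0.8823, 0.8964),
    "U-Net + HAM-1": (0.9548, 0.8695, 0.9358, 0.8356, 0.8829),
    "U-Net + HAM-2": (0.9467, 0.8488, 0.9124, 0.8193, 0.8633),
    "U-Net + CBAM": (0.9684, 0.9079, 0.9480, 0.8909, 0.9185),
    "U-Net + SE": (0.9551, 0.8710, 0.9295, 0.8449, 0.8851),
    "DHAU-Net": (0.9688, 0.9089, 0.9519, 0.8897, 0.9197),
}


def best_by_metric(table: dict) -> dict:
    """Return the method with the highest score in each metric column."""
    best = {}
    for i, metric in enumerate(METRICS):
        best[metric] = max(table, key=lambda name: table[name][i])
    return best


best = best_by_metric(INRIA)
for metric, method in best.items():
    print(f"{metric:<10} {method} ({INRIA[method][METRICS.index(metric)]:.4f})")
```

Reading the table per metric in this way makes the trade-off explicit: the margin of DHAU-Net over U-Net + CBAM on mIoU (0.9089 vs. 0.9079) and F1 (0.9197 vs. 0.9185) is small on this dataset.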

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lei, J.; Liu, X.; Yang, H.; Zeng, Z.; Feng, J. Dual Hybrid Attention Mechanism-Based U-Net for Building Segmentation in Remote Sensing Images. Appl. Sci. 2024, 14, 1293. https://doi.org/10.3390/app14031293

Lei J, Liu X, Yang H, Zeng Z, Feng J. Dual Hybrid Attention Mechanism-Based U-Net for Building Segmentation in Remote Sensing Images. Applied Sciences. 2024; 14(3):1293. https://doi.org/10.3390/app14031293

Chicago/Turabian Style: Lei, Jingxiong, Xuzhi Liu, Haolang Yang, Zeyu Zeng, and Jun Feng. 2024. "Dual Hybrid Attention Mechanism-Based U-Net for Building Segmentation in Remote Sensing Images" Applied Sciences 14, no. 3: 1293. https://doi.org/10.3390/app14031293

APA Style: Lei, J., Liu, X., Yang, H., Zeng, Z., & Feng, J. (2024). Dual Hybrid Attention Mechanism-Based U-Net for Building Segmentation in Remote Sensing Images. Applied Sciences, 14(3), 1293. https://doi.org/10.3390/app14031293