3.2. Regression Approach

In Paulo’s datasets, student performance is stored as a number between 1 and 20. Then, the 5-level grading system classifies student performance into 5 levels according to

Table 2, making it much more discrete than its original value.

From the performance reported in

Table 1, SVM performs the worst on 5-level grading out of all four DM methods, while RF performs better. Considering that RF is not only suitable for classification tasks but also for regression tasks [

17], it is assumed that the regression approach is better than the classification approach in predicting student performance. Furthermore, predicting student performance with a 20-point grading can best reveal the student performance on a more continuous distribution. To verify the assumption that, compared to a classification approach, a regression approach is more suitable for predicting student performance, this research employs a regression approach with the same DM techniques used in Paulo’s research to compare the difference between a regression approach and a classification approach.

In the experiment of this research, NNs and RFs are trained with a 20-point grading scale. Instead of training the NNs and RFs to predict the likelihood that a student “belongs to” a grade, the NNs and RFs directly predict the value of a student’s performance. During the training process, the Mean Squared Error (MSE) is used as the loss function of the NNs and RFs. The NNs used in this experiment all have one input layer, two hidden layers of size of 16 and 4, respectively, and an output layer of output size 1. All layers of the NNs are fully connected. The RFs used in this experiment all consist of 1000 estimators. SVM is replaced by Support Vector Regression (SVR), which uses an SVM structure for regression tasks. In this experiment, the Radial Basis Function (RBF) is chosen as the kernel function of the SVR, which projects the student performance data into a hyper-panel where the SVR can learn the regression. During the testing process, each model first predicts student performance and outputs a float number. The float number is then converted into its nearest integer (from 1 to 20). The predicted grade is then converted into a 5-level grading system, according to

Table 2. Then, the prediction accuracy is calculated with the converted 5-level grades. The experiment in this research uses 70% of the data from the datasets as training data and the other 30% of the data as test data for both the mathematics performance dataset and the Portuguese performance dataset. The experiment results are shown in

Table 3.

Based on the experiment results, SVR and NN using the regression approach outperform those using the classification approach by an average of 15.2%. SVR for mathematics performance prediction achieved a better accuracy of 21.9%, showing the great advantage of the regression approach in predicting students’ performance. In contrast to SVR and NN, the performance of RF changes slightly, which can be considered an experimental error. The experiment result supports the assumption that the regression approach is more suitable for predicting student performance.

3.3. Generation and Use of Synthetic Data

According to

Table 1 and

Table 3, it is evident that NNs perform less effectively on the mathematics dataset compared to the Portuguese dataset, regardless of whether the regression or classification approach is used. With limited artificial design in their structures, simple fully connected NNs are very flexible and, thus, can perform many tasks well. Less artificial design also makes NNs prefer large training datasets; since everything in an NN has to be learned from the very start, the more data, the clearer the knowledge, and the better the learning result.

Given that the student mathematics performance dataset used in this research is smaller than the Portuguese dataset, it is possible that the lack of student mathematics performance data causes NNs to perform worse on the mathematics dataset. Although the best way to improve the performance of NNs on small datasets is to collect more data, it is still unavoidable for researchers to encounter conditions where data volume is limited. Considering the differences between schools, regions, teacher skills, etc., it is difficult to combine student data from different schools. Furthermore, due to privacy concerns, many students may not agree with the use of their personal information for educational data mining. Improving prediction performance on small datasets is still meaningful.

AI-Generated Content (AIGC) has recently become a hot topic, while research into generative AI has been underway for a long time. From 2014 on, Goodfellow has been conducting research using two adversarial networks to generate images [

18] and named the networks Generative Adversarial Networks (GANs). After the success of GANs, some researchers used AI-generated images to improve the performance of models on small datasets [

19,

20]. In this research, GANs are chosen to generate student performance data to augment the student mathematics dataset.

The student data used in this research contain 33 features, each of which has a different value domain. Some of the features use text values (such as school names, gender, etc.). This makes the student data highly discrete. A common method of dealing with text data is to convert it to 0’s and 1’s using one-hot encoding. However, this makes the number of features in the data much larger—there will be 59 features after one-hot encoding, and most data in the features created by one-hot encoding is 0, which contains very little information. As reported in

Table 4, GANs trained with one-hot encoded data cannot properly generate data that cover the data distribution of the original data. The sampled data are very similar—their ages, Medu, and Fedu values are all the same. Furthermore, their grades G1 and G2 are also too low and hardly appear in the collected dataset. Considering that the original outputs of GANs are re-scaled into the value domain by post-processing (e.g., age smaller than 15 will be rescaled into 15 since the age domain is 15~22), it is possible that the original outputs of the GANs are much worse. The experiment result shows that one-hot encoded data are not compatible with GANs. More steps are needed for the GANs to work properly.

An encoder and a decoder are added to help the GANs. The encoder and decoder used in this research are trained as a three-layer neural network—an input layer of 59 dimensions, a hidden layer of 15 dimensions, and an output layer of 59 dimensions. The weights between the input layer and the hidden layer are chosen as the encoder, and the weights between the hidden layer and the output layer are chosen as the decoder. The hidden layer, which is the output layer of the encoder, uses sigmoid as the activation function—this scales the encoding result into 0~1, making it easier for the GANs to learn. The encoder and the decoder are trained on the whole student mathematics performance dataset to best encode and recover the data. Out of eleven trained autoencoders, the one with the best performance (recovering 75.3% of data after encoding) is chosen to embed the data. The imperfection of the auto-encoder also performs as a noise input in this structure since the decoded data are sometimes not the same as the input. The encoder and decoder compress the data dimension into a hyperspace, forming a denser vector, which alleviates the problem caused by highly discrete student data distribution.

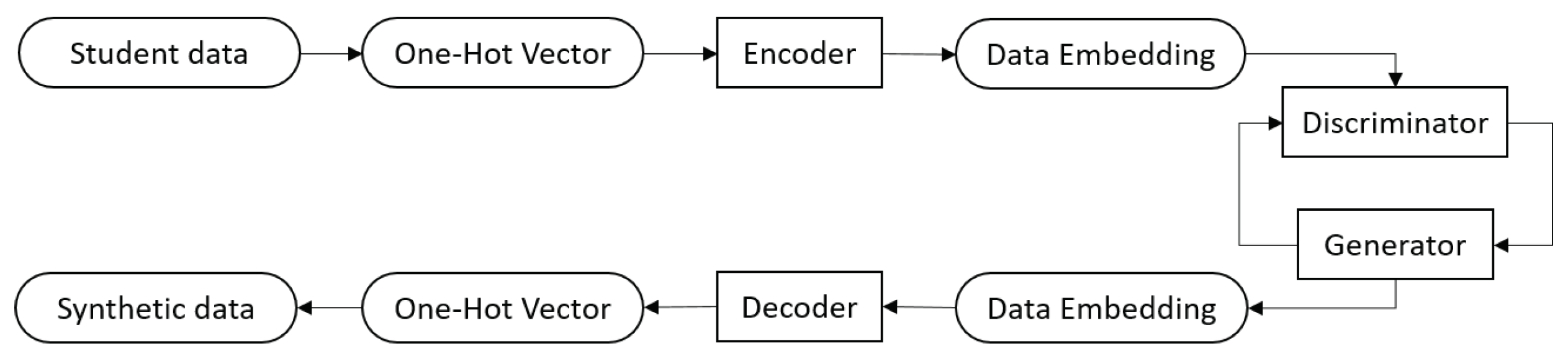

To train and test the GANs, the encoder first embeds the data from a 59-dimension vector (after one-hot encoding) into a 15-dimension vector. Then, the GANs are trained using the 15-dimension vectors as the collected data. After the GANs are trained, the decoder decodes the output vectors from the generator into one-hot vectors, and then the one-hot vectors are eventually parsed into normal student data format (

Figure 1).

A GAN system consists of a generator and a discriminator. The discriminator is trained to discriminate data generated by the generator. The generator is trained to fool the discriminator by generating data that are as real as possible. Ideally, when the GANs training is finished, the discriminator reaches the highest loss because it cannot discriminate the generated data, and the generator reaches the lowest loss—0 because all the generated data are recognized as real data by the discriminator.

The loss function for the discriminator is shown as follows:

The loss function for the generator is shown as follows:

In Equations (

1) and (

2),

x represents the collected data,

z represents the input of the generator,

G(

z) represents generated data,

D(

a) represents the discriminant result of input

a, and

n represents the batch size. BCE stands for binary cross entropy loss.

During the experiment, multiple GANs are trained, and some GANs do not convert as expected.

Table 5 shows some sampled data from an abandoned generator. The sampled data show that this generator generates data with similar feature values. These generated data may match a certain point of the real data distribution so that the discriminator cannot distinguish them. These data pieces are useless because they cannot fill in the gaps between the collected data samples and the real data distribution.

In Goodfellow’s paper [

21], the improvement of GANs in image generation from 2014 to 2017 is simply demonstrated with an illustration. However, when it comes to student data synthesis, it becomes difficult to tell whether a generated piece of student performance data is good or not. In this research, the generator is chosen based on the following rules:

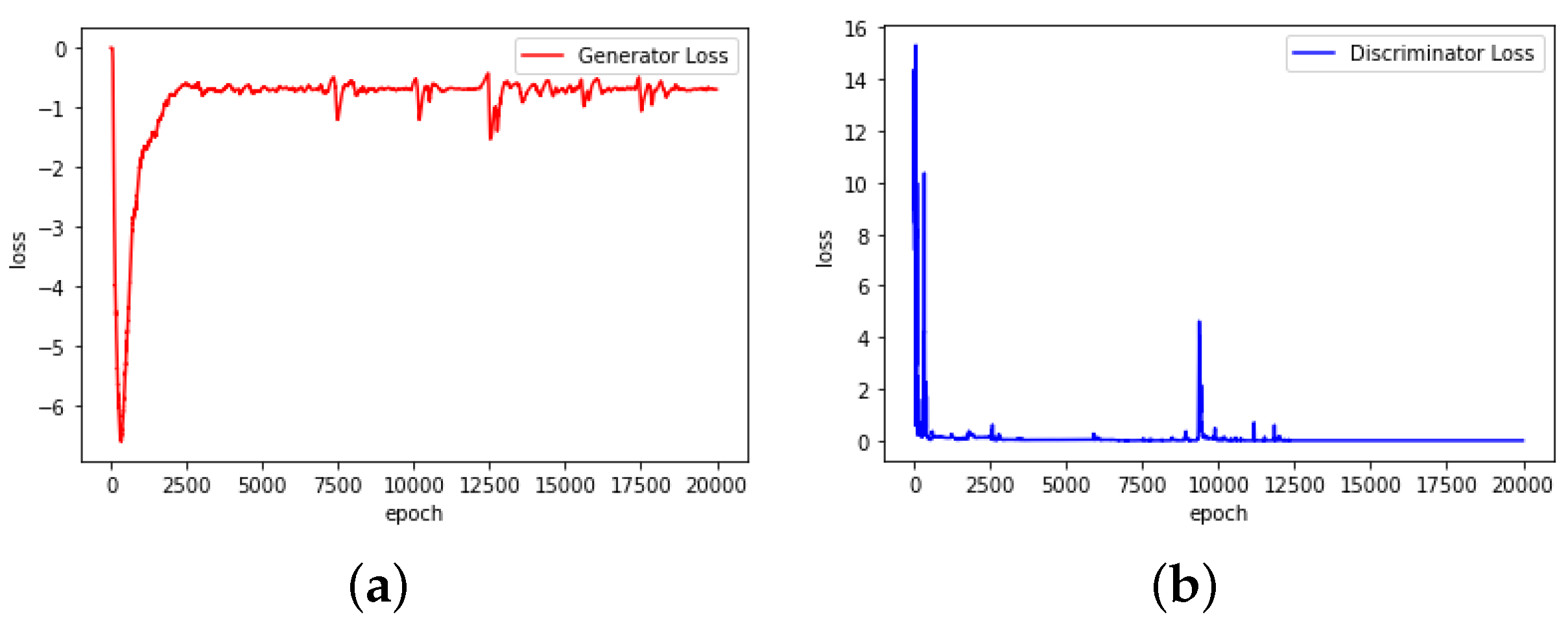

First, the GANs should convert smoothly. As shown in

Figure 2, the abandoned GANs convert with a very unusual loss change. It is found that during the experiment, ln(0) is processed as −1, which has a great impact on the training result.

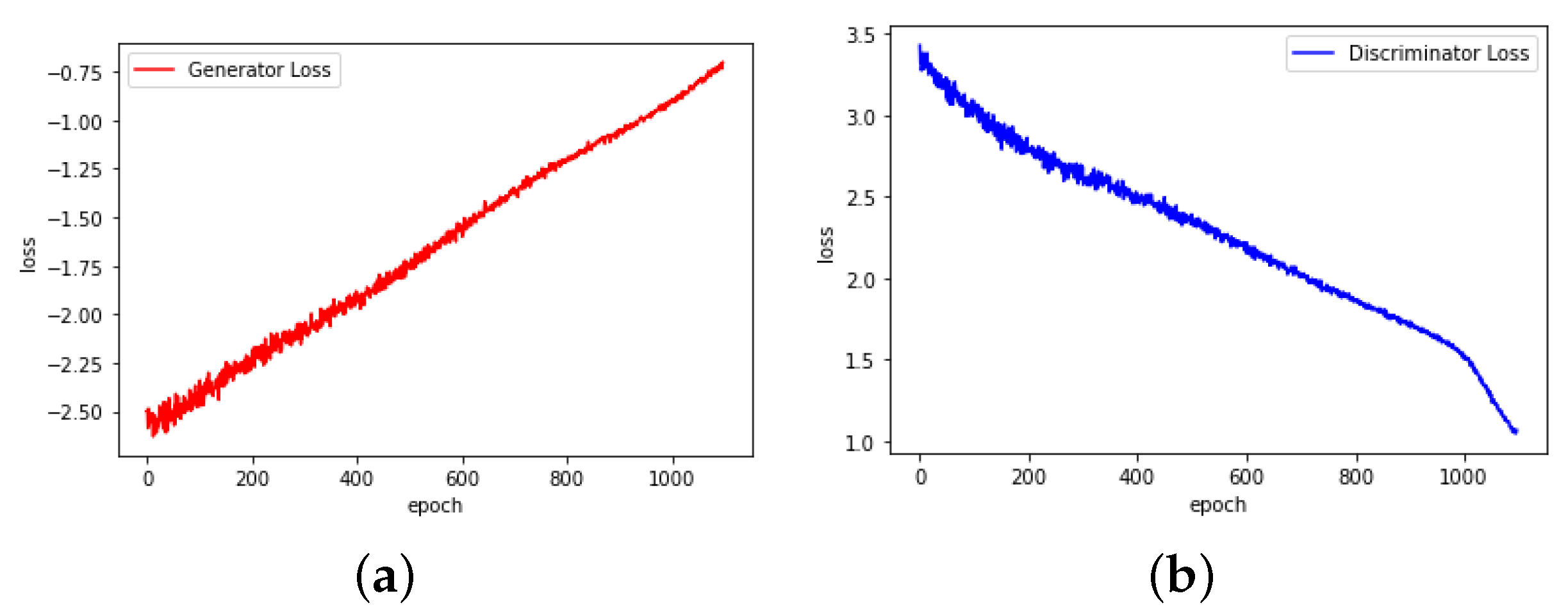

Figure 3 shows the converting process of the chosen GANs, which have smoother loss changes.

Second, the GANs should generate more dispersed data. During the training process of the GANs, it is possible that the generator generates data concentrated in a certain range of the real data distribution. The generated data are real-like but all similar, while the discriminator cannot reflect this problem. By choosing a generator that generates dispersed data, this problem can be alleviated.

The example data generated by GANs chosen according to the above two rules are shown in

Table 6. The data are generated with random inputs to the generator following Gaussian distribution.

To evaluate the ability of the GANs, SVR, NN, and RF are trained again to predict student mathematics performance using a regression approach but on a dataset containing synthetic data. The training dataset contains 100 pieces of synthetic data and 70% of data randomly selected from the mathematics performance dataset collected. The other 30% of the collected data is used as the test set. The result is presented in

Table 7.

An examination of the results presented in

Table 7 shows that although NN has an increase of 2.5% in accuracy, RF and SVR remain largely unaffected. This aligns with the understanding that NN is more responsive to the volume of training data. The marginal improvement in NN’s accuracy suggests that the synthetic data are well matched to the distribution of the collected data. An analysis of the generated data (

Table 6) shows that current synthetic data are still not sufficiently dispersed to fully cover the real data distribution. Some age values never appear in the generated data and failures are all 0. Furthermore, in the generated data, a larger student absence number occurs frequently, which does not match the collected data. As the synthetic data are concentrated, it only smoothes the distribution of the collected data a little, leading to a small improvement in NN’s performance. In addition, the encoder and decoder used in this research are not capable of correctly encoding and decoding each piece of data. Although this feature is used as random noise, it also makes data generation more uncertain. It is expected that a better auto-encoder with more collected data can help GANs achieve better performance.