Abstract

Despite their diminutive neural systems, insects exhibit sophisticated adaptive behaviors in diverse environments. An insect receives various environmental stimuli through its sensory organs and selectively and rapidly integrates them to produce an adaptive motor output. Living organisms commonly have this sensory-motor integration, and attempts have been made for many years to elucidate this mechanism biologically and reconstruct it through engineering. In this review, we provide an overview of the biological analyses of the adaptive capacity of insects and introduce a framework of engineering tools to intervene in insect sensory and behavioral processes. The manifestation of adaptive insect behavior is intricately linked to dynamic environmental interactions, underscoring the significance of experiments maintaining this relationship. An experimental setup incorporating engineering techniques can manipulate the sensory stimuli and motor output of insects while maintaining this relationship. It can contribute to obtaining data that could not be obtained in experiments conducted under controlled environments. Moreover, it may be possible to analyze an insect’s adaptive capacity limits by varying the degree of sensory and motor intervention. Currently, experimental setups based on the framework of engineering tools only measure behavior; therefore, it is not possible to investigate how sensory stimuli are processed in the central nervous system. The anticipated future developments, including the integration of calcium imaging and electrophysiology, hold promise for a more profound understanding of the adaptive prowess of insects.

1. Introduction

The recent shortage of workers due to the declining birthrate and aging population has led to a demand for the automation of tasks and the introduction of systems that can replace human workers. According to a survey by the International Federation of Robotics, the industrial robotics market trend is expected to head back to growth and reach record highs from 2021 onward, despite a period of stagnation due to the impact of the spread of the new coronavirus [1]. Thus, industrial robots in factory production lines and transport robots operating in distribution warehouses contribute to work efficiency and automation [2,3]; however, challenges such as working with humans remain unresolved [4]. Although artificial systems play an active role in limited spaces and tasks, several challenges must be overcome before they can be integrated into human society. While integrating intelligent robots into human society is expected to bring many benefits, we must also consider the social and ethical issues that will emerge. In fact, it has been pointed out that social support robots that interact with people face many ethical issues [5]. Moreover, as a general issue raised, the problems of people losing their jobs as a result of automation and who is responsible when a robot makes a mistake have not been solved. In addition, there is a risk that the data used for learning by artificial intelligence may contain private information, which could lead to more serious privacy violation problems in the future. The most frightening situation is that artificial intelligence may learn wrong data and make decisions that are unacceptable to humans based on wrong inferences, thus causing harm to human society. The potential ethical, privacy, and security issues associated with replacing everything with robots or artificial intelligence cannot be avoided. 
Therefore, it is important that we firmly promote the development of laws such as the “Robot Law” [6] at a time when robots have not yet penetrated society. It is desirable to build a society in which robots coexist with humans by drawing a line between humans and robots (or artificial intelligence).

However, robots have not yet blended into human society. What elements are important for robots to permeate society? Among the elements that make up a robot, “adaptability”, i.e., the ability to perform a wide range of tasks in complex and unknown environments, is an important and highly anticipated capability. Some researchers have tried to obtain this adaptability by fusing AI and robotics, which have been studied independently [7]. This research motivation has been supported worldwide, and competitions such as the RoboCup Challenge [8] and the DARPA Challenge [9,10] have been held. Thanks to advances in computing, artificial systems have acquired a certain degree of adaptability [11], but only to a limited extent. One reason is that, as AI moves into more natural environments, it becomes difficult to formulate a formal, symbolic problem from unreliable sensor data [7]. Another is that, while artificial intelligence has made remarkable progress in areas such as natural language processing and image recognition, where huge datasets are available for learning, it is difficult in the real world, where tasks depend on physical or chemical characteristics, to acquire large datasets that cover all situations. Moreover, wear and deterioration of the sensors and actuators installed on a robot change the amount of information it can obtain and its mobility, and perceiving and relearning these changes in real time while working is impractical. Therefore, for the further development of artificial intelligence, it is important to let artificial intelligence experience the real world and continue to collect data. In other words, further development of artificial intelligence requires a deeper connection between the robotic body and AI. 
Moreover, although the computational power of computers has increased dramatically with the development of integrated circuits, it is difficult to expect further improvements in computational power because the end of Moore’s law, which predicts the number of transistors implemented in an integrated circuit [12], is in sight. Moreover, the scale of microcomputers that can be installed in a robot moving around in an unknown environment is limited, and global communication is not always possible.

As an alternative, methods have been considered to reproduce the body structure and intelligence of living things through engineering [13] and incorporate biological materials into artificial systems [14]. In addition, research has been proposed to investigate the brain function of organisms using robots as a tool for analysis [15]. Of course, it is impossible to perfectly reproduce all the flexibility and agility of sensors and actuators in engineering an imitation of an organism. As a result, systems have been proposed that can incorporate gait patterns similar to animals [16] and localize an odor source in the outside environment [17], but because these are only partial imitations, they have not yet achieved performance comparable to that of organisms. To address this problem, cyborg research [18], which utilizes actual insects as robots, is gaining attention. Current cyborg research focuses on the utilization of insects as moving vehicles, and is thus established by having the operator control the insect’s direction of movement. Insects are equipped with many sensory organs that cannot be imitated by artificial sensors, but current insect cyborgs do not benefit from the use of superior sensory organs. In addition, insects also age or vary from one individual to another, making it difficult to achieve uniform performance. However, among living organisms, more than one million species of insects that have small nervous systems [19] but can behave adaptively have been identified worldwide, and it is thought that there are up to several dozen times as many undiscovered insect species [20]. This suggests that insects have a way of adapting to diverse environments. In addition, an insect does not carry out all operations with its brain, but uses the body as part of its computing machines, thereby reducing the load on the brain [21,22]. This is called embodied intelligence. 
In other words, an organism, including an insect, has a very good balance between the body (hardware) and brain (intelligence or software), and is a good example of the future relationship between robots and AI. An insect can accomplish a variety of tasks despite its small brain by making good use of embodied intelligence. For example, insects have a variety of locomotion strategies, such as walking, flying, and swimming, which are essential for survival [23,24,25]; navigation strategies that skillfully use multiple senses [26]; and swarming behavior that creates an insect society [27]. Insect brain sizes cannot be compared directly because insect species vary in body size. However, most insect brains are extremely small, only a few millimeters in size, despite their multifunctionality. Considering that insects accomplish tasks in real time, their processing power is very high, and there is likely still much to learn from them for designing economical and efficient autonomous robots.

We focus on navigation among the tasks accomplished by an insect. While there are many types of navigation, such as those using polarized light [28] or sound [29], we focus on navigation using odor. Navigation requires the insect to select appropriate actions based on environmental stimuli and to perceive the direction and distance it has traveled. The difficulty of odor-based navigation is that the structure of an odor is complex because it is shaped by air dynamics. Moreover, not only is the odor structure constantly changing, but the complexity of the odor distribution is greatly increased by obstacles and other factors that interfere with the odor. Because of these problems, odor-based navigation is considered difficult to solve from an engineering viewpoint [30,31,32]. On the other hand, many organisms possess the ability to navigate by odor and successfully find food or mates [33]. In particular, the sex pheromone transmission system of moths is one of the best examples of sophisticated odor source localization in animals and has been studied extensively for a long time [34,35,36,37]. Male moths use intermittent sex pheromone information emitted by females to detect females of the same species and orient toward them. Because of the relatively simple relationship between sex pheromone chemicals and exploratory behavior, pheromone source-searching behavior can be induced relatively easily in the laboratory. Therefore, this behavior has been studied as an essential model system to better understand the general mechanisms underlying odor source localization in insects. Accordingly, we focus mainly on odor-based navigation. This article provides an overview of research on the adaptive capacity of insects, reviews methods for intervening with insects, and explores their adaptive behavior using engineering tools.

2. Overview of the Biological Experimental Setup Using Engineering Tools

Insects receive various stimuli from the external world, process them appropriately, and convert them into behavior, enabling them to adapt. This behavior is generated by information acquisition by superior sensory organs and information processing by the microbrain [38], which is only a few millimeters in size. Therefore, various experiments have been conducted in the fields of neuroethology, animal behavior, physiology, genetics, and molecular biology to investigate sensory organs and the central nervous system.

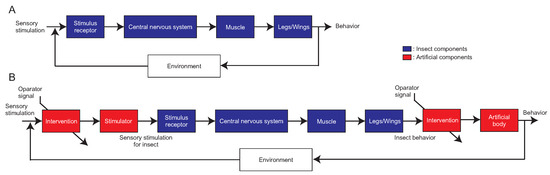

As shown in Figure 1A, when an insect detects a key stimulus among sensory stimuli, a signal is transmitted to the central nervous system and converted into an appropriate motor command. This results in control of the muscles, which emerges as behavior. The point we emphasize in Figure 1 is that insects constantly receive new sensory stimuli from the surrounding environment and generate different behaviors each time. Adaptive behaviors are unlikely to be elicited by the insect alone and seem to emerge through interactions with the environment [39]. In other words, it is difficult to generate adaptive behaviors in open-loop experiments that are disconnected from the natural environment, and research must be advanced from other angles for a true understanding of behavior. However, it is impossible to know when and to what extent insects are receptive to sensory stimuli in free-walking or free-flight experiments. To solve this problem, the insect–machine hybrid system [18,40] and the virtual reality system for insects [41] have recently appeared, incorporating state-of-the-art engineering technology into biological experiments. These systems allow us to conduct experiments while maintaining the relationship between the insect and its environment, and thus, we can measure adaptive behavior. In addition, by intervening in the input of sensory stimuli to the insect and the output of the robot after the insect has acted, as shown in Figure 1B, it is possible to highlight the conditions under which adaptive behavior occurs.

Figure 1.

A block diagram from sensory stimuli to behavioral output. (A) Block diagram of an insect. The central nervous system generates motor commands. (B) Block diagram of intervention system for an insect. Intervention methods vary depending on the type of experimental setup, such as cyborg or VR for insects.

A necessary element of an experimental system that incorporates engineering technology into biological experiments is an interface between the organism and the artificial system, such as electrophysiology, behavior measurement, and electrical stimulation. Once the interface is established, it is possible to manipulate robots using signals from insect physiological responses or behavior, or to control insects by electrical stimulation. This review deals with the control of robots by insects. Previous studies have attempted to control robots using the insect itself or parts of the insect. By taking a part or element of the insect to be investigated and using it to control a robot, the functional contribution of that part in the real world can be clarified. To investigate the characteristics of sensory organs, only the sensory organs can be connected to the robot; to investigate brain functions, the robot can be operated by electrical signals from the brain. For example, to investigate the characteristics of insect sensory organs, behavioral experiments have been conducted using insect antennae as sensors for a robot [42,43]. One study verified the validity of an antennal lobe model by using antennae as sensors for a robot, reading the electroantennogram of the insect, and controlling the robot with a neuron model that represents the antennal lobe in the brain [44]. Another study controlled the robot with the insect brain to investigate how visual information is processed. Many insects exhibit a variety of visually induced behaviors; motion-sensitive visual interneurons are a type of neuron responsible for these behaviors [45,46]. N. Ejaz et al. found that H1 neurons that respond to horizontal optic flow have adaptive gain control for dynamic image motion [47]. The research team also showed that robots can be controlled to perform collision avoidance tasks using techniques that measure the activity of H1 neurons [48]. 
In addition to studies of controlling robots based on physiological responses, other studies have been reported to investigate adaptive functions by changing physical characteristics. A famous example is research on physically changing the length of the legs of freely moving ants [49]. Intervening in leg length can force ants to change their stride length, which is expected to change the frequency of their gait. This change in gait was found to cause ants to stray when searching for nests. In other words, it is clear that ants rely on their internal pedometers to navigate their desert habitat.

Most of the studies described above are invasive experiments. In the case of non-model organisms, the number of organisms that can be experimented on is limited unless a maintenance strain has been established in a laboratory. For that reason, it is ideal to be able to conduct experiments under various conditions in an intact state. To solve this problem, an insect on-board system and VR for insects can be used to measure behavioral changes when various sensory and motor operations are applied under intact conditions. The obstacle avoidance behavior of a cockroach [50], sound-source localization behavior of a cricket [51], and odor-source localization behavior of a silk moth [52,53] have been investigated using the insect on-board system. Insects that have boarded the robot, even though they are from different species, have successfully controlled the robot as their own body and performed the given task appropriately. Recently, a study has been conducted to investigate the phototaxis of a free-walking pill bug by developing a robot equipped with a light stimulator that tracks the pill bug [54]. The advantages of this system are that it can measure the relationship between behavior and sensory stimuli in a more natural walking state, and it can be used for organisms such as centipedes, for which tethered measurement systems are difficult to use.

In an experiment on VR for insects, Kaushik et al. found that dipterans use airflow and odor information for visual navigation [55]. Ando et al. also reported a VR system for source localization that can be used to quantitatively analyze the source localization behavior of crickets [56]. Although not an insect, Radvansky and Dombeck successfully used a VR system to measure olfactory navigation behavior in mammals (mice) [57]. All motile organisms use spatially distributed chemical features in their surroundings to induce behavior, but investigating the principles of this behavior induction has been difficult due to the technical challenges of spatially and temporally controlling chemical concentrations during behavioral experiments. Their research has demonstrated that the introduction of VR into the olfactory navigation experiment of organisms can solve the above problem. As described above, there is much that is not known about the olfactory behavior of organisms due to the complexity and difficulty in quantifying the spatial and temporal nature of the odor as a sensory stimulus, and the same is true for the olfactory behavior of insects. Therefore, the authors have utilized an insect on-board system and VR for insects to investigate the female localization behavior of an adult male silkmoth. In the following chapters, we introduce our behavioral experiments.

3. Insect On-Board System

An insect on-board system is a tethered behavior measurement system with added mobility, in which the insect acts as a pilot and controls the robot. Hence, we can elucidate the sensory-motor integration mechanisms that support adaptive behavior by manipulating the conditions under which sensory stimuli are presented to the pilot insect and when the robot reflects the insect’s movement. The advantages of using the insect on-board system include the ability to conduct behavioral experiments without significantly disrupting the relationship between the organism and its environment, as is the case with the cyborg system, and the possibility of direct comparison with currently proposed behavioral algorithms because the robot is controlled by an insect equipped with the abilities that we want to extract. In particular, it has been extensively used as a tool to elucidate how insects efficiently track invisible odors.

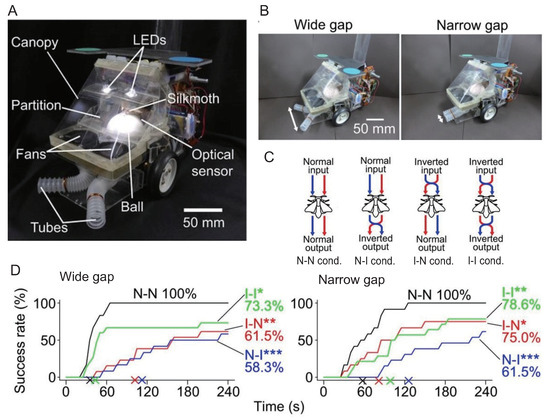

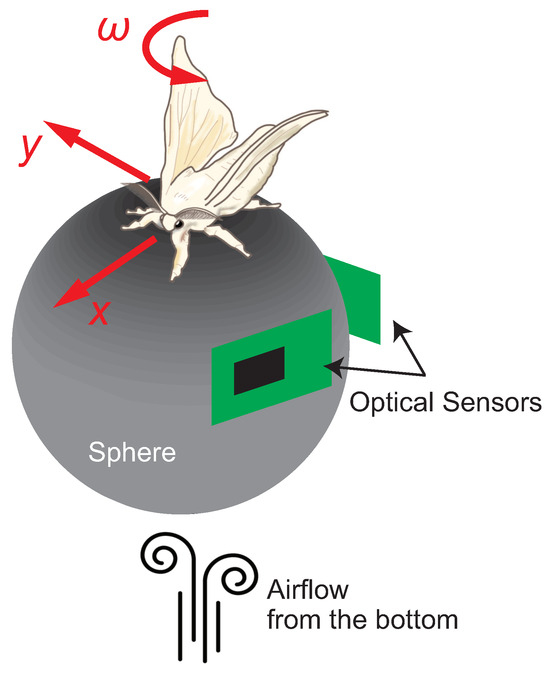

Ando et al. constructed an insect on-board system using an adult male silk moth (Bombyx mori) as the pilot [53] (Figure 2A). Here, we briefly describe the tethered measurement system commonly used to measure the behavior of walking insects [58]. In the tethered measurement system, the insect is placed on a sphere that floats on air supplied from below, as shown in Figure 3. The insect always stays at the top of the sphere because one of its body parts is fixed, but the sphere rotates when the insect moves its legs. The amount of sphere rotation is read by optical sensors placed along the x- and y-axes. As a result, we can measure the amount of movement of the insect. Adult male silk moths elicit female-searching behavior when they detect a female sex pheromone (bombykol) [59]. The silk moth receives sex pheromones through two antennae on its head, and behavioral experiments have shown that it determines and modulates its behavior based on bilateral odor information [60]. Hence, Ando et al. evaluated the robustness of the female localization behavior of the silk moth by manipulating odor-sensory stimuli and intervening in sensory-motor connections [61]. In particular, as shown in Figure 2B, they manipulated the spatial resolution of odor detection by changing the distance between the suction ports that capture odor from the environment. Moreover, they investigated sensory-motor integration during odor tracking by setting conditions in which the odor information provided to the silk moth was inverted by crossing the suction ports, and in which the robot moved in the direction opposite to the movement of the silk moth (Figure 2C). A sex pheromone (bombykol) was placed as the odor source in the behavioral experiment, and the insect on-board system was activated 600 mm downwind of the odor source. The results of the localization experiment are shown in Figure 2D. The horizontal and vertical axes in Figure 2D indicate the elapsed time and localization success rate, respectively. 
The wider the spatial resolution of odor detection (i.e., the greater the distance between the odor suction ports), the shorter the localization time and the higher the success rate. The decrease in the left–right odor concentration difference caused by narrowing the distance between the odor suction ports was related to the decrease in localization efficiency, suggesting that the local left–right odor gradient contributes to appropriate action decisions. In addition, the localization performance was significantly lower in the I-N and N-I conditions than in the N-N condition (Steel’s test, *: p < 0.05, **: p < 0.01, ***: p < 0.001), indicating that bilateral odor information affects the determination of movement direction after odor detection. In contrast, the I-I condition, in which both the odor sensation and direction of movement were reversed, was expected to produce results somewhat similar to the N-N condition. However, the final localization success rate was only 73.3%.
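The four coupling conditions can be read as two independent switches, one on the bilateral odor input and one on the motor output. The following is a minimal sketch of that idea only. The function name `apply_coupling`, the reading of the first letter of a label as the odor input and the second as the motor output, and the modeling of motor inversion as a sign flip of the turning command are all our assumptions for illustration and are not taken from [61].

```python
from typing import Tuple


def apply_coupling(condition: str,
                   odor_lr: Tuple[float, float],
                   turn_rate: float) -> Tuple[Tuple[float, float], float]:
    """Return (odor delivered to the left/right antenna, turn rate sent to the robot).

    condition: "N-N", "I-N", "N-I" or "I-I" (N = normal, I = inverted).
    Assumption: first letter = odor input, second letter = motor output.
    """
    sensory, motor = condition.split("-")
    left, right = odor_lr
    if sensory == "I":
        # Crossing the suction ports swaps what each antenna receives.
        left, right = right, left
    if motor == "I":
        # Hypothetical model of motor inversion: mirror the turning command.
        turn_rate = -turn_rate
    return (left, right), turn_rate
```

Under these assumptions, the I-I condition swaps the odor sides and mirrors the turn, so the two inversions cancel geometrically, which is why a result close to N-N would be expected.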

Figure 2.

Experiments on odor source localization of an adult male silkmoth using an insect on-board system [61]. (A) Appearance of the system used in the experiments. (B) Method for adjusting spatial resolution in odor detection. (C) Coupling condition of sensory and motor output. (D) Localization success rate under each condition. The horizontal and vertical axes indicate elapsed time and localization success rate, respectively. The N-N condition shows the fastest and highest success rate of localization to the odor source, regardless of the spatial odor detection resolution. Steel’s test was performed on the success rate (*: p < 0.05, **: p < 0.01, ***: p < 0.001). All data are licensed under CC-BY.

Figure 3.

Schematic diagram of the tethered behavior measurement system.
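To make the measurement principle concrete, the sketch below shows one way sphere-rotation readings could be turned into a walking trajectory. It is an illustration under stated assumptions, not the implementation used in [58]: we assume one sensor channel reports the ball-surface displacement caused by forward walking and another reports the surface displacement at the equator caused by turning, and the names `integrate_path`, `counts_per_mm`, and `ball_radius_mm` are hypothetical.

```python
import math
from typing import Iterable, List, Tuple


def integrate_path(samples: Iterable[Tuple[float, float]],
                   counts_per_mm: float,
                   ball_radius_mm: float) -> List[Tuple[float, float, float]]:
    """Dead-reckon (x_mm, y_mm, heading_rad) from per-interval sensor counts.

    samples: (forward_counts, turn_counts) for each sampling interval.
    """
    x = y = heading = 0.0
    path = [(x, y, heading)]
    for forward_counts, turn_counts in samples:
        forward_mm = forward_counts / counts_per_mm
        # Arc length at the equator divided by the radius gives the yaw angle.
        heading += (turn_counts / counts_per_mm) / ball_radius_mm
        x += forward_mm * math.cos(heading)
        y += forward_mm * math.sin(heading)
        path.append((x, y, heading))
    return path
```

The same per-interval displacements can be forwarded to a robot or a virtual agent instead of being integrated, which is how the tethered system becomes the input device for the setups described in this review.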

For details of the discussion, we recommend reading the original paper [61]; however, the direction of movement was determined by estimating the local odor gradient using a bilateral odor input. Therefore, reversing the direction of olfactory input increased the likelihood of moving in the direction of low concentration, resulting in a larger proportion of exploratory behavior manifested by rotational movement than by straight-line movement. This may have reduced odor-tracking performance. On the other hand, the reversal of motion output not only causes the silk moth to move in an unintended direction, but also acts as positive visual feedback. This may further cause directional disorientation and decrease the success rate of localization.

Recently, Shigaki and Ando extended the insect on-board system and investigated the performance of odor plume tracking when the presentation of sensory stimuli to silk moths was delayed [62]. The extended insect on-board system is almost the same as that of Ando et al. However, the extended system is equipped with odor sensors and provides pheromone stimuli to the silk moth when the odor sensor detects an odor. In other words, the silk moth can grasp the odor distribution in the environment through an odor sensor. We found that the odor-tracking performance decreased as the time between the reaction of the odor sensor and the presentation of the pheromone to the silk moth increased. This may be explained by the fact that motor manipulations can be compensated for by comparing expected and actual motions, as represented by corollary discharge [63], whereas sensory manipulations cannot. Corollary discharge is a means by which an animal distinguishes sensory input caused by its own movement from sensory input originating in the outside world, and all animals need such a means. Previous research on crickets has revealed corollary discharge at the neural circuit level [64].
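The delay manipulation described above can be pictured as a fixed-length buffer between the odor sensor and the stimulator. The sketch below is a minimal illustration under our own assumptions: the class name `DelayedStimulus`, the discrete control step, and the Boolean sensor output are hypothetical and are not details reported in [62].

```python
from collections import deque


class DelayedStimulus:
    """Relay odor-sensor detections to the stimulator after a fixed number of steps."""

    def __init__(self, delay_steps: int) -> None:
        # Pre-fill with "no odor" so nothing is presented before the delay elapses.
        self._buffer = deque([False] * delay_steps)

    def step(self, sensor_detects: bool) -> bool:
        """Push the current sensor state and return the state to present to the moth."""
        self._buffer.append(sensor_detects)
        return self._buffer.popleft()
```

With `delay_steps=0` the detection is passed through in the same step; increasing `delay_steps` lengthens the interval between sensor response and pheromone presentation, which is the quantity varied in the experiment.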

After detecting an odor, the silk moth shortens its distance from the odor source by moving in a straight line. Hence, this straight-line behavior is an important element of efficiency; however, its behavioral threshold is not constant and has been reported to fluctuate according to serotonin levels in the brain [65]. Furthermore, it has been suggested that a projection neuron from the antennal lobe, the primary olfactory center where information received at the antennae is first transmitted, may be able to interpret the odor distribution in space by differential quantities and not by the absolute concentration of pheromones [66]. Based on these findings, the 60% localization success rate, even with the manipulation of sensory input and motor output, suggests that adaptive behavior is emergent through simple reflex behavior and constant modulation of the threshold for behavioral decision making.

4. Virtual Reality System for an Insect

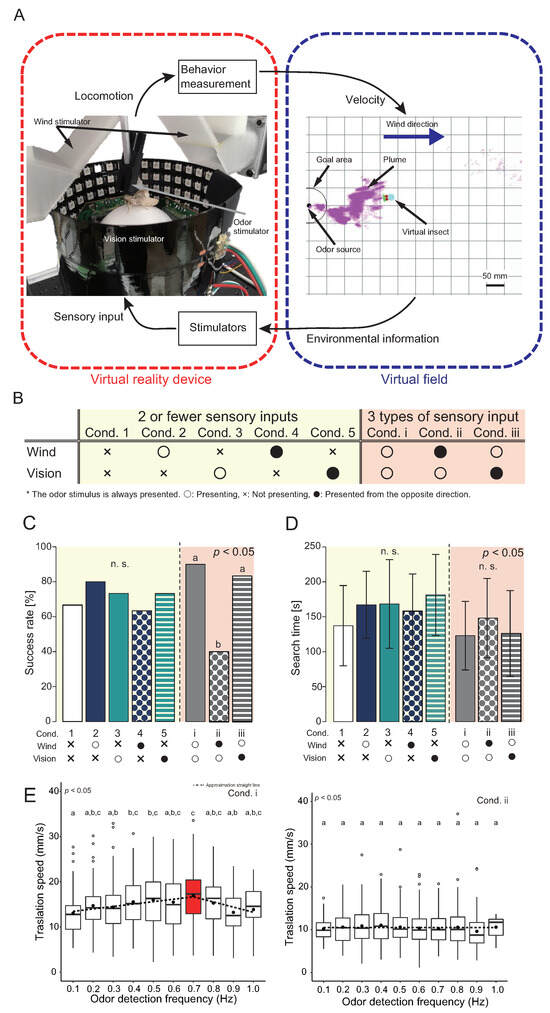

The virtual reality system for an insect can induce the illusion that the insect is moving in a real environment by connecting a behavior measurement device that can provide multisensory stimuli to a virtual space constructed on a computer, as shown in Figure 4A. Yamada et al. [67] focused on the female localization behavior of an adult male silk moth and investigated the integration of multisensory stimuli during localization behavior. This VR is designed based on the tethered measurement system [68], and a multisensory stimulator is installed around the behavioral measurement system. During the experiment, three types of multisensory stimuli were provided: odor, wind, and vision. The odor stimulator provided an odor by spraying air containing sex pheromones onto the right and left antennae on the head of the silk moth at an arbitrary time. The timing of odor spraying was controlled by opening and closing the solenoid valve. Visual stimuli were provided using LED arrays surrounding the silkmoth. The presentation direction of the stripe pattern generated by the LED array is controlled according to the rotation direction of the silk moth because it modifies its behavior by the optical flow [69]. The wind direction stimuli were presented using a push-pull airflow generator called a local exhaust system. The airflow generator was attached to a hollow servomotor, which was controlled according to the heading angle of the silk moth to send air from an arbitrary direction.

Figure 4.

Behavioral experiments using a virtual reality system for an insect [67]. (A) Schematic diagram of the virtual reality system for the insect; a behavior measurement device that can provide multisensory stimuli is represented on the left, and a virtual space constructed on a computer is represented on the right. (B) Wind and visual stimulus presentation conditions. The left and right sides of the table represent differences in the number of sensory stimuli presented. “◯”, “×”, and “•” indicate presented, not presented, and presented from a direction opposite to the actual direction, respectively. (C) The localization success rate for each stimulus condition. (D) Search time for each successful localization trial. (E) Comparison of movement speed between (Cond. i), which has the highest localization success rate in (C), (Cond. ii), which has the lowest localization success rate. The horizontal axis is the odor detection frequency. Identical letters indicate no significant difference. All the data are licensed under CC-BY.

The odor distribution in the virtual space was generated by recording the diffusion of odor (smoke) from the odor source in advance using airflow visualization technology and playing it back randomly in the virtual space. Smoke emitted from the odor source was diffused into the space at a frequency of 1 Hz (Duration: 0.2 s, Interval: 0.8 s) and a wind speed of approximately 0.6 m/s from behind the odor source. This allowed us to represent in the virtual space a phenomenon similar to the diffusion of odors in a real environment.
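As a small worked illustration of the release pattern stated above (0.2 s of emission followed by a 0.8 s pause, i.e., a 1 Hz cycle with a 20% duty cycle), the helper below reports whether the source is emitting at a given time. The function name and the choice that a cycle starts with emission at t = 0 are our assumptions.

```python
def source_is_emitting(t: float, duration: float = 0.2, interval: float = 0.8) -> bool:
    """True while the source releases smoke, assuming a cycle starts with emission at t = 0."""
    period = duration + interval  # 1.0 s, i.e. a release frequency of 1 Hz
    return (t % period) < duration
```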

The presentation timing of each sensory stimulus was linked to the environmental information detected by the agent (virtual insect) in the virtual space, as shown in Figure 4A. The behavioral changes induced by multisensory stimuli are reflected in the amount of movement of the agent in the virtual space. This closed-loop system enabled us to measure the relationship between multisensory stimuli and behavioral changes comprehensively. The multisensory stimuli were presented in the behavioral experiment as shown in Figure 4B. Figure 4B (left and right) shows the differences in the number of sensory stimuli presented, with the left condition presenting ≤2 sensory stimuli and the right condition presenting 3 sensory stimuli. In Figure 4B, “×” indicates that the sensory stimulus is not presented, “◯” indicates that the direction of the sensory stimulus detected by the agent in the virtual space is the same as the direction actually presented to the silkmoth, and “•” indicates that the direction of detection and the direction of stimulus presentation to the silkmoth are reversed. For example, the wind stimulus provided to the silk moth in Cond. ii was from the rear, even though the agent received wind from the front. An odor (a sex pheromone) is an essential sensory stimulus that excites localization behavior; therefore, it was provided under all conditions. The odor stimulus was provided to the left and right antennae of the silk moth according to the sensor responses on the left and right sides of the agent. The agent in the virtual space began its search from a position 300 mm downwind of the odor source. Localization was considered successful if the agent reached the odor source within the time limit. In this experiment, we set the time limit to 5 min, which was chosen to be long because it was unclear what kind of behavioral changes would occur when sensory information was manipulated.
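The three presentation modes for the wind stimulus can be written as a simple mapping from the direction detected by the agent to the direction delivered to the moth. This is a sketch for illustration only: the function name, the mode labels, and the use of degrees relative to the agent's heading are our assumptions rather than details from [67].

```python
from typing import Optional


def presented_wind_direction(agent_direction_deg: float, mode: str) -> Optional[float]:
    """Map the wind direction sensed by the virtual agent to the one presented to the moth.

    mode: "match" (◯), "none" (×), or "reversed" (•).
    Returns None when no wind stimulus is presented.
    """
    if mode == "none":
        return None
    if mode == "match":
        return agent_direction_deg % 360.0
    if mode == "reversed":
        # Present the wind from the opposite side, e.g. from the rear instead of the front.
        return (agent_direction_deg + 180.0) % 360.0
    raise ValueError(f"unknown mode: {mode}")
```

For instance, with 0° taken as wind from the front, the "reversed" mode yields 180°, which corresponds to the Cond. ii example in which the moth receives wind from the rear while the agent receives it from the front.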

Figure 4C,D show the localization success rate and search time, respectively. In the condition group in which ≤2 sensory stimuli were provided, the localization rate did not change significantly when the presentation condition of the sensory stimuli was changed. However, in the condition group with three sensory stimuli, the localization rate changed significantly depending on the presentation condition of the wind stimulus (Fisher’s exact test with Bonferroni correction, p < 0.05). Additionally, Figure 4D shows that the search time tended to be the shortest in the condition in which all three sensory stimuli were correctly provided to the silkmoth (Cond. i). This suggests that efficient odor source localization can be achieved by integrating the odor and wind information.
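For readers unfamiliar with the statistical procedure named above, the sketch below shows a generic two-sided Fisher's exact test on a 2×2 table of success and failure counts, followed by a Bonferroni adjustment. It is a textbook implementation using only the Python standard library; the software and the number of comparisons used in [67] are not stated here, so this should not be read as the authors' analysis code.

```python
from math import comb
from typing import List, Sequence


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided p-value for the 2x2 table [[a, b], [c, d]].

    Rows could be two stimulus conditions, columns success and failure counts.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x: int) -> float:
        # Hypergeometric probability of x successes in the first row.
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Sum all tables that are at most as probable as the observed one.
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-9))
    return min(1.0, p)


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

In use, one table would be built for each pair of conditions being compared, and the adjusted p-values would then be checked against the 0.05 threshold.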

They analyzed the relationship between the odor detection state and movement speed to investigate the behavioral change underlying the significant difference in localization success rates. They used the odor detection frequency as the odor detection state because recent studies on Drosophila have reported that its behavior is coordinated using temporal odor information [70]. Because the odor emitted from a source is carried by the air in masses called plumes, an intermittent signal is obtained when the odor is observed at a given location [71]. Insects may estimate the distance from the odor source from the strength of this intermittency [68]. The odor detection frequency was defined as the number of times a pheromone was received by the silkmoth per unit of time. Figure 4E plots the odor detection frequency on the horizontal axis against the translational movement speed on the vertical axis. The left side of Figure 4E shows the condition in which all sensory stimuli were provided from the correct direction (Cond. i), and the right side shows the condition in which the wind stimulus was presented from the opposite direction (Cond. ii). The dotted line in the figure is a least-squares approximation of the average values, computed as a piecewise linear function. In Cond. i, the translational speed increased until the odor detection frequency reached 0.7 Hz, after which it decreased. In contrast, in Cond. ii, the translational speed was always low, regardless of the odor detection frequency. This suggests that, by integrating odor and wind information, the moth actively moves in the direction of the odor source; however, when it may be moving in a different direction, it searches less actively until the correct information is obtained. The peak in translational velocity at 0.7 Hz in Figure 4E may indicate that the moth moves aggressively to approach the female quickly and then slows down above 0.7 Hz to locate the female accurately, because the frequency at which a female silkmoth emits sex pheromones is approximately 0.8 Hz [66].
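The two quantities used in this analysis can be illustrated with a short sketch. The source states only that the odor detection frequency is the number of pheromone receptions per unit time and that a least-squares approximation in piecewise linear form was applied; the two-segment search over candidate breakpoints below is therefore an assumed procedure, and all function names are hypothetical. The data points are synthetic, shaped to peak at 0.7 Hz solely to mirror the trend described for Cond. i; they are not measurements.

```python
from typing import List, Sequence, Tuple


def detection_frequency(onset_times_s: Sequence[float], window_s: float) -> float:
    """Number of pheromone detections per unit time (Hz) within a window."""
    return len(onset_times_s) / window_s


def fit_line(xs: Sequence[float], ys: Sequence[float]) -> Tuple[float, float, float]:
    """Ordinary least-squares line; returns (slope, intercept, squared error)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    sse = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    return slope, intercept, sse


def fit_two_segments(xs: List[float], ys: List[float]):
    """Try every interior point as the breakpoint shared by two line segments.

    Returns (breakpoint, (slope, intercept) left, (slope, intercept) right)
    for the split with the smallest total squared error.
    Assumes distinct x values and at least three points.
    """
    pts = sorted(zip(xs, ys))
    sx = [p[0] for p in pts]
    sy = [p[1] for p in pts]
    best = None
    for k in range(1, len(sx) - 1):
        left = fit_line(sx[:k + 1], sy[:k + 1])  # points up to the breakpoint
        right = fit_line(sx[k:], sy[k:])         # points from the breakpoint on
        total = left[2] + right[2]
        if best is None or total < best[0]:
            best = (total, sx[k], left[:2], right[:2])
    return best[1], best[2], best[3]


# Four detections in a 5 s window.
print(detection_frequency([0.2, 1.1, 2.5, 3.9], window_s=5.0))

# Synthetic averages: frequency (Hz) vs. translational speed (arbitrary units).
freq = [0.1, 0.3, 0.5, 0.7, 0.9, 1.1]
speed = [1.0, 3.0, 5.0, 7.0, 5.0, 3.0]
bp, left_fit, right_fit = fit_two_segments(freq, speed)
print(bp, left_fit[0] > 0, right_fit[0] < 0)
```

A rising segment followed by a falling one captures the “increase, then decrease” pattern with a single interpretable parameter, the breakpoint frequency.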

Using a virtual reality system for insects, it was found that the male silkmoth integrates odor and wind information during female localization and modulates its behavior to improve the localization success rate. However, this was a behavior-level investigation. Flying insects are known to estimate the wind direction after odor detection and to move upwind [72,73]. These results support the idea that the silkmoth, a walking insect, also modulates its behavior based on wind direction in addition to olfaction, although its strategy differs from that of flying insects.

In this section, we utilized VR to elicit the localization behavior of an insect and investigate the behavioral changes induced by multiple sensory inputs. It has been reported that the VR framework is effective for bee learning [74,75], just as VR can be used to train humans to drive automobiles or pilot airplanes. In insect experiments using VR, behavioral changes can be measured while multiple sensory stimuli are applied to a fixed insect, which makes it possible to combine behavioral measurement with physiological experiments, something that is difficult in free-walking experiments. Therefore, VR technology is a tool that can contribute to the future development of insect ethology because it yields data that are otherwise difficult to obtain.

5. Conclusions and Future Direction

In this review, we discussed research that has investigated the principles underlying the elicitation of adaptive behavior in insects using biological analysis methods and introduced methods for intervening in the insect’s sensory-motor system using a framework of engineering tools. The sensory-motor intervention system is non-invasive and can be set up under various conditions, enabling us to observe phenomena and obtain data that are inaccessible to conventional biological analysis methods. The experiments introduced here were conducted in the laboratory or in a virtual environment, and we have not yet been able to measure the adaptive behavior of insects under more natural conditions, such as outdoors, where the odor distribution is more realistic. To address this issue, one could consider using our proposed “Animal-in-the-Loop” system [76,77]. In addition, because both sensory-motor intervention systems introduced here have been analyzed only at the behavioral level, we have been unable to explore how the insect nervous system modulates behavior in response to sensory and motor interventions. It is essential to add calcium imaging or physiological response measurements to the sensory-motor intervention system to measure the internal state of the insect and to identify the behavioral compensators that support adaptive behavior in insects. Moreover, future directions for the intervention system discussed in this review involve its integration with technology for controlling a robot with the insect brain [78] or for governing the insect by electrical stimulation [79,80,81]. This integration aims not only to monitor behavior but also to record the activity of the central nervous system, unraveling the principles of adaptive behavior in relation to the environment, the central nervous system, and the body. In this sense, combining the insect on-board system and VR for insects introduced here with calcium imaging [82,83,84] and electrophysiology [85,86] is another line of research needed to deepen our understanding of adaptive behavior.

Author Contributions

Conceptualization, S.S. and N.A.; methodology, S.S. and N.A.; writing—original draft preparation, S.S. and N.A.; writing—review and editing, S.S. and N.A.; visualization, S.S. and N.A.; supervision, S.S. and N.A.; project administration, S.S. and N.A.; funding acquisition, S.S. and N.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by JST PRESTO JPMJPR22S7 and JSPS KAKENHI under grant JP22H05657.

Acknowledgments

Significant contributions to data measurement and analysis were made by the Hosoda Laboratory at Osaka University and Hirono Ohashi at Tokyo University of Agriculture. We acknowledge Ryohei Kanzaki (University of Tokyo), Daisuke Kurabayashi (Tokyo Institute of Technology), Takeshi Sakurai (Tokyo University of Agriculture), and Koh Hosoda (Kyoto University) for their valuable discussions. Our sincere gratitude is extended to them.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- IFR Presents World Robotics 2021 Reports. Available online: https://ifr.org/ifr-press-releases/news/robot-sales-rise-again (accessed on 16 December 2023).

- Ermolov, I. Industrial Robotics Review. In Robotics: Industry 4.0 Issues & New Intelligent Control Paradigms. Studies in Systems, Decision and Control; Kravets, A., Ed.; Springer Nature: Cham, Switzerland, 2020; Volume 272.

- Liu, Z.; Quan, L.; Wenjun, X.; Lihui, W.; Zude, Z. Robot learning towards smart robotic manufacturing: A review. Robot. Comput.-Integr. Manuf. 2022, 77, 102360.

- Robla-Gómez, S.; Becerra, V.M.; Llata, J.R.; Gonzalez-Sarabia, E.; Torre-Ferrero, C.; Perez-Oria, J. Working together: A review on safe human–robot collaboration in industrial environments. IEEE Access 2017, 5, 26754–26773.

- Boada, J.P.; Maestre, B.R.; Genís, C.T. The ethical issues of social assistive robotics: A critical literature review. Technol. Soc. 2021, 67, 101726.

- Calo, M.R.; Froomkin, M.; Kerr, I.R. Robot Law; Edward Elgar Publishing: Northampton, MA, USA, 2016.

- Rajan, K.; Saffiotti, A. Towards a science of integrated AI and Robotics. Artif. Intell. 2017, 247, 1–9.

- Kitano, H.; Tambe, M.; Stone, P.; Veloso, M.; Coradeschi, S.; Osawa, E.; Hitoshi, M.; Itsuki, N.; Asada, M. The RoboCup synthetic agent challenge 97. In RoboCup-97: Robot Soccer World Cup I; Springer: Berlin/Heidelberg, Germany, 1998; Volume 1, pp. 62–73.

- Buehler, M.; Iagnemma, K.; Singh, S. The DARPA Urban Challenge: Autonomous Vehicles in City Traffic; Springer: Berlin/Heidelberg, Germany, 2009; Volume 56.

- Krotkov, E.; Hackett, D.; Jackel, L.; Perschbacher, M.; Pippine, J.; Strauss, J.; Pratt, G.; Orlowski, C. The DARPA robotics challenge finals: Results and perspectives. In The DARPA Robotics Challenge Finals: Humanoid Robots to the Rescue; Springer Nature: Cham, Switzerland, 2018; pp. 1–26.

- Rubio, F.; Valero, F.; Llopis-Albert, C. A review of mobile robots: Concepts, methods, theoretical framework, and applications. Int. J. Adv. Robot. Syst. 2019, 16, 1729881419839596.

- Theis, T.N.; Wong, H.S.P. The end of Moore’s law: A new beginning for information technology. Comput. Sci. Eng. 2017, 19, 41–50.

- Ahmed, F.; Waqas, M.; Jawed, B.; Soomro, A.M.; Kumar, S.; Hina, A.; Umair, K.; Kim, K.H.; Choi, K.H. Decade of bio-inspired soft robots: A review. Smart Mater. Struct. 2022, 31, 073002.

- Webster-Wood, V.A.; Akkus, O.; Gurkan, U.A.; Chiel, H.J.; Quinn, R.D. Organismal engineering: Toward a robotic taxonomic key for devices using organic materials. Sci. Robot. 2017, 2, eaap9281.

- Prescott, T.J.; Wilson, S.P. Understanding brain functional architecture through robotics. Sci. Robot. 2023, 8, eadg6014.

- Owaki, D.; Ishiguro, A. A quadruped robot exhibiting spontaneous gait transitions from walking to trotting to galloping. Sci. Rep. 2017, 7, 277.

- Shigaki, S.; Yamada, M.; Kurabayashi, D.; Hosoda, K. Robust moth-inspired algorithm for odor source localization using multimodal information. Sensors 2023, 23, 1475.

- Siljak, H.; Nardelli, P.H.; Moioli, R.C. Cyborg Insects: Bug or a Feature? IEEE Access 2022, 10, 49398–49411.

- Alivisatos, A.P.; Chun, M.; Church, G.M.; Greenspan, R.J.; Roukes, M.L.; Yuste, R. The brain activity map project and the challenge of functional connectomics. Neuron 2012, 74, 970–974.

- Stork, N.E. How many species of insects and other terrestrial arthropods are there on Earth? Annu. Rev. Entomol. 2018, 63, 31–45.

- Pfeifer, R.; Scheier, C. Understanding Intelligence; MIT Press: Cambridge, MA, USA, 2001.

- Wystrach, A. Movements, embodiment and the emergence of decisions. Insights from insect navigation. Biochem. Biophys. Res. Commun. 2021, 564, 70–77.

- David, I.; Ayali, A. From Motor-Output to Connectivity: An In-Depth Study of in vitro Rhythmic Patterns in the Cockroach Periplaneta americana. Front. Insect Sci. 2021, 1, 655933.

- Roth, E.; Hall, R.W.; Daniel, T.L.; Sponberg, S. Integration of parallel mechanosensory and visual pathways resolved through sensory conflict. Proc. Natl. Acad. Sci. USA 2016, 113, 12832–12837.

- Hughes, G.M. The co-ordination of insect movements: III. Swimming in Dytiscus, Hydrophilus and a dragonfly nymph. J. Exp. Biol. 1958, 35, 567–583.

- Heinze, S. Unraveling the neural basis of insect navigation. Curr. Opin. Insect Sci. 2017, 24, 58–67.

- Weitekamp, C.A.; Libbrecht, R.; Keller, L. Genetics and evolution of social behavior in insects. Annu. Rev. Genet. 2017, 51, 219–239.

- Sakura, M.; Okada, R.; Aonuma, H. Evidence for instantaneous e-vector detection in the honeybee using an associative learning paradigm. Proc. R. Soc. B 2012, 279, 535–542.

- Römer, H. Directional hearing in insects: Biophysical, physiological and ecological challenges. J. Exp. Biol. 2020, 223, jeb203224.

- Merriaux, P.; Dupuis, Y.; Boutteau, R.; Vasseur, P.; Savatier, X. Robust robot localization in a complex oil and gas industrial environment. J. Field Robot. 2018, 35, 213–230.

- Li, W.; Farrell, J.A.; Pang, S.; Arrieta, R.M. Moth-inspired chemical plume tracing on an autonomous underwater vehicle. IEEE Trans. Robot. 2006, 22, 292–307.

- Russell, R.A.; Bab-Hadiashar, A.; Shepherd, R.L.; Wallace, G.G. A comparison of reactive robot chemotaxis algorithms. Robot. Auton. Syst. 2003, 45, 83–97.

- Renou, M. Pheromones and general odor perception in insects. Neurobiol. Chem. Commun. 2014, 1, 23–56.

- Kaissling, K.E. Pheromone-controlled anemotaxis in moths. In Orientation and Communication in Arthropods; Birkhäuser: Basel, Switzerland, 1997; pp. 343–374.

- Willis, M.A.; Avondet, J.L. Odor-modulated orientation in walking male cockroaches Periplaneta americana, and the effects of odor plumes of different structure. J. Exp. Biol. 2005, 208, 721–735.

- Wyatt, T.D. Pheromones and Animal Behavior: Chemical Signals and Signatures; Cambridge University Press: Cambridge, UK, 2014.

- Shiota, Y.; Sakurai, T.; Ando, N.; Haupt, S.S.; Mitsuno, H.; Daimon, T.; Kanzaki, R. Pheromone binding protein is involved in temporal olfactory resolution in the silkmoth. iScience 2021, 24, 103334.

- Mizunami, M.; Yokohari, F.; Takahata, M. Exploration into the adaptive design of the arthropod “microbrain”. Zool. Sci. 1999, 16, 703–709.

- Asama, H. Mobiligence: Emergence of adaptive motor function through interaction among the body, brain and environment. Environment 2007, 1, 3.

- Ando, N.; Kanzaki, R. Insect-machine hybrid robot. Curr. Opin. Insect Sci. 2020, 42, 61–69.

- Naik, H.; Bastien, R.; Navab, N.; Couzin, I.D. Animals in Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2020, 26, 2073–2083.

- Kuwana, Y.; Nagasawa, S.; Shimoyama, I.; Kanzaki, R. Synthesis of the pheromone-oriented behaviour of silkworm moths by a mobile robot with moth antennae as pheromone sensors. Biosens. Bioelectron. 1999, 14, 195–202.

- Yamada, N.; Ohashi, H.; Umedachi, T.; Shimizu, M.; Hosoda, K.; Shigaki, S. Dynamic Model Identification for Insect Electroantennogram with Printed Electrode. Sens. Mater. 2021, 33, 4173–4184.

- Martinez, D.; Chaffiol, A.; Voges, N.; Gu, Y.; Anton, S.; Rospars, J.P.; Lucas, P. Multiphasic on/off pheromone signalling in moths as neural correlates of a search strategy. PLoS ONE 2013, 8, e61220.

- Borst, A. Fly visual course control: Behaviour, algorithms and circuits. Nat. Rev. Neurosci. 2014, 15, 590–599.

- Egelhaaf, M.; Kern, R.; Lindemann, J.P. Motion as a source of environmental information: A fresh view on biological motion computation by insect brains. Front. Neural Circuits 2014, 8, 127.

- Ejaz, N.; Krapp, H.G.; Tanaka, R.J. Closed-loop response properties of a visual interneuron involved in fly optomotor control. Front. Neural Circuits 2013, 7, 50.

- Huang, J.V.; Wei, Y.; Krapp, H.G. A biohybrid fly-robot interface system that performs active collision avoidance. Bioinspir. Biomim. 2019, 14, 065001.

- Wittlinger, M.; Wehner, R.; Wolf, H. The desert ant odometer: A stride integrator that accounts for stride length and walking speed. J. Exp. Biol. 2007, 210, 198–207.

- Hertz, G. Cockroach Controlled Robot, Version 1. New York Times, 7 June 2005.

- Wessnitzer, J.; Asthenidis, A.; Petrou, G.; Webb, B. A cricket controlled robot orienting towards a sound source. In Proceedings of the Conference towards Autonomous Robotic Systems, Sheffield, UK, 31 August–2 September 2011; pp. 1–12.

- Emoto, S.; Ando, N.; Takahashi, H.; Kanzaki, R. Insect-controlled robot–evaluation of adaptation ability–. J. Robot. Mechatron. 2007, 19, 436–443.

- Ando, N.; Emoto, S.; Kanzaki, R. Odour-tracking capability of a silkmoth driving a mobile robot with turning bias and time delay. Bioinspir. Biomim. 2013, 8, 016008.

- Shirai, K.; Shimamura, K.; Koubara, A.; Shigaki, S.; Fujisawa, R. Development of a behavioral trajectory measurement system (Bucket-ANTAM) for organisms moving in a two-dimensional plane. Artif. Life Robot. 2022, 27, 698–705.

- Kaushik, P.K.; Renz, M.; Olsson, S.B. Characterizing long-range search behavior in Diptera using complex 3D virtual environments. Proc. Natl. Acad. Sci. USA 2020, 117, 12201–12207.

- Ando, N.; Shidara, H.; Hommaru, N.; Ogawa, H. Auditory Virtual Reality for Insect Phonotaxis. J. Robot. Mechatron. 2021, 33, 494–504.

- Radvansky, B.A.; Dombeck, D.A. An olfactory virtual reality system for mice. Nat. Commun. 2018, 9, 839.

- Dahmen, H.J. A simple apparatus to investigate the orientation of walking insects. Experientia 1980, 36, 685–687.

- Obara, Y. Bombyx mori Mating dance: An essential in locating the female. Appl. Entomol. Zool. 1979, 14, 130–132.

- Takasaki, T.; Namiki, S.; Kanzaki, R. Use of bilateral information to determine the walking direction during orientation to a pheromone source in the silkmoth Bombyx mori. J. Comp. Physiol. A 2012, 198, 295–307.

- Ando, N.; Kanzaki, R. A simple behaviour provides accuracy and flexibility in odour plume tracking–the robotic control of sensory-motor coupling in silkmoths. J. Exp. Biol. 2015, 218, 3845–3854.

- Shigaki, S.; Ando, N.; Sakurai, T.; Kurabayashi, D. Analysis of Odor Tracking Performance of Silk moth using a Sensory-Motor Intervention System. Integr. Comp. Biol. 2023, 63, icad055.

- Crapse, T.B.; Sommer, M.A. Corollary discharge across the animal kingdom. Nat. Rev. Neurosci. 2008, 9, 587–600.

- Poulet, J.F.; Hedwig, B. New insights into corollary discharges mediated by identified neural pathways. Trends Neurosci. 2007, 30, 14–21.

- Gatellier, L.; Nagao, T.; Kanzaki, R. Serotonin modifies the sensitivity of the male silkmoth to pheromone. J. Exp. Biol. 2004, 207, 2487–2496.

- Fujiwara, T.; Kazawa, T.; Sakurai, T.; Fukushima, R.; Uchino, K.; Yamagata, T.; Namiki, S.; Haupt, S.S.; Kanzaki, R. Odorant concentration differentiator for intermittent olfactory signals. J. Neurosci. 2014, 34, 16581–16593.

- Yamada, M.; Ohashi, H.; Hosoda, K.; Kurabayashi, D.; Shigaki, S. Multisensory-motor integration in olfactory navigation of silkmoth, Bombyx mori, using virtual reality system. eLife 2021, 10, e72001.

- Shigaki, S.; Shiota, Y.; Kurabayashi, D.; Kanzaki, R. Modeling of the adaptive chemical plume tracing algorithm of an insect using fuzzy inference. IEEE Trans. Fuzzy Syst. 2019, 28, 72–84.

- Pansopha, P.; Ando, N.; Kanzaki, R. Dynamic use of optic flow during pheromone tracking by the male silkmoth, Bombyx mori. J. Exp. Biol. 2014, 217, 1811–1820.

- Demir, M.; Kadakia, N.; Anderson, H.D.; Clark, D.A.; Emonet, T. Walking Drosophila navigate complex plumes using stochastic decisions biased by the timing of odor encounters. eLife 2020, 9, e57524.

- Murlis, J.; Elkinton, J.S.; Carde, R.T. Odor plumes and how insects use them. Annu. Rev. Entomol. 1992, 37, 505–532.

- Vickers, N.J.; Baker, T.C. Reiterative responses to single strands of odor promote sustained upwind flight and odor source location by moths. Proc. Natl. Acad. Sci. USA 1994, 91, 5756–5760.

- Rutkowski, A.J.; Quinn, R.D.; Willis, M.A. Three-dimensional characterization of the wind-borne pheromone tracking behavior of male hawkmoths, Manduca sexta. J. Comp. Physiol. A 2009, 195, 39–54.

- Lafon, G.; Howard, S.R.; Paffhausen, B.H.; Avarguès-Weber, A.; Giurfa, M. Motion cues from the background influence associative color learning of honey bees in a virtual-reality scenario. Sci. Rep. 2021, 11, 21127.

- Geng, H.; Lafon, G.; Avarguès-Weber, A.; Buatois, A.; Massou, I.; Giurfa, M. Visual learning in a virtual reality environment upregulates immediate early gene expression in the mushroom bodies of honey bees. Commun. Biol. 2022, 5, 130.

- Shigaki, S.; Fikri, M.R.; Hernandez Reyes, C.; Sakurai, T.; Ando, N.; Kurabayashi, D.; Kanzaki, R.; Sezutsu, H. Animal-in-the-loop system to investigate adaptive behavior. Adv. Robot. 2018, 32, 945–953.

- Shigaki, S.; Minakawa, N.; Yamada, M.; Ohashi, H.; Kurabayashi, D.; Hosoda, K. Animal-in-the-loop System with Multimodal Virtual Reality to Elicit Natural Olfactory Localization Behavior. Sens. Mater. 2021, 33, 4211–4228.

- Minegishi, R.; Takashima, A.; Kurabayashi, D.; Kanzaki, R. Construction of a brain–machine hybrid system to evaluate adaptability of an insect. Robot. Auton. Syst. 2012, 60, 692–699.

- Sponberg, S.; Daniel, T.L. Abdicating power for control: A precision timing strategy to modulate function of flight power muscles. Proc. R. Soc. B Biol. Sci. 2012, 279, 3958–3966.

- Cao, F.; Zhang, C.; Choo, H.Y.; Sato, H. Insect–computer hybrid legged robot with user-adjustable speed, step length and walking gait. J. R. Soc. Interface 2016, 13, 20160060.

- Owaki, D.; Dürr, V.; Schmitz, J. A hierarchical model for external electrical control of an insect, accounting for inter-individual variation of muscle force properties. eLife 2023, 12, e85275.

- Carlsson, M.A.; Hansson, B.S. Dose–response characteristics of glomerular activity in the moth antennal lobe. Chem. Senses 2003, 28, 269–278.

- Carlsson, M.A.; Chong, K.Y.; Daniels, W.; Hansson, B.S.; Pearce, T.C. Component information is preserved in glomerular responses to binary odor mixtures in the moth Spodoptera littoralis. Chem. Senses 2007, 32, 433–443.

- Seelig, J.D.; Jayaraman, V. Feature detection and orientation tuning in the Drosophila central complex. Nature 2013, 503, 262–266.

- Mizunami, M.; Okada, R.; Li, Y.; Strausfeld, N.J. Mushroom bodies of the cockroach: Activity and identities of neurons recorded in freely moving animals. J. Comp. Neurol. 1998, 402, 501–519.

- Martin, J.P.; Guo, P.; Mu, L.; Harley, C.M.; Ritzmann, R.E. Central-complex control of movement in the freely walking cockroach. Curr. Biol. 2015, 25, 2795–2803.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).