1. Introduction

Nuclear facilities, like fission reactors and, in the future, fusion reactors, are extremely complex systems from both a physical and a technological point of view. In fusion reactors, for example, many components are the first of their kind. The widespread use of fusion reactors in the future will be possible if these technologies reach maturity in terms of industrial production; at the same time, the construction of the first fusion reactors will help to achieve this maturity. Currently, however, the behavior of these components is characterized by intrinsic uncertainties. During a transient, these uncertainties propagate from the single components to the overall system behavior, possibly leading to serious damage to the reactor. For this reason, it is important, from the design phase onward, to estimate the impact of the uncertainty of the model input data on the final results of the simulations. This will allow us to set proper thresholds on the uncertainties requested from the suppliers of the different components, in order to avoid damage to the reactor. The reference option for non-intrusive (i.e., not requiring modifications of the model) uncertainty propagation (UP) analysis is the Monte Carlo (MC) method. This approach is based on the definition and random sampling of a set of uncertain inputs; deterministic calculations are then performed with these inputs, and the results are aggregated to obtain statistical information about the output.

However, in view of the computational time required to perform each single simulation with complex high-fidelity codes, the use of this method is often not feasible due to the very large number of simulations required to reach statistical convergence. To face this problem, several methods have been proposed in the literature. One option is to reduce the number of simulations for the uncertainty quantification by approximating the input distribution rather than the model, as in the Unscented Transform (UT) technique [1,2]. Another possibility is to build a surrogate model and use it instead of the real model to perform the uncertainty quantification, as in Gaussian process modeling [3] and Polynomial Chaos Expansion (PCE) [4,5]. The main advantage of this alternative is that the surrogate model is orders of magnitude faster than the corresponding real model.

The PCE has been employed in this work, using the Python (version 3.10) module chaospy (version 4.3.12) [6], to analyze the uncertainty propagation during a Fast current Discharge (FD) in the Toroidal Field (TF) magnet system of the Divertor Tokamak Test (DTT) facility. The geometry of a single DTT TF coil is shown in Figure 1.

The uncertainty stems from the parameters of the characteristic of the Fast Discharge Unit (FDU) varistor, which influence the evolution of the coil current during the FD. This has both electrical consequences (e.g., changes in the coil peak voltage and in the energy deposited in the FDU) and electromagnetic (EM) ones: varying the evolution of the coil currents means varying the time derivative of the magnetic field, which modifies the eddy currents induced within the TF coil casing and, as a consequence, the Joule power deposited there. In this work, the uncertainties of these results have been assessed using PCE. The electrical results have been obtained with an object-oriented model developed using the Modelica language in the open-source environment OpenModelica [7]. The uncertainty obtained with PCE has been benchmarked against the MC method, since the required computational time was still reasonable. The EM results, on the other hand, have been computed with 3D-FOX [8]. In this case, benchmarking the PCE outcomes against MC was impossible due to the excessive computational time required by the EM simulations; for this reason, a benchmark of the PCE results against the UT method is proposed.

Finally, from the statistical distribution of the EM results, two worst-case scenarios have been identified and used as input to the thermal–hydraulic (TH) model, developed with the 4C code [9]. A fast discharge triggered by a quench initiated at the minimum temperature margin location in the coil has been analyzed. These analyses demonstrate that the uncertainty on the input data (varistor parameters) leads to a wide range of possible accidental transients, which must be carefully considered so as not to overlook potentially dangerous scenarios for machine integrity.

It is important to point out that the methodology presented here for the DTT TF FD is general and, in principle, applicable to any kind of fault transient in superconducting magnets, involving any physical aspect of interest. Moreover, compared with the MC method, PCE strongly reduces the computational time required by the UP analysis, making this type of analysis much faster and applicable to a larger number of scenarios.

2. Methodology

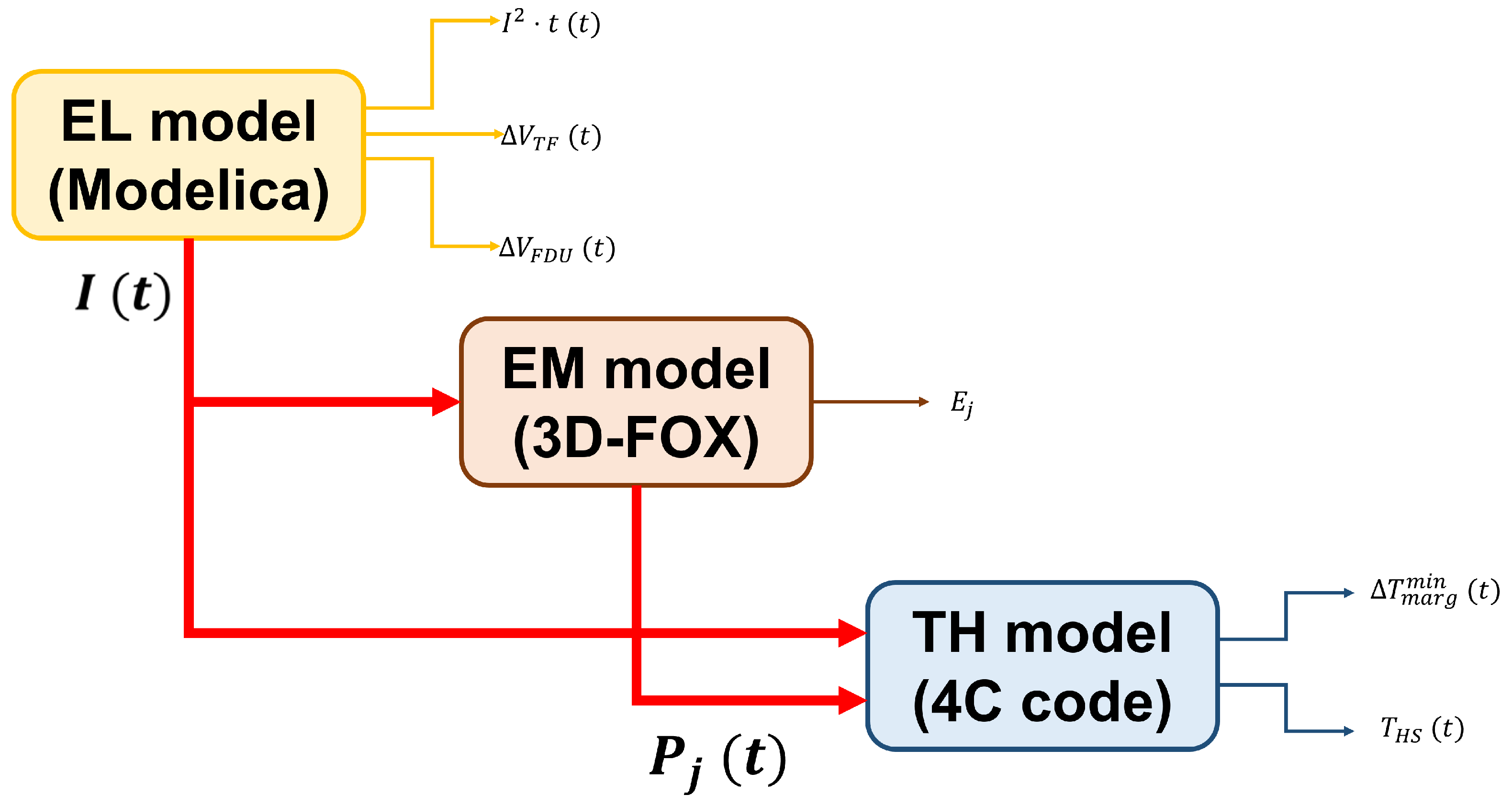

The analysis of the TF FD transient described in this work can be divided into three consecutive sub-blocks, corresponding to the three physical aspects considered:

Electrical (EL) simulation of the magnet power supply system;

Electromagnetic (EM) modeling of the TF coil casing to evaluate the Joule power generated by eddy currents induced in it during the transient;

Thermal–hydraulic (TH) analysis of the magnet to assess the effect of the Joule power deposition in the casing and AC losses in the superconducting (SC) cables on the coil performance.

The logical connections between the three physical aspects analyzed in the sub-blocks, as well as their main outcomes, are schematically represented in Figure 2.

2.1. Electrical Model

The power supply circuit of the DTT TF coils and its FDUs have been simulated by means of an object-oriented model developed in OpenModelica [10]. The structure of the electrical circuit is taken from [11]: the eighteen TF coils are split into three groups of six coils each, connected in series, and the three groups are, in turn, connected in series with one another and with the three FDUs, alternating one FDU and one group of coils. Each TF coil is modeled considering its self inductance and its mutual inductances with the other TF coils, based on the inductance matrix of the DTT TF system [12], by solving the following equation:

$$V_i = \sum_{j=1}^{N} L_{ij}\,\frac{\mathrm{d}I_j}{\mathrm{d}t}, \qquad i = 1, \dots, N \tag{1}$$

where $V_i$ and $I_i$ are the voltage across and the current in the i-th coil, L is the inductance matrix, and N is the number of TF coils in the tokamak (18 in DTT). The inductance matrix L is square, with the coil self inductances on the main diagonal and the mutual inductances off the main diagonal.
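Because all the coils in the loop are connected in series and carry the same current, the circuit equation collapses to a single equivalent inductance equal to the sum of all the entries of L, and the stored magnetic energy is ½ IᵀLI. A short NumPy sketch with a hypothetical 3 × 3 matrix (illustrative values, not the DTT matrix of [12]):

```python
import numpy as np


def equivalent_inductance(L):
    """Equivalent inductance of coils connected in series.

    All coils carry the same current I, so summing the circuit equation over
    i gives V_total = (sum_ij L_ij) dI/dt: L_eq is the sum of all entries.
    """
    L = np.asarray(L, dtype=float)
    if L.ndim != 2 or L.shape[0] != L.shape[1]:
        raise ValueError("the inductance matrix must be square")
    if not np.allclose(L, L.T):
        raise ValueError("the inductance matrix must be symmetric")
    return float(L.sum())


def stored_energy(L, currents):
    """Magnetic energy 0.5 * I^T L I for a vector of coil currents."""
    I = np.asarray(currents, dtype=float)
    return 0.5 * float(I @ np.asarray(L, dtype=float) @ I)


# Hypothetical 3-coil matrix in henry: self inductances on the diagonal,
# mutual inductances off the diagonal (illustrative values only).
L_demo = np.array([[1.0, 0.2, 0.1],
                   [0.2, 1.0, 0.2],
                   [0.1, 0.2, 1.0]])
I0 = 100.0  # hypothetical common current, A
print(equivalent_inductance(L_demo), stored_energy(L_demo, np.full(3, I0)))
```

With equal currents the two energy expressions coincide, which is a quick consistency check on any inductance matrix used in a lumped model.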

The FDU is modeled as a dumper in parallel with an ideal, normally closed switch; when the fast discharge is triggered, the switch opens, forcing the current to flow through the dumper. Meanwhile, the power supply, modeled as an ideal current generator taken from the Modelica Standard Library [7] that imposes the DTT nominal current [13], is disconnected from the circuit, so that the current in the loop decreases. The FDU dumper is composed of a collection of varistor disks [12], modeled here as an equivalent single disk whose characteristic is described by the following equation:

$$V = K\,I^{\beta} \tag{2}$$

where V is the voltage across the varistor and I is the current flowing through it.

The two parameters describing the varistor behavior (K and β) are affected by epistemic uncertainty (i.e., their values are not precisely known because of the fabrication processes). According to the varistor producer, they can be assumed to be uniformly distributed in the following ranges [12]:

and have been considered here as statistically independent.

The two varistor parameters directly affect the evolution of the transport current, which in turn influences the eddy currents and the Joule power generated by the quench, and thus the quench propagation and the hot-spot temperature. As a result, the very large uncertainty on these input parameters has a non-negligible impact on the transient evolution. When trying to numerically predict the behavior of the TF coil system during an FD, the uncertainty of these parameters propagates through all the model sub-blocks, which calls for an uncertainty propagation analysis.
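How K and β shape the current evolution can be illustrated with a lumped sketch: a single equivalent inductance L_eq (the series loop collapsed to one inductance, with no coupling to the casing) discharging into a varistor with an assumed power-law characteristic V = K I^β, so that L_eq dI/dt = -K I^β. For β < 1 the quantity I^(1-β) decreases linearly in time, which gives a closed-form check of the explicit-Euler integration, and the energy dissipated in the varistor should approach ½ L_eq I0². All numerical values below are hypothetical, not DTT data.

```python
import numpy as np


def discharge_current(L_eq, I0, K, beta, t_end, n_steps=200_000):
    """Explicit-Euler integration of L_eq * dI/dt = -K * I**beta.

    Lumped, illustrative model: one equivalent inductance discharging
    into a varistor with the assumed characteristic V = K * I**beta.
    """
    t = np.linspace(0.0, t_end, n_steps + 1)
    dt = t[1] - t[0]
    I = np.empty_like(t)
    I[0] = I0
    for k in range(n_steps):
        # The varistor voltage opposes the current; clip at zero because
        # for beta < 1 the current vanishes in a finite time.
        I[k + 1] = max(I[k] - dt * K * I[k] ** beta / L_eq, 0.0)
    return t, I


def time_to_fraction(t, I, fraction):
    """First time at which I drops to `fraction` of its initial value."""
    return t[np.argmax(I <= fraction * I[0])]


def analytic_time_to_fraction(L_eq, I0, K, beta, fraction):
    """Closed-form solution: I**(1 - beta) decreases linearly in time."""
    return L_eq * (I0 ** (1 - beta) - (fraction * I0) ** (1 - beta)) / ((1 - beta) * K)


# Hypothetical values, NOT the DTT parameters
L_eq, I0, K, beta = 1.0, 1000.0, 500.0, 0.3
t, I = discharge_current(L_eq, I0, K, beta, t_end=0.5)
dt = t[1] - t[0]
t10_num = time_to_fraction(t, I, 0.1)
t10_ref = analytic_time_to_fraction(L_eq, I0, K, beta, 0.1)
# Energy dissipated in the varistor: integral of V * I dt (left Riemann sum)
E_varistor = float(np.sum(K * I[:-1] ** (beta + 1)) * dt)
print(f"t_10% numeric = {t10_num:.4f} s, analytic = {t10_ref:.4f} s")
print(f"E_varistor = {E_varistor:.0f} J vs 0.5*L*I0^2 = {0.5 * L_eq * I0**2:.0f} J")
```

Even in this toy loop, changing K or β by a few tens of percent shifts the discharge time noticeably, which is the sensitivity the full EL model quantifies.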

2.2. Electromagnetic Model

The EM model for the evaluation of the eddy currents induced in the coil casing during the FD is developed within 3D-FOX, a finite element electromagnetic code developed at Politecnico di Torino [8]. The model solves Faraday's and Ampère's laws using the A-formulation. The eddy current distribution is then used to evaluate the power distribution in the TF coil casing by means of Ohm's law. The Joule power deposition is suitably averaged before being provided as input to the TH model, as explained in [8].

The simulation setup is the same as presented in [8], with the addition of cyclic periodic boundary conditions to the single TF coil model; these exploit the periodicity of the tokamak to simulate the effect of the simultaneous discharge of all the TF coils.

2.3. Thermal–Hydraulic Model

The model of the DTT TF coils developed within the 4C code [9], presented in [14] and used for the simulation of several transients, is adopted for the TH analysis. The TH simulation setup is taken from [15], while the logical connection between the EM module (3D-FOX) and the TH one (4C code) is reported in detail in [8]. The 4C code has been validated several times in recent years, both interpretatively [16,17,18,19] and predictively [20,21].

2.4. Uncertainty Propagation Analysis

One of the aims of uncertainty propagation is to evaluate the impact of the input uncertainties on the outputs of a generic model and to assess how they affect the performance of the system represented by the computational model. UP is usually a demanding activity, requiring a large number of expensive simulations. At the same time, UP is mandatory, since uncertainties can have a dramatic impact on the performance of nuclear systems. In fact, one of the most recent methodologies for the licensing of nuclear systems is the so-called Best Estimate Plus Uncertainty method [22,23], in which the output is provided together with its uncertainty. This approach has been progressively replacing the more traditional conservative approach.

To reduce the computational burden of the UP, several methods have been proposed in the literature. In this work, the Polynomial Chaos Expansion has been used, together with the Unscented Transform for verification purposes, benchmarking the two methods. Only in the case of the EL model has the Monte Carlo approach also been used as a further reference benchmark thanks to the sufficiently short computational time of the detailed model.

2.4.1. Polynomial Chaos Expansion

The Polynomial Chaos Expansion approximates a stochastic model $Y = \mathcal{M}(\mathbf{X})$, whose stochastic nature comes from the uncertainty of the inputs $\mathbf{X}$, through a series of orthogonal polynomials. The random vector $\mathbf{X}$ is described by a joint probability distribution which, in the case of independent variables (as in this work), is simply the product of the marginal probability density functions of the single inputs. The model $\mathcal{M}$ can then be expressed as an expansion of orthogonal polynomials $\Psi_i$ selected according to the joint distribution of the input:

$$Y = \mathcal{M}(\mathbf{X}) = \sum_{i=0}^{\infty} c_i\,\Psi_i(\mathbf{X}) \approx \sum_{i=0}^{C-1} c_i\,\Psi_i(\mathbf{X}) \tag{5}$$

where $c_i$ denotes the coefficients of the multivariate orthogonal polynomials. The infinite sum in Equation (5) must be truncated at the first C terms for practical reasons. The total number of terms C in the polynomial expansion depends on the polynomial order p and on the dimension of the input d and, according to the total degree truncation scheme, is given by:

$$C = \frac{(p+d)!}{p!\,d!} \tag{6}$$
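Equation (6) and the total-degree truncation can be checked with the standard library alone: the sketch below enumerates the multi-indices α with |α| ≤ p, counts them, and compares the count with the size of a full tensor Gauss grid with p + 1 nodes per input, which is the number of exact-model runs that a pseudo-spectral projection on such a grid requires. The function names are illustrative.

```python
from itertools import product
from math import comb


def n_terms_total_degree(p: int, d: int) -> int:
    """C = (p + d)! / (p! d!), the size of a total-degree PCE basis."""
    return comb(p + d, d)


def total_degree_multi_indices(p: int, d: int) -> list[tuple[int, ...]]:
    """All multi-indices alpha with alpha_1 + ... + alpha_d <= p."""
    return [a for a in product(range(p + 1), repeat=d) if sum(a) <= p]


p, d = 3, 2  # polynomial order and input dimension used for the EL model
alphas = total_degree_multi_indices(p, d)
print(n_terms_total_degree(p, d), len(alphas))  # -> 10 10
print((p + 1) ** d)  # nodes of a full tensor Gauss grid -> 16
```

The gap between the two numbers (16 runs for 10 coefficients) grows quickly with d, which is why the input dimension matters so much for quadrature-based PCE.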

There are several techniques for the evaluation of the polynomial coefficients, which are the unknowns of the PCE method. Some of these techniques are intrusive, requiring the modification of the model equations (e.g., the stochastic Galerkin method [24]); others are non-intrusive, treating the computational model as a black box (e.g., pseudo-spectral projection, point collocation [25], and stochastic testing [26]). In this work, the pseudo-spectral projection method implemented in chaospy has been used.

In the pseudo-spectral projection method, each coefficient of Equation (5) is computed through a quadrature integration scheme as follows:

$$c_i = \frac{1}{\mathbb{E}\left[\Psi_i^2\right]} \sum_{k=1}^{N_q} w_k\, \mathcal{M}(\mathbf{x}_k)\, \Psi_i(\mathbf{x}_k) \tag{7}$$

where $\mathbf{x}_k$ is the vector of quadrature nodes generated according to the chosen quadrature rule, $w_k$ denotes the corresponding weights, $N_q$ is the number of nodes, and $\mathcal{M}(\mathbf{x}_k)$ denotes the exact model evaluation at node $\mathbf{x}_k$. Thus, knowing the values of $\mathcal{M}(\mathbf{x}_k)$ for all the nodes and the appropriate expansion of orthogonal polynomials, it is possible to compute the coefficients $c_i$ and build a surrogate model of $\mathcal{M}$ according to Equation (5). Hermite and Legendre polynomials are typically used as orthogonal polynomials for Gaussian and uniform distributions, respectively; see [5] for a more detailed description of the relation between the type of distribution and the most appropriate orthogonal polynomial set. Once the $N_q$ exact model simulations have been performed, the polynomial metamodel can be used to evaluate the first moments of the output distributions (i.e., mean and variance), as well as the complete distributions and other quantities such as Sobol' sensitivity indices, which allow us to rank the input parameters according to how much they affect the uncertainty of the output.
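A compact sketch of the pseudo-spectral projection for independent uniform inputs, written with NumPy only (it is not the chaospy implementation used in this work): Legendre polynomials, a tensor Gauss–Legendre grid of (p + 1)^d nodes, the quadrature formula for the coefficients, and the standard PCE identities mean = c₀, variance = Σ_{i≥1} c_i² E[Ψ_i²], and first-order Sobol' indices from the terms that depend on a single input. The test function is a hypothetical polynomial whose statistics are known analytically.

```python
from itertools import product

import numpy as np
from numpy.polynomial import legendre


def legendre_1d(n, xi):
    """Legendre polynomial P_n evaluated at points xi in [-1, 1]."""
    c = np.zeros(n + 1)
    c[n] = 1.0
    return legendre.legval(xi, c)


def basis(alpha, xi):
    """Multivariate Legendre polynomial prod_j P_{alpha_j}(xi_j)."""
    return np.prod([legendre_1d(n, xi[:, j]) for j, n in enumerate(alpha)], axis=0)


def pce_pseudo_spectral(model, bounds, p):
    """Pseudo-spectral PCE for independent uniform inputs (illustrative sketch).

    model  : callable mapping an (n_nodes, d) array of inputs to n_nodes outputs
    bounds : list of (low, high) pairs, one per uniform input
    p      : quadrature order and total polynomial degree
    """
    d = len(bounds)
    lo, hi = np.array(bounds, dtype=float).T
    xi_1d, w_1d = legendre.leggauss(p + 1)                # p + 1 nodes per input
    xi = np.array(list(product(xi_1d, repeat=d)))          # (p + 1)**d nodes
    # Weights for the uniform density 1/2 on [-1, 1] in each direction
    w = np.prod(np.array(list(product(w_1d / 2.0, repeat=d))), axis=1)
    y = model(lo + (xi + 1.0) * (hi - lo) / 2.0)           # exact-model runs
    alphas = [a for a in product(range(p + 1), repeat=d) if sum(a) <= p]
    norms = np.array([np.prod([1.0 / (2 * n + 1) for n in a]) for a in alphas])
    coeffs = np.array([np.sum(w * y * basis(a, xi)) for a in alphas]) / norms
    return alphas, coeffs, norms


def pce_evaluate(alphas, coeffs, bounds, x):
    """Evaluate the surrogate at physical input points x of shape (n, d)."""
    lo, hi = np.array(bounds, dtype=float).T
    xi = 2.0 * (np.asarray(x, dtype=float) - lo) / (hi - lo) - 1.0
    return sum(c * basis(a, xi) for a, c in zip(alphas, coeffs))


def pce_statistics(alphas, coeffs, norms):
    """Mean, variance and first-order Sobol' indices from the coefficients."""
    partial = coeffs**2 * norms   # variance carried by each basis term
    var = partial[1:].sum()       # alphas[0] is the constant term
    sobol = []
    for j in range(len(alphas[0])):
        only_j = np.array([a[j] > 0 and sum(a) == a[j] for a in alphas])
        sobol.append(partial[only_j].sum() / var)
    return coeffs[0], var, sobol


def demo_model(x):
    """Hypothetical test function with known statistics on U(-1, 1)^2."""
    return x[:, 0] * x[:, 1] + x[:, 0] ** 2


bounds = [(-1.0, 1.0), (-1.0, 1.0)]
alphas, coeffs, norms = pce_pseudo_spectral(demo_model, bounds, p=3)
mean, var, sobol = pce_statistics(alphas, coeffs, norms)
print(mean, var, sobol)  # close to 1/3, 1/5 and [4/9, 0]
```

Because the test function is a polynomial of degree 2, an order-3 expansion reproduces it exactly, so `pce_evaluate` matches `demo_model` at any point; for a real simulator the surrogate is only an approximation within the input ranges.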

In principle, the surrogate model is able to reproduce the results of the exact model inside the range of validity of the input distributions, with the advantage of being much faster and simpler. It is this peculiar characteristic which justifies the use of the metamodel for uncertainty quantification purposes.

2.4.2. Unscented Transform

The intuition behind the Unscented Transform technique is that it is simpler to approximate the distribution of the inputs than the model used to generate the output distribution. The input distribution is approximated by generating a set of sigma points that represent its probability distribution and that are used to feed the exact model. According to the literature [1], 2d + 1 sigma points are sufficient to obtain a good representation of the input and of the mean and variance of the output, where d is the dimension of the perturbed input data. The UT generally requires fewer simulations than the PCE, but it provides a limited amount of information (namely, the first two moments of the output distribution), assuming a Gaussian distribution for the output. However, in some cases, knowing the mean and the variance of an output distribution may be sufficient, which makes the UT an interesting technique for preliminary evaluations.
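For comparison, the classic symmetric unscented transform with 2d + 1 sigma points (the Julier–Uhlmann scheme with a scaling parameter κ) is sketched below with NumPy. It is not the Generalized Unscented Transformation of [27] used in this work; the input means, variances and the linear test model are hypothetical. With d = 2, κ = 1 and uniform inputs, the sigma points happen to fall exactly on the interval bounds, while larger values of κ would push them outside, which is the kind of non-physical point the generalized algorithm avoids.

```python
import numpy as np


def unscented_transform(model, mean, cov, kappa=1.0):
    """Classic symmetric unscented transform with 2d + 1 sigma points.

    Not the Generalized UT of [27]: no constraints are imposed here, so for
    large kappa the sigma points may leave the support of bounded inputs.
    """
    mean = np.asarray(mean, dtype=float)
    d = mean.size
    S = np.linalg.cholesky((d + kappa) * np.asarray(cov, dtype=float))
    points = [mean] + [mean + S[:, j] for j in range(d)] + [mean - S[:, j] for j in range(d)]
    weights = np.array([kappa / (d + kappa)] + [0.5 / (d + kappa)] * (2 * d))
    y = np.array([model(x) for x in points])  # 2d + 1 exact-model runs
    y_mean = np.sum(weights * y)
    y_var = np.sum(weights * (y - y_mean) ** 2)
    return np.array(points), y_mean, y_var


def linear_model(x):
    """Hypothetical linear test model: the UT recovers its moments exactly."""
    return 2.0 * x[0] - 30.0 * x[1] + 1.0


# Two independent uniform inputs on hypothetical ranges [400, 600] and [0.25, 0.35]
mean_x = np.array([500.0, 0.30])
cov_x = np.diag([200.0**2 / 12.0, 0.10**2 / 12.0])  # variance of U(a, b) = (b - a)^2 / 12
pts, y_mean, y_var = unscented_transform(linear_model, mean_x, cov_x)
print(pts)
print(y_mean, y_var)
```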

Among the several algorithms presented in the literature, the so-called Generalized Unscented Transformation [27] has been employed here, since it allows us to deal with constrained sigma points, like those generated from the uniform distributions in Equations (3) and (4) for the DTT FDU input parameters at hand, avoiding non-physical sigma points outside of the prescribed distributions.

In this work, the UT has been employed to check that at least the first two moments calculated with the PCE are correct, thus providing an additional benchmark for the EM model, for which the comparison with MC was not viable due to the excessive computational time of the detailed model.

3. Results

In this section, the results of the three modules are summarized, including the statistical analysis of the uncertainty propagation, from the inputs to the outputs of both the EL and EM models. The generation and simulation of a TH worst-case scenario, selected according to the uncertainty quantification analysis, is also presented.

3.1. Results of the Electrical Model

The EL model is fast enough to allow its uncertainty propagation to be analyzed with a Monte Carlo approach. For this reason, an MC analysis has been developed, exploiting the interoperability of Python and Modelica provided by the OMPython [7] and DyMat packages. The MC method simply consists of sampling a large number N of random points from the input distribution and performing a simulation for each of these points, giving a result $y_i$. In the MC algorithm, the average result $\bar{y}$ is computed as follows:

$$\bar{y} = \frac{1}{N}\sum_{i=1}^{N} y_i \tag{8}$$

Together with the average value, both the standard deviation σ and the relative standard deviation RSD are computed, according to the following equations:

$$\sigma = \sqrt{\frac{1}{N-1}\sum_{i=1}^{N}\left(y_i - \bar{y}\right)^2} \tag{9}$$

$$\mathrm{RSD} = \frac{\sigma}{\bar{y}\,\sqrt{N}} \tag{10}$$

The RSD is used as an indicator to stop the sampling of random points once the required tolerance is reached; in this case, the required tolerance on the RSD was reached after ≈28,500 simulations. Equation (10) underlines one of the main drawbacks of MC, namely, its slow convergence (as $1/\sqrt{N}$), which requires a large number of simulations.
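A minimal sketch of an MC loop with a relative-error stopping rule, assuming that the stopping indicator is the relative standard error σ/(ȳ√N) and that the two inputs are independent uniforms. The "model" is a hypothetical closed-form stand-in for the EL simulation (the time needed by a lumped loop obeying L dI/dt = -K I^β, an assumed varistor law, to bring the current down to 10% of its initial value), and the input ranges are placeholders, not those of [12].

```python
import numpy as np


def cheap_model(K, beta, L_eq=1.0, I0=1000.0, fraction=0.1):
    """Hypothetical stand-in for the EL model.

    Closed-form time for L_eq dI/dt = -K I**beta to bring the current from
    I0 down to fraction * I0 (vectorized over K and beta).
    """
    return L_eq * (I0 ** (1 - beta) - (fraction * I0) ** (1 - beta)) / ((1 - beta) * K)


def monte_carlo(model, k_range, beta_range, tol=1e-3, batch=500,
                n_max=200_000, seed=0):
    """Plain MC on independent uniform inputs with a relative-error stop rule.

    Sampling stops when sigma / (mean * sqrt(N)) drops below `tol`
    (or when n_max samples have been drawn).
    """
    rng = np.random.default_rng(seed)
    chunks = []
    while True:
        K = rng.uniform(*k_range, size=batch)
        beta = rng.uniform(*beta_range, size=batch)
        chunks.append(model(K, beta))          # one "simulation" per sample
        y = np.concatenate(chunks)
        n = y.size
        mean = y.mean()                        # sample mean
        std = y.std(ddof=1)                    # unbiased sample std
        rsd = std / (abs(mean) * np.sqrt(n))   # relative standard error
        if rsd < tol or n >= n_max:
            return mean, std, rsd, n


mean, std, rsd, n = monte_carlo(cheap_model, (400.0, 600.0), (0.25, 0.35))
print(f"mean = {mean:.4f} s, std = {std:.4f} s, RSD = {rsd:.1e}, N = {n}")
```

Halving the tolerance roughly quadruples the number of samples, the 1/√N behavior that makes MC impractical for slow models.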

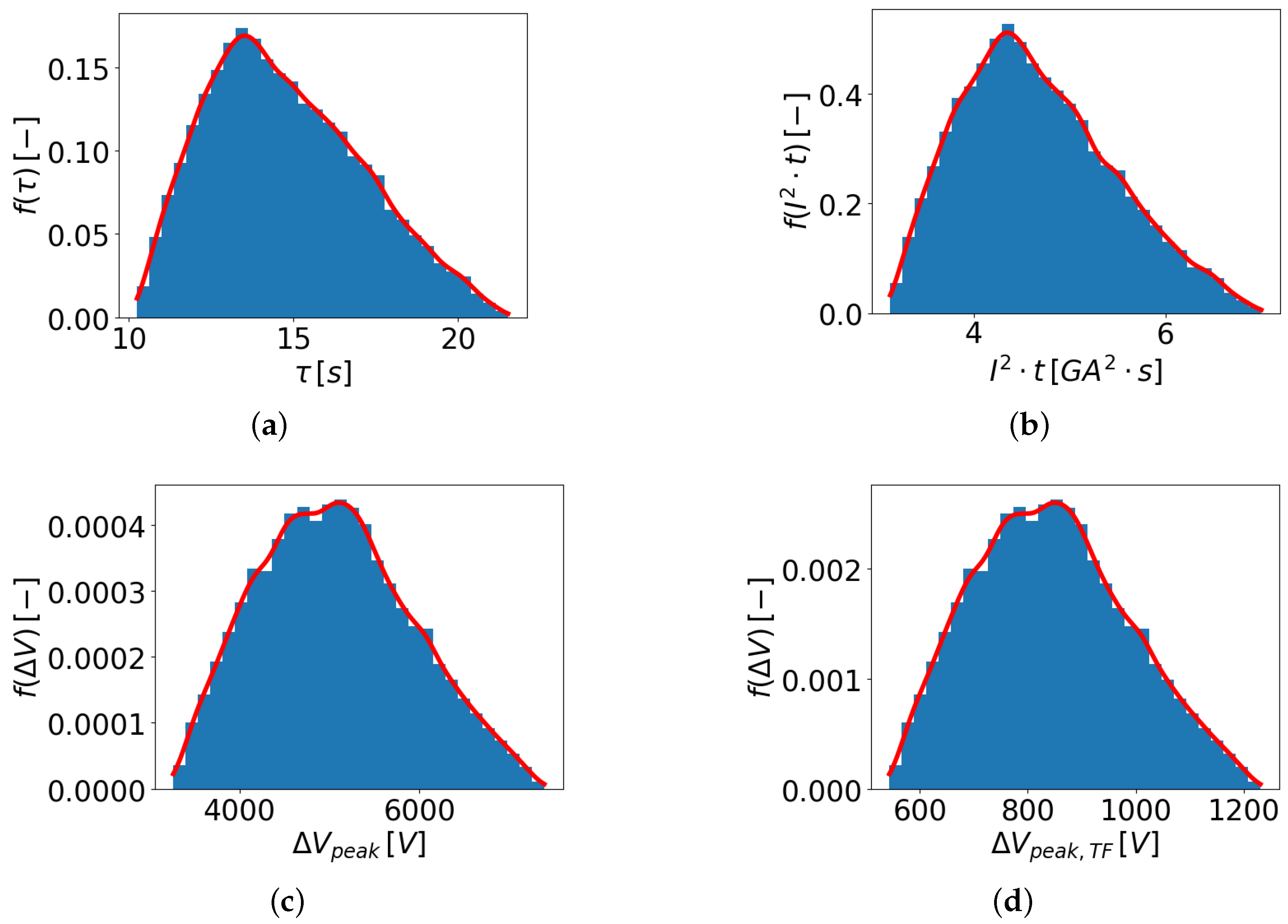

The statistical distributions of the following four relevant results have been obtained from the MC run and are reported in Figure 3:

time required for the current to decrease from nominal value to ;

, considering its asymptotic value, which is proportional to the energy extracted from the TF coils during the FD;

peak voltage on the FDU during the discharge;

peak voltage on the TF coil during the discharge.

Figure 3.

Statistical distribution of (a) , (b) , (c) , and (d) obtained with the MC method.


The resulting mean values of the monitored variables and their standard deviations are reported in Table 1.

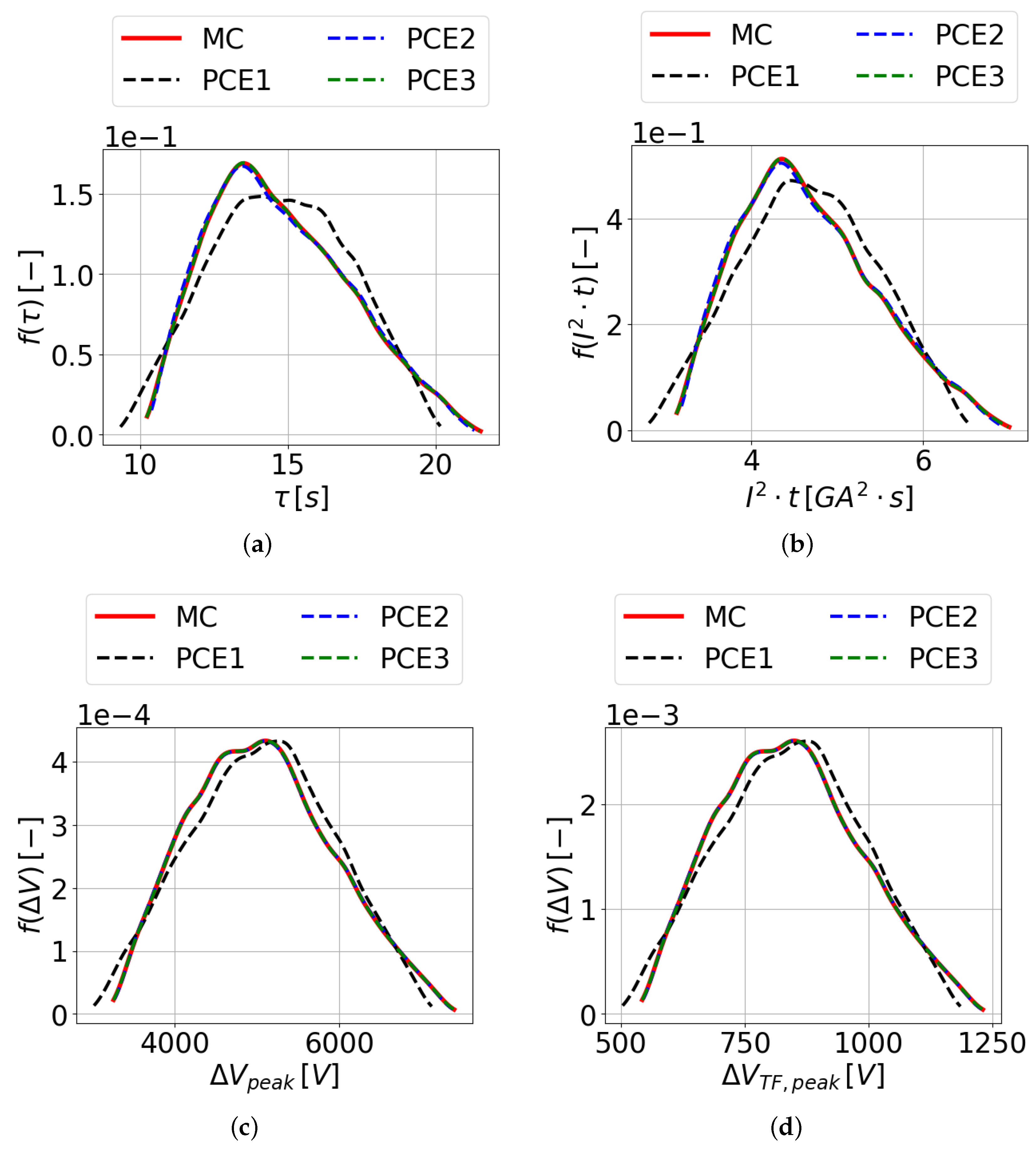

The same model has been analyzed using the PCE, to reduce the number of simulations required to obtain the same statistical information. Quadrature and polynomial orders from 1 to 3 have been tested, and the results have been compared with those of the MC method, showing extremely good agreement for all the monitored variables with order 3, as shown in Figure 4. Indeed, the 2-norm of the relative difference between the MC and order-3 PCE distributions yields a maximum discrepancy of 0.05%.

This allows for the performance of only sixteen simulations of the detailed model as a training set compared to the ≈30,000 simulations of the detailed EL model (≈1 s of CPU time each) required to reach the desired statistical tolerance using the MC approach. In fact, in the case of the pseudo-spectral projection method, the number of simulations required is given by

, where

p is the polynomial degree at which the truncation occurs (3 in this case), and

d is the dimension of the input space (2 in the case at hand). This choice results in a polynomial with 10

coefficients, according to Equation (

6). Here, the quadrature nodes and the weights have been obtained using the Gaussian quadrature rule. To better highlight the accuracy of the PCE results, the comparison between the mean values and their standard deviation obtained with MC and PCE is reported in

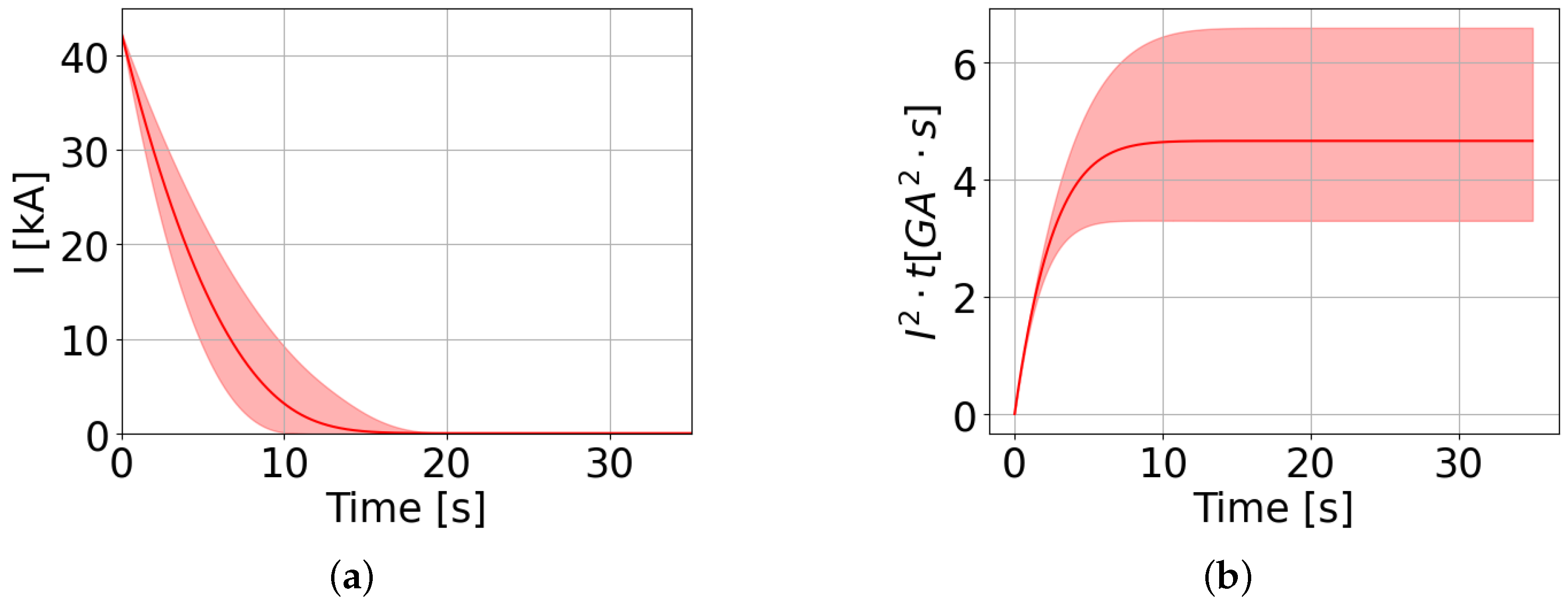

Table 1. Using the PCE, it has also been possible to evaluate the current and

evolution. Knowing the statistical distribution of each point in the evolution, it has been possible to evaluate an average evolution and a 1–99% confidence interval for both variables, as reported in Figure 5.
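Once a cheap surrogate is available, point-wise statistics of a whole time evolution follow directly from many surrogate evaluations. The sketch below uses NumPy and, as a hypothetical stand-in for the trained PCE, the closed-form current of a lumped loop discharging into a varistor with an assumed V = K I^β characteristic; the input ranges are placeholders. The mean evolution and the 1–99% band are computed time point by time point.

```python
import numpy as np


def current_evolution(t, K, beta, L_eq=1.0, I0=1000.0):
    """Closed-form I(t) for L_eq dI/dt = -K I**beta (hypothetical surrogate).

    Rows are input samples, columns are time points; I**(1 - beta) decreases
    linearly in time until the current reaches zero.
    """
    K = np.asarray(K, dtype=float)[:, None]
    beta = np.asarray(beta, dtype=float)[:, None]
    base = I0 ** (1.0 - beta) - (1.0 - beta) * K * t[None, :] / L_eq
    return np.clip(base, 0.0, None) ** (1.0 / (1.0 - beta))


rng = np.random.default_rng(1)
n_samples = 20_000                            # cheap surrogate evaluations
K = rng.uniform(400.0, 600.0, n_samples)      # placeholder ranges
beta = rng.uniform(0.25, 0.35, n_samples)
t = np.linspace(0.0, 0.6, 121)                # s

I = current_evolution(t, K, beta)
I_mean = I.mean(axis=0)
I_p01, I_p99 = np.percentile(I, [1, 99], axis=0)  # 1-99% band per time point
print(I_mean[::30], I_p01[::30], I_p99[::30])
```

Near the end of the discharge, when fewer than 1% of the samples still carry current, the mean can exceed the 99th percentile, so the band and the mean are best read together.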

Finally, the UT method was applied as well. Within the EL model, its application is not particularly relevant, since the reduction in the required number of simulations is limited (five simulations instead of sixteen) and the statistical information is limited to the average and the standard deviation, without effective information on the distribution. However, this method has been introduced as a useful benchmark for the EM model, to which the MC method cannot be applied due to its excessive computational cost.

The five UT sigma points have been generated according to the Generalized Unscented Transformation algorithm, and the results are summarized in Table 1, showing very good agreement among the three methods. Indeed, the maximum relative difference in the computed mean values is ≈0.06% (smaller than the tolerance imposed to check the convergence of the MC run), while the maximum relative difference in the computed standard deviations is ≈0.6%.

The results show a non-negligible standard deviation, which justifies the need to further propagate the uncertainties through the EM model. In particular, since the relative standard deviation of the outputs of the EL model, computed as the ratio between the standard deviation and the mean value, is generally larger than that of the input distributions in Equations (3) and (4), the electrical model seems to amplify the uncertainties.

3.2. Results of the Electromagnetic Model

The MC method was not applicable to the uncertainty propagation analysis of the EM model due to the much longer computational time of the detailed model (of the order of 1 day per simulation). For this reason, a PCE of quadrature order 3 has been used to build a surrogate of the detailed model based on its results, since this order proved to be sufficiently accurate to replace the detailed EL model as well. 3D-FOX calculates the evolution of the power deposited in the TF coil casing. The monitored variables have been extracted from this evolution, considering the following:

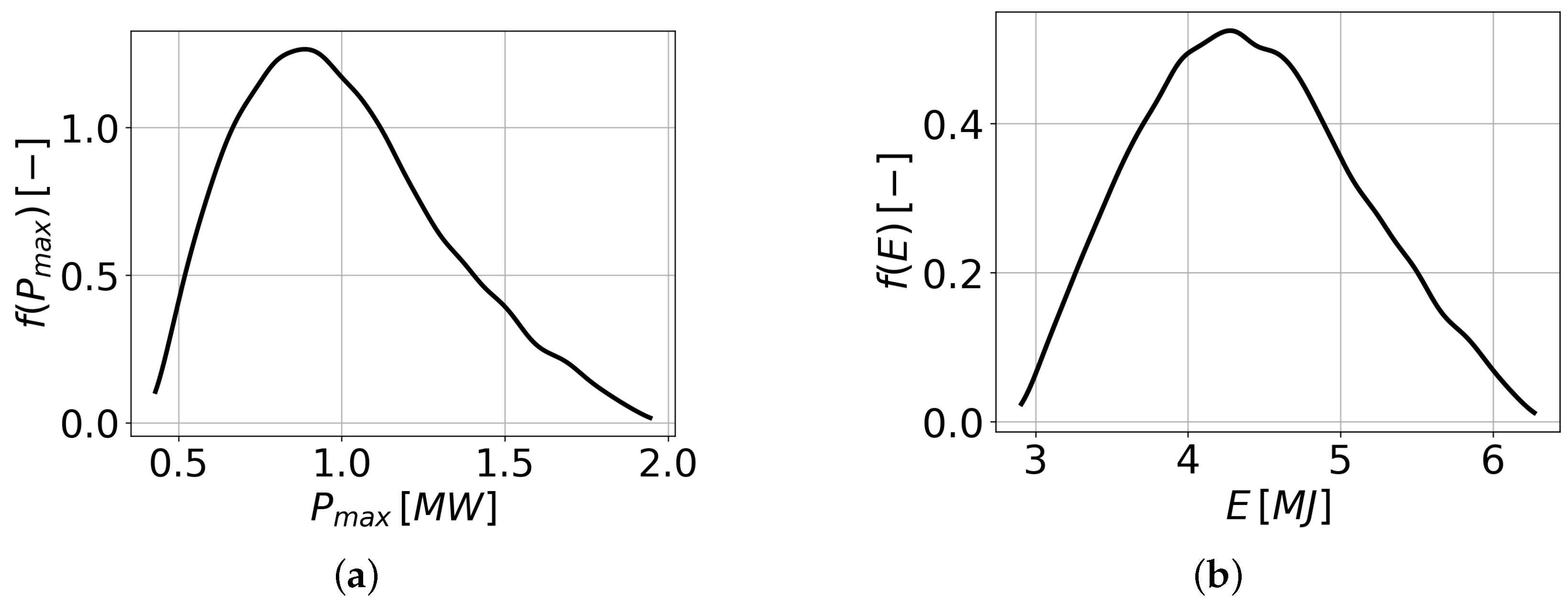

The statistical distributions of the peak deposited power and of the deposited energy are reported in Figure 6; they highlight how the uncertainty in the inputs translates into a very large uncertainty in the peak power and deposited energy. Neglecting this uncertainty propagation may lead to disregarding the worst-case scenarios connected to this transient, e.g., concerning its TH effects.

The average value and the standard deviation of the peak deposited power and of the deposited energy computed with the PCE have been benchmarked against those evaluated with the UT. The comparison is shown in Table 2.

The benchmark shows an excellent agreement between the PCE and the UT. This does not guarantee the accuracy of the statistical distribution obtained with PCE, but it suggests that the metamodel developed properly reproduces the main statistical data, namely, the mean and the variance.

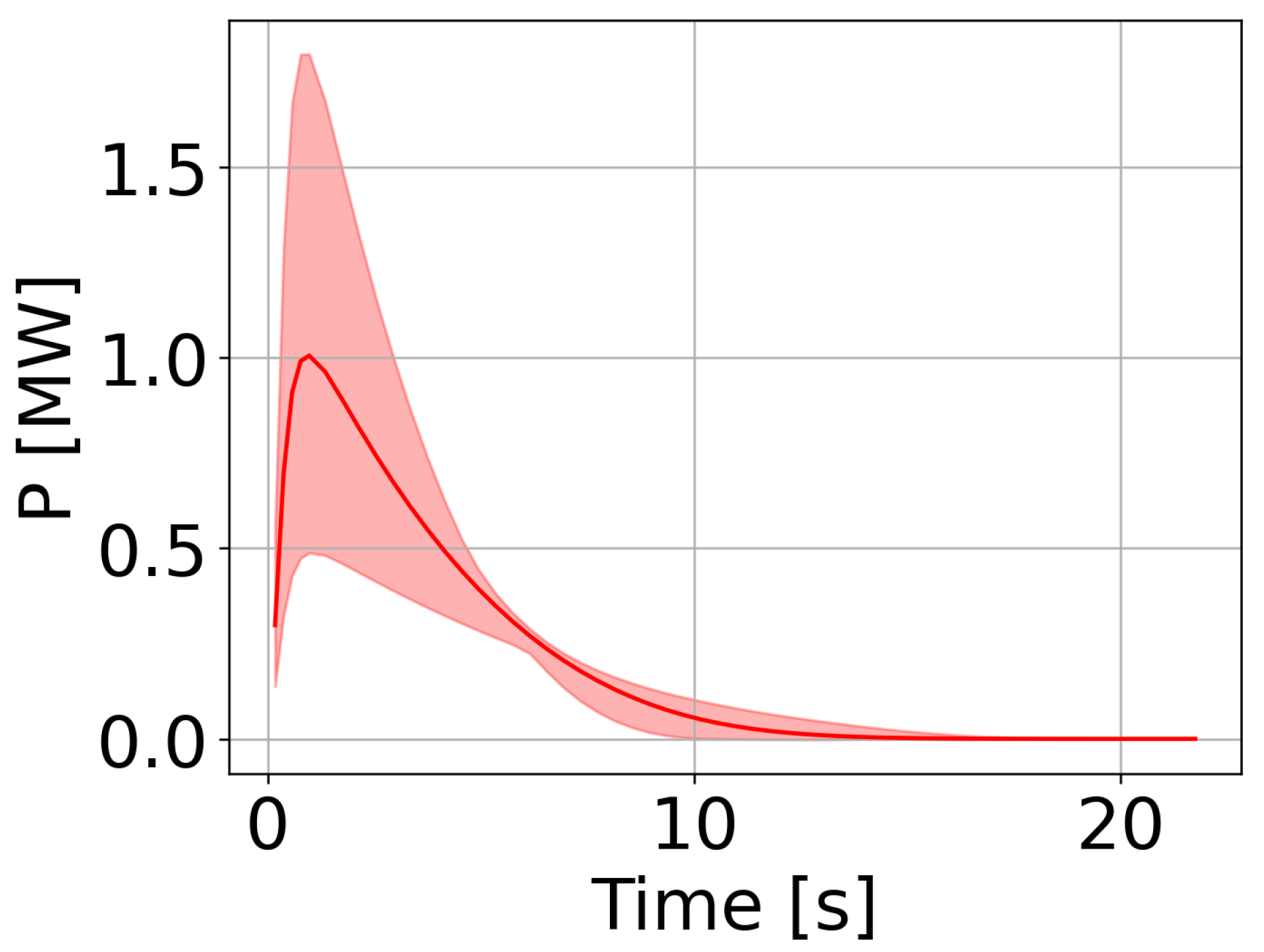

As already done in the EL model analysis (Figure 5), the average power evolution and its 1–99% confidence range have been calculated here using the PCE and are shown in Figure 7.

The width of the 1–99% confidence range highlights the importance of considering the uncertainty on the input data when evaluating the worst-case scenario to be retained for the analysis of this transient. Moreover, the impact of the uncertainty is much larger in the first part of the transient (peak region). This is expected, since the first part of the transient is driven by the large time derivative of the current at the beginning of the FD, which is strongly affected by the two varistor parameters. Conversely, the last part of the transient is less affected by the uncertainty of the inputs, as the time derivative of the current decreases, reducing the deposited power.

A further benchmark of the PCE-based metamodel is performed by applying it to the inputs represented by the sigma points generated for the UT computations, and comparing the results with those obtained at the same points with the detailed EM model (3D-FOX). To ensure a fair benchmark, the UT sigma points used do not belong to the PCE training set.

The comparison is shown in Table 3, including the relative difference $\varepsilon_V$ on variable V, obtained as follows:

$$\varepsilon_V = \frac{\left|V_{\mathrm{PCE}} - V_{\mathrm{det}}\right|}{\left|V_{\mathrm{det}}\right|} \tag{11}$$

where $V_{\mathrm{PCE}}$ and $V_{\mathrm{det}}$ are the values computed with the metamodel and with the detailed 3D-FOX model, respectively. The largest relative discrepancy (see Table 3) is small enough to be considered a satisfactory accuracy. This benchmark does not guarantee the same level of accuracy over the entire parameter space, but it indicates that the metamodel properly reproduces the detailed model results in the region of the parameter space covered by the UT sigma points. In fact, by definition, the sigma points are constructed by the UT algorithm to cover the input space in the best possible way, i.e., with the smallest number of points.
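The relative difference used for this benchmark, together with the check that the validation points are not training nodes, fits in a few lines. A NumPy sketch, assuming the absolute relative difference |V_PCE - V_det| / |V_det| and using hypothetical values (not those of Table 3); the helper names are illustrative.

```python
import numpy as np


def relative_difference(v_surrogate, v_detailed):
    """Relative difference |V_PCE - V_det| / |V_det|, point by point."""
    v_s = np.asarray(v_surrogate, dtype=float)
    v_d = np.asarray(v_detailed, dtype=float)
    return np.abs(v_s - v_d) / np.abs(v_d)


def is_held_out(validation, training, atol=1e-12):
    """True if no validation point coincides with any training node."""
    v = np.asarray(validation, dtype=float)[:, None, :]
    t = np.asarray(training, dtype=float)[None, :, :]
    same = np.all(np.isclose(v, t, rtol=0.0, atol=atol), axis=-1)
    return not bool(np.any(same))


# Hypothetical numbers in normalized input coordinates
training_nodes = np.array([[-0.86, -0.86], [-0.34, 0.34], [0.86, 0.86]])
sigma_points = np.array([[0.0, 0.0], [1.0, 0.0], [-1.0, 0.0]])
v_pce = np.array([1.02, 0.97, 2.00])
v_det = np.array([1.00, 1.00, 2.00])

eps = relative_difference(v_pce, v_det)
print(is_held_out(sigma_points, training_nodes), eps.max())
```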

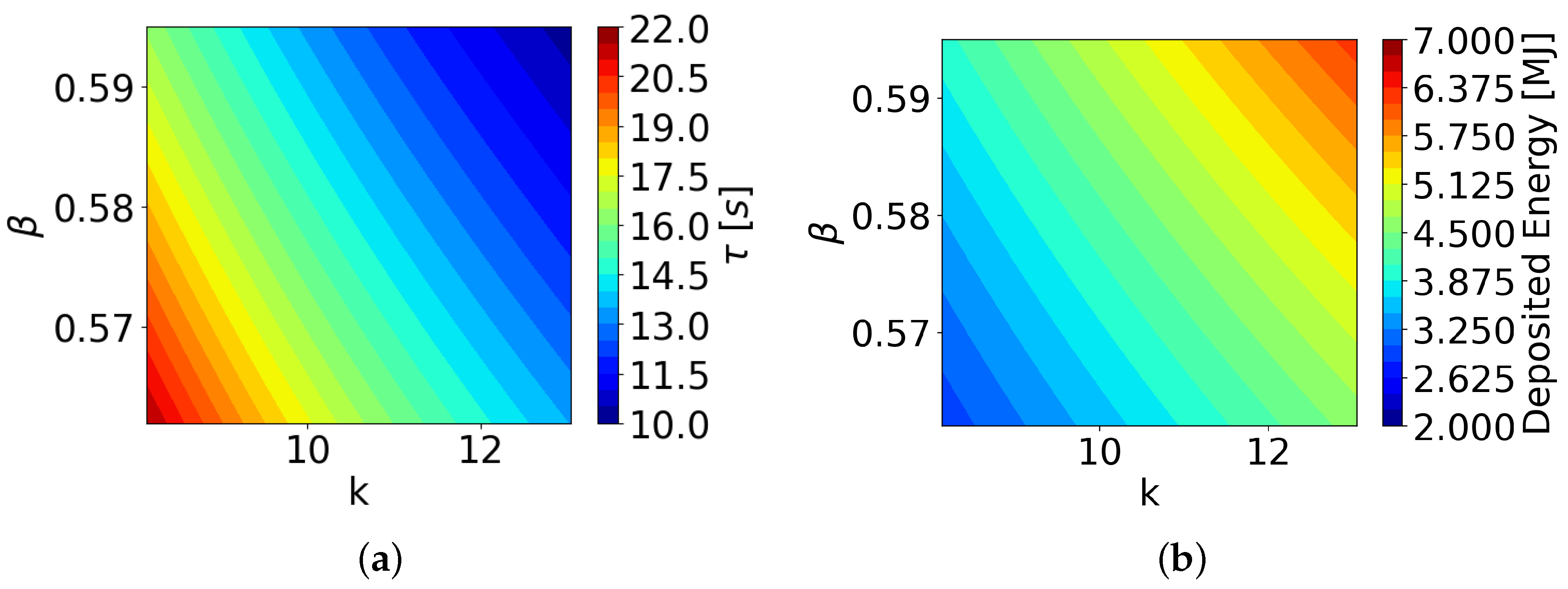

3.3. Identification of the Worst-Case Scenarios

The relevant worst-case scenarios (WCSs) to be used as input to the TH model must now be identified. In the WCS selection, both EL and EM aspects must be considered. Indeed, a fast current discharge is fundamental to promptly protect the magnet from the quench but, at the same time, it deposits a lot of energy in the coil casing, contributing to the coil temperature increase. For this reason, it is relevant to identify the pairs of varistor parameters that cause the slowest discharge (WCS1) and the highest energy deposition in the coil casing (WCS2), and to perform their TH analysis. These two extreme scenarios within the range of possible values of the varistor parameters provide a preliminary indication of the evolution of possible transients, to be refined with the future introduction of statistics in the TH model. The two scenarios are clearly distinct since, the slower the discharge, the smaller the energy deposited in the casing (which is driven by the time derivative of the current). Thus, they will be generated by points lying at opposite boundaries of the varistor parameter plane. This statement is confirmed by Figure 8, in which the

and deposited energy values have been mapped, using the PCE model, on the varistor parameter plane. The specific values of the varistor parameters generating the two WCSs are (

,

) for WCS1 and (

,

) for WCS2.
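The WCS search can be sketched as an arg-max over a grid of the parameter plane evaluated with a cheap model. In this hypothetical sketch, closed-form lumped expressions replace the PCE surrogates: the discharge time of L dI/dt = -K I^β (assumed varistor law) and, as an assumed proxy for the eddy-current energy, the integral of (dI/dt)² over the discharge, since the eddy power in a linear conductor scales with the square of the rate of change of the field. Ranges and constants are placeholders; under these assumptions the two maxima land at opposite corners of the plane, in line with the qualitative argument above.

```python
import numpy as np


def discharge_time(K, beta, L_eq=1.0, I0=1000.0):
    """Closed-form time for L_eq dI/dt = -K I**beta to reach zero current."""
    return L_eq * I0 ** (1 - beta) / ((1 - beta) * K)


def eddy_energy_proxy(K, beta, L_eq=1.0, I0=1000.0):
    """Integral of (dI/dt)**2 over the discharge: (K / L_eq) I0**(beta+1) / (beta+1).

    Hypothetical proxy: eddy-current power in a linear conductor scales with
    the square of the rate of change of the field, hence of the current.
    """
    return (K / L_eq) * I0 ** (beta + 1) / (beta + 1)


K_grid, beta_grid = np.meshgrid(np.linspace(400.0, 600.0, 41),
                                np.linspace(0.25, 0.35, 41), indexing="ij")
t_dis = discharge_time(K_grid, beta_grid)
e_dep = eddy_energy_proxy(K_grid, beta_grid)

i1 = np.unravel_index(np.argmax(t_dis), t_dis.shape)  # slowest discharge
i2 = np.unravel_index(np.argmax(e_dep), e_dep.shape)  # largest deposited energy
wcs1 = (K_grid[i1], beta_grid[i1])
wcs2 = (K_grid[i2], beta_grid[i2])
print("WCS1:", wcs1, "WCS2:", wcs2)
```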

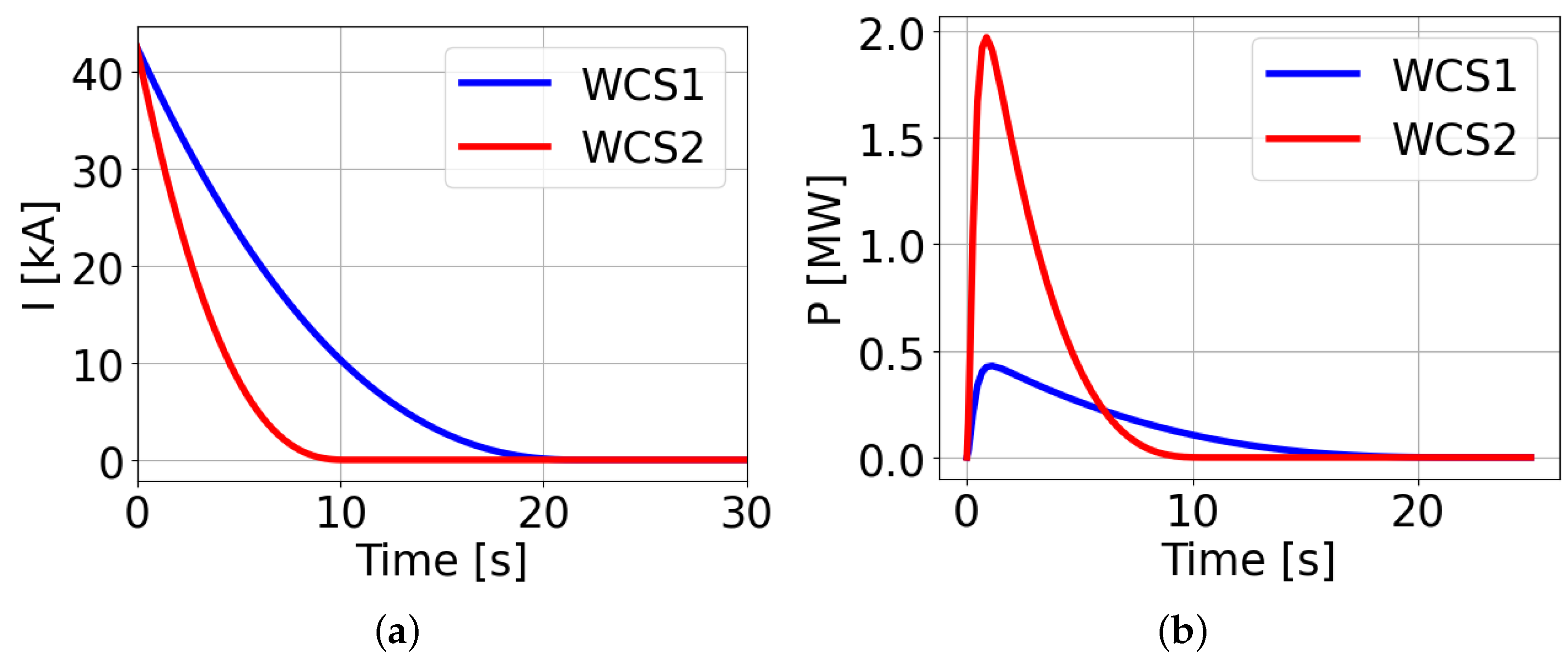

Using these parameter values, the inputs to the TH model have been computed.

The first required input is the evolution of the coil current, evaluated with the EL model and reported in Figure 9a. As expected, the two evolutions are very different, which will strongly influence the quench evolution.

The second required input is the power deposited in the casing, including both its evolution and its spatial distribution (to account for its non-uniformity). To reduce the computational cost, the PCE metamodel has been trained on a set of 3D-FOX simulations considering only the evolution of the overall power deposition. Therefore, a dedicated 3D-FOX run has been performed for each of the two selected WCSs to prepare the detailed input to the 4C code, namely, the spatial discretization of the power deposition in the casing [8]. The total power deposited in one TF coil casing in the two WCSs is reported in Figure 9b, confirming that the faster discharge is indeed responsible for the larger energy deposition.

3.4. Results of the Thermal–Hydraulic Model

The inputs have been adopted to simulate an FD triggered by the magnet quench. In the simulation, the quench has been initiated via a local heat deposition at the location where the minimum temperature margin is computed in nominal operation, i.e., in the first turn of the two central pancakes at the inboard equator. In this work, 50 kW/m of external power (e.g., a very concentrated beam of particles coming from the plasma) has been deposited in 10 cm of SC cable around the minimum margin location of pancake 6 for 0.1 s. The power deposition erodes the temperature margin, leading to quench initiation and propagation. The FD is triggered by the quench detection system when the voltage computed across the coil exceeds the 100 mV threshold, after a validation time of 1.5 s [28] that reduces spurious detections.
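The detection logic described above can be sketched as follows, under an assumed interpretation: the FD is triggered once the voltage has stayed above the 100 mV threshold for the whole 1.5 s validation time, and any shorter excursion is treated as spurious and resets the timer. The function name and the synthetic voltage trace are hypothetical; this is not the 4C code implementation.

```python
import numpy as np


def fd_trigger_time(t, v, threshold=0.1, validation=1.5):
    """Hypothetical quench-detection logic returning the FD trigger time (s).

    Assumed rule: a detection starts when |v| first exceeds `threshold` (V);
    the FD is triggered once |v| has stayed above it for `validation` s.
    A shorter excursion is treated as spurious and resets the timer.
    Returns None if no validated detection occurs.
    """
    start = None
    for ti, vi in zip(t, v):
        if abs(vi) > threshold:
            if start is None:
                start = ti            # candidate detection
            if ti - start >= validation:
                return ti             # validated: trigger the fast discharge
        else:
            start = None              # voltage back below threshold: reset
    return None


t = np.linspace(0.0, 10.0, 10_001)                # s
v = np.where(t > 3.0, 0.05 * (t - 3.0), 0.0)      # synthetic quench voltage, V
v[(t >= 1.0) & (t <= 1.2)] = 0.2                  # short spurious spike
trigger = fd_trigger_time(t, v)
print(trigger)
```

In this synthetic trace the 0.2 s spike is rejected, and the FD is triggered about 1.5 s after the ramp crosses the threshold near t = 5 s.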

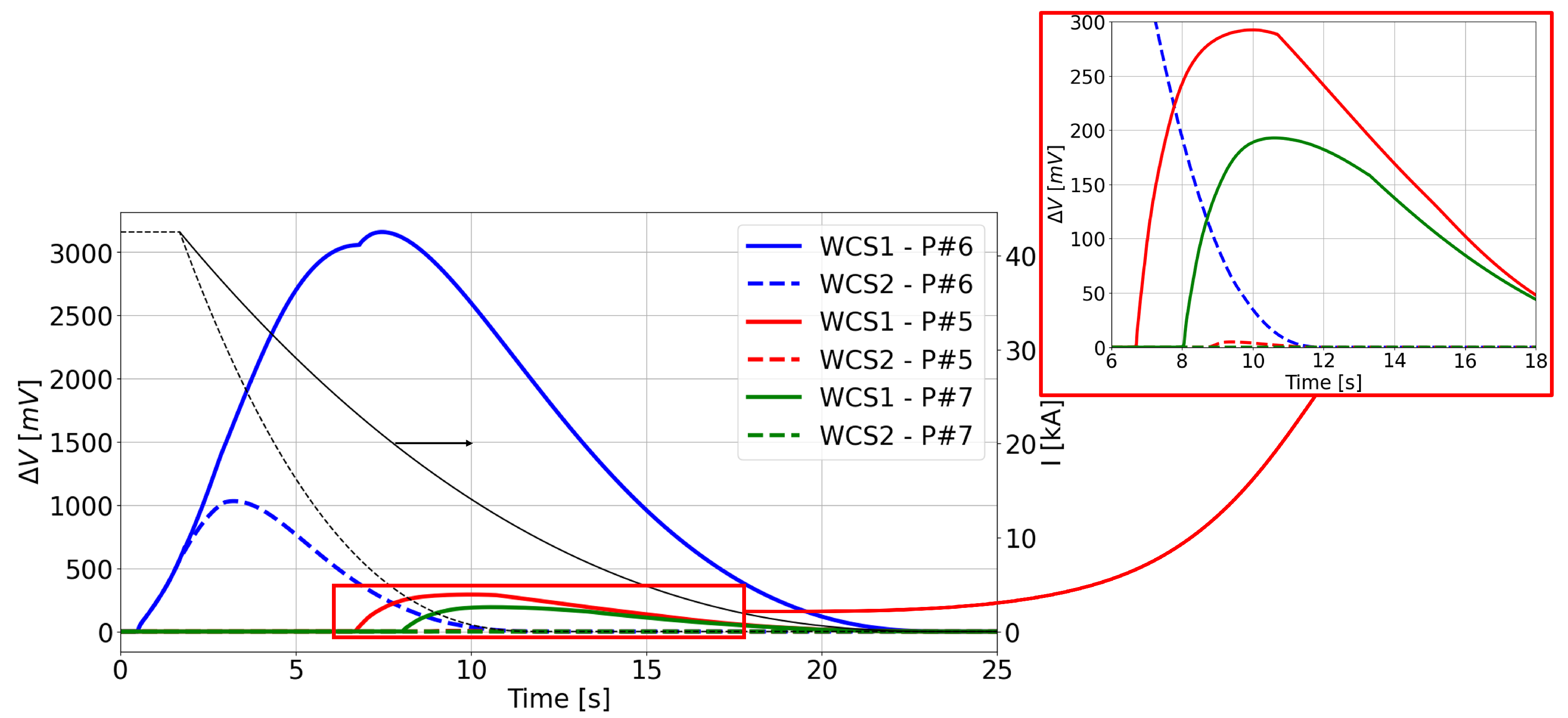

The evolution of the voltage computed with the 4C code in the two WCSs is reported in Figure 10. WCS2, characterized by a faster discharge, provides a faster quench protection response, reducing the quench propagation (and therefore the voltage buildup) in the Winding Pack (WP). Due to the inter-pancake thermal coupling, the neighboring pancakes (namely, 5 and 7) are also heated up and may quench. However, the voltage rise in pancakes 5 and 7 is almost negligible in WCS2 thanks to the faster reduction of the current; in WCS1, on the contrary, the delayed current decrease also causes quench initiation and propagation in those pancakes. Quench initiation in pancake 7 is slightly delayed with respect to pancake 5 due to the lower magnetic field there, since pancake 7 is farther from the coil center.

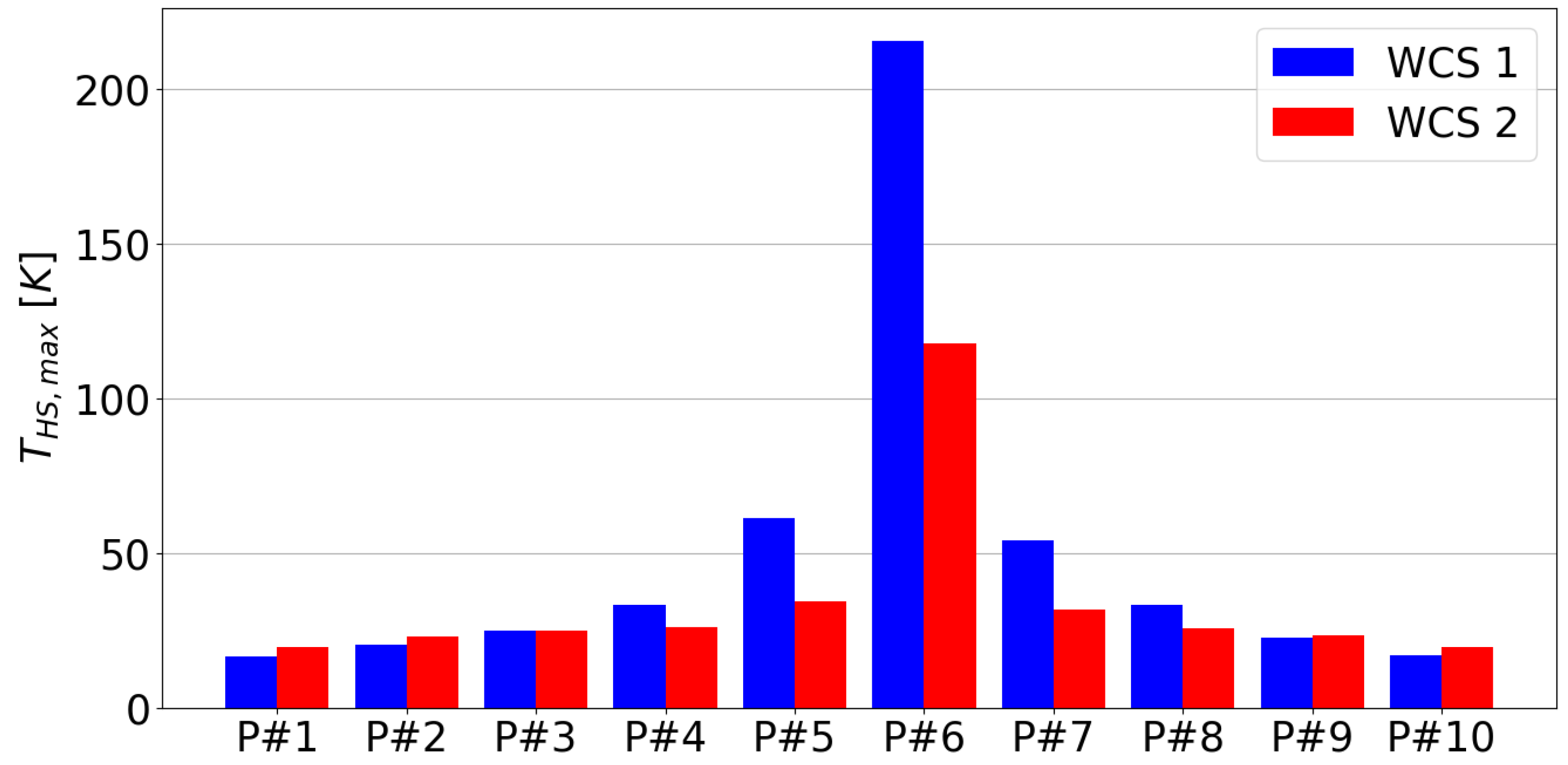

The two scenarios also differ in terms of the hot-spot (HS) temperature, which may lead to permanent damage to the coil. Indeed, as shown in Figure 11, where the HS temperature reached during the transient is plotted for each pancake, the hot-spot temperature in the central pancakes is higher in WCS1, which features a larger Joule power deposition. The opposite trend is observed in the side pancakes (e.g., pancake 1), in which the quench is not initiated and the heating is due to the thermal contact with the casing, where the eddy current power is deposited. Since the power deposition in the casing is larger in WCS2 (see Figure 9b), the power transferred from the casing to the WP is also larger, so the conductor temperature increases more. The bar plot in Figure 11 also shows the effect of the inter-pancake heat diffusion, visible in the progressive temperature decrease moving away from the quenched (central) pancakes.

The importance of reducing the current as fast as possible is clear when comparing the hot-spot temperatures in the two scenarios: the HS temperature is much higher in WCS1 than in WCS2, where the current decreases faster. On the contrary, the temperature increase in the side pancakes, due to the eddy current power deposited in the casing, is similar in the two cases, despite the considerable difference in the casing power deposition between them (see Figure 9b). This is because the heat transfer from the casing to the WP is slow compared with the current discharge duration, even in the slower WCS1.

These results show that the uncertainty in the characteristic parameters of the varistors eventually leads to a wide range of quench evolutions. Increasing the precision of the varistor parameters as much as possible during manufacturing is fundamental to attaining reliable predictions and, therefore, safe reactor operation. Moreover, the preferable direction for refining the varistor characteristics is the one leading to faster current discharges: despite the larger power deposition in the coil casing due to eddy currents, a faster discharge ensures a faster response to the quench and thus limits its propagation, reducing the HS temperature and therefore the risk of damage to the coil.

4. Conclusions and Perspective

In this paper, the Polynomial Chaos Expansion has been adopted to assess the uncertainty propagation in a transient relevant for the safe operation of a nuclear fusion plant. The uncertainty in the characteristic parameters of the varistors of the fast discharge units of the DTT TF coils has been propagated to the computed results during a fast discharge transient. The uncertainty has an impact on the actual current evolution during the discharge, which influences both the eddy currents induced in the coil casing and the TH behavior of the coil during quench propagation.

The current evolution during the discharge has been computed as a function of the varistor parameters, with an object-oriented electrical model developed using the Modelica language. The uncertainty propagation from the varistor parameters to the effective current evolution has been assessed using a surrogate model based on the PCE, which has been successfully benchmarked against the Monte Carlo approach. The obtained statistical distribution of the current evolution has been used as input in the electromagnetic model developed using the 3D-FOX tool to compute, again with a PCE surrogate model, the statistical distribution of the power (and energy) deposition in the coil casing due to eddy currents.

Finally, both these results (current and power evolution) have been used to identify the two possible worst-case scenarios to be simulated with the thermal–hydraulic model, developed with the 4C code. The two cases are the slowest current dump and the highest power deposition in the casing.

The comparison of the results of the thermal–hydraulic model shows that the considered uncertainty on the varistor parameters leads to a wide range of different results. As concerns the quench protection, the reduction of the current discharge time, reducing the hot spot temperature, is preferable to the reduction of the power deposited in the casing due to eddy currents.

The effect of the latter is, however, important, especially with respect to hydraulic circuit pressurization and He venting. From this perspective, more detailed models, including the hydraulic circuits, will be used to assess this effect.

The application of the statistical methods to the TH model will, in the future, allow us to identify other worst-case scenarios between the two extreme cases analyzed in this work. Moreover, a reverse analysis will be performed with the aim of suggesting the maximum acceptable uncertainty on the varistor parameters.

The presented analysis is one example of the possible applications of the newly developed methodology for uncertainty propagation in fault transients in superconducting magnets. Indeed, the presented approach is fully general and can be applied to any case and input data of interest and, with suitable parameter tuning, to any kind of numerical model.