Pedagogical and Technical Analyses of Massive Open Online Courses on Artificial Intelligence

Featured Application

Abstract

1. Introduction

1.1. Technical and Pedagogical Dimensions of MOOCs

1.2. Artificial Intelligence in Educational Learning

AI uses computer systems to accomplish tasks and activities that have historically relied on human cognition. Advances in computer science are producing intelligent machines that approximate human reasoning more closely than ever before. Drawing on big data, AI relies on machine learning algorithms to make predictions that enable human-like task completion and decision-making. As the programming, data, and networks driving AI mature, industries such as education see growing potential in its application. However, as AI develops more human-like capabilities, ethical questions surrounding data use, inclusivity, algorithmic bias, and surveillance become increasingly important. Despite these concerns, AI applications for teaching and learning in higher education are projected to grow significantly [8] (p. 27).

- Teachers’ modelling: AI can help teachers reflect on and improve the effectiveness of their instructional activities in classrooms.

- Multimodal interactions: Sensing technology, ambient classroom tools, and educational robots introduce alternative dynamics in the learning environment by increasing interactivity, engagement, and feedback for students and teachers.

- Educational robots and empathic systems: Making a machine appear to be empathic through encoding can encourage children to adopt positive behaviors.

- Ethical Issues: Ethics in AI is receiving growing attention, which is especially important given the influence that machines can have on students.

- Content Scaffolding: Scaffolding provides statistically different questions to learners depending on their proficiency level. In scaffolding methods, content modules are designed to index concepts.

- Social Interaction: This refers to content-driven group collaboration related to social skills.

- Content Inter-operability: Appropriate content is continuously and dynamically identified through interoperable content management systems.

- Metadata: This method is used for the advanced tagging of content with underlying data on the different content modules (e.g., age, level, subject area identifiers, learning outcomes).

- Norm- vs. Criterion-referenced Assessments: Criterion-referenced assessments show the performance of learners in relation to a defined set of outcomes. Norm-referenced assessments are designed to compare the performance of individual students with the performance of a representative sample of peers, or "norm group".

- Predictive Psychometric Design: Adaptive tests can accurately place a learner on an individualized learning pathway because their predictive capability derives from the adaptive assessment design.

- Diagnostic Classification Modelling: The diagnosis of cognition, competence of a particular skill, or sub-competence of a defined outcome is important in adaptive systems to align teaching, learning, and assessment.

- Zone of Proximal Development: This refers to the difference between what learners can do without help and what they can do with help.

- Self-assessment: Learners’ self-assessment is compared to what the adaptive system knows about a completed sequential piece of work.

- Skill Standards Libraries: Skills are defined by the units of knowledge, skills, and abilities used in assessment. Libraries of skill standards are constructed as a correlative “benchmark” or outcome in modular adaptive content and assessment, informing students of what is expected of them.

- Competences/Sub-competencies: Identified skills and competencies are delineated by “sub-competencies”.

- Prerequisite Knowledge and Prior Knowledge Qualifiers: Assessing prior learning is learner-centered: it places learners at a starting point from which they can pursue the next viable competence, building on their existing knowledge.
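To make the norm- vs. criterion-referenced distinction in the list above concrete, here is a minimal sketch. The cut score, the norm-group scores, and the function names are hypothetical illustrations, not part of the study. The criterion-referenced judgment compares a score with a fixed standard. The norm-referenced judgment locates the same score within a peer group through a z-score and a percentile rank.

```python
from statistics import mean, pstdev


def criterion_referenced(score: float, cut_score: float) -> str:
    """Compare a learner with a fixed standard (the cut score is hypothetical)."""
    return "meets outcome" if score >= cut_score else "below outcome"


def norm_referenced(score: float, norm_group: list[float]) -> tuple[float, float]:
    """Locate a learner relative to a norm group of peers.

    Returns (z_score, percentile_rank); the percentile rank is the share
    of the norm group scoring strictly below the learner.
    """
    z_score = (score - mean(norm_group)) / pstdev(norm_group)
    below = sum(1 for s in norm_group if s < score)
    return z_score, 100 * below / len(norm_group)


# Hypothetical scores for a norm group of ten peers.
norm_group = [52, 61, 64, 70, 73, 75, 78, 81, 85, 91]

print(criterion_referenced(72, cut_score=70))
z, rank = norm_referenced(72, norm_group)
print(f"z = {z:.2f}, percentile rank = {rank:.0f}")
```

With these made-up numbers, a score of 72 meets the criterion but falls just below the norm-group mean of 73, giving a slightly negative z-score and a percentile rank of 40. The two assessment types therefore answer different questions about the same performance.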

2. Materials and Methods

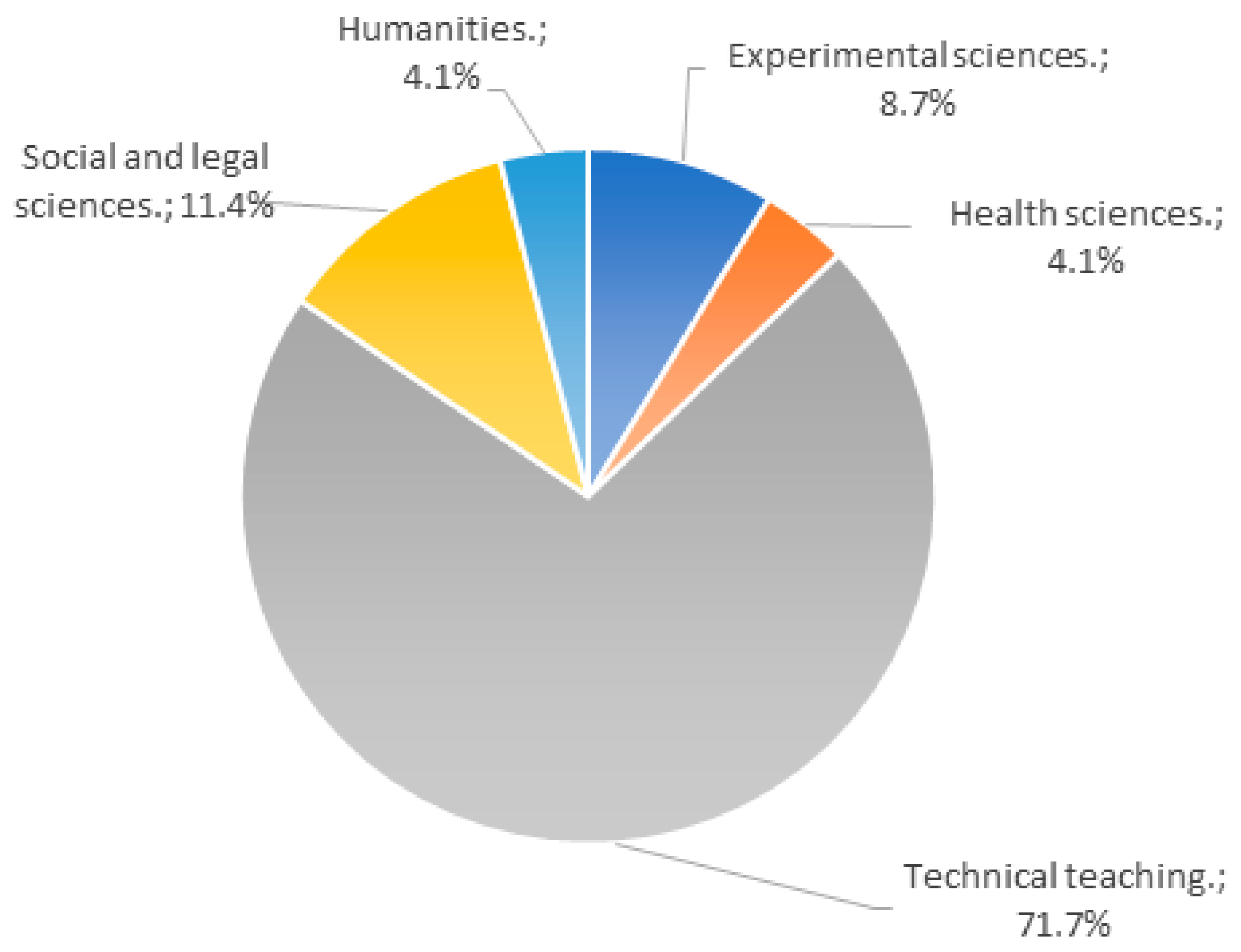

2.1. Research Methodology, Sample, and Data Collection

2.2. Categories System and Data Analysis

3. Results and Discussion

3.1. Technical Dimension of MOOCs

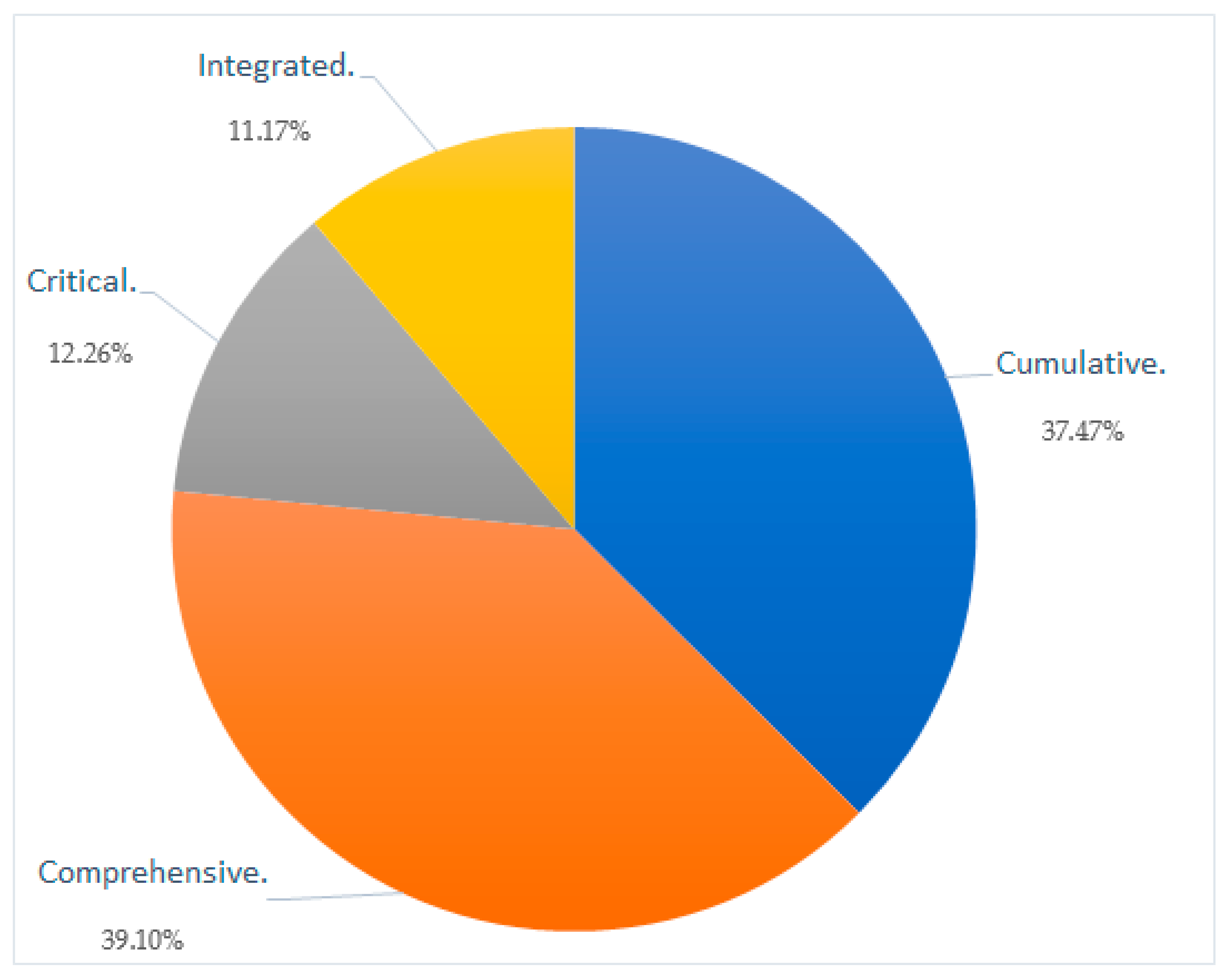

3.2. Pedagogical Dimension of MOOCs

3.3. Exploratory Analysis of Artificial Intelligence Content in MOOCs

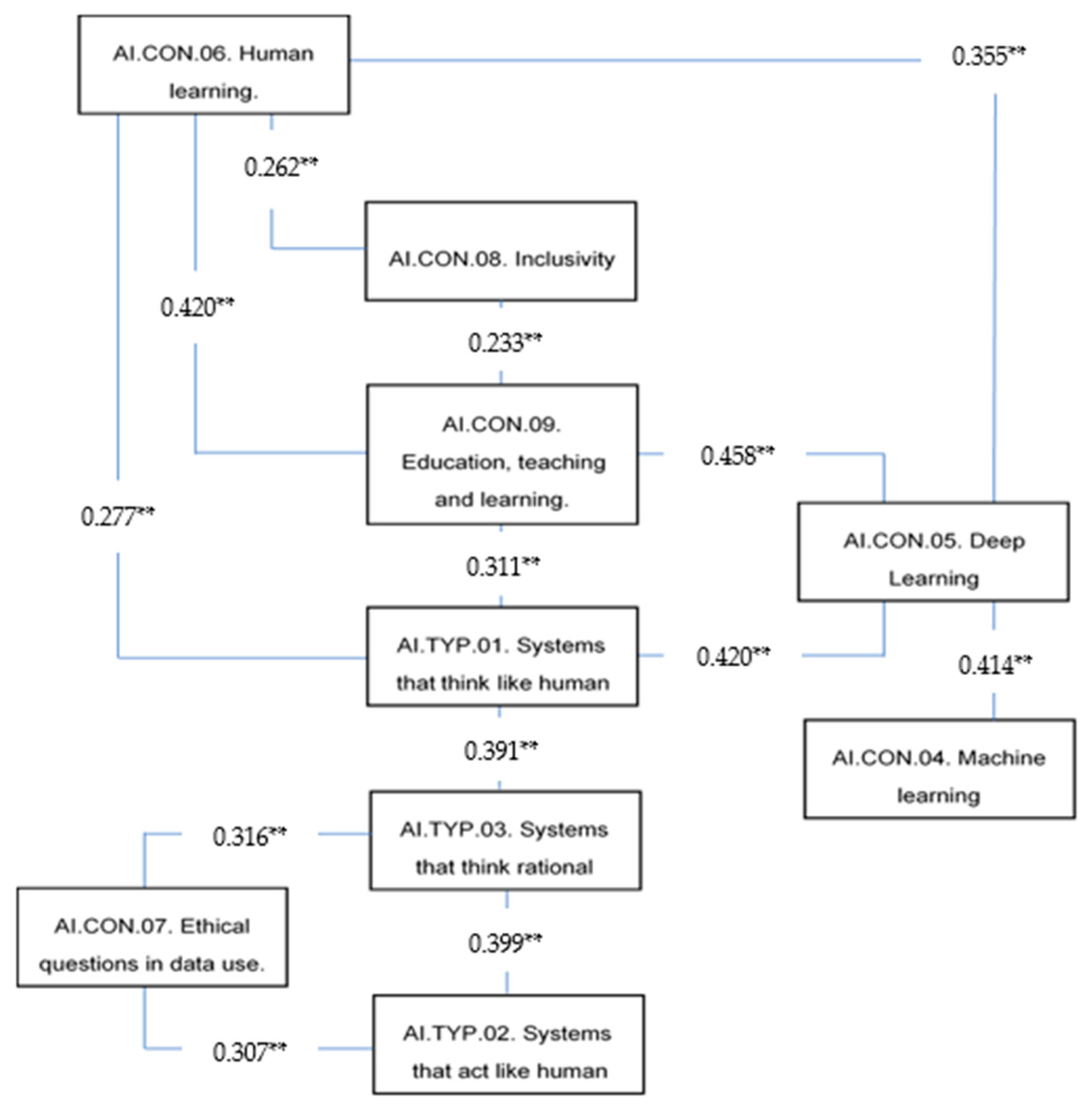

3.4. Factor Analysis of the Artificial Intelligence Content in MOOCs

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Niemczyk, E.K. Glocal Education in Practice: Teaching, Researcher, and Citizenship. BCES Conf. B 2019, 17, 1–6.

- Delgado-Algarra, E.J.; Román Sánchez, I.M.; Ordóñez Olmedo, E.; Lorca-Marín, A.A. International MOOC trends in citizenship, participation and sustainability: Analysis of technical, didactic and content dimensions. Sustainability 2019, 11, 5860.

- Delgado-Algarra, E.J. ICTs and Innovation for Didactics of Social Sciences; IGI Global: Hershey, PA, USA, 2020.

- Pedreño, A.; Moreno, L.; Ramón, A.; Pernías, P. UniMOOC: Un trabajo colaborativo e innovación educativa. Campus Virtuales 2013, 2, 10–18.

- Infante-Moro, A.; Infante-Moro, J.-C.; Torres-Díaz, J.-C.; Martínez-López, F.-J. Los MOOC como sistema de aprendizaje en la Universidad de Huelva (UHU). IJERI Int. J. Educ. Res. Innov. 2017, 8, 163–174.

- Butler-Adam, J. The fourth industrial revolution and education. S. Afr. J. Sci. 2018, 114, 1.

- Coberly-Holt, P.; Elufiede, K. Preparing for the Fourth Industrial Revolution with Creative and Critical Thinking. In Proceedings of the 43rd Annual Meeting of the Adult Higher Education Alliance, Orlando, FL, USA, 7–8 March 2019.

- Pelletier, K.; Robert, J.; Muscanell, N.; McCormack, M.; Reeves, J.; Arbino, N.; Grajek, S. 2023 EDUCAUSE Horizon Report. Teaching and Learning Edition; EDUCAUSE: Boulder, CO, USA, 2023.

- Mengual-Andrés, S.; Roig Vila, R.; Lloret Catalá, C. Validación del cuestionario de evaluación de la calidad de cursos virtuales adaptado a MOOC. Rev. Iberoam. Educ. Distancia 2015, 18, 145–169.

- Gallego, G.; Roldán López, N.D.; Torres Velásquez, C.F.; Rendón Ospina, F.; Puerta Gil, C.A.; Toro García, C.A.; Giraldo, J.M.A.; Sánchez, J.P.T.; Álvarez, Y.S.; Velásquez, C.F.T. WPD1.13 Informe Sobre Accesibilidad Aplicada a MOOC. MOOC-Maker Construction of Management Capacities of MOOC in Higher Education (561533-EPP-1-2015-1-ES-EPPKA2-CBHE-JP). 2016. Available online: http://www.mooc-maker.org/?dl_id=34 (accessed on 17 August 2023).

- Ortega Ruiz, I.J. Análisis de adecuación de los MOOC al u-Learning: De la Masividad a la Experiencia Personalizada de Aprendizaje. Propuesta uMOOC. 2016. Available online: https://goo.gl/6A5hxZ (accessed on 17 August 2023).

- Bournissen, J.M.; Tumino, M.C.; Carrión, F. MOOC: Evaluación de la calidad y medición de la motivación percibida. IJERI Int. J. Educ. Res. Innov. 2018, 11, 18–32.

- Agarwal, A. How Modular Education Is Revolutionizing the Way We Learn (and Work). Forbes. Available online: https://bit.ly/30sPsAt (accessed on 30 June 2023).

- European Commission. Proposal for a Council Recommendation on Key Competences for LifeLong Learning. 2018. Available online: https://data.consilium.europa.eu/doc/document/ST-5464-2018-ADD-2/EN/pdf (accessed on 2 March 2023).

- Baartman, L.K.; De Bruijn, E. Integrating knowledge, skills and attitudes: Conceptualising learning processes towards vocational competence. Educ. Res. Rev. 2011, 6, 125–134.

- Pappano, L. The Year of the MOOC. The New York Times, 2 November 2012. Available online: https://shorturl.at/mnoqy (accessed on 30 June 2023).

- Yousef, A.M.F.; Chatti, M.A.; Wosnitza, M.; Schroeder, U. Análisis de clúster de perspectivas de participantes en MOOC. RUSC Univ. Knowl. Soc. J. 2015, 12, 74–91.

- Siemens, G. MOOCs for the Win! ElearnSpace. 2012. Available online: http://www.elearnspace.org/blog/2012/03/05/moocs-for-the%20win/ (accessed on 3 April 2023).

- Downes, S. The Rise of MOOC. 2012. Available online: http://www.downes.ca/post/57911 (accessed on 3 April 2023).

- Cabero, J.; Leiva, J.J.; Moreno, N.M.; Barroso, J.; López Meneses, E. Realidad Aumentada y Educación. Innovación en Contextos Formativos; Octaedro: Barcelona, Spain, 2016.

- López Meneses, E.; Vázquez-Cano, E.; Román, P. Analysis and implications of the impact of MOOC movement in the scientific community: JCR and Scopus (2010–2013). Comunicar 2015, 44, 73–80.

- Russell, S.; Norvig, P. Artificial Intelligence: A Modern Approach, 4th ed.; Pearson: London, UK, 2020.

- Education 2030 Incheon Declaration and Framework for Action for the Implementation of Sustainable Development Goal 4. Ensure Inclusive and Equitable Quality Education and Promote Lifelong Learning Opportunities for All. Available online: https://unesdoc.unesco.org/ark:/48223/pf0000245656 (accessed on 1 September 2016).

- Mohammed, P.; Watson, E.N. Towards Inclusive Education in the Age of Artificial Intelligence: Perspectives, Challenges, and Opportunities. In Artificial Intelligence and Inclusive Education; Knox, J., Wang, Y., Gallagher, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2019; pp. 17–37.

- Miao, F.; Holmes, W.; Huang, R.; Zhang, H. AI and Education. Guidance for Policy Makers; UNESCO: Paris, France, 2021.

- Lui, B.L.; Morales, D.; Chinchilla, J.F.R.; Sabzalieva, E.; Valentini, A.; Vieira, D.; Yerovi, C. Harnessing the Era of Artificial Intelligence in Higher Education. A Primer for Higher Education Stakeholders; UNESCO: Paris, France, 2023.

- Pugliese, L. Adaptive Learning Systems: Surviving the Storm. Educ. Rev. 2016, 10, 18–32.

- Holmes, W.; Tuomi, I. State of the art and practice in AI in education. Eur. J. Educ. 2022, 57, 542–570.

- Becker, S.A.; Brown, M.; Dahlstrom, E.; Davis, A.; DePaul, K.; Diaz, V.; Pomerantz, J. NMC Horizon Report: 2018 Higher Education Edition; EDUCAUSE: Louisville, CO, USA, 2018.

- Guàrdia, L.; Maina, M.; Sangrà, A. MOOC Design Principles. A Pedagogical Approach from the Learner's Perspective. Elearn. Pap. 2013, 33, 1–6.

- Delgado-Algarra, E.J.; Estepa-Giménez, J. Ciudadanía y dimensiones de la memoria en el aprendizaje de la historia. Análisis de un caso de educación secundaria. Vínc. Hist. 2018, 7, 366–388.

- Estepa-Giménez, J.; Ferreras-Listán, M.; Cruz, I.; Morón-Monge, H. Análisis del patrimonio en los libros de texto. Obstáculos, dificultades y propuestas. Rev. Educ. 2011, 335, 573–588.

- Cuenca, J.M.; Estepa-Giménez, J.; Martín Cáceres, M.J. Patrimonio, educación, identidad y ciudadanía. Profesorado y libros de texto en la enseñanza obligatoria. Rev. Educ. 2017, 375, 136–159.

- The Council of the European Union. Council Recommendation of 22 May 2018 on Key Competences for Lifelong Learning (Text with EEA Relevance) (2018/C 189/01). 2018. Available online: https://shorturl.at/bqBOT (accessed on 15 April 2023).

- Aksela, M.K.; Wu, X.; Halonen, J. Relevancy of the Massive Open Online Course (MOOC) about Sustainable Energy for Adolescents. Educ. Sci. 2016, 6, 40.

- Terras, M.M.; Ramsay, J. Massive Open Online Courses (MOOCs): Insights and Challenges from a Psychological Perspective. Br. J. Educ. Technol. 2015, 46, 472–487.

- Najafi, H.; Rolheiser, C.; Håklev, S.; Harrison, L. Variations in Pedagogical Design of Massive Open Online Courses (MOOCs) across Disciplines. Teach. Learn. Inq. Issotl J. 2017, 5, 47.

- Krause, K.L.D. Challenging perspectives on learning and teaching in the disciplines: The academic voice. Stud. High. Educ. 2014, 39, 2–19.

- Wang, M. Designing online courses that effectively engage learners from diverse cultural backgrounds. Br. J. Educ. Technol. 2007, 38, 294–311.

- Young, J.R. What professors can learn from 'hard core' MOOC students? Chron. High. Educ. 2013, 59, A4.

- Plangsorn, B.; Na-Songkhla, J.; Luetkehans, L.M. Undergraduate students' opinions with regard to ubiquitous MOOC for enhancing cross-cultural competence. World J. Educ. Technol. Curr. Issues 2016, 8, 210–217.

- Jessop, T.; Maleckar, B. The influence of disciplinary assessment patterns on student learning: A comparative study. Stud. High. Educ. 2016, 41, 696–711.

- Gómez-Hurtado, I.; García Prieto, F.J.; Delgado-García, M. Uso de la red social Facebook como herramienta de aprendizaje en estudiantes universitarios: Estudio integrado sobre percepciones. Perspect. Educ. 2018, 57, 99–119.

- Siau, K.; Wang, W. Artificial Intelligence (AI) Ethics: Ethics of AI and Ethical AI. J. Datab. Manag. 2020, 31, 74–87.

- Vázquez Bernal, B.; Aguaded, S. La percepción de los alumnos de Secundaria de la contaminación: Comparación entre un ambiente rural y otro urbano. In Reflexiones Sobre la Didáctica de las Ciencias Experimentales; Martín Sánchez, M.T., Morcillo Ortega, J.G., Eds.; Universidad Complutense: Madrid, Spain, 2001; pp. 517–525.

- Goel, G. Human Learning vs. Machine Learning. Towards Data Science. 2019. Available online: https://towardsdatascience.com/human-learning-vs-machine-learning-dfa8fe421560 (accessed on 15 April 2023).

- Kao, Y.-F.; Venkatachalam, R. Human and Machine Learning. Comput. Econ. 2021, 57, 889–909.

- Ethics and Governance of Artificial Intelligence. AI and Inclusion Project. Available online: https://www.media.mit.edu/projects/ai-and-inclusion/overview/ (accessed on 15 August 2023).

- Salleb-Aouissi, A. AI and the Building of a More Inclusive Society. In Proceedings of the Global Symposium Artificial Intelligence & Inclusion, Rio de Janeiro, Brazil, 8–10 November 2017.

- Kuo, T.M.; Tsai, C.C.; Wang, J.C. Linking Web-Based Learning Self-Efficacy and Learning Engagement in MOOCs: The Role of Online Academic Hardiness. Internet High. Educ. 2021, 51, 100819.

- Barak, M.; Watted, A.; Haick, H. Motivation to learn in massive open online courses: Examining aspects of language and social engagement. Comput. Educ. 2016, 94, 49–60.

- Zhu, M.; Sari, A.; Lee, M.M. A systematic review of research methods and topics of the empirical MOOC literature (2014–2016). Internet High. Educ. 2018, 37, 31–39.

- European Parliament. EU AI Act: First Regulation on Artificial Intelligence. 2023. Available online: https://www.europarl.europa.eu/news/en/headlines/society/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (accessed on 11 November 2023).

- Delgado-Algarra, E.J.; Lorca-Marín, A.A. ¿Cómo Debe ser un Maestro de Ciencias en Tiempos de ChatGPT? 2023. Available online: https://theconversation.com/como-debe-ser-un-maestro-de-ciencias-en-tiempos-de-chatgpt-209825 (accessed on 30 September 2023).

- Hoaglin, D.C.; Mosteller, F.; Tukey, J.W. Exploring Data Tables, Trends, and Shapes; Wiley: New York, NY, USA, 1985.

- Rosenblatt, K. New bot ChatGPT Will Force Colleges to Get Creative to Prevent Cheating, Experts Say. NBC News, 8 December 2022. Available online: https://www.nbcnews.com/tech/chatgpt-can-generate-essay-generate-rcna60362 (accessed on 15 April 2023).

- González, A.; Carabantes, D. MOOC: Medición de satisfacción, fidelización, éxito y certificación de la educación digital. RIED Rev. Iberoam. Educ. Distancia 2017, 20, 105–123.

- Siemens, G. Teaching in Social and Technological Networks. 2010. Available online: https://www.slideshare.net/gsiemens/tcconline (accessed on 17 August 2023).

- Moya, M. La Educación encierra un tesoro: ¿Los MOOCs/COMA integran los Pilares de la Educación en su modelo de aprendizaje online? In SCOPEO INFORME 2. MOOC: Estado de la Situación Actual, Posibilidades, Retos y Futuro; Academic Press: Cambridge, MA, USA, 2013; pp. 157–172.

- Arntz, M.T.; Gregory, T.; Zierahn, U. The Risk of Automation for Jobs in OECD Countries: A Comparative Analysis; OECD Social, Employment and Migration Working Papers; OECD Publishing: Paris, France, 2016.

- European Commission. Ethics Guidelines for Trustworthy AI. Available online: https://ec.europa.eu/digital-single-market/en/news/ethics-guidelines-trustworthy-ai (accessed on 15 August 2023).

| Subcategory | Indicator |
|---|---|
| Initial Basic Information | DID.BAS.01. Language; DID.BAS.02. Timing; DID.BAS.03. List of modules; DID.BAS.04. Number of modules; DID.BAS.05. Difficulty level; DID.BAS.06. Previous knowledge required; DID.BAS.07. Teaching team; DID.BAS.08. Guide with general information; DID.BAS.09. Contact |
| Objectives and Competencies | DID.OYC.01. Objectives; DID.OYC.02. Competencies; DID.OYC.03. Communication competence; DID.OYC.04. Mathematical competence and basic competences in science and technology; DID.OYC.05. Digital competence; DID.OYC.06. Learning to learn competence; DID.OYC.07. Social and civic competence; DID.OYC.08. Sense of initiative and entrepreneurial competence; DID.OYC.09. Cultural awareness and expression competence |
| Content | DID.CON.01. Integration of knowledge, skills, and attitudes |
| Methodology | DID.MET.01. Description of teaching activity; DID.MET.02. Details of student workload; DID.MET.03. Participation in the activities; DID.MET.04. Type of learning; DID.MET.05. Level of complexity |
| Resources | DID.REC.01. Readings; DID.REC.02. Videos; DID.REC.03. Quizzes; DID.REC.04. Social networks; DID.REC.05. Websites; DID.REC.06. Other applications and resources |
| Schedule | DID.CRO.01. Timeline for content development; DID.CRO.02. Key dates and deadlines |
| Evaluation | DID.EVA.01. Evaluation criteria; DID.EVA.02. When to evaluate; DID.EVA.03. How to evaluate; DID.EVA.04. Self-assessment; DID.EVA.05. Case analysis; DID.EVA.06. Forum participation; DID.EVA.07. Work preparation (essay, report, etc.) |
| Bibliography | DID.BIB.01. Bibliography |

| Subcategory | Indicator |
|---|---|
| Accessibility | TEC.ACC.01. Plugins; TEC.ACC.02. Content access |
| Navigation | TEC.NAV.01. Design; TEC.NAV.02. Ease of browsing; TEC.NAV.03. Browsing support elements; TEC.NAV.04. Toolbar with links; TEC.NAV.05. Visible links and hypertexts; TEC.NAV.06. Help system for course development; TEC.NAV.07. Content search engine |
| Interactivity | TEC.INT.01. Facilities or tools for teacher–student interaction; TEC.INT.02. Facilities or tools for student–student interaction (cooperative work); TEC.INT.03. Allows interaction by private message; TEC.INT.04. Allows interaction by chat; TEC.INT.05. Allows interaction by videoconference; TEC.INT.06. Allows interaction through specific communication programs (Adobe Connect, Blackboard, etc.) |

| Subcategory | Indicator |
|---|---|
| Course Content | AI.CON.01. Algorithmic bias; AI.CON.02. Programming; AI.CON.03. Analysis; AI.CON.04. Machine learning; AI.CON.05. Deep learning; AI.CON.06. Human learning; AI.CON.07. Ethical questions in data use; AI.CON.08. Inclusivity; AI.CON.09. Education, teaching, and learning |
| Types | AI.TYP.01. Systems that think like humans; AI.TYP.02. Systems that act like humans; AI.TYP.03. Systems that think rationally; AI.TYP.04. Systems that act rationally |

| Indicator | Not present (f.) | Not present (%) | Implicit (f.) | Implicit (%) | Explicit (f.) | Explicit (%) |
|---|---|---|---|---|---|---|
| AI.CON.01. Algorithmic bias | 524 | 71.4 | 69 | 9.4 | 141 | 19.2 |
| AI.CON.02. Programming | 339 | 46.2 | 129 | 17.6 | 266 | 36.2 |
| AI.CON.03. Analysis | 274 | 37.3 | 62 | 8.4 | 398 | 54.2 |
| AI.CON.04. Machine learning | 119 | 16.2 | 106 | 14.4 | 509 | 69.3 |
| AI.CON.05. Deep learning | 284 | 38.7 | 253 | 34.5 | 197 | 26.8 |
| AI.CON.06. Human learning | 256 | 34.9 | 348 | 47.4 | 130 | 17.7 |
| AI.CON.07. Ethical questions in data use | 702 | 95.6 | 13 | 1.8 | 19 | 2.6 |
| AI.CON.08. Inclusivity | 696 | 94.8 | 9 | 1.2 | 29 | 4.0 |
| AI.CON.09. Education, teaching, and learning | 321 | 43.7 | 286 | 39.0 | 127 | 17.3 |
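Every row of the frequency table above sums to 734, which suggests that 734 courses were coded. A minimal sketch, assuming that total, can check a row's counts against its reported percentages and summarize how often each kind of content appears at all (implicitly or explicitly). Only a subset of rows is transcribed here, and the function names are illustrative.

```python
# Subset of rows transcribed from the table above:
# (not present, implicit, explicit) counts and the reported percentages.
REPORTED = {
    "AI.CON.01 Algorithmic bias": ((524, 69, 141), (71.4, 9.4, 19.2)),
    "AI.CON.04 Machine learning": ((119, 106, 509), (16.2, 14.4, 69.3)),
    "AI.CON.08 Inclusivity": ((696, 9, 29), (94.8, 1.2, 4.0)),
    "AI.CON.09 Education, teaching, and learning": ((321, 286, 127), (43.7, 39.0, 17.3)),
}

N_COURSES = 734  # assumption: inferred from every row summing to 734


def row_is_consistent(counts, percents, n=N_COURSES):
    """True if counts sum to n and each percentage equals f / n rounded to 0.1."""
    if sum(counts) != n:
        return False
    return all(round(100 * f / n, 1) == p for f, p in zip(counts, percents))


def share_present(counts, n=N_COURSES):
    """Percentage of courses in which the content appears at all (implicit + explicit)."""
    _, implicit, explicit = counts
    return round(100 * (implicit + explicit) / n, 1)


for name, (counts, percents) in REPORTED.items():
    print(f"{name}: consistent={row_is_consistent(counts, percents)}, "
          f"present in {share_present(counts)}% of courses")
```

For example, machine learning appears in 83.8% of courses (106 + 509 of 734), whereas inclusivity appears in only 5.2% (9 + 29 of 734).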

| Indicator | Not present (f.) | Not present (%) | Implicit (f.) | Implicit (%) | Explicit (f.) | Explicit (%) |
|---|---|---|---|---|---|---|
| AI.TYP.01. Systems that think like humans | 322 | 43.8 | 280 | 38.1 | 132 | 18.0 |
| AI.TYP.02. Systems that act like humans | 665 | 90.6 | 60 | 8.2 | 9 | 1.2 |
| AI.TYP.03. Systems that think rationally | 610 | 83.1 | 63 | 8.6 | 61 | 8.3 |
| AI.TYP.04. Systems that act rationally | 681 | 92.8 | 43 | 5.9 | 10 | 1.4 |

| Measure | Statistic | Value |
|---|---|---|
| Kaiser–Meyer–Olkin measure of sampling adequacy | | 0.693 |
| Bartlett's test of sphericity | Approximate chi-square | 1228.549 |
| | df | 45 |
| | Sig. | 0.000 |
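The two adequacy checks in the table above can be computed from a correlation matrix. Bartlett's statistic is $\chi^2 = -\left(n - 1 - \frac{2p + 5}{6}\right)\ln|R|$ with $p(p-1)/2$ degrees of freedom, so $p = 10$ indicators gives the reported 45. The KMO measure compares squared correlations with squared partial correlations. The sketch below assumes Pearson correlations over the 10 coded indicators and runs on synthetic data, not on the study's codings. The function names are illustrative.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test of sphericity for an (n_obs, p_vars) data matrix.

    Returns the chi-square statistic and its degrees of freedom p(p - 1) / 2.
    """
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)  # numerically stable ln|R|
    chi_square = -(n - 1 - (2 * p + 5) / 6) * logdet
    return float(chi_square), p * (p - 1) // 2


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations derived from the inverse correlation matrix.
    d = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(d, d)
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    a2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + a2))


# Synthetic stand-in: 734 observations of 10 indicators driven by 2 latent factors.
rng = np.random.default_rng(42)
data = rng.normal(size=(734, 2)) @ rng.normal(size=(2, 10)) + rng.normal(size=(734, 10))

chi_square, df = bartlett_sphericity(data)
print(f"chi-square = {chi_square:.1f}, df = {df}, KMO = {kmo(data):.3f}")
```

If SciPy is available, `scipy.stats.chi2.sf(chi_square, df)` gives the p-value. SPSS reports three decimals, so a "Sig." of 0.000 means p < 0.0005.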

| Component | Initial eigenvalues: Total | Initial: % of variance | Initial: Cumulative % | Extraction sums of squared loadings: Total | Extraction: % of variance | Extraction: Cumulative % |
|---|---|---|---|---|---|---|
| 1 | 2.608 | 26.081 | 26.081 | 2.608 | 26.081 | 26.081 |
| 2 | 1.707 | 17.073 | 43.154 | 1.707 | 17.073 | 43.154 |
| 3 | 1.066 | 10.655 | 53.810 | 1.066 | 10.655 | 53.810 |
| 4 | 1.033 | 10.330 | 64.140 | 1.033 | 10.330 | 64.140 |
| 5 | 0.782 | 7.820 | 71.960 | | | |
| 6 | 0.753 | 7.531 | 79.491 | | | |
| 7 | 0.647 | 6.473 | 85.964 | | | |
| 8 | 0.563 | 5.635 | 91.599 | | | |
| 9 | 0.441 | 4.409 | 96.007 | | | |
| 10 | 0.399 | 3.993 | 100.000 | | | |
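The percentages in the total variance table follow from the eigenvalues. With 10 standardized indicators the total variance is 10, so each component explains its eigenvalue divided by 10. The four extracted components are exactly those with an eigenvalue above 1, which is consistent with the Kaiser criterion. That retention rule is an assumption here, because this section does not state it. A minimal sketch using the reported eigenvalues:

```python
# Initial eigenvalues as reported in the total variance table above.
EIGENVALUES = [2.608, 1.707, 1.066, 1.033, 0.782, 0.753, 0.647, 0.563, 0.441, 0.399]


def variance_table(eigenvalues):
    """Return (eigenvalue, % of variance, cumulative %) for each component.

    With standardized variables the total variance equals the number of
    variables p, so each component explains eigenvalue / p of it.
    """
    p = len(eigenvalues)
    rows, cumulative = [], 0.0
    for ev in eigenvalues:
        pct = 100 * ev / p
        cumulative += pct
        rows.append((ev, pct, cumulative))
    return rows


def kaiser_retained(eigenvalues, threshold=1.0):
    """Number of components whose eigenvalue exceeds the Kaiser threshold."""
    return sum(1 for ev in eigenvalues if ev > threshold)


rows = variance_table(EIGENVALUES)
for i, (ev, pct, cum) in enumerate(rows, start=1):
    print(f"{i:>2}  {ev:.3f}  {pct:6.2f}  {cum:6.2f}")
print("components retained:", kaiser_retained(EIGENVALUES))
```

Because the eigenvalues are printed to three decimals, the recomputed percentages (for example, 26.08 for component 1) match the table only to within rounding. The cumulative share after four components comes to 64.14%.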

| Indicator | Component loading |
|---|---|
| AI.CON.04. Machine learning | 0.671 |
| AI.CON.05. Deep learning | 0.827 |
| AI.CON.09. Education, teaching, and learning | 0.595 |
| AI.TYP.01. Systems that think like humans | 0.669 |
| AI.CON.07. Ethical questions in data use | 0.609 |
| AI.TYP.02. Systems that act like humans | 0.735 |
| AI.TYP.03. Systems that think rationally | 0.818 |
| AI.CON.06. Human learning | 0.507 |
| AI.CON.08. Inclusivity | 0.872 |
| AI.CON.01. Algorithmic bias | 0.963 |
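Component loadings like those above are correlations between each indicator and a component. The sketch below assumes unrotated principal-component extraction from a Pearson correlation matrix. The rotation method, if any, is not stated in this section. It shows how such loadings are obtained and how each indicator can be assigned to the component on which it loads most strongly. The data are synthetic, and the function names are illustrative.

```python
import numpy as np


def pca_loadings(corr: np.ndarray, n_components: int) -> np.ndarray:
    """Unrotated principal-component loadings from a correlation matrix.

    Each loading is an eigenvector entry scaled by sqrt(eigenvalue), so it
    equals the correlation between an indicator and a component.
    """
    eigenvalues, eigenvectors = np.linalg.eigh(corr)  # ascending order
    order = np.argsort(eigenvalues)[::-1][:n_components]
    return eigenvectors[:, order] * np.sqrt(eigenvalues[order])


def dominant_component(loadings: np.ndarray) -> np.ndarray:
    """1-based index of the component with the largest |loading| per indicator."""
    return np.argmax(np.abs(loadings), axis=1) + 1


# Synthetic stand-in for 734 courses coded on 10 indicators.
rng = np.random.default_rng(7)
data = rng.normal(size=(734, 3)) @ rng.normal(size=(3, 10)) + rng.normal(size=(734, 10))
corr = np.corrcoef(data, rowvar=False)

loadings = pca_loadings(corr, n_components=4)
print(np.round(loadings, 3))
print(dominant_component(loadings))
```

A useful property to check is that the squared loadings in each column sum to that component's eigenvalue. These sums are the "Extraction sums of squared loadings" in the variance table. A rotation such as varimax would redistribute variance across the retained components without changing each indicator's communality.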

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Delgado Algarra, E.J.; Bernal Bravo, C.; Morales Cevallos, M.B.; López Meneses, E. Pedagogical and Technical Analyses of Massive Open Online Courses on Artificial Intelligence. Appl. Sci. 2024, 14, 1051. https://doi.org/10.3390/app14031051

Delgado Algarra EJ, Bernal Bravo C, Morales Cevallos MB, López Meneses E. Pedagogical and Technical Analyses of Massive Open Online Courses on Artificial Intelligence. Applied Sciences. 2024; 14(3):1051. https://doi.org/10.3390/app14031051

Chicago/Turabian Style: Delgado Algarra, Emilio José, César Bernal Bravo, María Belén Morales Cevallos, and Eloy López Meneses. 2024. "Pedagogical and Technical Analyses of Massive Open Online Courses on Artificial Intelligence" Applied Sciences 14, no. 3: 1051. https://doi.org/10.3390/app14031051

APA Style: Delgado Algarra, E. J., Bernal Bravo, C., Morales Cevallos, M. B., & López Meneses, E. (2024). Pedagogical and Technical Analyses of Massive Open Online Courses on Artificial Intelligence. Applied Sciences, 14(3), 1051. https://doi.org/10.3390/app14031051