Development of a Virtual Reality-Based Environment for Telerehabilitation

Abstract

1. Introduction

2. Materials and Methods

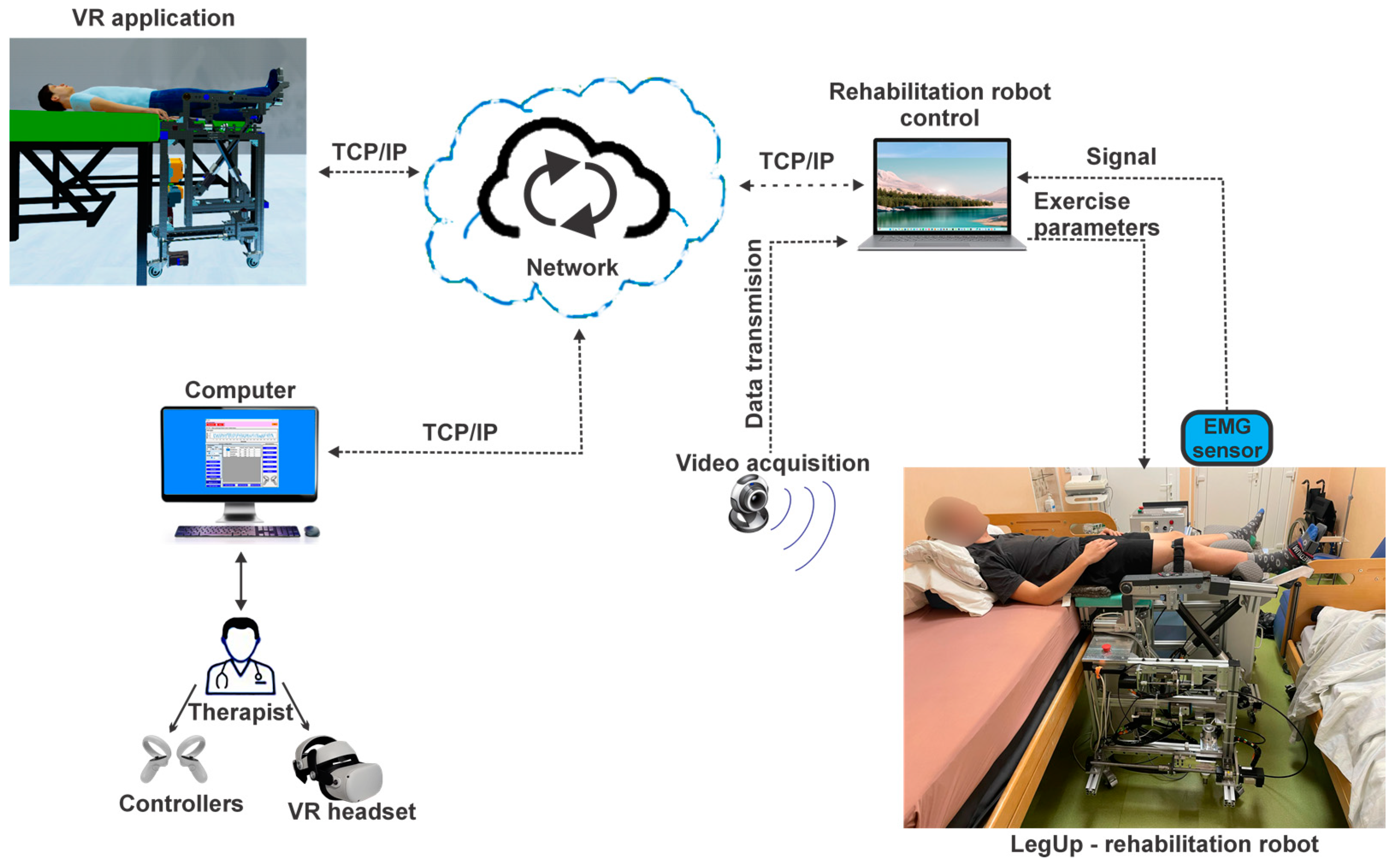

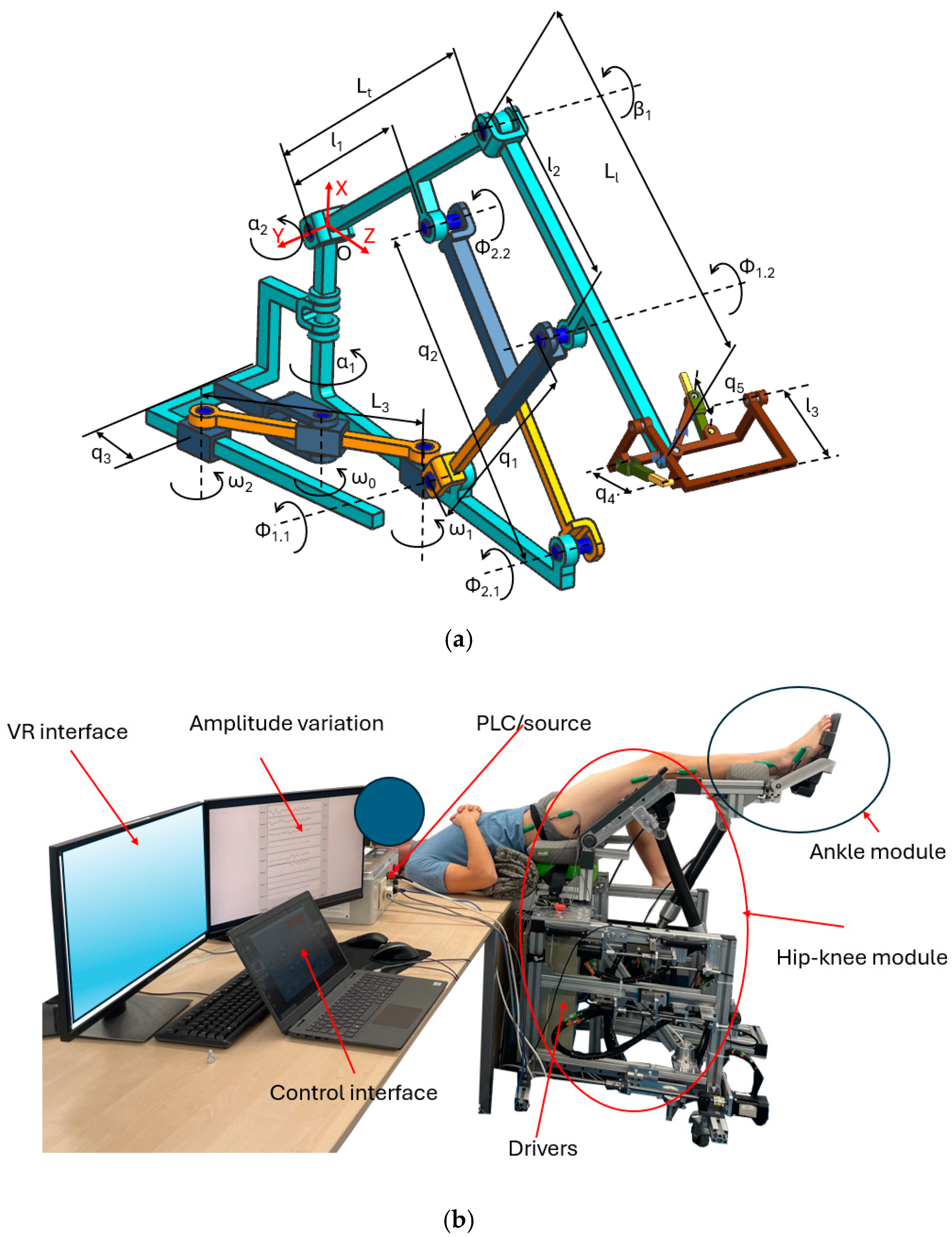

2.1. The Telerehabilitation Robotic System

2.2. The Main User Console

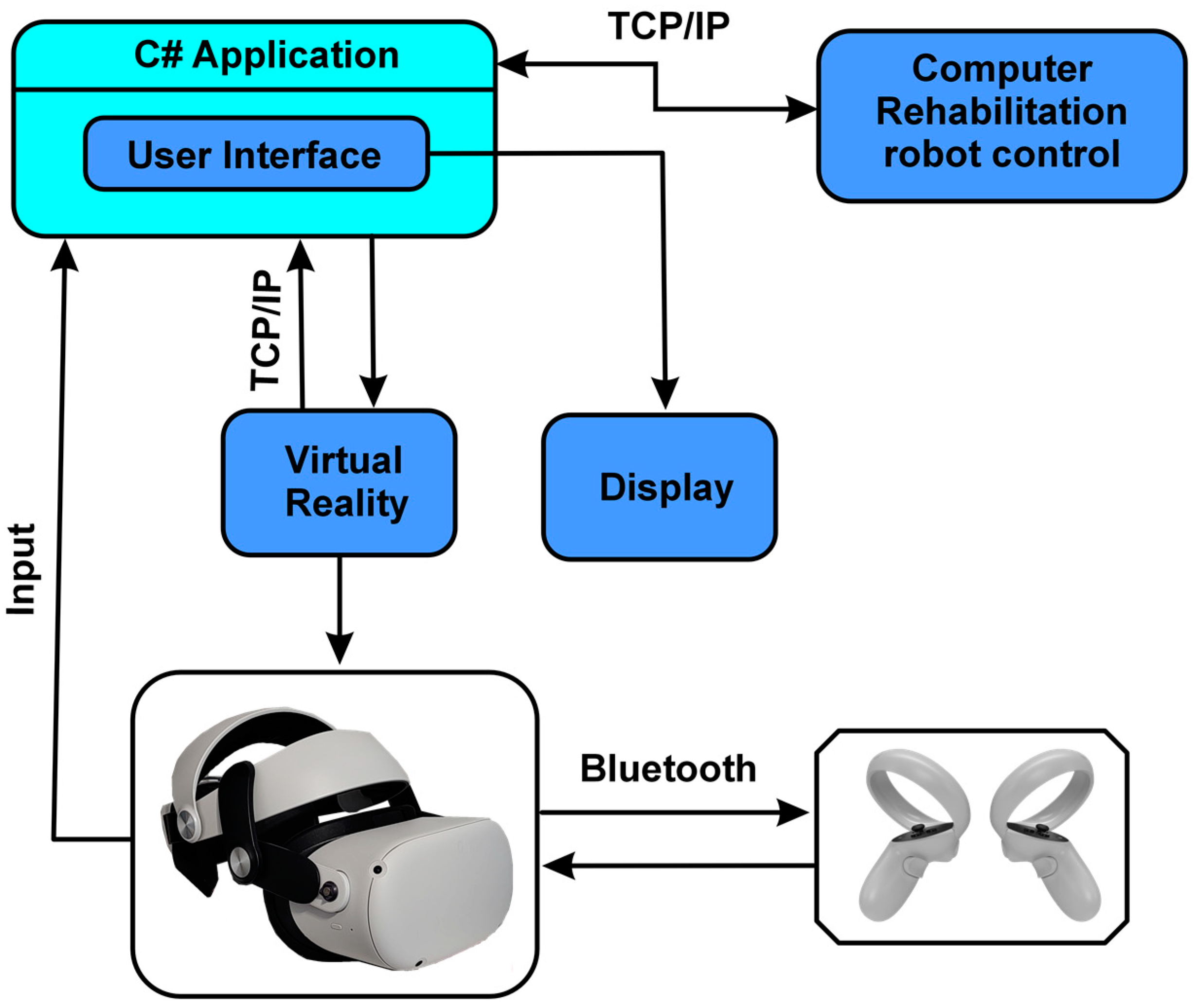

2.2.1. The Multimodal Control Architecture

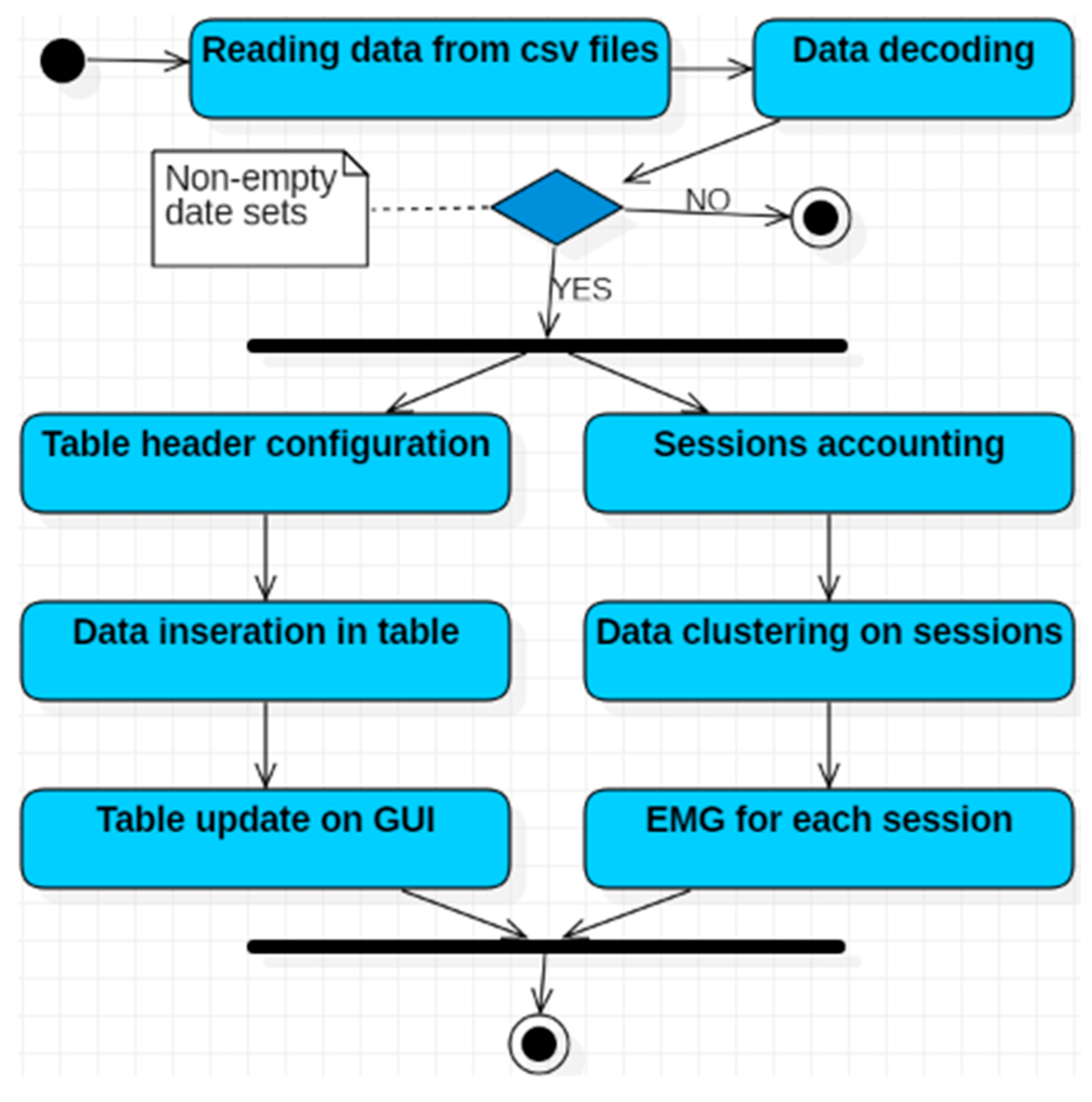

2.2.2. Software Application Development

Software Analysis

- Eighteen use cases detailing the functionalities of the software application: twelve associated with the application implemented in the C# programming language and six related to the VR application;

- Three actors: the human user (physical therapist), the controllers, and the control software of the robotic rehabilitation structure;

- Eleven association relationships between actors and use cases;

- Three dependency relationships between use cases.

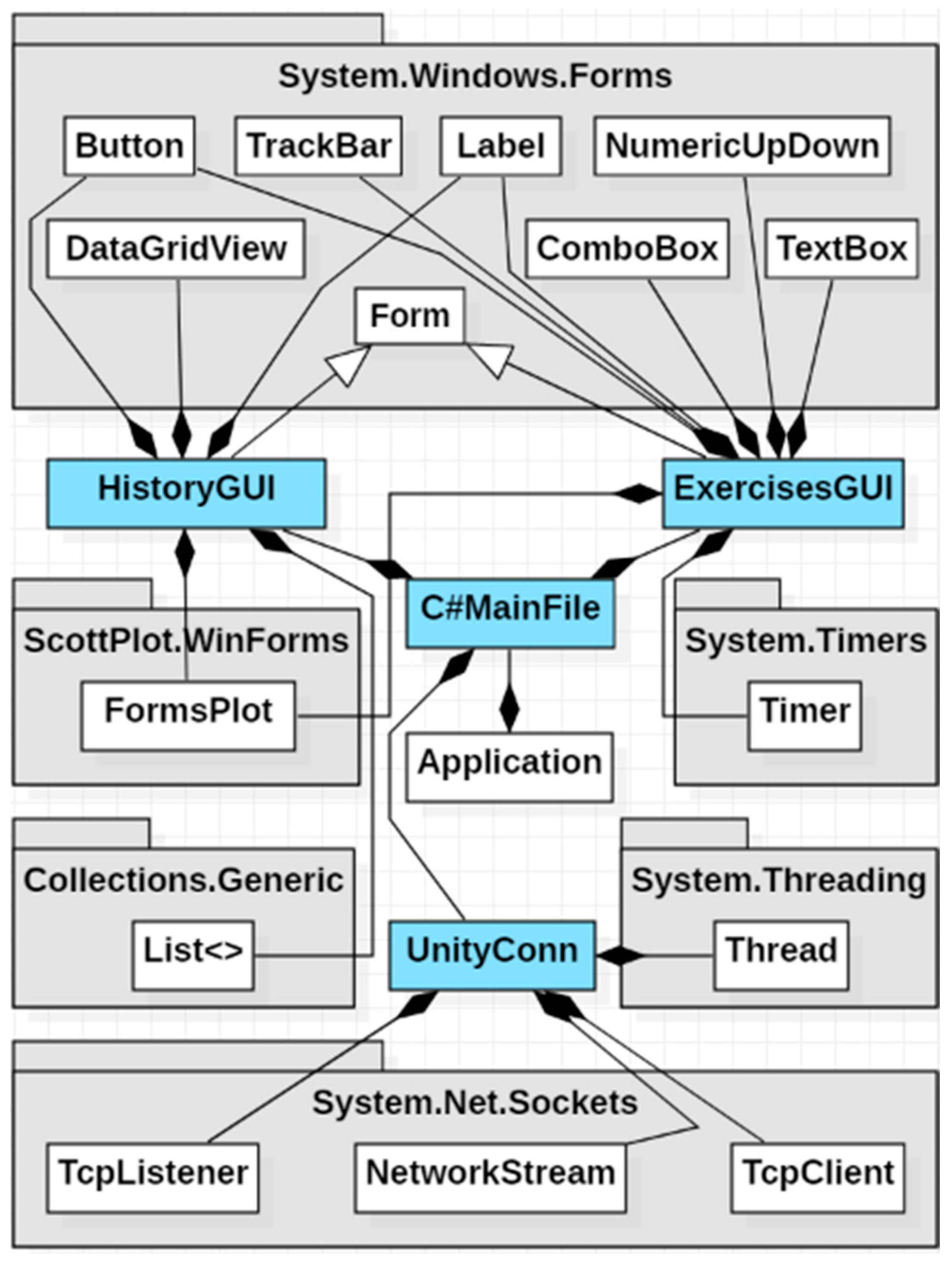

- The ExercisesGUI class allows the therapist to interact with the application’s GUI and is derived from the Form class in the System.Windows.Forms namespace;

- The HistoryGUI class enables the therapist to monitor the patient’s progress and is also derived from the Form class in the System.Windows.Forms namespace;

- The RobotGUI class facilitates interaction with the robotic structure control software and is derived from the Form class in the System.Windows.Forms namespace;

- The RobotConn class establishes connections between the C# application and the robotic structure control software;

- The UnityConn class manages connections between the C# graphical interface and the VR component implemented in Unity;

- The C#MainFile class is the core class of the C# application; as indicated by the composition relationships in the diagram, it is composed of objects of the ExercisesGUI, HistoryGUI, RobotGUI, RobotConn, and UnityConn classes.
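The composition relationships in the class diagram can be illustrated with a short sketch. This is a hedged Python illustration, not the authors' C# code: `MainFile` stands in for C#MainFile, the GUI classes are empty stand-ins for the Form-derived windows, and the `connect` method and `host` field are hypothetical.

```python
from dataclasses import dataclass, field


class ExercisesGUI:
    """Stand-in for the Form-derived exercise window."""


class HistoryGUI:
    """Stand-in for the Form-derived history window."""


class RobotGUI:
    """Stand-in for the Form-derived robot window."""


@dataclass
class RobotConn:
    """Hypothetical link to the robot control software."""
    host: str = "127.0.0.1"
    connected: bool = False

    def connect(self) -> None:
        self.connected = True  # placeholder for opening a real connection


@dataclass
class UnityConn:
    """Hypothetical link to the Unity VR application."""
    host: str = "127.0.0.1"
    connected: bool = False

    def connect(self) -> None:
        self.connected = True  # placeholder for opening a real connection


@dataclass
class MainFile:
    # Composition: each main object creates and owns its own parts,
    # so the parts are never shared between two MainFile instances.
    exercises_gui: ExercisesGUI = field(default_factory=ExercisesGUI)
    history_gui: HistoryGUI = field(default_factory=HistoryGUI)
    robot_gui: RobotGUI = field(default_factory=RobotGUI)
    robot_conn: RobotConn = field(default_factory=RobotConn)
    unity_conn: UnityConn = field(default_factory=UnityConn)


app = MainFile()
app.unity_conn.connect()
print(app.unity_conn.connected)
```

Using `default_factory` rather than a shared default instance is what makes this composition rather than aggregation: the parts are created with, and belong to, a single owner.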

User Interface

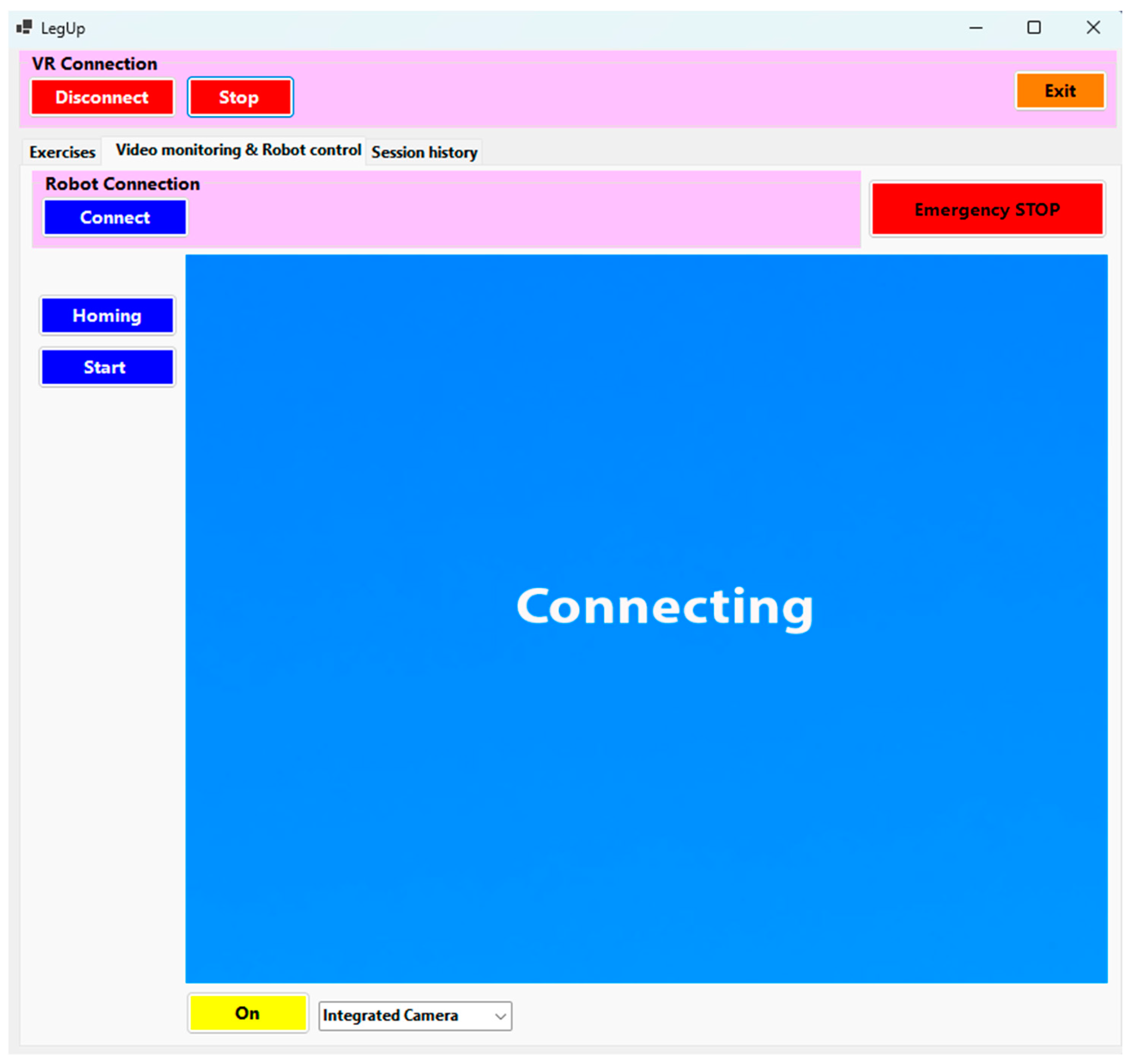

- To initiate the connection between the user interface and the VR application using the TCP/IP protocol, the therapist must press the “Connect” button, which has a blue background. Once the connection is established, the button’s background turns red, and the text changes to “Disconnect” (Figure 8 (1)).

- Data transfer between the user interface and the VR application begins only after the “Start” button is pressed, at which point the button’s background color turns from blue to red, and the text changes from “Start” to “Stop” (Figure 8 (1)).
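The Connect/Disconnect and Start/Stop behavior described above can be sketched with a plain TCP client. This is a hedged Python illustration, not the application's C# code; the class name `VrLink`, the newline-delimited text message format, and the host/port values are assumptions, since the wire format between the interface and the VR application is not specified.

```python
import socket
from typing import Optional


class VrLink:
    """Hypothetical client mirroring the Connect/Disconnect and Start/Stop buttons."""

    def __init__(self, host: str, port: int) -> None:
        self.host = host
        self.port = port
        self.sock: Optional[socket.socket] = None
        self.streaming = False

    def toggle_connect(self) -> str:
        """Open or close the TCP connection; return the new button label."""
        if self.sock is None:
            self.sock = socket.create_connection((self.host, self.port), timeout=2.0)
            return "Disconnect"
        self.sock.close()
        self.sock = None
        self.streaming = False  # closing the link also stops data transfer
        return "Connect"

    def toggle_start(self) -> str:
        """Enable or disable data transfer; return the new button label."""
        if self.sock is None:
            raise RuntimeError("connect before starting data transfer")
        self.streaming = not self.streaming
        return "Stop" if self.streaming else "Start"

    def send(self, message: str) -> bool:
        """Send one newline-terminated message; nothing is sent before Start."""
        if self.sock is None or not self.streaming:
            return False
        self.sock.sendall((message + "\n").encode("utf-8"))
        return True
```

TCP preserves message order, which matters when successive values are streamed to the VR scene; because TCP is a byte stream, some framing (here, one newline per message) is needed so the receiver can split it back into messages.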

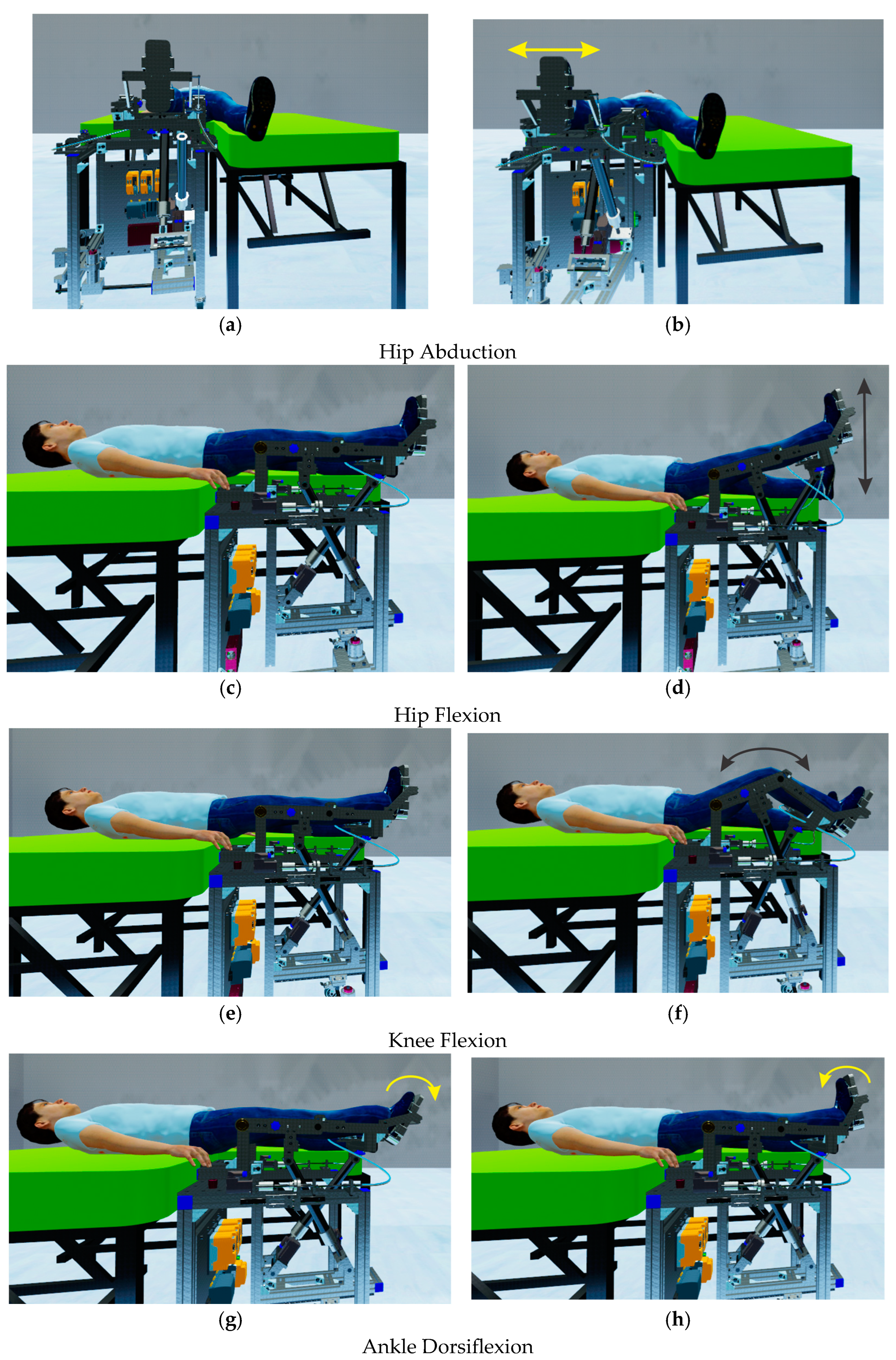

- To command the parallel robot, the therapist builds a list of rehabilitation exercises. The parallel robotic system can perform five types of rehabilitation exercises (HipAbduction, Dorsiflexion, Inversion, HipFlexion, KneeFlexion), and for each exercise the therapist can set the amplitude, speed, and number of repetitions. After configuring these parameters, the therapist selects the desired type of exercise by pressing the corresponding button (Figure 8 (2)). The selected exercise is then added to a list (Figure 8 (3)) together with its position in the sequence, execution amplitude, speed, and number of repetitions. Once the list is complete, the therapist can save it for future use or load a previously saved list (Figure 8 (4)).

- To initiate the rehabilitation exercises, the therapist must press the “Start exercises” button (Figure 8 (5)). At this point, the robotic rehabilitation structure begins performing the selected rehabilitation exercises for the patient, and the elapsed time for each individual exercise is also displayed.
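The exercise list, its save/load function, and the sequential execution with per-exercise elapsed time can be modeled with a small data structure. The sketch below is hedged and hypothetical: the field names, the JSON file format, the units, and the `execute` callback standing in for the actual robot command are assumptions, as the article does not describe how the list is stored or executed.

```python
import json
import time
from dataclasses import asdict, dataclass
from pathlib import Path
from typing import Callable

# The five exercise types named in the text.
EXERCISE_TYPES = frozenset(
    {"HipAbduction", "Dorsiflexion", "Inversion", "HipFlexion", "KneeFlexion"}
)


@dataclass
class Exercise:
    order: int
    kind: str
    amplitude: float  # assumed unit: degrees
    speed: float      # assumed unit: degrees per second
    repetitions: int

    def __post_init__(self) -> None:
        if self.kind not in EXERCISE_TYPES:
            raise ValueError(f"unknown exercise type: {self.kind}")


def save_plan(plan: list[Exercise], path: Path) -> None:
    """Write the exercise list to a JSON file for later reuse."""
    path.write_text(json.dumps([asdict(e) for e in plan], indent=2))


def load_plan(path: Path) -> list[Exercise]:
    """Read an exercise list previously written by save_plan."""
    return [Exercise(**item) for item in json.loads(path.read_text())]


def run_plan(plan: list[Exercise], execute: Callable[[Exercise], None]) -> list[float]:
    """Run exercises in list order and return the elapsed time of each one."""
    elapsed = []
    for exercise in sorted(plan, key=lambda e: e.order):
        start = time.monotonic()
        execute(exercise)  # hypothetical call that blocks until the robot finishes
        elapsed.append(time.monotonic() - start)
    return elapsed
```

`time.monotonic()` is used instead of wall-clock time so that the displayed durations are not affected by system clock adjustments during a session.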

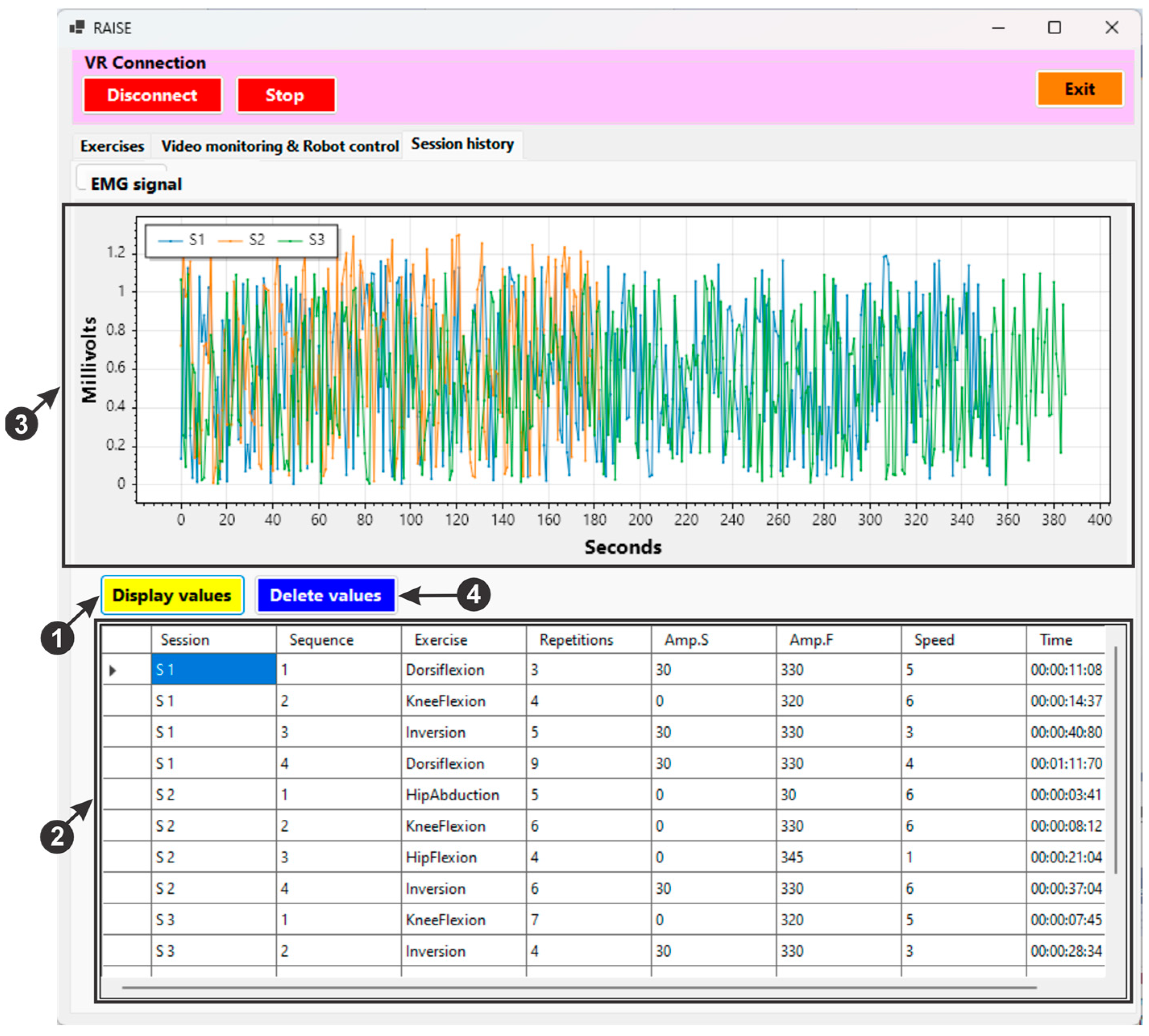

- An EMG (electromyography) sensor is used to monitor the patient’s muscle activity during the rehabilitation exercises. The sensor measures the electrical signals generated by the muscles when they are activated (Figure 8 (6)). Increased muscle activity can indicate improvements in muscle strength and function.

- Using two controllers (Figure 8 (7)), the therapist can demonstrate to the patient the types of exercises that the parallel robotic rehabilitation system can perform.

- To close the user interface, the “Exit” button (Figure 8 (8)) must be pressed.

- Connect button: establishes a connection between the C# application and the control computer of the experimental rehabilitation robot.

- Homing button: initializes the servomotors of the experimental robotic structure (homing).

- Start button: starts the execution of the rehabilitation exercises on the experimental robotic system.

- Emergency STOP: an emergency button that cuts the power supply to the experimental robotic system.

- To delete the session data from the file, the “Delete values” button (Figure 10 (4)) must be pressed.
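A common way to turn a raw EMG signal into a measure of muscle activity is a moving root-mean-square (RMS) envelope computed after removing the signal offset. The article does not state which processing is applied to the sensor output, so the Python sketch below is an assumption: it processes a recorded block offline (the offset is the mean of the whole block), and the window length and sample values are illustrative only.

```python
import math
from collections import deque


def rms_envelope(samples: list[float], window: int) -> list[float]:
    """Moving RMS of a mean-removed signal over the last `window` samples."""
    if window <= 0:
        raise ValueError("window must be positive")
    if not samples:
        return []
    offset = sum(samples) / len(samples)  # remove the DC offset of the analog output
    squares = deque()
    sum_sq = 0.0
    envelope = []
    for x in samples:
        v = (x - offset) ** 2
        squares.append(v)
        sum_sq += v
        if len(squares) > window:
            sum_sq -= squares.popleft()  # drop the sample leaving the window
        # max() guards against tiny negative values from floating-point rounding
        envelope.append(math.sqrt(max(sum_sq, 0.0) / len(squares)))
    return envelope
```

A running sum keeps each update constant-time regardless of the window length. Raw EMG amplitudes depend strongly on electrode placement, so comparisons between sessions are only meaningful with consistent sensor positioning.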
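The four robot commands (Connect, Homing, Start, Emergency STOP) can be viewed as a small state machine. The Python sketch below assumes, as a hypothetical safety rule not stated in the article, that exercises may only start after the connection is made and homing has completed, and that the emergency stop is accepted in any state; the class and state names are illustrative.

```python
from enum import Enum, auto


class RobotState(Enum):
    DISCONNECTED = auto()
    CONNECTED = auto()
    HOMED = auto()
    RUNNING = auto()
    EMERGENCY_STOP = auto()


# Allowed transitions for each button (assumed ordering, not from the article).
TRANSITIONS = {
    "Connect": {RobotState.DISCONNECTED: RobotState.CONNECTED},
    "Homing": {RobotState.CONNECTED: RobotState.HOMED},
    "Start": {RobotState.HOMED: RobotState.RUNNING},
}


class RobotPanel:
    """Hypothetical model of the robot control buttons."""

    def __init__(self) -> None:
        self.state = RobotState.DISCONNECTED

    def press(self, button: str) -> RobotState:
        if button == "Emergency STOP":
            # Accepted in any state; the physical button cuts the power supply,
            # here only the resulting state is recorded.
            self.state = RobotState.EMERGENCY_STOP
            return self.state
        allowed = TRANSITIONS.get(button, {})
        if self.state not in allowed:
            raise RuntimeError(f"{button!r} not allowed in state {self.state.name}")
        self.state = allowed[self.state]
        return self.state
```

Encoding the allowed transitions as a table makes an out-of-order command (for example, Start before Homing) fail explicitly instead of being silently ignored.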

2.2.3. Using the VR Environment for the LegUp Robotic System

- To handle complex graphics and rendering tasks, Unity provides the Universal Render Pipeline (URP) and the High Definition Render Pipeline (HDRP), either of which can be used to produce high-quality images in VR.

- Realistic physical simulation and collision detection, which are essential in interactive VR environments, are handled by a physics engine; Unity’s built-in 3D physics is based on NVIDIA PhysX.

- The A* (A-star) algorithm is frequently used for pathfinding in 3D spaces, enabling characters or objects to navigate the VR environment.

- The implementation of spatial audio algorithms ensures that sound sources are perceived as coming from precise locations in 3D space, thus enhancing immersion.
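As an illustration of the A* algorithm mentioned above, the sketch below finds a shortest path on a 2D occupancy grid with 4-connected moves and a Manhattan-distance heuristic. It is a generic textbook version written in Python, not Unity’s navigation system; a 3D scene would typically use a 3D grid or a navigation mesh instead.

```python
import heapq
from typing import Optional

Cell = tuple[int, int]


def a_star(grid: list[str], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Shortest 4-connected path on a grid where '#' marks an obstacle."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:
        # Manhattan distance never overestimates with unit-cost moves (admissible).
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    g = {start: 0}
    came_from: dict[Cell, Cell] = {}
    while open_heap:
        _, cost, cur = heapq.heappop(open_heap)
        if cur == goal:
            path = [cur]
            while cur in came_from:  # walk back to the start
                cur = came_from[cur]
                path.append(cur)
            return path[::-1]
        if cost > g[cur]:
            continue  # stale entry: a cheaper route to cur was already found
        r, c = cur
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    came_from[nxt] = cur
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable
```

The heuristic is what distinguishes A* from Dijkstra’s algorithm: it steers the search toward the goal, and as long as it never overestimates the remaining cost, the returned path is still optimal.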
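The basic idea behind spatial audio can be shown with a simplified model: the volume falls off with the distance between source and listener, and the left/right balance follows the source’s direction. The Python sketch below uses an inverse-distance rolloff and constant-power stereo panning in the horizontal plane; it is a deliberate simplification, not the algorithm used by Unity or any particular spatializer (real spatializers typically also apply head-related transfer functions).

```python
import math


def spatialize(listener: tuple[float, float], facing_deg: float,
               source: tuple[float, float], min_dist: float = 1.0) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a source in the horizontal plane.

    Angles follow the math convention: facing_deg = 0 looks along +x,
    and positive angles turn counterclockwise (toward the listener's left).
    """
    dx, dy = source[0] - listener[0], source[1] - listener[1]
    dist = math.hypot(dx, dy)
    # Inverse-distance rolloff, clamped so the gain never exceeds 1 near the listener.
    gain = min_dist / max(dist, min_dist)
    # Direction of the source relative to where the listener is facing.
    azimuth = math.atan2(dy, dx) - math.radians(facing_deg)
    pan = -math.sin(azimuth)  # -1 = fully left, +1 = fully right
    # Constant-power panning: left**2 + right**2 == gain**2 for every direction.
    theta = (pan + 1.0) * math.pi / 4.0
    return gain * math.cos(theta), gain * math.sin(theta)
```

Constant-power panning keeps the perceived loudness roughly steady as a source moves across the listener, whereas linear panning would produce an audible dip in the center.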

3. Results and Discussion

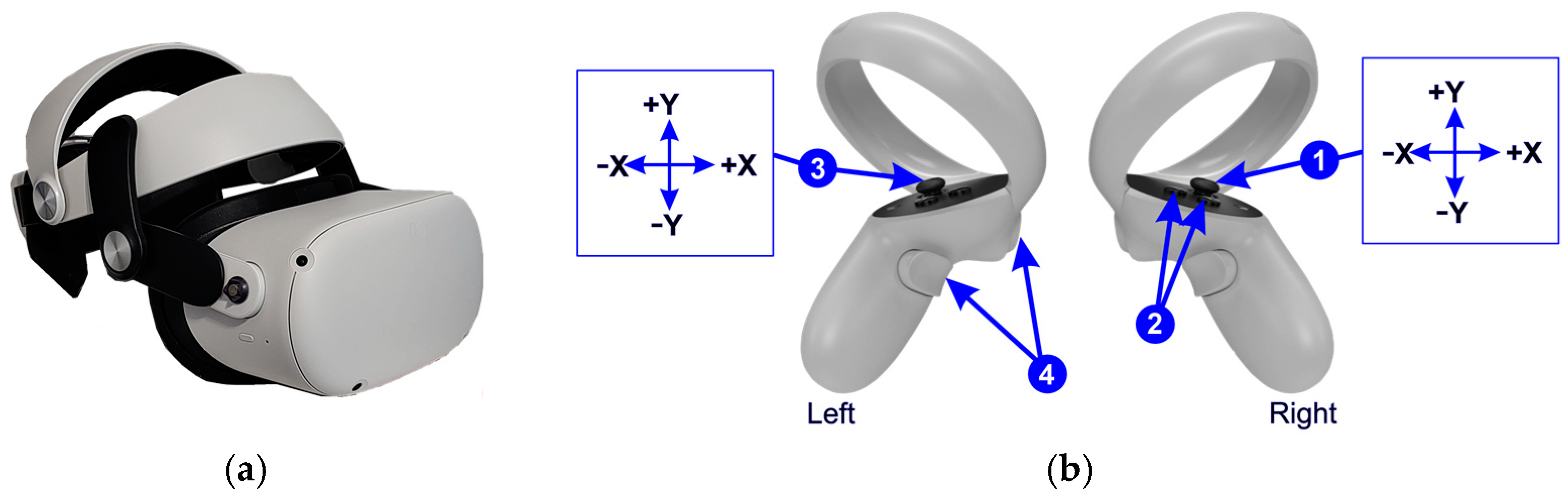

- HipAbduction—performed using the joystick (X axis) on the controller held in the right hand (Figure 11b (1));

- HipFlexion—performed using the joystick (Y axis) on the controller held in the right hand (Figure 11b (1));

- KneeFlexion—executed using two buttons located on the controller held in the right hand (Figure 11b (2)).

- Ankle Dorsiflexion—executed using the joystick (X axis) on the controller held in the left hand (Figure 11b (3));

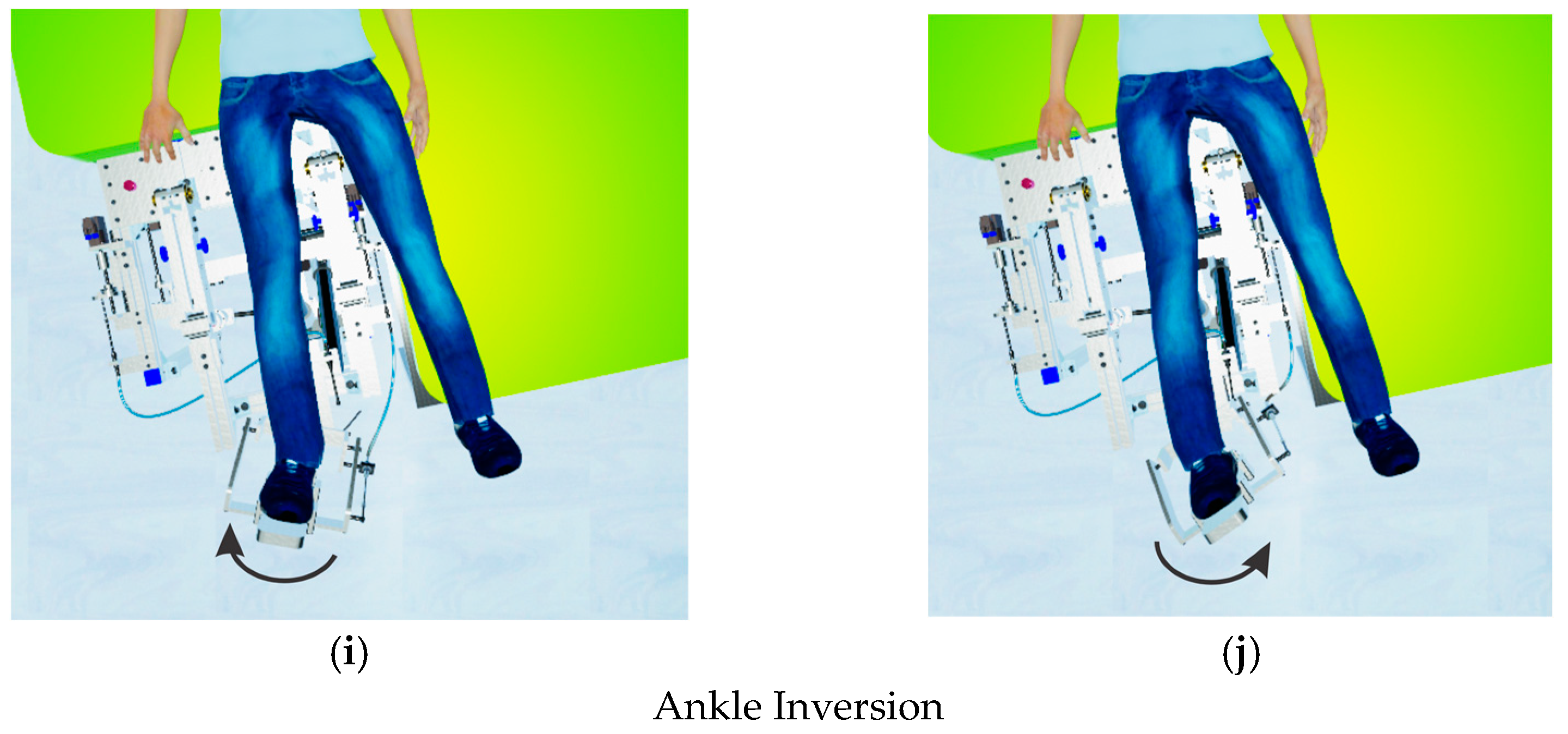

- Ankle Inversion—executed using the joystick (Y axis) on the controller held in the left hand (Figure 11b (3));
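The controller mapping listed above can be expressed as a lookup table from (hand, input) to exercise. In this hedged Python sketch, the input names, the dead-zone value, the convention that the two KneeFlexion buttons send +1.0 and -1.0, and the scaling of joystick deflection to a signed command in [-1, 1] are all assumptions for illustration; the article does not describe how the controller inputs are read or scaled.

```python
from typing import Optional

# (hand, input) -> exercise, following the mapping described in the text.
MAPPING = {
    ("right", "joystick_x"): "HipAbduction",
    ("right", "joystick_y"): "HipFlexion",
    ("right", "buttons"): "KneeFlexion",  # assumed: one button sends +1.0, the other -1.0
    ("left", "joystick_x"): "Dorsiflexion",
    ("left", "joystick_y"): "Inversion",
}

DEAD_ZONE = 0.15  # hypothetical: ignore small, unintended stick deflections


def to_command(hand: str, source: str, value: float) -> Optional[tuple[str, float]]:
    """Map a controller reading to (exercise, signed command in [-1, 1]), or None."""
    exercise = MAPPING.get((hand, source))
    if exercise is None or abs(value) < DEAD_ZONE:
        return None
    return exercise, max(-1.0, min(1.0, value))  # clamp to the valid range
```

A dead zone is a common safeguard with analog sticks, which rarely rest exactly at zero; without it, sensor noise could be interpreted as a small movement command.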

4. Summary and Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Covaciu, F.; Vaida, C.; Gherman, B.; Pisla, A.; Tucan, P.; Pisla, D. Development of a Virtual Reality-Based Environment for Telerehabilitation. Appl. Sci. 2024, 14, 12022. https://doi.org/10.3390/app142412022
