Featured Application

The work shows directions for improving the FIDO2 protocol and making it resistant to malicious implementations; it justifies the necessity of a detailed and deep audit of FIDO2 implementations, not limited to a black-box approach.

Abstract

We analyze the widely deployed FIDO2 authentication scheme from the point of view of kleptographic threats, which have not been addressed so far in the literature. We show that despite its spartan design and apparent efforts to make it immune to dishonest protocol participants, the unlinkability features of FIDO2 can be effectively broken, and the breach cannot be detected by observing protocol executions. Moreover, we show that a malicious authenticator can enable an adversary to seize the authenticator’s private keys, thereby enabling the impersonation of the authenticator’s owner. As only a few components of the FIDO2 protocol are the source of the problem, we argue that either their implementation details must be scrutinized during a certification process or the standardization bodies must introduce the necessary updates to FIDO2 (preferably minor ones) that make it resilient to kleptographic attacks.

1. Introduction

User authentication is one of the critical components of IT services. With the growing role of digital processing, secure authentication that is resilient to impersonation attacks is more important than ever. The old-fashioned solutions based on passwords memorized by human users have reached their limits; the trend is to rely upon digital tokens kept by the users. Such tokens may offer high protection while relying on strong cryptography. Moreover, the effective inspection of dedicated authentication tokens with no other functionalities might be feasible, in contrast to general-purpose computing devices.

Theoretically, deploying a reliable authentication token is easy, given the current state of the art. However, implementing a global authentication system based on identical dedicated devices is problematic due to economic, technical, and political reasons. Nevertheless, there are efforts to solve this problem. One approach is to enforce some solution by political decisions. The European Union’s eIDAS 2.0 regulation [1] (eIDAS is shorthand for “electronic identification, authentication, and trust services”—see regulation [2]; acronyms used here are summarized at the end of the paper) goes in this direction. The idea of a European Identity Wallet introduced by eIDAS 2.0 is ambitious and far reaching, particularly concerning personal data protection. However, this endeavor may fail due to its complexity and high expectations.

The second strategy is to use the already available resources, both hardware and software products, and attempt to build a flexible ecosystem, where different devices may be integrated smoothly. In that case, the time to market should be substantially shorter, and the overall costs might be significantly reduced. Also, the risks can be addressed appropriately by using devices with security levels proportional to the particular risks.

Nevertheless, such a spontaneous ecosystem requires a common protocol to be executed by all devices. For this reason, it must not rely on any cryptographic primitive that is not commonly used. Challenge-and-response protocols with responses based on digital signatures are definitely well suited for such ecosystems.

1.1. Legal and Technical Background

1.1.1. Legal Requirements

The broad application of an authentication system implies security issues. Enforcing a proper security level by setting minimal requirements for IT systems is the current strategy of the European Union. The rules are tightened over time and, due to the global nature of cyberspace, have a high impact on other countries.

As authentication concerns an identifiable individual, authentication systems fall into the scope of GDPR [3]. One of the basic principles of GDPR is data minimization: only those data should be processed that are necessary for a given purpose. This principle creates a challenge for authentication schemes: The real user identity should be used only if necessary; otherwise, it should be replaced by a pseudonym. Even if the real identity is to be linked with a user account, the link should be concealed in standard processing due to the need to minimize the data leakage risk and implement the privacy-by-design principle: if no real identity is used, then it cannot be leaked. Today, many systems still do not follow this strategy, but FIDO2 authentication discussed in this paper is an example of automatic pseudonymization.

Another European regulation significantly impacting authentication systems is eIDAS 2.0. Its main concept is the European Digital Identity Wallet (EDIW), allowing individuals to authenticate themselves with an attribute and not necessarily with an explicit identity. The technical realization of this concept is an open question, given the member states’ freedom to implement the EDIW and vague general rules. The mandate of European authorities to determine wallet standards does not preclude the diversity of low-level details.

eIDAS 2.0 may influence many security services in an indirect and somewhat unexpected way. It defines trust services and strict obligations for entities providing such services. Note that “the creation of electronic signatures or electronic seals” falls into the category of trust services (Article 3, point 16c). Therefore, any service where electronic signatures are created on behalf of the user falls into this category. This rule may be interpreted widely, with electronic signatures created by devices provided by the service supplier. Signatures need not serve the classical purpose of signing digital documents; they may relate to any data, particularly to ephemeral challenges during challenge–response authentication processes. From the civil law point of view, such a signature is a declaration of will to log into the system.

Another stepping stone towards security in European cyberspace is the Cybersecurity Act (CSA) [4]. It establishes a European certification framework based on the Common Criteria for, among others, ICT products. An ICT product is understood here as an element or a group of elements of a network or information system. Any online authentication system falls clearly into this category. Although CSA introduces a framework of voluntary certification under the auspices of ENISA, we expect that once the framework reaches its maturity, there will be a trend to request authentication with certified IT products. So, it will be necessary to formulate relevant CC Protection Profiles. This stage is critical since omitting some aspects may result in dangerous products with certificates giving a false sense of security.

1.1.2. FIDO2 Initiative

Given the diversity of authentication schemes and their lack of interoperability, it is desirable to create a common framework that would enable the use of authentication tokens of different vendors within a single ecosystem. This necessity gave rise to the establishment of the Fast Identity Online (FIDO) Alliance created by a large group of leading tech companies including, among others, Google, Amazon, Apple, Intel, Microsoft, and Samsung. The flagship result of the FIDO Alliance is a set of protocols called FIDO2. Its target is an interoperable and convenient passwordless authentication framework implementable on a wide range of user devices offered by different vendors.

From the cryptographic point of view, FIDO2 is based on three fundamental concepts:

- Creating at random separate authentication keys for each service;

- Authentication based on a simple challenge-and-response protocol, where the challenge is signed by the authenticator;

- Separating the authenticator device from the network.

From the user’s point of view, there are the following actors:

- Remote servers offering services and requesting authentication;

- A client—a web browser run on the user’s local computer;

- An authenticator—a device like a hardware key in the hands of the user.

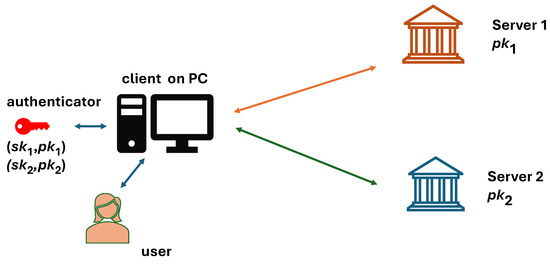

The interaction between these three actors is sketched in Figure 1. As one can see, authentication is based on the secret keys (one per service) stored in the authenticator and the corresponding public keys shared with the corresponding servers.

Figure 1.

FIDO2 system from the point of view of a user.

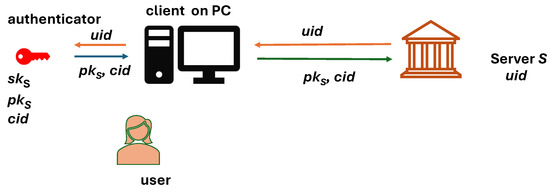

The first step of a user U, when getting in touch with a server S for the first time, is to create a dedicated key pair associated with user identifiers and (see Figure 2).

Figure 2.

FIDO2 registration of a user in a server.

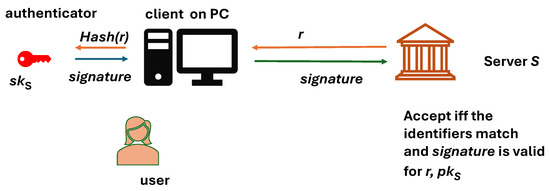

If U has an account in a server S associated with a public key and user ID , U can authenticate themselves by letting the client interact with the authenticator holding the corresponding key . Simplifying a little bit, the authentication mechanism is based on signing a hash of a random challenge received from the server (see Figure 3). A full description with all (crucial) details is given in Section 2.

Figure 3.

FIDO2 authentication.

FIDO2 is an example of a design that may significantly impact security practice: it creates a simple self-organizing distributed ecosystem. FIDO2 also addresses privacy protection issues nicely by separating identities and public keys used for authentication in different systems. Last but not least, it is flexible: the fundamental security primitives—hash function and signature scheme—are used as plugins.

1.2. Lessons from Real Incidents

So far, the history of security incidents with cryptographic products well reflects the famous saying of Adi Shamir: “Cryptography is typically bypassed, not penetrated”. In Table 1, we recall some famous cases.

Table 1.

Examples of failures of processes critical for security.

The lesson learned is that apart from a scheme being broken by cryptanalysts and the failure of physical protection issues, many things may go wrong. Some of the key points are as follows:

- Unintentional implementation errors;

- Rogue implementations of good protocols (with trapdoors);

- Failures of delivery chains;

- Overoptimistic trust assumptions about protocol participants.

Thus, creating a strong cryptographic scheme with sound security arguments is only the beginning of creating a secure ecosystem. As indicated, for example, in [11], the vendors might be coerced to embed backdoors or hidden espionage tools into their products that could be used against the final users.

A pragmatic approach to designing cryptographic protocols is to consider not only the speculative strength of cryptanalysis (e.g., including quantum threats) but also the elimination of trust assumptions over the whole life cycle of a cryptographic product implementing the protocol.

1.3. Kleptography

It is known that cryptographic products might be anamorphic in the sense that they pretend to execute the scheme from their official specification while, in reality, they are running a different one with additional functionalities. Moreover, both products can be provably indistinguishable regarding the official output generated by the products according to the specification.

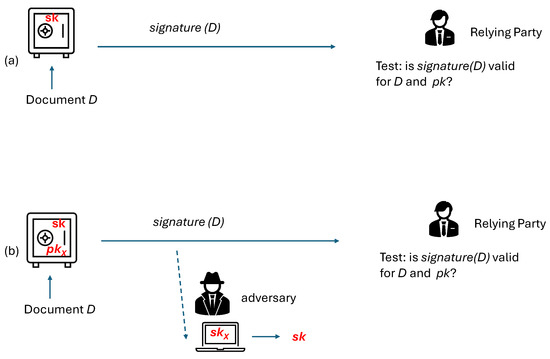

The above-mentioned techniques may serve both good and bad purposes. A good one is implementing a signature creation device so that in a case of forgeries (e.g., resulting from breaking the public key), it is possible to fish out the signatures created by the legitimate device [12]. On the other hand, anamorphic methods may be used on the dark side. A prominent example is kleptography (for an overview, see [13]), where an innocent-looking protocol is used for a covert transfer of secret data. Moreover, only a designated receiver can take advantage of the covert channel, while inspecting the code stored in the infected device (including all keys implemented there) does not help to access the covert channel (see Figure 4).

Figure 4.

A general picture of kleptography: (a) the information flow in the case of an honest setup of a signing device, (b) the case of an infected signing device. The covert channel is protected with an asymmetric key stored on the infected device.

Unfortunately, kleptography can be hidden in many standard primitives, such as digital signatures [14] or the post-quantum key encapsulation mechanism [15], or even be adopted as a technical standard [16].

Kleptography takes advantage of a black-box design: to protect the secret keys, a device implementing the protocol must restrict external access to it. So, typically, one can observe only the messages that should be created according to the protocol’s official description but cannot see how these messages have been created.

Entirely eliminating the threat of kleptographic attacks in existing protocols is hard. Some attack methods, like rejection sampling [17], are extremely difficult to combat. What seems to be plausible is to substantially reduce the bandwidth of such channels and eliminate all kleptography-friendly protocol points. This is particularly important in the case of basic components such as user authentication.

The bad news is that kleptographic setups are possible in authentication protocols. For a few recent examples, see [18,19,20].

1.4. Security Status of FIDO2

Interestingly, Microsoft Security states on their web page that FIDO2 “aims to strengthen the way people sign in to online services to increase overall trust. FIDO2 strengthens security and protects individuals and organizations from cybercrimes by using phishing-resistant cryptographic credentials to validate user identities”. This statement does not provide categorical promises about unconditional security. On the other hand, FIDO2 is based on concepts such as digital signatures, challenge-and-response authentication, and separate signature keys generated at random to protect privacy. However, secure components composed together do not always result in a secure product.

The security analysis of FIDO2 (for consecutive versions of the protocol—see Section 2 for details) is given in [21,22]. Both papers cover the core components of FIDO2: the WebAuthn protocol and the Client-to-Authenticator Protocol. However, apart from considering different versions of these protocols, the papers assume different attestation modes [23]. The privacy of FIDO2 is formally defined and proven in [24]. Moreover, the paper [24] augments the protocol with the functionality of global key revocation.

However, the FIDO2 protocol has not been evaluated in the context of kleptography. Note that kleptographic attacks discussed in the literature are typically focused on the possibility of installing an asymmetric leakage in cryptographic primitives. They do not consider more complicated scenarios, such as a contaminated device connecting to a malicious, cooperating server, establishing a shared key, reseeding the deterministic random number generator, and then erasing any traces of its malicious activity. However, such attacks seem closer to reality and should also be examined—consider, for example, spyware [25] installed on a mobile phone.

1.5. Paper Outline and Contribution

The main question considered in this paper is “can we trust FIDO2”? There are some security proofs for the protocol, but do we need to trust the authenticators? What can happen if the authenticators (and servers) deviate from the protocol specification unnoticeably?

Currently, we have trusted manufacturers, supervised accreditation laboratories, whose evaluation reports are internationally recognized, and there are strong penalties for misbehavior. Additionally, there are criminal penalties for reverse-engineering the authenticators. Finally, malicious counterfeit authenticators may appear on the market, and at least some of them may pass the attestation steps of FIDO2. This may also endanger the manufacturers’ reputation, regardless of their honest behavior. These problems concern general public security, especially if FIDO2 becomes widespread (e.g., in the European Digital Identity Wallet). In this situation, learning the extent to which FIDO2 is vulnerable to kleptographic attacks is necessary.

1.5.1. Paper Organization

In Section 2, we recall FIDO2 and the WebAuthn protocol. Section 3 briefly describes the considered attack vectors. In the following sections, we present drafts of kleptographic attacks:

- Section 4 describes techniques enabling a malicious authenticator to leak its identity to the adversary. They apply to authenticators based on discrete logarithm signatures.

- Section 5 is devoted to attacks in which a malicious authenticator leaks the private keys to the attacker; they apply to FIDO2 with discrete logarithm signatures.

- Section 6 drafts such attacks for FIDO2 with RSA signatures.

The rest of the paper explains some technicalities of the above attacks:

- Section 7 explains how to use error-correcting codes for hidden messages to increase the leakage speed.

- Section 8 explains the technical details of embedding a hidden Diffie–Hellman key agreement between a malicious authenticator and a malicious server within FIDO2 communication.

- Section 9 shows how a malicious server can uncover its TLS communication with the user’s client. This opens room for those attacks, where the attacker only observes the messages sent to the server from the authenticator (via the client).

1.5.2. Our Contribution

We show that FIDO2 is not resistant to kleptographic attacks. They are relatively easy to install and difficult to detect with conventional methods.

The previous security analysis for the FIDO2 protocol has silently assumed that the authenticators are honest. In practice, this is hard to guarantee, and definitely, in regular application cases, an authenticator cannot be tested against kleptography by its owner.

1.6. Notation

The discrete logarithm problem (DLP) is used extensively in this paper. For compactness, we use multiplicative notation, but the arguments also apply to additive groups defined as elliptic curves.

2. Details of FIDO2

FIDO2 consists of two protocols:

- WebAuthn:

- This is a web API incorporated into the user’s browser. WebAuthn is executed by the server, the client’s browser, and the user’s authenticator.

- CTAP:

- The Client To Authenticator Protocol is executed locally by the authenticator and the client. CTAP has to guarantee that the client can access the authenticator only with the user’s consent.

In the following, we consider the last two versions of the FIDO2 standard: FIDO2 with WebAuthn 1 and CTAP 2.0, and FIDO2 with WebAuthn 2 and CTAP 2.1. Interestingly, an authenticator can be implemented as a hardware token or in software [26].

2.1. WebAuthn

WebAuthn 1 is depicted in Figure 5. It consists of registration and authentication procedures. Typically, the connection between the client and the server is over a secure TLS channel. The connection between the client and the authenticator is a local one.

Figure 5.

The WebAuthn 1 protocol (its description is borrowed from [21]).

During Registration, an authenticator may use an attestation protocol—the choice depends on the server’s attestation preferences and the authenticator’s capabilities; see [27] Section 2.1, for more details. Figure 5 is borrowed from [21], where the attestation mode basic is analyzed: the signature is generated with the attestation (private) key , which is expected to be deployed on more than 100,000 authenticators (see [23], Section 3.2).

The parameter defining the minimal bit length of random elements generated during protocol execution is currently set to 128. Accordingly, during Registration, the server generates an at least 128-bit long random string and 512-bit user id . These random values and the server’s identifier are sent to the client as a server’s challenge. The client parses the challenge and aborts if the received identifier does not match the intended identifier . Then, the client hashes and sends the result together with and to the authenticator.

The authenticator parses and generates a fresh key pair , which will be used in subsequent authentications to the server . Next, it sets the counter n to zero (the counter shall be incremented at each subsequent authentication session with ) and generates a random credential of at least bits. Then, the tuple is assembled by the authenticator, and (depending on the choice of attestation mode) a signature on and the hash value is generated. Finally, and the generated signature are sent to the client, and the authenticator updates its registration context by inserting a record containing the server identifier , the user’s identifiers and , the private key , and the counter n.

The client passes to the server; the server runs procedure on it. If the checked conditions are satisfied (the signature is valid, etc.), the server updates its registration context by inserting a record containing the identifiers and , the public key , and the counter n.

During Authentication, the server chooses a string of length at least at random. Next, the server assembles a challenge consisting of and the server identifier . Then, is passed to the client. Then, similarly to the registration procedure, the client transforms the challenge by hashing . The resulting message is sent to the authenticator. The authenticator parses , retrieves and finds a record with created during registration. This record contains, among others, the counter and the dedicated signing key . Then, the authenticator increments the counter and creates a signature over , the counter, and using the key . Finally, the authenticator sends the response (including the signature) to the client and updates the stored record. In the last step, the server accepts if the signature is valid and the signed data matches the expectations.
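The registration and authentication flow described above can be condensed into a small simulation. The following is a minimal sketch and not the WebAuthn message format: it assumes a toy Schnorr signature over a deliberately tiny group (p = 2039, q = 1019, g = 4), and the names `Authenticator`, `Server`, `client_relay`, and `auth_message` are hypothetical helpers introduced only for illustration. Attestation and the credential identifier are omitted.

```python
import hashlib
import secrets

# Toy group (assumption, far too small for real use): p = 2q + 1, g of order q.
P, Q, G = 2039, 1019, 4
assert pow(G, Q, P) == 1 and G != 1

def h_int(*parts):
    """Hash length-prefixed byte strings to an exponent modulo Q."""
    h = hashlib.sha256()
    for part in parts:
        h.update(len(part).to_bytes(4, "big") + part)
    return int.from_bytes(h.digest(), "big") % Q

def sign(x, msg):
    """Schnorr signature (r, s); the ephemeral k is chosen at random by the signer."""
    k = secrets.randbelow(Q - 1) + 1
    r = pow(G, k, P)
    e = h_int(r.to_bytes(2, "big"), msg)
    return r, (k + e * x) % Q

def verify(pk, msg, sig):
    r, s = sig
    e = h_int(r.to_bytes(2, "big"), msg)
    return pow(G, s, P) == (r * pow(pk, e, P)) % P

def auth_message(server_id, counter, challenge_hash):
    """Data signed at authentication: hashed server id, counter, hashed challenge."""
    return hashlib.sha256(server_id).digest() + counter.to_bytes(4, "big") + challenge_hash

class Authenticator:
    def __init__(self):
        self.records = {}  # server id -> [user id, private key, counter]

    def register(self, server_id, user_id):
        x = secrets.randbelow(Q - 1) + 1          # fresh key pair per server
        self.records[server_id] = [user_id, x, 0]
        return pow(G, x, P)

    def authenticate(self, server_id, challenge_hash):
        record = self.records[server_id]
        record[2] += 1                            # counter grows with every session
        return record[2], sign(record[1], auth_message(server_id, record[2], challenge_hash))

class Server:
    def __init__(self, server_id):
        self.server_id, self.accounts, self.pending = server_id, {}, None

    def enroll(self, user_id, pk):
        self.accounts[user_id] = [pk, 0]

    def challenge(self):
        self.pending = secrets.token_bytes(16)    # 128-bit random string
        return self.pending, self.server_id

    def check(self, user_id, counter, sig):
        pk, last = self.accounts[user_id]
        msg = auth_message(self.server_id, counter, hashlib.sha256(self.pending).digest())
        ok = counter > last and verify(pk, msg, sig)
        if ok:
            self.accounts[user_id][1] = counter
        return ok

def client_relay(server, authenticator, intended_id):
    """The client checks the server identifier and forwards only a hash of the challenge."""
    rs, sid = server.challenge()
    if sid != intended_id:
        raise ValueError("server identifier mismatch")
    return authenticator.authenticate(sid, hashlib.sha256(rs).digest())

server = Server(b"example.org")
token = Authenticator()
server.enroll(b"user-1", token.register(b"example.org", b"user-1"))
counter, sig = client_relay(server, token, b"example.org")
accepted = server.check(b"user-1", counter, sig)
```

Note that the only value the authenticator chooses freely in each session is the ephemeral k inside `sign`; this is the degree of freedom that the attacks in the later sections exploit.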

WebAuthn 2 has a richer set of options compared to WebAuthn 1. However, from our point of view, the most interesting features of its predecessor are preserved: during registration and authentication, random strings are created in the same way. We omit further details of WebAuthn 2, as they are less relevant to our work.

Client-Server TLS Channel

FIDO2 messages between the server and the client are sent over a TLS channel, usually TLS 1.3. An interesting feature of TLS 1.3 is authenticated stream encryption, where encryption can be completed first, and then the authentication tag can be calculated. What is more, if a part T of the plaintext is supposed to be random, then we can reverse the order of the computation:

- Choose a block B of ciphertext bits corresponding to T in the way needed by the attack;

- Determine T by xor-ing B with the key stream bits produced by the encryption algorithm.

Once the plaintext and the ciphertext are fixed, the authentication tag can be calculated.
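The reversed order of computation can be illustrated with a toy stream cipher. This is a sketch under stated assumptions: the keystream is produced by SHA-256 in counter mode as a stand-in for the actual TLS 1.3 cipher, the authentication tag is omitted, and the helper names (`keystream`, `plaintext_for_ciphertext`) are hypothetical.

```python
import hashlib

def keystream(key, nonce, length):
    """Toy keystream: SHA-256 in counter mode (stand-in, not the TLS cipher)."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]

def xor(a, b):
    return bytes(x ^ y for x, y in zip(a, b))

def encrypt(key, nonce, plaintext):
    return xor(plaintext, keystream(key, nonce, len(plaintext)))

def plaintext_for_ciphertext(key, nonce, offset, wanted_block):
    """Fix the ciphertext block B first, then solve for the 'random' plaintext part T."""
    ks = keystream(key, nonce, offset + len(wanted_block))[offset:]
    return xor(wanted_block, ks)

key, nonce = b"k" * 32, b"n" * 12
header = b"challenge:"              # fixed part of the plaintext
B = b"covert-message!!"             # ciphertext bits chosen by the sender
T = plaintext_for_ciphertext(key, nonce, len(header), B)
ciphertext = encrypt(key, nonce, header + T)
```

In this sketch the ciphertext positions that carry T equal B exactly, while T itself still looks like a random string to anyone who only sees the plaintext.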

2.2. Security of FIDO2 Authenticators—Capabilities of an Auditor

From the security point of view of the overall FIDO2 framework, it is essential to know how much we can trust the authenticators. There are two complementary concepts relating to the security of the FIDO protocol: Certified Authentication Levels [26] and Attestation [23,27]. By running attestation, the Relying Party does not check the implementation of an authenticator. All the information obtained is a declaration of origin that may come from a trusted party and/or be based on secure architecture mechanisms. In this way, the Relying Party may effectively ban the devices coming from unknown or blacklisted sources. However, the most popular attestation mode, called “Basic”, is based on a key shared by a large group of devices. Hence, it suffices to extract such a key from a single device, or to obtain it from the manufacturer (by means of espionage or coercion), to poison a supply chain with counterfeit devices enriched with malicious functionality. Moreover, attestation alone does not guard against an accepted vendor installing trapdoors. The latter problem should be addressed by certification of the devices. On the other hand, even during a more general Common Criteria certification process, the party evaluating the device cooperates with the manufacturer, receives extensive documentation, and might request an open sample of the evaluated product (cf. Section 5.3.4 of [28]). A more specific document [29] states that “A side-channel or fault analysis on a TOE (Target of Evaluation) using ECC in the context of a higher Evaluation Assurance Level (…) is not a black-box analysis”.

In our case, an auditor examining an authenticator for malicious implementation cannot fully trust the manufactured devices. In particular, the auditor cannot always guarantee that the samples received from the manufacturer during the certification process contain precisely the same implementation as all devices delivered to the market. Consequently, to some extent, the auditor needs to use the black-box approach, which is much more difficult, especially for authenticators certified for Level 3+.

We may assume that the auditor has the following capabilities:

- The auditor may run the authenticator with the input data of their choice and may confront its output with the collected history of interactions with the device.

- The auditor may observe the input and output of the authenticator for real input data coming from the original services during real authentication sessions.

However, repeating the investigation of the internals of an authenticator for different copies taken from the market may be beyond the auditor’s capabilities—the high level of protection of the devices may hinder deeper investigation.

3. Attack Modes

We consider two attack vectors against fundamental targets of the FIDO2 ecosystem: privacy protection and impersonation resilience. The two attack vectors are explained below.

3.1. Linking Attacks

A linking attack aims to weaken privacy protection. There are many different scenarios for that. For example, we have the following:

- Two different servers learn whether two accounts in their services have been registered with the same authenticator device;

- A malicious authenticator leaks its unique identity to an observer that merely monitors encrypted TLS communication between the client and the server.

Further scenarios are described in the following sections. The point is that a linking attack does not enable the attacker to do anything else but break (some) privacy protection mechanisms of FIDO2.

3.2. Impersonation Attacks

An impersonation attack aims to successfully run FIDO2 on behalf of a user without holding the user’s authenticator. In this paper, we focus on the fundamental impersonation scenario, where a private key that is generated by the authenticator is leaked to an attacker.

Again, there are many attack options. As we focus on kleptographic threats, the authenticator is a part of the malicious setup. However, there are many options regarding who is obtaining the private key :

- An adversary that only monitors encrypted TLS communication but can derive due to the malicious setup of an authenticator (and possibly a colluding server): in particular, this attack may be performed by a manufacturer providing authenticator devices and the network equipment.

- The target service server that offers to third parties aiming to access the user account.

- An organization temporarily in possession of the authenticator attempting to clone the device in order to impersonate the legitimate owner of the authenticator.

4. Linking Attacks with Discrete Logarithm Signatures

This section focuses on FIDO2 with signatures based on the discrete logarithm problem. The version with RSA signatures is covered in Section 6.

As there are many attack scenarios, for each attack presented, we provide a short sketch of the most important features. To keep the paper as concise as possible, we omit a listing of attack steps in the cases where it can be reconstructed easily from the context. We also skip some common technical details and treat them in more detail in the later sections.

4.1. Attack 1: Signature Tagging

- Attack prerequisites:

- Each user U has a unique public identifier (e.g., issued by a state authority).

- The authenticator of user U is aware of .

- is the ID resulting from the registration of with the server S.

- holds the “public” kleptographic key Y of the attacker (see Section 8 for more details).

- The attacker knows the private kleptographic key y, where .

- knows (at least a fraction of) the signatures delivered by while executing FIDO2 with the server S ( might be the target server S but it is not required).

- Attack result:

A linking attack in which learns of the user hidden behind identifier .

- Auxiliary techniques:

- id expansion:

- might be compressed in the sense that its every bit is necessary to identify a user. As we will be running a Coupon Collector process, this is inefficient: to learn n bits, we have to draw on average $n H_n$ one-bit coupons ($H_n$ is the nth harmonic number). The idea is to apply an encoding of such that the Coupon Collector process can be interrupted and the missing bits can be found by error correction. For details, see Section 7. For the sake of simplicity, we assume that consists of bits.

- Two rabbits trick:

- This is a technique borrowed from [30]. It enables transmitting one covert bit per signature and avoids backtracking of the rejection sampling technique.

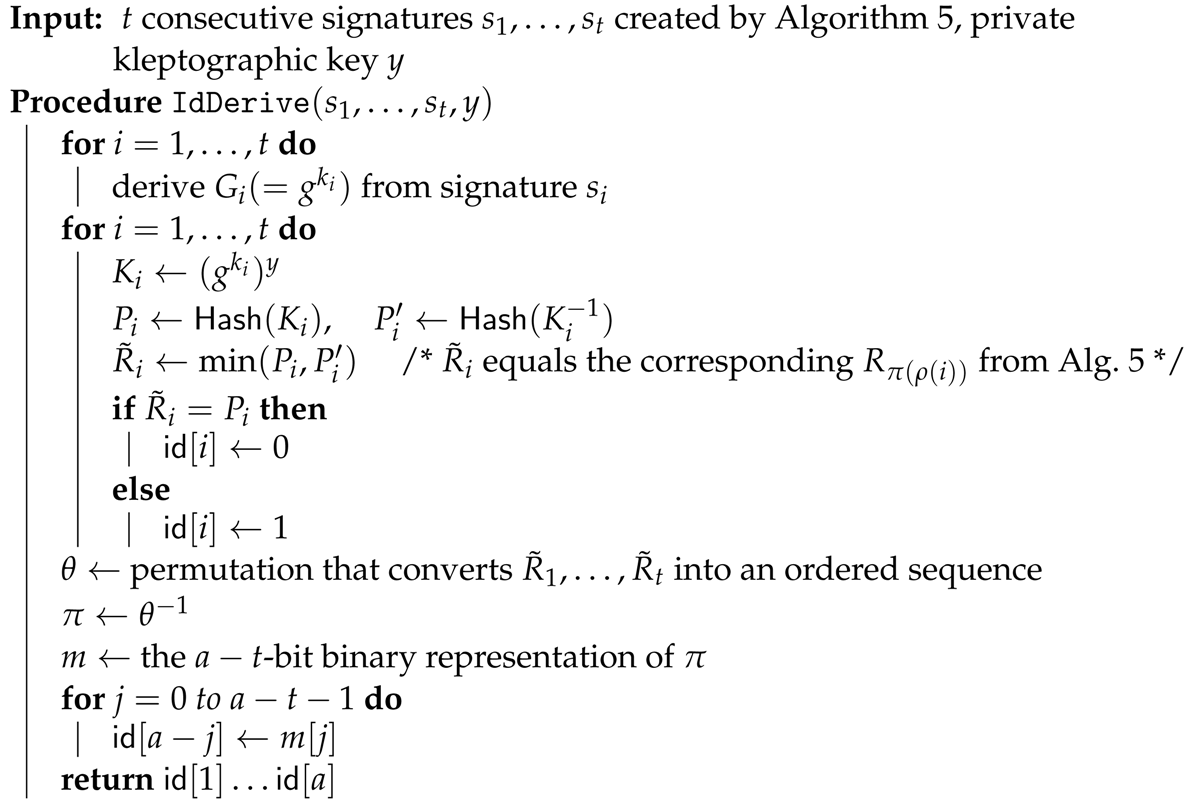

- Algorithm:

The attack follows the classical kleptographic trick of reusing an ephemeral value to establish a secret shared by the authenticator and the adversary .

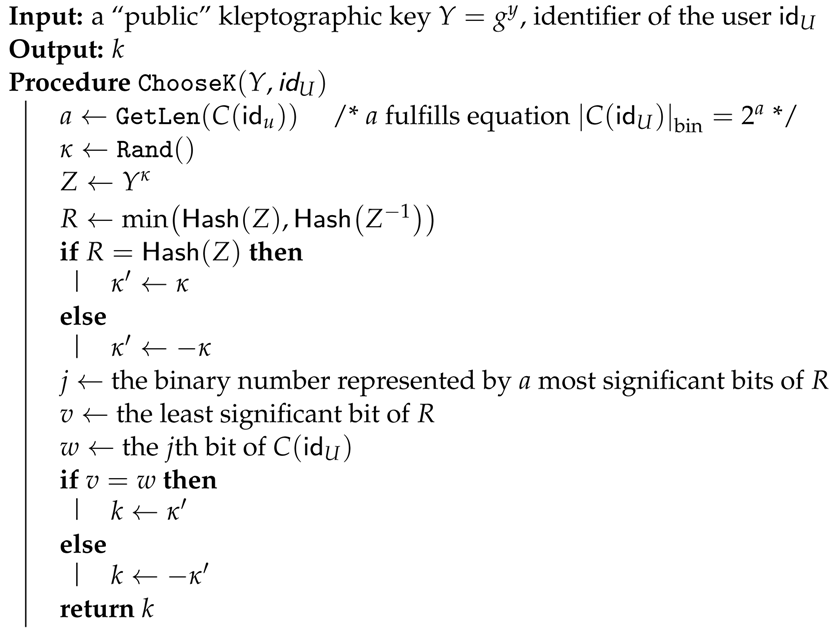

From the point of view of the authenticator, the following changes in FIDO2 execution are introduced when creating a signature. The key moment is the choice of k: instead of choosing k at random, Algorithm 1 is executed.

Algorithm 1. Selection of parameter k by authenticator.

From the point of view of , each signature of leaks one bit of . executes Algorithm 2 to retrieve this bit. Recall that standard signature schemes based on the discrete logarithm problem allow either reconstructing or directly providing it (for example, for ECDSA signatures, the scalar signature component allows the needed element to be reconstructed). Thus, may depend on the signature scheme used.

Algorithm 2. Retrieving one bit of encoded user identifier.
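The shared secret underlying this attack can be sketched in a few lines. The example assumes a toy group (p = 2039, q = 1019, g = 4) and a hypothetical hash-based helper `derive_address` that maps the shared value to a bit position; it shows only that the authenticator and the adversary arrive at the same address, and it does not reproduce how k is selected in Algorithm 1.

```python
import hashlib
import secrets

# Toy group (assumption, far too small for real use): g has prime order Q modulo P.
P, Q, G = 2039, 1019, 4

def derive_address(shared, id_bits):
    """Hypothetical mapping from the shared value to a bit position of the identifier."""
    digest = hashlib.sha256(shared.to_bytes(2, "big")).digest()
    return int.from_bytes(digest[:4], "big") % id_bits

# Attacker's kleptographic key pair: y stays with the attacker, Y is on the device.
y = secrets.randbelow(Q - 1) + 1
Y = pow(G, y, P)

# The device signs with ephemeral k; r = g^k is visible in (or recoverable from) the signature.
k = secrets.randbelow(Q - 1) + 1
r = pow(G, k, P)

shared_device = pow(Y, k, P)     # computed inside the device from Y and k
shared_attacker = pow(r, y, P)   # computed by the attacker from r and y
```

An observer who sees r and even extracts Y from the device cannot compute the shared value without y, which is why inspecting the device does not open the covert channel.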

While collecting the bits of , repetitions (leaking the same bits again) are possible, as the address j is pseudorandom. In Section 7, we discuss in more detail how many signatures are necessary to recover the whole .
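To get a feeling for the cost of collecting every bit without error correction, the Coupon Collector expectation n·H_n can be evaluated directly. The sketch below assumes that the addresses are uniform and independent; the function names are introduced here only for illustration.

```python
from fractions import Fraction

def harmonic(n):
    """Exact n-th harmonic number H_n = 1 + 1/2 + ... + 1/n."""
    return sum((Fraction(1, i) for i in range(1, n + 1)), Fraction(0))

def expected_signatures(n_bits):
    """Expected number of uniformly addressed one-bit leaks needed to see all positions."""
    return n_bits * harmonic(n_bits)
```

For a 64-bit identifier, `float(expected_signatures(64))` gives roughly 300 signatures, which illustrates why stopping early and recovering the missing bits by error correction is attractive.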

4.2. Attack 2: Identity Sieve via Rejection Sampling

- Attack prerequisites:

They are the same as in Section 4.1 but with the following addition:

- The adversary has a set of potential identities I, which may contain of .

- Attack result:

will fish out the identities from I that may correspond to or find that none of them corresponds to .

- Auxiliary techniques:

- Long pseudorandom identifier:

- Instead of , a long pseudorandom identifier is used. Simply, use a cryptographic DRNG (deterministic random number generator, e.g., one recommended by NIST): are the first N output bits of .

- Rejection sampling with errors:

- We take into account that the authenticator must run the protocol without suspicious delays and failures. So, we use the rejection sampling technique, where the output is taken after a few trials, even if it is invalid from the information leakage point of view.

- Two rabbits trick:

- This may also be used, but we skip it here for clarity of presentation.

- Algorithm:

Assume that consists of b-bit blocks. The parameter L is big enough to avoid collisions, while b is very small, like . In particular, .

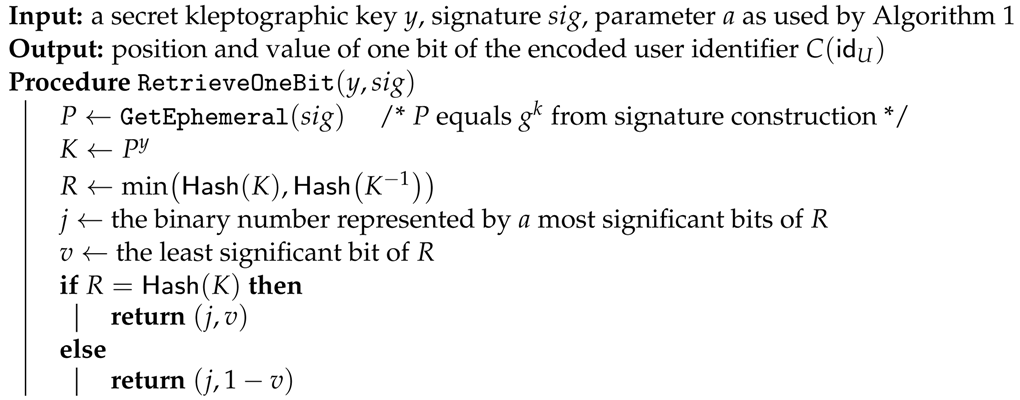

The signing algorithm run by an authenticator is modified. Instead of choosing k at random, Algorithm 3 is executed (rejection sampling with at most m backtracking steps).

| Algorithm 3 Selection of parameter k using rejection sampling by authenticator. |

|

From the point of view of , each obtained signature is a witness indicating whether a given may correspond to . Due to the bound m on the number of backtracking steps, false witnesses are possible.

When receiving a signature, executes Algorithm 4 to gather statistics for identities from I. Let S be the table holding the current statistics. Initially, for each .

| Algorithm 4 Updating statistics. |

|

- Retrieve from the signature (k is unavailable to , but standard signature schemes enable either reconstructing or provide it directly).

- Calculate .

- Compute .

- Set j to L most significant bits of R.

- Let v be the b-bit sequence of R starting at position .

- For every , if contains sequence v on positions through , then .

Let us observe that for , the counter is incremented with the probability , provided that we treat as a random function (so, and are stochastically independent). In turn, if , then is incremented with probability . For example, for , is incremented with probability , and with probability on the remaining positions of S.

4.3. Attack 3: Signature Tagging with Permutation

This attack improves the leakage bandwidth with a technique borrowed from [31].

- Attack prerequisites:

- The same as in Section 4.1, except for the last condition;

- has access to all signatures created by in a certain fixed interval of FIDO2 executions;

- can create in advance and store t ephemeral random parameters k used for signing; t is a parameter determining the bandwidth of the covert information channel.

- Attack result:

learns the of the user hidden behind identifier .

- Auxiliary techniques:

They are the same as in Section 4.1, plus the following encoding via permutation:

- Encoding via permutation:

- We convert permutations over to integers. For this purpose, one can use Algorithm P and the “factorial number system” (see [32], (Section 3.3.2)).

- Algorithm:

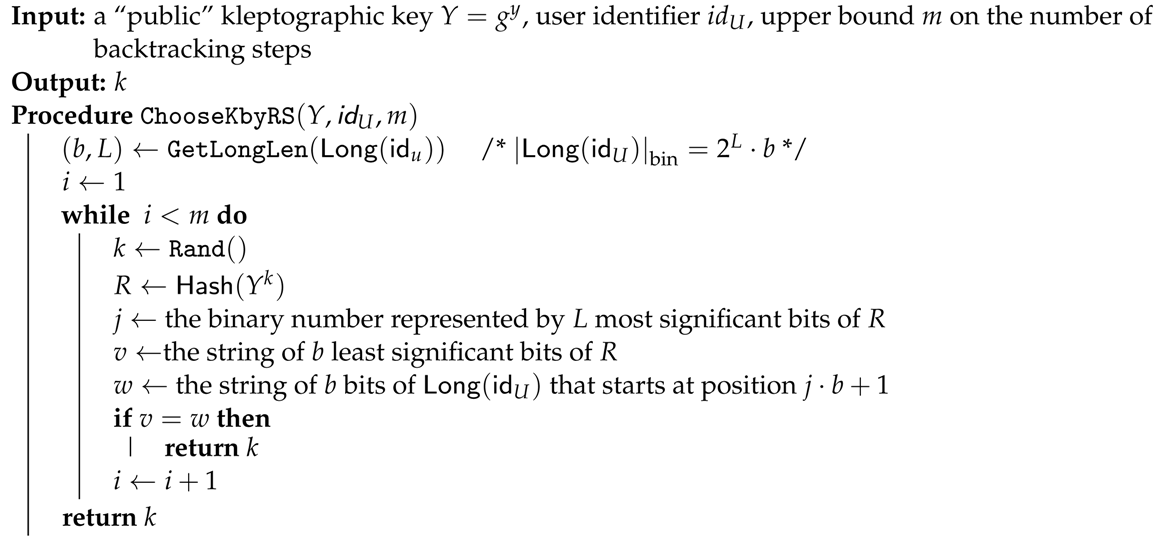

Assume that consists of bits. The attack requires a sequence of t signatures that and agree upon (e.g., t consecutive signatures after some triggering moment). The parameter t must be chosen so that a permutation over can be used to encode a b-bit number, where . In the procedure presented below, the first t bits of are leaked directly via the two rabbits trick, while the remaining bits are encoded via a permutation .

In advance, runs Algorithm 5.

| Algorithm 5 Preparing a list of parameters by the authenticator in advance. |

|

During the ith execution of FIDO2 devoted to leaking , uses already stored to create the signature. Otherwise, the signature is created in the standard way.

Algorithm 6 is used by to derive .

| Algorithm 6 Deriving by |

|

The number of bits that can be transmitted in this way equals $t + \lfloor \log_2 t! \rfloor$. For example, for $t = 50$, one can encode 264 bits, which is enough to leak a secret key for many standard schemes.
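The permutation encoding can be illustrated with a short sketch. It assumes the factorial number system is used in its usual Lehmer-code form and that the channel carries t directly leaked bits plus the bits encoded by a permutation of t elements; the function names are hypothetical and not taken from the paper.

```python
from math import factorial


def int_to_permutation(value: int, t: int) -> list[int]:
    """Map an integer 0 <= value < t! to a permutation of 0..t-1."""
    if not 0 <= value < factorial(t):
        raise ValueError("value does not fit into a permutation of t elements")
    digits = []
    for radix in range(1, t + 1):
        # Factorial-base digits, least significant first.
        value, digit = divmod(value, radix)
        digits.append(digit)
    pool = list(range(t))
    # The most significant digit selects the first element, and so on.
    return [pool.pop(d) for d in reversed(digits)]


def permutation_to_int(perm: list[int]) -> int:
    """Inverse of int_to_permutation."""
    pool = sorted(perm)
    value = 0
    for remaining, item in zip(range(len(perm), 0, -1), perm):
        index = pool.index(item)
        pool.pop(index)
        value = value * remaining + index
    return value


def covert_capacity_bits(t: int) -> int:
    """t directly leaked bits plus floor(log2(t!)) bits carried by the permutation."""
    return t + (factorial(t).bit_length() - 1)


secret = 0xC0FFEE
perm = int_to_permutation(secret, 12)
recovered = permutation_to_int(perm)
capacity_50 = covert_capacity_bits(50)
```

Under these assumptions, 50 stored signatures give a capacity of 264 bits, which matches the figure quoted above.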

4.4. Attack 4: Signature Tagging with a Proof

The attacks from Section 4.1, Section 4.2, and Section 4.3 can be easily converted to an attack where can create a proof for a third party T. Note that given the signatures from , the party T can perform the same steps as except for raising to the power y. The results of these operations can be provided by with non-interactive zero-knowledge proofs of equality of discrete logarithms for all these operations.

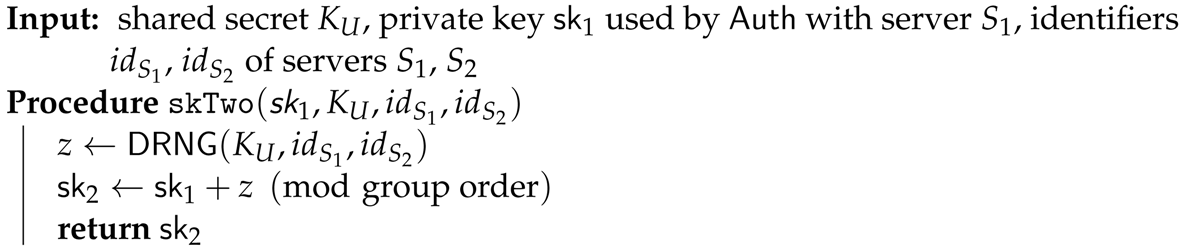

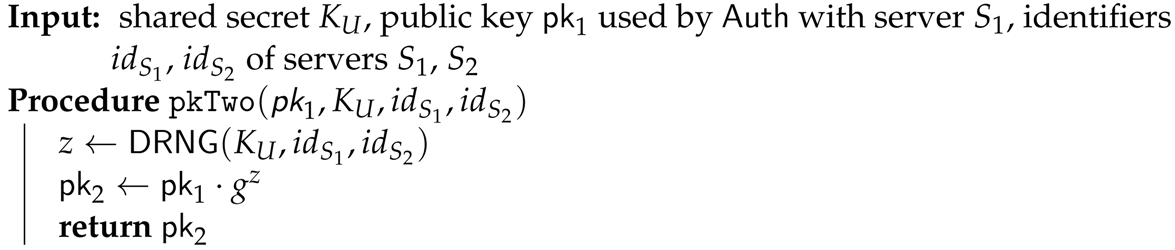

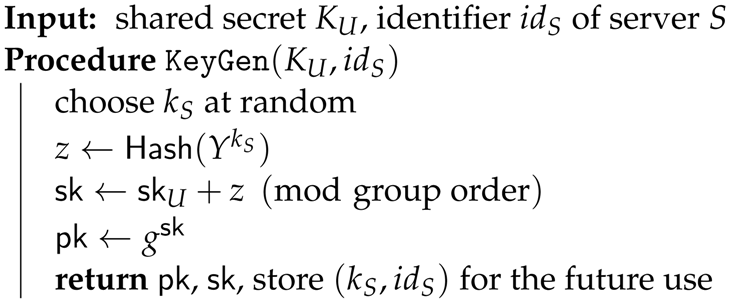

4.5. Attack 5: Correlated Secret Keys with a Shared Secret

In this attack, creates secret keys , with different services so that may detect that the corresponding public keys and originate from the same device. Alternatively, might be the main secret key assigned to user U with known to .

- Attack prerequisites:

- and share a secret (this can be achieved on-the-fly; see Section 8).

- knows the public keys used for FIDO2 authentication with different servers.

- Attack result:

Given a public key , created by for interaction with server , can derive the key that would create for interaction with server . This procedure will fail if does not originate from .

- Algorithm:

The registration procedure is modified on the side of . Instead of choosing at random, the following steps are executed (see Algorithm 7).

| Algorithm 7 Deriving dependent on . |

|

Algorithm 8 may be used to derive the key .

| Algorithm 8 Deriving dependent on by . |

|

The attack enables to reproduce the public keys of but not the private keys, so impersonation in this way is impossible. However, for certain signature schemes, would be able to convert a signature over verifiable with key to a signature over the same and verifiable with key . However, is a random parameter chosen anew during each execution, so in practice, it should never repeat. Moreover, is contained in the of the first signature, so the signature will not be valid for .

4.6. Attack 6: Correlated Secret Keys with a Trigger Signature

In this attack, will be able to convince that the public key used for authentication with a server S was created by an authenticator of user U. Moreover, the proof will be released at a time freely chosen by and hidden in the FIDO2 execution.

- Attack prerequisites:

- There is a key pair associated with user U, where is stored in , while is known to .

- holds the “public” kleptographic key Y of the attacker.

- knows y such that .

- can store a random parameter k for future use.

- has access to the signatures created by in the interaction with server S.

- Attack result:

At a freely chosen time, enables to detect that a public key used in some service S belongs to U, or more precisely, to an authenticator that holds the master secret key of user U. The key is not exposed to .

- Algorithm:

At the moment of registration with a server S, the procedure of generating the key pair on the side of is modified in the following way (Algorithm 9):

| Algorithm 9 Modified key generation during registration with S. |

|

At the moment when the link between U and has to be revealed to , executes FIDO2 with server S in a slightly modified way. Namely, during the signature creation process, instead of choosing k at random, takes , where has been stored during registration with S (Algorithm 9).

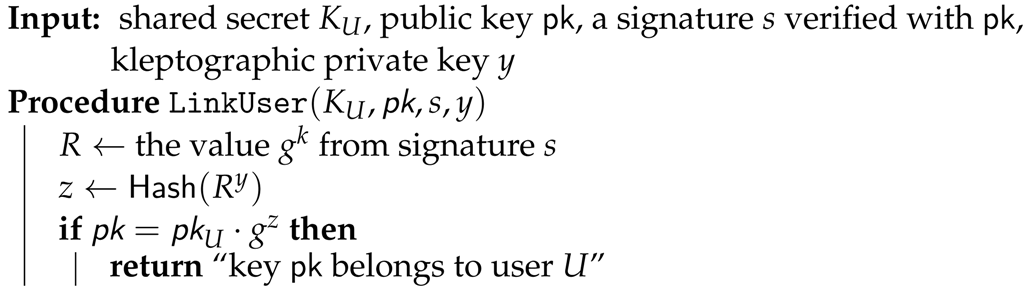

inspects the signatures delivered to S (Algorithm 10).

| Algorithm 10 Attempting to find the key of for service S. |

|

The above attack can be modified slightly, making it even more harmful. Namely, the element triggering the test may originate from a signature presented to a server . Mounting such an attack could be easier since the alternative server may be fully controlled by , while for some reason, user U may be forced to authenticate with (e.g., might be a popular online shopping platform).

5. Impersonation Attacks

The general strategy of the attacks is to enable the adversary to seize the private key used to authenticate. There are two main attack scenarios:

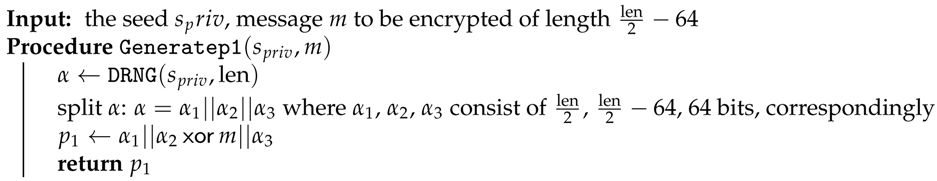

- Scenario 1: The adversary transmits a message M to in a covert channel within an innocent-looking FIDO2 execution. Then, deviates from the protocol specification and, on the basis of M, calculates key K and then uses K as a seed for an and derives the secret keys for server S from , where is the identifier of S. As holds K, the secret keys of can be calculated by .

- Scenario 2: The hidden information flow is reversed: transmits a covert message M to hidden in a FIDO2 execution. Using M, derives the secret key used by the authenticator.

The way in which the key K or is calculated/derived from M is asymmetric, and based on the Diffie–Hellman protocol.

The attack may enable to derive all secret keys generated by . However, it is easy to reduce the leakage to, say, the secret key used to authenticate with a single server S. In this case, the key derivation procedure may be altered: instead of K, we use . Consequently, the leaked information will be instead of K.

5.1. Modified Linking Attacks

In many cases, the impersonation attacks are variants of the linking attacks. Then, we refer to the linking attacks and describe only the necessary modifications.

5.1.1. Modifications of Attacks 1 and 3

In Attack 1 from Section 4.1 and Attack 3 from Section 4.3, we replace by .

Note that now, the adversary may stop collecting bits much earlier. Namely, can guess some number of missing bits (say 30), recalculate the seed as, say, , derive a secret key from , and finally check whether it matches the public key used by the authenticator. On the other hand, the seed is presumably longer than the identifier .

5.1.2. Modifications of Attacks 5 and 6

One can modify the attack from Section 4.5. The difference is that instead of calculating , the authenticator sets .

In the case of the modification of Attack 5, may be used in some “master” service server under the control of the adversary. This way, secret keys to all other servers may be derived by . Alternatively, may leak keys to only selected services.

In the case of the modification of Attack 6, may determine when to leak the secret key devoted to a given service.

5.2. Scenario 2—Dynamic Kleptographic Setup

In this attack, there is no preinstalled kleptographic key on a device; all necessary key material is installed in a covert way, and the key material is retrieved from the seemingly regular FIDO2 execution.

- Attack result:

This is an impersonation attack, where learns the seed used by for random number generation; hence, can recalculate all the private keys generated by after the attack. Moreover, if has access to the signatures created after the attack, then in the case of standard signatures based on the discrete logarithm problem, we can reconstruct private ephemeral values, and thus the signature private keys, even if the private keys were established before the attack.

- Attack prerequisites:

- colludes with a malicious server S.

- For some reason, the user U holding is forced to register with S (e.g., S is the server used by the citizens to fill out a tax return form), or S is a very popular commercial service used by a large part of the population.

- The implementation of supports domain parameters that the server S selects during the attack; on the other hand, the server chooses the domain parameters supported by the malicious implementation of the authenticators.

- Auxiliary techniques:

- Ephemeral–ephemeral Diffie–Hellman key exchange:

- This technique is described in Section 8.2.

- Notation:

In FIDO2, all the allowed signature schemes based on the discrete logarithm problem utilize elliptic curves ([33], Section 3.7). To illustrate the attack, we assume that a prime order elliptic curve is used (e.g., the case of ECDSA signatures).

- Algorithm:

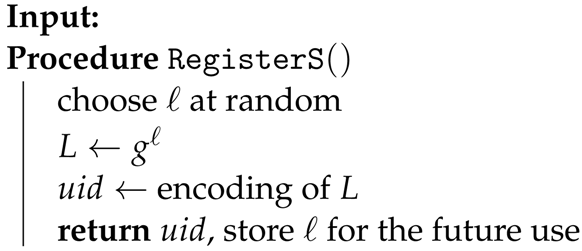

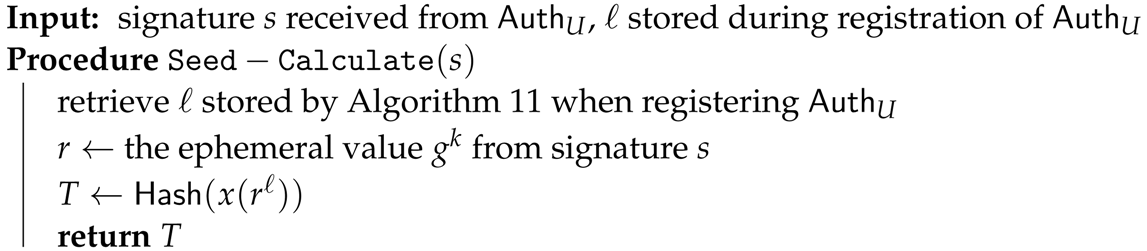

The first stage of the attack is when registers with server S. First, S selects in a specific way, but is still random. Second, adjusts its DRNG based on (Algorithm 11).

| Algorithm 11 selection by S. |

|

The core element of this procedure is encoding L in . The base-point g belongs to the domain parameters used for the signature scheme. Therefore, the two-stage procedure composed from the Elligator Squared [34] and the Probabilistic Bias Removal Method [14] (see Section 8 for details) should be utilized to uniformly encode L as a bit-string . Note that in both versions of the WebAuthn protocol, the server S may choose to be long enough to encode hidden L ( is also called “user handle” and the specification [35] states in Section 14.6.1 that “It is RECOMMENDED to let the user handle be 64 random bytes, and store this value in the user’s account”).

Apart from sending the group element, the server S must indicate which curve has been chosen for the key agreement. The same curve must be chosen by as the domain parameters for the signature scheme. Therefore, the preference list that the server sends to the authenticator is a natural and legal place to indicate the curve (for details, see Section 8.3).

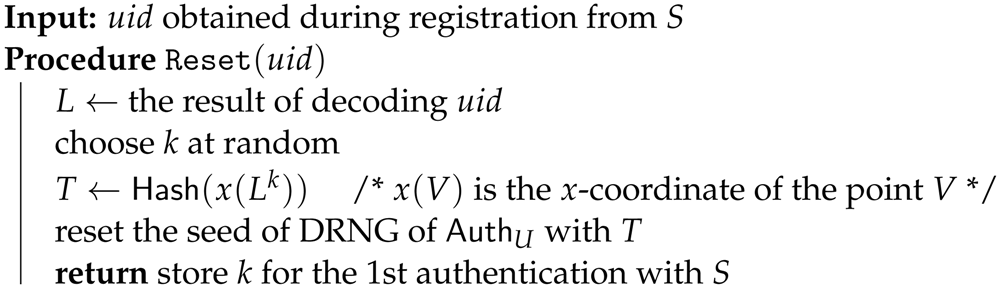

Following the FIDO2 specification, , , and the server’s preference list are delivered to the authenticator by the client, unaware of the evil intent of S and . Note that, unlike other parameters from S, the identifier is not hashed before delivering to the authenticator. This is the key feature enabling efficient attack.

recognizes of the “colluding server” and follows the registration procedure by creating a dedicated key pair using the curve indicated by the server. The key is chosen uniformly at random, and the messages are returned to the client following the FIDO2 specification. However, internally executes the following steps (Algorithm 12):

| Algorithm 12 Resetting DRNG by . |

|

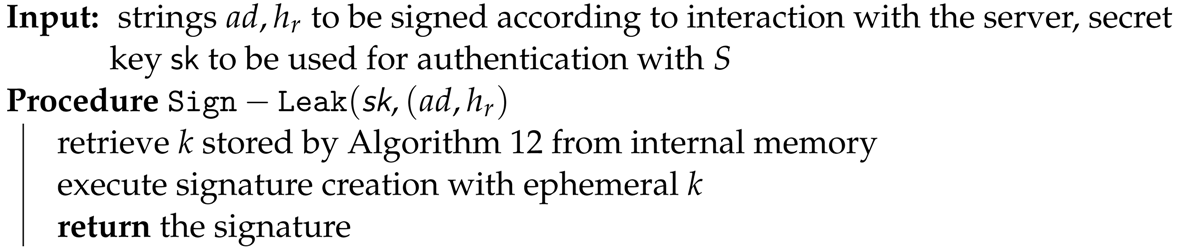

From now on, of creates all random numbers based on the seed T, but S still has to learn T. This is achieved during the first authentication of against S. Namely, creates the signature in a specific way (Algorithm 13).

| Algorithm 13 Leaking seed T. |

|

During the first authentication, S can recover the seed T of the of in the following way (Algorithm 14).

| Algorithm 14 Recalculating seed T. |

|

From now on, S knows all random values generated by , so it, in particular, may recalculate all secret keys generated during registrations that occur after the first authentication with S. The only issue is to align the output with the registration moment. It may require some work and guesses, but it is still possible.

Unfortunately, the secret keys created by before the discussed moment are also unsafe. Namely, assume that has registered with a service before the first authentication with S. In this case, S cannot derive the key for service , as it has been created before a shared seed T has been established. The key and its corresponding key cannot be changed by S since S would first have to impersonate . Unfortunately, can be broken by in the case of standard signatures based on the discrete logarithm problem. Namely, these signatures use a one-time ephemeral value chosen at random. Typically, this ephemeral value must not be revealed since, otherwise, the signing key will be leaked. However, S may derive the same ephemeral value using its clone of the DRNG used by . So, once S learns a single signature delivered to , it can derive . So, the security depends solely on the secrecy of the signature returned by .

Remark 1.

The element is not the only one to pose a threat. The random chosen by can be used similarly if the server’s public key is somehow available to .

Remark 2.

Note that if the auditor logs the first signature and can recover the secret key , then they can calculate T. Indeed, for the signature , where , one might define , , and then and the left-hand side of the equation can be recalculated. Thus, hiding requires keeping away from an auditor. For tamper-proof authenticators, this is automatically the case.

6. RSA Signatures

The list of signature algorithms to be used by ([33], Section 3.7) also contains RSA signatures with RSA PSS and RSA PKCS v1.5 encodings. (Note, however, that according to a disclaimer, the “list does not suggest that this algorithm is or is not allowed within any FIDO protocol”.) Both encodings are standardized in [36]. In ([33], Section 3.7), the lengths of the RSA keys are specified as follows:

- 2048-bit RSA PSS;

- -bit RSA PKCS v1.5 ().

That is, the RSA key length is at least 2048 bits. In the case of PSS encoding, the salt field can be random. This field is recovered by the signature verifier and thus can be used to transmit a covert message (as noted in [36], page 36). In point 4 on page 36 of [36], we read that “Typical salt lengths in octets are hLen (the length of the output of the hash function Hash) and 0”. As a result, this channel may have a capacity of at least 256 bits.

From now on, we consider a deterministic version of the RSA signatures (RSA PKCS v1.5 or RSA PSS without random salt), which makes it much harder to mount a kleptographic attack at the signing stage. However, a malicious manufacturer can still benefit from the key generation process. The paper [37] presents a kleptographic RSA key generation procedure. An accelerated version is presented in [38] (it is even faster than the OpenSSL implementation of the honest RSA key generation procedure). The papers [37,38] use the ephemeral–static version of the DH protocol, where the ephemeral part is present in the RSA modulus.

There are other ways to install a backdoor in the RSA key, cf. [39] and the references given there, but we adapt the idea presented in [37,38] to also show the feasibility of a linking attack in the case where uses RSA signatures during FIDO2 execution. We also present an impersonation attack, in which we point at a capability of the RSA backdoor that, to our knowledge, has not been discussed in the literature so far. That is, we point to the possibility of transmitting a ciphertext in a prime factor of the RSA modulus.

6.1. Diffie–Hellman Protocol in the RSA Modulus

Let us recall a fragment of GetPrimesFast(bits,e,,) procedure from [38] (Algorithm 15).

| Algorithm 15 Fragment of the procedure for fast primes generation. |

|

Let us explain a few details. In line 2, the length of prime numbers is determined. In line 3, the first prime is generated from the seed . The exponent e must be co-prime to the order of the multiplicative group; hence, the condition is verified when running the GenPrimeWithOracleIncr function. In line 4, the size of random tail is set: in [38], the length of is denoted as , and placed in the RSA modulus is prefixed with 8 bits, , where is a 7-bit random string. In line 6, the first approximation of the RSA modulus is found. In the next line, the first approximation of the second prime is determined; however, is probably not a prime.

The next steps of the procedure, omitted above, consist of setting the least significant bit of and incrementing by 2 until a prime number is found.

The procedure GetPrimesFast includes loops, and sometimes the execution path returns to line 5, but and remain unchanged. By the Prime Number Theorem, the number t of increments by 2 of the number has the expected value .

Let denote the value of just after the last execution of line 5 and after setting the least significant bit. Then, for the final , we have

For a 2048-bit RSA modulus, we have . For a 4096-bit RSA modulus, the upper bound is around 1420. Therefore, taking into account the carry bits and the variance in the search of prime , it seems that usually no more than len+64 least significant bits of the calculated in line 6 will be affected by the component . Consequently, the value residing in the area of the most significant bits of is not at risk of being changed by the component , and it will be available to directly from the RSA modulus.
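The order of magnitude of the offset can be checked with a short sketch. It assumes the usual Prime Number Theorem heuristic: near x, roughly one in ln(x)/2 odd numbers is prime, so a search that increments by 2 moves the starting value by about ln(x) on average. The function name is hypothetical, and the reading that the quoted bound of about 1420 corresponds to a 2048-bit prime factor of a 4096-bit modulus is an assumption.

```python
import math


def expected_offset_to_prime(prime_bits: int) -> float:
    """Heuristic expected distance from a random odd start to the next prime.

    Expected number of increments by 2 is about ln(2**prime_bits) / 2,
    so the expected total offset is about ln(2**prime_bits).
    """
    return prime_bits * math.log(2)


offset_2048_modulus = expected_offset_to_prime(1024)  # prime factor of a 2048-bit modulus
offset_4096_modulus = expected_offset_to_prime(2048)  # prime factor of a 4096-bit modulus

# Number of low-order bits needed to hold an offset of this size.
bits_touched_4096 = math.ceil(math.log2(offset_4096_modulus))
```

Under this heuristic, the average offset itself occupies only about a dozen low-order bits, which is consistent with the generous margin left for carries and variance in the text above.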

The value has just 257 bits in length: in [38], a pair of twisted elliptic curves over a binary field , where , is defined. The element is represented in [38] as a compressed point : the x-coordinate and one bit of the y-coordinate. That is why is used in line 4 of the procedure GetPrimesFast. However, just the x-coordinate of the point is enough; hence, the expression can be replaced by the value 257.

Deeper modifications may include the following:

- Replacement of the twisted elliptic curves with the Elligator [40] or with the Elligator Squared on a binary curve [41] (see Section 8 for more details);

- Having switched to one of the methods [40,41], the ephemeral–static DH protocol utilized in [37,38] can be replaced with the ephemeral–ephemeral variant of the DH protocol, where the server’s ephemeral part is encoded in .

6.2. Linking Attack

- Attack result:

learns from public key created by containing a ciphertext decryptable by .

- Attack prerequisites:

We assume that an ephemeral–static version of the DH protocol has already been executed. Hence, the asymmetric key of the is stored by . Consequently, in this version of the DH protocol, does not need to be an active participant. It suffices that learns the public RSA keys generated by .

- Auxiliary techniques:

- Embedding a ciphertext into an RSA modulus:

- Pessimistically, consider the smallest size of RSA keys allowed in WebAuthn, which is 2048 bits. Then, we may assume that from line 4 of GetPrimesFast equals . We assume that the component from (1) will usually change no more than len tailing bits of . Thus, in the case of calculated in line 5 of GetPrimesFast, we have leading bits unchanged when we add to . Therefore, the 695 most significant bits of may carry a ciphertext. Note that when the execution path returns to line 5, then it suffices to re-randomize , e.g., 128 least significant bits of . Consequently, the ciphertext bits of remain unchanged, and enough entropy is still provided.

- Diffie–Hellman key exchange:

- Two options are available:

- Ephemeral–static DH: as mentioned above, in this version, the asymmetric key of is stored on , but does not have to be an active participant of the FIDO2 protocol.

- Ephemeral–ephemeral DH: if this version is implemented on malicious authenticators, then the cooperating server’s ephemeral key shall be transferred in (see Section 8 for details).

- Algorithm:

It is a modified version of the attack presented in [37,38]. generates during registration so that it passes to S as a ciphertext hidden in .

This time, the shared secret established using the DH protocol is not used as a seed to generate the prime . The factor shall now be generated at random. In this attack, the shared secret is used to derive a key for encrypting a linking identifier. The ciphertext is directly embedded in the generated modulus.

6.3. Impersonation Attack

The previous attack provides more than enough space to leak the identity . On the other hand, the ciphertext space is too small to leak the factorization of an RSA modulus.

- Attack result:

can factor the RSA modulus of generated by and derive the corresponding signing key . Additionally, the channel for covert ciphertexts used in the RSA modulus is widened, and there are new opportunities for an attack, e.g., a covert transmission of the DRNG seed, or linking information for other keys and services.

- Attack prerequisites:

We assume that and share a secret key obtained, for example, by the Diffie–Hellman protocol (see Section 8).

- Auxiliary techniques: Embedding two ciphertexts into an RSA modulus

The channel for encryption will be extended. The first ciphertext is created as in the case of the linking attack.

The second ciphertext is available to only after factoring the RSA modulus. In [37], the prime is generated in a slightly different manner: only the upper half of is generated from the shared seed , and the half with least significant bits is allowed to be random. According to [37], the modulus can be efficiently factorized by : knowledge of the most significant bits of suffices to run the Coppersmith’s attack [42] to find . Accordingly, we modify the accelerated attack from [38], where the prime is generated in the following way:

- First, a bit string of length is obtained from a DRNG with the seed (so the entire number is obtained); the two most significant bits and the least significant bit of are set to ‘1’.

- The next step is to increment by 2 until becomes a prime number. The procedure includes loops, and sometimes the execution path goes back to regenerate the number by the DRNG seeded with . In such a case, the next block of pseudorandom bits is taken from the generator, and the two uppermost bits and the least significant bit of are again set to ‘1’. As previously, by the Prime Number Theorem, the expected number of increments by 2 of the number is . Let denote the value of immediately after taking it from the generator for the last time and setting the least significant bit and the two uppermost bits to ‘1’. Then, for the final , we have

We modify the above procedure. Instead of taking bits from a generator and assigning them to (initially, and each time when is re-generated), we apply the following steps:

We expect that the buffer is usually large enough to completely absorb all the increments (2). Knowledge of the bit string is sufficient for to factorize the RSA modulus with Coppersmith’s attack. Having the factor , the adversary can compare it with the output of the generator and immediately recover m.

- Algorithm:

The shared secret established with the help of the DH protocol is used to seed the pseudorandom number generator. The first portion of the output bits from this generator is used to generate the first ciphertext, that is, the one to be embedded in the upper half of the RSA modulus. The next portions are consumed on demand to generate the bit strings needed by Algorithm 16.

| Algorithm 16 Modified method of generating . |

|

Remark 3.

A simplified attack version can be implemented to make factorization faster for . That is, exactly like in [38], the entire number is generated from the shared seed , not just its upper half. However, one ciphertext can still be embedded in the most significant area of the generated modulus, as in the case of the linking attack.

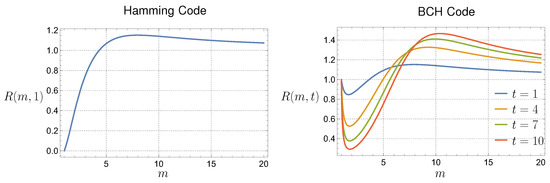

7. Leakage Acceleration with Error Correcting Codes

In the remaining part of the paper, we explain some technical details and ideas that have already been used but not discussed in depth. In this section, we discuss encoding C used in Section 4.1. Specifically, let us consider a secret message consisting of n bits that is leaked one bit at a time at a random position, with possible repetitions. This is an instance of the Coupon Collector’s Problem. It is known that the expected number of steps to recover all n bits is $n \cdot H_n$, where $H_n$ is the nth harmonic number.

Let us apply an error-correcting code. Let $n'$ be the number of bits of the encoded message. Of course, $n' > n$, so we seem to lose by the necessity to leak more bits. On the other hand, we do not need to collect all bits. If the code enables us to correct up to t errors, we may replace the missing bits by zeroes and run the error correction procedure. So it suffices to leak $n' - t$ bits out of $n'$. One can calculate that the expected time becomes $n' \cdot (H_{n'} - H_t)$.

Although the method seems straightforward, most error correction codes have a fixed block size and can be applied to strings with a length that is a multiple of the block size. Typically, an error-correcting code has two parameters: m is a size parameter, and t is the number of errors that can be corrected. Let denote the number of bits in the original string and total number of bits of its error-correcting code. So, using an error-correcting code makes sense if

Below, we discuss this problem for concrete examples where the leaked data are a Polish personal identity number (PESEL) or a phone number, both with 11 decimal digits. To encode each digit, we use 4 bits. This results in a bit string with 44 bits.

- Hamming Codes

For binary Hamming codes, the code length is typically $2^m - 1$, where m is a positive integer. The length of the message to be encoded is $2^m - m - 1$. Hamming codes can correct up to one error, i.e., $t = 1$.

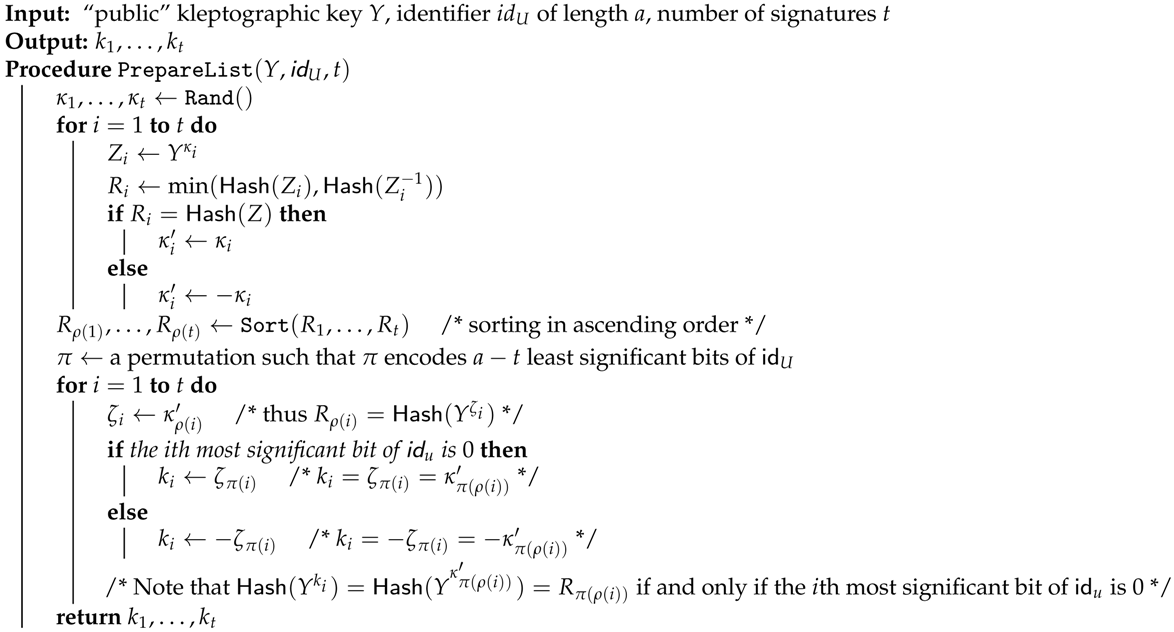

In Figure 6, we see that Hamming codes may reduce the expected time to leak a secret. However, it does not mean that we can save time for any length of the leaked message. For example, for , collecting all bits without error correction requires, on average, steps. For Hamming codes, we have to take and a Hamming code with bits. Then, the expected time to recover the secret is . In this case, the Hamming codes are useless.

Figure 6.

The figure shows the ratio R (Equation (3)) for the Hamming code and the BCH code.

- BCH Codes

Bose–Chaudhuri–Hocquenghem (BCH) codes can correct more than one error. According to [43], for code length , a BCH code can correct up to t errors, where the input string has length , where is a function bounded by .

In Figure 6, we provide plots of the ratio for different values of t and m. These plots illustrate that the R ratio is above 1 for relatively small values of m, indicating that error correction using BCH codes can be beneficial even with moderate code lengths. For our example, we consider the following configurations of the BCH codes:

- For $m = 6$, which corresponds to $n' = 63$, we have the following:

- When correcting $t = 2$ errors, the number of information bits is $n = 51$;

- When correcting $t = 3$ errors, the number of information bits is $n = 45$.

- For $m = 7$, which corresponds to $n' = 127$, we can correct 4 errors. Thus, $n = 99$ bits.

The expected times to recover the secret with these BCH codes and with no codes are as follows:

| n’ | n | t | Expected time without code | Expected time with BCH code |
|---|---|---|---|---|
| 63 | 51 | 2 | 230.46 | 203.38 |
| 63 | 45 | 3 | 197.77 | 182.38 |
| 127 | 99 | 4 | 512.56 | 424.43 |

In our example of an 11-digit secret, a 63-bit BCH code correcting 3 errors allows for the transmission of 45 bits of information with an expected time of 182.38, outperforming the 192.40 expected time for transmitting 44 bits without error correction. For leaking longer secrets, like cryptographic keys, the gain is even bigger.
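The expected times quoted in this section can be recomputed with a few lines of code. The sketch below assumes the coupon-collector model described above: collecting all n positions takes n times the nth harmonic number on average, and collecting all but t of n' positions takes n' times the difference between the harmonic numbers of n' and t. The function names are hypothetical.

```python
from fractions import Fraction


def harmonic(n: int) -> Fraction:
    """Exact n-th harmonic number H_n = 1 + 1/2 + ... + 1/n."""
    return sum((Fraction(1, k) for k in range(1, n + 1)), Fraction(0))


def expected_steps_plain(n: int) -> float:
    """Expected number of leaked bits needed to see all n positions."""
    return float(n * harmonic(n))


def expected_steps_coded(n_code: int, t: int) -> float:
    """Expected number of leaked bits needed to see n_code - t distinct positions."""
    return float(n_code * (harmonic(n_code) - harmonic(t)))


# (code length n', information bits n, correctable errors t)
bch_configs = [(63, 51, 2), (63, 45, 3), (127, 99, 4)]
rows = [
    (n_code, n, t, expected_steps_plain(n), expected_steps_coded(n_code, t))
    for n_code, n, t in bch_configs
]
plain_44 = expected_steps_plain(44)
```

Printing `rows` with two decimal places reproduces the values in the table, and `plain_44` gives the reference time for the uncoded 44-bit secret.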

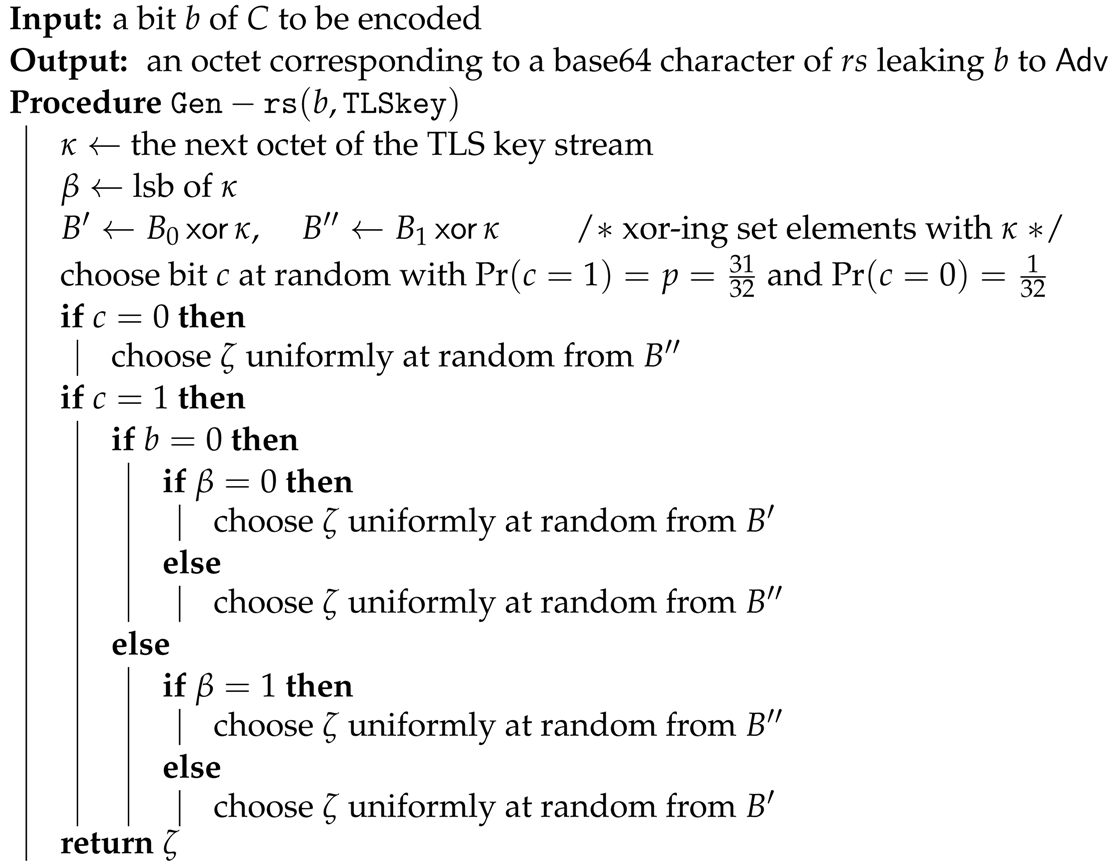

9. Kleptographic Attack on the Server Side

Now, we show that a server can run the WebAuthn protocol so that it creates a kleptographic channel to an adversary observing WebAuthn messages encrypted by TLS.

9.1. Channel Setup

A malicious server will always negotiate the TLS cipher suite with the key agreement protocol based on the (elliptic curve) Diffie–Hellman protocol and the AEAD encryption mode. All cipher suites used by TLS 1.3 meet this condition. We assume that for each setting of the domain parameters used in the DH protocol and supported by the server, the adversary has left some “public” key Y, and only knows the corresponding y such that , where g is a fixed generator specified by the domain parameters.

We assume that the adversary can see the whole encrypted TLS traffic between the client and the server. We assume that the shared secret resulting from the TLS handshake executed by the client and the server is derived by the ephemeral–ephemeral DH protocol. That is, the client chooses a random exponent , the server chooses a random exponent , and the master shared secret is . (Of course, this key is secure from .)

For the attack, the server creates a secondary key . Note that can calculate K as (recall that is transmitted in clear), while the client would have to break the CDH Problem to derive K. Note that K is unrelated to the TLS encryption. On the other hand, nothing can prevent and the server from deriving K.
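The secondary key derivation can be sketched in a toy setting. The sketch assumes that the server raises the adversary's key Y to its own ephemeral exponent, while the adversary raises the server's key share, visible in the handshake, to the secret y. The modulus, the generator, and all names below are illustrative assumptions; a real attack would use the elliptic curve group negotiated in TLS.

```python
import secrets

# Toy multiplicative group (assumption): a Mersenne prime modulus and base 3.
# G need not generate the whole group for this illustration.
P = 2**127 - 1
G = 3


def keygen() -> tuple[int, int]:
    """Return (secret exponent, public element G**secret mod P)."""
    secret = secrets.randbelow(P - 2) + 1
    return secret, pow(G, secret, P)


def server_secondary_key(adversary_public: int, server_exponent: int) -> int:
    """Server side: K = Y**b mod P, using its own ephemeral exponent b."""
    return pow(adversary_public, server_exponent, P)


def adversary_secondary_key(server_share: int, adversary_secret: int) -> int:
    """Adversary side: K = (g**b)**y mod P, using the share seen in the handshake."""
    return pow(server_share, adversary_secret, P)


y, Y = keygen()                      # adversary's key pair; Y is left on the server
b, server_share = keygen()           # server's ephemeral exponent and its key share
k_server = server_secondary_key(Y, b)
k_adversary = adversary_secondary_key(server_share, y)
```

Both parties arrive at the same value, while the client, knowing neither y nor the server's exponent, would have to solve a CDH instance to obtain it.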

9.2. Second Attack Phase

For the sake of simplicity, first, we describe the general attack idea and disregard some technical TLS issues. In Section 9.3, we explain the attack in a real setting.

During the authentication procedure, the server generates a random string and sends it in an encrypted form to the client. The malicious implementation on the server’s side changes the procedure in such a way that the first bits of encrypted will carry covert message M to the adversary :

- Generate the TLS bit stream S to be xor-ed with the plaintext to yield the ciphertext portion corresponding to within the AEAD cipher.

- Generate a separate ciphertext of length encrypted with the key K shared with .

- If is to have length , then generate a string of length at random.

- Set .

Note that in this way, the TLS traffic will contain C at a certain position, and will be able to derive . The rest of WebAuthn is executed according to the original specification. The client will obtain the same , and that looks random for the client.

Note that the adversary does not need to know the content of the TLS payload. It suffices to guess that the TLS traffic encapsulates FIDO2 traffic, and guess the location of C in the traffic and decrypt it with the key K.
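The general idea can be sketched as follows, ignoring the AEAD framing and the base64url constraint discussed in Section 9.3. The sketch assumes that the TLS ciphertext is the XOR of the plaintext with a keystream known to the server, so choosing the plaintext prefix as the covert ciphertext XOR-ed with the keystream makes the covert ciphertext appear on the wire. The function names and the byte lengths are hypothetical.

```python
import os


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two byte strings, truncating to the shorter one."""
    return bytes(x ^ y for x, y in zip(a, b))


def build_challenge(keystream: bytes, covert: bytes, total_len: int) -> bytes:
    """Build a challenge whose TLS encryption starts with the covert ciphertext."""
    if len(covert) > total_len or len(keystream) < len(covert):
        raise ValueError("covert ciphertext does not fit into the challenge")
    prefix = xor_bytes(covert, keystream)              # becomes `covert` after the TLS XOR
    padding = os.urandom(total_len - len(covert))      # remaining bytes stay random
    return prefix + padding


keystream = os.urandom(32)            # stand-in for the TLS keystream portion
covert = os.urandom(16)               # stand-in for the ciphertext encrypted under K
challenge = build_challenge(keystream, covert, 32)
wire_bytes = xor_bytes(challenge, keystream)           # what the observer sees
```

Since the covert ciphertext is itself pseudorandom and the keystream is unknown to the client, the resulting challenge is expected to look random to the client.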

In the case of FIDO2 registration, the number of bits to embed the ciphertext is even larger: apart from one can use the random string of length .

9.3. Low-Level Details for TLS 1.3 Ciphertext and Base64url Encoding

According to the programming interface documentation of the Web Authentication Protocol ([35], Section 5), the server communicates with the client’s browser through a JavaScript application run on the client’s side. The key point is that the objects containing the server’s random strings are encoded as base64url strings. The problem to solve is how to encode the ciphertext C for the adversary in an innocent-looking stream ciphertext of the base64url codes of these strings.

The base64url encoding maps 64 characters to octets. Namely, it maps the following:

- A, B, …, Z to 01000001, 01000010, …, 01011010;

- a, b, …, z to 01100001, 01100010, …, 01111010;

- 0, 1, …, 9 to 00110000, 00110001, …, 00111001;

- The characters - and _ to 00101101, 01011111.

Note that in this way, only 64 octets out of 256 are utilized as base64url codes.

The encoding creates a challenge for the malicious server: one cannot use the next byte b of a ciphertext C and simply set the next byte of the TLS ciphertext to b. Namely, after decryption with the TLS key (already fixed by the TLS handshake), the resulting octet might be invalid as a base64url code. Moreover, this would occur in $3/4$ of cases, as 192 of the 256 possible octets are not base64url codes.
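The obstacle can be checked with a short computation. The sketch below builds the set of octets used by base64url and counts, for one fixed target ciphertext octet, how many of the 256 possible keystream octets would decrypt it to a valid code. The names `B64URL_OCTETS` and `naive_embedding_is_valid` are hypothetical, and the target octet `0x5A` is an arbitrary choice.

```python
import string

# The 64 ASCII octets used by base64url: A-Z, a-z, 0-9, '-' and '_'.
B64URL_OCTETS = frozenset(
    (string.ascii_uppercase + string.ascii_lowercase + string.digits + "-_").encode("ascii")
)


def naive_embedding_is_valid(target_ct_octet: int, keystream_octet: int) -> bool:
    """True if forcing the ciphertext octet still decrypts to a base64url code."""
    return (target_ct_octet ^ keystream_octet) in B64URL_OCTETS


# XOR with a fixed octet is a bijection, so exactly 64 keystream octets work.
valid_count = sum(naive_embedding_is_valid(0x5A, s) for s in range(256))
invalid_fraction = (256 - valid_count) / 256
```

For a uniformly distributed keystream octet, the naive embedding therefore fails with probability `invalid_fraction`, independently of the target octet.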

The embedding of bits of a ciphertext C must be based only on what the adversary can see during the TLS transmission. Below, we sketch a method that embeds one bit per base64url character but with a small error probability.

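Our method focuses on the least significant bit (lsb) of the base64url codes. One can check that 0 occurs on the lsb position 31 times, while 1 occurs there 33 times. (And for no position of base64url codes is there a perfect balance between the numbers of zeroes and ones.) Let $B_0$ denote the set of base64url codes with the least significant bit equal to 0, and let $B_1$ be the set of the remaining base64url codes. To encode a bit b, the server executes Algorithm 17 to determine the next character $\rho$ of the random string:

| Algorithm 17 Encoding a bit of C in an octet. |

| 1. Let $s$ be the octet of the TLS bit stream S at the current position; set $\beta = b \oplus \mathrm{lsb}(s)$. |

| 2. If $\beta = 1$, choose $\rho$ uniformly at random from $B_1$. |

| 3. If $\beta = 0$, then with probability $31/32$ choose $\rho$ uniformly at random from $B_0$; otherwise, choose $\rho$ uniformly at random from $B_1$. |

| 4. Output $\rho$; the corresponding octet of the TLS ciphertext is $c = \rho \oplus s$. |

Let us discuss Algorithm 17. First, note that the encrypted stream is correct from the client’s point of view: $c \oplus s = \rho \in B_0 \cup B_1$, so $c$ is a ciphertext of a base64url code. Therefore, the client will not detect any suspicious encoding error.

For each base64url code $\rho$, the probability that $c$ will be the ciphertext of $\rho$ is $\frac{1}{64}$, as $\beta$ is uniformly distributed. Indeed, if $\rho \in B_0$, then it can be chosen only for $\beta = 0$, and then with the conditional probability $\frac{31}{32} \cdot \frac{1}{31} = \frac{1}{32}$. So, the overall probability of obtaining $\rho$ equals $\frac{1}{2} \cdot \frac{1}{32} = \frac{1}{64}$. If $\rho \in B_1$, then it is chosen with probability $\frac{1}{2} \cdot \frac{1}{33} + \frac{1}{2} \cdot \frac{1}{32} \cdot \frac{1}{33} = \frac{1}{64}$. Therefore, the client will detect no irregularity concerning the statistics of occurrences of the base64url codes in the random string.

If $\beta = 1$, then $c$ always encodes b from the adversary’s point of view. So, the encoding of b is correct. If $\beta = 0$, this is no longer true, as from the adversary’s point of view, the TLS ciphertext always encodes $\mathrm{lsb}(c)$. So, with conditional probability $\frac{1}{32}$, this is incorrect. Hence, the overall probability that b is not encoded correctly is $\frac{1}{2} \cdot \frac{1}{32} = \frac{1}{64}$. So, if C is a ciphertext of length 128, the expected number of falsely encoded bits is 2. There are only $\binom{128}{2} = 8128$ options to choose 2 error positions out of 128.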
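The following sketch gives one possible implementation of the bit encoding that is consistent with the figures stated in this section: 31 codes with lsb 0, 33 codes with lsb 1, and an expected 2 falsely encoded bits out of 128. The names `B0`, `B1`, `encode_bit`, and `exact_distribution` are illustrative, and the sketch assumes that the bit the plaintext code must carry is uniformly distributed.

```python
import secrets
import string
from fractions import Fraction

ALPHABET = (string.ascii_uppercase + string.ascii_lowercase + string.digits + "-_").encode("ascii")
B0 = [c for c in ALPHABET if c & 1 == 0]  # base64url codes with lsb 0
B1 = [c for c in ALPHABET if c & 1 == 1]  # base64url codes with lsb 1


def encode_bit(b: int, s: int) -> int:
    """Choose a base64url octet rho so that lsb(rho ^ s) == b, up to a small error."""
    target = b ^ (s & 1)  # lsb that the plaintext code should have
    if target == 1:
        return secrets.choice(B1)
    # B0 is too small for a uniform output: divert 1/32 of these cases to B1.
    if secrets.randbelow(32) == 0:
        return secrets.choice(B1)  # the adversary will misread this bit
    return secrets.choice(B0)


def exact_distribution() -> dict:
    """Exact probability of each code, assuming a uniformly distributed target bit."""
    half = Fraction(1, 2)
    dist = {c: half * Fraction(31, 32) * Fraction(1, len(B0)) for c in B0}
    for c in B1:
        dist[c] = half * Fraction(1, len(B1)) * (1 + Fraction(1, 32))
    return dist


# A bit is misread only if the target lsb is 0 and the choice is diverted to B1.
ERROR_PROBABILITY = Fraction(1, 2) * Fraction(1, 32)
EXPECTED_ERRORS_IN_128_BITS = 128 * ERROR_PROBABILITY
```

Computing the distribution with exact fractions, rather than by sampling, makes it possible to confirm that every code has the same probability and that the client sees no statistical bias.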

The encoding errors may be dealt with in two ways. The first option applies when the adversary obtains a key that can be effectively tested. For example, suppose that the plaintext of C is the seed to the generator used by the server to derive its ephemeral TLS key share. In that case, the adversary may guess the error positions, recalculate the key share according to the guess, and test the result against the key share really sent by the server. Finding the right seed is relatively easy, as the number of possible guesses is small. The second option is to insert an error detection code in the plaintext of C and use a stream cipher to create C. Both techniques can be combined.
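The first option can be sketched as an exhaustive search over error positions. The code below is an illustration under several assumptions: the function `derive_public_value` is a hash-based stand-in for the real chain from the seed through the DRNG to the TLS key share, decryption with K is omitted (with a stream cipher, bit errors in C map to the same positions in the plaintext), and all names are hypothetical.

```python
import hashlib
from itertools import combinations


def derive_public_value(seed: bytes) -> bytes:
    """Stand-in (assumption) for deriving the server's TLS key share from a seed."""
    return hashlib.sha256(b"toy-derivation" + seed).digest()


def flip_bits(data: bytes, positions) -> bytes:
    """Return a copy of data with the bits at the given positions inverted."""
    out = bytearray(data)
    for p in positions:
        out[p // 8] ^= 0x80 >> (p % 8)
    return bytes(out)


def recover_seed(received: bytes, observed_value: bytes, max_errors: int = 2):
    """Try all patterns of at most max_errors bit errors; return the matching seed."""
    nbits = 8 * len(received)
    for e in range(max_errors + 1):
        for positions in combinations(range(nbits), e):
            candidate = flip_bits(received, positions)
            if derive_public_value(candidate) == observed_value:
                return candidate
    return None


# Demonstration with a 128-bit seed and two encoding errors.
true_seed = bytes(range(16))
observed = derive_public_value(true_seed)
received = flip_bits(true_seed, (5, 77))
recovered = recover_seed(received, observed)
```

For a 128-bit string and at most two errors, the search tests at most 1 + 128 + 8128 = 8257 candidates. If more errors occur, `max_errors` has to be increased at the cost of a larger search space.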

9.4. Attack Consequences

There are many ways to exploit the hidden channel described above. From our point of view, the main one is the possibility to deactivate the protection provided by the TLS channel against the adversary: in this case, the message hidden in C is the seed for the DRNG used by the server to generate its TLS ephemeral keys. In this way, the adversary gains access to the content of the TLS channel. Then, the adversary can take advantage of the linking and impersonation attacks described in the earlier sections.

Defending against the described attack is not possible within TLS. It is necessary to reshape FIDO2, as it is generally risky to send unstructured random strings over TLS.

10. Final Remarks and Conclusions

FIDO2 is an excellent example of a careful and spartan design of an authentication framework that addresses practical deployment issues remarkably well. FIDO2 has significant advantages concerning its simplicity and flexibility on one side and personal data protection on the other side.

At first look, it seems that nothing can go wrong during FIDO2 implementation, even if the party deploying the system is malicious: the authenticators are well separated from the network and the server, and their functionalities are severely restricted, leaving little or no room for an attack. To some extent, the client plays the role of a watchdog, controlling the information flow to the authenticator.

We show that the initial hopes are false. We have presented a full range of attacks where malicious servers and authenticators use kleptography with severe consequences:

- Dismantling privacy protection by enabling a (third party) adversary to link different public keys generated by an authenticator;

- Enabling the impersonation of the authenticator against a (third party) server.

The attacks are kleptographic: there are no detectable changes in the information flow, and the protocol execution appears to be honest. The kleptographic setup is straightforward and requires only minimal changes in protocol execution.

The real threat is a false sense of security: security audits and certification may be waived or can be superficial and fail to detect kleptographic trapdoors. The threats may propagate: a designer of a sensitive service may falsely assume that the protection given by FIDO2 is tight, while the attacker may be able to clone the authenticator remotely.

Final Note

This paper does not provide any link to a prototype implementation of the attacks. This is intentional: we do not want to facilitate any misuse of the information contained in this paper. The extent of the information provided is limited to what an auditor should look for in the inspected system components as potentially malicious code.

Author Contributions

Conceptualization, M.K. and P.K.; Methodology, M.K. and M.Z.; Software, P.K.; Validation, M.K., A.L.-D. and P.K.; Formal analysis, M.K. and P.K.; Investigation, A.L.-D., P.K. and M.Z.; Writing—original draft, M.K., A.L.-D., P.K. and M.Z.; Writing—review & editing, M.K. and P.K.; Supervision, M.K.; Project administration, M.K. and P.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| CC | Common Criteria [47] |

| CSA | Cybersecurity Act [4] |

| CTAP | Client To Authenticator Protocol |

| DRNG | Deterministic Random Number Generator |

| EDIW | European Digital Identity Wallet |

| eIDAS | Electronic Identification, Authentication, and Trust Services; see [2] |

| ENISA | European Union Agency for Network and Information Security |

| FIDO | Fast Identity Online |

| GDPR | (EU) General Data Protection Regulation [3] |

References

- The European Parliament and the Council of the European Union. Regulation (EU) 2024/1183 of the European Parliament and of the Council of 11 April 2024 Amending Regulation (EU) No 910/2014 as Regards Establishing the European Digital Identity Framework. 2024. Available online: https://eur-lex.europa.eu/eli/reg/2024/1183/oj (accessed on 22 October 2024).

- The European Parliament and the Council of the European Union. Regulation (EU) No 910/2014 of the European Parliament and of the Council of 23 July 2014 on electronic identification and trust services for electronic transactions in the internal market and repealing Directive 1999/93/EC. Off. J. Eur. Union 2014, 257, 73–114. Available online: https://eur-lex.europa.eu/eli/reg/2014/910/oj (accessed on 2 December 2024).

- The European Parliament and the Council of the European Union. REGULATION (EU) 2016/679 OF THE EUROPEAN PARLIAMENT AND OF THE COUNCIL of 27 April 2016 on the protection of natural persons with regard to the processing of personal data and on the free movement of such data, and repealing Directive 95/46/EC (General Data Protection Regulation). Off. J. Eur. Union 2016, 119, 1–88. Available online: https://eur-lex.europa.eu/eli/reg/2016/679/oj (accessed on 2 December 2024).

- The European Parliament and the Council of the European Union. Regulation (EU) No 2019/881 of the European Parliament and of the Council of 17 April 2019 on ENISA (the European Union Agency for Cybersecurity) and on information and communications technology cybersecurity certification and repealing Regulation (EU) No 526/2013 (Cybersecurity Act). Off. J. Eur. Union 2019, 151, 15–69. Available online: https://eur-lex.europa.eu/eli/reg/2019/881/oj (accessed on 22 October 2024).

- National Security Archive. The CIA’s ‘Minerva’ Secret. Available online: https://nsarchive.gwu.edu/briefing-book/chile-cyber-vault-intelligence-southern-cone/2020-02-11/cias-minerva-secret (accessed on 25 November 2024).

- Bencsáth, B.; Pék, G.; Buttyán, L.; Félegyházi, M. The Cousins of Stuxnet: Duqu, Flame, and Gauss. Future Internet 2012, 4, 971–1003.

- Fillinger, M.; Stevens, M. Reverse-Engineering of the Cryptanalytic Attack Used in the Flame Super-Malware. In Proceedings of the Advances in Cryptology—ASIACRYPT 2015, Part II, Auckland, New Zealand, 29 November–3 December 2015; Springer: Berlin/Heidelberg, Germany, 2015; Volume 9453, pp. 586–611.

- Nemec, M.; Sýs, M.; Svenda, P.; Klinec, D.; Matyas, V. The Return of Coppersmith’s Attack: Practical Factorization of Widely Used RSA Moduli. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, CCS 2017, Dallas, TX, USA, 30 October–3 November 2017; pp. 1631–1648.

- Bernstein, D.J.; Lange, T.; Niederhagen, R. Dual EC: A Standardized Back Door. LNCS Essays New Codebreakers 2015, 9100, 256–281.

- Bors, D. CVE-2024-3094—The XZ Utils Backdoor, a Critical SSH Vulnerability in Linux. Available online: https://pentest-tools.com/blog/xz-utils-backdoor-cve-2024-3094 (accessed on 24 November 2024).

- Poettering, B.; Rastikian, S. Sequential Digital Signatures for Cryptographic Software-Update Authentication. In Proceedings of the ESORICS 2022, Part II, Copenhagen, Denmark, 26–30 September 2022; Springer: Berlin/Heidelberg, Germany, 2022; Volume 13555, pp. 255–274.

- Kubiak, P.; Kutylowski, M. Supervised Usage of Signature Creation Devices. In Proceedings of the Information Security and Cryptology—9th International Conference, Inscrypt 2013, Guangzhou, China, 27–30 November 2013; Springer: Berlin/Heidelberg, Germany, 2013; Volume 8567, pp. 132–149.

- Young, A.L.; Yung, M. Malicious Cryptography—Exposing Cryptovirology; Wiley: Hoboken, NJ, USA, 2004.

- Young, A.L.; Yung, M. Kleptography: Using Cryptography Against Cryptography. In Proceedings of the Advances in Cryptology—EUROCRYPT ’97, Konstanz, Germany, 11–15 May 1997; Springer: Berlin/Heidelberg, Germany, 1997; Volume 1233, pp. 62–74.

- Xia, W.; Wang, G.; Gu, D. Post-Quantum Backdoor for Kyber-KEM. Selected Areas in Cryptography 2024. 2024. Available online: https://sacworkshop.org/SAC24/preproceedings/XiaWangGu.pdf (accessed on 2 December 2024).

- Green, M. A Few Thoughts on Cryptographic Engineering: A Few More Notes on NSA Random Number Generators. 2013. Available online: https://blog.cryptographyengineering.com/2013/12/28/a-few-more-notes-on-nsa-random-number/ (accessed on 2 December 2024).

- Persiano, G.; Phan, D.H.; Yung, M. Anamorphic Encryption: Private Communication Against a Dictator. In Proceedings of the Advances in Cryptology—EUROCRYPT 2022, Part II, Trondheim, Norway, 30 May–3 June 2022; Springer: Berlin/Heidelberg, Germany, 2022; Volume 13276, pp. 34–63.

- Janovsky, A.; Krhovjak, J.; Matyas, V. Bringing Kleptography to Real-World TLS. In Proceedings of the Information Security Theory and Practice—12th IFIP WG 11.2 International Conference, WISTP 2018, Brussels, Belgium, 10–11 December 2018; Blazy, O., Yeun, C.Y., Eds.; Volume 11469, pp. 15–27.

- Berndt, S.; Wichelmann, J.; Pott, C.; Traving, T.; Eisenbarth, T. ASAP: Algorithm Substitution Attacks on Cryptographic Protocols. In Proceedings of the ASIA CCS ’22: ACM Asia Conference on Computer and Communications Security, Nagasaki, Japan, 30 May–3 June 2022; Suga, Y., Sakurai, K., Ding, X., Sako, K., Eds.; ACM: New York, NY, USA, 2022; pp. 712–726.

- Schmidbauer, T.; Keller, J.; Wendzel, S. Challenging Channels: Encrypted Covert Channels within Challenge-Response Authentication. In Proceedings of the 17th International Conference on Availability, Reliability and Security, ARES ’22, New York, NY, USA, 23–26 August 2022.

- Barbosa, M.; Boldyreva, A.; Chen, S.; Warinschi, B. Provable Security Analysis of FIDO2. In Proceedings of the Advances in Cryptology—CRYPTO 2021, Part III, Virtual, 16–20 August 2021; Springer: Berlin/Heidelberg, Germany, 2021; Volume 12827, pp. 125–156.