Abstract

With the rapidly growing demand for real-time performance and high data throughput, the limited on-board computing resources of vehicles have left traditional computing services unable to meet these requirements. Vehicular edge computing offers a viable solution to this challenge, yet roadside units (RSUs) are prone to overloading under congested traffic conditions. In this paper, we introduce an optimal task offloading strategy for congested conditions in a mixed coverage scenario served by both 5G base stations and RSUs, with the aim of improving the utilization of computing resources and reducing the task processing delay. This study employs long short-term memory networks to predict the load status of base stations. Then, based on the prediction results, we propose an optimized task offloading strategy using the proximal policy optimization algorithm, whose main constraint is that the data transmission rates of users satisfy the quality of service requirements. The strategy effectively alleviates the overload of RSUs under congestion and improves service quality. The simulation results substantiate the effectiveness of the proposed strategy in reducing the task processing delay and enhancing the quality of service.

1. Introduction

With the rapid development of 5G and B5G communication and vehicular network technologies [1,2], various novel applications have emerged for vehicles and passengers, such as autonomous driving, augmented reality (AR), and virtual reality (VR). The massive computation load generated by these emerging applications usually cannot be handled by the local computing resources of the vehicles. Normally, tasks can be processed through the Internet of Vehicles (IoV) network and the cloud computing center of an intelligent transportation system (ITS) with the traditional cloud service model [3,4], but this incurs a large transmission delay. By introducing edge computing (EC) [5,6,7,8] into vehicular networks, the vehicular edge computing network (VECN) becomes an effective way to solve these problems.

In a vehicular networking environment, EC services are deployed at roadside units (RSUs) to expand the computing capabilities of vehicles. However, because of deployment costs, the computing resources of the RSUs in a given area are limited. Under traffic congestion, the many vehicles in the service area may offload tasks to the corresponding EC servers at the same time, overloading the servers. Relying solely on RSUs therefore makes it difficult to meet surging computational demands, which increases the task processing delay. How to alleviate RSU overload under traffic congestion while ensuring service quality is thus a major challenge in VECNs.

In existing urban transportation networks, the 5G cellular network also provides signal coverage for vehicles and passengers on urban roads, and roadside base stations (BSs) can assist vehicular networks in providing computing services [9]. On urban road segments, moving vehicles and passengers are therefore covered by a mixed network of RSUs and BSs. Existing solutions to RSU overload mainly consider task allocation, the use of RSUs in other areas, and drone-assisted offloading [10,11,12]: tasks are allocated to different targets in certain proportions, offloaded from the local RSU to an RSU in an adjacent area, or offloaded with the assistance of drones. In addition, auxiliary offloading schemes based on BSs have been introduced. However, because intelligent connected vehicles, RSUs, and BSs use different communication systems, the protocols involved are complex and may conflict, which makes it difficult to know the load status of BSs in real time and thus affects task offloading decisions [13]. Moreover, owing to the dynamic nature of VECNs, it is necessary to consider whether a vehicle will leave the coverage range of its RSU or BS during the offloading process; the position of each vehicle relative to its offloading target and its moving speed must therefore be considered.

In this paper, we propose an optimal task offloading scheme for congested traffic conditions in a mixed coverage scenario. In a congested area covered by both RSUs and BSs, a task can be divided into three parts: one computed locally, one offloaded to the RSU, and one offloaded to the BS. However, the real-time uncertainty of the BS load, vehicle mobility, and the dynamic transmission channel make it challenging to design an efficient task offloading scheme. We therefore propose a real-time prediction method based on long short-term memory (LSTM) for the load status of BSs. Then, for the task offloading allocation problem, we use a proximal policy optimization (PPO) algorithm to find the optimal allocation strategy, which provides vehicle users with a better quality of service (QoS) and alleviates the overload of RSUs. The main contributions are summarized as follows:

- We construct a task offloading system model for a mixed coverage scenario under congested traffic, using both BSs in the 5G network and RSUs in an ITS to assist vehicles in offloading computation tasks. BSs assist RSUs in task offloading, and computing tasks can be allocated among local computation, RSUs, and BSs.

- We formulate an optimal task offloading problem in which the real-time uncertainty of BS loading, the moving speed of vehicles, and the dynamic transmission channels are primarily considered.

- We propose a two-stage algorithm to solve the formulated joint optimization problem. First, a real-time prediction method based on LSTM extracts BS load patterns from historical data. Then, considering the real-time positions of vehicles relative to the RSUs and BSs in the mixed coverage scenario and the real-time load status of BSs, we propose a task allocation and offloading strategy based on the PPO algorithm to reduce the task offloading delay. Compared with “RSU-Local” collaborative offloading, the task processing delay is reduced by approximately 5.6%, and the offloading strategy is robust under congested traffic conditions.

- We conduct extensive experiments to verify the effectiveness of the proposed strategy.

The remainder of this article is organized as follows. Section 2 reviews related work. Section 3 presents the task offloading system model for the mixed coverage scenario. Section 4 proposes an LSTM-based BS load prediction scheme and a PPO-based vehicle task offloading optimization scheme. Section 5 presents the simulation experiments and performance evaluation. Finally, Section 6 summarizes our work.

2. Related Work

Recently, how to alleviate the overload of EC servers on RSUs during traffic congestion while meeting the needs of users within a service area has attracted widespread attention. To address this issue, genetic algorithms, particle swarm optimization (PSO), deep Q-networks (DQNs), deep deterministic policy gradient (DDPG), PPO, and other improved algorithms have been introduced to determine the optimal task offloading strategy [14,15,16,17,18]. In [19], Yang et al. proposed a cooperative computation framework and a PSO-based algorithm to minimize the delay and cost of task offloading in VEC. Ling et al. [20] proposed a dynamic computation offloading algorithm based on the estimated time of arrival to optimize QoS and fairness in the IoV. Luo et al. [21] presented a DDPG-based task offloading method and highlighted the benefits of using unmanned aerial vehicles (UAVs) to optimize task offloading ratios in a 5G IoT environment, aiming to improve the efficiency of offloading decisions. Guo and Hong [22] proposed a DQN model based on deep reinforcement learning (DRL) for future intelligent transportation scenarios. This model balances the trade-off between computational capability and traffic conditions through vehicle-to-vehicle (V2V) self-organizing networks that share computational resources on an EC platform, and the authors introduced a new metric to resolve the conflicting objectives of traffic performance and computational capacity. Wu et al. [23] proposed a dynamic resource allocation scheme based on policy gradient DRL to optimize the computational performance and energy consumption of mobile edge computing (MEC) systems. In [24], Wang et al. introduced an actor–critic DRL-based method for task offloading in a multi-vehicle assisted MEC system, which improves system performance, accelerates convergence, and reduces overall operating costs by enhancing model training efficiency. Gao et al. [18] proposed the “Com-DDPG” algorithm, a multi-agent DRL method that uses DDPG to enhance offloading performance. Huang et al. [25] proposed a novel multi-objective reinforcement learning approach for a multi-objective offloading problem that considers both the service provider’s revenue and the quality of experience of vehicle users. Existing work shows that DRL is feasible and outperforms heuristic algorithms. However, some DRL algorithms, such as DDPG and multi-agent DRL algorithms, require a large amount of computing power and have poor robustness.

Urban roads are often covered by both 5G BSs and RSUs, and some research has explored the idle computing resources of roadside BSs. In [6], an energy-consumption-based multi-task offloading strategy is proposed to address the EC issue in vehicular networks. It deploys EC servers at RSUs and macro-BSs and offloads computing tasks to them through wireless cellular networks, enabling resource sharing and mutual benefit among V2V, vehicle-to-roadside (V2R), and vehicle-to-BS communications. Zhu et al. [10] proposed a heterogeneous EC architecture for the IoV that uses aerial relay stations to connect vehicles with nearby heterogeneous edge infrastructure for task offloading; by introducing a centralized training distributed execution (CTDE) multi-agent DRL algorithm, they aimed to optimize the system computational delay and ensure load balancing among edge infrastructures. Zeng et al. [26] combined LSTM and convolutional neural networks to predict the locations of vehicle users and thereby realize vehicle-to-BS task offloading. Li and Jiang [27] proposed a distributed strategy for offloading tasks to low-load BSs in an MEC environment that considers the computing and communication resources of the BSs. Pang et al. [28] proposed a vehicle–edge–cloud collaborative task offloading scheme that relies on the BS to extend cloud computing and uses a DRL approach for network optimization. However, existing solutions consider neither how to use the idle computing resources of BSs in a stable manner nor the constraints on offloading targets in mixed coverage scenarios.

In the mixed coverage scenario of BSs and RSUs on congested urban road segments, if vehicles allocate task offloading proportions without knowing the load status of the BS, an inappropriate allocation to the BS can result in task rejection or underutilization of its computing resources. Additionally, if a vehicle leaves the coverage range of its selected offloading target before the offloading delay has elapsed, the computation results may not be returned to the vehicle. Therefore, selecting vehicle offloading targets and allocating task offloading proportions are urgent problems that need to be addressed.

3. System Model and Problem Formalization

3.1. System Architecture and Operation

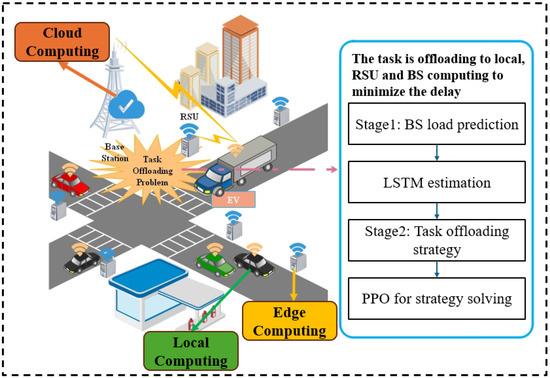

Figure 1 shows a scenario of mixed coverage of BSs and RSUs on urban road segments. An RSU is deployed on a road and provides computing services for the passing vehicles it covers. Under abnormal traffic congestion, a large number of vehicles are in the coverage area of the RSU; if all of them offload tasks to the RSU, the RSU becomes overloaded and cannot complete the tasks within the specified time. At the same time, the roadside BS in the same area has more computing resources and a larger coverage range, so it can also provide computing services for vehicles. However, because of communication protocol issues, vehicles cannot know the real-time load status of the BS.

Figure 1.

A scenario of mixed coverage of BSs and RSUs in urban road environments.

With the development of artificial intelligence and deep learning methods, the historical load status of BSs and the vehicle access records of previous time periods can help the decision center predict the load status of a BS for a future period, which helps the BS provide EC services for vehicles. In this paper, we minimize the overall system service delay to provide more suitable task offloading strategies for vehicles. The optimization strategy is divided into the following stages.

First, the BS provides historical load state data to the cloud computing center of the ITS. Within the current time period, the cloud computing center uses an LSTM to predict the load state of the BS for the next time period from the historical load state data and the vehicle access to the BS in previous time periods. In the first time period, the prediction relies only on the historical load status data provided by the BS.

Then, vehicles upload information such as the task size, location, speed, and acceleration to the cloud computing center. During the current time period, the cloud computing center uses the PPO algorithm to obtain an approximately optimal task offloading scheme based on the complexity of the uploaded tasks, the load status of the RSU, the predicted load status of the BS, the positions of the vehicles relative to the RSU/BS, and the vehicle speed.

Finally, the decision results are sent back to the vehicles. The vehicles perform task offloading, and whether each vehicle was able to access the BS/RSU is reported back to the cloud computing center. In the remaining time of the current period, the cloud computing center predicts the load status of the BS for the next period based on the historical data of the BS and these access results.

3.2. Task Offloading Problem Formalization

The delay of vehicle task offloading is influenced by several factors. Following the formulas in [17] for the delay of offloading to the RSU and of local computation, we derive the delay formulas for offloading to the BS and the RSU and for local computation.

In the proposed system, each task can be split among the RSU, the BS, and local computation, with a separate proportion of the task assigned to each. The data size of the task is s, and the vehicle’s transmission power is assumed to be fixed. The RSU, the BS, and the vehicle each process tasks at their own CPU frequency. The number of CPU cycles required to complete the computation task depends on the data size s and on k, which represents the computational complexity of the task. The main notations are summarized in Table 1.

Table 1.

Mathematical notation.

3.2.1. Time Delay Model

Based on Shannon’s theorem, the data transmission rates from vehicles to the RSU and to the BS are determined by the vehicle’s transmission power, the white noise, the interference N among multiple transmissions, and the bandwidth allocated to the vehicle by the RSU or by the BS, respectively.
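As a minimal illustration of the rate model, the sketch below computes an uplink rate with the standard Shannon form, bandwidth times log2(1 + SINR), where the SINR is the transmit power divided by the sum of the white noise and the interference. The function name, the `channel_gain` parameter (the text does not name one), and all numeric values are assumptions for illustration.

```python
import math


def shannon_rate(bandwidth_hz, tx_power_w, noise_w, interference_w, channel_gain=1.0):
    """Achievable uplink rate in bit/s: B * log2(1 + SINR).

    channel_gain is a hypothetical parameter; the text does not name one,
    so it defaults to 1.0 (received power taken equal to transmit power).
    """
    if bandwidth_hz <= 0:
        raise ValueError("bandwidth must be positive")
    sinr = tx_power_w * channel_gain / (noise_w + interference_w)
    return bandwidth_hz * math.log2(1.0 + sinr)


# Hypothetical values: same transmit power, the BS allocates twice the RSU's bandwidth.
rate_rsu = shannon_rate(10e6, 0.2, 1e-3, 1e-3)
rate_bs = shannon_rate(20e6, 0.2, 1e-3, 1e-3)
```

At a fixed SINR the rate scales linearly with the allocated bandwidth, which is why the bandwidths granted by the RSU and by the BS enter the two rates separately.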

Since the downlink transmission delay is much smaller than the uplink transmission delay, we neglect it and focus only on the computation delay and the uplink transmission delay. When a vehicle offloads part of a task to the RSU, that part incurs a transmission delay from the vehicle to the RSU and a computation delay at the RSU.

Similarly, we obtain the computation delay and transmission delay models when a vehicle offloads a task to the BS.

Therefore, the total delay of the RSU-assisted and BS-assisted portions of the task is the sum of the corresponding transmission and computation delays.

After offloading, the remaining portion of the task is computed locally by the vehicle, and its delay is determined by the size of this portion, the computational complexity k, and the local CPU frequency.

Combining the delay models for vehicle-to-RSU offloading, vehicle-to-BS offloading, and local processing gives the total delay for the vehicle to complete a task.
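To make the delay model concrete, the following sketch assumes the common formulation in which the uplink delay of an offloaded part is its data size divided by the transmission rate, and its computation delay is its CPU cycles (data size times k) divided by the CPU frequency of the target. How the three part delays combine is also an assumption here: the parts are taken to run in parallel, so the total is the largest part delay. All names and values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskSplit:
    rsu: float    # proportion of the task offloaded to the RSU (hypothetical name)
    bs: float     # proportion offloaded to the BS
    local: float  # proportion computed on board


def part_delays(split, s_bits, k, rate_rsu, rate_bs, f_rsu, f_bs, f_local):
    """Delay of each part in seconds, assuming uplink delay = bits / rate and
    compute delay = bits * k / CPU frequency (k in CPU cycles per bit).
    The downlink delay is neglected, as in the text."""
    t_rsu = split.rsu * s_bits / rate_rsu + split.rsu * s_bits * k / f_rsu
    t_bs = split.bs * s_bits / rate_bs + split.bs * s_bits * k / f_bs
    t_local = split.local * s_bits * k / f_local
    return t_rsu, t_bs, t_local


def total_delay(split, *args):
    # Assumption: the three parts are processed in parallel, so the task is
    # complete when the slowest part finishes.
    return max(part_delays(split, *args))


# s, k, uplink rates (bit/s), CPU frequencies of RSU, BS, vehicle (Hz); all hypothetical.
params = (1e6, 100, 1e7, 2e7, 1e10, 2e10, 1e9)
print(total_delay(TaskSplit(0.4, 0.4, 0.2), *params))  # about 0.044 s, set by the RSU part
```

Under these assumptions the RSU part dominates (about 0.044 s against 0.022 s for the BS part and 0.02 s locally), so shifting some of the RSU share to the BS would shorten the task; this is the kind of rebalancing the optimization performs.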

3.2.2. Task Offloading Decision Problem

Given the task offloading ratios allocated to the RSU and the BS, we obtain processing rate constraints for each target, which bound the processing rates of the task portions offloaded to the RSU, offloaded to the BS, and computed locally.

In addition to the task allocation and rate constraints, there are spatial relationship constraints among vehicles, RSUs, and BSs. These constraints use three reference delays, namely the delay when the whole task is computed locally, the delay when it is entirely offloaded to the RSU, and the delay when it is entirely offloaded to the BS, together with the minimum of these three delays.

Based on the position relationship between the RSU, the BS, and the vehicle, we decide whether the task should be offloaded to the RSU or the BS. The decision compares the current position of the vehicle, advanced at the vehicle’s current speed over the offloading delay, with the coverage boundary positions of the RSU and of the BS in which the vehicle is located. If the vehicle does not leave the coverage range of the current RSU/BS while traveling at this speed, its offloading strategy is not affected. Otherwise, the vehicle would leave the coverage range of the current RSU/BS, and it will not choose that RSU/BS as an offloading target.
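The coverage rule can be sketched as a simple feasibility filter. This sketch assumes one-dimensional motion at constant speed toward increasing positions, with each boundary being the exit edge of the current RSU or BS coverage; the function names and numbers are hypothetical.

```python
def stays_in_coverage(position_m, speed_mps, delay_s, boundary_m):
    """True if the vehicle is still inside the coverage area when the result is ready.

    Assumes 1-D motion toward increasing positions at constant speed, with
    boundary_m the exit edge of the current RSU/BS coverage.
    """
    return position_m + speed_mps * delay_s <= boundary_m


def feasible_targets(position_m, speed_mps, delay_rsu_s, delay_bs_s,
                     rsu_boundary_m, bs_boundary_m):
    """List the offloading targets the vehicle may use under the coverage rule."""
    targets = ["local"]  # on-board computation does not depend on coverage
    if stays_in_coverage(position_m, speed_mps, delay_rsu_s, rsu_boundary_m):
        targets.append("rsu")
    if stays_in_coverage(position_m, speed_mps, delay_bs_s, bs_boundary_m):
        targets.append("bs")
    return targets


print(feasible_targets(190.0, 15.0, 1.0, 1.0, 200.0, 400.0))  # ['local', 'bs']
print(feasible_targets(190.0, 0.0, 1.0, 1.0, 200.0, 400.0))   # ['local', 'rsu', 'bs']
```

The second call mirrors the stationary case discussed in the experiments: with zero speed the RSU remains usable even though the vehicle is close to its coverage boundary.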

Therefore, the task offloading optimization problem can be formulated as

subject to

Here, objective (17) minimizes the total task processing delay. Constraint (18) requires every processing rate to be larger than the minimum expected rate, constraint (19) requires the task split ratios to add up to 100%, and constraint (22) ensures that part of each task is always computed locally.
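For a single vehicle state, problem (17)–(22) can be approximated by brute force, which is useful as a sanity check for a learned policy. The sketch below is an exhaustive grid search, not the PPO method of this paper. It assumes the parallel-delay model, treats the processing rate of each part as the bits it handles divided by its delay (a hypothetical stand-in for constraint (18)), and omits the coverage constraints for brevity.

```python
import itertools


def part_delays(a_rsu, a_bs, a_loc, s, k, r_rsu, r_bs, f_rsu, f_bs, f_loc):
    """Per-part delays: uplink bits / rate plus bits * k / CPU frequency."""
    return (a_rsu * s / r_rsu + a_rsu * s * k / f_rsu,
            a_bs * s / r_bs + a_bs * s * k / f_bs,
            a_loc * s * k / f_loc)


def grid_search(s, k, r_rsu, r_bs, f_rsu, f_bs, f_loc, min_rate, n=20):
    """Try every split on a 1/n grid; return (delay, a_rsu, a_bs, a_loc) or None."""
    best = None
    for i, j in itertools.product(range(n + 1), repeat=2):
        if n - i - j <= 0:
            continue  # constraint (22): some work stays local; (19) holds by construction
        a_rsu, a_bs, a_loc = i / n, j / n, (n - i - j) / n
        delays = part_delays(a_rsu, a_bs, a_loc, s, k, r_rsu, r_bs, f_rsu, f_bs, f_loc)
        # Hypothetical processing rate of each used part: bits handled / part delay.
        rates = [a * s / t for a, t in zip((a_rsu, a_bs, a_loc), delays) if a > 0]
        if any(rate < min_rate for rate in rates):
            continue  # stand-in for the minimum-rate constraint (18)
        total = max(delays)  # assumption: the three parts run in parallel
        if best is None or total < best[0]:
            best = (total, a_rsu, a_bs, a_loc)
    return best


print(grid_search(1e6, 100, 1e7, 2e7, 1e10, 2e10, 1e9, min_rate=0.0))
```

The search must be repeated for every new state and its cost grows as (n + 1)^2, whereas a trained policy maps a state to a split in a single forward pass.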

Problem (17) with constraints (18)–(22) is a constrained optimization problem in the field of task offloading. Because objective (17) depends on the load state of the BSs, which is difficult to observe directly in the system, we introduce an LSTM to estimate the BS load state. We then use the PPO algorithm to solve the constrained optimization problem, aiming for stable and strong offloading performance. In the next section, we use this two-stage approach to solve the BS load estimation problem and the task offloading optimization problem.

4. LSTM for Load Estimation and PPO for Task Offloading Strategy

4.1. Load Estimation of BSs via LSTM

LSTM is a specialized type of recurrent neural network (RNN) designed to handle long sequences of data while addressing issues such as vanishing and exploding gradients. This architecture manages the flow of information through a gating mechanism, which allows it to learn and retain long-term dependencies effectively. The key component of the LSTM is its unique cell structure, which contains three gates: the forget gate, the input gate, and the output gate.

The forget gate decides which information, now outdated or irrelevant, should be discarded from the cell state, which keeps the network focused on the most pertinent data. The input gate determines which new information should be stored in the cell state, updating the internal state of the network accordingly. The output gate controls the value of the next hidden state and decides what information is passed on to the subsequent layers of the network, enabling the network to use this information for making predictions.

This gating mechanism allows the LSTM to capture and exploit long-term dependencies in data, making it particularly suitable for time-series prediction. Since the real-time load state of the BS cannot be accessed directly because of protocol conflicts or incompatibilities, the BS load must be estimated, and offloading decisions are based on these estimates. Given that the BS server load exhibits periodicity and volatility depending on traffic flow, incorporating an LSTM to predict BS load patterns is a practical and effective approach.
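The gate mechanism described above can be written out for one time step. The pure-Python sketch below implements the standard LSTM cell for a scalar input, such as one BS load sample; the parameter layout, the random initialization, and all names are assumptions, and a real predictor would be trained rather than randomly initialized.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step for a scalar input x (e.g. one normalized BS load sample).

    params[gate] = (w, U, b) for gate in "f" (forget), "i" (input), "o" (output)
    and "g" (candidate): w has length H, U is H x H, b has length H.
    """
    size = len(h_prev)

    def pre(gate, n):
        w, U, b = params[gate]
        return w[n] * x + sum(U[n][m] * h_prev[m] for m in range(size)) + b[n]

    forget = [sigmoid(pre("f", n)) for n in range(size)]  # what to discard from c
    inp = [sigmoid(pre("i", n)) for n in range(size)]     # what to write into c
    out = [sigmoid(pre("o", n)) for n in range(size)]     # what to expose as h
    cand = [math.tanh(pre("g", n)) for n in range(size)]  # candidate cell values
    c = [forget[n] * c_prev[n] + inp[n] * cand[n] for n in range(size)]
    h = [out[n] * math.tanh(c[n]) for n in range(size)]
    return h, c


def lstm_forward(xs, params, hidden_size):
    """Run the cell over a sequence and return the final hidden state."""
    h, c = [0.0] * hidden_size, [0.0] * hidden_size
    for x in xs:
        h, c = lstm_step(x, h, c, params)
    return h


def random_params(hidden_size, seed=0):
    """Hypothetical small random initialization, for illustration only."""
    rng = random.Random(seed)

    def vec():
        return [rng.uniform(-0.5, 0.5) for _ in range(hidden_size)]

    return {gate: (vec(), [vec() for _ in range(hidden_size)], vec()) for gate in "fiog"}


# A linear read-out of the last hidden state would give the predicted next-period load.
h_last = lstm_forward([0.3, 0.5, 0.9, 0.7], random_params(4), hidden_size=4)
```

Because the output is a sigmoid-gated tanh, every hidden component stays strictly between -1 and 1, which is one reason load data are usually normalized before being fed to the network.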

4.2. PPO for Task Offloading Strategy

4.2.1. PPO Principles

The PPO algorithm is a policy gradient DRL approach that can handle continuous action spaces, such as the continuous offloading proportions considered here. Rather than discarding each batch of interaction data after a single update, PPO reuses data collected by the old policy for several updates and corrects for the difference between the old and new policies through importance sampling [29], which improves the utilization efficiency of the interaction data. We use the PPO-Clip method.

PPO-Clip restricts the difference between the new and old policies with a clipping function that controls the magnitude of policy updates. For each sampled action, the ratio of the new policy’s probability to the old policy’s probability is limited to a predetermined range, so that a single update cannot change the policy excessively, which improves the stability and performance of the algorithm.
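The clipping rule can be sketched directly. The function below evaluates the standard PPO-Clip surrogate objective over a batch, taking the minimum of the unclipped and clipped ratio-weighted advantages; the default clip factor of 0.2 and all names are assumptions rather than values from Table 4.

```python
import math


def ppo_clip_objective(logp_new, logp_old, advantages, eps=0.2):
    """Mean clipped surrogate E[min(r*A, clip(r, 1-eps, 1+eps)*A)] over a batch.

    r = pi_new(a|s) / pi_old(a|s) is computed from log-probabilities.
    eps = 0.2 is a common default, not a value taken from the paper.
    """
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
        total += min(ratio * adv, clipped * adv)  # pessimistic (lower) bound
    return total / len(advantages)


def ppo_clip_loss(logp_new, logp_old, advantages, eps=0.2):
    # Gradient-based optimizers minimize, so the loss is the negated objective.
    return -ppo_clip_objective(logp_new, logp_old, advantages, eps)
```

With a positive advantage the ratio is effectively capped at 1 + eps, and with a negative advantage ratios below 1 - eps are treated as 1 - eps, so the update gains nothing from pushing the policy far from the old one.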

Based on the MDP, the new and old policies are compared at each time step t through the ratio between the probability that the new policy assigns to the action taken and the probability that the old policy assigns to it, where the new and old policies are defined by their respective parameters.

PPO also learns a value function (the critic), from which an advantage function is constructed to measure how favorable the action taken under the current policy is. The advantage is computed with a discount factor that weighs immediate rewards against future rewards. The loss function is then constructed from the advantage and the probability ratio clipped by the clip factor.
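Because the text does not spell out the advantage estimator, the sketch below uses the simplest choice: the discounted return minus the critic’s value estimate. It also includes the mean squared error critic loss mentioned in Algorithm 1. Generalized advantage estimation is a common alternative; the names and the discount value in the example are assumptions.

```python
def discounted_returns(rewards, gamma):
    """G_t = r_t + gamma * G_{t+1}, computed backwards over one trajectory."""
    returns, running = [0.0] * len(rewards), 0.0
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def advantages(rewards, values, gamma):
    """Simple advantage estimate A_t = G_t - V(s_t) using the critic's values."""
    return [g - v for g, v in zip(discounted_returns(rewards, gamma), values)]


def critic_mse(rewards, values, gamma):
    """Critic loss: mean squared error between V(s_t) and the discounted return."""
    return sum(a * a for a in advantages(rewards, values, gamma)) / len(rewards)


print(advantages([1.0, 1.0, 1.0], [1.0, 1.0, 1.0], gamma=0.5))  # [0.75, 0.5, 0.0]
```

A smaller discount factor makes the agent care mostly about the immediate reward, that is, the delay of the current offloading decision.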

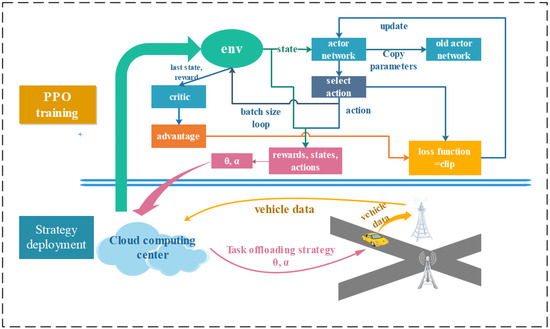

Figure 2 shows how the agent learns the task offloading policy with the PPO-Clip method and how this policy is deployed, detailing the algorithmic workflow of PPO-Clip for task offloading. Vehicle users transmit their data to the cloud computing center via the RSU, where the agent is trained with the PPO algorithm to generate the offloading strategy. Once training is complete, the cloud computing center sends the resulting offloading policy back to the vehicle, which executes it.

Figure 2.

PPO-Clip for task offloading strategy flow.

4.2.2. State and Action Spaces Definitions

The state space is determined by the driving state of the vehicle and the status of the IoV in the mixed coverage scenario. As shown in Figure 2, the state includes the position of the vehicle relative to the RSU and the BS, the vehicle speed, the task size, the load states of the RSU and the BS, and their coverage areas. The action consists of the task proportions allocated to the RSU, the BS, and local computation. The agent decides only the RSU and BS proportions; the local proportion is the remainder of the task after these two are subtracted.
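One way to turn the two actor outputs into a valid split is sketched below. The clipping and proportional rescaling are hypothetical choices, not taken from the paper; they guarantee that the proportions sum to one and that a minimum share, `min_local`, stays on board.

```python
def to_split(a_rsu_raw, a_bs_raw, min_local=0.05):
    """Map two raw actor outputs to (rsu, bs, local) proportions.

    Hypothetical mapping: clip each output to [0, 1]; if the two offloaded shares
    leave less than min_local on board, scale both down proportionally.
    """
    rsu = min(max(a_rsu_raw, 0.0), 1.0)
    bs = min(max(a_bs_raw, 0.0), 1.0)
    budget = 1.0 - min_local
    if rsu + bs > budget:
        scale = budget / (rsu + bs)
        rsu, bs = rsu * scale, bs * scale
    local = 1.0 - rsu - bs  # the local share is the remainder, so the three sum to one
    return rsu, bs, local


print(to_split(0.9, 0.9, min_local=0.1))  # roughly (0.45, 0.45, 0.1)
```

Rescaling both shares by the same factor preserves the ratio the actor chose between the RSU and the BS, which is a reasonable default when only the total exceeds the budget.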

4.2.3. Reward Function Definition

Since the optimization objective is to minimize the system task processing delay, and the output of the agent must satisfy constraint (18) or be penalized, the reward is defined from the task processing delay together with a penalty for violating constraint (18).
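A reward of this shape might be sketched as follows, assuming the reward is the negative total delay and that a fixed penalty is subtracted when any processing rate falls below the minimum expected rate. The penalty magnitude, its fixed form, and the function name are assumptions.

```python
def reward(total_delay_s, part_rates, min_rate, penalty=10.0):
    """Negative task delay, minus a fixed penalty if constraint (18) is violated.

    The fixed penalty and its value are assumptions for illustration.
    """
    r = -total_delay_s
    if any(rate < min_rate for rate in part_rates):
        r -= penalty
    return r


print(reward(0.5, [12.0, 20.0], min_rate=5.0))  # -0.5
print(reward(0.5, [1.0, 20.0], min_rate=5.0))   # -10.5
```

The penalty should be large compared with typical delays so that violating the rate constraint is never worth a shorter delay.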

4.3. Integrated Approach for Load Estimation and Task Offloading Strategy

In summary, we propose an integrated approach for load estimation and task offloading to minimize the system task processing delay. It includes two steps. The first step predicts the load condition of the BS for the next time period based on the long-term load status table provided by the BS and the feedback from the current time period; this forms the basis for the rational and efficient use of the BS. When using an LSTM prediction model, the input dimension, the number of training iterations, the number of memory units in the hidden layer, and the number of hidden layers must be tuned to achieve a good fit and high prediction precision. Incorporating feedback from each time period further improves the accuracy of the BS load prediction.

In the second step, based on the PPO algorithm, the agent learns to observe the current status of the VEC network and to divide the computing tasks reasonably in real time, that is, to decide the proportions of each task allocated to the RSU, the BS, and local computation. Completing the offloading of computing tasks in this way improves the QoS for users. The main algorithmic procedure is presented in Algorithm 1.

Algorithm 1: LSTM and PPO for task offloading strategy approach

- Input: BS load history data.
- Train the LSTM.
- Output: BS load estimation value.
- Input: epochs e, steps t, actor network, critic network, batch size m.
- For each epoch:
  - For each step t:
    - Get the vehicle state.
    - Input the state to the actor and obtain its two outputs.
    - Form a Gaussian distribution from these two outputs as the policy.
    - Select an action from the policy.
    - Store the sample in the buffer.
    - If t % m == 0:
      - Select 10 random samples.
      - Calculate (23)–(25) and update the actor parameters accordingly.
      - Calculate the critic loss from the critic output and the discounted reward.
      - Update the critic parameters with the MSE loss function.
- Output: the trained agent parameters.

5. Simulation and Performance Evaluation

In this section, we discuss the simulation experiments and performance analysis of the two-stage optimization algorithm. First, we build a dataset to evaluate the performance of LSTM for BS load predictions. Then, we verify the performance of PPO for the offloading strategy. Comparative experiments and discussions are carried out based on different offloading strategies under different conditions, and we will focus on the processing delay, which is one of the important performance metrics of the system.

5.1. LSTM for Load Estimation

First, based on the temporal traffic flow model, we divide time into appropriate periods and construct the corresponding training dataset. Then, we construct the LSTM neural network, which estimates the BS load for the upcoming period from the load data available up to the current moment.
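Constructing the training dataset from a load series usually means slicing it into input windows and next-period targets. The sketch below is a hypothetical helper; the window length and the chronological 80/20 split are assumptions, and the split keeps time order so that the test windows come after the training windows.

```python
def make_windows(series, window, horizon=1):
    """Slice a load series into (past `window` values, value `horizon` steps ahead) pairs."""
    inputs, targets = [], []
    for start in range(len(series) - window - horizon + 1):
        inputs.append(series[start:start + window])
        targets.append(series[start + window + horizon - 1])
    return inputs, targets


def chronological_split(inputs, targets, train_frac=0.8):
    """Split without shuffling so that test windows follow training windows in time."""
    cut = int(len(inputs) * train_frac)
    return (inputs[:cut], targets[:cut]), (inputs[cut:], targets[cut:])


X, y = make_windows(list(range(10)), window=3)
print(X[0], y[0], len(X))  # [0, 1, 2] 3 7
```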

5.1.1. Experiment Settings

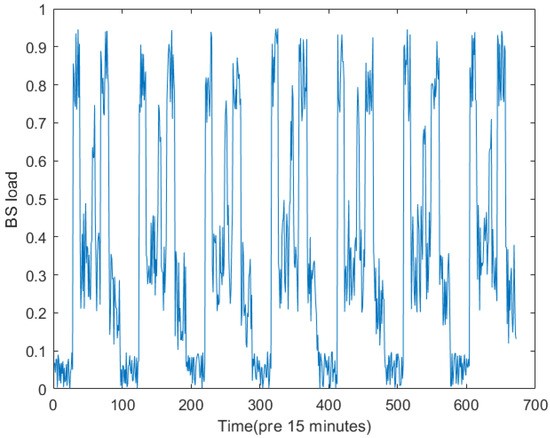

Since intelligent transportation networks are still at an early stage of application, historical data on the CPU load of BS servers are lacking, so we assume that the BS load varies with the traffic flow. Based on a one-day traffic flow variation model, BS load rates are randomly generated within a certain interval. Figure 3 shows the BS load data at 15 min intervals over one week.

Figure 3.

BS load data of one week.
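A generator for such data might look like the sketch below: a daily profile with morning and evening peaks, sampled every 15 min (96 slots per day, 672 per week), with each load drawn uniformly within a band around the profile. The profile shape, the peak hours, and the band width are assumptions, not the traffic flow model used for Figure 3.

```python
import math
import random

SLOTS_PER_DAY = 24 * 60 // 15  # 96 samples per day at 15 min resolution


def traffic_profile(slot):
    """Hypothetical normalized daily traffic curve with peaks at 8:00 and 18:00."""
    hour = slot * 15 / 60
    morning = math.exp(-((hour - 8.0) ** 2) / 2.0)
    evening = math.exp(-((hour - 18.0) ** 2) / 2.0)
    return 0.2 + 0.8 * max(morning, evening)


def synth_bs_load(days=7, spread=0.1, seed=0):
    """Weekly BS load series in [0, 1], one value per 15 min slot."""
    rng = random.Random(seed)
    loads = []
    for _ in range(days):
        for slot in range(SLOTS_PER_DAY):
            # Draw the load uniformly within +/- spread of the profile, clipped to [0, 1].
            load = traffic_profile(slot) + rng.uniform(-spread, spread)
            loads.append(min(max(load, 0.0), 1.0))
    return loads


week = synth_bs_load()
print(len(week))  # 672
```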

The proposed LSTM algorithm is implemented in the Matlab/Simulink R2022b environment running on a Linux virtual OS computer with an NVIDIA 3060x2x4-bit graphics card. The device is manufactured by ASUS Co., Ltd., Suzhou, China. The relevant parameters are shown in Table 2.

Table 2.

LSTM parameters.

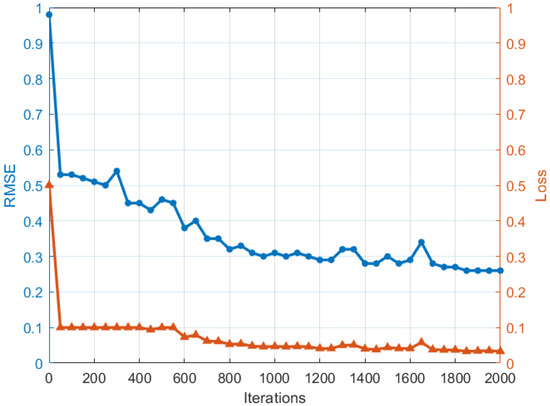

5.1.2. Performance Evaluation

Figure 4 illustrates the convergence curves of the LSTM. In the early stage of training, the RMSE and loss values decrease rapidly. In the later stage, the RMSE of the network’s predictions decreases gradually and stabilizes, and the loss converges toward 0. This shows that the network has converged after 2000 iterations and can predict the BS load accurately, so LSTM-based BS load prediction is feasible.

Figure 4.

Performance of LSTM.

5.2. PPO for Task Offloading Strategy

Assuming that the BS load conditions for a period have been obtained through LSTM time-series prediction, the PPO algorithm is then used to find the vehicle task offloading ratio with the shortest offloading time. To validate the reasonableness of the offloading ratios obtained by the PPO algorithm on the simulated road network, we train on vehicle data under different conditions and analyze the results. To verify the delay performance of the architecture and the offloading strategy, the “Local-BS-RSU” collaborative offloading strategy is compared with “RSU-Local” collaborative offloading under different conditions.

We then use the model parameters trained by the PPO algorithm to simulate the strategies produced for different vehicle and road data during application, and we observe and analyze how the strategies change. This aims to demonstrate that the model trained on the training set is robust and can cope with complex and varied situations.

5.2.1. Experiment Settings

First, we simulate a congested traffic environment and obtain the relevant traffic data. Using SUMO, we create a 2 km east–west road with seven intersections. Each intersection has a 100 m road in the north–south direction, and adjacent intersections are 300 m apart along the east–west road. Vehicles travel in all directions, but this study focuses on vehicles traveling in the east–west direction (marked in green). A high traffic volume combined with traffic light control creates a congested environment.

Starting from the beginning of the road, there are 11 RSUs and six BSs in this scenario. Each RSU has a coverage range of 200 m, and each BS has a coverage range of 400 m. Vehicles generate tasks to be offloaded randomly at certain locations, and the position, speed, task size, acceleration, and load condition of nearby RSUs/BSs at the time of task generation are all randomly selected within certain ranges. The parameter settings of the mixed coverage scenario, such as coverage and computing power, follow [30,31], so the device parameters are realistic and the simulated scene closely approximates a real mixed coverage traffic scenario.

Table 3 shows the traffic-related parameters, and Table 4 shows the relevant parameters of the PPO-Clip approach.

Table 3.

Simulation road environment parameters.

Table 4.

PPO-Clip parameters.

5.2.2. Performance Evaluation

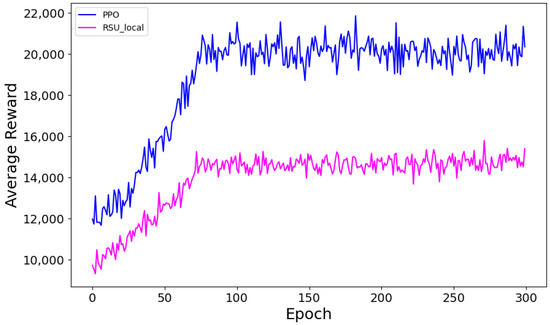

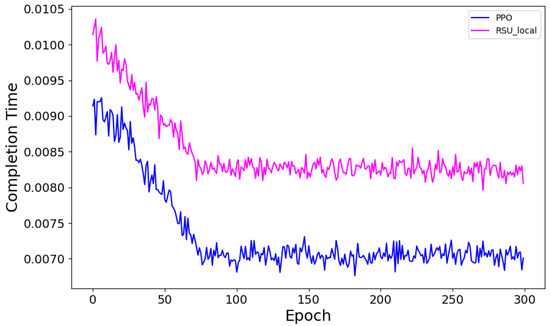

Figure 5 and Figure 6 show the average reward and the average offloading completion time of different strategies. Through training with the PPO algorithm, the average offloading delay per iteration gradually decreases and stabilizes as the number of iterations increases. The “RSU-Local” collaborative offloading strategy achieves a lower delay than unilateral offloading, mainly because real-time changes in the load of the offloading targets affect the offloading delay: with PPO-based local and RSU collaborative offloading, the allocation proportion can be adjusted promptly when one side is heavily loaded. The proposed “Local-BS-RSU” collaborative offloading strategy achieves the lowest offloading time because it offers a wider choice of offloading targets. By adjusting the allocation proportions effectively, it minimizes the offloading delay, makes better use of existing computational resources, and exhibits stronger robustness. Compared with “RSU-Local” collaborative offloading, the proposed strategy reduces the average delay by approximately 5.6%. This means that, with the proposed strategy, vehicular computing platforms can process computational tasks faster and respond more quickly to traffic information, which can improve driving safety and support more complex vehicular computational tasks. Furthermore, by distributing the computational load across the vehicle, the RSU, and the BS, the strategy uses computational resources more efficiently, which may also improve overall energy efficiency.

Figure 5.

Average reward for different offloading strategies.

Figure 6.

Average offloading completion times (delay) for different offloading strategies.
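A simplified, idealized model can illustrate why a wider selection of offloading targets lowers the delay. This is a closed-form sketch, not the paper's PPO method. It assumes that each target processes its share of the task in parallel and that each target's delay is linear in its share: share × task size × per-bit delay. The per-bit delay is 1/rate + cycles-per-bit/CPU frequency for a remote target and cycles-per-bit/CPU frequency locally. Minimizing the largest completion time then gives shares proportional to the inverse per-bit delay, and adding any target lowers the minimum delay. All function names and parameter values are hypothetical.

```python
def per_bit_delay(cycles_per_bit, cpu_hz, rate_bps=None):
    """Seconds per bit: upload time (remote targets only) plus computation time."""
    tx = 0.0 if rate_bps is None else 1.0 / rate_bps
    return tx + cycles_per_bit / cpu_hz


def optimal_split(task_bits, per_bit):
    """Split a task over targets that work in parallel so that all finish together.

    Minimising max_i(alpha_i * task_bits * per_bit[i]) subject to sum(alpha) == 1
    yields alpha_i proportional to 1 / per_bit[i] and a delay of
    task_bits / sum(1 / per_bit[i]).
    """
    inv = [1.0 / t for t in per_bit]
    total = sum(inv)
    alphas = [v / total for v in inv]
    return alphas, task_bits / total


# Hypothetical parameters: 1 Mbit task, 100 CPU cycles per bit.
local = per_bit_delay(100, 1e8)              # on-board CPU only
rsu = per_bit_delay(100, 1e8, rate_bps=1e6)  # congested RSU: slow link, little spare CPU
bs = per_bit_delay(100, 2e8, rate_bps=2e6)   # 5G BS with more spare capacity

for name, targets in [("Local-RSU", [local, rsu]), ("Local-BS-RSU", [local, bs, rsu])]:
    alphas, delay = optimal_split(1_000_000, targets)
    print(name, [round(a, 2) for a in alphas], round(delay, 3))
```

With these made-up parameters, the Local-RSU split finishes in about 0.67 s and the Local-BS-RSU split in 0.4 s. In the paper's setting, target loads and vehicle positions change over time, which this static formula ignores. That dynamic behavior is why a learned policy such as PPO is used instead.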

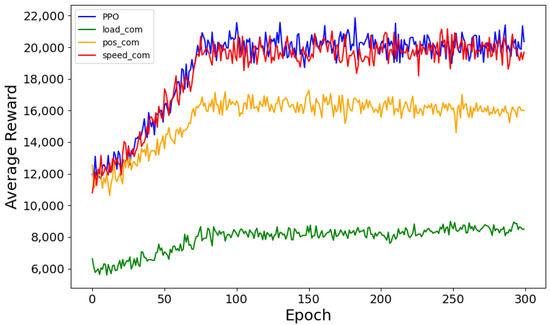

Figure 7 shows the reward of the vehicle offloading strategies under different conditions. The three cases are an increased load on the offloading targets (“load_com”), the vehicle approaching the coverage boundary (“pos_com”), and a decreased vehicle speed (“speed_com”). Among these cases, increasing the load on the RSU and BS while keeping all other conditions constant has the most significant impact on the offloading delay of the optimal strategy trained by PPO. When vehicles are close to the coverage boundary of the offloading target, the PPO-trained strategy lowers the offloading ratio of the RSU and BS. This prevents vehicles from leaving the coverage range during offloading and causing timeouts, but it increases the offloading delay. Furthermore, suppose the vehicle remains near the coverage boundary but its speed is set to zero, so that it is stationary and will not leave the coverage area. In that case, the overall offloading delay of the resulting strategy is reduced compared with the “pos_com” case. Table 5 shows the mean and standard deviation of the rewards under the different conditions from epoch 100 to epoch 300, i.e., in the convergence stage. The standard deviations fluctuate little and stay within the range of 200–600, which indicates that the strategy trained by PPO does not diverge. Both results indicate that the system model accounts for the factors affecting vehicle task offloading in mixed coverage scenarios under congested conditions, which makes the allocation proportions for task offloading more reasonable.

Figure 7.

Average reward comparison of vehicle offloading strategies under different conditions.
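The boundary effect in the “pos_com” and “speed_com” cases can be reasoned about with a simple feasibility bound. The sketch below relies on assumptions of our own: the vehicle moves at constant speed, it must still be inside a target's coverage when that target finishes its share, and the target's delay is linear in the share. The remaining dwell time is the distance to the coverage edge divided by the speed, and it is unbounded when the vehicle is stationary. This dwell time caps the share that can safely be offloaded to that target. Function names and numbers are hypothetical.

```python
import math


def dwell_time(dist_to_edge_m, speed_mps):
    """Seconds until the vehicle leaves a target's coverage (infinite if stationary)."""
    if speed_mps <= 0.0:
        return math.inf
    return dist_to_edge_m / speed_mps


def max_offload_ratio(task_bits, per_bit_delay_s, dist_to_edge_m, speed_mps):
    """Largest task share a target can finish before the vehicle exits its coverage.

    Assumes the target's delay is linear in the share: share * task_bits * per_bit_delay_s.
    """
    full_task_delay = task_bits * per_bit_delay_s
    return min(1.0, dwell_time(dist_to_edge_m, speed_mps) / full_task_delay)


# Hypothetical target that needs about 1 s for the whole 1 Mbit task.
print(max_offload_ratio(1_000_000, 1e-6, dist_to_edge_m=100.0, speed_mps=20.0))  # far from edge
print(max_offload_ratio(1_000_000, 1e-6, dist_to_edge_m=10.0, speed_mps=20.0))   # near edge
print(max_offload_ratio(1_000_000, 1e-6, dist_to_edge_m=10.0, speed_mps=0.0))    # near edge, stopped
```

Near the edge at 20 m/s, the target can take only about half of the task. A stopped vehicle at the same position can offload all of it. This is consistent with the trend described for Figure 7.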

Table 5.

Stability analysis of strategy under different conditions (100–300 epochs).
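The convergence statistics in Table 5 are the mean and standard deviation of the per-epoch rewards over epochs 100–300. A minimal sketch of that computation follows. It assumes 0-based epoch indices, an inclusive window, and the sample standard deviation, none of which the text specifies. The reward curve is synthetic.

```python
import statistics


def window_stats(rewards, first_epoch=100, last_epoch=300):
    """Mean and sample standard deviation of per-epoch rewards in an inclusive epoch window.

    rewards[i] is assumed to be the average reward of epoch i (0-based).
    """
    window = rewards[first_epoch:last_epoch + 1]
    return statistics.mean(window), statistics.stdev(window)


# Hypothetical reward curve: rises during training, then plateaus with bounded noise.
curve = [-5000.0 + 40.0 * e for e in range(100)] + [
    -1000.0 + (-1) ** e * 300.0 for e in range(100, 301)
]
mean, sd = window_stats(curve)
print(round(mean, 1), round(sd, 1))
```

A standard deviation that stays bounded across conditions, as reported in Table 5, is what one would expect from a policy oscillating around a plateau rather than drifting away from it.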

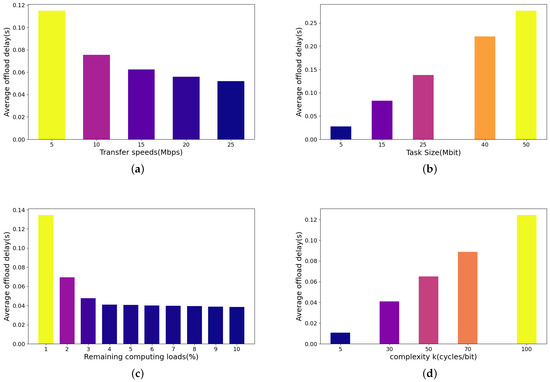

Figure 8 shows the performance of the trained model under different conditions: (a) the impact of the data transmission speed on the average offloading delay, (b) the impact of the task size, (c) the impact of the remaining computing resources of the offloading target, and (d) the impact of the computation task complexity. When the data transmission speed of the RSU and BS is low, it has a significant impact on the total offloading delay. Once the transmission speed reaches a certain level, its impact on the total offloading delay becomes smaller. A large task size increases both the transmission delay and the computation delay, whereas a high task complexity affects only the computation delay. Both variations affect the total offloading delay to different degrees, so the total offloading delay increases as the task size and task complexity grow. Moreover, when the remaining computing resources of the offloading target are extremely limited, the total offloading delay increases significantly. Once the remaining computing resources reach a certain value, their impact on the total offloading delay decreases.

Figure 8.

The performance of the trained model under different conditions.
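The trends in Figure 8 are consistent with a common delay model, which we assume here for illustration; the paper's exact formulation may differ. In this model, an offloaded task of D bits and complexity C cycles/bit, sent at rate R to a target with f spare CPU cycles per second, incurs a transmission delay of D/R plus a computation delay of D × C / f. Because each term is inversely proportional to R or f, raising a low rate or a scarce CPU budget helps much more than raising an already high one. D appears in both terms, while C appears only in the computation term. All parameter values are hypothetical.

```python
def offload_delay(task_bits, cycles_per_bit, rate_bps, cpu_hz):
    """Return (transmission, computation, total) delay in seconds for one offloaded task."""
    tx = task_bits / rate_bps                    # D / R
    comp = task_bits * cycles_per_bit / cpu_hz   # D * C / f
    return tx, comp, tx + comp


# Hypothetical baseline: 1 Mbit task, 100 cycles/bit, 10 Mbit/s link, 1 GHz spare CPU.
BASE = {"task_bits": 1e6, "cycles_per_bit": 100.0, "rate_bps": 1e7, "cpu_hz": 1e9}


def sweep(param, values):
    """Total delay as one parameter varies while the others stay at BASE."""
    return [offload_delay(**{**BASE, param: v})[2] for v in values]


print(sweep("rate_bps", [1e6, 2e6, 1e7, 2e7]))  # gains shrink as the rate grows
print(sweep("cpu_hz", [1e8, 2e8, 1e9, 2e9]))    # same diminishing returns for spare CPU
print(offload_delay(**{**BASE, "task_bits": 2e6}))         # both delay terms grow
print(offload_delay(**{**BASE, "cycles_per_bit": 200.0}))  # only computation grows
```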

In summary, the model trained in this paper can adjust the task offloading strategy in response to changes in specific influencing factors, thereby reducing the offloading delay experienced by vehicles in different scenarios.

6. Conclusions and Future Work

In this paper, we focus on vehicular networks in mixed coverage scenarios and propose an optimal task offloading strategy. Considering the mixed coverage of BSs and RSUs in traffic environments, we formulate a constrained optimization problem based on a communication and computing delay model, with the objective of minimizing the task processing delay. To solve this problem, we propose a two-stage optimization algorithm. The first stage uses an LSTM network to predict the load state of the BSs, and the second stage uses the PPO algorithm to optimize the task offloading ratios of vehicles based on these predictions. The simulation results demonstrate that both the selection of offloading targets and the offloading ratios significantly affect the system delay. They also show that the proposed approach makes efficient use of the computing resources of nearby RSUs and BSs. The LSTM effectively predicts the BS load state, and the strategy output by PPO facilitates optimal offloading decisions that reduce the task processing delay. Compared with “RSU-Local” collaborative offloading, the proposed two-stage optimization algorithm reduces the average task processing delay by approximately 5.6%. The proposed strategy increases the speed of vehicular task processing and improves vehicle intelligence.
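The first stage treats BS load prediction as a time-series problem. One common way to prepare training data for an LSTM predictor is to slide a fixed-length window over the historical load series. The value `horizon` steps after each window then serves as that window's target. This is an assumption about the preprocessing, not the authors' documented procedure. The window length, the function name, and the load values are hypothetical. The resulting pairs could be fed to an LSTM layer such as PyTorch's `torch.nn.LSTM`.

```python
def make_windows(load_series, window, horizon=1):
    """Build (input window, target) pairs from a BS load time series.

    Each input holds `window` consecutive load samples; the target is the sample
    `horizon` steps after the last one in the window.
    """
    inputs, targets = [], []
    for start in range(len(load_series) - window - horizon + 1):
        inputs.append(load_series[start:start + window])
        targets.append(load_series[start + window + horizon - 1])
    return inputs, targets


# Hypothetical normalised BS load history (fraction of capacity in use).
history = [0.42, 0.55, 0.61, 0.70, 0.66, 0.74]
X, y = make_windows(history, window=3)
for x_i, y_i in zip(X, y):
    print(x_i, "->", y_i)
```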

Future work will focus on refining the proposed approach for more realistic and complex road environments and on developing more nuanced offloading strategies.

Author Contributions

Conceptualization, X.H. and C.Y.; methodology, X.H. and C.Y.; software, X.H. and Y.C.; validation, X.H. and Y.C.; formal analysis, X.H. and X.C.; investigation, X.H. and Y.L.; writing—original draft preparation, X.H. and Y.C.; writing—review and editing, Y.C., C.Y. and Y.L.; supervision, X.C. and C.Y.; project administration, X.C.; funding acquisition, C.Y. and X.C. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the 2024 Guangdong Province Science and Technology Innovation Strategic Special Fund under Grant pdjh2024a138 and in part by the Guangdong Basic and Applied Basic Research Foundation under Grant 2024A1515012745.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data are contained within the article.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Gao, J.; Agyekum, K.O.B.O.; Sifah, E.B.; Acheampong, K.N.; Xia, Q.; Du, X.; Guizani, M.; Xia, H. A Blockchain-SDN-Enabled Internet of Vehicles Environment for Fog Computing and 5G Networks. IEEE Internet Things J. 2020, 7, 4278–4291.

- Silva, L.; Magaia, N.; Sousa, B.; Kobusińska, A.; Casimiro, A.; Mavromoustakis, C.X.; Mastorakis, G.; de Albuquerque, V.H.C. Computing Paradigms in Emerging Vehicular Environments: A Review. IEEE/CAA J. Autom. Sin. 2021, 8, 491–511.

- Lopez, H.J.D.; Siller, M.; Huerta, I. Internet of vehicles: Cloud and fog computing approaches. In Proceedings of the 2017 IEEE International Conference on Service Operations and Logistics, and Informatics (SOLI), Bari, Italy, 18–20 September 2017; pp. 211–216.

- Sahbi, R.; Ghanemi, S.; Djouani, R. A Network Model for Internet of vehicles based on SDN and Cloud Computing. In Proceedings of the 2018 6th International Conference on Wireless Networks and Mobile Communications (WINCOM), Marrakesh, Morocco, 16–19 October 2018; pp. 1–4.

- Chen, C.; Zeng, Y.; Li, H.; Liu, Y.; Wan, S. A Multihop Task Offloading Decision Model in MEC-Enabled Internet of Vehicles. IEEE Internet Things J. 2023, 10, 3215–3230.

- Song, Z.; Ma, R.; Xie, Y. A collaborative task offloading strategy for mobile edge computing in internet of vehicles. In Proceedings of the 2021 IEEE 5th Advanced Information Technology, Electronic and Automation Control Conference (IAEAC), Chongqing, China, 12–14 March 2021; pp. 1379–1384.

- Liu, H.; Eldarrat, F.; Alqahtani, H.; Reznik, A.; de Foy, X.; Zhang, Y. Mobile Edge Cloud System: Architectures, Challenges, and Approaches. IEEE Syst. J. 2018, 12, 2495–2508.

- Zhang, J.; Letaief, K.B. Mobile Edge Intelligence and Computing for the Internet of Vehicles. Proc. IEEE 2020, 108, 246–261.

- Ang, L.-M.; Seng, K.P.; Ijemaru, G.K.; Zungeru, A.M. Deployment of IoV for Smart Cities: Applications, Architecture, and Challenges. IEEE Access 2019, 7, 6473–6492.

- Zhu, L.; Zhang, Z.; Lin, P.; Shafiq, O.; Zhang, Y.; Yu, F.R. Learning-Based Load-Aware Heterogeneous Vehicular Edge Computing. In Proceedings of the GLOBECOM 2022-2022 IEEE Global Communications Conference, Rio de Janeiro, Brazil, 4–8 December 2022.

- Venticinque, S.; Aversa, R.; Branco, D.; Martino, B.D.; Esposito, A. A Systematic Review on Tasks Offloading techniques from the Edge based on Code Mobility. In Proceedings of the 2021 IEEE Intl Conf on Dependable, Autonomic and Secure Computing, Intl Conf on Pervasive Intelligence and Computing, Intl Conf on Cloud and Big Data Computing, Intl Conf on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Alberta, AB, Canada, 25–28 October 2021; pp. 213–220.

- Yu, S.; Gong, X.; Shi, Q.; Wang, X.; Chen, X. EC-SAGINs: Edge-Computing-Enhanced Space–Air–Ground-Integrated Networks for Internet of Vehicles. IEEE Internet Things J. 2022, 9, 5742–5754.

- Cheng, J.; Cheng, J.; Zhou, M.; Liu, F.; Gao, S.; Liu, C. Routing in Internet of Vehicles: A Review. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2339–2352.

- You, Q.; Tang, B. Efficient task offloading using particle swarm optimization algorithm in edge computing for industrial internet of things. J. Cloud Comp. 2021, 10, 41.

- Shu, W.; Li, Y. Joint offloading strategy based on quantum particle swarm optimization for MEC-enabled vehicular networks. Digit. Commun. Netw. 2023, 9, 56–66.

- Yu, Z.; Tang, Y.; Zhang, L.; Zeng, H. Deep Reinforcement Learning Based Computing Offloading Decision and Task Scheduling in Internet of Vehicles. In Proceedings of the 2021 IEEE/CIC International Conference on Communications in China (ICCC), Xiamen, China, 28–30 July 2021; pp. 1166–1171.

- Wang, K.; Wang, X.; Liu, X.; Jolfaei, A. Task Offloading Strategy Based on Reinforcement Learning Computing in Edge Computing Architecture of Internet of Vehicles. IEEE Access 2020, 8, 173779–173789.

- Gao, H.; Wang, X.; Wei, W.; Al-Dulaimi, A.; Xu, Y. Com-DDPG: Task Offloading Based on Multiagent Reinforcement Learning for Information-Communication-Enhanced Mobile Edge Computing in the Internet of Vehicles. IEEE Trans. Veh. Technol. 2024, 73, 348–361.

- Yang, X.; Luo, H.; Sun, Y.; Guizani, M. A Novel Hybrid-ARPPO Algorithm for Dynamic Computation Offloading in Edge Computing. IEEE Internet Things J. 2022, 9, 24065–24078.

- Ling, C.; Zhang, W.; He, H.; Yadav, R.; Wang, J.; Wang, D. QoS and Fairness Oriented Dynamic Computation Offloading in the Internet of Vehicles Based on Estimate Time of Arrival. IEEE Trans. Veh. Technol. 2024, 73, 10554–10571.

- Luo, Q.; Li, C.; Luan, T.H.; Shi, W. Minimizing the Delay and Cost of Computation Offloading for Vehicular Edge Computing. IEEE Trans. Serv. Comput. 2022, 15, 2897–2909.

- Guo, X.; Hong, X. DQN for Smart Transportation Supporting V2V Mobile Edge Computing. In Proceedings of the 2023 IEEE International Conference on Smart Computing (SMARTCOMP), Nashville, TN, USA, 26–30 June 2023; pp. 204–206.

- Wu, G.; Zhao, Y.; Shen, Y.; Zhang, H.; Shen, S.; Yu, S. DRL-based Resource Allocation Optimization for Computation Offloading in Mobile Edge Computing. In Proceedings of the IEEE INFOCOM 2022–IEEE Conference on Computer Communications Workshops (INFOCOM WKSHPS), New York, NY, USA, 2–5 May 2022; pp. 1–6.

- Wang, B.; Liu, L.; Wang, J. Multi-Agent Deep Reinforcement Learning for Task Offloading in Vehicle Edge Computing. In Proceedings of the 2023 IEEE International Symposium on Broadband Multimedia Systems and Broadcasting (BMSB), Beijing, China, 14–16 June 2023; pp. 1–6.

- Huang, Z.; Wu, X.; Dong, S. Multi-objective task offloading for highly dynamic heterogeneous Vehicular Edge Computing: An efficient reinforcement learning approach. Comput. Commun. 2024, 225, 27–43.

- Zeng, J.; Gou, F.; Wu, J. Task offloading scheme combining deep reinforcement learning and convolutional neural networks for vehicle trajectory prediction in smart cities. Comput. Commun. 2023, 208, 29–43.

- Li, Y.; Jiang, C. Distributed task offloading strategy to low load base stations in mobile edge computing environment. Comput. Commun. 2020, 164, 240–248.

- Pang, S.; Hou, L.; Gui, H.; He, X.; Wang, T.; Zhao, Y. Multi-mobile vehicles task offloading for vehicle-edge-cloud collaboration: A dependency-aware and deep reinforcement learning approach. Comput. Commun. 2024, 213, 359–371.

- Schulman, J.; Wolski, F.; Dhariwal, P.; Radford, A.; Klimov, O. Proximal policy optimization algorithms. arXiv 2017, arXiv:1707.06347.

- Li, F.; Lin, Y.; Peng, N.; Zhang, Y. Deep Reinforcement Learning Based Computing Offloading for MEC-assisted Heterogeneous Vehicular Networks. In Proceedings of the 2020 IEEE 20th International Conference on Communication Technology (ICCT), Nanning, China, 28–31 October 2020; pp. 927–932.

- Guo, Y.; Ning, Z.; Kwok, R. Deep Reinforcement Learning Based Traffic Offloading Scheme for Vehicular Networks. In Proceedings of the 2019 IEEE 5th International Conference on Computer and Communications (ICCC), Chengdu, China, 6–9 December 2019; pp. 81–85.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).