A Multiclassification Model for Skin Diseases Using Dermatoscopy Images with Inception-v2

Abstract

1. Introduction

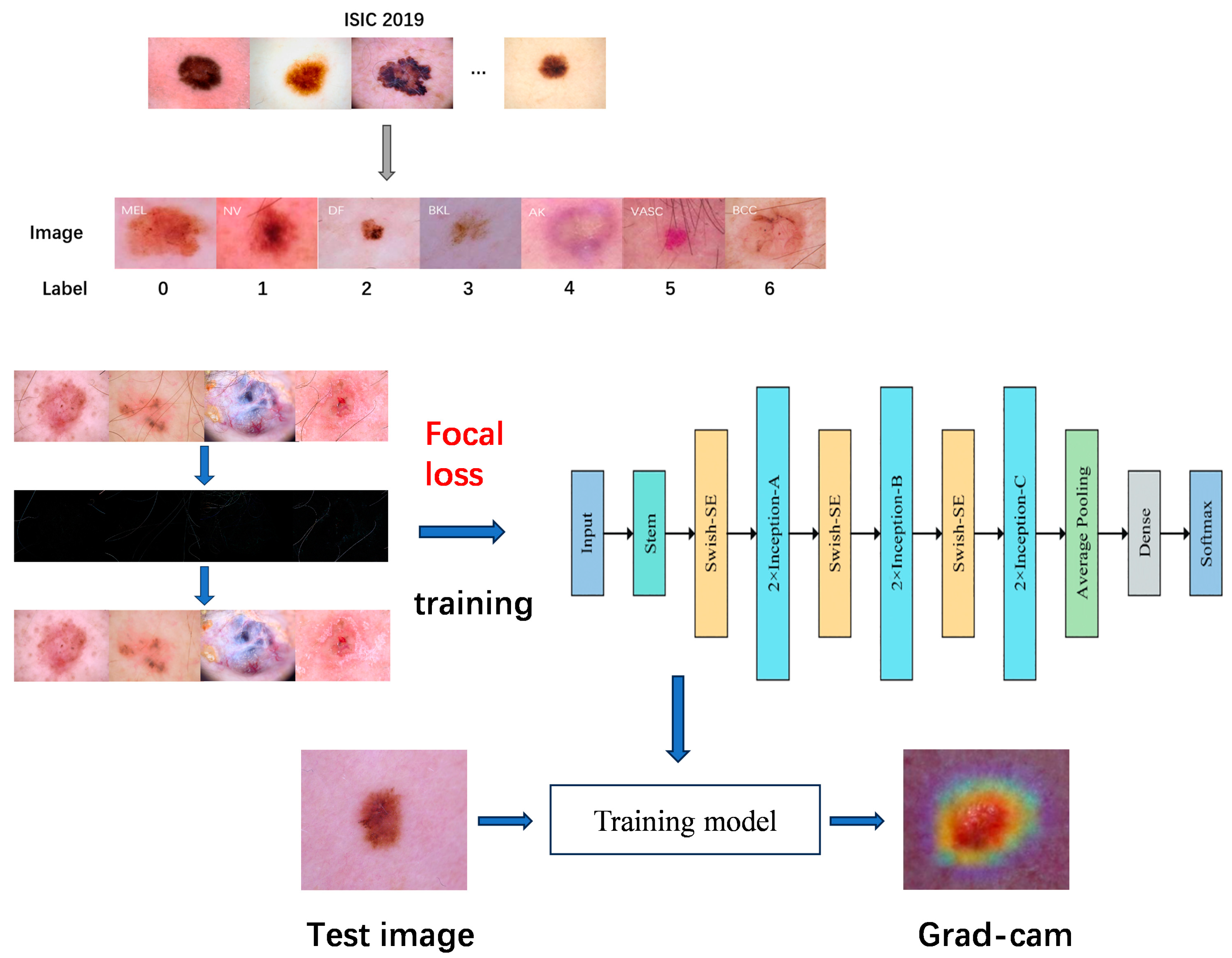

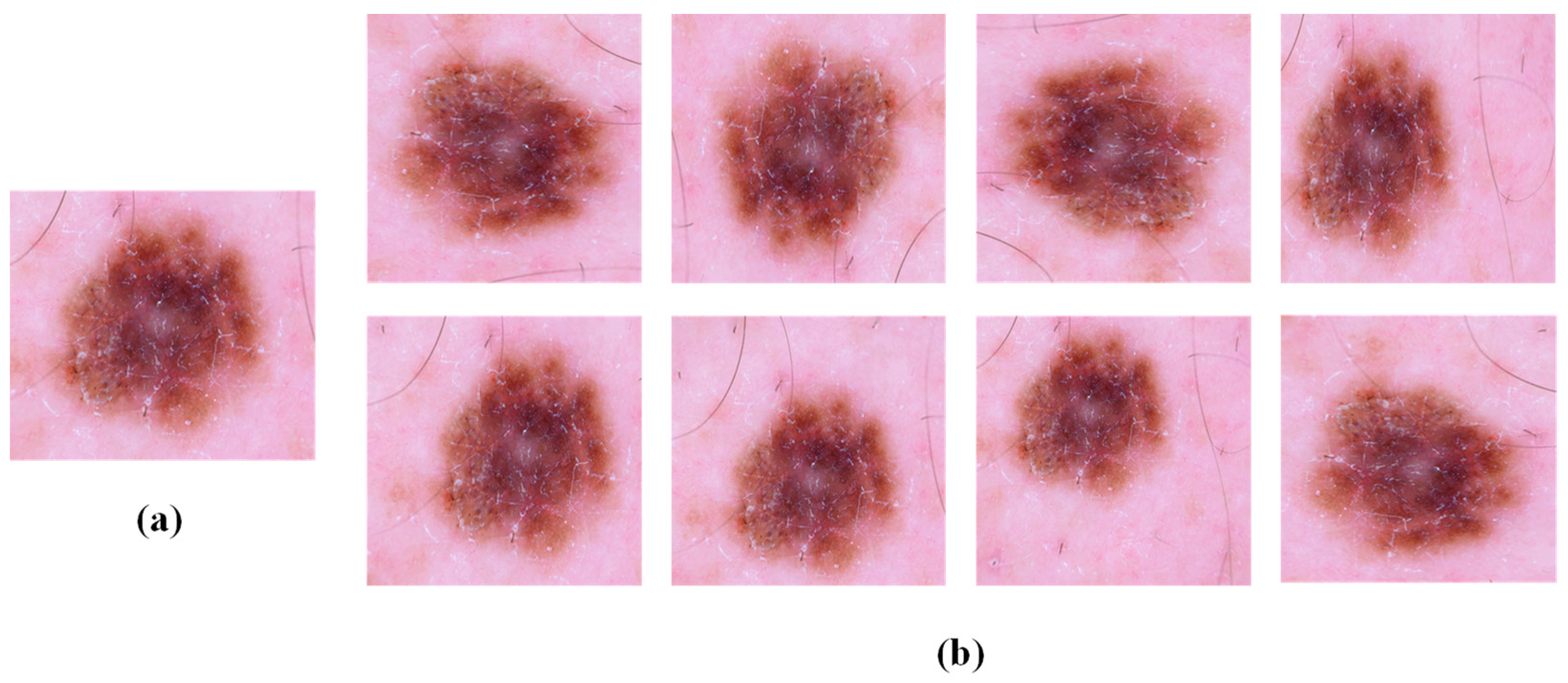

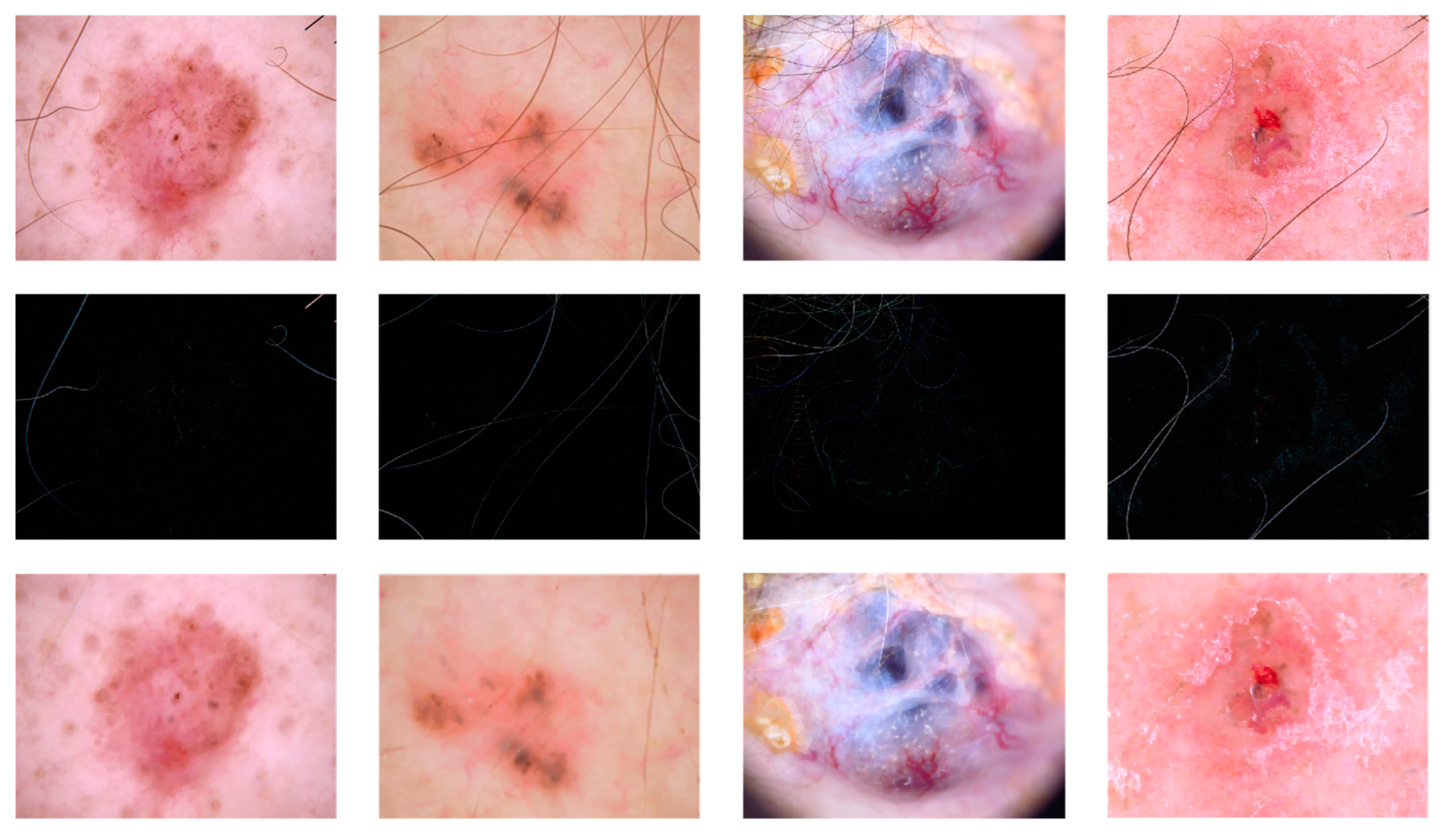

- Data enhancement and hair removal, among other preprocessing steps, were applied to address issues such as the uneven class distribution of the original dataset and hair artefacts in the images. Ablation experiments demonstrate that these steps improve the performance and classification accuracy of the model.

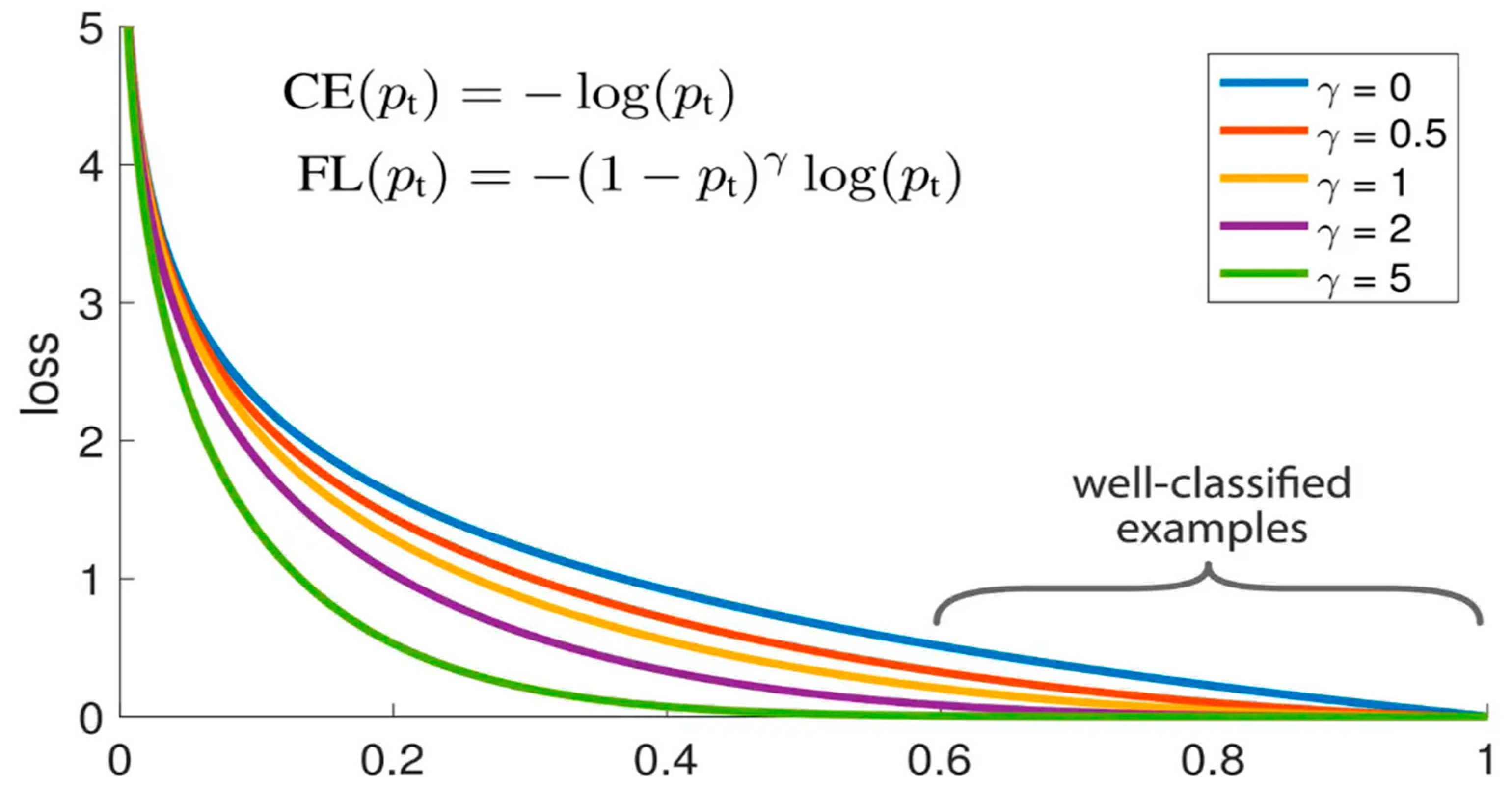

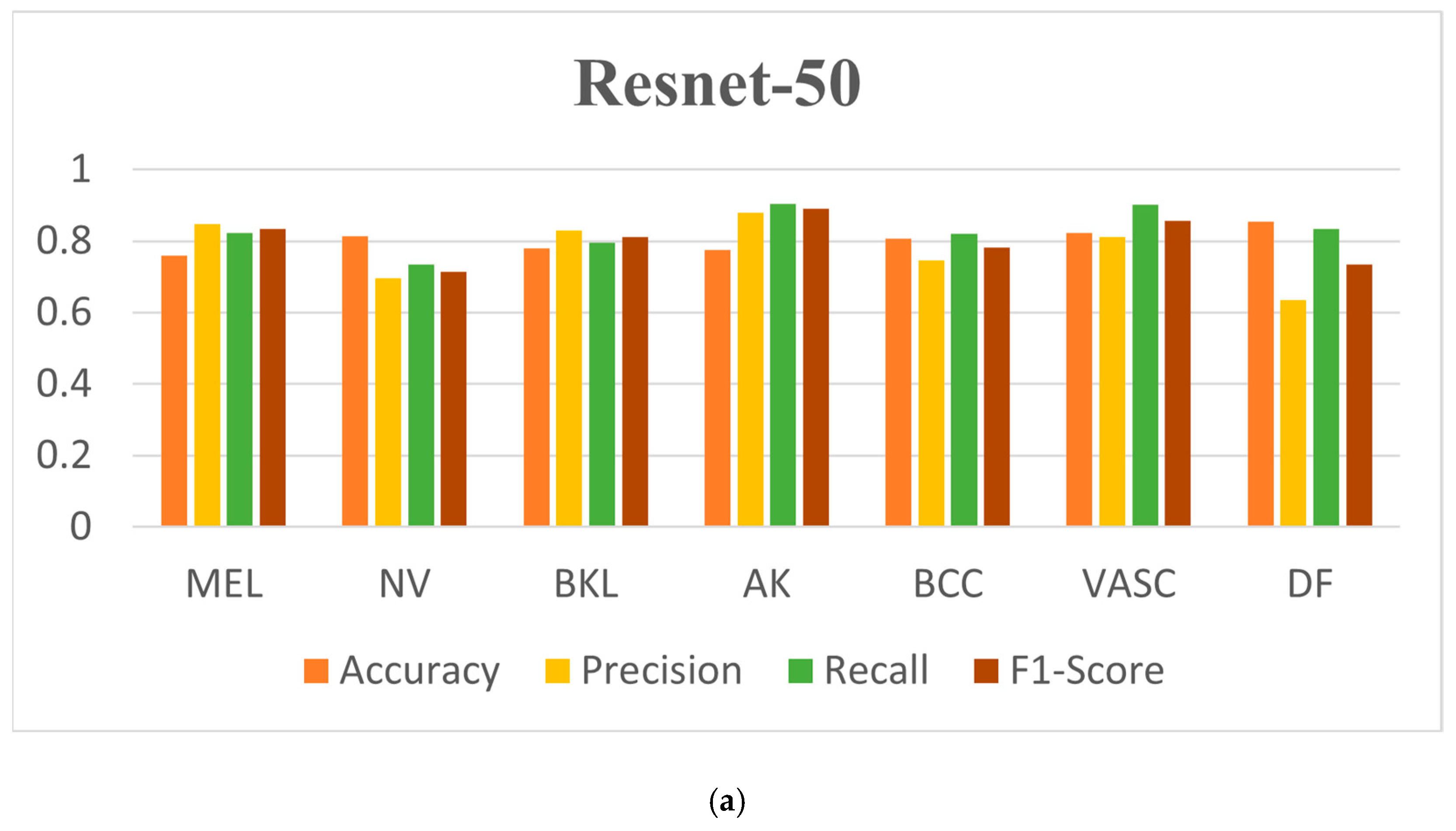

- Three networks (ResNet-50, DenseNet, and Inception-v2) were tested with both the NLLLoss and focal loss functions. The results show that focal loss yields better classification performance, particularly for diseases with few samples in the original dataset.

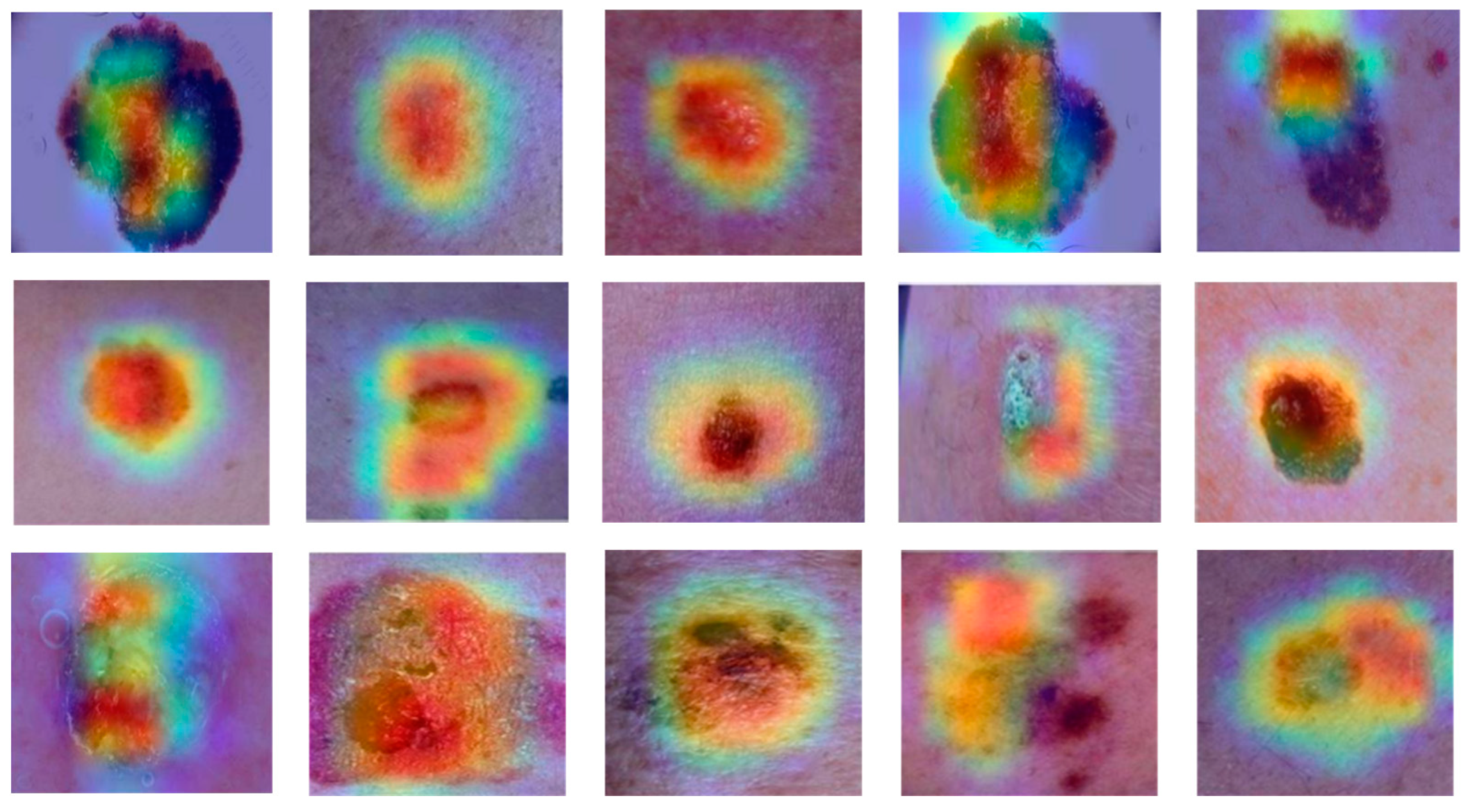

- Grad-CAM heat maps are used to visualise the image regions that drive the model's classification of skin disease images, giving insight into how the model reaches its decisions.
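The sketch below illustrates how such heat maps are usually produced. Standard Grad-CAM weights each feature map of a chosen convolutional layer by the spatial average of the gradient of the class score with respect to that map. It then sums the weighted maps and applies a ReLU. This NumPy version assumes the activations and gradients have already been extracted from the network, for example with framework hooks. The function name `grad_cam` and the toy arrays are hypothetical.

```python
import numpy as np


def grad_cam(activations: np.ndarray, gradients: np.ndarray) -> np.ndarray:
    """Grad-CAM heat map for one image and one target class.

    activations: (K, H, W) feature maps A^k of the chosen convolutional layer
    gradients:   (K, H, W) gradients of the class score y^c w.r.t. A^k
    returns:     (H, W) heat map scaled to [0, 1]
    """
    # Channel weights alpha_k^c: global average of the gradients over H and W
    weights = gradients.mean(axis=(1, 2))
    # Weighted sum of the feature maps, keeping only positive evidence (ReLU)
    cam = np.maximum(np.tensordot(weights, activations, axes=1), 0.0)
    # Scale to [0, 1] for display; an all-zero map is returned unchanged
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example (hypothetical values): channel 0 fires at pixel (1, 2) and
# raises the class score; channel 1 is uniform and slightly lowers it.
acts = np.zeros((2, 4, 4))
acts[0, 1, 2] = 5.0
acts[1] = 1.0
grads = np.zeros((2, 4, 4))
grads[0] = 1.0
grads[1] = -0.1
heat = grad_cam(acts, grads)
print(np.unravel_index(heat.argmax(), heat.shape))  # location of the hottest pixel
```

In practice the map is upsampled to the input resolution and overlaid on the dermoscopic image.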

2. Related Work

- The effectiveness of feature extraction and classification of dermoscopic images with convolutional neural networks depends on the performance of the network. However, most current algorithms use a single convolutional neural network, which yields relatively low classification accuracy.

- Classification studies of dermoscopic images are predominantly binary, particularly for melanoma recognition. Studies of multiclassification problems are scarce and limited in scope, so dermoscopic image multiclassification leaves considerable room for further research.

3. Materials and Methods

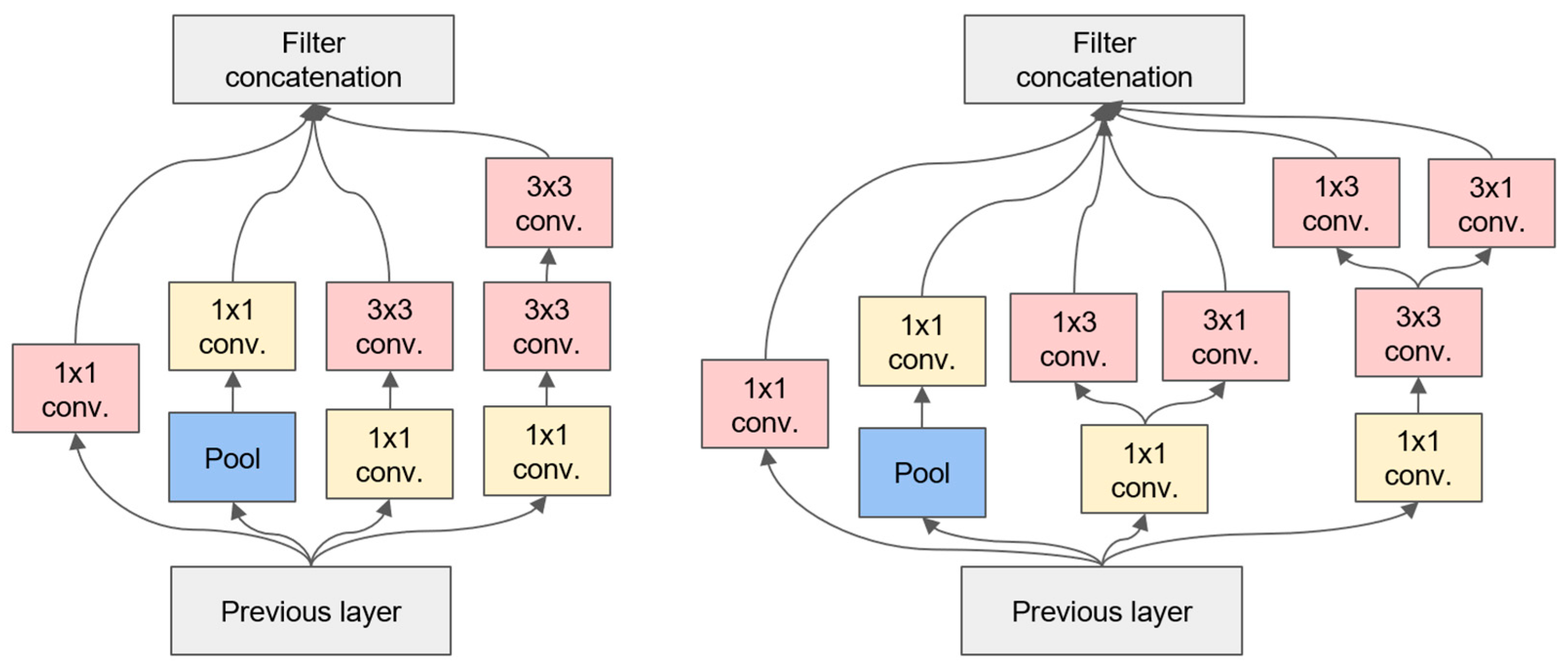

3.1. The Inception-v2 Network

- Convolutional decomposition: Inception-v2 decomposes the 7 × 7 convolution into two one-dimensional convolutions (1 × 7 followed by 7 × 1) and the 3 × 3 convolution into 1 × 3 and 3 × 1 convolutions. This decomposition has two benefits: it reduces the amount of computation, and it increases the nonlinearity of the network, which allows both the depth and the width of the network to be increased.

- Inception-v2 replaces the previous SGD optimiser with the RMSProp optimisation algorithm. RMSProp introduces an accumulation term, an exponentially weighted average of past squared gradients. This term scales each parameter's learning rate according to its gradient history, so the optimiser adapts better to the requirements of network training.

- To mitigate the adverse effects of labelling errors, Inception-v2 employs label smoothing. Instead of hard labels that are exclusively 1 or 0, the target labels used in the loss function are softened. The true class receives a value close to 1 (e.g., 0.8 or 0.88), and the other classes receive a value close to 0 (e.g., 0.1 or 0.11).
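Counting weights shows the saving from the convolutional decomposition described above. This minimal illustration ignores biases. It assumes, hypothetically, 192 input and output channels, with the intermediate layer keeping the same width. The helper names are illustrative only.

```python
def conv_weights(kh: int, kw: int, c_in: int, c_out: int) -> int:
    """Number of weights in one kh x kw convolution layer (bias ignored)."""
    return kh * kw * c_in * c_out


def factorized_weights(k: int, c_in: int, c_mid: int, c_out: int) -> int:
    """Weights in a 1 x k convolution followed by a k x 1 convolution."""
    return conv_weights(1, k, c_in, c_mid) + conv_weights(k, 1, c_mid, c_out)


channels = 192  # hypothetical layer width
for k in (3, 7):
    full = conv_weights(k, k, channels, channels)
    fact = factorized_weights(k, channels, channels, channels)
    print(f"{k}x{k}: {full} weights -> 1x{k} + {k}x1: {fact} weights ({fact / full:.0%})")
```

With equal channel widths the ratio is 2k/k². The factorized pair therefore needs about 67% of the weights for k = 3 and about 29% for k = 7. With "same" padding the multiply-accumulate count per output position scales in the same way.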
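The RMSProp update described above can be sketched in a few lines of plain Python. This is the textbook form of the algorithm, not the exact configuration used to train the model. The learning rate `lr`, decay rate `rho`, `eps`, and the toy quadratic objective are all illustrative assumptions.

```python
import math


def rmsprop_step(params, grads, sq_avg, lr=0.01, rho=0.9, eps=1e-8):
    """One RMSProp update, modifying params and sq_avg (lists of floats) in place."""
    for i, g in enumerate(grads):
        # Accumulation term: exponentially weighted average of past squared gradients
        sq_avg[i] = rho * sq_avg[i] + (1.0 - rho) * g * g
        # Dividing by its square root gives each parameter its own effective step size
        params[i] -= lr * g / (math.sqrt(sq_avg[i]) + eps)


# Usage on a hypothetical, badly scaled objective f(x, y) = x**2 + 10 * y**2
params = [3.0, -2.0]
sq_avg = [0.0, 0.0]
for _ in range(1000):
    grads = [2.0 * params[0], 20.0 * params[1]]
    rmsprop_step(params, grads, sq_avg)
print(params)
```

The gradient along y is ten times steeper, yet both coordinates move at a similar pace. This is because each step is normalised by that coordinate's own gradient history.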
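The label-smoothing rule in Szegedy et al.'s "Rethinking the inception architecture for computer vision" (listed in the references) mixes the one-hot target with a uniform distribution over the K classes: q'(k) = (1 − ε)·[k = y] + ε/K. The sketch below applies this rule to the seven lesion classes used in this study. The value ε = 0.1 is an illustrative assumption, and the resulting values depend on both ε and K.

```python
def smooth_labels(true_class: int, num_classes: int, epsilon: float = 0.1) -> list:
    """Label smoothing: q'(k) = (1 - epsilon) * [k == y] + epsilon / K."""
    uniform = epsilon / num_classes
    return [(1.0 - epsilon) * (k == true_class) + uniform for k in range(num_classes)]


classes = ["MEL", "NVs", "BKL", "AK", "BCC", "VASCs", "DF"]
target = smooth_labels(classes.index("AK"), len(classes))
print([round(t, 4) for t in target])
```

The smoothed target still sums to 1. The model is therefore never pushed towards an output probability of exactly 1, which limits the damage from a mislabelled sample.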

3.2. The Focal Loss Function
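The focal loss of Lin et al. (listed in the references) is FL(p_t) = −α_t (1 − p_t)^γ log(p_t). The modulating factor (1 − p_t)^γ down-weights well-classified samples, so training concentrates on hard and under-represented examples. With γ = 0 and α_t = 1, it reduces to the cross-entropy obtained by applying NLLLoss to log-softmax outputs. The plain-Python sketch below compares the two losses for a single sample. The default γ = 2 is the value Lin et al. found to work well. The optional per-class `alpha` list and the example logits are hypothetical and are not taken from this study.

```python
import math
from typing import Optional, Sequence


def softmax(logits: Sequence[float]) -> list:
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    s = sum(exps)
    return [e / s for e in exps]


def nll_loss(logits: Sequence[float], target: int) -> float:
    """Negative log-likelihood of the softmax output (standard cross-entropy)."""
    return -math.log(softmax(logits)[target])


def focal_loss(logits: Sequence[float], target: int, gamma: float = 2.0,
               alpha: Optional[Sequence[float]] = None) -> float:
    """Multiclass focal loss for one sample: -alpha_t * (1 - p_t)**gamma * log(p_t)."""
    p_t = softmax(logits)[target]
    alpha_t = 1.0 if alpha is None else alpha[target]
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


easy = [4.0, 0.0, 0.0]  # confidently correct prediction for class 0
hard = [0.0, 0.0, 0.0]  # uninformative prediction
for name, logits in (("easy", easy), ("hard", hard)):
    ce, fl = nll_loss(logits, 0), focal_loss(logits, 0)
    print(f"{name}: NLL = {ce:.4f}, focal = {fl:.4f}, ratio = {fl / ce:.4f}")
```

The easy sample's loss is scaled by (1 − p_t)² ≈ 0.001, whereas the hard sample keeps about 44% of its loss. As a result, rare classes that the model still gets wrong dominate the gradient.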

3.3. Datasets

3.4. Image Enhancement and Hair Removal
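A common way to remove hair from dermoscopic images, in the spirit of DullRazor, is a morphological black-hat filter:

1. A greyscale closing erases thin dark structures.
2. The difference between the closed and original images highlights those structures.
3. The pixels above a threshold are filled in.

The NumPy sketch below is one such approach and not necessarily the pipeline used in this study. The kernel size, the threshold, and the simple fill step are assumptions. The fill copies values from the closed image rather than performing true inpainting such as OpenCV's `cv2.inpaint`.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _grey_filter(img: np.ndarray, size: int, reducer) -> np.ndarray:
    """Apply a size x size max or min filter (greyscale dilation or erosion)."""
    pad = size // 2
    padded = np.pad(img, pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return reducer(windows, axis=(-2, -1))


def remove_hair(gray: np.ndarray, size: int = 9, threshold: float = 10.0):
    """Return (cleaned image, hair mask) for a 2-D greyscale image."""
    gray = gray.astype(np.float64)
    # Greyscale closing = dilation (max) followed by erosion (min); it fills in
    # dark structures thinner than the structuring element, such as hairs.
    closed = _grey_filter(_grey_filter(gray, size, np.max), size, np.min)
    # Black-hat transform: large where the closing brightened thin dark lines
    blackhat = closed - gray
    mask = blackhat > threshold
    # Crude fill (assumption): replace hair pixels with the closed image
    cleaned = gray.copy()
    cleaned[mask] = closed[mask]
    return cleaned, mask


# Synthetic image: uniform skin tone with one dark, 1-pixel-wide "hair"
skin = np.full((32, 32), 200.0)
skin[10, :] = 50.0
cleaned, mask = remove_hair(skin)
print(int(mask.sum()), float(cleaned.min()))
```

For RGB images the mask is usually computed on a greyscale version and then applied to each colour channel.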

3.5. Evaluation Metrics
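Per-class accuracy, precision, recall, and F1-score, as reported in the results tables, are typically computed one-versus-rest from a confusion matrix. The sketch below uses these standard definitions on a single confusion matrix, so it does not model any averaging over runs or folds. The 2 × 2 matrix in the usage lines is hypothetical.

```python
def per_class_metrics(conf):
    """conf[i][j] = number of samples of true class i predicted as class j."""
    n = len(conf)
    total = sum(sum(row) for row in conf)
    report = []
    for c in range(n):
        tp = conf[c][c]
        fn = sum(conf[c]) - tp                       # class c predicted as another class
        fp = sum(conf[r][c] for r in range(n)) - tp  # other classes predicted as c
        tn = total - tp - fn - fp
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report.append({"accuracy": (tp + tn) / total, "precision": precision,
                       "recall": recall, "f1": f1})
    return report


report = per_class_metrics([[8, 2], [1, 9]])
for c, m in enumerate(report):
    print(c, {k: round(v, 4) for k, v in m.items()})
```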

4. Results and Discussion

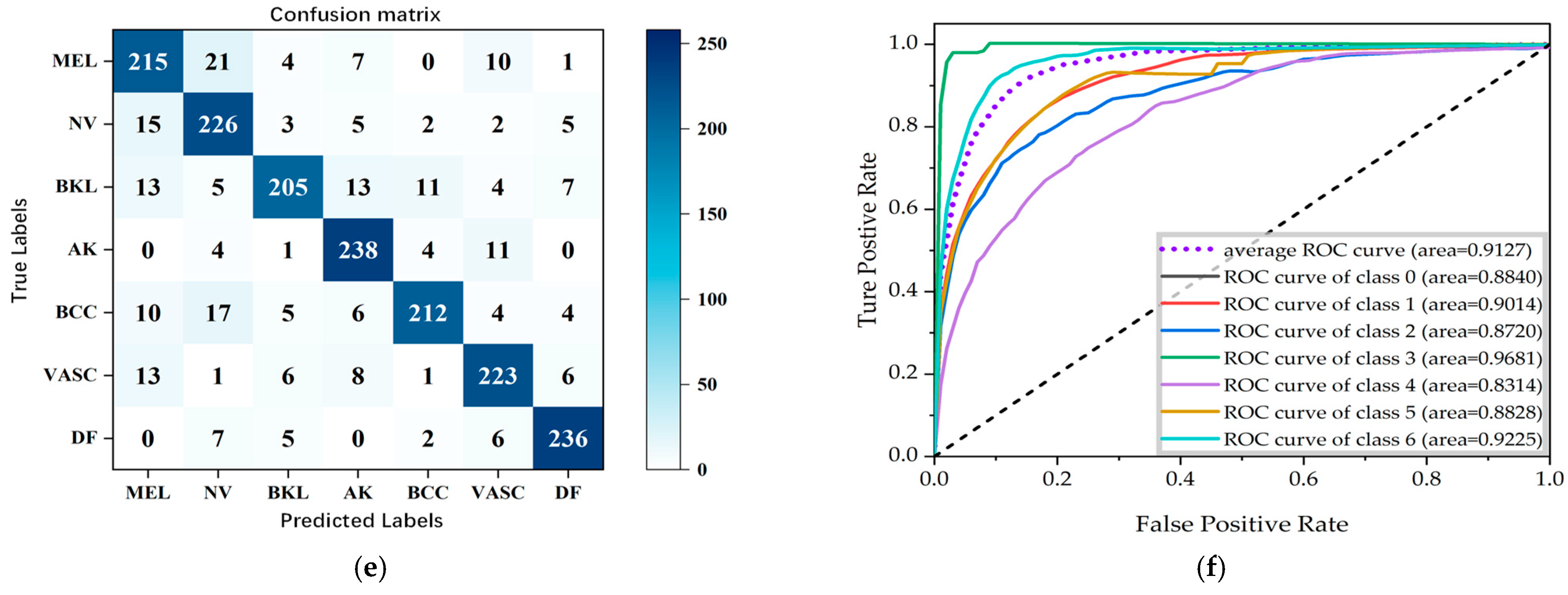

4.1. Comparison with Other Models

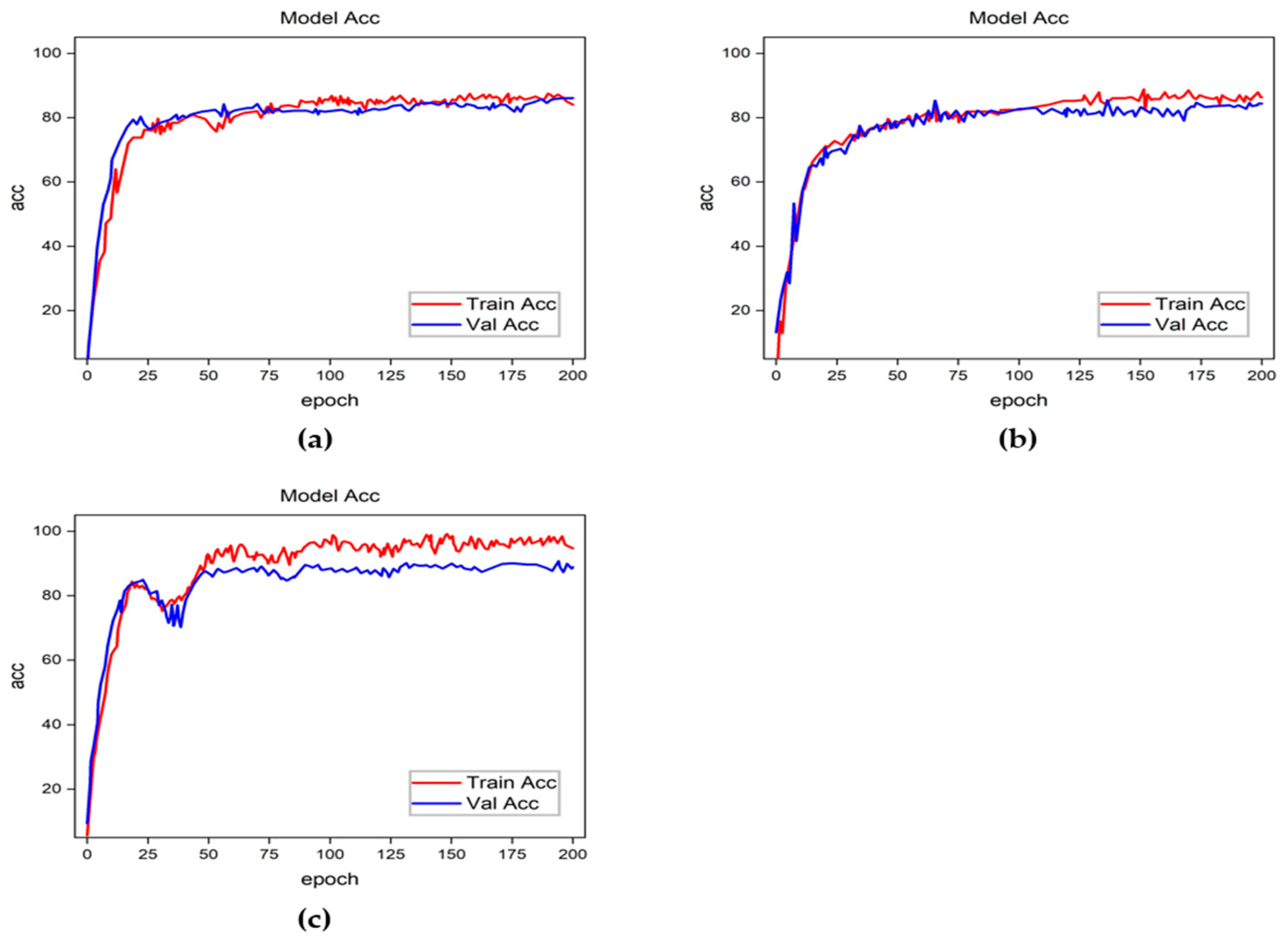

4.2. Ablation Experiments

- Experiment 1: Using hair removal and focal loss without data enhancement;

- Experiment 2: Using data enhancement and focal loss without hair removal;

- Experiment 3: Using hair removal and data enhancement without focal loss;

- Experiment 4: Using data enhancement, hair removal, and focal loss.
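The contribution of each component can be read off the ablation results table. Subtract the overall accuracy of the experiment that omits a component from that of Experiment 4. The calculation below uses only the accuracies reported in that table.

```python
full_accuracy = 0.8904  # Exp.4: data enhancement + hair removal + focal loss
without = {
    "data enhancement": 0.8702,  # Exp.1
    "hair removal": 0.8651,      # Exp.2
    "focal loss": 0.8433,        # Exp.3
}
drops = {name: round(full_accuracy - acc, 4) for name, acc in without.items()}
for name, drop in sorted(drops.items(), key=lambda item: item[1], reverse=True):
    print(f"without {name}: accuracy {drop:.4f} lower than Exp.4")
```

Removing focal loss causes the largest drop (0.0471), followed by hair removal (0.0253) and data enhancement (0.0202).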

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Keerthana, D.; Venugopal, V.; Nath, M.K.; Mishra, M. Hybrid convolutional neural networks with SVM classifier for classification of skin cancer. Biomed. Eng. Adv. 2023, 5, 100069.

- Arnold, M.; Singh, D.; Laversanne, M.; Vignat, J.; Vaccarella, S.; Meheus, F.; Cust, A.E.; de Vries, E.; Whiteman, D.C.; Bray, F. Global Burden of Cutaneous Melanoma in 2020 and Projections to 2040. JAMA Dermatol. 2022, 158, 495–503.

- Myronenko, A. 3D MRI brain tumor segmentation using autoencoder regularization. In International MICCAI Brainlesion Workshop; Springer International Publishing: Berlin/Heidelberg, Germany, 2018; Volume 3.

- Qing, L.; Wei, W.; Zijun, M.; Haixia, X. A dermatoscope image classification method based on FL-ResNet50. Adv. Lasers Optoelectron. 2020, 57, 224–232.

- Piccialli, F.; Di Somma, V.; Giampaolo, F.; Cuomo, S.; Fortino, G. A survey on deep learning in medicine: Why, how and when? Inf. Fusion 2021, 66, 111–137.

- Razmjooy, N.; Ashourian, M.; Karimifard, M.; Estrela, V.V.; Loschi, H.J.; Nascimento, D.D.; França, R.P.; Vishnevski, M. Computer-aided diagnosis of skin cancer: A review. Curr. Med. Imaging 2020, 16, 781–793.

- Naeem, A.; Farooq, M.S.; Khelifi, A.; Abid, A. Malignant melanoma classification using deep learning: Datasets, performance measurements, challenges and opportunities. IEEE Access 2020, 8, 110575–110597.

- Yuan, Y.; Chao, M.; Lo, Y.C. Automatic Skin Lesion Segmentation Using Deep Fully Convolutional Networks with Jaccard Distance. IEEE Trans. Med. Imaging 2017, 36, 1876–1886.

- Naeem, A.; Anees, T.; Naqvi, R.A.; Loh, W.-K. A comprehensive analysis of recent deep and federated-learning-based methodologies for brain tumor diagnosis. J. Pers. Med. 2022, 12, 275.

- Duggani, K.; Nath, M.K. A technical review report on deep learning approach for skin cancer detection and segmentation. Data Anal. Manag. Proc. ICDAM 2021, 54, 87–99.

- Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Celebi, M.E.; Kingravi, H.A.; Uddin, B.; Iyatomi, H.; Aslandogan, Y.A.; Stoecker, W.V.; Moss, R.H. A methodological approach to the classification of dermoscopy images. Comput. Med. Imaging Graph. 2007, 31, 362–373.

- Malik, H.; Anees, T.; Din, M.; Ahmad, N. CDC_Net: Multi-classification convolutional neural network model for detection of COVID-19, pneumothorax, pneumonia, lung Cancer, and tuberculosis using chest X-rays. Multimed. Tools Appl. 2022, 82, 13855–13880.

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Ali, M.S.; Miah, S.; Haque, J.; Rahman, M.; Islam, K. An enhanced technique of skin cancer classification using deep convolutional neural network with transfer learning models. Mach. Learn. Appl. 2021, 5, 100036.

- Saba, T.; Khan, M.A.; Rehman, A.; Marie-Sainte, S.L. Region extraction and classification of skin cancer: A heterogeneous framework of deep CNN features fusion and reduction. J. Med. Syst. 2019, 43, 289.

- Ahmad, N.; Anees, T.; Ahmed, K.T.; Naqvi, R.A.; Ahmad, S.; Whangbo, T. Deep learned vectors’ formation using auto-correlation, scaling, and derivations with CNN for complex and huge image retrieval. Complex Intell. Syst. 2022, 4, 1–23.

- Chadaga, K.; Prabhu, S.; Sampathila, N.; Chadaga, R.; Sengupta, S. Predicting cervical cancer biopsy results using demographic and epidemiological parameters: A custom stacked ensemble machine learning approach. Cogent Eng. 2022, 9, 2143040.

- Khanna, V.V.; Chadaga, K.; Sampathila, N.; Prabhu, S.; Bhandage, V.; Hegde, G.K. A Distinctive Explainable Machine Learning Framework for Detection of Polycystic Ovary Syndrome. Appl. Syst. Innov. 2023, 6, 32.

- He, X.Y.; Han, Z.Y.; Wei, B.Z. Identification and classification of pigmented skin diseases based on deep convolutional neural network. Comput. Appl. 2018, 38, 3236–3240.

- Garnavi, R.; Aldeen, M.; Bailey, J. Computer-Aided Diagnosis of Melanoma Using Border- and Wavelet-Based Texture Analysis. IEEE Trans. Inf. Technol. Biomed. 2012, 16, 1239–1252.

- Alfed, N.; Khelifi, F. Bagged textural and color features for melanoma skin cancer detection in dermoscopic and standard images. Expert Syst. Appl. 2017, 90, 101–110.

- Ganster, H.; Pinz, P.; Rohrer, R.; Wildling, E.; Binder, M.; Kittler, H. Automated melanoma recognition. IEEE Trans. Med. Imaging 2001, 20, 233–239.

- Bajwa, M.N.; Muta, K.; Malik, M.I.; Siddiqui, S.A.; Braun, S.A.; Homey, B.; Dengel, A.; Ahmed, S. Computer-aided diagnosis of skin diseases using deep neural networks. Appl. Sci. 2020, 10, 2488.

- Nugroho, A.A.; Slamet, I.; Sugiyanto, S. Skins cancer identification system of HAM10000 skin cancer dataset using convolutional neural network. AIP Conf. Proc. 2019, 2202, 020039.

- Sadeghi, M.; Lee, T.K.; McLean, D.; Lui, H.; Atkins, M.S. Detection and Analysis of Irregular Streaks in Dermoscopic Images of Skin Lesions. IEEE Trans. Med. Imaging 2013, 32, 849–861.

- Adegun, A.A.; Viriri, S. Deep learning-based system for automatic melanoma detection. IEEE Access 2019, 8, 7160–7172.

- Moldovan, D. Transfer learning based method for two-step skin cancer images classification. In Proceedings of the 2019 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2019; pp. 1–4.

- Çevik, E.; Zengin, K. Classification of skin lesions in dermatoscopic images with deep convolution network. Avrupa Bilim Teknol. Derg. 2019, 6, 309–318.

- Hasan, M.; Barman, S.D.; Islam, S.; Reza, A.W. Skin cancer detection using convolutional neural network. In Proceedings of the 2019 5th International Conference on Computing and Artificial Intelligence, Bali, Indonesia, 19–22 April 2019; pp. 254–258.

- Gilani, Q.; Syed, S.T.; Umair, M.; Marques, O. Skin Cancer Classification Using Deep Spiking Neural Network. J. Digit. Imaging 2023, 36, 1137–1147.

- Kousis, I.; Perikos, I.; Hatzilygeroudis, I.; Virvou, M. Deep learning methods for accurate skin cancer recognition and mobile application. Electronics 2022, 11, 1294.

- Ranpreet, K.; GholamHosseini, H.; Sinha, R.; Lindén, M. Melanoma classification using a novel deep convolutional neural network with dermoscopic images. Sensors 2022, 22, 1134.

- Alwakid, G.; Gouda, W.; Humayun, M.; Sama, N.U. Melanoma Detection Using Deep Learning-Based Classifications. Healthcare 2022, 10, 2481.

- Ali, K.; Shaikh, Z.A.; Khan, A.A.; Laghari, A.A. Multiclass skin cancer classification using EfficientNets: A first step towards preventing skin cancer. Neurosci. Inform. 2022, 2, 100034.

- Majtner, T.; Bajić, B.; Yildirim, S.; Hardeberg, J.Y.; Lindblad, J.; Sladoje, N. Ensemble of convolutional neural networks for dermoscopic images classification. arXiv 2018, arXiv:1808.05071.

- Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

- Peng, S.; Jiang, H.; Wang, H.; Alwageed, H.; Zhou, Y.; Sebdani, M.M.; Yao, Y.D. Modulation classification based on signal constellation diagrams and deep learning. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 718–727.

- Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 318–327.

- Malik, H.; Farooq, M.S.; Khelifi, A.; Abid, A.; Qureshi, J.N.; Hussain, M. A comparison of transfer learning performance versus health experts in disease diagnosis from medical imaging. IEEE Access 2020, 8, 139367–139386.

- Mijwil, M.M. Skin cancer disease images classification using deep learning solutions. Multimed. Tools Appl. 2021, 80, 26255–26271.

- Indraswari, R.; Rokhana, R.; Herulambang, W. Melanoma image classification based on MobileNetV2 network. Procedia Comput. Sci. 2022, 197, 198–207.

- Khan, A.M.; Akram, T.; Zhang, Y.-D.; Sharif, M. Attributes based skin lesion detection and recognition: A mask RCNN and transfer learning-based deep learning framework. Pattern Recognit. Lett. 2021, 143, 58–66.

- Zhou, Y.; Koyuncu, C.; Lu, C.; Grobholz, R.; Katz, I.; Madabhushi, A.; Janowczyk, A. Multi-site cross-organ calibrated deep learning (MuSClD): Automated diagnosis of non-melanoma skin cancer. Med. Image Anal. 2023, 84, 102702.

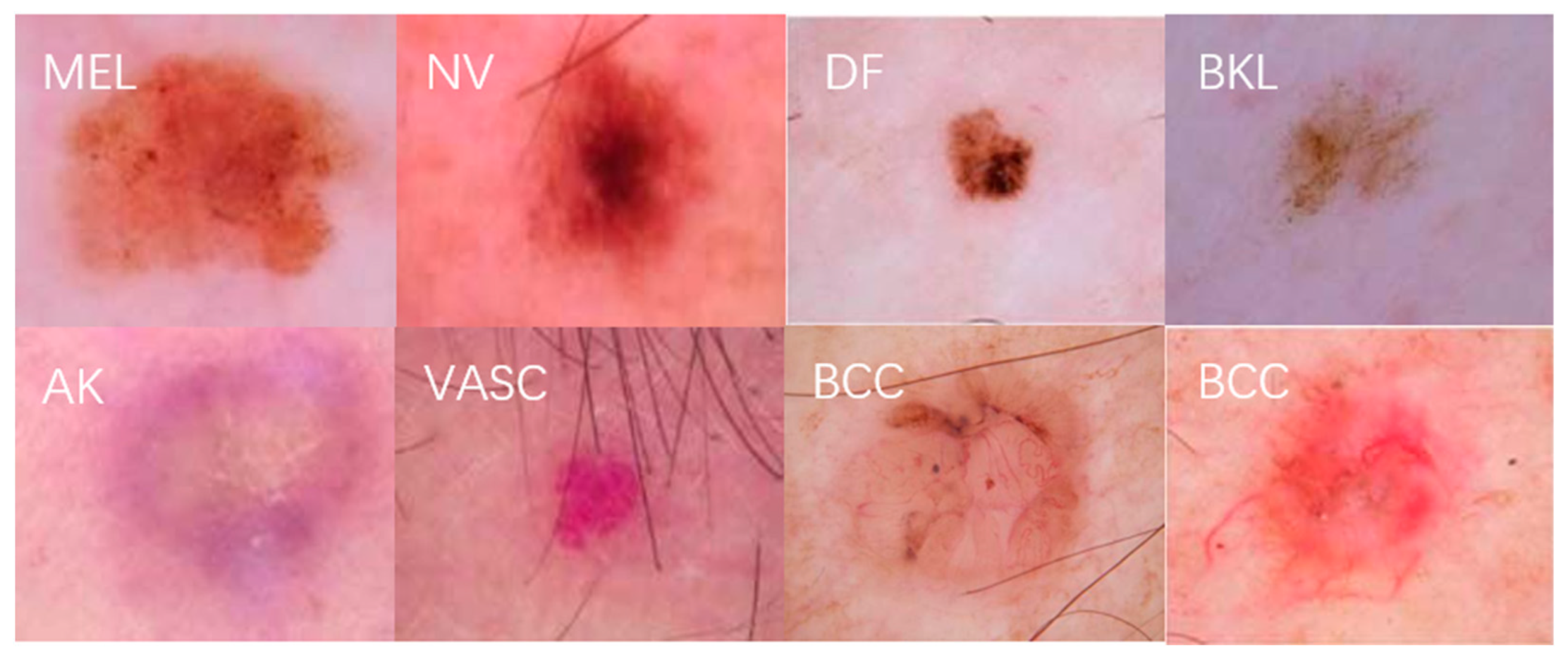

| Skin Disease Name | Description | Number of Pictures | Percentage |
|---|---|---|---|
| MEL | Melanoma can be treated with surgical removal in early stages | 4523 | 17.85% |
| NVs | There are many different variants of melanocytic nevi | 12876 | 50.83% |
| BKL | They have similar characteristics to melanoma | 2625 | 10.36% |
| AK | Actinic keratosis and carcinoma caused by excessive sun exposure | 868 | 3.43% |
| BCC | Basal cell carcinoma is a common epithelial skin cancer | 3324 | 13.12% |
| VASCs | Vascular skin lesions often look red or purple, with clear boundaries | 254 | 1.01% |
| DF | Dermatofibroma is a proliferation of fibrous tissue | 240 | 0.95% |
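The counts in the dataset table show a strong class imbalance. The calculation below quantifies it. As one common but hypothetical remedy, it also derives inverse-frequency class weights of the kind sometimes used for the α term of focal loss. These weights are not taken from the study.

```python
counts = {"MEL": 4523, "NVs": 12876, "BKL": 2625, "AK": 868,
          "BCC": 3324, "VASCs": 254, "DF": 240}
total = sum(counts.values())
num_classes = len(counts)
imbalance_ratio = max(counts.values()) / min(counts.values())
# Inverse-frequency weights, normalised so that a perfectly balanced dataset gives 1.0 per class
weights = {name: total / (num_classes * n) for name, n in counts.items()}
print(f"images: {total}, largest/smallest class: {imbalance_ratio:.2f}")
for name, w in weights.items():
    print(f"{name:>6}: weight {w:.3f}")
```

The largest class (NVs) has about 54 times as many images as the smallest (DF).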

| Method | Class | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| ResNet-50 | MEL | 0.7577 | 0.8283 | 0.8122 | 0.8168 |
| | NVs | 0.8133 | 0.6760 | 0.7568 | 0.7230 |
| | BKL | 0.7650 | 0.8123 | 0.7434 | 0.7721 |
| | AK | 0.7877 | 0.8865 | 0.8821 | 0.8822 |
| | BCC | 0.8124 | 0.7256 | 0.7977 | 0.7531 |
| | VASCs | 0.8365 | 0.8006 | 0.9113 | 0.8612 |
| | DF | 0.8428 | 0.6577 | 0.8345 | 0.7455 |
| DenseNet | MEL | 0.8275 | 0.8503 | 0.8290 | 0.8345 |
| | NVs | 0.8014 | 0.7566 | 0.7803 | 0.7753 |
| | BKL | 0.8169 | 0.8177 | 0.8554 | 0.8441 |
| | AK | 0.8024 | 0.9059 | 0.8624 | 0.8824 |
| | BCC | 0.8299 | 0.7134 | 0.7805 | 0.7306 |
| | VASCs | 0.8254 | 0.8178 | 0.7942 | 0.8081 |
| | DF | 0.8999 | 0.8502 | 0.8177 | 0.8355 |
| Inception-v2 | MEL | 0.8105 | 0.8802 | 0.8053 | 0.8237 |
| | NVs | 0.8521 | 0.9011 | 0.7825 | 0.8460 |
| | BKL | 0.7346 | 0.8152 | 0.8821 | 0.8513 |
| | AK | 0.8829 | 0.9326 | 0.8765 | 0.9003 |
| | BCC | 0.8199 | 0.7144 | 0.7538 | 0.7325 |
| | VASCs | 0.8214 | 0.8527 | 0.8110 | 0.8211 |
| | DF | 0.8702 | 0.8860 | 0.8723 | 0.8724 |

| Method | Class | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| ResNet-50 | MEL | 0.7602 | 0.8477 | 0.8223 | 0.8350 |
| | NVs | 0.8134 | 0.6952 | 0.7341 | 0.7146 |
| | BKL | 0.7798 | 0.8288 | 0.7955 | 0.8121 |
| | AK | 0.7742 | 0.8792 | 0.9041 | 0.8916 |
| | BCC | 0.8076 | 0.7466 | 0.8197 | 0.7831 |
| | VASCs | 0.8235 | 0.8108 | 0.9023 | 0.8565 |
| | DF | 0.8542 | 0.6355 | 0.8345 | 0.7350 |
| DenseNet | MEL | 0.8272 | 0.8766 | 0.8119 | 0.8442 |
| | NVs | 0.7961 | 0.7978 | 0.7783 | 0.7880 |
| | BKL | 0.8013 | 0.8402 | 0.8656 | 0.8529 |
| | AK | 0.8105 | 0.9184 | 0.8769 | 0.8976 |
| | BCC | 0.8117 | 0.6875 | 0.8002 | 0.7438 |
| | VASCs | 0.8432 | 0.8333 | 0.8053 | 0.8193 |
| | DF | 0.9088 | 0.8634 | 0.8221 | 0.8427 |
| Inception-v2 | MEL | 0.8331 | 0.8757 | 0.8169 | 0.8463 |
| | NVs | 0.8761 | 0.9104 | 0.7993 | 0.8548 |
| | BKL | 0.7968 | 0.8264 | 0.8976 | 0.8620 |
| | AK | 0.9014 | 0.9424 | 0.8901 | 0.9162 |
| | BCC | 0.8203 | 0.7643 | 0.8105 | 0.7874 |
| | VASCs | 0.8661 | 0.8645 | 0.8263 | 0.8454 |
| | DF | 0.9142 | 0.9325 | 0.8658 | 0.8991 |

| Model | Datasets | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| 2D superpixels + RCNN [40] | HAM-10000 | 0.8550 | 0.8340 | 0.8450 | 0.8530 |
| ResNeXt101 [41] | ISIC-2019 | 0.8850 | 0.8740 | 0.8810 | 0.8830 |
| MobileNetV2 [42] | ISIC-2019 | 0.8530 | 0.7890 | 0.8633 | |
| VGG19 [43] | ISIC-2019, Derm-IS | 0.8857 | 0.9070 | 0.8666 | 0.8765 |
| ConvNet [44] | ISIC-2018, Derm-IS | 0.8690 | 0.8614 | 0.8747 | |
| Ours | ISIC-2019 | 0.8904 | 0.8737 | 0.9015 | 0.8876 |

| Model | Data Enhancement | Hair Removal | Focal Loss | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|---|---|
| Exp.1 | × | ✓ | ✓ | 0.8702 | 0.8422 | 0.8135 | 0.8304 |
| Exp.2 | ✓ | × | ✓ | 0.8651 | 0.8331 | 0.8290 | 0.8315 |
| Exp.3 | ✓ | ✓ | × | 0.8433 | 0.8209 | 0.8311 | 0.8260 |
| Exp.4 | ✓ | ✓ | ✓ | 0.8904 | 0.8737 | 0.9015 | 0.8876 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhi, S.; Li, Z.; Yang, X.; Sun, K.; Wang, J. A Multiclassification Model for Skin Diseases Using Dermatoscopy Images with Inception-v2. Appl. Sci. 2024, 14, 10197. https://doi.org/10.3390/app142210197
