Featured Application

This wearable system employs innovative sonification techniques to enhance balance and overall physical awareness. It offers rehabilitation and healthcare sectors an accessible and intuitive tool for balance training and physical conditioning, where monitoring and improving postural stability is crucial.

Abstract

This paper presents the design, development, and feasibility testing of a wearable sonification system for real-time posture monitoring and feedback. The system utilizes inexpensive motion sensors integrated into a compact, wearable package to measure body movements and standing balance continuously. The sensor data is processed through sonification algorithms to generate real-time auditory feedback cues indicating the user’s balance and posture. The system aims to improve movement awareness and physical conditioning, with potential applications in balance rehabilitation and physical therapy. Initial feasibility testing was conducted with a small group of healthy participants performing standing balance tasks with eyes open and closed. Results indicate that the real-time audio feedback improved participants’ ability to maintain balance, especially in the case of closed eyes. This preliminary study demonstrates the potential for wearable sonification systems to provide intuitive real-time feedback on posture and movement to improve motor skills and balance.

1. Introduction

As our society grapples with an aging population and increased incidence of gait- and balance-related disorders [,,], the need for innovative, accessible, and user-friendly interventions becomes critical. The Internet of Things (IoT) has become an integral part of everyday life and is used to enhance human–machine interfaces (HMI), which allow users to interact with devices and perform tasks. The common forms of HMI are windows, icons, menus, and pointers in personal computers and finger gestures with touch screens or motion sensors. Nevertheless, beyond the control of fingers to strike keys or swipe a screen, larger movements of the whole body are being examined through wearable technologies. It is argued that movement- and biosensor-based interactions will reach beyond conventional HMIs, which are primarily visually mediated and physically constrained, to encompass greater movement expression and awareness by sensing the whole body [,,]. In terms of market adoption, studies have shown that people are willing to use wearable technologies to monitor their health [,,,,]. One purpose of this monitoring is to capture continuous movement data []. Continuous data can potentially improve patient experience, facilitate clinical evaluation [], and provide information on the quality of movement via realistic pictures of patient functioning during rehabilitation [].

An increasing number of commercially available low-cost, off-the-shelf devices and open-source software have created opportunities for manufacturers, designers, engineers, technologists, and researchers to design and develop devices that can be worn and enhance interactions between them and their users. When deployed in motion-aware applications, these wearables can improve awareness of the limb’s position, movement, and gait activities [,,,,]. Wearable technologies for accurate gait event detection have come in many different forms, such as inertial measurement units (IMUs) [,,,,] with embedded sensors, including accelerometers [,,,,] and gyroscopes [,,].

Sonification is a process of using non-speech audio to convey information to users []. The application of sonification in gait and rehabilitation is a growing area of interest [,,,,]. It involves translating gait characteristics captured by wearable technology into auditory feedback, aiding in establishing rhythmic and harmonious gait or movement patterns.

The integration of IoT, wearable technology, and sonification offers a promising avenue for personalized healthcare interventions [,,]. In this study, IoT and wearable technology are combined with sonification to enhance movement awareness. Using affordable, compact motion sensors, the research focused on developing a user-centric feedback system that applies sonification techniques to continuous body movement measurements. This provides real-time, data-driven feedback, which is crucial for improving physical awareness and balance, particularly for individuals with movement disorders.

2. Related Work

This literature review investigated the developments of wearable technologies and approaches to providing auditory feedback to enhance gait and rehabilitation outcomes through sonification.

An earlier study by Khandelwal and Wickstrom [] used accelerometer signals to detect gait events such as heel strike (HS) and toe off (TO) in a normal gait cycle. To use accelerometers for continuous monitoring or long-term analysis, they developed an algorithm to incorporate the magnitude of the resultant accelerometer signal, obtained from each axis of the three-axis accelerometer, into time-frequency analysis to detect gait events. Their algorithm was validated for its accuracy and robustness using gait experiments conducted in indoor and outdoor environments. The experiment involved gait event detections with varying speeds, terrains, and surface inclinations. However, their algorithm was only developed for a single accelerometer around the ankle. It did not account for the impact due to the left or right foot and the applicability of a multi-accelerometer setup with improved accuracy. Other researchers have found that a gyroscope is the preferred device to detect gait events (HS and TO), because its measurements are not sensitive to the body part where it was placed [,,]. Gouwanda et al. [] developed a low-cost wearable wireless gyroscope worn around the foot and ankle to identify gait events. The gyroscope was coupled with an Arduino Pro Mini for (i) converting raw sensing data to angular rate values, (ii) detecting HS and TO, and (iii) transmitting data to a computer for processing. However, in their experiment, the reliability and accuracy of the wireless gyroscope deteriorated over an extended period. Our investigation identified that the reduced reliability and accuracy of wireless gyroscopes over extended periods could stem from multiple sources. These included the physical deterioration of sensor components, challenges in maintaining system synchronization over time, and accumulated errors and other limitations inherent in the algorithms presented in the literature. 
Therefore, developing a more resilient algorithmic framework could mitigate these issues, and capitalizing on the advantages of low-cost, single wearable sensing technology could be a feasible solution.

Auditory feedback in wearable technology, especially in the form of sonification, has demonstrated its potential to enhance motor coordination and gait rehabilitation [,,,]. Sonification signals generated according to different types and magnitudes of body motions can provide crucial feedback, especially during a gait cycle and other time-related activities, to assist a person in the perception of their movements [,]. For the last decade, rhythmic auditory cueing has been used to transform human movement patterns into sound to enhance perception accuracy []. Auditory feedback has been widely used to allow users to perceive their movement amplitudes and positioning better and to enhance motor recovery during rehabilitation [,,] and in the execution, control, and planning of movement [,]. Auditory feedback has also been shown to influence postural sway, where acoustic properties of the auditory stimuli might be more influential in reducing sway than any position or velocity information encoded in the stimuli [,,]. Auditory feedback has been shown to improve motor performance in skills such as balancing compared to purely visual feedback []. Swaying movements outside a balance reference region have been corrected by a tone mapped to this movement acceleration in the mediolateral and posterior–anterior directions [,,].

In the context of Parkinson’s disease, sonification, especially when incorporating musical elements, has shown promising results in improving balance []. Another study [] highlighted that the presence or absence of auditory cues can facilitate or hinder typical motor patterns and stability in gaze. The authors investigated the effects of concurrent versus delayed auditory feedback and found that concurrent auditory feedback was beneficial compared to delayed auditory feedback. The delayed auditory feedback led to significant distractions and irritations, significantly affecting the participants’ movement performance. Similarly, a recent study by Wall et al. [] has investigated sonification through manipulating acoustic features (e.g., pitch, timbre, amplitude, tempo, duration, and spatialization) and their potential to influence gait rehabilitation. They found that manipulating acoustic feedback played a vital role in user engagement and enhanced therapeutic outcomes.

The complexities of auditory feedback’s impact on motor tasks have been highlighted, suggesting it can both aid and impair motor execution depending on sound parameters such as timing [], volume [], and pitch [], and on whether the feedback is continuous or discrete [,,]. Discrete auditory feedback (DAF) has been used extensively to cue and time walking gait via metronomic or musical beats, and is commonly referred to as Rhythmic Auditory Stimulation (RAS) [,]. Rodger et al. [] suggested that cueing the step in a walking gait with a beat or a metronome as DAF may not be adequate for sonifying the continuous gait state: the intervals between these beats could be further sonified to express the richness of the change in the swing of the legs between footsteps. Continuous auditory feedback (CAF) provides information on the unfolding and continuous state of movement. CAF appears promising for helping and guiding people to perform a specific movement consistently, which may help avoid injury, improve the quality of their movement, or potentially help those with movement disorders regain normal function [,,]. CAF can reduce participants’ delays in tracking screen-based targets with manually operated joystick and Wacom pen input devices []. One plausible explanation is that CAF makes it easier for participants to continuously estimate on-screen targets’ trajectories between their start and end points and correct their tracking, thus leading to better tracking accuracy [].

Although previous studies have contributed to our understanding of the effects of auditory feedback, research is needed into personalized auditory feedback systems that account for individual variability in gait and rehabilitation responses. Further exploration into integrating auditory cues as a form of feedback mechanism in wearables could also enhance user experience and outcomes. Therefore, this study aims to design, develop, and feasibility test an auditory feedback system prototype for balance training. Balancing is informed via discrete bell-like tones combined with continuous tones that are directly modulated via the rotation of the torso to adjust their brightness. These two auditory mechanisms aim to signal users when they move away from their center of mass (CoM) to aid in balance correction. In this study, we sought to address the following research questions (RQ):

- RQ1: Is a low-cost IMU device attached to the torso an effective means of generating data to provide auditory feedback for balance correction?

- RQ2: Do continuous and discrete tones generated from balance rotation movements aid in correcting balance back to a desired reference region?

3. Materials and Methods

The proposed low-cost wearable sonification system aims to provide users with continuous and discrete auditory feedback to support their movement awareness. The system was designed and evaluated in a laboratory setting to offer insights into its application in real-life situations to enhance balance awareness and control for healthy and pathological populations.

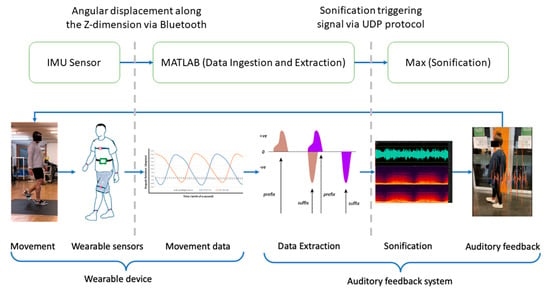

Figure 1 provides an overview of the design and development process for the system, which can be divided into two primary stages. The first stage was the design of the wearable device, which consisted of an inertial measurement unit with an algorithm that captured relevant movement data. The second stage involved the development of the sonification system, including continuous and discrete auditory feedback, which converts the movement data into appropriate sonified features that provide feedback to the participants on their state of balance. Finally, we evaluated this comprehensive system to test its ability to inform participants of their balance via its unique approach to auditory feedback under laboratory conditions.

Figure 1.

An overview of the design and the signal flow diagram of the wearable auditory feedback system.

3.1. Design of the Wearable Device

The wearable device, WT901BLECL, is a commercially available IMU sensor from Wit-Motion©, WitMotion Shenzhen Co., Ltd., Shenzhen, China (Figure 2), which offers wireless data transmission via its built-in Bluetooth chip nRF52832 and has a built-in 260 mAh rechargeable battery. The model WT901BLECL was selected because of the reasonable performance of its embedded motion sensor MPU9250 at measuring rotation, angular velocity, and acceleration in the x, y, and z directions, as well as its compact form factor and light weight, which make it easy to attach to participants’ bodies with Velcro-style strapping. Its wireless operation eliminated the need for cables, enhancing user comfort and mobility. When the IMU was attached to a participant’s body, rotation about its x-axis lay in the anatomical sagittal plane, while rotation about its z-axis lay in the frontal plane. The IMU also has built-in Kalman filtering that reduces noise in the raw data it records, thereby enhancing the accuracy of the results. As illustrated in Figure 1, the IMU captures the participant’s movement data. Then, the digitized signal is transmitted over Bluetooth to an ordinary laptop computer. MATLAB (R2022b) was used on the computer to ingest the data and extract the features to trigger the sonification process. Once a trigger is received, the Max© (version 8) software by Cycling’74 generates a sonified signal. This signal can be played back to the participant either through the computer’s speakers or transmitted via Bluetooth to headphones.

Figure 2.

A WT901BLECL sensor from WitMotion Shenzhen Co., Ltd.

After several trials, we found that positioning the IMU on the sternum offered the most uniform location for all participants, ensuring consistent and reliable torso measurement. Before each experiment, a calibration process was conducted while the participant remained stationary. The process involves the collection of 50 angular rotation samples at the participant’s sternum, from which an average is computed to establish a reference point. The actual angular rotation values, i.e., angular displacement, are determined during the experiment by subtracting this reference value from the sensor’s raw data. This method ensures an accurate estimation of the sternum’s position and orientation.
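The calibration step described above can be illustrated with a minimal Python sketch. It is not the authors' MATLAB implementation; the function names and the example readings are hypothetical, and only the 50-sample average and the subtraction of the reference come from the text.

```python
from statistics import mean

CALIBRATION_SAMPLES = 50  # number of stationary samples stated in the text


def calibrate(samples):
    """Return the reference angle as the mean of the first 50 stationary samples."""
    if len(samples) < CALIBRATION_SAMPLES:
        raise ValueError("need at least 50 calibration samples")
    return mean(samples[:CALIBRATION_SAMPLES])


def angular_displacement(raw_angle, reference):
    """Subtract the calibration reference from a raw sensor angle."""
    return raw_angle - reference


# Hypothetical stationary readings in degrees (not data from the study).
stationary = [10.0 + 0.1 * (i % 3 - 1) for i in range(50)]
reference = calibrate(stationary)
displacement = angular_displacement(12.5, reference)
```

Averaging over a short stationary window reduces the influence of sample-to-sample noise on the reference, so later displacements are expressed relative to the participant's own resting posture.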

3.2. Software and Hardware Integration

The IMU was configured to stream data to MATLAB for data processing and then perform sonification through Max. MATLAB’s serialport() function was used to monitor the serial communications and retrieve the sensor’s rotation and acceleration data. The retrieved data were processed and stored in a matrix form. The plot() function was used for real-time data visualization.

After storing the sensor’s rotational and acceleration data, MATLAB sent this data to Max through a loopback Internet Protocol (IP) address using User Datagram Protocol (UDP)—a communication protocol for time-sensitive data transmission. Once the data were received, Max utilized its Open Sound Control (OSC) function for real-time sonification. In this context, Max acted as the sonification platform, translating the sensor data into auditory signals, allowing users to hear the sensor data and offering an innovative method to interpret and interact with the information in real time.
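To make the transport step concrete, the following Python sketch encodes a single rotation value as an OSC message and sends it over UDP to the loopback address. It stands in for the MATLAB side of the pipeline and is not the authors' code; the OSC address `/imu/rotation` and port `7400` are hypothetical choices, and the receiving patch in Max is assumed to listen on the same port.

```python
import socket
import struct


def _osc_string(text):
    """Encode a string as OSC requires: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def build_osc_message(address, values):
    """Build an OSC message whose arguments are all 32-bit big-endian floats."""
    type_tags = "," + "f" * len(values)
    payload = b"".join(struct.pack(">f", value) for value in values)
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_sample(rotation_deg, host="127.0.0.1", port=7400):
    """Send one rotation sample to a listener on the loopback address."""
    packet = build_osc_message("/imu/rotation", [rotation_deg])
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
    return packet


# Build (but do not send) an example packet for inspection.
example_packet = build_osc_message("/imu/rotation", [1.5])
```

UDP does not acknowledge or retransmit packets, which keeps latency low; an occasional lost sample is simply superseded by the next one, which suits a continuous feedback stream.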

3.3. Developing the Sonification System

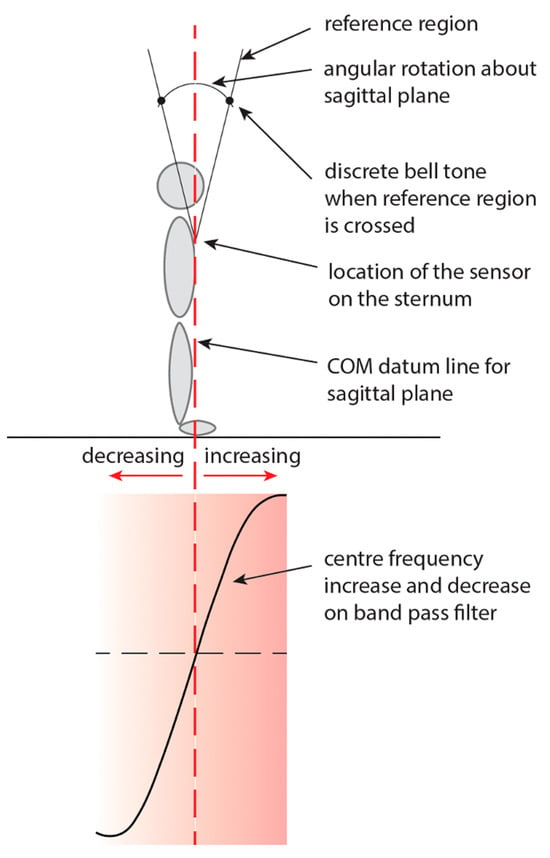

The auditory feedback used in this study combined continuous and discrete tones to indicate the participant’s balance state. The discrete tone was a bell sound triggered by the participant rotating outside the reference region, as illustrated at the top of Figure 3. The reference region was based on a 5% rotational deviation on either side of the reference datum collected in the calibration process. The continuous tones were shifted via an increase or decrease in the center frequency of a bandpass filter over a selection of tuned oscillators. The auditory effect was to brighten the tones as the rotation increased away from the reference region or, conversely, dull these tones as the rotation decreased in the region through the sagittal plane of movement, as shown in the lower half of Figure 3.

Figure 3.

The CAF system takes sagittal rotation data to generate continuous and discrete tones.
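The reference region and the brightness mapping described in this section can be sketched in Python as follows. This is an illustration under stated assumptions rather than the Max patch itself: the linear mapping, the frequency range (`f_min`, `f_max`), and the `full_scale` rotation are hypothetical, while the 5% tolerance around the datum comes from the text.

```python
def reference_region(datum, tolerance=0.05):
    """Return (lower, upper) bounds at +/- 5% of the calibration datum."""
    margin = abs(datum) * tolerance
    return datum - margin, datum + margin


def center_frequency(rotation, datum, full_scale, f_min=200.0, f_max=4000.0):
    """Map distance from the datum linearly onto a band-pass center frequency.

    Larger rotations away from the datum give a higher center frequency
    (a brighter tone); the distance is clamped at full_scale.
    """
    distance = min(abs(rotation - datum), full_scale)
    return f_min + (f_max - f_min) * distance / full_scale


# Hypothetical datum of 80 degrees and full-scale deviation of 20 degrees.
lower, upper = reference_region(80.0)
at_datum = center_frequency(80.0, 80.0, full_scale=20.0)
halfway = center_frequency(90.0, 80.0, full_scale=20.0)
clamped = center_frequency(120.0, 80.0, full_scale=20.0)
```

The sketch uses the absolute value of the datum so that the bounds stay ordered for negative angles, and clamping keeps the filter within a fixed audible range for large excursions.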

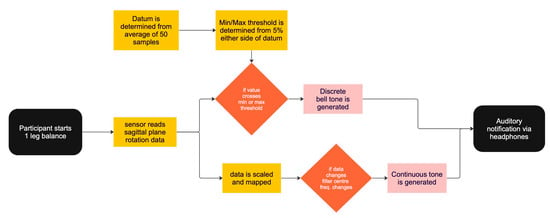

Cueing movement with auditory feedback that changes outside a reference region follows the approach used by [,]. In those studies, an auditory sinusoidal tone was increased in frequency when the participant accelerated in the anterior direction and decreased when the participant accelerated in the posterior direction whilst trying to balance. We sought to implement our sonified signal based on this relationship, as it has been demonstrated as a promising approach. In this study, we further improve it by combining continuous tones that were brightened as the rotation increased or conversely dulled as the rotation decreased, with a discrete bell tone that signaled the movement outside of the reference region in the sagittal plane. Figure 4 shows the proposed flow structure for retrieving data from the sensor and providing sonification to the user.

Figure 4.

Diagram of the algorithm and auditory feedback system.

The auditory feedback system was designed to guide users in real time to maintain balance while performing a one-leg balance task. The IMU sensor, attached to the participant, first read sagittal plane rotation data. These data were used to establish a baseline (datum) from the average of 50 sensor samples. From this datum, lower and upper thresholds were set at 5% on either side to detect significant deviations in balance.

Information collected by the sensor was sent to MATLAB, which processed and converted it into readable acceleration and angular data. The processed data were then graphed in MATLAB for real-time observation and sent concurrently to Max. In Max, the data underwent further processing to become sonified signals, triggered based on one of two responses: (1) If the rotation data crossed the predefined thresholds, a discrete bell tone was generated to alert the user of a loss of balance. (2) For less critical deviations, the data were scaled and mapped to modify the cut-off frequency of a continuous tone. This continuous tone changed in pitch (or brightness) to provide subtle feedback on the user’s position relative to balance. These auditory cues were transmitted to the user through headphones, providing a sonic representation of their balance in real time. The movement sonification system files can be found in the Supplementary Materials for reference.
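The discrete response in (1) can be sketched in Python as a simple edge detector over the rotation stream. The sketch assumes the bell is triggered once, on the sample where the rotation leaves the reference region; the retriggering behavior of the actual Max patch is not described in the text, and the function name and sample values are hypothetical.

```python
def bell_trigger_indices(rotations, lower, upper):
    """Return the indices of samples where the rotation leaves the region.

    Assumption: a bell is flagged only on the transition from inside to
    outside the region, not on every sample spent outside it.
    """
    triggers = []
    was_inside = True
    for index, rotation in enumerate(rotations):
        inside = lower <= rotation <= upper
        if was_inside and not inside:
            triggers.append(index)
        was_inside = inside
    return triggers


# Hypothetical rotation samples in degrees with a region of 76 to 84 degrees.
samples = [80, 82, 85, 86, 83, 79, 75, 74, 78]
triggers = bell_trigger_indices(samples, lower=76, upper=84)
```

Triggering on the transition rather than on every out-of-region sample keeps the bell a distinct event that contrasts with the continuously varying tone.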

Whilst not seeking to time movement events as in other research, we used discrete tones to indicate a threshold was exceeded as an unmistakable sound moment in a corrective manner. This discrete sound moment can contrast and define movement regions with the continuously changing tone.

We agree with Rodger et al. [] on the need to convey the dynamic unfolding of movement using CAF. While Rodger et al. [] applied this concept to walking gait to denote the leg’s swing phase, we have adapted it to balance training, as CoM movement could benefit from real-time CAF to guide it back into a reference region and enhance kinesthetic awareness.

4. Evaluation of the Wearable Auditory Feedback System

Four participants took a standing balance test for this pilot study. Standing balance tests were conducted to establish the effectiveness of the wearable system, particularly in evaluating how accurately the IMU captures rotation about the x-axis in the sagittal plane and rotation about the z-axis in the frontal plane. The IMU sensor was attached to the sternum of each participant and calibrated, as described in Section 3.1. The sensor was configured with a sampling rate of 10 Hz. The auditory feedback was provided to the participant via headphones through an audio interface that converted the digital signal from the Max software into an analog signal (see Figure 4). The test conditions were as follows:

- Test 1: the participant stood on one leg with open eyes, and no audio feedback was provided.

- Test 2: the participant stood on one leg with eyes closed, and no audio feedback was provided.

- Test 3: the participant stood on one leg with eyes open, and audio feedback was provided.

- Test 4: the participant stood on one leg with eyes closed, and audio feedback was provided.

Each test was conducted for 60 s, a timeframe specifically chosen to gather sufficient data for statistical analysis. This approach helps mitigate the impact of noise and potential errors in the data collection process, thereby enhancing the reliability of the experimental results.

5. Results

5.1. Validation of the Auditory Feedback System

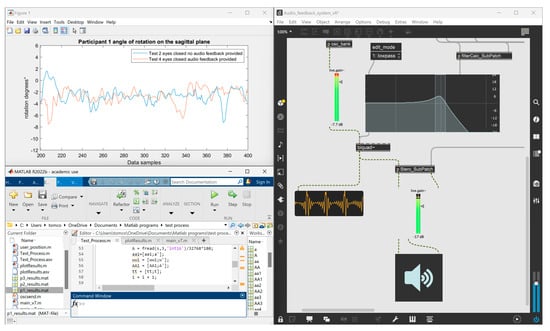

The auditory feedback system has been designed and configured to ensure seamless interaction between the IMU sensor and MATLAB, subsequently bridging MATLAB with Max. Figure 5 presents how this data transfer precipitates a dynamic alteration in the audio output, which is visualized in real-time with MATLAB’s plotting functions.

Figure 5.

Verification of the IMU sensor’s integration with MATLAB and Max for effective sonification and user auditory feedback.

Figure 5 illustrates the verification of integrating the IMU sensor with MATLAB, where the measurements indicated a stable output from the sensor with no data loss during transmission. The software subsequently executed tasks such as filtering and smoothing to prepare the data for sonification via Max. Max generated two forms of auditory feedback, i.e., continuous tones and discrete bell tones (see Figure 4). The bell tones correspond to the 5% thresholds applied to the sensor data, confirming the system’s capability to provide clear and immediate auditory feedback for discrete events.

5.2. Evaluation of Auditory Feedback for Balance Correction

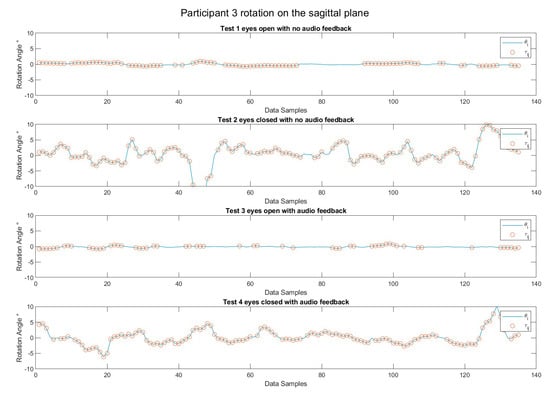

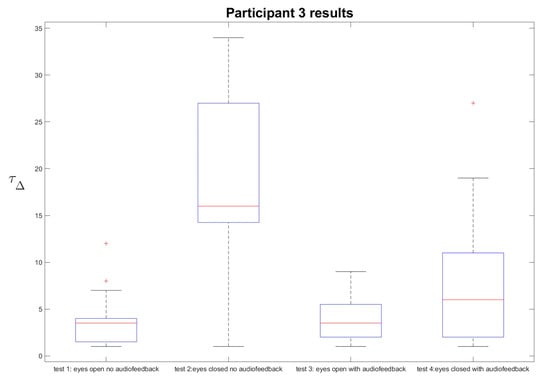

Here, we analyzed how the participants responded to the auditory feedback in the four test conditions. All four participants completed each of the four tests to maintain consistency across the data set. Generally, we found that the effect of auditory feedback was most apparent across the participants when comparing Test 4 (eyes closed with auditory feedback) with Test 2 (eyes closed with no auditory feedback). Figure 6 shows that the deviation from the reference point in the x dimension is visibly smaller in Test 4, when auditory feedback was provided, than in Test 2.

Figure 6.

Time series data plot for Participant 3 across the four test conditions.

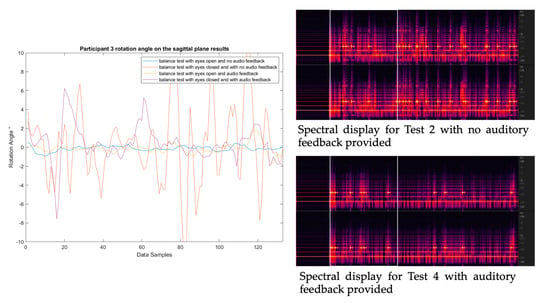

Figure 7 presents the spectrogram for Test 2 and Test 4, without and with auditory feedback, respectively. Without auditory feedback to inform the participant about their movement, the spectral display’s brightness is relatively continuous and uniform. This indicated that without auditory stimulus, the participant was unaware of the position relative to the reference region and hence did not elicit any change in their movement. Conversely, when auditory feedback was provided, bright and discrete flashes at specific intervals signified the bell tones triggered when the participant’s movement deviated from the sagittal plane’s reference region. These bell tones served as auditory cues, informing the participant of their movement’s misalignment with the desired range. The periodic appearance of these bright markers against the darker background of continuous frequencies demonstrates the feedback system’s functionality in alerting the participant through discrete auditory signals.

Figure 7.

Time series data plots and spectrogram for Participant 3 across test conditions with and without auditory feedback.

Figure 8 presents a participant’s mean time series data across the four balance tests. With eyes open, the participant’s recovery time to the reference region when balancing on one leg showed negligible difference with or without auditory feedback. With eyes closed, however, auditory feedback significantly improved the recovery time to the reference region compared with no feedback. This is shown by the smaller changes in the time taken to return to the reference region with auditory guidance, suggesting an enhanced stabilization effect under these conditions.

Figure 8.

Mean change in time series data plots for Participant 3 across four test conditions (+ significant difference p < 0.05).
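One way to quantify the recovery time discussed in this section is to measure how long each excursion outside the reference region lasts. The Python sketch below does this for a rotation series sampled at the reported 10 Hz. How the authors computed recovery time is not specified, so the definition used here (the duration of each contiguous run of out-of-region samples) is an assumption, and the example data are hypothetical.

```python
SAMPLE_RATE_HZ = 10  # sampling rate reported for the sensor


def excursion_durations(rotations, lower, upper, sample_rate=SAMPLE_RATE_HZ):
    """Return the duration in seconds of each completed run outside the region."""
    durations = []
    run_length = 0
    for rotation in rotations:
        if lower <= rotation <= upper:
            if run_length:
                durations.append(run_length / sample_rate)
            run_length = 0
        else:
            run_length += 1
    # An excursion still open at the end of the trial has no recovery time
    # and is therefore not reported.
    return durations


# Hypothetical trial: two excursions of 20 and 30 samples outside 76 to 84 degrees.
trial = [80] * 5 + [90] * 20 + [80] * 5 + [70] * 30 + [80] * 5
durations = excursion_durations(trial, lower=76, upper=84)
mean_recovery = sum(durations) / len(durations)
```

At 10 Hz the resolution of this measure is 0.1 s, which is fine-grained relative to recovery times on the order of seconds.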

6. Discussion

6.1. Feasibility of a Low-Cost Auditory Feedback System

In this feasibility study, we explored whether the continuous and discrete tones generated from balance rotation movements can aid in correcting balance back to a desired reference region. The results from the validation process demonstrated that the system could provide reliable and dynamic auditory feedback in real time based on the input from the IMU sensor. Integrating the sensor with MATLAB, and subsequently with Max, has been established to be effective for modulating auditory signals corresponding to physical motion.

The discrete tone was generated when the user’s rotation angle in the sagittal plane reached predetermined threshold values. These values were derived from the calibration process: the reference angle in the sagittal plane was sent to Max, where two threshold parameters were set at ±5% of the average angle, beyond which a loss of balance was deemed to have occurred. The discrete tone was generated from a sine wave with a frequency of 440 Hz, and once triggered, the bell tone was produced for 800 milliseconds. The frequency of 440 Hz was chosen as it acted as a fundamental tone and worked well in parallel with the continuous audio system.
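A tone with the stated parameters can be approximated in Python as a 440 Hz sine wave lasting 800 ms. The exponential decay envelope, its time constant (`decay_s`), and the 44.1 kHz sample rate are assumptions added to give a bell-like character; the text specifies only the frequency and the duration, and the actual sound was produced in Max.

```python
import math


def bell_tone(frequency=440.0, duration_s=0.8, sample_rate=44100, decay_s=0.2):
    """Return samples of a decaying sine wave in the range [-1.0, 1.0]."""
    n_samples = round(duration_s * sample_rate)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate
        envelope = math.exp(-t / decay_s)  # assumed bell-like decay
        samples.append(envelope * math.sin(2.0 * math.pi * frequency * t))
    return samples


tone = bell_tone()
```

The resulting list could be written to a WAV file or passed to an audio library for playback; a fast-decaying envelope gives the tone a clear onset, which is what makes it usable as a discrete alert.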

The IMU device utilizes variations in rotation from a predetermined reference point to drive the sonification process, designed to inform the participant of their positional deviations and guide them back to the reference area through changes in auditory feedback. While we envisage incorporating the sensor into a custom-made garment crafted from aesthetically pleasing, lightweight, and elastic viscose fabrics for comfort and ease of movement (as outlined in []), this study primarily focuses on assessing the efficacy of the sonification process in conjunction with the IMU sensor. Although significant, the development and integration of the wearable device with the garment fall outside the immediate scope of this research.

6.2. Enhancing Kinesthetic Awareness through Discrete and Continuous Auditory Feedback

This study has illustrated a dual strategy of auditory cues for movement awareness, comprising continuous and discrete sounds. The CAF system, intricately mapped to a continuous data stream, allowed the participant to cue their movement and correct their position back to a reference region to attain balance. This approach is built on the dynamic auditory response that directly mirrors the participant’s spatial orientation in relation to the reference region boundary. The continuous tones that varied in brightness correlate with the rotation intensity. As the participant’s rotation increased, the tones brightened, enhancing the auditory representation of movement magnitude. Conversely, as the rotation decreased, the tones became progressively duller, providing an intuitive auditory dimming in response to the reduced activity (Figure 7).

In addition to continuous tones, the system incorporated a discrete bell tone to signal deviations from the reference region within the sagittal plane. This discrete auditory cue provided a clear and immediate indication of movements that exceeded the predefined threshold, acting as an alert mechanism to return to the reference region. Interestingly, these results were most evident in the eyes-closed settings, where the amount of sway and the time it took to return to the center of the reference region were reduced with the aid of auditory feedback (Figure 8). This observation agrees with earlier research on stance and audio biofeedback [,] that found a reduction in postural sway in eyes-closed participants with the addition of audio biofeedback, as it helped the participants partially overcome the deficit in visual feedback. Another study [] showed that adding white noise reduced postural sway in eyes-open and eyes-closed standing balance tests.

The effectiveness of the proposed system in aiding participants in returning to their reference region was evident in the results. Upon analyzing the audio files generated for Tests 3 and 4, a few initial observations can be made. In Test 4, the bell tone provided a clear indication when the reference region was exceeded; this was a clear cue for the participant to return to the reference region, and this correction took a mean of approximately 5 s compared to a mean of roughly 15 s in settings without auditory feedback. The continuous sound, represented as a sharpening tone before the bell tone, provided a cue to participants to anticipate the reference region boundary. Specifically, in Test 3, the bell tone only signaled one reference boundary crossing. The eyes-open condition did not require the auditory sense as much as that of closed eyes. This was most evident when comparing the time series graphs of the rotation angles for Tests 3 and 4 (Figure 6 and Figure 8).

This initial analysis underscores the essential role of auditory feedback in balance control and postural stability. This finding agrees with previous studies demonstrating that auditory feedback effectively reduced postural sway in various sensory conditions [,,]. While these results support the potential of auditory feedback in therapeutic applications to enhance balance, they should be distinguished from its application in gait analysis presented in our previous study []. The focus of the present study is balance, whereas the earlier paper [] focused on gait. Given the differences between movement types and assessment objectives in these two studies, a direct comparison may not yield meaningful insight. We believe that this current study, focused on balance, contributes to understanding the application of auditory feedback in balance control. Moving forward, we suggest that future research focus more on gait analysis. That would entail exploring how auditory feedback could effectively be integrated into gait training and rehabilitation, thereby extending the scope of its application.

6.3. Limitation and Future Work

This feasibility study provided valuable insights into integrating an IMU sensor with MATLAB and Max to deliver auditory feedback in a sonification application. The initial results are promising, showing that the auditory feedback system could inform participants of movements outside the reference regions. However, some limitations should be considered when interpreting these findings.

Our current system relied on an ordinary laptop computer to process the data collected by the IMU and to deliver the auditory tones to the users. We acknowledge that this method of data collection and feedback provision, while effective for the controlled conditions of our study, may present issues in terms of portability and convenience. A more compact and user-friendly device, such as a smartphone, could significantly enhance the applicability and accessibility of this system and warrants further investigation.

We did not conduct a comprehensive cost analysis of the auditory feedback system. Our term ‘low-cost’ refers to the use of off-the-shelf sensors, open-source software (for data processing and auditory feedback delivery), and an ordinary laptop computer, which together cost significantly less than systems involving high-end motion capture or camera systems. The cost-effectiveness of our system should therefore be viewed cautiously until a thorough cost assessment is conducted. We recommend that future iterations of this research include a complete cost analysis, considering the wearable design, the software development costs, maintenance, and any potential future updates.

We tested the prototype with only four participants. We believe that the consistency and control offered by this small sample do not diminish the validity of our proof of concept, and the findings are intended as an initial exploration. Nevertheless, a larger and more diverse cohort would have enabled a more comprehensive understanding of the system’s efficacy across different demographics and movement patterns, and the current results may not be generalizable to a wider population. Future research should recruit a larger and more varied sample to validate these findings and enhance the study’s external validity.

Although the CAF system has shown promise in enabling participants to adjust their movements in relation to a reference region, the specific mechanisms by which the combination of continuous and discrete tones contributed to movement correction remain unquantified. Our reliance on an off-the-shelf IMU sensor also requires further validation against established measurement tools to confirm the accuracy of the auditory feedback relative to actual movement. In future work, measuring CoM movement on a force plate, accompanied by time-synchronized video footage, will therefore be essential to understand the movement response to the auditory feedback.

The reference regions were derived from preliminary data, which may not have captured the full range of movement dynamics. This could have affected the sensitivity and specificity of the auditory feedback, potentially leading to false positives or negatives in signaling movement outside these regions. Future studies should focus on refining the calculations for reference regions, possibly by incorporating machine learning algorithms that can adapt to a more comprehensive array of movement patterns.
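To make the sensitivity issue concrete, the following Python sketch derives a reference region from baseline data as a band around the baseline mean and then checks whether a new sample falls outside it. This is an assumed, simplified rule for illustration and not necessarily the calculation used in the study: the mean ± k standard deviations formula, the value k = 2, the function names, and the baseline values are all hypothetical.

```python
from statistics import mean, stdev
from typing import Sequence, Tuple


def reference_region(baseline_deg: Sequence[float],
                     k: float = 2.0) -> Tuple[float, float]:
    """Return (lower, upper) bounds as mean +/- k sample standard deviations.

    The mean +/- k*SD rule is an illustrative assumption.
    """
    centre = mean(baseline_deg)
    spread = stdev(baseline_deg)  # sample standard deviation (n - 1)
    return (centre - k * spread, centre + k * spread)


def outside_region(angle_deg: float, region: Tuple[float, float]) -> bool:
    """True when the angle lies outside the reference region."""
    lower, upper = region
    return angle_deg < lower or angle_deg > upper


# Hypothetical baseline rotation angles (degrees) from a quiet-standing trial.
baseline = [-1.0, 0.0, 1.0, 0.0, -1.0, 1.0]
region = reference_region(baseline, k=2.0)

bell_for_large_lean = outside_region(2.0, region)   # beyond the band
bell_for_small_lean = outside_region(1.0, region)   # within the band
```

A short or unrepresentative baseline narrows or shifts the band, which is one way the false positives and negatives described above could arise; a larger k trades fewer false alarms for later cues.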

7. Conclusions

This feasibility study explored whether continuous and discrete tones generated from balance rotation movements can aid in correcting balance back to a desired reference region. The results demonstrated the efficacy of the auditory feedback system in enhancing participants’ ability to maintain movement within the specified reference region.

Specifically, in addressing RQ1 on whether a low-cost IMU device attached to the torso effectively generates data for auditory feedback, the validation results showed that the IMU sensor data could be reliably integrated with the sonification algorithms to produce real-time auditory cues. The changing continuous tones and discrete bell sounds directly corresponded to the participants’ movements, confirming the feasibility of using an inexpensive wearable sensor for posture data.

For RQ2 on whether the auditory feedback aids in balance correction, the reduced sway and faster recovery times evident in the results, particularly for the eyes-closed condition, indicate that both the continuous and discrete tones helped guide participants back to the desired reference region. The auditory cues likely enhanced their movement awareness and supported more rapid balance adjustments.

In summary, this preliminary study demonstrates the potential for wearable sonification systems using low-cost IMU sensors and real-time audio feedback to improve movement control and balance. The auditory augmentation provides an intuitive sensory channel for conveying postural information to facilitate skill acquisition and rehabilitation. Further research with more extensive testing is warranted to optimize and validate the approach for balance applications and other movement-based needs.

Supplementary Materials

The following supporting information can be downloaded at https://doi.org/10.5281/zenodo.10477131 (accessed on 14 January 2024). The dataset comprises the following: 1. MATLAB code and Max patches, both presented in MATLAB file format. 2. The input data from the IMU, provided in CSV file format. 3. The corresponding outputs, in the form of audio tones, made available as MP3 files.

Author Contributions

Conceptualization, F.F., T.Y.P. and C.-T.C.; methodology, F.F., T.Y.P. and C.-T.C.; software, F.F., C.-T.C. and T.C.; validation, F.F., C.-T.C. and T.C.; formal analysis, F.F., T.Y.P. and C.-T.C.; investigation, F.F. and T.C.; resources, F.F. and T.Y.P.; data curation, F.F. and T.C.; writing—original draft preparation, F.F., T.Y.P., C.-T.C. and T.C.; writing—review and editing, F.F., T.Y.P., C.-T.C. and T.C.; visualization, F.F., T.Y.P. and T.C.; supervision, F.F., T.Y.P. and C.-T.C.; project administration, T.Y.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Strategic Impact Fund 2023, coordinated through the Enabling Impact Platform of the Research & Innovation Portfolio of RMIT University.

Institutional Review Board Statement

The study was conducted in accordance with the research protocol approved by the College Human Ethics Advisory Network of the RMIT University. Approved ID 26627 (15 August 2023).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to privacy.

Acknowledgments

The authors thank the volunteers who participated in this research.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| CAF | Continuous auditory feedback |
| CoM | Center of mass |
| DAF | Discrete auditory feedback |
| HS | Heel strike |
| HMI | Human–machine interfaces |
| IMUs | Inertial measurement units |
| IP | Internet protocol |
| IoT | Internet of things |
| OSC | Open sound control |
| RAS | Rhythmic auditory stimulation |
| TO | Toe off |
| UDP | User datagram protocol |

References

- Waer, F.B.; Sahli, S.; Alexe, C.I.; Man, M.C.; Alexe, D.I.; Burchel, L.O. The Effects of Listening to Music on Postural Balance in Middle-Aged Women. Sensors 2024, 24, 202.

- Olsen, S.; Rashid, U.; Allerby, C.; Brown, E.; Leyser, M.; McDonnell, G.; Alder, G.; Barbado, D.; Shaikh, N.; Lord, S.; et al. Smartphone-based gait and balance accelerometry is sensitive to age and correlates with clinical and kinematic data. Gait Posture 2023, 100, 57–64.

- Jayakody, O.; Blumen, H.M.; Ayers, E.; Verghese, J. Longitudinal Associations Between Falls and Risk of Gait Decline: Results From the Central Control of Mobility and Aging Study. Arch. Phys. Med. Rehabil. 2023, 104, 245–250.

- Höök, K.; Caramiaux, B.; Erkut, C.; Forlizzi, J.; Hajinejad, N.; Haller, M.; Hummels, C.C.M.; Isbister, K.; Jonsson, M.; Khut, G.; et al. Embracing first-person perspectives in soma-based design. Informatics 2018, 5, 8.

- Gao, S.; Chen, J.; Dai, Y.; Hu, B. Wearable Systems Based Gait Monitoring and Analysis, 1st ed.; Springer International Publishing: Cham, Switzerland, 2022.

- Shull, E.M.; Gaspar, J.G.; McGehee, D.V.; Schmitt, R. Using Human–Machine Interfaces to Convey Feedback in Automated Driving. J. Cogn. Eng. Decis. Mak. 2022, 16, 29–42.

- Page, T. A Forecast of the adoption of wearable technology. In Wearable Technologies: Concepts, Methodologies, Tools, and Applications; Information Resources Management Association, Ed.; IGI Global: Hershey, PA, USA, 2018; pp. 1370–1387.

- Deng, Z.; Guo, L.; Chen, X.; Wu, W. Smart Wearable Systems for Health Monitoring. Sensors 2023, 23, 2479.

- Alim, A.; Imtiaz, M.H. Wearable Sensors for the Monitoring of Maternal Health-A Systematic Review. Sensors 2023, 23, 2411.

- Stavropoulos, T.G.; Papastergiou, A.; Mpaltadoros, L.; Nikolopoulos, S.; Kompatsiaris, I. IoT Wearable Sensors and Devices in Elderly Care: A Literature Review. Sensors 2020, 20, 2826.

- de-la-Fuente-Robles, Y.-M.; Ricoy-Cano, A.-J.; Albín-Rodríguez, A.-P.; López-Ruiz, J.L.; Espinilla-Estévez, M. Past, Present and Future of Research on Wearable Technologies for Healthcare: A Bibliometric Analysis Using Scopus. Sensors 2022, 22, 8599.

- Alt Murphy, M.; Bergquist, F.; Hagström, B.; Hernández, N.; Johansson, D.; Ohlsson, F.; Sandsjö, L.; Wipenmyr, J.; Malmgren, K. An upper body garment with integrated sensors for people with neurological disorders—Early development and evaluation. BMC Biomed. Eng. 2019, 1, 3.

- McLaren, R.; Joseph, F.; Baguley, C.; Taylor, D. A review of e-textiles in neurological rehabilitation: How close are we? J. Neuroeng. Rehabil. 2016, 13, 59.

- Levisohn, A.; Schiphorst, T. Embodied Engagement: Supporting Movement Awareness in Ubiquitous Computing Systems. Ubiquitous Learn. Int. J. 2011, 3, 97–112.

- Tortora, S.; Tonin, L.; Chisari, C.; Micera, S.; Menegatti, E.; Artoni, F. Hybrid Human-Machine Interface for Gait Decoding Through Bayesian Fusion of EEG and EMG Classifiers. Front. Neurorobot. 2020, 14, 582728.

- De Jesus Oliveira, V.A.; Slijepčević, D.; Dumphart, B.; Ferstl, S.; Reis, J.; Raberger, A.-M.; Heller, M.; Horsak, B.; Iber, M. Auditory feedback in tele-rehabilitation based on automated gait classification. Pers. Ubiquitous Comput. 2023, 27, 1873–1886.

- Wall, C.; McMeekin, P.; Walker, R.; Hetherington, V.; Graham, L.; Godfrey, A. Sonification for Personalised Gait Intervention. Sensors 2024, 24, 65.

- Pérez-Ibarra, J.C.; Siqueira, A.A.G.; Krebs, H.I. Real-Time Identification of Gait Events in Impaired Subjects Using a Single-IMU Foot-Mounted Device. IEEE Sens. J. 2020, 20, 2616–2624.

- Kok, M.; Hol, J.D.; Schön, T.B. Using Inertial Sensors for Position and Orientation Estimation. arXiv 2017, arXiv:1704.06053.

- Moon, K.S.; Lee, S.Q.; Ozturk, Y.; Gaidhani, A.; Cox, J.A. Identification of Gait Motion Patterns Using Wearable Inertial Sensor Network. Sensors 2019, 19, 5024.

- Godfrey, A.; Del Din, S.; Barry, G.; Mathers, J.C.; Rochester, L. Instrumenting gait with an accelerometer: A system and algorithm examination. Med. Eng. Phys. 2015, 37, 400–407.

- Zhou, H.; Ji, N.; Samuel, O.W.; Cao, Y.; Zhao, Z.; Chen, S.; Li, G. Towards Real-Time Detection of Gait Events on Different Terrains Using Time-Frequency Analysis and Peak Heuristics Algorithm. Sensors 2016, 16, 1634.

- Khandelwal, S.; Wickstrom, N. Gait Event Detection in Real-World Environment for Long-Term Applications: Incorporating Domain Knowledge Into Time-Frequency Analysis. IEEE Trans. Neural Syst. Rehabil. Eng. 2016, 24, 1363–1372.

- Ji, N.; Zhou, H.; Guo, K.; Samuel, O.W.; Huang, Z.; Xu, L.; Li, G. Appropriate mother wavelets for continuous gait event detection based on time-frequency analysis for hemiplegic and healthy individuals. Sensors 2019, 19, 3462.

- Del Din, S.; Hickey, A.; Ladha, C.; Stuart, S.; Bourke, A.; Esser, P.; Rochester, L.; Godfrey, A. Instrumented gait assessment with a single wearable: An introductory tutorial. F1000Research 2016, 5, 2323.

- Bötzel, K.; Marti, F.M.; Rodríguez, M.; Plate, A.; Vicente, A.O. Gait recording with inertial sensors—How to determine initial and terminal contact. J. Biomech. 2016, 49, 332–337.

- Gouwanda, D.; Gopalai, A.A.; Khoo, B.H. A Low Cost Alternative to Monitor Human Gait Temporal Parameters-Wearable Wireless Gyroscope. IEEE Sens. J. 2016, 16, 9029–9035.

- Gouwanda, D.; Gopalai, A.A. A robust real-time gait event detection using wireless gyroscope and its application on normal and altered gaits. Med. Eng. Phys. 2015, 37, 219–225.

- Enge, K.; Rind, A.; Iber, M.; Höldrich, R.; Aigner, W. Towards a unified terminology for sonification and visualisation. Pers. Ubiquitous Comput. 2023, 27, 1949–1963.

- Hildebrandt, A.; Cañal-Bruland, R. Effects of auditory feedback on gait behavior, gaze patterns and outcome performance in long jumping. Hum. Mov. Sci. 2021, 78, 102827.

- Reh, J.; Schmitz, G.; Hwang, T.-H.; Effenberg, A.O. Loudness affects motion: Asymmetric volume of auditory feedback results in asymmetric gait in healthy young adults. BMC Musculoskelet. Disord. 2022, 23, 586.

- Raglio, A.; De Maria, B.; Parati, M.; Giglietti, A.; Premoli, S.; Salvaderi, S.; Molteni, D.; Ferrante, S.; Dalla Vecchia, L.A. Movement Sonification Techniques to Improve Balance in Parkinson’s Disease: A Pilot Randomized Controlled Trial. Brain Sci. 2023, 13, 1586.

- Schaffert, N.; Janzen, T.B.; Mattes, K.; Thaut, M.H. A Review on the Relationship Between Sound and Movement in Sports and Rehabilitation. Front. Psychol. 2019, 10, 244.

- Patania, V.M.; Padulo, J.; Iuliano, E.; Ardigò, L.P.; Čular, D.; Miletić, A.; De Giorgio, A. The Psychophysiological Effects of Different Tempo Music on Endurance Versus High-Intensity Performances. Front. Psychol. 2020, 11, 74.

- Dubus, G.; Bresin, R. A Systematic Review of Mapping Strategies for the Sonification of Physical Quantities. PLoS ONE 2013, 8, e82491.

- Effenberg, A. Movement Sonification: Effects on Perception and Action. IEEE Multimed. 2005, 12, 53–59.

- Thaut, M.H.; McIntosh, G.C.; Hoemberg, V. Neurobiological foundations of neurologic music therapy: Rhythmic entrainment and the motor system. Front. Psychol. 2015, 5, 1185.

- Ghai, S. Effects of Real-Time (Sonification) and Rhythmic Auditory Stimuli on Recovering Arm Function Post Stroke: A Systematic Review and Meta-Analysis. Front. Neurol. 2018, 9, 488.

- Effenberg, A.O.; Fehse, U.; Schmitz, G.; Krueger, B.; Mechling, H. Movement Sonification: Effects on Motor Learning beyond Rhythmic Adjustments. Front. Neurosci. 2016, 10, 219.

- Ross, J.M.; Balasubramaniam, R. Auditory white noise reduces postural fluctuations even in the absence of vision. Exp. Brain Res. 2015, 233, 2357–2363.

- Dyer, J.F.; Stapleton, P.; Rodger, M. Mapping Sonification for Perception and Action in Motor Skill Learning. Front. Neurosci. 2017, 11, 463.

- Dozza, M.; Horak, F.B.; Chiari, L. Auditory biofeedback substitutes for loss of sensory information in maintaining stance. Exp. Brain Res. 2007, 178, 37–48.

- Rath, M.; Schleicher, R. On the Relevance of Auditory Feedback for Quality of Control in a Balancing Task. Acta Acust. United Acust. 2008, 94, 12–20.

- Chiari, L.; Dozza, M.; Cappello, A.; Horak, F.B.; Macellari, V.; Giansanti, D. Audio-biofeedback for balance improvement: An accelerometry-based system. IEEE Trans. Biomed. Eng. 2005, 52, 2108–2111.

- Pang, T.Y.; Feltham, F. Effect of continuous auditory feedback (CAF) on human movements and motion awareness. Med. Eng. Phys. 2022, 109, 103902.

- Rodger, M.W.M.; Craig, C.M. Timing movements to interval durations specified by discrete or continuous sounds. Exp. Brain Res. 2011, 214, 393–402.

- Rodger, M.W.M.; Craig, C.M. Beyond the metronome: Auditory events and music may afford more than just interval durations as gait cues in Parkinson’s disease. Front. Neurosci. 2016, 10, 272.

- Thaut, M.H.; McIntosh, G.C.; Rice, R.R.; Miller, R.A.; Rathbun, J.; Brault, J.M. Rhythmic auditory stimulation in gait training for Parkinson’s disease patients. Mov. Disord. 1996, 11, 193–200.

- Boyer, É.O.; Bevilacqua, F.; Susini, P.; Hanneton, S. Investigating three types of continuous auditory feedback in visuo-manual tracking. Exp. Brain Res. 2017, 235, 691–701.

- Françoise, J.; Chapuis, O.; Hanneton, S.; Bevilacqua, F. SoundGuides: Adapting Continuous Auditory Feedback to Users. In Proceedings of the Conference Extended Abstracts on Human Factors in Computing Systems (CHI 2016), San Jose, CA, USA, 7–12 May 2016; pp. 2829–2836.

- Rosati, G.; Oscari, F.; Spagnol, S.; Avanzini, F.; Masiero, S. Effect of task-related continuous auditory feedback during learning of tracking motion exercises. J. Neuroeng. Rehabil. 2012, 9, 79.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).